Model Provenance via Model DNA

Abstract

Understanding the life cycle of the machine learning (ML) model is an intriguing area of research (e.g., understanding where the model comes from, how it is trained, and how it is used). Our focus is on a novel problem within this domain, namely Model Provenance (MP). MP concerns the relationship between a target model and its pre-training model and aims to determine whether a source model serves as the provenance for a target model. In this paper, we formulate this new challenge as a learning problem, supplementing our exploration with empirical discussions on its connections to existing works. Following that, we introduce “Model DNA”, an interesting concept encoding the model’s training data and input-output information to create a compact machine-learning model representation. Capitalizing on this model DNA, we establish an efficient framework consisting of three key components: DNA generation, DNA similarity loss, and a provenance classifier, aimed at identifying model provenance. We conduct evaluations on both computer vision and natural language processing tasks using various models, datasets, and scenarios to demonstrate the effectiveness of our approach.

M2002

1 Introduction

In recent years, machine learning has become a ubiquitous presence in various fields, revolutionizing industries ranging from healthcare to finance [27, 7]. With the release of OpenAI’s GPT-4, the capabilities of machine learning are poised to reach even greater heights111https://openai.com/research/gpt-4. However, as the value of data as an emerging asset class becomes increasingly recognized, the issue of understanding the entire life cycle of the model, e.g., understanding where the model comes from, how it is trained, and how it is used, has become a pressing concern.

In this paper, we explore a novel and important problem within the research direction of understanding the machine learning model life cycle, namely Model Provenance (MP). This problem aims to investigate the relationship between two models, such as understanding whether a target model is derived from a source model. To illustrate this problem, consider a real-world business scenario in which an AI company seeks to protect the intellectual property of its machine learning model trained on private data using a significant amount of computing power. The company wishes to ensure that the model is only used by authorized users. However, in practice, authorized users may share the model with unauthorized users without the company’s permission, or the model may be stolen and used by an unauthorized user. The unauthorized user may then use the stolen model to develop their own products based on techniques such as continual learning [23]. This situation presents a significant challenge: how can an AI company identify whether a source model, owned by an authorized user, is the provenance of a target model developed by an unauthorized user?

A relevant area of study involves investigating the influence of pre-training models in continual learning or lifelong learning. For instance, recent work by [20] has shown that generic pre-training can implicitly counteract the negative effects of catastrophic forgetting when learning multiple tasks sequentially, particularly when compared to models initialized randomly. Additionally, research conducted by [32] highlights the occurrence of catastrophic forgetting in the context of continual learning scenarios. Furthermore, certain works have explored connections between the outputs of different models [6, 14]. While previous research has explored the relationship across various tasks to understand the phenomenon of catastrophic forgetting or the outputs of different models, there is currently no established method for identifying the relationship between different models across diverse tasks, to the best of our knowledge.

In this paper, we address the problem of model provenance and begin by formalizing this problem and conducting an empirical study to evaluate the performance of the target model on the source model’s training data (Section 3), whose results inspire us to ask whether we can create a description to explain the relationship between the source and target models. Then, we introduce a novel concept of model DNA, which represents the unique characteristics of a machine learning model, and propose a framework for model DNA generation and model provenance identification. The effectiveness of our approach is demonstrated through both Computer Vision (CV) and Natural Language Processing (NLP) tasks.

The contributions of the paper are summarized as follows:

-

•

This paper investigates a critical aspect of understanding the machine learning models’ life cycle - identifying the homologous relations between a source model and its subsequent target versions. We first formulate this challenge as a learning problem and provide empirical discussions on its relation to existing works.

-

•

We propose a novel machine learning model representation, called Model DNA, which combines data-driven and model-driven approaches to encode the training data and input-output information as a compact representation of the model (Section 4.1). In DNA space, we can easily measure the similarity between models and track their usage and evolution over time. Based on this idea, we introduce the Model Provenance framework, which includes Model DNA generation and provenance identification, providing a practical solution for identifying relationships between models and better understanding the provenance of machine learning models.

-

•

We perform experiments on various commonly used CV and NLP benchmark datasets along with DNN structure models to assess the effectiveness of our proposed framework. To enhance the understanding, we present comprehensive visualizations of the distribution of Model DNA in the DNA space (Section 5).

2 Related work

Lifelong learning. Lifelong machine learning is a learning paradigm that continuously accumulates past knowledge and adapts it to future learning and problem-solving [16, 5, 23]. This involves performing a sequence of learning tasks, , each with its corresponding dataset of different types and from different domains. When faced with a new task (called the current task) with its data , the learner can use past knowledge to aid in learning. One of the major challenges in lifelong learning is catastrophic forgetting, where the model forgets previously learned information when learning new tasks. To mitigate this phenomenon, approaches can be categorized into three groups: (1) regularization-based approaches [13, 36], (2) memory-based approaches [18, 3, 34], and (3) network expansion-based approaches [26, 1, 29]. This paper aims to distinguish the relationship between the model from the previous task and the current task, which is an under-explored direction in existing lifelong learning works.

Representation Learning. Representation learning refers to the process of learning a parametric mapping from the raw input data domain to a feature vector, in the hope of capturing and extracting more abstract and useful concepts that can improve performance on a range of downstream tasks. The aim of learning representations of the data is to make it easier to extract useful information when building classifiers or other predictors. Representation learning has played a tremendous role in different frameworks [2]. Recently, Contrastive Representation Learning (CRL) is widely used in NLP and CV [17]. It can be considered as self-supervised learning by comparing among different samples [4, 33]. In this paper, we explore a way of machine learning model representation. Our approach involves a data-driven and model-driven representation learning framework that constructs a model representation in a latent space. Additionally, there are some works to investigate the relationship of Neural Network representations [6, 14]. This work is novel since it is the first time, as far as we know, that such an approach is proposed for representing the ML model.

Data Provenance. The area of data provenance is also relevant and falls under the provenance research family. In general, the problem is defined by auditing if a certain piece of data has been used to train a machine learning model [30, 22, 19]. It is also called membership inference attacks [28, 10]. A data provenance technique (i.e., shadow training) has been widely studied, which can successfully audit deep learning-based models [28, 30]. The main idea is to use multiple shadow models to imitate the behavior of the target model. As the training data for the shadow models are known, the target model can be trained using the labeled outputs of the shadow models. However, this is a data-level provenance problem, whereas our focus is on the model-level provenance problem.

3 Model Provenance (MP)

3.1 Definition

Consider a dataset where each is a data instance and is its associated class label, a machine learning model is learned from by using an algorithm architecture . Let be a dataset used to learn a machine learning model . If is learned using the pre-training model with the same algorithm architecture , we refer to as the source model and as the homologous model of . Similarly, let be a machine learning model learned from the dataset but based on random initialization or pre-training without using . We refer as non-homologous to .

The problem of Model Provenance (MP) is to find a function such that for a given source model and any target model ,

| (1) |

When , we say is the provenance of .

Remark: The problem of Model Provenance (MP) can have various variations based on the conditions applied to the target model . For example, there would be a lot of downstream models obtained by fine-tuning, distillation, pruning, etc. In this paper, our primary focus is on the MP scenario within the fine-tuning setting, specifically when shares the same structure as , and its training solely depends on the pre-training model . Our exploration of MP is initially focused on this easier condition, and we intend to explore more complex scenarios in future research.

3.2 Discussion

In the field of continual learning, there have been several studies focused on quantifying the relationship between homologous models, such as the analysis of catastrophic forgetting [21, 11, 20]. For example, [21] provided a theoretical bound on forgetting in sequential learning. Let be the loss on the training dataset with model parameter , and and be the optimal or convergent parameters after training the source and homologous models sequentially. Specifically, they showed that

| (2) |

where and , respectively, represent the losses on source training dataset with parameters and , , and is the largest eigenvalue of the Hessian matrix . The eigenvalues of the Hessian matrix are indicative of the curvature of the loss function [11], where smaller eigenvalues imply a flatter loss function. Thus, is considered as a proxy for the flatness of the loss function (lower is flatter). Meanwhile, empirical studies conducted by [20] and [21] show that initializing models with pre-trained weights results in a relatively flat task minima, and flatter models tend to have smaller than less flat ones.

These existing works have motivated us to a straightforward solution for model provenance, i.e., using the difference of to distinguish between homologous and non-homologous models. We can expect that would be smaller for homologous models compared to non-homologous models based on the above analysis. However, we found that this solution did not work well in practice. The main issue was that it is impossible to know how flat the target model is, which makes it difficult to use the difference of as a reliable indicator of model provenance.

Furthermore, we conducted experiments using the standard continual learning setup on the image classification task [32, 24] to show the relationship between homologous models. Specifically, we examined two model architectures, ResNet18 [9] and AlexNet [15], on the CIFAR10 and CIFAR100 datasets. A source model is first trained on CIFAR10. Then we randomly select ten classes from CIFAR100 to train target models . Note that is learned by initializing its parameters using those of the source model . In Figure 1, we present the results of the experiments conducted on ResNet18, and the results for AlexNet can be found in [mu2023model]. The background color indicates the different training phases. The red color represents the training of source model on the CIFAR10 dataset, while the blue color represents the training of target model on the CIFAR100 dataset. The red line corresponds to the accuracy of testing the model on the training data of CIFAR10, while the blue line represents the accuracy of testing the model on the training data of CIFAR100. In the blue background part, we train the model based on the parameters of the model obtained in the red background part.

Figure 1 (a) demonstrates that the predictive accuracy of is significantly reduced when evaluated on the CIFAR10 dataset. The red section represents a model (called source model) trained on the source domain (CIFAR10), while the blue section represents this model (called target model) continuously trained on the target domain (CIFAR100). The red line indicates model evaluation on CIFAR10, and the blue line represents model evaluation on CIFAR100. This observation suggests that the training data of the source model may have been severely forgotten in the homologous model (i.e., large ). Figures 1 (b)-(d) provide further insight into the relationship between the source model and the homologous model . They show the predictive accuracy of on the CIFAR10 dataset when different numbers of the last layers of are replaced with corresponding layers in . In Figure 1 (b), within the blue section, we replace the model’s last layer with the model from the red section. Consequently, the model exhibits reduced performance when evaluated on CIFAR10 (red line). Similarly, in Figures 1 (c) and (d), we extended this by replacing the model’s last two/three layers with the ones from the red section, respectively. We can observe that as we replace more layers in with those from , the predictive accuracy of on the CIFAR10 dataset increases. In Figure 1 (e), we consider a scenario where in the blue section, the (target) model is not initialized by the (source) model from the red section. Instead, the (target) model is trained with random initialization. In this setting, we replace the model’s last three layers with the corresponding layers from the (source) model in the red section (as depicted in Figure 1 (a)). Figure 1 (e) shows that even with the last layers replaced, the predictive accuracy of is still poor. The green line in Figure 1 (e) represents the training accuracy of . Figures 1 (b) and (e) illustrate the presence of a relationship between the source model and its homologous model. However, non-homologous models, do not exhibit any discernible relationship even by replacing the layers between them.

Overall, our discussion has demonstrated the existence of a relationship between the source model and its homologous model, as shown by the improvement in predictive accuracy when replacing the last layers of the target model with those of the source model. These results led us to pose the question: “Can we establish a relationship between a source model and its homologous or non-homologous model based on the training data of the source model?” and motivated us to introduce the “Model DNA” and Model Provenance framework.

4 The proposed framework

4.1 Model DNA

In the field of biology, deoxyribonucleic acid (DNA) is known as the molecule responsible for carrying genetic information essential for the growth and operation of organisms [25]. In the context of Machine Learning (ML), we introduce the concept of model DNA as a form of representation for an ML model. Drawing parallels to prior research in representation learning [2], this model representation aids in identifying differences among various model iterations across diverse tasks. Furthermore, it facilitates comparisons and assessments of similarity between different models.

In the domain of machine learning, a typical process involves training an ML model using a dataset and an algorithm architecture . With this understanding, our approach to DNA generation factors in both the impact of the dataset and the model’s input-output relationship. Thus, we present the model DNA definition:

Definition 1.

(Model DNA): Let be a set of training data of a machine learning model . We define the model DNA as a set of DNA fragment , where each DNA fragment is corresponding to a training sample of the model. It can be generated as where is an approach for DNA generation. A DNA fragment is generated by for .

Through the conceptualization of model DNA, we create a latent space of model representations encompassing the diverse DNA of various ML models. In this space, we can quantify the relationships between different ML models. Here, we assume that a DNA fragment is a vector by , where . It is important to note that we generally assume that the DNA of homologous models will be positioned closer, while the DNA of non-homologous models will be relatively distant from each other. This latent space leads to several key properties of model DNA, as described below.

Let be the model DNA of any model and represent the distance function such that is the distance between DNA fragments of any two models and . The following properties of DNA latent space are desired:

-

•

Different ML models have different DNA: If , .

-

•

The homologous models should have similar DNA fragments and vice versa: , where , and . is trained based on the pre-training model , whereas is trained without using .

-

•

The DNA fragments from the same ML model should be similar: , should be minimized for .

In the following, we present our framework aimed at tackling the MP problem. This framework has been meticulously crafted to adhere to the aforementioned model DNA properties. Our approach takes into account not only the training data of the source model but also the model’s input-output to produce a comprehensive model DNA representation.

4.2 MGMP

We introduce a framework termed Model DNA Generation and Model Provenance identification (MGMP), which operates on the principles of deep representation learning. The MGMP framework comprises three primary components: DNA generation, DNA similarity loss, and provenance classifier, as depicted in Figure 2. The process commences with the source model’s training data, which is fed into the DNA generator alongside the source and target models. This concatenation produces outputs from the DNA generator, source model, and target model, which are then amalgamated to generate the model DNA, exemplified by the integration of DNA generator outcomes and model predictions. Subsequently, we formulate the model DNA for both the source and target models. To preserve information relevant to homologous and non-homologous models, a DNA similarity loss is incorporated. Finally, we exploit the model DNA to train a binary prediction network, allowing us to infer provenance outcomes. Each component of the MGMP is described in subsequent sections.

4.2.1 DNA generation

The process of DNA generation involves a combination of data-driven and model-driven techniques aimed at acquiring a representation for an ML model. Inspired by [8], we initiate the process by initializing a generator using a standard deep neural network architecture (it’s important to emphasize that the generator could potentially be constructed using more intricate deep generative models). This generator encompasses multiple hidden layers, employing the rectified linear unit (ReLU) activation function.

Given an input data point , the generator produces a latent representation denoted as (e.g., the output of the last layer). Here, signifies the learned DNA generation function realized through the deep network. The MGMP framework utilizes the training data of the source model as its own training data. Concretely, each training data point is individually fed into the DNA generator, source model, and target model, as follows:

| (3) |

Model pool. The primary objective of the proposed framework is to establish a representation space, enabling the comparison of distinct models, encompassing both those that are homologous and those that are not. To realize this objective, we utilize a model pool that encompasses a variety of models, each demonstrating different relationships with the source model. In each training mini-batch, we select a pair of models to represent the target model (as shown in Figure 2), where is learned based on the source model , while is learned based on random initialization.

For each input , the framework produces four distinct outputs. These include the foundational DNA representation , the output from the source model, and the outputs and from the homologous and non-homologous target models respectively.

DNA assemble. After that, the outputs of the DNA generator and the source/target models are merged to create a model DNA fragment for each input . The specific approach for combining these outputs can vary. In this study, we assume that the outputs are of the same dimension and are combined through addition. As a result, for each input , we can generate three distinct types of DNA fragments:

| (4) |

4.2.2 DNA similarity loss

The DNA similarity loss is designed with a specific purpose: to measure the similarity between the DNA of two models and to satisfy the properties of DNA latent space. We achieve this by employing a metric that ensures that the similarity of in the latent space is not disclosed to , which is inspired by Contrastive Learning [4, 35]. In more detail, our approach does not just preserve the relationships like and based on a single input . Instead, it takes into account the amalgamation of information across relationships present in , , and . This implies that we incorporate more relationships into the loss function, depicted in Figure 3. The solid line represents relationships used in prior works, while the dashed line signifies the relationship between distinct DNA fragments within a model.

| Source | Model pool | Evaluation | Performance | |||

| Data | Model | Data | Model | Data | Model | Accuracy |

| CIFAR10 | ResNet18 | CIFAR100 (3 6 8 9 5) | ResNet18 | CIFAR100 (1 2 4 7 0) | ResNet18 | 0.9193 0.0232 (0.75290.0347) |

| CIFAR10 | ResNet18 | CIFAR100 (6 7 0 1 2) | ResNet18 | CIFAR100 (3 4 5 8 9) | ResNet18 | 0.9511 0.0432 (0.82190.0332) |

| CIFAR10 | ResNet18 | CIFAR100 (9 3 7 8 1) | ResNet18 | CIFAR100 (4 2 5 0 6) | ResNet18 | 0.9607 0.0483 (0.8030 0.0451) |

| CIFAR10 | AlexNet | CIFAR100 (3 6 8 9 5) | AlexNet | CIFAR100 (1 2 4 7 0) | AlexNet | 0.9025 0.0472 (0.72200.0687 ) |

| CIFAR10 | AlexNet | CIFAR100 (6 7 0 1 2) | AlexNet | CIFAR100 (3 4 5 8 9) | AlexNet | 0.9247 0.0392 (0.84710.0417) |

| CIFAR10 | AlexNet | CIFAR100 (9 3 7 8 1) | AlexNet | CIFAR100 (4 2 5 0 6) | AlexNet | 0.9106 0.0331 (0.82310.0442) |

| CIFAR10 | ViT-small | CIFAR100 (3 6 8 9 5) | ViT-small | CIFAR100 (1 2 4 7 0) | ViT-small | 0.91640.0224 (0.83260.0306) |

| Source | Model pool | Evaluation | Performance | |||

| Data | Model | Data | Model | Data | Model | Accuracy |

| AGNews | DistilBERT | Amazon / DBPedia/YahooQA | DistilBERT | 20Newsgroups/Yelp | DistilBERT | 0.83770.0778 (0.72030.0936) |

| YahooQA | DistilBERT | 20Newsgroup/DBPedia/Amazon | DistilBERT | AGNews/Yelp | DistilBERT | 0.86250.0903 (0.78750.1003) |

| 20Newsgroups | DistilBERT | YahooQA/Yelp/20Newsgroups | DistilBERT | AGNews/DBPedia | DistilBERT | 0.93950.0554 (0.83710.0964) |

| AGNews | BERT-base | Amazon / DBPedia/YahooQA | BERT-base | 20Newsgroup/Yelp | BERT-base | 0.8656 0.0802 (0.70740.0918) |

Let denote the dot product between normalized vectors and (i.e. cosine similarity). We consider a training dataset of examples and define a contrastive DNA generation loss on pairs of and . The loss function is as follows:

| (5) |

where denotes a temperature parameter [4].

We also consider the DNA distance in each model side:

| (6) | ||||

The DNA similarity loss is then computed using

| (7) |

Here, quantifies the disparity between the DNA of the source model and the target model, adhering to the first and second properties of the DNA latent space. On the other hand, assesses the DNA stemming from the same model, aligning with the third property of the DNA latent space. The final term corresponds to regularization applied to the model parameters of the DNA generator.

Remark: In practice, after assembling the DNA fragments, we consider a cosine distance in our final decision (Eqn. (5) and (6)). Cosine similarity is the cosine of the angle between the vectors; that is, it is the dot product of the vectors divided by the product of their lengths. It follows that the cosine similarity does not depend on the magnitudes of the vectors, but only on their angle. Therefore, through DNA assemble with addition, the angles between the representations of the source and target models change even though the vectors only linearly move. That is why the generator can improve the results. Meanwhile, we emphasize that the part of DNA assemble is flexible, which means it can be replaced by different combining methods, e.g., concatenation or adding some networks (e.g., fully connected layers).

4.2.3 Provenance classifier

The outcome prediction network (i.e., a classifier) is employed to estimate the results of provenance prediction by taking DNA representations as input. Let denote the function learned by the outcome prediction network. We concatenate and (or and ) as input . The loss function is as follows:

| (8) |

where is the truth label on each input and . Note that if and are homologous and otherwise.

4.2.4 Joint optimization

Both the DNA generation and the outcome prediction network are conventional feed-forward neural networks, enriched with Dropout [31] and the Rectified Linear Unit (ReLU) activation function. The global optimization problem is tackled by jointly optimizing the overall loss functions described in Eqn. (7) and (8). Adam [12] is adopted to solve the optimization problem.

4.2.5 Prediction

In the prediction phase, our framework is equipped to provide predictions at various levels of granularity concerning the data. Specifically, we can choose any model (e.g., ) to ascertain its homology with the source model , based on either the DNA fragment or the DNA set . For predictions at the level of DNA fragments, the process is carried out as follows:

| (9) |

where is the concatenation of DNA fragment and corresponding to for . For the granularity of the model DNA set, we can compute the average results by considering the whole DNA fragments: if , and otherwise. In practice, we can also employ a threshold for determining the final prediction, i.e., . and can be seen as a degree of similarity of DNA. The choice of threshold depends on the specific context and requirements of the problem at hand.

5 Experiments

To evaluate the effectiveness of our proposed framework, we conduct experiments on several commonly used benchmark datasets in Computer Vision and Natural Language Processing.

| Task | Generator | || | || |

| CV | ResNet50 | 10 | 20 |

| NLP | DistilBERT | 768 | 1536 |

5.1 Experimental settings.

All experiments were conducted using Python programming language on a machine equipped with an Intel Core CPU, 64 GB of memory, and an NVIDIA Corporation GV100GL [Tesla V100 SXM2 32GB] GPU. We show the details of our proposed framework MGMP in Table 3. In the Computer Vision (CV) task, the generator’s structure is set using the ResNet50 architecture. ResNet50 is a popular Convolutional Neural Network (CNN) architecture commonly used for image classification tasks. It consists of 50 layers, including convolutional layers, pooling layers, and fully connected layers. The model parameters, such as the learning rate, are set to their default values. In the NLP task, the structure of the generator is set as DistilBERT, and the model parameters are also set to their default values. We employ three fully connected layers as the classifier in each task. Details regarding the impact of various generators can be found in Section 5.5.

| Dataset Name | Number of Samples | Features |

| CIFAR-10 | 50,000 | RGB (3232) |

| CIFAR-100 | 50,000 | RGB (3232) |

| AGNews | 20,000 | Text (4 classes) |

| YahooQA | 20,000 | Text (10 classes) |

| 20 Newsgroups | 13,370 | Text (20 classes) |

| DBPedia | 20,000 | Text (14 classes) |

| Amazon Reviews | 20,000 | Text (5 classes) |

| Yelp Review | 20,000 | Text (5 classes) |

Table 4 summarizes the datasets used in this study, including the dataset name, a brief description, the number of samples, and the types of features in each dataset. To accommodate the computational requirements and ensure efficient experimentation, we sampled a subset (20,000) from some NLP datasets as the training data.

5.2 Evaluation on CV tasks

Setup. In the CV experiment, we focus on a common image classification task. Here’s an example to illustrate the process: we start by initializing the model architecture, such as ResNet18 [9], and then train the source model using CIFAR10. Then we utilize CIFAR100 to create model pools and evaluation datasets. To achieve this, we partition CIFAR100 into 10 disjoint 10-way classification subsets. Out of these, we randomly select 5 subsets (e.g., 3, 6, 8, 9, 5) to train 10 models (consisting of 5 homologous and 5 non-homologous models) that become part of the model pool. The remaining subsets are used to train multiple homologous and non-homologous models, which then serve as evaluation data. This setup is further detailed in the first row of Table 1.

Experimental results. Table 1 presents the results of our proposed method under ResNet18, AlexNet, and ViT-small model structures on various datasets. We show performance on the granularity of the DNA fragment. Let be the total number of training data of the source model and () be the total number of data that can be identified correctly if we test the homologous (non-homologous) model as the target model. The test accuracy is defined as . Our method’s performance is consistently superior across all datasets, demonstrating its effectiveness in verifying the provenance of models.

To the best of our knowledge, no method has been designed for the MP task. We use MGMP with and without the DNA generator module (Figure 2) as a baseline method and observe that the inclusion of the DNA generator improves the provenance prediction accuracy. Specifically, the test accuracy obtained without the DNA generator (shown in parentheses) is lower, highlighting the importance of the DNA generator module in our proposed framework.

To provide a thorough insight into how DNA fragments contribute to the overall prediction, we analyze their influence by assessing performance at the DNA fragment level. Additionally, for assessing performance in terms of the DNA level, a threshold (e.g., 0.9) can be utilized to make final predictions. As demonstrated in Table 1, we consistently achieve the right provenance prediction in each row.

5.3 Evaluation on NLP tasks

Setup. For text classification tasks on various benchmark NLP datasets, we utilize the DistilBERT and BERT-base architectures as shown in Table 2. Similar to the experimental setup for CV tasks, we randomly select one dataset to train the source model, and three datasets to form the model pool. For evaluation, we use two datasets to train homologous or non-homologous models.

Experimental results. The results in Table 2 demonstrate that MGMP performs exceptionally well on the text classification task when applied to the DistilBERT model. Similarly, MGMP without the DNA generator achieves worse accuracy than the original MGMP. These results are consistent with previous evaluations on CV task. The consistent findings across both the text classification and computer vision tasks emphasize the robustness and generalizability of MGMP.

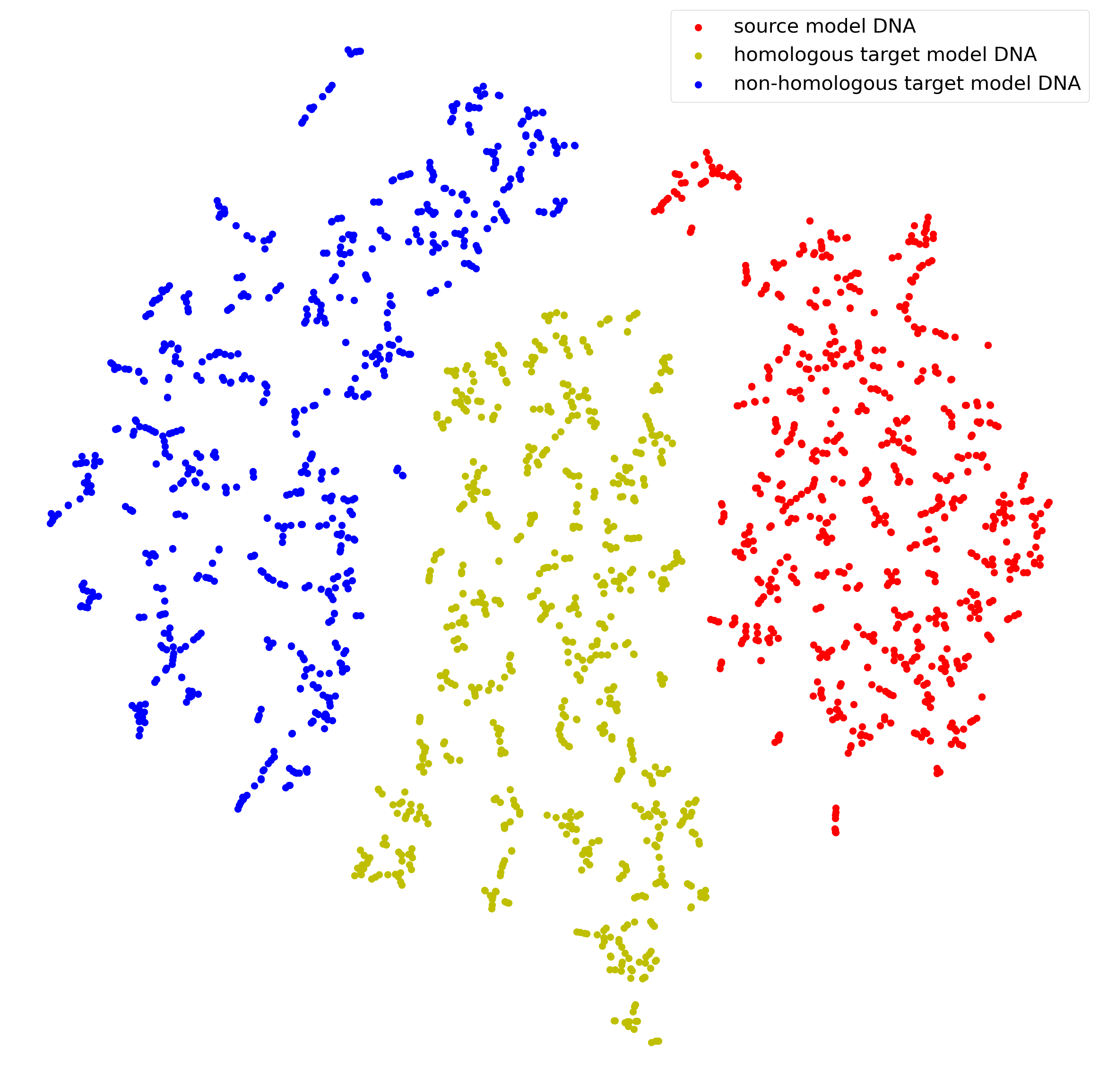

5.4 Experimental visualization.

We visualize the generated DNA fragments of the first CV experiment (i.e., the first row of Table 1). Figure 4 shows T-SNE visualizations of the space of DNA fragments results. The red points represent the generated DNA of the source model, the yellow ones are DNA from homologous target models, and the blue ones are DNA from non-homologous target models. We observe that the points belonging to homologous models are almost much closer to each other than points from non-homologous models. Specifically, the distance between the red and yellow points is much smaller than the distance between the red and blue points. This suggests that our framework is effective in capturing the similarity between homologous models and distinguishing them from non-homologous models.

5.5 Ablation study

The analysis of the MGMP framework aims to gain a comprehensive understanding of the individual components and processes within the approach. In this study, we specifically investigate the influence of different generator structures and various methods of DNA assembly. To isolate the effects of these factors, we conduct separate evaluations where one component is varied while keeping other components fixed. To establish a baseline experimental set, we consider the first row of Table 1 as the initial configuration.

We observed that the performance of different generator structures remained consistent across various evaluation metrics. We found that the DNA assembly process had a notable effect on the results. Different ways of assembling the DNA fragments resulted in variations in performance. Our results indicate that increasing the dimensionality of the DNA representation can lead to improved results within the MGMP framework. By incorporating additional dimensions into the DNA, we can capture more nuanced information and potentially enhance the performance of the model. By analyzing the results in Table 5, we gain insights into the contributions of individual components and their interactions within the MGMP framework. This analysis helps us identify the optimal configuration and provides valuable guidance for future improvements and refinements of the approach.

| Component | Performance | |

| Generator | DNA assemble () | Accuracy |

| ResNet50 | addition (10) | 0.9193 |

| ViT-samll | addition (10) | 0.9004 |

| ViT-base | addition (10) | 0.9137 |

| ResNet50 | concatenate (20) | 0.9264 |

| ResNet50 | concatenate (60) | 0.9416 |

| ResNet50 | concatenate (110) | 0.9478 |

6 Conclusion

In this paper, we present an efficient model representation learning framework for tackling an important problem, namely Model Provenance. We introduce a new idea of model DNA to represent a machine learning model. The proposed framework first constructs the model DNA space which preserves similarity information between homologous models and enhances the differences between non-homologous models, and then uses model DNA to obtain provenance outcomes. Experimental results on different tasks show that our method achieves good performance in the MP task.

References

- Aljundi et al. [2017] R. Aljundi, P. Chakravarty, and T. Tuytelaars. Expert gate: Lifelong learning with a network of experts. In CVPR, pages 7120–7129, 2017.

- Bengio et al. [2013] Y. Bengio, A. C. Courville, and P. Vincent. Representation learning: A review and new perspectives. IEEE Transactions on Pattern Analysis and Machine Intelligence., 35(8):1798–1828, 2013.

- Chaudhry et al. [2019] A. Chaudhry, M. Ranzato, M. Rohrbach, and M. Elhoseiny. Efficient lifelong learning with A-GEM. In ICLR, 2019.

- Chen et al. [2020] T. Chen, S. Kornblith, M. Norouzi, and G. E. Hinton. A simple framework for contrastive learning of visual representations. In ICML, pages 1597–1607, 2020.

- Chen and Liu [2018] Z. Chen and B. Liu. Lifelong Machine Learning, Second Edition. Synthesis Lectures on Artificial Intelligence and Machine Learning. Morgan & Claypool Publishers, 2018.

- Csiszárik et al. [2021] A. Csiszárik, P. Korösi-Szabó, Á. K. Matszangosz, G. Papp, and D. Varga. Similarity and matching of neural network representations. In NeurIPS, pages 5656–5668, 2021.

- Dixon et al. [2020] M. F. Dixon, I. Halperin, and P. Bilokon. Machine learning in Finance, volume 1170. Springer, 2020.

- Goodfellow et al. [2020] I. J. Goodfellow, J. Pouget-Abadie, M. Mirza, B. Xu, D. Warde-Farley, S. Ozair, A. C. Courville, and Y. Bengio. Generative adversarial networks. Communications of the ACM, 63(11):139–144, 2020.

- He et al. [2016] K. He, X. Zhang, S. Ren, and J. Sun. Deep residual learning for image recognition. In CVPR, pages 770–778, 2016.

- Hu et al. [2021] H. Hu, Z. Salcic, G. Dobbie, and X. Zhang. Membership inference attacks on machine learning: A survey. CoRR, abs/2103.07853, 2021.

- Keskar et al. [2017] N. S. Keskar, D. Mudigere, J. Nocedal, M. Smelyanskiy, and P. T. P. Tang. On large-batch training for deep learning: Generalization gap and sharp minima. In ICLR, 2017.

- Kingma and Ba [2015] D. P. Kingma and J. Ba. Adam: A method for stochastic optimization. In Y. Bengio and Y. LeCun, editors, ICLR, 2015.

- Kirkpatrick et al. [2017] J. Kirkpatrick, R. Pascanu, N. Rabinowitz, J. Veness, G. Desjardins, A. A. Rusu, K. Milan, J. Quan, T. Ramalho, A. Grabska-Barwinska, et al. Overcoming catastrophic forgetting in neural networks. Proceedings of the national academy of sciences, 114(13):3521–3526, 2017.

- Kornblith et al. [2019] S. Kornblith, M. Norouzi, H. Lee, and G. E. Hinton. Similarity of neural network representations revisited. In ICML, pages 3519–3529, 2019.

- Krizhevsky et al. [2012] A. Krizhevsky, I. Sutskever, and G. E. Hinton. Imagenet classification with deep convolutional neural networks. In NIPS, pages 1106–1114, 2012.

- Lange et al. [2022] M. D. Lange, R. Aljundi, M. Masana, S. Parisot, X. Jia, A. Leonardis, G. G. Slabaugh, and T. Tuytelaars. A continual learning survey: Defying forgetting in classification tasks. IEEE TPAMI, 44(7):3366–3385, 2022.

- Le-Khac et al. [2020] P. H. Le-Khac, G. Healy, and A. F. Smeaton. Contrastive representation learning: A framework and review. IEEE Access, 8:193907–193934, 2020.

- Lopez-Paz and Ranzato [2017] D. Lopez-Paz and M. Ranzato. Gradient episodic memory for continual learning. In NIPS, pages 6467–6476, 2017.

- Maini et al. [2021] P. Maini, M. Yaghini, and N. Papernot. Dataset inference: Ownership resolution in machine learning. In ICLR, 2021.

- Mehta et al. [2021] S. V. Mehta, D. Patil, S. Chandar, and E. Strubell. An empirical investigation of the role of pre-training in lifelong learning. CoRR, abs/2112.09153, 2021.

- Mirzadeh et al. [2020] S. Mirzadeh, M. Farajtabar, R. Pascanu, and H. Ghasemzadeh. Understanding the role of training regimes in continual learning. In NeurIPS, 2020.

- Mu et al. [2022] X. Mu, M. Pang, and F. Zhu. Data provenance via differential auditing. CoRR, abs/2209.01538, 2022.

- Parisi et al. [2019] G. I. Parisi, R. Kemker, J. L. Part, C. Kanan, and S. Wermter. Continual lifelong learning with neural networks: A review. Neural Networks, 113:54–71, 2019.

- Paul et al. [2021] M. Paul, S. Ganguli, and G. K. Dziugaite. Deep learning on a data diet: Finding important examples early in training. In NeurIPS, pages 20596–20607, 2021.

- Ridley [2000] M. Ridley. Genome: the autobiography of a species in 23 chapters. Nature Medicine, 6(1):11–11, 2000.

- Rusu et al. [2016] A. A. Rusu, N. C. Rabinowitz, G. Desjardins, H. Soyer, J. Kirkpatrick, K. Kavukcuoglu, R. Pascanu, and R. Hadsell. Progressive neural networks. CoRR, abs/1606.04671, 2016.

- Shehab et al. [2022] M. Shehab, L. Abualigah, Q. Shambour, M. A. Abu-Hashem, M. K. Y. Shambour, A. I. Alsalibi, and A. H. Gandomi. Machine learning in medical applications: A review of state-of-the-art methods. Computers in Biology and Medicine, 145:105458, 2022.

- Shokri et al. [2017] R. Shokri, M. Stronati, C. Song, and V. Shmatikov. Membership inference attacks against machine learning models. In S&P, pages 3–18, 2017.

- Sodhani et al. [2020] S. Sodhani, S. Chandar, and Y. Bengio. Toward training recurrent neural networks for lifelong learning. Neural Computing, 32(1):1–35, 2020.

- Song and Shmatikov [2019] C. Song and V. Shmatikov. Auditing data provenance in text-generation models. In KDD, pages 196–206, 2019.

- Srivastava et al. [2014] N. Srivastava, G. E. Hinton, A. Krizhevsky, I. Sutskever, and R. Salakhutdinov. Dropout: a simple way to prevent neural networks from overfitting. The Journal of Machine Learning Research, 15(1):1929–1958, 2014.

- Toneva et al. [2019] M. Toneva, A. Sordoni, R. T. des Combes, A. Trischler, Y. Bengio, and G. J. Gordon. An empirical study of example forgetting during deep neural network learning. In ICLR, 2019.

- van den Oord et al. [2018] A. van den Oord, Y. Li, and O. Vinyals. Representation learning with contrastive predictive coding. CoRR, abs/1807.03748, 2018.

- Wang et al. [2020] Z. Wang, S. V. Mehta, B. Póczos, and J. G. Carbonell. Efficient meta lifelong-learning with limited memory. In EMNLP, pages 535–548, 2020.

- Yuan et al. [2021] X. Yuan, Z. Lin, J. Kuen, J. Zhang, Y. Wang, M. Maire, A. Kale, and B. Faieta. Multimodal contrastive training for visual representation learning. In CVPR, pages 6995–7004, 2021.

- Zenke et al. [2017] F. Zenke, B. Poole, and S. Ganguli. Continual learning through synaptic intelligence. In ICML, pages 3987–3995, 2017.

Appendix A Discussion

Appendix B The experimental visualization on NLP task.