Model Predictive Impedance Control with Gaussian Processes for Human and Environment Interaction

Abstract

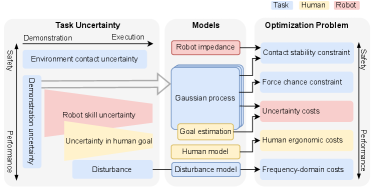

Robotic tasks which involve uncertainty–due to variation in goal, environment configuration, or confidence in task model–may require human input to instruct or adapt the robot. In tasks with physical contact, several existing methods for adapting robot trajectory or impedance according to individual uncertainties have been proposed, e.g., realizing intention detection or uncertainty-aware learning from demonstration. However, isolated methods cannot address the wide range of uncertainties jointly present in many tasks.

To improve generality, this paper proposes a model predictive control (MPC) framework which plans both trajectory and impedance online, can consider discrete and continuous uncertainties, includes safety constraints, and can be efficiently applied to a new task. This framework can consider uncertainty from: contact constraint variation, uncertainty in human goals, or task disturbances. An uncertainty-aware task model is learned from a few () demonstrations using Gaussian Processes. This task model is used in a nonlinear MPC problem to optimize robot trajectory and impedance according to belief in discrete human goals, human kinematics, safety constraints, contact stability, and frequency-domain disturbance rejection. This MPC formulation is introduced, analyzed with respect to convexity, and validated in co-manipulation with multiple goals, a collaborative polishing task, and a collaborative assembly task.

1 Introduction

Manipulation with physical contact is essential for robotics, where impedance control111Impedance is used to refer to general physical interaction control, i.e., both impedance and admittance control. is often paramount to robust and safe execution. This is especially critical in tasks that involve physical human-robot interaction (HRI), where the robot compliance allows the human co-worker to safely affect robot motion for teaching or online collaboration. Impedance control defines the characteristics of physical interaction by stiffness, damping, and inertia parameters [1]. These parameters must be appropriately adapted to an application, with a large number of proposed methods to determine appropriate impedance parameters. Nevertheless, in practice, the tuning of these parameters is often done with ad-hoc or application-specific methods.

Some recent works propose generalizable approaches to adapting impedance parameters based on machine learning and human-in-the-loop approaches. A common theme in impedance adaptation is the degree of uncertainty. From the literature, we identify five key categories related to uncertainty and impedance control. 1) In learning from demonstration (LfD), uncertainty derived from the variability in demonstrations of trajectories can be used to infer lower robot stiffness, since the human demonstrator does not seem to care about precision in that section of motion [2, 3]. 2) In LfD, the robot’s available confidence in the skill is used, where stiffness can be reduced when entering unexplored regions as actions might be unsafe [4]. 3) When faced with uncertainty in environment constraints in task execution, the robot should have lower stiffness in order not to produce dangerously high forces when the contact is unexpected [5, 6, 7]. 4) In case of uncertain external perturbations the robot should increase the impedance to ensure the accuracy of position tracking task [8, 9]. 5) When there is uncertainty in human goal during collaborative tasks, the robot should be compliant to let them determine a specific degree of freedom (DoF). For example, when the human should lead the collaborative carrying of an object [10], or when the human should guide a polishing machine that is carried by the robot [11].

Nevertheless, scaling up impedance control to different tasks, conditions and environments while accounting for human goals in real-time is still a major challenge in physical HRI. The existing methods typically focus on either one or a couple of these uncertainty categories, although many tasks feature several of them. We aim to develop a framework which can account for multiple types of uncertainties for a unified physical HRI approach. The goal is for robots to be able to plan trajectories and adapt impedance for semi-structured tasks in physical contact with humans and environments, without needing an analytical model of environment/human or large amounts of data. To attain that goal, we propose a planning and optimization framework with probabilistic models of external forces over robot state. This acts as an outer loop controller, adapting robot trajectory and impedance parameters. The main contributions of this work are:

-

•

A taxonomy of uncertainty in physical interaction, including their sources, consequences for safety and performance, and a unified problem formulation to address these with adaptive impedance control.

-

•

Unlike existing approaches that use GPs as direct robot reference behaviour generators (e.g., [12]), we use them as intermediate task models which combine with the robot impedance in a Model Predictive Control (MPC) problem, allowing explicit objectives and constraints which depend on the trajectory uncertainty.

-

•

Unlike existing sampling-based approaches to adapting impedance online (e.g., [13]), we propose the first gradient-based optimization of impedance, which allows constraints such as maximum contact force and contact stability.

-

•

The approach is experimentally validated, allowing human goal inference, increasing impedance damping for contact stability, and reducing impedance in directions of task variation, with Hz MPC rates. The code222https://gitlab.cc-asp.fraunhofer.de/hanikevi/gp-mpc-impedance-control and video333https://youtu.be/Of2O3mHfM94 are available.

The uncertainty category 1) related to variability in demonstrations can be accounted for in the proposed framework through GP covariance, but we note this can produce unsafe impedance skills when the low demonstration variance arises from contact with the environment [7]. The proposed framework also addresses the uncertainty category 2) related to confidence in skill by leveraging on GP model uncertainty in cost function and optimization constraints. The uncertainty category 3) related to environment constraints is handled by the increase in GP covariance induced by fitting hyperparameters on data with contact variance. An additional frequency-domain perturbation model is used to resolve the uncertainty category 4) related to external perturbations. The uncertainty category 5) related to human goal estimation is addressed by taking the expectation over the belief in human goal, inferred from the GP.

A preliminary study was presented at the 2022 IEEE International Conference on Robotics and Automation [14]. This paper significantly expands upon the previous study by an extended theoretical formulation of the method that accounts for all identified uncertainties, adding two types of MPC constraints, a new theoretical analysis of impedance optimization, and a new experimental evaluation with three additional practical tasks involving physical HRI (collaborative rail assembly, double peg-in-the-hole, and polishing) where we examine new aspects (task uncertainty, goal uncertainty, and human ergonomics).

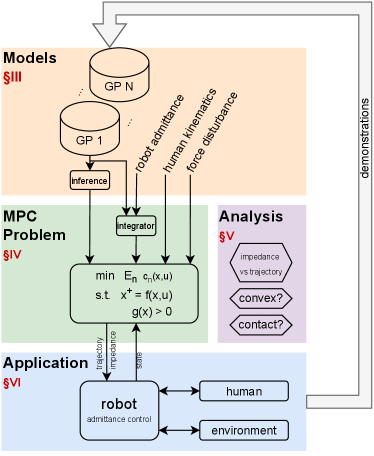

The remainder of the paper is structured as follows (see also Fig. 2 for overview). The related work is given in Section 2. The modeling using GP is described in Section 3. The MPC problem formulation is provided in Section 4. This is followed by a theoretical analysis in Section 5. Finally, experimental validation on practical tasks is described in Section 6.

2 Related work

Here we examine the proposed method with respect to related work in the literature. We first outline the work done in physical human-robot interaction where human intention modeling plays a critical role in seamless collaboration. Then we proceed to robot impedance adaptation, which is a fundamental part of the robot’s low-level actions as a result of the inferred intention. Finally, we review planning in contact tasks.

2.1 Physical human-robot interaction

An important challenge in physical HRI is the ability of robots to infer human co-worker’s goals and states. To address this challenge, several recent works have proposed inference techniques and applications. The study in [15] showed that robot adaptation to the human task execution can improve task-related performance. Robot adaption to the human state can also improve ergonomics [11]. Finally, user satisfaction can also be improved by robot adaptation [16]. Nevertheless, to adapt to these different aspects, the robot should be able to infer the human state, which often requires complex sensory setups [17, 18]. However, complex measurement systems are not always available or feasible for practical applications, thus some specific states can be modeled, such as the desired motion of the robot/payload [19, 20, 21, 22], human actions [23], preferences [24, 25], human physical fatigue [11], cognitive stress [26], and suitability of shared workspace [27, 28, 29, 30].

For seamless collaboration, the adaption of the robot to the human should be prompt, and thus the human intent or goal must be inferred during the task execution [31, 11]. Human intent is often considered as a continuous variable [15, 22], however, it can also reflect discrete changes in the task [32], e.g., a collection of possible goals. Discrete human state has been considered for virtual fixtures [33], Dynamic Movement Primitives (DMPs) [32], and impedance control [34].

When the robot has the knowledge of the human state and intent, it must respond promptly with appropriate actions to facilitate the collaborative task execution. This typically means generation of motion trajectories and often simultaneous impedance adaptation. The trajectories can be chosen to optimize task-related objectives: reducing trajectory jerk [35, 36], minimize positioning error [8, 37, 38], or render appropriate velocity response [39, 40]. In addition, human operator-related objectives can be optimized as well, such as minimizing interaction forces [41] or metabolic cost [42]. A common rule is that the robot can take over aspects of the task that require precision, while the human can handle adaption to variations. The ‘minimum intervention principle’ [43] follows this rule, where uncertain DOF should have a lower stiffness [44, 45], which can also be interpreted as a risk-sensitive control. Nevertheless, such an approach is not reasonable for all interactive tasks that involve physical constraints [7].

The literature can address the human-related properties (e.g., actions, preferences, ergonomics, etc.), real-time intention processing, and uncertainty in an isolated manner. However, a unified framework that can effectively combine all these aspects for practical manufacturing tasks is still missing.

| Principle | Robot impedance should: | Co-manip. | Env. Contact | Autonomy | |

| Dynamics | complementarity [46] | be inverse of environment | - | - | - |

| stability [47, 9] | stabilize unstable dyn | [8] | [48] | full | |

| well-damped [39] | reduce oscillation or jerk | [39, 10] | [49] | interact, full | |

| Optimal Control | min intervention [2] | stiff where low variance | [50, 43, 51] | [51] safetya [7] | interact, full |

| game theory [52] | min coupled cost function | [53] | - | interact | |

| reinf. learning | min cost function | [36] | [54, 55] | full | |

| Transfer Hum Imp | human hand imp [3] | match human impedance | - | [56] | full |

| co-contraction [57] | prop or inv to co-contraction | - | [57] | interact | |

| demo dynamics [34] | which explains demos | [34] | - | interact | |

aThis relates to the robot being stiff while being rigidly constrained by a potentially high-stiffness environment, which can cause contact instability.

2.2 Impedance adaptation

Physical HRI requires a safe and robust low-level control approach to account for the uncertainty of practical tasks [57]. Classic position or force controllers often fail to perform well in such complex settings since they only focus on controlling either one of the variables, but not the characteristics of the interaction that define their relationship, i.e., impedance. Impedance adaption is empirically important for safety and robustness in manipulation, and has been shown to improve sample efficiency of higher-level learning control [58]. Accordingly, a wide range of techniques has been proposed for adjusting impedance parameters. Recent surveys provide a thorough overview of this field [59, 60], so we present only an abbreviated summary in Table 1.

Dynamics-based objectives are often based on continuous-time dynamic models, considering factors like stability and damping of the coupled system. A challenge is that environment dynamics (e.g., stiffness) are application-specific. In some cases, the environment dynamics are known or controlled [47], in other cases properties of the dynamics (e.g., damping) can be found from trajectories [61].

Other approaches plan to minimize a general cost function, which may include the human or environment state [53]. Such approaches allow consideration of uncertainty, but typically also require models of humans or the environment, which are typically simplified such as assuming human force is a PD controller [43]. Data-driven methods such as reinforcement learning can be applied [54, 55], but often require lots of trial and error.

An approach in [4] uses GP models to encode demonstrated desired robot movements and then leverages on the measure of uncertainty in the modeled skill to modulate the stiffness through a direct expression. If the robot experiences unexpected external perturbations, the impedance controller forces the motion into the regions of higher certainty to be more confident in the given skill. If the robot still ends up in a region of high uncertainty, the stiffness is reduced as there might be insufficient confidence in the skill to safely perform the movements there. Nevertheless, there was no higher-level planner (e.g., MPC) to exploit given learned trajectories to generate new ones.

From this, we conclude that there is a wide range of objectives and models–it seems unlikely that a single principle would be suited for all or a large majority of these areas. Accordingly, we propose a framework for optimizing impedance, capable of accommodating a range of models and objectives.

2.3 Planning in contact tasks

Intention modeling and low-level impedance control alone can work well for simplified tasks. However, for a more flexible robot behavior, a higher-level planner is required. MPC is becoming increasingly popular for manipulation [62, 13], and is already a standard tool for locomotion and whole-body control, capable of planning through contact models with either linear complementarity [63] or switching dynamic modes [64]. Contact can be considered as fully rigid constraints, using time-stepping approaches to resolve the contact impulse over an integrator time step [65], avoiding the issue of unbounded contact forces in rigid contact. A dynamic model for the environment has also been proposed for manipulation [66], relaxing kinematic constraints into a stiffness. In most contact planning situations, signed distance functions are needed–typically, this requires geometry information typically given by CAD, although it has been learned on simplified objects [67].

We make two observations from the perspective of manipulation: 1) signed distance functions are difficult with the complex geometries typical in assembly and manipulation tasks, and 2) compliance is both common and critical–be it physical or by control. To address this, we use a compliant contact model here (regressing contact force over robot position) which does not require a signed distance function, and we consider the optimization of impedance parameters in the MPC problem.

3 Models

This section presents the models used: GP models of force, human kinematics, robot admittance, and force disturbance. This aspect is highlighted by the orange part in Fig. 2.

3.1 Force models

For a robot with a tool-center point (TCP) pose , we consider three types of external forces:

Human forces are a function of robot state and human mode , where indexes possible human behavior (e.g., goals).

Contact forces depend on an environment configuration which is typically unmeasured and may vary between iterations, such as a contact or constraint position.

Disturbance forces such as polishing, cutting, or other process forces which should not affect robot position. We assume they can be represented in a frequency-domain model , e.g., they are predominantly high-frequency.

We use one force/torque (F/T) sensor, such that demonstrations measure total force . While multiple F/T sensors can separate human and environment forces [56, 55], a single F/T sensor reduces complexity. A disadvantage of a single F/T sensor is that in co-manipulation with contact, contact may be more difficult to identify, although this can be partly addressed by how the data is collected. Another disadvantage of this approach is that forces are regressed over position, which may be challenging in stiff contact. We validate both these aspects in Section 5.3 and Fig. 5.

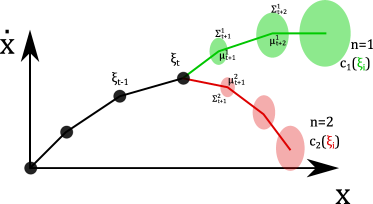

3.1.1 GP Models

GPs are used to regress force over pose for each mode , based on a dataset of measured pose and force . We fit a GP Model [68, p13] that gives a Gaussian variable for a pose , given a mean function , kernel function , and observed data . The GP models for each dimension of are fit independently and the covariance is therefore diagonal. In the sequel, we consider the fitting of a single dimension of with corresponding scalars , , .

Here, we use a squared exponential kernel of the form

| (1) |

with hyperparameters for the noise variance and as the length scale. The GP then defines a marginal Gaussian distribution for a test point as

| (2) |

where mean , covariance are built from kernel matrices and , where the element is .

A straightforward way to incorporate prior knowledge is a mean function. A common mean function is the zero function, . To better model contact forces , a hinge function is also used for the mean:

| (3) |

where is the element of which matches the dimension of the force being fit , and constants are hyperparameters which are fit from data.

The data for these models are collected from initial demonstrations where the robot is manually guided by the human. These models are built and the hyperparameters are fit (according to negative log-likelihood) independently for each mode and dimension of . By implementing them in an automatic differentiation framework, the gradient of the mean and covariance with respect to pose can be efficiently calculated for the MPC problem.

3.2 Robot admittance dynamics

This subsection develops the dynamics of a robot with a Cartesian admittance controller. An admittance is used as the hardware implementation here uses a position-controlled industrial robot. As impedance and admittance are just inverse mathematical viewpoints of the interactive dynamics, the dynamics here can be readily adapted to impedance-controlled robots. Consider a rendered admittance in the TCP frame which approximates the continuous-time dynamics of

| (4) |

with pose , velocity , acceleration , measured force and force reference . The impedance parameters are inertia , damping , and stiffness matrices, which are here all diagonal, and which is the rest position of the spring. The rotational elements of and are the angles and torques, respectively, about the three axes of the TCP frame. In the sequel, and are dropped as it was found empirically that the quasi-static tracking can be handled by the MPC adjusting .

3.2.1 Integrator

To formulate an MPC problem, the dynamics in (4) have to be discretized. Let be the sample time, subscript denote the value at time , and state vector .

3.2.2 Stochastic dynamics

We can rewrite (5) in the form of

| (6) |

where system matrices , depend on impedance parameters . Consider a Gaussian distribution over state , we find

| (7) | |||||

| (8) |

where denotes the value of at time step . Let the vectorization of the covariance matrix be denoted and similarly . Vectorizing (8) gives [69, (520)]

| (9) |

where is the Kronecker product.

3.3 Human kinematics

Ergonomic costs are more naturally expressed in terms of human joint torques [18, 30], so we also consider a 4-DOF kinematic model of the human arm with three rotational DOF at the shoulder and one rotational joint for the elbow extension. This model has parameters of and , for the length of the upper and lower arm, and for the spatial position of the human shoulder. Using these parameters and the human joint angles , we can calculate the human hand position with forward kinematics as

| (10) |

Forces measured at the end-effector can be translated into human joint torques as

| (11) |

where are linear forces at the human hand, and is the standard Jacobian matrix of (10) with respect to .

Task-specific knowledge can be used to model or . In most cases, we simplify the human kinematics by assuming the internal/external rotation of the arm is zero, , and that , i.e., the human hand is at the robot TCP, allowing us to write closed-form inverse kinematics and evaluate (11).

3.4 Inference of mode

The GPs are then used in a Bayesian estimate of the current mode, where a belief at time is denoted as , , and . This belief is updated recursively as

| (12) |

where and are the mean and covariance of the GP model in mode evaluated at , and is normalized after the update such that .

4 MPC Problem

This section presents the MPC optimization problem: the constraints, cost functions, and multiple shooting formulation. This aspect is highlighted by the green part in Fig. 2.

| Feature | Functionality | Defined | Experiment | Rate (Hz) | |

| baseline | - | ||||

| Human | discrete modes | human goal estimation | dual peg-in-hole §6.1 | ||

| human joint torque | human ergonomics | (11) | polishing §6.3 | ||

| model variance | repeatable task DOF | (1) | rail §6.2 | ||

| Task | constraint uncertainty | (1) | contact §6.1 | ||

| disturbance reject | task performance | (16) | polishing §6.3 | ||

| well-damped | contact stability | (20) | contact §6.1 | ||

| chance constraint | safety | (18) | contact §6.1 |

4.1 Optimization Problem

The MPC problem is formulated using multiple-shooting transcription with a generic problem statement of

| (13) |

where is the planning horizon, is the range of allowed inputs, the slack for the continuity constraints (the inequality is applied element-wise), is from (7), following (9), and are inequality constraints to be presented in 4.3. The cost function is shown with it’s parameter argument and decision variable , the detailed cost function provided in (4.2.1). Initial covariance is set as it’s assumed that the robot state is fully observed.

The constraints in (13) are nonlinear, so an interior-point nonlinear optimization solver is used. While nonlinear, the problem is writtein in an automatic differentiation framework, allowing calculation of the gradient and Hessian of the objective and constraints, significantly improving convergence.

The MPC framework here allows for different choices of decision variable , which can include any of the following:

where and are a change in the impedance parameters and .

4.2 Costs

4.2.1 Stage cost function

The general stage cost function is defined as

| (14) |

where and are mean and covariance for the predicted state in mode , and are the mean and covariance of the GP forces in mode , are the human joint torques from (11), are control inputs, and are the related weight-matrices for each cost factor. State cost includes just a small penalty on velocity, the trajectory following is implicit in GP mean and covariance. In some applications (e.g. the rail assembly here 5), replacing with is used to match the human demonstrations.

4.2.2 Human Joint Torque Cost

Human arm manipulability plays a key role in ergonomics [28, 30], as it relays the information about how well the joint torques can be transferred into the end-effector force where the task is produced. Therefore, we defined the ergonomic cost as

| (15) |

where, when , defines the standard manipulability ellipsoid. This is a general formulation and the composition of the human end-effector force can be specified based on the task. For example, in the case of a polishing task, the structure to be polished itself supports the gravity force, which thus does not play a key role. Here, the dominant force component is related to the movement of the robot along a path, therefore we use the damping forces of the robot , where provides a weighting according to the direction of the expected forces. This term explicitly includes the impedance parameter, allowing the damping to be optimized to direction of expected motion and ergonomics. Nevertheless, the proposed formulation is general and can include gravity force and other static forces for tasks such as carrying and drilling.

4.2.3 Disturbance Rejection

In many tasks, certain forces are external perturbations and should not influence the robot position, e.g., forces arising from the rotation of a polishing disk in a polishing task. In some cases, these forces may be more easily distinguished in the frequency domain, where typical human forces are low-frequency, and disturbance forces may be medium- to high-frequency. Frequency-dependent costs can be considered through an augmented state model [70], but this increases state dimension (and thus computational complexity) and requires the cost can be expressed from the inputs or state. Here, measured force is not an input to the MPC problem, thus a new approach is taken.

We consider a disturbance force model in Laplace domain as , where and adjust the magnitude and cut-off frequency. This model is a simple high-pass filter, distinguishing from lower-frequency human input. This disturbance results in robot position deviation according to the admittance dynamics , where . Recall the equivalency of the two-norm in time and Laplace domain, by Parseval’s theorem [71], and that can be found by the observability grammian as

| (16) | |||||

| (17) |

where are a state-space realization of [71]. The Lyapunov equation in (17) is differentiable [72] with respect to admittance parameters , allowing the efficient calculation of the Jacobian and Hessian of this objective for the optimization problem. Note that the naïve solution of (17), by vectorizing and a linear solve may have numerical issues when the matrices are poorly conditioned, in such case using specialized solvers like Bartels-Stewart can improve performance.

4.3 Constraints

Impedance parameters affect contact safety. To improve contact safety, constraints for well-damped contact and force limit are formulated.

4.3.1 Force limit chance constraint

A fundamental concern in contact is avoiding excessive force; with a model of force over robot pose, this constraint can be directly transcribed. This force model may have uncertainty arising from:

-

1.

Variation in environment constraint location

-

2.

Exploration of a new region or confidence in the skill

Both these uncertainties are captured in the GP regression here. For 1), the GP will fit hyperparameters which increase the uncertainty in the force model (validated in §5.3). For 2), when farther from the training data, the GP will revert to a baseline variance given by hyperparameter .

A chance constraint is a constraint applied to a random variable which holds for a specified degree of certainty. For a scalar Gaussian variable , a chance constraint can be re-written over mean and covariance as

| (18) |

where is an upper bound on the force, is the inverse of the error function and is a tuning parameter for the confidence that the chance constraint should hold [73].

It can be seen that the uncertainty adds a conservatism to the force bound; as gets larger, the mean force must be kept smaller. This constraint is applied element-wise on the linear forces, evaluating the GP at the final MPC trajectory point to reduce computational complexity.

4.3.2 Well-damped contact constraint

One challenge in admittance control is contact stability with environment constraints, where a higher-stiffness environment typically requires higher virtual damping [74]. Ad hoc methods can increase damping near contact [75], but make assumptions such as the robot is slowing down before contact. Here, the environment stiffness can be estimated from the mean function of the GP model as

| (19) |

where is the estimated environment stiffness in the direction at pose . With this estimated stiffness, the dynamic parameters can be directly constrained using the condition for well-damped 2nd order systems

| (20) |

where is critically-damped, and introduces a safety margin which can help account for the non-idealized admittance dynamics. This constraint is applied to the entire MPC trajectory .

5 Analysis

This section, shown in purple in Fig. 2 shows some properties of the MPC problem: how trajectory and impedance optimization differ, necessary conditions for convexity, and investigates the ability to model contact with GPs.

5.1 Optimization of Impedance vs Trajectory

Why should we optimize impedance parameters instead of just the trajectory ? Taking the differential of the linear-Gaussian dynamics (7) and (9),

| (21) |

where prefix indicates total differential and the partial derivative with respect to . We note two properties: i) input doesn’t affect the covariance , and ii) state is determined by both control input and impedance parameters , i.e., different combinations of force and impedance parameters can realize a certain change in , as previously noted in [54].

When the robot increases stiffness, the mean and variance of the robot’s trajectory are affected, for example reducing response to force disturbances. However, changing the robot’s force reference only affects the mean trajectory. This shows that explicit consideration of trajectory covariance is important for impedance, thus the MPC trajectories should be stochastic when optimizing impedance.

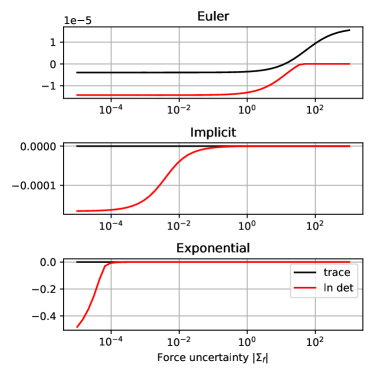

5.2 Convexity over impedance parameters

For the optimization problem to be efficient, the objective should be convex with respect to the decision variables [76]. As optimizing with respect to parameters of the dynamics (e.g., impedance parameters) is not studied, we check the convexity of a toy cost function with respect to impedance parameters, denoted . As impedance parameters mostly affect covariance, we consider a simplified objective in a single step shooting problem of , where by results from [69],

The convexity of this objective will depend on the impedance parameter values, the choice of the integrator (which affects and ), and the relative magnitude of and . Note that , which gives . Thus increasing can increase the minimum eigenvalue of the Hessian of the objective (5.2). The terms for damping are more complex, thus numerical analysis is carried out.

These results are validated numerically to see what matrix cost and integrator have better convexity. The minimum eigenvalue of the Hessian and are compared for three different integrator schemes: explicit Euler , implicit , and exponential . We can see in Fig. 4 that the minimum eigenvalue generally increases as increases. Using a is unilaterally better than , and is thus used in the cost function here. The implicit integrator has better average performance over the range of —notably, the performance of the explicit Euler integrator is poor; the Hessian is indefinite for typical parameter values. Thus, here we use the trace of matrix costs with an implicit integrator. Also note that convexity improves as increases, as suggested in the above analysis.

5.3 Contact modeling

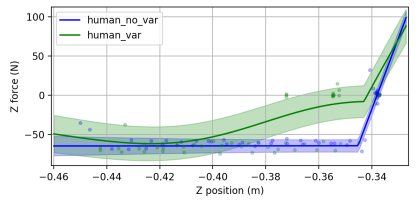

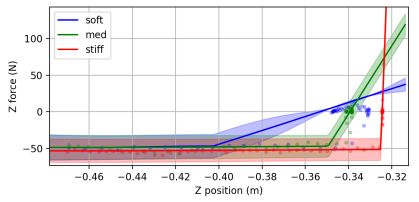

We investigated the ability of the GPs to model contact by verifying 1) that GPs can identify the contact in human-guided contact scenarios, 2) the impact of variation in the contact position, and 3) the impact of environment stiffness on the ability to regress the models. These together allow the direct modeling of environment constraint uncertainty in realistic contact. The hyperparameters in the following were all fit to minimize negative log-likelihood from the same initial values.

When the human guides the robot into contact, the sum of human and environmental forces is measured. We collect human-guided contact and autonomous contact data (i.e. the robot makes contact without human forces), and compare the GPs which are fit to both of them. In Fig. 5a, we see that both models identify the contact location with the hinge mean function, but the human data identifies a lower stiffness and a contact position cm too high. In the applications here, this error was acceptable, but this is application-specific.

Next, the contact position was varied between trials, with a total variation of cm over three trials. The result can be seen in Fig. 5b, where the three contact positions can be seen and the higher covariance in the fit GP (green) can be seen compared to the constant contact position (blue).

Finally, contact with environments of various stiffnesses was made, with corresponding stiffness of N/mm. The resulting quality of the fit can be seen in Fig. 5c, where the hinge function can readily identify the changes in contact position and stiffness.

6 Validation

This section presents four sets of experiments to validate the various features of the proposed framework. These validation experiments should provide useful pointers for the application of the proposed method in real-world scenarios (the blue part in Fig. 2). Videos are attached as multimedia and can be found at https://youtu.be/Of2O3mHfM94. MPC and GP parameters used for each experiment can be found at the repository https://gitlab.cc-asp.fraunhofer.de/hanikevi/gp-mpc-impedance-control

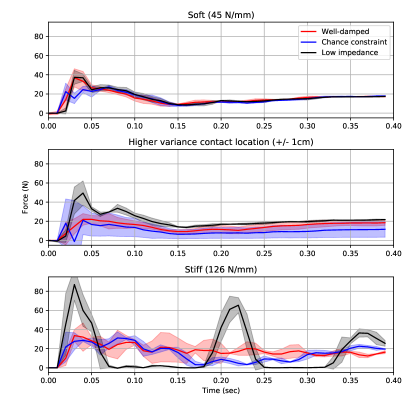

6.1 Contact uncertainty and stiffness

We first used a contact task to validate safe contact with the environment. In particular, we examined the effects of force chance constraint and well-damped constraint as contact variance or stiffness increases. The robot was taught a task moving from free space into contact, which resulted in GP models as seen in Section 5.3. The contact location could be varied by adding blocks (Fig. 5b), and the environment made stiff by locking out a compliant element (Fig. 5c). For each contact condition–soft, high variance, and stiff–the corresponding model from Section 5.3 was used, and only the MPC parameters relating to the chance constraint (, , chosen by hand tuning) and well-damped constraint () are changed. The low impedance test condition kept the minimum mass Kg and damping Ns/m.

The force trajectories during contact are shown in Fig. 6, averaged over three trials, where variance is indicated by the shaded area. In the soft contact condition, the difference is small as the constraints were not violated. As the variance in the environment increased, the low impedance MPC had a higher contact force, but both constraints reduced this peak force. The chance constraint had more oscillation, but the well-damped constraint was smoother. As the stiffness of the environment increased, the low impepdance MPC had a higher peak contact force, and had unstable contact (i.e., lost contact due to oscillation). The well-damped constraint had more stable contact with fewer oscillations, compared to the chance-constrained MPC.

In the supplementary video, it can be seen that these constraints also affected the behavior approaching contact. Once the chance constraint was activated, the robot pulled back from the contact approach. This was typically accompanied by infeasible optimization problems (i.e., no solution meeting constraints could be found). This problem was empirically more pronounced when using a GP with a hinge function, thus the poor conditioning of stiff contact was identified as a contributing cause. Alternatively, the well-damped constraint paused to allow the damping to increase before making contact. Also, the well-damped constraint was found empirically to have lower computational cost, with an average MPC rate of compared with for the chance constraint (Table 2).

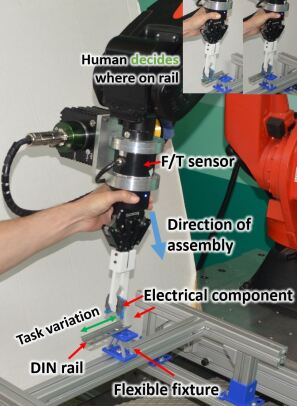

6.2 Rail Assembly

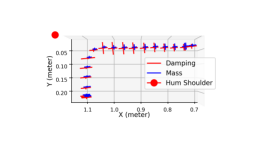

Here we present a rail assembly task, where the location of the switch along the rail may vary according to factors not known to the robot, such as existing parts. However, the rail mounting itself is repeatable. This task was taught in three demonstrations from various initial conditions, with varying goal locations along the rail. The desired behavior is that this division of repeatable task DOF and variation can be automatically extracted from data, such that the robot allows the human to more easily manipulate this DOF, i.e., has a lower impedance in the DOF that varies.

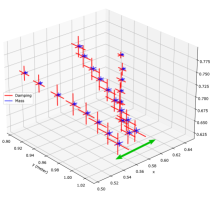

The results can be seen in Fig. 8, which shows the linear impedance parameters of mass and damping over space in three validation experiments. It can be seen that the impedance initialized at low values, but as the rail was approached, the impedance in the repeatable DOF was increased substantially, while kept low in the DOF which varied. The supplementary video shows that this results in a human easily intervening to adjust position along the rail. The task could also be achieved autonomously after the demonstrations when a cost was added to keep a positive force reference in the approach direction .

6.3 Polishing

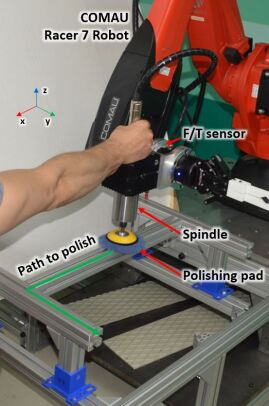

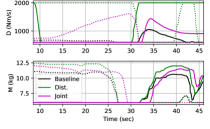

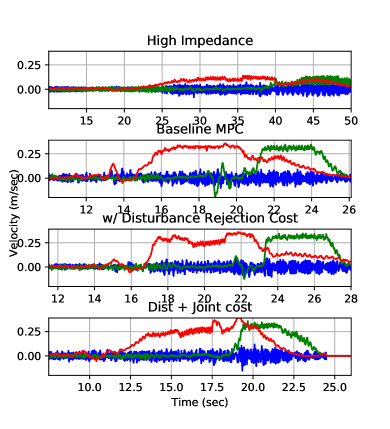

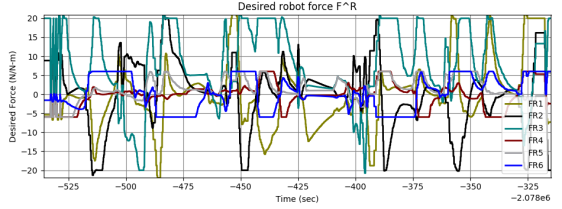

We also examined a polishing task where the human had to guide a robot-supported polishing spindle along a path. In this case, the human was determining speed along the path, while polishing vibration should not affect the robot’s trajectory. To balance the rejection of polishing force disturbance against the need for the human to easily manipulate the robot, a force disturbance cost was added to the MPC problem. An ergonomic cost is also added, based on the assumed human arm geometry, and tested. The supplementary video shows polishing on a flat surface, corresponding to Fig. 7b, and a round object to show the ability to handle orientation.

| MPC type | High imp. | Baseline | Dist. | Dist. + Jt. |

| High-freq RMS | 5.4e-6 | 7.3e-6 | 6.5e-6 | 7.6e-6 |

| Low-freq RMS | 0.8e-4 | 4.2e-4 | 4.2e-4 | 4.1e-4 |

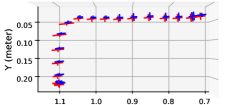

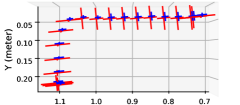

As seen in Fig. 9, the different cost function terms affected the rendered impedance along the polishing path. When the frequency-domain disturbance was added, the directionality of the impedance was significantly affected, resulting in a higher impedance cross to the direction of motion, reducing oscillation cross from the direction of motion. This resulted in a reduction of high-frequency velocity, as seen in Fig. 10 and Table 3. Adding a penalty on human joint torques reduced the impedance when farther from the human, as an equivalent force at a greater distance requires more shoulder joint torques due to poor force manipulability. However, the human joint cost did not improve the vibration suppression as significantly as the disturbance cost as seen in Table 3.

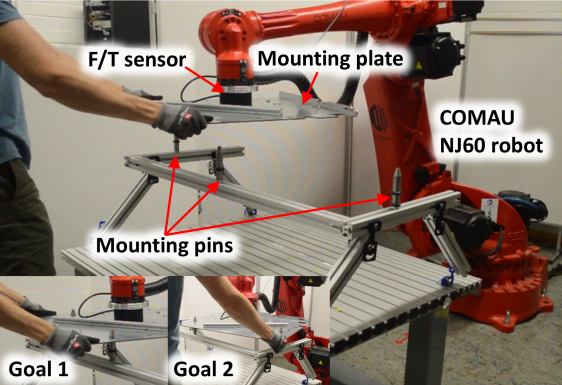

6.4 Double Peg-in-Hole

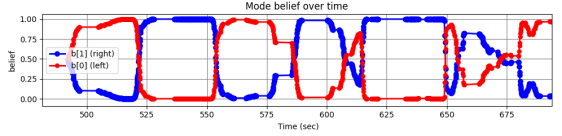

This task involved multiple possible goals from the human, with the two goals indicated in Fig. 11(a). While the human objective was not known in advance, the belief was updated online as seen in Fig. 11(b). This update in the belief resulted in different force trajectories, as seen in Fig. 11(c). This allowed the planned robot trajectory to respond online to changes in the human intent, providing 6-DOF assistance to the human.

7 Conclusion

This paper presented an MPC framework for impedance control in contact with humans and the environment. The use of Gaussian Processes to model external force allows explicit consideration of variability in demonstrations (rail assembly), confidence in the skill (higher covariance outside demonstrations), and uncertain environment constraints (Fig. 5b). By using a GP model per human goal, a discrete goal can be estimated online to handle uncertainty in human goal. This approach can also consider frequency-domain disturbance models for external perturbations. By using stochastic trajectories, the impedance parameters can be directly optimized.

While flexible, the approach has some limitations, mostly computational. Many of these limits arise from contact. While the hinge function in GPs allows better modeling of stiff contact, the integration over these stiff contact models requires a small step size, even with implicit integrators. Without suitable integrator parameters, the contact may be lost in the future trajectory and constraints such as chance or well-damped lose effectiveness. These inequality constraints are effectively solved in an interior-point method, but care must be taken to keep the problem feasible, i.e., allowing fast changes in damping to react to fast changes in projected environment stiffness.

Further limitations arise from the GP models, which cannot model certain types of uncertainty–e.g., variation in noise model over the state space. Optimization of the GP parameters needs strong regularization, especially to keep comparable between DOF or different GP models.

References

- [1] N. Hogan, “Impedance Control: An Approach to Manipulation: Part II—Implementation,” Journal of Dynamic Systems, Measurement, and Control, vol. 107, no. 1, pp. 8–16, Mar. 1985.

- [2] S. Calinon, I. Sardellitti, and D. G. Caldwell, “Learning-based control strategy for safe human-robot interaction exploiting task and robot redundancies,” in 2010 IEEE/RSJ International Conference on Intelligent Robots and Systems. IEEE, 2010, pp. 249–254.

- [3] K. Kronander, E. Burdet, and A. Billard, “Task transfer via collaborative manipulation for insertion assembly,” in Workshop on Human-Robot Interaction for Industrial Manufacturing, Robotics, Science and Systems. Citeseer, 2014.

- [4] G. Franzese, A. Mészáros, L. Peternel, and J. Kober, “ILoSA: Interactive learning of stiffness and attractors,” in 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2021, pp. 7778–7785.

- [5] D. S. Walker, J. K. Salisbury, and G. Niemeyer, “Demonstrating the benefits of variable impedance to telerobotic task execution,” in 2011 IEEE International Conference on Robotics and Automation. IEEE, 2011, pp. 1348–1353.

- [6] A. Ajoudani, N. Tsagarakis, and A. Bicchi, “Tele-impedance: Teleoperation with impedance regulation using a body–machine interface,” The International Journal of Robotics Research, vol. 31, no. 13, pp. 1642–1656, 2012.

- [7] L. Peternel, T. Petrič, and J. Babič, “Robotic assembly solution by human-in-the-loop teaching method based on real-time stiffness modulation,” Autonomous Robots, vol. 42, no. 1, pp. 1–17, Jan. 2018.

- [8] C. Yang, G. Ganesh, S. Haddadin, S. Parusel, A. Albu-Schaeffer, and E. Burdet, “Human-like adaptation of force and impedance in stable and unstable interactions,” IEEE transactions on robotics, vol. 27, no. 5, pp. 918–930, 2011.

- [9] A. Naceri, T. Schumacher, Q. Li, S. Calinon, and H. Ritter, “Learning optimal impedance control during complex 3d arm movements,” IEEE Robotics and Automation Letters, vol. 6, no. 2, pp. 1248–1255, 2021.

- [10] V. Duchaine and C. M. Gosselin, “General model of human-robot cooperation using a novel velocity based variable impedance control,” in EuroHaptics Conference, 2007 and Symposium on Haptic Interfaces for Virtual Environment and Teleoperator Systems. World Haptics 2007. Second Joint. IEEE, 2007, pp. 446–451.

- [11] L. Peternel, N. Tsagarakis, D. Caldwell, and A. Ajoudani, “Robot adaptation to human physical fatigue in human–robot co-manipulation,” Autonomous Robots, pp. 1–11, 2018.

- [12] G. Franzese, A. Mészáros, L. Peternel, and J. Kober, “Ilosa: Interactive learning of stiffness and attractors,” in 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2021, pp. 7778–7785.

- [13] L. Roveda, J. Maskani, P. Franceschi, A. Abdi, F. Braghin, L. M. Tosatti, and N. Pedrocchi, “Model-based reinforcement learning variable impedance control for human-robot collaboration,” Journal of Intelligent & Robotic Systems, pp. 1–17, 2020.

- [14] K. Haninger, C. Hegeler, and L. Peternel, “Model Predictive Control with Gaussian Processes for Flexible Multi-Modal Physical Human Robot Interaction,” arXiv preprint arXiv:2110.12433, 2021.

- [15] A. Takagi, G. Ganesh, T. Yoshioka, M. Kawato, and E. Burdet, “Physically interacting individuals estimate the partner’s goal to enhance their movements,” Nature Human Behaviour, vol. 1, no. 3, p. 0054, Mar. 2017.

- [16] B. Corteville, E. Aertbeliën, H. Bruyninckx, J. De Schutter, and H. Van Brussel, “Human-inspired robot assistant for fast point-to-point movements,” in Proceedings 2007 IEEE International Conference on Robotics and Automation. IEEE, 2007, pp. 3639–3644.

- [17] A. Ajoudani, A. M. Zanchettin, S. Ivaldi, A. Albu-Schäffer, K. Kosuge, and O. Khatib, “Progress and prospects of the human–robot collaboration,” Autonomous Robots, pp. 1–19, 2017.

- [18] P. Maurice, A. Malaisé, C. Amiot, N. Paris, G.-J. Richard, O. Rochel, and S. Ivaldi, “Human movement and ergonomics: An industry-oriented dataset for collaborative robotics,” The International Journal of Robotics Research, vol. 38, no. 14, pp. 1529–1537, 2019.

- [19] Y. Demiris, “Prediction of intent in robotics and multi-agent systems,” Cognitive Processing, vol. 8, no. 3, pp. 151–158, Sep. 2007.

- [20] Y. Li and S. S. Ge, “Human–Robot Collaboration Based on Motion Intention Estimation,” IEEE/ASME Transactions on Mechatronics, vol. 19, no. 3, pp. 1007–1014, Jun. 2014.

- [21] C. Wang, Y. Li, S. S. Ge, and T. H. Lee, “Reference adaptation for robots in physical interactions with unknown environments,” IEEE transactions on cybernetics, vol. 47, no. 11, pp. 3504–3515, 2017.

- [22] G. Kang, H. S. Oh, J. K. Seo, U. Kim, and H. R. Choi, “Variable Admittance Control of Robot Manipulators Based on Human Intention,” IEEE/ASME Transactions on Mechatronics, vol. 24, no. 3, pp. 1023–1032, Jun. 2019.

- [23] S. Jain and B. Argall, “Recursive Bayesian human intent recognition in shared-control robotics,” in 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2018, pp. 3905–3912.

- [24] R. K. Jain, S. Majumder, B. Ghosh, and S. Saha, “Design and manufacturing of mobile micro manipulation system with a compliant piezoelectric actuator based micro gripper,” Journal of Manufacturing Systems, vol. 35, pp. 76–91, Apr. 2015.

- [25] A. Bajcsy, D. P. Losey, M. K. O’Malley, and A. D. Dragan, “Learning Robot Objectives from Physical Human Interaction,” p. 10, 2017.

- [26] C. Messeri, G. Masotti, A. M. Zanchettin, and P. Rocco, “Human-robot collaboration: Optimizing stress and productivity based on game theory,” IEEE Robotics and Automation Letters, vol. 6, no. 4, pp. 8061–8068, 2021.

- [27] N. Vahrenkamp, H. Arnst, M. Wächter, D. Schiebener, P. Sotiropoulos, M. Kowalik, and T. Asfour, “Workspace analysis for planning human-robot interaction tasks,” in 2016 IEEE-RAS 16th International Conference on Humanoid Robots (Humanoids). IEEE, 2016, pp. 1298–1303.

- [28] S. Gopinathan, S. Otting, and J. Steil, “A user study on personalized adaptive stiffness control modes for human-robot interaction,” in The 26th IEEE International Symposium on Robot and Human Interactive Communication, 2017, pp. 831–837.

- [29] N. Mansfeld, M. Hamad, M. Becker, A. G. Marin, and S. Haddadin, “Safety map: A unified representation for biomechanics impact data and robot instantaneous dynamic properties,” IEEE Robotics and Automation Letters, vol. 3, no. 3, pp. 1880–1887, 2018.

- [30] L. Peternel, D. T. Schøn, and C. Fang, “Binary and hybrid work-condition maps for interactive exploration of ergonomic human arm postures,” Frontiers in Neurorobotics, p. 114, 2021.

- [31] H. Ben Amor, G. Neumann, S. Kamthe, O. Kroemer, and J. Peters, “Interaction primitives for human-robot cooperation tasks.” IEEE, May 2014, pp. 2831–2837.

- [32] M. Khoramshahi and A. Billard, “A dynamical system approach to task-adaptation in physical human–robot interaction,” Autonomous Robots, vol. 43, no. 4, pp. 927–946, 2019.

- [33] G. Raiola, S. S. Restrepo, P. Chevalier, P. Rodriguez-Ayerbe, X. Lamy, S. Tliba, and F. Stulp, “Co-manipulation with a library of virtual guiding fixtures,” Autonomous Robots, vol. 42, no. 5, pp. 1037–1051, Jun. 2018.

- [34] L. Rozo, S. Calinon, D. G. Caldwell, P. Jimenez, and C. Torras, “Learning physical collaborative robot behaviors from human demonstrations,” IEEE Transactions on Robotics, vol. 32, no. 3, pp. 513–527, 2016.

- [35] Y. Maeda, T. Hara, and T. Arai, “Human-robot cooperative manipulation with motion estimation,” in Proceedings 2001 IEEE/RSJ International Conference on Intelligent Robots and Systems. Expanding the Societal Role of Robotics in the the Next Millennium (Cat. No. 01CH37180), vol. 4. Ieee, 2001, pp. 2240–2245.

- [36] F. Dimeas and N. Aspragathos, “Reinforcement learning of variable admittance control for human-robot co-manipulation,” in Intelligent Robots and Systems (IROS), 2015 IEEE/RSJ International Conference On. IEEE, 2015, pp. 1011–1016.

- [37] E. Gribovskaya, A. Kheddar, and A. Billard, “Motion learning and adaptive impedance for robot control during physical interaction with humans,” in Robotics and Automation (ICRA), 2011 IEEE International Conference On. IEEE, 2011, pp. 4326–4332.

- [38] H. Lee, B. Lee, W. Kim, M. Gil, J. Han, and C. Han, “Human-robot cooperative control based on pHRI (Physical Human-Robot Interaction) of exoskeleton robot for a human upper extremity,” International Journal of Precision Engineering and Manufacturing, vol. 13, no. 6, pp. 985–992, Jun. 2012.

- [39] T. Tsumugiwa, R. Yokogawa, and K. Hara, “Variable impedance control based on estimation of human arm stiffness for human-robot cooperative calligraphic task,” in Robotics and Automation, 2002. Proceedings. ICRA’02. IEEE International Conference On, vol. 1. IEEE, 2002, pp. 644–650.

- [40] V. Duchaine, B. M. St-Onge, D. Gao, and C. Gosselin, “Stable and intuitive control of an intelligent assist device,” IEEE transactions on haptics, vol. 5, no. 2, pp. 148–159, 2012.

- [41] X. Lamy, F. Colledani, F. Geffard, Y. Measson, and G. Morel, “Achieving efficient and stable comanipulation through adaptation to changes in human arm impedance,” in Robotics and Automation, 2009. ICRA’09. IEEE International Conference On. IEEE, 2009, pp. 265–271.

- [42] J. R. Koller, D. H. Gates, D. P. Ferris, and C. D. Remy, “’Body-in-the-Loop’Optimization of Assistive Robotic Devices: A Validation Study.” in Robotics: Science and Systems, 2016.

- [43] J. R. Medina, D. Lee, and S. Hirche, “Risk-sensitive optimal feedback control for haptic assistance,” in Robotics and Automation (ICRA), 2012 IEEE International Conference On. IEEE, 2012, pp. 1025–1031.

- [44] S. Calinon, D. Bruno, M. S. Malekzadeh, T. Nanayakkara, and D. G. Caldwell, “Human–robot skills transfer interfaces for a flexible surgical robot,” Computer methods and programs in biomedicine, vol. 116, no. 2, pp. 81–96, 2014.

- [45] E. Pignat and S. Calinon, “Learning adaptive dressing assistance from human demonstration,” Robotics and Autonomous Systems, vol. 93, pp. 61–75, 2017.

- [46] N. Hogan, “On the stability of manipulators performing contact tasks,” Robotics and Automation, IEEE Journal of, vol. 4, no. 6, pp. 677–686, 1988.

- [47] E. Burdet, R. Osu, D. W. Franklin, T. E. Milner, and M. Kawato, “The central nervous system stabilizes unstable dynamics by learning optimal impedance,” Nature, vol. 414, no. 6862, pp. 446–449, 2001.

- [48] Y. Li, G. Ganesh, N. Jarrassé, S. Haddadin, A. Albu-Schaeffer, and E. Burdet, “Force, Impedance, and Trajectory Learning for Contact Tooling and Haptic Identification,” IEEE Transactions on Robotics, vol. 34, no. 5, pp. 1170–1182, 2018.

- [49] F. Ferraguti, C. Talignani Landi, L. Sabattini, M. Bonfè, C. Fantuzzi, and C. Secchi, “A variable admittance control strategy for stable physical human–robot interaction,” The International Journal of Robotics Research, vol. 38, no. 6, pp. 747–765, May 2019.

- [50] P. Kormushev, S. Calinon, and D. G. Caldwell, “Robot motor skill coordination with EM-based reinforcement learning,” in Intelligent Robots and Systems (IROS), 2010 IEEE/RSJ International Conference On. IEEE, 2010, pp. 3232–3237.

- [51] S. Calinon, D. Bruno, and D. G. Caldwell, “A task-parameterized probabilistic model with minimal intervention control,” in 2014 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2014, pp. 3339–3344.

- [52] Y. Li, J.-Y. Zhu, R. Tedrake, and A. Torralba, “Connecting Touch and Vision via Cross-Modal Prediction,” arXiv:1906.06322 [cs], Jun. 2019.

- [53] Y. Li, G. Carboni, F. Gonzalez, D. Campolo, and E. Burdet, “Differential game theory for versatile physical human–robot interaction,” Nature Machine Intelligence, vol. 1, no. 1, pp. 36–43, 2019.

- [54] F. Stulp, J. Buchli, A. Ellmer, M. Mistry, E. A. Theodorou, and S. Schaal, “Model-free reinforcement learning of impedance control in stochastic environments,” IEEE Transactions on Autonomous Mental Development, vol. 4, no. 4, pp. 330–341, 2012.

- [55] C. Chang, K. Haninger, Y. Shi, C. Yuan, Z. Chen, and J. Zhang, “Impedance Adaptation by Reinforcement Learning with Contact Dynamic Movement Primitives,” in 2022 IEEE Intl. Conf. on Advanced Intelligent Mechatronics (AIM), 2022.

- [56] T. Tang, H.-C. Lin, and M. Tomizuka, “A learning-based framework for robot peg-hole-insertion,” in Dynamic Systems and Control Conference, vol. 57250. American Society of Mechanical Engineers, 2015, p. V002T27A002.

- [57] L. Peternel, N. Tsagarakis, and A. Ajoudani, “A Human–Robot Co-Manipulation Approach Based on Human Sensorimotor Information,” IEEE Transactions on Neural Systems and Rehabilitation Engineering, vol. 25, no. 7, pp. 811–822, Jul. 2017.

- [58] M. Ulmer, E. Aljalbout, S. Schwarz, and S. Haddadin, “Learning Robotic Manipulation Skills Using an Adaptive Force-Impedance Action Space,” arXiv:2110.09904 [cs], Oct. 2021.

- [59] F. J. Abu-Dakka and M. Saveriano, “Variable impedance control and learning – A review,” arXiv:2010.06246 [cs], Oct. 2020.

- [60] M. Sharifi, A. Zakerimanesh, J. K. Mehr, A. Torabi, V. K. Mushahwar, and M. Tavakoli, “Impedance Variation and Learning Strategies in Human-Robot Interaction,” IEEE Transactions on Cybernetics, pp. 1–14, 2021.

- [61] F. Ferraguti, N. Preda, M. Bonfe, and C. Secchi, “Bilateral teleoperation of a dual arms surgical robot with passive virtual fixtures generation,” in Intelligent Robots and Systems (IROS), 2015 IEEE/RSJ International Conference On. IEEE, 2015, pp. 4223–4228.

- [62] M. Rubagotti, T. Taunyazov, B. Omarali, and A. Shintemirov, “Semi-autonomous robot teleoperation with obstacle avoidance via model predictive control,” IEEE Robotics and Automation Letters, vol. 4, no. 3, pp. 2746–2753, 2019.

- [63] Z. Manchester and S. Kuindersma, “Variational Contact-Implicit Trajectory Optimization,” in Robotics Research, N. M. Amato, G. Hager, S. Thomas, and M. Torres-Torriti, Eds. Cham: Springer International Publishing, 2020, vol. 10, pp. 985–1000.

- [64] J.-P. Sleiman, F. Farshidian, M. V. Minniti, and M. Hutter, “A unified mpc framework for whole-body dynamic locomotion and manipulation,” IEEE Robotics and Automation Letters, vol. 6, no. 3, pp. 4688–4695, 2021.

- [65] D. E. Stewart, “Rigid-Body Dynamics with Friction and Impact,” SIAM Review, vol. 42, no. 1, pp. 3–39, Jan. 2000.

- [66] M. V. Minniti, R. Grandia, K. Fäh, F. Farshidian, and M. Hutter, “Model Predictive Robot-Environment Interaction Control for Mobile Manipulation Tasks,” arXiv:2106.04202 [cs], Jun. 2021.

- [67] S. Pfrommer, M. Halm, and M. Posa, “ContactNets: Learning of Discontinuous Contact Dynamics with Smooth, Implicit Representations,” arXiv preprint arXiv:2009.11193, 2020.

- [68] C. E. Rasmussen, “Gaussian processes for machine learning.” MIT Press, 2006.

- [69] K. B. Petersen, M. S. Pedersen et al., “The matrix cookbook,” Technical University of Denmark, vol. 7, p. 15, 2008.

- [70] R. Grandia, F. Farshidian, A. Dosovitskiy, R. Ranftl, and M. Hutter, “Frequency-Aware Model Predictive Control,” IEEE Robotics and Automation Letters, pp. 1–1, 2019.

- [71] K. Zhou, J. C. Doyle, and K. Glover, Robust and Optimal Control. Prentice hall New Jersey, 1996, vol. 40.

- [72] T.-C. Kao and G. Hennequin, “Automatic differentiation of Sylvester, Lyapunov, and algebraic Riccati equations,” arXiv:2011.11430 [cs, math], Nov. 2020.

- [73] L. Hewing, J. Kabzan, and M. N. Zeilinger, “Cautious model predictive control using gaussian process regression,” IEEE Transactions on Control Systems Technology, vol. 28, no. 6, pp. 2736–2743, 2019.

- [74] A. Albu-Schäffer, C. Ott, U. Frese, and G. Hirzinger, “Cartesian impedance control of redundant robots: Recent results with the dlr-light-weight-arms,” in Robotics and Automation, 2003. Proceedings. ICRA’03. IEEE International Conference On, vol. 3. IEEE, 2003, pp. 3704–3709.

- [75] F. Ficuciello, L. Villani, and B. Siciliano, “Variable impedance control of redundant manipulators for intuitive human–robot physical interaction,” IEEE Transactions on Robotics, vol. 31, no. 4, pp. 850–863, 2015.

- [76] J. B. Rawlings, D. Q. Mayne, and M. Diehl, Model Predictive Control: Theory, Computation, and Design. Nob Hill Publishing Madison, 2017, vol. 2.