Model Identification and Control of a Low-Cost Wheeled Mobile Robot Using Differentiable Physics

Abstract

We present the design of a low-cost wheeled mobile robot, and an analytical model for predicting its motion under the influence of motor torques and friction forces. Using our proposed model, we show how to analytically compute the gradient of a loss function that measures the deviation between predicted motion trajectories and real-world trajectories estimated using Apriltags and an overhead camera. These analytical gradients allow us to automatically infer the unknown friction coefficients by minimizing the loss function with gradient descent. Motion trajectories predicted by the optimized model are in excellent agreement with their real-world counterparts. Experiments show that our approach is computationally superior to existing black-box system identification methods and other data-driven techniques, and requires very few real-world samples for accurate trajectory prediction. The proposed approach combines the data efficiency of analytical models based on first principles with the flexibility of data-driven methods, which makes it appropriate for low-cost robots. Using the learned model and our gradient-based optimization approach, we show how to automatically compute motor control signals for driving the robot along pre-specified curves.

Index Terms:

model identification, differentiable physics, wheeled mobile robot, trajectory estimation and control

I Introduction

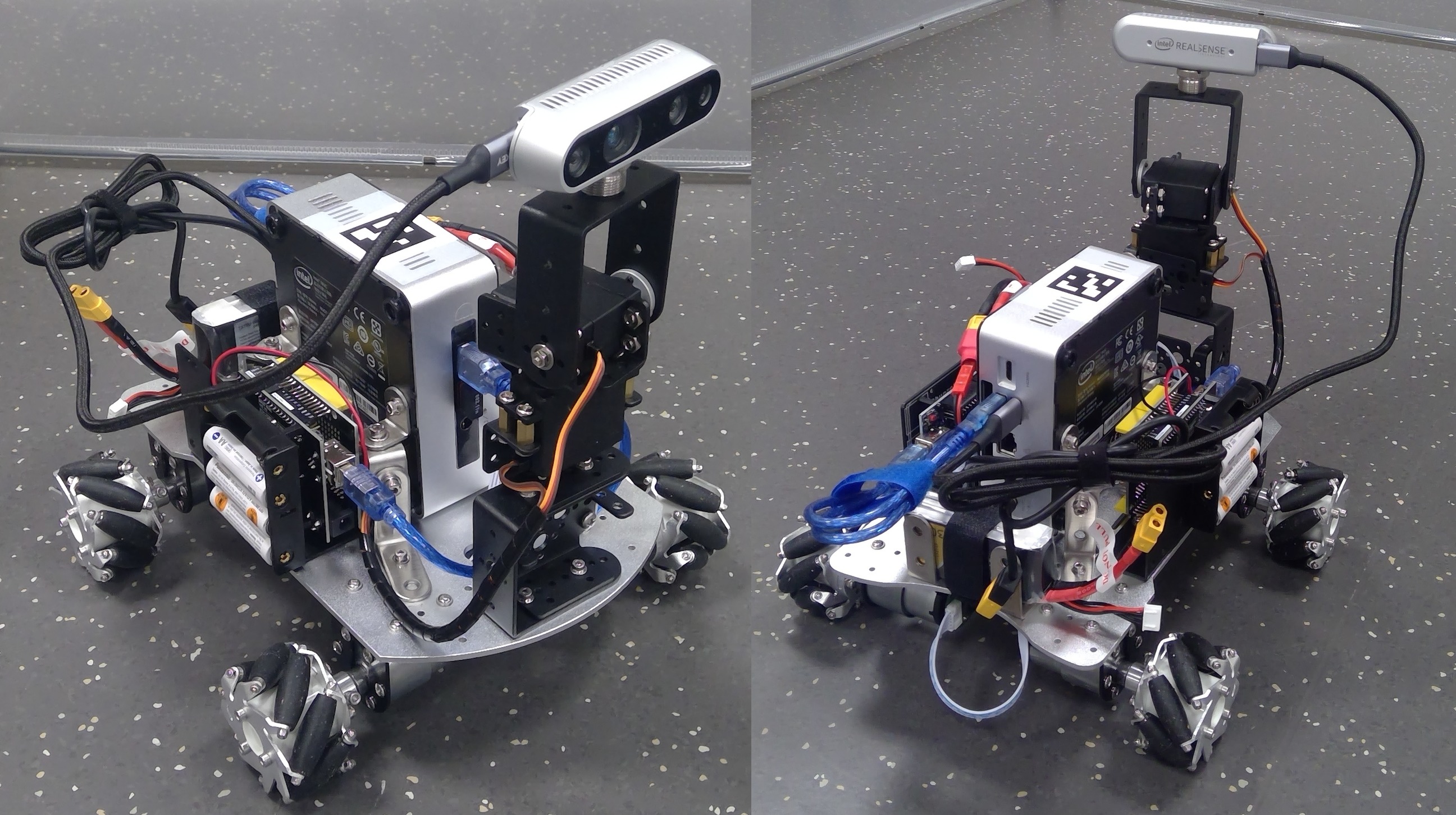

With the availability of affordable 3D printers and micro-controllers such as Arduino [1], BeagleBone Black [2], and Raspberry Pi [3], light-weight high-performance computing platforms such as Intel’s Next Unit of Computing (NUC) [4] and NVIDIA’s Jetson Nano [5], and programmable RGB-D cameras such as Intel’s RealSense [6], there is renewed interest in building low-cost robots for various tasks [7]. Motivated by these developments, the long-term goal of the present work is to develop affordable mobile robots that can be easily assembled using off-the-shelf components and 3D-printed parts. These robots will be used for exploration and scene understanding in unstructured environments, and can also be augmented with affordable robotic arms and hands for manipulating objects. Our ultimate objective is to remove the economic barrier to entry that has so far limited robotics research to a relatively small number of groups that can afford expensive robot hardware. To that end, we have designed our own wheeled mobile robot for exploration and scene understanding, illustrated in Figure 1. The first contribution of this work is therefore the hardware and software design of the proposed robot.

High-end robots can easily be controlled using software tools provided by their manufacturers. Their physical properties, such as inertial and frictional parameters, are also precisely measured, which eliminates the need for further calibration. Affordable robots assembled and fabricated in-house are significantly more difficult to control because of uncertainties in the manufacturing process, which cause their size, weight and inertia to vary with the manufacturing technique. Because of this uncertainty, hand-crafting precise models that can be shared across such robots is challenging. For example, the wheels of the robot in Figure 1 cannot be precisely modeled by hand because of their complex structure and uncertain material properties. Moreover, the friction between the wheels and the terrain varies widely when the robot is deployed on unknown, non-uniform terrain. Statistical learning tools such as Gaussian processes and neural networks have been widely used in the literature to deal with this uncertainty and to learn dynamic and kinematic models directly from data. While such methods have the advantage of being less brittle than classical analytical models, they typically require large amounts of training data collected from each individual robot and for every type of terrain.

In this work, we propose a hybrid data-driven approach that combines the versatility of machine learning techniques with the data efficiency of physics models derived from first principles. The main component of the proposed approach is a self-tuning differentiable physics simulator of the designed robot. The simulator takes as input the robot’s pose and generalized velocity at a given time and a sequence of control signals, and returns a trajectory of predicted future poses and velocities. After the sequence of controls is executed on the real robot, the resulting ground-truth trajectory is recorded and systematically compared to the predicted one. The difference between the predicted and the ground-truth trajectories, known as the reality gap, is then used to automatically identify the unknown coefficients of friction between each of the robot’s wheels and the present terrain. Since the identification process must happen on the fly and in real time, black-box optimization tools cannot be used effectively. Instead, we show how to analytically compute the derivatives of the reality gap with respect to each unknown coefficient of friction, and how to use these derivatives to identify the coefficients by gradient descent. A key novelty of our approach is the integration of a differentiable forward kinematic model with a neural-network dynamic model. The kinematic model represents the part of the system that can be modeled analytically with relative ease, while the dynamics neural network models the more complex relation between the frictions and the velocities. Since both parts of the system are differentiable, the gradient of the simulator’s output is back-propagated all the way to the coefficients of friction and used to update them.

The time and data efficiency of the proposed technique are demonstrated through two series of experiments that we performed with the robot illustrated in Figure 1. The first set of experiments consists of executing different control signals on the robot and recording the resulting trajectories. Our technique is then used to identify the friction coefficients of each individual wheel, and to predict future trajectories accordingly. The second set of experiments consists of using the identified parameters in a model-predictive control loop to select control signals that allow the robot to track predefined trajectories. The proposed gradient-based technique is shown to be computationally more efficient than black-box optimization methods, and more accurate than a neural network trained on the same small amount of data.

II Related Work

The problem of learning dynamic and kinematic models of skid-steered robots has been explored in several past works. Vehicle model identification by integrated prediction error minimization was proposed in [8]. Rather than calibrate the system differential equation directly for unknown parameters, the approach proposed in [8] calibrates its first integral. However, the dynamical model of the robot is approximated linearly using numerical first-order derivatives, in contrast with our approach that computes the gradient of the error function analytically. A relatively similar approach was used in [9] for calibrating a kinematic wheel-ground contact model for slip prediction. The present work builds on the kinematic model for feedback control of an omnidirectional wheeled mobile robot proposed in [10].

A learning-based Model Predictive Control (MPC) scheme was used in [11] to control a mobile robot in challenging outdoor environments. The proposed model uses a simple a priori vehicle model and a learned disturbance model, which is modeled as a Gaussian process and learned from local data. An MPC technique was also applied to the autonomous racing problem in simulation in [12]. The proposed system identification technique decomposes the dynamics into the sum of a known deterministic function and a noisy residual that is learned from data using the least mean squares technique. The approach proposed in the present work shares some similarities with the approach presented in [13], wherein the dynamics equations of motion are used to analytically compute the mass of a robotic manipulator. A neural network was also used in prior work to calibrate the wheel–terrain frictional term of a skid-steered dynamic model [14]. An online estimation method that identifies key terrain parameters using on-board robot sensors is presented in [15]. A simplified form of classical terramechanics equations was used along with a linear least-squares method for terrain parameter estimation. Unlike the present work, which considers a full-body simulation, [15] considered only a model of a rigid wheel on deformable terrain. A dynamic model is also presented in [16] for omnidirectional wheeled mobile robots, including surface slip. However, the friction coefficients in [16] were measured experimentally, unlike in the present work, where the friction terms are tuned automatically from data using the gradient of the distance between simulated and observed trajectories.

Classical system identification builds a dynamics model by minimizing the difference between the model’s output signals and real-world response data for the same input signals [17, 18]. Parametric rigid body dynamics models have also been combined with non-parametric model learning for approximating the inverse dynamics of a robot [19].

There has been a recent surge of interest in developing natively differentiable physics engines. For example, [20] used the Theano framework to develop a physics engine that can differentiate with respect to control parameters in robotics applications. The same framework can be adapted to differentiate with respect to model parameters instead. This engine is implemented for both CPU and GPU, and it was shown to speed up the optimization process for finding optimal policies. A combination of a learned simulator and a differentiable simulator was used to predict action effects on planar objects [21]. A differentiable framework for integrating fluid dynamics with deep networks was also used to learn fluid parameters from data, perform liquid control tasks, and learn policies to manipulate liquids [22]. Differentiable physics simulations were also used for manipulation planning and tool use [23]. Recently, it has been observed that a standard physical simulation formulated as a Linear Complementarity Problem (LCP) is also differentiable and can be implemented in PyTorch [24]. In [25], a differentiable contact model was used to allow for optimization of several locomotion and manipulation tasks. The present work is another step toward the adoption of self-tuning differentiable physics engines as both a data-efficient and time-efficient tool for learning and control in robotics.

III Robot Design

The design of our mobile robot consists of the hardware assembly, and the software drivers that provide control signals for actuation. These components are described below.

III-A Hardware Assembly

The robot is built from various components, as shown in Figure 2, which comprise: (a) a central chassis with four Mecanum omni-directional wheels driven by DC motors, (b) two rail mount brackets for Arduino, (c) two Arduino UNOs, (d) an Arduino shield for the DC motors, (e) a servo motor drive shield for Arduino, (f) two MG996R servos, (g) two 2DOF servo mount brackets, (h) an Intel RealSense D435, (i) an Intel NUC5i7RYH, and (j) two DC batteries. One battery powers the DC motors, while the other one powers the Intel NUC. The servo motors are powered separately with a DC source. All these components can be purchased from different vendors through Amazon.

III-B Control Software

The two Arduinos that drive the servos and the DC motors are connected to the Intel NUC via USB cables. To send actuation commands from the NUC to the Arduinos, we use single-byte writes with the Arduino method Serial.write(), following the custom serial protocol developed in [26]. Each message type is encoded as one byte, and the corresponding message parameters are then sent byte by byte and reconstructed upon reception using bitwise shift and mask operations. The limited buffer size of the Arduino is also accounted for by having the Arduino “acknowledge” the receipt of each message. We have generalized the implementation in [26] to support two Arduinos, two servo motors, and the differential drive mechanism for the wheels.
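The byte-level encoding described above can be illustrated with a short sketch. The message codes in `Order`, the little-endian 16-bit parameter layout, and the in-memory `FakeArduino` link are all hypothetical stand-ins chosen for illustration; the actual codes and layout in [26] may differ. In a real deployment, `exchange` would be replaced by writes and reads on a serial port (for example with pyserial's `serial.Serial`), which is not shown here.

```python
from enum import IntEnum


class Order(IntEnum):
    """Hypothetical one-byte message codes (the codes used in [26] may differ)."""
    MOTOR = 1
    SERVO = 2
    RECEIVED = 3


def encode_int16(value: int) -> bytes:
    """Split a signed 16-bit parameter into two bytes, least significant byte first."""
    if not -32768 <= value <= 32767:
        raise ValueError("parameter does not fit in 16 bits")
    raw = value & 0xFFFF                      # two's-complement bit pattern
    return bytes([raw & 0xFF, (raw >> 8) & 0xFF])


def decode_int16(data: bytes) -> int:
    """Receiver side: reassemble with shift and OR, then restore the sign bit."""
    raw = data[0] | (data[1] << 8)
    return raw - 0x10000 if raw & 0x8000 else raw


class FakeArduino:
    """In-memory stand-in for the serial link: decodes each message and acknowledges it."""

    def __init__(self):
        self.received = []

    def exchange(self, packet: bytes) -> bytes:
        order, value = Order(packet[0]), decode_int16(packet[1:3])
        self.received.append((order, value))
        return bytes([Order.RECEIVED])        # one-byte acknowledgement


def send(link, order: Order, value: int) -> None:
    """Send one order byte plus its parameter, then check the acknowledgement."""
    ack = link.exchange(bytes([order]) + encode_int16(value))
    if ack != bytes([Order.RECEIVED]):
        raise RuntimeError("message was not acknowledged")


arduino = FakeArduino()
send(arduino, Order.MOTOR, -300)              # e.g. a signed PWM command for one wheel
```

Waiting for the acknowledgement before sending the next message is what keeps the sender from overrunning the Arduino's small receive buffer.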

IV Analytical Model

Consider a simplified cylindrical model for the wheel. Let $I$ be the scalar component of its (diagonal) inertia tensor about the rotational axis of the motor shaft (we discard the components in the plane orthogonal to this axis, as the wheel is not allowed to rotate in this plane). Then, its equation of motion can be written as:

$$ I\,\dot{\omega} = \tau\left(1 - \frac{\omega}{\omega_d}\right) - \mu\,\frac{m g}{4}\,r \tag{1} $$

where $\tau$ is the motor stall torque, $\omega$ is the wheel’s angular velocity, $\omega_d$ is the desired angular velocity (specified by the PWM signal), $\mu$ is the coefficient of friction, $g$ is the acceleration due to gravity, $r$ is the wheel radius, and $m$ is the mass of the robot. We assume that the weight of the robot is balanced uniformly by all four wheels (hence the term $mg/4$). The first term on the right-hand side was derived in [27]. For a constant $\omega_d > 0$ and an initial angular velocity $\omega(0)$, equation (1) can be integrated analytically to obtain:

$$ \omega(t) = \omega_d\left(1 - \frac{\mu m g r}{4\tau}\right) + \left[\omega(0) - \omega_d\left(1 - \frac{\mu m g r}{4\tau}\right)\right] e^{-\tau t/(I\omega_d)} \tag{2} $$

Since we are interested in long time spans for mobile robot navigation, only the steady-state term in equation (2) is important: the transient term decays with time constant $I\omega_d/\tau$. Discarding it, we arrive at:

$$ \omega = \omega_d\left(1 - \frac{\mu m g r}{4\tau}\right) \tag{3} $$
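As a sanity check on the wheel model, the sketch below integrates the ODE $I\dot\omega = \tau(1 - \omega/\omega_d) - \mu m g r/4$ numerically with forward Euler, and compares the result with the closed-form solution and its steady state $\omega_d(1 - \mu m g r/(4\tau))$. All numerical values (inertia, stall torque, mass, radius) are hypothetical placeholders, not the robot's measured parameters.

```python
import numpy as np

# Hypothetical parameter values, for illustration only.
I = 2e-4     # wheel inertia about the motor shaft [kg m^2]
TAU = 1.0    # motor stall torque [N m]
M = 2.0      # robot mass [kg]
G = 9.81     # gravitational acceleration [m/s^2]
R = 0.04     # wheel radius [m]


def wheel_accel(omega, omega_d, mu):
    """d(omega)/dt from I*omega' = tau*(1 - omega/omega_d) - mu*m*g*r/4."""
    return (TAU * (1.0 - omega / omega_d) - mu * M * G * R / 4.0) / I


def steady_state(omega_d, mu):
    """Steady-state wheel speed: omega_d * (1 - mu*m*g*r/(4*tau))."""
    return omega_d * (1.0 - mu * M * G * R / (4.0 * TAU))


def closed_form(t, omega0, omega_d, mu):
    """Steady state plus a transient decaying with time constant I*omega_d/tau."""
    w_inf = steady_state(omega_d, mu)
    return w_inf + (omega0 - w_inf) * np.exp(-TAU * t / (I * omega_d))


def integrate(omega0, omega_d, mu, t_end, dt=1e-5):
    """Forward-Euler integration of the wheel ODE, for comparison."""
    omega = omega0
    for _ in range(int(round(t_end / dt))):
        omega += dt * wheel_accel(omega, omega_d, mu)
    return omega


# With these values the time constant is I*omega_d/tau = 2 ms, so after 0.1 s
# only the steady-state term is left.
w_num = integrate(0.0, 10.0, 0.5, 0.01)
```

With these placeholder values the transient vanishes within milliseconds, which illustrates why only the steady-state term matters over navigation time scales.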

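which gives us an expression for the wheel’s angular velocity as a function of the ground friction. We allow a different friction coefficient $\mu_j$ for each wheel $j \in \{1, 2, 3, 4\}$. The chassis of our mobile robot is similar to that of the Uranus robot, which was developed at the Robotics Institute at Carnegie Mellon University [10]. The following expression was derived in [10] for the linear and angular velocity $\dot{\mathbf{q}}$ of the Uranus robot, using the wheel angular velocities as input:

$$ \dot{\mathbf{q}} = \mathbf{J}\,\boldsymbol{\omega}, \qquad \boldsymbol{\omega} = (\omega_1, \omega_2, \omega_3, \omega_4)^\top \tag{4} $$

where $\mathbf{J} \in \mathbb{R}^{3 \times 4}$ is the kinematic matrix given in [10].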

V Loss Function

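Let $\mathbf{q}_t = (x_t, y_t, \theta_t)$ be the generalized position of the mobile robot at time $t$, and $\dot{\mathbf{q}}_t$ be its generalized velocity. Then, its predicted state at time $t + \Delta t$ can be computed as:

$$ \mathbf{q}_{t+\Delta t} = \mathbf{q}_t + \Delta t\,\dot{\mathbf{q}}_t \tag{5} $$

where $\dot{\mathbf{q}}_t = \mathbf{J}\boldsymbol{\omega}_t$ is given by equation (4). The loss function computes the simulation-reality gap, defined as the divergence of a predicted robot trajectory from an observed ground-truth one. It is defined as follows:

$$ \mathcal{L}_1 = \sum_{k \in \mathcal{G}} \left\lVert \mathbf{q}_k - \hat{\mathbf{q}}_k \right\rVert^2 \tag{6} $$

where $\mathcal{G}$ is the set of time steps at which a ground-truth sample is available, $\hat{\mathbf{q}}_k$ are the ground-truth position values at time step $k$, estimated using Apriltags [28] and an overhead camera, and $\mathbf{q}_k$ (short for $\mathbf{q}_{t_k}$) are the positions predicted in simulation using equation (5) and the same sequence of control signals as the one provided to the real robot. The video is recorded with the overhead camera at a fixed frame rate, whereas the PWM signal is sent to the motors at a frequency three to four times higher. Thus, there are several simulation time steps between two consecutive ground-truth position values.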

In practice, we observed that the predicted state can often “lag behind” the ground-truth values, even when the robot follows the overall path exactly, which leads to a high loss value. Thus, we fit a spline curve to the ground-truth values and compute another loss function that uses the point $\mathbf{c}_k$ on this curve closest to the predicted state $\mathbf{q}_k$ at time step $k$:

$$ \mathcal{L}_2 = \sum_{k=1}^{T} \left\lVert \mathbf{q}_k - \mathbf{c}_k \right\rVert^2 \tag{7} $$

Note that the loss $\mathcal{L}_2$ alone is not sufficient, as it does not penalize the simulated robot for not moving at all from its starting position. Thus, we use a weighted linear combination of $\mathcal{L}_1$ and $\mathcal{L}_2$ as our actual loss function:

$$ \mathcal{L} = \alpha\,\mathcal{L}_1 + \beta\,\mathcal{L}_2 \tag{8} $$

where $\alpha$ and $\beta$ are fixed weights. The pseudocode for the loss computation is shown in Algorithm 1, where vector quantities are shown in bold. $\mathbf{J}$ is defined in equation (4), and $\boldsymbol{\mu}$ is the vector of friction coefficients of the four wheels. Line 4 uses component-wise vector multiplication. $T$ is the total number of time steps, and $t_k$ is the time at which the PWM signal was applied to the motors at time step $k$. The function IsGroundTruthSample checks whether a ground-truth Apriltag estimate exists at time $t_k$ and, if so, the function GroundTruthIndex returns the index of that estimate.
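The following Python sketch shows one way to compute the combined loss $\alpha\mathcal{L}_1 + \beta\mathcal{L}_2$ in the spirit of Algorithm 1, under stated assumptions. The 3×4 matrix `J` is a hypothetical mecanum-wheel stand-in (not necessarily the Uranus matrix of [10]), `K` stands for $mgr/(4\tau)$ with a made-up value, the spline is fitted over all three pose coordinates with SciPy's `splprep`/`splev`, the closest point is approximated by densely sampling the spline, and $\alpha = \beta = 1$. The "ground truth" is synthetic, generated by the same model with a camera sample every fourth PWM step.

```python
import numpy as np
from scipy.interpolate import splprep, splev

# --- Hypothetical constants (not the robot's actual values) ---
DT = 0.01            # PWM / simulation time step [s]
K = 0.1962           # stands in for m*g*r/(4*tau)
R_W, L = 0.04, 0.2   # wheel radius [m] and a geometry term [m]
# Stand-in for the 3x4 matrix J: a common mecanum-wheel layout, assumed here.
J = (R_W / 4.0) * np.array([[1.0, 1.0, 1.0, 1.0],
                            [-1.0, 1.0, 1.0, -1.0],
                            [-1.0 / L, 1.0 / L, -1.0 / L, 1.0 / L]])


def simulate(mu, omega_d):
    """Roll out the model from the origin; omega_d holds one row of 4 set-points per step."""
    q = np.zeros(3)                       # (x, y, theta)
    traj = [q]
    for wd in omega_d:
        omega = wd * (1.0 - K * mu)       # steady-state wheel speeds, component-wise
        q = q + DT * (J @ omega)          # explicit Euler step with qdot = J omega
        traj.append(q)
    return np.array(traj)                 # shape (T + 1, 3)


def total_loss(mu, omega_d, gt_steps, gt_pos, alpha=1.0, beta=1.0, n_dense=2000):
    traj = simulate(mu, omega_d)
    # L1: compare only at the steps where an overhead-camera sample exists.
    l1 = np.sum((traj[gt_steps] - gt_pos) ** 2)
    # L2: interpolating spline through the ground truth, then the squared distance
    # from every predicted state to the closest of n_dense points sampled on it.
    tck, _ = splprep(gt_pos.T, s=0)
    curve = np.array(splev(np.linspace(0.0, 1.0, n_dense), tck)).T
    d2 = ((traj[:, None, :] - curve[None, :, :]) ** 2).sum(axis=-1)
    l2 = np.sum(d2.min(axis=1))
    return alpha * l1 + beta * l2


# Synthetic example: constant set-points, one camera sample every 4th PWM step.
omega_d = np.full((400, 4), 10.0)
gt_steps = np.arange(0, 401, 4)
mu_true = np.array([0.2, 0.4, 0.6, 0.8])
gt_pos = simulate(mu_true, omega_d)[gt_steps]
```

The dense-sampling closest point is a simple choice; a root-finding projection onto the spline would be more precise but adds code without changing the idea.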

VI Differentiable Physics

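To minimize the loss function in equation (8) with respect to the unknown parameters $\mu_j$, $j = 1, \dots, 4$, corresponding to the friction values of the four wheels, we derive analytical expressions for the gradient of the loss with respect to each variable $\mu_j$:

$$ \frac{\partial \mathcal{L}}{\partial \mu_j} = \alpha\,\frac{\partial \mathcal{L}_1}{\partial \mu_j} + \beta\,\frac{\partial \mathcal{L}_2}{\partial \mu_j} \tag{9} $$

Let $\mathbf{d}_k = \mathbf{q}_k - \mathbf{c}_k$ and let $\ell_k = \lVert \mathbf{d}_k \rVert$ denote its length. Then the second term in equation (9) can be expanded using the chain rule as follows:

$$ \frac{\partial \mathcal{L}_2}{\partial \mu_j} = \sum_{k=1}^{T} 2\,\ell_k\,\frac{\partial \ell_k}{\partial \mu_j} = \sum_{k=1}^{T} 2\,\mathbf{d}_k^\top \frac{\partial \mathbf{q}_k}{\partial \mu_j} \tag{10} $$

The derivatives in equation (10) can be computed recursively using equations (4) and (5) at the higher frequency of the PWM signal sent to the motors, which is also used for predicting the next state:

$$ \frac{\partial x_{k+1}}{\partial \mu_j} = \frac{\partial x_k}{\partial \mu_j} + \Delta t\, J_{1j}\,\frac{\partial \omega_j}{\partial \mu_j} \tag{11} $$

$$ \frac{\partial y_{k+1}}{\partial \mu_j} = \frac{\partial y_k}{\partial \mu_j} + \Delta t\, J_{2j}\,\frac{\partial \omega_j}{\partial \mu_j} \tag{12} $$

$$ \frac{\partial \theta_{k+1}}{\partial \mu_j} = \frac{\partial \theta_k}{\partial \mu_j} + \Delta t\, J_{3j}\,\frac{\partial \omega_j}{\partial \mu_j} \tag{13} $$

where $J_{ij}$ is the $(i, j)$ entry of the matrix $\mathbf{J}$ defined in equation (4). The derivative $\partial \omega_j / \partial \mu_j = -\omega_{d,j}\, m g r / (4\tau)$ follows from equation (3). The expression for $\partial \mathcal{L}_1 / \partial \mu_j$ in equation (9) can be derived similarly. Note that, strictly speaking, the closest point $\mathbf{c}_k$ on the spline curve is a function of the point $\mathbf{q}_k$. However, we have empirically found that its derivative does not need to be estimated and can be ignored when computing $\partial \ell_k / \partial \mu_j$, for $j = 1, \dots, 4$.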
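The recursion for $\partial \mathbf{q}_k / \partial \mu_j$ is forward-mode sensitivity propagation: alongside each state $\mathbf{q}_k$ we carry the 3×4 matrix $\mathbf{S}_k = \partial \mathbf{q}_k / \partial \boldsymbol{\mu}$. The sketch below is not the paper's Algorithm 2; it reuses a hypothetical mecanum-style `J`, a made-up `K` for $mgr/(4\tau)$, and synthetic data, computes the loss and its gradient with the closest point held fixed, and checks the result against central finite differences. Holding $\mathbf{c}_k$ fixed is also exact to first order for an interior closest point on a smooth curve: there, $\mathbf{d}_k$ is orthogonal to the curve's tangent, so moving $\mathbf{c}_k$ along the curve does not change $\lVert \mathbf{d}_k \rVert^2$ to first order.

```python
import numpy as np
from scipy.interpolate import splprep, splev

DT, K = 0.01, 0.1962          # hypothetical step size and value of m*g*r/(4*tau)
R_W, L = 0.04, 0.2            # hypothetical wheel radius and geometry term
J = (R_W / 4.0) * np.array([[1.0, 1.0, 1.0, 1.0],        # stand-in 3x4 kinematic matrix
                            [-1.0, 1.0, 1.0, -1.0],
                            [-1.0 / L, 1.0 / L, -1.0 / L, 1.0 / L]])


def rollout_with_sensitivity(mu, omega_d):
    """Return states q_k and sensitivities S_k = dq_k/dmu (3x4) for every step."""
    q, S = np.zeros(3), np.zeros((3, 4))
    qs, Ss = [q], [S]
    for wd in omega_d:
        omega = wd * (1.0 - K * mu)              # steady-state wheel speeds
        domega_dmu = -K * wd                      # d omega_j / d mu_j (diagonal entries)
        q = q + DT * (J @ omega)                  # Euler step of the pose
        S = S + DT * J * domega_dmu[None, :]      # column j of J scaled by d omega_j/d mu_j
        qs.append(q)
        Ss.append(S)
    return np.array(qs), np.array(Ss)


def loss_and_grad(mu, omega_d, gt_steps, gt_pos, curve, alpha=1.0, beta=1.0):
    qs, Ss = rollout_with_sensitivity(mu, omega_d)
    r1 = qs[gt_steps] - gt_pos                                    # L1 residuals
    g1 = 2.0 * np.einsum("ki,kij->j", r1, Ss[gt_steps])
    nearest = ((qs[:, None, :] - curve[None]) ** 2).sum(-1).argmin(axis=1)
    d = qs - curve[nearest]                                       # d_k = q_k - c_k
    g2 = 2.0 * np.einsum("ki,kij->j", d, Ss)                      # c_k held fixed
    loss = alpha * np.sum(r1 ** 2) + beta * np.sum(d ** 2)
    return loss, alpha * g1 + beta * g2


# Synthetic ground truth and a densely sampled spline through it.
omega_d = np.full((400, 4), 10.0)
gt_steps = np.arange(0, 401, 4)
mu_true = np.array([0.2, 0.4, 0.6, 0.8])
gt_pos = rollout_with_sensitivity(mu_true, omega_d)[0][gt_steps]
tck, _ = splprep(gt_pos.T, s=0)
curve = np.array(splev(np.linspace(0.0, 1.0, 2000), tck)).T
```

Since the sensitivities are propagated together with the state, one rollout yields the gradient with respect to all four friction coefficients.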


As shown in Section VII, using the gradients derived in Section VI to minimize the loss function in equation (8) yields friction parameters that agree well with the experimental trajectories estimated using Apriltags. However, we observed that the computed friction parameters can differ between two trajectories generated with different control signals. This implies that the friction of each wheel is not a constant, but a function of the applied control signal. Thus, we first generate a sequence of trajectories with fixed control signals, and estimate the friction parameters for each of them by separately minimizing the loss function in equation (8) using gradient descent. We then train a small neural network with 4 input nodes, 16 hidden nodes, and 4 output nodes. The inputs to the neural network are the control signals applied to the wheels, and the outputs are the friction parameters estimated using gradient descent. As shown in Section VII, a sequence of only a small number of input trajectories is enough to obtain reasonable predictions from the neural network, which allowed us to drive the mobile robot autonomously along an “eight curve” with high precision.
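A minimal sketch of a 4-16-4 friction network of this kind, trained by full-batch gradient descent in NumPy. The tanh hidden layer, the sigmoid output (which keeps predicted friction values in $(0, 1)$), the learning rate, the number of iterations and the training pairs are all assumptions; the pairs are synthetic placeholders for the per-trajectory friction estimates, not measured data.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic training pairs (placeholders, not measured data): each row of U holds the
# four normalized wheel control signals of one trajectory, each row of MU the four
# friction values that gradient descent would have identified for it.
U = rng.uniform(0.3, 1.0, size=(8, 4))
MU = 0.2 + 0.5 * U[:, ::-1] ** 2

# 4 inputs -> 16 hidden (tanh) -> 4 outputs (sigmoid); activations are assumptions.
W1 = rng.normal(0.0, 0.5, size=(4, 16))
b1 = np.zeros(16)
W2 = rng.normal(0.0, 0.5, size=(16, 4))
b2 = np.zeros(4)


def forward(u):
    h = np.tanh(u @ W1 + b1)
    out = 1.0 / (1.0 + np.exp(-(h @ W2 + b2)))
    return h, out


def mse():
    return np.mean((forward(U)[1] - MU) ** 2)


initial = mse()
lr = 0.5
for _ in range(5000):
    h, out = forward(U)
    # Backpropagate the mean squared error through the sigmoid and tanh layers.
    d_z2 = 2.0 * (out - MU) / out.size * out * (1.0 - out)
    d_z1 = (d_z2 @ W2.T) * (1.0 - h ** 2)
    W2 -= lr * (h.T @ d_z2)
    b2 -= lr * d_z2.sum(axis=0)
    W1 -= lr * (U.T @ d_z1)
    b1 -= lr * d_z1.sum(axis=0)
final = mse()

# Predicted friction values for a new (hypothetical) control input.
mu_pred = forward(np.full((1, 4), 0.5))[1]
```

Bounding the output with a sigmoid is one way to guarantee that the network only ever proposes friction values inside the interval used during identification.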

VII Experimental Results

We remotely controlled the robot for 6-8 seconds at a time and collected 8 different trajectories, with 2 samples for each trajectory, which were estimated using Apriltags [28] and are shown in red and blue in Figure 5. The control signals applied to the wheels were constant for the entirety of each trajectory, but differed between trajectories. This was done for simplicity, as changing the control signals changes the direction of motion, and the robot might have driven out of the field of view. A video of these experiments is attached to the present paper as supplementary material.

VII-A Model Identification

To estimate the unknown friction coefficients, we use the L-BFGS-B optimization method, where the gradient is computed using Algorithm 2. The result of our method is shown in green in Figure 5. We additionally imposed the constraint that all estimated friction values lie in the interval $[0, 1]$; such bound constraints are natively supported by L-BFGS-B. Our chosen values for $m$, $r$ and $\tau$ ensure that $\omega_j \to 0$ as $\mu_j \to 1$, for $j = 1, \dots, 4$. For comparison, we show in yellow the result of the Nelder-Mead method, which is an unconstrained, derivative-free optimization technique. To keep the problem well-defined in the unconstrained setting, we slightly modified equation (3) as:

$$ \omega = \omega_d\left(1 - \sigma(\mu)\,\frac{m g r}{4\tau}\right) \tag{14} $$

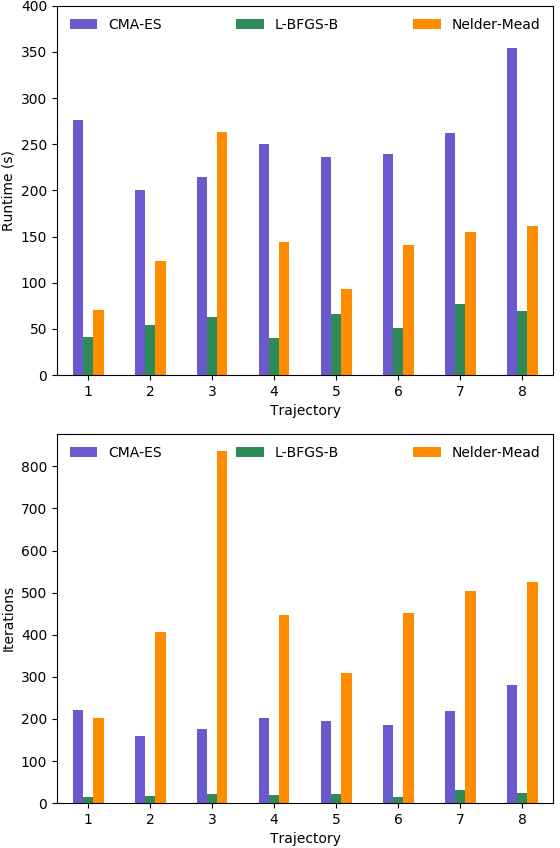

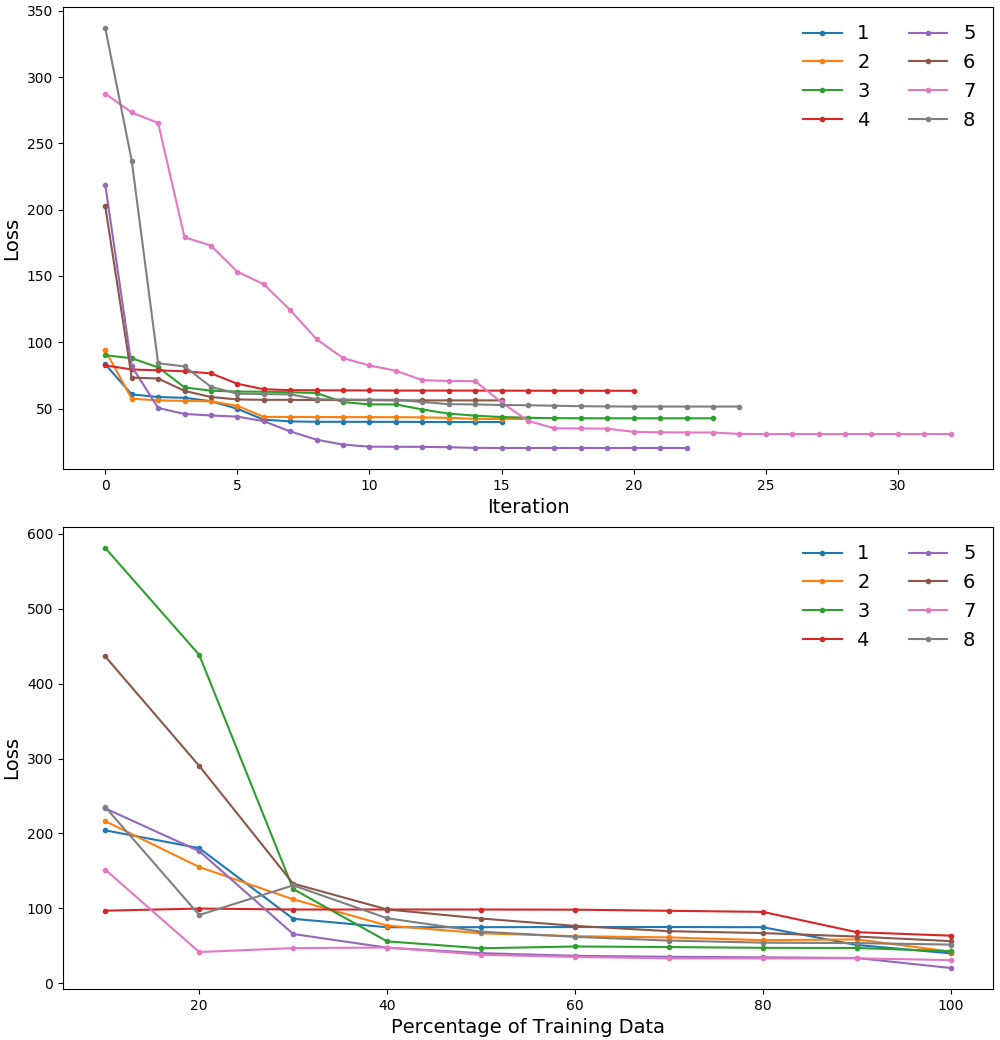

where $\sigma$ is the sigmoid function, which always lies in the interval $(0, 1)$ for all values of $\mu$. We also show the trajectories computed from the original Uranus motion model [10], defined by equation (4). Since it does not account for friction, its predictions deviate significantly from the observed trajectories. The total run times and iteration counts for our method, Nelder-Mead, and CMA-ES [29], another derivative-free optimization method, are reported in Figure 6. As shown, our method requires very few iterations to converge, and is generally faster than both Nelder-Mead, which sometimes fails to converge (in 3 of our 8 cases), and CMA-ES. We do not show the trajectories predicted using CMA-ES in Figure 5 to avoid clutter: it converges to the same answer as L-BFGS-B but takes longer, as shown in Figure 6. Figure 7 (top) shows the loss value as a function of the number of L-BFGS-B iterations. The accurate analytical gradients allow for rapid progress within the first few iterations. Figure 7 (bottom) shows the effect on the loss when the training data is reduced to the percentage shown on the X-axis. As shown, our method converges to almost the final loss value with only a fraction of the total data, which makes it data-efficient and potentially applicable in real-time settings for dynamically detecting changes in terrain friction.
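The comparison between bound-constrained L-BFGS-B and Nelder-Mead on the sigmoid-reparametrized problem can be sketched with `scipy.optimize.minimize`. For brevity, the sketch uses only the $\mathcal{L}_1$ term, a hypothetical mecanum-style `J`, a made-up `K` for $mgr/(4\tau)$, and synthetic ground truth. It uses two control phases so that, for this stand-in model, all four friction values are identifiable from a single trajectory. The evaluation counts it prints describe only this toy problem, not the timings reported in Figure 6.

```python
import numpy as np
from scipy.optimize import minimize
from scipy.special import expit   # logistic sigmoid

DT, K = 0.01, 0.1962              # hypothetical step size and value of m*g*r/(4*tau)
R_W, L = 0.04, 0.2                # hypothetical wheel radius and geometry term
J = (R_W / 4.0) * np.array([[1.0, 1.0, 1.0, 1.0],        # stand-in 3x4 kinematic matrix
                            [-1.0, 1.0, 1.0, -1.0],
                            [-1.0 / L, 1.0 / L, -1.0 / L, 1.0 / L]])

# Hypothetical excitation: two constant-control phases of 200 PWM steps each.
omega_d = np.vstack([np.tile([10.0, 10.0, 10.0, 10.0], (200, 1)),
                     np.tile([10.0, 5.0, 10.0, 5.0], (200, 1))])
gt_steps = np.arange(0, 401, 4)
mu_true = np.array([0.2, 0.4, 0.6, 0.8])


def rollout(mu):
    """States q_k and sensitivities dq_k/dmu for the stand-in model."""
    q, S = np.zeros(3), np.zeros((3, 4))
    qs, Ss = [q], [S]
    for wd in omega_d:
        q = q + DT * (J @ (wd * (1.0 - K * mu)))  # steady-state speeds, then Euler step
        S = S + DT * J * (-K * wd)[None, :]      # sensitivity recursion
        qs.append(q)
        Ss.append(S)
    return np.array(qs), np.array(Ss)


gt_pos = rollout(mu_true)[0][gt_steps]          # synthetic stand-in for Apriltag estimates


def l1_and_grad(mu):
    qs, Ss = rollout(mu)
    r = qs[gt_steps] - gt_pos
    return np.sum(r ** 2), 2.0 * np.einsum("ki,kij->j", r, Ss[gt_steps])


# Bound-constrained, gradient-based identification with mu in [0, 1].
res_lbfgs = minimize(l1_and_grad, x0=np.full(4, 0.5), jac=True, method="L-BFGS-B",
                     bounds=[(0.0, 1.0)] * 4, options={"ftol": 1e-12, "gtol": 1e-10})

# Derivative-free baseline on the unconstrained, sigmoid-reparametrized problem.
res_nm = minimize(lambda z: l1_and_grad(expit(z))[0], x0=np.zeros(4), method="Nelder-Mead")
mu_nm = expit(res_nm.x)

print("L-BFGS-B:", res_lbfgs.x.round(4), "evaluations:", res_lbfgs.nfev)
print("Nelder-Mead:", mu_nm.round(4), "evaluations:", res_nm.nfev)
```

Passing `jac=True` tells SciPy that the objective returns the loss and its gradient together, so each evaluation costs a single rollout.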

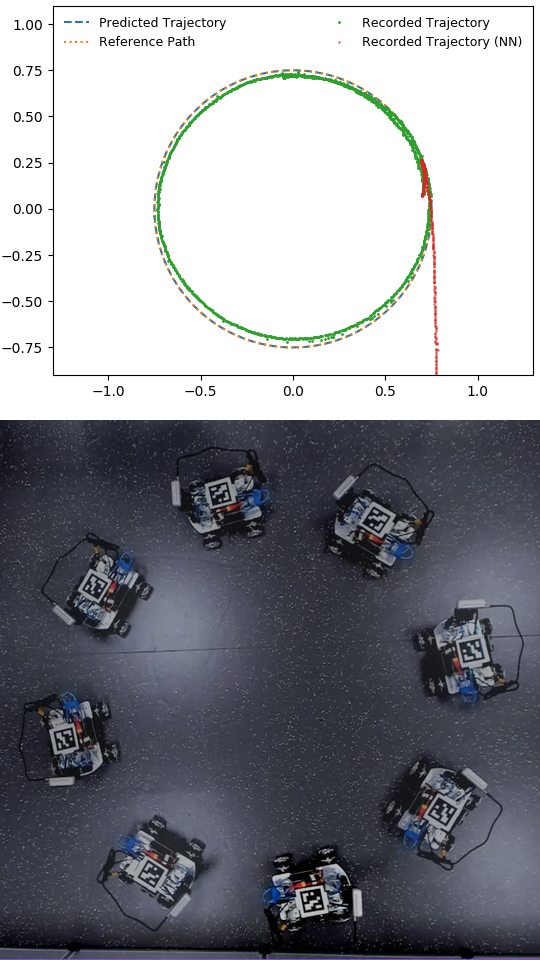

VII-B Path Following

We also used our learned model to compute control signals such that the robot can autonomously follow pre-specified curves within a given time budget $T_b$. We take as input the number of way-points $n$ that the robot should pass per second, and discretize the given curve with $n T_b$ way-points. We assume that the control signal is constant between consecutive way-points. To compute the control signals, we again use L-BFGS-B, but modify Algorithms 1 and 2 to optimize for the control signals $\omega_d$ instead of the friction coefficients $\mu$. Apart from changing the primary variable from $\mu$ to $\omega_d$, the only other change required is to use the derivative of equation (3) with respect to $\omega_d$ in Algorithm 2.
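A sketch of the path-following optimization, assuming friction values have already been identified. It keeps one set-point vector $\omega_d$ per segment between way-points and uses only a squared way-point position error rather than the full loss of equation (8). The time budget, way-point rate, circle radius, set-point bounds, `J` and `K` are all hypothetical. Since the steady-state model gives $\omega_j = (1 - K\mu_j)\,\omega_{d,j}$, the derivative $\partial\omega_j/\partial\omega_{d,j} = 1 - K\mu_j$ replaces $\partial\omega_j/\partial\mu_j$ in the chain rule.

```python
import numpy as np
from scipy.optimize import minimize

DT, K = 0.01, 0.1962                         # hypothetical step size and m*g*r/(4*tau)
R_W, L = 0.04, 0.2
J = (R_W / 4.0) * np.array([[1.0, 1.0, 1.0, 1.0],        # stand-in 3x4 kinematic matrix
                            [-1.0, 1.0, 1.0, -1.0],
                            [-1.0 / L, 1.0 / L, -1.0 / L, 1.0 / L]])
MU = np.array([0.2, 0.4, 0.6, 0.8])          # hypothetical identified friction values
C = 1.0 - K * MU                             # omega = C * omega_d, so d omega/d omega_d = C

T_BUDGET, N_PER_SEC = 8.0, 2                 # hypothetical time budget [s], way-points per second
N_WP = int(T_BUDGET * N_PER_SEC)             # number of way-points
STEPS = int(round(1.0 / (N_PER_SEC * DT)))   # PWM steps between consecutive way-points
H = STEPS * DT                               # duration of one segment [s]

# Reference circle of radius 0.3 m that starts and ends at the origin, heading kept at 0.
s = np.arange(1, N_WP + 1) / N_WP
waypoints = np.stack([0.3 * np.sin(2.0 * np.pi * s),
                      0.3 * (1.0 - np.cos(2.0 * np.pi * s)),
                      np.zeros(N_WP)], axis=1)


def loss_and_grad(flat_u):
    """Squared way-point error and its gradient w.r.t. the per-segment set-points."""
    u = flat_u.reshape(N_WP, 4)
    # With the set-point constant over a segment, its STEPS Euler steps add up
    # to one displacement H * J @ (C * u_i).
    disp = H * (u * C) @ J.T
    q = np.cumsum(disp, axis=0)                  # pose at each way-point time
    r = q - waypoints
    tail = np.cumsum(r[::-1], axis=0)[::-1]      # sum of residuals from segment i onward
    grad = 2.0 * H * (tail @ J) * C              # chain rule through speeds and poses
    return np.sum(r ** 2), grad.ravel()


res = minimize(loss_and_grad, x0=np.zeros(N_WP * 4), jac=True, method="L-BFGS-B",
               bounds=[(-30.0, 30.0)] * (N_WP * 4),
               options={"ftol": 1e-14, "gtol": 1e-10})
omega_d_plan = res.x.reshape(N_WP, 4)            # one set of wheel set-points per segment
```

Because this stand-in model is linear in $\omega_d$, the problem is a bounded least-squares fit that could also be solved directly; L-BFGS-B is used here to mirror the text and to enforce the bounds on the set-points.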

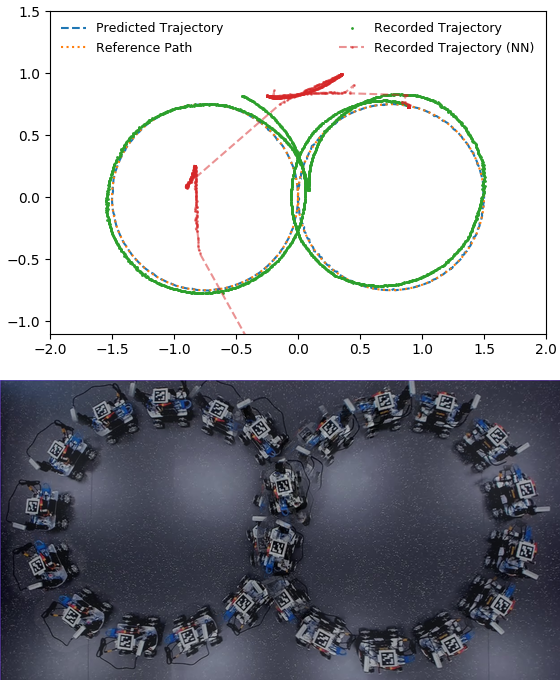

Figures 8 and 9 illustrate our results when the specified path is a circle and a more challenging “figure 8”, respectively. Shown are the reference path, the path predicted by using the control signals computed from our learned model, and the real robot trajectory estimated with Apriltags after applying these control signals. Our learned model is accurate enough for the robot to follow the specified path closely. To test the robustness of our model, we parametrized the figure-8 curve such that the robot drives the right lobe backwards and the left lobe forwards. The slight overshoot of the actual trajectory beyond the specified path is to be expected, as we only optimized for position constraints, and not for velocity constraints, when computing the control signals.

For comparison, we also trained a purely data-driven dynamics model using a neural network, whose input was the difference in generalized position between the current state and the next state, and whose output was the applied control signals. It had 3 input nodes, 32 hidden nodes, and 4 output nodes. The output was normalized to a fixed interval during training, and rescaled at test time. We trained this neural network on the recorded trajectories shown in Figure 5, and used it to predict the control signals required to move between consecutive way-points. These results are shown in red in Figures 8 and 9 (see also the accompanying video). The resulting motion is very fast, and the robot quickly leaves the field of view. We conclude that the training data is not sufficient for the neural network to learn the correct robot dynamics. In contrast, our method benefits from the data efficiency of analytical models.

VIII Conclusion and Future Work

We presented the design of a low-cost mobile robot, as well as an analytical model that closely describes real-world recorded trajectories. To estimate unknown friction coefficients, we designed a hybrid approach that combines the data efficiency of differentiable physics engines with the flexibility of data-driven neural networks. Our proposed method is computationally more efficient than black-box optimization methods, and more accurate than a purely data-driven neural network trained on the same small dataset. Using our learned model, we also showed that the robot can drive autonomously along pre-specified paths.

In the future, we would like to improve our analytical model to also account for control signals that are not powerful enough to induce rotation of the wheels. In such cases, the wheel is not completely static and can still turn during robot motion, due to inertia. This causes the robot to change orientations in a manner that cannot be predicted by our current model. Accounting for such control signals would allow for more versatile autonomous control of our robot.

References

- [1] M. Banzi, Getting Started with Arduino, ill ed. Sebastopol, CA: Make Books - Imprint of: O’Reilly Media, 2008.

- [2] C. A. Hamilton, BeagleBone Black Cookbook. Packt Publishing, 2016.

- [3] G. Halfacree and E. Upton, Raspberry Pi User Guide, 1st ed. Wiley Publishing, 2012.

- [4] Intel Next Unit of Computing (NUC). [Online]. Available: https://en.wikipedia.org/wiki/Next_Unit_of_Computing.

- [5] NVIDIA Jetson Nano Developer Kit. [Online]. Available: https://developer.nvidia.com/embedded/jetson-nano-developer-kit.

- [6] Intel Realsense Depth & Tracking Cameras. [Online]. Available: https://www.intelrealsense.com/.

- [7] Adeept: High Quality Arduino & Raspberry Pi Kits. [Online]. Available: https://www.adeept.com/.

- [8] N. Seegmiller, F. Rogers-Marcovitz, G. A. Miller, and A. Kelly, “Vehicle model identification by integrated prediction error minimization,” International Journal of Robotics Research, vol. 32, no. 8, pp. 912–931, July 2013.

- [9] N. Seegmiller and A. Kelly, “Enhanced 3d kinematic modeling of wheeled mobile robots,” in Proceedings of Robotics: Science and Systems, July 2014.

- [10] P. F. Muir and C. P. Neuman, “Kinematic modeling for feedback control of an omnidirectional wheeled mobile robot,” in Autonomous Robot Vehicles, 1987.

- [11] C. Ostafew, J. Collier, A. Schoellig, and T. Barfoot, “Learning-based nonlinear model predictive control to improve vision-based mobile robot path tracking,” Journal of Field Robotics, vol. 33, 06 2015.

- [12] U. Rosolia, A. Carvalho, and F. Borrelli, “Autonomous racing using learning model predictive control,” CoRR, vol. abs/1610.06534, 2016. [Online]. Available: http://arxiv.org/abs/1610.06534

- [13] C. Xie, S. Patil, T. Moldovan, S. Levine, and P. Abbeel, “Model-based reinforcement learning with parametrized physical models and optimism-driven exploration,” in Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), 2016.

- [14] C. Ordonez, N. Gupta, B. Reese, N. Seegmiller, A. Kelly, and E. G. Collins, “Learning of skid-steered kinematic and dynamic models for motion planning,” Robotics and Autonomous Systems, vol. 95, pp. 207–221, 2017. [Online]. Available: http://www.sciencedirect.com/science/article/pii/S0921889015302207

- [15] K. Iagnemma, Shinwoo Kang, H. Shibly, and S. Dubowsky, “Online terrain parameter estimation for wheeled mobile robots with application to planetary rovers,” IEEE Transactions on Robotics, vol. 20, no. 5, pp. 921–927, 2004.

- [16] R. L. Williams, B. E. Carter, P. Gallina, and G. Rosati, “Dynamic model with slip for wheeled omnidirectional robots,” IEEE Transactions on Robotics and Automation, vol. 18, no. 3, pp. 285–293, 2002.

- [17] J. Swevers, C. Ganseman, D. B. Tukel, J. De Schutter, and H. Van Brussel, “Optimal robot excitation and identification,” IEEE TRO-A, vol. 13, no. 5, pp. 730–740, 1997.

- [18] L. Ljung, Ed., System Identification (2nd Ed.): Theory for the User. Upper Saddle River, NJ, USA: Prentice Hall PTR, 1999.

- [19] D. Nguyen-Tuong and J. Peters, “Using model knowledge for learning inverse dynamics,” in ICRA. IEEE, 2010, pp. 2677–2682.

- [20] J. Degrave, M. Hermans, J. Dambre, and F. Wyffels, “A differentiable physics engine for deep learning in robotics,” CoRR, vol. abs/1611.01652, 2016.

- [21] A. Kloss, S. Schaal, and J. Bohg, “Combining learned and analytical models for predicting action effects,” CoRR, vol. abs/1710.04102, 2017.

- [22] C. Schenck and D. Fox, “Spnets: Differentiable fluid dynamics for deep neural networks,” CoRR, vol. abs/1806.06094, 2018.

- [23] M. Toussaint, K. R. Allen, K. A. Smith, and J. B. Tenenbaum, “Differentiable physics and stable modes for tool-use and manipulation planning,” in Proc. of Robotics: Science and Systems (R:SS 2018), 2018.

- [24] F. de Avila Belbute-Peres and Z. Kolter, “A modular differentiable rigid body physics engine,” in Deep Reinforcement Learning Symposium, NIPS, 2017.

- [25] I. Mordatch, E. Todorov, and Z. Popović, “Discovery of complex behaviors through contact-invariant optimization,” ACM Trans. Graph., vol. 31, no. 4, pp. 43:1–43:8, July 2012. [Online]. Available: http://doi.acm.org/10.1145/2185520.2185539

- [26] Autonomous Racing Robot With an Arduino and a Raspberry Pi. [Online]. Available: https://becominghuman.ai/autonomous-racing-robot-with-an-arduino-a-raspberry-pi-and-a-pi-camera-3e72819e1e63.

- [27] R. Rojas, “Models for DC motors,” Unpublished Document. [Online]. Available: http://www.inf.fu-berlin.de/lehre/WS04/Robotik/motors.pdf

- [28] J. Wang and E. Olson, “Apriltag 2: Efficient and robust fiducial detection,” in Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), October 2016.

- [29] N. Hansen, S. D. Müller, and P. Koumoutsakos, “Reducing the time complexity of the derandomized evolution strategy with covariance matrix adaptation (CMA-ES),” Evol. Comput., vol. 11, no. 1, pp. 1–18, Mar. 2003.