Model-Free Design of Stochastic LQR Controller from Reinforcement Learning and Primal-Dual Optimization Perspective

Abstract

To further understand the underlying mechanism of various reinforcement learning (RL) algorithms and also to better use the optimization theory to make further progress in RL, many researchers begin to revisit the linear-quadratic regulator (LQR) problem, whose setting is simple and yet captures the characteristics of RL. Inspired by this, this work is concerned with the model-free design of stochastic LQR controller for linear systems subject to Gaussian noises, from the perspective of both RL and primal-dual optimization. From the RL perspective, we first develop a new model-free off-policy policy iteration (MF-OPPI) algorithm, in which the sampled data is repeatedly used for updating the policy to alleviate the data-hungry problem to some extent. We then provide a rigorous analysis for algorithm convergence by showing that the involved iterations are equivalent to the iterations in the classical policy iteration (PI) algorithm. From the perspective of optimization, we first reformulate the stochastic LQR problem at hand as a constrained non-convex optimization problem, which is shown to have strong duality. Then, to solve this non-convex optimization problem, we propose a model-based primal-dual (MB-PD) algorithm based on the properties of the resulting Karush-Kuhn-Tucker (KKT) conditions. We also give a model-free implementation for the MB-PD algorithm by solving a transformed dual feasibility condition. More importantly, we show that the dual and primal update steps in the MB-PD algorithm can be interpreted as the policy evaluation and policy improvement steps in the PI algorithm, respectively. Finally, we provide one simulation example to show the performance of the proposed algorithms.

keywords:

Stochastic linear-quadratic regulator; model-free design; reinforcement learning; optimization; duality., , , ,

1 Introduction

Reinforcement learning (RL), a branch of machine learning, aims to learn policies to optimize a long-term decision-making process in a dynamic environment [1, 2]. In general, there are two main characteristics, which are also readily considered as the difficulties of the RL setting [1]. First, the value of a state is the total amount of reward/cost an agent expects to accumulate over the future, starting from that state. That is, early decisions affect the outcomes both near and far in the future. Second, the learner’s knowledge about the environment is incomplete, e.g., the underlying mechanism governing the dynamics of the state-transition trajectories is unknown. To overcome these difficulties, a lot of effective RL algorithms have been developed [3, 4, 5], and these algorithms have been successfully used in numerous fields, including smart grids [6], multi-robot systems [7], intelligent transportation[8], and networked control [9]. From these works, we know that in spite of the fact that RL cannot be regarded as a standard optimization problem due to the lack of an explicit objective function [10], it still has strong ties to the optimization formulation, since its goal is to optimize the long-term cumulative performance [11]. In view of this, existing fruitful optimization algorithms and theories might enable researchers to make further progress in RL, so that the integration of elementary RL methods with optimization frameworks is worthwhile to study. Some efforts towards this direction have been made, see, for example, [12, 13].

It is worth mentioning that following this line, numerous researchers begin to revisit the linear-quadratic regulator (LQR) problem [14, 15, 16, 17, 18, 19], the task of which is to determine an optimal feedback controller minimizing a quadratic cost function for linear systems [20]. One important reason for the revisit of the LQR problem is that it is simple and yet captures the two characteristics of RL problems mentioned in the previous paragraph. Such properties of the LQR problem provide convenience for understanding the behavior and the underlying mechanism of various RL algorithms. Another reason is that the traditional methods for addressing the LQR problem require to directly solve an algebraic Riccati equation (ARE), which depends upon exact information of the system matrices; however, the exact system matrices are usually unknown in real applications. Although model identification provides a way to obtain the estimated system model, the propagation and accumulation of modeling errors may cause undesirable system behaviors. Therefore, it is meaningful to study the model-free design of the LQR controller with the consideration of the integration of RL algorithms with optimization theories. Some relevant works can be found in [15, 16, 19, 18, 17].

Specifically, in [15] and [16], the authors reformulate the LQR problem as a non-convex optimization problem, and merge ideas from optimal control theory, mathematical optimization, and sampled-based RL to solve it. To seek the solutions to the resulting non-convex optimization problem, model-free stochastic policy gradient algorithms along with zeroth-order optimization techniques are developed with provable global convergence [15] and explicit convergence rate [16]. The work [17] considers distributed RL for decentralized LQR problem, and proposes a zeroth-order distributed policy optimization algorithm to learn the local optimal controllers in a distributed manner, by leveraging the ideas of policy gradient, zeroth-order optimization, as well as consensus algorithms. Note that in the above mentioned works, the key to realizing model-free control is to use the zeroth-order optimization approach to estimate the policy gradient. This approach often suffers from large variance, as it involves the randomness of an entire trajectory. To address this difficulty, some different algorithms have been proposed [18, 19]. The work [18] develops a model-free approximate policy iteration algorithm based on Q-function, in which the way to guarantee a good regret is also discussed. In [19], an on-policy actor-critic algorithm, which is an online alternating update algorithm for bilevel optimization, is developed with a non-asymptotic convergence analysis, and then it is extended to the off-policy case to alleviate the data-hungry problem.

Despite the fact that extensive research has been carried out on the LQR problem when considering the integration of RL frameworks with optimization techniques, these works, such as [15, 16, 19, 18, 17], mainly focus on the theoretical understanding of the performance of common RL algorithms. They do not provide any explanations for the proposed RL algorithms from the perspective of optimization or establish any relationship between RL and optimization-based methods. Different from the above mentioned works, the authors in [21] reformulate the LQR problem as a new optimization problem, and further explain its duality results from a Q-learning viewpoint. The duality results proposed in [21] are based on the results given in [22], where the duality for the stochastic LQR problem pertaining to linear systems with stochastic noise is investigated in terms of semidefinite programming (SDP). These works motivate some questions. Can we formulate the stochastic LQR problem as an optimization problem whose optimal points can be interpreted from the perspective of various RL algorithms? Can we explain the RL algorithms used to solve stochastic LQR problem from the perspective of optimization?

To answer these questions, we investigate the model-free design of stochastic LQR controller from the perspective of both RL and optimization. We first solve the stochastic LQR problem in a model-free way from the perspective of RL. Then, we reconsider this problem by some reformulation and transformations, and solve it from the perspective of primal-dual optimization. Besides that, we also provide a theorem to show that the algorithms developed from the above two perspectives are equivalent in the sense that there is a corresponding relation in the involved iterations. The contributions of this work are summarized as follows:

-

1.

To solve the stochastic LQR problem, we develop a model-free off-policy policy iteration (MF-OPPI) algorithm and a model-free primal-dual (MB-PD) algorithm from the perspective of RL and primal-dual optimization, respectively. Both algorithms can be implemented in a model-free way, so that one can circumvent model identification, which may cause undesirable behaviors due to the propagation and accumulation of modeling errors. More importantly, we show that the dual and primal update steps in the MB-PD algorithm can be interpreted as the policy evaluation and policy improvement steps in the classical policy iteration (PI) algorithm, respectively. This result provides a novel primal-dual optimization perspective to understand the classical PI algorithm.

-

2.

From the perspective of RL, we develop a new MF-OPPI algorithm to address the stochastic LQR problem, in which the data generated by exerting the behavior policy on the real system is repeatedly used to evaluate the target policy so as to alleviate the data-hungry problem to some extent. Compared with the off-policy actor-critic algorithm proposed in [19], the newly developed MF-OPPI algorithm is simple and easy to implement, since it does not require to use neural networks for function approximation.

-

3.

From the optimization perspective, we first reformulate the stochastic LQR problem as a constrained non-convex optimization problem, based on the works [21] and [22]. However, due to the consideration of the influence of stochastic noise and the relation with RL algorithms, the proposed optimization formulation is different from the ones given in [21] and [22]. Such differences bring about some challenges in the equivalent formulation of primal-dual problems as well as the analysis for the strong duality. To address the resulting constrained non-convex optimization problem, we develop a new MB-PD algorithm with a model-free implementation, by using the properties of the resulting Karush-Kuhn-Tucker (KKT) conditions. This algorithm circumvents the requirement of probing noise exerted on the system for persistently exciting, which is necessary for the newly proposed MF-OPPI algorithm and other RL-based algorithms proposed in [23, 24, 25], thereby avoiding undesirable oscillation.

The rest of this paper is organized as follows. We first formulate the problem of interest and provide some preliminaries in Section 2. Subsequently, we investigate this problem from the perspective of RL and primal-dual optimization in Section 3 and Section 4, respectively. Then, in Section 5, we show that the algorithms developed from the above two perspectives are equivalent in the sense that there is a corresponding relation in the involved iterations. Next, we provide a simulation example in Section 6. Some concluding remarks are finally given in Section 7.

Notations: We use and to denote an identity matrix and a null matrix in , respectively. Denote by a zero vector of dimensions. A set of symmetric matrices of dimensions is denoted by . () is a set of symmetric positive semidefinite (positive definite) matrices. For a matrix , we use to denote the element in the -th row and the -th column. is the trace of a matrix , and denotes its spectral radius. A symmetric positive (semi-)definite matrix is denoted by .

For a symmetric matrix , denotes a row vector consisting of diagonal entries and non-diagonal terms , , . Specifically, . For a symmetric matrix , denotes a column vector composed of diagonal entries of and distinct sums , i.e., . For a non-square matrix , consists of the stack of each column’s elements. That is, .

2 Problem Formulation and Preliminaries

Consider the following discrete-time linear time-invariant system

| (1) |

where , is the state vector of the system plant, is the control action, is the system noise generated according to the Gaussian distribution , and and are constant matrices of appropriate dimensions.

The initial state is denoted by , which is assumed to be a random variable. In this paper, we consider the state-feedback control law, that is, , where is the feedback gain. denotes the solution to the dynamical system (1) under the control input while starting from . It is assumed that the noise is independent of the initial state as well as the noise for all .

Under the state-feedback control law , the cost function is denoted by

| (6) |

where is the discount factor, and .

Define , which is the set of stabilizing state-feedback gains for the matrix pair . Now, we are ready to formally give the problem of interest, which is called the stochastic LQR problem.

Problem 1 (Stochastic LQR Problem).

The optimal feedback gain is defined as . The corresponding optimal cost for a given initial state is denoted by .

The followings are some assumptions that will be used in the remaining contents.

Assumption 1.

Assume that

-

1.

is not known;

-

2.

An initial stabilizing feedback gain is known;

-

3.

and ;

-

4.

The matrix pair is stabilizable;

-

5.

The matrix pair is detectable, where is a matrix such that .

For an stabilizing feedback gain , define the value function as

| (7) |

The optimal value function is given by

| (8) |

Lemma 1 ([26, Lemma 3]).

For any stabilizing state-feedback gain , the stochastic LQR problem, i.e., Problem 1, is well-posed111The stochastic LQR problem is said to be well-posed if the optimal value function satisfies . and the corresponding value function is

| (9) |

where is the unique solution to the Lyapunov equation

| (10) |

From the definition of the value function (7), one has

| (11) |

which is called the Bellman equation. Define the Hamiltonian as

The first-order necessary condition for optimality gives

It follows that the unique optimal control gain is given by

| (12) |

with being the unique solution to the following ARE

| (13) |

By substituting the optimal feedback gain (12) into the value function (11), one can obtain that

| (18) |

where

| (21) |

In the following analysis, let , , and .

Based on the above analysis, we know that calculating the optimal feedback gain requires both the system matrix and the control input matrix . In the following two sections, we provide two approaches from a RL-based view and an optimization-based view, respectively, to compute the optimal feedback gain without using the matrices and .

3 RL-Based Method

The goal of this section is to solve Problem 1 by designing RL algorithms.

3.1 Classical PI Algorithm

| (22) |

| (23) |

Note that by replacing with , the ARE (13) is identical to that obtained from a standard LQR problem [27, Chapter 2.1.4], in which the dynamical system is not influenced by the stochastic noise. There have been various algorithms to seek the solutions to the ARE, one of which is the policy iteration algorithm. A classical PI algorithm proposed in [27, Chapter 2.2] to solve (13) is given below, cf. Algorithm 1.

The iterations (22) and (23) in Algorithm 1 rely upon repeatedly solving the Lyapunov equation (10). Hence, Algorithm 1 is an offline algorithm that requires complete knowledge of the system matrices and . Note that Algorithm 1 is also called Hewer’s algorithm, and it has been shown to converge to the solution of the ARE (13) in [28].

3.2 MF-OPPI Algorithm

In this subsection, we intend to propose an off-policy algorithm that can calculate the optimal feedback gain (12) without using the system matrices.

To this end, we first rewrite the original system (1) as

| (24) |

where is the target policy being learned and updated by iterations, and is the behavior policy that is exerted on the system to generate data for learning.

which is termed the off-policy Bellman equation.

Then, we use (LABEL:off-2) for policy evaluation, and propose an MF-OPPI algorithm, i.e., Algorithm 2.

| (26) | ||||

| (27) |

Theorem 1.

Proof.

Substituting (24) into the left-hand side of (LABEL:off-PE), it follows that

Therefore, it holds that

Since , one can obtain that for ,

| (28) | ||||

From the dynamics (1), it holds that for ,

Due to the positive definiteness of and , the above equation implies that is positive definite. Thus, one can obtain from (LABEL:off-3) that

| (29) |

which is exactly the update in policy evaluation step of Algorithm 1.

3.3 Implementation of Algorithm 2

To implement Algorithm 2, a natural question is how to solve the matrices , , and from (LABEL:off-PE). In this subsection, we provide an effective way for the implementation of Algorithm 2, where a numerical average is adopted to approximate the mathematical expectation and a batch least square (BLS) method [29] is employed to estimate the unknown matrices.

Specifically, by vectorization, the policy evaluation step (LABEL:off-PE) in Algorithm 2 becomes

| (30) |

The equation (3.3) has unknown parameters. Thus, at least sample points are required to solve , , and from (3.3). Sample the data generated under the behavior policy for time steps, and define

| (35) | ||||

| (39) |

with

which can be solved by

| (40) | ||||

Note that to guarantee the solvability of the BLS estimator (40), a probing noise is required to add into the behavior policy to guarantee the invertibility of . It is worthwhile to point out that adding a probing noise does not influence the iteration results of Algorithm 2, which can be regarded as an advantage of the off-policy algorithm compared with the on-policy one [24]. The detailed implementation of Algorithm 2 is given in Algorithm 3.

Algorithm 3 includes two main phases. In the first phase, we exert the behavior policy on the real system for time steps, and sample the resulting system states and control actions. Then, a numerical average of trajectories is used to calculate the mathematical expectation. In the second phase, we implement policy evaluation and policy improvement steps by reusing the data sampled in the first phase. The reuse of data is an important advantage of the proposed MF-OPPI algorithm when compared with the on-policy RL algorithms, since the latter are required to collect the state-input data when applying every iterated control policies [5, 30]. Therefore, the proposed MF-OPPI algorithm is able to alleviate the data-hungry problem to some extent.

4 Optimization-Based Method

In this section, we reformulate Problem 1 as a constrained optimization problem, and resolve it from the primal-dual optimization perspective.

4.1 Problem Reformulation

For technical reasons, we need to make some modifications on Problem 1. To do this, some new notations are required.

We introduce the augmented state vector , and the resulting augmented system is described by

| (41) |

where and .

The following is a useful property that will be used in the remaining part of this paper.

Lemma 2 (Property of Spectral Radius[21, Lemma 1]).

It holds that .

Let for be different samples from the random variable . Let for be different initial stabilizing control laws. different initial states for the augmented system (41) are denoted by for . Denote by the solution of (41) starting from the initial state and evolving under the control , .

A new cost function is defined as

| (42) |

Note that different from the cost function defined in (6), where the initial state-feedback control law is considered, the initial control used in is arbitrary, as long as it is stabilizable.

The new problem is formally formulated as below.

Problem 2 (Modified Stochastic LQR Problem).

Suppose that , , and for , are chosen such that , with being a positive constant. The following problem,

is called a modified stochastic LQR problem. The corresponding optimal feedback gain is defined as . The resulting optimal value is denoted by .

Proposition 1.

The optimal solution obtained by solving Problem 2 is unique, and it is identical to .

Proof.

From the results in Section 2, we know that although has different values under different initial state , the optimal control gain , whose explicit form is given in (12), does not rely upon . It follows that for any initial states and , , satisfying .

Based on the definitions of and , an algebraic manipulation leads to

where . Since the last two terms on the right-hand side of the above equation are constants, it holds that .

From the above, it follows that . Moreover, the uniqueness of guarantees that is unique. ∎

The followings are two conclusions that will be frequently used in the following contents.

Lemma 3 (Lyapunov Stability Theorems [31, Chapter 3]).

Let . Then,

-

1.

if , then for each given matrix , there exists a unique matrix satisfying ;

-

2.

if and only if for each given matrix , there exists a unique matrix such that .

4.2 Primal-Dual Formulation

From the previous subsection, we know that Problem 2 is a non-convex optimization problem with the objective function , the static constraint , and the dynamic constraint (1). In this subsection, we propose an equivalent non-convex optimization formulation of Problem 2, and show that its dual gap is zero.

We first give the definition of the non-convex optimization formulation.

Primal Problem 1.

Solve

| (43) | ||||

| (44) |

It is obvious to see that Primal Problem 1 is a single-objective multi-variable optimization problem consisting of a linear objective function, a linear inequality constraint, and a quadratic equality constraint [32]. Hence, it is a non-convex optimization problem.

Proposition 2.

Proof.

By the properties of trace, we rewrite the objective function of Problem 2 as

with

| (45) |

From (41), we have

| (46) |

Using the equation (46) and the fact that is the i.i.d. random noise for each , we can obtain that for any and , it holds

Thus, in (45) becomes

| (47) |

By an algebraic manipulation, one can obtain that

which implies that satisfies the Lyapunov equation (44).

Since , it follows from Lemma 2 and the second statement of Lemma 3 that if and only if there exists a unique such that the constraint (44) holds. Therefore, we can replace the constraint in Problem 2 by without changing its optimal solution.

From the above discussions, we know that if is the optimal solution of Primal Problem 1 and the corresponding optimal cost is , then it holds that and . From Lemma 2, and is a feasible point of Problem 2. Thereby, is lower bounded by the optimal value of Problem 2, i.e., . In addition, if is the unique solution of (44) with , then the resulting objective function of Primal Probelm 1 is . Thus, we can conclude that is the solution of Primal Problem 1 and . The uniqueness of can be shown by the uniqueness of . ∎

For any fixed , , define the Lagrangian function as

| (48) | ||||

where and are Lagrangian multipliers. The Lagrangian dual function is defined as

Then, the dual problem corresponding to Primal Problem 1 is defined as

where is as defined in (48).

The weak duality [32, Chapter 5] guarantees that holds, where is called the duality gap. When the duality gap is zero, we say that the optimization problem has strong duality [32, Chapter 5]. The following theorem shows that Primal Problem 1 has strong duality.

Theorem 2 (Strong Duality).

The strong duality holds for Primal Problem 1, that is, .

Proof.

See Appendix A. ∎

4.3 MB-PD Algorithm

It is well-known that for a non-convex optimization problem, if its objective and constraints functions are differentiable and it has strong duality, then any primal and dual optimal points must satisfy the corresponding KKT conditions, but not vise versa[32, Chapter 5.5.3]. However, if the solution to the KKT conditions is unique, then this solution must be the primal and dual optimal points. In view of this, we first derive the KKT conditions and show the uniqueness of their solutions in this subsection, and then design an algorithm to seek the solution to Problem 2 according to the solutions to the KKT conditions.

Lemma 4.

Suppose that is the primal optimal point and is the dual optimal point of Primal Problem 1. Then, satisfies the KKT conditions for

| (49) | |||

| (50) | |||

| (51) | |||

| (52) | |||

| (53) |

Proof.

From the conclusions in [32, Chapter 5.9.2], the KKT conditions of Primal Problem 1 are as follows:

-

1.

primal feasibility condition:

-

2.

dual feasibility condition:

-

3.

complementary slackness condition:

-

4.

stationary conditions:

By the second statement of Lemma 3, and guarantee that , which further implies that the only solution to the equation is . Hence, the KKT conditions in (49)-(53) can be obtained. According to [32, Chapter 5.5.3], the strong duality guarantees that the solutions to the KKT conditions must be the primal and dual optimal points. ∎

The following lemma is given to show that the uniqueness of the solutions to the KKT conditions (49)-(53), which guarantees that the solutions satisfying the KKT conditions must be the primal and dual optimal points.

Lemma 5.

Proof.

By observation, we can know that the sufficient condition for (53) is . To show this lemma, we first show that satisfying , , and is exactly with . Using the same argument as in the proof of Lemma 7, we have . From Proposition 1 and Proposition 2, it holds that . In view of this, we only need to show that satisfying , , and is exactly .

By substituting into (52), it follows that

Define . The above equation becomes , the expansion of which can be written as

i.e., , , and . Substituting these expressions into the definition yields that

Note that to calculate the primal optimal point , one needs to know , which is uniquely determined by solving (52). However, (52) is dependent upon the primal optimal point , which means that the stationary conditions (52) and (53) are coupled with each other, so that and cannot be obtained independently. In what follows, we propose an algorithm, cf. Algorithm 4, to iteratively solve (52) and (53). Note that once and are attained, one can uniquely determine the primal variable by solving (49).

We have the following convergence result:

Lemma 6.

Given an initial stabilizing control gain . Consider the two sequences and obtained from Algorithm 4. We have the following properties:

-

1.

is a non-increasing sequence, that is, ;

-

2.

obtained at each iteration step is stabilizing, that is, for each ;

- 3.

4.4 Model-Free Implementation of Algorithm 4

From the equation (45) that is defined in the proof of Proposition 2, can be equivalently written as

| (54) |

We truncate the time horizon by , and define

| (55) |

Note that for any , it holds that due to the positive definiteness of . In view of this, the stationary condition (52) holds if and only if , which can be rewritten as the following linear matrix equation

| (56) |

where

| (57) |

Therefore, for a fixed and stabilizing control gain , the corresponding dual variable can be obtained by solving the linear matrix equation (56).

Based on the above analysis, we give a detailed model-free implementation of the proposed MB-PD algorithm, and summarize it in Algorithm 5. Similar to Algorithm 3, the mathematical expectations are approximated by the numerical averages herein.

Note that different from the Algorithm 3, the primal-dual optimization-based algorithm developed in this section has the advantage of averting the requirement of the excitation signal, which may cause undesirable system oscillation. However, this is at the cost of the requirement of more data. Specifically, it is assumed that we can sample the state-input trajectories generated under a certain set of initial vectors which are linearly independent in .

5 Equivalency Between PI and MB-PD Algorithms

In the previous two sections, we solve the stochastic LQR problem from the perspective of RL and primal-dual optimization, respectively. The goal of this section is to show the equivalency of the algorithms developed before. Specifically, we show that classical PI and MB-PD algorithms are equivalent in the sense that there is a corresponding relation in the involved iterations. The specific relationship is described in the following theorem.

Theorem 3.

Proof.

By pre- and post-multiplying by and its transpose, one can obtain that

| (58) | ||||

Define . Then, (58) becomes

which has a unique solution since and is positive semidefinite. By observation, we know that the dual update step in Algorithm 4 is equivalent to the policy evaluation step in Algorithm 1 in the sense that .

Let with , , and . Expanding yields that

| (59) |

Expanding the left-hand side of (22) yields that

| (60) | ||||

Example 1.

Considering the following discrete-time linear system:

The weight matrices and discount factor are selected as , , and . The system noise is assumed to be generated according to the Gaussian distribution . We choose as the initial control gain matrix. Suppose that the system matrices and are known.

By implementing the classical PI algorithm, i.e., Algorithm 1, for steps, one can obtain that

From , it follows that

By simple observation and calculation, we can verify the correctness of the conclusion given in Theorem 3.

6 Simulations

In this section, we provide a numerical example to evaluate the performance of the proposed model-free algorithms.

Considering the following discrete-time linear system:

The weight matrices and discount factor are selected as , , and . The system noise is assumed to be generated according to the Gaussian distribution . When one knows the exact system matrices and , the optimal control gain can be obtained by solving the ARE (13). The result is .

6.1 Performance of Algorithm 3

When implement Algorithm 3, the initial state is randomly generated. Inspired by [24], the probing noise is considered as

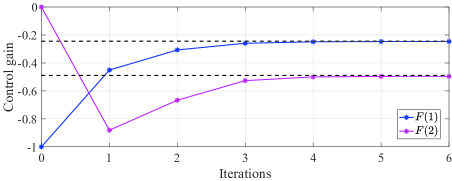

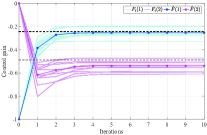

Initial stabilizing control gain is chosen as . We use the numerical average of trajectories to approximate the mathematical expectation, and sample data points at each trajectory for the BLS method. The tolerant error is . We can observe from Fig. 1 that Algorithm 3 stops after iterations and returns the estimated control gain .

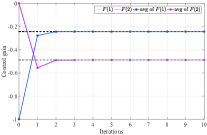

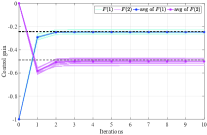

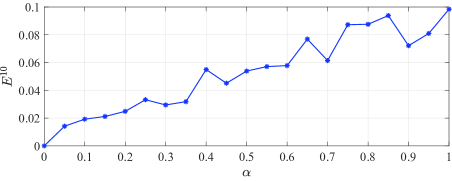

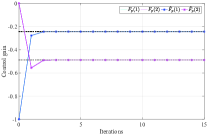

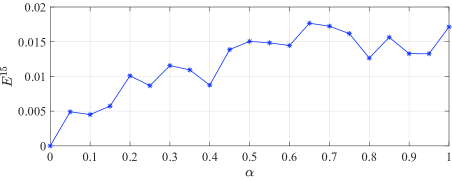

Considering that the system noise is randomly generated, we use Monte-Carlo method to validate the effectiveness of Algorithm 3, as well as to show the influence of the variance of the system noise. We implement Algorithm 3 for times, and each experiment is stopped when . The average value of the resulting control gain from experiments is denoted by , where is the control gain obtained at the -th step from the -th experiment. We use to denote the average approximation error of the -th iteration step from experiments. Fig. 2 shows the value of the control gain at each experiment as well as the resulting average gain , when the variance of the system noise is set as with being , , , and , respectively. Note that denotes the average approximation error on convergence, that is, the average deviation of the obtained control gain at the -th experiment from the optimal control gain . Fig. 3 shows the variation of the average approximation error , when is increased from to . From Fig. 2 and Fig. 3, we conclude that roughly speaking, the resulting approximation errors are increased as the increase of the variance of the system noise. That is, a stronger system noise may lead to a higher control gain approximation error. Moreover, when , obtained from Algorithm 3 converges to the optimal feedback gain .

6.2 Performance of Algorithm 5

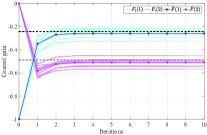

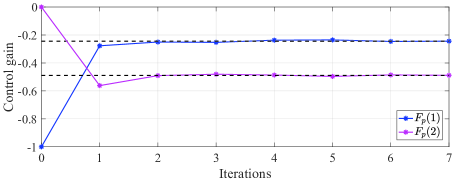

When implement Algorithm 5, we truncate the time horizon by and let . Three pairs of initial states and initial control inputs are , , , , , . The initial stabilizing control gain is chosen as . We use the numerical average of trajectories to approximate the mathematical expectation. The desired convergence precision is set as . We can observe from Fig. 4 that Algorithm 5 stops after iterations and returns the estimated control gain .

Similar to the previous subsection, we use Monte-Carlo method to discuss the influence of the system noise herein. We implement Algorithm 5 for times, and stop each experiment when . We use to denote the average value of the resulting control gain from experiments, where is the control gain obtained at the -th step from the -th experiment. Correspondingly, the average deviation of the approximation error at the -th iteration step obtained from experiments is denoted by . In Fig. 2, we show the value of at each experiment as well as the resulting average value , when the variance of the system noises is set as , , , and , respectively. By observation, we know that in these four cases, is in a small neighborhood of the optimal feedback gain after about iterations, and the range of the neighborhood is increased as the increase of the variance of the system noise. This implies that when the variance of the system noise is large, Algorithm 5 may not converge under a small convergence precision. In spite of this, we can also see from Fig. 2 that the average control gain is close to the optimal one. Let , which is the average derivation of the control gain obtained at the -th iteration step of the -th experiment from the optimal control gain . Fig. 6 shows the values of when is increased from to . Roughly speaking, the resulting approximation errors are increased as the increase of the variance of the system noise.

7 Conclusion

In this paper, we have revisited the stochastic LQR problem, and have developed a MF-OPPI algorithm and a MB-PD algorithm from the perspective of RL and primal-dual optimization, respectively. Both algorithms can be implemented without using the information of system matrices, such that one can circumvent model identification, which may cause undesirable behaviors due to the propagation and accumulation of modeling errors. Furthermore, we have shown that the dual and primal update steps in the MB-PD algorithm can be interpreted as the policy evaluation and policy improvement steps in the classical PI algorithm. This advances our understanding of common RL algorithms and might contribute to develop various RL algorithms based on optimization formulations.

Appendix A A Proof of Theorem 2

From the proof of Proposition 2, we know that the constraints and are equivalent. Based on this, we introduce the following auxiliary problem for further analysis:

Auxiliary Problem 1.

Solve

| (61) | ||||

| (62) |

This is a non-convex optimization problem since the set is not convex and the equality constraint (62) is a quadratic function of . Note that we can easily deduce from the proof of Proposition 2 that the optimal value of Auxiliary Problem 1 is equivalent to that of Problem 2, i.e., .

The Lagrangian function of Auxiliary Problem 1 is defined as

| (63) | ||||

where is the Lagrange multiplier. The corresponding Lagrangian dual function is defined as

We take the constraint as the domain of the variable in the Lagrangian formulation, instead of directly writing it in the Lagrangian function, since the constraint is not an explicit equality or inequality constraint.

Then, the dual problem of Auxiliary Problem 1 is given as follows:

where is as defined in (LABEL:Lagrangian-1).

Since and , it holds that . By weak duality, we have . In view of this, we complete the proof of Theorem 2 by the following two parts:

- 1.

- 2.

A.1 Proof for

We first introduce another auxiliary optimization problem which can be shown to be equivalent to the modified stochastic LQR problem, i.e., Problem 2.

Auxiliary Problem 2.

Solve

| (64) | ||||

| (65) |

Proposition 3.

Proof.

By the equation (46) and the fact that is the i.i.d. random noise for each , we can obtain that for any , it holds that

Therefore, the objective function of Problem 2 becomes

with

We can easily verify that both and satisfy the Lyapunov equation (65). By the first statement of Lemma 3, there exists a unique such that , which implies that .

Since the optimal solution of Auxiliary Problem 2 is one feasible point of Problem 2, it holds that . Furthermore, if , and is the unique solution to the constraint (65), then the resulting objective function of Auxiliary Problem 2 is exactly . Therefore, we can conclude that is the solution of Auxiliary Problem 2 and . The uniqueness of can be obtained by the uniqueness of . ∎

To show the strong duality of Auxiliary Problem 1, the following lemmas will be used.

Lemma 7.

The optimal point of Auxiliary Problem 2 satisfies the following:

-

1.

;

-

2.

, , and .

Proof.

Based on the definitions in (12) and (21), direct calculation yields that . Then, from (21), it follows that . Since , i.e., , there exists a unique satisfying the above equation.

Now, we are ready to show the strong duality of Auxiliary Problem 1.

Lemma 9.

The dual gap for Auxiliary Problem 1 is zero, that is, .

Proof.

For a given matrix , the Lagrangian dual function is

where .

Next, we show that the optimal solution of Auxiliary Problem 2 is an element of , and thus the set is nonempty. By Lemma 7, , where . Then, by Lemma 8, it holds that for all .

Therefore, the dual problem is equivalent to

| (66) | ||||

For , we have

| (67) |

A.2 Proof for

Lemma 10.

There holds .

Proof.

For any given , , the Lagrangian dual function is

where .

Therefore, the dual problem corresponding to Primal Problem 1 is equivalent to

| (68) | ||||

We can observe from (68) that affects the dual optimal value by adjusting the size of the set . To obtain the supremum of the Lagrangian dual function, the constraint should be relaxed as much as possible. We can easily observe from the definition of the set that its maximum is obtained when . Furthermore, if , then it holds that . As a result, it yields that

Since , it holds that , which implies that . ∎

References

- [1] R. S. Sutton, A. G. Barto. (1998). Reinforcement Learning: An introduction, MIT Press, Cambridge, MA, USA.

- [2] D. P. Bertsekas. (2019). Reinforcement Learning and Optimal Control, Athena Scientific, Nashua, USA.

- [3] K. Arulkumaran, M. P. Deisenroth, M. Brundage, A. A. Bharath. (2017). Deep reinforcement learning: A brief survey, IEEE Signal Processing Magazine, 34 (6), 26–38.

- [4] B. Recht. (2019). A tour of reinforcement learning: The view from continuous control, Annual Review of Control, Robotics, and Autonomous Systems, 2, 253–279.

- [5] M. Li, J. Qin, N. M. Freris, D. W. C. Ho, Multiplayer Stackelberg-Nash game for nonlinear system via value iteration-based integral reinforcement learning, IEEE Transactions on Neural Networks and Learning Systems, DOI: 10.1109/TNNLS.2020.3042331.

- [6] F. Li, J. Qin, W. X. Zheng. (2019). Distributed Q-learning-based online optimization algorithm for unit commitment and dispatch in smart grid, IEEE Transactions on Cybernetics, 50 (9), 4146–4156.

- [7] B. Wang, Z. Liu, Q. Li, A. Prorok. (2020). Mobile robot path planning in dynamic environments through globally guided reinforcement learning, IEEE Robotics and Automation Letters, 5 (4), 6932–6939.

- [8] A. Haydari, Y. Yilmaz, Deep reinforcement learning for intelligent transportation systems: A survey, IEEE Transactions on Intelligent Transportation Systems, DOI: 10.1109/TITS.2020.3008612.

- [9] A. S. Leong, A. Ramaswamy, D. E. Quevedo, H. Karl, L. Shi, Deep reinforcement learning for wireless sensor scheduling in cyber–physical systems, Automatica, DOI: https://doi.org/10.1016/j.automatica.2019.108759.

- [10] A. Naik, R. Shariff, N. Yasui, R. S. Sutton, Discounted reinforcement learning is not an optimization problem, arXiv preprint arXiv:1910.02140.

- [11] R. Zhang, T. Yu, Y. Shen, H. Jin, C. Chen. (2019). Text-based interactive recommendation via constraint-augmented reinforcement learning, in: Advances in Neural Information Processing Systems, Vancouver, Canada, pp. 15214–15224.

- [12] Z. Qin, W. Li, F. Janoos. (2014). Sparse reinforcement learning via convex optimization, in: International Conference on Machine Learning, Beijing, China, pp. 424–432.

- [13] N. Vieillard, O. Pietquin, M. Geist, On connections between constrained optimization and reinforcement learning, arXiv preprint arXiv:1910.08476.

- [14] H. Schlüter, F. Solowjow, S. Trimpe, Event-triggered learning for linear quadratic control, IEEE Transactions on Automatic Control, DOI: 10.1109/TAC.2020.3030877.

- [15] M. Fazel, R. Ge, S. M. Kakade, M. Mesbahi. (2018). Global convergence of policy gradient methods for the linear quadratic regulator, in: International Conference on Machine Learning, Stockholm, Sweden, pp. 1467–1476.

- [16] D. Malik, A. Pananjady, K. Bhatia, K. Khamaru, B. L. Peter, W. J. Martin. (2019). Derivative-free methods for policy optimization: Guarantees for linear quadratic systems, in: The 22nd International Conference on Artificial Intelligence and Statistics, Stockholm, Sweden, pp. 2916–2925.

- [17] Y. Li, Y. Tang, R. Zhang, N. Li, Distributed reinforcement learning for decentralized linear quadratic control: A derivative-free policy optimization approach, DOI: arXiv preprint arXiv:1912.09135.

- [18] K. Karl, S. Tu. (2019). Finite-time analysis of approximate policy iteration for the linear quadratic regulator, in: International Conference on Machine Learning, Vancouver, Canada, pp. 8514–8524.

- [19] Z. Yang, Y. Chen, M. Hong, Z. Wang. (2019). Provably global convergence of actor-critic: A case for linear quadratic regulator with ergodic cost, in: Advances in Neural Information Processing Systems, Vancouver, Canada, pp. 8353–8365.

- [20] R. Mitze, M. Mönnigmann, A dynamic programming approach to solving constrained linear–quadratic optimal control problems, Automatica, DOI: https://doi.org/10.1016/j.automatica.2020.109132.

- [21] D. Lee, J. Hu. (2018). Primal-dual Q-learning framework for LQR design, IEEE Transactions on Automatic Control, 64 (9), 3756–3763.

- [22] D. D. Yao, S. Zhang, X. Y. Zhou. (2001). Stochastic linear-quadratic control via semidefinite programming, SIAM Journal on Control and Optimization, 40 (3), 801–823.

- [23] Y. Jiang, Z.-P. Jiang. (2012). Computational adaptive optimal control for continuous-time linear systems with completely unknown dynamics, Automatica, 48 (10), 2699–2704.

- [24] B. Kiumarsi, F. L. Lewis, Z.-P. Jiang. (2017). control of linear discrete-time systems: Off-policy reinforcement learning, Automatica, 78, 144–152.

- [25] J. Qin, M. Li, Y. Shi, Q. Ma, W. X. Zheng. (2019). Optimal synchronization control of multiagent systems with input saturation via off-policy reinforcement learning, IEEE Transactions on Neural Networks and Learning Systems, 30 (1), 85–96.

- [26] J. Lai, J. Xiong, Z. Shu, Model-free optimal control of discrete-time systems with additive and multiplicative noises, arXiv preprint arXiv:2008.08734.

- [27] D. Vrabie, K. G. Vamvoudakis, F. L. Lewis. (2013). Optimal Adaptive Control and Differential Games by Reinforcement Learning Principles, IET Press, Stevenage, U.K.

- [28] G. Hewer. (1971). An iterative technique for the computation of the steady state gains for the discrete optimal regulator, IEEE Transactions on Automatic Control, 16 (4), 382–384.

- [29] D. Graupe, V. K. Jain, J. Salahi. (1980). A comparative analysis of various least-squares identification algorithms, Automatica, 16 (6), 663–681.

- [30] D. Vrabie, O. Pastravanu, M. Abu-Khalaf, F. L. Lewis. (2009). Adaptive optimal control for continuous-time linear systems based on policy iteration, Automatica, 45 (2), 477–484.

- [31] G. Gu. (2012). Discrete-Time Linear Systems: Theory and Design with Applications, Springer-Verlag, London, U.K.

- [32] S. Boyd, B. Vandenberghe. (2004). Convex Optimization, Cambridge University Press, Cambridge, U.K.