Model-Based Manipulation of Linear Flexible Objects with Visual Curvature Feedback

Abstract

Manipulation of deformable objects is a desired skill in making robots ubiquitous in manufacturing, service, healthcare, and security. Deformable objects are common in our daily lives, e.g., wires, clothes, bed sheets, etc., and are significantly more difficult to model than rigid objects. In this study, we investigate vision-based manipulation of linear flexible objects such as cables. We propose a geometric modeling method that is based on visual feedback to develop a general representation of the linear flexible object that is subject to gravity. The model characterizes the shape of the object by combining the curvatures on two projection planes. In this approach, we achieve tracking of the position and orientation (pose) of a cable-like object, the pose of its tip, and the pose of the selected grasp point on the object, which enables closed-loop manipulation of the object. We demonstrate the feasibility of our approach by completing the Plug Task used in the 2015 DARPA Robotics Challenge Finals, which involves unplugging a power cable from one socket and plugging it into another. Experiments show that we can successfully complete the task autonomously within 30 seconds.

I INTRODUCTION

Manipulation of linear flexible objects is of great interest in many applications including manufacturing, service, health and disaster response. Indeed, the topic has been the focus of many research studies in recent years. Ropes, clothes, organs and cables are common flexible objects used in deformable object manipulation [1, 2, 3, 4, 5, 6].

Cables are linear flexible objects that are common in industrial and domestic environments. The 2015 DARPA Robotics Challenge (DRC) Finals was aimed at advancing the capabilities of human-robot teams in responding to natural and man-made disasters. The Plug Task in this challenge required the robot pulling a power cable out of a socket and plug it into another [7]. Among six teams that completed the task, Team WPI-CMU had the fastest completion time with 5 minutes and 7 seconds [8]. Their approach used teleoperation by the human operator to grasp the plug and to complete the task [9]. The other five teams also did not automate this task, and they took longer times to perform the task.

In most of the related literature of manipulating cable-like objects, the physical model of the deformed object needs to be known before the manipulation such that the deformation of the object can be predicted. Chen and Zheng [10] used a cubic spline function to estimate the contour of a flexible aluminum beam in the 2-D plane, and a non-linear model was applied to describe the deformation based on the material characteristics identified by vision sensors. A systematic method to model the bend, twist, and extensional deformations of flexible linear objects was presented in [11], and the stable deformed shape of the flexible object was characterized by minimizing the potential energy under the geometric constraints. Nakagaki et al. [12] extended this work and proposed a method to estimate the force on a wire based on its shape observed by stereo vision. This method was employed for an insertion task of a flexible wire into a hole. Caldwell et al. [13] presented a technique to model a flexible loop by a chain of rigid bodies connected by torsional springs. Yoshida et al. [14] proposed a method for planning motions of a ring-shaped object based on precise simulation using Finite Element Method (FEM). These work are based on a priori knowledge of the deformation model of flexible objects. However, for different cables with different thickness, material, or function, the physical and deformation models vary substantially. So, these methods are difficult to be generalized to different cable-like objects.

There are also approaches which are not based on the physical model of the flexible object, and our method falls into this category. Navarro-Alarcon et al. [15, 16, 17] proposed a framework for automatic manipulation of deformable objects with an adaptive defomation model in 3-D space. Cartesian features composed of points, lines, and angles have been used to represent the deformation. Recently, Navarro-Alarcon and Liu [18] proposed a representation of the object’s shape based on a truncated Fourier series, and this model allows the robotic arm to deform the soft objects to desired contours in 2-D plane. Recently, Zhu et al. [19] extended the work in [18] and used the Fourier-based method to control the shape of a flexible cable in 2-D space. Compared with the Fourier-based visual servoing method, the SPR-RWLS method proposed by Jin et al. [20] took visual tracking uncertainties into consideration and showed robustness in the presence of outliers and occlusions for cable manipulation.

Inspired by the existing work, we propose a method for geometrical modeling of general linear flexible objects. Moreover, we use this method to systematically complete the DRC Plug Task. In order to detect and track customized small objects, such as cables, in 3-D space, [21] used a neural network-based detection method, and we extend their detection and tracking methods by introducing color detection into the loop. This paper has the following contributions: (1) it introduces a novel feedback characterization of linear flexible objects subject to gravity by combining curvatures on two projection planes which enables tracking of the position and orientation (pose) of a cable-like object, the pose of its tip, and the pose of the selected grasp point on the object, and a robust pose alignment controller which has the ability to control the shape of the object; and (2) it presents an autonomous system framework for accomplishing the DRC Plug Task. It should be noted that DRC Plug Task has been selected as the validation study in this research for two reasons: (i) Our team participated in the DRC Finals [9], and (ii) The DRC Plug Task provides a well-defined set of requirements to generalize the approach presented here to other applications.

This paper is organized as follows. Section II presents the problem statement and system framework. Section III describes the methodology of obtaining a reliable geometrical model of the cables on-the-fly and a robust pose alignment controller. In Section IV, we show the feasibility of our method experimentally and discuss the experimental results. Section V includes the conclusion and directions for future work.

II PROBLEM STATEMENT AND TASK DESCRIPTION

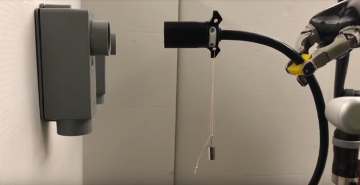

The DRC Plug Task involves unplugging a power cable from one socket and plugging it into another. The problem involves detecting a flexible cable in a noisy environment, grasping the cable with a feasible grasp pose, controlling the shape of the cable to match with the target pose, and inserting the cable-tip to the target socket. A benchmarking setup is used in this study shown in Fig. 2. The setup consists of a power cable (Deka Wire DW04914-1) with a plug (Optronics A7WCB) at the tip, two power sockets (McMaster-Carr 7905k35) attached on the wall, two neodymium disk magnets (DIYMAG HLMAG03) glued to the socket and the plug to provide a suction force [7]. Our system framework consists of a 6-DOF JACO v2 arm with three fingers, and two RGB-D cameras, i.e., Realsense D435 and Microsoft Kinect. The Realsense camera is used to model and track the shape of the cable, while the Kinect camera is used to estimate the socket pose and filter the point cloud. This scenario corresponds to a humanoid robot equipped with a depth camera at its left arm wrist and another depth camera at its head. One arm performing the task while the other is providing the perception feedback. In addition, a custom attachment is mounted on the fingers of the gripper to enable a form-closure grasp around the cable cross section. Figure 3 shows this attachment in SolidWorks and mounted on the fingers.

From a system design perspective, in order to complete the task autonomously, the system needs to be able to model a linear flexible object, to keep track of the cable configuration and to detect the pose of the target socket through pose estimation, to plan motions to move the robot to desired configurations, and to align the cable-tip with the target socket by a controller. A flow of the proposed system architecture is presented in Fig. 4.

Similar to the one-handed cable manipulation by a human (Fig. 1), we can divide the entire process into five phases: Initialize, Grasp, Unplug, Pre-insert, and Insert. Our goal is to give the robot the ability to automatically switch the phases. From the Initialize phase to the Grasp phase, the system needs to sense the environment, including estimating the socket pose, filtering the point cloud, and modeling the cable. After getting the model of the cable, the system will estimate the poses of the cable and cable-tip, and select the grasp point. Then the robot will go and grasp the cable. From the Unplug phase to the Pre-insert phase, the robot needs to align the pose of the cable-tip with the target pose. From the Grasp phase to the Unplug phase, a planned motion perpendicular to the target socket hole plane and away from the target socket is executed by the robot, and another planned motion perpendicular to the socket hole plane but close to the socket is executed by the robot in order to transfer from the Pre-insert phase to the Insert phase.

III METHODOLOGY

Our approach relies on five main robot-centric capabilities: (1) Target pose estimation, (2) Real-time point cloud filtering, (3) Modeling of a linear flexible object, (4) Cable/cable-tip pose estimation, (5) Pose alignment controller.

III-A Target pose estimation

The target socket in Fig. 2 is the final goal for the cable-tip. We used the Hough Circle Transform [22] to detect the hole of the target socket, as shown in Fig. 5 (left). The center of the detected red circle is marked by a green dot and we denote the coordinate of the center as (). If we denote the 3-D positions of the target socket center as (), we can get directly by reading the depth information at the coordinates () from the Kinect camera, and () can be calculated by using the perspective projection:

| (1) |

where is the focal length of the camera. In order to get the orientation of the target socket hole, the orientation of vector that is normal to the wall surface is used since the target socket hole plane is parallel to the wall. Random sample consensus (RANSAC) method [23] is applied to detect the wall plane from the point cloud that is obtained from Kinect camera, as seen in Fig. 5 (middle). We can then find the normal vector to the wall plane. And the axis of the target socket frame is set along this normal vector. Because the target socket hole has the round shape, we can pick any rotation angle about the axis (we set it to 0) to define the target socket frame. The corresponding frame (named as “Target_Socket”) is published through ROS, as shown in Fig. 5 (right).

III-B Real-time point cloud filtering

It is challenging to find specific irregular objects in a noisy environment. In this study, a real-time object detection method called YOLO [24] is used for detecting the flexible power cable in the 2-D images (Fig. 6 (left)). The process requires training a convolutional neural network (CNN) with self-labeled images. We trained the network for 40,000 steps with 100 labeled images. The output of YOLO is a bounding box that narrows the region down to a search for the cable. To get the pixels corresponding to the object, we then use the color information and detect the black pixels in this region (Fig. 6 (middle)). These pixels are stored in a set in the order from top to bottom and left to right, and we define the middle pixel as the center pixel of the object which is marked as “Center” in Fig. 6 (left). By using (1) we mentioned above, we can get the 3-D positions of the object center. A PassThrough filter from the point cloud library (pcl) [25] was used to filter the point cloud from three dimensions as shown in Fig. 6 (right). The point cloud in the narrowed bounding box served to model the cable. It is published through ROS in a frequency of 30 .

III-C Modeling of a linear flexible object

In Fig.6 (right), the remaining points in the filtered point cloud are shown in white with respect to the world frame. The , , and axes of the world frame are represented by the red, green, and blue bars, respectively. Modeling the cable in 3-D space is still a research problem in linear flexible object manipulation. On the other hand, modeling a curved line in 2-D space is a solved problem. We can exploit this by projecting the 3-D curve onto 2-D spaces. In this study, we project the 3-D point cloud onto the - and - planes. But the projection in 2D may have multiple corresponding or values to the coordinate. For example, if the cable has a “” shape, in order to get an unique projection model, we filtered out the point cloud below the rightmost point. And we assume that the cable is not in more complex shapes, e.g., “S” or “” shape. Due to the relatively high stiffness and large bending radius of the power cables, we found that a quadratic polynomial equation is sufficient to represent their shapes:

| (2) |

| (3) |

The polynomial coefficients , , , , , and are estimated by using the least squares method, and they continuously change based on the shape of the power cable. In order to model the cable in 3-D, we uniformly sample the points in Fig. 6 (right) along the -axis. The corresponding and values can be calculated by using (2) and (3). This projection method is efficient, and the model can be published through ROS in a frequency of 29.997 .

Figure 7 shows an example modeling result with 10 sample points. The power cable is visualized in Rviz by markers. Green markers represent the sampling points, consecutive points are connected by blue lines, and vertical upward red lines are used to illustrate the displacements between the points.

III-D Cable/cable-tip pose estimation

In order for the robot to detect and track the location of the cable and cable-tip, we propose a piece-wise linear model for the object. In Fig. 7, we denote the leftmost point as and the remaining points as from left to right, where is the number of sample points. We denote the leftmost blue line that connects and as and rest of the blue lines as . We assume that the line is the tangent at the point . Because the cable-tip has the round shape, its roll angle can be neglected (we set it to 0). After setting the point as the origin and defining the axis to the tangent of the point , the “cable_tip” frame is defined in Fig. 8 (left).

Now that the positions of all the sample points and the pose of the cable-tip are known with respect to the robot frame, a feasible grasp pose for the robot can be calculated to grasp the cable. Because the plug is inside the socket, we consider only the sampling points on the cable except the cable-tip as the grasp point candidates. To select the grasp point on the cable, the following trade off needs to be taken into account. As the grasp point gets closer to the cable-tip, the risk of the robot colliding with the socket or the wall increases and the number of points in the filtered point cloud reduces. On the other hand, when the grasp point is too far away from the cable-tip, the robot can not align the cable-tip pose with the target socket pose because the cable will dangle substantially. Thus, the grasp point needs to be selected empirically to meet these two constraints. For this purpose, we pre-define a minimum distance from the grasp point to the cable-tip () along the cable as , and a maximum distance as . and are pre-defined with the robot holding the cable before the experiment. With this step, the cable deformation characteristics can be ignored for selecting the grasp point, and this vision-only method is satisfied for selecting a feasible grasp point. Since we can calculate the length of the lines , we can also calculate the distance from each sampling point to the cable-tip by using:

| (4) |

The grasp point needs to be selected such that in order for the robot to be able to align the cable-tip pose with the target pose. By using the same method of estimating the “cable_tip” frame, we can get the “cable” frame (Fig. 8 (right)), which is used to get the grasp pose of the robot’s end-effector. The grasp pose is then used as the target of the motion planner, which is MoveIt! [26] in our case.

Once grasping of the cable is completed, a pulling motion along the axis of the “world” frame is generated to unplug the cable. Figure 9 (a) shows the “cable_tip” frame after the robot unplugs the power cable. We filter out the point cloud of the plug during modeling which improves the accuracy of the model because the plug is a straight, rigid object.

unplugging.

pose alignment.

III-E Pose alignment controller

In order to finish the insertion task, the robot needs to adjust the pose of the cable-tip to match with the pose of the target socket. A pre-insert frame right in front of the target socket is defined as our target pose for the pose alignment controller.

To explain this algorithm in a succinct way, we denote the transformation matrix from the “cable_tip” frame to the pre-insert frame as , the transformation matrix from the pre-insert frame to the “end-effector” frame as , the transformation matrix from the “cable_tip” frame to the “end-effector” frame as .

are the translational (, , ) and rotational (, , ) deviations in the “end-effector” frame which can be calculated as

| (5) |

where , , , , , and are translational and rotational terms from the transformation in the “end-effector” frame, , , , , , and are translational and rotational terms from the transformation in the “end-effector” frame.

Then the linear and angular velocities in the “end-effector” frame can be calculated [27]:

| (6) |

| (7) |

where is the execution time, are linear and angular velocities in the “end-effector” frame, which will be used as inputs for our PD controller.

A PD controller denoted as pose alignment controller was designed to calculate the Cartesian velocity () for the robot’s end-effector:

| (8) |

where is the error, which is , and are proportional and derivative terms. Our designed values for and are 2.0 and 0.2.

After getting the Cartesian velocity from our PD controller, a velocity controller built in the Jaco robotic arm generates the controlled joint velocity () by using robot Jacobian matrix ():

| (9) |

Algorithm 1 shows the control loop. The control loop keeps running until the translational deviations , , and the rotational deviations , , from the “cable_tip” frame to the pre-insert frame () satisfy the thresholds which are () and (). A successful example of the robot aligning the cable to pre-insert frame is shown in Fig. 9 (b). For the final insertion step, a translation along the axis of the “cable_tip” frame is applied. The insertion is facilitated by the magnets on the plug and in the socket.

IV EXPERIMENTAL VALIDATION

Our system framework is evaluated by running the DRC Plug Task for 20 trials. The minimal, maximum, and average completion time are 29.3823 , 33.9710 , and 31.5288 .

In order to validate the performance of our modeling method and pose alignment controller, we implement three experiments. The first experiment is for testing the grasp functionality with different initial poses of the cable. The second experiment shows the performance of the pose alignment controller with two different cables. The last experiment shows the robustness of our pose alignment controller under external disturbances.

Recalling the trade-off mentioned in Section III-D, we need to pick values of and for different cables. For the power cable (with plug), we define = 18 and = 30 . For the HDMI cable, these parameters are selected as = 12 and = 24 . Our method will prioritize the sample point closest to the midpoint in this range as grasp point when performing tasks. Figure 10 shows the robot is able to grasp the cable with three different initial states. Table I shows the model parameters for these states.

| Poses | ||||||

|---|---|---|---|---|---|---|

| left | -1.882 | -7.295 | -5.773 | -6.041 | -20.004 | -15.528 |

| middle | -0.385 | -2.670 | -2.191 | -1.917 | -6.981 | -5.243 |

| right | 0.006 | -1.377 | -1.125 | -0.979 | -3.955 | -2.806 |

The second experiment is to test the performance of our pose alignment controller. Due to the limitation of point cloud publishing frequency (30 ), we set the constraints for linear and angular velocities in the Cartesian space as 1.5 and 0.6 to prevent the substantial deformation of the cable in the interval of the point cloud update. We implement the pose alignment controller with two different cables (10 trials/each). Figures 11 shows two successful runs during these experiments. Our pose adjustment control loop ends when the pose differences meet the thresholds which is 0.01 for translational terms and 0.02 for rotational terms. In this experiment, we conclude that the HDMI cable (Avg. elapsed time : 12.26 ) needs more time than the power cable (Avg. elapsed time : 5.59 ) to adjust the pose. A potential reason might be that the HDMI cable has more deformation than the power cable.

Another experiment is performed for evaluating the robustness of the pose alignment controller by adding disturbances to the pose alignment control loop and testing whether our algorithm can still achieve the target. Figure 12 shows that different disturbances added to the cable-tip while the system is in the pose alignment loop and the final pose of the cable after the loop, which demonstrates that our pose alignment controller is able to resist such external disturbances.

These results demonstrate that our method for modeling linear flexible objects can provide a 3-D model with adaptive parameters based on the visual feedback. This model is capable of representing various shapes of the cable during the Plug Task. More importantly, our pose alignment algorithm is robust to handle different cable-like objects and is capable of resisting disturbances.

V CONCLUSIONS

In this paper, we have proposed a geometrical modeling method based on the curves on two projection planes for linear flexible objects subject to gravity. The method is capable of tracking the 3-D curvature of the linear flexible object, the pose of the tip and the pose of the selected grasp point on the object. A robust pose alignment controller based on the geometrical model with adaptive parameters can bring the cable-tip to a desired pre-insert position. We have detailed how we formulate and use this method to accomplish the DRC Plug Task autonomously. Moreover, we have performed experiments and demonstrated the versatility, reliability, and robustness of our approach.

Future work will focus on different model representations of the cable shapes in the case of more complex deformations. Also, we will apply our approach to different robot platforms (e.g., a dual-arm system) or to other flexible object manipulation tasks (e.g., a cable routing assembly task).

VI Acknowledgements

This research is supported by the National Aeronautics and Space Administration under Grant No. NNX16AC48A issued through the Science and Technology Mission Directorate, by the National Science Foundation under Award No. 1451427, 1544895, 1928654 and by the Office of the Secretary of Defense under Agreement Number W911NF-17-3-0004.

References

- [1] J. Sanchez, J.-A. Corrales, B.-C. Bouzgarrou, and Y. Mezouar, “Robotic manipulation and sensing of deformable objects in domestic and industrial applications: a survey,” The International Journal of Robotics Research, p. 0278364918779698, 2018.

- [2] A. X. Lee, H. Lu, A. Gupta, S. Levine, and P. Abbeel, “Learning force-based manipulation of deformable objects from multiple demonstrations,” in 2015 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2015, pp. 177–184.

- [3] A. Nair, D. Chen, P. Agrawal, P. Isola, P. Abbeel, J. Malik, and S. Levine, “Combining self-supervised learning and imitation for vision-based rope manipulation,” in 2017 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2017, pp. 2146–2153.

- [4] S. Miller, J. Van Den Berg, M. Fritz, T. Darrell, K. Goldberg, and P. Abbeel, “A geometric approach to robotic laundry folding,” The International Journal of Robotics Research, vol. 31, no. 2, pp. 249–267, 2012.

- [5] V. Mallapragada, N. Sarkar, and T. K. Podder, “Toward a robot-assisted breast intervention system,” IEEE/ASME Transactions on Mechatronics, vol. 16, no. 6, pp. 1011–1020, 2011.

- [6] J. R. White, P. E. Satterlee Jr, K. L. Walker, and H. W. Harvey, “Remotely controlled and/or powered mobile robot with cable management arrangement,” Apr. 12 1988, uS Patent 4,736,826.

- [7] M. Spenko, S. Buerger, and K. Iagnemma, The DARPA Robotics Challenge Finals: Humanoid Robots To The Rescue. Springer, 2018, vol. 121.

- [8] R. Cisneros, S. Nakaoka, M. Morisawa, K. Kaneko, S. Kajita, T. Sakaguchi, and F. Kanehiro, “Effective teleoperated manipulation for humanoid robots in partially unknown real environments: team aist-nedo’s approach for performing the plug task during the drc finals,” Advanced Robotics, vol. 30, no. 24, pp. 1544–1558, 2016.

- [9] M. DeDonato, F. Polido, K. Knoedler, B. P. Babu, N. Banerjee, C. P. Bove, X. Cui, R. Du, P. Franklin, J. P. Graff, et al., “Team wpi-cmu: Achieving reliable humanoid behavior in the darpa robotics challenge,” Journal of Field Robotics, vol. 34, no. 2, pp. 381–399, 2017.

- [10] C. Chen and Y. F. Zheng, “Deformation identification and estimation of one-dimensional objects by vision sensors,” Journal of robotic systems, vol. 9, no. 5, pp. 595–612, 1992.

- [11] H. Wakamatsu, S. Hirai, and K. Iwata, “Modeling of linear objects considering bend, twist, and extensional deformations,” in Proceedings of 1995 IEEE International Conference on Robotics and Automation, vol. 1. IEEE, 1995, pp. 433–438.

- [12] H. Nakagaki, K. Kitagi, T. Ogasawara, and H. Tsukune, “Study of insertion task of a flexible wire into a hole by using visual tracking observed by stereo vision,” in Proceedings of IEEE International Conference on Robotics and Automation, vol. 4. IEEE, 1996, pp. 3209–3214.

- [13] T. M. Caldwell, D. Coleman, and N. Correll, “Optimal parameter identification for discrete mechanical systems with application to flexible object manipulation,” in 2014 IEEE/RSJ International Conference on Intelligent Robots and Systems. IEEE, 2014, pp. 898–905.

- [14] E. Yoshida, K. Ayusawa, I. G. Ramirez-Alpizar, K. Harada, C. Duriez, and A. Kheddar, “Simulation-based optimal motion planning for deformable object,” in 2015 IEEE International Workshop on Advanced Robotics and its Social Impacts (ARSO). IEEE, 2015, pp. 1–6.

- [15] D. Navarro-Alarcón, Y.-H. Liu, J. G. Romero, and P. Li, “Model-free visually servoed deformation control of elastic objects by robot manipulators,” IEEE Transactions on Robotics, vol. 29, no. 6, pp. 1457–1468, 2013.

- [16] D. Navarro-Alarcon, Y.-h. Liu, J. G. Romero, and P. Li, “On the visual deformation servoing of compliant objects: Uncalibrated control methods and experiments,” The International Journal of Robotics Research, vol. 33, no. 11, pp. 1462–1480, 2014.

- [17] D. Navarro-Alarcon, H. M. Yip, Z. Wang, Y.-H. Liu, F. Zhong, T. Zhang, and P. Li, “Automatic 3-d manipulation of soft objects by robotic arms with an adaptive deformation model,” IEEE Transactions on Robotics, vol. 32, no. 2, pp. 429–441, 2016.

- [18] D. Navarro-Alarcon and Y.-H. Liu, “Fourier-based shape servoing: a new feedback method to actively deform soft objects into desired 2-d image contours,” IEEE Transactions on Robotics, vol. 34, no. 1, pp. 272–279, 2018.

- [19] J. Zhu, B. Navarro, P. Fraisse, A. Crosnier, and A. Cherubini, “Dual-arm robotic manipulation of flexible cables,” in 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2018, pp. 479–484.

- [20] S. Jin, C. Wang, and M. Tomizuka, “Robust deformation model approximation for robotic cable manipulation,” in 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2019, pp. 6586–6593.

- [21] D. De Gregorio, R. Zanella, G. Palli, S. Pirozzi, and C. Melchiorri, “Integration of robotic vision and tactile sensing for wire-terminal insertion tasks,” IEEE Transactions on Automation Science and Engineering, no. 99, pp. 1–14, 2018.

- [22] H. Yuen, J. Princen, J. Illingworth, and J. Kittler, “Comparative study of hough transform methods for circle finding,” Image and vision computing, vol. 8, no. 1, pp. 71–77, 1990.

- [23] M. A. Fischler and R. C. Bolles, “Random sample consensus: a paradigm for model fitting with applications to image analysis and automated cartography,” Communications of the ACM, vol. 24, no. 6, pp. 381–395, 1981.

- [24] J. Redmon, S. Divvala, R. Girshick, and A. Farhadi, “You only look once: Unified, real-time object detection,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2016, pp. 779–788.

- [25] R. B. Rusu and S. Cousins, “Point cloud library (pcl),” in 2011 IEEE International Conference on Robotics and Automation, 2011, pp. 1–4.

- [26] S. Chitta, I. Sucan, and S. Cousins, “Moveit![ros topics],” IEEE Robotics & Automation Magazine, vol. 19, no. 1, pp. 18–19, 2012.

- [27] W. Khalil and E. Dombre, Modeling, identification and control of robots. Butterworth-Heinemann, 2004.