Mobility-Aware Cooperative Caching in Vehicular Edge Computing Based on Asynchronous Federated and Deep Reinforcement Learning

Abstract

The vehicular edge computing (VEC) can cache contents in different RSUs at the network edge to support the real-time vehicular applications. In VEC, owing to the high-mobility characteristics of vehicles, it is necessary to cache the user data in advance and learn the most popular and interesting contents for vehicular users. Since user data usually contains privacy information, users are reluctant to share their data with others. To solve this problem, traditional federated learning (FL) needs to update the global model synchronously through aggregating all users’ local models to protect users’ privacy. However, vehicles may frequently drive out of the coverage area of the VEC before they achieve their local model trainings and thus the local models cannot be uploaded as expected, which would reduce the accuracy of the global model. In addition, the caching capacity of the local RSU is limited and the popular contents are diverse, thus the size of the predicted popular contents usually exceeds the cache capacity of the local RSU. Hence, the VEC should cache the predicted popular contents in different RSUs while considering the content transmission delay. In this paper, we consider the mobility of vehicles and propose a cooperative Caching scheme in the VEC based on Asynchronous Federated and deep Reinforcement learning (CAFR). We first consider the mobility of vehicles and propose an asynchronous FL algorithm to obtain an accurate global model, and then propose an algorithm to predict the popular contents based on the global model. In addition, we consider the mobility of vehicles and propose a deep reinforcement learning algorithm to obtain the optimal cooperative caching location for the predicted popular contents in order to optimize the content transmission delay. Extensive experimental results have demonstrated that the CAFR scheme outperforms other baseline caching schemes.

Index Terms:

cooperative caching, VEC, asynchronous federated learning, deep reinforcement learningI Introduction

With the development of the internet of vehicles (IoV) and cloud computing, caching technology facilitates various real-time vehicular applications for vehicular users (VUs), such as automatic navigation, pattern recognition and multimedia entertainment [1] [2]. For the standard caching technology, the cloud caches various contents like data, video and web pages. In this scheme, vehicles transmit the required contents to a macro base station (MBS) connected to a cloud server, and could fetch the contents from the MBS, which would cause high content transmission delay from the MBS to vehicles due to the communication congestion caused by frequently requested contents from vehicles [3]. The content transmission delay can be effectively reduced by the emergence of vehicular edge computing (VEC), which caches contents in the road side unit (RSU) deployed at the edge of vehicular networks (VNs) [4]. Thus, vehicles can fetch contents directly from the local RSU, to reduce the content transmission delay. In the VEC, since the caching capacity of the local RSU is limited, if some vehicles cannot fetch their required contents, a neighboring RSU who has the required contents could forward them to the local RSU. The worst case is that vehicles need to fetch contents from the MBS due to both local and neighboring RSUs not having cached the requested contents.

In the VEC, it is critical to design a caching scheme to cache the popular contents. The traditional caching schemes cache contents based on the previously requested contents [5]. However, owing to the high-mobility characteristics of vehicles in VEC, the previously requested contents from vehicles may become outdated quickly, thus the traditional caching schemes may not satisfy all the VUs’ requirement. Therefore, it is necessary to predict the most popular contents in the VEC and cache them in the suitable RSUs in advance. Machine learning (ML) as a new tool, can extract hidden features by training user data to efficiently predict popular contents[6]. However, the user data usually contains privacy information and users are reluctant to share their data directly with others, which make it difficult to collect and train users’ data. Federated learning (FL) can protect the privacy of users by sharing their local models instead of data[7]. In traditional FL, the global model is periodically updated by aggregating all vehicles’ local models[8] -[10]. However, vehicles may frequently drive out of the coverage area of the VEC before they update their local models and thus the local models cannot be uploaded in the same area, which would reduce the accuracy of the global model as well as the probability of getting the predicted popular contents. Hence, it motivates us to consider the mobility of vehicles and propose an asynchronous FL to predict accurate popular contents in VEC.

Generally, the predicted popular contents should be cached in their local RSU of vehicles to guarantee a low content transmission delay. However, the caching capacity of each local RSU is limited and the popular contents may be diverse, thus the size of the predicted popular contents usually exceeds the cache capacity of the local RSU. Hence, the VEC has to determine where the predicted popular contents are cached and updated. The content transmission delay is an important metric for vehicles to provide real-time vehicular application. The different popular contents cached in the local and neighboring RSUs would impact the way vehicles fetch contents, and thus affect the content transmission delay. In addition, the content transmission delay of each vehicle is impacted by its channel condition, which is affected by vehicle mobility. Therefore, it is necessary to consider the mobility of vehicles to design a cooperative caching scheme, in which the predicted popular contents can be cached among RSUs to optimize the content transmission delay. In contrast to some conventional decision algorithms, deep reinforcement learning (DRL) is a favorable tool to construct the decision-making framework and optimize the cooperative caching for the contents in complex vehicular environment [11]. Therefore, we shall employ DRL to determine the optimal cooperative caching to reduce the content transmission delay of vehicles.

In this paper, we consider the vehicle mobility and propose a cooperative Caching scheme in VEC based on Asynchronous Federated and deep Reinforcement learning (CAFR). The main contributions of this paper are summarized as follows.

-

1)

By considering the mobility characteristics of vehicles including the positions and velocities, we propose an asynchronous FL algorithm to improve the accuracy of the global model.

-

2)

We propose an algorithm to predict the popular contents based on the global model, where each vehicle adopts the autoencoder (AE) to predict its interested contents based on the global model, while the local RSU collects the interested contents of all vehicles within the coverage area to catch the popular contents.

-

3)

We elaborately design a DRL framework (dueling deep Q-network (DQN)) to illustrate the cooperative caching problem, where the state, action and reward function have been defined. Then the local RSU can determine optimal cooperative caching to minimize the content transmission delay based on the dueling DQN algorithm.

The rest of the paper is organized as follows. Section II reviews the related works on content caching in VNs. Section III briefly describes the system model. Section IV proposes a mobility-aware cooperative caching in the VEC based on asynchronous federated and deep reinforcement learning method. We present some simulation results in Section V, and then conclude them in Section VI.

II Related Work

In this section, we first review the existing works related to the content caching in vehicular networks (VNs), and then survey the current state of art of the cooperative content caching schemes in VEC.

In [12], Dai et al. proposed a distributed content caching framework with empowering blockchain to achieve security and protect privacy, and considered the mobility of vehicles to design an intelligent content caching scheme based on DRL framework. In [13], Yu et al. proposed a mobility-aware proactive edge caching scheme in VNs that allows multiple vehicles with private data to collaboratively train a global model for predicting content popularity, in order to meet the requirements for computationally intensive and latency-sensitive vehicular applications. In [14], Zhao et al. optimized the edge caching and computation management for service caching, and adopted Lyapunov optimization to deal with the dynamic and unpredictable challenges in VNs. In [15], Jiang et al. constructed a two-tier secure access control structure for providing content caching in VNs with the assistance of edge devices, and proposed the group signature-based scheme for the purpose of anonymous authentication. In [16], Tang et al. proposed a new optimization method to reduce the average response time of caching in VNs, and then adopted Lyapunov optimization technology to constrain the long-term energy consumption to guarantee the stability of response time. In [17], Dai et al. proposed a VN with digital twin to cache contents for adaptive network management and policy arrangement, and designed an offloading scheme based on the DRL framework to minimize the total offloading delay. However, the above content caching schemes in VNs did not take into account the cooperative caching in the VEC environment.

There are some works considering cooperative content caching schemes in VEC. In [18], Qiao et al. proposed a cooperative edge caching scheme in VEC and constructed the double time-scale markov decision process to minimize the content access cost, and employed the deep deterministic policy gradient (DDPG) method to solve the long-term mixed-integer linear programming problems. In [19], Chen et al. proposed a cooperative edge caching scheme in VEC which considered the location-based contents and the popular contents, while designing an optimal scheme for cooperative content placement based on an ant colony algorithm to minimize the total transmission delay and cost. In [20], Yao et al. designed a cooperative edge caching scheme with consistent hash and mobility prediction in VEC to predict the path of each vehicle, and also proposed a cache replacement policy based on the content popularity to decide the priorities of collaborative contents. In [21], Wang et al. proposed a cooperative edge caching scheme in VEC based on the long short-term memory (LSTM) networks, which caches the predicted contents in RSUs or other vehicles and thus reduces the content transmission delay. In [22], Gupta et al. proposed a cooperative caching scheme that jointly considers cache location, content popularity and predicted rating of contents to make caching decision based on the non-negative matrix factorization, where it employs a legitimate user authorization to ensure the secure delivery of cached contents. In [23], Yao et al. proposed a cooperative caching scheme based on the mobility prediction and drivers’ social similarities in VEC, where the regularity of vehicles’ movement behaviors are predicted based on the hidden markov model to improve the caching performance. In [24], Wu et al. proposed a hybrid service provisioning framework and cooperative caching scheme in VEC to solve the profit allocation problem among the content providers (CPs), and proposed an optimization model to improve the caching performance in managing the caching resources. In [25], Yao et al. proposed a cooperative caching scheme based on mobility prediction, where the popular contents may be cached in the mobile vehicles within the coverage area of hot spot. They also designed a cache replacement scheme according to the content popularity to solve the limited caching capacity problem for each edge cache device. In [26], Zhang et al. proposed a cooperative edge caching architecture that focuses on the mobility-aware caching, where the vehicles cache the contents with base stations collaboratively. They also introduced a vehicle-aided edge caching scheme to improve the capability of edge caching. In [27], Liu et al. designed a cooperative caching scheme that allows vehicles to search the unrequested contents. This scheme facilitates the content sharing among vehicles and improves the service performance. In [28], Wang et al. proposed a VEC caching scheme to reduce the total transmission delay. This scheme extends the capability of the data center from the core network to the edge nodes by cooperatively caching popular contents in different CPs. It minimizes the VUs’ average delay according to an iterative ascending price method. In [29], Liu et al. proposed a real-time caching scheme in which edge devices cooperate to improve the caching resource utilization. In addition, they adopted the DRL framework to optimize the problem of searching requests and utility models to guarantee the search efficiency. In [30], Ko et al. proposed an adaptive scheduling scheme consisting of the centralized scheduling mechanism, ad hoc scheduling mechanism and cluster management mechanism to exploit the ad hoc data sharing among different RSUs. In [31], Cui et al. proposed a privacy-preserving data downloading method in VEC, where the RSUs can find popular contents by analyzing encrypted requests of nearby vehicles to improve the downloading efficiency of the network. In [32], Luo et al. designed a communication, computation and cooperative caching framework, where computing-enabled RSUs provide computation and bandwidth resource to the VUs to minimize the data processing cost in VEC.

As mentioned above, no other works has considered the vehicle mobility and privacy of VUs simultaneously to design cooperative caching schemes in VEC, which motivates us to propose a mobility-aware cooperative caching in VEC based on the asynchronous FL and DRL.

III System Model

III-A System Scenario

As shown in Fig. 1, we consider a three-tier VEC in an urban scenario that consists of a local RSU, a neighboring RSU, a MBS attached with a cloud and some vehicles moving in the coverage area of the local RSU. The top tier is the MBS deployed at the center of the VEC, while middle tier is the RSUs deployed in the coverage area of the MBS. They are placed on one side of the road. The bottom tier is the vehicles driving within the coverage area of the RSUs.

Each vehicle stores a large amount of VUs’ historical data, i.e., local data. Each data is a vector reflecting different information of a VU, including the VU’s personal information such as identity (ID) number, gender, age and postcode, the contents that the VU may request, as well as the VU’s ratings for the contents where a larger rating for a content indicates that the VU is more interested in the content. Particularly, the rating for a content may be , which means that it is not popular or is not requested by VUs. Each vehicle randomly chooses a part of the local data to form a training set while the rest is used as a testing set. The time duration of vehicles within the coverage area of the MBS is divided into rounds. For each round, each vehicle randomly selects contents from its testing set as the requested contents, and sends the request information to the local RSU to fetch the contents at the beginning of each round. We consider the MBS has abundant storage capacity and caches all available contents, while the limited storage capacity of each RSU can only accommodate part of contents. Therefore, the vehicle fetches each of the requested content from the local RSU, neighboring RSU or MBS in different conditions. Specifically,

III-A1 Local RSU

If a requested content is cached in the local RSU, the local RSU sends back the requested content to the vehicle. In this case the vehicle fetches the content from the local RSU.

III-A2 neighboring RSU

If a requested content is not cached in the local RSU, the local RSU transfers the request to the neighboring RSU, and the neighboring RSU sends the content to the local RSU if it caches the requested content. Afterward, the local RSU sends back the content to the vehicle. In this case the vehicle fetches the content from the neighboring RSU.

III-A3 MBS

If a content is neither cached in the local RSU nor the neighboring RSU, the vehicle sends the request to the MBS that directly sends back the requested content to the vehicle. In this case, the VU fetches the content from the MBS.

III-B Mobility Model of Vehicles

The model assumes that all vehicles drive in the same direction and vehicles arrive at a local RSU, following a Poisson distribution with the arrival rate . Once a vehicle enters the coverage of the local RSU, it sends request information to the local RSU. Each vehicle keeps the same mobility characteristics including position and velocity within a round and may change its mobility characteristics at the beginning of each round. The velocity of different vehicles follows an independent identically distribution. The velocity of each vehicle is generated by a truncated Gaussian distribution, which is flexible and consistent with the real dynamic vehicular environment. For round , the number of vehicles driving in the coverage area of the local RSU is . The set of vehicles are denoted as , where is vehicle driving in the local RSU . Let be the velocities of all vehicles driving in the local RSU, where is velocity of . According to [33], the probability density function of is expressed as

| (1) |

where and are the maximum and minimum velocity threshold of each vehicle, respectively, and is the Gauss error function of under the mean and variance .

III-C Communication Model

The communications between the local RSU and neighboring RSU adopt the wired link. Each vehicle keeps the same communication model during a round and changes its communication model for different rounds. When the round is , the channel gain of is modeled as [34]

| (2) | |||

where means the local RSU and means the MBS, is the distance between the local RSUMBS and , is the path loss between the local RSUMBS and , and is the shadowing channel fading between the local RSUMBS and , which follows a Log-normal distribution.

Each RSU communicates with the vehicles in its coverage area through vehicle to RSU (V2R) link, while the MBS communicates with vehicles through vehicle to base station (V2B) link. Since the distances between the local RSUMBS and are different in different rounds, V2RV2B link suffers from different channel impairments, and thus transmit with different transmission rates in different rounds. The transmission rates under V2R and V2B link are calculated as follows.

According to the Shannon theorem, the transmission rate between the local RSU and is calculated as [35]

| (3) |

where is the available bandwidth, is the transmit power level used by the local RSU and is the noise power.

Similarly, the transmission rate between the MBS and is calculated as

| (4) |

where is the transmit power level used by MBS.

IV Cooperative Caching Scheme

In this section, we propose a cooperative cache scheme to optimize the content transmission delay in each round . We first propose an asynchronous FL algorithm to protect VU’s information and obtain an accurate model. Then we propose an algorithm to predict the popular contents based on the obtained model. Finally, we present a DRL based algorithm to determine the optimal cooperative caching according to the predicted popular contents. Next, we will introduce the asynchronous FL algorithm, the popular content prediction algorithm and the DRL-based algorithm, respectively.

IV-A Asynchronous Federated Learning

As shown in Fig. 2, the asynchronous FL algorithm consists of 5 steps as follows.

IV-A1 Select Vehicles

The main goal of this step is to select the vehicles whose staying time in the local RSU is long enough to ensure they can participate in the asynchronous FL and complete the training process.

Each vehicle first sends its mobility characteristics including its velocity and position (i.e., the distance to the local RSU and distance it has traversed within the coverage of the local RSU), then the local RSU selects vehicles according to the staying time that is calculated based on the vehicle’s mobility characteristics. The staying time of in the local RSU is calculated as

| (5) |

where is the coverage range of the local RSU, is the distance that has traversed within the coverage of the local RSU.

The staying time of should be larger than the sum of the average training time and inference time to guarantee that can complete the training process. Therefore, if , the local RSU selects to participate in asynchronous FL training. Otherwise, is ignored.

IV-A2 Download Model

In this step, the local RSU will generate the global model . For the first round, the local RSU initializes a global model based on the AE, which can extract the hidden features used for popular content prediction. In each round, the local RSU updates the global model and transfers the global model to all the selected vehicles in the end.

IV-A3 Local Training

In this step, each vehicle in the local RSU sets the downloaded global model as the initial local model and updates the local model iteratively through training. Afterward, the updated local model will be the feedback to the local RSU. For each iteration , randomly samples some training data from the training set. Then, it uses to train the local model based on the AE that consists of an encoder and a decoder. Let and be the weight matrix and bias vector of the encoder for iteration , respectively, and be the weight matrix and bias vector of the decoder for iteration , respectively. Thus the local model of for iteration is expressed as . For each training data in , the encoder first maps the original training data to a hidden layer to obtain the hidden feature of , i.e., . Then the decoder calculates the reconstructed input , i.e., , where and are the nonlinear and logical activation function [36]. Afterward, the loss function of data under the local model is calculated as

| (6) |

where .

After the loss functions of all the data in are calculated, the local loss function for iteration is calculated as

| (7) |

where is the number of data in .

Then the regularized local loss function is calculated to reduce the deviation between the local model and global model to improve the algorithm convergence, i.e.,

| (8) |

where is the regularization parameter.

Let be the gradient of , which is referred to as the local gradient. In the previous round, some vehicles may upload the updated local model unsuccessfully due to the delayed training time, and thus adversely affect the convergence of global model [37][38][39]. Here, these vehicles are called stragglers and the local gradient of a straggler in the previous round is referred to as the delayed local gradient. To solve this problem, the delayed local gradient will be aggregated into the local gradient of the current round . Thus, the aggregated local gradient can be calculated as

| (9) |

where is the decay coefficient and is the delayed local gradient. Note that if uploads successfully in the previous round.

Then the local model for the next iteration is updated as

| (10) |

where is the local learning rate in round , which is calculated as

| (11) |

where is the initial value of local learning rate.

Then iteration is finished and randomly samples some training data again to start the next iteration. When the number of iterations reaches the threshold , completes the local training and upload the updated local model to the local RSU.

IV-A4 Upload Model

Each vehicle uploads its updated local model to the local RSU after it completes local training.

IV-A5 Asynchronous aggregation

If the local model of , i.e., , is the first model received by the local RSU, the upload is successful and the local RSU updates the global model. Otherwise, the local RSU drops and thus the upload is not successful.

When the upload is successful, the local RSU updates the global model by weighted averaging as follows:

| (12) |

where is the size of local data in , is the total local data size of the selected vehicles and is the weight of the asynchronous aggregation for . The weight of the asynchronous aggregation is calculated by considering the traversed distance of in the coverage area of the local RSU and the content transmission delay from local RSU to to improve the accuracy of the global model and reduce the content transmission delay. Specifically, if the traversed distance of is large, it may have long available time to participate in the training, thus its local model should occupy large weight for aggregation to improve the accuracy of the global model. In addition, the content transmission delay from local RSU to is important because would finally download the content from the local RSU when the content is either cached in the local or neighboring RSU. Thus, if the content transmission delay from local RSU to is small, its local model should also occupy large weight for aggregation to reduce the content transmission delay. The weight of the asynchronous aggregation is calculated as

| (13) |

where and are coefficients of the position weight and transmission weight, respectively (i.e., ), is the size of each content. Thus, the content transmission delay from local RSU to is affected by the transmission rate between the local RSU and , i.e., . We can further calculate based on the normalized and , i.e.,

| (14) |

Since the local RSU knows and for each vehicle at the beginning of the asynchronous FL, the local RSU can calculate according to Eqs. (LABEL:eq2) and (3), and further calculate according to Eq. (13).

Up to now, the asynchronous FL in round is finished and the updated global model is obtained. The process of the asynchronous FL algorithm is shown in Algorithm 1 for ease of understanding, where is the maximum number of rounds, is the maximum number of local epochs. Then, the local RSU sends the obtained model to each vehicle to predict popular contents.

IV-B Popular Content Prediction

In this subsection, we propose an algorithm to predict the popular contents. As shown in Fig. 3, the popular content prediction algorithm consists of the 4 steps as follows.

IV-B1 Data Preprocessing

The VU’s rating for a content is when VU is uninterested in the content or has not requested a content. Thus, it is difficult to differentiate if a content is an interesting one for the VU when its rating is . Marking all contents with rating as uninterested contents is a bias prediction. Therefore, we adopt the obtained model to reconstruct the rating for each content in the first step, which is described as follows.

Each vehicle abstracts a rating matrix from the data in the testing set, where the first dimension of the matrix is VUs’ ID and the second dimension is VU’s ratings for all contents. Denote the rating matrix of as . Then, the AE with the obtained model is adopted to reconstruct . The rating matrix is used as the input data for the AE that outputs the reconstructed rating matrix . Since is reconstructed based on the obtained model which reflects the hidden features of data, can be used to approximate the rating matrix . Then, similar to the rating matrix, each vehicle also abstracts a personal information matrix from the data of the testing set, where the first dimension of the matrix is VUs’ ID and the second dimension is VU’s personal information.

IV-B2 Cosine Similarity

counts the number of the nonzero ratings for each VU in and marks the VUs with the largest numbers as active VUs. Then, each vehicle combines and the personal information matrix (denoted as ) to calculate the similarity between each active VU and other VUs. The similarity between an active VU and is calculated according to cosine similarity [40]

| (15) | |||

where and are the vectors corresponding to the active VU and in the combined matrixes, respectively, and are the 2-norm of and , respectively. Then for each active VU , selects the VUs with the largest similarities as the neighboring VUs of VU . The ratings of the neighboring VUs also reflect the preferences of VU to a certain extent.

IV-B3 Interested Contents

After determining the neighboring VUs of active VUs, in , the vectors of neighboring VUs for each active VU are abstracted to construct a matrix , where the first dimension of is the IDs of the neighboring VUs for active VUs, while the second dimension of is the ratings of the contents from neighboring VUs. In , a content with a VU’s nonzero rating is regarded as the VU’s interested content. Then the number of interested contents is counted for each VU, where the counted number of a content is referred to as the content popularity of the content. selects the contents with the largest content popularity as the predicted interested contents.

IV-B4 Popular Contents

After vehicle in the local RSU uploads their predicted interested contents, the local RSU collects and compares the predicted interested contents uploaded from all vehicles to select the contents with the largest content popularity as the popular contents. The proposed popular content prediction algorithm is illustrated in Algorithm 2, where is the set of the popular contents and is the set of interested contents of .

The cache capacity of the each RSU , i.e., the largest number of contents that each RSU can accommodate, is usually smaller than . Next, we will propose a cooperative caching to determine where the predicted popular contents can be cached.

IV-C Cooperative Caching Based on DRL

We consider the computation capability of each RSU is powerful and the cooperative caching can be determined within a short time. The main goal is to find an optimal cooperative caching based on DRL to minimize the content transmission delay. Next, we will formulate the DRL framework and then introduce the DRL algorithm.

IV-C1 DRL Framework

The DRL framework includes state, action and reward. The training process is divided into slots. For the current slot , the local RSU observes the current state and decides the current action based on according to a policy , which is used to generate the action based on the state at each slot. Then the local RSU can obtain the current reward and observes the next state that is transited from the current state . We will design , and , respectively, for this DRL framework.

State

We consider the contents cached by the local RSU as the current state . In order to focus on the contents with high popularity, the contents of the state space are sorted in descending order based on the predicted content popularity of the popular contents, thus the current state can be expressed as , where is the th most popular content.

Action

Action represents whether the contents cached in the local RSU need to be relocated or not. In the predicted popular contents, the contents that are not cached in the local RSU form a set . If , the local RSU randomly selects contents from and exchanges them with the lowest popular contents cached in the local RSU, and then sorts the contents in a descending order based on their content popularity to get . Neighboring RSU also randomly samples contents from popular contents that do not belong to as the cached contents of the neighboring RSU within the next slot . We denote the contents cached by the neighboring RSU as . If , the contents cached in the local RSU will not be relocated and the neighboring RSU also determines its cached contents, similar to the case when .

Reward

The reward function is designed to minimize the total content transmission delay to fetch the contents requested by vehicles. Note that the local RSU has recorded all the contents requested by the vehicles. The content transmission delays to fetch a requested content are different when the content is cached in different places.

If content is cached in the local RSU, i.e., , the local RSU transmits content to , thus the content transmission delay is calculated as

| (16) |

where is the transmission rate between the local RSU and , which has been calculated by Eq. (3).

If content is cached in the neighboring RSU, i.e., , the neighboring RSU sends the content to the local RSU that forwards the content to , thus the transmission delay is calculated as

| (17) |

where is the transmission rate between the local RSU and neighboring RSU, which is a constant transmission rate in the wired link.

If content is neither cached in the local RSU nor in the neighboring RSU, i.e., , the MBS transmits content to , thus the content transmission delay is expressed as

| (18) |

where is the transmission rate between the MBS and , which is calculated according to Eq. (4).

In order to clearly distinguish the content transmission delays under different conditions, we set the reward that fetches content at slot as

| (19) |

where and .

Thus the reward function is calculated as

| (20) |

where is the number of requested contents from .

IV-C2 DRL Algorithm

As mentioned above, the next state will change when the action is . The dueling DQN algorithm is a particular algorithm which works for the cases where the partial actions have no relevant effects on subsequent states [41]. Specifically, the dueling DQN decomposes the Q-value into two functions and . Function is the state value function that is unrelated to the action, while is the action advantage function that is related to the action. Therefore, we adopt the dueling DQN algorithm to solve this problem.

The dueling DQN includes a prediction network, a target network and a replay buffer. The prediction network evaluates the current state-action value (Q-value) function, while the target network generates the optimal Q-value function. Each of them consists of three layers, i.e., the feature layer, the state-value layer, and the advantage layer. The replay buffer is adopted to cache the transitions for each slot. The dueling DQN algorithm is illustrated in Algorithm 3 and is described in detail as follow.

Firstly, the parameters of the prediction network and the parameters of the target network are initialized randomly. The requested contents from all vehicles in the local RSU for round as input (lines 1-2).

Then the algorithm is executed for episodes. At the beginning of each episode, the local RSU randomly selects contents from popular contents, and the neighboring RSU randomly selects contents from popular contents that are not cached in the local RSU. Then the algorithm is executed iteratively from slots to . In each slot , the local RSU first observes state and then input to the prediction network, in which it goes through the feature layer, state-value layer and advantage layer, respectively. In the end, the prediction network outputs the state value function and the action advantage function under each action , i.e., , respectively, where . Furthermore, the Q-value function of prediction network under each action is calculated as

| (21) |

In Eq. (21), the range of Q-values can be narrowed to remove redundant degrees of freedom by calculating the difference between the action advantage function and the average value of the action advantage functions under all actions, i.e., . Thus, the stability of the algorithm can be improved.

Then action is chosen by the method, which is calculated as

| (22) |

Particularly, action is initialized as at slot .

The local RSU calculates the reward according to Eqs. (16) - (20) and state transits to the next state , then the local RSU observes . Next, the neighboring RSU randomly samples popular contents that are not cached in as its cached contents, which is denoted as . The transition from to is denoted as tuple , which is then stored in the replay buffer . When the number of the stored tuples in the replay buffer is larger than , the local RSU randomly samples tuples from to form a minibatch. Let be the -th tuple in the mini-batch. Then input each tuple into the prediction network and the target network (lines 3-12).

Next, we will introduce how parameters of prediction network are updated. For tuple , the local RSU inputs into the target network, where it goes through the feature layer and outputs its feature. Then the feature is input to the state-value layer and the advantage layer, respectively, which output state value function and action advantage function under each action , respectively. Thus, the Q-value function of target network of tuple under each action is calculated as

| (23) |

Then the target Q-value of the target network of tuple is calculated as

| (24) |

where is the discount factor. The loss function is calculated as follows

| (25) |

The gradient of loss function for all sampled tuples is calculated as

| (26) |

At the end of slot , the parameters of the prediction network are updated as

| (27) |

where is the learning rate of prediction network.

Up to now, the iteration in slot is completed, which will be repeated. During the iterations, the parameters of target network are updated after a certain number of slots (), as the parameters of prediction network . When the number of slots reaches , this episode is finished and then the local RSU randomly caches contents from popular contents to start the next episode. When the number of episodes reaches , the algorithm will be terminated (lines 13-22). The flow diagram of the dueling DQN algorithm is shown in Fig. 4.

Finally, the local RSU and neighboring RSU cache popular contents according to the optimal cooperative caching, and then each vehicle fetches contents from the VEC. This round is finished after each vehicle has fetched contents and then the next round is started.

V Simulation and Analytical Results

| Parameters of System Model | |||

| Parameter | Value | Parameter | Value |

| kHz | |||

| dBm | |||

| dBm | Mbps | ||

| bytes | s | ||

| s | km/h | ||

| km/h | km/h | ||

| km/h | dBm | ||

| Parameters of Asynchronous FL | |||

| Parameter | Value | Parameter | Value |

| m | |||

| Parameters of DRL | |||

| Parameter | Value | Parameter | Value |

We have evaluated the performance of the proposed CAFR scheme in this section.

V-A Settings and Dataset

We simulate a VEC environment on the urban road as shown in Fig. 1 and the simulation tool is Python . The communications between vehicle and RSU/MBS employ the 3rd Generation Partnership Project (3GPP) cellular V2X (C-V2X) architecture, where the parameters are set according to the 3GPP standard [34]. The simulation parameters are listed in Table I. A real-world dataset from the MovieLens website, i.e., MovieLens 1M, is used in the experiments. MovieLens 1M contains rating values for movies from anonymous VUs with movie ratings ranging from to , where each VU rates at least movies [42]. MovieLens lM also provides personal information about VUs including ID number, gender, age and postcode. We randomly divide MovieLens lM data set to each vehicle as its local data. Each vehicle randomly chooses data from its local data as its training set and data as its testing set. For each round, each vehicle randomly samples a part of the movies from testing set as its requested contents.

V-B Performance Evaluation

We use cache hit ratio and the content transmission delay as performance metrics to evaluate the CAFR scheme. The cache hit rate is defined as the probability of fetching requested contents from the local RSU [43]. If a requested content is cached in the local RSU, it can be fetched directly from the local RSU, which is referred to as a cache hit, otherwise, it is referred to as a cache miss. Thus, the cache hit rate is calculated as

| (28) |

The content transmission delay indicates the average delay for all vehicles to fetch contents, which is calculated as

| (29) |

where is the delay for all vehicles to fetch contents, and it is calculated by aggregating the content transmission delay for every vehicle to fetch contents.

We compare the CAFR scheme with other baseline schemes such as:

-

•

Random: Randomly selecting contents from the all contents to cache in the local and neighboring RSU.

-

•

c--greedy: Selecting the contents with largest numbers of requests based on probability and selecting contents randomly based on probability to cache in the local RSU. In our simulation, .

-

•

Thompson sampling: For each round, the contents cached in the local RSU is updated based on the number of cache hits and cache misses in the previous round [9], and contents with the highest value are selected to cache in the local RSU.

-

•

FedAVG: Federated averaging (FedAVG) is a typical synchronous FL scheme where the local RSU needs to wait for the local model updates to update its global model according to weighted average method:

(30) -

•

CAFR without DRL: Compared with the CAFR scheme, this scheme does not adopt the DRL algorithm to optimize caching scheme. Specifically, after predicting the popular contents, contents are randomly selected from the predicted popular contents to cache in the local RSU and neighboring RSU, respectively.

Now, we will evaluate the performance of the CAFR scheme through simulation experiments. In the following performance evaluation, each result is the average value of five experiments.

Fig. 5 shows the cache hit ratio of different schemes under different cache capacities of each RSU, where the result of CAFR is obtained when the vehicle density is vehicles/km (i.e., the number of vehicles is 15 per kilometer), and the results of other schemes are independent with the vehicle density. It can be seen that the cache hit ratio of all schemes increases with a larger capacity. This is because that the local RSU caches more contents with a larger capacity, thus the requested contents of vehicles are more likely to be fetched from the local RSU. Moreover, it is seen that the random scheme provides the worst cache hit ratio, because the scheme just selects contents randomly without considering the content popularity. In addition, CAFR and c--greedy outperform the random scheme and the thompson sampling. This is because that random and thompson sampling schemes do not predict the caching contents through learning, whereas CAFR and c--greedy decide the caching contents by observing the historical requested contents. Furthermore, CAFR outperforms c--greedy. This is because that CAFR captures useful hidden features from the data to predict the accurate popular contents.

Fig. 6 shows the content transmission delay of different schemes under different cache capacities of each RSU, where the vehicle density is vehicles/km. It is seen that the content transmission delays of all schemes decrease as the cache capacity increases. This is because that each RSU caches more contents as the cache capacity increases, and each vehicle fetches contents from local RSU and neighboring RSU with a higher possibility, thus reducing the content transmission delay. Moreover, the content transmission delay of CAFR is smaller than other schemes. This is because that the cache hit rate of CAFR is better than those of schemes, and more vehicles can fetch contents from local RSU directly, thus reducing the content transmission delay.

Fig. 7 shows the cache hit ratio and the content transmission delay of the CAFR scheme under different vehicle densities when the cache capacity of each RSU is . As shown in this figure, the cache hit rate increases as the vehicle density increases. This is because when more vehicles enter the coverage area of the RSU, the global model of the local RSU is trained based on more data, and thus can predict accurately. In addition, the content transmission delay decreases as the vehicle density increases. This is because the cache hit rate increases when the vehicle density increases, which enables more vehicles to fetch contents directly from local RSU.

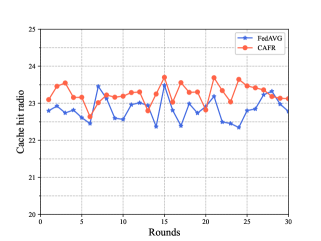

Fig. 8 compares the cache hit rate of the CAFR scheme and the FedAVG scheme under different rounds when the vehicle density is vehicles/km and the cache capacity of each RSU is contents. It can be seen that the cache hit radio of CAFR fluctuates between and within rounds, while the cache hit rate of FedAVG scheme fluctuates between and within rounds. This indicates that the CAFR scheme is slightly better than the FedAVG scheme. This is because the CAFR scheme has considered the vehicles’ mobility characteristics including the positions and velocities to select vehicles and aggregate the local model, thus improving the accuracy of the global model.

Fig. 9 shows the training time of CAFR and FedAVG schemes for each round when the vehicle density is vehicles/km and the cache capacity of each RSU is contents. It can be seen that the training time of CAFR scheme for each round is within s and s, while the training time of FedAVG scheme for each round is within s and s. This indicates that CAFR scheme has a much smaller training time than the FedAVG scheme. This is because the FedAVG scheme needs to aggregate all vehicles’ local models for the global model updating in each round, while the CAFR scheme aggregates as soon as a vehicle’s local model is received for each round.

Fig. 10 shows the cache hit rate and content transmission delay of each episode in the DRL of the CAFR scheme when the vehicle density is vehicles/km and the cache capacity of RSU is . As the episode increases, the cache hit rate gradually increases and the content transmission delay decreases gradually in the first ten episodes. This is because the local RSU and neighboring RSU gradually cache appropriate popular contents in the first ten episodes. In addition, it is seen that the cache hit rate and content transmission delay converge at around episode . This is because the local RSU is able to learn the policy to perform optimal cooperative caching at around episodes.

Fig. 11 compares the cache hit ratio of the CAFR scheme with CAFR scheme without DRL under different cache capacities of each RSU when the vehicle density is vehicles/km. As shown in Fig. 11, the cache hit ratio of CAFR outperforms the CAFR without DRL. This is because DRL can determine the optimal cooperative caching according to the predicted popular contents, and thus more suitable popular contents can be cached in the local RSU.

Fig. 12 compares the content transmission delay of the CAFR scheme with CAFR scheme without DRL under different cache capacities of each RSU when the vehicle density is vehicles/km. As shown in Fig. 12, the content transmission delay of CAFR is less than that of CAFR without DRL. This is because the cache hit ratio of CAFR outperforms the CAFR without DRL and more vehicles can fetch contents from local RSU directly.

VI Conclusions

In this paper, we considered the vehicle mobility and proposed a cooperative caching scheme CAFR to reduce the content transmission delay and improve the cache hit radio. We first proposed an asynchronous FL algorithm to obtain an accurate global model, and then proposed an algorithm to predict the popular contents based on the global model. Afterwards, we proposed a cooperative caching scheme to minimize the content transmission delay based on the dueling DQN algorithm. Simulation results have demonstrated that the CAFR scheme outperforms other baseline caching schemes. According to the theoretical analysis and simulation results, the conclusions can be summarized as follows:

-

•

CAFR scheme can learn from the local data of vehicles to capture useful hidden features and predict the accurate popular contents.

-

•

CAFR greatly reduces the training time for each round by aggregating the local model of a single vehicle in each round. In addition, CAFR considers vehicles’ mobility characteristics including the positions and velocities to select vehicles and aggregate the local model, which can improve the accuracy of the training model.

-

•

The DRL in the CAFR scheme determines the optimal cooperative caching policy according to the predicted popular contents, and thus more suitable popular contents are cached in the local RSU and neighboring RSU to reduce the content transmission delay.

References

- [1] L. Liu, C. Chen, Q. Pei, S. Maharjan and Y. Zhang, "Vehicular Edge Computing and Networking: A Survey," Mob. Networks Appl., vol. 26, pp. 1145-1168, 2021.

- [2] Q. Wu, Y. Zhao and Q. Fan, "Time-Dependent Performance Modeling for Platooning Communications at Intersection," IEEE IoT-J, doi: 10.1109/JIOT.2022.3161028.

- [3] Y. Dai, D. Xu, S. Maharjan, G. Qiao and Y. Zhang, "Artificial Intelligence Empowered Edge Computing and Caching for Internet of Vehicles," IEEE Wireless Commun. Mag., vol. 26, no. 3, pp. 12-18, Jun. 2019.

- [4] M. A. Javed and S. Zeadally, "AI-Empowered Content Caching in Vehicular Edge Computing: Opportunities and Challenges," IEEE Network, vol. 35, no. 3, pp. 109-115, May/June 2021.

- [5] A. Narayanan, S. Verma, E. Ramadan, P. Babaie, and Z.-L. Zhang, "DeepCache: A deep learning based framework for content caching," Proc. Workshop Netw. Meets AI ML NetAI, 2018, pp. 48–53.

- [6] M. Yan, C. A. Chan, W. Li, L. Lei, A. F. Gygax and C. -L. I, "Assessing the Energy Consumption of Proactive Mobile Edge Caching in Wireless Networks," IEEE Access, vol. 7, pp. 104394-104404, 2019, doi: 10.1109/ACCESS.2019.2931449.

- [7] M. Chen, Z. Yang, W. Saad, C. Yin, H. V. Poor and S. Cui, "A Joint Learning and Communications Framework for Federated Learning Over Wireless Networks," IEEE Trans. Wireless Commun., vol. 20, no. 1, pp. 269-283, Jan. 2021, doi: 10.1109/TWC.2020.3024629.

- [8] X. Wang, C. Wang, X. Li, V. C. M. Leung and T. Taleb, "Federated Deep Reinforcement Learning for Internet of Things With Decentralized Cooperative Edge Caching," IEEE IoT-J, vol. 7, no. 10, pp. 9441-9455, Oct. 2020, doi: 10.1109/JIOT.2020.2986803.

- [9] L. Cui, X. Su, Z. Ming, Z. Chen, S. Yang, Y. Zhou and W. Xiao, "CREAT: Blockchain-assisted Compression Algorithm of Federated Learning for Content Caching in Edge Computing," IEEE J-IoT, doi: 10.1109/JIOT.2020.3014370.

- [10] R. Cheng, Y. Sun, Y. Liu, L. Xia, D. Feng and M. Imran, "Blockchain-empowered Federated Learning Approach for An Intelligent and Reliable D2D Caching Scheme," IEEE J-IoT, doi: 10.1109/JIOT.2021.3103107.

- [11] H. Zhu, Q. Wu, X. -J. Wu, Q. Fan, P. Fan and J. Wang, "Decentralized Power Allocation for MIMO-NOMA Vehicular Edge Computing Based on Deep Reinforcement Learning," IEEE J-IoT, doi: 10.1109/JIOT.2021.3138434.

- [12] Y. Dai, D. Xu, K. Zhang, S. Maharjan and Y. Zhang, "Deep Reinforcement Learning and Permissioned Blockchain for Content Caching in Vehicular Edge Computing and Networks," IEEE Trans. Veh. Technol., vol. 69, no. 4, pp. 4312-4324, April 2020, doi: 10.1109/TVT.2020.2973705.

- [13] Z. Yu, J. Hu, G. Min, Z. Zhao, W. Miao and M. S. Hossain, "Mobility-Aware Proactive Edge Caching for Connected Vehicles Using Federated Learning," IEEE Trans. Intell. Transp. Syst., vol. 22, no. 8, pp. 5341-5351, Aug. 2021, doi: 10.1109/TITS.2020.3017474.

- [14] J. Zhao, X. Sun, Q. Li and X. Ma, "Edge Caching and Computation Management for Real-Time Internet of Vehicles: An Online and Distributed Approach," IEEE Trans. Intell. Transp. Syst., vol. 22, no. 4, pp. 2183-2197, April 2021, doi: 10.1109/TITS.2020.3012966.

- [15] S. Jiang, J. Liu, L. Huang, H. Wu and Y. Zhou, "Vehicular Edge Computing Meets Cache: An Access Control Scheme for Content Delivery," ICC 2020 - 2020 IEEE International Conference on Communications (ICC), 2020, pp. 1-6, doi: 10.1109/ICC40277.2020.9148755.

- [16] C. Tang, C. Zhu, H. Wu, Q. Li and J. J. P. C. Rodrigues, "Toward Response Time Minimization Considering Energy Consumption in Caching-Assisted Vehicular Edge Computing," IEEE J-IoT, vol. 9, no. 7, pp. 5051-5064, 1 April1, 2022, doi: 10.1109/JIOT.2021.3108902.

- [17] Y. Dai and Y. Zhang, "Adaptive Digital Twin for Vehicular Edge Computing and Networks," Journal of Communications and Information Networks (JCIN), vol. 7, no. 1, pp. 48-59, March 2022.

- [18] G. Qiao, S. Leng, S. Maharjan, Y. Zhang and N. Ansari, "Deep Reinforcement Learning for Cooperative Content Caching in Vehicular Edge Computing and Networks," IEEE J-IoT , vol. 7, no. 1, pp. 247-257, Jan. 2020, doi: 10.1109/JIOT.2019.2945640.

- [19] J. Chen, H. Wu, P. Yang, F. Lyu and X. Shen, "Cooperative Edge Caching With Location-Based and Popular Contents for Vehicular Networks," IEEE Trans. Veh. Technol., vol. 69, no. 9, pp. 10291-10305, Sept. 2020, doi: 10.1109/TVT.2020.3004720.

- [20] L. Yao, X. Xu, J. Deng, G. Wu and Z. Li, "A Cooperative Caching Scheme for VCCN With Mobility Prediction and Consistent Hashing," IEEE Trans. Intell. Transp. Syst., doi: 10.1109/TITS.2022.3171071.

- [21] R. Wang, Z. Kan, Y. Cui, D. Wu and Y. Zhen, "Cooperative Caching Strategy With Content Request Prediction in Internet of Vehicles," IEEE J-IoT, vol. 8, no. 11, pp. 8964-8975, 1 June1, 2021, doi: 10.1109/JIOT.2021.3056084.

- [22] D. Gupta, S. Rani, S. H. Ahmed, S. Garg, M. Jalil Piran and M. Alrashoud, "ICN-Based Enhanced Cooperative Caching for Multimedia Streaming in Resource Constrained Vehicular Environment," IEEE Trans. Intell. Transp. Syst., vol. 22, no. 7, pp. 4588-4600, July 2021, doi: 10.1109/TITS.2020.3043593.

- [23] L. Yao, Y. Wang, X. Wang and G. WU, "Cooperative Caching in Vehicular Content Centric Network Based on Social Attributes and Mobility," IEEE Trans. Mob. Comput., vol. 20, no. 2, pp. 391-402, 1 Feb. 2021, doi: 10.1109/TMC.2019.2944829.

- [24] R. Wu, G. Tang, T. Chen, D. Guo, L. Luo and W. Kang, "A Profit-Aware Coalition Game for Cooperative Content Caching at the Network Edge," IEEE J-IoT, vol. 9, no. 2, pp. 1361-1373, 15 Jan.15, 2022, doi: 10.1109/JIOT.2021.3087719.

- [25] L. Yao, A. Chen, J. Deng, J. Wang and G. Wu, "A Cooperative Caching Scheme Based on Mobility Prediction in Vehicular Content Centric Networks," IEEE Trans. Veh. Technol., vol. 67, no. 6, pp. 5435-5444, June 2018, doi: 10.1109/TVT.2017.2784562.

- [26] K. Zhang, S. Leng, Y. He, S. Maharjan and Y. Zhang, "Cooperative Content Caching in 5G Networks with Mobile Edge Computing," IEEE Wirel. Commun., vol. 25, no. 3, pp. 80-87, JUNE 2018, doi: 10.1109/MWC.2018.1700303.

- [27] K. Liu, J. K. -Y. Ng, J. Wang, V. C. S. Lee, W. Wu and S. H. Son, "Network-Coding-Assisted Data Dissemination via Cooperative Vehicle-to-Vehicle/-Infrastructure Communications," IEEE Trans. Intell. Transp. Syst., vol. 17, no. 6, pp. 1509-1520, June 2016, doi: 10.1109/TITS.2015.2495269.

- [28] S. Wang, Z. Zhang, R. Yu and Y. Zhang, "Low-latency caching with auction game in vehicular edge computing," 2017 IEEE/CIC International Conference on Communications in China (ICCC), 2017, pp. 1-6, doi: 10.1109/ICCChina.2017.8330526.

- [29] M. Liu, D. Li, H. Wu, F. Lyu and X. S. Shen, "Real-Time Search-Driven Caching for Sensing Data in Vehicular Networks," IEEE J-IoT, doi: 10.1109/JIOT.2021.3134964.

- [30] B. Ko, K. Liu, S. H. Son and K. -J. Park, "RSU-Assisted Adaptive Scheduling for Vehicle-to-Vehicle Data Sharing in Bidirectional Road Scenarios," IEEE Trans. Intell. Transp. Syst., vol. 22, no. 2, pp. 977-989, Feb. 2021, doi: 10.1109/TITS.2019.2961705.

- [31] J. Cui, L. Wei, H. Zhong, J. Zhang, Y. Xu and L. Liu, "Edge Computing in VANETs-An Efficient and Privacy-Preserving Cooperative Downloading Scheme," IEEE J. Sel. Areas Commun., vol. 38, no. 6, pp. 1191-1204, June 2020, doi: 10.1109/JSAC.2020.2986617.

- [32] Q. Luo, C. Li, T. H. Luan and W. Shi, "Collaborative Data Scheduling for Vehicular Edge Computing via Deep Reinforcement Learning," IEEE J-IoT, vol. 7, no. 10, pp. 9637-9650, Oct. 2020, doi: 10.1109/JIOT.2020.2983660.

- [33] Y. AlNagar, S. Hosny, and A. A. El-Sherif, "Towards mobility-aware proactive caching for vehicular ad hoc networks," Proc. IEEE Wireless Commun. Netw. Conf. Workshop (WCNCW), Apr. 2019, pp. 1–6.

- [34] "Study on LTE-based V2X Services," 3rd Generation Partnership Project (3GPP), Technical Specification (TS) 36.885, June 2016, version 14.0.0.

- [35] J. Chen, H. Wu, P. Yang, F. Lyu and X. Shen, "Cooperative Edge Caching With Location-Based and Popular Contents for Vehicular Networks," IEEE Trans. Veh. Technol., vol. 69, no. 9, pp. 10291-10305, Sept. 2020, doi: 10.1109/TVT.2020.3004720.

- [36] A. Ng, "Sparse autoencoder," CS294A Lecture notes, vol. 72, no. 2011, pp. 1–19, 2011.

- [37] Y. Chen, Y. Ning, M. Slawski and H. Rangwala, "Asynchronous online federated learning for edge devices with non-IID data," 2020 IEEE International Conference on Big Data (Big Data), pp. 15-24, 2020.

- [38] C. Xie, S. Koyejo, and I. Gupta, "Asynchronous federated optimization," arXiv preprint arXiv:1903.03934, 2019.

- [39] H. -S. Lee and J. -W. Lee, "Adaptive Transmission Scheduling in Wireless Networks for Asynchronous Federated Learning," IEEE J. Sel. Areas Commun., vol. 39, no. 12, pp. 3673-3687, Dec. 2021, doi: 10.1109/JSAC.2021.3118353.

- [40] Z. Yu, J. Hu, G. Min, H. Lu, Z. Zhao, H. Wang and N. Georgalas, "Federated Learning Based Proactive Content Caching in Edge Computing," 2018 IEEE Global Communications Conference (GLOBECOM), 2018, pp. 1-6, doi: 10.1109/GLOCOM.2018.8647616.

- [41] Z. Wang, T. Schaul, M. Hessel, H. Hasselt, M. Lanctot and N. Freitas, "Dueling Network Architectures for Deep Reinforcement Learning," ArXiv, abs/1511.065811 (2016): n. pag.

- [42] F. Harper and J. Konstan, "The movielens datasets: History and context," ACM Trans. Interact. Intell. Syst., vol. 5, no. 4, p. 19, 2016.

- [43] S. Müller, O. Atan, M. van der Schaar and A. Klein, "Context-Aware Proactive Content Caching With Service Differentiation in Wireless Networks," IEEE Trans. Wireless Commun., vol. 16, no. 2, pp. 1024-1036, Feb. 2017.