Misspecification-robust Sequential Neural Likelihood for Simulation-based Inference

Abstract

Simulation-based inference techniques are indispensable for parameter estimation of mechanistic and simulable models with intractable likelihoods. While traditional statistical approaches like approximate Bayesian computation and Bayesian synthetic likelihood have been studied under well-specified and misspecified settings, they often suffer from inefficiencies due to wasted model simulations. Neural approaches, such as sequential neural likelihood (SNL) avoid this wastage by utilising all model simulations to train a neural surrogate for the likelihood function. However, the performance of SNL under model misspecification is unreliable and can result in overconfident posteriors centred around an inaccurate parameter estimate. In this paper, we propose a novel SNL method, which through the incorporation of additional adjustment parameters, is robust to model misspecification and capable of identifying features of the data that the model is not able to recover. We demonstrate the efficacy of our approach through several illustrative examples, where our method gives more accurate point estimates and uncertainty quantification than SNL.

1 Introduction

Statistical inference for complex models can be challenging when the likelihood function is infeasible to evaluate numerous times. However, if the model is computationally inexpensive to simulate given parameter values, it is possible to perform approximate parameter estimation by so-called simulation-based inference (SBI) techniques (e.g. Cranmer et al. (2020)). The difficulty of obtaining reliable inferences in the SBI setting is exacerbated when the model is misspecified (e.g. Cannon et al. (2022); Frazier et al. (2020b)).

SBI methods are widely employed across numerous scientific disciplines, such as cosmology (Hermans et al., 2021), ecology (Hauenstein et al., 2019), mathematical biology (Wang et al., 2024), neuroscience (Confavreux et al., 2023; Gonçalves et al., 2020), population genetics (Beaumont, 2010) and in epidemiology to model the spread of infectious agents such as S. pneumoniae (Numminen et al., 2013) and COVID-19 (Ferguson et al., 2020; Warne et al., 2020). Model building is an iterative process involving fitting tentative models, model criticism and model expansion; Blei et al. (2017) have named this process the “Box Loop” after pioneering work by Box (1976). We refer to Gelman et al. (2020) as a guide to Bayesian model building. Although methods for statistical model criticism are well-developed, there is less work on reliably refining models for SBI methods. One reason is that SBI methods are susceptible to model misspecification, which may be especially prevalent in the early stages of modelling when complexity is progressively introduced. A misspecified model may lead to misleading posterior distributions, and the available tools for model criticism may also be unreliable (Schmitt et al., 2023a). Ensuring that misspecification is detected and its negative impacts are mitigated is important if we are to iterate and refine our model reliably. However, this is often overlooked in SBI methods.

Posterior predictive checks (PPCs) are frequently used for model evaluation and provide a tool to check for model misspecification (Gabry et al., 2019). For SBI, if a model is well-specified, we should be able to generate data that resembles the observed data. However, as noted in Schmitt et al. (2023a), PPCs rely on the fidelity of the posterior and can only serve as an indirect measure of misspecification. Similarly, scoring rules are another way to assess how well a probabilistic model matches the observed data (Gneiting & Raftery, 2007).

In this paper, we focus on the type of misspecification where the model is unable to recover the observed summary statistics as the sample size diverges. This form of misspecification is referred to as incompatibility by Marin et al. (2014). Statistical approaches for SBI, such as approximate Bayesian computation (ABC, Sisson et al. (2018)) and Bayesian synthetic likelihood (BSL, Price et al. (2018)) have been well studied, both empirically (e.g. Drovandi & Frazier (2022)) and theoretically (e.g. Li & Fearnhead (2018), Frazier et al. (2018), David T. Frazier & Kohn (2023)). Wilkinson (2013) reframes the approximate ABC posterior as an exact result for a different distribution that incorporates an assumption of model error (i.e. model misspecification). Ratmann et al. (2009) proposes an ABC method that augments the likelihood with unknown error terms, allowing model criticism by detecting summary statistics that require high tolerances. Frazier et al. (2020a) incorporate adjustment parameters, inspired by RBSL, in an ABC context. These approaches often rely on a low-dimensional summarisation of the data to manage computational costs. ABC aims to minimise the distance between observed and simulated summaries, whereas BSL constructs a Gaussian approximation of the model summary to form an approximate likelihood. In the case of model misspecification, there may be additional motivation to replace the entire dataset with summaries, as the resulting model can then be trained to capture the broad features of the data that may be of most interest; see, e.g., Lewis et al. (2021) for further discussion.

Neural approaches, such as SNL and neural posterior estimation (NPE), have been shown to exhibit poor empirical performance under model misspecification (e.g. Bon et al. (2023); Cannon et al. (2022); Schmitt et al. (2023a); Ward et al. (2022)). Thus, there is a critical need to develop these neural approaches so they are robust to model misspecification. Ward et al. (2022) develop robust NPE (RNPE), a method to make NPE robust to model misspecification and useful for model criticism. Cannon et al. (2022) develop robust SBI methods by using machine learning techniques to handle out-of-distribution (OOD) data. Cranmer et al. (2020) advise incorporating additional noise directly into the simulator if model misspecification is suspected.

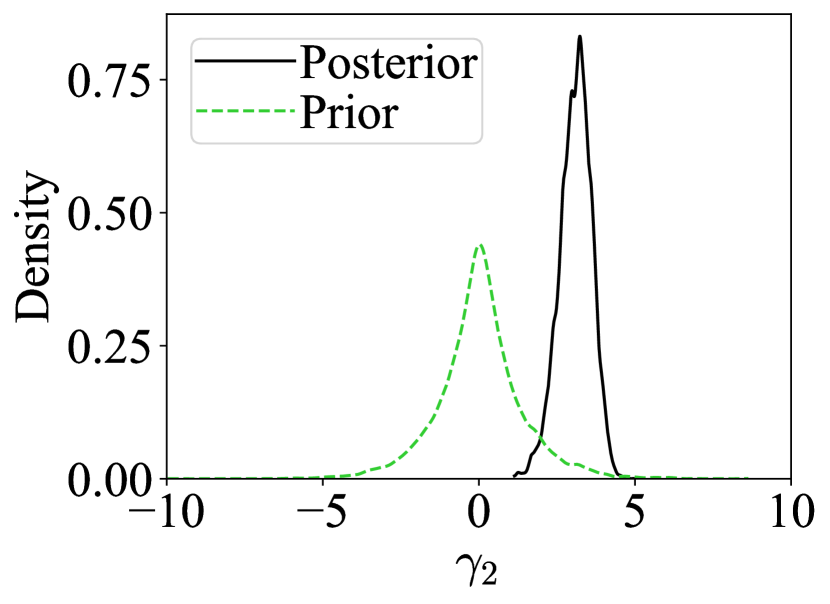

We develop a robust version of SNL, inspired by the mean adjustment approach for BSL (Frazier & Drovandi, 2021). Unlike Ward et al. (2022), who consider NPE, we focus on neural likelihood estimation, which is useful for problems where the likelihood is easier to emulate than the posterior. Our method is the first sequential neural approach that simultaneously detects and corrects for model misspecification. By shifting incompatible summary statistics using adjustment parameters, our method matches quite closely the posterior obtained when only considering compatible summary statistics. This is demonstrated in Figure 1, on an example discussed further in Section 4, where our method is shown to closely match the posterior density obtained using the compatible summary statistic. We further demonstrate the reliable performance of our approach on several illustrative examples.

2 Background

Let denote the observed data and define as the true unknown distribution of . The observed data is assumed to be generated from a class of parametric models, . The posterior density of interest is given by

| (1) |

where is the likelihood function and is the prior distribution. In this paper, we are interested in models for which is analytically or computationally intractable, but from which we can easily simulate pseudo-data for any where is dimensional.

2.1 Simulation-based Inference

The traditional statistical approach to conducting inference on in this setting is to use ABC methods. Using the assumed DGP, these methods search for values of that produce pseudo-data which is “close enough” to , and then retain these values to build an approximation to the posterior. The comparison is generally carried out using summaries of the data to ensure the problem is computationally feasible. Let , denote the vector summary statistic mapping used in the analysis, where , and .

Two prominent statistical approaches for SBI are ABC and BSL. ABC approximates the likelihood for the summaries via the following:

where measures the discrepancy between observed and simulated summaries and is a kernel that allocates higher weight to smaller . The bandwidth of the kernel, , is often referred to as the tolerance in the ABC literature. The above integral is intractable, but can be estimated unbiasedly by drawing mock datasets and computing

It is common to set and choose the indicator kernel function,

Using arguments from the exact-approximate literature (Andrieu & Roberts, 2009), unbiasedly estimating the ABC likelihood leads to a Bayesian algorithm that samples from the approximate posterior proportional to . As is evident from the above integral estimator, ABC non-parametrically estimates the summary statistic likelihood. Unfortunately, this non-parametric approximation causes ABC to exhibit the “curse of dimensionality”, meaning that the probability of a proposed parameter being accepted decreases dramatically as the dimension of the summary statistics increases (Barber et al., 2015; Csilléry et al., 2010).

In contrast, BSL uses a parametric estimator. The most common BSL approach approximates using a Gaussian:

where and denote the mean and variance of the model summary statistic at . In almost all practical cases and are unknown, but we can replace these quantities with those estimated from independent model simulations, using for example the sample mean and variance:

and where each simulated data set , , is generated i.i.d. from . The synthetic likelihood is then approximated as

Unlike ABC, is not an unbiased estimator of . David T. Frazier & Kohn (2023) demonstrate that if the summary statistics are sub-Gaussian, then the choice of is immaterial so long as diverges as diverges. The insensitivity to is supported empirically in Price et al. (2018), provided that is chosen large enough so that the plug-in synthetic likelihood estimator has a small enough variance to ensure that MCMC mixing is not adversely affected. The Gaussian assumption can be limiting; however, neural likelihoods provide a more flexible alternative to BSL while retaining the advantages of a parametric approximation.

Unfortunately, both ABC and BSL are inefficient in terms of the number of model simulations required to produce posterior approximations. In particular, most algorithms for ABC and BSL are wasteful in the sense that they use a relatively large number of model simulations associated with rejected parameter proposals. In contrast, methods have been developed in machine learning that utilise all model simulations to learn either the likelihood (e.g. Papamakarios et al. (2019)), posterior (e.g. Greenberg et al. (2019); Papamakarios & Murray (2016)) or likelihood ratio (e.g. Durkan et al. (2020); Hermans et al. (2020); Thomas et al. (2022)). We consider the SNL method of Papamakarios et al. (2019) in more detail in Section 3.

Neural SBI methods have seen rapid advancements, with various approaches approximating the likelihood (Boelts et al., 2022; Wiqvist et al., 2021). These methods include diffusion models for approximating the score of the likelihood (Simons et al., 2023), energy-based models for surrogating the likelihood (Glaser et al., 2023) and a “neural conditional exponential family” trained via score matching (Pacchiardi & Dutta, 2022). Bon et al. (2022) present a method for refining approximate posterior samples to minimise bias and enhance uncertainty quantification by optimising a transform of the approximate posterior, which maximises a scoring rule.

One way to categorise neural SBI methods is by differentiating between amortised and sequential sampling schemes. These methods differ in their proposal distribution for . Amortised methods estimate the neural density for any within the support of the prior predictive distribution. This allows the trained flow to approximate the posterior for any observed statistic, making it efficient when analysing multiple datasets. However, this requires using the prior as the proposal distribution. When the prior and posterior differ significantly, there will be few training samples of close to , resulting in the trained flow potentially being less accurate in the vicinity of the observed statistic.

2.2 SBI and Model Misspecification

When we apply a summary statistic mapping, we need to redefine the usual notion of model misspecification, i.e., no value of such that , as it is still possible for to generate summary statistics that match the observed statistic even if the model is incorrect (Frazier et al., 2020b). We define and as the expected values of the summary statistic with respect to the probability measures and , respectively. The meaningful notion of misspecification in SBI is when no satisfies , implying there is no parameter value for which the expected simulated and observed summaries match. This is the definition of incompatibility proposed in Marin et al. (2014).

Wilkinson (2013) reframes the approximate ABC posterior as an exact result for a different distribution that incorporates an assumption of model error (i.e. model misspecification). Ratmann et al. (2009) proposes an ABC method that augments the likelihood with unknown error terms, allowing model criticism by detecting summary statistics that require high tolerances. Frazier et al. (2020a) incorporate adjustment parameters, inspired by RBSL, in an ABC context.

The behaviour of ABC and BSL under incompatibility is now well understood. In the context of ABC, we consider the model to be misspecified if

for some metric , and the corresponding pseudo-true parameter is defined as

Frazier et al. (2020b) show, under various conditions, the ABC posterior concentrates onto for large sample sizes, providing an inherent robustness to model misspecification. However, they also demonstrate that the asymptotic shape of the ABC posterior is non-Gaussian and credible intervals lack valid frequentist coverage; i.e., confidence sets do not have the correct level under .

In the context of BSL, Frazier et al. (2021) show that when the model is incompatible, i.e. , the Kullback-Leibler divergence between the true data generating distribution and the Gaussian distribution associated with the synthetic likelihood diverges as diverges. In BSL, we say that the model is incompatible if

We define

The behaviour of BSL under misspecification depends on the number of roots of . If there is a single solution, and under various assumptions, the BSL posterior will concentrate onto the pseudo-true parameter with an asymptotic Gaussian shape, and the BSL posterior mean satisfies a Bernstein von-Mises result. However, if there are multiple solutions to , then the BSL posterior will asymptotically exhibit multiple modes that do not concentrate on . The number of solutions to for a given problem is not known a priori and is very difficult to explore.

In addition to the theoretical issues faced by BSL under misspecification, there are also computational challenges. Frazier & Drovandi (2021) point out that under incompatibility, since the observed summary lies in the tail of the estimated synthetic likelihood for any value of , the Monte Carlo estimate of the likelihood suffers from high variance. Consequently, a significantly large value of is needed to enable the MCMC chain to mix and avoid getting stuck, which is computationally demanding.

Due to the undesirable properties of BSL under misspecification, Frazier & Drovandi (2021) propose RBSL as a way to identify incompatible statistics and make inferences more robust simultaneously. RBSL is a model expansion that introduces auxiliary variables, represented by the vector . These variables shift the means (RBSL-M) or inflate the variances (RBSL-V) in the Gaussian approximation, ensuring that the extended model is compatible by absorbing any misspecification. This approach guarantees that the observed summary does not fall far into the tails of the expanded model. However, the expanded model is now overparameterised since the dimension of the combined vector is larger than the dimension of the summary statistics.

To regularise the model, Frazier & Drovandi (2021) impose a prior distribution on that favours compatibility. However, each component of ’s prior has a heavy tail, allowing it to absorb the misspecification for a subset of the summary statistics. This method identifies the statistics the model is incompatible with while mitigating their influence on the inference. Frazier & Drovandi (2021) demonstrate that under compatibility, the posterior for is the same as its prior, so that incompatibility can be detected by departures from the prior.

The mean adjusted synthetic likelihood is denoted

| (2) |

where is the vector of estimated standard deviations of the model summary statistics, and denotes the Hadamard (element-by-element) product. The role of is to ensure that we can treat each component of as the number of standard deviations that we are shifting the corresponding model summary statistic.

Frazier & Drovandi (2021) suggest using a prior where and are independent, with the prior density for being a Laplace prior with scale for each . The prior is chosen because it is peaked at zero but has a moderately heavy tail. Sampling the joint posterior can be done using a component-wise MCMC algorithm that iteratively updates using the conditionals and . The update for holds the model simulations fixed and uses a slice sampler, resulting in an acceptance rate of one without requiring tuning a proposal distribution. Frazier & Drovandi (2021) find empirically that sampling over the joint space does not slow down mixing on the -marginal space. In fact, in cases of misspecification, the mixing is substantially improved as the observed value of the summaries no longer falls in the tail of the Gaussian distribution.

Recent developments in SBI have focused on detecting and addressing model misspecification for both neural posterior estimation (Ward et al., 2022) and for amortised and sequential inference (Schmitt et al., 2023a). Schmitt et al. (2023a) employs a maximum mean discrepancy (MMD) estimator to detect a “simulation gap” between observed and simulated data, while Ward et al. (2022) detects and corrects for model misspecification by introducing an error model . Noise is added directly to the summary statistics during training in Bernaerts et al. (2023). Huang et al. (2023) noted that as incompatibility is based on the choice of summary statistics, if the summary statistics are learnt via a NN, training this network with a regularised loss function that penalises statistics with a mismatch between the observed and simulated values will lead to robust inference. Glöckler et al. (2023), again focused on the case of learnt (via NN) summaries, propose a scheme for robustness against adversarial attacks (i.e. small worst-case perturbations) on the observed data. Schmitt et al. (2023c) introduce a meta-uncertainty framework that blends real and simulated data to quantify uncertainty in posterior model probabilities, applicable to SBI with potential model misspecification

Generalised Bayesian inference (GBI) is an alternative class of methods suggested to handle model misspecification better than standard Bayesian methods (Knoblauch et al., 2022). Instead of targeting the standard Bayesian posterior, the GBI framework targets a generalised posterior,

where is some loss function and is a tuning parameter that needs to be calibrated appropriately (Bissiri et al., 2016). Various approaches have applied GBI to misspecified models with intractable likelihoods (Chérief-Abdellatif & Alquier, 2020; Matsubara et al., 2022; Pacchiardi & Dutta, 2021; Schmon et al., 2021). Gao et al. (2023) extends GBI to the amortised SBI setting, using a regression neural network to approximate the loss function, achieving favourable results for misspecified examples. Dellaporta et al. (2022) employ similar ideas to GBI for an MMD posterior bootstrap.

3 Robust Sequential Neural Likelihood

SNL is within the class of SBI methods that utilise a neural conditional density estimator (NCDE). An NCDE is a particular class of neural network, , parameterised by , which learns a conditional probability density from a set of paired data points. This is appealing for SBI since we have access to pairs of but lack a tractable conditional probability density in either direction. The idea is to train on and use it as a surrogate for the unavailable density of interest. NCDEs have been employed as surrogate densities for the likelihood (Papamakarios et al., 2019) and posterior (Papamakarios & Murray, 2016; Greenberg et al., 2019) or both simultaneously (Radev et al., 2023; Schmitt et al., 2023b; Wiqvist et al., 2021). Most commonly, a normalizing flow is used as the NCDE, and we do so here.

Normalising flows are a useful class of neural network for density estimation. Normalising flows convert a simple base distribution , e.g. a standard normal, to a complex target distribution, , e.g. the likelihood, through a sequence of diffeomorphic transformations (bijective, differentiable functions with a differentiable inverse), . The density of , where is,

| (3) |

where is the Jacobian of . Autoregressive flows, such as neural spline flow used here, are one class of normalising flow that ensure that the Jacobian is a triangular matrix, allowing fast computation of the determinant in Equation 3. We refer to Papamakarios et al. (2021) for more details. Normalising flows are also useful for data generation, although this has been of lesser importance for SBI methods.

Sequential approaches aim to update the proposal distribution so that more training datasets are generated closer to , resulting in a more accurate approximation of for a given simulation budget. In this approach, training rounds are performed, with the proposal distribution for the current round given by the approximate posterior from the previous round. As in Papamakarios et al. (2019), the first round, , proposes , then for subsequent rounds , a normalising flow, is trained on all generated . The choice of amortised or sequential methods depends on the application. For instance, in epidemiology transmission models, we typically have a single set of summary statistics (describing the entire population of interest), relatively uninformative priors, and a computationally costly simulation function. In such cases, a sequential approach is more appropriate.

Neural-based methods can efficiently sample the approximate posterior using MCMC methods. The evaluation of the normalising flow density is designed to be fast. Since we are using the trained flow as a surrogate function, no simulations are needed during MCMC sampling. With automatic differentiation, one can efficiently find the gradient of an NCDE and use it in an effective MCMC sampler like the No-U-Turn sampler (NUTS) (Hoffman & Gelman, 2014).

Recent research has found that neural SBI methods behave poorly under model misspecification (Bon et al., 2023; Cannon et al., 2022; Schmitt et al., 2023a; Ward et al., 2022), prompting the development of more robust approaches. We propose robust SNL (RSNL), a sequential method that adapts to model misspecification by incorporating an approach similar to that of Frazier & Drovandi (2021). Our approach adjusts the observed summary based on auxiliary adjustment parameters, allowing it to shift to a region of higher surrogate density when the summary falls in the tail. RSNL evaluates the adjusted surrogate likelihood as estimating the approximate joint posterior,

| (4) |

where we set and independently of each other.

The choice of prior, , is crucial for RSNL. Drawing inspiration from RBSL-M, we impose a Laplace prior distribution on to promote shrinkage. The components of are set to be independent, . We find that the standard Laplace(0, 1) prior works well for low to moderate degrees of misspecification. However, it lacks sufficient support for high degrees of misspecification, leading to undetected misspecification and prior-data conflict (Evans & Jang, 2011). To address this, we employ a data-driven prior that, similar to a weakly informative prior, provides some regularisation without imposing strong assumptions for each component:

| (5) |

where is the -th standardised observed summary (discussed later). Large observed standardised summaries indicate a high degree of misspecification, and including this in the prior helps the expanded model detect it. We set for the initial round and update in each round by recomputing . This approach detects highly misspecified summaries while introducing minimal noise for well-specified summaries. Setting adjusts the scale, with larger values allowing larger adjustments. Through empirical observation, we find to work well. The adjustment parameters can map a misspecified summary to the mean of simulated summaries, regardless of its position in the tail. We consider this prior in more detail in Appendix E.

Standardising the simulated and observed summaries is necessary to account for varying scales, and it is done after generating additional model simulations at each training round. Since all generated parameters are used to train the flow, standardisation is computed using the entire dataset . Standardisation serves two purposes: 1) facilitating the training of the flow, and 2) ensuring that the adjustment parameters are on a similar scale to the summaries. It is important to note that standardisation is performed unconditionally, using the sample mean and sample standard deviation calculated from all simulated summary statistics in the training set. For complex DGPs where the variance of the summary depends on , conditional standardisation based on may be necessary. We discuss possible extensions in Section 5.

Algorithm 1 outlines the complete process for sampling the RSNL approximate posterior. The primary distinction between SNL and RSNL lies in the MCMC sampling, which now targets the adjusted posterior as a marginal of the augmented joint posterior for and . As a result, RSNL can be easily used as a substitute for SNL. This comes at the additional computational cost of targeting a joint posterior of dimension , rather than one of dimension . Nonetheless, this increase in computational cost is generally marginal compared to the expense of running simulations for neural network training, an aspect not adversely affected by RSNL. Since RSNL, like SNL, can efficiently evaluate both the neural likelihood and the gradient of the approximate posterior, we employ NUTS for MCMC sampling. This is in contrast to Ward et al. (2022), who use mixed Hamiltonian Monte Carlo, an MCMC algorithm for inference on both continuous and discrete variables, due to their use of a spike-and-slab prior.

Once we obtain samples from the joint posterior, we can examine the and posterior samples separately. The samples can be used for Bayesian inference on functions of relevant to the specific application. In contrast, the approximate posterior samples can aid in model criticism.

RSNL can be employed for model criticism similarly to RBSL. When the assumed and actual DGP are incompatible, RSNL is expected to behave like RBSL, resulting in a discrepancy between the prior and posterior distributions for the components of . Visual inspection should be sufficient to detect such a discrepancy, but researchers can also use any statistical distance function for assessment (e.g. total variation distance). This differs from the approach in RNPE, which assumes a spike and slab error model and uses the posterior frequency of being in the slab as an indicator of model misspecification.

4 Examples and Results

In this section, we demonstrate the capabilities of RSNL on five benchmark misspecified problems of increasing complexity and compare the results obtained to SNL and RNPE. The same hyperparameters were applied to all tasks, as detailed in Appendix C. Further details and results for some examples can be found in Appendix D. The breakdown of computational times for each example is shown in Appendix B.

The expected coverage probability, a widely used metric in the SBI literature (Hermans et al., 2022), measures the frequency at which the true parameter lies within the HDR. The HDR is the smallest volume region containing 100(1-)% of the density mass in the approximate posterior, where 1- represents the credibility level. The HDR is estimated following the approach of Hyndman (1996). Conservative posteriors are considered more scientifically reliable (Hermans et al., 2022) as falsely rejecting plausible parameters is generally worse than failing to reject implausible parameters. To calculate the empirical coverage, we generate observed summaries at , where represents the “true” data generating parameter and . Using samples from the approximate posterior obtained using RSNL, we use kernel density estimation to give . The empirical coverage is calculated using:

| (6) |

The mean posterior log density at the true (or pseudo-true) parameters is another metric used in the SBI literature (Lueckmann et al., 2021). We consider the approximate posterior log density at for each . As in Ward et al. (2022), we find that non-robust SBI methods can occasionally fail catastrophically. Consequently, we also present boxplots to offer a more comprehensive depiction of the results.

In Figure 2, we illustrate the coverage and log-density at of SNL, RSNL and RNPE across four simulation examples. Figure 2 illustrates the performance of SNL and RSNL, with RSNL exhibiting more conservative coverage and higher density around . While RSNL is better calibrated than SNL on these misspecified examples, RSNL can still be overconfident, as observed in the contaminated normal and contaminated SLCP examples. We find that RNPE coverage is similar to RSNL. This further reassures that the differing coverage is an artefact of the misspecified examples, in which case we do not have any guarantees of accurate frequentist coverage. There is also a high degree of underconfidence on two of the benchmark tasks, but this is preferable to the misleading overconfidence displayed by SNL in misspecified models. The boxplots reveal that RSNL consistently delivers substantial support around . At the same time, SNL has a lower median log density and is highly unreliable, often placing negligible density around .

4.1 Contaminated Normal

Here we consider the contaminated normal example from Frazier & Drovandi (2021) to assess how SNL and RSNL perform under model misspecification. In this example, the DGP is assumed to follow:

where . However, the actual DGP follows:

The sufficient statistic for under the assumed DGP is the sample mean, . For demonstration purposes, let us also include the sample variance, . When , we are unable to replicate the sample variance under the assumed model. We use the prior, and . The actual DGP is set to and , and hence the sample variance is incompatible. Since is sufficient, so is , and one might still be optimistic that useful inference will result. We thus want our robust algorithm to concentrate the posterior around the sample mean. Under the assumed DGP we have that , for all . We thus have meaning our model is misspecified. We include additional results for the contaminated normal, included in Appendix D, due to the ease of obtaining an analytical true posterior. We also consider this example with no summarisation (i.e. using 100 draws directly) to see how RSNL scales as we increase the number of summaries, with results found in Appendix F.

For the contaminated normal example, Figure 1 demonstrates that RSNL yields reliable inference, with high posterior density around the true parameter value, . In contrast, SNL provides unreliable inference, offering minimal support near the true value. The posteriors for the components of are also shown. The prior and posterior for (linked to the compatible summary statistic) are nearly identical, aligning with RBSL behaviour. For (associated with the incompatible statistic), misspecification is detected as the posterior exhibits high density away from 0. A visual inspection suffices for modellers to identify the misspecified summary and offers guidance for adjusting the model. Further, we observed that in the well-specified case, the effect of the adjustment parameters on the coverage is minimal (see Appendix D).

4.2 Misspecified MA(1)

We follow the misspecified moving average (MA) of order 1 example in Frazier & Drovandi (2021), where the assumed DGP is an MA(1) model, , and . However, the true DGP is a stochastic volatility model of the form:

where , and . We generate the observed data using the parameters , and . The data is summarised using the autocovariance function, , where is the number of observations and is the lag. We use the prior and set .

It can be shown that for the assumed DGP that . Under the true DGP, . As evidently , the model is misspecified. We also have a unique pseudo-true value with minimised at . The desired behaviour for our robust algorithm is to detect incompatibility in the first summary statistic and centre the posterior around this pseudo-true value. As the first element of goes from , increases, and the impact of model misspecification becomes more pronounced. We consider a specific run where we observe .

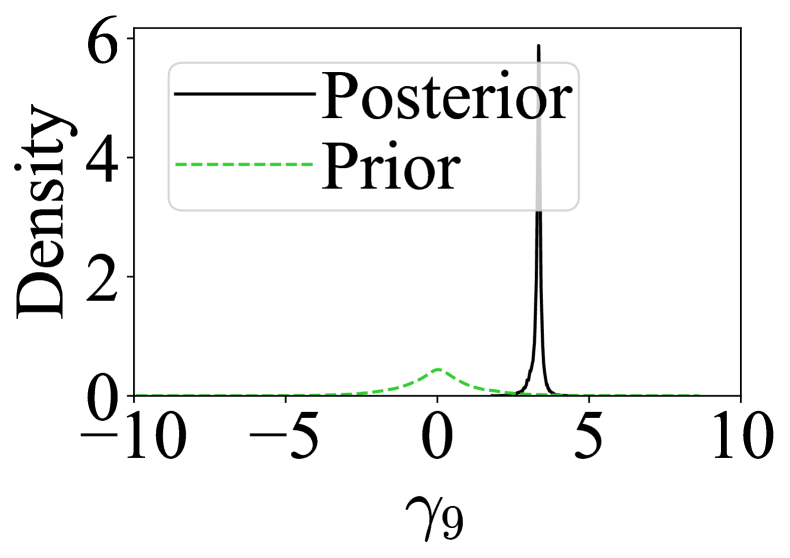

Figure 3 shows that RSNL both detects the incompatible sample variance statistic and ensures that the approximate posterior concentrates onto the parameter value that favours matching of the compatible statistic, i.e. . RNPE has a similar concentration around the pseudo-true value. SNL, however, is biased and has less support for the pseudo-true value.

As expected, (corresponding to the incompatible statistic) has significant posterior density away from 0 as seen in Figure 3. Also, the posterior for (corresponding to the compatible statistic) closely resembles the prior. The computational price of making inferences robust for the misspecified MA(1) model is minimal, with RSNL taking around 20 minutes to run and SNL taking around 10 minutes.

We may be concerned with the under-confidence of RSNL (and RNPE) on the misspecified MA(1) example as illustrated in the coverage plot in Figure 2. But from the posterior plots in Figure 3, we see that, for the misspecified MA(1) benchmark example, SNL is not only over-confident but it is over-confident for a point in the parameter space where the actual summaries and observed summaries are very different. In contrast, RSNL is under-confident, in that its posteriors are inflated relative to the standard level of frequentist coverage, however, it is under-confident for the right point: the values over which the RSNL posterior are centred deliver simulated summaries that are as close as possible to the observed summaries in the Euclidean norm. We also note that, in general when models are misspecified, Bayesian inference does not deliver valid frequentist coverage (Kleijn & van der Vaart, 2012).

4.3 Contaminated SLCP

The simple likelihood complex posterior (SLCP) model devised in Papamakarios et al. (2019) is a popular example in the SBI literature. The assumed DGP is a bivariate normal distribution with the mean vector, , and covariance matrix:

where , and . This results in a nonlinear mapping from , for . The posterior is “complex", having multiple modes due to squaring and vertical cutoffs from the uniform prior that we define in more detail later. Hence, the likelihood is expected to be easier to emulate than the posterior, making it suitable for an SNL approach. Four draws are generated from this bivariate distribution giving the likelihood, for . No summarisation is done, and the observed data is used in place of the summary statistic. We generate the observed data at parameter values, , and place an independent prior on each component of .

To impose misspecification on this illustrative example, we draw a contaminated 5-th observation, and use the observed data . Contamination is introduced by applying the (stochastic) misspecification transform considered in Cannon et al. (2022), , where , and . The assumed DGP is incompatible with this contaminated observation, and ideally, the approximate posterior would ignore the influence of this observation. Due to the stochastic transform, there is a small chance that the contaminated draw is compatible with the assumed DGP. However, the results presented here consider a specific example, where the observed contaminated draw is , which is very unlikely under the assumed DGP.

We thus want our inference to only use information from the four draws from the true DGP. The aim is to closely resemble the SNL posterior, where the observed data is the four non-contaminated draws. Figure 4 shows the estimated posterior densities for SNL (for both compatible and incompatible summaries) and RSNL for the contaminated SLCP example. When including the contaminated 5-th draw, SNL produces a nonsensical posterior with little useful information. Similarly, RNPE cannot correct the contaminated draw and does not give useful inference. Conversely, the RSNL posterior has reasonable density around the true parameters and has identified the separate modes.

The first eight compatible statistics are shown in Figure 6. The prior and posteriors reasonably match each other. In contrast, the observed data from the contaminated draw is recognised as being incompatible and has significant density away from 0, as evident in Figure 6. Again, there is no significant computational burden induced to estimate the adjustment parameters, with a total computational time of around 6 hours to run RSNL and around 4 hours for SNL.

Figure 2 shows that while RSNL is reasonably well-calibrated, RNPE does not give reliable inference. This is because RNPE could not correct for the high model misspecification of the contaminated draw. Additionally, approaches that emulate the likelihood rather than the posterior typically give better inference for this example (Lueckmann et al., 2021).

4.4 Misspecified SIR

We follow the misspecified susceptible-infected-recovered (SIR) model described in Ward et al. (2022). SIR models are a simple compartmental model for the spread of infectious diseases. The standard SIR model has an infection rate, , and recovery rate, . The population consists of three states: susceptible (), infected () and recovered (). The standard SIR model is defined by:

| (7) |

The assumed DGP is a stochastic extension of the deterministic SIR model with a time-varying infection rate, . This allows the SIR model to better capture heterogeneous disease outcomes, virus mutations and mitigation strategies (Spannaus et al., 2022). In addition to the equations in 7, the assumed SIR model is also parameterised by a time-varying effective reproduction number, ,

where is the reversion to , is the volatility and is Brownian motion. We set and as in Ward et al. (2022), and use priors, and . We use and for all observed data. The assumed DGP is run for 365 days, and only the daily number of infected individuals is considered. The initial number of infected individuals is 100. The infected counts are scaled by 100,000 to represent a larger population. A visualisation of the observed DGP is shown in Appendix D.

The true DGP has a reporting lag in recorded infections. Weekend days have the number of recorded infections reduced by 5%, being recorded on Monday, which sees an increase of 10%. Six summary statistics are considered: mean, median, max, max day (day of max infections), half day (day when half of the total infections was reached), and the autocorrelation of lag 1.

The model is misspecified, as the assumed SIR model cannot replicate the observed autocorrelation summary. We want our robust algorithm to detect misspecification in the autocorrelation summary and deliver useful inferences. We consider a specific example where the observed autocorrelation is 0.9957. Under the assumed DGP, the simulated autocorrelation is tightly centred around 0.9997. Despite the seemingly minor difference between the observed and simulated summaries, the observed summary lies far in the tails post standardisation.

As shown in Figure 7, RSNL produces useful inference with high density around the true parameters. Inspecting the adjustment parameters in Figure 8, we can see that the misspecification has been detected for the autocorrelation summary statistic and adjusted accordingly. Consequently, the modeller can further refine the simulation model to better capture the observed autocorrelation. This refinement could be achieved by recognising that the assumed DGP fails to account for the reporting lag evident in the observed data. RNPE behaves similarly to RSNL and concentrates around the true data-generating parameters. SNL, however, focuses overconfidently in an inconsequential region of the parameter space.

As the main computational cost in this example is to run the SIR simulations, there is negligible impact from the addition of adjustment parameters. In the example considered in Figures 7 and 8, SNL and RSNL both took approximately 24 hours.

4.5 Toad movement model

We consider here the animal movement model by Marchand et al. (2017) to simulate the dispersal of Fowler’s toads (Anaxyrus fowleri). This is an individual-based model that encapsulates two main behaviours that have been observed in amphibians: high site fidelity and a small possibility of long-distance movement. The assumed behaviour is for each toad to act independently, stay at a refuge site during the day and move to forage at night. After foraging, the toad either stays in its current location or returns to a previous refuge site. A toad returning to a previous refuge site occurs with a constant probability . We concentrate on “model 2” in Marchand et al. (2017), which models the behaviour of returning to its nearest previous refuge. This specific model was chosen as there is evidence of model misspecification, allowing us to assess our method on a misspecified example with real data.

The dispersal distance is modelled using a Lévy alpha-stable distribution, parameterised by a stability factor and scale factor . This distribution was chosen for its heavy tails, which allow for occasional long-distance movement while still being symmetric around zero. Although the Lévy alpha-stable distribution lacks a closed form, it is straightforward to simulate. Thus the model is governed by three parameters: . We assume the following uniform prior distributions for the model parameters: and .

The Marchand et al. (2017) GPS data was collected from 66 individual toads, with the daytime location (i.e. while resting refuge) being recorded. The number of recorded days varied across toads, with a maximum of 63 days. The two-dimensional GPS data is converted to a one-dimensional movement component, resulting in an observed matrix. The observed matrix was summarised using four sets of displacement vectors with time lags of 1, 2, 4 and 8 days. For each lag, the number of absolute displacements less than 10m, the median of the absolute displacements greater than 10m, and the log difference of the of the absolute displacements greater than 10m are calculated, resulting in a total of 48 summary statistics (12 for each time lag).

In addition to SNL and RNPE, we evaluate our method against RBSL, as we are assessing performance on the real observed data, and the ground truth is unavailable for direct comparison. We examined plots for RSNL, SNL, RNPE and RBSL using real and simulated data from the toad example. In Figure 9, the estimated model parameter posteriors are conditioned on real observed summary statistics, with RBSL as the baseline for comparison with RSNL. The marginal plots reveal that RSNL closely resembles RBSL, while SNL differs, showing minimal density around the RSNL and RBSL maximum a posteriori estimates. RNPE also gives similar marginal posteriors to RSNL and RBSL. The 95% credible intervals for each parameter are displayed in Table 1. However, when considering simulated data (see Appendix D), SNL yields similar inferences to the robust methods. This suggests that SNL’s differing results in the real data scenario arise from incompatible summary statistics.

The most incompatible summary statistics were identified as the number of returns with a lag of 1 and the first quantile differences for lags 4 and 8. These were determined via MCMC output and visual inspection. We depict the posteriors for the adjustment parameters corresponding to these incompatible summaries in Figure 10, alongside the first three posteriors for compatible summaries, which are expected to closely match their priors. This example illustrates the advantage of carefully selecting summary statistics that hold intrinsic meaning for domain experts. For example, the insight that the current model cannot adequately capture the low number of observed toad returns—particularly while also fitting other movement behaviours—has direct meaning to the domain expert modeller, enabling them to refine the model accordingly.

In Figure 11, we present distributions for the log distance travelled by non-returning toads derived from the (pre-summarised) observation matrix. The simulated distances closely align with, and are tightly distributed around, the observed distances. Differences between the simulated and observed log distances appear most noticeable at lags 4 and 8 for shorter distances, aligning with the identified incompatible summaries. Figure 12 displays boxplots for the number of returns across the four lags. As confirmed by the first incompatible summary, the model has difficulties replicating the observed number of toad returns while accurately capturing other summary statistics. Overall, the posterior predictive distributions largely agree with observed data, with discrepancies coinciding with the incompatible summaries identified.

For the toad movement model, as the focus is on the performance of the methods on real data (with no ground-truth parameter), we instead consider the posterior predictive distribution. To compare predictive performance across methods, we computed the MMD between the observed summary statistic and samples from the posterior predictive, as presented in Table 1. Implementation details for the MMD can be found in Appendix D. We highlight that RSNL has a lower discrepancy than SNL and similar results to RNPE. While RBSL is the best-performing method in this example, it requires orders of magnitude more model simulations. We also note that the most incompatible summary statistics identified via the adjustment parameters (see results in Appendix D) agree with those found in Frazier & Drovandi (2021).

| Method | Number simulations | (2.5% - 97.5%) | (2.5% - 97.5%) | (2.5% - 97.5%) | MMD |

|---|---|---|---|---|---|

| RSNL | 10000 | (1.28 - 1.77) | (35.21 - 47.34) | (0.61 - 0.76) | 0.006 |

| SNL | 10000 | (1.63 - 1.95) | (44.61 - 53.36) | (0.63 - 0.74) | 0.015 |

| RNPE | 10000 | (1.28 - 1.84) | (31.45 - 48.68) | (0.60 - 0.80) | 0.007 |

| RBSL-M | 25000000 | (1.35 - 1.80) | (35.67 - 47.48) | (0.59 - 0.73) | 0.001 |

5 Discussion

In this work, we introduced RSNL, a robust neural SBI method that detects and mitigates the impact of model misspecification, making it the first method of its kind that uses a surrogate likelihood or sequential sampling. RSNL demonstrated robustness to model misspecification and efficient inference on several illustrative examples.

RSNL provides useful inference with significantly fewer simulation calls than ABC and BSL methods. For instance, only 10,000 model simulations were needed for the RSNL posterior in the contaminated normal model, while RBSL in Frazier & Drovandi (2021) required millions of simulations. A more comprehensive comparison, such as the benchmarks in Lueckmann et al. (2021), could assess the robustness of ABC, BSL, and neural SBI methods to model misspecification and evaluate their performance across different numbers of simulations. Ideally, such a benchmark would include challenging real-world data applications, showcasing the utility of these methods for scientific purposes.

In this paper, we consider summaries that are carefully specified by the modeller, as opposed to neural networks or algorithms that learn the summaries (e.g. via an autoencoder (Albert et al., 2022)). While learnt summaries can be valuable, including addressing model misspecification (Huang et al., 2023), interpretable summary statistics chosen by domain experts to be meaningful to them are often crucial to model development. We see model fitting under misspecification as having two main functions. The first is to minimise harm from fitting a misspecified model, where inaccurate uncertainty quantification will lead the domain expert to extract misleading insights into the phenomena of interest. The second is to enable more meaningful model criticism allowing a better model to be developed. For the first function, the purpose of the model must be taken into account, and this can take the form of deciding which of a set of interpretable summaries it is important to match in the application. For the second function, knowing which of a set of interpretable summary statistics cannot be matched is insightful to experts for the purpose of model refinement and improvement. It needs to be clarified how current methods for obtaining learnt summary statistics allow the modeller to refine the current model further. However, our adjustment parameter approach could also be applied when the summaries are learnt.

Our relevant form of model misspecification, incompatibility, could be interpreted as an issue of OOD data. OOD data refers to data drawn from a different distribution than the one used to train the neural network. It is an important issue in the ML community to detect the presence of OOD data (Hendrycks & Gimpel, 2017; Yang et al., 2022). Normalising flows struggle with OOD data (Kirichenko et al., 2020). Mechanically, this is exactly what happens when we train a surrogate normalising flow using simulated data from the misspecified model and evaluate it on the observed data from reality. Favourable results across numerous neural SBI methods have been achieved in Cannon et al. (2022) using OOD detection methods based on ensemble posteriors (Lakshminarayanan et al., 2017) and sharpness-aware minimisation (Foret et al., 2021). Combining these OOD methods with adjustment parameters could enhance their benefits. Another strategy to counteract the effects of OOD data that has been considered in a non-SBI context involves employing a physics-based correction to the latent distribution of the conditional normalising flow that learns the posterior (Siahkoohi et al., 2021; 2023).

We highlight one example where the model misspecification is not incompatible summaries but rather an inappropriate choice of summary statistics. Consider the contaminated normal described in Section 4 but with only the sample mean. The sample mean is a sufficient and compatible summary statistic, and we can match it with the assumed univariate normal distribution despite it being generated from two normal distributions with different standard deviations. So, we can have compatible summaries where the assumed DGP misrepresents reality. However, in such instances, we can expect SBI algorithms to be “well-behaved” in that they will produce meaningful inference on the unknown model parameters (Frazier et al., 2020b; David T. Frazier & Kohn, 2023). It is difficult to derive the same theoretical backing for neural SBI methods as has been done for ABC and synthetic likelihood; however, given the expressive power of normalising flows (Papamakarios et al., 2021), we may expect similar results to hold when the observed data is “in-distribution” of the simulated data. This stands in contrast to the case of incompatibility, where it has already been observed that various SBI methods, such as synthetic likelihood (Frazier et al., 2021), and neural methods, such as SNL (Cannon et al., 2022), can give nonsensical results under incompatibility. In this described instance, looking at more detailed features of the full data would have revealed the deficiencies of the assumed DGP. In general, the modeller can use the posterior predictive distribution to generate the full data and probe for any aspects the assumed model is unable to explain (e.g. the log-distance plots for the toad movement model in Figure 11). This highlights the importance of judiciously selecting summary statistics when building models in SBI. Ideally, the summaries would be selected to capture key aspects of the data (Lewis et al., 2021), and there is a broad literature on choosing appropriate summaries (Prangle, 2018). Our proposed method would assist in selecting summaries, as it ensures more reliable inference and provides diagnostics when the summaries are incompatible.

RBSL-M accounts for the different summary scales and the fact that these scales could be -dependent by adjusting the mean using , where is a vector of estimated standard deviations of the model summaries at . RBSL estimates these standard deviations from the model simulations generated based on . Analogously, we could consider a similar approach in RSNL and define the target

The question then becomes, how do we estimate in the context of RSNL? In the MCMC phase, we do not want to generate more model simulations as this would be costly. If we believed that the standard deviation of the model summaries had little dependence on , we could set where is some reasonable point estimate of the parameter. Another approach would, for each proposed in the MCMC, estimate using surrogate model simulations generated using the fitted normalising flow. This would be much faster than actual model simulations but could still slow down the MCMC phase substantially. Instead of using a normalising flow, we could train a mixture density network (Bishop, 1994) to emulate the likelihood, which would then lead to an analytical expression for . A multivariate mixture density network could replace the flow completely, or the multivariate flow for the joint summary could be retained and a series of univariate mixture density networks applied to each summary statistic for the sole purpose of emulating . We plan to investigate these options in future research.

The introduction of adjustment parameters in RSNL might raise concerns about introducing noise into the estimated posterior. However, our empirical findings indicate that the impact of this noise is negligible, particularly when using our chosen prior. This observation aligns with the RBSL results presented in Frazier & Drovandi (2021). Furthermore, Hermans et al. (2022) noted that SBI methods, including SNL, often produce overconfident posterior approximations. Thus, it is unlikely that the minor noise introduced by adjustment parameters would lead to excessively conservative posterior estimates. Recent work has proposed solutions to neural SBI overconfident posterior approximations (Delaunoy et al., 2022; 2023; Falkiewicz et al., 2023). It would be interesting to see if these methods could be combined with our proposed method to have both correctly calibrated posteriors and robustness to model misspecification, however this is beyond the scope here.

Evaluating misspecified summaries can be done by comparing the prior and posterior densities for each component of . In our examples, we used visual inspection for this purpose. However, for cases with a large number of summaries, this method may become cumbersome. Instead, an automated approach could be implemented to streamline the process and efficiently identify misspecified summaries. While the posteriors of the adjustment parameters can be used to diagnose misspecification, RSNL lacks many of the diagnostic tools available to amortised methods (e.g.Talts et al. (2018); Hermans et al. (2022)) due to its sequential sampling scheme.

There are two primary scenarios where our proposed prior may not be suitable. First, if a summary is incompatible but becomes very close to 0 after standardisation, this seems unlikely but may occur when simulated summaries generate a complex multi-modal distribution at a specific parameter value. However, flow-based neural likelihood methods generally exhibit poor performance in such scenarios (Glaser et al., 2023). In this case, RSNL will behave similarly to SNL for that particular summary. Second, a summary is correctly specified but lies in the tails. This is also unlikely and would increase noise introduced by the adjustment parameters. If concerns arise, researchers can visualise the summary statistic plots generated at a reasonable model parameter point or examine posterior predictive distributions. If necessary, an alternative prior can be employed.

The choice of was found to be crucial in practice. Our prior choice was based on the dual requirements to minimise noise introduced by the adjustment parameters if the summaries are compatible and to be capable of shifting the summary a significant distance from the origin if they are incompatible. The horseshoe prior is an appropriate choice for these requirements. Further work could consider how to implement this robustly in a NUTS sampler. Another approach is the spike-and-slab prior as in Ward et al. (2022). This type of prior is a mixture of two distributions: one that encourages shrinkage (the spike) and another that allows for a wider range of values (the slab). Further research is needed to determine the most appropriate prior choice for RSNL and similar methods, which could involve a comparative study of different prior choices and their effects on the robustness and efficiency of the resulting inference.

Modellers constructing complex DGPs for real-world data should address model misspecification. The machine learning and statistics community must develop tools for practitioners to conduct neural SBI methods without producing misleading results under model misspecification. We hope that our proposed method contributes to the growing interest in addressing model misspecification in SBI.

References

- Albert et al. (2022) Carlo Albert, Simone Ulzega, Firat Ozdemir, Fernando Perez-Cruz, and Antonietta Mira. Learning summary statistics for Bayesian inference with autoencoders. SciPost Physics Core, 5(3):043, 2022.

- Andrieu & Roberts (2009) Christophe Andrieu and Gareth O. Roberts. The pseudo-marginal approach for efficient Monte Carlo computations. The Annals of Statistics, 37(2):697–725, 2009. URL http://www.jstor.org/stable/30243645.

- Barber et al. (2015) Stuart Barber, Jochen Voss, and Mark Webster. The rate of convergence for approximate Bayesian computation. Electronic Journal of Statistics, 9(1):80–105, 2015. doi: 10.1214/15-EJS988. URL https://doi.org/10.1214/15-EJS988.

- Beaumont (2010) Mark A. Beaumont. Approximate Bayesian computation in evolution and ecology. Annual Review of Ecology, Evolution, and Systematics, 41(1):379–406, 2010. ISSN 1543-592X. doi: 10.1146/annurev-ecolsys-102209-144621. URL https://dx.doi.org/10.1146/annurev-ecolsys-102209-144621.

- Bernaerts et al. (2023) Yves Bernaerts, Michael Deistler, Pedro J Goncalves, Jonas Beck, Marcel Stimberg, Federico Scala, Andreas S Tolias, Jakob H Macke, Dmitry Kobak, and Philipp Berens. Combined statistical-mechanistic modeling links ion channel genes to physiology of cortical neuron types. bioRxiv, pp. 2023–03, 2023.

- Bishop (1994) Christopher M. Bishop. Mixture density networks. Technical Report, Aston University, 1994.

- Bissiri et al. (2016) Pier Giovanni Bissiri, Chris C Holmes, and Stephen G Walker. A general framework for updating belief distributions. Journal of the Royal Statistical Society Series B: Statistical Methodology, 78(5):1103, 2016.

- Boelts et al. (2022) Jan Boelts, Jan-Matthis Lueckmann, Richard Gao, and Jakob H Macke. Flexible and efficient simulation-based inference for models of decision-making. eLife, 11:e77220, 2022.

- Bon et al. (2022) Joshua J Bon, David J Warne, David J Nott, and Christopher Drovandi. Bayesian score calibration for approximate models. arXiv preprint arXiv:2211.05357, 2022.

- Bon et al. (2023) Joshua J Bon, Adam Bretherton, Katie Buchhorn, Susanna Cramb, Christopher Drovandi, Conor Hassan, Adrianne L Jenner, Helen J Mayfield, James M McGree, Kerrie Mengersen, et al. Being Bayesian in the 2020s: opportunities and challenges in the practice of modern applied Bayesian statistics. Philosophical Transactions of the Royal Society A, 381(2247):20220156, 2023.

- Bradbury et al. (2018) James Bradbury, Roy Frostig, Peter Hawkins, Matthew James Johnson, Chris Leary, Dougal Maclaurin, George Necula, Adam Paszke, Jake VanderPlas, Skye Wanderman-Milne, and Qiao Zhang. JAX: composable transformations of Python+NumPy programs, 2018. Python package version 0.3.13, URL https://github.com/google/jax.

- Cannon et al. (2022) Patrick Cannon, Daniel Ward, and Sebastian M. Schmon. Investigating the impact of model misspecification in neural simulation-based inference, 2022. URL https://arxiv.org/abs/2209.01845. arXiv preprint arXiv:2209.01845.

- Chérief-Abdellatif & Alquier (2020) Badr-Eddine Chérief-Abdellatif and Pierre Alquier. MMD-Bayes: robust Bayesian estimation via maximum mean discrepancy. In Symposium on Advances in Approximate Bayesian Inference, pp. 1–21. PMLR, 2020.

- Confavreux et al. (2023) Basile Confavreux, Poornima Ramesh, Pedro J. Goncalves, Jakob H. Macke, and Tim P. Vogels. Meta-learning families of plasticity rules in recurrent spiking networks using simulation-based inference. In Thirty-seventh Conference on Neural Information Processing Systems, 2023. URL https://openreview.net/forum?id=FLFasCFJNo.

- Cranmer et al. (2020) Kyle Cranmer, Johann Brehmer, and Gilles Louppe. The frontier of simulation-based inference. Proceedings of the National Academy of Sciences, 117(48):30055–30062, 2020.

- Csilléry et al. (2010) Katalin Csilléry, Michael G.B. Blum, Oscar E. Gaggiotti, and Olivier François. Approximate Bayesian computation (ABC) in practice. Trends in Ecology & Evolution, 25(7):410–418, 2010. ISSN 0169-5347. doi: https://doi.org/10.1016/j.tree.2010.04.001. URL http://www.sciencedirect.com/science/article/pii/S0169534710000662.

- David T. Frazier & Kohn (2023) Christopher Drovandi David T. Frazier, David J. Nott and Robert Kohn. Bayesian inference using synthetic likelihood: asymptotics and adjustments. Journal of the American Statistical Association, 118(544):2821–2832, 2023. doi: 10.1080/01621459.2022.2086132. URL https://doi.org/10.1080/01621459.2022.2086132.

- Delaunoy et al. (2022) Arnaud Delaunoy, Joeri Hermans, François Rozet, Antoine Wehenkel, and Gilles Louppe. Towards reliable simulation-based inference with balanced neural ratio estimation. In Alice H. Oh, Alekh Agarwal, Danielle Belgrave, and Kyunghyun Cho (eds.), Advances in Neural Information Processing Systems, 2022. URL https://openreview.net/forum?id=o762mMj4XK.

- Delaunoy et al. (2023) Arnaud Delaunoy, Benjamin Kurt Miller, Patrick Forré, Christoph Weniger, and Gilles Louppe. Balancing simulation-based inference for conservative posteriors. arXiv preprint arXiv:2304.10978, 2023.

- Dellaporta et al. (2022) Charita Dellaporta, Jeremias Knoblauch, Theodoros Damoulas, and François-Xavier Briol. Robust Bayesian inference for simulator-based models via the MMD posterior bootstrap. In International Conference on Artificial Intelligence and Statistics, pp. 943–970. PMLR, 2022.

- Drovandi & Frazier (2022) Christopher Drovandi and David T Frazier. A comparison of likelihood-free methods with and without summary statistics. Statistics and Computing, 32(3):1–23, 2022.

- Durkan et al. (2019) Conor Durkan, Artur Bekasov, Iain Murray, and George Papamakarios. Neural spline flows. In Advances in Neural Information Processing Systems 32, 2019. URL https://proceedings.neurips.cc/paper/2019/file/7ac71d433f282034e088473244df8c02-Paper.pdf.

- Durkan et al. (2020) Conor Durkan, Iain Murray, and George Papamakarios. On contrastive learning for likelihood-free inference. In The 37-th International Conference on Machine Learning, pp. 2771–2781. PMLR, 13–18 Jul 2020. URL https://proceedings.mlr.press/v119/durkan20a.html.

- Evans & Jang (2011) Michael Evans and Gun Ho Jang. Weak informativity and the information in one prior relative to another. Statistical Science, 26(3):423 – 439, 2011. doi: 10.1214/11-STS357. URL https://doi.org/10.1214/11-STS357.

- Falkiewicz et al. (2023) Maciej Falkiewicz, Naoya Takeishi, Imahn Shekhzadeh, Antoine Wehenkel, Arnaud Delaunoy, Gilles Louppe, and Alexandros Kalousis. Calibrating neural simulation-based inference with differentiable coverage probability. In Thirty-seventh Conference on Neural Information Processing Systems, 2023. URL https://openreview.net/forum?id=wLiMhVJ7fx.

- Ferguson et al. (2020) Neil M Ferguson, Daniel Laydon, Gemma Nedjati-Gilani, Natsuko Imai, Kylie Ainslie, Marc Baguelin, Sangeeta Bhatia, Adhiratha Boonyasiri, Zulma Cucunubá, Gina Cuomo-Dannenburg, et al. Impact of non-pharmaceutical interventions (NPIs) to reduce COVID-19 mortality and healthcare demand. Technical Report, Imperial College COVID-19 Response Team London, 2020. DOI 10.25561/77482.

- Foret et al. (2021) Pierre Foret, Ariel Kleiner, Hossein Mobahi, and Behnam Neyshabur. Sharpness-aware minimization for efficiently improving generalization. In International Conference on Learning Representations, 2021. URL https://openreview.net/forum?id=6Tm1mposlrM.

- Frazier et al. (2018) D T Frazier, G M Martin, C P Robert, and J Rousseau. Asymptotic properties of approximate Bayesian computation. Biometrika, 105(3):593–607, 06 2018. ISSN 0006-3444. doi: 10.1093/biomet/asy027. URL https://doi.org/10.1093/biomet/asy027.

- Frazier & Drovandi (2021) David T. Frazier and Christopher Drovandi. Robust approximate Bayesian inference with synthetic likelihood. Journal of Computational and Graphical Statistics, 30(4):958–976, 2021. doi: 10.1080/10618600.2021.1875839. URL https://doi.org/10.1080/10618600.2021.1875839.

- Frazier et al. (2020a) David T Frazier, Christopher Drovandi, and Ruben Loaiza-Maya. Robust approximate Bayesian computation: an adjustment approach. arXiv preprint arXiv:2008.04099, 2020a.

- Frazier et al. (2020b) David T. Frazier, Christian P. Robert, and Judith Rousseau. Model misspecification in approximate Bayesian computation: consequences and diagnostics. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 82(2):421–444, 2020b. doi: https://doi.org/10.1111/rssb.12356. URL https://rss.onlinelibrary.wiley.com/doi/abs/10.1111/rssb.12356.

- Frazier et al. (2021) David T Frazier, Christopher Drovandi, and David J Nott. Synthetic likelihood in misspecified models: consequences and corrections. arXiv preprint arXiv:2104.03436, 2021.

- Gabry et al. (2019) Jonah Gabry, Daniel Simpson, Aki Vehtari, Michael Betancourt, and Andrew Gelman. Visualization in Bayesian workflow. Journal of the Royal Statistical Society Series A: Statistics in Society, 182(2):389–402, 01 2019. ISSN 0964-1998. doi: 10.1111/rssa.12378. URL https://doi.org/10.1111/rssa.12378.

- Gao et al. (2023) Richard Gao, Michael Deistler, and Jakob H. Macke. Generalized Bayesian inference for scientific simulators via amortized cost estimation. In Thirty-seventh Conference on Neural Information Processing Systems, 2023. URL https://openreview.net/forum?id=ZARAiV25CW.

- Gelman et al. (2020) Andrew Gelman, Aki Vehtari, Daniel Simpson, Charles C Margossian, Bob Carpenter, Yuling Yao, Lauren Kennedy, Jonah Gabry, Paul-Christian Bürkner, and Martin Modrák. Bayesian workflow. arXiv preprint arXiv:2011.01808, 2020.

- Glaser et al. (2023) Pierre Glaser, Michael Arbel, Arnaud Doucet, and Arthur Gretton. Maximum likelihood learning of energy-based models for simulation-based inference, 2023. URL https://openreview.net/forum?id=gL68u5UuWa.

- Glöckler et al. (2023) Manuel Glöckler, Michael Deistler, and Jakob H. Macke. Adversarial robustness of amortized Bayesian inference. In Proceedings of the 40th International Conference on Machine Learning, ICML’23. JMLR.org, 2023.

- Gneiting & Raftery (2007) Tilmann Gneiting and Adrian E Raftery. Strictly proper scoring rules, prediction, and estimation. Journal of the American statistical Association, 102(477):359–378, 2007.

- Gonçalves et al. (2020) Pedro J Gonçalves, Jan-Matthis Lueckmann, Michael Deistler, Marcel Nonnenmacher, Kaan Öcal, Giacomo Bassetto, Chaitanya Chintaluri, William F Podlaski, Sara A Haddad, Tim P Vogels, et al. Training deep neural density estimators to identify mechanistic models of neural dynamics. Elife, 9:e56261, 2020.

- Greenberg et al. (2019) David Greenberg, Marcel Nonnenmacher, and Jakob Macke. Automatic posterior transformation for likelihood-free inference. In The 36-th International Conference on Machine Learning, pp. 2404–2414. PMLR, 09–15 Jun 2019. URL https://proceedings.mlr.press/v97/greenberg19a.html.

- Gretton et al. (2012) Arthur Gretton, Karsten M. Borgwardt, Malte J. Rasch, Bernhard Schölkopf, and Alexander Smola. A kernel two-sample test. Journal of Machine Learning Research, 13(25):723–773, 2012. URL http://jmlr.org/papers/v13/gretton12a.html.

- Hauenstein et al. (2019) Severin Hauenstein, Julien Fattebert, Martin U. Grüebler, Beat Naef-Daenzer, Guy Pe’Er, and Florian Hartig. Calibrating an individual-based movement model to predict functional connectivity for little owls. Ecological Applications, 29(4):e01873, 2019. ISSN 1051-0761. doi: 10.1002/eap.1873. URL https://dx.doi.org/10.1002/eap.1873.

- Hendrycks & Gimpel (2017) Dan Hendrycks and Kevin Gimpel. A baseline for detecting misclassified and out-of-distribution examples in neural networks. In 5th International Conference on Learning Representations, ICLR 2017, Toulon, France, April 24-26, 2017, Conference Track Proceedings. OpenReview.net, 2017. URL https://openreview.net/forum?id=Hkg4TI9xl.

- Hermans et al. (2020) Joeri Hermans, Volodimir Begy, and Gilles Louppe. Likelihood-free MCMC with amortized approximate ratio estimators. In The 37-th International Conference on Machine Learning, pp. 4239–4248. PMLR, 13–18 Jul 2020. URL https://proceedings.mlr.press/v119/hermans20a.html.

- Hermans et al. (2021) Joeri Hermans, Nilanjan Banik, Christoph Weniger, Gianfranco Bertone, and Gilles Louppe. Towards constraining warm dark matter with stellar streams through neural simulation-based inference. Monthly Notices of the Royal Astronomical Society, 507(2):1999–2011, 08 2021. ISSN 0035-8711. doi: 10.1093/mnras/stab2181. URL https://doi.org/10.1093/mnras/stab2181.

- Hermans et al. (2022) Joeri Hermans, Arnaud Delaunoy, François Rozet, Antoine Wehenkel, Volodimir Begy, and Gilles Louppe. A crisis in simulation-based inference? Beware, your posterior approximations can be unfaithful. Transactions on Machine Learning Research, 2022. ISSN 2835-8856. URL https://openreview.net/forum?id=LHAbHkt6Aq.

- Hoffman & Gelman (2014) Matthew Hoffman and Andrew Gelman. The No-U-Turn Sampler: adaptively setting path lengths in Hamiltonian Monte Carlo. Journal of Machine Learning Research, 15(1):1593–1623, 2014. ISSN 1532-4435.

- Huang et al. (2023) Daolang Huang, Ayush Bharti, Amauri H Souza, Luigi Acerbi, and Samuel Kaski. Learning robust statistics for simulation-based inference under model misspecification. In Thirty-seventh Conference on Neural Information Processing Systems, 2023. URL https://openreview.net/forum?id=STrXsSIEiq.

- Hunter (2007) John D Hunter. Matplotlib: a 2D graphics environment. Computing in science & engineering, 9(03):90–95, 2007.

- Hyndman (1996) Rob J. Hyndman. Computing and graphing highest density regions. The American Statistician, 50(2):120–126, 1996. doi: 10.1080/00031305.1996.10474359. URL https://www.tandfonline.com/doi/abs/10.1080/00031305.1996.10474359.

- Kelly (2022) Ryan P. Kelly. Implementing Bayesian synthetic likelihood within the engine for likelihood-free inference, 2022. URL https://eprints.qut.edu.au/233759/. Master of Philosophy Thesis. Queensland University of Technology.

- Kidger (2021) Patrick Kidger. On neural differential equations. PhD thesis, University of Oxford, 2021.

- Kingma & Ba (2015) Diederik P. Kingma and Jimmy Ba. Adam: a method for stochastic optimization. In Yoshua Bengio and Yann LeCun (eds.), The 3rd International Conference on Learning Representations, ICLR, 2015. URL http://arxiv.org/abs/1412.6980.

- Kirichenko et al. (2020) Polina Kirichenko, Pavel Izmailov, and Andrew Gordon Wilson. Why normalizing flows fail to detect out-of-distribution data. In Advances in Neural Information Processing Systems 34, 2020.

- Kleijn & van der Vaart (2012) B.J.K. Kleijn and A.W. van der Vaart. The Bernstein-Von-Mises theorem under misspecification. Electronic Journal of Statistics, 6(none):354 – 381, 2012. doi: 10.1214/12-EJS675. URL https://doi.org/10.1214/12-EJS675.

- Knoblauch et al. (2022) Jeremias Knoblauch, Jack Jewson, and Theodoros Damoulas. An optimization-centric view on Bayes’ rule: reviewing and generalizing variational inference. Journal of Machine Learning Research, 23(132):1–109, 2022.

- Kumar et al. (2019) Ravin Kumar, Colin Carroll, Ari Hartikainen, and Osvaldo Martin. ArviZ a unified library for exploratory analysis of Bayesian models in Python. Journal of Open Source Software, 4(33):1143, 2019. doi: 10.21105/joss.01143. URL https://doi.org/10.21105/joss.01143.

- Lakshminarayanan et al. (2017) Balaji Lakshminarayanan, Alexander Pritzel, and Charles Blundell. Simple and scalable predictive uncertainty estimation using deep ensembles. In Advances in Neural Information Processing Systems 31, 2017.

- Lewis et al. (2021) John R. Lewis, Steven N. MacEachern, and Yoonkyung Lee. Bayesian restricted likelihood methods: conditioning on insufficient statistics in Bayesian regression (with discussion). Bayesian Analysis, 16(4):1393–2854, 2021. doi: 10.1214/21-BA1257. URL https://doi.org/10.1214/21-BA1257.

- Li & Fearnhead (2018) Wentao Li and Paul Fearnhead. On the asymptotic efficiency of approximate Bayesian computation estimators. Biometrika, 105(2):285–299, 01 2018. ISSN 0006-3444. doi: 10.1093/biomet/asx078. URL https://doi.org/10.1093/biomet/asx078.

- Lintusaari et al. (2018) J Lintusaari, H Vuollekoski, A Kangasrääsiö, K Skytén, M Järvenpää, P Marttinen, M Gutmann, A Vehtari, J Corander, and S Kaski. ELFI: Engine for likelihood-free inference. Journal of Machine Learning Research, 19(1), 2018. doi: 10.5555/3291125.3291141. URL https://dx.doi.org/10.5555/3291125.3291141.

- Lopez-Paz & Oquab (2017) David Lopez-Paz and Maxime Oquab. Revisiting classifier two-sample tests. In International Conference on Learning Representations, 2017. URL https://openreview.net/forum?id=SJkXfE5xx.

- Lueckmann et al. (2021) Jan-Matthis Lueckmann, Jan Boelts, David Greenberg, Pedro Goncalves, and Jakob Macke. Benchmarking simulation-based inference. In The 24-th International Conference on Artificial Intelligence and Statistics, pp. 343–351. PMLR, 13–15 Apr 2021. URL https://proceedings.mlr.press/v130/lueckmann21a.html.

- Marchand et al. (2017) Philippe Marchand, Morgan Boenke, and David M. Green. A stochastic movement model reproduces patterns of site fidelity and long-distance dispersal in a population of Fowler’s toads (Anaxyrus fowleri). Ecological Modelling, 360:63–69, 2017. ISSN 0304-3800. doi: 10.1016/j.ecolmodel.2017.06.025. URL https://dx.doi.org/10.1016/j.ecolmodel.2017.06.025.

- Marin et al. (2014) Jean-Michel Marin, Natesh S Pillai, Christian P Robert, and Judith Rousseau. Relevant statistics for Bayesian model choice. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 76(5):833–859, 2014.

- Matsubara et al. (2022) Takuo Matsubara, Jeremias Knoblauch, François-Xavier Briol, and Chris J Oates. Robust generalised Bayesian inference for intractable likelihoods. Journal of the Royal Statistical Society Series B: Statistical Methodology, 84(3):997–1022, 2022.

- Numminen et al. (2013) Elina Numminen, Lu Cheng, Mats Gyllenberg, and Jukka Corander. Estimating the transmission dynamics of Streptococcus pneumoniae from strain prevalence data. Biometrics, 69(3):748–757, 2013. doi: https://doi.org/10.1111/biom.12040. URL https://onlinelibrary.wiley.com/doi/abs/10.1111/biom.12040.

- Pacchiardi & Dutta (2021) Lorenzo Pacchiardi and Ritabrata Dutta. Generalized Bayesian likelihood-free inference using scoring rules estimators. arXiv preprint arXiv:2104.03889, 2021.

- Pacchiardi & Dutta (2022) Lorenzo Pacchiardi and Ritabrata Dutta. Score matched neural exponential families for likelihood-free inference. Journal of Machine Learning Research, 23, 2022. ISSN 1532-4435.

- Papamakarios & Murray (2016) George Papamakarios and Iain Murray. Fast -free inference of simulation models with Bayesian conditional density estimation. In Advances in Neural Information Processing Systems 29, 2016. URL https://proceedings.neurips.cc/paper/2016/file/6aca97005c68f1206823815f66102863-Paper.pdf.

- Papamakarios et al. (2019) George Papamakarios, David Sterratt, and Iain Murray. Sequential neural likelihood: fast likelihood-free inference with autoregressive flows. In The 22nd International Conference on Artificial Intelligence and Statistics, pp. 837–848. PMLR, 2019. URL https://proceedings.mlr.press/v89/papamakarios19a.html.

- Papamakarios et al. (2021) George Papamakarios, Eric Nalisnick, Danilo Jimenez Rezende, Shakir Mohamed, and Balaji Lakshminarayanan. Normalizing flows for probabilistic modeling and inference. Journal of Machine Learning Research, 22(57), 2021. ISSN 1532-4435.

- Park et al. (2016) Mijung Park, Wittawat Jitkrittum, and Dino Sejdinovic. K2-ABC: approximate Bayesian computation with kernel embeddings. In Arthur Gretton and Christian C. Robert (eds.), Proceedings of the 19th International Conference on Artificial Intelligence and Statistics, volume 51 of Proceedings of Machine Learning Research, pp. 398–407, Cadiz, Spain, 09–11 May 2016. PMLR. URL https://proceedings.mlr.press/v51/park16.html.

- Phan et al. (2019) Du Phan, Neeraj Pradhan, and Martin Jankowiak. Composable effects for flexible and accelerated probabilistic programming in NumPyro. In Program Transformations for ML Workshop at NeurIPS 2019, 2019. URL https://openreview.net/forum?id=H1g1niFhIB.

- Prangle (2018) Dennis Prangle. Summary statistics. In Handbook of Approximate Bayesian Computation, pp. 125–152. Chapman and Hall/CRC, 2018.