MisMatch: Calibrated Segmentation via Consistency on Differential Morphological Feature Perturbations with Limited Labels

Abstract

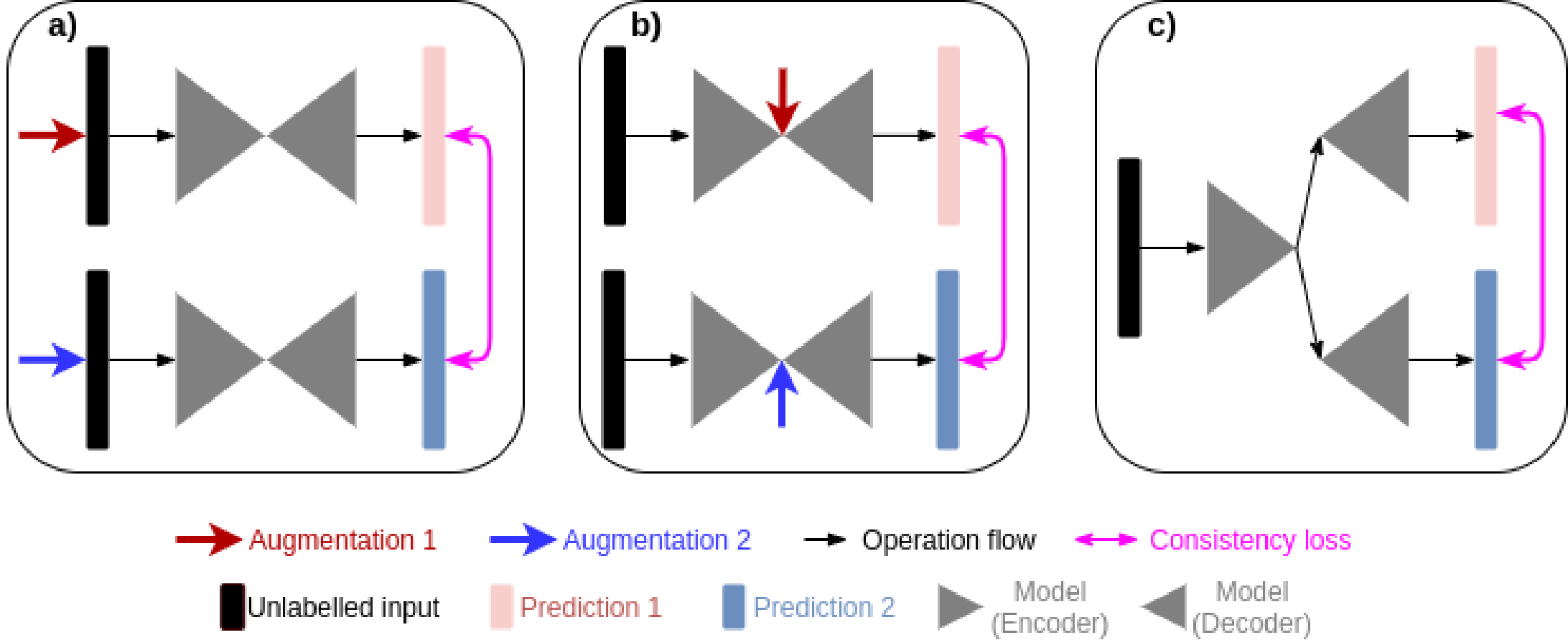

Semi-supervised learning (SSL) is a promising machine learning paradigm to address the ubiquitous issue of label scarcity in medical imaging. The state-of-the-art SSL methods in image classification utilise consistency regularisation to learn unlabelled predictions which are invariant to input level perturbations. However, image level perturbations violate the cluster assumption in the setting of segmentation. Moreover, existing image level perturbations are hand-crafted which could be sub-optimal. In this paper, we propose MisMatch, a semi-supervised segmentation framework based on the consistency between paired predictions which are derived from two differently learnt morphological feature perturbations. MisMatch consists of an encoder and two decoders. One decoder learns positive attention for foreground on unlabelled data thereby generating dilated features of foreground. The other decoder learns negative attention for foreground on the same unlabelled data thereby generating eroded features of foreground. We normalise the paired predictions of the decoders, along the batch dimension. A consistency regularisation is then applied between the normalised paired predictions of the decoders. We evaluate MisMatch on four different tasks. Firstly, we develop a 2D U-net based MisMatch framework and perform extensive cross-validation on a CT-based pulmonary vessel segmentation task and show that MisMatch statistically outperforms state-of-the-art semi-supervised methods. Secondly, we show that 2D MisMatch outperforms state-of-the-art methods on an MRI-based brain tumour segmentation task. We then further confirm that 3D V-net based MisMatch outperforms its 3D counterpart based on consistency regularisation with input level perturbations, on two different tasks including, left atrium segmentation from 3D CT images and whole brain tumour segmentation from 3D MRI images. Lastly, we find that the performance improvement of MisMatch over the baseline might originate from its better calibration. This also implies that our proposed AI system makes safer decisions than the previous methods.

Semi-supervised segmentation, Calibration, Differential Morphological Augmentations, Consistency Regularisation

1 Introduction

Training of deep learning models requires a large amount of labelled data. However, in applications such as in medical image analysis, anatomic/pathologic labels are prohibitively expensive and time-consuming to obtain, with the result that label scarcity is almost inevitable. Advances in the medical image analysis field requires the development of label efficient deep learning methods and accordingly, semi-supervised learning (SSL) has become a major research interest within the community. Among the myriad SSL methods used, consistency regularisation based methods have achieved the state-of-the art in classification [1, 2, 3, 4], thus we focus on this genre in this paper.

Existing consistency regularisation methods [1, 2, 3, 4, 6, 7, 5, 8] are mainly focusing on producing predictions which are invariant against different input level perturbations. In other words, we can interpret that consistency regularisation methods aim at training networks which generate confidence invariant predictions. For example, if we apply weak augmentation such as flipping on an input image, the model will assign a high probability of this image belonging to its correct label, hence, the prediction of the weakly augmented image is with high confidence; if we apply strong augmentation such as rotation on an input image, then the testing is much more difficult and the model might assign a low probability of this image to its correct label, therefore, such a prediction of a strongly augmented image is with low confidence. A consistency regularisation is enforced to align the paired predictions. The relationship between consistency regularisation and confidence invariant predictions imply that such networks should be having better calibration, which will be empircally verified in section 8. However, data augmentation techniques used in existing semi-supervised learning are typically hand-crafted which might be sub-optimal. Practically, such augmentation techniques are not adaptive across pixels which may be problematic as spatial correlations amongst pixels are crucial for segmentation, e.g. neighbouring pixels might belong to the same category. Most importantly, direct adaption of input level perturbations in segmentation violates the cluster assumption which is the foundation of semi supervised learning, we will explain this issue further in later section 3.

In this paper, we propose an end-to-end learning framework to generate predictions with different confidences. In order to change prediction confidences at a pixel-wise level in a realistic way, we use two different attention mechanisms to respectively dilate and erode foreground features which correspond to the areas of “ground truth”. A preliminary version of this manuscript has been presented at MIDL 2022 [9]. Comparing to the previous MIDL version, we now included extra experiments on two 3D data sets using a different base network; a more detailed explanation of the motivation; a more principled method section under the guidance of the theory of effective receptive field. The code is here: https://github.com/moucheng2017/MisMatchSSL. Our contributions are summarised as:

-

•

We provide an intuition of the relationship between consistency regularisation and semi-supervised learning, and why consistency regularisation with data augmentation wouldn’t work well in segmentation.

-

•

We propose a framework called MisMatch for semi supervised segmentation, by combining differential morphological feature perturbations with consistency regularisation.

-

•

We discovered that our consistency regularisation improves model calibration, leading to safer AI deployment for medicine.

-

•

We intensively evaluated our framework on four medical applications including: 1) 2D segmentation of lung vessel of CT images; 2) 2D segmentation of brain tumour of MR images; 3) 3D segmentation of left atrium of MR images; 4) 3D segmentation of whole tumour from MRI images. We conclude that our consistency regularisation on feature perturbations is more effective than consistency on input level perturbations.

2 Related work

Popular classes of common SSL methods have been compared on a benchmark in [10]. A direct application of smoothness assumption is called label propagation which propagate the labels to unlabelled data according to the similarity between labelled and unlabelled data [11], obviously, those similarity graphs need computationally heavy Laplacian matrices which encounter scalability issue. Another common method is called entorpy minimisation method which drives models to attain low entropy predictions on unlabelled data [12] [13]. One drawback of entropy minimisation method is the risk at overfitting leading to wrong decision boundary for data points close to low density regions (see Appendix E in [10]). Other attempts include generative models such as the one in [14] which combines GAN in training, suffering from unstable training. The state-of-the-art methods are dominated by consistency regularisation methods becuase they are easy to use and effective across different tasks. Of the consistency regularisation methods, Mean-Teacher [1] is the most representative example, containing two identical models which are fed with inputs augmented with different Gaussian noises. The first model learns to match the target output of the second model, while the second model uses an exponentially moving average of parameters of the first model. One of the state-of-the-art SSL methods [3] [2] combines entropy minimisation and consistency regularisation.

SSL in segmentation In semi-supervised image segmentation, consistency regularisation is commonly used [15] [16] [17] [18] [19] [5] where different data augmentation techniques are applied at the input level. Another related work [8] forces the model to learn rotation invariant predictions. Apart from augmentation at the input level, recently, feature level augmentation has gained popularity for consistency based SSL segmentation [6, 7]. There also have been attempts of creating perturbations via using dual network branch [20] [21]. Different from [20] and [21], the perturbations we use are also learnt via network itself. Apart from consistency regularisation methods in medical imaging, there also have been other attempts, including the use of generative models for creating pseudo data points for training [22] [23] and different auxiliary tasks as regularisation [24] [25]. Since our method is a new consistency regularisation method, we focus on comparing with state-of-the-art consistency regularisation methods.

3 Motivations

Cluster assumption In this section, we will explain the cluster assumption for semi-supervised classification and how it is violated if we straightforwardly transfer existing consistency regularisation methods from classification to segmentation. The cluster assumption is a variant of smoothness assumption. The smoothness assumption states that if two data points ( and ) are adjacent to each other, their outputs or labels ( and ) should also be close to each other. The cluster assumption directly derives from the smoothness assumption, for example, if there is a dense population of data points in a space, then highly likely that cluster of those densely neighbouring data points are in the same class. In other words, the cluster assumptions implies there exists low density regions among different classes or different clusters of data points and the correct decision boundary should lie at the low-density regions. Equivalently, the key is to find the low-density regions which leads to rightful decision boundary.

Consistency with Data Augmentation in Classification We start with a classical two moon example to explain how consistency regularisation with data augmentation works in semi-supervised classification. Each moon represents a class and each dot represents an image for semi-supervised classification. As shown in the two moons example in Fig. 2(a), if there are very limited labelled data points such as two data points, any decision boundary between the two labelled data points is possible, for example, the examplar decision boundary shown in Fig. 2(a) can wrongly classify half of the data points. The two moon example in Fig. 2(a) and (b) is also a perfect example for cluster assumption that the low density region between the two moons can separate the two moons from each other. In Fig. 2(b), let’s focus on the two images and which are from upper moon class and lower moon class respectively. If we apply two random augmentations (directional arrows in Fig. 2(b)) on the images, we will get and from , and from . Since is closer to the low-density region, the augmented could across the decision boundary thereby could be wrongly classified as the lower moon class, meanwhile, still stays in the cluster of upper moon class. In this case, although they are derived from the same data point . The difference between and will be more than 0 which can be back-propagated to optimise the model parameters. On the contrary, the image is closer to the centre of the cluster of lower moon class, that and are the same, resulting in 0 differences which does not affect the model parameters. Hence, it is easy to tell that the consistency regularisation with data augmentation makes the model parameters sensitive to the images closer to the low-density regions. This property will naturally drive the model to locate the low-density regions which happen to be the correct decision boundary.

Consistency with Data Augmentation in Segmentation However, consistency regularisation with data augmentation will have clear limitations in segmentation. In segmentation, as shown in Fig. 2(c), now we have each dot as a pixel and all of the pixels are densely distributed across the image space. In Fig. 2(c) and (d), we highlight the object boundary with continuous red and blue dots along the two sides of the boundary respectively. As there are hardly low-density regions between objects, it becomes hard to align the objects boundaries with low-density regions. If we have only two labelled pixels from each class, we will not be able to locate the correct decision boundary as illustrated in Fig. 2(c). If we apply two different augmentations on and with consistency regularisation as shown in Fig. 2(d), although the model can still locate the pixels which are sensitive to the consistency regularisation, due to the lack of clear low-density regions, the model will not correctly locate the right decision boundaries.

Practical Limitations of Strong Data Augmentations in Segmentation Common strong data augmentation techniques typically distort the spatial characterisation of the objects such as shearing.

As shown in Fig.3, the image-wise label stay the same, regardless of the data augmentation is applied. However, strong data augmentation will modify the pixel-wise labels, leading to difficulty of applying consistency regularisation at pixel-wise if two different strong data augmentations are applied on the same image. To avoid this practical issue, specific strong data augmentation such as CutMix was chosen in order to use consistency regularisation in segmentation [5]. In our paper, we propose an alternative solution. We use augmentation at the feature level in lieu of augmentation of the data level, to completely avoid this practical issue.

Proposal Although the low-density regions do not align with the objects boundaries anymore, a few evidences in [6, 5] suggested that the low-density regions actually align well with the objects boundaries in the feature space. Meaning that it might be possible to use consistency regularisation on the predictions which are invariant to feature perturbations to identify the correct decision boundaries in segmentation. This directly inspired us to focus on feature perturbations in our work that we want to design learnable feature perturbations which are realistic and semantically meaningful. More specifically, we decide to apply morphological-alike perturbations on the features. In the following sections, we show how to use inductive biases of neural network topology to ask the networks to end-to-end learn morphological feature perturbations.

4 Methods

4.1 Background: ERF and the foreground

Effective Receptive Field We introduce how to control the size of the foreground features by controlling the effective receptive field (ERF). ERF [26] measures the size of the effective area at the centre of receptive field and it impacts the most on the prediction confidence of the central pixel of the receptive field, which should overlap with the foreground objects with the highest confidence at the foreground central pixel. If we want to apply morphological operations on features of foreground objects, equivalently, we need to adjust the ERF on the foreground. As found in [26], larger ERF means the model can effectively take a larger area of the image into account during inference of decision making, resulting in higher prediction confidence at the centre, meanwhile, smaller ERF leads to less confident prediction on the central pixel due to the lack of visual information of neighbouring pixels. More importantly, ERF is highly affected by the network architecture. In particular, the dilated convolutional layer can increase the ERF to an extent dependent on the dilation rate [26]. Skip-connections conversely can shrink the ERF, though the extent of this effect is as yet unknown [26]. We are therefore inspired by [26] to design a network to control the ERF, in order to deliberately change the prediction confidence to morph the foreground features.

Overview of MisMatch In this paper, we learn to realistically morph the foreground features by controlling the ERF for consistency regularisation. In order to create a paired predictions with different confidences for consistency regularisation, our strategy is to dilate the foreground features and erode the foreground features, we also compare our strategy with other possible strategies in an ablation study in later section 6. As introduced in the last section, the prediction confidence can be affected by the ERF while the ERF is decided by the network topology. More specifically, we use the dilated convolutional layer to raise the ERF on one hand to dilate the foreground features, and we use skip-connections to decrease the ERF on the other hand to erode the features of foreground. However, we do not know how much confidence should be changed at each pixel. To address this, we introduce soft attention mechanism to learn the magnitude of the confidence change for each pixel. Now we introduce how we achieve this in the next section.

Differences between proposed methods and classical morphological operations We also would like to highlight the difference between our approach at feature space and the classical morphological operations at image space. Traditional morphological operations simply remove/add boundary pixels using local neighbouring information which is not differentiable, in contrast, our approach is differentiable and can be fully integrated in neural networks.

4.2 Architecture of Mismatch

As shown in Fig.4, MisMatch is a framework which can be integrated into any encoder-decoder based segmentation architecture. In this section, we use 2D U-net [27] due to its popularity in medical imaging, although later we also have an experiment using a MisMatch based on a 3D V-net. Our U-net based MisMatch (Fig 4) has two components, an encoder () and a two-head decoder ( and ). The first decoder () comprises of a series of Positive Attention Shifting Blocks, which shifts more attention towards the foreground area, resulting in dilating high-confidence predictions on the foreground. The second decoder () containing a series of Negative Attention Shifting Blocks, shifts less attention towards the foreground, resulting in eroding high-confidence predictions on the foreground.

4.3 Positive Attention Shifting Block

Positive Attention Shifting Block aims at increasing the ERF of the foreground, therefore dilating the foreground features. In a standard U-net, a block () in the decoder comprises two consecutive convolutional layers with kernel size () 3 followed by ReLU and normalisation layers. If the input of is and the output of is , to increase the ERF of , we would aim to generate an attention mask with a larger ERF than the ERF of . To do so, we add a parallel side branch next to the main branch . The side branch intakes but outputs with a larger ERF. We apply Sigmoid on the output of the side branch as an attention mask to increase the confidence of . The new block containing both and is our proposed Positive Attention Shifting Block (PASB). The side branch of the PASB is a dilated convolutional layer with dilation rate 5.

4.3.1 ERF size in Positive Attention Shifting Block

Given the size of ERF of layer as, [26], which is the input , as output from the previous layer. The ERF of is . To make sure the ERF of is larger than :

| (1) |

From Eq1, we find . We double the condition as our design choice, then is 9 when . However, the large kernel sizes significantly increase model complexity. To avoid this, we use a dilated convolutional layer to achieve at 9, which requires a dilation rate 5. As the side branch has a larger ERF than the main branch, it can raise the confidence on the foreground of the main branch. Previous work [28, 29] has reported similar uses of a dilated convolutional layer to increase the ERF for other applications, without explaining the rationale for their use. See visual evidence in Fig 4(q) and (r).

4.4 Negative Attention Shifting Block

Negative Attention Shifting Block aims at decreasing the ERF on the foreground, therefore eroding the foreground features. Following PASB, we design the Negative Attention Shifting Block (NASB) again as two parallel branches. In NASB, we aim to shrink the ERF of the in order to produce a smaller ERF than the one from the main branch. In the side branch in NASB, we use the same architecture as the main branch, but with skip-connections as skip-connections restrict the growth of the ERF with increasing depth [26].

4.4.1 ERF size in Negative Attention Shifting Block

Neural networks with residual connections are equivalent to an ensemble of networks with short paths where each path follows a binomial distribution [30]. If we define as the probability of the model going through a convolutional layer and as the probability of the model skipping the layer, then each short path has a portion of , contributing to the final ERF. If we assume is 0.5, the ERF of the side branch is guaranteed to be smaller than the ERF of the main branch, see Eq.2.

| (2) |

As the side branch has a smaller ERF than the main branch, it can reduce the confidence on the foreground of the main branch. See visual evidence in Fig 4(u) and (v).

4.5 Loss Functions

For experiments on BRATS 2018 and CARVE 2014, We use a streaming training setting to avoid over-fitting on limited labelled data so the model doesn’t repeatedly see the labelled data during each epoch. When a label is available, we apply a standard Dice loss [31] between the output of each decoder and the label. When a label is not available, we apply a mean squared error loss between the outputs of the two decoders. This consistency regularisation is weighted by hyper-parameter . For experiments on LA 2018, we train simultaneously on labelled and unlabelled images by combine consistency regularisation loss with Dice loss.

5 Experiments

We perform a few sets of experiments: 1) comparisons with baselines including supervised learning and state-of-the-art SSLs [2, 1, 25, 6] using either data or feature augmentation; 2) investigation of the impact of the amount of labelled data and unlabelled data on MisMatch performance; 3) ablation study of the decoder architectures; 4) ablation study on the hyper-parameter such as

5.1 Data sets & Pre-processing

CARVE 2014 The Classification of pulmonary arteries and veins (CARVE) dataset [32] has 10 fully annotated non-contrast low-dose thoracic CT scans. Each case has between 399 and 498 images, acquired at various spatial resolutions between (282 x 426) to (302 x 474). 10-fold cross-validation on the 10 labelled cases is performed. In each fold, we split cases as: 1 for labelled training data, 3 for unlabelled training data, 1 for validation and 5 for testing. We only use slices containing more than 100 foreground pixels. We prepare datasets with differing amounts of labelled slices: 5, 10, 30, 50, 100. We crop 176 176 patches from four corners of each slice. Full label training uses 4 training cases. Normalisation was performed at case wise.

BRATS 2018 BRATS 2018 [33] has 210 high-grade glioma and 76 low-grade glioma MRI cases, each case containing 155 slices. We focus on binary segmentation of whole tumours in high grade cases. We randomly select 1 case for labelled training, 2 cases for validation and 40 cases for testing. We centre crop slices at 176 176. For labelled training data, we extract the first 20 slices containing tumours with areas of more than 5 pixels. To see the impact of the amount of unlabelled training data, we use 3100, 4650 and 6200 slices respectively. Case-wise normalisation was performed and all modalities were concatenated. We train each model 3 times and take the average.

LA 2018 Atrial Segmentation Challenge Data set [34] has 100 volumes of 3D gadolimium-enhanced MR scans with corresponding left atrium segmentation masks. Each scan is isotropic with resolution at 0.625 x 0.625 x 0.625 . We follow [35] and split 100 scans into 80 for training and 20 for testing. We also directly use the pre-processing from [35] to normalise the centre crop each scan.

Task 01 Brain Tumour Task01 Brain Tumour from Medical Segmentation Decathlon consortium [36] is based on BRATS 2017 with different naming format from BRATS 2018. Each case in The Task01 Brain Tumour has 155 slices with 240 x 240 spatial dimension. We merge all of the tumour classes into one tumour class for simplicity. We do not apply centre cropping in the pre-processing here. In the training, we randomly crop volumes on the fly with size of 96 x 96 x 96. We separate the original training cases as labelled training data and testing data. We use the original testing cases as unlabelled data. For the labelled training data, we use 8 cases with index number from 1 to 8. We have 476 cases for testing and 266 cases for unlabelled training data. We apply normalisation with statistics of intensities across the whole training data set. We keep all of the MRI modalities as 4 channel input.

| Supervised | Semi-Supervised | |||||||

|---|---|---|---|---|---|---|---|---|

| Labelled | Sup1 | Sup2 | MTA | MT | FM | CCT | Morph | MM |

| Slices | [27](2015) | Ours(2021) | [25](2019) | [1](2017) | [2](2020) | [6](2020) | 2021 | Ours(2021) |

| 5 | 48.324.97 | 50.752.0 | 54.911.82 | 56.562.38 | 49.301.81 | 52.541.74 | 52.932.19 | 60.253.77 |

| 10 | 53.382.83 | 55.554.42 | 57.783.66 | 57.992.57 | 51.533.72 | 55.252.52 | 57.082.96 | 60.043.64 |

| 30 | 52.091.41 | 53.984.42 | 60.784.63 | 60.463.74 | 55.165.93 | 60.814.09 | 60.194.97 | 63.594.46 |

| 50 | 60.692.51 | 64.793.46 | 68.113.39 | 67.213.05 | 62.916.99 | 65.063.42 | 64.883.25 | 69.393.74 |

| 100 | 68.741.84 | 73.11.51 | 72.481.61 | 71.481.57 | 72.581.84 | 72.071.75 | 72.111.88 | 74.831.52 |

| Param. (M) | 1.8 | 2.7 | 2.1 | 1.88 | 1.88 | 1.88 | 2.54 | 2.7 |

| Infer.Time(s) | 4.1e-3 | 1.8e-1 | 7.2e-3 | 4.3e-3 | 4.5e-3 | 1.5e-1 | 8e-3 | 1.8e-1 |

| Supervised | Semi-Supervised | |||||||

|---|---|---|---|---|---|---|---|---|

| Unlabelled | Sup1 | Sup2 | MTA | MT | FM | CCT | Morph | MM |

| Slices | [27](2015) | Ours(2021) | [25](2019) | [1](2017) | [2](2020) | [6](2020) | 2021 | Ours(2021) |

| 3100 | 53.7410.19 | 55.7611.03 | 50.538.76 | 55.2910.21 | 57.9212.35 | 56.6111.7 | 53.889.99 | 58.9411.41 |

| 4650 | 53.7410.19 | 55.7611.03 | 47.366.65 | 58.3212.07 | 54.299.69 | 56.9410.93 | 55.8211.03 | 60.7412.96 |

| 6200 | 53.7410.19 | 55.7611.03 | 50.118.00 | 56.9212.20 | 56.7811.39 | 57.3711.74 | 54.59.75 | 58.8112.18 |

5.2 Implementation

We use Adam optimiser [37]. Hyper-parameters are: , batch size 1 (GPU memory: 2G), learning rate 2e-5, 50 epochs. Each complete training on CARVE takes about 3.8 hours. The final output is the average of the outputs of the two decoders. In testing, we take an average of models saved over the last 10 epochs across experiments. Our code is implemented using Pytorch 1.0 [38].

5.3 Baselines

In the current study the backbone is a 2D U-net [27] with 24 channels in the first encoder. To ensure a fair comparison we use the same U-net as the backbone across all baselines. The first baseline utilises supervised training on the backbone, is trained with labelled data, augmented with flipping and Gaussian noise and is denoted as “Sup1”. To investigate how unlabelled data improves performance, our second baseline “Sup2” utilises supervised training on MisMatch, with the same augmentation. Because MisMatch uses consistency regularisation, we focus on comparisons with five consistency regularisation SSLs: 1) “mean-teacher” (MT) [1], with Gaussian noise, which has inspired most of the current state-of-the-art SSL methods; 2) the current state-of-the-art model called “FixMatch” (FM) [2]. To adapt FixMatch for a segmentation task, we use Gaussian noise as weak augmentation and “RandomAug” [39] without shearing for strong augmentation. We do not use shearing for augmentation because it impairs spatial correspondences of pixels of paired dense outputs; 3) a state-of-the-art model with multi-head decoder [6] for segmentation (CCT), with random feature augmentation in each decoder [6]. This baseline is also similar to models recently developed [5, 7]; 4) a further recent model in medical imaging [25] using image reconstruction as an extra regularisation (MTA), augmented with Gaussian noise; 5) a U-net with two standard decoders, where we respectively apply erosion and dilation on the features in each decoder, augmented with Gaussian noise (Morph)”; 6) an uncertainty aware mean-teacher based SSL segmentation model [35]. Our MisMatch model has been trained without any augmentation.

6 Segmentation Results

MisMatch consistently and substantially outperforms supervised baselines, the improvement is especially obvious in low data regime. For example, on 5 labelled slices with CARVE, MisMatch achieves 24% improvement over Sup1. MisMatch consistently outperforms previous SSL methods [2, 1, 25, 6] in Table 1, across different data sets. Particularly, there exists statistical difference between Mismatch and other baselines when 6.25% labels (100 slices comparing to 1600 slices of full label) are used on CARVE (Table 3). Qualitatively, we observed in Fig 8 that, the main performance boost of MisMatch comes from the reduction of false positive detection and the increase of true positive detection.

Interestingly, we found that Sup2 (supervised training on MisMatch without unlabelled data) is a very competitive baseline comparing to previous semi-supervised methods. This might imply that MisMatch can potentially help with the supervised learning as well.

We also found data diversity of training data highly affects the testing performance (Fig 6) in cross-validation experiments. For example, in fold 3, 7 and 8 on CARVE, MisMatch outperforms or performs on-par with the full label training, whereas in the rest folds, MisMatch performs marginally inferior to the full label training. Additionally, more labelled training data consistently produces a higher mean IoU and lower standard deviation (Table 2). Lastly, we noticed more unlabelled training data can help with generalisation, until it dominates training and impedes performance (Table 2).

We further verify that consistency regularisation on feature perturbations is better than consistency regularisation on input perturbations by comparing MisMatch against UA-MT [35] which is an representative example of the methods using input perturbations. We compare MisMatch against UA-MT on two 3D datasets left atrium and whole tumour areas (see section 5.1). On the segmentation on left atrium, our method not just outperform UA-MT but also converges faster, as illustrated in Fig.9.

During testing of trained models on the whole tumour segmentation from the Task01 Brain Tumour data set[36], we noticed one emerging property of our model that the our model achieves better performance when it is tested on volumes larger than the size of the training volumes (see Table 7 and Table 8). Also if the testing size is smaller than the training size, the performance becomes worse (see Table 7 and Table 9).

| Sup1 | Sup2 | MTA | MT | FM | CCT | Morph |

| 9.13e-5 | 1.55e-2 | 4.5e-3 | 4.3e-4 | 1.05e-2 | 1.8e-3 | 2.2e-3 |

6.1 Ablation Studies

We performed ablation studies on the architecture of the decoders of MisMatch with cross-validation on 5 labelled slices of CARVE: 1) “MM-a”, a two-headed U-net with standard convolutional blocks in decoders, the prediction confidences of these two decoders can be seen as both normal confidence, however, they are essentially slightly different because of random initialisation, we denote the decoder of U-net as ; 2) “MM-b”, a standard decoder of U-net and a negative attention shifting decoder , this one can be seen as between normal confidence and less confidence; 3) “MM-c”, a standard decoder of U-net and a positive attention shifting decoder , this one can be seen as between normal confidence and higher confidence; 4) “MM”, and (Ours). As shown in Fig 7, our MisMatch (”MM”) outperforms other combinations in 8 out of 10 experiments and it performs on par with the others in the rest 2 experiments. Among the results when MisMatch outperforms, MisMatch outperforms MM-a by 2%-14%; outperforms MM-b by 3%-18%; outperforms MM-c by 4%-22%. We also tested at 0, 0.0005, 0.001, 0.002, 0.004 with the same experimental setting. The optimal appears at 0.002 in Table 4. We also found that gradient cutting helps to improve segmentation performance too, see Table 6. In terms of network topology, as shown in Table 5 , it seems that larger dilation is not always beneficial.

| alpha | 0.0 | 0.0005 | 0.001 | 0.002 | 0.004 |

| IoU | 50.75 | 59.16 | 59.45 | 60.25 | 58.89 |

| Iteration | 2000 | 3000 | 4000 | 5000 |

|---|---|---|---|---|

| Dilation 6 | 0.6571 | 0.6621 | 0.6699 | 0.6561 |

| Dilation 9 | 0.7363 | 0.7283 | 0.7180 | 0.6561 |

| Dilation 12 | 0.6980 | 0.6957 | 0.6889 | 0.6561 |

| Iteration | 2000 | 3000 | 4000 | 5000 |

|---|---|---|---|---|

| Stop gradient | 0.6896 | 0.7148 | 0.7090 | 0.7057 |

| Gradient | 0.6717 | 0.6944 | 0.6952 | 0.6837 |

| Metrics | Dice () | Jac () | HD () | ASD () |

|---|---|---|---|---|

| UA-MT | 0.5454 | 0.3864 | 55.25 | 22.74 |

| MisMatch (Ours) | 0.57 | 0.4197 | 49.07 | 22.86 |

| Metrics | Dice () | Jac () | HD () | ASD () |

|---|---|---|---|---|

| UA-MT | 0.2926 | 0.1769 | 72.66 | 31.98 |

| MisMatch (Ours) | 0.3133 | 0.1944 | 85.35 | 39.27 |

| Metrics | Dice () | Jac () | HD () | ASD () |

|---|---|---|---|---|

| UA-MT | 0.5945 | 0.4390 | 54.88 | 22.15 |

| MisMatch (Ours) | 0.6086 | 0.4650 | 47.66 | 23.58 |

7 Visualisation of the effectiveness of Learnt Attention Masks

We visualise the confidences of feature maps before and after attention, attention weights and how much the confidences are changed in Fig5 on CARVE. We focus on zoomed-in area of one vessel which is one region-of-interest. As shown in (c) and (e), the confidence outputs between the two decoders are different, the one from the positive attention decoder has more detected high confidence areas on the top of the anatomy of the interest. As illustrated in (j) and (n), the attention weights in the two decoders are drastically different from each other. More specifically, the attention weights in the negative attention decoder have relatively low values around the edges, as shown in green and blue colours, on the contrary, the attention weights in the positive attention decoder have high values in most of the regions of the interest.

Another evidence supporting the effectiveness of attention blocks are the changes of the confidences as shown in (r) and (v). After positive attention weights are applied on (g), it is clear to see in (r) that the surrounding areas of the originally detected contours are now also detected as regions of the interest. Besides, in (v), we observe expected negative changes of the confidences around edges caused by the negative attention shifting.

The histograms of the feature maps also support the effectiveness of our learnt attention masks. Between the histograms in (j) and (m), for the high confidence interval between 0.9 and 1.0, the negative attention block has more high confidence pixels than the positive attention block. This is because the negative attention block decreases confidence on foreground, thereby ending up with increasing confidence on background, where background class is the majority class naturally containing more pixels than the foreground class.

8 Confidence and Calibration of Mismatch

Expected Calibration Error To qualitatively study the confidence of MisMatch, we adapt two mostly used metrics in the community, which are Reliability Diagrams and Expected Calibration Error (ECE) [40]. Following [41], we first prepare M interval bins of predictions. In our binary setting to classify the foreground, we use 5 intervals between 0.5 to 1. Say is the subset of all pixels whose prediction confidence is in interval . We define accuracy as how many pixels are correctly classified in each interval. The accuracy of is formally:

| (3) |

Where is the predicted label and is the ground truth label at pixel in . The average confidence within is defined with the use of which is the raw probability output of the network at each pixel:

| (4) |

Ideally, we would like to see , which means the network is perfectly calibrated and the predictions are completely trustworthy. To assess how convincing the prediction confidences are, we calculate the gap between confidence and accuracy as Expected Calibration Error (ECE):

| (5) |

MisMatch is well-calibrated and effectively learns to change prediction confidence As shown in Fig11, both positive attention shifting decoder and negative attention shifting decoder are better calibrated than the plain U-net. Especially, positive attention shifting decoder produces over-confident predictions. Meanwhile, negative attention shifting decoder produces under-confident predictions for a few confidence intervals. This verifies again that MisMatch can effectively learn to differently change the prediction confidences of the same testing images.

Robustness of MisMatch Against Calibration Errors As shown in the scatter plot (Fig10) of paired IoU and corresponding Expected Calibration Error (ECE) of all of the testing images in cross-validation experiments on 50 labelled slices of CARVE, higher calibration errors correlate positively with low segmentation accuracy. In general, MisMatch has predictions with less calibration errors and higher IoU values. As shown in the 2nd order regression curves for each trend, MisMatch appears to be more robust against calibration error, as the fitted curve of U-net has a much more steep slope than MisMatch. In other words, with the increase of calibration error, MisMatch suffers less performance drops.

9 Limitations and future work

Computational burden Although MisMatch achieves superior performance over previous methods, it suffers from increased model complexity. Parameter sharing should be incorporated in the future work. For example, the main branch can be shared across the two decoders.

Extensions Future work will extend MisMatch to multi-class 3D tasks. Consistency on multi-class predictions might bring in extra regularisation leading to better performances. We also aim to enhance MisMatch by combining it with existing temporal ensemble techniques [1]

10 Conclusion

We propose MisMatch, an augmentation-free SSL, to overcome the limitations associated with consistency-driven SSL in medical image segmentation. In lung vessel segmentation tasks, the acquisition of labels can be prohibitively time-consuming. For example each case may take 1.5 hours of manual refinement with semi-automatic segmentation[32]. Longer timeframes may be required for cases with severe disease. MisMatch however shows strong clinical utility by reducing the number of training labels requried by more than 90%. MisMatch requires 100 slices of one case for training whereas the fully labelled dataset comprises 1600 slices across 4 cases. MisMatch when trained on just 10% of labels achieves a similar performance (IoU: 75%) to models that are trained with all available labels (IoU: 77%).

References

- [1] Antti Tarvainen and Harri Valpola “Mean teachers are better role models: Weight-averaged consistency targets improve semi-supervised deep learning results” In Neural Information Processing Systems (NeurIPS), 2017

- [2] Kihyuk Sohn et al. “FixMatch: Simplifying Semi-Supervised Learning with Consistency and Confidence” In Neural Information Processing Systems (NeurIPS), 2020

- [3] David Berthelot et al. “REMIXMATCH: SEMI-SUPERVISED LEARNING WITH DISTRIBUTION ALIGNMENT AND AUGMENTATION ANCHORING” In International Conference On Learning Representation (ICLR), 2020

- [4] Ben Athiwaratkun, Marc Finzi, Pavel Izmailov and Andrew Gordon Wilson “There Are Many Consistent Explanations of Unlabeled Data: Why You Should Average” In International Conference on Learning Representations (ICLR), 2019

- [5] Geoff French et al. “Semi-supervised semantic segmentation needs strong, varied perturbations” In British Machine Vision Conference (BMVC), 2020

- [6] Yassine Ouali, Céline Hudelot and Myriam Tami “Semi-Supervised Semantic Segmentation with Cross-Consistency Training” In Computer Vision and Pattern Recognition (CVPR), 2020

- [7] Zhanghan Ke, Di Qiu, Qiong Yan and Rynson W.H. Lau “Guided Collaborative Training for Pixel-wise Semi-Supervised Learning” In European Conference on Computer Vision (ECCV), 2020

- [8] Xiaomeng Li et al. “Semi-supervised Skin Lesion Segmentation via Transformation Consistent Self-ensembling Model” In British Machine Vision Conference (BMVC), 2018

- [9] Mou-Cheng Xu et al. “Learning Morphological Feature Perturbations for Calibrated Semi-Supervised Segmentation” In International Conference on Medical Imaging with Deep Learning (MIDL), 2022

- [10] Avital Oliver et al. “Realistic Evaluation of Deep Semi-Supervised Learning Algorithms” In Neural Information Processing Systems (NeurIPS), 2018

- [11] Ahmet Iscen, Giorgos Tolias, Yannis Avrithis and Ondˇrej Chum “Label Propagation for Deep Semi-supervised Learning” In Computer Vision and Pattern Recognition (CVPR), 2019

- [12] Yves Grandvalet and Yoshua Bengio “Semi-supervised Learning by Entropy Minimization” In Neural Information Processing Systems (NeurIPS), 2004

- [13] Dong-Hyun Lee “Pseudo-Label : The Simple and Efficient Semi-Supervised Learning Method for Deep Neural Networks” In ICML workshop on Challenges in Representation Learning, 2013

- [14] Diederik P. Kingma, Danilo J. Rezende, Shakir Mohamed and Max Welling “Semi-supervised Learning with Deep Generative Models” In Advanced in Neural Information Processing System (NeurIPS), 2014

- [15] Junshen Xu et al. “Semi-supervised Learning for Fetal Brain MRI Quality Assessment with ROI Consistency” In International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI), 2020

- [16] Kang Li, Shujun Wang, Lequan Yu and Pheng-Ann Heng “Dual-Teacher: Integrating Intra-domain and Inter-domain Teachers for Annotation-Efficient Cardiac Segmentation” In International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI), 2020

- [17] Wenhui Cui et al. “Semi-Supervised Brain Lesion Segmentation with an Adapted Mean Teacher Model” In Information Processing in Medical Imaging (IPMI), 2019

- [18] Wenlong Hang et al. “Local and Global Structure-Aware Entropy Regularized Mean Teacher Model for 3D Left Atrium Segmentation” In International conference on medical image computing and computer assisted intervention (MICCAI), 2020

- [19] Kang Fang and Wu-Jun Li “DMNet: Difference Minimization Network for Semi-supervised Segmentation in Medical Images” In International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI), 2020

- [20] Xiangde Luo, Jieneng Chen, Song Tao and Guotai Wang “Semi-supervised Medical Image Segmentation through Dual-task Consistency” In International Conference on Artificial Intelligence (AAAI), 2021

- [21] Yinghuan Shi et al. “Inconsistency-Aware Uncertainty Estimation for Semi-Supervised Medical Image Segmentation” In IEEE Transactions on Medical Imaging (TMI), 2022

- [22] Krishna Chaitanya et al. “Semi-supervised and Task-Driven Data Augmentation” In Information Processing In Medical Imaging (IPMI), 2019

- [23] Chen Chen et al. “Realistic Adversarial Data Augmentation for MR Image Segmentation” In International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI), 2020

- [24] Hoel Kervadec, Jose Dolz, Granger and Ismail Ben Ayed “Curriculum Semi-supervised Segmentation” In International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI), 2019

- [25] Shuai Chen et al. “Multi-task Attention-Based Semi-supervised Learning for Medical Image Segmentation” In International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI), 2019

- [26] Wenjie Luo, Yujia Li, Raquel Urtasun and Richard Zemel “Understanding the Effective Receptive Field in Deep Convolutional Neural Networks” In Neural Information Processing Systems (NeurIPS), 2016

- [27] Olaf Ronneberger, Philipp Fischer and Thomas Brox “U-Net: Convolutional Networks for Biomedical Image Segmentations” In International conference on medical image computing and computer assisted intervention (MICCAI), 2015

- [28] Mou-Cheng Xu, Neil P Oxtoby, Daniel C Alexander and Joseph Jacob “Learning To Pay Attention To Mistakes” In British Machine Vision Conference (BMVC), 2020

- [29] Yunchao Wei et al. “Revisiting Dilated Convolution: A Simple Approach for Weakly- and SemiSupervised Semantic Segmentation” In Computer Vision and Pattern Recognition (CVPR), 2018

- [30] Andreas Veit, Michael Wilber and Serge Belongie “Residual Networks Behave Like Ensembles of Relatively Shallow Networks” In Neural Information Processing Systems (NeurIPS), 2016

- [31] Fausto Milletari, Nassir Navab and Seyed-Ahmad Ahmadi “V-Net: Fully Convolutional Neural Networks for Volumetric Medical Image Segmentation” In International Conference on 3D Vision (3DV), 2016

- [32] Jean-Paul Charbonnier et al. “Automatic Pulmonary Artery-Vein Separation and Classification in Computed Tomography Using Tree Partitioning and Peripheral Vessel Matching” In IEEE Transaction on Medical Imaging, 2015

- [33] Bjoern H. Menze et al. “The Multimodal Brain Tumor Image Segmentation Benchmark (BRATS)” In IEEE Transaction on Medical Imaging, 2015

- [34] Zhaohan Xiong et al. “A Global Benchmark of Algorithms for Segmenting Late Gadolinium-Enhanced Cardiac Magnetic Resonance Imaging” In Medical Image Analysis, 2020

- [35] Lequan Yu et al. “Uncertainty-aware self ensembleing model for semi supervised 3D left atrium segmentation” In International Conference on Medical Image Computing and Computer Assisted Interventions (MICCAI), 2019

- [36] Michela Antonelli et al. “The Medical Segmentation Decathlon” In Nature Communications, 2022

- [37] Diederik P. Kingma and Jimmy Ba “Adam: A Method for Stochastic Optimization” In International Conference on Learning Representation (ICLR), 2015

- [38] Adam Paszke et al. “PyTorch: An Imperative Style, High-Performance Deep Learning Library” In Neural Information Processing System (NeurIPS), 2019

- [39] Ekin D. Cubuk, Barret Zoph, Jonathon Shlens and Quoc V. Le “RandAugment: Practical automated data augmentation with a reduced search space” In Neural Information Processing Systems (NeurIPS), 2020

- [40] Chuan Guo, Geoff Pleiss, Yu Sun and Kilian Q. Weinberger “On Calibration of Modern Neural Networks” In International Conference on Machine Learning (ICML), 2017

- [41] Morris DeGroot and Stephen Feinberg “The comparison and evaluation of forecasters” In The statistician, 1983