MIPI 2022 Challenge on RGB+ToF Depth Completion: Dataset and Report

Abstract

Developing and integrating advanced image sensors with novel algorithms in camera systems is prevalent with the increasing demand for computational photography and imaging on mobile platforms. However, the lack of high-quality data for research and the rare opportunity for in-depth exchange of views from industry and academia constrain the development of mobile intelligent photography and imaging (MIPI). To bridge the gap, we introduce the first MIPI challenge including five tracks focusing on novel image sensors and imaging algorithms. In this paper, RGB+ToF Depth Completion, one of the five tracks, working on the fusion of RGB sensor and ToF sensor (with spot illumination) is introduced. The participants were provided with a new dataset called TetrasRGBD, which contains 18k pairs of high-quality synthetic RGB+Depth training data and 2.3k pairs of testing data from mixed sources. All the data are collected in an indoor scenario. We require that the running time of all methods should be real-time on desktop GPUs. The final results are evaluated using objective metrics and Mean Opinion Score (MOS) subjectively. A detailed description of all models developed in this challenge is provided in this paper. More details of this challenge and the link to the dataset can be found at https://github.com/mipi-challenge/MIPI2022

Keywords:

RGB+ToF, Depth Completion, MIPI challengeMIPI 2022 challenge website: http://mipi-challenge.org/

1 Introduction

RGB+ToF Depth Completion uses sparse ToF depth measurements and a pre-aligned RGB image to obtain a complete depth map. There are a few advantages of using sparse ToF depth measurements. First, the hardware power consumption of sparse ToF (with spot illumination) is low compared to full-field ToF (with flood illumination) depth measurements, which is very important for mobile applications to prevent overheating and fast battery drain. Second, the sparse depth has higher precision and long-range depth measurements due to the focused energy in each laser bin. Third, the Multi-path Interference (MPI) problem that usually bothers full-field iToF measurement is greatly diminished in the sparse ToF depth measurement. However, one obvious disadvantage of sparse ToF depth measurement is the depth density. Take iPhone 12 Pro [21] for example, which is equipped with an advanced mobile Lidar (dToF), the maximum raw depth density is only 1.2%. If directly use as is, the depth would be too sparse to be applied to typical applications like image enhancement, 3D reconstruction, and AR/VR applications.

In this challenge, we intend to fuse the pre-aligned RGB and sparse ToF depth measurement to obtain a complete depth map. Since the power consumption and processing time are still important factors to be balanced, in this challenge, the proposed algorithm is required to process the RGBD data and predict the depth in real-time, i.e. reaches speeds of 30 frames per second, on a reference platform with a GeForce RTX 2080 Ti GPU. The solution is not necessarily deep learning solution, however, to facilitate the deep learning training, we provide a high-quality synthetic depth training dataset containing 20,000 pairs of RGB and ground truth depth images of 7 indoor scenes. We provide a data loader to read these files and a function to simulate sparse depth maps that are close to the real sensor measurements. The participants are also allowed to use other public-domain dataset, for example, NYU-Depth v2 [28], KITTI Depth Completion dataset [32], Scenenet [23], Arkitscenes [1], Waymo open dataset [30], etc. A baseline code is available as well to understand the whole pipeline and to wrap up quickly. The testing data comes from mixed sources, including synthetic data, spot-iToF, and samples that are manually subsampled from iPhone 12 Pro processed depth which uses dToF. The algorithm performance will be ranked by objective metrics: relative mean absolute error (RMAE), edge-weighted mean absolute error (EWMAE), relative depth shift (RDS), and relative temporal standard deviation (RTSD). Details of the metrics are described in the Evaluation section. For the final evaluation, we will also evaluate subjectively in metrics that could not be measured effectively using objective metrics, for example, XY-resolution, edge sharpness, smoothness on flat surfaces, etc.

This challenge is a part of the Mobile Intelligent Photography and Imaging (MIPI) 2022 workshop and challenges which emphasize the integration of novel image sensors and imaging algorithms, which is held in conjunction with ECCV 2022. It consists of five competition tracks:

-

1.

RGB+ToF Depth Completion uses sparse, noisy ToF depth measurements with RGB images to obtain a complete depth map.

-

2.

Quad-Bayer Re-mosaic converts Quad-Bayer RAW data into Bayer format so that it can be processed with standard ISPs.

-

3.

RGBW Sensor Re-mosaic converts RGBW RAW data into Bayer format so that it can be processed with standard ISPs.

-

4.

RGBW Sensor Fusion fuses Bayer data and a monochrome channel data into Bayer format to increase SNR and spatial resolution.

-

5.

Under-display Camera Image Restoration improves the visual quality of image captured by a new imaging system equipped with under-display camera.

2 Challenge

To develop an efficient and high-performance RGB+ToF Depth Completion solution to be used for mobile applications, we provide the following resources for participants:

-

•

A high-quality and large-scale dataset that can be used to train and test the solution;

-

•

The data processing code with data loader that can help participants to save time to accommodate the provided dataset to the depth completion task;

-

•

A set of evaluation metrics that can measure the performance of a developed solution;

-

•

A suggested requirement of running time on multiple platforms that is necessary for real-time processing.

2.1 Problem Definition

Depth completion [11, 8, 9, 12, 13, 16, 20, 25, 3, 6, 22, 24, 15] aims to recover dense depth from sparse depth measurements. Earlier methods concentrate on retrieving dense depth maps only from the sparse ones. However, these approaches are limited and not able to recover depth details and semantic information without the availability of multi-modal data. In this challenge, we focus on the RGB+ToF sensor fusion, where a pre-aligned RGB image is also available as guidance for depth completion.

In our evaluation, the depth resolution and RGB resolution are fixed at , and the input depth map sparsity ranges from to . As a reference, the KITTI depth completion dataset [32] has sparsity around . The target of this challenge is to predict a dense depth map given the sparsity depth map and a pre-aligned RGB image at the allowed running time constraint (please refer to Section 2.5 for details).

2.2 Dataset: TetrasRGBD

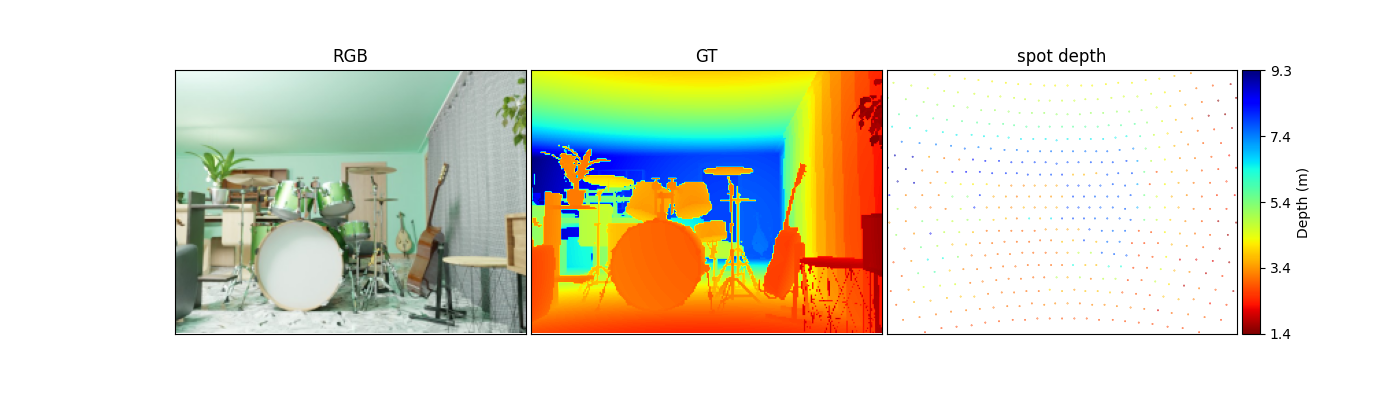

The training data contains 7 image sequences of aligned RGB and ground-truth dense depth from 7 indoor scenes (20,000 pairs of RGB and depth in total). For each scene, the RGB and the ground-truth depth are rendered along a smooth trajectory in our created 3D virtual environment. RGB and dense depth images in the training set have a resolution of 640480 pixels. We also provide a function to simulate the sparse depth maps that are close to the real sensor measurements††https://github.com/zhuqingpeng/MIPI2022-RGB-ToF-depth-completion. A visualization of an example frame of RGB, ground-truth depth, and simulated sparse depth is shown in Fig. 1.

The testing data contains, a) Synthetic: a synthetic image sequence (500 pairs of RGB and depth in total) rendered from an indoor virtual environment that differs from the training data; b) iPhone dynamic: 24 image sequences of dynamic scenes collected from an iPhone 12Pro (600 pairs of RGB and depth in total); c) iPhone static: 24 image sequences of static scenes collected from an iPhone 12Pro (600 pairs of RGB and depth in total); d) Modified phone static: 24 image sequences of static scenes (600 pairs of RGB and depth in total) collected from a modified phone. Please note that depth noises, missing depth values in low reflectance regions, and mismatch of field of views between RGB and ToF cameras could be observed from this real data. RGB and dense depth images in the entire testing set have the resolution of 256192 pixels. RGB and spot depth data from the testing set are provided and the GT depth are not available to participants. The depth data in both training and testing sets are in meters.

2.3 Challenge Phases

The challenge consisted of the following phases:

-

1.

Development: The registered participants get access to the data and baseline code, and are able to train the models and evaluate their running time locally.

-

2.

Validation: The participants can upload their models to the remote server to check the fidelity scores on the validation dataset, and to compare their results on the validation leaderboard.

-

3.

Testing: The participants submit their final results, code, models, and factsheets.

2.4 Scoring System

2.4.1 Objective Evaluation

We define the following metrics to evaluate the performance of depth completion algorithms.

-

•

Relative Mean Absolute Error (RMAE), which measures the relative depth error between the completed depth and the ground truth, i.e.

(1) where and denote the height and width of depth, respectively. and represent the ground-truth depth and the predicted depth, respectively.

-

•

Edge Weighted Mean Absolute Error (EWMAE), which is a weighted average of absolute error. Regions with larger depth discontinuity are assigned higher weights. Similar to the idea of Gradient Conduction Mean Square Error (GCMSE) [19], EWMAE applies a weighting coefficient to the absolute error between pixel in ground-truth depth and predicted depth , i.e.

(2) where the weight coefficient is computed in the same way as in [19].

-

•

Relative Depth Shift (RDS), which measures the relative depth error between the completed depth and the input sparse depth on the set of pixels where there are valid depth values in the input sparse depth, i.e.

(3) where represents the input sparse depth. denotes the set of the coordinates of all spot pixels, i.e. pixels with valid depth value. denotes the cardinality of .

-

•

Relative Temporal Standard Deviation (RTSD), which measures the temporal standard deviation normalized by depth values for static scenes, i.e.

(4) where denotes the number of frames.

RMAE, EWMAE, and RDS will be measured on the testing data with GT depth. RTSD will be measured on the testing data collected from static scenes. We will rank the proposed algorithms according to the score calculated by the following formula, where the coefficients are designed to balance the values of different metrics,

| (5) |

For each dataset, we report the average results over all the processed images belonging to it.

2.4.2 Subjective Evaluation

For subjective evaluation, we adapt the commonly used Mean Opinion Score (MOS) with blind evaluation. The score is on a scale of 1 (bad) to 5 (excellent). We invited 16 expert observers to watch videos and give their subjective score independently. The scores of all subjects are averaged as the final MOS.

2.5 Running Time Evaluation

The proposed algorithms are required to be able to process the RGB and sparse depth sequence in real-time. Participants are required to include the average run time of one pair of RGB and depth data using their algorithms and the information in the device in the submitted readme file. Due to the difference of devices for evaluation, we set different requirements of running time for different types of devices according to the AI benchmark data from the website††https://ai-benchmark.com/ranking_deeplearning_detailed.html. Although the running time is still far from real-time and low power consumption on edge computing devices, we believe this could set up a good starting point for researchers to further push the limit in both academia and industry.

3 Challenge Results

From registered participants, teams submitted their results in the validation phase, teams entered the final phase and submitted the valid results, code, executables, and factsheets. Table 1 summarizes the final challenge results. Team 5 (ZoomNeXt) shows the best overall performance, followed by Team 1 (GAMEON) and Team 4 (Singer). The proposed methods are described in Section 4 and the team members and affiliations are listed in Appendix 0.A.

| Team No. | Team name/User name | RMAE | EWMAE | RDS | RTSD | Objective | Subjective | Final |

|---|---|---|---|---|---|---|---|---|

| 5 | ZoomNeXt/Devonn, k-zha14 | 0.02935 | 0.13928 | 0.00004 | 0.00997 | 0.81763 | 3.53125 | 0.76194 |

| 1 | GAMEON/hail_hydra | 0.02183 | 0.13256 | 0.00736 | 0.01127 | 0.80723 | 3.55469 | 0.75908 |

| 4 | Singer/Yaxiong_Liu | 0.02651 | 0.13843 | 0.00270 | 0.01012 | 0.81457 | 3.32812 | 0.74010 |

| 0 | NPU-CVR/Arya22 | 0.02497 | 0.13278 | 0.00011 | 0.00747 | 0.84071 | 3.14844 | 0.73520 |

| 2 | JingAM/JingAM | 0.03767 | 0.13826 | 0.00459 | 0.00000 | 0.83545 | 2.96094 | 0.71382 |

| 6 | NPU-CVR/jokerWRN | 0.02547 | 0.13418 | 0.00101 | 0.00725 | 0.83729 | 2.75781 | 0.69443 |

| 8 | Anonymous/anonymous | 0.03015 | 0.13627 | 0.00002 | 0.01716 | 0.78497 | 2.76562 | 0.66905 |

| 3 | MainHouse113/renruixdu | 0.03167 | 0.14771 | 0.01103 | 0.01162 | 0.76781 | 2.71875 | 0.65578 |

| 7 | UCLA Vision Lab/laid | 0.03890 | 0.14731 | 0.00014 | 0.00028 | 0.83990 | 2.09375 | 0.62933 |

To analyze the performance on different testing dataset, we also summarized the objective score (RMAE) and the subjective score (MOS) per dataset in Table 2, namely Synthetic, iPhone dynamic, iPhone static, and Modified phone static. Note that due to there is no RTSD score for dynamic datasets and the RDS in the submitted results is usually very small if participants used hard depth replacement, we only present the RMAE score in this table as an objective indicator. Team ZoomNeXt performs the best in the Modified phone static subset, and moderate in other subsets. Team GAMEON performs the best in the subset of Synthetic, iPhone dynamic, and iPhone static, however, obvious artifacts could be observed in the Modified phone static subset.

| Team No. | Team Name/User Name | Synthetic | iPhone dynamic | iPhone static | Mod. phone static | ||||

| RMAE | MOS | RMAE | MOS | RMAE | MOS | RMAE | MOS | ||

| 5 | ZoomNeXt/Devonn, k-zha14 | 0.06478 | 3.37500 | 0.01462 | 3.53125 | 0.01455 | 3.40625 | / | 3.8125 |

| 1 | GAMEON/hail_hydra | 0.05222 | 4.18750 | 0.00919 | 3.96875 | 0.00915 | 3.84375 | / | 2.21875 |

| 4 | Singer/Yaxiong_Liu | 0.06112 | 3.34375 | 0.01264 | 3.68750 | 0.01154 | 2.71875 | / | 3.56250 |

| 0 | NPU-CVR/Arya22 | 0.05940 | 3.50000 | 0.01099 | 3.09375 | 0.01026 | 3.25000 | / | 2.75000 |

| 2 | JingAM/JingAM | 0.0854 | 2.50000 | 0.01685 | 3.50000 | 0.01872 | 3.28125 | / | 2.56250 |

| 6 | NPU-CVR/jokerWRN | 0.06061 | 3.15625 | 0.01116 | 2.62500 | 0.01050 | 2.87500 | / | 2.37500 |

| 8 | Anonymous/anonymous | 0.06458 | 3.87500 | 0.01589 | 2.75000 | 0.01571 | 2.53125 | / | 1.90625 |

| 3 | MainHouse113/renruixdu | 0.06928 | 2.90625 | 0.01718 | 3.06250 | 0.01482 | 2.03125 | / | 2.87500 |

| 7 | UCLA Vision Lab/laid | 0.08677 | 1.71875 | 0.01997 | 2.18750 | 0.01793 | 2.12500 | / | 2.34375 |

Fig. 2 shows a single frame visualization of all the submitted results in the test dataset. Top-left pair is from Synthetic subset, top-right pair is from iPhone dynamic subset, bottom-left pair is from iPhone static subset, and bottom-right pair is from Modified phone static subset. It can be observed that all the models could reproduce a semantically meaningful dense depth map. Team 1 (GAMEON) shows the best XY-resolution, where the tiny structures (chair legs, fingers, etc.) could be correctly estimated. Team 5 (ZoomNeXt) and Team 4 (Singer) show the most stable cross-dataset performance, especially in the modified phone dataset.

The running time of submitted methods on their individual platforms is summarized in Table 3. All methods satisfied the real-time requirements when converted to a reference platform with a GeForce RTX 2080 Ti GPU.

| Team No. | Team name/User name | Inference Time | Testing Platform | Upper Limit |

|---|---|---|---|---|

| 5 | ZoomNeXt/Devonn, k-zha14 | 18ms | Tesla V100 GPU | 34ms |

| 1 | GAMEON/hail_hydra | 33ms | GeForce RTX 2080 Ti | 33ms |

| 4 | Singer/Yaxiong_Liu | 33ms | GeForce RTX 2060 SUPER | 50ms |

| 0 | NPU-CVR/Arya22 | 23ms | GeForce RTX 2080 Ti | 33ms |

| 2 | JingAM/JingAM | 10ms | GeForce RTX 3090 | / |

| 6 | NPU-CVR/jokerWRN | 24ms | GeForce RTX 2080 Ti | 33ms |

| 8 | Anonymous/anonymous | 7ms | GeForce RTX 2080 Ti | 33ms |

| 3 | MainHouse113/renruixdu | 28ms | GeForce 1080 Ti | 48ms |

| 7 | UCLA Vision Lab/laid | 81ms | GeForce 1080 Max-Q | 106ms |

4 Challenge Methods

In this section, we describe the solutions submitted by all teams participating in the final stage of MIPI 2022 RGB+ToF Depth Completion Challenge. A brief taxonomy of all the methods is in Table 4.

| Team name | Fusion | Multi-scale | Refinement | Inspired from | Ensemble | Additional data |

| ZoomNeXt | Late | No | SPN series | FusionNet [33] | No | No |

| GAMEON | Early | Yes | SPN series | NLSPN [24] | No | ARKitScenes [1] |

| Singer | Late | Yes | SPN series | Sehlnet [18] | No | No |

| NPU-CVR | Mid | No | Deformable Conv | GuideNet [31] | No | No |

| JingAM | Late | No | No | FusionNet [33] | No | No |

| Anonymous | Early | No | No | FCN | Yes (Self) | No |

| MainHouse113 | Early | Yes | No | MobileNet [10] | No | No |

| UCLA Vision Lab | Late | No | Bilateral Filter | ScaffNet [34], FusionNet [33] | Yes (Network) | SceneNet [23] |

4.1 ZoomNeXt

Team ZoomNeXt proposes a lightweight and efficient multimodal depth completion (EMDC) model, shown in Fig. 3, which carries their solution to better address the following three key problems.

-

1.

How to fuse multi-modality data more effectively? For this problem, the team adopted a Global and Local Depth Prediction (GLDP) framework [11, 33]. For the confidence maps used by the fusion module, they adjusted the pathways of the features to have relative certainty of global and local depth predictions. Furthermore, the losses calculated on the global and local predictions are adaptively weighted to avoid model mismatch.

-

2.

How to reduce the negative effects of missing values regions in sparse modality? They first replaced the traditional upsampling in the U-Net in global network with pixel-shuffle [27], and also removed the batch normalization in local network, as they are fragile to features with anisotropic distribution and degrade the results.

-

3.

How to better recover scene structures for both objective metrics and subjective quality? They proposed key innovations in terms of SPN [5, 4, 24, 17, 12] structure and loss function design. First, they proposed the funnel convolutional spatial propagation network (FCSPN) for depth refinement. FCSPN can fuse the point-wise results from large to small dilated convolutions in each stage, and the maximum dilation at each stage is designed to be gradually smaller, thus forming a funnel-like structure stage by stage. Second, they also proposed a corrected gradient loss to handle the extreme depth (0 or inf) in the ground-truth.

4.2 GAMEON

GAMEON team proposed a multi-scale architecture to complete the sparse depth map with high performance and fast speed. Knowledge distillation method is used to distill information from large models. Besides, they proposed a stability constraint to increase the model stability and robustness.

They adopt NLSPN [24] as their baseline, and improve it in the following several aspects. As shown in the left figure of Fig. 4, they adopted a multi-scale scheme to produce the final result. At each scale, the proposed EDCNet is used to generate the dense depth map at the current resolution. The detailed architecture of EDCNet is shown in the right figure of Fig. 4, where NLSPN is adopted as the base network to produce the dense depth map at 1/2 resolution of the input, then two convolution layers are used to refine and generate the full resolution result. To enhance the performance, they further adopt knowledge distillation to distill information from Midas (DPT-Large) [26]. They distill information from the penultimate layer of Midas to the penultimate layer of the depth branch of NLSPN. A convolution layer of kernel size is used to solve the channel dimension gap. Furthermore, they also propose a stability constraint to increase the model stability and robustness. In particular, given a training sample, they adopt the thin plate splines (TPS) [7] transformation (very small perturbations) on the input and ground-truth to generate the transformed sample. After getting the outputs for these two samples, they apply the same transformation to the output of the original sample, which is constrained to be the same as the output of the transformed sample.

4.3 Singer

Team Singer proposed a depth completion approach that separately estimates high- and low-frequency components to address the problem of how to sufficiently utilize of multimodal data. Based on their previous work [18], they proposed a novel Laplacian pyramid-based [29, 2, 14] depth completion network, which estimates low-frequency components from sparse depth maps by downsampling and contains a Laplacian pyramid decoder that estimates multi-scale residuals to reconstruct complex details of the scene. The overall architecture of the network is shown in Fig. 5.

They use two independent mobilenetv2 to extract features from RGB and Sparse ToF depth. The features from two decoders are fused in a resnet-based encoder. To recover high-frequency scene structures while saving computational cost, the proposed Laplacian pyramid representation progressively adds information on different frequency bands so that the scene structure at different scales can be hierarchically recovered during reconstruction. Instead of the simple upsampling and summation of the two parts in two frequency bands, they proposed a global-local refinement network (GLRN) to fully use different levels of features from the encoder to estimate residuals at various scales and refine them. To save the computational cost of using spatial propagation networks, they introduced a dynamic mask for the fixed kernel of CSPN termed as Affinity Decay Spatial Propagation Network (AD-SPN), which is used to refine the estimated depth maps at various scales through spatial propagation.

4.4 NPU-CVR

Team NPU-CVR submitted two results with a difference in network structural parameter setting. To efficiently fill the sparse and noisy depth maps captured by the ToF camera into dense depth maps, they propose an RGB-guided depth completion method. The overall framework of their method shown in Fig. 6 is based on residual learning. Given a sparse depth map, they first fill it into a coarse dense depth map by pre-processing. Then, they obtain the residual by the network based on the proposed guided feature fusion block, and the residual is added to the coarse depth map to obtain the fine depth map. In addition, they also propose a depth map optimization block that enables deformable convolution to further improve the performance of the method.

4.5 JingAM

Team JingAM improves on the FusionNet proposed in the paper [33]. This paper takes RGB map and sparse depth map as input. The network structure is divided into global network and local network. The global information is obtained through the global network. In addition, the confidence map is used to combine the two inputs according to the uncertainty in the later fusion method. On this basis, they added the skip-level structure and more bottlenecks, and canceled the guidance map and replaced it with the global depth prediction. The loss function weights of the prediction map, global map, and local map are 1, 0.2, and 0.1 respectively. In terms of data enhancement, the brightness, saturation, and contrast were randomly adjusted from 0.1 to 0.8. The sampling step size of the depth map is randomly adjusted between 5 and 12, and change the input size from fixed size to random size. The series of strategies they adopted significantly improved RMSE and MAE compared to the paper [33].

4.6 Anonymous

Team Anonymous proposed their method based on a fully convolutional neural network (FCN) which consists of 2 downsampling convolutional layers, 12 residual blocks, and 2 upsampling convolutional layers to produce the final result as shown in Fig. 7. During the training, 25 sequential images and their sparse depth maps from one scene of the provided training dataset will be selected as inputs, then the FCN will predict 25 dense depth maps. The predicted dense depth maps and their ground truth will be used to calculate element-wise training loss consisting of L1 loss, L2 loss, and RMAE loss. Furthermore, RTSD will be calculated with the predicted dense depth maps and regarded as one item of the training loss. During the evaluation, the input RGB image and the sparse depth map will also be concatenated along the channel dimension and sent to the FCN to predict the final dense depth map.

4.7 MainHouse113

As shown in Fig. 8, Team Mainhouse113 uses a multi-scale joint prediction network (MSPNet) that inputs RGB image and sparse depth image, and simultaneously predicts the dense depth image and uncertainty map. They introduce the uncertainty-driven loss to guide network training and leverage a course-to-fine strategy to train the model. Second, they use an uncertainty attention residual learning network (UARNet), which inputs RGB image, sparse depth image and dense depth image from MSPNet, and outputs residual dense depth image. The uncertainty map from MSPNet serves as an attention map when training the UARNet. The final depth prediction is the element-sum of the two dense depth images from the two networks above. To meet the speed requirement, they use depthwise separable convolution instead of ordinary convolution in the first step, which may lose a few effects.

4.8 UCLA Vision Lab

Team UCLA Vision Lab proposed a method that aims to learn a prior on the shapes populating the scene from only sparse points. This is realized as a light-weight encoder-decoder network (ScaffNet) [34] and because it is only conditioned on the sparse points, it generalizes across domains. A second network, also an encoder-decoder network (FusionNet) [31], takes the putative depth map and the RGB colored image as input using separate encoder branches and outputs the residual to refine the prior. Because the two networks are extremely light-weight (13ms per forward pass), they are able to instantiate an ensemble of them to yield robust predictions. Finally, they perform an image-guided bilateral filtering step on the output depth map to smooth out any spurious predictions. The overall model architecture during inference time is shown in Fig. 9.

5 Conclusions

In this paper, we summarized the RGB+ToF Depth Completion challenge in the first Mobile Intelligent Photography and Imaging workshop (MIPI 2022) held in conjunction with ECCV 2022. The participants were provided with a high-quality training/testing dataset, which is now available for researchers to download for future research. We are excited to see the new progress contributed by the submitted solutions in such a short time, which are all described in this paper. The challenge results are reported and analyzed. For future works, there is still plenty room for improvements including dealing with depth outliers/noises, precise depth boundaries, high depth resolution, dark scenes, as well as low latency and low power consumption, etc.

6 Acknowledgements

We thank Shanghai Artificial Intelligence Laboratory, Sony, and Nanyang Technological University to sponsor this MIPI 2022 challenge. We thank all the organizers and all the participants for their great work.

Appendix 0.A Teams and Affiliations

ZoomNeXt Team

Title: Learning An Efficient Multimodal Depth Completion Model

Members:

1Dewang Hou ([email protected]), 2Kai Zhao

Affiliations:

1Peking University, 2Tsinghua University

GAMEON Team

Title: A multi-scale depth completion network with high stability

Members:

1Liying Lu ([email protected]), 2Yu Li,

1Huaijia Lin,

3Ruizheng Wu,

3Jiangbo Lu,

1Jiaya Jia

Affiliations:

1The Chinese University of Hong Kong, 2International Digital Economy Academy (IDEA),

3SmartMore

Singer Team

Title:

Depth Completion Using Laplacian Pyramid-Based Depth Residuals

Members:

Qiang Liu ([email protected]), Haosong Yue, Danyang Cao, Lehang Yu, Jiaxuan Quan, Jixiang Liang

Affiliations:

BeiHang University

NPU-CVR Team

Title:

An efficient residual network for depth completion of sparse

Time-of-Flight depth maps

Members:

Yufei Wang ([email protected]), Yuchao Dai, Peng Yang

Affiliations:

School of Electronics and Information, Northwestern Polytechnical University

JingAM Team

Title:

Depth completion with RGB guidance and

confidence

Members:

Hu Yan ([email protected]), Houbiao Liu, Siyuan Su, Xuanhe Li

Affiliations:

Amlogic, Shanghai, China

Anonymous Team

Title:

Prediction consistency is learning from yourself

MainHouse113 Team

Title:

Uncertainty-based deep learning framework with depthwise separable convolution for depth completion

Members:

Rui Ren ([email protected]), Yunlong Liu, Yufan Zhu

Affiliations:

Xidian University

UCLA Vision Lab Team

Title:

Learning shape priors from synthetic data for depth completion

Members:

1Dong Lao ([email protected]), Alex Wong, 1Katie Chang

Affiliations:

1UCLA, 2Yale University

References

- [1] Baruch, G., Chen, Z., Dehghan, A., Dimry, T., Feigin, Y., Fu, P., Gebauer, T., Joffe, B., Kurz, D., Schwartz, A., et al.: Arkitscenes–a diverse real-world dataset for 3d indoor scene understanding using mobile rgb-d data. arXiv preprint arXiv:2111.08897 (2021)

- [2] Chen, X., Chen, X., Zhang, Y., Fu, X., Zha, Z.J.: Laplacian pyramid neural network for dense continuous-value regression for complex scenes. IEEE Transactions on Neural Networks and Learning Systems 32(11), 5034–5046 (2020)

- [3] Chen, Z., Badrinarayanan, V., Drozdov, G., Rabinovich, A.: Estimating depth from rgb and sparse sensing. In: Proceedings of the European Conference on Computer Vision (ECCV). pp. 167–182 (2018)

- [4] Cheng, X., Wang, P., Guan, C., Yang, R.: Cspn++: Learning context and resource aware convolutional spatial propagation networks for depth completion. In: Proceedings of the AAAI Conference on Artificial Intelligence. vol. 34, pp. 10615–10622 (2020)

- [5] Cheng, X., Wang, P., Yang, R.: Depth estimation via affinity learned with convolutional spatial propagation network. In: Proceedings of the European Conference on Computer Vision (ECCV). pp. 103–119 (2018)

- [6] Cheng, X., Wang, P., Yang, R.: Learning depth with convolutional spatial propagation network. IEEE Transactions on Pattern Analysis and Machine Intelligence 42(10), 2361–2379 (2019)

- [7] Duchon, J.: Splines minimizing rotation-invariant semi-norms in sobolev spaces. In: Constructive Theory of Functions of Several Variables, pp. 85–100. Springer (1977)

- [8] Eldesokey, A., Felsberg, M., Holmquist, K., Persson, M.: Uncertainty-aware cnns for depth completion: Uncertainty from beginning to end. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 12014–12023 (2020)

- [9] Eldesokey, A., Felsberg, M., Khan, F.S.: Confidence propagation through cnns for guided sparse depth regression. IEEE Transactions on Pattern Analysis and Machine Intelligence 42(10), 2423–2436 (2019)

- [10] Howard, A.G., Zhu, M., Chen, B., Kalenichenko, D., Wang, W., Weyand, T., Andreetto, M., Adam, H.: Mobilenets: Efficient convolutional neural networks for mobile vision applications. arXiv preprint arXiv:1704.04861 (2017)

- [11] Hu, J., Bao, C., Ozay, M., Fan, C., Gao, Q., Liu, H., Lam, T.L.: Deep depth completion: A survey. arXiv preprint arXiv:2205.05335 (2022)

- [12] Hu, M., Wang, S., Li, B., Ning, S., Fan, L., Gong, X.: Penet: Towards precise and efficient image guided depth completion. In: 2021 IEEE International Conference on Robotics and Automation (ICRA). pp. 13656–13662. IEEE (2021)

- [13] Imran, S., Long, Y., Liu, X., Morris, D.: Depth coefficients for depth completion. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 12438–12447. IEEE (2019)

- [14] Jeon, J., Lee, S.: Reconstruction-based pairwise depth dataset for depth image enhancement using cnn. In: Proceedings of the European Conference on Computer Vision (ECCV). pp. 422–438 (2018)

- [15] Lee, B.U., Lee, K., Kweon, I.S.: Depth completion using plane-residual representation. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 13916–13925 (2021)

- [16] Li, A., Yuan, Z., Ling, Y., Chi, W., Zhang, C., et al.: A multi-scale guided cascade hourglass network for depth completion. In: Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision. pp. 32–40 (2020)

- [17] Lin, Y., Cheng, T., Zhong, Q., Zhou, W., Yang, H.: Dynamic spatial propagation network for depth completion. arXiv preprint arXiv:2202.09769 (2022)

- [18] Liu, Q., Yue, H., Lyu, Z., Wang, W., Liu, Z., Chen, W.: Sehlnet: Separate estimation of high-and low-frequency components for depth completion. In: 2022 International Conference on Robotics and Automation (ICRA). pp. 668–674. IEEE (2022)

- [19] López-Randulfe, J., Veiga, C., Rodríguez-Andina, J.J., Farina, J.: A quantitative method for selecting denoising filters, based on a new edge-sensitive metric. In: 2017 IEEE International Conference on Industrial Technology (ICIT). pp. 974–979. IEEE (2017)

- [20] Lopez-Rodriguez, A., Busam, B., Mikolajczyk, K.: Project to adapt: Domain adaptation for depth completion from noisy and sparse sensor data. In: Proceedings of the Asian Conference on Computer Vision (2020)

- [21] Luetzenburg, G., Kroon, A., Bjørk, A.A.: Evaluation of the apple iphone 12 pro lidar for an application in geosciences. Scientific Reports 11(1), 1–9 (2021)

- [22] Ma, F., Cavalheiro, G.V., Karaman, S.: Self-supervised sparse-to-dense: Self-supervised depth completion from lidar and monocular camera. In: 2019 International Conference on Robotics and Automation (ICRA). pp. 3288–3295. IEEE (2019)

- [23] McCormac, J., Handa, A., Leutenegger, S., Davison, A.J.: Scenenet rgb-d: Can 5m synthetic images beat generic imagenet pre-training on indoor segmentation? In: Proceedings of the IEEE International Conference on Computer Vision. pp. 2678–2687 (2017)

- [24] Park, J., Joo, K., Hu, Z., Liu, C.K., So Kweon, I.: Non-local spatial propagation network for depth completion. In: Proceedings of the European Conference on Computer Vision. pp. 120–136. Springer (2020)

- [25] Qu, C., Nguyen, T., Taylor, C.: Depth completion via deep basis fitting. In: Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision. pp. 71–80 (2020)

- [26] Ranftl, R., Lasinger, K., Hafner, D., Schindler, K., Koltun, V.: Towards robust monocular depth estimation: Mixing datasets for zero-shot cross-dataset transfer. IEEE Transactions on Pattern Analysis and Machine Intelligence (2020)

- [27] Shi, W., Caballero, J., Huszár, F., Totz, J., Aitken, A.P., Bishop, R., Rueckert, D., Wang, Z.: Real-time single image and video super-resolution using an efficient sub-pixel convolutional neural network. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. pp. 1874–1883 (2016)

- [28] Silberman, N., Hoiem, D., Kohli, P., Fergus, R.: Indoor segmentation and support inference from rgbd images. In: Proceedings of the European Conference on Computer Vision. pp. 746–760. Springer (2012)

- [29] Song, M., Lim, S., Kim, W.: Monocular depth estimation using laplacian pyramid-based depth residuals. IEEE Transactions on Circuits and Systems for Video Technology 31(11), 4381–4393 (2021)

- [30] Sun, P., Kretzschmar, H., Dotiwalla, X., Chouard, A., Patnaik, V., Tsui, P., Guo, J., Zhou, Y., Chai, Y., Caine, B., et al.: Scalability in perception for autonomous driving: Waymo open dataset. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 2446–2454 (2020)

- [31] Tang, J., Tian, F.P., Feng, W., Li, J., Tan, P.: Learning guided convolutional network for depth completion. IEEE Transactions on Image Processing 30, 1116–1129 (2020)

- [32] Uhrig, J., Schneider, N., Schneider, L., Franke, U., Brox, T., Geiger, A.: Sparsity invariant cnns. In: International Conference on 3D Vision (3DV) (2017)

- [33] Van Gansbeke, W., Neven, D., De Brabandere, B., Van Gool, L.: Sparse and noisy lidar completion with rgb guidance and uncertainty. In: 2019 16th International Conference on Machine Vision Applications (MVA). pp. 1–6. IEEE (2019)

- [34] Wong, A., Cicek, S., Soatto, S.: Learning topology from synthetic data for unsupervised depth completion. IEEE Robotics and Automation Letters 6(2), 1495–1502 (2021)