Minimizing Moments of AoI for Both Active and Passive Users through Second-Order Analysis

Abstract

In this paper, we address the optimization problem of moments of Age of Information (AoI) for active and passive users in a random access network. In this network, active users broadcast sensing data while passive users only receive signals. Collisions occur when multiple active users transmit simultaneously, and passive users are unable to receive signals while any active user is transmitting. Each active user follows a Markov process for their transmissions. We aim to minimize the weighted sum of any moments of AoI for both active and passive users in this network. To achieve this, we employ a second-order analysis to analyze the system. Specifically, we characterize an active user’s transmission Markov process by its mean and temporal process. We show that any moment of the AoI can be expressed a function of the mean and temporal variance, which, in turn, enables us to derive the optimal transmission Markov process. Our simulation results demonstrate that this proposed strategy outperforms other baseline policies that use different active user transmission models.

I Introduction

Recently, there has been a significant increase in the use of time-sensitive applications such as Internet of Things, vehicular networks, sensor networks, and drone systems for surveillance and reconnaissance. While traditional research has focused on optimizing the reliability and throughput of communication, these efforts often fall short in meeting the demands for fresh data. To address this, a performance metric known as age-of-information (AoI) was introduced [1] and has received increasing attention in recent literature [2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32].

Previous studies on AoI have commonly considered centralized control over transmission decisions [8, 9, 7, 10, 11, 12, 13] and queuing problems without transmission collisions [15, 18, 16, 19, 17]. However, centralized policies and collisions can lead to large overhead or extra communication, which can be a significant drawback in delay-sensitive applications such as the Internet of Things and transmission-sensitive applications like satellite communications.

To address this issue, we consider a random access network, where active users broadcast sensed data and multiple transmissions at the same time can cause collisions. Since there are no acknowledgements in broadcast, active users do not have any feedback information on collisions. This makes recent feedback-based algorithms for AoI optimization [22, 23, 24, 25, 21, 26] inapplicable.

Additionally, we consider that the network has silent observers, referred to as passive users, who share the same channel as active users. Passive users aim to observe out-of-network radio activities in the same channel but do not make any transmissions. Examples of passive users include sensors detecting malicious jammers, receivers of satellite communications, and radio telescopes tracking celestial objects. The coexistence of passive and active users can improve channel utilization, as passive users can obtain valuable observations when no active user is transmitting.

As AoI depends on previous successful transmissions, memoryless random access algorithms, such as slotted ALOHA, are not suitable for optimizing AoI. Therefore, we consider that each active user follows a Markov process to determine its transmission activities. Furthermore, instead of only considering the mean of AoI, we consider the moments of AoI, which can offer insights into the variance and the maximum value of AoI.

The goal of this paper is to minimize the weighted sum of any moment of AoI for both active users and passive users. There are several challenges that need to be addressed. First, since the transmission strategy of an active user is Markovian instead of i.i.d., the temporal correlation of its transmission activities needs to be explicitly taken into account. Second, active users and passive users have different behavior, which leads to different definitions of AoI between the two types of users. Third, most existing studies on moments of AoI, such as [17, 18, 19], focus only on simple queueing systems and are not applicable to random access networks. Finally, most existing studies on AoI in random access networks only focus on the first moment of AoI and can only obtain the optimal solution in the asymptotic sense.

To address above challenges and solve the optimization problem, we propose to investigate the system behavior through a second-order analysis. We analyze the mean and temporal variance of delivery process to characterize the system. Then, we formulate the expressions of moments of AoI based on these mean and temporal variance, and then further formulate them as functions of state change probabilities in Markov process. By revealing special properties in expressions of moments of AoI for both active and passive users, we find that the optimal action for an active user, under some minor conditions, is to become silent immediately after one attempt of transmission. The simulation results show that our formulation of moments of AoI is valid and our solution outperforms other baseline algorithms that have different active user activity models.

The rest of the paper is organized as follows. Section II introduces our system model and the optimization problem. Section III formulates expressions of moments of AoI based for both active and passive users based on a second-order model. Section IV solves the optimal transmission strategies. Some simulation results are presented in Section V. Finally, Section VI concludes the paper.

II System setting

We consider a wireless system with one channel and two types of users, active users and passive users. Each active user monitors a particular field of interest and uses the wireless channel to transmit its surveillance information to its neighboring receivers. Passive users do not make any wireless transmissions and instead monitor the radio activities in the wireless channel. For example, in a battlefield scenario, an active user can be a surveillance drone that monitors a certain area in the battlefield and broadcasts its video feed to all nearby units. A passive user can be a signal detector that aims to identify and locate enemy jammers or communication devices operating in the same channel.

Next, we discuss the interference relationship among users. We assume that active users are grouped into clusters and each cluster has () active users. Active users in the same cluster interfere with each other but do not suffer from interference from other clusters. An active user can successfully deliver a packet if it is the only device in the cluster that is transmitting. If multiple active users in the same cluster transmit at the same time, then a collision occurs and none of the packets are received. On the other hand, since passive users need to detect enemy devices that are potentially far away, we assume that a passive user is interfered by all active users and it can only detect radio activities if no active user is transmitting.

The performance of each user is determined by its associated AoI. We assume that time is slotted and the duration of one time slot is the time needed to transmit one packet. Each active user generates a new surveillance packet in each slot. Hence, the AoI of an active user at time is defined by following recursion:

Since passive users can only detect radio activities when no active users are transmitting, the AoI of passive user at time is defined by

We now discuss the transmission strategy of each active user. We assume that the strategy of an active user is governed by a Markov process with two states, a TX state and an Idle state. An active user will transmit a packet at time if and only if it is in the TX state. If an active user is in the TX state at time , then its state will change to Idle at time with probability . If an active user is in the Idle state at time , then its state will change to TX at time with probability . Fig. 1 presents this Markov process. We note that this strategy generalizes the ALOHA network. In particular, if , then an active user will transmit in each time slot with probability independently.

We aim to minimize the moments of AoI for both active users and passive users. Specifically, let be the random variable of an active user’s AoI and let be the random variable of a passive user’s AoI in steady state. For a fixed integer and a fixed real number , we aim to find and that solve the following optimization problem:

| (1) | ||||

| s.t. | (2) |

where is the expectation function.

III The Second Order Model for AoI

A key challenge in solving the optimization problem (1)-(2) is that it is hard to express and as functions of and . We propose to adopt the framework of second-order network optimization in Guo et al. [30] to address this challenge. The framework in [30] is only applicable to centrally scheduled systems and the mean of AoI. In this section, we will extend this framework to model random access networks and moments of AoI.

We first define the second-order model that describes the performance of both active and passive users. For an active user , Let be the indicator function that successfully delivers a packet at time , that is, is the only active user in its cluster that transmits at time . We call the delivery process of . Due to symmetry, the delivery processes of all active users follow the same distribution. We then define the mean and temporal variance of the delivery process of an active user by

respectively, where the expectation is taken over all sample paths.

For a passive user , we let be the indicator function that no active user transmits, and hence the passive user can monitor radio activities without interference, at time . We call the passive detection process. The mean and temporal variance of the passive detection process are defined as and , respectively.

The second-order model uses the means and temporal variances to capture and approximate all aspects of the system. To show its utility, we first derive the closed form expressions of , , , and . Let and , we have the following theorem:

Theorem 1.

Proof.

See Appendix -A. ∎

Next, we show that the moments of AoI of both active users and passive users can be approximated as functions of and , respectively.

Due to space limitation, we focus on discussing the derivation of moments of AoI of active users.

Let be the -th time that an active user successfully deliveries its packet, and define . For active user , its AoI from to is from to . So, the summation of -th moment of AoI from to is . Let be the total number of deliveries for active user . Then, summing over all , we have the sum of all -th moment of AoI for active user , . Next, divided by the whole time and choosing the expectation, we have . By using the Faulhaber’s formula, we have

| (3) |

where is the Bernoulli number.

To approximate , we construct an alternative sequence that have the same mean and temporal variance as . Let be a Brownian motion random process with mean and variance . The sequence is defined as

| (4) |

where . Intuitively, we assume that a packet delivery occurs in the alternative sequence every time increases by one since the last packet delivery in this sequence. Hence, is close to , and the process has mean and temporal variance .

Let , where is the -th time that . Since has the same mean and temporal variance as , we propose to approximate by . Moreover, can be regarded as the time needed for to increase by , which is equivalent to the first-hitting time for the Brownian motion random process with level . Thus, follows the inverse Gaussian distribution ([33, 34]). By using the moment generating function of inverse Gaussian distribution in [35], we have .

For a passive user , let be the -th time that and . By combining the derivations above, we obtain the following:

Theorem 2.

Let be the Bernoulli number, then

| (5) |

and

| (6) |

where and .

For example, we have and .

IV AoI Optimization in Second Order Model

In the previous section, we approximate and as functions of and . In this section, we further study the optimal behavior of active users and solve the optimal and . We find the interesting property that, when , the optimal solution requires an active user to the Idle state every time it makes a transmission, i.e., . This result is formally stated below.

Theorem 3.

When , there exists a such that choosing and minimizes .

In addition to showing the interesting behavior that, under the optimal solution, an active user will never transmit in two consecutive slots, this theorem also simplifies the search for the optimal solution. In particular, it shows that one only needs to find the optimal , which can be done through a simple line search, in order to obtain the optimal solution.

Recall that and , or, equivalently, and . Before proving Theorem 3, we first establish some properties about the optimal and . We start by the following lemma:

Lemma 1.

For any positive integer , is an increasing function of and , and is an increasing function of and .

Proof.

For active users, we note that each term in (5) is a constant multiplying , where . For , we have

| (7) |

whose -th term is . Since , this -th term in (7) is an increasing function of and . Thus, is an increasing function of and , for any . Therefore, is an increasing function of and .

Similarly, is an increasing function of and . ∎

Based on Lemma 1, we further have

Lemma 2.

For any , The optimal that minimizes is in range .

Sketch of proof.

Recall and . When , it is easy to verify that and decreases as increases.

In Appendix -B, we further show that, when , and .

Therefore, when , (, , , all increase as increases. Since and are increasing functions of and respectively, the optimal is less than or equal to . ∎

Next, we analyze the optimal . The lemma below derives the optimal when is given and fixed.

Lemma 3.

When is fixed, setting , or, equivalently, setting and , minimizes and , where and are the smallest positive roots of

respectively.

Sketch of proof.

When is determined, and are determined. Hence, by Theorem 2, and are increasing functions of and respectively.

In Appendix -C, we further establish that when , and when . So, when , minimum will minimize and . In Appendix -C, we also show that , and hence the minimum is achieved when and .

Therefore, when is fixed, choosing and minimizes and . ∎

A limitation of Lemma 3 is that it requires . In the following lemma, we establish lower bounds of and .

Lemma 4.

when , and when .

Sketch of proof.

In Appendix -D, we prove that and both have only one root in .

We further show that when , and when , in Appendix -D. Since and , we have when , and when . ∎

We are now ready to prove Theorem 3.

V Simulation Results

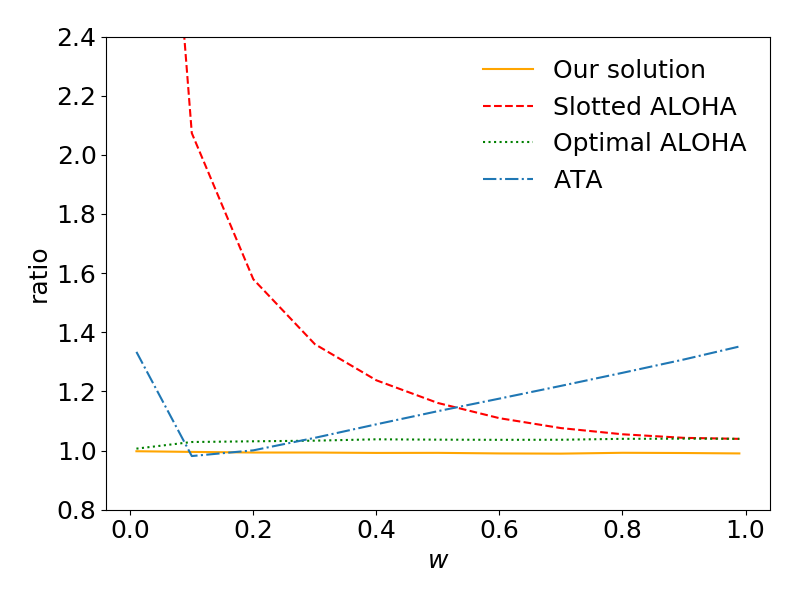

This section presents simulation results for applying our solution of and . The performance function is the optimization objective .

The results of our solution are compared with three other baseline policies. We provide descriptions of all four policies, along with necessary modifications to fit the simulation setting.

- •

-

•

Slotted ALOHA: When , our active user model is reduced to be slotted ALOHA, which is a famous and commonly used model for transmissions with no acknowledgment. According to solutions in [5], we choose and .

-

•

Optimal ALOHA: After introducing passive users, the optimal in slotted ALOHA is no longer . Thus, we simulate all possible with precision , and then choose the best to obtain optimal slotted ALOHA. It should be noticed that optimal ALOHA is impossible to implement in real world since its is determined after the whole process is finished.

-

•

Age Threshold ALOHA (ATA): Age threshold ALOHA is proposed by Yavascan and Uysal [24], which is a slotted ALOHA algorithm with an age threshold. If the AoI of one active user is larger than a threshold, this active user will follow slotted ALOHA transmission with probability . Otherwise, this active user will stay silent. Since there is no acknowledgment in the system, we let active users assume their transmissions are always successful. As suggested in [24], and the age threshold is .

We simulate different scenarios with varying values of , where ranges from to . We set , or , and or . Once , and are determined, we can set the values of and based on policy designs. The system is then simulated for individual runs, each with time slots. The final results are the average of these runs. We present the value of for all four algorithms under each setting. Simulation results are shown in Fig. 2. As some values are extremely large, only results that have ratio below are shown in figures.

The results show that the average mismatches between theoretical and actual of our solution are about when and when . Furthermore, we notice the actual value is always lower than theoretical value. These results indicate that our approximation is useful in practice.

Comparing the performance of different algorithms in Figure 2a and Figure 2b, we can observe that our strategy outperforms other three policies in all settings. Even when compared to the optimal ALOHA, which is not practical in real-world scenarios, our strategy shows a reduction when and a reduction when . Additionally, the increase in the reduction of our strategy between and indicates that our strategy can better optimize the variance of AoI.

VI Conclusion

This paper studies an optimization problem of moments of AoI for both active and passive users in a random access network. In this network, active users broadcast their transmissions following a Markov process and will interfere with each other. By applying a second-order model, we formulate moments of AoI as functions of state change probabilities in the Markov process. Next, we reveal the special properties of these functions and optimize moments of AoI for both active and passive users. The optimal solution indicates that the best strategy for active users is to become silent immediately after one transmission. Simulations show that this transmission strategy outperforms three baseline algorithms under various settings.

Acknowledgment

This material is based upon work supported in part by NSF under Award Number ECCS-2127721, in part by ARL and ARO under Grant Number W911NF-22-1-0151, and in part by ONR under Contract N00014-21-1-2385.

References

- [1] S. Kaul, R. Yates, and M. Gruteser, “Real-time status: How often should one update?,” in 2012 Proceedings IEEE INFOCOM, pp. 2731–2735, IEEE, 2012.

- [2] L. Huang and E. Modiano, “Optimizing age-of-information in a multi-class queueing system,” in 2015 IEEE international symposium on information theory (ISIT), pp. 1681–1685, IEEE, 2015.

- [3] K. Chen and L. Huang, “Age-of-information in the presence of error,” in 2016 IEEE International Symposium on Information Theory (ISIT), pp. 2579–2583, IEEE, 2016.

- [4] A. Kosta, N. Pappas, A. Ephremides, and V. Angelakis, “Age and value of information: Non-linear age case,” in 2017 IEEE International Symposium on Information Theory (ISIT), pp. 326–330, IEEE, 2017.

- [5] R. D. Yates and S. K. Kaul, “Status updates over unreliable multiaccess channels,” in 2017 IEEE International Symposium on Information Theory (ISIT), pp. 331–335, IEEE, 2017.

- [6] Y.-P. Hsu, E. Modiano, and L. Duan, “Age of information: Design and analysis of optimal scheduling algorithms,” in 2017 IEEE International Symposium on Information Theory (ISIT), pp. 561–565, IEEE, 2017.

- [7] I. Kadota, A. Sinha, E. Uysal-Biyikoglu, R. Singh, and E. Modiano, “Scheduling policies for minimizing age of information in broadcast wireless networks,” IEEE/ACM Transactions on Networking, vol. 26, no. 6, pp. 2637–2650, 2018.

- [8] R. D. Yates, “Status updates through networks of parallel servers,” in 2018 IEEE International Symposium on Information Theory (ISIT), pp. 2281–2285, IEEE, 2018.

- [9] Y. Sun, E. Uysal-Biyikoglu, and S. Kompella, “Age-optimal updates of multiple information flows,” in IEEE INFOCOM 2018-IEEE Conference on Computer Communications Workshops (INFOCOM WKSHPS), pp. 136–141, IEEE, 2018.

- [10] J. Sun, Z. Jiang, B. Krishnamachari, S. Zhou, and Z. Niu, “Closed-form whittle’s index-enabled random access for timely status update,” IEEE Transactions on Communications, vol. 68, no. 3, pp. 1538–1551, 2019.

- [11] A. Maatouk, S. Kriouile, M. Assaad, and A. Ephremides, “Asymptotically optimal scheduling policy for minimizing the age of information,” in 2020 IEEE International Symposium on Information Theory (ISIT), pp. 1747–1752, IEEE, 2020.

- [12] P. Zou, O. Ozel, and S. Subramaniam, “Optimizing information freshness through computation–transmission tradeoff and queue management in edge computing,” IEEE/ACM Transactions on Networking, vol. 29, no. 2, pp. 949–963, 2021.

- [13] A. Jaiswal and A. Chattopadhyay, “Minimization of age-of-information in remote sensing with energy harvesting,” in 2021 IEEE International Symposium on Information Theory (ISIT), pp. 3249–3254, IEEE, 2021.

- [14] R. D. Yates and S. K. Kaul, “Age of information in uncoordinated unslotted updating,” in 2020 IEEE International Symposium on Information Theory (ISIT), pp. 1759–1764, IEEE, 2020.

- [15] M. Moltafet, M. Leinonen, and M. Codreanu, “Average age of information for a multi-source m/m/1 queueing model with packet management,” in 2020 IEEE International Symposium on Information Theory (ISIT), pp. 1765–1769, IEEE, 2020.

- [16] C.-C. Wang, “How useful is delayed feedback in aoi minimization—a study on systems with queues in both forward and backward directions,” in 2022 IEEE International Symposium on Information Theory (ISIT), pp. 3192–3197, IEEE, 2022.

- [17] Y. Inoue, H. Masuyama, T. Takine, and T. Tanaka, “The stationary distribution of the age of information in fcfs single-server queues,” in 2017 IEEE International Symposium on Information Theory (ISIT), pp. 571–575, IEEE, 2017.

- [18] M. Moltafet, M. Leinonen, and M. Codreanu, “Aoi in source-aware preemptive m/g/1/1 queueing systems: Moment generating function,” in 2022 IEEE International Symposium on Information Theory (ISIT), pp. 139–143, IEEE, 2022.

- [19] M. Moltafet, M. Leinonen, and M. Codreanu, “Moment generating function of age of information in multisource m/g/1/1 queueing systems,” IEEE Transactions on Communications, vol. 70, no. 10, pp. 6503–6516, 2022.

- [20] S. Banerjee, R. Bhattacharjee, and A. Sinha, “Fundamental limits of age-of-information in stationary and non-stationary environments,” in 2020 IEEE International Symposium on Information Theory (ISIT), pp. 1741–1746, IEEE, 2020.

- [21] J. He, D. Zhang, S. Liu, Y. Zhou, and Y. Zhang, “Decentralized updates scheduling for data freshness in mobile edge computing,” in 2022 IEEE International Symposium on Information Theory (ISIT), pp. 3186–3191, IEEE, 2022.

- [22] X. Chen, K. Gatsis, H. Hassani, and S. S. Bidokhti, “Age of information in random access channels,” in 2020 IEEE International Symposium on Information Theory (ISIT), pp. 1770–1775, 2020.

- [23] H. Pan, T.-T. Chan, J. Li, and V. C. Leung, “Age of information with collision-resolution random access,” IEEE Transactions on Vehicular Technology, vol. 71, no. 10, pp. 11295–11300, 2022.

- [24] O. T. Yavascan and E. Uysal, “Analysis of slotted aloha with an age threshold,” IEEE Journal on Selected Areas in Communications, vol. 39, no. 5, pp. 1456–1470, 2021.

- [25] A. Munari, “Modern random access: An age of information perspective on irregular repetition slotted aloha,” IEEE Transactions on Communications, vol. 69, no. 6, pp. 3572–3585, 2021.

- [26] K. Saurav and R. Vaze, “Game of ages in a distributed network,” IEEE Journal on Selected Areas in Communications, vol. 39, no. 5, pp. 1240–1249, 2021.

- [27] H. H. Yang, C. Xu, X. Wang, D. Feng, and T. Q. Quek, “Understanding age of information in large-scale wireless networks,” IEEE Transactions on Wireless Communications, vol. 20, no. 5, pp. 3196–3210, 2021.

- [28] S. Kriouile and M. Assaad, “Minimizing the age of incorrect information for real-time tracking of markov remote sources,” in 2021 IEEE International Symposium on Information Theory (ISIT), pp. 2978–2983, IEEE, 2021.

- [29] X. Chen, R. Liu, S. Wang, and S. S. Bidokhti, “Timely broadcasting in erasure networks: Age-rate tradeoffs,” in 2021 IEEE International Symposium on Information Theory (ISIT), pp. 3127–3132, IEEE, 2021.

- [30] D. Guo, K. Nakhleh, I.-H. Hou, S. Kompella, and C. Kam, “A theory of second-order wireless network optimization and its application on aoi,” in IEEE INFOCOM 2022-IEEE Conference on Computer Communications, pp. 999–1008, IEEE, 2022.

- [31] G. Yao, A. Bedewy, and N. B. Shroff, “Age-optimal low-power status update over time-correlated fading channel,” IEEE Transactions on Mobile Computing, 2022.

- [32] A. Maatouk, M. Assaad, and A. Ephremides, “Analysis of an age-dependent stochastic hybrid system,” in 2022 IEEE International Symposium on Information Theory (ISIT), pp. 150–155, IEEE, 2022.

- [33] E. Schrödinger, “Zur theorie der fall-und steigversuche an teilchen mit brownscher bewegung,” Physikalische Zeitschrift, vol. 16, pp. 289–295, 1915.

- [34] J. L. Folks and R. S. Chhikara, “The inverse gaussian distribution and its statistical application—a review,” Journal of the Royal Statistical Society: Series B (Methodological), vol. 40, no. 3, pp. 263–275, 1978.

- [35] K. Krishnamoorthy, Handbook of statistical distributions with applications. Chapman and Hall/CRC, 2006.

-A Proof of Theorem 1

Let be the indicator that the active user is in TX state in time slot .

First, we calculate the mean for active user in cluster and passive user . We have

and .

Then, we derive the temporal variance for active user and passive user . According to the central limit theorem of Markov process, we have

We first calculate . Since is a Bernoulli random variable, it has

Assume the active user is in cluster , we have .

Let and , we have and

can be calculated by

for , and . By , we have

For , we also have

for , and . Solving the recursive equation, we have . Therefore,

Now, we calculate . Sine is also a Bernoulli random variable in the system, we have

Let , we have and

can be calculated by

for , and . This expression is similar to that of . Thus, solving the recursive equation, we have . Thus,

-B Proof of Lemma 2

First, we show that we only need to consider the case . The partial derivative of with respect to when is fixed is

When , it is easy to verify that the partial derivative is positive. Similarly, we also have the partial derivative of with respect to is positive when . Thus, the optimal is non-positive, and hence we only consider in the following proof.

We first prove when . Based on Theorem 1, we have

Let be the -th term in the above infinite summation. The following part is to prove or .

Based on definition of and , we first have and .

Consider the special case . can only be achieved when . Thus, equals to when is even, and equals to when is odd. In this case, . Thus, for any , and hence .

Now, we discuss the case .

We first consider , which means . In this case, is non-negative for all even , and is possible negative for odd (when is larger, equation becomes positive).

Consider from to infinite, we compare the value with an even and its following odd . As , we have and . Since , when is even, we have and . When is odd, we have and . Hence, , for all . Let . Based on above results, it is easy to verify , and hence .

The remaining term is and . Summing them, denote , we have . The partial derivative of with respect to is , which is an increasing function of (convex function in ). Choose , when . Choosing , the partial derivative becomes .

If , . Note and . Thus, in this case.

If , the minimum is achieved at smallest and . Since , , and hence , and . Thus, . Taking derivative, we have , which is positive when . The derivative of is . Combined with , the value of is larger than the value of choosing (when or the value of plus the derivative at times (when ). The value at is . When , the value of plus the derivative at times is . Thus, in this case.

As in above all cases, and hence .

Next, we consider . Solving the inequation, we have . Note when , for both odd and even , and hence the summation is nonnegative for these . So, we only need to analyze the case , in which when is odd and when is even. Therefore, we consider . Note in this case.

Recall the function , we have . Note and . The remaining is to compare and . Divide these two terms, we have .

When , we consider the bound and , and hence . By doing the partial derivative with respect to , we have and when . Thus, is a decreasing function of . Taking into this function, we obtain the lower bound, which is a function of only one variable . By doing the partial derivative, it can be verified that it is an increasing function of . Taking , we can verify . Hence for .

When , we should consider both bound for , and , which comes from the inequation condition . For , we separate its domain to be and , where . It can be verified that, when , for every that , using the same strategy as that for and the only change is the upper bound for . When , we analyze the sign of . Since , by doing the partial derivative, the upper bound of is a decreasing function of . Hence, the maximum is achieved when and then , where is a variable only related to . Thus, , which, verified by doing partial derivative, is a decreasing function of . Then, taking , we can verify that for every that . Therefore, when

As for both and , , and hence when .

Since when and when , we have, when ,

Now, we discuss passive users. Based on Theorem 1, we have

Since and for all ,

When , , and hence

When , , and then

Therefore, when ,

-C Proof of Lemma 3

The partial derivative of with respect to when is determined is

Let be the th term in the above equation.

We first show that . Choosing and , we have , and . Therefore, there must exists one root that in for both and . Thus, and hence .

Therefore, , and . So, only when and is even.

The expression of is

When , it is easy to verify that since .

When , we have

Define and as follows:

Then, .

First, we prove . Subtracting from , we have

As is the smallest positive root of and , when . Note .

Now we check whether is positive. The derivative of is and hence it achieves minimum when . Choosing , we have .

Since , and are all non-negative when , we have

Next, we prove .

We know that from binomial approximation. Let . The derivative of is . As when , we have is increasing when . Hence, , which means , when .

Now, we want to find whether is between and . The derivative of is . Thus, when , . Therefore, . As and , we have and when .

Thus, when ,

Then,

When , , and hence

Therefore,

So, when , . Thus,

Hence, and achieves its minimum value when is minimized. Thus, choosing and minimizes .

Since is an increasing function of , choosing and also minimizes .

Next, we consider passive users.

Recall the expression of ,

For this expression, it is easy to verify that . Therefore, reaches its minimum in the region .

The partial derivative of with respect to is

Let be the term of the partial derivative above. When , we can find that when is odd and when is even. Thus, is positive if for all positive integer .

Plugging in the formula of ,

Since and when , we have

Define as follows.

When ,

Note , , and is the smallest positive root of . Thus, if . When , and , and hence

Therefore, if ,

So, and achieves its minimum value when is minimized and . Thus, choosing and minimizes .

-D Proof of Lemma 4

Let us rewrite the expression of .

The derivative of is

Let , we have and . Thus, the roots of are either not existing or all negative. Since there is no root when and , is always negative when . Therefore, is always decreasing when . Considering and , must exist between and , and if and only if , when .

Let , we have

Solving , we obtain the only real root, which is approximately . Since when , we have when .

Therefore, , and hence when .

Now we proof when . Let us rewrite here,

The analysis of depends on the sign of .

When , . There are two roots for , which are and . Note must be when and . Thus, .

When , the derivative of is

Let , we obtain two roots, one is , and the other one is . Look at the term in the square root, . It has one negative and one positive root . Thus, does not have real roots when , and hence is always a decreasing function in this case. Therefore, only has one root, which is . In this case, if and only if .

When , , , , and . Thus, there must exist three roots, one is less than , one is between and , and one is larger than . Then, there is just one root, , between and , and if and only if when .

Therefore, if for a certain , we have when or . Taking in , we have

When ,

Therefore, when .