Minimizing $L_1$ over $L_2$ norms on the gradient

Abstract

In this paper, we study the $L_1/L_2$ minimization on the gradient for imaging applications. Several recent works have demonstrated that $L_1/L_2$ is better than the $L_1$ norm when approximating the $L_0$ norm to promote sparsity. Consequently, we postulate that applying $L_1/L_2$ on the gradient is better than the classic total variation (the $L_1$ norm on the gradient) to enforce the sparsity of the image gradient. To verify our hypothesis, we consider a constrained formulation to reveal empirical evidence on the superiority of $L_1/L_2$ over $L_1$ when recovering piecewise constant signals from low-frequency measurements. Numerically, we design a specific splitting scheme, under which we can prove subsequential and global convergence for the alternating direction method of multipliers (ADMM) under certain conditions. Experimentally, we demonstrate visible improvements of $L_1/L_2$ over $L_1$ and other nonconvex regularizations for image recovery from low-frequency measurements and two medical applications of MRI and CT reconstruction. All the numerical results show the efficiency of our proposed approach.

Keywords: $L_1/L_2$ minimization, piecewise constant images, minimum separation, alternating direction method of multipliers

1 Introduction

Regularization methods play an important role in inverse problems to refine the solution space by prior knowledge and/or special structures. For example, the celebrated total variation (TV) [1] prefers piecewise constant images, while total generalized variation (TGV) [2] and fractional-order TV [3, 4] tend to preserve piecewise smoothness of an image. TV can be defined either isotropically or anisotropically. The anisotropic TV [5] in the discrete setting is equivalent to applying the $L_1$ norm on the image gradient. As the $L_1$ norm is often used to enforce sparsity of a signal, one can interpret the TV regularization as promoting the sparsity of gradient vectors.

To find the sparsest signal, it is straightforward to minimize the $L_0$ norm (counting the number of nonzero elements), which is unfortunately NP-hard [6]. A popular approach involves the convex relaxation of using the $L_1$ norm to replace the ill-posed $L_0$ norm, with the equivalence between $L_1$ and $L_0$ for sparse signal recovery given in terms of the restricted isometry property (RIP) [7]. However, Fan and Li [8] pointed out that the $L_1$ approach is biased towards large coefficients, and proposed to minimize a nonconvex regularization, called smoothly clipped absolute deviation (SCAD). Subsequently, various nonconvex functionals emerged, such as minimax concave penalty (MCP) [9], capped $L_1$ [10, 11, 12], and transformed $L_1$ [13, 14, 15]. Following the literature on sparse signal recovery, there is a trend to apply a nonconvex regularization on the gradient to deal with images. For instance, Chartrand [16] discussed both the $L_p$ norm with $0 < p < 1$ for sparse signals and $L_p$ on the gradient for magnetic resonance imaging (MRI), while MCP on the gradient was proposed in [17].

Recently, a scale-invariant functional $L_1/L_2$ was examined, which gives promising results in recovering sparse signals [18, 19, 20] and sparse gradients [21]. In this paper, we rely on a constrained formulation to characterize some scenarios under which the quotient of the $L_1$ and $L_2$ norms on the gradient performs well. In particular, we borrow the analysis of a super-resolution problem, which refers to recovering a sparse signal from its low-frequency measurements. Candès and Fernandez-Granda [22] proved that if a signal has spikes (locations of nonzero elements) that are sufficiently separated, then the $L_1$ minimization yields an exact recovery for super-resolution. We design a certain type of piecewise constant signals that lead to well-separated spikes after taking the gradient. Using such signals, we empirically demonstrate that the TV minimization can find the desired solution under a similar separation condition as in [22]. We also illustrate that $L_1/L_2$ can deal with less separated spikes in the gradient, and is better at preserving image contrast than $L_1$. This empirical evidence shows that $L_1/L_2$ holds great potential in promoting sparse gradients and preserving image contrast. To the best of our knowledge, this is the first work to relate the exact recovery of gradient-based methods to minimum separation and image contrast in a super-resolution problem.

Numerically, we consider the same splitting scheme used in an unconstrained formulation [21] to minimize the $L_1/L_2$ on the gradient, followed by the alternating direction method of multipliers (ADMM) [23]. We formulate the linear constraint using an indicator function, which is not strongly convex. As a result, the convergence analysis in the unconstrained model [21] is not directly applicable to this problem. We utilize properties of the indicator function as well as the optimality conditions for constrained optimization problems to prove that the sequence generated by the proposed algorithm has a subsequence converging to a stationary point. Under a stronger assumption, we can establish the convergence of the entire sequence, referred to as global convergence.

We present some algorithmic insights on computational efficiency of our proposed algorithm for nonconvex optimization. In particular, we show an additional box constraint not only prevents the solution from being stuck at local minima, but also stabilizes the algorithm. Furthermore, we discuss algorithmic behaviors on two types of applications: MRI and computed tomography (CT). For the MRI reconstruction, a subproblem in ADMM has a closed-form solution, while an iterative solver is required for CT. As the accuracy of the subproblem varies between MRI and CT, we shall alter internal settings of our algorithm accordingly. In summary, this paper relies on a constrained formulation to discuss theoretical and computational aspects of a nonconvex regularization for imaging problems. The major contributions are three-fold:

(i) We reveal empirical evidence towards exact recovery of piecewise constant signals and demonstrate the superiority of $L_1/L_2$ on the gradient over TV.

(ii) We establish the subsequential convergence of the proposed algorithm and explore the global convergence under certain assumptions.

(iii) We conduct extensive experiments to characterize the computational efficiency of our algorithm and discuss how internal settings can be customized to cater to specific imaging applications, such as MRI and limited-angle CT reconstruction. Numerical results highlight the superior performance of our approach over other gradient-based regularizations.

The rest of the paper is organized as follows. Section 2 defines the notation that will be used throughout the paper, and gives a brief review of related works. The empirical evidence for TV’s exact recovery and the advantages of the proposed model are given in Section 3. The numerical scheme is detailed in Section 4, followed by convergence analysis in Section 5. Section 6 presents three types of imaging applications: super-resolution, MRI and CT reconstruction problems. Finally, conclusions and future work are given in Section 7.

2 Preliminaries

We use a bold letter to denote a vector, a capital letter to denote a matrix or linear operator, and a calligraphic letter for a functional space. We use to denote the component-wise multiplication of two vectors. When a function (e.g., sign, max, min) applies to a vector, it returns a vector with corresponding component-wise operation.

We adopt a discrete setting to describe the related models. Suppose a two-dimensional (2D) image is defined on an Cartesian grid. By using a standard linear index, we can represent a 2D image as a vector, i.e., the -th component denotes the intensity value at pixel We define a discrete gradient operator,

| (1) |

where are the finite forward difference operator with periodic boundary condition in the horizontal and vertical directions, respectively. We denote and the Euclidean spaces by , then and . We can apply the standard norms, e.g., on vectors and For example, the norm on the gradient, i.e., , is the anisotropic TV regularization [5]. Throughout the paper, we use TV and “ on the gradient” interchangeably. Note that the isotropic TV [1] is the norm, i.e., although Lou et al. [24] claimed to consider a weighted difference of anisotropic and isotropic TV based on the - functional [25, 26, 27, 28] (isotropic TV is not the norm on the gradient.)
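As an illustration of the discrete setting above, the following minimal sketch computes forward differences with periodic boundary conditions and the resulting anisotropic TV, isotropic TV, and $L_1/L_2$ ratio on the gradient. It assumes the image is stored as a list of rows; the function names (`forward_diff_periodic`, `gradient_norms`, `l1_over_l2`) are hypothetical and chosen only for this example.

```python
import math


def forward_diff_periodic(u):
    """Forward differences of a 2D image (list of rows), periodic boundary.

    Returns (dx, dy): horizontal and vertical differences, same shape as u.
    """
    m, n = len(u), len(u[0])
    dx = [[u[i][(j + 1) % n] - u[i][j] for j in range(n)] for i in range(m)]
    dy = [[u[(i + 1) % m][j] - u[i][j] for j in range(n)] for i in range(m)]
    return dx, dy


def gradient_norms(u):
    """Return (L1 norm, L2 norm, isotropic TV) of the stacked gradient."""
    dx, dy = forward_diff_periodic(u)
    flat = [v for row in dx for v in row] + [v for row in dy for v in row]
    l1 = sum(abs(v) for v in flat)            # anisotropic TV
    l2 = math.sqrt(sum(v * v for v in flat))  # L2 norm of the whole gradient
    # Isotropic TV sums the per-pixel Euclidean length of (dx, dy).
    iso = sum(math.hypot(a, b)
              for row_x, row_y in zip(dx, dy)
              for a, b in zip(row_x, row_y))
    return l1, l2, iso


def l1_over_l2(u):
    """Ratio of the L1 and L2 norms of the gradient (undefined if constant)."""
    l1, l2, _ = gradient_norms(u)
    if l2 == 0.0:
        raise ValueError("constant image: the L2 norm of the gradient is zero")
    return l1 / l2
```

Multiplying the image by a nonzero constant leaves `l1_over_l2` unchanged, which is the scale invariance referred to in the introduction; the anisotropic and isotropic TV values, in contrast, scale with the image.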

We examine the penalty on the gradient in a constrained formulation,

| (2) |

One way to solve for (2) involves the following equivalent form

| (3) |

with two auxiliary variables and . For more details, please refer to [18] that presented a proof-of-concept example for MRI reconstruction. Since the splitting scheme (3) involves two block variables of and , the existing ADMM convergence results [29, 30, 31] are not applicable. An alternative approach was discussed in our preliminary work [21] for an unconstrained minimization problem,

| (4) |

where is a weighting parameter. By only introducing one variable the new splitting scheme (4) can guarantee the ADMM framework with subsequential convergence.

In this paper, we incorporate the splitting scheme (4) to solve the constrained problem (2), which is crucial to reveal theoretical properties of the gradient-based regularizations for image reconstruction, as elaborated in Section 3. Another contribution of this work lies in the convergence analysis, specifically for different optimality conditions of the constrained problem, as opposed to the unconstrained formulation presented in [21]. Admittedly, the constrained formulation limits our experimental design to a noise-free setting, but it helps us to draw conclusions solely on the model, ruling out the influence of other nuisances such as noise and tuning parameters. Our model (2) is parameter-free, while there is a parameter in the unconstrained problem (4).

3 Empirical studies

We aim to demonstrate the superiority of $L_1/L_2$ on the gradient over TV for a super-resolution problem [32], in which a sparse vector can be exactly recovered via the $L_1$ minimization. A mathematical model for super-resolution is expressed as

| (5) |

where is the imaginary unit, is a vector to be recovered, and consists of the given low frequency measurements with . Recovering from is referred to as super-resolution in the sense that the underlying signal is defined on a fine grid with spacing , while a direct inversion of frequency data yields a signal defined on a coarser grid with spacing . For simplicity, we use matrix notation to rewrite (5) as , where is a sampling matrix that collects the required low frequencies and is the Fourier transform matrix. A sparse signal can be represented by where is the -th canonical basis in , is the support set of , and are coefficients. Following the work of [32], the sparse spikes are required to be sufficiently separated to guarantee the exact recovery of the minimization. To make the paper self-contained, we provide the definition of minimum separation in Definition 1 and an exact recovery condition in Theorem 1.

Definition 1.

(Minimum Separation [32]) For an index set , the minimum separation (MS) of is defined as the closest wrap-around distance between any two elements from ,

| (6) |
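A minimal sketch of how the minimum separation of Definition 1 could be computed is given below. It assumes that the wrap-around distance between two indices on a grid of length `n` is the smaller of their direct distance and `n` minus that distance; the function name `minimum_separation` is hypothetical.

```python
def minimum_separation(support, n):
    """Closest wrap-around distance between any two indices in `support`.

    `support` holds integer indices on a periodic grid of length `n`.
    The minimum over all pairs is attained by two cyclically adjacent
    indices, so it suffices to scan the sorted gaps plus the wrap-around gap.
    """
    idx = sorted(set(support))
    if len(idx) < 2:
        raise ValueError("minimum separation needs at least two indices")
    gaps = [b - a for a, b in zip(idx, idx[1:])]
    gaps.append(idx[0] + n - idx[-1])  # gap that wraps around the boundary
    return min(gaps)
```

For two spikes at indices 10 and 90 on a grid of length 100, the direct distance is 80 but the wrap-around distance is 20, so the function returns 20.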

Theorem 1.

[32, Corollary 1.4] Let be the support of . If the minimum separation of obeys

| (7) |

then is the unique solution to the constrained minimization problem,

| (8) |

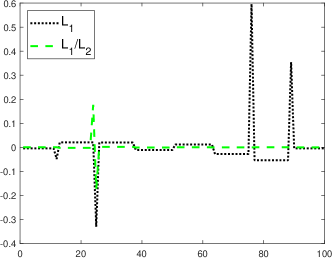

We empirically extend the analysis from sparse signals to sparse gradients. For this purpose, we construct a one-bar step function of length 100 with the first and the last elements taking value 0, and the remaining elements equal to 1, as illustrated in Figure 1. The gradient of such signal is 2-sparse with MS to be due to wrap-around distance. By setting we only take low frequency measurements, and reconstruct the signal by minimizing either or on the gradient. For simplicity, we adopt the CVX MATLAB toolbox [33] for solving the TV model,

| (9) |

where we use to be consistent with our setting (2). Note that the TV model (9) is parameter free, while we need to tune an algorithmic parameter for . Please refer to Section 4 for more details on the minimization, in which one subproblem can be solved by CVX, and Section 6.1 for sensitivity analysis on this parameter.
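The data generation for this experiment can be sketched as follows. The block builds a one-bar step signal, locates the spikes of its periodic gradient, and evaluates the low-frequency Fourier coefficients with indices between `-fc` and `fc`. The helper names and the parameter `s` (the number of zero entries at each end) are hypothetical, and the `1/sqrt(N)` normalization is an assumption, since (5) is not reproduced here.

```python
import cmath
import math


def one_bar(n, s):
    """Step signal of length n: zero on the first and last s entries, one elsewhere."""
    return [0.0 if (t < s or t >= n - s) else 1.0 for t in range(n)]


def gradient_support(x):
    """Indices where the periodic forward difference of x is nonzero."""
    n = len(x)
    return [t for t in range(n) if x[(t + 1) % n] != x[t]]


def low_freq_measurements(x, fc):
    """Fourier coefficients of x for frequencies k = -fc, ..., fc.

    Uses a direct O(n * fc) sum with an assumed 1/sqrt(n) normalization;
    returns a dict mapping each k to a complex coefficient.
    """
    n = len(x)
    scale = 1.0 / math.sqrt(n)
    return {
        k: scale * sum(x[t] * cmath.exp(-2j * math.pi * k * t / n)
                       for t in range(n))
        for k in range(-fc, fc + 1)
    }
```

With `fc = 4` this yields `2 * fc + 1 = 9` measurements, matching the count of low-frequency measurements quoted later in this section. For `one_bar(100, 10)` the gradient has two spikes, at indices 9 and 89, whose wrap-around distance is 20.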


By varying the value of that changes MS of the spikes in gradient, we compute the relative errors between the reconstructed solutions and the ground-truth signals. If we define an exact recovery as having a relative error smaller than , we observe in Figure 1 that the exact recovery by $L_1$ occurs at which implies that MS is larger than or equal to . This phenomenon suggests that Theorem 1 might hold for sparse gradients by replacing the $L_1$ norm with the total variation. Figure 1 also shows the exact recovery by $L_1/L_2$ at meaning that $L_1/L_2$ can deal with less separated spikes than $L_1$. Moreover, we further study the reconstruction results at where both models fail to find the true sparse signal. The restored solutions by these two models as well as the difference plots between the restored and ground-truth signals are displayed in Figure 2, showing that our ratio model has smaller relative errors than $L_1$.


Figure 2 illustrates that the TV solution cannot reach the top of the bar in the ground truth, which is referred to as loss-of-contrast. Motivated by this well-known drawback of TV, we postulate that the signal contrast may affect the performance of $L_1$ and $L_1/L_2$. To verify this, we examine a two-bar step function, in which the contrast varies by the relative heights of the two bars. Following MATLAB’s notation, we set and the value of remaining elements uniformly as ; see Figure 3 for a general setting. We fix , and vary the value of to generate signals with different intensity contrasts. Considering four spikes in the gradient, we set or equivalently 9 low-frequency measurements to reconstruct the signal. The reconstruction errors are plotted in Figure 3, which shows that $L_1$ fails in all the cases, and $L_1/L_2$ can find the signals except for . We further examine a particular case of in Figure 4, where both models fail to get an exact recovery, but $L_1/L_2$ yields smaller oscillations than $L_1$ near the edges. Figures 3 and 4 demonstrate that $L_1/L_2$ is better at preserving image contrast than $L_1$.

We verify that all the solutions of $L_1$ and $L_1/L_2$ satisfy the linear constraint with high accuracy thanks to CVX. We further investigate the cases in which the $L_1$ approach fails, and discover that it yields a solution that has a smaller $L_1$ norm than the ground truth, which implies that $L_1$ is not sufficient to enforce gradient sparsity. On the other hand, $L_1/L_2$ solutions often have a higher objective value than the ground truth, which calls for a better algorithm that can potentially find the ground truth. We also want to point out that $L_1/L_2$ solutions depend on initial conditions. In Figure 1 and Figure 3, we present the smallest relative errors among 10 random vectors used as initial values.

The minimum separation distance in 1D (Definition 1) can be naturally extended to 2D. In fact, there are two types of minimum separation definitions in 2D: one uses the $L_\infty$ norm to measure the distance [32], while another definition is called Rayleigh regularity [34, 35]. The exact recovery for 2D sparse vectors was characterized in [35] with the additional restriction of positive signals. Both distance definitions were empirically examined in [12] for point-source super-resolution. When extending to 2D sparse gradients, one can compute the gradient norm at each pixel, and the separation distance can be defined as the distance between any two locations with non-zero gradient norm. To the best of our knowledge, there is no analysis on the exact recovery of sparse gradients, whether in 1D or 2D. In this section, we provide some empirical evidence showing a relationship between sparse gradient recovery and minimum separation similar to Theorem 1, which calls for a theoretical justification in the future. Once the extension from 1D sparse vectors to 1D sparse gradients is established, it is expected that the analysis can be applied to sparse gradients in 2D to facilitate theoretical analysis in imaging applications.

4 The proposed approach

Starting from (2), we incorporate an additional box constraint in the model, i.e.,

| (10) |

The notation means that every element of is bounded by The box constraint is reasonable for image processing applications [36, 37], since pixel values are usually bounded by or . On the other hand, the box constraint is particularly helpful for the model to prevent its divergence [19].

We use the indicator function to rewrite (10) into the following equivalent form

| (11) |

where denotes the indicator function that forces to belong to a feasible set , i.e.,

| (12) |

The augmented Lagrangian function corresponding to (11) can be expressed as,

| (13) |

where is a dual variable and is a positive parameter. Then ADMM iterates as follows,

| (14) |

The update for is the same as in [18], which has a closed-form solution of

| (15) |

where is a random vector with the norm being and for

We elaborate on the -subproblem in (14), which can be expressed by the box constraint, i.e.,

| (16) |

To solve for (16), we introduce two variables, for the box constraint and for the gradient, thus getting

| (17) |

The augmented Lagrangian function corresponding to (17) becomes

| (18) |

where are dual variables and are positive parameters. Here we have in the superscript of to indicate that it is the Lagrangian for the -subproblem in (14) at the -th iteration. The ADMM framework to minimize (17) leads to

| (19) |

where the subscript represents the inner loop index, as opposed to the superscript for outer iterations in (14). By taking derivative of with respect to , we obtain a closed-form solution given by

| (20) |

where stands for the identity matrix. When the matrix involves frequency measurements, e.g. in super-resolution and MRI reconstruction, the update in (20) can be implemented efficiently by the fast Fourier transform (FFT) for periodic boundary conditions when defining the derivative operator in (1). For a general system matrix , we adopt the conjugate gradient descent iterations [38] to solve for (20).
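For a general system matrix, the linear solve can be sketched with a textbook conjugate gradient (CG) loop. The block below is a generic illustration and not the authors' implementation: it assumes the system is symmetric positive definite and is accessed only through a matrix-vector product `apply_m`. As a stand-in operator, `periodic_laplacian_plus_identity` applies the 1D periodic forward-difference Gram matrix plus the identity; both names are hypothetical.

```python
import math


def dot(a, b):
    """Euclidean inner product of two equal-length lists."""
    return sum(x * y for x, y in zip(a, b))


def conjugate_gradient(apply_m, rhs, tol=1e-10, max_iter=200):
    """Solve M x = rhs for a symmetric positive definite M.

    `apply_m(v)` must return the product M v as a list. Iteration starts
    from zero and stops when the residual norm drops below tol * ||rhs||.
    """
    x = [0.0] * len(rhs)
    r = list(rhs)  # residual rhs - M x, with x = 0
    p = list(r)    # first search direction
    rs = dot(r, r)
    stop = (tol * math.sqrt(dot(rhs, rhs))) ** 2
    for _ in range(max_iter):
        if rs <= stop:
            break
        mp = apply_m(p)
        alpha = rs / dot(p, mp)  # exact line search along p
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * mi for ri, mi in zip(r, mp)]
        rs_new = dot(r, r)
        # New direction, conjugate to the previous ones with respect to M.
        p = [ri + (rs_new / rs) * pi for ri, pi in zip(r, p)]
        rs = rs_new
    return x


def periodic_laplacian_plus_identity(v):
    """Apply D^T D + I, with D the 1D periodic forward difference."""
    n = len(v)
    return [3.0 * v[t] - v[t - 1] - v[(t + 1) % n] for t in range(n)]
```

In an ADMM setting the solve is typically warm-started and truncated after a few iterations, which makes the subproblem solution inexact; this is one plausible reason why MRI (exact FFT solve) and CT (iterative solve) behave differently in Section 6.1.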

The -subproblem in (19) also has a closed-form solution, i.e.,

| (21) |

where is called soft shrinkage and denotes element-wise multiplication. We update by a projection onto the -box constraint, which is given by

In summary, we present an ADMM-based algorithm to minimize the on the gradient subject to a linear system with the box constraint in Algorithm 1. If we only run one iteration of the -subproblem (19), the overall ADMM iteration (14) is equivalent to the previous approach [18].
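The two closed-form updates used in the inner loop, soft shrinkage and the projection onto a box, can be sketched as below. The threshold `mu` and the bounds `lo` and `hi` are placeholders for the quantities in (21) and the box constraint, which are not reproduced here; the function names are hypothetical.

```python
import math


def soft_shrink(v, mu):
    """Component-wise soft shrinkage: sign(v) * max(|v| - mu, 0)."""
    return [math.copysign(max(abs(x) - mu, 0.0), x) for x in v]


def project_box(v, lo, hi):
    """Component-wise projection onto the interval [lo, hi]."""
    return [min(max(x, lo), hi) for x in v]
```

For instance, `soft_shrink([3.0, -0.5, -2.0], 1.0)` shrinks the large entries towards zero by one and sets the small entry to zero, while `project_box([-0.2, 0.4, 1.7], 0.0, 1.0)` clips the entries to the unit interval.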

5 Convergence analysis

We intend to establish the convergence of Algorithm 1 with the box constraint, which is extensively tested in the experiments. Since our ADMM framework (14) shares the same structure as the unconstrained formulation, we adapt some analysis in [21] to prove the subsequential convergence for the proposed model (10). For example, we make the same assumptions as in [21],

Assumption 1.

Remark 1.

We have in the model as the denominator shall not be zero. It is true that does not imply a uniform lower bound of such that in Assumption 1. Here we can redefine the divergence of an algorithm by including the case of , which can be checked numerically with a pre-set value of .

Unlike the unconstrained model (4), the strong convexity of with respect to does not hold due to the indicator function . Besides, we have additional dual variable which is not in the unconstrained model. To avoid redundancies to [21], we focus on the different strategies to the unconstrained case, such as optimality conditions and subgradient of the indicator function, when proving convergence for the constrained problem, e.g., in Lemmas 1-2 and Theorem 2.

Lemma 1.

Proof.

Denote as the smallest eigenvalue of the matrix We show is strictly positive. If there exists a nonzero vector such that . It is straightforward that and , so one shall have and , which contradicts in 1. Therefore, there exists a positive such that

By letting and using , we have

| (23) |

We express the -subproblem in (14) equivalently as

The optimality conditions state that and there exist three sets of vectors such that

| (24) |

with . By the complementary slackness, we have and

| (25) |

which also holds for Using the definition of subgradient, , and (23)-(25), we obtain that

where . The bounds of and exactly follow [21, Lemma 4.3] for the unconstrained formulation, and hence we omit the rest of the proof. ∎

Remark 2.

Lemma 1 requires to be sufficiently large so that two parameters and are positive. Following the proof of [21, Lemma 4.3], and can be explicitly expressed as

| (26) |

where . Note that the assumption on is a sufficient condition to ensure the convergence, and we observe in practice that a relatively small often yields good performance.

Lemma 2.

(subgradient bound) Under 1, there exists a vector and a constant such that

| (27) |

Proof.

We define

Clearly by the subgradient definition, we can prove that and which implies that Combining the definition of with (24) leads to

| (28) |

To estimate an upper bound of we apply the chain rule of sub-gradient, i.e., , where thus leading to . Therefore, we have

It further follows from (28) that

| (29) |

We can also define such that

and estimate the upper bounds of and By denoting the remaining proof is the same as in [21, Lemma 4.4]. ∎

Theorem 2.

(subsequential convergence) Under Assumption 1 and a sufficiently large , the sequence generated by (14) always has a subsequence convergent to a stationary point of , namely, .

Proof.

Since is bounded, then is bounded; i.e., there exists a constant such that The optimality condition of the -subproblem in (14) leads to

| (30) |

where . Using the dual update we have

| (31) |

Due to in 1, we get

which implies the boundedness of . It follows from the -update (15) that is also bounded. Therefore, the Bolzano-Weierstrass Theorem guarantees that the sequence has a convergent subsequence, denoted by as . In addition, we can estimate that

which gives a lower bound of owing to the boundedness of and . It further follows from Lemma 1 that converges due to its monotonicity.

Lastly, we discuss the global convergence, i.e., the convergence of the entire sequence, which is stronger than the subsequential convergence in Theorem 2. It holds under the stronger assumption that the augmented Lagrangian has the Kurdyka-Łojasiewicz (KL) property [39]; see Definition 2. The global convergence of the proposed scheme (14) is characterized in Theorem 3, which can be proven in a similar way as [40, Theorem 4]. Unfortunately, whether the KL property holds is an open problem for the $L_1/L_2$ functional, not to mention $L_1/L_2$ on the gradient.

Definition 2.

(KL property, [41]) We say a proper closed function satisfies the KL property at a point if there exist a constant , a neighborhood of and a continuous concave function with such that

-

(i)

is continuously differentiable on with on ;

-

(ii)

for every with , it holds that

where denotes the distance from a point to a closed set measured in with a convention of .

Theorem 3.

Proof.

The proof is almost the same as [40, Theorem 4], thus omitted here.∎

6 Experimental results

In this section, we test the proposed algorithm on three prototypical imaging applications: super-resolution, MRI reconstruction, and limited-angle CT reconstruction. Analogous to Section 3, super-resolution refers to recovering a 2D image from low-frequency measurements, i.e., we restrict the data within a square in the center of the frequency domain. The data measurements for the MRI reconstruction are taken along radial lines in the frequency domain; such a radial pattern [42] is referred to as a mask. The sensing matrix for the CT reconstruction is the Radon transform [43], while the term “limited-angle” means the rotating angle does not cover the entire circle [44, 45, 46].

We evaluate the performance in terms of the relative error (RE) and the peak signal-to-noise ratio (PSNR), defined by

where is the restored image, is the ground truth, and is the maximum peak value of
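The two metrics can be sketched as follows. Since the displayed formulas are not reproduced here, the block assumes the common definitions: RE is the Euclidean norm of the error divided by that of the ground truth, and PSNR is ten times the base-10 logarithm of the squared peak value over the mean squared error, with the peak taken as the maximum of the ground truth. The function names are hypothetical, and images are passed as flat lists.

```python
import math


def relative_error(restored, truth):
    """Euclidean norm of (restored - truth) divided by the norm of truth."""
    num = math.sqrt(sum((r - t) ** 2 for r, t in zip(restored, truth)))
    den = math.sqrt(sum(t ** 2 for t in truth))
    return num / den


def psnr(restored, truth):
    """Peak signal-to-noise ratio in dB (assumed definition, see lead-in)."""
    mse = sum((r - t) ** 2 for r, t in zip(restored, truth)) / len(truth)
    if mse == 0.0:
        return math.inf  # identical images
    peak = max(truth)
    return 10.0 * math.log10(peak ** 2 / mse)
```

Under these assumed definitions, a lower RE and a higher PSNR both indicate a reconstruction closer to the ground truth, which is how the tables in this section are read.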

To ease parameter tuning, we scale the pixel value to for the original images in each application and rescale the solution back after computation. Hence the box constraint is set as . We start by discussing some algorithmic behaviors regarding the box constraint, the maximum number of inner iterations, and sensitivity analysis on algorithmic parameters in Section 6.1. The remaining sections are organized by specific applications. We compare the proposed approach with total variation ($L_1$ on the gradient) [1] and two nonconvex regularizations: $L_p$ for and $L_1$-$\alpha L_2$ for on the gradient as suggested in [24]. To solve for the $L_p$ model, we replace the soft shrinkage (21) by the proximal operator corresponding to $L_p$ that was derived in [47], and apply the same ADMM framework as the $L_1$ minimization. To have a fair comparison, we incorporate the box constraint in the $L_1$, $L_p$, $L_1$-$\alpha L_2$, and $L_1/L_2$ models. We implement all these competing methods ourselves and tune the parameters to achieve the smallest RE to the ground truth. Due to the constrained formulation, no noise is added. We set the initial condition of to be a zero vector for all the methods. The stopping criterion for the proposed Algorithm 1 is that the relative error between two consecutive iterates is smaller than for both inner and outer iterations. All the numerical experiments are carried out on a desktop with CPU (Intel i7-9700F, 3.00 GHz) and MATLAB 9.8 (R2020a).

6.1 Algorithmic behaviors

We discuss three computational aspects of the proposed Algorithm 1. In particular, we want to analyze the influence of the box constraint, the maximum number of inner iterations (denoted by jMax), and the algorithmic parameters on the reconstruction results of MRI and CT problems. We use MATLAB’s built-in function phantom, which is called the Shepp-Logan (SL) phantom, to test on 6 radial lines for MRI and scanning range for CT. The analysis is assessed in terms of objective values and RE versus the CPU time.

Figure 5: Objective values and RE with and without the box constraint. Left: MRI (jMax = 5). Right: CT (jMax = 1).

In Figure 5, we present algorithmic behaviors of the box constraint for both MRI and CT problems, in which we set jMax to be 5 and 1, respectively (we will discuss the effects of the inner iteration number shortly). In the MRI problem, the box constraint is critical; without it, our algorithm converges to another local minimizer, as RE goes up. With the box constraint, the objective values decrease faster than in the no-box case, and the relative errors decrease monotonically. In the CT case, the influence of the box constraint is minor, but we can see a faster decay of RE than in the no-box case after 200 seconds. In light of these observations, we only consider the algorithm with a box constraint for the rest of the experiments.

Figure 6: Objective values and RE for different numbers of inner iterations. Left: MRI (jMax = 1, 3, 5, 10). Right: CT (jMax = 1, 3, 5, 10).

We then study the effect of jMax on MRI/CT reconstruction problems in Figure 6. We fix the maximum outer iterations as 300, and examine four possible jMax values: 1, 3, 5 and 10. In the case of MRI, jMax = 10 causes the slowest decay of both objective value and RE. Besides, we observe that only one inner iteration, which is equivalent to our previous approach [18], is not as efficient as more inner iterations to reduce the RE in the MRI problem. The CT results are slightly different, as one inner iteration seems sufficient to yield satisfactory results. The disparate behavior of CT to MRI is probably due to inexact solutions by CG iterations. In other words, more inner iterations do not improve the accuracy. Following Figure 6, we set jMax to be 5 and 1 in MRI and CT, respectively, for the rest of the experiments.

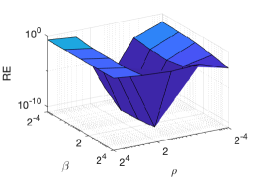

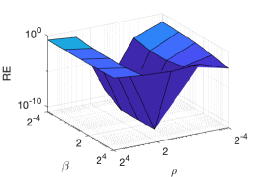

Lastly, we study the sensitivity of the parameters in our proposed algorithm to provide strategies for parameter selection. For simplicity, we set as their corresponding auxiliary variables represent . In the MRI reconstruction problem, we examine three values of and two settings of the number of maximum outer iterations, i.e., kMax For each combination of and kMax, we vary parameters for and plot the RE in Figure 7. We observe that small values of work well in practice, although we need to assume a sufficiently large value for when proving the convergence results in Theorem 2. Besides, a larger kMax value leads to larger valley regions for the lowest RE, which verifies that only and affect the convergence rate. Figure 7 suggests that our algorithm is generally insensitive to all these parameters and as long as is small. Similarly in the CT reconstruction, we set kMax , and for Figure 8 shows that and can be selected in a wide range, especially for large number of outer iterations. But our algorithm is sensitive to for the CT problem, as or yields larger errors than . In the light of this sensitivity analysis, we can tune parameters by finding the optimal combination among a candidate set for each problem, specifically paying attention to the value of in the limited-angle CT reconstruction.


6.2 Super-resolution

We use an original image from [48] of size to illustrate the performance of super-resolution. As super-resolution is similar to MRI in the sense of frequency measurements, we set the maximum number of inner iterations (jMax) to 5 according to Section 6.1. We restrict the data within a square in the center of the frequency domain (corresponding to low-frequency measurements), and hence varying the size of the square leads to different sampling ratios. In addition to regularized methods, we include a direct method of filling in the unknown frequency data by zero, followed by the inverse Fourier transform, which is referred to as zero-filling (ZF). The visual results of are presented in Figure 9, showing that both and are superior to ZF, , and - Specifically, can recover these thin rectangular bars, while and - lead to thicker bars with white background, which should be gray. In addition, and can recover most of the letter ‘a’ in the bottom of the image, compared to the other methods, while is better than with more natural boundaries along the six dots in the middle left of the image. One drawback of is that it produces white artifacts near the third square from the left as well as around the letter ‘a’ in the middle. We suspect is not very stable, and the box constraint forces the black-and-white regions near edges. We do not present quantitative measures for this example, as the four noisy squares on the right of the image lead to a meaningless comparison, considering that all the methods return smooth results.

Figure 9: Super-resolution results. Panels: ground truth, ZF, and the regularized reconstructions.

6.3 MRI reconstruction

To generate the ground-truth MRI images, we utilize a simulated brain database [49, 50] that has full three-dimensional data volumes obtained by an MRI simulator [51] in different modalities such as T1 and T2 weighted images. As a proof of concept, we extract one slice from the 3D T1 and T2 data as testing images and take frequency data along radial lines. The visual comparisons are presented for 25 radial lines (about 13.74% measurements) in Figure 10. We include the zero-filled method as mentioned in super-resolution, which unfortunately fails to recover the contrast for both T1 and T2. The other regularization methods yield more blurred results than the proposed approach. Particularly worth noticing is that our proposed model can effectively separate the gray matter and white matter in the T1 image, as highlighted in the zoom-in regions. Furthermore, we plot the horizontal and vertical profiles in Figure 11, where we can see clearly that the restored profiles via $L_1/L_2$ are closer to the ground truth than the other approaches, especially near these peaks that can be reached by - and , but not As a further comparison, we present the MRI reconstruction results under various numbers of lines (20, 25, and 30) in Table 1, which demonstrates significant improvements of $L_1/L_2$ over the other models in terms of PSNR and RE.

| Image | Line | ZF | - | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|
| | | PSNR | RE | PSNR | RE | PSNR | RE | PSNR | RE | PSNR | RE |
| T1 | 20 | 21.26 | 22.13% | 27.20 | 11.17% | 27.24 | 11.11% | 27.41 | 10.90% | 29.94 | 8.15% |
| | 25 | 23.42 | 17.26% | 30.32 | 7.80% | 30.06 | 8.04% | 30.34 | 7.78% | 33.21 | 5.59% |
| | 30 | 24.07 | 16.02% | 31.92 | 6.48% | 31.63 | 6.70% | 31.70 | 6.65% | 34.84 | 4.63% |
| T2 | 20 | 17.89 | 33.91% | 21.12 | 23.37% | 21.13 | 23.34% | 21.74 | 21.75% | 23.50 | 17.76% |
| | 25 | 18.83 | 30.44% | 22.92 | 18.99% | 23.23 | 18.33% | 23.59 | 17.58% | 25.80 | 13.63% |
| | 30 | 19.42 | 28.43% | 24.27 | 16.26% | 24.76 | 15.36% | 25.10 | 14.78% | 27.60 | 11.09% |
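For readers who wish to compute comparable metrics, the following sketch evaluates PSNR and RE under commonly used definitions. These definitions are an assumption here, since the paper's own formulas are not restated in this section: RE is taken as the ratio of the 2-norm of the error to the 2-norm of the ground truth, and PSNR as 10 log10(peak^2 / MSE) with an assumed peak value of 1.

```python
import numpy as np

def relative_error(recon, truth):
    """RE = ||recon - truth||_2 / ||truth||_2 (assumed definition)."""
    return np.linalg.norm(recon - truth) / np.linalg.norm(truth)

def psnr(recon, truth, peak=1.0):
    """PSNR in dB = 10 * log10(peak^2 / MSE); peak=1.0 is an assumed intensity range."""
    mse = np.mean((recon - truth) ** 2)
    return 10.0 * np.log10(peak ** 2 / mse)

# Usage with a toy pair of images (hypothetical data, not from the paper).
truth = np.ones((4, 4))
recon = 0.9 * truth
print(f"RE = {relative_error(recon, truth):.2%}, PSNR = {psnr(recon, truth):.2f} dB")
```

A higher PSNR and a lower RE both indicate a reconstruction closer to the ground truth, which is how the tables in this section should be read.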

6.4 Limited-angle CT reconstruction

Lastly, we examine the limited-angle CT reconstruction problem on two standard phantoms: Shepp-Logan (SL), generated by MATLAB’s built-in command (phantom), and FORBILD (FB) [52]. Note that the FB phantom has a very low image contrast, and we display it with the grayscale window of [1.0, 1.2] in order to reveal its structures; see Figure 12. To synthesize the CT projection data, we discretize both phantoms at a resolution of . The forward operator is generated as the discrete Radon transform with a parallel beam geometry sampled at over a range of , resulting in sub-sampled data of size . Note that we use the same number of projections when we vary the range of projection angles. The simulation process is available in the IR and AIR toolboxes [53, 54]. Following the discussion in Section 6.1, we set jMax for the subproblem. We compare the regularization models with a clinical standard approach, called the simultaneous algebraic reconstruction technique (SART) [55].

As the SL phantom has relatively simpler structures than FB, we present an extremely limited angle of only scanning range in Table 2, which shows that achieves significant improvements over SART, , , and - in terms of PSNR and RE. Visually, we present the CT reconstruction results of projection range for SL (SL-) and for FB (FB-) in Figure 12. In the first case (SL-), SART fails to recover the ellipse inside the skull with such a small range of projection angles. All the regularization methods perform much better owing to their sparsity-promoting property. However, the model is unable to restore the bottom of the skull and preserve details of some ellipses in the middle. The proposed method leads to an almost exact recovery with a relative error of and visually no difference from the ground truth. In the second case (FB-), we show the reconstructed images with the window of [1.0, 1.2], and observe some fluctuations inside the skull. performs the best, while restores the most details of the image among the competing methods. We plot the horizontal and vertical profiles in Figure 13, which illustrates that leads to the smallest fluctuations compared to the other methods. We also observe a well-known artifact of the method, i.e., loss of contrast, as its profile fails to reach the height of the jump on intervals such as in the left plot and in the right plot of Figure 13, while has a good recovery in these regions.

| phantom | range | SART | - | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|
| | | PSNR | RE | PSNR | RE | PSNR | RE | PSNR | RE | PSNR | RE |
| SL | | 15.66 | 66.95% | 28.32 | 15.57% | 40.25 | 3.95% | 38.15 | 5.02% | 60.77 | 0.37% |
| | | 16.08 | 63.78% | 33.33 | 8.75% | 44.06 | 2.54% | 63.34 | 0.28% | 70.42 | 0.12% |
| | | 16.48 | 60.92% | 43.37 | 2.75% | 46.50 | 1.92% | 80.19 | 0.04% | 73.46 | 0.09% |
| FB | | 15.61 | 40.16% | 25.43 | 12.96% | 58.01 | 0.30% | 26.24 | 11.81% | 46.97 | 1.09% |
| | | 16.14 | 37.79% | 28.84 | 8.76% | 59.02 | 0.27% | 29.49 | 8.13% | 49.30 | 0.83% |
| | | 16.64 | 35.68% | 69.68 | 0.08% | 62.05 | 0.19% | 75.67 | 0.04% | 70.57 | 0.07% |

7 Conclusions and future work

In this paper, we considered the use of $L_1/L_2$ on the gradient as an objective function to promote sparse gradients for imaging problems. We started from a series of 1D piecewise constant signal recovery experiments and demonstrated the superiority of the ratio model over $L_1$ on the gradient, which is widely known as the total variation. To facilitate the discussion of the empirical evidence, we focused on a constrained model and proposed a splitting scheme under which ADMM has provable convergence. We conducted extensive experiments to demonstrate that our approach outperforms the state-of-the-art gradient-based approaches. Motivated by the empirical studies in Section 3, we will devote ourselves to the exact recovery of the TV regularization with respect to the minimum separation of the gradient spikes. We are also interested in extending the analysis to the unconstrained formulation, which is widely applicable in image processing.

Acknowledgments

C. Wang was partially supported by HKRGC Grant No. CityU11301120 and NSF CCF HDR TRIPODS grant 1934568. M. Tao was supported in part by the Natural Science Foundation of China (No. 11971228) and the Jiangsu Provincial National Natural Science Foundation of China (No. BK20181257). C-N. Chuah was partially supported by NSF CCF HDR TRIPODS grant 1934568. J. Nagy was partially supported by NSF DMS-1819042 and NIH 5R01CA181171-04. Y. Lou was partially supported by NSF grant CAREER 1846690.

References

- [1] Rudin L, Osher S, Fatemi E. Nonlinear total variation based noise removal algorithms. Physica D. 1992;60:259–268.

- [2] Bredies K, Kunisch K, Pock T. Total generalized variation. SIAM J Imaging Sci. 2010;3(3):492–526.

- [3] Chen D, Chen YQ, Xue D. Fractional-order total variation image denoising based on proximity algorithm. Appl Math Comput. 2015;257(1):537–545.

- [4] Zhang J, Chen K. A total fractional-order variation model for image restoration with nonhomogeneous boundary conditions and its numerical solution. SIAM J Imaging Sci. 2015;8(4):2487–2518.

- [5] Osher SJ, Esedoglu S. Decomposition of images by the anisotropic Rudin-Osher-Fatemi model. Comm Pure Appl Math. 2003;57:1609–1626.

- [6] Natarajan BK. Sparse approximate solutions to linear systems. SIAM J Comput. 1995:227–234.

- [7] Candès EJ, Romberg J, Tao T. Stable signal recovery from incomplete and inaccurate measurements. Comm Pure Appl Math. 2006;59:1207–1223.

- [8] Fan J, Li R. Variable selection via nonconcave penalized likelihood and its oracle properties. J Am Stat Assoc. 2001;96(456):1348–1360.

- [9] Zhang C. Nearly unbiased variable selection under minimax concave penalty. Ann Stat. 2010:894–942.

- [10] Zhang T. Multi-stage convex relaxation for learning with sparse regularization. In: Adv. Neural. Inf. Process. Syst.; 2009. p. 1929–1936.

- [11] Shen X, Pan W, Zhu Y. Likelihood-based selection and sharp parameter estimation. J Am Stat Assoc. 2012;107(497):223–232.

- [12] Lou Y, Yin P, Xin J. Point source super-resolution via non-convex $L_1$ based methods. J Sci Comput. 2016;68:1082–1100.

- [13] Lv J, Fan Y. A unified approach to model selection and sparse recovery using regularized least squares. Ann Appl Stat. 2009:3498–3528.

- [14] Zhang S, Xin J. Minimization of transformed $L_1$ penalty: closed form representation and iterative thresholding algorithms. Comm Math Sci. 2017;15:511–537.

- [15] Zhang S, Xin J. Minimization of transformed $L_1$ penalty: theory, difference of convex function algorithm, and robust application in compressed sensing. Math Program. 2018;169:307–336.

- [16] Chartrand R. Exact reconstruction of sparse signals via nonconvex minimization. IEEE Signal Process Lett. 2007;14(10):707–710.

- [17] You J, Jiao Y, Lu X, Zeng T. A nonconvex model with minimax concave penalty for image restoration. J Sci Comput. 2019;78(2):1063–1086.

- [18] Rahimi Y, Wang C, Dong H, Lou Y. A scale invariant approach for sparse signal recovery. SIAM J Sci Comput. 2019;41(6):A3649–A3672.

- [19] Wang C, Yan M, Rahimi Y, Lou Y. Accelerated schemes for the $L_1/L_2$ minimization. IEEE Trans Signal Process. 2020;68:2660–2669.

- [20] Tao M. Minimization of L1 over L2 for sparse signal recovery with convergence guarantee; 2020. http://www.optimization-online.org/DB_HTML/2020/10/8064.html.

- [21] Wang C, Tao M, Nagy J, Lou Y. Limited-angle CT reconstruction via the $L_1/L_2$ minimization. SIAM J Imaging Sci. 2021;14(2):749–777.

- [22] Candès EJ, Fernandez-Granda C. Super-resolution from noisy data. J Fourier Anal Appl. 2013;19(6):1229–1254.

- [23] Boyd S, Parikh N, Chu E, Peleato B, Eckstein J. Distributed optimization and statistical learning via the alternating direction method of multipliers. Found Trends Mach Learn. 2011 Jan;3(1):1–122.

- [24] Lou Y, Zeng T, Osher S, Xin J. A weighted difference of anisotropic and isotropic total variation model for image processing. SIAM J Imaging Sci. 2015;8(3):1798–1823.

- [25] Yin P, Esser E, Xin J. Ratio and difference of $L_1$ and $L_2$ norms and sparse representation with coherent dictionaries. Comm Info Systems. 2014;14:87–109.

- [26] Lou Y, Yin P, He Q, Xin J. Computing sparse representation in a highly coherent dictionary based on difference of $L_1$ and $L_2$. J Sci Comput. 2015;64(1):178–196.

- [27] Ma T, Lou Y, Huang T. Truncated $L_{1-2}$ models for sparse recovery and rank minimization. SIAM J Imaging Sci. 2017;10(3):1346–1380.

- [28] Lou Y, Yan M. Fast $L_1$-$L_2$ minimization via a proximal operator. J Sci Comput. 2018;74(2):767–785.

- [29] Guo K, Han D, Wu T. Convergence of alternating direction method for minimizing sum of two nonconvex functions with linear constraints. Int J of Comput Math. 2017;94(8):1653–1669.

- [30] Pang JS, Tao M. Decomposition methods for computing directional stationary solutions of a class of nonsmooth nonconvex optimization problems. SIAM J Optim. 2018;28(2):1640–1669.

- [31] Wang Y, Yin W, Zeng J. Global convergence of ADMM in nonconvex nonsmooth optimization. J Sci Comput. 2019;78(1):29–63.

- [32] Candès EJ, Fernandez-Granda C. Towards a mathematical theory of super-resolution. Comm Pure Appl Math. 2014;67(6):906–956.

- [33] Grant M, Boyd S. CVX: Matlab Software for Disciplined Convex Programming, version 2.1; 2014. http://cvxr.com/cvx.

- [34] Donoho DL. Superresolution via sparsity constraints. SIAM J Math Anal. 1992;23(5):1309–1331.

- [35] Morgenshtern VI, Candès EJ. Super-resolution of positive sources: The discrete setup. SIAM J Imaging Sci. 2016;9(1):412–444.

- [36] Chan RH, Ma J. A multiplicative iterative algorithm for box-constrained penalized likelihood image restoration. IEEE Trans Image Process. 2012;21(7):3168–3181.

- [37] Kan K, Fung SW, Ruthotto L. PNKH-B: A Projected Newton-Krylov Method for Large-Scale Bound-Constrained Optimization. arXiv preprint arXiv:2005.13639. 2020.

- [38] Nocedal J, Wright SJ. Numerical Optimization. Springer; 2006.

- [39] Bolte J, Daniilidis A, Lewis A. The Łojasiewicz inequality for nonsmooth subanalytic functions with applications to subgradient dynamical systems. SIAM J Optim. 2007;17(4):1205–1223.

- [40] Li G, Pong TK. Global convergence of splitting methods for nonconvex composite optimization. SIAM J Optim. 2015;25(4):2434–2460.

- [41] Attouch H, Bolte J, Redont P, Soubeyran A. Proximal alternating minimization and projection methods for nonconvex problems: An approach based on the Kurdyka-Łojasiewicz inequality. Math Oper Res. 2010;35(2):438–457.

- [42] Candès EJ, Romberg J, Tao T. Robust uncertainty principles: Exact signal reconstruction from highly incomplete frequency information. IEEE Trans Inf Theory. 2006;52(2):489–509.

- [43] Kak AC, Slaney M. Principles of computerized tomographic imaging. Philadelphia, PA, USA: Society for Industrial and Applied Mathematics; 2001.

- [44] Chen Z, Jin X, Li L, Wang G. A limited-angle CT reconstruction method based on anisotropic TV minimization. Phys Med Biol. 2013;58(7):2119.

- [45] Zhang Y, Chan HP, Sahiner B, Wei J, Goodsitt MM, Hadjiiski LM, et al. A comparative study of limited-angle cone-beam reconstruction methods for breast tomosynthesis. Med Phys. 2006;33(10):3781–3795.

- [46] Wang Z, Huang Z, Chen Z, Zhang L, Jiang X, Kang K, et al. Low-dose multiple-information retrieval algorithm for X-ray grating-based imaging. Nucl Instrum Methods Phys. 2011;635(1):103–107.

- [47] Xu Z, Chang X, Xu F, Zhang H. $L_{1/2}$ regularization: a thresholding representation theory and a fast solver. IEEE Trans Neural Netw Learn Syst. 2012;23:1013–1027.

- [48] Gonzalez RC, Woods RE, Eddins SL. Digital image processing using MATLAB. Pearson Education India; 2004.

- [49] Cocosco CA, Kollokian V, Kwan RKS, Pike GB, Evans AC. BrainWeb: Online Interface to a 3D MRI Simulated Brain Database. NeuroImage. 1997;5:425.

- [50] Kwan RS, Evans AC, Pike GB. MRI simulation-based evaluation of image-processing and classification methods. IEEE Trans Med Imaging. 1999;18(11):1085–1097.

- [51] Kwan RKS, Evans AC, Pike GB. An extensible MRI simulator for post-processing evaluation. In: International Conference on Visualization in Biomedical Computing. Springer; 1996. p. 135–140.

- [52] Yu Z, Noo F, Dennerlein F, Wunderlich A, Lauritsch G, Hornegger J. Simulation tools for two-dimensional experiments in x-ray computed tomography using the FORBILD head phantom. Phys Med Biol. 2012;57(13):N237.

- [53] Gazzola S, Hansen PC, Nagy JG. IR Tools: a MATLAB package of iterative regularization methods and large-scale test problems. Numer Algorithms. 2019;81(3):773–811.

- [54] Hansen PC, Saxild-Hansen M. AIR tools — MATLAB package of algebraic iterative reconstruction methods. J Comput Appl Math. 2012;236(8):2167–2178.

- [55] Andersen AH, Kak AC. Simultaneous algebraic reconstruction technique (SART): a superior implementation of the ART algorithm. Ultrason Imaging. 1984;6(1):81–94.