MIME: MIMicking Emotions for Empathetic Response Generation

Abstract

Current approaches to empathetic response generation view the set of emotions expressed in the input text as a flat structure, where all the emotions are treated uniformly. We argue that empathetic responses often mimic the emotion of the user to a varying degree, depending on its positivity or negativity and content. We show that the consideration of these polarity-based emotion clusters and emotional mimicry results in improved empathy and contextual relevance of the response as compared to the state-of-the-art. Also, we introduce stochasticity into the emotion mixture that yields emotionally more varied empathetic responses than the previous work. We demonstrate the importance of these factors to empathetic response generation using both automatic- and human-based evaluations.

1 Introduction

Empathy is a fundamental human trait that reflects our ability to understand and reflect the thoughts and feelings of the people we interact with. In the social sciences, research on empathy has evolved into an entire field of study, addressing the social underpinning of empathy Singer09The, the cognitive and emotional aspects of empathy Smith06Cognitive, and its connection to personal and demographic traits Dymond50Personality; Eisenberg14; Krebs75Empathy. The study of empathy has found a wide range of applications in healthcare, including psychotherapy Bohart97Empathy or more broadly as a mechanism to improve the quality of care Mercer02Empathy.

Computational models of empathy have been proposed only in recent years, partly because of the complexity of this behavior which makes it difficult to emulate with computational approaches. In natural language processing, the methods proposed to date address the tasks of understanding expressions of empathy in newswire Buechel18Modeling, counseling conversations Perez-Rosas17Understanding, or generating empathy in dialogue Shen20Counseling; lin-etal-2019-moel. Work has also been done on the construction of empathy lexicons Sedoc20Learning or large empathy dialogue datasets rashkin2018empathetic.

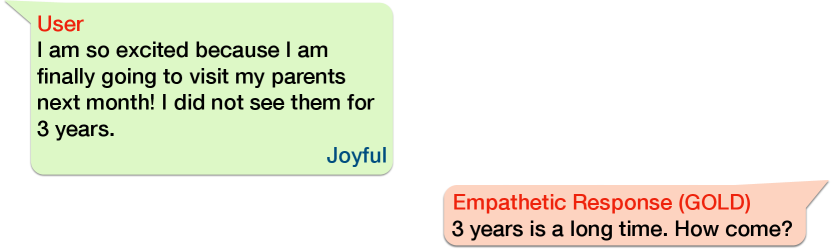

In this paper, we address the task of generating empathetic responses that mimic the emotion of the speaker while accounting for their affective charge (positive or negative). We adopt the idea of emotion mixture, as in the state-of-the-art MoEL lin-etal-2019-moel, to achieve the appropriate balance of emotions in positive and negative emotion groups. However, inspired by vhred, we introduce stochasticity into the mixture at the emotion-group level for varied responses. This becomes particularly important in cases where the input utterance can be responded to with ambivalent, yet befitting, utterances. Fig. 1 shows one such example where the response to a positive utterance is ambivalent.

The paper makes two important contributions. First, it introduces a new approach for empathetic generation that encodes context and emotions and uses stochastic emotion sampling and emotion mimicry to generate responses that are appropriate and empathetic for positive or negative statements. We show that this approach leads to performance exceeding the state-of-the-art when trained and evaluated on a large empathy dialogue dataset. Second, through extensive feature ablation experiments, we shed light on the role played by emotion mimicry and emotion grouping for the task of empathetic response generation.

2 Related Work

Open domain conversational models have made good progress in recent years serban2016generative; vinyals2015neural; wolf2019transfertransfo. Many of them can generate persona-consistent zhang-etal-2018-personalizing and diverse cai2018skeletontoresponse responses, but those are not necessarily empathetic.

Producing empathetic responses requires apt handling of emotions and sentiments fung-etal-2016-zara-supergirl; Winata2017NoraTE; bertero-etal-2016-real. zhou2018emotional model psychological concepts as memory states in LSTM HochSchm97 and employ emotion-category embeddings in the decoding process. ijcai2018-618 presents a GAN goodfellow2014generative based framework with emotion-specific generators. On a larger scale, zhou-wang-2018-mojitalk use the emojis in Twitter posts as emotion labels and introduce an attention-based luong2015effective Seq-to-Seq sutskever2014sequence model with Conditional Variational Autoencoder sohn2015learning for emotional response generation. wu2019simple introduce a dual-decoder network to generate responses with a given sentiment (positive or negative). inproceedings formulate a reinforcement learning problem to maximize the user’s sentimental feeling towards the generated response. lin-etal-2019-moel present an encoder-decoder model with each emotion having a dedicated decoder.

Variational Bayes kingma2013auto; rezende2014stochastic has been widely adopted into natural language generation tasks bowman2015generating and successfully extended to dialog generation tasks vhred; wen2017latent. The prominent Variational Hierarchical Recurrent Encoder-Decoder (VHRED) approach vhred integrates a VAE with the sequence-to-sequence decoder based on Markov assumptions.

3 Methodology

Our model MIME is based on the assumption that empathetic responses often mimic the emotion of the speaker Carr5497 (in our case, the human subject or user). For example, positively-charged utterances are usually responded to with positive emotions, although the response can be ambivalent, as illustrated in Fig. 1. On the other hand, responding to negatively-charged utterances often requires composite emotions that agree with the user’s emotion, but also try to comfort them with some positivity, such as hopefulness or a silver lining. As such, we strive to balance the mimicry of context/user emotion during empathetic response generation.

To this end, we first obtain context representation using the transformer encoder NIPS2017_7181. Similar to the state-of-the-art (SOTA) model MoEL lin-etal-2019-moel, we enforce emotion understanding in the context representation by classifying user emotion during training. For the response emotion, we first group the 32 emotions into two groups containing positive and negative emotions (Section 3.3). Next, a probability distribution of emotions is sampled for each of these groups that corresponds to the emotion of the response. Positive and negative response emotion representations are formed from these distributions and emotion embeddings. These two representations are appropriately combined to balance the two kinds of emotions to form the emotional representation that drives the emotional state during response generation using transformer decoder NIPS2017_7181. Fig. 2 shows the architecture of our model.

3.1 Task Definition

Given the context utterances — utterance consists of maximum words —, the task is to generate empathetic response to the last utterance, , that is always from the target speaker or user. All the odd-numbered () and even-numbered () utterances belong to the user and the empathetic agent, respectively. Optionally, the context/user emotion can be predicted for emotion understanding. The emotions are listed in Table 1.

3.2 Context Encoding

Following lin-etal-2019-moel, firstly, the contextual utterances are sequentially concatenated into a string of () number of words , where is concatenation operator.

As MoEL, each word is represented as a sum of three embeddings (): semantic word embedding (), positional embedding (), and speaker embedding (), where . Therefore, the context is represented as

| (1) |

where .

As MoEL, transformer encoder NIPS2017_7181 is used for context propagation within the utterances and words in . Moreover, inspired by BERT devlin-etal-2019-bert, one additional token is prepended to the context sequence to encode the entirety of the context:

| (2) |

where is the transformer encoder of output size and contains the context-enriched representations of the contextual words. Context-enriched representation of token, , is taken as the overall context representation:

| (3) |

Emotion Embedding and Classification.

As in Rashkin2018IKT and MoEL, to explicitly infuse emotion into the context representation , we train an emotion classifier on . We train emotion embeddings ( is the number of emotion classes) to represent each emotion. We maximize the similarity between and user-emotion representation , being the user-emotion label, using cross-entropy loss :

| (4) | ||||

| (5) | ||||

| (6) |

where and .

3.3 Response Generation (Decoder)

Our primary assumption behind this model is that the empathetic agent mimics the user’s emotion to some degree during the response. Specifically, positive emotion is often responded to with a closely matching positive response. Negative emotion, however, is likely responded to with negativity mixed with some positivity to uphold morale.

Emotion Grouping.

We split the 32 emotion types into two groups containing 13 positive and 19 negative emotions, as listed in Table 1. This split is guided by our intuition.

| Positive | Negative |
|---|---|
| confident, joyful, grateful, impressed, proud, excited, trusting, hopeful, faithful, prepared, content, surprised, caring | afraid, angry, annoyed, anticipating, anxious, apprehensive, ashamed, devastated, disappointed, disgusted, embarrassed, furious, guilty, jealous, lonely, nostalgic, sad, sentimental, terrified |

Response-Emotion Sampling.

There is more than one correct way to respond empathetically. However, we observed that the SOTA model, MoEL, often resorts to generic and repetitive, although empathetic, responses. Therefore, inspired by vhred, we introduce stochasticity in the response-emotion determination, which resulted in emotionally more varied responses in our experiments. To this end, we sample response-emotion distributions and , from the context (specifically, in Eq. 3), for both positive and negative emotion groups, respectively. Hence, we sample an unnormalized distribution () from distribution . This is passed to a fully-connected layer () with softmax activation to obtain the normalized distribution ( and ):

| (7) | ||||

| (8) | ||||

| (9) |

The emotion representation for each emotion group, , is obtained by pooling the corresponding emotion embeddings using the respective distribution :

| (10) |

where are emotion embeddings in the emotion group — as defined in Table 1.
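The pooling step described above can be illustrated with a minimal sketch. It assumes the group distribution comes from a plain softmax over unnormalized scores (the fully-connected layer is omitted for brevity); the function names, the toy three-emotion group, and the two-dimensional embeddings are hypothetical and not taken from the paper.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def pool_embeddings(weights, embeddings):
    """Weighted sum of embedding vectors, one weight per emotion in the group."""
    dim = len(embeddings[0])
    return [sum(w * emb[d] for w, emb in zip(weights, embeddings)) for d in range(dim)]

# Hypothetical group of three emotions with 2-dimensional embeddings.
group_embeddings = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
group_scores = [0.0, 0.0, 0.0]        # unnormalized scores (assumed already sampled)
group_dist = softmax(group_scores)    # equal scores give a uniform distribution
group_repr = pool_embeddings(group_dist, group_embeddings)
```

With equal scores every emotion contributes equally, so the pooled vector is the mean of the group's embeddings; sharper distributions pull it toward a single emotion.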

Sampling from distribution is reparameterized as follows:

| (11) | ||||

| (12) | ||||

| (13) | ||||

| (14) | ||||

| (15) |

where , are fully-connected layers with output sizes . Following vhred, is obtained by maximizing evidence lower-bound ():

| (16) |

where is the approximate posterior distribution, defined as

| (17) |

which is similarly reparameterized, for sampling during the training only, as , except is concatenated to .
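As a rough illustration of the reparameterized sampling and the KL term that typically appears in an evidence lower bound, the sketch below uses diagonal Gaussians parameterized by a mean and a log-variance. The log-variance parameterization and all names here are assumptions for illustration; the paper's layers that produce these parameters are not reproduced.

```python
import math
import random

def sample_gaussian(mean, log_var, rng):
    """Reparameterized draw: z = mean + std * eps, with eps ~ N(0, 1) per dimension."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mean, log_var)]

def kl_diag_gaussians(mean_q, log_var_q, mean_p, log_var_p):
    """KL(q || p) between two diagonal Gaussians, summed over dimensions."""
    total = 0.0
    for mq, lq, mp, lp in zip(mean_q, log_var_q, mean_p, log_var_p):
        total += 0.5 * (lp - lq + (math.exp(lq) + (mq - mp) ** 2) / math.exp(lp) - 1.0)
    return total

rng = random.Random(0)                      # fixed seed for repeatability
z = sample_gaussian([0.2, -0.1], [0.0, 0.0], rng)
```

Because the noise is drawn separately from the parameters, gradients can flow through the mean and log-variance, which is the usual motivation for this trick.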

Emotion Mimicry.

Following Carr5497, it is reasonable to assume that the empathetic response to an emotional utterance would likely mimic the emotion of the user to some degree. Responding empathetically to positive utterances usually requires positivity — there can be ambivalence (Fig. 1). On the other hand, the responses to negative utterances should contain some empathetic negativity, but mixed with some positivity to soothe the user’s negativity. Thus, we generate two distinct response-emotion-refined context representations — mimicking and non-mimicking — that are appropriately merged to obtain response-decoder input.

Naturally, mimicking and non-mimicking emotion representations — and — are defined as follows:

| (18) | ||||

| (19) |
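One way to read the mimicking and non-mimicking assignment is as a swap driven by the polarity of the user's emotion: the representation of the group that matches the user's polarity is treated as mimicking, and the other as non-mimicking. The sketch below encodes that reading using the grouping in Table 1; the function name and the string stand-ins for the two group representations are hypothetical.

```python
POSITIVE = {
    "confident", "joyful", "grateful", "impressed", "proud", "excited", "trusting",
    "hopeful", "faithful", "prepared", "content", "surprised", "caring",
}
NEGATIVE = {
    "afraid", "angry", "annoyed", "anticipating", "anxious", "apprehensive", "ashamed",
    "devastated", "disappointed", "disgusted", "embarrassed", "furious", "guilty",
    "jealous", "lonely", "nostalgic", "sad", "sentimental", "terrified",
}

def split_mimicry(user_emotion, pos_repr, neg_repr):
    """Return (mimicking, non_mimicking) given the user's emotion label."""
    if user_emotion in POSITIVE:
        return pos_repr, neg_repr
    if user_emotion in NEGATIVE:
        return neg_repr, pos_repr
    raise ValueError(f"unknown emotion label: {user_emotion!r}")

mimicking, non_mimicking = split_mimicry("sad", "POS_REPR", "NEG_REPR")
```

At inference time the gold label is unavailable, so the label passed in would be the classifier's prediction rather than an annotation.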

Firstly, response-emotion representations — and — are concatenated to the context-enriched word representations in (Eq. 2) to provide the context () the cues on the response emotion:

| (20) | ||||

| (21) |

where are fed to a transformer encoder () to obtain mimicking and non-mimicking response-emotion-refined context representations and , respectively:

| (22) | ||||

| (23) |

where .

Response-Emotion-Refined Context Fusion.

Enabling a mixture of positive and negative emotions, inspired by lin-etal-2019-moel and the instance in Fig. 1, could lead to diverse response generation as compared to considering exclusively positive or negative emotion. To achieve this mixture, we concatenate and at word level, as opposed to sequence level, to obtain . Then, is fed to a gate consisting of a fully-connected layer () with sigmoid activation, resulting that determines the contribution of positive and negative response-emotion-refined contexts to the response to be generated. Subsequently, is multiplied with the gate output, yielding the refined context that is fed to another fully-connected layer to obtain the fused response-emotion-refined context :

| (24) | ||||

| (25) | ||||

| (26) | ||||

| (27) |
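The fusion described above (word-level concatenation, a sigmoid gate, element-wise multiplication, then a projection) can be sketched in plain Python. This is an illustrative reading, not the released implementation: it assumes the gate output has the same width as the concatenated vector, and the helper names and toy weights are hypothetical.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def linear(vec, weight, bias):
    """Apply y = W vec + b, with the weight matrix given as a list of rows."""
    return [sum(w * v for w, v in zip(row, vec)) + b for row, b in zip(weight, bias)]

def fuse(mimic_ctx, non_mimic_ctx, gate_w, gate_b, out_w, out_b):
    """Fuse two per-word context sequences of equal length and width."""
    fused = []
    for m_vec, n_vec in zip(mimic_ctx, non_mimic_ctx):
        joint = m_vec + n_vec                                  # word-level concatenation
        gate = [sigmoid(g) for g in linear(joint, gate_w, gate_b)]
        gated = [g * j for g, j in zip(gate, joint)]           # element-wise gating
        fused.append(linear(gated, out_w, out_b))              # project back to width d
    return fused

# Toy example: two words, width d = 1, zero gate weights so every gate value is 0.5.
fused = fuse(
    mimic_ctx=[[1.0], [2.0]],
    non_mimic_ctx=[[3.0], [4.0]],
    gate_w=[[0.0, 0.0], [0.0, 0.0]], gate_b=[0.0, 0.0],
    out_w=[[1.0, 1.0]], out_b=[0.0],
)
```

Concatenating per word, rather than appending one sequence to the other, keeps the fused sequence the same length as the context, so each position carries both emotional views.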

Response Decoding.

For the final response generation from the response-emotion-refined context , following MoEL, a transformer decoder (), with as key and as value, is employed:

| (28) | |||

| (29) | |||

| (30) |

where , is the number of tokens in response , is a fully-connected layer of output size (also the vocabulary size), , and is the probability distribution on each response token.

Finally, categorical cross-entropy quantifies the generation loss with respect to the gold response :

| (31) |

3.4 Training

Naturally, we combined all the losses for model training:

| (32) |

Total loss is optimized using Adam DBLP:journals/corr/KingmaB14 optimizer with learning-rate, patience, and batch-size set to 0.0001, 2, and 16, respectively. Loss weights, and are set to 1. For the sake of comparability with the SOTA, the semantic word embeddings () are initialized with GloVe DBLP:conf/emnlp/PenningtonSM14 embeddings. All the hyper-parameters are optimized using grid search on the validation set, resulting and beam-size being 300 and 5, respectively.

4 Experimental Settings

During inference, we use the emotion classifier (Eq. 5) with emotion grouping (Table 1) to determine the positivity or negativity of the context that is necessary for the mimicking and non-mimicking emotion representations.

4.1 Dataset

We evaluate our method on EmpatheticDialogues (https://github.com/facebookresearch/EmpatheticDialogues) Rashkin2018IKT, a dataset that contains 24,850 open-domain dyadic conversations between two users, where one responds empathetically to the other. For our experiments, we use the 8:1:1 train/validation/test split defined by the authors of this dataset. Each sample consists of a context (defined by an excerpt of a full conversation and the emotion of the user) and the empathetic response to the last utterance in the context. There are a total of 32 different emotion categories, roughly uniformly distributed across the dataset.

4.2 Baselines and State of the Art

We do not compare MIME with affective response generation models zhou2018emotional as they require the response emotion to be explicitly provided, and the response may not necessarily be empathetic. As such, MIME is compared against the following models:

Multitask-Transformer Network (Multi-TR).

Following Rashkin2018IKT, a transformer encoder-decoder NIPS2017_7181 generates a response as the user emotion is classified from the encoder output — equivalent to in Eq. 3.

Mixture of Empathetic Listeners (MoEL).

This state-of-the-art method lin-etal-2019-moel performs user-emotion classification as Multi-TR. However, in contrast to our method, it employs emotion-specific decoders whose outputs are aggregated and fed to a final decoder to generate the empathetic response.

4.3 Evaluation

Although BLEU papineni-etal-2002-bleu has long been used to compare system-generated responses against the human-gold response, liu-etal-2016-evaluate argue against its efficacy in the open-domain setting, where the gold response is not necessarily the only correct response. Therefore, as in MoEL, we keep BLEU mostly as a reference. Following MoEL and Rashkin2018IKT, we rely on human-evaluated metrics:

Human Ratings.

Firstly, we randomly sample four instances of each of the 32 emotion labels from the test set, resulting in a total of 128 instances, compared to the 100 instances used for the evaluation of MoEL lin-etal-2019-moel. Next, we ask three human annotators to rate each sub-sampled model response on a scale from 1 to 5 (best score) on three distinct attributes: empathy (How much emotional understanding does the response show?), relevance (How much topical relevance does the response have to the context?), and fluency (How much linguistic clarity does the response have?). Scores across 128 samples and three annotators are averaged to obtain the final rating.
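The sampling and averaging procedure can be sketched as follows. The record layout (a dict with an `emotion` key), the function names, and the fixed seed are assumptions made for illustration only.

```python
import random
from collections import defaultdict

def sample_per_emotion(instances, per_label, seed=0):
    """Pick `per_label` random instances for every emotion label."""
    by_label = defaultdict(list)
    for inst in instances:
        by_label[inst["emotion"]].append(inst)
    rng = random.Random(seed)
    picked = []
    for label in sorted(by_label):
        picked.extend(rng.sample(by_label[label], per_label))
    return picked

def mean_rating(ratings):
    """Average over all samples and annotators; `ratings` holds one score list per sample."""
    flat = [score for per_sample in ratings for score in per_sample]
    return sum(flat) / len(flat)

# Hypothetical test set: 32 labels with 10 instances each.
test_set = [{"emotion": f"emotion_{i}", "id": (i, j)} for i in range(32) for j in range(10)]
subset = sample_per_emotion(test_set, per_label=4)
```

Sampling the same number of instances per label keeps every emotion equally represented in the 128-instance evaluation set, regardless of its frequency in the test split.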

Human A/B Test.

Given two models A and B — in our case MoEL and MIME (our model), respectively — we ask three human annotators to pick the model with the best response for each of the 128 sub-sampled test instances. The annotators can select a Tie if the responses from both models are deemed equal. The final verdict on each instance is determined by majority voting. In case no two annotators agree on a selection – that is all three annotators reached three distinct conclusions: MoEL, MIME, and Tie – we bring in a fourth annotator. From this, we calculate the percentage of samples where A or B generates a better response and where A and B are equal.
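The voting rule above is simple enough to state as code. The sketch below assumes each annotator's choice is one of the strings `"MIME"`, `"MoEL"`, or `"Tie"`; the function names are hypothetical.

```python
from collections import Counter

LABELS = ("MIME", "MoEL", "Tie")

def ab_verdict(votes, tie_breaker=None):
    """Majority vote over three annotators; a fourth vote resolves a three-way split."""
    if len(votes) != 3:
        raise ValueError("expected exactly three annotator votes")
    label, freq = Counter(votes).most_common(1)[0]
    if freq >= 2:
        return label
    if tie_breaker is None:
        raise ValueError("three distinct votes need a fourth annotator")
    return tie_breaker

def summarize(verdicts):
    """Percentage of instances won by each model or judged a tie."""
    counts = Counter(verdicts)
    return {label: 100.0 * counts[label] / len(verdicts) for label in LABELS}

verdicts = [
    ab_verdict(["MIME", "MIME", "Tie"]),
    ab_verdict(["MIME", "MoEL", "Tie"], tie_breaker="MoEL"),
]
```

When all three annotators disagree, the fourth vote necessarily matches one of the existing choices, so it yields a two-vote majority.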

5 Results and Discussions

5.1 Response-Generation Performance

| Methods | #params. | BLEU | Human Emp. | Human Rel. | Human Flu. |
|---|---|---|---|---|---|
| Multi-TR | 16.95M | 2.92 | 3.67 | 3.47 | 4.30 |
| MoEL (SOTA) | 23.10M | 2.90 | 3.71 | 3.32 | 4.31 |
| MIME | 17.89M | 2.81 | 3.87 | 3.60 | 4.28 |

As shown in Table 2, responses from MIME show improved empathy over MoEL and Multi-TR. We surmise this was achieved by modeling our primary intuition of appropriately mimicking the user’s emotion in the context through stochasticity and positive/negative grouping. Moreover, the usage of trained emotion embeddings (), shared between the emotion classifier and response decoder, seems to encode refined context-invariant emotional and emotion-specific linguistic cues that may lead to empathetically improved response generation. The SOTA model, MoEL, does train a similar emotion embedding, but it is set up as the key of a key-value memory miller-etal-2016-key, which leads to a weaker connection with the decoder and hence a weaker flow of emotional context. We believe this embedding sharing further leads to the improved relevance rating for MIME, since contextual information flow is now shared between emotion embeddings and encoder output (Eq. 2). This sharing intuitively leads to refinement in context flow.

However, we also observe that the responses from our model have worse fluency than the other models, including MoEL. This might be attributed to the very structure of the decoder, which seems to refine emotional context well. This may have coerced the final transformer decoder to focus more on emotionally-apt tokens of the response than appropriate stop-words that have no intrinsic emotional content but lead to grammatical clarity.

Human A/B Test.

Based on the results in Table 3, we note that the responses from MIME are more often preferred by humans than the responses from MoEL and Multi-TR. This correlates with the results in Table 2 that indicate better empathy and contextual relevance for MIME. The inter-annotator agreement, measured by the kappa score, was 0.33 (fair).

| MIME vs. | MIME wins | MIME loses | Tie |
|---|---|---|---|
| Multi-TR | 42.25% | 24.60% | 33.15% |
| MoEL | 38.82% | 28.32% | 32.86% |

Performance on Positive and Negative User Emotions.

| Ratings | Multi-TR (Pos) | Multi-TR (Neg) | MoEL (Pos) | MoEL (Neg) | MIME (Pos) | MIME (Neg) |
|---|---|---|---|---|---|---|
| Empathy | 3.77 | 3.61 | 3.73 | 3.76 | 4.00 | 3.80 |
| Relevance | 3.51 | 3.45 | 3.21 | 3.40 | 3.77 | 3.49 |
| Fluency | 4.33 | 4.28 | 4.35 | 4.30 | 4.33 | 4.26 |

We observe (Table 4) that the responses generated by MIME for both positive and negative user emotions are generally better in terms of empathy and relevance, while its fluency is on par with or slightly below the other models. Interestingly, MoEL seems to perform better on responding to negative emotions than to positive emotions in terms of empathy and relevance. We posit this stems from the abundance of negative samples in the dataset as compared to positive samples (13 positive and 19 negative emotions, roughly uniformly distributed). This may suggest that MoEL is more sensitive to positive/negative context imbalance in the dataset than MIME and Multi-TR.

5.2 Ablation Study

| Emotion Mimicry | Emotion Grouping | BLEU | Human Emp. | Human Rel. | Human Flu. |
|---|---|---|---|---|---|
| ✗ | ✗ | 2.45 ± 0.01 | 3.14 | 3.58 | 4.23 |
| ✗ | ✓ | 2.96 ± 0.02 | 3.67 | 3.63 | 4.09 |
| ✓ | ✓ | 2.81 ± 0.01 | 3.87 | 3.60 | 4.28 |

Effect of Emotion Mimicry.

To assess the contribution of user-emotion mimicry, we disabled it by passing (Eq. 10) directly to Eqs. 20 and 21. This results in a substantial drop in empathy, by 0.2 as per Table 5. We delve deeper by plotting the emotion embeddings produced with and without emotion mimicry in Fig. 3(a) and Fig. 3(b), respectively. It is evident that the separation of positive and negative emotion clusters is much clearer with emotion mimicry than without, suggesting better emotion understanding in the former case through emotion disentanglement. On the other hand, we observe a slight increase in relevance, by 0.03. We surmise this is caused by the absence of the confounding effect of swapping the value of and , in Eqs. 18 and 19, depending on the user emotion type. This may coerce the same set of parameters to learn to process both positive and negative emotions.

Effect of Emotion Grouping.

Looking at Table 5, we observe a performance drop in both empathy and relevance, by 0.73 and 0.02, respectively, in the absence of emotion grouping. This indicates the importance of treating positive and negative emotions separately, rather than lumping them into a single distribution. We posit that the latter case causes all the emotions to compete for importance, which may lead to emotion uniformity in some cases or one emotion type overwhelming the other in other cases. This in turn may lead to emotionally mundane and generic responses.

5.3 Case Study

Context Capturing.

Based on the comparative results for relevance shown in Table 2, MIME appears to generate responses that are a closer fit to the context than MoEL does. Fig. 4 shows a test instance where MIME pulls key information from the context (the word ‘interview’) to generate an empathetic and relevant response. The response from MoEL is also empathetic, but somewhat more generic. We surmise that this can be attributed to the two-way context flow through the emotion embedding sharing and encoder output, as discussed in Section 5.1.

Similarly, Fig. 5 shows a conversation with an apprehensive user who shares a frightening story with a positive outcome. Here, MoEL fixates on the initial negative emotion of the user and replies with an unwarranted negatively empathetic response. MIME, however, responds with the appropriate positivity hinted at in the last utterance. Moreover, it correctly interprets the events described as a ‘beautiful memory’, which is truly empathetic and relevant. Again, a strong mixture of context and emotion, facilitated by the emotion embedding sharing, is likely to be responsible for this.

5.4 Error Analysis

Low Fluency.

As evidenced by Table 2, MIME falters in fluency as compared to MoEL. Fig. 6 shows an instance where MoEL generates an empathetic, yet somewhat generic, and fluent response. In contrast, the first response utterance from MIME, “I would have been to the police”, does make contextual sense. However, the second utterance, “I would be a little better”, reads as incoherent and semantically unclear. Perhaps the model meant something like ‘I would have felt a little safer’. We repeatedly observed such errors, leading to poor fluency. Given the empathy- and relevance-focused structure of our model, we think MIME focused its learning on empathy and relevance at the cost of fluency. We believe this issue could be mitigated with additional training samples.

Response to Surprised User Context.

In our experiments, we assumed the emotion surprised to be positive (Table 1), and thus MIME responds with positivity to most test instances with surprise as the user emotion. However, this is not accurate, as one can be both positively and negatively surprised: “I recently found out that a person I […] admired did not feel the same way. I was pretty surprised” vs “This mother’s day was amazing!”. We posit that re-annotating the instances with a negatively-surprised user with a new negative emotion, namely shocked, should help alleviate this issue significantly.

Emotion Classification.

The {top-1, top-2, top-5} emotion-classification accuracies for MoEL are {38%, 63%, 74%}, as compared to {34%, 58%, 77%} for MIME. Since the emotion embeddings are shared between encoder and decoder in MIME, it supposedly also encodes some generation-specific information in addition to pure emotion as discussed in Section 5.1, thereby hindering the overall emotion-classification performance. Notably, MIME also performs well on top-5 classification. This is likely due to MIME’s ability to discern positive and negative emotion types — as indicated by Fig. 3(a) — that comes into prominence as you add more likely-labels into the consideration of top- classification by raising .
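For reference, top-k accuracy of the kind reported above can be computed as in the following sketch, which counts a prediction as correct when the gold label is among the k highest-scoring classes. The function name and the toy scores are hypothetical.

```python
def top_k_accuracy(score_rows, gold_labels, k):
    """Fraction of examples whose gold label index is among the k top-scoring classes."""
    hits = 0
    for scores, gold in zip(score_rows, gold_labels):
        ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
        if gold in ranked[:k]:
            hits += 1
    return hits / len(gold_labels)

# Two toy examples over three classes.
scores = [[0.1, 0.7, 0.2], [0.5, 0.3, 0.2]]
gold = [2, 0]
top1 = top_k_accuracy(scores, gold, k=1)
top2 = top_k_accuracy(scores, gold, k=2)
```

A classifier that reliably separates positive from negative emotions but confuses labels within a group would show this pattern: modest top-1 accuracy that rises quickly as k grows.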

6 Conclusion

This paper introduced a novel empathetic generation strategy that relies on two key ideas: emotion grouping and emotion mimicry. Also, stochasticity was applied to emotion mixture for varied response generation. We have shown through several human evaluations and ablation studies that our model is better equipped for empathetic response generation than the existing models. However, there remains much room for improvement, particularly, in terms of fluency where our model falters.

The implementation of MIME is publicly available at https://github.com/xxxx/xxxx.