MILPaC: A Novel Benchmark for Evaluating Translation of Legal Text to Indian Languages

Abstract.

Most legal text in the Indian judiciary is written in complex English for historical reasons. However, only a small fraction of the Indian population is comfortable reading English. Hence, legal text needs to be made available in various Indian languages, possibly by translating the available legal text from English. Although there has been a lot of research on translation to and between Indian languages, to our knowledge there has been little prior work on such translation in the legal domain. In this work, we construct the first high-quality legal parallel corpus containing aligned text units in English and nine Indian languages, including several low-resource languages. We also benchmark the performance of a wide variety of Machine Translation (MT) systems over this corpus, including commercial MT systems, open-source MT systems and Large Language Models. Through a comprehensive survey of Law practitioners, we check how satisfied they are with the translations produced by some of these MT systems, and how well automatic MT evaluation metrics agree with the opinions of Law practitioners.

1. Introduction

Most legal text in the Indian judiciary is written in English for historical reasons, and legal English is particularly complex. However, only about 10% of the general Indian population is comfortable reading English (https://censusindia.gov.in/nada/index.php/catalog/42561). Additionally, since different states in India have different local languages, a person residing in a non-native state may need to understand legal text written in an unfamiliar language. For these reasons, the language barrier is a frequent problem when the common Indian confronts a legal situation. Thus, it is important that legal text be made available in various Indian languages, to improve access to justice for a very large fraction of the Indian population (especially the financially weaker sections). The Indian government is also promoting the need for legal education in various Indian languages (https://pib.gov.in/PressReleaseIframePage.aspx?PRID=1882225).

Since most legal text (e.g., laws of the land, manuals for legal education) is already available in English, the most practical way is to translate legal text from English to Indian languages.

For this translation, one of several existing Machine Translation (MT) systems can be used, including commercial systems such as the Google Translation system (https://cloud.google.com/translate/docs/samples/translate-v3-translate-text) and the Microsoft Azure Translation API (https://azure.microsoft.com/en-us/products/cognitive-services/translator).

Also there has been a lot of academic research on developing MT systems to and between Indian languages (Ramesh et al., 2022; Siripragada et al., 2020; Kunchukuttan, 2020; Haddow and Kirefu, 2020).

However, to our knowledge, very few prior works have focused on Indian language translations in the legal domain (see Section 2 for a description of these works).

Hence, with respect to translation in the legal domain, some important questions that need to be answered are –

(i) how good are the existing Machine Translation (MT) systems for translating legal text to Indian languages? and

(ii) how well do standard MT performance metrics agree with the opinion of Law practitioners regarding the quality of translated legal text?

To date, there has not been any attempt to answer the questions stated above, and no benchmark exists for evaluating the quality of the translation of legal text to Indian languages.

In this work, we bridge this gap by making the following contributions:

We develop MILPaC (Multilingual Indian Legal Parallel Corpus), one of the first parallel corpora in Indian languages in the legal domain.

The corpus consists of three high-quality datasets carefully compiled from reliable sources of legal information in India, containing parallel aligned text units in English and various Indian languages, including both Indo-Aryan languages (Hindi, Bengali, Marathi, Punjabi, Gujarati, & Oriya) and Dravidian languages (Tamil, Telugu, & Malayalam). In this paper, we collectively refer to these languages as Indian languages. Note that many of these are low-resource languages.

MILPaC can be used for evaluating MT performance in translating legal text from English to various Indian languages, or from one Indian language to another.

Different from existing parallel corpora in the Indian legal domain (see Section 2), MILPaC is carefully curated from verified legal sources and ratified by Law experts, making it far more reliable for evaluating MT systems in the Indian legal domain. Moreover, MILPaC’s versatility allows its use in other tasks such as cross-lingual question answering, further distinguishing it from other datasets.

We benchmark the performance of several MT systems over MILPaC, including commercial systems, systems developed by the academic community, and the recently developed Large Language Models.

The best performance is found for two commercial systems by Google and Microsoft Azure, and an academic translation system IndicTrans (Ramesh et al., 2022).

A comprehensive survey is also carried out using Law students to understand how satisfied they are with the outputs of current MT systems and what errors are frequently committed by these systems.

We also draw insights into the agreement of automatic translation evaluation metrics (such as BLEU, GLEU, and chrF++) with the opinion of Law students. We find mostly positive but low correlation of automatic metrics with the scores given by Law students for most Indian languages, although high correlation is observed for English-to-Hindi translations.

A flowchart describing the methodology followed in this work is given in Figure 1. The MILPaC corpus is publicly available at https://github.com/Law-AI/MILPaC under a standard non-commercial license. We believe that this corpus, as well as the insights derived in this paper, is an important step towards improving machine translation of legal text to Indian languages, a goal that is important for making Law accessible to a very large fraction of the Indian population.

2. Related Works

Parallel corpora for Indian and non-Indian languages (for non-legal domains): Many parallel corpora exist for translations in the Indian languages. Ramesh et al. (2022) released Samanantar, the largest publicly available collection of parallel corpora for 11 Indian languages. They also released IndicTrans, a transformer-based MT model trained on this dataset. Another parallel corpus for 10 Indian languages was released by Siripragada et al. (2020). The FLORES-200 corpus for evaluation of MT in resource-poor languages was released by Facebook (Costa-jussà et al., 2022). The IndoWordNet corpus (Kunchukuttan, 2020) and the PMIndia corpus (Haddow and Kirefu, 2020) are other parallel corpora for Indian languages. There are also several bilingual English-Indic corpora, such as the IITB English-Hindi corpus (Kunchukuttan et al., 2018), the BUET English-Bangla corpus (Hasan et al., 2020), and the English-Tamil (Ramasamy et al., 2012) and English-Odia (Parida et al., 2020) corpora. Recently, Haulai and Hussain (2023) created the first Mizo–English parallel corpus, which covers the low-resource language Mizo.

There are several non-legal parallel corpora consisting of low-resource non-Indian languages as well, such as English-Kurmanji and English-Sorani (Ahmadi et al., 2022) bilingual corpora, KreolMorisienMT (Dabre and Sukhoo, 2022) which consists of two low-resource parallel corpora English-KreolMorisien and French-KreolMorisien, and ChrEn (Zhang et al., 2020) which covers the endangered language Cherokee. Also, a new English-Arabic parallel corpus has been recently introduced by Salloum et al. (2023) to deal with phishing emails. The above-mentioned works are outlined in Table 1. Note that none of the parallel corpora stated above are in the legal domain.

Table 1: Parallel corpora for Indian and non-Indian languages in non-legal domains.

| Corpus | Languages | Description |
|---|---|---|
| Samanantar (Ramesh et al., 2022) | 11 Indian languages | Largest publicly available parallel corpora for Indian languages |
| Parallel corpora by Siripragada et al. (2020) | 10 Indian languages | Sentence aligned parallel corpora |
| IndoWordNet corpus (Kunchukuttan, 2020) | 18 Indian languages | Contains about 6.3 million parallel segments |
| PMIndia corpus (Haddow and Kirefu, 2020) | 13 Indian languages | Corpus includes up to 56K sentences for each language pair |
| IITB English-Hindi corpus (Kunchukuttan et al., 2018) | English-Hindi | Bilingual corpus for MT task |
| BUET English-Bangla corpus (Hasan et al., 2020) | English-Bangla | Bilingual corpus for MT task |
| English-Tamil corpus (Ramasamy et al., 2012) | English-Tamil | Bilingual corpus for MT task |
| English-Odia corpus (Parida et al., 2020) | English-Odia | Bilingual corpus for MT task |
| English-Mizo corpus (Haulai and Hussain, 2023) | English-Mizo | First parallel corpus for the low-resource language Mizo |
| FLORES-200 (Costa-jussà et al., 2022) | 200 languages | Corpora that include many resource-poor languages |
| Kurdish parallel corpus (Ahmadi et al., 2022) | Sorani-Kurmanji, English-Kurmanji, English-Sorani | Parallel corpora for the two major dialects of Kurdish – Sorani and Kurmanji |
| KreolMorisienMT (Dabre and Sukhoo, 2022) | English-KreolMorisien, French-KreolMorisien | Parallel corpora for low-resource KreolMorisien language |
| ChrEn corpus (Zhang et al., 2020) | English-Cherokee | Covers the endangered language Cherokee |
| English-Arabic corpus (Salloum et al., 2023) | English-Arabic | Parallel corpus for phishing email detection |

Parallel corpora in the legal domain for non-Indian languages: Organizations such as the United Nations (UN) and the European Union (EU) have developed several parallel corpora in the legal domain for MT. The UN Parallel Corpus v1.0 contains parliamentary documents and their translations over 25 years, in six official UN-languages (Ziemski et al., 2016). Again, based on the European parliament proceedings, Koehn (2005) released Europarl, a parallel corpus in 11 official languages of the EU. Höfler and Sugisaki (2014) released a multilingual corpus - Bilingwis Swiss Law Text collection based on the classified compilation of Swiss federal legislations. The EU also released the EUR-Lex parallel corpora including multiple parallel corpora across 24 European languages (Baisa et al., 2016). These works are summarized in Table 2. In comparison, there has only been little effort in the Indian legal domain towards the development of parallel corpora for translation.

Table 2: Parallel corpora in the legal domain for non-Indian languages.

| Corpus | Languages | Description |
|---|---|---|
| UN Parallel Corpus v1.0 (Ziemski et al., 2016) | 6 official UN languages | Parliamentary documents and their translations over 25 years |
| Europarl (Koehn, 2005) | 11 official EU languages | Based on the European parliament proceedings |
| Bilingwis Swiss Law corpus (Höfler and Sugisaki, 2014) | 4 official Swiss languages | Based on the classified compilation of Swiss federal legislations |
| EUR-Lex parallel corpus (Baisa et al., 2016) | 24 European languages | Largest parallel corpus built from European Legal documents |

Table 3: Parallel corpora in the legal domain for Indian languages.

| Corpus | Languages | Description |
|---|---|---|
| LTRC Hindi-Telugu parallel corpus (Mujadia and Sharma, 2022) | Hindi-Telugu | Small parallel corpus for legal text, only in the 2 Indian languages Hindi and Telugu |
| Anuvaad parallel corpus | 9 Indian languages | Synthetic parallel corpus, suitable for training but not for evaluating since its mappings can be inaccurate |
| MILPaC (developed in this work) | 9 Indian languages | High-quality corpus curated from verified legal sources; suitable for evaluating and benchmarking MT systems; can also be utilized for cross-lingual question answering |

Parallel corpora in the legal domain for Indian languages: To our knowledge, there are only two parallel corpora for the Indian legal domain (summarized in Table 3). First, the LTRC Hindi-Telugu parallel corpus (Mujadia and Sharma, 2022) contains a small parallel corpus from the legal domain, but only in the 2 Indian languages Hindi and Telugu (whereas the MILPaC corpus developed in this work covers as many as 9 Indian languages). Second, the Anuvaad parallel corpus (https://github.com/project-anuvaad/anuvaad-parallel-corpus) covers legal text in 9 Indian languages. However, this corpus seems to be synthetically created, i.e., the texts of different languages are mapped automatically. Hence, it can be used for training MT models but is not reliable for evaluating them, due to potential inaccuracies in the mappings. Moreover, the legal data sources are not well documented, and there is no detail regarding the quality or expert evaluation of the corpus. Anuvaad also does not cover the Oriya language. In contrast, the MILPaC corpus is carefully curated from verified legal sources and ratified by Law experts, making it far more reliable and suitable for evaluating MT systems in the Indian legal domain. MILPaC also covers Oriya. Moreover, MILPaC’s versatility extends to tasks such as cross-lingual question answering, further distinguishing it from other datasets.

3. The MILPaC Datasets

This section describes the Multilingual Indian Legal Parallel Corpus (MILPaC), which comprises 3 datasets with a total of 17,853 parallel text pairs across English and 9 Indian languages.

3.1. Dataset 1: MILPaC-IP

We develop this dataset from a set of primers released by a society of Law practitioners – the WBNUJS Intellectual Property and Technology Laws Society (IPTLS) – from a reputed Law school in India. The primers are released in English (EN) and 9 Indian languages, viz., Bengali (BN), Hindi (HI), Marathi (MR), Tamil (TA), Gujarati (GU), Telugu (TE), Malayalam (ML), Punjabi (PA), and Oriya (OR). Each primer contains approximately 57 question-answer (QA) pairs related to Indian Intellectual Property laws, with each QA pair given in all the languages stated above.

The 10 PDF documents (one corresponding to the primer in each language) were downloaded from the website of the IPTLS society stated above (https://nujsiplaw.wordpress.com/my-ip-a-series-of-ip-awareness-primers/). The PDF documents had embedded text, which was extracted using the pdftotext utility (https://www.xpdfreader.com/pdftotext-man.html). Regular expressions were used to identify the start of each question and answer. Each QA pair in these primers was numbered consistently across all languages, and we used this numbering to identify parallel textual units.
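The number-based alignment described above can be sketched as follows. This is a minimal illustration, not the authors' script: the `Q<n>.` / `A<n>.` markers, the regular expression, and the function names are all assumptions made for the example, since the actual layout of the primers is not specified here.

```python
import re
from typing import Dict, List, Tuple

# Hypothetical marker format: questions start with "Q<number>." and
# answers with "A<number>." at the beginning of a line.
UNIT_START = re.compile(r"^(Q|A)(\d+)\.\s*", re.MULTILINE)


def split_units(text: str) -> Dict[Tuple[str, int], str]:
    """Map (kind, number) to the text of each question or answer."""
    units: Dict[Tuple[str, int], str] = {}
    matches = list(UNIT_START.finditer(text))
    for i, m in enumerate(matches):
        # A unit runs until the next marker (or the end of the file).
        end = matches[i + 1].start() if i + 1 < len(matches) else len(text)
        body = " ".join(text[m.end():end].split())  # normalise whitespace
        units[(m.group(1), int(m.group(2)))] = body
    return units


def align(src_text: str, tgt_text: str) -> List[Tuple[str, str]]:
    """Pair up units that carry the same kind and number in both files."""
    src, tgt = split_units(src_text), split_units(tgt_text)
    shared = sorted(src.keys() & tgt.keys(), key=lambda k: (k[1], k[0] != "Q"))
    return [(src[k], tgt[k]) for k in shared]
```

Units that appear in only one of the two files are simply left out of the aligned output, which is one possible way for the per-language-pair counts to differ slightly.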

Table 4: Number of parallel text pairs per language-pair in MILPaC-IP (upper triangular part) and MILPaC-CCI-FAQ (lower triangular part).

| | EN | BN | HI | MR | TA | TE | ML | PA | OR | GU |
|---|---|---|---|---|---|---|---|---|---|---|
| EN | - | 110 | 114 | 114 | 114 | 112 | 114 | 114 | 114 | 114 |
| BN | 365 | - | 110 | 110 | 110 | 108 | 110 | 110 | 110 | 110 |
| HI | 365 | 365 | - | 114 | 114 | 112 | 114 | 114 | 114 | 114 |
| MR | 365 | 365 | 365 | - | 114 | 112 | 114 | 114 | 114 | 114 |
| TA | 365 | 365 | 365 | 365 | - | 112 | 114 | 114 | 114 | 114 |
| TE | | | | | | - | 112 | 112 | 112 | 112 |
| ML | | | | | | | - | 114 | 114 | 114 |
| PA | | | | | | | | - | 114 | 114 |
| OR | | | | | | | | | - | 114 |
| GU | | | | | | | | | | - |

Each question and each answer is considered as a ‘textual unit’ in this dataset. Note that, we decided to not break the questions/answers into sentences, due to the following reasons: First, we have alignments in terms of questions and answers, and not their individual sentences. If we split each question and answer into sentences, aligning the sentences would require synthetic methods (like nearest neighbour search) which might have introduced some inaccuracies in the dataset. Since the primers are a high-quality parallel corpus developed manually by Law practitioners, we decided not to use such synthetic methods to maintain a high standard of the parallel corpus. Second, since this dataset contains QA pairs, it can be used for other NLP tasks such as Cross-Lingual Question-Answering. Tokenizing the text units into sentences would remove this potential utility of the dataset.

The number of parallel pairs in the MILPaC-IP dataset for various language-pairs is given in Table 4 (black entries in the upper triangular part). The slight variations in the entries for different language-pairs in Table 4 are explained in Appendix A.1. Some examples of text pairs of the MILPaC-IP are given in Figure 2(a).

3.2. Dataset 2: MILPaC-CCI-FAQ

The second parallel corpus is developed from a set of FAQ booklets released by the Competition Commission of India (CCI). These FAQ booklets are based on The Competition Act, 2002 statute that provides the legal framework to deal with competition issues in India. The FAQs are written in English and 4 Indian languages – Bengali, Hindi, Marathi, and Tamil. Each FAQ booklet contains 184 QA pairs. Each FAQ booklet has been manually translated by CCI officials and can be treated as expert translation, which makes this a reliable source for authentic multilingual parallel data.

The FAQ booklets (PDF documents) corresponding to each language were downloaded from the CCI website (https://www.cci.gov.in/advocacy/publications/advocacy-booklets). These documents are not machine readable; hence, the text was extracted using OCR (as done by Ramesh et al. (2022)). We tried out two OCR systems: Tesseract (https://github.com/tesseract-ocr/tesseract) and the Google Vision API (https://cloud.google.com/vision/docs/samples/vision-document-text-tutorial). Both OCR systems support English and all 9 Indian languages considered in this work. An internal evaluation (details in Appendix, Section C) showed that Google Vision had better text detection accuracy than Tesseract. Hence, we decided to use Google Vision.

After applying OCR to the PDF documents, a single text file is obtained, which is annotated to separate and align the text units. For all QA pairs, the translations had the same question number in all the documents, and this information was used to align the QA pairs. Note that, as in MILPaC-IP, we do not split the questions and answers into sentences for the same reasons as discussed above.

The number of parallel text pairs for various language-pairs in the MILPaC-CCI-FAQ dataset is given in Table 4 (blue italicized entries in the lower triangular part). As stated earlier, each FAQ booklet contains 184 QA pairs. The answers for 3 QA pairs are given in tables; for these, we did not include the answers and considered only the questions, because of difficulties in accurate OCR of tables. Hence, for each language-pair, we have 365 text pairs (184 questions and 181 answers), as stated in Table 4. Some example text pairs are given in Figure 2(b).
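As a quick check of the arithmetic, the per-language-pair count in Table 4 follows from treating every question and every retained answer as one text unit. The helper below is only an illustrative sketch; the function name is hypothetical.

```python
def text_units(qa_pairs: int, answers_dropped: int) -> int:
    """Each QA pair yields a question unit and an answer unit,
    minus the answers that were left out (here, those given as tables)."""
    return 2 * qa_pairs - answers_dropped


# 184 questions + (184 - 3) answers per FAQ booklet
units_per_language_pair = text_units(qa_pairs=184, answers_dropped=3)
```

With the figures stated above, this evaluates to 365, matching the MILPaC-CCI-FAQ entries in Table 4.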

3.3. Dataset 3: MILPaC-Acts

This dataset is made up from PDF scans (non-machine readable) of Indian Acts (statutes or written laws) documents published by the Indian Legislature. Unlike the previous two datasets (which are already compiled in multiple languages), this parallel corpus is not available readily, and is very challenging and time-consuming to develop.

The first challenge is that, while a lot of Indian Acts are available in English and Hindi, very few are available in other Indian languages. Even among the few Acts that are available in other Indian languages, only a small fraction are suitable for OCR (most other PDFs are of poor texture and resolution). The challenges in OCR of Indian Legal Acts are described in the Appendix (section B).

The English and translated versions of the Acts were obtained from the website of the Indian legislature (https://legislative.gov.in/central-acts-in-regional-language/). Candidate Acts were selected based on two criteria: (i) availability of translations in multiple languages (so as to get translation-pairs across Indian-Indian languages as well), and (ii) availability of good quality scans. 10 Acts were selected as per these criteria; the selected Acts are listed in Appendix, Section A.2.

| EN | BN | HI | MR | TA | TE | ML | PA | OR | GU | |

|---|---|---|---|---|---|---|---|---|---|---|

| EN | 739 | 706 | 578 | 418 | 319 | 443 | 261 | 256 | 316 | |

| BN | 439 | 439 | 319 | 438 | ||||||

| HI | 578 | 319 | 443 | 262 | 256 | |||||

| MR | 319 | 443 | 133 | 128 | ||||||

| TA | ||||||||||

| TE | 319 | |||||||||

| ML | ||||||||||

| PA | 256 | |||||||||

| OR | ||||||||||

| GU |

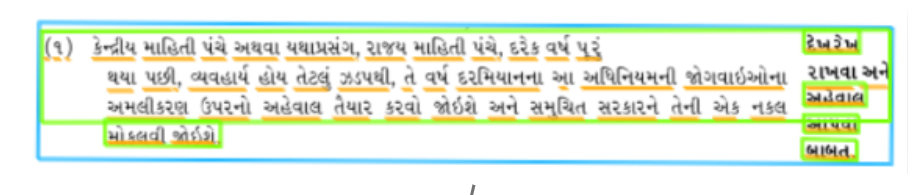

As discussed previously for the MILPaC-CCI-FAQ dataset, Google Vision was chosen for OCR. A similar process was followed to get one text file corresponding to each Act, and the extracted text was then annotated using the same semi-automatic approach as was described for MILPaC-CCI-FAQ. We identified parallel text units (paragraphs, bulleted list elements) by looking at the versions in the two languages and using structural information such as paragraphs, and section/clause numbering. Table 5 gives the number of parallel text pairs for different language-pairs in MILPaC-Acts. Some example text pairs of MILPaC-Acts are given in Figure 2(c).

Note that, compared to the MILPaC-IP and MILPaC-CCI-FAQ datasets, aligning the Acts documents is much more challenging and requires frequent manual intervention. The documents are long and often have a multi-column text layout, which confuses OCR systems and leads to text segmentation issues (see Appendix, Section B for the main challenges in OCR). Such OCR errors need to be corrected manually by rearranging the extracted text with reference to the source document. Developing this dataset is therefore very time-consuming, which is why we have been able to process only 10 Acts to date. Even after the manual corrections, a fraction of the data had to be discarded since it could not be aligned across languages, mainly due to the factors explained in Appendix, Section B. According to our estimate, approximately 10% of the textual units in MILPaC-Acts had to be discarded on average across all the Acts we considered; the percentage discarded for individual Acts varies between 8% and 14%. All these steps were taken to ensure the high quality of the dataset.
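One way to picture the structural alignment and the discard estimate is the sketch below. It assumes, purely for illustration, that each extracted unit has already been keyed by a structural label such as a section or clause number, and that the discard rate is measured against the units of the source-language version; neither detail is specified in the text above.

```python
from typing import Dict, List, Tuple


def align_by_key(
    src: Dict[str, str], tgt: Dict[str, str]
) -> Tuple[List[Tuple[str, str]], float]:
    """Pair units sharing a structural key (e.g. a hypothetical "2(1)(a)").

    Returns the aligned pairs in source order and the fraction of source
    units that had no counterpart and were therefore discarded.
    """
    shared = [key for key in src if key in tgt]
    pairs = [(src[key], tgt[key]) for key in shared]
    discarded = 1.0 - len(shared) / len(src) if src else 0.0
    return pairs, discarded
```

In practice the keys themselves come from OCR output, so a manual pass is still needed wherever numbering was misread or the layout was scrambled.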

3.4. Comparing the three datasets in MILPaC

Length of textual units: The distribution of the number of tokens in the textual units of the three datasets is shown in Table 6. The Moses tokenizer (https://github.com/alvations/sacremoses) has been used for tokenizing English sentences, and the IndicNLP library (https://github.com/anoopkunchukuttan/indic_nlp_library) has been used for tokenizing sentences in Indian languages. Across all three datasets, textual units of at most 20 tokens form the largest group (about 40-50% of the units). However, the MILPaC-CCI-FAQ dataset also has 22% of its textual units longer than 100 tokens.
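A length distribution of this kind can be computed with a few lines of Python. The sketch below is not the authors' code: it uses whitespace splitting as a stand-in tokenizer so that it runs without extra dependencies, whereas the paper uses the Moses tokenizer and the IndicNLP library, either of which could be passed in through the hypothetical `tokenize` argument.

```python
from collections import Counter
from typing import Callable, Dict, Iterable, List


def bucket(n_tokens: int) -> str:
    """Return the bin label for a positive token count: 1-10, ..., 91-100, >100."""
    if n_tokens > 100:
        return ">100"
    low = ((n_tokens - 1) // 10) * 10 + 1
    return f"{low}-{low + 9}"


def length_distribution(
    units: Iterable[str],
    tokenize: Callable[[str], List[str]] = str.split,  # stand-in tokenizer
) -> Dict[str, float]:
    """Percentage of text units falling in each token-length bin."""
    lengths = [len(tokenize(u)) for u in units]
    lengths = [n for n in lengths if n > 0]  # ignore empty units
    if not lengths:
        return {}
    counts = Counter(bucket(n) for n in lengths)
    return {label: 100.0 * c / len(lengths) for label, c in counts.items()}
```

Because tokenizers differ in how they split punctuation and Indic scripts, the exact percentages depend on the tokenizer that is plugged in.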

Table 6: Distribution of the number of tokens in the textual units of the three datasets (% of text units).

| # tokens | MILPaC-IP | MILPaC-CCI-FAQ | MILPaC-Acts |
|---|---|---|---|
| 1-10 | 34.5% | 17.6% | 18.4% |
| 11-20 | 16.0% | 22.0% | 23.7% |
| 21-30 | 4.7% | 9.4% | 19.6% |
| 31-40 | 6.2% | 5.8% | 13.2% |
| 41-50 | 5.9% | 4.9% | 8.8% |
| 51-60 | 6.3% | 4.9% | 5.7% |
| 61-70 | 5.6% | 4.7% | 3.5% |
| 71-80 | 4.5% | 3.0% | 2.3% |
| 81-90 | 4.0% | 3.7% | 1.4% |
| 91-100 | 3.2% | 1.8% | 1.0% |
| > 100 | 9.3% | 22.2% | 2.2% |

Text complexity of the datasets: To compare the three datasets in terms of the complexity of the language contained in them, we have computed two well-known text readability metrics: the Flesch-Kincaid Grade Level (FKGL) and the Gunning Fog Index (GFI). Higher values of these metrics indicate that the text is more complex and requires higher levels of expertise/proficiency to comprehend. The results are shown in Table 7. Both metrics imply that the MILPaC-Acts dataset is the most complex (suitable only for highly educated readers, more complex than a standard research paper), the MILPaC-CCI-FAQ dataset has intermediate complexity (suitable for college students), and the MILPaC-IP dataset is the least complex (suitable for high school students). These differences are expected, since the Acts (out of which MILPaC-Acts is constructed) are the most formal versions of the laws of the land; they need to be precise and are hence stated in very formal language. By contrast, the other two collections, particularly MILPaC-IP, are meant to be simpler and easily comprehensible for various types of stakeholders, including the general public; hence, the CCI-FAQ and IP datasets are in the form of simplified questions and answers.
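For reference, the two readability scores can be written out from their standard definitions. The sketch below takes pre-computed counts as input, because counting syllables and "complex" words (conventionally, words of three or more syllables) depends on the tool used, and the paper does not say which implementation produced Table 7.

```python
def fkgl(words: int, sentences: int, syllables: int) -> float:
    """Flesch-Kincaid Grade Level from raw counts (standard formula)."""
    return 0.39 * (words / sentences) + 11.8 * (syllables / words) - 15.59


def gfi(words: int, sentences: int, complex_words: int) -> float:
    """Gunning Fog Index from raw counts (standard formula)."""
    return 0.4 * ((words / sentences) + 100.0 * (complex_words / words))
```

Both scores grow with average sentence length, which is consistent with the long, formal sentences of statutes scoring highest.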

Table 7: Text readability scores of the three datasets.

| Datasets | FKGL | GFI |
|---|---|---|
| MILPaC-IP | 8.3 | 8.9 |
| MILPaC-CCI-FAQ | 15.1 | 12.2 |
| MILPaC-Acts | 20.4 | 18.7 |

Original and Translation Languages: For the MILPaC-IP dataset, it is specified that the English version is the original version, and the other versions are translated from English. For MILPaC-CCI-FAQ and MILPaC-Acts, the original language is not clearly stated anywhere. But both these datasets are based on Indian legal statutes / Acts, which have been officially published in English and Hindi since Indian independence. Hence, we believe that the English and Hindi versions are the original ones, and the versions in the other languages are translated from one of these two.

3.5. Quality of the datasets

We have attempted to make MILPaC a high-quality parallel corpus for evaluating MT systems, through the following steps.

Reliability of the sources: For MILPaC-Acts and MILPaC-CCI-FAQ, the data was extracted from the official Government of India websites. The data for MILPaC-IP is published by one of India’s most reputed Law Schools. Thus, the translations that we used for constructing the dataset have been done by Government officials/Law practitioners and hence are likely to be of high quality.

Manual corrections: We manually verified a large majority of the data extracted from the PDFs. Wherever the OCR accuracy was compromised due to the poor resolution/quality of the PDFs (notably in MILPaC-Acts), we manually corrected the alignments, thus ensuring the quality of the dataset.

Evaluation of the corpus quality by Law students: Finally, for assessing the quality of our datasets, we recruited 5 senior LLB and LLM students who are native speakers of Hindi, Bengali, Tamil, & Marathi and are also fluent in English, from a reputed Law school in India (details given later in Section 4.4). Hence this evaluation was carried out over these four languages. We asked the Law students to read a large number of English text units and their translations (from the MILPaC corpus) in their native Indian language (across all 3 datasets), and rate the quality of each text-pair on a scale of 1-5, with 1 being the lowest and 5 the highest. The purpose of the survey was explained to the Law students, and they were paid a mutually agreed honorarium for their annotations. On average, 94% of the parallel text-pairs achieved ratings of 4 or 5 from the Law students, which further demonstrates the high quality of the MILPaC corpus.

3.6. Availability of the corpus

The MILPaC corpus is publicly available at https://github.com/Law-AI/MILPaC under a standard non-commercial license. All the legal documents and translations used to develop the MILPaC datasets are publicly available data on the Web. We have obtained formal permissions for using and publicly sharing the datasets – from the Competition Commission of India (CCI) for the MILPaC-CCI-FAQ dataset, from the WBNUJS Intellectual Property and Technology Laws Society (IPTLS) for the MILPaC-IP dataset, and from the Ministry of Law and Justice, Government of India for using and publicly sharing the MILPaC-Acts dataset.

4. Benchmarking Translation Systems

We now use the MILPaC corpus to benchmark the performance of established state-of-the-art Machine Translation (MT) systems that have performed well on other MT benchmark datasets. Though MILPaC can be used to evaluate Indian-to-Indian translations as well, we primarily consider English-to-Indian translation since that is the most common use-case in the Indian legal domain.

4.1. Translation systems

We consider the following MT systems, some of which are commercial, while others are publicly available MT systems. These systems were selected because of their strong performances on well-known MT benchmarks such as FLORES-101 (Goyal et al., 2021), WAT2021 (https://lotus.kuee.kyoto-u.ac.jp/WAT/WAT2021/index.html), and WAT2020 (Nakazawa et al., 2020).

Commercial MT Systems:

(1) GOOG: The Google Cloud Translation - Advanced Edition (v3) system (https://cloud.google.com/translate/docs/samples/translate-v3-translate-text), which is one of the most popular and widely used commercial MT systems globally. We use the Translation API provided by the Google Cloud Platform (GCP) to access this system (which we abbreviate as GOOG).

(2) MSFT: Another well-known commercial MT system, developed by Microsoft. Similar to GOOG, we use the Translation API offered by Microsoft Azure Cognitive Services (v3) (https://azure.microsoft.com/en-us/products/cognitive-services/translator) to access the system (which we abbreviate as MSFT).

(3) Large Language Models: We have also benchmarked the translation performances of two popular commercial Large Language Models (LLMs) in the GPT-3.5 family (https://platform.openai.com/playground/p/default-translation) developed by OpenAI: Davinci-003 (formally called ‘text-davinci-003’) and GPT-3.5T-Inst (formally called ‘gpt-3.5-turbo-instruct’). These are some of the most powerful models of OpenAI, with GPT-3.5T-Inst being the latest GPT-3.5-turbo model within the InstructGPT class. As with the other commercial systems, we use the API provided by OpenAI to access these LLMs. Note that both these LLMs have a token limit of 4096 on the length of the input prompt plus the length of the generated output, where a token generally corresponds to about 4 characters of common English text.

Prompt for translation: We conduct the translation task using these LLMs in a one-shot configuration, where one example translation is provided within the instruction-based prompt before asking the LLM to translate an unseen text unit. (We tried both zero-shot and one-shot translation, and observed better results for one-shot. Hence we report the results of only the one-shot configuration.) Suppose we want to translate a textual unit from English to the target language <Target_lang>. For each <Target_lang> (Indian language), we identify another textual unit in English and its translation in <Target_lang> from the gold standard translations in MILPaC, to be given as an example within the prompt. This example is chosen in consultation with the Law students (who participated in our surveys, details given later in Section 4.4), such that they are fully satisfied with its gold standard translations in all the Indian languages that they know (Hindi, Bengali, Tamil, Marathi). Once the English example and its <Target_lang> translation are selected, we give the LLM a prompt that combines this example pair with the instruction “Translate from English to <Target_lang>:”, so that the model can learn from the given example translation and then translate the unseen text unit into the target language. The text unit given as the example for one-shot translation was not included in the evaluation.
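A one-shot prompt of this kind could be assembled as in the sketch below. The exact arrangement of the example and the instruction within the authors' prompt is not recoverable from the text, so the layout here (instruction plus example source, example translation on the next line, then the instruction again with the new text) is an assumption, as are the function names. The token estimate relies on the rough rule of about 4 characters per token for common English text; it will undercount for Indic scripts and is shown only to illustrate the 4096-token budget shared by prompt and output.

```python
import math


def build_one_shot_prompt(
    target_lang: str, example_src: str, example_tgt: str, src: str
) -> str:
    """Hypothetical one-shot layout: one worked example, then the new text."""
    instruction = f"Translate from English to {target_lang}:"
    return (
        f"{instruction} {example_src}\n{example_tgt}\n\n"
        f"{instruction} {src}\n"
    )


def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Crude token estimate; the default ratio only suits English text."""
    return math.ceil(len(text) / chars_per_token)


def fits_context(prompt: str, max_output_tokens: int, limit: int = 4096) -> bool:
    """Check that prompt tokens plus reserved output tokens stay within the limit."""
    return estimate_tokens(prompt) + max_output_tokens <= limit
```

For a real run, a proper tokenizer for the model in question would give a more reliable count than the character heuristic.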

Open-source MT Systems:

(5) mBART-50: A transformer-based seq-to-seq model obtained by multilingual fine-tuning (using the parallel data ML50) of the pre-trained mBART model (Liu et al., 2020). It has 12 encoder layers and 12 decoder layers, with a model dimension of 1024 and 16 attention heads (680M parameters). We use the fine-tuned checkpoint of the multilingual-BART-large-50 model (https://huggingface.co/facebook/mbart-large-50-one-to-many-mmt) (Tang et al., 2020) for this translation task. This model can translate English to 7 of the Indian languages in our datasets. Oriya and Punjabi are not supported by mBART-50; hence mBART-50 is not evaluated for these two languages.

(6) OPUS: Another one-to-many transformer-based Neural Machine Translation (NMT) system (https://huggingface.co/Helsinki-NLP/opus-mt-en-mul) (Tiedemann and Thottingal, 2020) that can translate from English to all 9 Indian languages in our MILPaC corpus. It has a standard transformer architecture with 6 self-attentive layers in both the encoder and the decoder network, 8 attention heads in each layer, and a hidden state dimension of 512 (74M parameters). It is trained over the large bitext repository OPUS.

(7) NLLB: A seq-to-seq multilingual MT model (Costa-jussà et al., 2022) which is also based on the transformer encoder-decoder architecture. Two variants of NLLB are evaluated: the NLLB-1.3B (https://huggingface.co/facebook/nllb-200-1.3B) and NLLB-3.3B (https://huggingface.co/facebook/nllb-200-3.3B) models. NLLB-1.3B is a dense encoder-decoder model with 1.3B parameters, a model dimension of 1024, 16 attention heads, 24 encoder layers, and 24 decoder layers. NLLB-3.3B is another dense model with a larger model dimension of 2048, 16 attention heads, 24 encoder layers, and 24 decoder layers (3.3B parameters). These variants are trained over NLLB-SEED and PublicBitext. NLLB is primarily intended for translation to low-resource languages. It has been reported to outperform several state-of-the-art MT models over the FLORES-101 benchmark for many low-resource languages. It allows for translation among 200 languages, which include all the 9 Indian languages present in our corpus.

(8) IndicTrans: A transformer-4x based multilingual NMT model (https://github.com/AI4Bharat/indicTrans) trained over the Samanantar dataset for translation among Indian languages (Ramesh et al., 2022). IndicTrans has 6 encoder and 6 decoder layers, input embeddings of size 1536, and 16 attention heads (434M parameters). It is a single-script model: all languages are converted into the Devanagari script. As per the authors, this allows better lexical sharing between languages for transfer learning, prevents fragmentation of the sub-word vocabulary between Indian languages, and allows the use of a smaller sub-word vocabulary. Moreover, IndicTrans is specifically trained for English-to-Indian and Indian-to-English translation. IndicTrans has been reported to outperform many other MT models over established MT benchmarks such as FLORES-101, WAT2021, and WAT2020.

There are 2 variants available for IndicTrans – Indic-to-English and English-to-Indic which support 11 Indian languages, including all 9 of the Indian languages present in our corpus. The English-to-Indic model is used for our experiments. We refer the readers to the original paper (Ramesh et al., 2022) for more details.

We use all the above-mentioned systems in their default settings, and without any fine-tuning, since we want to check their performance off-the-shelf.

4.2. Metrics for automatic evaluation

We use the following standard metrics to evaluate the performance of MT systems:

BLEU: BLEU (Bi-Lingual Evaluation Understudy) is an automatic MT evaluation metric (Papineni et al., 2002). It measures the overlap between the translation generated by an MT system and the reference translation, using n-gram based precision $p_n$. To avoid counting the same n-gram multiple times, the count of each matching n-gram is limited to the highest frequency found in any single reference translation. This Clipped Count is used for computing the Clipped Precision $p_n$. Next, a term called ‘Brevity Penalty’ (BP) is used to penalize generated translations that are too short, so that a system cannot obtain a high precision simply by producing very short output. The formulas for BP and BLEU are as follows:

$$\mathrm{BP} = \begin{cases} 1 & \text{if } c > r \\ e^{(1 - r/c)} & \text{if } c \le r \end{cases} \tag{1}$$

$$\mathrm{BLEU} = \mathrm{BP} \cdot \exp\left( \sum_{n=1}^{N} w_n \log p_n \right) \tag{2}$$

where $c$ denotes the number of tokens in the generated translation, $r$ is the number of tokens in the reference translation, and $w_n$ represents the weight of the $n$-gram precision $p_n$. In general, $N$ is 4.
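To make the clipped precision and the Brevity Penalty concrete, here is a minimal sentence-level sketch in Python. It assumes uniform weights $w_n = 1/N$ and whitespace-tokenized input, and it takes $r$ as the length of the reference closest to the hypothesis. It is meant only to illustrate the formulas; it is not the SacreBLEU implementation used for the reported scores, and all function names are hypothetical.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Count the n-grams of order n in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def clipped_precision(hyp, refs, n):
    """Clipped n-gram precision p_n of a hypothesis against one or more references."""
    hyp_counts = ngrams(hyp, n)
    if not hyp_counts:
        return 0.0
    # Highest count of each n-gram in any single reference.
    max_ref = Counter()
    for ref in refs:
        for gram, count in ngrams(ref, n).items():
            max_ref[gram] = max(max_ref[gram], count)
    clipped = sum(min(count, max_ref[gram]) for gram, count in hyp_counts.items())
    return clipped / sum(hyp_counts.values())


def brevity_penalty(c, r):
    """BP = 1 if c > r, else exp(1 - r/c)."""
    return 1.0 if c > r else math.exp(1.0 - r / c)


def bleu(hyp, refs, max_n=4):
    """Sentence-level BLEU with uniform weights (an assumption of this sketch)."""
    precisions = [clipped_precision(hyp, refs, n) for n in range(1, max_n + 1)]
    if min(precisions) == 0.0:
        return 0.0  # log(0) is undefined; unsmoothed BLEU is 0 in this case
    c = len(hyp)
    # Reference length closest to the hypothesis length.
    r = min((abs(len(ref) - c), len(ref)) for ref in refs)[1]
    log_avg = sum(math.log(p) for p in precisions) / max_n
    return brevity_penalty(c, r) * math.exp(log_avg)


hyp = "the the the the".split()
ref = "the cat is on the mat".split()
print(clipped_precision(hyp, [ref], 1))  # "the" is clipped to its 2 occurrences in the reference
```

In this toy case the hypothesis repeats "the" four times, but only two occurrences are credited, which is exactly the effect of the Clipped Count.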

For calculating BLEU scores, we follow the approach of Ramesh et al. (2022): we first use the IndicNLP tokenizer to tokenize text in Indian languages. The tokenized text is then fed into the SacreBLEU package (Post, 2018). We state the SacreBLEU signature for BLEU to ensure uniformity and reproducibility across the models.

GLEU: GLEU (Google_BLEU) (Wu et al., 2016) is a modified metric inspired by BLEU, and is known to be a more reliable metric for evaluating the translation of individual text units, since BLEU was designed to be a corpus-wide measure. In the GLEU measurement process, all subsequences comprising 1, 2, 3, or 4 tokens from both the generated and reference translations (referred to as n-grams) are recorded. Then, the n-gram based precision and recall are computed. Finally, the GLEU score is determined as the minimum of this precision and recall. We follow the same tokenization process as discussed earlier and use the Huggingface library (https://github.com/huggingface/evaluate/tree/main/metrics/google_bleu) to compute GLEU scores.
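The GLEU computation described above can be sketched in a few lines of Python. This is an illustrative single-reference version on whitespace tokens, not the Huggingface implementation used for the reported scores; the function names are hypothetical.

```python
from collections import Counter


def all_ngrams(tokens, max_n=4):
    """Count every n-gram of order 1..max_n in a token list."""
    counts = Counter()
    for n in range(1, max_n + 1):
        for i in range(len(tokens) - n + 1):
            counts[tuple(tokens[i:i + n])] += 1
    return counts


def gleu(hyp, ref, max_n=4):
    """GLEU = min(precision, recall) over all 1..max_n-grams."""
    hyp_counts = all_ngrams(hyp, max_n)
    ref_counts = all_ngrams(ref, max_n)
    if not hyp_counts or not ref_counts:
        return 0.0
    # Multiset intersection: each n-gram matches at most as often as it occurs in both.
    matches = sum((hyp_counts & ref_counts).values())
    precision = matches / sum(hyp_counts.values())
    recall = matches / sum(ref_counts.values())
    return min(precision, recall)


hyp = "the cat sat".split()
ref = "the cat sat down".split()
print(gleu(hyp, ref))
```

Here the hypothesis has 6 n-grams, all of which occur among the 10 n-grams of the reference, so precision is 1.0 and recall is 0.6; taking the minimum penalizes the missing word.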

chrF++: chrF++ is another automatic MT evaluation metric, based on character n-gram precision and recall enhanced with word n-grams. The F-score is computed by averaging (arithmetic mean) across all character and word n-grams, with the default order for character n-grams set at 6 and for word n-grams at 2. It has been shown to have better correlation with human judgements (Popović, 2017). Here also, we use the SacreBLEU library (stated above) to compute chrF++ scores.
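The following simplified sketch illustrates the idea of averaging F-scores over character n-grams (orders 1 to 6) and word n-grams (orders 1 to 2). It assumes $\beta = 2$ (recall weighted more than precision), removes whitespace before extracting character n-grams, and skips orders for which either text has no n-grams. These choices, and the function names, are assumptions of the sketch; the scores reported in this paper come from SacreBLEU, whose implementation differs in details such as punctuation handling.

```python
from collections import Counter


def _fscore(hyp_counts, ref_counts, beta):
    """F-beta score between two n-gram multisets."""
    match = sum((hyp_counts & ref_counts).values())
    if match == 0:
        return 0.0
    precision = match / sum(hyp_counts.values())
    recall = match / sum(ref_counts.values())
    return (1 + beta ** 2) * precision * recall / (beta ** 2 * precision + recall)


def char_ngrams(text, n):
    chars = text.replace(" ", "")  # assumption: whitespace is ignored
    return Counter(chars[i:i + n] for i in range(len(chars) - n + 1))


def word_ngrams(text, n):
    words = text.split()
    return Counter(tuple(words[i:i + n]) for i in range(len(words) - n + 1))


def chrf_pp(hyp, ref, char_order=6, word_order=2, beta=2.0):
    """Arithmetic mean of per-order F-scores, scaled to 0-100."""
    scores = []
    for n in range(1, char_order + 1):
        h, r = char_ngrams(hyp, n), char_ngrams(ref, n)
        if h and r:  # skip orders longer than either text
            scores.append(_fscore(h, r, beta))
    for n in range(1, word_order + 1):
        h, r = word_ngrams(hyp, n), word_ngrams(ref, n)
        if h and r:
            scores.append(_fscore(h, r, beta))
    return 100.0 * sum(scores) / len(scores) if scores else 0.0


print(chrf_pp("the appeal is dismissed", "the appeal was dismissed"))
```

Because most character n-grams still match when a single word differs, a character-based score degrades more gradually than a word-based one, which is useful for morphologically rich languages.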

We scale all three metric values to the range [0, 100], to make them more comparable.

| EN → IN | Model | MILPaC-IP BLEU | MILPaC-IP GLEU | MILPaC-IP chrF++ | MILPaC-Acts BLEU | MILPaC-Acts GLEU | MILPaC-Acts chrF++ | MILPaC-CCI-FAQ BLEU | MILPaC-CCI-FAQ GLEU | MILPaC-CCI-FAQ chrF++ |
|---|---|---|---|---|---|---|---|---|---|---|
| EN → BN | GOOG | 27.7 | 30.7 | 56.8 | 12.0 | 17.0 | 40.7 | 52.0 | 53.6 | 74.8 |
| | MSFT | 31.0 | 33.8 | 59.4 | 18.4 | 23.1 | 45.6 | 36.5 | 40.4 | 66.2 |
| | Davinci-003 | 12.4 | 17.1 | 40.7 | 7.0 | 11.8 | 31.5 | 12.4 | 17.5 | 39.0 |
| | GPT-3.5T-Inst | 14.5 | 18.3 | 43.2 | 8.1 | 12.6 | 33.2 | 14.9 | 19.1 | 41.9 |
| | NLLB-1.3B | 25.6 | 28.4 | 54.0 | 13.9 | 19.2 | 40.9 | 18.3 | 22.4 | 42.6 |
| | NLLB-3.3B | 23.7 | 27.5 | 53.6 | 13.8 | 19.0 | 40.9 | 18.6 | 22.3 | 42.2 |
| | IndicTrans | 24.7 | 27.3 | 51.7 | 18.6 | 21.8 | 45.5 | 20.9 | 25.6 | 50.2 |
| | mBART-50 | 2.1 | 4.1 | 21.1 | 0.6 | 2.7 | 19.6 | 2.7 | 3.9 | 18.6 |
| | OPUS | 4.5 | 9.8 | 28.2 | 4.2 | 9.3 | 25.7 | 3.0 | 7.0 | 20.4 |
| EN → HI | GOOG | 36.6 | 35.3 | 53.8 | 21.2 | 26.7 | 47.1 | 46.0 | 48.4 | 67.3 |
| | MSFT | 38.5 | 37.0 | 54.9 | 46.4 | 48.9 | 67.3 | 45.5 | 48.2 | 67.5 |
| | Davinci-003 | 21.1 | 23 | 42.9 | 15.9 | 20.7 | 39.9 | 25.1 | 28.8 | 47.3 |
| | GPT-3.5T-Inst | 26.3 | 27.6 | 46.6 | 16.9 | 21.0 | 42.7 | 31.6 | 34.1 | 54.0 |
| | NLLB-1.3B | 32.3 | 32.3 | 51.2 | 34.0 | 37.6 | 56.7 | 31.2 | 33.7 | 49.8 |
| | NLLB-3.3B | 34.3 | 33.9 | 52.4 | 36.1 | 39.5 | 58.6 | 33.8 | 35.4 | 51.5 |
| | IndicTrans | 27.0 | 28.1 | 45.1 | 45.7 | 48.2 | 66.6 | 49.1 | 49.8 | 67.1 |
| | mBART-50 | 23.9 | 26.1 | 45.5 | 43.6 | 46.0 | 64.8 | 31.0 | 34.0 | 52.0 |
| | OPUS | 9.8 | 14.2 | 28.3 | 9.3 | 16.4 | 30.2 | 4.9 | 10.2 | 21.1 |
| EN → TA | GOOG | 39.3 | 41.8 | 69.4 | 8.1 | 13.7 | 37.0 | 41.4 | 44.0 | 70.7 |
| | MSFT | 35.3 | 38.7 | 68.8 | 12.1 | 17.6 | 46.3 | 29.5 | 33.7 | 64.9 |
| | Davinci-003 | 9.2 | 12.6 | 36.9 | 4.8 | 8.3 | 29.6 | 8.5 | 10.4 | 31.3 |
| | GPT-3.5T-Inst | 6.2 | 9.2 | 34.5 | 3.7 | 6.5 | 28.0 | 7.3 | 9.3 | 32.0 |
| | NLLB-1.3B | 34.2 | 36.7 | 66.5 | 8.7 | 14.9 | 40.6 | 17.0 | 21.0 | 45.2 |
| | NLLB-3.3B | 34.2 | 37.3 | 67.2 | 9.4 | 15.2 | 41.2 | 18.6 | 21.9 | 46.4 |
| | IndicTrans | 21.4 | 25.5 | 51.9 | 11.1 | 16.7 | 43.7 | 22.9 | 26.8 | 56.1 |
| | mBART-50 | 14.8 | 19.6 | 49.2 | 8.2 | 13.6 | 37.9 | 11.2 | 13.6 | 23.6 |
| | OPUS | 5.5 | 10.2 | 28.4 | 9.3 | 16.4 | 30.2 | 2.4 | 6.3 | 19.5 |
| EN → MR | GOOG | 23.0 | 25.6 | 51.6 | 8.6 | 14.6 | 37.5 | 51.3 | 53.0 | 74.8 |
| | MSFT | 19.4 | 22.8 | 49.6 | 13.9 | 19.6 | 45.0 | 34.1 | 38.3 | 65.8 |
| | Davinci-003 | 7.6 | 11.4 | 34 | 4.5 | 7.9 | 29.1 | 11.7 | 15.0 | 35.2 |
| | GPT-3.5T-Inst | 9.5 | 13.6 | 37.3 | 3.5 | 6.1 | 26.5 | 9.3 | 12.0 | 33.7 |
| | NLLB-1.3B | 17.6 | 21.7 | 48.0 | 12.8 | 17.8 | 42.3 | 17.9 | 21.1 | 42.3 |
| | NLLB-3.3B | 18.6 | 21.5 | 47.9 | 12.8 | 18.2 | 42.6 | 20.2 | 23.5 | 44.8 |
| | IndicTrans | 16.0 | 19.6 | 44.0 | 12.9 | 18.5 | 42.1 | 28.2 | 32.0 | 56.7 |
| | mBART-50 | 1.9 | 4.1 | 23.6 | 1.7 | 4.6 | 26.4 | 3.7 | 5.1 | 23.6 |
| | OPUS | 4.0 | 8.2 | 24.7 | 3.3 | 8.4 | 23.1 | 2.8 | 6.2 | 18.0 |

| EN → IN | Model | MILPaC-IP BLEU | MILPaC-IP GLEU | MILPaC-IP chrF++ | MILPaC-Acts BLEU | MILPaC-Acts GLEU | MILPaC-Acts chrF++ |
|---|---|---|---|---|---|---|---|
| EN → TE | GOOG | 22.4 | 23.2 | 48.9 | 6.6 | 11.4 | 28.8 |
| | MSFT | 15.8 | 18.3 | 44.8 | 12.0 | 16.9 | 39.4 |
| | Davinci-003 | 6.2 | 9.7 | 29.7 | 4.2 | 7.2 | 22.7 |
| | GPT-3.5T-Inst | 4.4 | 6.8 | 27.9 | 3.5 | 6.0 | 23.0 |
| | NLLB-1.3B | 18.2 | 20.2 | 45.8 | 8.0 | 13.4 | 33.5 |
| | NLLB-3.3B | 18.7 | 20.7 | 46.3 | 7.5 | 12.8 | 33.8 |
| | IndicTrans | 15.5 | 17.6 | 40.6 | 11.9 | 16.8 | 40.4 |
| | mBART-50 | 1.4 | 3.8 | 10.9 | 3.4 | 7.6 | 15.7 |
| | OPUS | 3.7 | 7.4 | 22.7 | 5.4 | 9.9 | 23.7 |
| EN → ML | GOOG | 22.3 | 27.7 | 57.5 | 7.3 | 12.4 | 32.2 |
| | MSFT | 34.2 | 37.7 | 66.5 | 10.8 | 17.0 | 46.2 |
| | Davinci-003 | 5.1 | 8.5 | 28.9 | 4.0 | 7.0 | 24.8 |
| | GPT-3.5T-Inst | 5.3 | 8.7 | 31.6 | 2.5 | 4.3 | 21.2 |
| | NLLB-1.3B | 25.6 | 29.7 | 60.0 | 8.0 | 13.0 | 39.3 |
| | NLLB-3.3B | 25.3 | 29.6 | 60.5 | 7.5 | 12.7 | 38.7 |
| | IndicTrans | 19.8 | 24.5 | 48.9 | 16.6 | 21.2 | 50.3 |
| | mBART-50 | 2.6 | 5.7 | 20.3 | 4.4 | 8.7 | 23.9 |
| | OPUS | 3.9 | 8.1 | 25.4 | 5.4 | 10.1 | 24.8 |
| EN → PA | GOOG | 17.8 | 20.8 | 41.3 | 8.9 | 14.1 | 28.6 |
| | MSFT | 30.2 | 30.5 | 51.3 | 40.1 | 42.4 | 62.5 |
| | Davinci-003 | 9.1 | 12.9 | 31 | 6.4 | 10.7 | 26.8 |
| | GPT-3.5T-Inst | 13.4 | 16.2 | 35.5 | 6.9 | 10.7 | 29.1 |
| | NLLB-1.3B | 27.5 | 28.1 | 48.5 | 19.6 | 25.0 | 44.0 |
| | NLLB-3.3B | 29.6 | 29.8 | 49.8 | 20.5 | 25.9 | 45.0 |
| | IndicTrans | 28.1 | 28.8 | 47.6 | 24.0 | 28.8 | 48.8 |
| | OPUS | 5.5 | 10.3 | 22.3 | 6.7 | 12.6 | 25.7 |
| EN → OR | GOOG | 2.4 | 6.5 | 29.0 | 4.1 | 8.2 | 26.3 |
| | MSFT | 5.5 | 9.0 | 33.7 | 7.6 | 13.3 | 37.3 |
| | Davinci-003 | 3.3 | 6.8 | 25.8 | 3.3 | 6.1 | 22.5 |
| | GPT-3.5T-Inst | 2.1 | 4.4 | 22.4 | 1.9 | 4.3 | 20.8 |
| | NLLB-1.3B | 5.3 | 8.8 | 33.1 | 9.5 | 14.2 | 37.0 |
| | NLLB-3.3B | 6.3 | 10.1 | 34.6 | 10.1 | 15.5 | 39.3 |
| | IndicTrans | 4.9 | 8.6 | 30.5 | 8.9 | 15.0 | 40.4 |
| | OPUS | 2.3 | 5.2 | 21.0 | 3.4 | 7.5 | 22.9 |
| EN → GU | GOOG | 43.6 | 46.0 | 67.8 | 14.3 | 19.5 | 42.1 |
| | MSFT | 47.3 | 49.2 | 70.6 | 21.7 | 26.1 | 51.9 |
| | Davinci-003 | 12.3 | 17.3 | 37.2 | 8.5 | 12.3 | 32.0 |
| | GPT-3.5T-Inst | 15.8 | 19.5 | 40.9 | 5.4 | 10.1 | 31.5 |
| | NLLB-1.3B | 43.5 | 45.6 | 66.4 | 20.3 | 24.4 | 49.1 |
| | NLLB-3.3B | 42.6 | 44.8 | 66.2 | 19.7 | 24.2 | 49.8 |
| | IndicTrans | 31.3 | 34.9 | 56.3 | 22.9 | 27.0 | 50.9 |
| | mBART-50 | 1.7 | 3.2 | 5.2 | 0.6 | 3.5 | 3.0 |
| | OPUS | 9.2 | 14.7 | 30.0 | 5.1 | 9.8 | 23.7 |

4.3. Results of automatic evaluation

Table 8 shows the performances of all the MT systems across the 3 datasets of MILPaC for the languages Bengali (BN), Hindi (HI), Tamil (TA), and Marathi (MR). Table 9 shows the performances of all the MT systems over MILPaC-IP and MILPaC-Acts for the languages Telugu (TE), Malayalam (ML), Punjabi (PA), Oriya (OR), and Gujarati (GU) (since MILPaC-CCI-FAQ does not have these languages). All experiments were conducted on a machine having 2 x NVIDIA Tesla P100 16GB GPU.

Note that the Davinci-003 and GPT-3.5T-Inst have a maximum bound on the number of tokens (as stated earlier). Hence, out of the 1,460 EN-IN pairs in the MILPaC-CCI-FAQ dataset, the 77 longest text units could not be fed into the Davinci-003 and GPT-3.5T-Inst models in their entirety (these include 19 text units in BN, 15 in HI, 31 in TA, and 12 in MR). One option was to break these long text units into chunks and then translate chunk-wise, but we preferred not to translate chunk-wise in order to get a fair comparison with other models. Hence, the 77 longest text units were not considered while evaluating Davinci-003 and GPT-3.5T-Inst models over the MILPaC-CCI-FAQ dataset. For all other MT systems, the values reported are averaged over all text-pairs in each dataset.

We find that no single model performs the best in all scenarios. MSFT, GOOG, and IndicTrans generally perform the best in most scenarios. The same trend was observed by Ramesh et al. (2022) for Indian non-legal text, i.e., IndicTrans, GOOG, and MSFT outperform mBART-50 and OPUS. A notable exception is that NLLB performs the best for EN-OR translation, possibly because Oriya is a resource-poor language, which NLLB is specially designed to handle.

The translation performances of both Davinci-003 and GPT-3.5T-Inst are relatively poor. One particular problem we observed in the translations using these two LLMs is that, for longer text units, both models sometimes repeat multiple times the translation of the last few words in their translated text.

The scores for MILPaC-Acts are generally lower than those for the other datasets, although there are exceptions (e.g., MSFT and IndicTrans for EN-HI). This is expected since MILPaC-Acts has very formal legal language, which is challenging for all MT systems. Interestingly, though MSFT and GOOG perform the best over most datasets, IndicTrans performs better over MILPaC-Acts for several Indian languages (e.g., Malayalam & Gujarati). The superior performance of IndicTrans over MILPaC-Acts may stem partly from the fact that it was trained on some legal documents from Indian government websites (such as State Assembly discussions), according to Ramesh et al. (2022). However, it is not publicly known what data commercial systems such as GOOG and MSFT are trained on.

4.4. Evaluation through Law practitioners

Apart from the automatic evaluation of translation performance (as detailed above), we also evaluate the performance of MT systems through a set of Law students. This is an important part of the evaluation, since we can get an idea of how well automatic translation performance metrics agree with the opinion of domain experts.

Recruiting Law students: We recruited senior LLB & LLM students from the Rajiv Gandhi School of Intellectual Property Law which is one of the most reputed Law schools in India. We recruited these students based on the recommendations of a Professor of the same Law school, and considering two requirements – (i) the students should have good Legal domain knowledge; (ii) they should be fluent in English and be native speakers of some Indian language (for which they will evaluate translations). We could obtain Law students who are native speakers of only four Indian languages. Specifically, we recruited 5 Law students in total, among whom 2 are native speakers of Hindi, and the other three are native speakers of Bengali, Tamil, and Marathi (1 for each language). Hence, this human evaluation is carried out for these 4 Indian languages only.

Survey Setup: Given the limited availability of Law students, only the 3 MT systems GOOG, MSFT, and IndicTrans (the best performers in our automatic evaluation) were evaluated. MILPaC-CCI-FAQ and MILPaC-Acts were selected for this human evaluation since these are the more challenging datasets. We randomly selected 50 English text units from each of the MILPaC-CCI-FAQ and MILPaC-Acts datasets, together with their translations in Hindi, Bengali, Tamil, and Marathi by the 3 MT systems stated above. Each Law student was then shown these randomly selected English text units (50 from each of the two datasets) and their translations (by the said systems) in his/her native language, and was asked to evaluate the translation quality.

The Law students who participated in our human surveys were clearly informed of the purpose for which the annotations/surveys were being carried out. They consented to participation in the survey and agreed that automatic translation tools can be of great help in the Indian legal scenario. Each of them was paid a mutually agreed honorarium for the evaluation. We took all possible steps to ensure that their annotations are unbiased (e.g., by anonymizing the names of the MT systems whose outputs they were annotating) and accurate (e.g., by asking them to write justifications for low scores, post-survey discussions, etc.). We also conducted a pilot survey (described below) and discussed with them after their evaluation to get their feedback. Through all these steps, we attempted to ensure that the annotations are done rigorously.

The Law student who is a native speaker of Bengali was not available during the evaluation of MILPaC-Acts. Thus, in total, we performed human evaluations for 1,050 text pairs [(50 text units of MILPaC-CCI-FAQ × 3 MT systems × 4 language pairs) + (50 text units of MILPaC-Acts × 3 MT systems × 3 language pairs)].

Metrics for human evaluation:

Typically, manual evaluation of MT systems uses metrics such as Accuracy / Adequacy and Fluency (Escribe, 2019). Since we are considering the domain of Law, we asked the Law students how they would like to evaluate translations. To this end, an initial pilot survey was conducted; through this pilot survey and the subsequent discussion, we agreed upon the following 3 metrics for the evaluation of legal translations:

(1) Preservation of Meaning (POM): similar to the standard measure of Accuracy/Adequacy, this captures how well the translation captures all of the meaning/information in the source text.

(2) Suitability for Legal Use (SLU): a domain-specific metric that checks whether the translation is sufficiently formal and specific/unambiguous to be used in Legal drafting. Note that SLU has some differences with POM, which are explained later in this section.

(3) Fluency (FLY): how grammatically correct and fluent the translations are.

All three metrics were recorded on a Likert scale of 1-5, with 1 being the lowest score (worst translation performance) and 5 being the highest (best translation performance).

| Dataset | EN → IN | Model | Automatic: BLEU | Automatic: GLEU | Automatic: chrF++ | Human: POM | Human: SLU | Human: FLY |
|---|---|---|---|---|---|---|---|---|
| MILPaC-CCI-FAQ | EN → BN | IndicTrans | 19.4 | 24.3 | 46.5 | 3.50 | 3.48 | 3.52 |
| | | MSFT | 33.7 | 38.6 | 64.3 | 3.56 | 3.54 | 3.56 |
| | | GOOG | 50.6 | 52.4 | 73.5 | 3.48 | 3.40 | 3.46 |
| | EN → HI | IndicTrans | 49.4 | 50.6 | 66.2 | 3.67 | 3.53 | 3.97 |
| | | MSFT | 44.9 | 47.9 | 66.8 | 2.20 | 1.95 | 2.23 |
| | | GOOG | 44.7 | 47.7 | 66.1 | 2.19 | 2.16 | 2.13 |
| | EN → MR | IndicTrans | 28.9 | 32.6 | 56.1 | 4.02 | 4.02 | 4.02 |
| | | MSFT | 32.6 | 37.1 | 64.6 | 4.06 | 4.06 | 4.04 |
| | | GOOG | 49.9 | 52.0 | 73.7 | 3.54 | 3.46 | 3.52 |
| | EN → TA | IndicTrans | 22.0 | 26.4 | 54.6 | 2.98 | 2.86 | 2.98 |
| | | MSFT | 27.0 | 31.5 | 62.0 | 2.86 | 2.74 | 2.86 |
| | | GOOG | 38.8 | 41.5 | 67.8 | 2.80 | 2.80 | 2.80 |
| MILPaC-Acts | EN → HI | IndicTrans | 47.1 | 49.5 | 69.3 | 4.65 | 4.07 | 4.74 |
| | | MSFT | 47.4 | 49.6 | 68.7 | 4.32 | 3.80 | 4.54 |
| | | GOOG | 18.4 | 24.2 | 44.7 | 3.13 | 2.52 | 3.25 |
| | EN → MR | IndicTrans | 18.7 | 23.7 | 47.7 | 4.0 | 3.46 | 3.90 |
| | | MSFT | 15.7 | 21.6 | 47.1 | 3.60 | 2.46 | 3.56 |
| | | GOOG | 10.4 | 16.5 | 39.9 | 1.94 | 1.16 | 1.9 |
| | EN → TA | IndicTrans | 12.3 | 17.1 | 43.0 | 4.0 | 3.76 | 4.02 |
| | | MSFT | 13.1 | 18.0 | 43.3 | 3.84 | 3.72 | 3.88 |
| | | GOOG | 10.1 | 15.0 | 36.0 | 3.02 | 2.78 | 3.06 |

Results of the human survey: Table 10 shows the mean scores (POM, SLU, FLY) given by the Law students to each MT system for MILPaC-CCI-FAQ & MILPaC-Acts. For comparison, we also report the automatic metrics averaged over the same text-pairs that the Law students evaluated. For MILPaC-CCI-FAQ, the Law students preferred IndicTrans for Hindi & Tamil, and MSFT for Bengali & Marathi, even though GOOG performs better for most of these languages according to automatic metrics. For MILPaC-Acts, the Law students preferred the IndicTrans translations for all three Indian languages, even though MSFT obtained the highest BLEU & GLEU scores for EN-TA and EN-HI translations. The good performance of IndicTrans over MILPaC-Acts may be because IndicTrans has been pretrained on legislative documents in some Indian languages (Ramesh et al., 2022). However, the Law students were not very satisfied with the performance of most MT systems over MILPaC-CCI-FAQ (apart from EN-MR translation).

Comparing the two metrics for human survey (SLU vs POM): For human evaluation of Machine Translation in general domains, the two metrics Accuracy & Fluency are deemed to be sufficient. Accuracy checks whether the meaning has been rendered correctly in the translation, while Fluency checks whether the translation is grammatically correct.

However, we understood from our discussions with the Law students that, for evaluating translations in the Legal domain, a third metric (SLU: Suitability for Legal Use) is also essential. A translation may perfectly capture the meaning of the source text, but if the language of the translation is not in keeping with Legal standards, then the translated text cannot be used for purposes such as legal drafting. This difference arises mainly because legal language needs to be very formal and unambiguous, particularly while drafting laws and guidelines. Sometimes exact words and phrases need to be used; if a translation uses a synonym or a similar phrase instead, it will not be considered suitable for legal use, even if the meaning of the source text is well captured. This is why, after discussion with the Law students, we decided to use two separate metrics, Preservation of Meaning (POM) and Suitability for Legal Use (SLU), during our human survey.

It can be seen from Table 10 that, for the MILPaC-Acts dataset, SLU scores are lower than the POM scores for all Indian languages and all MT systems, often substantially so. This means that even when the translations capture the meaning of the source text well, the translated texts are not sufficiently formal for legal drafting, in the opinion of the Law students. This problem is particularly important for the MILPaC-Acts dataset, which contains very formal legal text that the MT systems often fail to generate. On the other hand, the SLU scores are much closer to the POM scores for the MILPaC-CCI-FAQ dataset, possibly because this dataset contains simplified versions of legal text. How legal translations can be made sufficiently formal when necessary, e.g., when the formal laws of a country are to be translated, is an interesting direction for future research.

Inter Annotator Agreement (IAA): We could compute IAA only for English-to-Hindi translations, since we had 2 annotators only for Hindi. For each dataset (MILPaC-CCI-FAQ and MILPaC-Acts), we considered all the 150 (50 text-pairs × 3 systems) EN-HI translations for which the 2 annotators gave ratings, and computed the Pearson’s correlation between the sets of scores given by the two annotators. The IAA values show relatively high agreement for MILPaC-CCI-FAQ (agreement for POM: 0.68, SLU: 0.71, FLY: 0.70; average IAA: 0.70) and moderate agreement for MILPaC-Acts (agreement for POM: 0.52, SLU: 0.43, FLY: 0.55; average IAA: 0.50).
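For reference, the Pearson's correlation between two annotators' Likert scores can be computed as in the minimal sketch below. The function name and the score lists are hypothetical illustrations, not data from our survey.

```python
import math


def pearson(xs, ys):
    """Pearson's correlation coefficient between two equal-length score lists."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two lists of equal length with at least 2 items")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when one list is constant")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical POM scores (1-5) from two annotators for the same translations.
annotator_a = [5, 4, 3, 2, 4, 1]
annotator_b = [4, 4, 3, 1, 5, 2]
print(round(pearson(annotator_a, annotator_b), 2))
```

The same function applies unchanged when one list holds an automatic metric (e.g., GLEU per text-pair) and the other holds a human score for the same text-pairs.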

It is to be noted that different Law practitioners have different preferences about translations of legal text (as shown by the moderate IAA between the two annotators for English-to-Hindi translations). Hence the observations presented above are according to the Law students we consulted, and can vary in case of other Law practitioners.

| Dataset | EN → IN | Human metric | GLEU | chrF++ |
|---|---|---|---|---|
| MILPaC-CCI-FAQ | EN → BN | POM | 0.117 | 0.138 |
| | | SLU | 0.105 | 0.130 |
| | | FLY | 0.105 | 0.122 |
| | EN → HI | POM | 0.262 | 0.208 |
| | | SLU | 0.238 | 0.188 |
| | | FLY | 0.222 | 0.170 |
| | EN → MR | POM | | |
| | | SLU | | |
| | | FLY | | |
| | EN → TA | POM | 0.180 | 0.186 |
| | | SLU | 0.208 | 0.208 |
| | | FLY | 0.180 | 0.186 |
| | Overall | POM | 0.065 | 0.097 |
| | | SLU | 0.057 | 0.091 |
| | | FLY | 0.070 | 0.092 |
| MILPaC-Acts | EN → HI | POM | 0.602 | 0.648 |
| | | SLU | 0.648 | 0.687 |
| | | FLY | 0.607 | 0.650 |
| | EN → MR | POM | 0.203 | 0.272 |
| | | SLU | 0.269 | 0.280 |
| | | FLY | 0.194 | 0.269 |
| | EN → TA | POM | 0.164 | 0.314 |
| | | SLU | 0.135 | 0.282 |
| | | FLY | 0.147 | 0.314 |
| | Overall | POM | 0.378 | 0.435 |
| | | SLU | 0.335 | 0.377 |
| | | FLY | 0.403 | 0.457 |

Correlation of automatic metrics with human scores: Table 10 brought out some differences in the MT system performances according to the automatic metrics and the scores given by the Law students (as stated above). These differences led us to investigate the correlation between the automatic metrics (GLEU and chrF++) and human scores (POM, SLU, FLY). For each Indian language and each dataset, we computed the Pearson’s correlation over the data points that were manually evaluated (50 text-pairs × 3 MT systems).

The correlation values for each language pair are given in Table 11, for both the MILPaC-CCI-FAQ and MILPaC-Acts datasets. (As stated earlier, the Law student who is a native speaker of Bengali was not available during the expert evaluation of MILPaC-Acts.) We observe the correlations of automatic metrics and human scores to be moderate for MILPaC-Acts (between roughly 0.34 and 0.46 in the Overall rows of Table 11). Only for EN-HI are the correlations higher (above 0.60). For MILPaC-CCI-FAQ, in contrast, all correlations are low (below 0.10 in the Overall rows). Among the automatic metrics, chrF++ generally shows slightly higher correlation with human scores than GLEU. Such low correlations with human scores indicate the need for improvement in the automatic evaluation of MT systems in the legal domain.

4.5. Analysis of errors committed by the MT systems

As part of our survey (that has been described earlier), we also asked the Law students to give, along with the POM, SLU, and FLY scores, comments to explain why they gave certain translations low scores. We also asked them to identify different types of errors committed by the MT systems. They identified various types of errors such as inclusion of extra words in translations, mis-translation of terms, presence of untranslated portions, consistency errors (inconsistency between the factual information in the original and translated text), and so on. Table 12 shows the common errors and the percentage of text-pairs in which each error was identified by the Law students (across text-pairs in all Indian languages, that were evaluated by them). GOOG and MSFT frequently have untranslated portions (which possibly led to their poor expert scores). Most errors by IndicTrans were minor punctuation errors, which did not affect the translation quality much; however IndicTrans often mis-translates legal terms. We hope that these observations will help in improving these MT systems in future.

| Error | GOOG | MSFT | IndicTrans |
|---|---|---|---|
| Extra words included | 0.7% | 0.4% | 0.9% |
| Omission of words | 0.9% | 0.0% | 0.9% |
| General terms mistranslated | 0.9% | 1.3% | 1.1% |
| Legal terms mistranslated | 1.6% | 3.8% | 5.1% |
| Untranslated Portions | 10.9% | 7.3% | 2.7% |
| Grammatical Errors | 1.1% | 0.2% | 0.0% |
| Spelling Errors | 0.2% | 0.2% | 0.0% |
| Punctuation Errors | 0.4% | 1.6% | 6.9% |
| Consistency Errors | 2.4% | 0.7% | 0.9% |

Some examples of errors committed by the MT systems are shown in Figure 3. For each example, we report the POM, SLU, and FLY scores, along with the comments written by the Law students (who gave the scores) explaining the problem(s) / error(s) in the translated text. We see examples where the Law students opined that the meaning of the original text has not been captured in the translation, examples where a portion of the original text has not been translated, and examples where certain terms having domain-specific significance have not been properly translated.

5. Other applications of MILPaC

So far, we have used MILPaC to benchmark MT systems for English-to-Indian language translation of legal text. In addition, the MILPaC corpus has several other potential applications, some of which we describe briefly in this section.

| IN → IN | Model | Mode | BLEU | GLEU | chrF++ |
|---|---|---|---|---|---|
| PA → MR | MSFT | PA → MR | 17.2 | 20.2 | 44.5 |
| | | PA → EN → MR | 17.2 | 20.3 | 44.6 |
| | GOOG | PA → MR | 15.1 | 18.6 | 42 |
| | | PA → EN → MR | 13.3 | 16.8 | 39.5 |
| GU → MR | MSFT | GU → MR | 15.8 | 20.4 | 46.5 |
| | | GU → EN → MR | 16.0 | 20.5 | 46.6 |
| | GOOG | GU → MR | 19.6 | 23.6 | 49.1 |
| | | GU → EN → MR | 15.7 | 20.4 | 45.4 |
| BN → TE | MSFT | BN → TE | 20.3 | 22.9 | 49.4 |
| | | BN → EN → TE | 20.4 | 23.0 | 49.5 |
| | GOOG | BN → TE | 19.3 | 22.3 | 47.3 |
| | | BN → EN → TE | 16.0 | 20.1 | 43.8 |

5.1. Evaluating Indian-to-Indian legal translations

Translation of legal text from one Indian language to another may be necessary, e.g., in scenarios where a person who is a native of one Indian state (and understands the local language of his/her native state) becomes a party to a legal case in a lower court of another state having a different local language. MILPaC can be used for evaluating Indian-to-Indian language translation in the legal domain as well. To demonstrate this, we investigate the question: while translating between two low-resource Indian languages, is it better to translate directly, or through a high-resource intermediary language? While answering this question systematically will require a full-fledged study, here we briefly investigate it using only the MILPaC-IP dataset and the GOOG and MSFT systems.

We select a low-resource Indian language-pair (e.g., Punjabi to Marathi translation), and then consider two approaches for this translation: (1) direct translation (PA → MR) and (2) translation through English as an intermediary language (PA → EN → MR). Table 13 compares these two approaches for three Indian language-pairs: Punjabi to Marathi, Gujarati to Marathi, and Bengali to Telugu. Interestingly, for GOOG, direct translation is consistently better, but for MSFT, going through English marginally improves performance. While more experimentation is needed for a detailed understanding, we here demonstrate that MILPaC can be used to address such questions on legal translation from one Indian language to another.
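The two approaches compared above differ only in how the translation calls are composed, as the following sketch shows. The `Translator` signature and the stub translator are hypothetical placeholders for a real MT system such as GOOG or MSFT; no actual MT API is called here.

```python
from typing import Callable

# Hypothetical signature: (text, source_language, target_language) -> translated text.
Translator = Callable[[str, str, str], str]


def translate_direct(translate: Translator, text: str, src: str, tgt: str) -> str:
    """Approach (1): translate directly from src to tgt."""
    return translate(text, src, tgt)


def translate_via_pivot(translate: Translator, text: str, src: str, tgt: str,
                        pivot: str = "en") -> str:
    """Approach (2): translate src -> pivot, then pivot -> tgt."""
    intermediate = translate(text, src, pivot)
    return translate(intermediate, pivot, tgt)


def tagging_stub(text: str, src: str, tgt: str) -> str:
    """Stand-in for an MT system: records which translation steps were applied."""
    return f"{text}[{src}->{tgt}]"


print(translate_direct(tagging_stub, "x", "pa", "mr"))
print(translate_via_pivot(tagging_stub, "x", "pa", "mr"))
```

Both outputs can then be scored against the same MILPaC gold-standard translation in the target language, which is what Table 13 reports.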

5.2. Use of MILPaC in fine-tuning MT systems

The datasets in MILPaC are much smaller in size compared to general-domain parallel corpora, due to two primary reasons. First, developing such corpora requires the involvement of Law practitioners, whose time is expensive and limited. Second, creating these datasets required a lot of manual effort, due to the poor quality of the documents (especially in the MILPaC-Acts dataset) and the consequent challenges in OCR of these documents (detailed in the Appendix). Thus, the MILPaC datasets are not suitable for training Neural MT systems from scratch, and we propose this corpus mainly for the evaluation of MT systems. However, in this section, we show that MILPaC is also suitable for fine-tuning MT systems to improve their performance over Indian Legal text.

| EN → IN | IndicTrans BLEU | IndicTrans GLEU | IndicTrans chrF++ | IndicTrans-FT BLEU | IndicTrans-FT GLEU | IndicTrans-FT chrF++ |
|---|---|---|---|---|---|---|
| EN → BN | 17.7 | 22.6 | 46.9 | 41.8 | 43.7 | 67.1 |
| EN → HI | 42.7 | 45.0 | 62.7 | 53.4 | 54.2 | 71.6 |
| EN → MR | 20.6 | 25.0 | 50.0 | 40.1 | 41.5 | 65.4 |
| EN → TA | 19.4 | 23.4 | 51.5 | 32.7 | 35.1 | 64.6 |

To this end, we randomly split MILPaC (combining all 3 datasets) in a 70 (train) - 10 (validation) - 20 (test) ratio, language-wise. For instance, we combined the English-Bengali text-pairs from all 3 datasets, and then split this combined set to get the train-validation-test splits for the Bengali language. Then, for each Indian language, we fine-tuned the open-source MT model IndicTrans (Ramesh et al., 2022) with the 70% train set (combined 70% of all 3 MILPaC datasets). We used the 10% validation split (combined 10% of all 3 datasets) to select the best checkpoint among different iterations, and to prevent overfitting by early stopping with a patience value of 3. Then we checked the performance of the fine-tuned IndicTrans (which we call ‘IndicTrans-FT’) over the 20% test set (20% of the 3 datasets combined).
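The splitting and checkpoint-selection logic described above can be sketched as follows. The random seed, the function names, and the assumption that a higher validation score is better (e.g., validation BLEU) are illustrative choices, not details of our training setup.

```python
import random


def split_70_10_20(pairs, seed=0):
    """Randomly split text-pairs into 70% train, 10% validation, 20% test."""
    shuffled = list(pairs)
    random.Random(seed).shuffle(shuffled)  # seed is an arbitrary choice for this sketch
    n_train = len(shuffled) * 7 // 10
    n_val = len(shuffled) // 10
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, val, test


def best_checkpoint(val_scores, patience=3):
    """Return (index, score) of the best checkpoint under early stopping.

    Training stops once `patience` consecutive evaluations fail to improve
    on the best validation score seen so far (higher is assumed better).
    """
    best_idx, best_score, since_best = -1, float("-inf"), 0
    for idx, score in enumerate(val_scores):
        if score > best_score:
            best_idx, best_score, since_best = idx, score, 0
        else:
            since_best += 1
            if since_best >= patience:
                break
    return best_idx, best_score


train, val, test = split_70_10_20(range(100))
print(len(train), len(val), len(test))
# Hypothetical validation scores per evaluation step.
print(best_checkpoint([10.0, 12.0, 11.0, 11.5, 11.9, 13.0]))
```

In the second call, training stops after three evaluations without improvement over 12.0, so the later score of 13.0 is never reached; this is the trade-off that the patience value controls.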

Table 14 compares the performance of the off-the-shelf IndicTrans (Ramesh et al., 2022) and the fine-tuned IndicTrans-FT over the 20% test set for 4 Indian languages. For every language, we find that IndicTrans-FT gives substantial improvements over the off-the-shelf IndicTrans. Thus, though the intended primary use of MILPaC is to evaluate MT systems, these results show that MILPaC can also be used to fine-tune general domain MT systems to improve their translations in the legal domain.
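To make the size of these improvements concrete, the short script below computes the absolute gain of IndicTrans-FT over IndicTrans for each metric. The scores are copied from Table 14; the dictionary layout and the function name are illustrative choices only.

```python
# Scores from Table 14, ordered as (BLEU, GLEU, chrF++).
METRICS = ("BLEU", "GLEU", "chrF++")
TABLE_14 = {
    "EN-BN": {"IndicTrans": (17.7, 22.6, 46.9), "IndicTrans-FT": (41.8, 43.7, 67.1)},
    "EN-HI": {"IndicTrans": (42.7, 45.0, 62.7), "IndicTrans-FT": (53.4, 54.2, 71.6)},
    "EN-MR": {"IndicTrans": (20.6, 25.0, 50.0), "IndicTrans-FT": (40.1, 41.5, 65.4)},
    "EN-TA": {"IndicTrans": (19.4, 23.4, 51.5), "IndicTrans-FT": (32.7, 35.1, 64.6)},
}


def absolute_gains(table):
    """Return per-language, per-metric gains (fine-tuned minus off-the-shelf)."""
    gains = {}
    for pair, systems in table.items():
        base, tuned = systems["IndicTrans"], systems["IndicTrans-FT"]
        gains[pair] = {m: round(t - b, 1) for m, b, t in zip(METRICS, base, tuned)}
    return gains


gains = absolute_gains(TABLE_14)
for pair, per_metric in gains.items():
    print(pair, per_metric)
```

On these numbers, the BLEU gain ranges from 10.7 points (EN-HI, which already had the strongest off-the-shelf scores) to 24.1 points (EN-BN), and every metric improves for every language.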

6. Conclusion

This work develops the first parallel corpus for Indian languages in the legal domain. We benchmark several MT systems using the corpus, including both automatic evaluation and a human survey by Law students. This work yields important insights for the future: (1) It is necessary to improve existing MT systems for effective use in the legal domain. We indicate where to improve, by listing common errors committed by some of the MT systems (Table 12). (2) Automatic metrics correlate well with human scores only for EN-to-HI translation, but not for other Indian languages; hence better automatic metrics need to be devised for the legal domain, especially for quantifying the suitability of translated text for legal drafting (SLU). We also demonstrate the applicability of the MILPaC corpus for improving MT systems by fine-tuning them. We believe that the corpus as well as the insights derived from this study will be important to improve the translation of legal text to Indian languages, a task that is critical for making justice accessible to millions of Indians.

Our study highlights that existing state-of-the-art MT systems often commit various errors in legal translations. Therefore, we do not advocate for the direct replacement of human translators (such as law practitioners who manually translate legal documents) with existing MT models. Rather, the study is performed keeping in mind that automatic MT systems can significantly speed up the litigation process, which is highly beneficial in countries with overburdened legal systems, such as India. Therefore, we wish to advocate a human-in-the-loop approach where, as a first step, an existing MT system (e.g., the top performers over MILPaC) can be used to generate initial drafts, significantly expediting the translation process. Then, these drafts should be reviewed by a domain expert to correct errors and to make the translations more precise where necessary. This human-in-the-loop approach will harness the speed of MT systems while maintaining the quality and reliability ensured by domain experts.

Acknowledgements.

We thank the Law students from the Rajiv Gandhi School of Intellectual Property Law, India – Purushothaman R, Samina Khanum, Krishna Das, Md. Ajmal Ibrahimi, Shriyash Shingare – who helped us in evaluating the translations and provided valuable suggestions and observations during the evaluation. We thank Prof. Shouvik Guha and Prof. Anirban Mazumder of The West Bengal National University of Juridical Sciences, Kolkata for allowing us to use their “My IP” Primers. We are grateful to the Ministry of Law and Justice, Government of India, particularly Dr. Nirmala Krishnamoorthy, for allowing us to use the Central Acts in regional languages for our research. We also thank the Competition Commission of India, particularly Mr. Pramod Kumar Pramod, for allowing us to use their FAQ booklets for developing our dataset. Debtanu Datta is supported by the Prime Minister’s Research Fellowship (PMRF) from the Government of India. The work is partially supported by the Technology Innovation Hub (TIH) on AI for Interdisciplinary Cyber-Physical Systems (AI4ICPS) set up by IIT Kharagpur under the aegis of DST, Government of India (GoI).

References

- Ahmadi et al. (2022) Sina Ahmadi, Hossein Hassani, and Daban Q. Jaff. 2022. Leveraging Multilingual News Websites for Building a Kurdish Parallel Corpus. ACM Transactions on Asian and Low-Resource Language Information Processing 21, 5 (2022).

- Baisa et al. (2016) Vít Baisa, Jan Michelfeit, Marek Medveď, and Miloš Jakubíček. 2016. European union language resources in sketch engine. In Proceedings of the Tenth International Conference on Language Resources and Evaluation (LREC’16). European Language Resources Association (ELRA), 2799–2803.

- Costa-jussà et al. (2022) Marta R Costa-jussà, James Cross, Onur Çelebi, Maha Elbayad, Kenneth Heafield, Kevin Heffernan, Elahe Kalbassi, Janice Lam, Daniel Licht, Jean Maillard, et al. 2022. No language left behind: Scaling human-centered machine translation. arXiv preprint arXiv:2207.04672 (2022).

- Dabre and Sukhoo (2022) Raj Dabre and Aneerav Sukhoo. 2022. KreolMorisienMT: A Dataset for Mauritian Creole Machine Translation. In Findings of the Association for Computational Linguistics: AACL-IJCNLP 2022, Yulan He, Heng Ji, Sujian Li, Yang Liu, and Chua-Hui Chang (Eds.). Association for Computational Linguistics, Online only, 22–29.

- Escribe (2019) Marie Escribe. 2019. Human Evaluation of Neural Machine Translation: The Case of Deep Learning. In Proceedings of the Human-Informed Translation and Interpreting Technology Workshop (HiT-IT 2019). Varna, Bulgaria, 36–46.

- Goyal et al. (2021) Naman Goyal, Cynthia Gao, Vishrav Chaudhary, Peng-Jen Chen, Guillaume Wenzek, Da Ju, Sanjana Krishnan, Marc’Aurelio Ranzato, Francisco Guzmán, and Angela Fan. 2021. The FLORES-101 Evaluation Benchmark for Low-Resource and Multilingual Machine Translation. CoRR abs/2106.03193 (2021). arXiv:2106.03193

- Haddow and Kirefu (2020) Barry Haddow and Faheem Kirefu. 2020. PMIndia - A Collection of Parallel Corpora of Languages of India. ArXiv abs/2001.09907 (2020).

- Hasan et al. (2020) Tahmid Hasan, Abhik Bhattacharjee, Kazi Samin, Masum Hasan, Madhusudan Basak, M. Sohel Rahman, and Rifat Shahriyar. 2020. Not Low-Resource Anymore: Aligner Ensembling, Batch Filtering, and New Datasets for Bengali-English Machine Translation. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP). Association for Computational Linguistics, Online, 2612–2623.

- Haulai and Hussain (2023) Thangkhanhau Haulai and Jamal Hussain. 2023. Construction of Mizo: English Parallel Corpus for Machine Translation. ACM Transactions on Asian and Low-Resource Language Information Processing 22, 8 (2023).

- Höfler and Sugisaki (2014) Stefan Höfler and Kyoko Sugisaki. 2014. Constructing and exploiting an automatically annotated resource of legislative texts. In Proceedings of the Ninth International Conference on Language Resources and Evaluation (LREC’14). European Language Resources Association (ELRA), Reykjavik, Iceland, 175–180.

- Koehn (2005) Philipp Koehn. 2005. Europarl: A Parallel Corpus for Statistical Machine Translation. In Proceedings of Machine Translation Summit X: Papers. Phuket, Thailand, 79–86.

- Kunchukuttan (2020) Anoop Kunchukuttan. 2020. IndoWordnet Parallel Corpus. https://github.com/anoopkunchukuttan/indowordnet_parallel.

- Kunchukuttan et al. (2018) Anoop Kunchukuttan, Pratik Mehta, and Pushpak Bhattacharyya. 2018. The IIT Bombay English-Hindi Parallel Corpus. In Proceedings of the Eleventh International Conference on Language Resources and Evaluation (LREC 2018). European Language Resources Association (ELRA), Miyazaki, Japan.

- Liu et al. (2020) Yinhan Liu, Jiatao Gu, Naman Goyal, Xian Li, Sergey Edunov, Marjan Ghazvininejad, Mike Lewis, and Luke Zettlemoyer. 2020. Multilingual Denoising Pre-training for Neural Machine Translation. Transactions of the Association for Computational Linguistics 8 (2020), 726–742.

- Mujadia and Sharma (2022) Vandan Mujadia and Dipti Sharma. 2022. The LTRC Hindi-Telugu Parallel Corpus. In Proceedings of the Thirteenth Language Resources and Evaluation Conference. European Language Resources Association, Marseille, France, 3417–3424.

- Nakazawa et al. (2020) Toshiaki Nakazawa, Hideki Nakayama, Chenchen Ding, Raj Dabre, Shohei Higashiyama, Hideya Mino, Isao Goto, Win Pa Pa, Anoop Kunchukuttan, Shantipriya Parida, Ondřej Bojar, and Sadao Kurohashi. 2020. Overview of the 7th Workshop on Asian Translation. In Proceedings of the 7th Workshop on Asian Translation, Toshiaki Nakazawa, Hideki Nakayama, Chenchen Ding, Raj Dabre, Anoop Kunchukuttan, Win Pa Pa, Ondřej Bojar, Shantipriya Parida, Isao Goto, Hidaya Mino, Hiroshi Manabe, Katsuhito Sudoh, Sadao Kurohashi, and Pushpak Bhattacharyya (Eds.). Association for Computational Linguistics, Suzhou, China, 1–44. https://aclanthology.org/2020.wat-1.1

- Papineni et al. (2002) Kishore Papineni, Salim Roukos, Todd Ward, and Wei-Jing Zhu. 2002. Bleu: a Method for Automatic Evaluation of Machine Translation. In Proceedings of the 40th Annual Meeting of the Association for Computational Linguistics. Association for Computational Linguistics, Philadelphia, Pennsylvania, USA, 311–318.

- Parida et al. (2020) Shantipriya Parida, Satya Ranjan Dash, Ondřej Bojar, Petr Motlicek, Priyanka Pattnaik, and Debasish Kumar Mallick. 2020. OdiEnCorp 2.0: Odia-English Parallel Corpus for Machine Translation. In Proceedings of the WILDRE5–5th Workshop on Indian Language Data: Resources and Evaluation. 14–19.

- Popović (2017) Maja Popović. 2017. chrF++: words helping character n-grams. In Proceedings of the second conference on machine translation. 612–618.

- Post (2018) Matt Post. 2018. A Call for Clarity in Reporting BLEU Scores. In Proceedings of the Third Conference on Machine Translation: Research Papers. Association for Computational Linguistics, Belgium, Brussels, 186–191.

- Ramasamy et al. (2012) Loganathan Ramasamy, Ondřej Bojar, and Zdeněk Žabokrtský. 2012. Morphological Processing for English-Tamil Statistical Machine Translation. In Proceedings of the Workshop on Machine Translation and Parsing in Indian Languages (MTPIL-2012). 113–122.

- Ramesh et al. (2022) Gowtham Ramesh, Sumanth Doddapaneni, Aravinth Bheemaraj, Mayank Jobanputra, Raghavan AK, Ajitesh Sharma, Sujit Sahoo, Harshita Diddee, Mahalakshmi J, Divyanshu Kakwani, Navneet Kumar, Aswin Pradeep, Srihari Nagaraj, Kumar Deepak, Vivek Raghavan, Anoop Kunchukuttan, Pratyush Kumar, and Mitesh Shantadevi Khapra. 2022. Samanantar: The Largest Publicly Available Parallel Corpora Collection for 11 Indic Languages. Transactions of the Association for Computational Linguistics 10 (2022), 145–162.

- Salloum et al. (2023) Said Salloum, Tarek Gaber, Sunil Vadera, and Khaled Shaalan. 2023. A New English/Arabic Parallel Corpus for Phishing Emails. ACM Transactions on Asian and Low-Resource Language Information Processing 22, 7 (2023).

- Siripragada et al. (2020) Shashank Siripragada, Jerin Philip, Vinay P. Namboodiri, and C V Jawahar. 2020. A Multilingual Parallel Corpora Collection Effort for Indian Languages. In Proceedings of the Twelfth Language Resources and Evaluation Conference. European Language Resources Association, Marseille, France, 3743–3751.

- Tang et al. (2020) Yuqing Tang, Chau Tran, Xian Li, Peng-Jen Chen, Naman Goyal, Vishrav Chaudhary, Jiatao Gu, and Angela Fan. 2020. Multilingual translation with extensible multilingual pretraining and finetuning. arXiv preprint arXiv:2008.00401 (2020).

- Tiedemann and Thottingal (2020) Jörg Tiedemann and Santhosh Thottingal. 2020. OPUS-MT – Building open translation services for the World. In European Association for Machine Translation Conferences/Workshops.

- Wu et al. (2016) Yonghui Wu, Mike Schuster, Z. Chen, Quoc V. Le, Mohammad Norouzi, Wolfgang Macherey, Maxim Krikun, Yuan Cao, Qin Gao, Klaus Macherey, Jeff Klingner, Apurva Shah, Melvin Johnson, Xiaobing Liu, Lukasz Kaiser, Stephan Gouws, Yoshikiyo Kato, Taku Kudo, Hideto Kazawa, Keith Stevens, George Kurian, Nishant Patil, Wei Wang, Cliff Young, Jason R. Smith, Jason Riesa, Alex Rudnick, Oriol Vinyals, Gregory S. Corrado, Macduff Hughes, and Jeffrey Dean. 2016. Google’s Neural Machine Translation System: Bridging the Gap between Human and Machine Translation. ArXiv abs/1609.08144 (2016).

- Zhang et al. (2020) Shiyue Zhang, Benjamin Frey, and Mohit Bansal. 2020. ChrEn: Cherokee-English Machine Translation for Endangered Language Revitalization. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP). Association for Computational Linguistics, Online, 577–595.

- Ziemski et al. (2016) Michał Ziemski, Marcin Junczys-Dowmunt, and Bruno Pouliquen. 2016. The United Nations Parallel Corpus v1.0. In Proceedings of the Tenth International Conference on Language Resources and Evaluation (LREC’16). European Language Resources Association (ELRA), Portorož, Slovenia, 3530–3534.

Appendix

Appendix A Additional details about the MILPaC dataset

A.1. Variation in the number of text units for different language-pairs in MILPaC-IP

For the MILPaC-IP dataset, ideally the version in every language should have 57 QA pairs (the number of QA pairs in the English version), and hence there should be 114 text units for each language-pair. However, the numbers of text units in Table 4 (upper triangular part) vary for some of the language-pairs. These variations are explained as follows.