11email: [email protected]

MFIM: Megapixel Facial Identity Manipulation

Abstract

Face swapping is a task that changes a facial identity of a given image to that of another person. In this work, we propose a novel face-swapping framework called Megapixel Facial Identity Manipulation (MFIM). The face-swapping model should achieve two goals. First, it should be able to generate a high-quality image. We argue that a model which is proficient in generating a megapixel image can achieve this goal. However, generating a megapixel image is generally difficult without careful model design. Therefore, our model exploits pretrained StyleGAN in the manner of GAN-inversion to effectively generate a megapixel image. Second, it should be able to effectively transform the identity of a given image. Specifically, it should be able to actively transform ID attributes (e.g., face shape and eyes) of a given image into those of another person, while preserving ID-irrelevant attributes (e.g., pose and expression). To achieve this goal, we exploit 3DMM that can capture various facial attributes. Specifically, we explicitly supervise our model to generate a face-swapped image with the desirable attributes using 3DMM. We show that our model achieves state-of-the-art performance through extensive experiments. Furthermore, we propose a new operation called ID mixing, which creates a new identity by semantically mixing the identities of several people. It allows the user to customize the new identity.

1 Introduction

Face swapping is a task that changes the facial identity of a given image to that of another person. It has now been applied in various applications and services in entertainment [23], privacy protection [30], and theatrical industry [32].

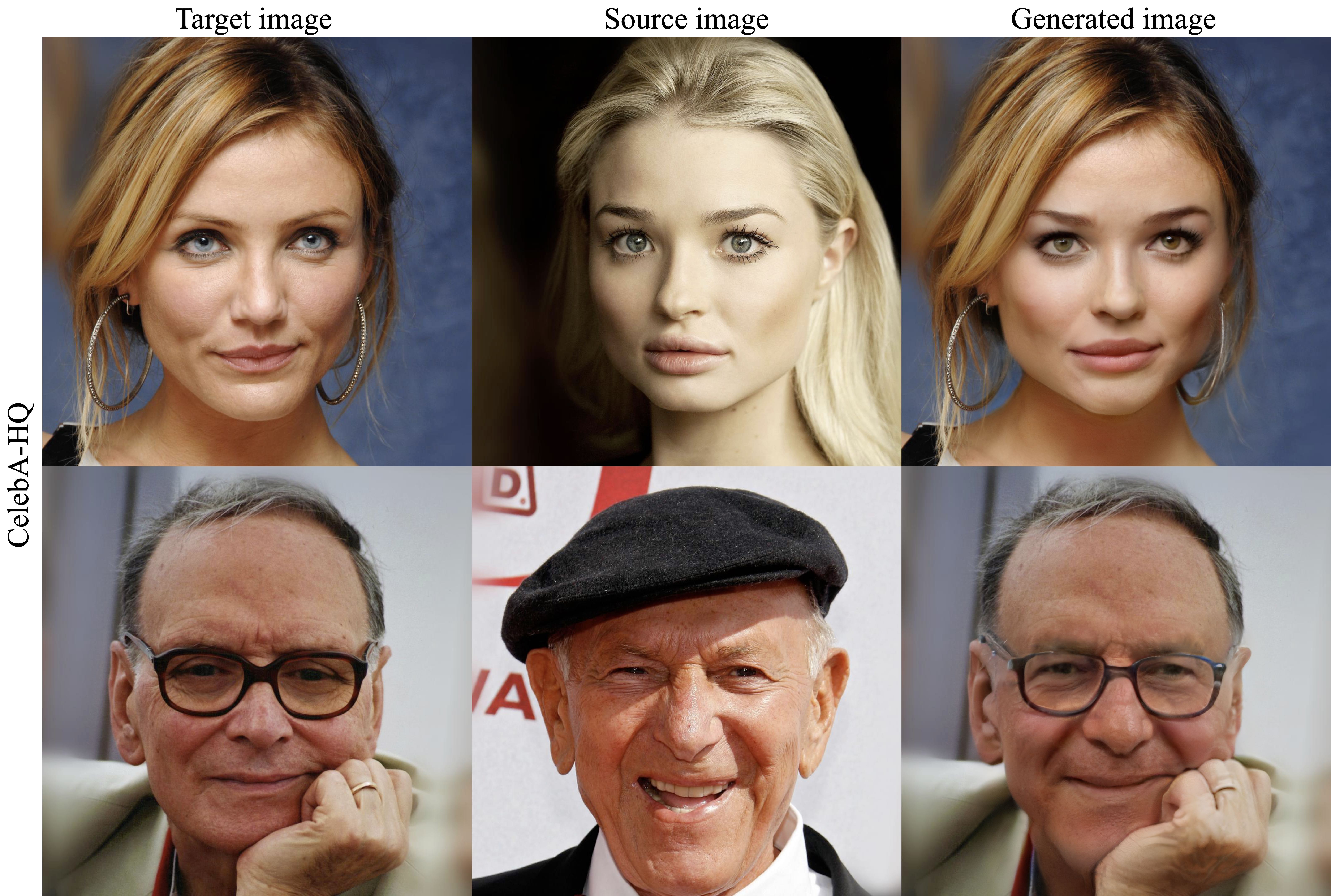

In technical terms, a face-swapping model should be able to generate a high-quality image. At the same time, it should be able to transfer the ID attributes (e.g., face shape and eyes) from the source image to the target image, while preserving the ID-irrelevant attributes (e.g., pose and expression) of the target image as shown in Figure 1. In other words, the face-swapping model has two goals: i) generating high-quality images and ii) effective identity transformation. Our model, Megapixel Facial Identity Manipulation (MFIM), is designed to achieve both of these goals.

Firstly, to generate a high-quality image, we propose a face-swapping framework that exploits pretrained StyleGAN [22] in the manner of GAN-inversion. Specifically, we design an encoder called facial attribute encoder that effectively extracts ID and ID-irrelevant representations from the source and target images, respectively. These representations are forwarded to the pretrained StyleGAN generator. Then, the generator blends these representations and generates a high-quality megapixel face-swapped image.

Basically, our facial attribute encoder extracts style codes, which is similar to existing StyleGAN-based GAN-inversion encoders [34, 38, 3]. Specifically, our facial attribute encoder extracts ID and ID-irrelevant style codes from the source and target images, respectively. Here, one of the important things for faithful face swapping is that the details of the target image such as expression or background should be accurately reconstructed. However, the ID-irrelevant style codes, which do not have spatial dimensions, can fail to preserve the details of the target image. Therefore, our facial attribute encoder extracts not only the style codes, but also the style maps which have spatial dimensions from the target image. The style maps, which take advantages from its spatial dimensions, can complement the ID-irrelevant style codes by propagating additional information about the details of the target image. As a result, our facial attribute encoder, which extracts the style codes and style maps, can effectively capture the ID attributes from the source image and the ID-irrelevant attributes including details from the target image. MegaFS [48], the previous model that exploits pretrained StyleGAN, suffers from reconstructing the details of target image because it only utilizes the style codes. To solve this problem, they use a segmentation label to take the details from the target image. However, we resolve this drawback by extracting the style maps instead of using the segmentation label.

Secondly, we utilize 3DMM [16] which can capture various facial attributes for the effective identity transformation. We especially focus on the transformation of face shape which is one of the important factors in recognizing an identity. However, it is difficult to transform the face shape while preserving the ID-irrelevant attributes of the target image at the same time because these two goals are in conflict with each other [25]. Specifically, making the generated image have the same face shape with that of the source image enforces the generated image to differ a lot from the target image. In contrast, making it preserve the ID-irrelevant attributes of the target image enforces it to be similar to the target image. To achieve these two conflicting goals simultaneously, we utilize 3DMM which can accurately and distinctly capture the various facial attributes such as shape, pose, and expression from a given image. In particular, we explicitly supervise our model to generate a face-swapped image with the desirable attributes using 3DMM, i.e., the same face shape with the source image, but the same pose and expression with the target image. The previous models [1, 27, 10, 17, 48, 25] without such explicit supervision struggle with achieving two conflicting goals simultaneously. In contrast, our model can transform the face shape well, while preserving the ID-irrelevant attributes of the target image. HiFiFace [41], the previous model that exploits 3DMM, requires 3DMM not only at the training phase, but even at the inference phase. In contrast, our model does not use 3DMM at the inference phase.

Finally, we propose a new additional task, ID mixing, which means face swapping with a new identity created with multiple source images instead of a single source image. Here, we aim to design a method that allows the user to semantically control the identity creation process. For example, when using two source images, the user can extract the global ID attributes (e.g., face shape) from one source image and the local ID attributes (e.g., eyes) from the other source image, and create the new identity by blending them as shown in Figure 1. The user can customize the new identity as desired with this operation. Furthermore, this operation does not require any additional training or segmentation label. To the best of our knowledge, we are the first to propose this operation.

In conclusion, the main contributions of this work include the following:

We propose an improved framework for face swapping by adopting GAN-inversion method with pretrained StyleGAN that takes both style codes and style maps. It allows our model to generate high-quality megapixel images without additional labels in order to preserve the details of the target image.

We introduce a 3DMM supervision method for the effective identity transformation, especially, the face shape. It allows our model to transform the face shape and preserve the ID-irrelevant attributes at the same time. Moreover, our model does not require 3DMM at the inference phase.

We propose a new operation, ID mixing, which allows the user to customize the new identity using multiple source images. It does not require any additional training or segmentation label.

| FaceShifter | HifiFace | InfoSwap | MegaFS | SmoothSwap | MFIM | |

|---|---|---|---|---|---|---|

| Megapixel | ✔ | ✔ | ✔ | |||

| W/o segmentation labels | ✔ | ✔ | ✔ | ✔ | ||

| 3DMM supervision | ✔ | |||||

| ID mixing | ✔ |

2 Related Work

2.0.1 Face swapping.

Faceshifter [27] proposes a two-stage framework in order to achieve occlusion aware method. Simswap [10] focuses on designing a framework to transfer an arbitrary identity to the target image. InfoSwap [17] proposes explicit supervision based on the IB principle for disentangling identity and identity-irrelevant information from source and target image. MegaFS [48] uses pre-trained StyleGAN [22] in order to generate megapixel samples by adopting GAN-inversion method. However, it does not introduce 3DMM supervision and relies on the segmentation labels. HifiFace [41] utilizes 3DMM for the effective identity transformation. However, HifiFace [41] requires 3DMM not only in the training phase, but also in the inference phase. On the contrary, our model only takes advantage of 3DMM at training phase and no longer needs it at the inference phase. Most recently, SmoothSwap [25] proposes a smooth identity embedder to improve learning stability and convergence speed. The key differences between our model and the previous models are given in Table 1.

2.0.2 Learning-based GAN-inversion.

Generative Adversarial Networks (GAN) [18] framework has been actively employed in the various image manipulation applications [19, 47, 26, 12, 13, 33, 5, 31, 11, 44]. Recently, as remarkable GAN frameworks (e.g., BigGAN [7] and StyleGAN [22]) have emerged, GAN-inversion [43] is being actively studied. Especially, learning-based GAN-inversion aims to train an extra encoder to find a latent code that can reconstruct a given image using a pretrained generator as a decoder. Then, one can edit the given image by manipulating the latent code. pSp [34] and e4e [38] use the pretrained StyleGAN generator as a decoder. However, they have difficulty in accurate reconstruction of the given image. To solve this problem, ReStyle [3] and HFGI [40] propose iterative refinement and distortion map, respectively. However, these methods require multiple forward passes. StyleMapGAN [24] replaces the style codes of StyleGAN with the style maps. Our model also exploits the style maps, but as additional inputs to the style codes, not as replacements for the style codes to fully utilize the capability of the pretrained StyleGAN generator.

2.0.3 3DMM.

A 3D morphable face model (3DMM) produces vector space representations that capture various facial attributes such as shape, expression and pose [6, 4, 8, 15, 16]. Although the previous 3DMM methods [6, 4, 8] have limitations in estimating face texture and lighting conditions accurately, recent methods [15, 16] overcome these limitations. We utilize the state-of-the-art 3DMM [16] to effectively capture the various facial attributes and supervise our model.

3 MFIM: Megapixel Facial Identity Manipulation

Figure 2(a) shows an overall architecture of our model. Our goal is to capture the ID and ID-irrelevant attributes from the source image, , and target image, , respectively, and synthesize a megapixel image, , by blending these attributes. Note that should have the same ID attributes with those of , while the same ID-irrelevant attributes with those of . For example, in Figure 2, has the same eyes and face shape with , and the same pose and expression with .

To achieve this goal, we firstly design a facial attribute encoder that encodes and into ID and ID-irrelevant representations, respectively. These representations are forwarded to the pretrained StyleGAN generator (Section 3.1). Secondly, for the effective identity transformation, especially the face shape, we additionally supervise our model with 3DMM. Note that 3DMM is only used at the training phase and no more used at the inference phase (Section 3.2). After training, our model can perform a new operation called ID mixing as well as face swapping. Whereas conventional face swapping uses only one source image, ID mixing uses multiple source images to create a new identity. (Section 3.3).

3.1 Facial Attribute Encoder

We introduce our facial attribute encoder. As shown in Figure 2(a), it first extracts hierarchical latent maps from a given image like pSp encoder [34]. Then, map-to-code (M2C) and map-to-map (M2M) blocks produce the style codes and style maps respectively, which are forwarded to the pretrained StyleGAN generator.

3.1.1 Style code.

Among the many latent spaces of the pretrained StyelGAN generator (e.g., [21], [21], [2], and [42]), our facial attribute encoder maps a given image to , so it extracts twenty-six style codes from a given image. The extracted style codes transform the generator feature maps via weight demodulation operation [22]. As demonstrated in previous work [21], among the twenty-six style codes, we expect that the style codes corresponding to coarse spatial resolutions (e.g., from to ) synthesize the global aspects of an image (e.g., overall structure and pose). In contrast, the style codes corresponding to fine spatial resolutions (e.g., from to ), synthesize the relatively local aspects of an image (e.g., face shape, eyes, nose, and lips).

Based on this expectation, as shown in Figure 2(a), the style codes for the coarse resolutions are extracted from and encouraged to transfer the global aspects of such as overall structure and pose. In contrast, the style codes for the fine resolutions are extracted from and encouraged to transfer the relatively local aspects of such as face shape, eyes, nose, and lips. In this respect, we call the style codes extracted from and ID-irrelevant style codes and ID style codes, respectively. However, it is important to reconstruct the details of the target image (e.g., expression and background), but the ID-irrelevant style codes, which do not have spatial dimensions, lose those details.

3.1.2 Style map.

To preserve the details of , our encoder extracts the style maps from which have the spatial dimensions. Specifically, the M2M blocks in our encoder produce the style maps with the same size of the incoming latent maps. Then, these style maps are given as noise inputs to the pretrained StyleGAN generator, which are known to generate fine details of the image.

Note that MegaFS [48] also adopts GAN-inversion method, but it struggles with reconstructing the details of . To solve this problem, it relies on the segmentation label that detects background and mouth to copy those from . In contrast, our model can reconstruct the details of due to the style maps.

3.2 Training Objectives

3.2.1 ID loss.

To ensure has the same identity with , we formulate ID loss which calculates cosine similarity between them as

| (1) |

where is the pretrained face recognition model [14].

3.2.2 Reconstruction loss.

In addition, should be similar to in most regions except for ID-related regions. To impose this constraint, we define reconstruction loss by adopting pixel-level loss and LPIPS loss [46] as

| (2) |

3.2.3 Adversarial loss.

3.2.4 3DMM supervision.

We explicitly enforce to have the same face shape with that of , and same pose and expression with those of . For these constraints, we formulate the following losses using 3DMM [16]:

| (3) | |||

| (4) | |||

| (5) |

where , , and are the shape, pose, and expression parameters extracted from a given image by 3DMM [16] encoder, respectively, with a subscript that denotes the image from which the parameter is extracted (e.g., is the shape parameter extracted from ). encourages to have the same face shape with that of . On the other hand, and encourage to have the same pose and expression with those of , respectively.

Note that HifiFace [41] also utilizes 3DMM, but it requires 3DMM even at the inference phase. This is because HiFiFace takes 3DMM parameters as inputs to generate a face-swapped image. In contrast, our model does not take 3DMM parameters as inputs to generate a face-swapped image, so 3DMM is no more used at the inference phase. Furthermore, in terms of loss function, HifiFace formulates the landmark-based loss, but we formulate the parameter-based losses. We compare these methods in the supplementary material.

3.2.5 Full objective.

Finally, we formulate the full loss as

| (6) |

3.3 ID Mixing

Our model can create a new identity by mixing multiple identities. We call this operation ID mixing. In order to allow the user to semantically control the identity creation process, we design a method to extract the ID style codes from multiple source images and then mix them like style mixing [21]. Here, we describe ID mixing using two source images, but it can be generalized to use multiple source images more than two. Specifically, when using two source images, the user can take global ID attributes (e.g., face shape) from one source image and local ID attributes (e.g., eyes) from the other source image and mix them to synthesize an ID-mixed image, .

Figure 2(b) describes this process. The ID-irrelevant style codes and style maps are extracted from (red arrow in Figure 2(b)). However, the ID style codes are extracted from two source images, global and local source images. We denote them as and , respectively, and the style codes extracted from them are called global (light blue arrow in Figure 2(b)) and local ID style codes (dark blue arrow in Figure 2(b)), respectively. These ID style codes transform the specific generator feature maps. In particular, the global ID style codes transform the ones with coarse spatial resolution (e.g., ), while the local ID style codes are for the ones with fine spatial resolutions (e.g., from to ). In this manner, the global ID style codes transfer the global ID attributes (e.g., face shape) of , while the local ID style codes transfer the local ID attributes (e.g., eyes) of due to the property of style localization [21].

MegaFS [48] which exploits pretrained StyleGAN also has the potential to perform ID mixing. However, MegaFS struggles with transforming the face shape (Section 4.2), so it is difficult to effectively perform ID mixing.

| Identity | Shape | Expression | Pose | Pose-HN | |

|---|---|---|---|---|---|

| Deepfakes | 120.907 | 0.639 | 0.802 | 0.188 | 4.588 |

| FaceShifter | 110.875 | 0.658 | 0.653 | 0.177 | 3.175 |

| SimSwap | 99.736 | 0.662 | 0.664 | 0.178 | 3.749 |

| HifiFace | 106.655 | 0.616 | 0.702 | 0.177 | 3.370 |

| InfoSwap | 104.456 | 0.664 | 0.698 | 0.179 | 4.043 |

| MegaFS | 110.897 | 0.701 | 0.678 | 0.182 | 5.456 |

| SmoothSwap | 101.678 | 0.565 | 0.722 | 0.186 | 4.498 |

| MFIM (ours) | 87.030 | 0.553 | 0.646 | 0.175 | 3.694 |

4 Experiments

We present our experimental settings and results to demonstrate the effectiveness of our model. Implementation details are in the supplementary material.

4.1 Experimental Settings

4.1.1 Baselines.

4.1.2 Datasets.

4.1.3 Evaluation metrics.

We evaluate our model and the baselines with respect to identity, shape, expression, and pose following SmoothSwap [25]. In the case of ID and shape, the closer and are, the better, and for the expression and pose, the closer and are, the better. To measure the identity, we use distance in the feature space of the face recognition model [9]. On the other hand, to measure the shape, expression, and pose, we use distance in the parameter space of 3DMM [37] for each attribute. For the pose, distance in the feature space of a pose estimation model [36] is additionally used, and this score is denoted as pose-HN. All of these metrics are the lower the better.

4.2 Comparison with the Baselines

The generated images of our model can be seen in Figure 3. The qualitative and quantitative comparisons between our model and the baselines are presented in Figure 4 and Tables 2 and 3, respectively. We first compare our model to the baselines on FaceForensics++ [35], following the evaluation protocol of SmoothSwap [25]. As shown in Table 2, our model is superior to the baselines in all metrics except for pose-HN. It is noteworthy that our model outperforms the baselines for the shape, expression, and pose at the same time, whereas the existing baselines do not perform well for all those three metrics at the same time. For example, among the baslines, SmoothSwap [25] and HifiFace [41] achieve good scores in the shape, but the expression scores of these baselines are not as good. On the other hand, FaceShifter [27] and SimSwap [10] achieve good scores in the expression and pose, but the shape scores of these baselines are not as good. However, our model accomplishes the state-of-the-art performance for the shape, expression, and pose metric at the same time.

In addition, we compare our model to the previous megapixel model, MegaFS [48], on CelebA-HQ. We generate 300,000 images following MegaFS [48]. Then, each model is evaluated with the same metrics used in the evaluation on FaceForenscis++. For FID, we use CelebA-HQ for the real distribution following MegaFS [48]. As shown in Table 3, our model outperforms MegaFS [48] in the all metrics.

| Configuration | Identity | Shape | Expression | Pose | Pose-HN |

|---|---|---|---|---|---|

| A. Baseline MFIM | 70.160 | 0.383 | 1.116 | 0.145 | 7.899 |

| B. style maps | 91.430 | 0.823 | 0.398 | 0.051 | 3.795 |

| C. | 86.476 | 0.635 | 0.864 | 0.085 | 5.091 |

| D. | 86.777 | 0.634 | 0.860 | 0.078 | 4.797 |

| E. | 91.469 | 0.782 | 0.400 | 0.057 | 4.095 |

4.3 Ablation Study of MFIM

We conduct an ablation study on CelebA-HQ to demonstrate the effectiveness of each component of our model following the evaluation protocol of the comparative experiment on CelebA-HQ (Section 4.2). The qualitative and quantitative results are presented in Figure 5 and Table 4, respectively.

The configuration (A) is trained by using only the ID-irrelevant and ID style codes. The style maps and 3DMM supervision are not used in this configuration. In Figure 5, the configuration (A) generates an image that has the overall structure and pose of , but has the identity of (e.g., eyes and face shape). This is because the ID-irrelevant style codes transform the generator feature maps with the coarser spatial resolutions (from to ) than the ID style codes (from to ), so the ID-irrelevant style codes synthesize more global aspects than the ID style codes do. However, the configuration (A) fails to reconstruct the details of (e.g., expression, hair style, and background). This is because the ID-irrelevant style codes, which do not have the spatial dimensions, lose the details of .

To solve this problem, we construct the configuration (B) by adding the style maps to the configuration (A). In Figure 5, the configuration (B) reconstructs the details of better than configuration (A). It is also supported by the improvement of the expression score in Table 4. These results show that the style maps, which have the spatial dimensions, can preserve the details of . However, the generated image by configuration (B) does not have the same face shape with that of , but with that of .

Therefore, for the more effective identity transformation, we improve our model by adding the 3DMM supervision to the configuration (B). First, we construct the configuration (C) by adding to the configuration (B). As a result, the generated image by the configuration (C) has the same face shape with that of rather than that of . It leads to the improvement of the shape score in Table 4. However, the expression and pose scores are degraded. This result is consistent with Figure 5 in that the generated image of configuration (C) has the same expression with , not , which is undesirable. We assume that this is because the expression and pose of are leaked somewhat while the face shape of is actively transferred by . It means that the ID and ID-irrelevant representations of MFIM are not perfectly disentangled. Improving our model to solve this problem can be future work.

In order to restore the pose and expression scores, we first construct the configuration (D) by adding to the configuration (C), and then construct the configuration (E) by adding to the configuration (D). As a result, as shown in Table 4, the pose and expression scores are restored to the similar scores to the configuration (B). Finally, the generated image by the configuration (E) in Figure 5 has the same face shape with that of , while the same pose and expression with that of .

Although the configuration (E) can faithfully reconstruct the details of such as background and hair style, we can further improve our model to reconstruct the high-frequency details by adding ROI only synthesis to the configuration (E) at the inference phase. This configuration is denoted as (E+). It allows our model to generate only the face region, but it does not require any segmentation label. More details on this are in the supplementary material. In Figure 5, the configuration (E+) reconstructs the high-frequency details on hair. We use the configuration (E) for all the quantitative results, and the configuration (E+) for all the qualitative results.

4.4 ID Mixing

Figure 6 shows the qualitative results of ID mixing using our model. In Figure 6, has the new identity with the global ID attributes (e.g., face shape) of , but the local ID attributes (e.g., eyes) of . This property of ID mixing allows the user to semantically control the ID creation process. We also compare our model with MegaFS [48] in terms of ID mixing in the supplementary material.

We quantitatively analyze the properties of ID mixing on CelebA-HQ. We prepare 30,000 triplets by randomly assigning one global source image and one local source image to each target image. Then, we define Relative Identity () distance and Relative Shape () distance following SmoothSwap [25]. For example, is defined as where means distance on the feature space of the face recognition model [9]. This measures how similar the overall identity of is to that of compared to . is defined similarly, so . In addition, and are defined in the same manner with and , respectively, but they are based on the 3DMM [37] shape parameter distance to measure the similarity of face shape.

In Table 5, the two rows denoted by local and global show the results of conventional face swapping, not ID mixing, which uses a single source image. In particular, the row denoted by local is the result of conventional face swapping using only as the source image without using . For this reason, and are smaller than and , respectively, which means that the generated image has the same overall identity and face shape as , not . Similarly, the row denoted by global shows that has the same overall identity and face shape as , not .

On the other hand, the row denoted by ID mixing shows the results of ID mixing, which uses both the and as described in Section 3.3. In contrast to when only one of or is used, is similar to that of . It means that the overall identity of by ID mixing is like a new identity, a mixed identity of and . Furthermore, has a smaller value than . It means that the face shape of the generated image is more similar to that of than that of , which is consistent with Figure 6.

| Overall identity | Face shape | |||

|---|---|---|---|---|

| R-ID (gb) | R-ID (lc) | R-Shape (gb) | R-Shape (lc) | |

| Local | 0.602 | 0.398 | 0.609 | 0.391 |

| ID mixing | 0.515 | 0.485 | 0.466 | 0.534 |

| Global | 0.399 | 0.601 | 0.378 | 0.622 |

5 Conclusion

We present a state-of-the-art framework for face swapping, MFIM. Our model adopts the GAN-inversion method using pretrained StyleGAN to generate a megapixel image and exploits 3DMM to supervise our model. Finally, we design a new operation, ID mixing, that creates a new identity using multiple source images and performs face swapping with that new identity.

However, the face swapping model can cause negative impacts on society. For example, a video made with a malicious purpose (e.g., fake news) can cause fatal damage to the victim. Nevertheless, it has positive impacts on the entertainment and theatrical industry. In addition, generating elaborate face-swapped images can contribute to advances in deepfake detection.

Appendix. A Architecture

In this section, we describe the architectures of facial attribute encoder, generator and discriminator.

Appendix. A.1 Facial Attribute Encoder.

Our facial attribute encoder, which is based on the psp [34] encoder, uses the same encoder backbone (blue structures denoted as ‘Encoder Blocks’ in Figure 2(a)) as the psp encoder. As shown in Figure 2(a), the encoder backbone extracts the hierarchical latent maps from the given image. The M2C and M2M blocks of our facial attribute encoder extract the style codes and style maps from the hierarchical latent maps extracted from the backbone, respectively. The details of encoding process are as follows.

Appendix. A.1.1 Style codes.

The architecture of the M2C block is the same as that of the Map2Style block of the pSp encoder. However, the pSp encoder produces eighteen style codes because it maps the image to space [2], whereas our facial attribute encoder maps the image to space [42], so twenty-six style codes, . Then, the style codes go through the following additional steps:

| (7) |

where is a set of learnable parameters and is a set of style codes that maps an average latent code of space [21] to space. , , and have the same dimensions.

We extract the style codes from the source image, , and the target image, , respectively, and combine them to construct the final style codes. Let us denote the style codes extracted from and , and , respectively. We construct the ID-irrelevant style codes, , by taking a subset of , and the ID style codes from , where is a hyperparameter for the border index between the ID and ID-irrelevant style codes. We set . Then, the final style codes, , are constructed by combining and . Finally, is used in weight demodulation operation [22].

Appendix. A.1.2 Style maps.

Our facial attribute encoder introduces an M2M block with the architecture depicted in Table 6 to extract the style maps from the target image. As shown in Table 6, the M2M block takes the latent maps as input and produces two groups of style maps, which are denoted as Output 0 and Output 1 in Table 6 respectively, of the same spatial size as the input latent maps.

Our encoder produces a total of four groups of style maps: two groups with a spatial size of , , and the remaining two groups have a spatial size of , . All of these style maps have the channel dimensions of 512. Finally, these style maps are given to the pretrained StyleGAN generator as noise inputs.

| (Input): latent maps () | |

|---|---|

| Conv (, ) | |

| LeakyReLU () | |

| Conv (, ) | |

| LeakyReLU () | |

| Conv (, ) | Conv (, ) |

| InstanceNorm | InstanceNorm |

| (Output 0): style maps () | (Output 1): style maps () |

| layer index | Resolution | Layer name | Style code | Style code type | Style maps |

| 0 | Conv | ID-irrelevant | - | ||

| 1 | ToRGB | ID-irrelevant | - | ||

| 2 | ConvUp | ID-irrelevant | - | ||

| 3 | Conv | ID-irrelevant | - | ||

| 4 | ToRGB | ID-irrelevant | - | ||

| 5 | ConvUp | ID-irrelevant | |||

| 6 | Conv | ID-irrelevant | |||

| 7 | ToRGB | ID-irrelevant | - | ||

| 8 | ConvUP | ID | |||

| 9 | Conv | ID | |||

| 10 | ToRGB | ID | - | ||

| 11 | ConvUP | ID | - | ||

| 12 | Conv | ID | - | ||

| 13 | ToRGB | ID | - | ||

| 14 | ConvUP | ID | - | ||

| 15 | Conv | ID | - | ||

| 16 | ToRGB | ID | - | ||

| 17 | ConvUP | ID | - | ||

| 18 | Conv | ID | - | ||

| 19 | ToRGB | ID | - | ||

| 20 | ConvUP | ID | - | ||

| 21 | Conv | ID | - | ||

| 22 | ToRGB | ID | - | ||

| 23 | ConvUP | ID | - | ||

| 24 | Conv | ID | - | ||

| 25 | ToRGB | ID | - |

| layer index | Resolution | Layer name | Style code | Style code type | Style maps |

| 0 | Conv | ID-irrelevant | - | ||

| 1 | ToRGB | ID-irrelevant | - | ||

| 2 | ConvUp | ID-irrelevant | - | ||

| 3 | Conv | ID-irrelevant | - | ||

| 4 | ToRGB | ID-irrelevant | - | ||

| 5 | ConvUp | ID-irrelevant | |||

| 6 | Conv | ID-irrelevant | |||

| 7 | ToRGB | ID-irrelevant | - | ||

| 8 | ConvUP | Global ID | |||

| 9 | Conv | Global ID | |||

| 10 | ToRGB | Local ID | - | ||

| 11 | ConvUP | Local ID | - | ||

| 12 | Conv | Local ID | - | ||

| 13 | ToRGB | Local ID | - | ||

| 14 | ConvUP | Local ID | - | ||

| 15 | Conv | Local ID | - | ||

| 16 | ToRGB | Local ID | - | ||

| 17 | ConvUP | Local ID | - | ||

| 18 | Conv | Local ID | - | ||

| 19 | ToRGB | Local ID | - | ||

| 20 | ConvUP | Local ID | - | ||

| 21 | Conv | Local ID | - | ||

| 22 | ToRGB | Local ID | - | ||

| 23 | ConvUP | Local ID | - | ||

| 24 | Conv | Local ID | - | ||

| 25 | ToRGB | Local ID | - |

Appendix. A.2 Generator

We use the pretrained generator of StyleGAN [22], so we use the same architecture with StyleGAN without modification except for the mapping network that maps a random vector to an intermediate latent space . We replace the mapping network with the facial attribute encoder which produces the ID-irrelevant style codes, ID style codes and style maps. These are forwarded appropriately to each layer of the pretrained StyleGAN generator, as shown in Tables 7 and 8. Table 7 describes the process of face swapping, which uses a single source image, , but Table 8 describes the process of id mixing, which uses the global and local source images, and .

Appendix. A.3 Discriminator

We use the pretrained discriminator of StyleGAN [22], so we use the same architecture with StyleGAN without modification.

Appendix. B Hyperparameters

Table 9 shows weights for each loss to train our model. Following StyleGAN [22], we use R1 regularization [29] every sixteen training steps. Table 10 shows additional hyperparameters for optimization. For the optimizer, we use the Ranger optimizer, which is a combination of RAdam [28] and Lookahead [45], following pSp [34]. We use a learning rate of and decrease it by every 40,000 steps after 500,000 steps. We use a batch size of four, which means that we use four pairs of source and target images for training. However, for one of the four pairs, we make the source image and the target image the same, so that the generator performs self-reconstruction on that pair.

| step | |||||||

|---|---|---|---|---|---|---|---|

| 2.0 | 1.0 | 0.1 | 10.0 | 5.0 | 1.0 | 1.0 | 16 |

| Training steps | Optimizer | Learning rate | Learning rate decay | Batch size | Self-recon size |

|---|---|---|---|---|---|

| 700,000 | Ranger | 0.0001 | Step | 4 | 1 |

Appendix. C Preprocess and Postprocess

Appendix. C.1 Data preprocess

We use FFHQ [21], which consists of 70,000 human faces at resolution, for the training dataset. It is noteworthy that the most of the previous face-swapping models [27, 41, 48, 17] extend the training dataset by combining multiple datasets, but we only use FFHQ. Therefore, our model can be trained more efficiently because our model does not require any additional preprocess steps such as image alignment to combine the multiple datasets.

Appendix. C.2 Postprocess: ROI Only Synthesis

Our model can faithfully reconstruct the background or hair style of , but we can further improve our model to reconstruct the high-frequency details of the background or hair style via ROI only synthesis.

Note that it does not require a segmentation label at all. This process is depicted in Figure 7. Assuming that the image is aligned, we use a mask, which has a size of , with a fixed box at the expected location of the face. Specifically, we set the size of the box to a width of 512 and a height of 608 and top-left coordinates, , to . The inside of the box has a value of one, and the outside has a value of zero. Then, we blur the boundary by downsampling the mask to the size of and upsampling it to the size of again. With this mask, the final output image is generated as

| (8) |

where is a mask and is the element-wise product. Note that it is not used at the training phase, only at the inference phase. Also, we use it only in the qualitative results, not in the quantitative results at all.

Appendix. D Analysis on 3DMM Supervision

We compare our 3DMM supervision method and that of HifiFace [41]. We first describe each method and then compare them with experimental results.

Appendix. D.1 Method

For the 3DMM supervision, our model utilizes the 3DMM parameter reconstruction loss which is formulated as

| (9) |

where , , and are described in the main manuscript, and , , and are weights for each loss.

On the other hand, HifiFace utilizes the landmark reconstruction loss. Note that 3DMM can reconstruct a 3D face using 3DMM parameters and extract landmark keypoints corresponding to the 3D face. Using this capability, HifiFace encourages the landmark keypoints of the generated image, to be equal to its ground-truth landmark keypoints, . Here, when using DECA [16], , and the ground-truth landmark keypoints are extracted from the reconstructed 3D face using the shape parameter of the source image and the pose, expression, and cam parameters of the target image. We apply this method to our model to formulate the landmark reconstruction loss as

| (10) |

| Configuration | Identity | Shape | Expression | Pose | Pose-HN |

|---|---|---|---|---|---|

| B. | 96.066 | 0.887 | 0.424 | 0.053 | 3.839 |

| B . | 96.016 | 0.892 | 0.418 | 0.046 | 3.683 |

| B . | 96.153 | 0.842 | 0.426 | 0.060 | 4.173 |

| Configuration | Identity | Shape | Expression | Pose | Pose-HN |

|---|---|---|---|---|---|

| A. Baseline MFIM | 70.160 | 0.383 | 1.116 | 0.145 | 7.899 |

| B. style maps | 91.430 | 0.823 | 0.398 | 0.051 | 3.795 |

| C. | 86.476 | 0.635 | 0.864 | 0.085 | 5.091 |

| D. | 86.777 | 0.634 | 0.860 | 0.078 | 4.797 |

| E. | 91.469 | 0.782 | 0.400 | 0.057 | 4.095 |

| F. | 92.018 | 0.778 | 0.387 | 0.041 | 3.876 |

Appendix. D.2 Comparison between and

In Table 11, we compare and on CelebA-HQ [20]. Here, unlike the quantitative experiment on CelebA-HQ in the main manuscript, we use 30,000 face-swapped images instead of 300,000. Specifically, we randomly assign an image to each image in CelebA-HQ and make 30,000 pairs of the source image and target image.

The configuration (B) in Table 11 is the same with that in the main manuscript. Then, we construct the configurations (B+) and (B+) by adding and to the configuration (B), respectively. The configuration (B+) is the same with the configuration (E), our proposed model, in the main manuscript.

As shown in Table 11, adding to the configuration (B) does not improve the shape score while improves the shape score. However, we can see that improves the pose score by comparing the configurations (B) and (B+). We think that this may be because the pose, which is the more global attribute than the shape and expression, has a greater effect on the landmark regression than the shape or expression. For this reason, the most effective way to decrease can be to match the pose of the generated image to that of the target image. As a result, the model focuses on matching poses, and may not be sufficiently motivated to improve the shape score.

In contrast, we use a separate loss for each attribute. In particular, to decrease , the face shape of the generated image should be the same as that of the source image. Due to this difference, can improve the shape score, while cannot. Although the pose score is somewhat degraded after applying , transforming the face shape rather than preserving the pose is one of our important goals. Furthermore, the configuration (B+) still shows the visually plausible results in terms of the pose. Therefore, we propose as our 3DMM supervision method.

Appendix. D.3 Combination of and

Based on the results in Table 11, we further improve our model by combining and as shown in Table 12. For the results in Table 12, we use 300,000 face-swapped images, which is the same setting with that of the quantitative experiment on CelebA-HQ in the main manuscript.

In Table 12, we construct the configuration (F) by adding with the weight for this loss of 1,000 (i.e., ) to the configuration (E). Here, we use only some of the landmark keypoints instead of the full landkark keypoints to encourage our model to further focus on matching the pose. Specifically, we use and . As shown in Table 12, the configuration (F) achieves the better pose and pose-HN scores than the configuration (E) without deterioration on the shape and expression scores. As a result, the configuration (F) achieves the better shape, expression, and pose scores than the configuration (B) at the same time. However, is not our contribution and the configuration (E) also shows the visually plausible results in terms of pose, so we propose the configuration (E) as our final model.

Figure 8 shows the qualitative results for several configurations. We construct the configuration (E+) by adding ROI only synthesis (Section Appendix. C.2) to the configuration (E). As shown in Figure 8, the configuration (E+) transfers the ID attributes (e.g., eyes and face shape) of the source image actively while preserving the ID-irrelevant attributes (e.g., pose and expression) of the target image. In Figure 8, the differences between the configurations (A) and (B) show the effectiveness of the style maps, and the differences between the configurations (B) and (E+) show the effectiveness of the 3DMM supervision.

Appendix. E Comparison with MegaFS on ID Mixing

One of the state-of-the-art models, MegaFS [48], has a potential to perform ID mixing because it also exploits the StyleGAN [22] architecture. However, MegaFS is not good at transforming the face shape as demonstrated in the manuscript. As a result, in Fig. 9, MegaFS fails to performing ID mixing because it cannot transfer the round face shape of the global source image to the target image. It only transfers the eyes of the local source image to the target image. For this reason, the generated image by MegaFS does not seem an ID-mixed image. In contrast, our model, MFIM, can transfer the round face shape of the global source image and the eyes of the local source image at the same time. As a result, the generated image by MFIM seems an ID-mixed image.

Appendix. F Additional Samples

Figure 10 shows the qualitative results of face swapping on FaceForensics++ [35]. Figures 11, 12, 13, and 14 show the qualitative results of face swapping on CelebA-HQ [20]. Figure 15 shows the qualitative results of ID mixing on CelebA-HQ.

References

- [1] Deepfakes. https://github.com/ondyari/FaceForensics/tree/master/dataset/DeepFakes

- [2] Abdal, R., Qin, Y., Wonka, P.: Image2stylegan: How to embed images into the stylegan latent space? In: Proceedings of the IEEE/CVF International Conference on Computer Vision. pp. 4432–4441 (2019)

- [3] Alaluf, Y., Patashnik, O., Cohen-Or, D.: Restyle: A residual-based stylegan encoder via iterative refinement. In: Proceedings of the IEEE/CVF International Conference on Computer Vision. pp. 6711–6720 (2021)

- [4] Alexander, O., Rogers, M., Lambeth, W., Chiang, M., Debevec, P.: The digital emily project: photoreal facial modeling and animation. In: Acm siggraph 2009 courses, pp. 1–15 (2009)

- [5] Bahng, H., Chung, S., Yoo, S., Choo, J.: Exploring unlabeled faces for novel attribute discovery. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 5821–5830 (2020)

- [6] Blanz, V., Vetter, T.: A morphable model for the synthesis of 3d faces. In: Proceedings of the 26th annual conference on Computer graphics and interactive techniques. pp. 187–194 (1999)

- [7] Brock, A., Donahue, J., Simonyan, K.: Large scale gan training for high fidelity natural image synthesis. arXiv preprint arXiv:1809.11096 (2018)

- [8] Cao, C., Weng, Y., Zhou, S., Tong, Y., Zhou, K.: Facewarehouse: A 3d facial expression database for visual computing. IEEE Transactions on Visualization and Computer Graphics 20(3), 413–425 (2013)

- [9] Cao, Q., Shen, L., Xie, W., Parkhi, O.M., Zisserman, A.: Vggface2: A dataset for recognising faces across pose and age. In: 2018 13th IEEE international conference on automatic face & gesture recognition (FG 2018). pp. 67–74. IEEE (2018)

- [10] Chen, R., Chen, X., Ni, B., Ge, Y.: Simswap: An efficient framework for high fidelity face swapping. In: Proceedings of the 28th ACM International Conference on Multimedia. pp. 2003–2011 (2020)

- [11] Cho, W., Choi, S., Park, D.K., Shin, I., Choo, J.: Image-to-image translation via group-wise deep whitening-and-coloring transformation. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 10639–10647 (2019)

- [12] Choi, Y., Choi, M., Kim, M., Ha, J.W., Kim, S., Choo, J.: Stargan: Unified generative adversarial networks for multi-domain image-to-image translation. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp. 8789–8797 (2018)

- [13] Choi, Y., Uh, Y., Yoo, J., Ha, J.W.: Stargan v2: Diverse image synthesis for multiple domains. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. pp. 8188–8197 (2020)

- [14] Deng, J., Guo, J., Xue, N., Zafeiriou, S.: Arcface: Additive angular margin loss for deep face recognition. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. pp. 4690–4699 (2019)

- [15] Deng, Y., Yang, J., Xu, S., Chen, D., Jia, Y., Tong, X.: Accurate 3d face reconstruction with weakly-supervised learning: From single image to image set. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops. pp. 0–0 (2019)

- [16] Feng, Y., Feng, H., Black, M.J., Bolkart, T.: Learning an animatable detailed 3D face model from in-the-wild images. vol. 40 (2021), https://doi.org/10.1145/3450626.3459936

- [17] Gao, G., Huang, H., Fu, C., Li, Z., He, R.: Information bottleneck disentanglement for identity swapping. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 3404–3413 (2021)

- [18] Goodfellow, I., Pouget-Abadie, J., Mirza, M., Xu, B., Warde-Farley, D., Ozair, S., Courville, A., Bengio, Y.: Generative adversarial nets. Advances in neural information processing systems 27 (2014)

- [19] Isola, P., Zhu, J.Y., Zhou, T., Efros, A.A.: Image-to-image translation with conditional adversarial networks. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp. 1125–1134 (2017)

- [20] Karras, T., Aila, T., Laine, S., Lehtinen, J.: Progressive growing of gans for improved quality, stability, and variation. arXiv preprint arXiv:1710.10196 (2017)

- [21] Karras, T., Laine, S., Aila, T.: A style-based generator architecture for generative adversarial networks. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 4401–4410 (2019)

- [22] Karras, T., Laine, S., Aittala, M., Hellsten, J., Lehtinen, J., Aila, T.: Analyzing and improving the image quality of stylegan. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 8110–8119 (2020)

- [23] Kemelmacher-Shlizerman, I.: Transfiguring portraits. ACM Transactions on Graphics (TOG) 35(4), 1–8 (2016)

- [24] Kim, H., Choi, Y., Kim, J., Yoo, S., Uh, Y.: Exploiting spatial dimensions of latent in gan for real-time image editing. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 852–861 (2021)

- [25] Kim, J., Lee, J., Zhang, B.T.: Smooth-swap: A simple enhancement for face-swapping with smoothness. arXiv preprint arXiv:2112.05907 (2021)

- [26] Lee, H.Y., Tseng, H.Y., Huang, J.B., Singh, M., Yang, M.H.: Diverse image-to-image translation via disentangled representations. In: Proceedings of the European conference on computer vision (ECCV). pp. 35–51 (2018)

- [27] Li, L., Bao, J., Yang, H., Chen, D., Wen, F.: Faceshifter: Towards high fidelity and occlusion aware face swapping. arXiv preprint arXiv:1912.13457 (2019)

- [28] Liu, L., Jiang, H., He, P., Chen, W., Liu, X., Gao, J., Han, J.: On the variance of the adaptive learning rate and beyond. In: Proceedings of the Eighth International Conference on Learning Representations (ICLR 2020) (April 2020)

- [29] Mescheder, L., Geiger, A., Nowozin, S.: Which training methods for gans do actually converge? In: International conference on machine learning. pp. 3481–3490. PMLR (2018)

- [30] Mosaddegh, S., Simon, L., Jurie, F.: Photorealistic face de-identification by aggregating donors’ face components. In: Asian Conference on Computer Vision. pp. 159–174. Springer (2014)

- [31] Na, S., Yoo, S., Choo, J.: Miso: Mutual information loss with stochastic style representations for multimodal image-to-image translation. arXiv preprint arXiv:1902.03938 (2019)

- [32] Naruniec, J., Helminger, L., Schroers, C., Weber, R.M.: High-resolution neural face swapping for visual effects. In: Computer Graphics Forum. vol. 39, pp. 173–184. Wiley Online Library (2020)

- [33] Park, T., Liu, M.Y., Wang, T.C., Zhu, J.Y.: Semantic image synthesis with spatially-adaptive normalization. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 2337–2346 (2019)

- [34] Richardson, E., Alaluf, Y., Patashnik, O., Nitzan, Y., Azar, Y., Shapiro, S., Cohen-Or, D.: Encoding in style: a stylegan encoder for image-to-image translation. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 2287–2296 (2021)

- [35] Rossler, A., Cozzolino, D., Verdoliva, L., Riess, C., Thies, J., Nießner, M.: Faceforensics++: Learning to detect manipulated facial images. In: Proceedings of the IEEE/CVF International Conference on Computer Vision. pp. 1–11 (2019)

- [36] Ruiz, N., Chong, E., Rehg, J.M.: Fine-grained head pose estimation without keypoints. In: The IEEE Conference on Computer Vision and Pattern Recognition (CVPR) Workshops (June 2018)

- [37] Sanyal, S., Bolkart, T., Feng, H., Black, M.J.: Learning to regress 3d face shape and expression from an image without 3d supervision. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 7763–7772 (2019)

- [38] Tov, O., Alaluf, Y., Nitzan, Y., Patashnik, O., Cohen-Or, D.: Designing an encoder for stylegan image manipulation. ACM Transactions on Graphics (TOG) 40(4), 1–14 (2021)

- [39] Ulyanov, D., Vedaldi, A., Lempitsky, V.: Instance normalization: The missing ingredient for fast stylization. arXiv preprint arXiv:1607.08022 (2016)

- [40] Wang, T., Zhang, Y., Fan, Y., Wang, J., Chen, Q.: High-fidelity gan inversion for image attribute editing. arXiv preprint arXiv:2109.06590 (2021)

- [41] Wang, Y., Chen, X., Zhu, J., Chu, W., Tai, Y., Wang, C., Li, J., Wu, Y., Huang, F., Ji, R.: Hififace: 3d shape and semantic prior guided high fidelity face swapping. arXiv preprint arXiv:2106.09965 (2021)

- [42] Wu, Z., Lischinski, D., Shechtman, E.: Stylespace analysis: Disentangled controls for stylegan image generation. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 12863–12872 (2021)

- [43] Xia, W., Zhang, Y., Yang, Y., Xue, J.H., Zhou, B., Yang, M.H.: Gan inversion: A survey. IEEE Transactions on Pattern Analysis and Machine Intelligence (2022)

- [44] Yoo, S., Bahng, H., Chung, S., Lee, J., Chang, J., Choo, J.: Coloring with limited data: Few-shot colorization via memory augmented networks. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 11283–11292 (2019)

- [45] Zhang, M., Lucas, J., Ba, J., Hinton, G.E.: Lookahead optimizer: k steps forward, 1 step back. Advances in Neural Information Processing Systems 32 (2019)

- [46] Zhang, R., Isola, P., Efros, A.A., Shechtman, E., Wang, O.: The unreasonable effectiveness of deep features as a perceptual metric. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp. 586–595 (2018)

- [47] Zhu, J.Y., Park, T., Isola, P., Efros, A.A.: Unpaired image-to-image translation using cycle-consistent adversarial networks. In: Proceedings of the IEEE international conference on computer vision. pp. 2223–2232 (2017)

- [48] Zhu, Y., Li, Q., Wang, J., Xu, C.Z., Sun, Z.: One shot face swapping on megapixels. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 4834–4844 (2021)