MetaNeRV: Meta Neural Representations for Videos with Spatial-Temporal Guidance

Abstract

Neural Representations for Videos (NeRV) has emerged as a promising implicit neural representation (INR) approach for video analysis, representing videos as neural networks that take frame indexes as inputs. However, NeRV-based methods are time-consuming when adapting to a large number of diverse videos, as each video requires a separate NeRV model to be trained from scratch. In addition, NeRV-based methods must spatially generate a high-dimensional signal (i.e., an entire image) from a low-dimensional timestamp, while temporally a video typically consists of tens of frames with only minor changes between adjacent frames. To improve the efficiency of video representation, we propose Meta Neural Representations for Videos, named MetaNeRV, a novel framework for fast NeRV representation of unseen videos. MetaNeRV leverages a meta-learning framework to learn an optimal parameter initialization, which serves as a good starting point for adapting to new videos. To address the unique spatial and temporal characteristics of the video modality, we further introduce spatial-temporal guidance to improve the representation capabilities of MetaNeRV. Specifically, the spatial guidance uses a multi-resolution loss to capture information from different resolution stages, and the temporal guidance uses an effective progressive learning strategy that gradually increases the number of fitted frames during meta-learning. Extensive experiments on multiple datasets demonstrate the superiority of MetaNeRV for video representation and video compression.

Code: https://github.com/jialong2023/MetaNeRV

Introduction

In recent years, Implicit Neural Representations (INR) have emerged as a powerful tool for continuously representing data in various computer vision tasks. The core idea of INR is to represent a signal as a function that can be effectively approximated by a neural network (Pinkus 1999). The network encodes the signal's values implicitly in its structure and parameters during training (fitting), and these values can then be retrieved by querying the corresponding coordinates. INR has recently gained significant attention for representing videos, with notable examples including NeRV (Chen et al. 2021) and E-NeRV (Li et al. 2022). In contrast to traditional coordinate-based neural representations, NeRV-based approaches take the frame index as input and directly generate the entire frame, an approach known as image-wise implicit neural representation. This yields significantly faster training and inference than coordinate-based counterparts.

Although existing NeRV-based methods demonstrate impressive capabilities, their limitation to encoding a single video at a time restricts their applicability in real-world scenarios, as each new video typically requires optimization through numerous gradient descent steps.

D-NeRV (He et al. 2023) memorizes keyframes of the videos in the training set and aims to improve generalization by reconstructing transition frames from these keyframes. Nevertheless, such methods are constrained to representing frames within the trained videos, which may hinder their ability to generalize to previously unseen videos.

To accelerate the adaptation of NeRV-based models to unseen videos, we present MetaNeRV, a meta-learning framework designed to learn optimal initial weights for neural representations of videos. Compared with random initialization, MetaNeRV learns initialization weights across a series of videos; these weights act as a powerful prior that accelerates convergence during optimization and improves generalization to unseen videos. Our method employs the optimization-based meta-learning algorithm MAML (Finn, Abbeel, and Levine 2017) on a diverse meta-training set of videos to obtain initial weights well suited to representing unseen videos. As depicted in Fig. 1(b), each new video otherwise requires its own optimization process, which can be inefficient; with a proper initialization, the number of iteration steps can be significantly reduced.

Relying solely on meta-learning may still yield limited performance. To address this, we propose two enhancements. Spatial guidance: we introduce a multi-resolution loss function and add header modules to each NeRV block layer; these modules output video frames at different resolutions, improving the representation capability of the meta-learning framework. Temporal guidance: to handle convergence issues and inefficiency on complex videos, we adopt a progressive training strategy that gradually increases subtask difficulty during meta-learning, allowing the framework to transition smoothly from easier to more complex tasks.

Experimental results on multiple datasets demonstrate that MetaNeRV outperforms other frame-wise methods in video representation. Additionally, we explore several applications of our method, including video compression and video denoising. With quantization-aware training and entropy coding, MetaNeRV outperforms widely used video codecs such as H.264 (Wiegand et al. 2003) and HEVC (Sullivan et al. 2012) and performs comparably to state-of-the-art video compression algorithms.

The contributions of this work are summarized as follows:

- We introduce MetaNeRV, a meta-learning-driven framework with spatial-temporal guidance tailored for NeRV-based video reconstruction. MetaNeRV improves performance by optimizing the initialization parameters.

- We propose a progressive training strategy as temporal guidance and a multi-resolution loss as spatial guidance within the meta-learning framework, providing precise supervision that improves both representation capability and training efficiency.

- Comprehensive experiments on various video datasets show that: (i) optimized initialization parameters significantly accelerate the convergence of NeRV-based models, achieving a 9x speedup; (ii) the proposed guidance improves the training efficacy of the meta-learning framework, e.g., yielding a +16 dB PSNR improvement after a single step on the EchoNet-LVH dataset; (iii) MetaNeRV performs well in several video-related applications, including video compression and denoising.

Related Work

Implicit neural representations. Neural networks can be used to approximate functions that map input coordinates to various types of signals. This idea has attracted great interest and has been widely adopted to represent 3D shapes (Sitzmann, Zollhöfer, and Wetzstein 2019; Mescheder et al. 2019; Park et al. 2019; Liu et al. 2023b), for novel view synthesis (Mildenhall et al. 2021; Yu et al. 2021), and more. These approaches train a neural network to fit a single scene or object such that the network weights encode it. Implicit neural representations have also been applied to represent signals (Hinton, Osindero, and Teh 2006; Vaswani et al. 2017; Tancik et al. 2020; Sitzmann et al. 2020b; Peng, Zeng, and Zhao 2022a, b; Zhang et al. 2025), images (Wang, Simoncelli, and Bovik 2003; Hsu et al. 2019; Chen, Liu, and Wang 2021; Dupont et al. 2021; Chen et al. 2024; Liu et al. 2023a), videos (Chen et al. 2021; Lai 2021; Rho et al. 2022; He 2022; Tong 2022), and time series (Li et al. 2024).

Image-wise implicit neural representations. The first image-wise implicit neural representation for videos was proposed by NeRV (Chen et al. 2021), which takes the frame index as input and outputs the corresponding RGB frame. Compared with pixel-wise implicit neural representations (Sitzmann et al. 2020b), NeRV greatly improves encoding and decoding speed and achieves better video reconstruction quality. Building on NeRV, E-NeRV (Li et al. 2022) boosts video reconstruction performance by decomposing the image-wise implicit neural representation into separate spatial and temporal contexts. CNeRV (Chen et al. 2022) proposes a hybrid video neural representation with content-adaptive embedding to further introduce internal generalization. D-NeRV (He et al. 2023) introduces a visual content encoder that encodes clip-specific visual content from sampled key-frames and a motion-aware decoder that outputs video frames. FFNeRV (Lee et al. 2023) introduces multi-resolution temporal grids to combine different temporal resolutions. HNeRV (Chen et al. 2023) and HiNeRV (Kwan et al. 2024) propose hybrid neural representation approaches that employ a VAE-shaped deep network.

Video compression. Visual data compression, a cornerstone of computer vision and image processing, has been studied extensively for decades. Traditional video compression algorithms such as H.264 (Wiegand et al. 2003) and HEVC (Sullivan et al. 2012) have achieved remarkable success. Some works approach video compression as an image interpolation problem, introducing competitive interpolation networks (Wu, Singhal, and Krahenbuhl 2018), generalizing optical flow to scale-space flow for better uncertainty modeling (Agustsson et al. 2020; Yang et al. 2020b), and employing temporal hierarchical structures with neural networks for various components (Yang et al. 2020a). However, these methods are still constrained by the traditional compression pipeline. In contrast, NeRV adopts the INR approach, transforming video compression into model compression and demonstrating substantial potential. Because videos are typically encoded once but decoded many times, INR methods like NeRV benefit from their high decoding efficiency and support parallel decoding, whereas other video compression methods must decode frames sequentially after reconstructing key frames.

Meta-learning INRs. Meta-learning typically addresses the problem of “few-shot learning”, in which a set of example tasks is used to train an algorithm that generalizes well to new, similar tasks. Previous work on meta-learning has focused on few-shot learning (Ravi and Larochelle 2016; Mishra et al. 2017; Patravali 2021; Liu et al. 2023a) and reinforcement learning (Finn, Abbeel, and Levine 2017; Sitzmann et al. 2020a), where a meta-learner enables fast adaptation to new observations and better generalization from few samples. Optimization-based meta-learning algorithms such as Model-Agnostic Meta-Learning (MAML) (Finn, Abbeel, and Levine 2017; Li et al. 2017; Antoniou, Edwards, and Storkey 2018; Flennerhag et al. 2019; Rajeswaran et al. 2019; Hospedales et al. 2021; Tancik et al. 2021; Guo et al. 2024; Liu et al. 2023a) are the most relevant to this work. Given a network architecture for performing a task, these methods use an outer loop of gradient-based learning to find a weight initialization that allows the network to optimize more efficiently on new tasks at test time. They assume a standard gradient-based optimizer, such as stochastic gradient descent or Adam (Kingma and Ba 2014), at test time. Liu et al. (2023a) propose partition methods for learning-to-learn INRs via meta-learning.

We are the first to apply the meta-learning framework to image-wise implicit neural representation models, increasing the convergence speed and improving the generalizability of video implicit neural representation methods. As illustrated in Fig. 2, our method achieves strong results in both quantitative and qualitative experiments.

Methods

Problem Formulation

We aim to learn a prior over INRs for videos. As in (Chen et al. 2021; Li et al. 2022), a video can be viewed as a continuous function defined on a bounded domain that maps a (normalized) frame index to an RGB frame. We define a video $V = \{v_t\}_{t=1}^{T}$, where $v_t \in \mathbb{R}^{H \times W \times 3}$ is the $t$-th frame and $T$ denotes the total number of frames. The NeRV-based model fits the video with a deep neural network, i.e., a neural representation $f_\theta$, whose input is a frame index $t$ and whose output is the corresponding RGB image $\hat{v}_t = f_\theta(t)$. Video encoding is therefore done by fitting a neural network to a given video, as illustrated in Fig. 1(a).

In NeRV-based models, the input usually consists of embedding vectors generated by encoding the frame index. Some methods incorporate complementary information into these embedding vectors. Following E-NeRV (Li et al. 2022), we integrate coordinate information to construct spatiotemporal embedding vectors. These embedding vectors are then fed into the generator, which successively enlarges them with convolutional or deconvolutional operations until the desired image size is reached. Theoretical details concerning video fitting can be found in Appendix Section A.
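As an illustration of the frame-index encoding step, the sketch below implements the sinusoidal positional encoding introduced by NeRV, Γ(t) = (sin(b^0 π t), cos(b^0 π t), ..., sin(b^(l−1) π t), cos(b^(l−1) π t)), using b = 1.25 and l = 80 from NeRV's reference setup, treated here as assumptions. It is a minimal NumPy sketch: the function name `nerv_positional_encoding` is hypothetical, the frame index is assumed to be normalized to [0, 1], and the coordinate part of the E-NeRV-style spatiotemporal embedding used by MetaNeRV is not shown.

```python
import numpy as np


def nerv_positional_encoding(t, b=1.25, l=80):
    """NeRV-style sinusoidal encoding of a normalized frame index t.

    Returns a vector of length 2 * l laid out as
    [sin(b^0*pi*t), cos(b^0*pi*t), ..., sin(b^(l-1)*pi*t), cos(b^(l-1)*pi*t)].
    """
    freqs = np.pi * b ** np.arange(l)  # pi * b^i for i = 0..l-1
    angles = t * freqs
    enc = np.empty(2 * l)
    enc[0::2] = np.sin(angles)  # even slots: sines
    enc[1::2] = np.cos(angles)  # odd slots: cosines
    return enc


# Hypothetical usage: embed every frame of a 60-frame clip,
# normalizing index i to t = i / (T - 1) so that t lies in [0, 1].
T = 60
frame_embeddings = np.stack([nerv_positional_encoding(i / (T - 1)) for i in range(T)])
print(frame_embeddings.shape)  # (60, 160)
```

The high-frequency terms let the generator distinguish adjacent frames whose normalized indices differ only slightly.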

MetaNeRV Framework

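In this section, we provide a detailed introduction to our MetaNeRV framework, as illustrated in Fig. 3. Given a dataset $\mathcal{D}$ of videos drawn from a specific distribution $\mathcal{T}$ (e.g., real-world videos or ultrasound videos), our objective is to find initial weights $\theta_0^*$ that minimize the final loss after optimizing the network for $m$ steps to represent a new video from the same distribution. Our target function is formulated as follows:

$$\theta_0^* = \arg\min_{\theta_0} \mathbb{E}_{V \sim \mathcal{T}} \left[ \mathcal{L}\left(\theta_m(\theta_0, V); V\right) \right] \tag{1}$$

where $\mathcal{L}(\theta; V)$ is the reconstruction loss of $f_\theta$ on video $V$, and $\theta_m(\theta_0, V)$ denotes the weights obtained after $m$ optimization steps on $V$ starting from $\theta_0$.

We utilize MAML (Finn, Abbeel, and Levine 2017; Liu et al. 2023a) to learn the initial weights of the network so that they serve as a good starting point for gradient descent on new tasks.

Given a video $V$, computing $\theta_m(\theta_0, V)$ requires executing $m$ optimization steps, collectively termed the inner loop. We wrap this inner loop in an outer meta-learning loop that learns the initial weights $\theta_0$. In each iteration of the outer loop, we sample a video $V$ from $\mathcal{D}$ and apply the update rule:

$$\theta_0 \leftarrow \theta_0 - \beta \nabla_{\theta_0} \mathcal{L}\left(\theta_m(\theta_0, V); V\right) \tag{2}$$

with meta learning rate $\beta$. This update rule applies gradient descent to the loss evaluated at the weights produced by the inner loop; because the gradient is taken with respect to $\theta_0$, it flows back through the inner-loop optimization.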
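To make the inner and outer loops of Eq. (2) concrete, the sketch below runs MAML on a toy problem in which each video is replaced by a linear least-squares task. This substitution is an assumption made purely for illustration: because the loss is quadratic, the Jacobian of the inner-loop result with respect to θ0 is exactly (I − αH)^m, so the full second-order MAML meta-gradient can be computed in closed form with NumPy rather than by automatic differentiation. The inner learning rate α = 0.1, meta learning rate β = 0.05, the toy task generator, and all function names are hypothetical; m = 3 matches the number of inner-loop steps reported in the implementation details.

```python
import numpy as np


def loss_and_grad(theta, X, y):
    """MSE of a linear model X @ theta and its gradient with respect to theta."""
    r = X @ theta - y
    n = X.shape[0]
    return float(r @ r) / n, 2.0 * X.T @ r / n


def inner_loop_loss_and_meta_grad(theta0, X, y, alpha, m):
    """Run m inner gradient steps from theta0; return L(theta_m) and dL(theta_m)/dtheta0.

    For this quadratic loss the Hessian H = 2 X^T X / n is constant, so
    d theta_m / d theta0 = (I - alpha H)^m exactly, and the MAML meta-gradient
    is (I - alpha H)^m @ grad L(theta_m) (the Jacobian is symmetric).
    """
    theta = theta0.copy()
    for _ in range(m):  # inner loop: plain gradient descent
        theta = theta - alpha * loss_and_grad(theta, X, y)[1]
    loss_m, g_m = loss_and_grad(theta, X, y)
    H = 2.0 * X.T @ X / X.shape[0]
    J = np.linalg.matrix_power(np.eye(theta0.size) - alpha * H, m)
    return loss_m, J @ g_m


def meta_train(tasks, dim, alpha=0.1, beta=0.05, m=3, iters=500, seed=0):
    """Outer loop: sample one task per iteration and apply the update of Eq. (2)."""
    rng = np.random.default_rng(seed)
    theta0 = np.zeros(dim)
    for _ in range(iters):
        X, y = tasks[rng.integers(len(tasks))]
        _, meta_g = inner_loop_loss_and_meta_grad(theta0, X, y, alpha, m)
        theta0 = theta0 - beta * meta_g
    return theta0


# Toy "videos": regression tasks whose true weights cluster around w_mean.
rng = np.random.default_rng(42)
w_mean = np.full(5, 2.0)


def make_task():
    X = rng.normal(size=(20, 5))
    return X, X @ (w_mean + 0.1 * rng.normal(size=5))


theta0 = meta_train([make_task() for _ in range(50)], dim=5)
X_new, y_new = make_task()  # an unseen task
loss_meta, _ = inner_loop_loss_and_meta_grad(theta0, X_new, y_new, 0.1, 3)
loss_zero, _ = inner_loop_loss_and_meta_grad(np.zeros(5), X_new, y_new, 0.1, 3)
print(loss_meta < loss_zero)  # True
```

On these toy tasks the meta-learned θ0 settles near the shared mean of the task weights, so three inner steps from it reach a lower loss on an unseen task than three steps from a zero initialization, which is the effect Eq. (1) targets. For a real NeRV generator the same meta-gradient would instead be obtained by differentiating through the unrolled inner loop with an autodiff framework.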

We adopt a combination of L1 and SSIM losses as the loss function for network optimization, computed over all pixel locations of the predicted and ground-truth images. Details of the loss function can be found in Appendix Section A.
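The exact weighting is given in the appendix, which is not part of this section, so the sketch below assumes the form used by NeRV, α·L1 + (1 − α)·(1 − SSIM) with α = 0.7. It is a minimal NumPy version under further assumptions: SSIM is computed on a single-channel image with a uniform 7×7 sliding window rather than the 11×11 Gaussian window of the original SSIM definition, and the function names are hypothetical. For RGB frames one would average the per-channel SSIM.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def ssim(x, y, win=7, data_range=1.0):
    """Mean SSIM of two 2-D images using a uniform win x win sliding window."""
    c1, c2 = (0.01 * data_range) ** 2, (0.03 * data_range) ** 2

    def local_mean(a):
        # Average over every win x win window ("valid" positions only).
        return sliding_window_view(a, (win, win)).mean(axis=(-2, -1))

    mx, my = local_mean(x), local_mean(y)
    vx = local_mean(x * x) - mx ** 2
    vy = local_mean(y * y) - my ** 2
    cxy = local_mean(x * y) - mx * my
    s = ((2 * mx * my + c1) * (2 * cxy + c2)) / ((mx ** 2 + my ** 2 + c1) * (vx + vy + c2))
    return float(s.mean())


def l1_ssim_loss(pred, gt, alpha=0.7):
    """alpha * L1 + (1 - alpha) * (1 - SSIM); alpha = 0.7 is an assumed weight."""
    l1 = float(np.abs(pred - gt).mean())
    return alpha * l1 + (1 - alpha) * (1 - ssim(pred, gt))
```

The L1 term penalizes per-pixel intensity errors, while the SSIM term rewards matching local means, contrasts, and structure, which L1 alone does not capture.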

Spatial Guidance

The spatial challenge arises because NeRV-based models progressively enlarge a small feature vector by passing it through a stack of structurally identical blocks. Applying the finest-grained supervision only at the final resolution stage may provide insufficient supervisory signals to the preceding blocks, making it difficult for the earlier stages to converge to a good solution at their own resolutions. We therefore introduce multi-resolution supervision, which provides supervisory signals directly at each resolution stage and encourages the outputs of all NeRV blocks to converge toward the correspondingly downsampled ground truth. This spatial guidance improves both fitting accuracy and convergence speed.

The NeRV-based model employs upsampling blocks that enlarge the frame-index encoding, block by block, to the target image size. These blocks produce $K$ feature maps $F_1, \dots, F_K$ of increasing resolution, and after each of them we append a convolutional header $h_k$:

$$\hat{v}_t^{(k)} = h_k(F_k), \quad k = 1, \dots, K \tag{3}$$

where the header processes the feature map of each layer to generate a video frame $\hat{v}_t^{(k)}$ at the resolution of that feature map, as shown in Fig. 4(a).

The header transforms the multi-channel feature map into a three-channel (RGB) image. We then downsample the ground-truth frame $v_t$ to the size of each feature map, denoted $v_t^{(k)}$, and compute the loss used for backpropagation:

$$\mathcal{L}_{\mathrm{ms}} = \sum_{k=1}^{K} w_k \, \ell\left(\hat{v}_t^{(k)}, v_t^{(k)}\right) \tag{4}$$

where $\ell$ is the per-frame L1 + SSIM loss, and the final loss $\mathcal{L}_{\mathrm{ms}}$ is a weighted sum of the per-stage losses, with weights $w_k$ based on the global average pooling of the downsampled frames $v_t^{(k)}$.
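A minimal sketch of the multi-resolution loss in Eq. (4), under stated assumptions: the ground truth is downsampled by average pooling, the per-stage loss defaults to plain L1 (the L1 + SSIM loss could be passed in through `frame_loss`), and the stage weights w_k default to uniform values because the exact global-average-pooling-based weighting is not specified in this section. The header outputs are taken as given arrays; `avg_pool`, `l1`, and `multires_loss` are hypothetical names.

```python
import numpy as np


def avg_pool(img, factor):
    """Downsample an (H, W, C) image by an integer factor using average pooling."""
    h, w, c = img.shape
    assert h % factor == 0 and w % factor == 0, "size must be divisible by factor"
    return img.reshape(h // factor, factor, w // factor, factor, c).mean(axis=(1, 3))


def l1(a, b):
    return float(np.abs(a - b).mean())


def multires_loss(stage_preds, gt, weights=None, frame_loss=l1):
    """Weighted sum of per-stage losses against the downsampled ground truth (Eq. 4).

    stage_preds: list of (H_k, W_k, 3) header outputs, coarsest first.
    weights: per-stage weights w_k; uniform weights are a placeholder assumption.
    """
    if weights is None:
        weights = [1.0 / len(stage_preds)] * len(stage_preds)
    total = 0.0
    for w_k, pred in zip(weights, stage_preds):
        factor = gt.shape[0] // pred.shape[0]
        assert gt.shape[1] // pred.shape[1] == factor, "stages must keep the aspect ratio"
        total += w_k * frame_loss(pred, avg_pool(gt, factor))
    return total
```

Because every stage is compared with a ground truth at its own resolution, the early blocks receive a direct gradient signal instead of relying only on what propagates back from the final output.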

| Methods (Step1/Step3) | PSNR (dB) MCLJCV | PSNR (dB) HMDB-51 | PSNR (dB) UCF101 | PSNR (dB) EchoNet-LVH | PSNR (dB) EchoCP | MS-SSIM MCLJCV | MS-SSIM HMDB-51 | MS-SSIM UCF101 | MS-SSIM EchoNet-LVH | MS-SSIM EchoCP |
|---|---|---|---|---|---|---|---|---|---|---|
| NeRV | 11.23/13.78 | 11.71/14.57 | 11.38/14.78 | 8.06/15.23 | 7.14/17.68 | 0.19/0.37 | 0.15/0.41 | 0.14/0.37 | 0.32/0.50 | 0.21/0.41 |
| E-NeRV | 11.13/15.78 | 11.13/15.78 | 10.04/15.04 | 6.36/16.44 | 7.79/18.53 | 0.24/0.61 | 0.24/0.61 | 0.25/0.59 | 0.38/0.71 | 0.21/0.74 |
| FFNeRV | 10.11/12.47 | 11.71/13.09 | 12.75/13.88 | 6.64/16.95 | 7.46/12.27 | 0.19/0.32 | 0.15/0.32 | 0.14/0.25 | 0.20/0.45 | 0.22/0.32 |
| HNeRV | 11.25/12.69 | 11.71/12.89 | 10.9/13.89 | 6.72/17.35 | 6.61/17.57 | 0.21/0.36 | 0.15/0.3 | 0.13/0.26 | 0.23/0.53 | 0.20/0.40 |
| MetaNeRV | 17.60/22.02 | 18.43/21.43 | 18.69/22.46 | 24.05/26.94 | 23.34/25.44 | 0.67/0.83 | 0.73/0.88 | 0.72/0.86 | 0.88/0.94 | 0.89/0.93 |

Temporal Guidance

Temporally, a video typically consists of tens of frames with only minor changes between adjacent frames. For videos that share similar backgrounds, spatial guidance alone can yield good results during training. However, when videos differ substantially in their background and foreground content, training becomes inefficient.

To address this challenge and improve training efficiency, we introduce a progressive training strategy as temporal guidance, which helps the model learn good initialization parameters from videos with distinct differences. As illustrated in Fig. 4(b), progressive training starts the inner loop with a simple task: fitting one frame per video. As iterations proceed, the number of frames fitted per video is gradually increased to raise the task complexity. The simple early tasks let the model converge more rapidly, and because they contain fewer frames, they also significantly reduce training time. Further details of the algorithm can be found in Appendix Section B.
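This section states only that progressive training starts from one frame per video and increases the number of frames as outer-loop iterations proceed; the exact schedule is in Appendix Section B. The sketch below is therefore a hypothetical staged schedule: the outer loop is split into equal stages, the frame count grows linearly from 1 to the 60 frames per video used in the experiments, and the frames for each inner-loop task are sampled uniformly at random without replacement. The stage count, the linear growth, the sampling rule, and the function names are all assumptions.

```python
import random


def frames_for_iteration(it, total_iters, max_frames=60, min_frames=1, n_stages=6):
    """Hypothetical staged schedule for the number of frames fitted per video.

    The outer loop is split into n_stages equal stages; the frame count grows
    linearly from min_frames in the first stage to max_frames in the last.
    """
    stage = min(n_stages - 1, it * n_stages // total_iters)
    return min_frames + round(stage * (max_frames - min_frames) / (n_stages - 1))


def sample_frame_indices(num_video_frames, k, rng=random):
    """Pick k distinct frame indices (sorted) from a video for one inner-loop task."""
    return sorted(rng.sample(range(num_video_frames), k))


schedule = [frames_for_iteration(it, 600) for it in range(0, 600, 100)]
print(schedule)  # [1, 13, 25, 36, 48, 60]
```

Early stages are both easier to fit and cheaper per inner loop, which is where the training-time savings described above come from.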

Experiments

Datasets and Implementation Details

Dataset. We conduct quantitative and qualitative comparison experiments on 8 different video datasets to evaluate MetaNeRV against NeRV-based methods on video representation tasks. The datasets include real-world datasets covering various video types, such as UCF101 (Soomro, Zamir, and Shah 2012), HMDB-51 (Kuehne et al. 2011), and MCLJCV (Wang et al. 2016), as well as ultrasound datasets such as EchoCP (Wang et al. 2021) and EchoNet-LVH (Duffy et al. 2022). We select 900 videos from each dataset, 800 for training and 100 for testing. Each video sequence contains 60 frames and is resized to a resolution of 320×240.

Furthermore, we conduct inference experiments on HOLLYWOOD2 (Marcin 2009), SVW (Safdarnejad et al. 2015), and OOPS (Epstein, Chen, and Vondrick 2020), diverse action recognition datasets that cover movie scenes, amateur sports, and unintentional human activities, respectively; we use 300 videos from each. More detailed dataset descriptions can be found in Appendix Section C.

Implementation. We set the up-scale factor of each block in MetaNeRV so that a 320×240 image is reconstructed from a 4×3 feature map. For a fair comparison, we follow the training schedule of the original E-NeRV implementation. We implement the model in PyTorch and train it with the Adam optimizer (Kingma and Ba 2014) at a learning rate of 1e-4. All experiments are conducted on an RTX A6000 GPU, with 3 inner-loop steps.
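Going from a 4×3 feature map to a 320×240 frame requires an overall up-scale factor of 80 in each dimension, so the per-block factors must multiply to 80. The individual factors are not listed in this section; the sketch below uses the hypothetical choice (5, 2, 2, 2, 2) and prints the resolution after each block. Under the spatial guidance described earlier, these would also be the output sizes of the per-block headers.

```python
from math import prod


def stage_resolutions(base_wh, factors):
    """Return the (width, height) reached after each up-scaling block."""
    w, h = base_wh
    sizes = []
    for f in factors:
        w, h = w * f, h * f
        sizes.append((w, h))
    return sizes


factors = (5, 2, 2, 2, 2)  # hypothetical per-block up-scale factors
assert prod(factors) == 320 // 4 == 240 // 3  # overall factor of 80
print(stage_resolutions((4, 3), factors))
# [(20, 15), (40, 30), (80, 60), (160, 120), (320, 240)]
```

Any other factorization of 80 (for example, 5, 4, 2, 2) reaches the same final size with a different number of blocks and intermediate resolutions.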

Metrics. We use PSNR and MS-SSIM (Wang, Simoncelli, and Bovik 2003) to evaluate reconstruction quality, and bits per pixel (BPP) to evaluate compression performance.
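For reference, PSNR is 10·log10(MAX² / MSE) in dB, and BPP divides the compressed size in bits by the total number of pixels in the video; for INR-based compression, the compressed size is that of the (pruned, quantized, entropy-coded) network weights. The helpers below are a minimal NumPy sketch with hypothetical names, assuming pixel values scaled to [0, 1]; MS-SSIM is omitted.

```python
import numpy as np


def psnr(pred, gt, max_val=1.0):
    """Peak signal-to-noise ratio in dB for images scaled to [0, max_val]."""
    mse = float(np.mean((np.asarray(pred) - np.asarray(gt)) ** 2))
    if mse == 0.0:
        return float("inf")
    return 10.0 * np.log10(max_val ** 2 / mse)


def bits_per_pixel(total_bits, num_frames, height, width):
    """BPP: compressed size in bits divided by the number of pixels in the video."""
    return total_bits / (num_frames * height * width)


# A uniform error of 0.1 gives MSE = 0.01, i.e. a PSNR of 20 dB.
print(round(psnr(np.full((4, 4), 0.1), np.zeros((4, 4))), 6))  # 20.0
# A 60-frame 320x240 clip stored in 576,000 bytes (4,608,000 bits) -> 1.0 BPP.
print(bits_per_pixel(4_608_000, 60, 240, 320))  # 1.0
```

Since PSNR is logarithmic, a gain of 10 dB corresponds to a tenfold reduction in MSE.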

Main Results

Video representation.

We first compare our method with image-wise INR methods. We train initialization weights separately on real-world datasets (HMDB-51, UCF101) and ultrasound datasets (EchoCP, EchoNet-LVH). Because the MCLJCV dataset contains too few videos to learn good initialization weights, we perform inference on MCLJCV directly with the weights trained on HMDB-51.

Qualitative comparison. As shown in Fig. 5, our method adapts swiftly to the content of new videos in the video representation task, even after just a few iteration steps. The visualizations of the learned initial weights on real-world datasets appear more reasonable than those of randomly initialized weights, while those on ultrasound datasets exhibit more dataset-specific characteristics, visually indicating that our method has learned an effective initialization.

Quantitative comparison. As presented in Tab. 1, our method significantly outperforms all other image-wise methods when only a few iteration steps are allowed. Notably, on the ultrasound datasets, our method's one-step PSNR and MS-SSIM exceed those of the other methods by a large margin; for example, the one-step PSNR gap exceeds 15 dB on both EchoNet-LVH and EchoCP. On the real-world datasets, our method also significantly outperforms the other methods after both one and three iterations.

OOD results. To demonstrate the generalization and efficiency of our method, we conduct extensive experiments on out-of-distribution (OOD) datasets. All experiments in Fig. 6 use weights trained on HMDB-51 and perform inference directly on four OOD datasets. The left side of Fig. 6 shows our method's strong performance on MCLJCV, while the right side shows that, given a target PSNR for training, our method significantly reduces video representation time on the three datasets with more videos.

| Methods (Step1/Step3) | Meta-learning | Temporal Guidance | Spatial Guidance | PSNR (dB) HMDB-51 | PSNR (dB) UCF101 | PSNR (dB) EchoNet-LVH | PSNR (dB) EchoCP | MS-SSIM HMDB-51 | MS-SSIM UCF101 | MS-SSIM EchoNet-LVH | MS-SSIM EchoCP |
|---|---|---|---|---|---|---|---|---|---|---|---|
| E-NeRV | | | | 11.13/15.78 | 10.04/15.04 | 6.36/16.44 | 7.79/18.53 | 0.24/0.61 | 0.25/0.59 | 0.38/0.71 | 0.21/0.74 |
| MetaNeRV-NoG | ✓ | | | 16.89/19.43 | 16.4/19.08 | 21.66/23.72 | 19.77/21.78 | 0.57/0.71 | 0.55/0.7 | 0.81/0.87 | 0.79/0.87 |
| MetaNeRV-TG | ✓ | ✓ | | 17.3/19.31 | 16.83/19.53 | 22.16/24.31 | 20.63/23.15 | 0.63/0.76 | 0.59/0.73 | 0.83/0.88 | 0.81/0.89 |
| MetaNeRV-SG | ✓ | | ✓ | 17.41/22.06 | 18.04/21.96 | 23.19/25.63 | 22.03/23.77 | 0.69/0.85 | 0.68/0.83 | 0.85/0.91 | 0.88/0.92 |
| MetaNeRV-STG | ✓ | ✓ | ✓ | 18.43/21.43 | 18.69/22.46 | 24.05/26.94 | 23.34/25.44 | 0.73/0.88 | 0.72/0.86 | 0.88/0.94 | 0.89/0.93 |

Video Compression and Denoising.

We further evaluate MetaNeRV's versatility on two downstream tasks: 1) video denoising on the Bunny video, and 2) video compression on the UVG dataset. Following NeRV's setting, we train on noisy videos and compare the predictions with the clean videos. We also apply neural network parameter pruning with various pruning ratios to different NeRV-based methods to evaluate video compression performance. In addition, we compare the compression ability of our method with many popular methods, including H.264 (Wiegand et al. 2003), HEVC (Sullivan et al. 2012), HLVC (Yang et al. 2020a), Scale-space (Agustsson et al. 2020), Wu et al. (Wu, Singhal, and Krahenbuhl 2018), NeRV (Chen et al. 2021), and PS-NeRV (Bai et al. 2023).
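The pruning procedure is not detailed in this section. The sketch below assumes global unstructured magnitude pruning, the scheme described for NeRV's compression pipeline: a single magnitude threshold is chosen across all layers so that the requested fraction of weights is set to zero. Fine-tuning after pruning, quantization, and entropy coding are omitted, and `global_magnitude_prune` is a hypothetical name.

```python
import numpy as np


def global_magnitude_prune(weights, ratio):
    """Zero the `ratio` fraction of smallest-magnitude entries across all tensors.

    The threshold is computed globally (over every tensor at once), so layers
    with many small weights are pruned more heavily than others.
    """
    mags = np.concatenate([np.abs(w).ravel() for w in weights])
    k = int(ratio * mags.size)
    if k == 0:
        return [w.copy() for w in weights]
    threshold = np.partition(mags, k - 1)[k - 1]  # k-th smallest magnitude
    return [np.where(np.abs(w) <= threshold, 0.0, w) for w in weights]


rng = np.random.default_rng(0)
layers = [rng.normal(size=(50, 50)), rng.normal(size=100)]
pruned = global_magnitude_prune(layers, ratio=0.4)
sparsity = sum(int((p == 0).sum()) for p in pruned) / sum(p.size for p in pruned)
print(round(sparsity, 3))  # 0.4
```

A global threshold lets the pruning budget flow to whichever layers tolerate it best, instead of forcing the same ratio on every layer.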

Stronger denoising ability. As shown in Fig. 7(a), the denoising result of MetaNeRV has better visual quality and higher PSNR than that of NeRV, demonstrating the strong denoising ability of MetaNeRV.

Better performance under network pruning. As depicted in Fig. 7(b), MetaNeRV achieves higher reconstruction PSNR than NeRV and E-NeRV at every sparsity level (i.e., pruning ratio), highlighting its robustness to network pruning for video compression.

More powerful compression ability. We present the rate-distortion curves in Fig.7(c). We find that MetaNeRV surpasses all image-wise NeRV-based approaches. Furthermore, MetaNeRV outperforms traditional video compression technologies and other learning-based video compression methods at most BPPs.

Ablation Studies

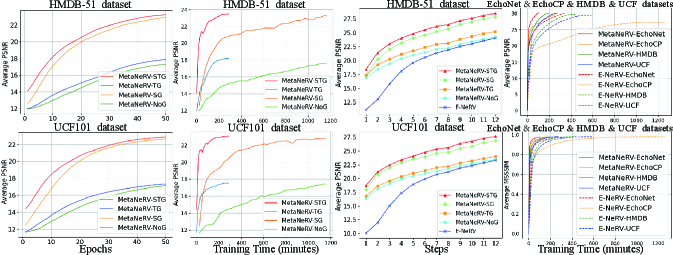

We also conduct ablation studies on four datasets to verify the effect of each type of guidance. More qualitative and quantitative results can be found in Appendix Section D.

Meta-learning. As presented in Tab. 2, meta-learning alone (MetaNeRV-NoG) already accelerates video representation on all four datasets compared with E-NeRV, and adding the temporal and spatial guidance further improves performance in most settings.

Spatial guidance. Spatial guidance significantly improves the model's performance, as shown by the training curves in Fig. 8(a). Furthermore, at the inference stage (Fig. 8(c)), the model with spatial guidance achieves a higher PSNR for the same number of iterations, indicating its effectiveness.

Temporal guidance. Temporal guidance reduces the model's training time while also yielding a slight performance improvement, as shown by the training curves in Fig. 8. At the inference stage, the model still performs well, indicating that temporal guidance improves training efficiency without compromising overall performance.

Significant results. Remarkably, after a single iteration our method surpasses the baseline by over 16 dB in PSNR and 3x in MS-SSIM on EchoNet-LVH, and consistently exceeds it by at least 4 dB in PSNR on the other datasets. Fig. 8(d) shows that our method significantly improves the efficiency of video representation for a given training target.

Conclusion

In conclusion, we present MetaNeRV, a meta-learning framework designed to optimize the initialization of NeRV models. By learning optimal initialization parameters, we achieve significant improvements in both the performance and efficiency of video reconstruction. We introduce spatial guidance for precise training supervision and temporal guidance to improve training efficiency while maintaining stability. The model has limitations: it cannot represent higher-resolution videos without retraining, and it may face convergence challenges when video training data are limited. Future work will address these constraints.

Acknowledgements

This work is supported by the National Key R&D Program of China (Grant No. 2022ZD0160703), the National Natural Science Foundation of China (Grant Nos. 62202422, 62406279, and 62071330), and the Shanghai Artificial Intelligence Laboratory.

References

- Agustsson et al. (2020) Agustsson, E.; Minnen, D.; Johnston, N.; Balle, J.; Hwang, S. J.; and Toderici, G. 2020. Scale-space flow for end-to-end optimized video compression. In CVPR, 8503–8512.

- Antoniou, Edwards, and Storkey (2018) Antoniou, A.; Edwards, H.; and Storkey, A. 2018. How to train your MAML. arXiv preprint arXiv:1810.09502.

- Bai et al. (2023) Bai, Y.; Dong, C.; Wang, C.; and Yuan, C. 2023. PS-NeRV: Patch-wise stylized neural representations for videos. In ICIP, 41–45. IEEE.

- Chen et al. (2022) Chen, H.; Gwilliam, M.; He, B.; Lim, S.-N.; and Shrivastava, A. 2022. CNeRV: Content-adaptive neural representation for visual data. arXiv preprint arXiv:2211.10421.

- Chen et al. (2023) Chen, H.; Gwilliam, M.; Lim, S.-N.; and Shrivastava, A. 2023. HNeRV: A hybrid neural representation for videos. In CVPR, 10270–10279.

- Chen et al. (2021) Chen, H.; He, B.; Wang, H.; Ren, Y.; Lim, S. N.; and Shrivastava, A. 2021. NeRV: Neural representations for videos. NeurIPS, 34: 21557–21568.

- Chen et al. (2024) Chen, X.; Ding, M.; Wang, X.; Xin, Y.; Mo, S.; Wang, Y.; Han, S.; Luo, P.; Zeng, G.; and Wang, J. 2024. Context autoencoder for self-supervised representation learning. International Journal of Computer Vision, 132(1): 208–223.

- Chen, Liu, and Wang (2021) Chen, Y.; Liu, S.; and Wang, X. 2021. Learning continuous image representation with local implicit image function. In CVPR, 8628–8638.

- Duffy et al. (2022) Duffy, G.; Cheng, P. P.; Yuan, N.; He, B.; Kwan, A. C.; Shun-Shin, M. J.; Alexander, K. M.; Ebinger, J.; Lungren, M. P.; Rader, F.; et al. 2022. High-throughput precision phenotyping of left ventricular hypertrophy with cardiovascular deep learning. JAMA cardiology, 7(4): 386–395.

- Dupont et al. (2021) Dupont, E.; Goliński, A.; Alizadeh, M.; Teh, Y. W.; and Doucet, A. 2021. COIN: Compression with implicit neural representations. arXiv preprint arXiv:2103.03123.

- Epstein, Chen, and Vondrick (2020) Epstein, D.; Chen, B.; and Vondrick, C. 2020. Oops! Predicting unintentional action in video. In CVPR, 919–929.

- Finn, Abbeel, and Levine (2017) Finn, C.; Abbeel, P.; and Levine, S. 2017. Model-agnostic meta-learning for fast adaptation of deep networks. In International conference on machine learning, 1126–1135. PMLR.

- Flennerhag et al. (2019) Flennerhag, S.; Rusu, A. A.; Pascanu, R.; Visin, F.; Yin, H.; and Hadsell, R. 2019. Meta-learning with warped gradient descent. arXiv preprint arXiv:1909.00025.

- Glorot, Bordes, and Bengio (2011) Glorot, X.; Bordes, A.; and Bengio, Y. 2011. Deep sparse rectifier neural networks. In Proceedings of the fourteenth international conference on artificial intelligence and statistics, 315–323. JMLR Workshop and Conference Proceedings.

- Guo et al. (2024) Guo, H.; Ba, Y.; Hu, J.; Si, L.; Qiang, W.; and Shi, L. 2024. Self-Supervised Representation Learning with Meta Comprehensive Regularization. In AAAI, volume 38, 1959–1967.

- He et al. (2023) He, B.; Yang, X.; Wang, H.; Wu, Z.; Chen, H.; Huang, S.; Ren, Y.; Lim, S.-N.; and Shrivastava, A. 2023. Towards scalable neural representation for diverse videos. In CVPR, 6132–6142.

- He (2022) He, K. 2022. Masked autoencoders are scalable vision learners. In CVPR, 16000–16009.

- Hendrycks and Gimpel (2016) Hendrycks, D.; and Gimpel, K. 2016. Gaussian error linear units (GELUs). arXiv preprint arXiv:1606.08415.

- Hinton, Osindero, and Teh (2006) Hinton, G. E.; Osindero, S.; and Teh, Y.-W. 2006. A fast learning algorithm for deep belief nets. Neural computation, 18(7): 1527–1554.

- Hospedales et al. (2021) Hospedales, T.; Antoniou, A.; Micaelli, P.; and Storkey, A. 2021. Meta-learning in neural networks: A survey. IEEE transactions on pattern analysis and machine intelligence, 44(9): 5149–5169.

- Hsu et al. (2019) Hsu, Y.-C.; Lv, Z.; Schlosser, J.; Odom, P.; and Kira, Z. 2019. Multi-class classification without multi-class labels. arXiv preprint arXiv:1901.00544.

- Kingma and Ba (2014) Kingma, D. P.; and Ba, J. 2014. Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980.

- Kuehne et al. (2011) Kuehne, H.; Jhuang, H.; Garrote, E.; Poggio, T.; and Serre, T. 2011. HMDB: a large video database for human motion recognition. In ICCV, 2556–2563. IEEE.

- Kwan et al. (2024) Kwan, H. M.; Gao, G.; Zhang, F.; Gower, A.; and Bull, D. 2024. HiNeRV: Video compression with hierarchical encoding-based neural representation. NeurIPS, 36.

- Lai (2021) Lai, Z. 2021. Video autoencoder: self-supervised disentanglement of static 3d structure and motion. In ICCV, 9730–9740.

- Lee et al. (2023) Lee, J. C.; Rho, D.; Ko, J. H.; and Park, E. 2023. FFNeRV: Flow-guided frame-wise neural representations for videos. In Proceedings of the 31st ACM International Conference on Multimedia, 7859–7870.

- Li et al. (2024) Li, M.; Liu, K.; Chen, H.; Bu, J.; Wang, H.; and Wang, H. 2024. TSINR: Capturing Temporal Continuity via Implicit Neural Representations for Time Series Anomaly Detection. arXiv preprint arXiv:2411.11641.

- Li et al. (2022) Li, Z.; Wang, M.; Pi, H.; Xu, K.; Mei, J.; and Liu, Y. 2022. E-NeRV: Expedite neural video representation with disentangled spatial-temporal context. In ECCV, 267–284. Springer.

- Li et al. (2017) Li, Z.; Zhou, F.; Chen, F.; and Li, H. 2017. Meta-SGD: Learning to learn quickly for few-shot learning. arXiv preprint arXiv:1707.09835.

- Liu et al. (2023a) Liu, K.; Liu, F.; Wang, H.; Ma, N.; Bu, J.; and Han, B. 2023a. Partition Speeds Up Learning Implicit Neural Representations Based on Exponential-Increase Hypothesis. In ICCV, 5474–5483.

- Liu et al. (2023b) Liu, K.; Ma, N.; Wang, Z.; Gu, J.; Bu, J.; and Wang, H. 2023b. Implicit Neural Distance Optimization for Mesh Neural Subdivision. In 2023 IEEE International Conference on Multimedia and Expo, 2039–2044. IEEE.

- Marcin (2009) Marcin. 2009. Actions in Context. In CVPR.

- Mescheder et al. (2019) Mescheder, L.; Oechsle, M.; Niemeyer, M.; Nowozin, S.; and Geiger, A. 2019. Occupancy networks: Learning 3D reconstruction in function space. In CVPR, 4460–4470.

- Mildenhall et al. (2021) Mildenhall, B.; Srinivasan, P. P.; Tancik, M.; Barron, J. T.; Ramamoorthi, R.; and Ng, R. 2021. NeRF: Representing scenes as neural radiance fields for view synthesis. Communications of the ACM, 65(1): 99–106.

- Mishra et al. (2017) Mishra, N.; Rohaninejad, M.; Chen, X.; and Abbeel, P. 2017. A simple neural attentive meta-learner. arXiv preprint arXiv:1707.03141.

- Park et al. (2019) Park, J. J.; Florence, P.; Straub, J.; Newcombe, R.; and Lovegrove, S. 2019. DeepSDF: Learning continuous signed distance functions for shape representation. In CVPR, 165–174.

- Patravali (2021) Patravali, J. 2021. Unsupervised few-shot action recognition via action-appearance aligned meta-adaptation. In ICCV, 8484–8494.

- Peng, Zeng, and Zhao (2022a) Peng, R.; Zeng, Y.; and Zhao, J. 2022a. Distill The Image to Nowhere: Inversion Knowledge Distillation for Multimodal Machine Translation. In EMNLP, 2379–2390.

- Peng, Zeng, and Zhao (2022b) Peng, R.; Zeng, Y.; and Zhao, J. 2022b. HybridVocab: Towards Multi-Modal Machine Translation via Multi-Aspect Alignment. In ICMR, 380–388.

- Pinkus (1999) Pinkus, A. 1999. Approximation theory of the MLP model in neural networks. Acta numerica, 8: 143–195.

- Rajeswaran et al. (2019) Rajeswaran, A.; Finn, C.; Kakade, S. M.; and Levine, S. 2019. Meta-learning with implicit gradients. NeurIPS, 32.

- Ravi and Larochelle (2016) Ravi, S.; and Larochelle, H. 2016. Optimization as a model for few-shot learning. In International conference on learning representations.

- Rho et al. (2022) Rho, D.; Cho, J.; Ko, J. H.; and Park, E. 2022. Neural residual flow fields for efficient video representations. In Proceedings of the Asian Conference on Computer Vision, 3447–3463.

- Safdarnejad et al. (2015) Safdarnejad, S. M.; Liu, X.; Udpa, L.; Andrus, B.; Wood, J.; and Craven, D. 2015. Sports videos in the wild (SVW): A video dataset for sports analysis. In 2015 11th IEEE international conference and workshops on automatic face and gesture recognition (FG), volume 1, 1–7. IEEE.

- Sitzmann et al. (2020a) Sitzmann, V.; Chan, E.; Tucker, R.; Snavely, N.; and Wetzstein, G. 2020a. MetaSDF: Meta-learning signed distance functions. NeurIPS, 33: 10136–10147.

- Sitzmann et al. (2020b) Sitzmann, V.; Martel, J.; Bergman, A.; Lindell, D.; and Wetzstein, G. 2020b. Implicit neural representations with periodic activation functions. NeurIPS, 33: 7462–7473.

- Sitzmann, Zollhöfer, and Wetzstein (2019) Sitzmann, V.; Zollhöfer, M.; and Wetzstein, G. 2019. Scene representation networks: Continuous 3D-structure-aware neural scene representations. NeurIPS, 32.

- Soomro, Zamir, and Shah (2012) Soomro, K.; Zamir, A. R.; and Shah, M. 2012. UCF101: A dataset of 101 human actions classes from videos in the wild. arXiv preprint arXiv:1212.0402.

- Sullivan et al. (2012) Sullivan, G. J.; Ohm, J.-R.; Han, W.-J.; and Wiegand, T. 2012. Overview of the high efficiency video coding (HEVC) standard. IEEE Transactions on circuits and systems for video technology, 22(12): 1649–1668.

- Tancik et al. (2021) Tancik, M.; Mildenhall, B.; Wang, T.; Schmidt, D.; Srinivasan, P. P.; Barron, J. T.; and Ng, R. 2021. Learned initializations for optimizing coordinate-based neural representations. In CVPR, 2846–2855.

- Tancik et al. (2020) Tancik, M.; Srinivasan, P.; Mildenhall, B.; Fridovich-Keil, S.; Raghavan, N.; Singhal, U.; Ramamoorthi, R.; Barron, J.; and Ng, R. 2020. Fourier features let networks learn high frequency functions in low dimensional domains. NeurIPS, 33: 7537–7547.

- Tong (2022) Tong, Z. 2022. VideoMAE: Masked autoencoders are data-efficient learners for self-supervised video pre-training. NeurIPS, 35: 10078–10093.

- Vaswani et al. (2017) Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A. N.; Kaiser, Ł.; and Polosukhin, I. 2017. Attention is all you need. NeurIPS, 30.

- Wang et al. (2016) Wang, H.; Gan, W.; Hu, S.; Lin, J. Y.; Jin, L.; Song, L.; Wang, P.; Katsavounidis, I.; Aaron, A.; and Kuo, C.-C. J. 2016. MCL-JCV: a JND-based H.264/AVC video quality assessment dataset. In ICIP, 1509–1513. IEEE.

- Wang et al. (2021) Wang, T.; Li, Z.; Huang, M.; Zhuang, J.; Bi, S.; Zhang, J.; Shi, Y.; Fei, H.; and Xu, X. 2021. EchoCP: An Echocardiography Dataset in Contrast Transthoracic Echocardiography for Patent Foramen Ovale Diagnosis. In MICCAI, 506–515. Springer.

- Wang, Simoncelli, and Bovik (2003) Wang, Z.; Simoncelli, E. P.; and Bovik, A. C. 2003. Multiscale structural similarity for image quality assessment. In The Thirty-Seventh Asilomar Conference on Signals, Systems & Computers, 2003, volume 2, 1398–1402. IEEE.

- Wiegand et al. (2003) Wiegand, T.; Sullivan, G. J.; Bjontegaard, G.; and Luthra, A. 2003. Overview of the H.264/AVC video coding standard. IEEE Transactions on circuits and systems for video technology, 13(7): 560–576.

- Wu, Singhal, and Krahenbuhl (2018) Wu, C.-Y.; Singhal, N.; and Krahenbuhl, P. 2018. Video compression through image interpolation. In ECCV, 416–431.

- Yang et al. (2020a) Yang, R.; Mentzer, F.; Gool, L. V.; and Timofte, R. 2020a. Learning for video compression with hierarchical quality and recurrent enhancement. In CVPR, 6628–6637.

- Yang et al. (2020b) Yang, R.; Yang, Y.; Marino, J.; and Mandt, S. 2020b. Hierarchical autoregressive modeling for neural video compression. arXiv preprint arXiv:2010.10258.

- Yu et al. (2021) Yu, A.; Ye, V.; Tancik, M.; and Kanazawa, A. 2021. pixelNeRF: Neural radiance fields from one or few images. In CVPR, 4578–4587.

- Zhang et al. (2025) Zhang, S.; Liu, K.; Gu, J.; Cai, X.; Wang, Z.; Bu, J.; and Wang, H. 2025. Attention Beats Linear for Fast Implicit Neural Representation Generation. In ECCV 2024, 1–18.

Appendix

A. Video Fitting Details

In implicit neural representations for video, a video is parameterized by a neural function $f_\theta$ with weights $\theta$ (the learnable parameters). A typical example of $f_\theta$ is NeRV (Chen et al. 2021). We consider a more general class of $f_\theta$ in which the model fits a video by mapping the input timestamp to a high-dimensional embedding space and then upscaling the embedding with stacked layers. Specifically, the basic NeRV uses Positional Encoding (Tancik et al. 2020) as its embedding function, while E-NeRV embeds the spatial and temporal context separately and fuses them with a transformer (Vaswani et al. 2017).

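$$
\Gamma(t) = \big(\sin(b^{0}\pi t), \cos(b^{0}\pi t), \dots, \sin(b^{l-1}\pi t), \cos(b^{l-1}\pi t)\big), \qquad e(t) = \mathcal{F}\big(\mathrm{MLP}(\Gamma(t))\big) \tag{5}
$$

where $\Gamma(\cdot)$ is the frequency positional encoding with base $b$ and $l$ frequencies, and $\mathrm{MLP}(\cdot)$ is an MLP network that encodes the timestamp into a vector. $\mathcal{F}(\cdot)$ stands for a network that fuses other information, such as spatial information, into the temporal embedding. $e(t)$ stands for the final temporal or spatial-temporal embedding, which is the input of the next upscaling block.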
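As an illustration of Eq. (5), the sketch below computes the frequency positional encoding (written $\Gamma$ here) of a frame index and passes it through a one-layer MLP. It is a minimal plain-Python sketch under stated assumptions: the base `b = 1.25`, the number of frequencies `l`, the normalization `t = frame_index / num_frames`, and the single GELU layer are illustrative choices, not the paper's configuration. The fusion network $\mathcal{F}$ is left as the identity unless a hypothetical `fuse` callable is supplied.

```python
import math


def positional_encoding(t, b=1.25, l=8):
    """Frequency positional encoding of a normalized timestamp t.

    Returns [sin(b^0*pi*t), cos(b^0*pi*t), ..., sin(b^(l-1)*pi*t), cos(b^(l-1)*pi*t)],
    a list of length 2 * l.
    """
    features = []
    for k in range(l):
        omega = (b ** k) * math.pi
        features.append(math.sin(omega * t))
        features.append(math.cos(omega * t))
    return features


def gelu(x):
    # Exact GELU: x * Phi(x), where Phi is the standard normal CDF.
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def mlp_layer(x, weight, bias):
    # One fully connected layer followed by GELU: GELU(W x + b).
    return [gelu(sum(w * xi for w, xi in zip(row, x)) + b_j)
            for row, b_j in zip(weight, bias)]


def temporal_embedding(frame_index, num_frames, weight, bias, l=8, fuse=None):
    """e(t) = F(MLP(Gamma(t))); F is the identity unless `fuse` is given (hypothetical helper)."""
    t = frame_index / num_frames  # assumed normalization of the frame index
    h = mlp_layer(positional_encoding(t, l=l), weight, bias)
    return fuse(h) if fuse is not None else h


# Usage: a 4-dimensional embedding for frame 3 of a 10-frame video.
W = [[0.01 * (i + j) for j in range(16)] for i in range(4)]  # toy weights, 4 x (2 * 8)
B = [0.0] * 4
print(temporal_embedding(3, 10, W, B))
```

Keeping $\Gamma$, the MLP, and $\mathcal{F}$ as separate functions mirrors the decomposition in Eq. (5), so swapping in a different fusion network (as E-NeRV does) only changes `fuse`.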

The NeRV-based model uses upscaling blocks: the final embedding is upscaled to the output image size by stacking multiple upscaling blocks. Suppose there are $L$ layers, each of which performs an upscaling operation. For layer $i$, the upscaling operation can be expressed as:

$$
\mathbf{h}_i = U\left(\mathbf{W}_i \mathbf{h}_{i-1} + \mathbf{b}_i\right) \tag{6}
$$

where $\mathbf{h}_{i-1}$ is the output of the previous layer (for the first layer, the temporal embedding vector $\mathbf{h}_0 = e(t)$), and $\mathbf{W}_i$ and $\mathbf{b}_i$ are the weight and bias of layer $i$. $U(\cdot)$ can be any appropriate upscaling function, such as convolution or fully connected layers. The NeRV block is shown in Fig. 9.

In each layer, feature transformations and nonlinear activations can be applied in addition to the upscaling operation. This can be expressed as:

$$
\mathbf{h}_i = \sigma\left(U\left(\mathbf{W}_i \mathbf{h}_{i-1} + \mathbf{b}_i\right)\right) \tag{7}
$$

where $\sigma(\cdot)$ is a nonlinear activation function such as ReLU (Glorot, Bordes, and Bengio 2011) or GELU (Hendrycks and Gimpel 2016).

After the $L$-th layer, we obtain a representation whose spatial size matches that of the image. This representation can be regarded as a feature map containing the image information associated with the input time $t$.

In summary, the whole process can be formulated as:

$$
\hat{\mathbf{v}}_t = \mathbf{h}_L = \sigma\Big(U\big(\mathbf{W}_L \cdots \sigma\big(U(\mathbf{W}_1 e(t) + \mathbf{b}_1)\big) \cdots + \mathbf{b}_L\big)\Big) \tag{8}
$$

where $\hat{\mathbf{v}}_t$ is the final output feature map, which is associated with the input time $t$ and has the same spatial dimensions as the target image; $e(\cdot)$ is the temporal embedding function; $\mathbf{W}_i$ and $\mathbf{b}_i$ are the weights and biases of the layers; $\sigma(\cdot)$ is the nonlinear activation function; and $U(\cdot)$ is the upscaling function.
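The layer stacking in Eqs. (6) to (8) can be sketched on a toy 1-D signal. This is a hedged illustration, not the NeRV implementation: a dense matrix stands in for a convolution, `U` is assumed to be nearest-neighbour 2× upsampling (NeRV blocks themselves use a convolution followed by PixelShuffle), and ReLU plays the role of $\sigma$. All function names are hypothetical.

```python
def relu(x):
    return max(0.0, x)


def affine(h, weight, bias):
    # W_i h_{i-1} + b_i with a dense weight matrix (stand-in for a convolution).
    return [sum(w * hj for w, hj in zip(row, h)) + b_i for row, b_i in zip(weight, bias)]


def upsample2(h):
    # U: nearest-neighbour upsampling of a 1-D signal, repeating each value twice.
    return [v for v in h for _ in range(2)]


def nerv_forward(e_t, layers, act=relu):
    """Apply h_i = act(U(W_i h_{i-1} + b_i)) for each (W_i, b_i) in `layers`, with h_0 = e(t)."""
    h = list(e_t)
    for weight, bias in layers:
        h = [act(v) for v in upsample2(affine(h, weight, bias))]
    return h  # h_L: the toy "frame", 2**L times longer than e(t)


def identity(n):
    return [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]


# Usage: two layers with identity weights turn a length-2 embedding into a length-8 output.
layers = [(identity(2), [0.0, 0.0]), (identity(4), [0.5] * 4)]
print(nerv_forward([1.0, -2.0], layers))  # [1.5, 1.5, 1.5, 1.5, 0.5, 0.5, 0.5, 0.5]
```

Each loop iteration is one instance of Eq. (7); unrolling the loop gives the nested composition of Eq. (8), with the output length doubling per layer.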

We apply gradient descent to fit the neural network. Let $\theta_0$ denote the initial network weights before any gradient steps are taken, and let $\theta_k$ denote the weights after $k$ steps of optimization. Basic gradient descent follows the update rule:

$$
\theta_{k+1} = \theta_k - \alpha \nabla_{\theta} \mathcal{L}(\theta_k) \tag{9}
$$

where $\alpha$ is the learning rate; in practice, the update is carried out by an optimizer that tracks gradient moments over time to adjust the optimization trajectory. Given $m$ optimization steps, different initial weights $\theta_0$ lead to different final weights $\theta_m$ and losses $\mathcal{L}(\theta_m)$.
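The dependence of the final weights on the initialization, which motivates learning a good starting point, can be seen on a toy problem. The sketch below runs plain gradient descent (Eq. (9) without an adaptive optimizer) on a hypothetical quadratic loss; the loss, learning rate, and step count are illustrative assumptions.

```python
def gradient_descent(theta0, grad_fn, lr=0.1, steps=5):
    """Run `steps` updates theta_{k+1} = theta_k - lr * grad(theta_k) and return theta_m."""
    theta = list(theta0)
    for _ in range(steps):
        theta = [p - lr * g for p, g in zip(theta, grad_fn(theta))]
    return theta


# Hypothetical per-video loss: squared distance to a target weight vector.
target = [2.0, -1.0]


def loss(theta):
    return sum((p - q) ** 2 for p, q in zip(theta, target))


def grad(theta):
    return [2.0 * (p - q) for p, q in zip(theta, target)]


# Same step budget, two different initializations.
far = gradient_descent([0.0, 0.0], grad)
near = gradient_descent([1.8, -0.9], grad)
print(loss(far), loss(near))  # the closer start ends with a much lower loss
```

With a fixed budget of $m = 5$ steps, each step here shrinks the error by the same factor, so the final loss is proportional to the initial one: a better $\theta_0$ directly translates into a better $\theta_m$.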

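We adopt a combination of L1 and SSIM losses as the loss function for network optimization, computed over all pixel locations of the predicted image and the ground-truth image:

$$
\mathcal{L} = \frac{1}{T} \sum_{t=1}^{T} \Big( \lambda \left\lVert \hat{\mathbf{v}}_t - \mathbf{v}_t \right\rVert_1 + (1 - \lambda) \big(1 - \mathrm{SSIM}(\hat{\mathbf{v}}_t, \mathbf{v}_t)\big) \Big)
$$

where $\lambda$ is the hyper-parameter that balances the two loss components, $\hat{\mathbf{v}}_t$ is the predicted frame for frame index $t$, $\mathbf{v}_t$ is the corresponding ground-truth video frame, and $T$ is the total number of video frames.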
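A minimal plain-Python sketch of this objective follows. It makes two simplifying assumptions: SSIM is computed once over the whole frame (a single global window) instead of with the usual local Gaussian windows, and `lam = 0.7` is only an illustrative value for $\lambda$. Frames are flat lists of intensities in [0, 1].

```python
def l1_loss(pred, target):
    # Mean absolute error over all pixel locations.
    return sum(abs(p - q) for p, q in zip(pred, target)) / len(target)


def global_ssim(x, y, data_range=1.0):
    # Simplified SSIM: means, variances and covariance are taken over the whole frame.
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    vx = sum((a - mx) ** 2 for a in x) / n
    vy = sum((b - my) ** 2 for b in y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y)) / n
    c1, c2 = (0.01 * data_range) ** 2, (0.03 * data_range) ** 2  # standard SSIM constants
    return ((2 * mx * my + c1) * (2 * cov + c2)) / ((mx ** 2 + my ** 2 + c1) * (vx + vy + c2))


def fitting_loss(pred_frames, gt_frames, lam=0.7):
    """(1/T) * sum_t [lam * L1(v_hat_t, v_t) + (1 - lam) * (1 - SSIM(v_hat_t, v_t))]."""
    total = sum(lam * l1_loss(p, g) + (1 - lam) * (1 - global_ssim(p, g))
                for p, g in zip(pred_frames, gt_frames))
    return total / len(gt_frames)


gt = [[0.1, 0.5, 0.9, 0.3], [0.2, 0.4, 0.6, 0.8]]
noisy = [[0.15, 0.45, 0.85, 0.35], [0.2, 0.5, 0.5, 0.8]]
print(fitting_loss(gt, gt), fitting_loss(noisy, gt))
```

The L1 term penalizes per-pixel intensity errors, while the SSIM term rewards matching local statistics (mean, contrast, correlation); a perfect reconstruction drives both to zero.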

We adopt E-NeRV (Li et al. 2022) with parameters as our baseline. We follow the same settings as E-NeRV, such as the choice of activation, as shown in Fig. 9. We set for spatial and temporal feature fusion and for temporal instance normalization. We set all positional encoding layers in our model identical to E-NeRV's positional encoding formulation, and we use and if not otherwise denoted.

In this work, we implemented the proposed MetaNeRV and other NeRV-based models (Chen et al. 2021; Li et al. 2022; Chen et al. 2023; Lee et al. 2023) in PyTorch, adopting the original implementations of NeRV, E-NeRV, HNeRV, and FFNeRV for training.

B. Algorithm Details

In this section, we describe in detail how spatial-temporal guidance is applied within the meta-learning framework. Algorithm 1 details the progressive training approach with a NeRV-based model. The network parameters are initialized with predefined values, denoted as . Training starts with the first frame and progressively incorporates subsequent frames until all frames have been used. In the outer-loop iteration , the network receives the top frames from to as input (if , we choose ). The network computes the multi-resolution output based on the current parameters for all frames. A multi-resolution loss function then evaluates the difference between the actual video and the network's output , producing a loss value , where indicates the inner-loop result and indicates the outer loop.
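The two ingredients described above, the progressive growth of the fitted frame set and the multi-resolution loss, can be sketched as follows. This is a hedged illustration only: the schedule `frames_for_iteration` (one additional frame every `interval` outer-loop iterations), the 2× average pooling used to build lower-resolution targets, the 1-D frames, and the equal per-scale weights are all hypothetical choices, not the exact rules of Algorithm 1.

```python
def frames_for_iteration(k, num_frames, interval=5):
    """Indices of the frames fitted at outer-loop iteration k (hypothetical linear schedule).

    Training starts from the first frame and adds one frame every `interval`
    iterations, capped at the total number of frames.
    """
    n_k = min(num_frames, 1 + k // interval)
    return list(range(n_k))


def avg_pool2(x):
    # Halve the resolution of a 1-D frame by averaging non-overlapping pairs.
    return [(x[i] + x[i + 1]) / 2 for i in range(0, len(x) - 1, 2)]


def multi_resolution_l1(stage_outputs, target, weights=None):
    """Weighted L1 between each stage's output and the target at the matching resolution.

    stage_outputs[s] is the prediction at 1 / 2**s of full resolution (s = 0 is full size).
    """
    weights = weights or [1.0] * len(stage_outputs)
    total, ref = 0.0, list(target)
    for s, pred in enumerate(stage_outputs):
        if s > 0:
            ref = avg_pool2(ref)  # ground truth downsampled to this stage's resolution
        total += weights[s] * sum(abs(p - q) for p, q in zip(pred, ref)) / len(ref)
    return total / sum(weights)


print(frames_for_iteration(12, num_frames=10))  # [0, 1, 2]
print(multi_resolution_l1([[0.0, 1.0, 2.0, 3.0], [0.5, 2.5], [1.5]], [0.0, 1.0, 2.0, 3.0]))  # 0.0
```

Supervising every resolution stage gives the intermediate upscaling blocks their own training signal, while the schedule keeps early outer-loop iterations cheap by fitting only the first few frames.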

The algorithm then updates the network parameters using these losses. The update is performed by gradient descent, computing the average gradient across all losses incurred in iteration (i.e., ). The learning rate controls the step size of the parameter update. As training progresses from one iteration to the next, more frames are incorporated, so the network learns from a growing set of frames. The update rule from Eq. (3) can then be written as:

| (10) |
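The outer-loop update can be illustrated with a first-order meta-learning sketch in the style of first-order MAML, which is an assumption here rather than the exact form of Eq. (10): each hypothetical task adapts a copy of the shared initialization with a few inner gradient steps, the gradients evaluated at the adapted weights are averaged, and the shared initialization takes one step of size `outer_lr`. The quadratic per-task losses stand in for per-video fitting losses, and second-order terms are ignored.

```python
def inner_adapt(theta, grad_fn, lr, steps):
    # Inner loop: a few plain gradient steps starting from the shared initialization.
    for _ in range(steps):
        theta = [p - lr * g for p, g in zip(theta, grad_fn(theta))]
    return theta


def outer_step(theta, task_grad_fns, inner_lr=0.1, inner_steps=3, outer_lr=0.05):
    """One first-order meta-update: average post-adaptation gradients over tasks."""
    avg_grad = [0.0] * len(theta)
    for grad_fn in task_grad_fns:
        adapted = inner_adapt(list(theta), grad_fn, inner_lr, inner_steps)
        g = grad_fn(adapted)  # first-order: gradient at the adapted weights
        avg_grad = [a + gi / len(task_grad_fns) for a, gi in zip(avg_grad, g)]
    return [p - outer_lr * a for p, a in zip(theta, avg_grad)]


def quadratic_grad(center):
    # Gradient of the toy task loss ||theta - center||^2.
    return lambda theta: [2.0 * (p - c) for p, c in zip(theta, center)]


tasks = [quadratic_grad([1.0, 0.0]), quadratic_grad([0.0, 1.0])]
theta = [3.0, -3.0]
for _ in range(200):
    theta = outer_step(theta, tasks)
print(theta)  # approaches [0.5, 0.5], which minimizes the average post-adaptation loss here
```

Averaging over tasks is what turns per-video fitting into learning an initialization: the shared weights move toward a point from which every task can be fitted quickly, not toward any single task's optimum.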

C. Datasets Details

UCF101 (Soomro, Zamir, and Shah 2012) dataset is an extension of UCF50 and consists of 13,320 video clips, which are classified into 101 categories. These 101 categories can be classified into 5 types (Body motion, Human-human interactions, Human-object interactions, Playing musical instruments, and Sports). The total length of these video clips is over 27 hours. All the videos are collected from YouTube and have a fixed frame rate of 25 FPS with a resolution of 320 × 240.

The EchoCP (Wang et al. 2021) dataset is a contrast transthoracic echocardiography (cTTE) dataset targeting the diagnosis of patent foramen ovale (PFO). EchoCP consists of 30 patients with both rest and Valsalva maneuver videos covering various PFO grades. The videos are captured in the apical-4-chamber view, contain at least ten cardiac cycles, and have a resolution of 640 × 480.

EchoNet-LVH (Duffy et al. 2022) dataset is a standard echocardiogram study consisting of a series of videos and images visualizing the heart from different angles, positions, and image acquisition techniques. The EchoNet-LVH dataset contains 12,000 parasternal-long-axis echocardiography videos from individuals who underwent imaging as part of routine clinical care at Stanford Medicine. Each video was cropped and masked to remove text and information outside of the scanning sector. The resulting videos are at native resolution.

HMDB-51 (Kuehne et al. 2011) dataset is a large collection of realistic videos from various sources, including movies and web videos. The dataset is composed of 6,766 video clips from 51 action categories (such as “jump”, “kiss” and “laugh”), with each category containing at least 101 clips. The original evaluation scheme uses three different training/testing splits. In each split, each action class has 70 clips for training and 30 clips for testing. The average accuracy over these three splits is used to measure the final performance.

MCL-JCV (Wang et al. 2016) dataset consists of 30 source videos with resolution 1920×1080 and 51 H.264/AVC encoded clips for each source sequence. Single-pass constant QP (CQP) encoding was used, with the quantization parameter (QP) ranging from 1 to 51. More than 120 volunteers participated in the subjective test, and each set of sequences was evaluated by around 50 subjects in a controlled environment.

HOLLYWOOD2 (Marcin 2009): This dataset contains 3669 video clips, totaling 20.1 hours, featuring 12 human action and 10 scene classes from 69 movies, providing a benchmark for real-world action recognition.

SVW (Safdarnejad et al. 2015): Containing 4200 videos from the Coach’s Eye app, SVW covers 30 sports categories and 44 actions. Its amateur content poses significant challenges for automated analysis.

OOPS (Epstein, Chen, and Vondrick 2020): The OOPS dataset includes 20,338 diverse YouTube videos totaling over 50 hours, capturing unintentional human actions in various environments and with differing intentions.

| Initialization | EchoNet (Ultrasound) | EchoCP (Ultrasound) | HMDB51 (Real-world) | UCF101 (Real-world) |
|---|---|---|---|---|
| Random | 7.15/18.74 | 7.79/18.53 | 11.93/17.08 | 10.83/16.34 |
| EchoNet | 24.05/26.94 | 18.63/19.41 | 10.62/15.11 | 12.42/17.62 |
| EchoCP | 23.49/26.60 | 23.34/25.44 | 11.03/15.68 | 12.46/17.97 |
| HMDB51 | 13.72/22.82 | 16.89/21.19 | 18.43/23.02 | 18.52/22.55 |
| UCF101 | 14.93/24.58 | 17.71/21.59 | 18.22/22.65 | 18.69/22.46 |

D. Additional Results

Impact of Dataset Scale on Meta-Initialization Training and Inference Performance. The scale of the dataset significantly affects the representation capacity and generalization of the meta-initialization. As the dataset grows, both gradually improve; however, the computational cost also increases, so obtaining a better meta-initialization requires longer training.

Experimental results in Fig. 12 show that a straightforward combination of meta-learning and the NeRV-based model (MetaNeRV-NoG) benefits from larger datasets. However, even with 6000 training videos, its performance does not surpass that of our proposed method (MetaNeRV-STG) trained on just 200 videos. Our method, which incorporates spatial-temporal guidance, improves with more training videos up to about 2000, after which its performance stabilizes.

Our study shows that adjusting the initialization parameters can markedly improve the effectiveness of video representation. Specifically, meta-initialization methods, led by our proposed MetaNeRV-STG, consistently outperform random initialization across various inference steps. Notably, while both MetaNeRV-STG and MetaNeRV-NoG perform strongly in the initial iterations, the effectiveness of MetaNeRV-NoG gradually diminishes as the number of iterations increases.

Figures 13 and 14 provide detailed support for this conclusion. They present the distributions of per-video PSNR obtained from meta-initializations trained on different numbers of videos, at various inference steps, on two datasets of 6000 videos. The videos are binned into PSNR intervals of width 1.5 and counted. The curves are color-coded by the number of training videos, with 0 representing random initialization and 50 denoting a training set of 50 videos. From left to right, the distributions correspond to different inference steps; the upper row shows MetaNeRV-STG and the lower row shows MetaNeRV-NoG. These figures illustrate the advantage of our meta-initialization, particularly MetaNeRV-STG, in improving video representation throughout the inference process.
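The binning behind these distributions can be sketched as below. The sketch assumes intervals aligned at multiples of 1.5 starting from 0 (the exact bin origin is not stated) and uses a hypothetical list of per-video PSNR values.

```python
import math
from collections import Counter


def psnr_histogram(psnrs, width=1.5):
    """Count videos per PSNR interval [k * width, (k + 1) * width), keyed by interval bounds."""
    counts = Counter(math.floor(p / width) for p in psnrs)
    return {(k * width, (k + 1) * width): counts[k] for k in sorted(counts)}


print(psnr_histogram([20.0, 20.9, 22.6, 31.0]))
# {(19.5, 21.0): 2, (22.5, 24.0): 1, (30.0, 31.5): 1}
```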

More OOD Quantitative Results. Our method significantly outperforms baseline methods on independent out-of-distribution (OOD) test datasets even when trained on a single real-world dataset, demonstrating the robustness and generalizability of MetaNeRV. The ablation studies on ultrasound datasets, shown in Fig. 11, demonstrate the effect of the different guidance components. As illustrated in Fig. 10(a), we used the parameters trained on the HMDB-51 dataset as the initialization for direct inference on three new datasets. The solid lines represent our method, while the dashed lines represent E-NeRV. The other three sub-figures in Fig. 10 show the performance of our method on the OOD datasets. The initialization parameters trained on one real-world dataset transfer well to other real-world datasets, indicating that our method is robust to distribution shifts among real-world videos and can be quickly transferred to other datasets after training on only one real-world dataset.

More OOD Qualitative Results. When adapting the model to specialized domains, we acknowledge that the optimal initial parameters should ideally be obtained from data with the same distribution. However, owing to the diversity of samples in the training set, our model trained on real-world datasets generalizes well compared to random initialization, as shown in Tab. 3: parameters trained on general real-world datasets still transfer well to the ultrasound datasets and perform significantly better than random initialization. This highlights the generalizability of our model.

Additional PSNR results for the video representation task on the MCL-JCV (Wang et al. 2016) dataset are summarized in Tab. 4. This comparison shows that our method substantially reduces convergence time.
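For reference, PSNR for frames with intensities in [0, 1] is $10 \log_{10}(1/\mathrm{MSE})$. The sketch below computes it per frame and averages over a video; averaging per-frame PSNR (rather than pooling the MSE over the whole video) is an assumption about the evaluation protocol, and frames are represented as flat lists of pixel values.

```python
import math


def psnr(pred, target, max_val=1.0):
    # Peak signal-to-noise ratio in dB; infinite for a perfect reconstruction.
    mse = sum((p - q) ** 2 for p, q in zip(pred, target)) / len(target)
    return math.inf if mse == 0 else 10.0 * math.log10(max_val ** 2 / mse)


def video_psnr(pred_frames, gt_frames):
    # Mean of the per-frame PSNR values over the video.
    values = [psnr(p, g) for p, g in zip(pred_frames, gt_frames)]
    return sum(values) / len(values)


print(psnr([0.0, 0.0], [0.1, 0.1]))  # about 20 dB, since MSE = 0.01
```

Because PSNR is logarithmic in the MSE, each gain of 10 dB corresponds to a tenfold reduction in mean squared error.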

More Visualization Results. Additional visual comparisons between the outputs of E-NeRV (Li et al. 2022) and MetaNeRV are given in Fig. 15, Fig. 16, Fig. 17, and Fig. 18. Even after only a few iterations, the output of MetaNeRV is noticeably better and preserves more detail from the original video frames.

From Fig. 15 and Fig. 16, we observe that, thanks to the altered initialization weights, our approach captures the approximate colors and shapes of the ground-truth video in the first iteration. By the second iteration step, our method closely approximates the real video, reconstructing the primary cartoon characters and the background, whereas the frame reconstructed by E-NeRV shows only coarse shapes of the cartoon characters and background. In subsequent iteration steps, our method achieves sufficient clarity to discern the finer details of the cartoon characters and the background.

Our approach performs particularly well on the medical datasets, as observed in Fig. 17 and Fig. 18. We hypothesize that this is because videos in medical ultrasound datasets are often acquired with similar ultrasound equipment. Consequently, the initialization learned by our method already encodes the blurred sector shape and black background characteristic of medical ultrasound videos. The monochromatic nature of ultrasound videos likely also contributes to this performance gain. Our method produces reconstructions close to the original video after just one iteration, and subsequent iterations mainly refine the details within the video. In contrast, random initialization typically learns the black background within a few iterations but struggles to capture fine details, yielding only a rough shape representation.

| Video ID | Step 1 | Step 2 | Step 3 | Step 9 | Step 15 | Step 20 | Step 25 |
|---|---|---|---|---|---|---|---|
| videoSRC01 | 9.21/24.85 | 11.54/28.82 | 19.17/30.4 | 29.09/35.53 | 31.7/37.81 | 32.75/38.67 | 33.24/39.07 |
| videoSRC02 | 12.16/18.11 | 14.22/19.55 | 17.07/20.26 | 21.45/22.92 | 22.8/25.07 | 23.37/26.19 | 23.59/26.69 |
| videoSRC03 | 9.59/21.56 | 12.15/24.11 | 18.99/25.14 | 24.93/28.29 | 26.51/30.19 | 27.21/31.08 | 27.46/31.5 |
| videoSRC04 | 11.78/18.65 | 13.52/20.29 | 16.65/20.98 | 20.74/22.51 | 21.69/23.27 | 22.04/23.63 | 22.16/23.78 |
| videoSRC05 | 14.75/16.49 | 15.77/18.54 | 17.41/19.46 | 20.81/22.38 | 22.3/24.21 | 22.88/25.21 | 23.12/25.54 |
| videoSRC06 | 9.37/9.57 | 10.85/13.12 | 15.44/15.62 | 25.29/28.37 | 31.93/33.38 | 35.54/37.98 | 37.75/39.24 |
| videoSRC07 | 12.98/19.99 | 15.23/22.69 | 19.42/23.87 | 23.21/27.05 | 24.96/28.93 | 25.66/29.83 | 25.94/30.23 |
| videoSRC08 | 7.86/17.24 | 10.28/22.35 | 18.16/23.71 | 23.89/27.4 | 25.24/29.08 | 25.93/29.61 | 26.21/29.91 |
| videoSRC09 | 11.84/15.76 | 13.62/17.44 | 14.83/18.38 | 18.92/21.77 | 20.96/23.81 | 21.77/24.65 | 22.08/25.02 |
| videoSRC10 | 10.59/13.13 | 11.59/13.64 | 12.33/13.83 | 14.28/14.8 | 14.98/15.52 | 15.49/16.05 | 15.72/16.37 |
| videoSRC11 | 10.74/16.87 | 12.45/19.32 | 15.96/20.41 | 20.61/23.27 | 22.25/24.97 | 22.9/26.0 | 23.18/26.41 |
| videoSRC12 | 11.76/19.43 | 13.55/22.33 | 17.42/23.87 | 23.17/28.4 | 25.84/31.3 | 27.15/32.49 | 27.61/32.93 |
| videoSRC13 | 14.28/17.41 | 15.24/19.57 | 16.78/20.44 | 21.45/24.6 | 24.41/28.48 | 25.91/29.95 | 26.49/30.6 |
| videoSRC14 | 10.6/17.09 | 12.82/19.14 | 15.77/20.0 | 19.22/22.61 | 20.88/24.37 | 21.61/25.09 | 21.89/25.42 |
| videoSRC15 | 9.61/20.08 | 12.24/22.46 | 17.84/23.48 | 22.49/25.78 | 24.41/27.34 | 25.09/27.99 | 25.33/28.31 |
| videoSRC16 | 7.66/15.66 | 9.61/22.61 | 16.15/26.38 | 27.29/30.65 | 29.02/32.2 | 29.69/32.69 | 29.93/33.04 |
| videoSRC17 | 10.44/20.83 | 13.43/22.49 | 19.52/23.41 | 22.86/25.61 | 24.03/26.85 | 24.54/27.47 | 24.76/27.77 |
| videoSRC18 | 11.33/17.14 | 13.64/19.01 | 16.12/20.04 | 19.89/22.53 | 21.59/24.16 | 22.15/24.99 | 22.37/25.31 |
| videoSRC19 | 11.73/18.32 | 13.43/19.52 | 16.85/20.23 | 20.25/22.61 | 21.38/24.46 | 22.01/25.5 | 22.26/25.84 |
| videoSRC20 | 14.11/16.9 | 15.05/19.11 | 16.33/20.07 | 20.66/22.6 | 22.66/24.42 | 23.48/25.25 | 23.8/25.71 |
| videoSRC21 | 8.6/20.5 | 10.91/25.43 | 19.33/27.39 | 26.65/32.72 | 29.04/35.45 | 30.78/36.5 | 31.34/36.92 |
| videoSRC22 | 10.95/18.22 | 13.85/19.7 | 17.53/20.56 | 20.61/22.63 | 21.89/24.4 | 22.38/25.22 | 22.59/25.56 |
| videoSRC23 | 11.56/17.1 | 13.34/20.49 | 16.53/21.88 | 22.06/25.92 | 23.82/27.85 | 24.6/28.73 | 24.9/29.1 |
| videoSRC24 | 8.4/17.71 | 10.75/20.26 | 16.71/21.13 | 20.47/23.76 | 21.89/25.5 | 22.78/26.26 | 23.08/26.67 |
| videoSRC25 | 9.32/15.07 | 10.02/17.15 | 12.19/18.44 | 18.68/21.43 | 20.41/22.68 | 21.08/23.31 | 21.35/23.55 |
| videoSRC26 | 10.89/17.84 | 12.96/19.6 | 15.62/20.78 | 20.38/24.39 | 22.0/26.46 | 22.91/27.71 | 23.25/28.17 |
| videoSRC27 | 10.79/19.92 | 12.92/22.84 | 17.1/24.1 | 23.14/27.09 | 25.39/29.12 | 26.46/30.16 | 26.82/30.48 |
| videoSRC28 | 7.48/15.29 | 9.58/20.67 | 15.48/23.99 | 25.7/30.11 | 28.52/32.67 | 29.66/33.29 | 30.07/33.64 |
| videoSRC29 | 7.54/14.12 | 9.45/21.89 | 15.43/25.26 | 32.86/38.7 | 35.19/41.08 | 36.23/41.8 | 36.66/42.23 |
| videoSRC30 | 13.05/15.75 | 15.38/21.28 | 18.11/24.49 | 26.27/31.12 | 29.34/33.76 | 30.23/34.78 | 30.56/35.26 |
| Mean | 10.69/17.55 | 12.64/20.51 | 16.74/21.93 | 22.91/25.91 | 24.73/27.96 | 25.54/28.94 | 25.85/29.34 |