MetaDT: Meta Decision Tree for Interpretable Few-Shot Learning

Abstract

Few-Shot Learning (FSL) is a challenging task, which aims to recognize novel classes with few examples. Recently, lots of methods have been proposed from the perspective of meta-learning and representation learning for improving FSL performance. However, few works focus on the interpretability of FSL decision process. In this paper, we take a step towards the interpretable FSL by proposing a novel decision tree-based meta-learning framework, namely, MetaDT. Our insight is replacing the last black-box FSL classifier of the existing representation learning methods by an interpretable decision tree with meta-learning. The key challenge is how to effectively learn the decision tree (i.e., the tree structure and the parameters of each node) in the FSL setting. To address the challenge, we introduce a tree-like class hierarchy as our prior: 1) the hierarchy is directly employed as the tree structure; 2) by regarding the class hierarchy as an undirected graph, a graph convolution-based decision tree inference network is designed as our meta-learner to learn to infer the parameters of each node. At last, a two-loop optimization mechanism is incorporated into our framework for a fast adaptation of the decision tree with few examples. Extensive experiments on performance comparison and interpretability analysis show the effectiveness and superiority of our MetaDT. Our code will be publicly available upon acceptance.

1 Introduction

Deep convolutional neural network (CNN) has achieved great success on image classification with abundant labeled data [16]. However, for many rare or new-found objects, acquiring so much labeled data is unrealistic, which limits their applications in practical scenarios such as drug discovery [1] and cold-start recommendations [39]. In contrast, humans can quickly learn and recognize novel classes from very few observations. To bridge the gap, Few-Shot Learning (FSL) problem has been proposed and has attracted wide attention recently. It targets at learning transferable knowledge from some base classes with sufficient labeled samples, and then transferring the knowledge to quickly learn a classifier for novel classes with few examples [44].

To address the FSL problem, various methods have been proposed, which can be roughly divided into two categories: meta-learning based methods and representation learning-based methods. The former aims to learn a task-agnostic meta-learner (e.g., a good initial model [13]) by constructing a large number of few-shot tasks from base classes, and then leverages the meta-learner to quickly learn/infer a classifier for novel classes. The latter aims to learn transferable representations from base classes by designing a good training strategy [30] or feature extractor [47], so that the novel classes can be nicely recognized via a simple classifier (e.g., cosine classifier [9]). Though both types of methods have achieved promising performance on FSL, they mainly focus on improving FSL performance, but have not paid sufficient attention to the interpretability of FSL. In fact, such ability is very important in risk-sensitive applications (e.g., medical diagnosis [4]), where the model not only needs to make a decision (e.g., the clinical outcome of a patient), but also to explain the reason of making such decision.

Recently, several works [6, 48, 43] attempt to explore interpretable FSL, i.e., recognizing novel classes meanwhile providing a clear decision explanation for making the class prediction. However, almost all methods focus on explaining how FSL models work from the perspective of heatmap visualization, e.g., visualizing the importance of image regions [48]. These methods only roughly locate and visualize the image region that the FSL model focuses on, but it is still unclear why the FSL model makes such decision.

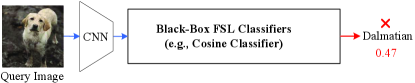

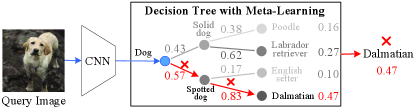

To address the drawback, in this paper, we present a new perspective for interpretable FSL by proposing a novel decision tree-based meta-learning framework, namely, MetaDT. As shown in Figure 1(a) and 1(b), our key idea is 1) replacing the last black-box FSL classifier (e.g., cosine classifier) of the representation learning methods by an interpretable decision tree with meta-learning; 2) introducing a tree-like class hierarchy consisting of few-shot classes (e.g., “English setter” or “Dalmatian”) and their superclasses (e.g., “Spotted dog”) as the decision tree, where each node in the tree is a learnable model making each step decision (e.g., “Is spotted dog?”); and 3) performing novel class prediction in a sequential decision manner from the root of decision tree to its leafs. The advantage of such design is that a sequence of intermediate decisions that lead up to a final class prediction can be obtained, which helps to diagnose why the FSL model makes such prediction. For example, as shown in Figure. 1(b), the image of “Labrador retriever” is wrongly predicted to “Dalmatian”. By examining the intermediate decisions, we can identify the reason for such wrong decision is that the image is misclassified as “Spotted dog” when making the decision of “Is spotted dog or solid dog?”.

Specifically, the key challenge of such idea is how to effectively learn the decision tree (i.e., the tree structure and the parameters of each node) in the FSL setting. To address the challenge, we regard the introduced class hierarchy as a determined structure prior of the decision tree. By viewing it as an undirected graph, we design a graph convolution-based decision tree inference network as our meta-learner to learn to infer the parameters of each node. The advantage of such design is the class hierarchy relations and abundant class semantics can be fully and effectively exploited in our meta-learner. Besides, we incorporate a two-loop optimization mechanism [13] into our framework for a fast adaptation. The outer-loop optimization aims to learn good initial parameters for our meta-learner from abundant base classes. Then, the inner-loop optimization applies the initial parameters to novel classes for quickly inferring a task-specific decision tree with few examples. At last, we perform the novel class prediction in a sequential decision manner from the root of the task-specific decision tree to its leaf nodes.

Our main contributions can be summarized as follows:

-

•

We present a new perspective for interpretable FSL by replacing the black-box FSL classifier with an interpretable decision tree, which provides clear decision explanations for class prediction. To our knowledge, this is the first work to explore decision trees on FSL.

-

•

We propose a novel decision tree-based meta-learning framework. Here, a graph convolution-based decision tree inference network with class hierarchy is carefully designed for effectively learning a decision tree. Its advantage is the priors of class hierarchy (i.e., class semantics and hierarchy relations) can be fully exploited.

-

•

We conduct comprehensive experiments on miniImagenet, CIFAR-FS, and tieredImagenet, which verify the effectiveness of our MetaDT. In addition, extensive interpretability and visualization analyses show the superiority of our MetaDT for interpretable FSL.

2 Related Work

2.1 Few-Shot Learning

Few-Shot Learning (FSL) aims to recognize novel classes with few labeled samples. Existing methods can be roughly grouped into two categories: meta-learning based approaches and representation learning-based approaches. The meta-learning based approaches focus on learning a task-agnostic meta-learner by constructing a large number of few-shot tasks from base classes, and then leverage the meta-learner to quickly learn/infer an FSL classifier for recognizing novel classes. The meta-learner can be a good initial model [13, 32], optimization algorithm [3, 53], embedding network [36, 21, 55], metric strategy [45, 56, 23], or label propagation strategy[37, 34, 50, 31], etc. The representation learning-based approaches aim to design a good feature extractor [52, 47] or training strategy [11, 38, 8, 25] to learn transferable representations from abundant base classes, so that the novel class samples can be recognized by a simple cosine classifier [9] or logistic regression classifier [30].

Recently, some studies attempt to introduce some external knowledge as auxiliary priors to further boost the performance [22, 46, 35, 27, 54] of existing FSL methods. For example, in [46, 22], the authors extend [36] by introducing the semantic information of class names as priors, to enhance class prototypes for FSL. In [27], they follow the representation learning-based approaches and introduce class hierarchies as priors to further boost cosine classifier [9] for FSL. Different from these methods, our MetaDT focuses on interpretable FSL and introduces the priors of class hierarchy to construct and infer a novel decision tree for interpretable FSL. Its advantage is that more superclasses can be exploited to improve knowledge transfer and detailed decision explanations can be provided for class prediction.

2.2 Zero-Shot Learning

Zero-Shot Learning (ZSL) is a challenging machine learning task, which targets at recognizing novel classes without any labeled examples by resorting to some semantic knowledge summarized from human’s past experiences [42, 14]. The main idea is regarding class-level semantic knowledge as the auxiliary information, and then learning a map function between semantic knowledge and class representations for novel class prediction. The semantic knowledge can be class attributions [42], class names [46], class descriptions [12], or class hierarchies [17]. Our work differs from these models in two aspects: 1) our MetaDT focuses on interpretable FSL, where few labeled samples should be fully exploited; 2) resorting to the semantic knowledge of class hierarchy, we focus on learning an interpretable decision tree, i.e., learning a map from class hierarchy to decision trees instead of learning class representations.

2.3 Interpretable Decision Models

Decision model interpretability is a very popular research topic in machine learning, which focuses on clearing model decision process to ensure decision reliability [7]. In earlier studies [33, 20], the decision tree is a very popular model on a wide variety of tasks, as its attractive interpretability. After that, considering the high accuracy of neural networks, some works [41, 18, 51, 19, 49] further combine neural network and decision tree to improve accuracy and interpretability jointly. Recently, several FSL studies also attempt to improve FSL interpretability [6, 48, 43]. For example, in [48], Xue et al. propose a region comparison network to highlight the region similarity between samples for the interpretable FSL. Wang et al. [43] propose an attention-based explainable FSL model by highlighting the discriminative patterns of each image. Almost all methods improve FSL interpretability from the perspective of heatmap visualization, which does not directly reveal why FSL models make such decision. Different from these existing methods, we focus on the interpretability of FSL decision process and propose a novel decision tree-based meta-learning framework (i.e., MetaDT), which directly reveals the reason for the classification decision of each sample.

3 Problem Definition

For the FSL, we are given two data sets: 1) a training set (called support set) that contains novel classes , with labeled samples per class where is very small (e.g., or 5); and 2) an auxiliary data set that consists of abundant labeled images from base classes . Here, the sets of class and are disjoint, i.e., . Based on the support set and the auxiliary data set , our goal is to learn a good classifier for a test set (called query set) that consists of unlabeled novel class samples. This is a common setting in FSL studies, which is called -way -shot tasks (e.g., 5-way 1-shot tasks, or 5-way 5-shot tasks).

In this paper, we focus on the interpretable FSL. Our goal is to learn a decision-interpretable classifier for query set , i.e., the learned classifier not only can perform novel class prediction but also can provide a clear explanation for making the class prediction. To this end, in this paper, we introduce a tree-like class hierarchy (i.e., the semantic hierarchy relations between classes) from external knowledge graphs (e.g., WordNet [26]) as FSL priors, and then carefully design a meta-learner leveraging the priors to effectively infer an interpretable decision tree for recognizing novel classes.

4 MetaDT Framework

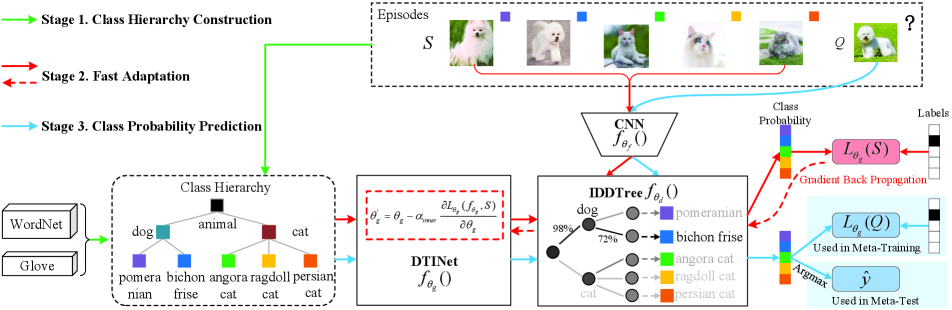

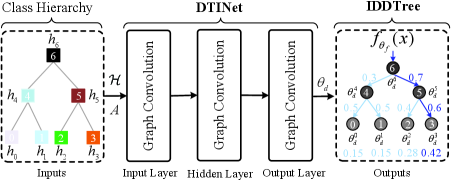

In this section, we introduce the technical details of the proposed MetaDT framework. As shown in Figure 2, the framework consists of three key modules: a CNN-based feature extractor with parameters , a decision tree inference network (DTINet) with parameters , and an interpretable and differentiable decision tree (IDDTree) with parameters . Here, the feature extractor aims to provide a good -dimensional representation for each image, which is obtained by following the representation learning method [30]. The DTINet is a meta-learner, which accounts for learning to quickly infer a task-specific decision tree (i.e., IDDTree) by using the priors of class hierarchy and few labeled samples. The IDDTree is a classifier where its parameters is not trainable but infered by the DTINet , which aims to perform class prediction for each support/query sample.

4.1 Overall Workflow

The main details of the IDDTree and the DTINet will be introduced in Sections 4.2 and 4.3, respectively. Here, we mainly present the workflow depicted in Figure 2, including meta-training and meta-test phases.

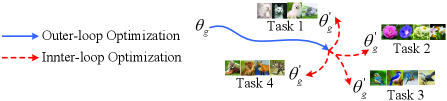

Meta-Training. The key challenge of our MetaDT is how to train our meta-learner (i.e., DTINet ) by leveraging the priors of class hierarchy to quickly infer an interpretable decision tree (i.e., IDDTree ) with very few labeled samples. To address the challenge, we employ a two-loop optimization mechanism [13] (i.e., an inner-loop optimization and an outer-loop optimization) to learn the DTINet . As shown in Figure 3, our intuition is treating the parameters of our meta-learner as the initial parameters of inner-loop optimization, and then attempting to learn good (i.e., transferable) initial parameters by using an outer-loop optimization. By doing so, the initial model can quickly adapt to novel classes with gradient updates upon few labeled samples .

Specifically, as shown in Figure 2, following the episodic training manner [40], we mimic the test setting and construct a number of -way -shot tasks (called episodes) from base classes. Then, we train our meta-learner to quickly infer a task-specific IDDTree for each episode by minimizing the cross entropy loss on query set . That is,

| (1) | ||||

where is the set of constructed episodes and denotes the cross entropy loss function. The above training process can be regarded as an outer-loop optimization for learning good initial parameters . Next, we introduce how to calculate the class probability that each query sample belongs to class in an inner-loop optimization manner, given initial parameters and support set . It includes the following three stages:

Stage 1. Class Hierarchy Construction. We first construct a tree-like class hierarchy as FSL priors. The class hierarchy reflects the semantic relations between classes, i.e., what kinds of superclasses these few-shot classes share. For example, the classes “pomeranian” and “bichon frise” share the same superclass “dog”. We note that such type of class hierarchy can be easily obtained from some external knowledge graphs, e.g., WordNet [26]. Then, we encode the tree-like class hierarchy by using an undirected graph , where its nodes denote all semantic classes (i.e., all few-shot classes and their superclasses) and its edges represent the hierarchy relations between all classes. Let and denote the set of nodes and edges respectively, i.e., . In the graph , each node is represented by a -dimensional semantic vector , i.e., the mean of all -dimensional Glove-based word embeddings [28] of their class names, denoted by where is the number of graph nodes. The edge set is represented as a adjacency matrix where if the node is associated with the node , otherwise .

Stage 2. Fast Adaptation. In this stage, we regard the class hierarchy as inputs and the IDDTree as predicted targets of our initial DTINet . Then, we evaluate the predicted IDDTree on the support set and leverage the evaluated classification loss to quickly finetune the initial DTINet with -step gradient updates. As a result, a task-specific DTINet with parameters can be obtained for each few-shot task. For example, when using one gradient updates (i.e., =1), the process of such fast adaptation can be expressed as:

| (2) |

where is the learning rate of gradient updates. The above process can be regarded as an inner-loop optimization for learning good task-specific parameters . Next, we elaborate on the calculation details for the classification loss on the support set .

Speficifally, we first extract the features of each support sample via the feature extractor . Then, the class probability that each sample belongs to class can be calculated by using the IDDTree infered by our meta-learner (Please refers to Section 4.2 for its calculation details). That is,

| (3) | ||||

Finally, we calculate the classification loss by using a cross entropy loss on support set :

| (4) |

Stage 3. Class Probability Prediction. Based on the above task-specific DTINet , we can obtain a task-specific IDDTree . Then, the class probability that each query sample belongs to class can be calculated in a sequential decision manner (Please refers to Section 4.2 for its details). That is,

| (5) | ||||

Meta-Test. The workflow of meta-test is similar to meta-training. As shown in Figure 2, the difference is that we remove the training step of Eq. 1 and directly evaluate the class probability of each query sample by following Eqs. 2 5. Finally, we perform the novel class prediction to the novel class with highest probability:

| (6) |

4.2 Interpretable and Differentiable Decision Tree

In the subsection, we introduce the technical details of our IDDTree , including the following two parts.

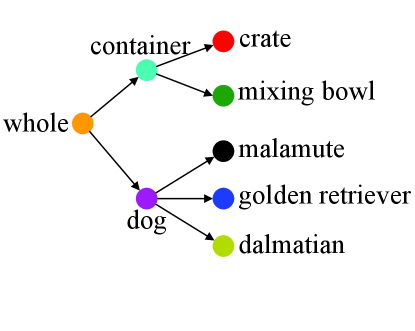

How to design the IDDTree ? As shown in Figure 4, for interpretability, our main idea is treating the hierarchy relations in the tree-like class hierarchy as explicit decision rules, and then performing class predictions by following the rules. For differentiability, our notion is assigning each tree node of the class hierarchy with a -dimensional weight vector (i.e., where is the number of tree nodes), and then performing the class prediction in a sequential and soft probability inference manner from the root of decision rules to its leafs.

How to evaluate the class probability? Intuitively, the weight vector can be regarded as the prototype or class center of each class in the feature space. Thus, given a support/query image and its features , we can evaluate the class probability that the sample belongs to few-shot class in a metric-based decision manner from the root of decision tree to its leaf nodes. It includes the following two steps:

Step 1. For each non-leaf node (i.e., superclasses), we need to make decisions for its all child nodes denoted by , i.e., evaluating the conditional probability that the sample belongs to child class . We evaluate the conditional probability by computing the cosine similarity between the sample and the weights of child class . That is,

| (7) |

where denotes the cosine similarity of two vectors and is a scale parameter. Following [8], is used.

Step 2. For each leaf node (i.e., few-shot class ), given its traversal path from the root and the conditional probability of each node traversing its child node in the path , i.e., , the class probability that each support/query sample belongs to the class can be calculated in a cumulative product manner along the traversal path . That is,

| (8) |

For clarity, we give an example to show the above calculation process. As shown in Figure 4, given a sample , 1) we compute all conditional probability of non-leaf nodes in Eq. 7, e.g., ; and then 2) the class probability is obtained by using Eq. 8, e.g., .

4.3 Decision Tree Inference Network

In the IDDTree , its key challenge is how to quickly infer its parameters when only few labeled samples are available. To address the challenge, we regard the class hierarchy as inputs and then design a graph convolution-based inference network to learn the map from class hierarchy to the parameters . The intuition behind this design is fully leveraging human’s prior knowledge to enable fast adaptation of decision trees.

The overall structure is shown in Figure 4, which consists of an input layer, a hidden layer, and an output layer. The input and hidden layers aim to obtain a good representation for each graph node by exploiting abundant semantic information and hierarchy relations between classes. Then, the parameters of IDDTree are predicted by the final output layer. The overall process can be defined as:

| (9) |

where , , and denote a trainable weight matrix for input, hidden and output layers, respectively, i.e., ; is the normalized , i.e., ; is the degree matrix, i.e., ; and denotes the ReLU activation function.

| Method | Using Knowledge | Backbone | miniImagenet | CIFAR-FS | ||

| 5-way 1-shot | 5-way 5-shot | 5-way 1-shot | 5-way 5-shot | |||

| CGCS [15] | No | ResNet12 | ||||

| RCNet [48] | No | ResNet12 | ||||

| ALFA [3] | No | ResNet12 | - | - | ||

| MeTAL [2] | No | ResNet12 | ||||

| CRF-GNN [37] | No | ConvNet256 | 57.89 0.87 | 73.58 0.87 | 76.45 0.99 | 88.42 0.23 |

| Neg-Cosine [24] | No | ResNet12 | 81.57 0.56% | - | - | |

| RFS [38] | No | ResNet12 | 79.64 0.44% | |||

| InvEq [30] | No | ResNet12 | ||||

| TriNet [10] | Yes | ResNet18 | - | - | ||

| AM3-PNet [46] | Yes | ResNet12 | - | - | ||

| AM3-TRAML [22] | Yes | ResNet12 | 67.10 0.52 | 79.54 0.60% | - | - |

| FSLKT [27] | Yes | ConvNet128 | - | - | ||

| MetaDT | Yes | ResNet12 | 69.08 0.73 | 83.40 0.51 | 79.03 0.75 | 88.50 0.58 |

| MetaDT + Cosine Classifier | Yes | ResNet12 | 70.45 0.81 | 84.84 0.52 | 80.14 0.78 | 89.84 0.56 |

5 Enhancement: MetaDT + Cosine Classifier

Till now, we have introduced all details of our MetaDT. Its advantage is that the decision process of prediction is interpretable resorting to the priors of class hierarchy. In this section, we introduce how to fuse our MetaDT and the existing cosine classifier [9] for better performance.

Intuitively, the two methods are complementary to each other: 1) when the labeled samples are very scarce (e.g., =1), our MetaDT is more reliable because it fully exploits the priors of class hierarchy which effectively alleviates the data sparsity issue; and 2) as more and more labeled samples become available, the data sparsity issue gradually disappears and the existing cosine classifier becomes more effective. Thus, we propose to fuse the two methods in class probability via a convex combination manner. That is,

| (10) |

where , , and denote the class probability of our MetaDT, the cosine classifier, and after fusion, respectively; is a weight parameter. Finally, we perform the class prediction of query sample by using fused probability . The fusion strategy is only used in meta-test. In real applications, the “MetaDT + Cosine Classifier” can be used when we merely go for better performance; otherwise our MetaDT would be a good choice for FSL, which not only delivers promising performance but also has clear interpretability.

6 Experiments

6.1 Datasets and Settings

miniImagenet. The dataset is a subset from ImageNet, containing 100 classes. Following [40], we split it into 64, 16, and 20 classes for training, validation, and test, respectively. Besides, the tree-like class hierarchy is constructed from WordNet by using the relation of “hypernyms()”.

CIFAR-FS. The dataset is built from CIFAR100, including 100 classes. Following [5], we split the data set into 64 classes for training, 16 classes for validation, and 20 classes for test, respectively. Similarly, its tree-like class hierarchy is also acquired from WordNet via its class names.

tieredImagenet. The dataset is a more challenging FSL dataset constructed from ImageNet, including 608 classes. Following [29], we partition the dataset into 34 high-level classes, and then split it into 20 classes for training, 6 classes for validation, and 8 classes for test. Similarly, its class hierarchy is also extracted from WordNet.

6.2 Implementation Details

Architecture. We employ the ResNet12 trained following previous work [30] as our feature extractor. For DTINet, we use a three-layer graph convolution with a 300-dimensional input, a 1024-dimensional input layer, a 2048-dimensional hidden layer, and a 640-dimensional output layer to infer the IDDTree. In each layer, ReLU is used as the activation function except for the output layer, and a dropout layer with probability of 0.5 is used for better generalization.

Training and Test Details. In meta-training phase, we train our meta-learner with 20 epochs by using an Adam optimizer with a weight decay of 0.0005 and a learning rate of 0.0001. The update steps and learning rate of inner-loop optimization is set to 25 and 0.05, respectively. In meta-test phase, the learning rate remains unchanged, and the update step is changed to 50, 70, and 150 on miniImagenet, CIFAR-FS, and tieredImagenet datasets, respectively. For the weight parameter , we set it to 0.8 and 0.1 for 1-shot and 5-shot tasks, respectively.

Evaluation. We evaluate our methods on 600 5-way 1-shot/5-shot tasks randomly sampled from the test set. Lastly, the mean accuracy together with the 95% confidence interval is reported as the evaluation results.

6.3 Experimental Results

| Method | Backbobe | tieredImagenet | |

|---|---|---|---|

| 5-way 1-shot | 5-way 5-shot | ||

| CGCS [15] | ResNet12 | ||

| ALFA [3] | ResNet12 | ||

| MeTAL [2] | ResNet12 | ||

| CRF-GNN [37] | ConvNet256 | 58.45 0.59 | 74.58 0.84 |

| RFS [38] | ResNet12 | 84.41 0.55% | |

| InvEq [30] | ResNet12 | ||

| AM3-PNet [46] | ResNet12 | ||

| MetaDT | ResNet12 | 70.56 0.90 | 85.17 0.56 |

| MetaDT + CC | ResNet12 | 72.69 0.90 | 87.10 0.56 |

| Types | miniImagenet | CIFAR-FS | tieredImagenet |

|---|---|---|---|

| Seen Superclasses | 97.30% | 85% | 49.60% |

| Unseen Superclasses | 2.70% | 15.00% | 50.40% |

Results on miniImagenet and CIFAR-FS. Table 1 shows the experimental results of various methods on miniImagenet and CIFAR-FS. From these results, we observe that our “MetaDT + Cosine Classifier” exceeds these state-of-the-art methods by around 1% 4%, which shows the superiority of our method on FSL. In addition, it can be found that our MetaDT achieves superior performance over existing methods by around 2% 3% on 1-shot tasks, as well as comparable performance on 5-shot tasks. Note that our MetaDT is decision-interpretable. Specifically, compared with these methods without exploiting knowledge, our MetaDT additionally explores the priors of class hierarchy, and mainly focuses on leveraging these priors to quickly infer a decision tree. The results validate the effectiveness of our decision tree classifier. It is worth noting that RCNet [48] is also developed for interpretable FSL, which is a strong competitor. Our MetaDT significantly outperforms RCNet by a large margin by 6% 12%. This further shows the superiority of our MetaDT for interpretable FSL.

As for the FSL methods using knowledge, they also explore the semantic knowledge as priors for improving FSL but mainly use the nearest neighbor classifier. Different from these methods, our MetaDT leverages the priors of class hierarchy to quickly infer a task-specific decision tree for interpretable FSL. The results demonstrate the effectiveness of our MetaDT. Note that our MetaDT exceeds FSLKT around 6% 10%, which also utilizes the priors of class hierarchy. Different from FSLKT, our MetaDT regards the class hierarchy as a decision tree, so that more superclasses can be exploited for novel class prediction. The results show the superiority of our MetaDT to leverage class hierarchy.

Results on tieredImagenet. In Table 2, we report the classification results of our methods and various baseline methods on tieredImagenet. We find that our “MetaDT + Cosine Classifier” achieves superior performance over state-of-the-art methods. In addition, our MetaDT achieves competitive performance with some state-of-the-art methods. It is worth noting that the performance improvement of our MetaDT on tieredImagenet is limited, which is inconsistent with the one of miniImagenet and CIFAR-FS. This may be reasonable because the tieredImagenet dataset is split by following the high-level semantic classes. As a result, some superclasses are unseen in our meta-training phase, which impedes the knowledge transfer of our MetaDT.

To illustrate this point, we calculate the percentage of seen and unseen superclasses on miniImagenet, CIFAR-FS, and tieredImagenet, in Table 3. From the results, we indeed find that the percentage of unseen superclasses of tieredImagenet is much larger than the one of miniImagenet and CIFAR-FS. This means the meta knowledge learned from the base classes is difficult to transfer to the novel classes. For the issue, we will leave it to future work.

6.4 Ablation Study

We conduct various experiments on miniImagenet to investigate the impacts of different components.

| Settings | 5-way 1-shot | 5-way 5-shot | |

|---|---|---|---|

| (i) | MetaDT | ||

| (ii) | w/o Class Semantic | ||

| (iii) | w/o Graph Convolution | ||

| (iv) | w/o DTINet | ||

| (v) | w/o Fast Adaptation |

| Settings | 5-way 1-shot | 5-way 5-shot | |

|---|---|---|---|

| (i) | MetaDT | ||

| Cosine Classifier | |||

| (ii) | MetaDT + Cosine Classifier |

How do different components affect our MetaDT? We conduct various ablation studies to analyze the contribution of different components, including class semantic, class hierarchy, graph convolution, DTINet, and fast adaptation. Specifically, (i) we evaluate our MetaDT on 5-way 1/5-shot tasks; (ii) we remove the semantic vectors and replace it by using one-hot vectors on (i); (iii) we replace the three-layer graph convolution by using a three-layer fully-connected network on (i); (iv) we remove the DTINet on (i) and estimate the parameters of IDDTree in an average manner on mean-based prototypes by following the class hierarchy relations; and (v) we remove the fast adaptation of our meta-learner on (i). These results are shown in Table 4. From these results, we can see that the classification performance decreases by around 2% 16% when removing these components respectively. This suggests that employing these four key components is beneficial for our MetaDT.

Is the fusion of our MetaDT and cosine classifier effective? In Table 5, we analyze the effectiveness of the fusion strategy described in Section 5. Specifically, (i) we evaluate our MetaDT and cosine classifier, respectively; (ii) following Eq. 10, we fuse our MetaDT with cosine classifier. From the results, we find that the performance of our “MetaDT + Cosine Classifier” exceeds our MetaDT and the cosine classifier by around 1% 4%. This shows the superiority of the proposed fusion strategy on improving FSL performance.

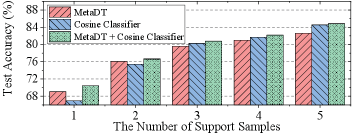

How does our method perform under different numbers of support samples? In Figure 5, we count the performance of our MetaDT, cosine classifier, and our “MetaDT + Cosine Classifier” on different numbers of support samples. We find that 1) our MetaDT is more reliable on 1/2-shot tasks while the cosine classifier is more effective on 3/4/5-shot tasks; 2) our “MetaDT + Cosine Classifier” performs best. This verifies that our MetaDT and the cosine classifier indeed remedy the shortcomings of each other.

6.5 Interpretability and Visualization Analysis

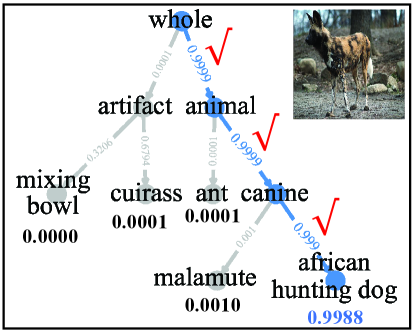

Is the decision of our MetaDT interpretable? To show the decision interpretability of our MetaDT, we conduct two 5-way 1-shot case studies on miniImagenet, including a right and a wrong decision case. Specifically, we randomly select a 5-way 1-shot task from test set. Then we construct and learn a four-layer decision tree by using only one labeled sample. After that, we randomly select an image that is rightly predicted and an image that is wrongly predicted as an example to illustrate the interpretable decision process, which are shown in Figure 6. From Figure 6(a), we can see that our decision tree makes right decision in entire sequential decision path for the image of “african hunting dog”, where all decisions have real meaning and fit our intuition. Besides, we note that such interpretable decison process can also help us trace and locate the root reason of making such wrong decisions. As shown in Figure 6(b), the reason causing the image of “malamute” is misidentified is that the image is misclassified as “african hunting dog” when performing the decision of “canine”.

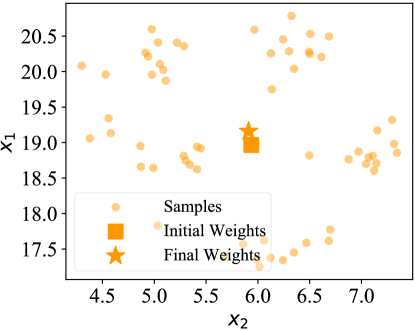

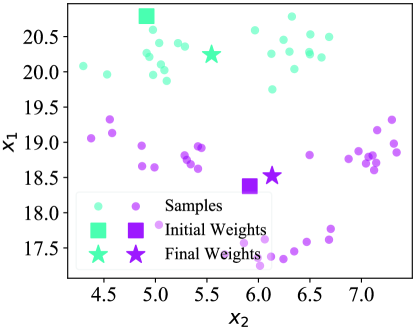

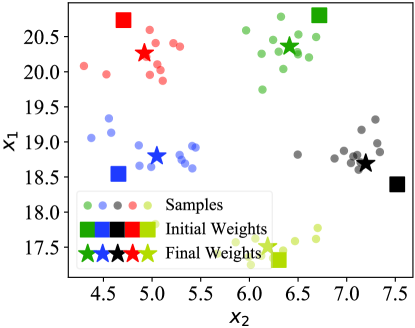

How does our MetaDT work? In Figures 7(a), 7(b), and 7(c), we visualize the weights of decision tree on a 5-way 1-shot task of miniImagenet. Here, the weights of decision tree before and after fast adaptation are marked by the squares and stars, respectively. Besides, the structure of decison tree is shown in Figure 7(d). We find that the final weights (i.e., final prototypes) marked by stars are much closer to the centers of few-shot classes/superclass after fast adaptation. This visualization indicates that our MetaDT effectively learns the class hierarchy and infers the task-specific decision tree.

7 Conclusion

In this paper, we propose a novel decision tree-based meta-learning framework for interpretable few-shot learning, called MetaDT. In particular, we replace the black-box FSL classifier with an interpretable decision tree. In addition, a novel decision tree inference network and a two-loop optimization mechanism is designed, respectively, for a fast adaptation of decision tree. Extensive experiments and interpretability analyses show our MetaDT is effective and provides interpretability for novel class predictions.

References

- [1] Han Altae-Tran, Bharath Ramsundar, Aneesh S Pappu, and Vijay Pande. Low data drug discovery with one-shot learning. ACS central science, 3(4):283–293, 2017.

- [2] Sungyong Baik, Janghoon Choi, Heewon Kim, Dohee Cho, Jaesik Min, and Kyoung Mu Lee. Meta-learning with task-adaptive loss function for few-shot learning. In ICCV, pages 9465–9474, 2021.

- [3] Sungyong Baik, Myungsub Choi, Janghoon Choi, Heewon Kim, and Kyoung Mu Lee. Meta-learning with adaptive hyperparameters. In NeurIPS, 2020.

- [4] Antonio Jesús Banegas-Luna, Jorge Peña-García, Adrian Iftene, Fiorella Guadagni, Patrizia Ferroni, Noemi Scarpato, Fabio Massimo Zanzotto, Andrés Bueno-Crespo, and Horacio Pérez-Sánchez. Towards the interpretability of machine learning predictions for medical applications targeting personalised therapies: A cancer case survey. International Journal of Molecular Sciences, 22(9):4394, 2021.

- [5] Luca Bertinetto, João F. Henriques, Philip H. S. Torr, and Andrea Vedaldi. Meta-learning with differentiable closed-form solvers. In ICLR, 2019.

- [6] Kaidi Cao, Maria Brbic, and Jure Leskovec. Concept learners for few-shot learning. In ICLR, 2021.

- [7] Diogo V Carvalho, Eduardo M Pereira, and Jaime S Cardoso. Machine learning interpretability: A survey on methods and metrics. Electronics, 8(8):832, 2019.

- [8] Wei-Yu Chen, Yen-Cheng Liu, Zsolt Kira, Yu-Chiang Frank Wang, and Jia-Bin Huang. A closer look at few-shot classification. In ICLR, 2019.

- [9] Yinbo Chen, Xiaolong Wang, Zhuang Liu, Huijuan Xu, Trevor Darrell, et al. A new meta-baseline for few-shot learning. In ICML, 2020.

- [10] Zitian Chen, Yanwei Fu, Yinda Zhang, Yu-Gang Jiang, Xiangyang Xue, and Leonid Sigal. Multi-level semantic feature augmentation for one-shot learning. IEEE Trans. Image Process., 28(9):4594–4605, 2019.

- [11] Zhengyu Chen, Jixie Ge, Heshen Zhan, Siteng Huang, and Donglin Wang. Pareto self-supervised training for few-shot learning. In CVPR, pages 13663–13672, 2021.

- [12] Mohamed Elhoseiny and Mohamed Elfeki. Creativity inspired zero-shot learning. In ICCV, pages 5784–5793, 2019.

- [13] Chelsea Finn, Pieter Abbeel, Sergey Levine, et al. Model-agnostic meta-learning for fast adaptation of deep networks. In ICML, pages 1126–1135, 2017.

- [14] Andrea Frome, Gregory S. Corrado, Jonathon Shlens, Samy Bengio, Jeffrey Dean, Marc’Aurelio Ranzato, and Tomas Mikolov. Devise: A deep visual-semantic embedding model. In NeurIPS, pages 2121–2129, 2013.

- [15] Zhi Gao, Yuwei Wu, Yunde Jia, and Mehrtash Harandi. Curvature generation in curved spaces for few-shot learning. In ICCV, pages 8691–8700, 2021.

- [16] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition. In CVPR, pages 770–778, 2016.

- [17] Michael Kampffmeyer, Yinbo Chen, Xiaodan Liang, Hao Wang, Yujia Zhang, and Eric P Xing. Rethinking knowledge graph propagation for zero-shot learning. In CVPR, pages 11487–11496, 2019.

- [18] Peter Kontschieder, Madalina Fiterau, Antonio Criminisi, and Samuel Rota Bulo. Deep neural decision forests. In ICCV, pages 1467–1475, 2015.

- [19] Peter Kontschieder, Madalina Fiterau, Antonio Criminisi, and Samuel Rota Bulò. Deep neural decision forests. In IJCAI, pages 4190–4194, 2016.

- [20] D Lavanya and K Usha Rani. Ensemble decision tree classifier for breast cancer data. International Journal of Information Technology Convergence and Services, 2(1):17–24, 2012.

- [21] Kwonjoon Lee, Subhransu Maji, Avinash Ravichandran, and Stefano Soatto. Meta-learning with differentiable convex optimization. In CVPR, pages 10657–10665, 2019.

- [22] Aoxue Li, Weiran Huang, Xu Lan, Jiashi Feng, Zhenguo Li, and Liwei Wang. Boosting few-shot learning with adaptive margin loss. In CVPR, pages 12576–12584, 2020.

- [23] Wenbin Li, Jinglin Xu, Jing Huo, Lei Wang, Yang Gao, and Jiebo Luo. Distribution consistency based covariance metric networks for few-shot learning. In AAAI, pages 8642–8649, 2019.

- [24] Bin Liu, Yue Cao, Yutong Lin, Qi Li, Zheng Zhang, Mingsheng Long, and Han Hu. Negative margin matters: Understanding margin in few-shot classification. In ECCV, pages 438–455, 2020.

- [25] Puneet Mangla, Mayank Singh, Abhishek Sinha, Nupur Kumari, Vineeth N. Balasubramanian, and Balaji Krishnamurthy. Charting the right manifold: Manifold mixup for few-shot learning. In WACV, pages 2207–2216, 2020.

- [26] George A Miller. WordNet: An electronic lexical database. MIT press, 1998.

- [27] Zhimao Peng, Zechao Li, Junge Zhang, Yan Li, Guo-Jun Qi, and Jinhui Tang. Few-shot image recognition with knowledge transfer. In ICCV, pages 441–449, 2019.

- [28] Jeffrey Pennington, Richard Socher, and Christopher Manning. Glove: Global vectors for word representation. In EMNLP, pages 1532–1543, 2014.

- [29] Mengye Ren, Eleni Triantafillou, Sachin Ravi, Jake Snell, Kevin Swersky, Joshua B. Tenenbaum, Hugo Larochelle, and Richard S. Zemel. Meta-learning for semi-supervised few-shot classification. In ICLR, 2019.

- [30] Mamshad Nayeem Rizve, Salman Khan, Fahad Shahbaz Khan, and Mubarak Shah. Exploring complementary strengths of invariant and equivariant representations for few-shot learning. In CVPR, pages 10836–10846, 2021.

- [31] Pau Rodríguez, Issam Laradji, Alexandre Drouin, and Alexandre Lacoste. Embedding propagation: Smoother manifold for few-shot classification. In ECCV, 2020.

- [32] Andrei A Rusu, Dushyant Rao, Jakub Sygnowski, Oriol Vinyals, Razvan Pascanu, Simon Osindero, and Raia Hadsell. Meta-learning with latent embedding optimization. In ICLR, 2018.

- [33] S Rasoul Safavian and David Landgrebe. A survey of decision tree classifier methodology. IEEE transactions on systems, man, and cybernetics, 21(3):660–674, 1991.

- [34] Victor Garcia Satorras and Joan Bruna Estrach. Few-shot learning with graph neural networks. In ICLR, 2018.

- [35] Eli Schwartz, Leonid Karlinsky, Rogério Schmidt Feris, Raja Giryes, and Alexander M. Bronstein. Baby steps towards few-shot learning with multiple semantics. CoRR, abs/1906.01905, 2019.

- [36] Jake Snell, Kevin Swersky, Richard Zemel, et al. Prototypical networks for few-shot learning. In NeurIPS, pages 4077–4087, 2017.

- [37] Shixiang Tang, Dapeng Chen, Lei Bai, Kaijian Liu, Yixiao Ge, and Wanli Ouyang. Mutual crf-gnn for few-shot learning. In CVPR, pages 2329–2339, 2021.

- [38] Yonglong Tian, Yue Wang, Dilip Krishnan, Joshua B Tenenbaum, and Phillip Isola. Rethinking few-shot image classification: a good embedding is all you need? In ECCV, pages 266–282, 2020.

- [39] Manasi Vartak, Arvind Thiagarajan, Conrado Miranda, Jeshua Bratman, and Hugo Larochelle. A meta-learning perspective on cold-start recommendations for items. In NeurIPS, pages 6904–6914, 2017.

- [40] Oriol Vinyals, Charles Blundell, Timothy Lillicrap, Daan Wierstra, et al. Matching networks for one shot learning. In NeurIPS, pages 3630–3638, 2016.

- [41] Alvin Wan, Lisa Dunlap, Daniel Ho, Jihan Yin, Scott Lee, Henry Jin, Suzanne Petryk, Sarah Adel Bargal, and Joseph E. Gonzalez. Nbdt: Neural-backed decision trees. In ICLR, 2021.

- [42] Ziyu Wan, Dongdong Chen, Yan Li, Xingguang Yan, Junge Zhang, Yizhou Yu, and Jing Liao. Transductive zero-shot learning with visual structure constraint. In NeurIPS, pages 9972–9982, 2019.

- [43] Bowen Wang, Liangzhi Li, Manisha Verma, Yuta Nakashima, Ryo Kawasaki, and Hajime Nagahara. Mtunet: Few-shot image classification with visual explanations. In CVPR Workshops, pages 2294–2298, 2021.

- [44] Yaqing Wang, Quanming Yao, James T. Kwok, and Lionel M. Ni. Generalizing from a few examples: A survey on few-shot learning. ACM Comput. Surv., 53(3):63:1–63:34, 2020.

- [45] Davis Wertheimer, Luming Tang, and Bharath Hariharan. Few-shot classification with feature map reconstruction networks. In CVPR, pages 8012–8021, 2021.

- [46] Chen Xing, Negar Rostamzadeh, Boris N Oreshkin, and Pedro O Pinheiro. Adaptive cross-modal few-shot learning. In NeurIPS, pages 4848–4858, 2019.

- [47] Chengming Xu, Yanwei Fu, Chen Liu, Chengjie Wang, Jilin Li, Feiyue Huang, Li Zhang, and Xiangyang Xue. Learning dynamic alignment via meta-filter for few-shot learning. In CVPR, pages 5182–5191, 2021.

- [48] Zhiyu Xue, Lixin Duan, Wen Li, Lin Chen, and Jiebo Luo. Region comparison network for interpretable few-shot image classification. arXiv preprint arXiv:2009.03558, 2020.

- [49] Bin-Bin Yang, Song-Qing Shen, and Wei Gao. Weighted oblique decision trees. In AAAI, pages 5621–5627, 2019.

- [50] Ling Yang, Liangliang Li, Zilun Zhang, Xinyu Zhou, Erjin Zhou, and Yu Liu. DPGN: distribution propagation graph network for few-shot learning. In CVPR, pages 13390–13399, 2020.

- [51] Yongxin Yang, Irene Garcia Morillo, and Timothy M Hospedales. Deep neural decision trees. arXiv preprint arXiv:1806.06988, 2018.

- [52] Han-Jia Ye, Hexiang Hu, De-Chuan Zhan, and Fei Sha. Few-shot learning via embedding adaptation with set-to-set functions. In CVPR, pages 8805–8814, 2020.

- [53] Baoquan Zhang, Xutao Li, Yunming Ye, Shanshan Feng, and Rui Ye. Metanode: Prototype optimization as a neural ode for few-shot learning. arXiv preprint arXiv:2103.14341, 2021.

- [54] Baoquan Zhang, Xutao Li, Yunming Ye, Zhichao Huang, and Lisai Zhang. Prototype completion with primitive knowledge for few-shot learning. In CVPR, pages 3754–3762, 2021.

- [55] Chi Zhang, Yujun Cai, Guosheng Lin, and Chunhua Shen. Deepemd: Few-shot image classification with differentiable earth mover’s distance and structured classifiers. In CVPR, pages 12203–12213, 2020.

- [56] Jian Zhang, Chenglong Zhao, Bingbing Ni, Minghao Xu, and Xiaokang Yang. Variational few-shot learning. In ICCV, pages 1685–1694, 2019.