Measurement Based Feedback Quantum Control With Deep Reinforcement Learning for a Double-well Non-linear Potential

Abstract

Closed loop quantum control uses measurement to control the dynamics of a quantum system to achieve either a desired target state or target dynamics. In the case when the quantum Hamiltonian is quadratic in and , there are known optimal control techniques to drive the dynamics towards particular states e.g. the ground state. However, for nonlinear Hamiltonians such control techniques often fail. We apply Deep Reinforcement Learning (DRL), where an artificial neural agent explores and learns to control the quantum evolution of a highly non-linear system (double well), driving the system towards the ground state with high fidelity. We consider a DRL strategy which is particularly motivated by experiment where the quantum system is continuously but weakly measured. This measurement is then fed back to the neural agent and used for training. We show that the DRL can effectively learn counter-intuitive strategies to cool the system to a nearly-pure ‘cat’ state which has a high overlap fidelity with the true ground state.

As the research on quantum communication and computation has progressed rapidly with the goal of achieving the holy grail of quantum computing, quantum state engineering has begun to take on a high profile Verstraete et al. (2009); Motta et al. (2020); Love (2020). Of particular importance are feedback control techniques, in which a physical system subjected to noise is continuously monitored in real time while using measurement information to impart specific driving controls to modulate the system dynamics Wiseman and Milburn (2009). Unlike classical systems, measurement control of a quantum mechanical system is challenging for a number of reasons. Firstly, the act of continuously observing a quantum system introduces non-linearity within the conditioned dynamics. Secondly, continuous measurement on a quantum system generally alters it, generating measurement-induced noisy dynamics, commonly known as quantum back-action. Finally, applying feedback which is dependent on the noisy measurement current adds further noise into the dynamics. Consequently, a variety of feedback control schemes that work well for classical systems may not for the analogous quantum counterparts Jacobs and Steck (2006); Zhang et al. (2017); Wiseman and Milburn (2009).

In recent years, machine learning (ML) has rapidly gained interest, leading to numerous technological advancements in machine vision, voice recognition, natural language processing, automatic handwriting recognition, gaming, and engineering and robotics, to name a few. Goodfellow et al. (2016) Various ML models, broadly fall into three categories: supervised learning, unsupervised learning and reinforcement learning (RL) Goodfellow et al. (2016); Mnih et al. (2015); Sutton and Barto (2018). For supervised/unsupervised methods, the ML model is provided with enough labeled/unlabeled datasets to be trained on, which it uses for discovering the predictive hidden features in the system of interest. On the other hand, RL approaches the problem differently and is not pre-trained with any external data explicitly, but learns in real-time based on rewards. Indeed, RL is regarded as the most effective way to benefit from the creativity of machines, where it collects experiences by performing random experiments on the system (known as the environment in RL literature), learning by trial and error. RL, specially in combination with deep neural networks, abbreviated as DRL, is poised to revolutionize the field of artificial intelligence (AI), particularly with the emergence of autonomous systems which process, in real time, stimuli from real world environments Li (2019).

There have already been several important applications of ML in different areas of physics, such as in statistical mechanics, many-body systems, fluid dynamics, and quantum mechanics Carleo et al. (2019); Brunton et al. (2020); Carrasquilla and Melko (2017); Dunjko and Briegel (2018); Mehta et al. (2019). Most of these applications are supervised in nature, e.g., in the quantum domain, these have been applied to solving the many-body systems Carleo and Troyer (2017), in the determination of high-fidelity gates and the optimization of quantum memories by dynamic decoupling August and Ni (2017), quantum error corrections Baireuther et al. (2018); Torlai and Melko (2017); Krastanov and Jiang (2017), quantum state tomography Torlai et al. (2018); Neugebauer et al. (2020); Lohani et al. (2020); Ahmed et al. (2020a); Palmieri et al. (2020), classification and reconstruction of optical quantum states Ahmed et al. (2020b). Recently, a few applications of DRL in quantum mechanics have also appeared that includes applications in quantum control Wang et al. (2020); Niu et al. (2019); Zhang et al. (2019a); Xu et al. (2021), quantum state preparation and engineering Zhang et al. (2019b); Mackeprang et al. (2020); Haug et al. (2020); Guo et al. (2021); Bilkis et al. (2020), state transfer Porotti et al. (2019); Ding et al. (2021); Paparelle et al. (2020), and quantum error correction Fösel et al. (2018); Nautrup et al. (2019). While the number of works utilising DRL is increasing, a very few consider using continuous measurement outcomes explicitly towards training the DRL agent Fösel et al. (2018); Wang et al. (2020); Mackeprang et al. (2020). As experiments often employ such continuous quantum measurement techniques for feedback control, we will consider this type of measurement as a key ingredient of our analysis below.

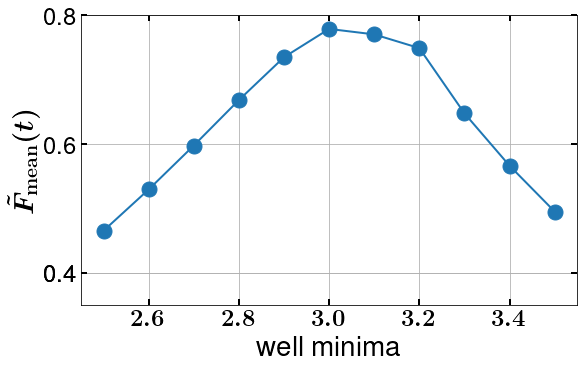

While traditionally known optimal control techniques work very well for linear, unitary and deterministic quantum systems, there is no known generalised method for non-linear and stochastic systems. RL, on the contrary, is agnostic to the underlying physical model, but attempts to control the dynamics of the system by finding patterns from the data produced by it. In this Letter, we model the quantum evolution of a particle in a double well (DW) subject to continuous measurement at a rate of the operator , whose even parity avoids measurement localisation of the particle’s wavefunction to either well Jacobs et al. (2009). The DRL agent controls the quantum dynamics via a modulation of the Hamiltonian , with , where and are (dimensionless) canonical operators – a squeezing operator, whose strength , is modulated by the DRL agent. The DRL agent is trained via the continuous measurement current, while, in real time acts back on the system via . We show that the DRL agent can be trained to cool the particle close to the ground state. Interestingly, the cooling efficiency depends on the choice of , for which there is an optimal value of to achieve the best cooling which we identify numerically.

RL translates a problem at hand into a game-like situation in which an artificial agent (also called the controller), finds a solution to the problem based on a trial-and-error approach Sutton and Barto (2018); Mnih et al. (2015), with no hints or suggestions on how to solve the problem itself. For this purpose, the agent is given a policy (in the case of DRL, it is the neural network itself), which is optimized based on some scalar values (rewards) it receives from the environment (that includes the physics of the problem and the reward estimation function based on the observables) for each decision (action) made by it. By harnessing the power of search, coupled with many trials, the RL will gain experience from thousands of instances executed sequentially or in parallel in a sufficiently powerful computing infrastructure. After sufficient training, the agent can become skilled enough to have sophisticated tactics and superhuman abilities as was phenomenally demonstrated by Google’s AlphaGO Silver et al. (2016, 2017). To give a perspective on the applicability of RL in physics and the kinds of tasks it can solve, we provide a short demonstration to a problem in elementary mechanics which we include as media file in the supporting information.

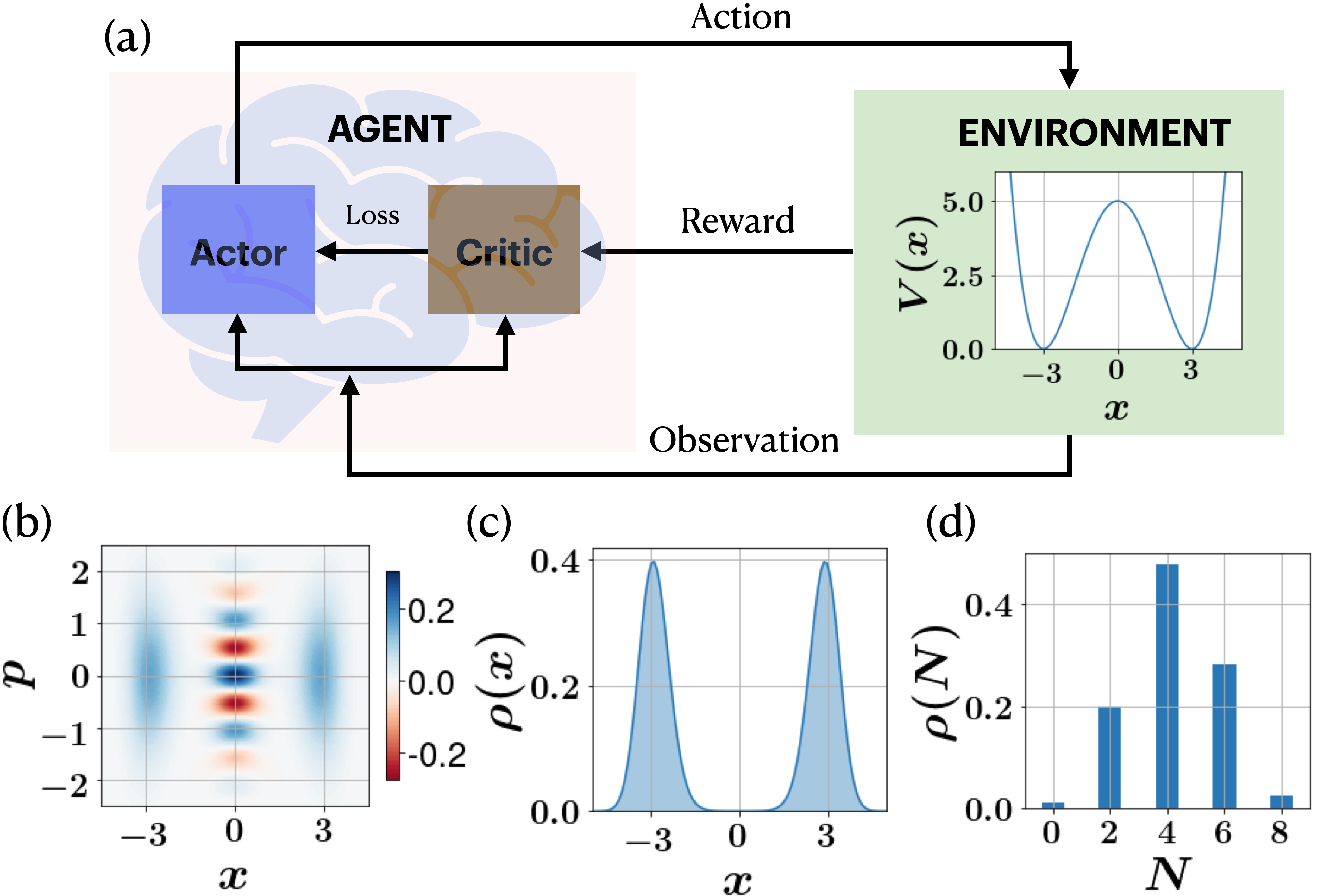

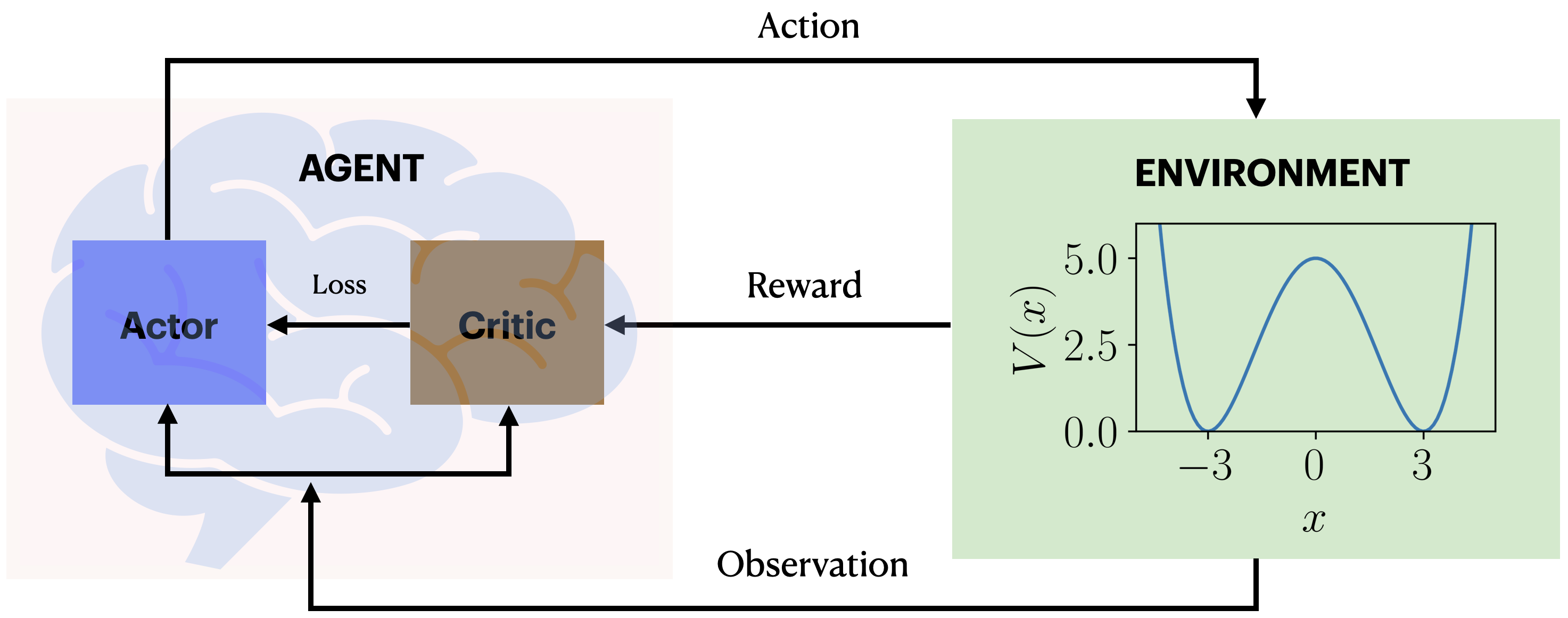

It is possible to implement the DRL agent according to two distinct frameworks, a policy-based or value-based framework Sutton and Barto (2018). In policy-based frameworks, the policy parameters—the weights and biases of the neural network—are optimized directly based on the rewards it receives and informs its future actions on the environment (see Fig. 1(a)). Value-based methods on the other hand, optimize the expected future return of a given value function, and deduce the policy from it Mnih et al. (2015). It is possible to achieve the best of both these worlds by combining these two approaches in a meaningful way, known as Actor-Critic (AC) methods Sutton and Barto (2018). Here the actor is the policy which is being optimised and the critic is the value function which is being learned. The actor network can be modeled using various policy-based approaches such as Vanilla Policy Gradient Sutton and Barto (2018), Trust Region Policy Optimization (TRPO) Schulman et al. (2015), or the more recent Proximal Policy Optimization (PPO) Schulman et al. (2017). In our work, we used PPO in combination with Advantage Actor-Critic (A2C) Mnih et al. (2016) as a DRL agent. In the PPO scheme, it optimizes a clipped surrogate objective/loss function given by,

| (1) |

where, is the probability ratio between current and old stochastic policies, so . Furthermore, is the empirical average over a finite batch of samples and is an estimator of the advantage function at timestep , obtained from the critic, and calculated as the difference between the Q-value for action at the state and its average value, : . Clipping the ratio within a bound specified by ensures that the policy is not updated too much. The A2C framework allows synchronous training of multiple parallel worker environments simultaneously, which enables faster training. A more detailed theory can be found in the Supplementary Materials. A depiction of the DRL model employed in this study is shown in Fig. 1(a).

In this article, we will work with dimensionless position and momentum denoted by . The relationship to the physical position and momentum variables is where are suitable scales for position and momentum. As the canonical commutation relations are ,thus , where the dimensionless Planck’s constant is defined by . The DW potential we consider is formed along the axis by the Hamiltonian of a particle

| (2) |

where gives the location of the well’s minima, is the height of the barrier between the wells, and is the offset along . The ground state of this potential is a type of Schrödinger cat state due to the even parity symmetry of in both and . The ground state can be depicted by the Wigner function , which is shown in Fig. 1(b). The probability distribution along the -axis i.e. is shown in Fig. 1(c). Furthermore, the ground state has even parity symmetry while the first excited state has odd parity Jelic and Marsiglio (2012). Hereafter, we will set the parameters , and , which sets the potential to be symmetric around the origin at . It is worthy to note that the DW potential can now be engineered in laboratories such as in superconducting circuits, Bose-Einstein condensates and magneto-optical setups Abdi et al. (2016).

To provide data to the agent we consider that the quantum system is subject to a continuous measurement process and these measurement results are provided to the agent in real time. This continuous measurement also induces back-action on the quantum system and noise on both the conditioned quantum dynamics and also on the observed measurement data. We can describe the dynamical evolution of the conditioned density operator , conditioned on a stochastic measurement record to time via a quantum stochastic master equation Wiseman and Milburn (2009) given by,

| (3) |

where is a hermitian observable operator under continuous measurement (known as the measurement operator), and and are super-operators given by,

| (4) | |||

| (5) |

where , denotes the anti-commutator. Furthermore, in Eq. 3 represents a Wiener increment. It has mean zero and variance equal to . The measurement result current, , are described by a classical stochastic process that satisfies an Ito stochastic differential equation (see supplementary information)

| (6) |

where is a gain with inverse units to that of the measurement operator , meaning has the units of frequency. For the context of the present work, we have and where is the measurement rate and quantifies the quality of the measurement (see supplementary information). As we have fixed units so that are dimensionless we can set .

To cool the system to the ground state (which is a ‘cat’ state) via continuous measurement, it is important to choose the stochastic operator, in Eq. 3 as instead of , as the latter would collapse the state to either of the two minima of the DW Jacobs et al. (2009). At each interaction, the agent adds a squeezing term to the Hamiltonian (Eq. 2), attempting to adjust the values of in the continuous range , to maximize the reward. The choice of such a feedback is motivated by the physics of the problem, which we explain in detail in the supporting information, backed by an analysis using Bayesian control driven by the conditional mean of the measurement record following the method of Stockton et al., Stockton et al. (2004). It is possible to implement type Hamiltonian terms via motion in a magnetic field Romero-Sánchez et al. (2018). In each episode of the training process the DRL interacts with the environment times, in intervals of , and each time applies an action to the environment. Further detail of the implementation and other technicalities of the DRL can be found in the supporting information.

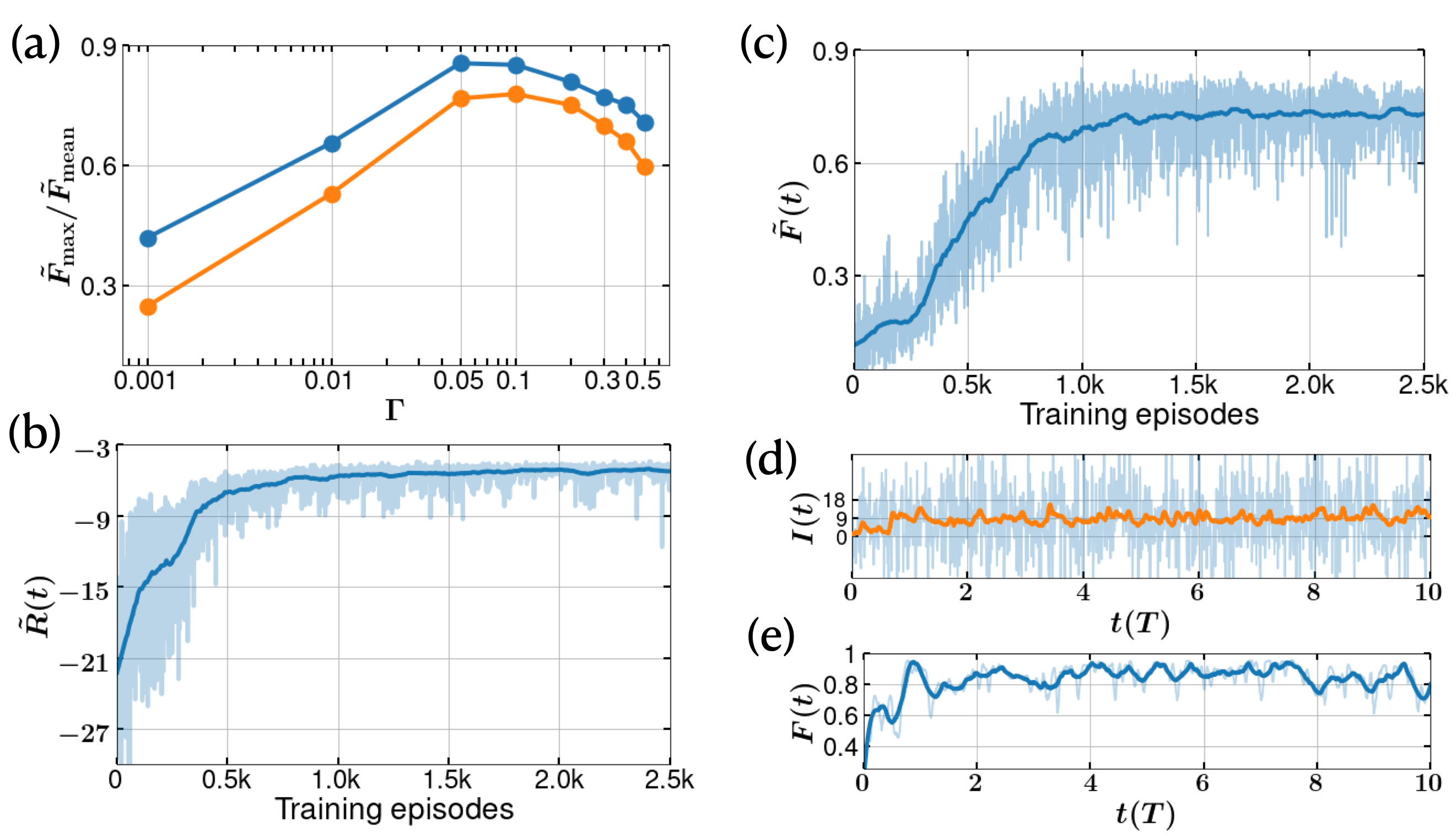

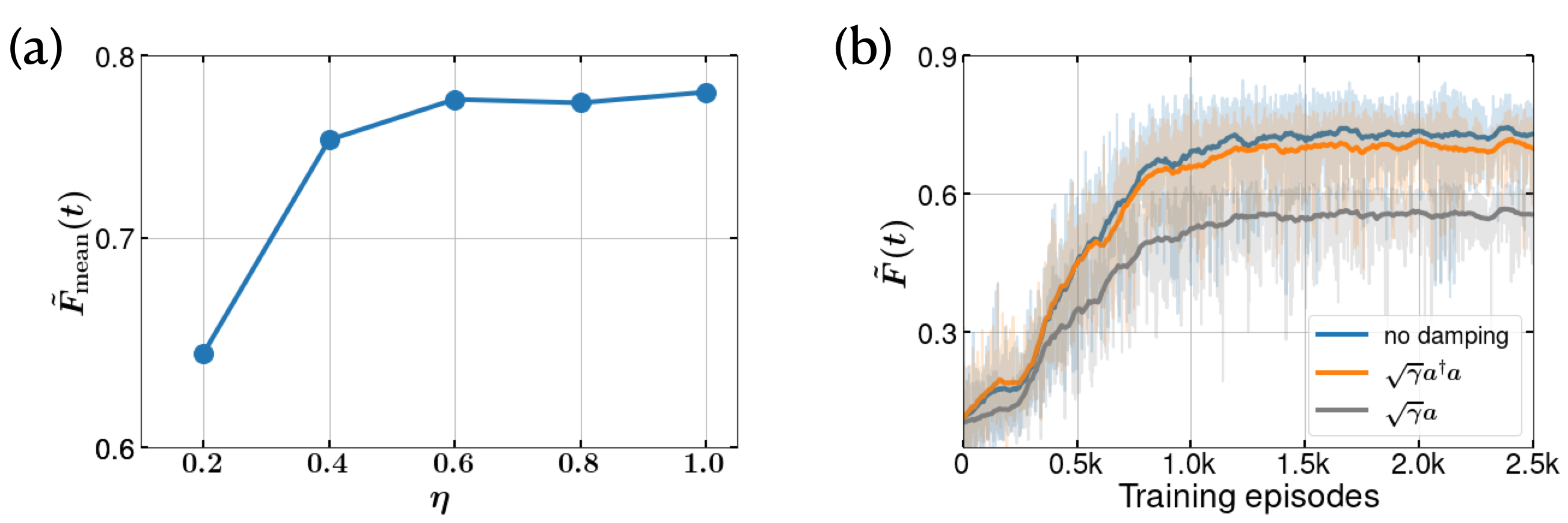

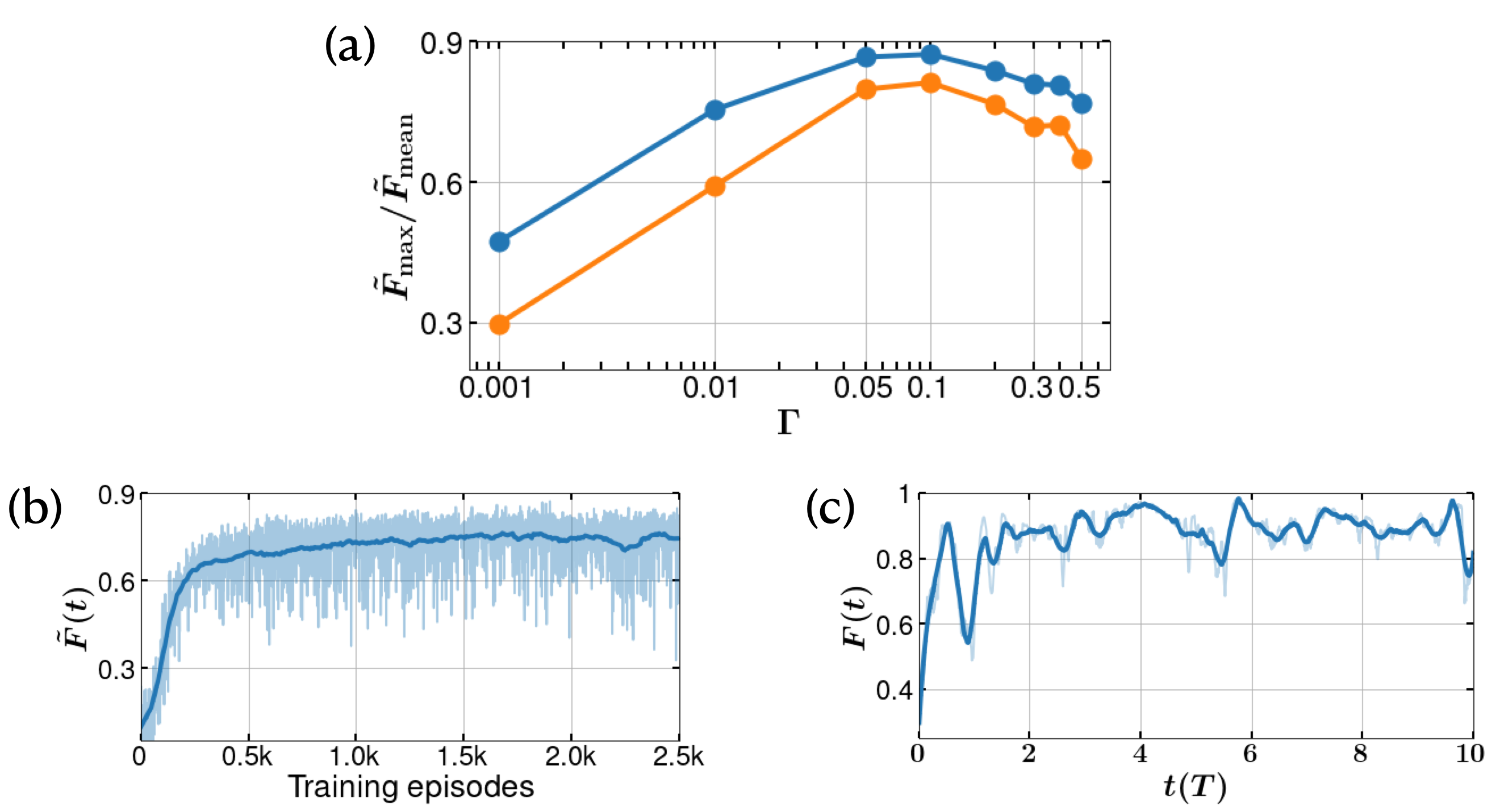

The amplitude of the measurement noise depends on two parameters - (a) the measurement strength, and (b) the measurement time, . Since the Wiener noise in Eq. 6 is a Gaussian with variance , the noise term in the measurement current , scales at least as . Because of this, one might expect the DRL to learn more efficiently for larger values of , however, this is not the case. This makes it critical to choose an optimal value for along with the measurement time . For a choice of , we observe that optimal learning occurs near , and worsens for other values of . Similar effects can be observed in Markovian measurement-based direct feedback which we discuss in the supplementary information. Larger values result in an increase in noise, while smaller values return a very low signal to noise ratio in the continuous measurement process. The agent tends to learn most efficiently when the dynamics fluctuate in a limited domain around the DW minima. The effectiveness of the agent learning is shown in Fig. 2(a), in terms mean and maximum fidelities from 10 successive deterministic episodes of the agents trained on the measurement current, .

An important ingredient in the DRL is a suitable choice for the reward function. Many research studies have previously used fidelity or energy as the reward function. However, such a function is not practically available in experiments. Instead we propose a measurement based reward function , where is the measurement current (Eq. 6). This function obtains its maximum reward of , when , at the well minima positions. The learning process of the agent is shown in Fig. 2(b), along with the fidelity of the instantaneous state of the system with respect to the DW ground state in Fig. 2(c). Although such a fidelity is not possible to evaluate experimentally in real-time, we present this as a check of the learning process. In a given episode the trained agent is able to adapt the feedback in such a way that the particle oscillates near the minima of the DW, as shown in Fig. 2(d) (for ). The corresponding variation of fidelity for the episode is shown in Fig. 2(e). It is worthy to note that with a different choice of the reward function it might be possible to obtain better and more stable learning, see supporting information for details.

At the beginning of each episode, the environment must be reset to an initial state (initial density matrix, ), which is needed to start the stochastic master equation solver. We have found that the choice of plays a crucial role in determining the total reward that can typically be achieved by the agent. When is a thermal or coherent state, the DRL converges to an average fidelity of about 60%. However, if we use a small cat state or the ground state of the DW itself, the agent is able to achieve a mean trained fidelity of over 80% with noisy measurement data. The parity of the initial state is crucial as the stochastic process of continuous measurement and feedback we have chosen is parity conserving. The target ground state of the DW however has even parity and so choosing an initial state with a component of odd-parity will lower the ultimate fidelity achievable. We achieve similar high performance if we start with a thermal state projected on to even parity, as done for Fig. 2. The explicit comparison of the performance of the trained agent with an untrained one is demonstrated in the supporting media.

We benchmark our results against the state-based Bayesian feedback protocol (where the feedback is based on an estimate of the state) as proposed by Doherty et al. Doherty and Jacobs (1999); Stockton et al. (2004). In the context of the present work, the protocol can be simplified to provide feedback of the form where denotes the conditional mean of the observable . We find that this Bayesian control achieves a mean fidelity of . However, is not a quantity directly accessible in real experiments. When the Bayesian feedback is instead driven by the noisy measurement current (which is available in experiments), we find that Bayesian feedback demonstrates almost no control over the dynamics. Numerical simulations with 1000 copies of the system (ensemble), evolving under a given feedback based on the mean of the measurement currents during each time step, yields an average fidelity of . This is considerably worse than the performance of the DRL agent. A more detailed discussion can be found in the supporting information.

We have found that the DRL shows a robust control when the measurement efficiency, , as shown in Fig. S5(a) of the supporting information implying that the the DRL-agent is able to find patterns in the underlying dynamics even when the stochasticity is significantly increased. Similarly, the DRL shows no significant drop of fidelity under additional dephasing of the form , but this fidelity does drop for damping , where is the decoherence rate (see supporting information).

On the computational side, the challenge for DRL control is the significant computational expense, e.g. 3-4 days of simulation time, even with fast computational CPUs, the bottleneck being the slow stochastic solver routines. For the RL side, we find that RL algorithms does not scale up linearly with the number of parallel processes, and thus improved parallelization in such RL computations could be worthwhile. We expect improved performance if the agent is made to learn in a dynamic combination of supervised (under a supervised setting a ML agent can learn more effectively from less data points, but is not reward based), and reinforcement learning (which is reward based and hence useful for feedback control), as done for image recognition Kangin and Pugeault (2018); Senft et al. (2017); Wang et al. (2018). It is possible to reshape the data to images of actions and measurement records which would enable the usage of convolution neural networks (CNN) in GPUs/TPUs with multi-core support and utilise different image compression techniques, e.g., deep compressed sensing technique, proposed recently by researchers from DeepMind Wu et al. (2019). From the physics side, use of proper filters (as normally done in experiments) to filter the noisy signals prior to inputting into the DRL is expected to be crucial. In addition, the use of better reward estimation, such as combining constraints on current, fidelity (using tomography), and energy, is expected to be useful for further improvement. An even further innovation would be to use RL in combination with various optimal and Bayesian control protocols, as recently explored in applications outside of quantum mechanics Shashua and Mannor (2020); Carron et al. (2016); Krastanov and Jiang (2017)

In conclusion, we have demonstrated the usefulness of deep reinforcement learning to tailor the non-trivial feedback parameters in a non-linear system to engineer evolution towards the ground state. We found that the artificial agent can discover novel strategies solely based on measurement records to engineer high-fidelity ‘cat states’ for the quantum double well.

I acknowledgments

Acknowledgements.

The authors thank the super-computing facilities provided by the Okinawa Institute of Science and Technology (OIST) Graduate University and financial support. GJM and MK acknowledge the support of the Australian Research Council Centre of Excellence for Engineered Quantum Systems CE170100009.Sangkha Borah Bijita Sarma Michael Kewming Gerard J. Milburn Jason Twamley

Measurement Based Feedback Quantum Control With Deep Reinforcement Learning for Double-well Non-linear Potential: Supporting Information

S1 A quick introduction to reinforcement learning

Essentially there are three kinds of machine learning, namely supervised learning, unsupervised learning and reinforcement learning Goodfellow et al. (2016). In supervised learning, the ML model is provided with sufficient input and output data (labeled data), which are used to train the model to discover the hidden pattern/features in the datasets. For example, the dataset may consist of RGB images of different fruits, e.g., of apples, oranges, and bananas, with proper labels assigned by their names. The ML model can read the pixels of those images and correlate to the labels in the datasets by training on them. After training on enough datasets, the ML model is expected to acquire the necessary wisdom to predict from a new image (on which no training was done) whether it is an apple or not. On the other hand, in unsupervised learning, we do not provide labels to the datasets. After training, the model is able to make a classification of those fruits based on the hidden features in the pixel data of the images, and eventually acquire the expertise to classify new test images of fruits again, whether it is an apple, orange or banana or not.

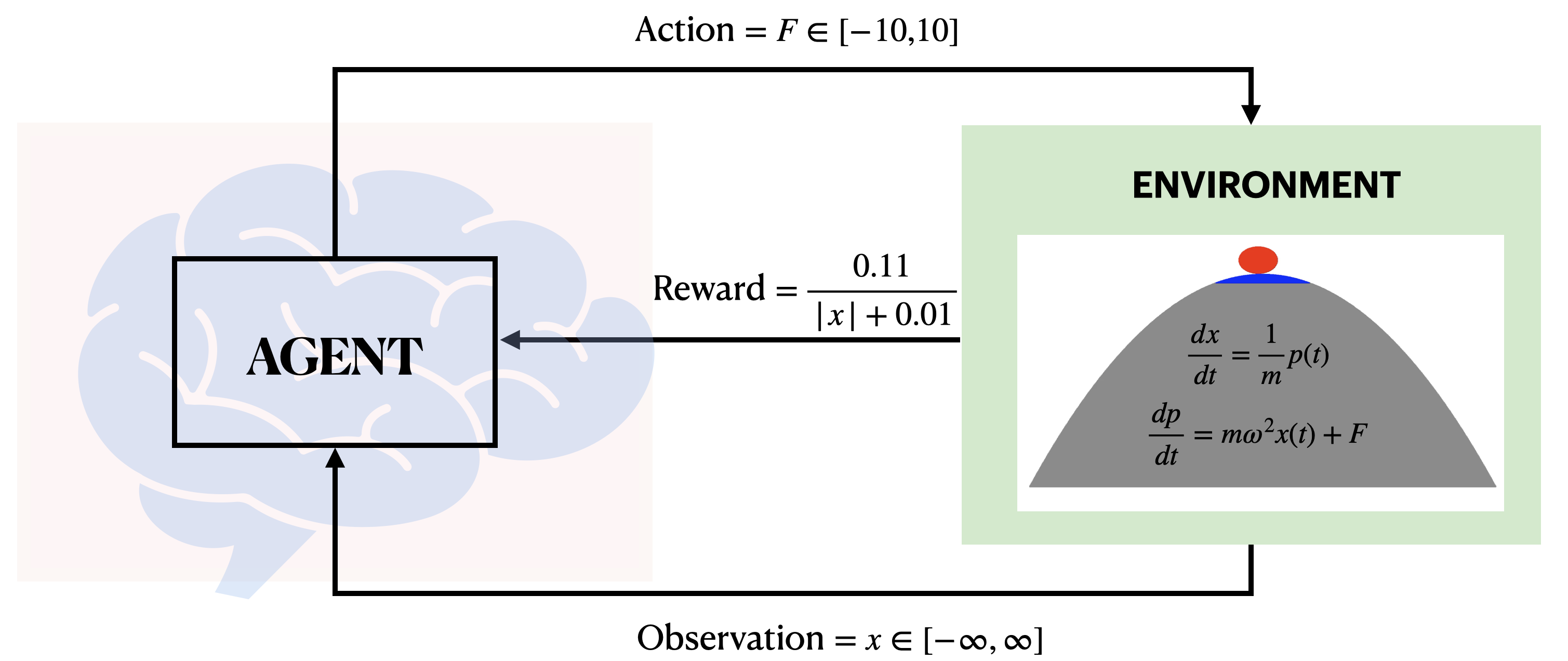

Reinforcement learning (RL) Sutton and Barto (2018) is the third kind of ML, the basic workflow of which is shown in Fig. S1. It comprises of two main characters - the agent and the environment. The environment is the agent’s world in which it lives and interacts with. The agent is the brain of the RL and comprises of routines to function approximators, such as the artificial neural networks. In the case when the agent uses deep neural networks as function approximators (neural networks are non-linear function approximators), the RL is known as deep reinforcement learning (DRL). The RL-agent periodically interacts with the RL-environment in real time, in which it applies some changes to the dynamics of the system by changing some dependent variables. The changes it applies to the environment are called the actions. In return, the agent gets back full/partial information of the changes in the dynamics of the environment (known as the observation or the state of the system) along with a scalar value known as the reward signal. The reward function returns a scalar value that gives an estimation of the likelihood of the applied action bringing to desirable changes in the dynamics or not. The idea behind choosing a reward function is that rewarding or punishing an agent for its behaviour (actions applied) makes it more likely to repeat/forgo that behaviour in future. For that, the agent is set the goal to maximize the cumulative rewards (known as return) by changing its actions within a certain number of interactions in real time, known as an episode (a play in games). At the beginning of such a RL experiment, the agent applies the actions randomly, and later updates the parameters of the agents function approximators (the weights and biases of the neural networks in the case of DRL) based on which the next actions are chosen. In reality, the agent-environment loop has to be computed for several hundreds/thousands of episodes depending on the complexity of the problem of interest. For instance, for stochastic environments with noisy data, the learning process of the RL-agent is slower than compared to its deterministic counterpart.

To understand it better, we here try to demonstrate the working of DRL with a fundamental classical mechanics problem, shown in Fig. S1. The environment is an upside-down harmonic oscillator with a completely frictionless surface, such that a ball can not be held fixed on top of it without any external forces. The task of the agent would be to apply engineered amount of forces back and forth to keep it on top, namely in the blue region shown in the figure. The reward function is chosen such that it gets the maximum reward at and gradually smaller ones as we go away from the origin. This must be programmed together with the solver of the equations of motion in the RL environment. The agent, composed of a few layers of neural networks (hence known as deep reinforcement learning, DRL) can be trained for few thousand steps before it acquires superhuman or human-like skills to adapt the external forces to keep it stable within the blue region. A video demonstration of the same can be obtained in the supporting media or the GitHub link Quantum Machines Unit (2021a).

The rule based on which the agent decides the action to be applied on the environment is known as its policy. In the case of DRL the rule roughly represents the neural network function approximators, as the rule is implicitly determined by some non-intuitive choices of the parameters (weights and biases) of the neural networks. A policy can be deterministic, in which case it is usually denoted by or stochastic, which is denoted by , where is the action chosen by the agent as a function of the state at time . The dot () in the case of stochastic policy () represents the conditioning on (probabilistic), unlike the case of deterministic policy , for which the action is a deterministic function that maps the state to the the policy at time . A deterministic policy is usually chosen only for evaluating the performance of an agent after being trained.

The reward function is one of the most critical parts of RL, which is crucial for the agent’s learning. In its most general form, it is a function of the current state, the action applied and the next state of the environment, , where is the scalar reward signal at time . The cumulative reward is defined as,

| (S7) |

where represents the sequences of state and action over an episode known as a trajectory, and represents the horizon, giving the extent to which the summation is done. In this case, the return is known as finite-horizon un-discounted return. Often the choice is to use infinite horizon limit with a discount factor , known as infinite-horizon discounted return:

| (S8) |

which essentially signifies the fact that rewards now is better than rewards later. The choice of the discounted rewards also makes it mathematically convenient.

The task of the agent is to maximize the expected return , where is the empirical average over a batch of samples, for which the policy is optimized for the optimal policy : . The expected return for choosing a given state or state-action pair is known as the value function. The expected return for the starting in a state and following the policy afterwards gives the on-policy value function, given by,

| (S9) |

On the other hand, the expected return for the starting in a state and taking an action and following the policy afterwards gives the on-policy action-value function, given by,

| (S10) |

The value functions obey the Bellman equations, which can be solved self-consistently, for example, for action-value function, it is given by,

| (S11) |

Sometimes, yet another function that combines both the types of values function is defined, given by,

| (S12) |

This is called the advantage function and gives a measure of advantage of taking an specific action in state over the random selection of it following .

In recent years, several different RL algorithms have been proposed, among which the leading contenders are the deep Q-learning Mnih et al. (2015), vanilla policy gradient methods Sutton and Barto (2018), and trust region natural policy gradient methods Schulman et al. (2015, 2017). In the modern scenario, a successful RL method is expected to be scalable to large models, parallel implementations, data efficiency, and robustness, and should be successful on a variety of problems without much of hyper-parameter tuning. While Q-learning methods are known for their efficiency in numerous discrete action-based environments, such as various Atari games Silver et al. (2016, 2017), they fail on many simple problems, and may suffer from the lack of guarantees for an accurate value function, the intractability problem resulting from uncertain (stochastic) state information as well as the complexity arising from continuous states and actions Schulman et al. (2017). Vanilla policy gradient methods Sutton and Barto (2018) are known for better convergence properties, are effective in high-dimensional or continuous action spaces, and can learn stochastic policies. However, they often converge to a local minimum rather than a global one, and have poor data efficiency and robustness, often leading to high variance in policy updates. The trust region policy optimization (TRPO) Schulman et al. (2015) is relatively complicated, and is not compatible with architectures that include noise such as dropout or parameter sharing between the policy and value function, or with auxiliary tasks. Proximal policy optimization (PPO) Schulman et al. (2017) is the latest inclusion in the list, which is shown to yield similar or better performance and robustness as TRPO, with much easier implementation and parallelization, and faster optimization of policy parameters with stochastic gradient methods, and is naturally compatible with noisy environments.

Policy gradient methods work by optimizing the following objective (loss) function:

| (S13) |

where, is a stochastic policy, and is an estimator of the advantage function at timestep t. is the empirical average over a finite batch of samples. In TRPO methods, the objective function (the “surrogate” objective) contains the probability ratio of the new () and old policies () instead of :

| (S14) |

under the constraints that the average KL-divergence between new and old policies are less than a small cutoff, :

| (S15) |

Without the constraint, maximizing the objective would lead to an excessively large policy update Schulman et al. (2015). PPO modifies the above constraint by clipping the policy update, with a hyper-parameter , and optimizing the objective function with stochastic gradient method Schulman et al. (2017):

| (S16) |

Q-learning, on the contrary, optimizes value function using the Bellman equation, as given in Eq. S11. Lately, different algorithms that combines policy gradient methods with Q-learning methods have been proposed and have been found to sidestep many of the drawbacks of the traditionally used approaches. These are known as the actor-critic methods, in which there are two policy networks, actor and critic. While the actor, based on a policy network, takes an action on the environment, the critic network receives the reward from the environment and makes useful suggestion to the actor by updating the parameters of the action value function. In this paper, we have used a DRL agent that combines PPO with advantage actor-critic (A2C) approach Mnih et al. (2016) , where the advantage function is is calculated as the difference between the Q-value for action at the state and the average value of the state: , discussed above. The A2C framework allows synchronous training of multiple parallel worker environments simultaneously, which enables faster training. The basic workflow of A2C is shown in Fig. S2.

S2 Weak continuous measurement model

We have used a weak continuous measurement of described using a master equation for the system density operator. In this section we derive this equation using a continuous limit for a sequence of weak measurements at random times.

A single weak measurement of a Hermitian operator is described using an operation on the state of the system that gives the post-measurement state conditioned on a measurement result

| (S17) |

where is the probability density of the measurement result and

| (S18) |

with , and is the gain in inverse units to . The measurement result, , is a classical random variable conditioned on the state of the measured system. The mean and variance of are given by

| (S19) | |||||

| (S20) |

where with the quantum averages defined by . It is clear that can be interpreted as the measurement error over and above any intrinsic quantum uncertainty. The unconditional state of the system post measurement is then given by

| (S21) |

We now move to a weak continuous measurement by assuming each of these single weak measurements occur at random, Poisson distributed times. At each time, the device that performs the measurement is discarded after the measurement result is recorded, and a new measurement device is supplied - ready for the subsequent measurement. This builds in the Markov condition for the irreversible measurement process. The master equation describing the unconditional dynamics of the entire system is given by Milburn (1987),

| (S22) |

where is the rate of measurements.

We now take the limit in which the rate of measurements satisfies where is the time scale of the free dynamics of the system and the uncertainty of the measurements such that the ratio is constant. This is the continuous weak measurement limit. The unconditional master equation then becomes

| (S23) |

with . The effect of the double commutator term in this master equation is to destroy coherence in the diagonal basis of and adds diffusive noise to incommensurate variables where . A good measurement requires that is large.

We define an observed classical stochastic process in the continuum limit as follows. Denote a complete record of the measurement results, indexed by the random times of the measurement times, as . Now define the observed process by the stochastic differential equation

| (S24) |

where and while is a Gaussian distribution. In the continuum limit this point process can be approximated by a diffusion process as

| (S25) |

where the subscript indicates that this average must be computed from the state conditioned on the entire history of to time and is the Wiener process. Note that for a good measurement in which is large, the signal-to-noise ratio also becomes large.

In order to evaluate the conditional averages in the observed process we need a continuous limit of the conditional state at each measurement. The result is the stochastic master equation Wiseman and Milburn (2009),

| (S26) |

where

| (S27) |

and

| (S28) |

This equation is non linear; not surprising as for a conditional process the future must depend on the entire past history of measurement results.

S3 Markovian Quantum Feedback

Let us consider the case when we remove the DRL agent altogether and set the feedback Hamiltonian to be proportional to the measurement current, (Eq. S25). The effect of feedback on can be introduced as,

| (S29) |

where is a super-operator such that for some Hermitian feedback action operator . With Eq. (S26), we get the modified closed-loop stochastic master equation under feedback ,

| (S30) |

Averaging over the noise we get the the Wiseman-Milburn unconditional master equation with Markovian feedback as

| (S31) |

where , the average over . Looking at the unconditional master equation (S31), one can identify the feedback term, , which modifies the base Hamiltonian evolution generated by . The term , is the decoherence introduced by the continuous measurement of . However we now observe a new source of decoherence , introduced by the feedback itself Zhang et al. (2017). There is often this competition between the two sources of decoherence that decide an optimal value of . For small values of , the measurement current , is very noisy and the feedback decoherence is high, while the continuous measurement decoherence is low. For large values of , the feedback decoherence is low while the measurement decoherence is high. This competition carries over to the case studied in the main manuscript where the feedback action is determined by a DRL agent. We thus observe an optimal measurement rate, for the learning of the DRL agent, as shown in Figs. 2(a) and S7(a).

S4 The DRL in the study

Using the model given in Eq. (2) of the paper, we created a DRL environment (shown in Fig.1(a) using the open-source platform OpenAI-Gym Brockman et al. (2016), in which QuTIP’s Johansson et al. (2012) stochastic master equation (SME) solver is used to compute the dynamics, see Eq. (6). The environment primarily includes two routines– the quantum dynamics/state of particle moving in a DW and modulation function to alter the quantum dynamics and a reward estimation function based on the observables (measurement results) in a step-wise manner for every interaction made by the agent. Our PPO agent Schulman et al. (2017) is constructed following the implementations of stable-baselines3 Raffin et al. (2019) in the A2C settings Mnih et al. (2016), where both the actor and critic are modelled with a set of fully connected feed-forward networks dimensions , with the first layer as a shared network between the two. For modeling the neural networks we have used the open-source ML platform PyTorch Paszke et al. (2019). The input of the network is provided as the mean of the measurement currents, from the last four time-steps (which helps learning faster rather than using the instantaneous current), along with the action returned by the network. In each episode of the training process the DRL interacts with the environment times, in intervals of , and each time applies an action to the environment. The agent gathers trajectories as far out as the horizon limits (in our case 4000 steps, i.e., data from 4 episode for each environment) for each environment running in parallel (in our case 8 environments in total), then performs a stochastic gradient descent update of mini-batch size (in our case it is 100) on all the gathered trajectories for the specified number of epochs (in our case 10). We have found that a larger network along with a small learning rate () helps the agent keep the learning process stable during longer simulations.

These simulations require a high truncation of the Hilbert-space dimension (we took ), since the process of measurement often drives the state to high Fock numbers (see supporting media for demonstration or the GitHub link Quantum Machines Unit (2021b)). This is especially important for the DRL in the initial stages of learning when it is trying to gather information about the system by performing random actions. In our numerical experiments we chose , , and used 8 parallel vector environments to speed up the processing. After training within a few hundred such parallel environments the DRL learns to apply the actions in such a way that it limits the occupation to low Fock states, avoiding and errors with truncation limits. In addition, we observe that the efficiency of learning depends on the type of agent. Although traditional DQN methods based on discrete actions works well with simple conditional mean data, we find that they perform very poorly in the presence of noise. Actor-critic methods, especially the newer PPO agent (an evolution of A2C and TRPO), is better suited for dealing with a stochastic environment (stochastic data) than a purely value-based algorithm like DQN.

S5 Role of feedback Hamiltonian

In the main manuscript we have chosen the agent controlled action to be . This action augments the default DW quantum dynamics. It is natural to enquire if there are other, more effective actions that will improve the learning of the DRL process to achieve the goal of cooling the DW to its quantum ground state. Interestingly, we find that this form of action appears to optimally effective and choosing other forms of action results in weaker DRL efficiency. The action operator, , where , is the Hermitian squeezing operator. The reason why this action is so effective in learning and controlling the quantum dynamics of the particle in the double-well potential can be understood merely from classical perspectives.

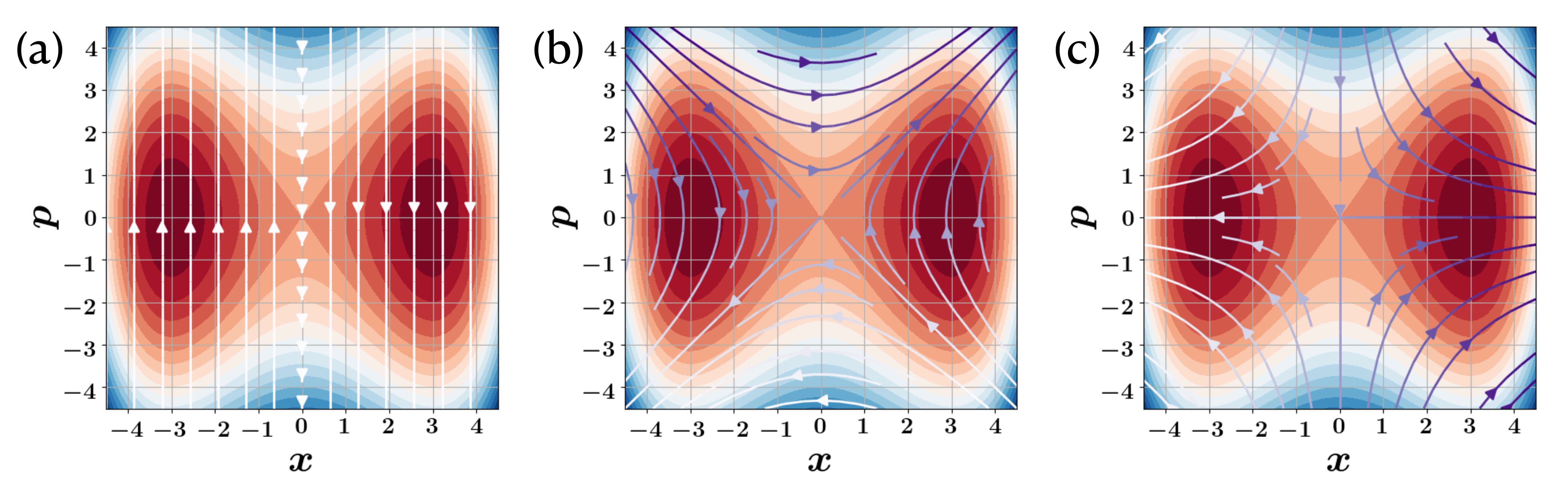

Consider the classical streamlines of the feedback action Hamiltonian in the phase space of the double-well potential. The feedback, generates a flow vector in classical phase space defined by, and , where is the Poisson bracket. This flow is always tangential to the curves of , where is a phase space point at time just before feedback, and moves the particle along curves of constant . Fig. S3 shows the phase space streamlines for three different possible feedback action Hamiltonians. Feedback in the form of is likely to be very ineffective, as indicated by the streamlines in Fig. S3(a), as the flow is always orthogonal to the -axis. Similarly, the streamlines for would push the state starting near the axis further away from the axis. On the other hand, for the case of , the streamlines push the particle in the direction of the x-axis, which is appropriate for approaching the double-well minima.

Numerically, we can demonstrate the best of the three controls by using these choices of the feedback with the Bayesian control with conditional data, discussed in details down below.

S6 Effect of input state

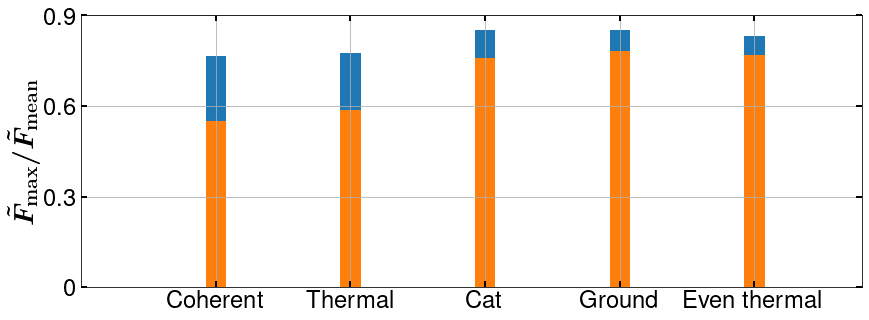

It is found that the choice of initial state for the time evolution via the stochastic master equation has a profound effect on how well the DRL agent can achieve its goal to bring the system to the ground state. The maximum (blue) and mean (orange) fidelity of the trained agents for different initial states are shown in Fig. S4. When the initial state, is a thermal or coherent state, the DRL converges to an average fidelity of about 60%. However, if we use a small cat state or the ground state of the DW itself, the agent is able to achieve a mean trained fidelity of over 80% with noisy measurement data. We achieve similar high performance if we start with a thermal state projected on to even parity.

S7 Effect of measurement efficiency and decoherence

We benchmark the mean fidelity achieved as a function of the measurement efficiency, in the Fig. S5(a). We find that the DRL reveals robust control for . In Fig. S5(b), the learning of the DRL agent is compared with the one without environment damping (blue),in the presence of different collapse operators – (i) , and (ii) . Further improvement of the results could be achieved by implementing the suggestions mentioned at the end of the main manuscript.

S8 Generalizability of the trained model

Since the DRL is trained explicitly on the measurement data that varies with the double-well parameters (for example, the position of the well minima), measurement strength () and measurement time interval (), the generalizability of a trained model is poor across any significant changes of those parameters (see Fig. S6). However, this is not the case for the initial state . Although the choice of the type of has a significant effect on the mean fidelity achieved by the agent, we found that a trained model with a given (say coherent state) yields exactly the same behavior for others (say even-thermal state) and vice versa (see Fig. S4), which means that choosing a better prediction (or a similar basis with respect to the target state) would lead to better net fidelity, without the need to retrain the model for different initial states.

S9 Fidelity as the reward function

It is possible to choose fidelity as the reward function instead of measurement current discussed in the main text of the paper, shown in Fig. S7. However, fidelity is not directly accessible in real time in a laboratory experiment, and the following analysis is included for the sake of clarity and helpful comparison. When the DRL agent uses fidelity as a reward function we see that the learning behaviour, shown in (Fig. S7(a)), as a function of the measurement rate , essentially mirrors the behaviour seen when the measurement current is used instead as a reward function (shown in Fig. 2(a) in the main text of the paper). In Fig. S7(b) the episodic mean reward evolution in time during the training of the agent when [light(dark) blue includes(averages) noise]. In Fig. S7(c) the fidelity variation of a given random episode of the training agent is shown.

S10 Bayesian vs. DRL control

Here we benchmark our result against the state-based Bayesian feedback protocol (where the feedback is based on an estimate of the state) as proposed by Doherty et al., Doherty and Jacobs (1999); Stockton et al. (2004). In the context of the present work, the protocol is simplified to provide feedback of the form where denotes the conditional mean of the observable . However, is not a quantity directly accessible in real experiments, and the above feedback condition should be replaced by the measurement current, . We find that when Bayesian feedback is driven by , it shows very little control over the dynamics. We performed a numerical simulation with 1000 copies of the system (ensemble), all evolving under feedback based on the mean of the measurement currents during each time step. The performances of these two approaches is compared in Table S1

| Feedback basis | Bayesian control (% fidelity) | DRL control (% fidelity) |

|---|---|---|

| conditional means, (t) | 85% | 83% |

| (t) with damping | 62% | 63% |

| (t) with damping | 76% | 79% |

| measurement current, | 42% | 77% |

| with damping | 40% | 58% |

| with damping | 41% | 72% |

We see from S1 that in order that the Bayesian control performs well only when it is provided the control with conditional mean data. When used with measurement currents, it, however, shows no control at all. Even when it is used with an ensemble of 1000 identical systems, it does not find any control beyond 50%. We found from our analysis that it is very important to provide the Bayesian controller with very accurate estimation of the mean currents that does not deviate significantly from the conditional means. The presence of a bad data point often throws the system into a non-recoverable state resulting in much lower fidelities. DRL, on the other hand, has a brain, and hence can recover from such bad data points.

It is also seen that when the Bayesian as well as the DRL controller is trained with conditional mean data, both yield comparable performances. It is interesting to observe the significant drop in achievable fidelity in presence of the environment damping , but not in presence of , environment dephasing. Thus, the drop of the performance of the DRL agent in presence of an environment damping can be attributed to the reduction of the indistinguishably of the target state of the DW potential in the presence of decoherence.

Finally, we found that the choice of the feedback over and , discussed above, can be demonstrated using Bayesian feedback with conditional mean data. We found a feedback of the form could never control the dynamics, while with achieves a mean fidelity of . The mean fidelity of 85% shown shown in table S1 could only be obtained when used with the feedback .

References

- Verstraete et al. (2009) F. Verstraete, M. M. Wolf, and J. Ignacio Cirac, Nat. Phys. 5, 633 (2009).

- Motta et al. (2020) M. Motta, C. Sun, A. T. K. Tan, M. J. O’Rourke, E. Ye, A. J. Minnich, F. G. S. L. Brandão, and G. K.-L. Chan, Nat. Phys. 16, 205 (2020).

- Love (2020) P. J. Love, Nat. Phys. 16, 130 (2020).

- Wiseman and Milburn (2009) H. M. Wiseman and G. J. Milburn, Quantum Measurement and Control (Cambridge University Press, Cambridge, 2009).

- Jacobs and Steck (2006) K. Jacobs and D. A. Steck, Contemp. Phys. 47, 279 (2006).

- Zhang et al. (2017) J. Zhang, Y.-x. Liu, R.-B. Wu, K. Jacobs, and F. Nori, Phys. Rep. 679, 1 (2017).

- Goodfellow et al. (2016) I. Goodfellow, Y. Bengio, A. Courville, and Y. Bengio, Deep learning, Vol. 1 (MIT press Cambridge, 2016).

- Mnih et al. (2015) V. Mnih, K. Kavukcuoglu, D. Silver, A. A. Rusu, J. Veness, M. G. Bellemare, A. Graves, M. Riedmiller, A. K. Fidjeland, G. Ostrovski, S. Petersen, C. Beattie, A. Sadik, I. Antonoglou, H. King, D. Kumaran, D. Wierstra, S. Legg, and D. Hassabis, Nature 518, 529 (2015).

- Sutton and Barto (2018) R. S. Sutton and A. G. Barto, Reinforcement Learning: An Introduction, 2nd ed. (The MIT Press, 2018).

- Li (2019) Y. Li, arXiv (2019), 1908.06973 .

- Carleo et al. (2019) G. Carleo, I. Cirac, K. Cranmer, L. Daudet, M. Schuld, N. Tishby, L. Vogt-Maranto, and L. Zdeborová, Rev. Mod. Phys. 91, 045002 (2019).

- Brunton et al. (2020) S. L. Brunton, B. R. Noack, and P. Koumoutsakos, Annu. Rev. Fluid Mech. 52, 477 (2020).

- Carrasquilla and Melko (2017) J. Carrasquilla and R. G. Melko, Nat. Phys. 13, 431 (2017).

- Dunjko and Briegel (2018) V. Dunjko and H. J. Briegel, Rep. Prog. Phys. 81, 074001 (2018).

- Mehta et al. (2019) P. Mehta, M. Bukov, C.-H. Wang, A. G. R. Day, C. Richardson, C. K. Fisher, and D. J. Schwab, Phys. Rep. 810, 1 (2019).

- Carleo and Troyer (2017) G. Carleo and M. Troyer, Science 355, 602 (2017).

- August and Ni (2017) M. August and X. Ni, Phys. Rev. A 95, 012335 (2017).

- Baireuther et al. (2018) P. Baireuther, T. E. O’Brien, B. Tarasinski, and C. W. J. Beenakker, Quantum 2, 48 (2018), 1705.07855v3 .

- Torlai and Melko (2017) G. Torlai and R. G. Melko, Phys. Rev. Lett. 119, 030501 (2017).

- Krastanov and Jiang (2017) S. Krastanov and L. Jiang, Sci. Rep. 7, 1 (2017).

- Torlai et al. (2018) G. Torlai, G. Mazzola, J. Carrasquilla, M. Troyer, R. Melko, and G. Carleo, Nat. Phys. 14, 447 (2018).

- Neugebauer et al. (2020) M. Neugebauer, L. Fischer, A. Jäger, S. Czischek, S. Jochim, M. Weidemüller, and M. Gärttner, Phys. Rev. A 102, 042604 (2020).

- Lohani et al. (2020) S. Lohani, B. T. Kirby, M. Brodsky, O. Danaci, and R. T. Glasser, Mach. Learn.: Sci. Technol. 1, 035007 (2020).

- Ahmed et al. (2020a) S. Ahmed, C. S. Muñoz, F. Nori, and A. F. Kockum, arXiv (2020a), 2008.03240 .

- Palmieri et al. (2020) A. M. Palmieri, E. Kovlakov, F. Bianchi, D. Yudin, S. Straupe, J. D. Biamonte, and S. Kulik, npj Quantum Inf. 6, 20 (2020).

- Ahmed et al. (2020b) S. Ahmed, C. S. Muñoz, F. Nori, and A. F. Kockum, arXiv (2020b), 2012.02185 .

- Wang et al. (2020) Z. T. Wang, Y. Ashida, and M. Ueda, Phys. Rev. Lett. 125, 100401 (2020).

- Niu et al. (2019) M. Y. Niu, S. Boixo, V. N. Smelyanskiy, and H. Neven, npj Quantum Inf. 5, 33 (2019).

- Zhang et al. (2019a) X.-M. Zhang, Z. Wei, R. Asad, X.-C. Yang, and X. Wang, npj Quantum Inf. 5, 85 (2019a).

- Xu et al. (2021) H. Xu, L. Wang, H. Yuan, and X. Wang, Phys. Rev. A 103, 042615 (2021).

- Zhang et al. (2019b) X.-M. Zhang, Z. Wei, R. Asad, X.-C. Yang, and X. Wang, npj Quantum Inf. 5, 85 (2019b).

- Mackeprang et al. (2020) J. Mackeprang, D. B. R. Dasari, and J. Wrachtrup, Quantum Mach. Intell. 2, 5 (2020).

- Haug et al. (2020) T. Haug, W.-K. Mok, J.-B. You, W. Zhang, C. E. Png, and L.-C. Kwek, Mach. Learn.: Sci. Technol. 2, 01LT02 (2020).

- Guo et al. (2021) S.-F. Guo, F. Chen, Q. Liu, M. Xue, J.-J. Chen, J.-H. Cao, T.-W. Mao, M. K. Tey, and L. You, Phys. Rev. Lett. 126, 060401 (2021).

- Bilkis et al. (2020) M. Bilkis, M. Rosati, R. M. Yepes, and J. Calsamiglia, Phys. Rev. Res. 2, 033295 (2020).

- Porotti et al. (2019) R. Porotti, D. Tamascelli, M. Restelli, and E. Prati, Commun. Phys. 2, 1 (2019).

- Ding et al. (2021) Y. Ding, Y. Ban, J. D. Martín-Guerrero, E. Solano, J. Casanova, and X. Chen, Phys. Rev. A 103, L040401 (2021).

- Paparelle et al. (2020) I. Paparelle, L. Moro, and E. Prati, Phys. Lett. A 384, 126266 (2020).

- Fösel et al. (2018) T. Fösel, P. Tighineanu, T. Weiss, and F. Marquardt, Phys. Rev. X 8, 031084 (2018).

- Nautrup et al. (2019) H. P. Nautrup, N. Delfosse, V. Dunjko, H. J. Briegel, and N. Friis, Quantum 3, 215 (2019), 1812.08451v5 .

- Jacobs et al. (2009) K. Jacobs, L. Tian, and J. Finn, Phys. Rev. Lett. 102, 057208 (2009).

- Silver et al. (2016) D. Silver, A. Huang, C. J. Maddison, A. Guez, L. Sifre, G. van den Driessche, J. Schrittwieser, I. Antonoglou, V. Panneershelvam, M. Lanctot, S. Dieleman, D. Grewe, J. Nham, N. Kalchbrenner, I. Sutskever, T. Lillicrap, M. Leach, K. Kavukcuoglu, T. Graepel, and D. Hassabis, Nature 529, 484 (2016).

- Silver et al. (2017) D. Silver, J. Schrittwieser, K. Simonyan, I. Antonoglou, A. Huang, A. Guez, T. Hubert, L. Baker, M. Lai, A. Bolton, Y. Chen, T. Lillicrap, F. Hui, L. Sifre, G. van den Driessche, T. Graepel, and D. Hassabis, Nature 550, 354 (2017).

- Schulman et al. (2015) J. Schulman, S. Levine, P. Moritz, M. I. Jordan, and P. Abbeel, arXiv (2015), 1502.05477 .

- Schulman et al. (2017) J. Schulman, F. Wolski, P. Dhariwal, A. Radford, and O. Klimov, arXiv (2017), 1707.06347 .

- Mnih et al. (2016) V. Mnih, A. P. Badia, M. Mirza, A. Graves, T. P. Lillicrap, T. Harley, D. Silver, and K. Kavukcuoglu, arXiv (2016), 1602.01783 .

- Jelic and Marsiglio (2012) V. Jelic and F. Marsiglio, Eur. J. Phys. 33, 1651 (2012).

- Abdi et al. (2016) M. Abdi, P. Degenfeld-Schonburg, M. Sameti, C. Navarrete-Benlloch, and M. J. Hartmann, Phys. Rev. Lett. 116, 233604 (2016).

- Stockton et al. (2004) J. K. Stockton, R. van Handel, and H. Mabuchi, Phys. Rev. A 70, 022106 (2004).

- Romero-Sánchez et al. (2018) E. Romero-Sánchez, W. P. Bowen, M. R. Vanner, K. Xia, and J. Twamley, Phys. Rev. B 97, 024109 (2018).

- Doherty and Jacobs (1999) A. C. Doherty and K. Jacobs, Phys. Rev. A 60, 2700 (1999).

- Kangin and Pugeault (2018) D. Kangin and N. Pugeault, in 2018 International Joint Conference on Neural Networks (IJCNN) (IEEE, 2018) pp. 1–8.

- Senft et al. (2017) E. Senft, P. Baxter, J. Kennedy, S. Lemaignan, and T. Belpaeme, Pattern Recognit. Lett. 99, 77 (2017).

- Wang et al. (2018) L. Wang, W. Zhang, X. He, and H. Zha, arXiv (2018), 1807.01473 .

- Wu et al. (2019) Y. Wu, M. Rosca, and T. Lillicrap, arXiv (2019), 1905.06723 .

- Shashua and Mannor (2020) S. D.-C. Shashua and S. Mannor, arXiv (2020), 2002.07171 .

- Carron et al. (2016) A. Carron, M. Todescato, R. Carli, L. Schenato, and G. Pillonetto, in 2016 IEEE 55th Conference on Decision and Control (CDC) (IEEE, 2016) pp. 4594–4599.

- Quantum Machines Unit (2021a) Quantum Machines Unit, “A little video of how deep reinforcement learning works to control the motion of a ball moving on an upside-down harmonic oscillator,” https://github.com/QuantumMachinesUnit/ml-iho-demo (2021a).

- Milburn (1987) G. J. Milburn, Phys. Rev. A 36, 744 (1987).

- Brockman et al. (2016) G. Brockman, V. Cheung, L. Pettersson, J. Schneider, J. Schulman, J. Tang, and W. Zaremba, “Openai gym,” (2016), arXiv:1606.01540 [cs.LG] .

- Johansson et al. (2012) J. R. Johansson, P. D. Nation, and F. Nori, Comput. Phys. Commun. 183, 1760 (2012).

- Raffin et al. (2019) A. Raffin, A. Hill, M. Ernestus, A. Gleave, A. Kanervisto, and N. Dormann, “Stable baselines3,” https://github.com/DLR-RM/stable-baselines3 (2019).

- Paszke et al. (2019) A. Paszke, S. Gross, F. Massa, A. Lerer, J. Bradbury, G. Chanan, T. Killeen, Z. Lin, N. Gimelshein, L. Antiga, A. Desmaison, A. Köpf, E. Yang, Z. DeVito, M. Raison, A. Tejani, S. Chilamkurthy, B. Steiner, L. Fang, J. Bai, and S. Chintala, “Pytorch: An imperative style, high-performance deep learning library,” (2019), arXiv:1912.01703 [cs.LG] .

- Quantum Machines Unit (2021b) Quantum Machines Unit, “Supporting media files for the paper measurement based feedback quantum control with deep reinforcement learning,” https://github.com/QuantumMachinesUnit/ml-dw (2021b).