∎

22email: [email protected], [email protected], [email protected] 33institutetext: Daiheng Gao 44institutetext: University of Science and Technology of China 55institutetext: Xuan Wang ✉ 66institutetext: Ant Group

66email: [email protected] 77institutetext: Jie Zhang 88institutetext: Faculty of Applied Sciences, Macao Polytechnic University 99institutetext: Peng Zhang, Longhao Zhang, Bang Zhang and Liefeng Bo 1010institutetext: Institute for Intelligent Computing, Alibaba Group 1111institutetext: Xusen Sun 1212institutetext: Nanjing University 1313institutetext: Shiqi Yang 1414institutetext: Computer Vision Center, Universitat Autònoma 1515institutetext: This work was partly done as an intern at Alibaba Group.

MaTe3D: Mask-guided Text-based 3D-aware Portrait Editing

Abstract

3D-aware portrait editing has a wide range of applications in multiple fields. However, current approaches are limited due that they can only perform mask-guided or text-based editing. Even by fusing the two procedures into a model, the editing quality and stability cannot be ensured. To address this limitation, we propose MaTe3D: mask-guided text-based 3D-aware portrait editing. In this framework, first, we introduce a new SDF-based 3D generator which learns local and global representations with proposed SDF and density consistency losses. This enhances masked-based editing in local areas; second, we present a novel distillation strategy: Conditional Distillation on Geometry and Texture (CDGT). Compared to exiting distillation strategies, it mitigates visual ambiguity and avoids mismatch between texture and geometry, thereby producing stable texture and convincing geometry while editing. Additionally, we create the CatMask-HQ dataset, a large-scale high-resolution cat face annotation for exploration of model generalization and expansion. We perform expensive experiments on both the FFHQ and CatMask-HQ datasets to demonstrate the editing quality and stability of the proposed method. Our method faithfully generates a 3D-aware edited face image based on a modified mask and a text prompt. Our code and models will be publicly at link.

Keywords:

Mask-guided Editing Text-guided Editing Diffusion Model Score Distillation Sampling1 Introduction

Leveraging the advancements of StyleGAN series stylegan , stylegan2 , stylegan3 , zhou2022sd , EG3D eg3d and related works like stylesdf , stylenerf , cips3d , meshwgan have successfully created high-quality 3D scenes with consistent views and rich details. Recent 3D-aware image synthesis methods fenerf , ide3d , lenerf aim to enhance view-consistent image editing capabilities by incorporating additional information like semantic maps. This caters to a range of user needs, including 3D avatar creation, personalized services, and industrial design.

Existing NeRF-based generation methods with editability can be categorized into two classes: mask-guided fenerf ,ide3d and text-guided clipnerf , lenerf . Both methods rely on a common pipeline: (1) training a 3D-aware generator; (2) exploring various editing methods based on the well-trained generator. Mask-guided methods aim to facilitate user-friendly editing through hand-drawn sketches or semantic label interfaces. For example, IDE-3D ide3d enables both high-quality and real-time local face control by manipulating a semantic map. However, current mask-guided approaches primarily emphasize shape manipulation and lack texture control capabilities. On the other hand, text-guided methods strive to produce more expressive results based on textual descriptions by distilling knowledge from large models. LENeRF lenerf utilizes CLIP clip for precise and localized manipulation using text inputs. Nevertheless, existing text-based techniques encounter challenges in accurately controlling shape to achieve desired results(Fig. 6). In this paper, we focus on using both masked-guided and text-guided 3D image editing with high quality and stability. Masks allow for precise spatial modifications, such as removing or altering specific parts of the scene, while text editing allows for semantic changes based on natural language descriptions. Utilizing both mask and text enhances interactivity and control by enabling users to interact with and modify 3D scenes in more intuitive ways.

To achieve these properties, a simple approach is to combine mask-guided and text-guided techniques presented in the aforementioned part. However, when naively combining the current state-of-the-art methods of both mask-guided and text-based approaches, the system suffers from unstable texture and unconvincing geometry (refer to Fig. 10). Also it hinders the ability to merge visual and textual information, as illustrated in Fig. 6. Unfortunately, there are no prior tailored works exploring how to effectively perform both mask-guided and text-guided manipulation in a single model without conflict.

To address this limitation, we introduce MaTe3D, Mask-guided Text-based 3D-aware Portrait Editing, which consists of two steps:(1) modeling 3D generator and (2) effectively using both mask and text information in a single model. Specifically,in the first step we introduce a new SDF-based 3D generator that explicitly models the local representations as well as the global ones. Meanwhile, we also propose the SDF and density based consistency losses to make the generator more accurate. The SDF-based 3D generator further enables the possibility of directly constructing semantic probability to learn well-established geometry and view-consistency editing results (Fig. 4 and Fig. 8).

In the second step, we introduce an innovative editing technique, Condition Distillation on Geometry and Texture (CDGT), tailored for stable texture and convincing geometry while editing. This method leverages a user-provided mask and textual description to facilitate optimal inference, addressing the challenge of 3D mask annotation scarcity through a progressive condition updating scheme. By iteratively refining masks with rendered results from the generator, CDGT improves stable texture, mitigating visual ambiguity across diverse views within the diffusion model. Additionally, our approach employs Score Distillation Sampling (SDS) on the blending of images and their normal maps, ensuring the preservation of the underlying geometry integrity and avoiding mismatch between texture and geometry. Meanwhile, to explore the generalization and expansion of our method, we further develop a large-scale and high-resolution CatMask-HQ annotation tailored specifically for AFHQ dataset based on analysis.

To summarize, we make the following contributions:

-

•

We propose a novel framework (MaTe3D) for portrait editing. The framework can perform more effective mask-guided and text-based editing simultaneously in a single model, ensuring editing quality and stability.

-

•

We develop a novel 3D generator and a distillation strategy in our framework. Our generator learns global and local representations via proposed SDF and density consistency losses to enhance the mask-based editing. Our Condition Distillation on Geometry and Texture (CDGT) mitigates visual ambiguity, avoids mismatch between texture and geometry, and produces stable texture and convincing geometry while editing.

-

•

We create a new cat face dataset named CatMask-HQ with large scale and high-quality annotations. The dataset extends the assessment of model generalization to domains beyond human facial.

-

•

We conduct extensive qualitative and quantitative experiments, which demonstrate that our method can perform mask-guided and text-based portrait editing simultaneously with higher quality and stability.

2 Related Works

2.1 3D-aware GAN based Synthesis and Editing

Shocked by NeRF nerf and SIREN siren in exceeding the past method with regards to multi-view image synthesis, recent methods start to focus on 3D-aware models without multi-view supervision. GRAF graf and pi-GAN pigan are pioneers in exploring NeRF-based 3D-aware synthesis and render view-consistent results. StyleNeRF stylenerf and CIPS-3D cips3d adopt progressively upsampling to synthesize high-quality 3D-aware images. StyleSDF stylesdf , which merges NeRF with Signed Distance Function (SDF) deepsdf , achieves comparative performance to aforementioned methods and also produces 3D smooth complex shapes. EG3D eg3d introduces a hybrid explicit-implicit 3D representation that produces high-resolution image and brings implicitly learned mesh to a new level of meticulosity. However, none of the above methods consider fine-grained locally-controllable face editing with other conditions.

To achieve fine-grained level control with mask, FENeRF fenerf pioneers local face editing using decoupled latent codes to generate corresponding facial semantics and texture in a spatially aligned 3D volume with shared geometry. IDE-3D ide3d and NeRFFaceEditing nerffaceediting allow for semantic mask guided local control of images in real time. CNeRF cnerf and 3D-SSGAN 3dssgan aim to achieve part-level editing through the compositional synthesis. For fine-grained editing with text, LENeRF lenerf achieves local manipulation via establishing 3D attention map via CLIP clip . StyleGANFusion styleganfusion and FaceDNeRF facednerf distill knowledge from diffusion model to enable manipulate Face NeRFs in zero-shot learning based on text prompts.

2.2 Diffusion Models Guided Editing

In recent years, diffusion models have made great strides in the field of text-to-2D generation. Some works have begun to use diffusion model priors to edit 3D scenes through text guidance. For example, Instruct-NeRF2NeRF haque2023instruct employs Instruct-Pix2Pix instructp2p to modify NeRF content using language instructions. The approach optimizes both the underlying scene and iterative optimization, resulting in a 3D scene that conforms to the input text. Blended-NeRF gordon2023blended uses a pre-trained language image model to synthesize objects based on textual prompts and blend them into existing scenes using 3D multi-layer perceptron (MLP) models and volume blending techniques for natural appearance.

Score Distillation Sampling (SDS) from DreamFusion dreamfusion provides a new way to apply diffusion models to 3D scenes. DreamEditor zhuang2023dreameditor allows controllable editing of neural fields using textual prompts through SDS. FocalDreamer li2023focaldreamer merges shapes with editable parts based on SDS for fine-grained editing in desired regions. SKED mikaeili2023sked , which edits 3D shapes represented by NeRFs, utilizes two guiding sketches without altering existing fields much, supporting object creation or modification within designated sketch regions. To improve SDS, HeadSculpt headsculpt introduces prior-driven score distillation with landmark-guided ControlNet to improve 3D-head generation results. HeadArtist headartist develops self-score distillation for generating geometry and texture, leading to a significant performance boost.

3 Preliminaries

3.1 ControlNet

ControlNet controlnet is an innovative approach that enhances Stable Diffusion by incorporating additional conditions(canny edge, pose maps, etc.). In MaTe3D, we train two ControlNets on color mask for face and cat, respectively. The mask representation not only supports interactive editing but also enables inpainting pipeline. To achieve this, we employ a mask encoder to map the condition (e.g., mask) to the same dimensions as the latent code in Stable Diffusion. The optimization process can be expressed as follows:

| (1) |

where represents the latent feature map encoded from training data. The variables and represent the text condition and mask condition, respectively. The variable represents the timestep. Additionally, is an additive Gaussian noise term. represents the parameters of learnable mask encoder.

The noisy data at timestep is denoted as and , where and are predefined functions decided by timestep .

3.2 Score Distillation Sampling (SDS)

Score Distillation Sampling (SDS) is developed by DreamFusion dreamfusion to distill knowledge from a pre-trained diffusion model into a differentiable 3D representation generator, such as NeRF nerf or DMTet dmtet . Consider a 3D representation generator is parameterized by , we can obtain the rendered image . Then, given the noisy image , text embedding and noise timestep , a random noise is added to latent code encoded from image . The pre-trained diffusion model is then adopted to predict the added noise from the noisy image. The SDS provides gradient to update the generator and can be represented as follows:

| (2) |

where , .

4 Methods

Problem setting. Our goal is to perform mask-guided and text-based portrait editing in a single model, enabling high quality and stability of 3D editing. We decouple MaTe3D into two steps. First, we propose a new 3D generator based on SDF to improve the mask-based editing performance in local areas. Our approach uses both SDF and density consistency losses to learn semantic mask fields by joint modeling of the global and local representations. Second, considering when directly manipulating the generator with both mask and text information, the system suffers from: unstable texture and unconvincing geometry. Hence, we further introduce Condition Distillation on Geometry and Texture (CDGT).

Overview. We outline the proposed MaTe3D pipeline in Fig. 1, which consists of two key parts: the proposed generator in the first step (Fig. 1(a)) and the inference-optimized editing (Fig. 1(b)) in the second step. In Sec. 4.1, we provide more details of the proposed generator, including the generator architecture, the SDF-based neural rendering and the training objectives. In Sec. 4.2, using the well-trained generator from the first step, we introduce to fuse a frozen generator and a learnable generator, and a Conditional Distillation on Geometry and Texture (CDGT) method to update the fused generator.

4.1 MaTe3D Generator

As shown in Fig. 1(a), our MaTe3D generator contains three parts: 1) Dual tri-planes generation for enhancing specific facial details to capture both global and local representations, 2) Neural rendering to incorporate both color and semantic features into the renderings, and 3) Optimization to learn global and local representations simultaneously. In the following paragraphs, we present detailed descriptions for each part.

4.1.1 Dual Tri-planes Generation

Let and be a camera pose and a latent code, respectively. Dual Tri-planes Generation takes camera pose and latent code as inputs, and generates texture tri-plane and shape tri-plane . The dual-generation process allows for the enhancement of specific facial details, such as glasses, as shown in Fig. 9. The texture feature and shape feature are extracted by projecting coordinates onto the tri-planes. Subsequently, two separate MLP decoders are adopted to learn color features and Signed Distance Function (SDF) values . The SDF values can not only capture the global facial structure but also the local components like the nose, eyes, and mouth, represented as , where denotes the number of semantic parts.

4.1.2 Neural Rendering

As shown in Fig. 1(a), in Neural Rendering we utilize SDF values to generate density values through Density feature generation. Next, we render a semantic map from local SDF values using Implicit semantic generation to enable mask-based editing. Finally, by combining density, color, and semantic probabilities, we propose Feature map generation to produce high-resolution portrait and semantic mask .

Density Feature Generation

Inspired by both StyleSDF stylesdf and VolSDF volsdf , we transform the signed distance values into density values for volume rendering. This transformation is formulated as follows:

| (3) | |||

| (4) |

where is the density value of the global face, and the () refer to local facial components. The learnable hyper-parameter controls the density value tightness around the surface boundary stylesdf . is shared among both global SDF values and the decomposed local SDF values.

Implicit Semantic Generation

To support mask-based editing, Implicit semantic generation is proposed to render the semantic mask. Different from conventional methods that predict a 3D semantic field, our approach leverages the correlation between semantic information and decompositional geometries. By directly converting SDF into a semantic representation within a generative radiance field, we maintain semantic consistency within geometric classes and prevent abrupt changes across different classes.

Inspired by scene representation in objectsdf , we directly convert SDF into semantic probabilities in generative radiance field:

| (5) |

where is a hyper-parameter to control the smoothness of the function.

Feature Map Generation

Given the previously learned density, semantic probabilities and color features, the neural renderer generates both a color feature map and a low-resolution semantic map . The first channels of store the low-resolution portrait . These maps are used to synthesize a high-resolution portrait and semantic mask through a dual StyleGAN-based sampler, which is modulated by corresponding latent codes.

4.1.3 Optimization

Optimizing a SDF-based generator is challenging when learning the global and the local representations simultaneously. Thus, we propose both the SDF and the density consistency losses. The full loss function consists of several parts which are listed as follows:

SDF Consistency Loss

In MaTe3D generator, we adopt the global SDF that defines the overall shape of the face and a set of local SDFs that capture the local parts of the face. To ensure geometrical consistency across the whole model, it is essential to integrate these local SDFs with the global SDF. This integration guarantees that all local shapes blend seamlessly, resulting in an accurate 3D representation and further rendering anti-aliasing masks. In this study, we achieve the integration by computing the loss between the minimum value of the local SDFs and the global SDF. The loss can be formulated as follows:

| (6) |

Density Consistency Loss

In addition to the geometrical consistency achieved through the SDF consistency loss, we propose a density consistency loss to enhance the representation of the 3D model. The density consistency loss is computed by computing the difference between the local and global density values from learned signed distance values. For quantifying discrepancies in density across the entire object volume, the density consistency loss guarantees a stable training, and a better image quality. The loss can be formulated as follows:

| (7) |

Dual Adversarial Loss

We use a dual discriminator to model the distribution of portrait and semantic mask. The discriminator takes concatenation of the portrait image and semantic mask as input. We apply classic non-saturating adversarial loss with R1 regularization to obtain .

Regularization Losses

We introduce three regularization losses: Eikonal loss () to ensure the physical valid of SDFs stylesdf , minimal surface loss () to prevent the SDFs from modeling spurious and non-visible surfaces, and density regularization loss () to regularize the density convert from SDF to prevent ”seam” artifacts.

In summary, the final loss of SDF-based generator is:

| (8) | ||||

where the hyper-parameters , , , , and balance the contribution of each loss term, respectively.

4.2 Inference-optimized Editing

The Inference-optimized Editing aims to modify the content and structure of the input image using a customized semantic mask and a text prompt (e.g. ’a woman with wrinkles on face’). As shown in Fig. 1(b), it includes Generators Fusion and Conditional Distillation on Geometry and Texture. In Generators Fusion module, we present a frozen and a learnable generators, respectively. Both of them are used to generate 3D masks for feature fusion. In Conditional Distillation on Geometry and Texture, we aim to address both distortion-free textures and convincing geometries when performing both the mask-guided and the text-based 3D-aware portrait editing.

Generator Fusion Generator Fusion uses the camera pose and latent code to generate pairs of texture tri-planes and shape tri-planes. It then fuses the tri-planes by incorporating 3D masks derived from semantic probabilities. In Fig. 1(b), we illustrate the Generator Fusion of inference-optimized editing phase in MaTe3D, which comprises a frozen generator and a learnable generator . Both and are initialized based on the well-trained generator from Sec. 4.1. We utilize and to derive 3D masks that facilitate feature fusion across tri-planes from both generators, allowing us to synthesize edited images .

Conditional Distillation on Geometry and Texture We innovatively propose CDGT for precise and interactive 3D manipulation by leveraging a user-provided mask and textual description. By iteratively refining masks through a progressive condition updating scheme with rendered results from the generators, CDGT addresses the challenge of 3D mask annotation scarcity while enhancing texture stability while 3D editing. This mitigates visual ambiguity across diverse views within the diffusion model. Additionally, by combining gradients from both image and normal map, we distill controllable diffusion priors over texture and geometry to preserves underlying geometry integrity, avoiding mismatch between texture and geometry.

4.2.1 Condition Updating Scheme

The original implementation of SDS loss primarily focuses on texture, lacking the ability to control shape headsculpt . Some methods enhance diffusion by incorporating landmarks obtained from an off-shelf detector for better control. However, these approaches still face challenges with unstable texture due to sparse conditions and limited 3D consistency. To tackle this issue, we introduce Condition Updating Scheme. This technology regards the generated mask as a condition for ControlNet, establishing a connection between the generator and diffusion prior. We iteratively update the condition of ControlNet during editing and integrate it into multi-view consistency to ensure stable texture. This design progressively integrates diffusion priors conditioned by 3D-aware information into the 3D content. Despite initially computing incorrect gradients during optimization, the interplay between front-view supervision and Condition Updating Scheme guarantees that results converge to maintain texture consistency and align with the modified mask.

4.2.2 Gradient Combination

While editing a neural radiance field, maintaining convincing geometry deformation is as essential as changing texture. However, we observe that the quality of geometry degrades when editing certain complex attributes (e.g., hat in Fig. 10). This degradation is attributed to a mismatch between texture and geometry caused by the absence of 3D supervision. To address this issue, we align geometry and texture by computing gradients on both the image and normal map . In the approach, we introduce a novel random blending strategy to combine gradients. Specifically, we encode the image and normal map to obtain their latent representations and . We then use a sampled value to blend texture and geometry: . In contrast that TADA tada fuses texture and geometry using a constant value, our method employs randomly sampled values ranging from to . By incorporating randomness into the fusion process, random blending enhances the robustness of editing process by preventing overfitting on specific patterns and improving its capability to handle discrete attributes.

Given a pre-trained SDF-based generator with parameters , we generate images , normal maps and semantic masks using . Given target prompt , we compute CDGT as follows:

| (9) | ||||

We iteratively optimize the learnable using CDGT loss, ID loss , and segmentation loss . The weights for these loss terms are empirically defined, respectively. Here, is generated from the frozen generator , and is rendered in the same view (front view) as .

In summary, we first pretrain the proposed generators on training dataset. Then, we train ControlNet on Stable Diffusion with mask condition, utilizing images and annotations. Finally, when editing image, we fix both the generators and ControlNet and update a new well-initialized generator with the proposed Condition Distillation on Geometry and Texture (CDGT) loss, ID loss and segmentation loss.

5 Experiments

5.1 Implementation Details

5.1.1 Setting of Generator Training

We train our generator on FFHQ stylegan and proposed CatMask-HQ.We adopt sphere initialization from StyleSDF stylesdf . The training is performed on NVIDIA A100 GPUs (G) using batch size of for steps. We use Adam optimizer adam and set the learning rates for the generator and dual discriminator to and , respectively. For losses, we assign , , , , , and . The weights , , and are defined the same as those in StyleSDF stylesdf . We define both and by tentative experiments.

5.1.2 Setting of Editing

During editing phase, we optimize learnable generator iterations for each target mask and input text prompt, which takes around minutes on a single NVIDIA A100 GPU (G). We use Adam adam as the optimizer with learning rate of . We determine the values of , , and as , , and through preliminary experiments.

| Method | FFHQ | AFHQ | |||

|---|---|---|---|---|---|

| FID | KID | ID | FID | KID | |

| FENeRF fenerf | 29.0 | 3.728 | 0.61 | / | / |

| StyleNeRF stylenerf | 7.8 | 0.220 | 0.62 | 14.9 | 0.368 |

| StyleSDF stylesdf | 13.1 | 0.269 | 0.74 | 12.8 | 0.455 |

| MVCGAN mvcgan | 13.4 | 0.375 | 0.58 | 17.1 | 0.198 |

| EG3D eg3d | 4.7 | 0.132 | 0.77 | 2.7 | 0.041 |

| IDE-3D ide3d | 4.6 | 0.130 | 0.76 | / | / |

| BallGAN ballgan | 5.7 | 0.312 | 0.75 | 4.7 | 0.096 |

| MaTe3D | |||||

| (w/o dual tri-planes) | 5.8 | 0.389 | 0.75 | 3.9 | 0.106 |

| MaTe3D | 5.1 | 0.215 | 0.79 | 2.5 | 0.078 |

5.2 CatMask-HQ

Current mask-based editing methods are primarily validated only on CelebA-HQ Mask maskgan or FFHQ stylegan While some few-shot techniques have produced faces and masks in other domains, such as cat faces sem2nerf , both the image and the mask quality are subpar with noticeable aliasing kangle2023pix2pix3d .

To expand the scope beyond human face and explore the model generalization and expansion, we design the CatMask-HQ dataset with the following representative features:

-

•

Specialization: CatMask-HQ is specifically designed for cat faces, including precise annotations for six facial parts (background, skin, ears, eyes, nose, and mouth) relevant to feline features.

-

•

High-Quality Annotations: The dataset benefits from manual annotations by annotators and undergoes accuracy checks, ensuring high-quality labels and reducing individual differences.

-

•

Substantial Dataset Scale: With approximately high-quality real cat face images and corresponding annotations, CatMask-HQ provides ample training database for deep learning models.

For the fair comparison among prior works, we utilize the same segmentation network as CatMask to label AFHQ and produce CatMask. Qualitative comparisons between the pseudo labels and annotated results are depicted in Fig. 2. Our proposed dataset boasts more precise annotations devoid of aliasing edges or empty classes found in the pseudo labels.

| Method | Cham. | Norm. |

|---|---|---|

| IDE-3D ide3d w nose | 3039.3770 | 0.0443 |

| IDE-3D ide3d w nose, mouth | 3084.0366 | 0.0445 |

| IDE-3D ide3d w nose, mouth, hair | 2607.2324 | 0.0450 |

| IDE-3D ide3d w nose, mouth, hair, ear | 2677.9805 | 0.0451 |

| MaTe3D w nose | 1419.5851 | 0.0934 |

| MaTe3D w nose, mouth | 1424.8981 | 0.0927 |

| MaTe3D w nose, mouth, hair | 528.1699 | 0.0877 |

| MaTe3D w nose, mouth, hair, ear | 528.7918 | 0.0878 |

5.3 Baselines

We evaluate the generator of MaTe3D against state-of-the-art genertors, including FENeRF fenerf , StyleNeRF stylenerf , StyleSDF stylesdf , EG3D eg3d , MVCGAN mvcgan , BallGAN ballgan and IDE-3D ide3d , respectively. We evaluate MaTe3D from two aspects including text-based and mask-text-hybrid editing. For text-based editing, we compare MaTe3D with LENeRF, FENeRF+StyleCLIP, IDE-3D+StyleCLIP, FaceDNeRF facednerf and HeadSculpt headsculpt , respectively. We reimplement LENeRF lenerf and HeadSculpt headsculpt strictly according to their papers. We reproduce FENeRF+StyleCLIP and IDE-3D+StyleCLIP for comparison purposes, similarly to LENeRF lenerf . In hybrid editing, we compare two baselines: FENeRF+StyleCLIP and IDE-3D+StyleCLIP with mask and text editing simultaneously.

5.4 MaTe3D generator Evaluation

Generator Performance: qualitative and quantitative results.

We adopt three metrics to comprehensively evaluate the synthesis quality of generated images quantitatively. Both the lower Frechet Inception Distance (FID) fid and Kernel Inception Distance (KID) kid indicate higher quality of synthesized images. Multi-view identity consistency (ID) id calculates the face similarity under different sampled camera pose. As shown in Tab. 1, MaTe3D achieves comparable results with SOTAs in terms of FID, KID and ID. Fig. 3 presents a qualitative comparison of MaTe3D against 3D-aware GANs. It is evident that MaTe3D achieves superior image quality and better view consistency than the baselines, indicating the effectiveness of both the global and the local SDF representations in the generator. Also, we present additional results for CatMask-HQ, and compare the image quality with that by EG3D eg3d , as depicted in Fig. 3 and Tab. 1. Our method produces better image quality with better view consistency.

Then we evaluate the geometry quality of MaTe3D, which contributes to accurate mask and provides view consistency results.

Decompositional Geometries: qualitative result. We compare MaTe3D with two other state-of-the-art methods: FENeRF and IDE-3D. As shown in Fig. 4, MaTe3D outperforms its predecessors. FENeRF produces visually irritating staircasing artifacts morsy2022shape (the so-called bulls eye effect IDW1968 ) that are particularly noticeable when viewing small objects up close. It is possibly caused by the sparse and clustered sampling points in the frustum of FENeRF. IDE-3D produces photorealistic semantic-aware images of relatively compact whole faces. However, it fails to maintain a well-established zero level-set in its neural radiance field, resulting in constant density distribution along rays even for local parts like nose, mouth and eyes. The situation may be caused by ignoring the association between geometries and semantic information, which leads to the mis-disentanglement of underlying local geometries.

Decompositional Geometries: quantitative result. We aim to investigate the compositional consistency in Mate3D in quantitative way. We combine the local facial meshes into a unified mesh, and then compare it with the original whole face. The results, presented in Tab. 2, demonstrate that MaTe3D significantly enhances 3D surface reconstruction quality compared to IDE-3D. Note that we do not include FENeRF in this comparison as its produced geometries have different resolutions of MaTe3D and IDE-3D, making it an unfair comparison. MaTe3D outperforms IDE-3D by large margins in terms of Chamfer-L1 (approximately 1/5 of IDE-3D) and normal consistency (approximately two times of IDE-3D), indicating its superior performance in integrity and compositionality.

5.5 Edit Results

| CLIPScore | FFHQ | AFHQ |

|---|---|---|

| LENeRF stylesdf | 0.612 | 0.512 |

| FENeRF+SC eg3d | 0.729 | / |

| IDE-3D+SC ide3d | 0.762 | / |

| MaTe3D | 0.791 | 0.732 |

Comparison with Text-based Method. In Fig. 5, we show the results of text-based editing.When using CLIP for text-based editing, LENeRF (unoffical reproduction) struggles to produce diverse images, and suffers from insufficient expressive ability and scalability. Although FENeRF+StyleCLP is able to generate images that match the text, other attributes have been unexpected changed. Like FENeRF+StyleCLP, IDE-3D+StyleCLIP suffers from a similar issue that the model tends to generate images based on text rather than the mask. Our method produces high-quality textures that are faithful to the text prompt and meanwhile maintain structural stability.

We report the CLIPScore clipscore values in Tab. 3, which replies the correlation between edited images and input texts. We achieve the best ClIPscore () comparing with LENeRF (), FENeRF+StyleCLIP (), and IDE-3D+StyleCLIP (), respectively.

Comparison with Mask-guided Text-based Method. As shown in Fig. 6, we present qualitative comparisons of our method with existing methods for both mask and text. Our results show high-quality performance in both the mask and text. However, all baselines struggle to effectively utilize both information of mask and text. Some methods prioritize preserving the mask at the expense of ignoring the text (e.g., FENeRF+StyleCLIP with prompt ’blue sweater’), while others focus solely on matching the text and disregard the mask (e.g., IDE-3D+StyleCLIP with prompt ’Christmas hat, Afro hair’). This highlights a lack of balance between the roles of mask and text during synchronous editing within baseline methods.

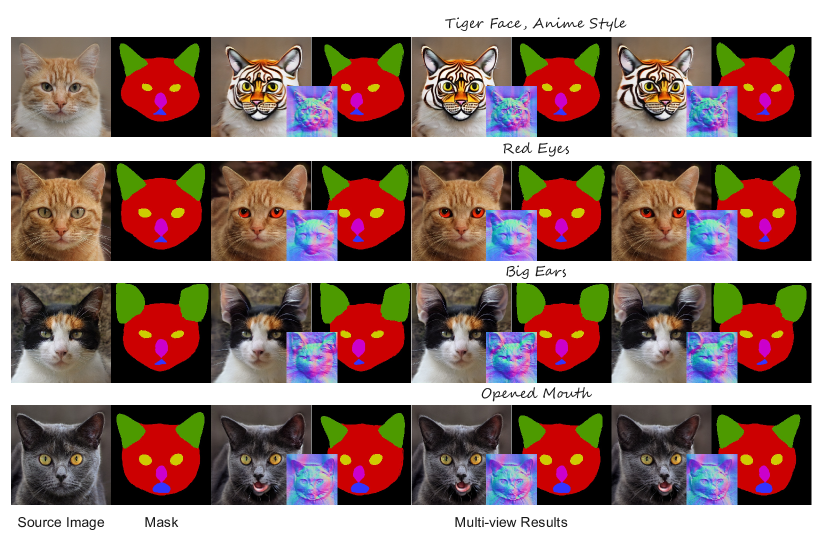

Editing Results on CatMask-HQ. We provide more editing results in Fig. 7. Our method can synthesize stable and anti-aliasing textures, as well as maintain view consistency by synthesizing anti-degradation geometries.

5.6 Ablation Study

Local SDF Representations. We conduct an ablation study on the local SDF representation. As depicted in Fig. 4, Our model (w/o SDFs) generates photorealistic images and semantic-aware masks, but suffers from unsatisfactory geometries. Benefiting from our generator architecture, the results without SDFs have superior geometries compared to IDE-3D ide3d , but errors still exist in modeling eyeglasses (incomplete edges) and nose (longer and deeper than normal). These errors occur due to a loss of connection between decompositional facial components. The poor geometry also leads to inaccurate masks.

We randomly sample images and masks, then use a pre-trained face segmentation model to predict the ground-truth of masks. We find that using both global and local SDF representations can achieve a higher mIoU of , while ’w/o SDF’ only reaches . Our method significantly improves mask accuracy with the global and local SDFs and their corresponding geometric constraints. Additionally, we observe that using global and local SDF representations helps maintain view-consistency during the editing phase (Fig. 8). Meanwhile, ignoring global and local representations causes inconsistent results, particularly when adding facial accessories during editing.

Consistency Loss and Network Architecture. As depicted in Fig. 9, we perform an ablation study on the proposed consistency loss and network architecture. Our findings suggest that omitting the density consistency loss leads to a significant degradation in image quality, severely compromising the overall fidelity of the whole image. Additionally, omitting the SDF consistency loss results in aliasing edges in the semantic mask and requires more iterations to converge. Incorporating dual tri-plane generation improves image quality (see Tab. 1) and provides more details in specific areas, such as glasses in face (see Fig. 9).

Conditional Distillation on Geometry and Texture. As shown in Fig. 10, we conduct an ablation study on our proposed blending Conditional Distillation on Geometry and Texture (CDGT). Our observations indicate that without Condition Updating Scheme in CDGT, the editing phase loses 3D-aware control, leading to unstable textures, and noticeable blurring. Without gradient combination, image synthesis in novel views results in severe artifacts due to 3D degradation caused by mismatch between the underlying geometry and texture. The strategy proposed in TADA tada can partially alleviate this issue. However, the results still suffer from unstable texture and lead to hollow geometric structures. By incorporating gradient combination via random blend, we introduce randomness into the fusion process. This random blending boosts the resilience of edited results by preventing overfitting on specific patterns while enhancing its capability to handle challenging discrete attributes such as hats and glasses.

6 Applications

6.1 Real Portrait Editing

Our MaTe3D allows for high-fidelity 3D inversion and mask-guided text-based editing on real portrait images. We start by projecting the input image into the latent space using PTI pti , and then use MaTe3D for mask-guided and text-based editing. The results are displayed in Fig. 11, demonstrating that our approach can achieve high-quality 3D portrait editing on real images.

6.2 Out-of-Domain Editing

Editing 3D faces with out-of-domain attributes is a challenging task due to the limitations of pre-trained generator training data. In our research, MaTe3D facilitates Out-of-Domain Editing by creating a 3D face based on a target mask and text prompt. As shown in Fig. 12, our approach enables precise modifications with out-of-domain textures, while producing a convincing geometries for consistent visualization.

6.3 Face Swapping

Face swapping is a crucial research area in computer vision with extensive applications in entertainment industry 3dswap . Our method can also perform face swap editing with celebrities. As shown in Fig. 13, with a prompt of a celebrity, MaTe3D can transfer their face to the source image while keeping the remaining regions unchanged.

7 Conclusion

In this study, we propose MaTe3D, a novel editing pipeline to support mask-guided and text-based 3D-aware portrait editing with high quality and stability. Extensive experiments demonstrated our significant performance over the-state-of-the-art methods. The proposed CatMask extends the mask-based editing domain from face to cat face, and provide an additional dataset to verify the feasibility, extensibility, and generalization of the model.

Limitation As previously mentioned, MaTe3D has a natural ability to enable mask-guided text-based editing and has surpassed its baselines. However, there are still some challenging cases that need to be addressed: 1) the image quality may slightly deteriorate when learning better geometry through SDFs, and 2) Our method is more time-consuming than baseline methods due to the optimized strategy. Much more efficient solutions need to be explored in our future work.

Ethical Concerns MaTe3D can reconstruct the representation of a given face and then launch mask-guided and text-based editing. A series of 3D-consistent images can thus be produced by taking advantage of MaTe3D, which means the great potential to empower areas like artistic creation and industrial design. However, the synthesized images can be wrongly identified by face recognition systems with high probability. Besides, downstream applications like single-view 3D inversion and mask-guided and text-based editing can be misused for generating edited imagery of real people. Such misusage put cyberspace in danger inevitably. Overall, the use of this technology needs to be more careful and better regulated.

Acknowledgments

This work was supported by OpenBayes.

References

- (1) T. Karras, S. Laine, and T. Aila, “A Style-Based Generator Architecture for Generative Adversarial Networks,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2019, pp. 4401–4410.

- (2) T. Karras, S. Laine, M. Aittala, J. Hellsten, J. Lehtinen, and T. Aila, “Analyzing and Improving the Image Quality of StyleGAN,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2020, pp. 8107–8116.

- (3) T. Karras, M. Aittala, S. Laine, E. Härkönen, J. Hellsten, J. Lehtinen, and T. Aila, “Alias-Free Generative Adversarial Networks,” in Advances in Neural Information Processing Systems, 2021, pp. 852–863.

- (4) K. Zhou, X. Zhu, D. Gao, K. Lee, X. Li, and X.-c. Yin, “SD-GAN: Semantic Decomposition for Face Image Synthesis with Discrete Attribute,” in Proceedings of the 30th ACM International Conference on Multimedia, 2022, pp. 2513–2524.

- (5) E. R. Chan, C. Z. Lin, M. A. Chan, K. Nagano, B. Pan, S. D. Mello, O. Gallo, L. Guibas, J. Tremblay, S. Khamis, T. Karras, and G. Wetzstein, “Efficient Geometry-aware 3D Generative Adversarial Networks,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2022, pp. 16 123–16 133.

- (6) R. Or-El, X. Luo, M. Shan, E. Shechtman, J. J. Park, and I. Kemelmacher-Shlizerman, “StyleSDF: High-Resolution 3D-Consistent Image and Geometry Generation,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2022, pp. 13 503–13 513.

- (7) J. Gu, L. Liu, P. Wang, and C. Theobalt, “StyleNeRF: A Style-based 3D Aware Generator for High-resolution Image Synthesis,” in Proceedings of the International Conference on Learning Representations, 2022.

- (8) P. Zhou, L. Xie, B. Ni, and Q. Tian, “CIPS-3D: A 3D-Aware Generator of GANs Based on Conditionally-Independent Pixel Synthesis,” arXiv preprint arXiv:2110.09788, 2021.

- (9) J. Zhang, K. Zhou, Y. Luximon, , P. Li, and T. Lee, “MeshWGAN: Mesh-to-Mesh Wasserstein GAN with Multi-Task Gradient Penalty for 3D Facial Geometric Age Transformation,” IEEE Transactions on Visualization and Computer Graphics, pp. 1–14, 2023 (Early Access).

- (10) J. Sun, X. Wang, Y. Zhang, X. Li, Q. Zhang, Y. Liu, and J. Wang, “FENeRF: Face Editing in Neural Radiance Fields,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2022, pp. 7672–7682.

- (11) J. Sun, X. Wang, Y. Shi, L. Wang, J. Wang, and Y. Liu, “IDE-3D: Interactive Disentangled Editing for High-Resolution 3D-aware Portrait Synthesis,” ACM Transactions on Graphics, vol. 41, no. 6, pp. 1–10, 2022.

- (12) J. Hyung, S. Hwang, D. Kim, H. Lee, and J. Choo, “Local 3D Editing via 3D Distillation of CLIP Knowledge,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2023, pp. 12 674–12 684.

- (13) C. Wang, M. Chai, M. He, D. Chen, and J. Liao, “CLIP-NeRF: Text-and-Image Driven Manipulation of Neural Radiance Fields,” in Proceedings of the Conference on Computer Vision and Pattern Recognition, 2022, pp. 3835–3844.

- (14) A. Radford, J. W. Kim, C. Hallacy, A. Ramesh, G. Goh, S. Agarwal, G. Sastry, A. Askell, P. Mishkin, J. Clark et al., “Learning Transferable Visual Models From Natural Language Supervision,” in Proceedings of the International Conference on Machine Learning, 2021, pp. 8748–8763.

- (15) B. Mildenhall, P. P. Srinivasan, M. Tancik, J. T. Barron, R. Ramamoorthi, and R. Ng, “NeRF: Representing Scenes as Neural Radiance Fields for View Synthesis,” in Proceedings of the European Conference on Computer Vision, 2020, pp. 405–421.

- (16) V. Sitzmann, J. Martel, A. Bergman, D. Lindell, and G. Wetzstein, “Implicit Neural Representations with Periodic Activation Functions,” in Advances in Neural Information Processing Systems, 2020, pp. 7462–7473.

- (17) K. Schwarz, Y. Liao, M. Niemeyer, and A. Geiger, “GRAF: Generative Radiance Fields for 3D-Aware Image Synthesis,” in Advances in Neural Information Processing Systems, 2020, pp. 20 154–20 166.

- (18) E. R. Chan, M. Monteiro, P. Kellnhofer, J. Wu, and G. Wetzstein, “pi-GAN: Periodic Implicit Generative Adversarial Networks for 3D-Aware Image Synthesis,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2021, pp. 5799–5809.

- (19) J. J. Park, P. Florence, J. Straub, R. Newcombe, and S. Lovegrove, “DeepSDF: Learning Continuous Signed Distance Functions for Shape Representation,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2019, pp. 165–174.

- (20) K. Jiang, S.-Y. Chen, F.-L. Liu, H. Fu, and L. Gao, “NeRFFaceEditing: Disentangled Face Editing in Neural Radiance Fields,” in Proceedings of the ACM SIGGRAPH Asia, 2022, pp. 1–9.

- (21) T. Ma, B. Li, Q. He, J. Dong, and T. Tan, “Semantic 3D-aware Portrait Synthesis and Manipulation Based on Compositional Neural Radiance Field,” in Proceedings of the AAAI Conference on Artificial Intelligence, 2023, pp. 1878–1886.

- (22) R. Liu, P. Zheng, Y. Wang, and R. Ma, “3D-SSGAN: Lifting 2D Semantics for 3D-Aware Compositional Portrait Synthesis,” arXiv preprint arXiv:2401.03764, 2024.

- (23) K. Song, L. Han, B. Liu, D. Metaxas, and A. Elgammal, “StyleGAN-Fusion: Diffusion Guided Domain Adaptation of Image Generators,” in Proceedings of the IEEE Conference on Applications of Computer Vision, 2024, pp. 5453–5463.

- (24) H. Zhang, T. DAI, Y. Xu, Y.-W. Tai, and C.-K. Tang, “FaceDNeRF: Semantics-Driven Face Reconstruction, Prompt Editing and Relighting with Diffusion Models,” in Advances in Neural Information Processing Systems, 2024, pp. 55 647–55 667.

- (25) A. Haque, M. Tancik, A. A. Efros, A. Holynski, and A. Kanazawa, “Instruct-NeRF2NeRF: Editing 3D Scenes with Instructions,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2023, pp. 19 740–19 750.

- (26) T. Brooks, A. Holynski, and A. A. Efros, “Instructpix2pix: Learning to Follow Image Editing Instructions,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2023, pp. 18 392–18 402.

- (27) O. Gordon, O. Avrahami, and D. Lischinski, “Blended-NeRF: Zero-Shot Object Generation and Blending in Existing Neural Radiance Fields,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2023, pp. 2941–2951.

- (28) B. Poole, A. Jain, J. T. Barron, and B. Mildenhall, “DreamFusion: Text-to-3D using 2D Diffusion,” in Proceedings of the International Conference on Learning Representations, 2022.

- (29) J. Zhuang, C. Wang, L. Lin, L. Liu, and G. Li, “DreamEditor: Text-Driven 3D Scene Editing with Neural Fields,” in Proceeding of the ACM SIGGRAPH Asia, 2023, pp. 1–10.

- (30) Y. Li, Y. Dou, Y. Shi, Y. Lei, X. Chen, Y. Zhang, P. Zhou, and B. Ni, “FocalDreamer: Text-driven 3D Editing via Focal-fusion Assembly,” arXiv preprint arXiv:2308.10608, 2023.

- (31) A. Mikaeili, O. Perel, M. Safaee, D. Cohen-Or, and A. Mahdavi-Amiri, “SKED: Sketch-guided Text-based 3D Editing,” in Proceedings of the IEEE International Conference on Computer Vision, 2023, pp. 14 607–14 619.

- (32) X. Han, Y. Cao, K. Han, X. Zhu, J. Deng, Y.-Z. Song, T. Xiang, and K.-Y. K. Wong, “HeadSculpt: Crafting 3D Head Avatars with Text,” in Advances in Neural Information Processing Systems, 2023, pp. 4915–4936.

- (33) H. Liu, X. Wang, Z. Wan, Y. Shen, Y. Song, J. Liao, and Q. Chen, “HeadArtist: Text-conditioned 3D Head Generation with Self Score Distillation,” arXiv preprint arXiv:2312.07539, 2023.

- (34) L. Zhang, A. Rao, and M. Agrawala, “Adding Conditional Control to Text-to-Image Diffusion Models,” in Proceedings of the IEEE International Conference on Computer Vision, 2023, pp. 3836–3847.

- (35) T. Shen, J. Gao, K. Yin, M.-Y. Liu, and S. Fidler, “Deep Marching Tetrahedra: a Hybrid Representation for High-Resolution 3D Shape Synthesis,” in Advances in Neural Information Processing Systems, 2021, pp. 6087–6101.

- (36) L. Yariv, J. Gu, Y. Kasten, and Y. Lipman, “Volume Rendering of Neural Implicit Surfaces,” in Advances in Neural Information Processing Systems, 2021, pp. 4805–4815.

- (37) Q. Wu, X. Liu, Y. Chen, K. Li, C. Zheng, J. Cai, and J. Zheng, “Object-Compositional Neural Implicit Surfaces,” in Proceedings of the European Conference on Computer Vision, 2022, pp. 197–213.

- (38) T. Liao, H. Yi, Y. Xiu, J. Tang, Y. Huang, J. Thies, and M. J. Black, “Tada! Text to Animatable Digital Avatars,” arXiv preprint arXiv:2308.10899, 2023.

- (39) D. P. Kingma and J. Ba, “Adam: A Method for Stochastic Optimization,” in Proceeding or the International Conference on Learning Representations, 2015.

- (40) X. Zhang, Z. Zheng, D. Gao, B. Zhang, P. Pan, and Y. Yang, “Multi-View Consistent Generative Adversarial Networks for 3D-aware Image Synthesis,” in Proceedings of the Conference on Computer Vision and Pattern Recognition, 2022, pp. 18 450–18 459.

- (41) M. Shin, Y. Seo, J. Bae, Y. S. Choi, H. Kim, H. Byun, and Y. Uh, “BallGAN: 3D-aware Image Synthesis with a Spherical Background,” in Proceedings of the IEEE International Conference on Computer Vision, 2023, pp. 7268–7279.

- (42) C.-H. Lee, Z. Liu, L. Wu, and P. Luo, “MaskGAN: Towards Diverse and Interactive Facial Image Manipulation,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2020, pp. 5549–5558.

- (43) Y. Chen, Q. Wu, C. Zheng, T.-J. Cham, and J. Cai, “Sem2NeRF: Converting Single-View Semantic Masks to Neural Radiance Fields,” in Proceedings of the European Conference on Computer Vision, 2022, pp. 730–748.

- (44) K. Deng, G. Yang, D. Ramanan, and J.-Y. Zhu, “3D-aware Conditional Image Synthesis,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2023, pp. 4434–4445.

- (45) L. Mescheder, M. Oechsle, M. Niemeyer, S. Nowozin, and A. Geiger, “Occupancy networks: Learning 3D Reconstruction in Function Space,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2019, pp. 4460–4470.

- (46) M. Heusel, H. Ramsauer, T. Unterthiner, B. Nessler, and S. Hochreiter, “GANs Trained by a Two Time-Scale Update Rule Converge to a Nash Equilibrium,” in Advances in neural information processing systems, 2017.

- (47) M. Bińkowski, D. J. Sutherland, M. Arbel, and A. Gretton, “Demystifying MMD GANs,” in Proceedings of the International Conference on Learning Representations, 2018.

- (48) J. Deng, J. Guo, N. Xue, and S. Zafeiriou, “Arcface: Additive angular margin loss for deep face recognition,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2019, pp. 4690–4699.

- (49) M. M. A. Morsy, A. Brunton, and P. Urban, “Shape Dithering for 3D Printing,” ACM Transactions on Graphics, vol. 41, no. 4, pp. 1–12, 2022.

- (50) D. Shepard, “A Two-dimensional Interpolation Function for Irregularly-spaced Data,” in Proceedings of the ACM National Conference, 1968, pp. 517–524.

- (51) J. Hessel, A. Holtzman, M. Forbes, R. Le Bras, and Y. Choi, “CLIPScore: A Reference-free Evaluation Metric for Image Captioning,” in Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing, 2021, pp. 7514–7528.

- (52) D. Roich, R. Mokady, A. H. Bermano, and D. Cohen-Or, “Pivotal Tuning for Latent-based Editing of Real Images,” ACM Transactions on Graphics, vol. 42, no. 1, pp. 1–13, 2022.

- (53) Y. Li, C. Ma, Y. Yan, W. Zhu, and X. Yang, “3d-aware face swapping,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2023, pp. 12 705–12 714.