Matching Neuromorphic Events and Color Images via Adversarial Learning

Abstract

The event camera has appealing properties: high dynamic range, low latency, low power consumption and low memory usage, and thus provides complementariness to conventional frame-based cameras. It only captures the dynamics of a scene and is able to capture almost “continuous” motion. However, different from frame-based camera that reflects the whole appearance as scenes are, the event camera casts away the detailed characteristics of objects, such as texture and color. To take advantages of both modalities, the event camera and frame-based camera are combined together for various machine vision tasks. Then the cross-modal matching between neuromorphic events and color images plays a vital and essential role. In this paper, we propose the Event-Based Image Retrieval (EBIR) problem to exploit the cross-modal matching task. Given an event stream depicting a particular object as query, the aim is to retrieve color images containing the same object. This problem is challenging because there exists a large modality gap between neuromorphic events and color images. We address the EBIR problem by proposing neuromorphic Events-Color image Feature Learning (ECFL). Particularly, the adversarial learning is employed to jointly model neuromorphic events and color images into a common embedding space. We also contribute to the community N-UKbench and EC180 dataset to promote the development of EBIR problem. Extensive experiments on our datasets show that the proposed method is superior in learning effective modality-invariant representation to link two different modalities.

1 Introduction

The event camera is bio-inspired, event-driven, time-based neuromorphic vision sensor, which senses the world on a radically different principle [15]. Instead of capturing static images of the scene at a constant acquisition rate like the conventional frame-based camera, the event camera works asynchronously to measure the brightness changes and reports an event once the change exceeds a threshold. It casts away the concepts like exposure time and frame that have dominated the computer vision community over decades, thus enabling to provide compensation to the shortcomings of the frame-based camera. For example, the frame-based camera represents motion by capturing a series of still frames, which results in the loss of information between frames. Instead, the event camera represents motion by capturing an event stream, which enables to capture almost “continuous” motion in frame-free mode. Beyond that, the event camera only records what changes, which drastically reduces power, data storage and computational requirements. Such unique advantages drive the event-based vision to disrupt the current technology in fields such as automotive vehicles, security and surveillance [6, 22, 31, 33].

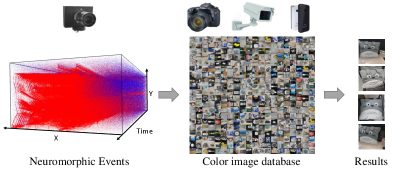

However, unlike the frame-based camera encoding illumination intensity to depict the object/scene as it is in real world, the event camera encodes only brightness changes. It makes the detailed characteristics of object/scene that recorded in the event camera, such as texture information and color information, still have to be obtained from the traditional frame-based camera. As a consequence, we are encountering the situation where the event camera and frame-based camera are combined together for various machine vision tasks, such as a distributed computer machine vision system composed of these two sensors for surveillance and monitoring. Then how to match the interested object from these two modalities of data becomes critical. In this paper, to study the cross-modal matching between neuromorphic events and color images, the problem of Event-Based Image Retrieval (EBIR) is proposed. Given an event stream as query, the aim is to retrieve the color images describing the same objects from an image database, as shown in Fig. 1. This is an extremely challenging problem due to the following aspects: (i) The output of event camera is not a sequence of frames but a sparse, asynchronous stream of events, which makes the commonly used methods for conventional frame-based camera are not directly applicable. (ii) A large modality gap exists between neuromorphic events and color images due to the inconsistent distributions, which arises many difficulties to infer the joint distribution or learn the common representations. (iii) A fit-for-purpose dataset is lacking. None of existed datasets can provide an evaluation for EBIR task.

We address all above challenges by proposing an EBIR framework that we refer to as neuromorphic Events-Color image Feature Learning (ECFL) and introducing two EBIR datasets, i.e., N-UKbench and EC180. For the framework, we first encode the neuromorphic events into understandable tensors, i.e., event image. Then we propose a deep adversarial learning architecture to align the feature distributions of the two modalities of data. Concretely when an event image/color image is embedded in the joint space, the embedding vector is fed into a modality discriminator which acts as an adversary of feature generators. The feature generators learn modality-invariant features by confusing the discriminator while the discriminator aims at maximizing its ability to identify modalities. For the datasets, N-UKbench is converted from existed frame-based instance retrieval dataset UKbench [20], in which 2550 event streams and 7650 color images are evenly distributed in 2550 instances. And to further verify the effectiveness of proposed method for real-world data, EC180 is collected by finding different objects to record, in which 180 event streams and 900 color images are evenly distributed in 180 instances.

To sum up, the main contributions of this paper are:

-

•

The formulation of a new Event-Based Image Retrieval (EBIR) problem to study the cross-modal matching, requiring that retrieve images of a particular object given an event stream as query.

-

•

A new method called neuromorphic Events-Color image Feature Learning (ECFL) for performing EBIR, which generates representations that are discriminating among instances and invariant with respect to modalities to capture correlations across neuromorphic events and color images.

-

•

The collection of N-UKbench and EC180 dataset specifically for EBIR to advance the problem. Dataset website: (double-blind review).

2 Related Work

2.1 Cross-modal Retrieval

Data acquired from heterogeneous sensors constitutes our digital modern life. As multi-modal data grows rapidly, cross-modal retrieval has drawn great attention due to its widespread application prospects, such as multi-sensor information fusion, object recognition and scene matching. It aims to take one modality of data to retrieve relevant data of another modality. Till now, multi-modal retrieval has been widely studied, including retrieving infrared images with visible images [14], retrieving videos with texts [28], retrieving photos with sketches [30], etc. The major concerns are how to perform the cross-modal correlation modeling to learn common representations for various modalities of data so that the similarity between different modalities can be measured.

There is a significant amount of work in learning a common subspace shared by different modalities of data. Most studies extract the common features which are robust in multi-modalities of data and achieve multi-modal matching by comparing the similarities between the features. Edge features are widely used since there is usually a certain relationship between the edges in different modalities of data [24]. Besides, some existed descriptors developed in single modality like SIFT [16] are optimized and improved as feature representation methods for cross-modalities. Although there are several ways to extract features for neuromorphic events and color images, the methods developed on neuromorphic events is not applicable to color images and vice versa. Another line is to project the features of different modalities to a common feature subspace, in which the similarities are measured. Due to the recent progress of deep learning, the Convolutional Neural Networks (CNN) acting as high-level feature extractors can generate modality-invariant representations, which can be categorized into two types: the classification-based network and the verification-based network [34]. The former is trained to classify architectures into pre-trained categories and the learned embedding, e.g., FC7 in Alexnet, is usually employed as cross-modality feature. The latter may use a siamese network and employ the contrastive loss to learn common representations.

Although cross-modal matching among data of multi-sensors is a hot spot in the field of computer vision, to our best knowledge, there is no studies about cross-modal matching between neuromorphic events and color images yet due to the novelty of event-based vision field. Owing to the unconventional output of event camera and its inconsistent encoding content with conventional camera, existing cross-modal retrieval methods fail to tackle the EBIR task. In this work, we embed the adversarial training strategy into the process of representation learning to bridge the gap between neuromorphic events and color images.

2.2 Event-based Datasets

One of the key barriers towards EBIR task is the scarcity of well-annotated datasets. Prior to our work, several datasets for development and evaluation of various event-based methods have already been collected [6]. For example, N-Caltech101 is an event-stream dataset for object recognition which is converted from existing computer vision static image dataset Caltech101 [5] using an actuated pan-tilt camera platform [21]; DET is a high-resolution dynamic vision sensor dataset for lane extraction [4]; and the MVSEC dataset is used to perform a variety of 3D perception tasks, such as feature tracking, visual odometry, and stereo depth estimation [35]. However, none of them are designed for the EBIR task.

Since a limited number of datasets are available in the community of neuromorphic vision, it is very difficult to develop a neuromorphic vision dataset by crawling data directly from the web like developing a computer vision dataset. Inspired by the methods of creating datasets in [21, 12], which convert existing computer vision static image datasets into neuromorphic vision datasets, we convert the popular frame-based instance retrieval dataset UKbench [20] to a dataset suitable for EBIR task, named as “N-UKbench”. Moreover, the neuromorphic events converted from static images may be different from that obtained by photographing the real objects. To provide access to evaluating algorithms applied to real scenes, we further provide EC180 dataset, which is collected by finding different objects to record. Such two datasets are important to exploit methods of cross-modal matching between neuromorphic events and color images.

3 Event Representation

Event camera works radically different from the frame-based camera. Pixels in an event camera work asynchronously to respond the change of their log photocurrent . Here is the photocurrent (brightness) of the event at time that locates at , and indicates the logarithmic operation of the photocurrent. When the brightness change since last event at the pixel with duration :

| (1) |

reaches threshold (), the event camera will generate a new event with polarity indicating the increase (ON events) or decrease (OFF events) of brightness. Then, a stream of events can be defined as:

| (2) |

where , and is the location, trigging time and polarity of the event in the stream.

The output of event camera is a discrete spatial-temporal event stream (as shown in Fig 1), which is not able to be processed by methods developed on the conventional frame-based camera. Thus we convert the event stream into a fix-sized tensor representation. To testify the robustness of proposed model, we adopt three event representation methods including event stacking, time surface, and event frequency.

3.1 Event Stacking (ES)

As the most straightforward way to encode an event stream into a tensor, the event stacking has been applied in many tasks including steering prediction [18], visual-inertial odometry [25], and video generation [29], etc. Unlike previous stacking methods, we stack all events ignoring their polarity into one tensor to prevent the context from being separated into two dispersed part. Thus we can integrate the events within the interval into a tensor using:

| (3) |

where is the Kronecker delta, and is the pixel position of an event.

3.2 Time Surface (TS)

The time surface is a spatial-temporal representation of asynchronous event stream. It has been adopted in tasks like object classification [10] and action recognition [23]. To generate a time surface of an event , we need to first generate the Surface of Active Events (SAE) [1]:

| (4) |

where is the SAE around the incoming event for the pixels in the square, centered at the pixel position . And is the pixel in that square, , . Then we can apply the exponential decay kernel with time constant to generate a dynamic spatial-temporal time surface :

| (5) |

3.3 Event Frequency (EF)

Besides above representations, we can also analyze events from frequency domain. The event frequency [19] counts the event occurrence at each pixel within a range as activation frequency to encode the event stream into a tensor. Since the noise in an event camera has relative low frequency, the representation is more friendly to noise tolerance. The event frequency can be generated by using:

| (6) |

where is the total number of the activated events at pixel within a time interval .

4 Cross-modal feature learning

4.1 Overview

We focus on the task of neuromorphic event and color image bimodal representation learning. We divide the event stream in 90 ms intervals, where each bin is converted to an “event image”. To make full use of the temporal information of the neuromorphic events, we further divide each bin into three consecutive parts. Each part is processed by the event representation method and acts as a channel of the event image. Let be the set of all possible instances in a given dataset; and be the set of event images and color images respectively, where is an event image, is an original color image, are their corresponding instance-level object identity label, and are the total number of event images and color images respectively. As event images and color images clearly have different statistical properties and follow unknown distributions, they fail to be directly compared against each other. Our goal is to learn a common subspace in which the similarity between data items from these two modalities (neuromorphic events and color images) can be assessed directly, i.e., learned features have the same or very similar distributions in the two modalities of data.

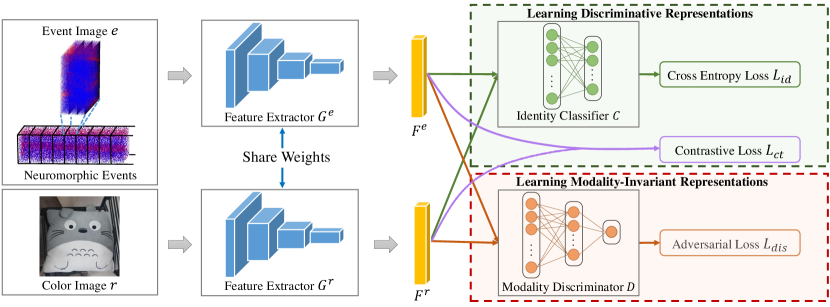

Towards this goal, our proposed ECFL method described in Fig. 2 embeds adversarial training strategy into the process of representation learning, so that the learned features combine discriminativeness and modality-invariance. Specifically, the aim of our framework is to learn two feature extractors and which respectively map the event image and color image into a common embedding space. The cost function which guides the learning process to provide the embedding with the desired properties contains two parts. The first part learns features that are distinguishable for different instances, including cross entropy loss and contrastive loss. The cross entropy loss is calculated by the output of identify classifier , which takes the feature representation as input and predicts the object identity label. It makes sure that the learned embedding space preserves instance-discriminative information. The identify classifier is composed of a softmax layer, whose number of output nodes equals to the number of instances . The contrastive loss is calculated directly in the embedding space, which ensures that the distance between the event image and color image is minimized if they denote the same instance and is greater than a margin value if they denote two different instances. The second part utilizes the adversarial training strategy to minimize the distribution distance between data items from two modalities, such that the feature distributions are well aligned in the embedding space, where a modality discriminator acts as an adversary. It predicts for event image and for color image. We regard a representation as a modality-invariant feature when the modality discriminator fails to discriminate modalities they belong to. The modality discriminator is composed of two FC layers and a sigmoid function.

The architecture of feature extractors and are based on that of ResNet [8], which has enough capacity of representations considering large margin of statistical properties between the event images and color images. The weights of the two feature extractors are shared everywhere. The weight-sharing constraint allows to align the distributions of two modalities from the very beginning of the feature extraction. Besides, there is another strategy: without any weight-sharing. It learns features of two modalities independently and relies entirely on the cost function to guide the alignment of them. But it can preferably deal with the heterogeneous nature of the input. Both strategies are evaluated in our experiment.

4.2 Model Learning and Deployment

The feature extractors learn representations that are invariant to different modalities by constantly trying to outsmart the modality discriminator, which is working to become a better detective. At the same time, they learn representations that are distinguishable for different instances. Therefore, the learning stage contains two steps: (i) Update the modality discriminator by minimizing the loss of the modality discriminator; (ii) Update the feature extractors and by maximizing the loss of the modality discriminator while minimizing the cross entropy loss and contrastive loss. Let us consider a training batch including event image-color image pairs, where and . The objective of the modality discriminator is to predict the modality of the input given its representation and can be written as:

| (7) |

where and . The objective of the feature generator is now to defeat the modality discriminator, i.e., the modality discriminator should not be able to predict the modality of the input given its representation. In addition, it has to minimize the cross entropy loss and contrastive loss. The complete objective is then:

| (8) |

where is the cross entropy loss for predicting the object identity label:

| (9) |

| (10) |

is the contrastive loss, whose goal is to make the distance between an event image and a color image of the same instance closer than that the one of two different instances:

| (11) |

| (12) |

if and denote the same instance else and is the margin. , and control the contribution of the three items respectively. Suppose , and are the parameters of feature generators, identity classifier and modality discriminator, respectively. We apply an alternating gradient update scheme similar to the one described in [7] to seek , and , as shown in Algorithm 1.

After learning, the modality discriminator and the identity classifier are stripped off during testing. We use the feature generators to map the event images and color images to the d-dimensional feature representations. Thus the similarity of neuromorphic events and color images can be simply measured by Euclidean distance. Color images in database are ranked according to the similarity, and the ones with similarity at the top are set as matching results.

5 N-UKbench and EC180 Dataset

Datasets are fundamental tools to facilitate adoption of event-driven technology and advance its research [6]. A good dataset should meet the requirements of algorithm prototyping, deep learning and algorithm benchmarking. In this paper, we contribute N-UKbench and EC180 dataset specifically for EBIR task.

N-UKbench is converted from an existed instance retrieval dataset UKbench [20]. UKbench consists of 2550 instances, where each instance includes 4 color images under various angles, illuminations, translations, etc. To make the dataset applicable to our EBIR task, we convert 1 image of each instance to 1 event stream and the rest of 3 color images depicting the same instance are remained. We use the method in [12] to convert the static image to event stream, i.e., using the CeleX-V [3] event camera to record the screen on which image is moving. the CeleX-V [3] is the current highest resolution event camera, whose resolution is pixels.

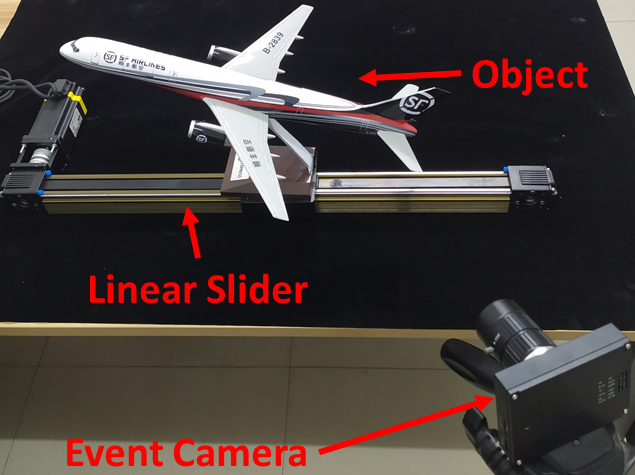

EC180 dataset is collected by photographing real objects. There are overall 180 instances in it. Since neuromorphic events can only be generated when there is relative motion between the event camera and the object, most recordings in EC180 are conducted in the setup shown in Fig. 3a. The linear slider will drive the object to move so that the event camera can “see” the object. There are also several objects that are recorded by moving the event camera. The color images in EC180 dataset are captured with different background and perspective. Fig. 3b gives three samples in our EC180 dataset, where each sample contains 1 event stream and 5 color images.

6 Experiments

6.1 Experiment Settings

We use the Pytorch to train our models. The modality discriminator is trained with Adam [9], using a learning rate of 0.002 and . The feature generators and the identity classifier are trained with SGD [2], using a learning rate of 0.001 and a momentum of 0.9. The weighting factors , and are set to 1, 0.01, 0.01. The maximum epoch of training iterations is set to 20.

We randomly select 2040 instances from N-UKbench as training set and the remaining 510 instances are for evaluation. We rotate each event image/color image to 9 angles ranging from to and double the numbers by flipping them horizontally. All event images and color images are resized to the same size of pixels.

6.2 Performance Analysis

| mAP | acc.@1 | acc.@3 | |

|---|---|---|---|

| SIFT (ES) | 0.0491 | 0.0373 | 0.0346 |

| SIFT (TS) | 0.0559 | 0.0554 | 0.0415 |

| SIFT (EF) | 0.0550 | 0.0426 | 0.0364 |

| LSS (ES) | 0.0394 | 0.0289 | 0.0230 |

| LSS (TS) | 0.0310 | 0.0157 | 0.0201 |

| LSS (EF) | 0.0247 | 0.0172 | 0.0163 |

| ECFL (ES, ResNet-18) | 0.5676 | 0.5961 | 0.4913 |

| ECFL (TS, ResNet-18) | 0.5683 | 0.5931 | 0.4848 |

| ECFL (EF, ResNet-18) | 0.5724 | 0.6069 | 0.4935 |

| ECFL (ES, ResNet-50) | 0.6463 | 0.6784 | 0.5691 |

| ECFL (TS, ResNet-50) | 0.6419 | 0.6843 | 0.5585 |

| ECFL (EF, ResNet-50) | 0.6651 | 0.6892 | 0.5881 |

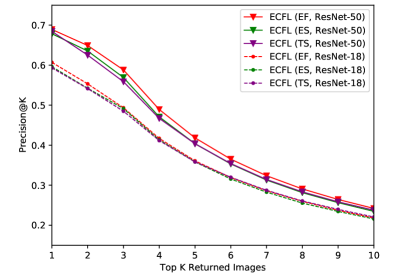

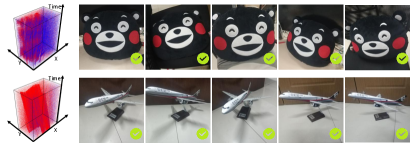

The purpose of this paper is to retrieve images of a particular object given an event stream as query. To the best of our knowledge, there are no studies about cross-modal matching between neuromorphic events and color images. Nevertheless, we still compare ECFL with Scale Invariant Feature Transform (SIFT) [16] and Local Self-Similarity (LSS) [26] because the event cluster in each stream forms the shape of the object. SIFT is the most representative in describing the structure information. We employ SIFT in combination with spatial pyramid [11] to describe both event images and color images and measure the similarity by histogram intersection. LSS ia able to capture shape features of a large image region and it is suitable for images with complex radiometric differences, which is widely adopted to multi-modal image matching, such as optical-to-SAR image matching [13] and sketch-to-image matching [26]. We use LSS with BoW [27] to describe event images and color images and measure the similarity by Euclidean distance. Tab. 1 shows the mean average precision (mAP) and the precision of top K retrieval results (acc.@K, K=1,3) of SIFT, LSS and ECFL while employing different event representations. Fig. 4 shows the precision of top K retrieval results of ECFL and Fig. 5a shows examples of retrieval results of ECFL using event frequency as representation. For each sample, the top 5 retrieved results are shown in each row.

It is shown that our proposed ECFL method (for each event representation) performs the best. It is able to bridge the large gap between neuromorphic events and color images and return the true matches. SIFT and LSS descriptors performs poorly for the novel EBIR task. Because the event image is different from the color image, it is produced by brightness changes, which results in the poor distribution consistency of their features. As shown in Fig. 4, the retrieval performance of the three event representations is very close. Among them, the event frequency performs slightly better. Comparing the other two event representation methods, the event frequency can significantly filter out the noise caused by the sensor since the occurrence frequency of noise at a particular pixel is low. Our proposed ECFL method, including ResNet-18 and ResNet-50 as backbone, produce a significant performance improvement. ResNet-50 architecture performs better than ResNet-18. It is expected that deeper architecture have more parameters, and can therefore better cope with the inconsistent distribution of features.

| mAP | acc.@1 | acc.@3 | |

|---|---|---|---|

| ECFL (ES, ResNet-50, NWS) | 0.6284 | 0.6456 | 0.5539 |

| ECFL (TS, ResNet-50, NWS) | 0.6293 | 0.6676 | 0.5462 |

| ECFL (EF, ResNet-50, NWS) | 0.6303 | 0.6480 | 0.5564 |

6.3 Impact of Different Weight-Sharing Strategies

It has been verified that the strategy of all weight-sharing is better suited for situations where two inputs are comparatively similar, e.g., image and sketch [32], while the strategy of no weight-sharing is better suited for situations where two inputs differ somewhat, e.g., image and text [32]. So, what about the neuromorphic events and color images? In this experiment, we compare two weight-sharing strategies described above. Tab. 2 shows the quantitative evaluation results of our proposed model with the strategy of no weight-sharing. It is easy to see that our ECFL method with all weight-sharing performs better than without any weight-sharing in aligning the feature distributions of two modalities of data. We consider that although the output of event camera is sparse and non-uniform spatiotemporal event signal, it is also a type of vision sensor. After converting the event stream into event images, the two modalities of data can be regarded as similar.

| mAP | acc.1 | acc.@3 | |

|---|---|---|---|

| ECFL (ES, ResNet-50, NTC) | 0.5501 | 0.5686 | 0.4796 |

| ECFL (TS, ResNet-50, NTC) | 0.5762 | 0.6029 | 0.4931 |

| ECFL (EF, ResNet-50, NTC) | 0.5727 | 0.5775 | 0.4917 |

6.4 Impact of temporal component

To make full use of the temporal component of the neuromorphic events, we propose a simple but effective approach to model the temporal component of the neuromorphic events, i.e., discretizing each bin into three consecutive parts and treating these parts as different channels in the first convolutional layer. To examine whether temporal component helps the improvement of retrieval performance, in this experiment, we show the quantitative evaluation results of our model with no use of the temporal component. We simply convert each bin into an event image and feed it into the network. Tab. 3 presents the results. We can clearly see that the performance of discarding temporal information is poorer than that of preserving temporal information. We consider supplementary details can be observed along the time axis. By discretizing each fixed time interval into consecutive parts, we can preserve more details and avoid them being offset by each other.

6.5 Impact of Adversarial Learning

Here, we investigate the contribution of adversarial learning. In our proposed ECFL model, we use a modality discriminator as an adversary of feature generators. We consider that the feature generators learn modality-invariant features by confusing the discriminator while the discriminator aims at maximizing its ability to identify modalities. In order to evaluate the contribution of adversarial learning, we remove the modality discriminator and train the feature extractors and identity classifier in one step. Tab. 4 shows the quantitative evaluation results of our model with no adversarial learning. Obviously, for each event representation method, when the modality discriminator is removed, the retrieval performance is degraded. Therefore, adversarial learning can narrow the modality gap between neuromorphic events and color images effectively.

| mAP | acc.1 | acc.@3 | |

|---|---|---|---|

| ECFL (ES, ResNet-50, NAL) | 0.6159 | 0.6574 | 0.5538 |

| ECFL (TS, ResNet-50, NAL) | 0.6243 | 0.6627 | 0.5482 |

| ECFL (EF, ResNet-50, NAL) | 0.6329 | 0.6696 | 0.5510 |

6.6 Experimental Results on Real Data

To verify the performance of our algorithm in real scenes, we also evaluate the proposed ECFL method on EC180 dataset. Considering the subtle differences between the neuromorphic events converted from the static image and the neuromorphic events recorded from the real object, our model may suffer performance degradation when applied to real scenes. To avoid it, we take the model trained on the converted N-UKbench dataset as the preliminary model and use the real EC180 dataset to fine-tune it. We randomly select 144 instances in EC180 as training set and the remaining 36 instances are as test set. Tab. 5 shows the quantitative results after fine-tuning and Fig. 5b shows examples of retrieval results using event frequency as representation. We can find that our model performs well for real scenes. The retrieval performance is somewhat worse than that on converted N-UKbench dataset. Because the color images in EC180 contain significant amounts of backgrounds and are captured from perspectives with large variants.

| mAP | acc.@1 | acc.@3 | |

|---|---|---|---|

| ECFL (ES, ResNet-50) | 0.5974 | 0.6111 | 0.5611 |

| ECFL (TS, ResNet-50) | 0.5960 | 0.6167 | 0.5463 |

| ECFL (EF, ResNet-50) | 0.6102 | 0.6389 | 0.5556 |

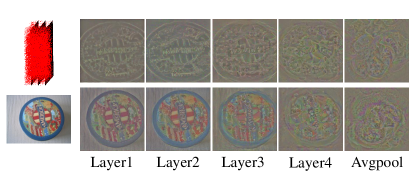

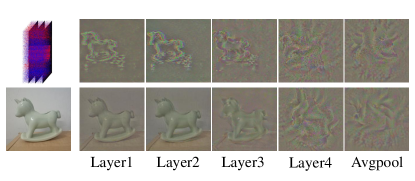

6.7 Feature Visualization

Fig. 6 shows the visualized results of different layers in feature generators. The visualization is achieved using the method in [17], which can invert the learned representation to reconstruct the image to analyze the visual information contained in it. The retrieval performance is often not determined by all the information of the input, but by the main target area. As the layer goes deeper, for the reconstruction of color images, the cluttered background is eliminated and the spatial structure of object gradually changes. It is proved that our model is able to extract the common features of neuromorphic events and color images at avgpool layer.

7 Conclusion

In this paper, we propose the Event-Based Image Retrieval (EBIR) task, which is a novel problem in event-based vision field. Along with the EBIR task, the neuromorphic Events-Color image Feature Learning (ECFL) method has been proposed to address it. We embed the adversarial training strategy into the process of representation learning, so that the learned features combine discriminativeness and modality-invariance. To give an evaluation to our model and stimulate the EBIR task, two event-based color image retrieval datasets, i.e., N-Ukbench and EC180, are collected and will be publicly available soon. Experimental results on our datasets show our model is capable to learn the cross-model representation of neuromorphic events and color images.

As an interesting direction for future work, we plan to extend this work to the case of more than two sensors. There are many possible combinations can be studied, such as event camera, RGB camera, infrared camera and LiDAR. Besides that, how to conduct single-modality-based neuromorphic event retrieval is also a direction that deserves researchers’ attention.

References

- [1] Ryad Benosman, Charles Clercq, Xavier Lagorce, Sio-Hoi Ieng, and Chiara Bartolozzi. Event-based visual flow. IEEE Transactions on Neural Networks and Learning Systems, 25(2):407–417, 2013.

- [2] Léon Bottou. Large-scale machine learning with stochastic gradient descent. pages 177–186, 2010.

- [3] Shoushun Chen and Menghan Guo. Live demonstration: CeleX-V: A 1M pixel multi-mode event-based sensor. In IEEE Conference on Computer Vision and Pattern Recognition Workshops, pages 0–0, 2019.

- [4] Wensheng Cheng, Hao Luo, Wen Yang, Lei Yu, Shoushun Chen, and Wei Li. DET: A high-resolution DVS dataset for lane extraction. In IEEE Conference on Computer Vision and Pattern Recognition Workshops, pages 0–0, 2019.

- [5] Li Fei-Fei, Rob Fergus, and Pietro Perona. Learning generative visual models from few training examples: An incremental bayesian approach tested on 101 object categories. In IEEE Conference on Computer Vision and Pattern Recognition Workshops, pages 178–178, June 2004.

- [6] Guillermo Gallego, Tobi Delbruck, Garrick Orchard, Chiara Bartolozzi, Brian Taba, Andrea Censi, Stefan Leutenegger, Andrew Davison, Joerg Conradt, Kostas Daniilidis, et al. Event-based vision: A survey. arXiv:1904.08405, 2019.

- [7] Ian Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, and Yoshua Bengio. Generative adversarial nets. In Advances in Neural Information Processing Systems, pages 2672–2680, 2014.

- [8] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition. In IEEE Conference on Computer Vision and Pattern Recognition, pages 770–778, 2016.

- [9] Diederik P Kingma and Jimmy Ba. Adam: A method for stochastic optimization. In International Conference on Learning Representations, 2015.

- [10] Xavier Lagorce, Garrick Orchard, Francesco Galluppi, Bertram E Shi, and Ryad B Benosman. HOTS: A hierarchy of event-based time-surfaces for pattern recognition. IEEE Transactions on Pattern Analysis and Machine Intelligence, 39(7):1346–1359, 2016.

- [11] Svetlana Lazebnik, Cordelia Schmid, and Jean Ponce. Beyond bags of features: Spatial pyramid matching for recognizing natural scene categories. In IEEE Conference on Computer Vision and Pattern Recognition, volume 2, pages 2169–2178. IEEE, 2006.

- [12] Hongmin Li, Hanchao Liu, Xiangyang Ji, Guoqi Li, and Luping Shi. Cifar10-dvs: An event-stream dataset for object classification. Frontiers in neuroscience, 11:309, 2017.

- [13] Jiayuan Li, Qingwu Hu, and Mingyao Ai. RIFT: Multi-modal image matching based on radiation-invariant feature transform. arXiv:1804.09493, 2018.

- [14] Jing Li, Congcong Li, Tao Yang, and Zhaoyang Lu. Cross-domain co-occurring feature for visible-infrared image matching. IEEE Access, 6:17681–17698, 2018.

- [15] Patrick Lichtsteiner, Christoph Posch, and Tobi Delbruck. A 128128 120 db 15s latency asynchronous temporal contrast vision sensor. IEEE Journal of Solid-State Circuits, 43(2):566–576, 2008.

- [16] David G Lowe. Distinctive image features from scale-invariant keypoints. International Journal of Computer Vision, 60(2):91–110, 2004.

- [17] Aravindh Mahendran and Andrea Vedaldi. Understanding deep image representations by inverting them. In IEEE Conference on Computer Vision and Pattern Recognition, pages 5188–5196, 2015.

- [18] Ana I Maqueda, Antonio Loquercio, Guillermo Gallego, Narciso García, and Davide Scaramuzza. Event-based vision meets deep learning on steering prediction for self-driving cars. In IEEE Conference on Computer Vision and Pattern Recognition, pages 5419–5427, 2018.

- [19] Shu Miao, Guang Chen, Xiangyu Ning, Yang Zi, Kejia Ren, Zhenshan Bing, and Alois C Knoll. Neuromorphic benchmark datasets for pedestrian detection, action recognition, and fall detection. Frontiers in neurorobotics, 13:38, 2019.

- [20] David Nister and Henrik Stewenius. Scalable recognition with a vocabulary tree. In IEEE Conference on Computer Vision and Pattern Recognition, volume 2, pages 2161–2168. IEEE, 2006.

- [21] Garrick Orchard, Ajinkya Jayawant, Gregory K Cohen, and Nitish Thakor. Converting static image datasets to spiking neuromorphic datasets using saccades. Frontiers in neuroscience, 9:437, 2015.

- [22] Ewa Piatkowska, Ahmed Nabil Belbachir, Stephan Schraml, and Margrit Gelautz. Spatiotemporal multiple persons tracking using dynamic vision sensor. In IEEE Conference on Computer Vision and Pattern Recognition Workshops, pages 35–40. IEEE, 2012.

- [23] Bibrat Ranjan Pradhan, Yeshwanth Bethi, Sathyaprakash Narayanan, Anirban Chakraborty, and Chetan Singh Thakur. N-HAR: A neuromorphic event-based human activity recognition system using memory surfaces. In IEEE International Symposium on Circuits and Systems, pages 1–5. IEEE, 2019.

- [24] Filip Radenović, Giorgos Tolias, and Ondřej Chum. Fine-tuning CNN image retrieval with no human annotation. IEEE Transactions on Pattern Analysis and Machine Intelligence, 41(7):1655–1668, 2018.

- [25] Henri Rebecq, Timo Horstschaefer, and Davide Scaramuzza. Real-time visual-inertial odometry for event cameras using keyframe-based nonlinear optimization. In British Machine Vision Conference, 2017.

- [26] Eli Shechtman and Michal Irani. Matching local self-similarities across images and videos. In IEEE Conference on Computer Vision and Pattern Recognition, pages 1–8, June 2007.

- [27] Josef Sivic and Andrew Zisserman. Video Google: A text retrieval approach to object matching in videos. In International Conference on Computer Vision, pages 1470–1477, 2003.

- [28] Subhashini Venugopalan, Marcus Rohrbach, Jeffrey Donahue, Raymond Mooney, Trevor Darrell, and Kate Saenko. Sequence to sequence-video to text. In IEEE International Conference on Computer Vision, pages 4534–4542, 2015.

- [29] Lin Wang, Yo-Sung Ho, Kuk-Jin Yoon, et al. Event-based high dynamic range image and very high frame rate video generation using conditional generative adversarial networks. In IEEE Conference on Computer Vision and Pattern Recognition, pages 10081–10090, 2019.

- [30] Xinggang Wang, Xiong Duan, and Xiang Bai. Deep sketch feature for cross-domain image retrieval. Neurocomputing, 207:387–397, 2016.

- [31] Yanxiang Wang, Bowen Du, Yiran Shen, Kai Wu, Guangrong Zhao, Jianguo Sun, and Hongkai Wen. EV-Gait: Event-based robust gait recognition using dynamic vision sensors. In IEEE Conference on Computer Vision and Pattern Recognition, pages 6358–6367, 2019.

- [32] Qian Yu, Feng Liu, Yi-Zhe Song, Tao Xiang, Timothy M Hospedales, and Chen-Change Loy. Sketch me that shoe. In IEEE Conference on Computer Vision and Pattern Recognition, pages 799–807, 2016.

- [33] Alessandro Zanardi, Andreas Jianhao Aumiller, Julian Zilly, Andrea Censi, and Emilio Frazzoli. Cross-modal learning filters for rgb-neuromorphic wormhole learning. In Robotics: Science and Systems, volume 15, 2019.

- [34] Liang Zheng, Yi Yang, and Qi Tian. SIFT meets CNN: A decade survey of instance retrieval. IEEE Transactions on Pattern Analysis and Machine Intelligence, 40(5):1224–1244, 2018.

- [35] Alex Zihao Zhu, Dinesh Thakur, Tolga Özaslan, Bernd Pfrommer, Vijay Kumar, and Kostas Daniilidis. The multivehicle stereo event camera dataset: An event camera dataset for 3d perception. IEEE Robotics and Automation Letters, 3(3):2032–2039, 2018.