appendixReferences of Appendix

Matching a Desired Causal State

via Shift Interventions

Abstract

Transforming a causal system from a given initial state to a desired target state is an important task permeating multiple fields including control theory, biology, and materials science. In causal models, such transformations can be achieved by performing a set of interventions. In this paper, we consider the problem of identifying a shift intervention that matches the desired mean of a system through active learning. We define the Markov equivalence class that is identifiable from shift interventions and propose two active learning strategies that are guaranteed to exactly match a desired mean. We then derive a worst-case lower bound for the number of interventions required and show that these strategies are optimal for certain classes of graphs. In particular, we show that our strategies may require exponentially fewer interventions than the previously considered approaches, which optimize for structure learning in the underlying causal graph. In line with our theoretical results, we also demonstrate experimentally that our proposed active learning strategies require fewer interventions compared to several baselines.

1 Introduction

Consider an experimental biologist attempting to turn cells from one type into another, e.g., from fibroblasts to neurons (Vierbuchen et al.,, 2010), by altering gene expression. This is known as cellular reprogramming and has shown great promise in recent years for regenerative medicine (Rackham et al.,, 2016). A common approach is to model gene expression of a cell, which is governed by an underlying gene regulatory network, using a structural causal model (Friedman et al.,, 2000; Badsha et al.,, 2019). Through a set of interventions, such as gene knockouts or over-expression of transcription factors (Dominguez et al.,, 2016), a biologist can infer the structure of the underlying regulatory network. After inferring enough about this structure, a biologist can identify the intervention needed to successfully reprogram a cell. More generally, transforming a causal system from an initial state to a desired state through interventions is an important task pervading multiple applications. Other examples include closed-loop control (Touchette and Lloyd,, 2004) and pathway design of microstructures (Wodo et al.,, 2015).

With little prior knowledge of the underlying causal model, this task is intrinsically difficult. Previous works have addressed the problem of intervention design to achieve full identifiability of the causal model (Hauser and Bühlmann,, 2014; Greenewald et al.,, 2019; Squires et al., 2020a, ). However, since interventional experiments tend to be expensive in practice, one wishes to minimize the number of trials and learn just enough information about the causal model to be able to identify the intervention that will transform it into the desired state. Furthermore, in many realistic cases, the set of interventions which can be performed is constrained. For instance, in CRISPR experiments, only a limited number of genes can be knocked out to keep the cell alive; or in robotics, a robot can only manipulate a certain number of arms at once.

Contributions. We take the first step towards the task of causal matching (formalized in Section 2), where an experimenter can perform a series of interventions in order to identify a matching intervention which transforms the system to a desired state. In particular, we consider the case where the goal is to match the mean of a distribution. We focus on a subclass of interventions called shift interventions, which can for example be used to model gene over-expression experiments (Triantafillou et al.,, 2017). These interventions directly increase or decrease the values of their perturbation targets, with their effect being propagated to variables which are downstream (in the underlying causal graph) of these targets. We show that there always exists a unique shift intervention (which may have multiple perturbation targets) that exactly transforms the mean of the variables into the desired mean (Lemma 1). We call this shift intervention the matching intervention.

To find the matching intervention, in Section 3 we characterize the Markov equivalence class of a causal graph induced by shift interventions, i.e., the edges in the causal graph that are identifiable from shift interventions; in particular, we show that the resulting Markov equivalence classes can be more refined than previous notions of interventional Markov equivalence classes. We then propose two active learning strategies in Section 4 based on this characterization, which are guaranteed to identify the matching intervention. These active strategies proceed in an adaptive manner, where each intervention is chosen based on all the information gathered so far.

In Section 5, we derive a worst-case lower bound on the number of interventions required to identify the matching intervention and show that the proposed strategies are optimal up to a logarithmic factor. Notably, the proposed strategies may use exponentially fewer interventions than previous active strategies for structure learning. Finally, in Section 6, we demonstrate also empirically that our proposed strategies outperform previous methods as well as other baselines in various settings.

1.1 Related Works

Experimental Design. Previous work on experimental design in causality has considered two closely related goals: learning the most structural information about the underlying DAG given a fixed budget of interventions (Ghassami et al.,, 2018), and fully identifying the underlying DAG while minimizing the total number or cost (Shanmugam et al.,, 2015; Kocaoglu et al.,, 2017) of interventions. These works can also be classified according to whether they consider a passive setting, i.e., the interventions are picked at a single point in time (Hyttinen et al.,, 2013; Shanmugam et al.,, 2015; Kocaoglu et al.,, 2017), or an active setting, i.e., interventions are decided based on the results of previous interventions (He and Geng,, 2008; Agrawal et al.,, 2019; Greenewald et al.,, 2019; Squires et al., 2020a, ). The setting addressed in the current work is closest to the active, full-identification setting. The primary difference is that in order to match a desired mean, one does not require full identification; in fact, as we show in this work, we may require significantly less interventions.

Causal Bandits. Another related setting is the bandit problem in sequential decision making, where an agent aims to maximize the cumulative reward by selecting an arm at each time step. Previous works considered the setting where there are causal relations between regrets and arms (Lattimore et al.,, 2016; Lee and Bareinboim,, 2018; Yabe et al.,, 2018). Using a known causal structure, these works were able to improve the dependence on the total number of arms compared to previous regret lower-bounds (Bubeck and Cesa-Bianchi,, 2012; Lattimore et al.,, 2016). These results were further extended to the case when the causal structure is unknown a priori (de Kroon et al.,, 2020). In all these works the variables are discrete, with arms given by do-interventions (i.e., setting variables to a given value), so that there are only a finite number of arms. In our work, we are concerned with the continuous setting and shift interventions, which corresponds to an infinite (continuous) set of arms.

Correlation-based Approaches. There are also various correlation-based approaches for this task that do not make use of any causal information. For example, previous works have proposed score-based (Cahan et al.,, 2014), entropy-based (D’Alessio et al.,, 2015) and distance-based approaches (Rackham et al.,, 2016) for cellular reprogramming. However, as shown in bandit settings (Lattimore et al.,, 2016), when the system follows a causal structure, this structure can be exploited to learn the optimal intervention more efficiently. Therefore, we here focus on developing a causal approach.

2 Problem Setup

We now formally introduce the causal matching problem of identifying an intervention to match the desired state in a causal system under a given metric. Following Koller and Friedman, (2009), a causal structural model is given by a directed acyclic graph (DAG) with nodes , and a set of random variables whose joint distribution factorizes according to . Denote by the parents of node in . An intervention with multiple perturbation targets either removes all incoming edges to (hard intervention) or modifies the conditional probability (soft intervention) for all . This results in an interventional distribution . Given a desired joint distribution over , the goal of causal matching is to find an optimal matching intervention such that best matches under some metric. In this paper, we address a special case of the causal matching problem, which we call causal mean matching, where the distance metric between and depends only on their expectations.

We focus on causal mean matching for a class of soft interventions, called shift interventions (Rothenhäusler et al.,, 2015). Formally, a shift intervention with perturbation targets and shift values modifies the conditional distribution as . Here, the shift values are assumed to be deterministic. We aim to find and such that the mean of is closest to that of , i.e., minimizes for some metric . In fact, as we show in the following lemma, there always exists a unique shift intervention, which we call the matching intervention, that achieves exact mean matching.111To lighten notation, we use to denote both the perturbation targets and the shift values of this intervention.

Lemma 1.

For any causal structural model and desired mean , there exists a unique shift intervention such that .

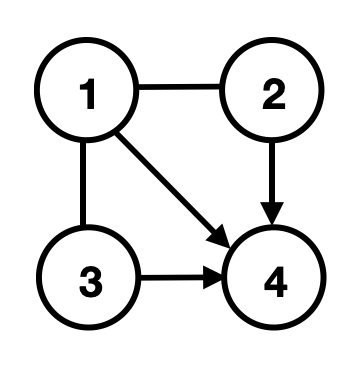

We assume throughout that the underlying causal DAG is unknown. But we assume causal sufficiency (Spirtes et al.,, 2000), which excludes the existence of latent confounders, as well as access to enough observational data to determine the joint distribution and thus the Markov equivalence class of (Andersson et al.,, 1997). It is well-known that with enough interventions, the causal DAG becomes fully identifiable (Yang et al.,, 2018). Thus one strategy for causal mean matching is to first use interventions to fully identify the structure of , and then solve for the matching intervention given full knowledge of the graph. However, in general this strategy requires more interventions than needed. In fact, the number of interventions required by such a strategy can be exponentially larger than the number of interventions required by a strategy that directly attempts to identify the matching intervention, as illustrated in Figure 1 and proven in Theorem 2.

In this work, we consider active intervention designs, where a series of interventions are chosen adaptively to learn the matching intervention. This means that the information obtained after performing each intervention is taken into account for future choices of interventions. We here focus on the noiseless setting, where for each intervention enough data is obtained to decide the effect of each intervention. Direct implications for the noisy setting are discussed in Appendix G. To incorporate realistic cases in which the system cannot withstand an intervention with too many target variables, as is the case in CRISPR experiments, where knocking out too many genes at once often kills the cell, we consider the setting where there is a sparsity constraint on the maximum number of perturbation targets in each intervention, i.e., we only allow where .

3 Identifiability

In this section, we characterize and provide a graphical representation of the shift interventional Markov equivalence class (shift--MEC), i.e., the equivalence class of DAGs that is identifiable by shift interventions . We also introduce mean interventional faithfulness, an assumption that guarantees identifiability of the underlying DAG up to its shift--MEC. Proofs are given in Appendix C.

3.1 Shift-interventional Markov Equivalence Class

For any DAG with nodes , a distribution is Markov with respect to if it factorizes according to . Two DAGs are Markov equivalent or in the same Markov equivalence class (MEC) if any positive distribution which is Markov with respect to (w.r.t.) one DAG is also Markov w.r.t. the other DAG. With observational data, a DAG is only identifiable up to its MEC (Andersson et al.,, 1997). However, the identifiability improves to a smaller class of DAGs with interventions. For a set of interventions (not necessarily shift interventions), the pair is -Markov w.r.t. if is Markov w.r.t. and factorizes according to

Similarly, the interventional Markov equivalence class (-MEC) of a DAG can be defined, and Yang et al., (2018) provided a structural characterization of the -MEC for general interventions (not necessarily shift interventions).

Following, we show that if consists of shift interventions, then the -MEC becomes smaller, i.e., identifiability of the causal DAG is improved. The proof utilizes Lemma 2 on the relationship between conditional probabilities. For this, denote by the ancestors of node , i.e., all nodes for which there is a directed path from to in . For a subset of nodes , we say that is a source w.r.t. if . A subset is a source w.r.t. if every node in is a source w.r.t. .

Lemma 2.

For any distribution that factorizes according to , the interventional distribution for a shift intervention with shift values satisfies

for any source . Furthermore, if is not a source w.r.t. , then there exists a positive distribution such that .

Hence, we can define the shift--Markov property and shift-interventional Markov equivalence class (shift--MEC) as follows.

Definition 1.

For a set of shift interventions , the pair is shift--Markov w.r.t. if is -Markov w.r.t. and

Two DAGs are in the same shift--MEC if any positive distribution that is shift--Markov w.r.t. one DAG is shift--Markov also w.r.t. the other DAG.

The following graphical characterizations are known: Two DAGs are in the same MEC if and only if they share the same skeleton (adjacencies) and v-structures (induced subgraphs ), see Verma and Pearl, (1991). For general interventions , two DAGs are in the same -MEC, if they are in the same MEC and they have the same directed edges , where means that either or (Hauser and Bühlmann,, 2012; Yang et al.,, 2018). In the following theorem, we provide a graphical criterion for two DAGs to be in the same shift--MEC.

Theorem 1.

Let be a set of shift interventions. Then two DAGs and belong to the same shift--MEC if and only if they have the same skeleton, v-structures, directed edges , as well as source nodes of for every .

In other words, two DAGs are in the same shift--MEC if and only if they are in the same -MEC and they have the same source perturbation targets. Figure 2 shows an example; in particular, to represent an MEC, we use the essential graph (EG), which has the same skeleton as any DAG in this class and directed edges if for every DAG in this class. The essential graphs corresponding to the MEC, -MEC and shift--MEC of a DAG are referred to as EG, -EG and shift--EG of , respectively. They can be obtained from the aforementioned graphical criteria (along with a set of logical rules known as the Meek rules (Meek,, 1995); see details in Appendix A). Figure 2 shows an example of EG, -EG and shift--EG of a four-node DAG.

3.2 Mean Interventional Faithfulness

For the causal mean matching problem, the underlying can be identified from shift interventions up to its shift--MEC. However, we may not need to identify the entire DAG to find the matching intervention . Lemma 1 implies that if is neither in nor downstream of , then the mean of already matches the desired state, i.e., ; this suggest that these variables may be negligible when learning . Unfortunately, the reverse is not true; one may design “degenerate" settings where a variable is in (or downstream of) , but its marginal mean is also unchanged:

Example 1.

Let , with and , so that . Suppose is a shift intervention with perturbation targets , with , , and . Then , i.e., the marginal mean of is unchanged under the intervention.

Such degenerate cases arise when the shift on a node (deemed if not shifted) exactly cancels out the contributions of shifts on its ancestors. Formally, the following assumption rules out these cases.

Assumption 1 (Mean Interventional Faithfulness).

If satisfies , then is neither a nor downstream of any perturbation target, i.e., .

This is a particularly weak form of faithfulness, which is implied by interventional faithfulness assumptions in prior work (Yang et al.,, 2018; Squires et al., 2020b, ; Jaber et al.,, 2020).

Let be the collection of nodes for which . The following lemma shows that under the mean interventional faithfulness assumption we can focus on the subgraph induced by , since and interventions on do not affect .

4 Algorithms

Having shown that shift interventions allow the identification of source perturbation targets and that the mean interventional faithfulness assumption allows reducing the problem to an induced subgraph, we now propose two algorithms to learn the matching intervention. The algorithms actively pick a shift intervention at time based on the current shift-interventional essential graph (shift--EG). Without loss of generality and for ease of discussion, we assume that the mean interventional faithfulness assumption holds and we therefore only need to consider . In Appendix D, we show that the faithfulness violations can be identified and thus Assumption 1 is not necessary for identifying the matching intervention, but additional interventions may be required.

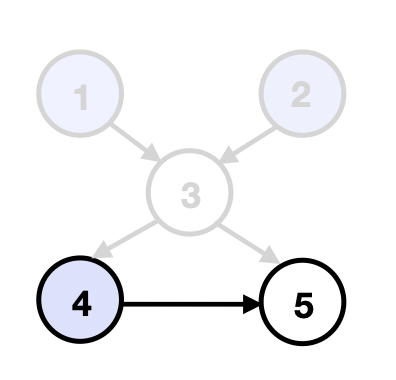

Warm-up: Upstream Search. Consider solving for the matching intervention when the structure of is known, i.e., the current shift--EG is fully directed. Let be the non-empty set of source nodes in . We make the following key observation.

Observation 1.

, and the shift values are for each .

This follows since shifting other variables in cannot change the mean of nodes in . Further, the shifted means of variables in match the desired mean (Lemma 2). Given the resulting intervention , we obtain a new distribution . Assuming mean interventional faithfulness on this distribution, we may now remove those variables whose means in already match . We then repeat this process on the new set of unmatched source nodes, , to compute the corresponding shift intervention . Repeating until we have matched the desired mean for all variables yields . We illustrate this procedure in Figure 3.

The idea of upstream search extends to shift--EG with partially directed or undirected . In this case, if a node or nodes of are identified as source, Observation 1 still holds. Hence, we solve a part of with these source nodes and then intervene on them to reduce the unsolved graph size.

Decomposition of Shift Interventional Essential Graphs: In order to find the source nodes, we decompose the current shift--EG into undirected components. Hauser and Bühlmann, (2014) showed that every interventional essential graph is a chain graph with chordal chain components, where the orientations in one chain component do not affect the orientations in other components.222The chain components of a chain graph are the undirected connected components after removing all its directed edges, and an undirected graph is chordal if all cycles of length greater than 3 contain a chord. By a similar argument, we can obtain an analogous decomposition for shift interventional essential graphs, and show that there is at least one chain component with no incoming edges. Let us separate out all of the chain components of shift--EG with no incoming edges. The following lemma proves that all sources are contained within these components.

Lemma 4.

For any shift--EG of , each chain component has exactly one source node w.r.t. this component. This node is a source w.r.t. if and only if there are no incoming edges to this component.

These results hold when replacing with any induced subgraph of it. Thus, we can find the source nodes in by finding the source nodes in each of its chain components with no incoming edges.

4.1 Two Approximate Strategies

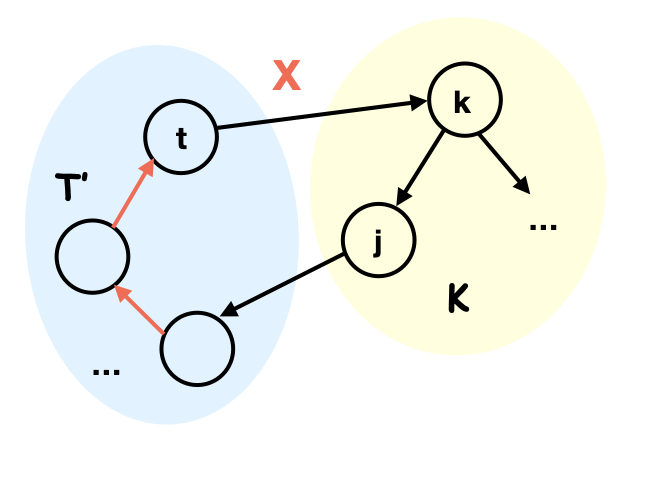

Following the chain graph decomposition, we now focus on how to find the source node of an undirected connected chordal graph . If there is no sparsity constraint on the number of perturbation targets in each shift intervention, then directly intervening on all of the variables in gives the source node, since by Theorem 1, all DAGs in the shift--MEC share the same source node. However, when the maximum number of perturbation targets in an intervention is restricted to , multiple interventions may be necessary to find the source node.

After intervening on nodes, the remaining unoriented part can be decomposed into connected components. In the worst case, the source node of is in the largest of these connected components. Therefore we seek the set of nodes, within the sparsity constraint, that minimizes the largest connected component size after being removed. This is known as the MinMaxC problem (Lalou et al.,, 2018), which we show is NP-complete on chordal graphs (Appendix D). We propose two approximate strategies to solve this problem, one based on the clique tree representation of chordal graphs and the other based on robust supermodular optimization. The overall algorithm with these subroutines is summarized in Algorithm 1. We outline the subroutines here, and give further details in Appendix D.

Clique Tree Strategy. Let be the set of maximal cliques in the chordal graph . There exists a clique tree with nodes in and edges satisfying that , their intersection is a subset of any clique on the unique path between in (Blair and Peyton,, 1993). Thus, deleting a clique which is not a leaf node in the clique tree will break into at least two connected components, each corresponding to a subtree in the clique tree. Inspired by the central node algorithm (Greenewald et al.,, 2019; Squires et al., 2020a, ), we find the -constrained central clique of by iterating through and returning the clique with no more than nodes that separates the graph most, i.e., solving MinMaxC when interventions are constrained to be maximal cliques. We denote this approach as CliqueTree.

Supermodular Strategy. Our second approach, denoted Supermodular, optimizes a lower bound of the objective of MinMaxC. Consider the following equivalent formulation of MinMaxC

| (1) |

where represents the nodes of and , with if and are the same or connected after removing nodes in from and otherwise.

MinMaxC (1) resembles the problem of robust supermodular optimization (Krause et al.,, 2008). Unfortunately, is not supermodular for chordal graphs (Appendix D). Therefore, we propose to optimize for a surrogate of defined as , where

| (2) |

Here is the number of paths without cycles between and in (deemed if or does not belong to and if ) and means is either connected or equal to . Comparing with , we see that is a lower bound of for MinMaxC, which is tight when is a tree. We show that is monotonic supermodular for all (Appendix D). Therefore, we consider (2) with replaced by , which can be solved using the SATURATE algorithm (Krause et al.,, 2008). Notably, the results returned by Supermodular can be quite different to those returned by CliqueTree since Supermodular is not constrained to pick a maximal clique; see Figure 4.

5 Theoretical Results

In this section we derive a worst-case lower bound on the number of interventions for any algorithm to identify the source node in a chordal graph. Then we use this lower bound to show that our strategies are optimal up to a logarithmic factor. This contrasts with the structure learning strategy, which may require exponentially more interventions than our strategy (Figure 1).

The worst case is with respect to all feasible orientations of an essential graph (Hauser and Bühlmann,, 2014; Shanmugam et al.,, 2015), i.e., orientations corresponding to DAGs in the equivalence class. Given a chordal chain component of , let be the number of maximal cliques in , and be the size of the largest maximal clique in . The following lemma provides a lower bound depending only on .

Lemma 5.

In the worst case over feasible orientations of , any algorithm requires at least shift interventions to identify the source node, under the sparsity constraint .

To give some intuition for this result, consider the case where the largest maximal clique is upstream of all other maximal cliques. Given such an ordering, in the worst case, each intervention rules out only nodes in this clique (namely, the most downstream ones). Now, we show that our two strategies need at most shift interventions for the same task.

Lemma 6.

In the worst case over feasible orientations of , both CliqueTree and Supermodular require at most shift interventions to identify the source node, under the sparsity constraint .

By combining Lemma 5 and Lemma 6, which consider subproblems of the causal mean matching problem, we obtain a bound on the number of shift interventions needed for solving the full causal mean matching problem. Let be the largest for all chain components of :

Theorem 2.

Algorithm 1 requires at most times more shift interventions, compared to that required by the optimal strategy, in the worst case over feasible orientations of .

A direct application of this theorem is that, in terms of the number of interventions required to solve the causal mean matching problem, our algorithm is optimal in the worst case when , i.e., when every chain component is a clique. All proofs are provided in Appendix E.

6 Experiments

We now evaluate our algorithms in several synthetic settings.333Code is publicly available at: https://github.com/uhlerlab/causal_mean_matching. Each setting considers a particular graph type, number of nodes in the graph and number of perturbation targets in the matching intervention. We generate problem instances in each setting. Every problem instance contains a DAG with nodes generated according to the graph type and a randomly sampled subset of nodes denoting the perturbation targets in the matching intervention. We consider both, random graphs including Erdös-Rényi graphs (Erdős and Rényi,, 1960) and Barabási–Albert graphs (Albert and Barabási,, 2002), as well as structured chordal graphs, in particular, rooted tree graphs and moralized Erdös-Rényi graphs (Shanmugam et al.,, 2015). The graph size in our simulations ranges from to , while the number of perturbation targets ranges from to .

We compare our two subroutines for Algorithm 1, CliqueTree and Supermodular, against three carefully constructed baselines. The UpstreamRand baseline follows Algorithm 1 where line 8 is changed to selecting randomly from without exceeding , i.e., when there is no identified source node it randomly samples from the chain component with no incoming edge. This strategy highlights how much benefit is obtained from CliqueTree and Supermodular on top of upstream search. The Coloring baseline is modified from the coloring-based policy for structure learning (Shanmugam et al.,, 2015), previously shown to perform competitively on large graphs (Squires et al., 2020a, ). It first performs structure learning with the coloring-based policy, and then uses upstream search with known DAG. We also include an Oracle baseline, which does upstream search with known DAG.

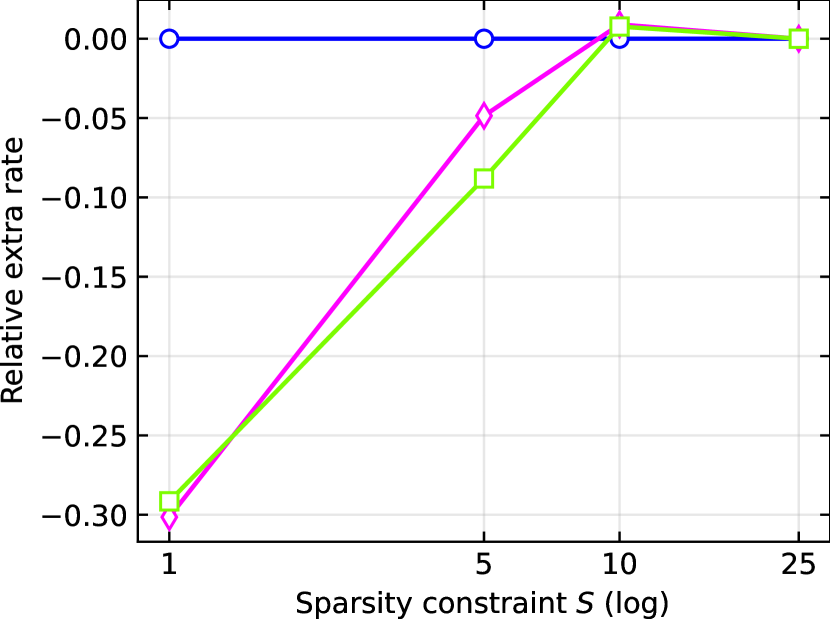

In Figure 5 we present a subset of our results on Barabási–Albert graphs with nodes; similar behaviors are observed in all other settings and shown in Appendix F. In Figure 5(a), we consider problem instances with varying size of . Each algorithm is run with sparsity constraint . We plot the number of extra interventions compared to Oracle, averaged across the problem instances. As expected, Coloring requires the largest number of extra interventions. This finding is consistent among different numbers of perturbation targets, since the same amount of interventions are used to learn the structure regardless of . As increases, CliqueTree and Supermodular outperform UpstreamRand. To further investigate this trend, we plot the rate of extra interventions444The rate is calculated by (#Strategy-#UpstreamRand)/#UpstreamRand where # denotes the number of extra interventions compared to Oracle and Strategy can be CliqueTree, Supermodular or UpstreamRand. used by CliqueTree and Supermodular relative to UpstreamRand in Figure 5(b). This figure shows that CliqueTree and Supermodular improve upon upstream search by up to as the number of perturbation targets increases. Finally, we consider the effect of the sparsity constraint in Figure 5(c) with . In line with the discussion in Section 4.1, as increases, the task becomes easier for plain upstream search. However, when the number of perturbation targets is restricted, CliqueTree and Supermodular are superior, with Supermodular performing best in most cases.

7 Discussion

In this work, we introduced the causal mean matching problem, which has important applications in medicine and engineering. We aimed to develop active learning approaches for identifying the matching intervention using shift interventions. Towards this end, we characterized the shift interventional Markov equivalence class and showed that it is in general more refined than previously defined equivalence classes. We proposed two strategies for learning the matching intervention based on this characterization, and showed that they are optimal up to a logarithmic factor. We reported experimental results on a range of settings to support these theoretical findings.

Limitations and Future Work. This work has various limitations that may be interesting to address in future work. First, we focus on the task of matching a desired mean, rather than an entire distribution. This is an inherent limitation of deterministic shift interventions: as noted by Hyttinen et al., (2012), in the linear Gaussian setting, these interventions can only modify the mean of the initial distribution. Thus, matching the entire distribution, or other relevant statistics, will require broader classes of interventions. Assumptions on the desired distribution are also required to rule out possibly non-realizable cases. Second, we have focused on causal DAG models, which assume acyclicity and the absence of latent confounders. In many realistic applications, this could be an overly optimistic assumption, requiring extensions of our results to the cyclic and/or causally insufficient setting. Finally, throughout the main text, we have focused on the noiseless setting; we briefly discuss the noisy setting in Appendix G, but there is much room for more extensive investigations.

Acknowledgements

C. Squires was partially supported by an NSF Graduate Fellowship. All authors were partially supported by NSF (DMS-1651995), ONR (N00014-17- 1-2147 and N00014-18-1-2765), the MIT-IBM Watson AI Lab, and a Simons Investigator Award to C. Uhler.

References

- Agrawal et al., (2019) Agrawal, R., Squires, C., Yang, K., Shanmugam, K., and Uhler, C. (2019). Abcd-strategy: Budgeted experimental design for targeted causal structure discovery. In The 22nd International Conference on Artificial Intelligence and Statistics, pages 3400–3409. PMLR.

- Albert and Barabási, (2002) Albert, R. and Barabási, A.-L. (2002). Statistical mechanics of complex networks. Reviews of modern physics, 74(1):47.

- Andersson et al., (1997) Andersson, S. A., Madigan, D., Perlman, M. D., et al. (1997). A characterization of markov equivalence classes for acyclic digraphs. Annals of statistics, 25(2):505–541.

- Badsha et al., (2019) Badsha, M., Fu, A. Q., et al. (2019). Learning causal biological networks with the principle of mendelian randomization. Frontiers in genetics, 10:460.

- Blair and Peyton, (1993) Blair, J. R. and Peyton, B. (1993). An introduction to chordal graphs and clique trees. In Graph theory and sparse matrix computation, pages 1–29. Springer.

- Bubeck and Cesa-Bianchi, (2012) Bubeck, S. and Cesa-Bianchi, N. (2012). Regret analysis of stochastic and nonstochastic multi-armed bandit problems. In Foundations and Trends® in Machine Learning, pages 1–122.

- Cahan et al., (2014) Cahan, P., Li, H., Morris, S. A., Da Rocha, E. L., Daley, G. Q., and Collins, J. J. (2014). Cellnet: network biology applied to stem cell engineering. Cell, 158(4):903–915.

- de Kroon et al., (2020) de Kroon, A. A., Belgrave, D., and Mooij, J. M. (2020). Causal discovery for causal bandits utilizing separating sets. arXiv preprint arXiv:2009.07916.

- Dominguez et al., (2016) Dominguez, A. A., Lim, W. A., and Qi, L. S. (2016). Beyond editing: repurposing crispr–cas9 for precision genome regulation and interrogation. Nature reviews Molecular cell biology, 17(1):5.

- D’Alessio et al., (2015) D’Alessio, A. C., Fan, Z. P., Wert, K. J., Baranov, P., Cohen, M. A., Saini, J. S., Cohick, E., Charniga, C., Dadon, D., Hannett, N. M., et al. (2015). A systematic approach to identify candidate transcription factors that control cell identity. Stem cell reports, 5(5):763–775.

- Erdős and Rényi, (1960) Erdős, P. and Rényi, A. (1960). On the evolution of random graphs. Publ. Math. Inst. Hung. Acad. Sci, 5(1):17–60.

- Friedman et al., (2000) Friedman, N., Linial, M., Nachman, I., and Pe’er, D. (2000). Using bayesian networks to analyze expression data. Journal of computational biology, 7(3-4):601–620.

- Ghassami et al., (2018) Ghassami, A., Salehkaleybar, S., Kiyavash, N., and Bareinboim, E. (2018). Budgeted experiment design for causal structure learning. In International Conference on Machine Learning, pages 1724–1733. PMLR.

- Greenewald et al., (2019) Greenewald, K., Katz, D., Shanmugam, K., Magliacane, S., Kocaoglu, M., Boix Adsera, E., and Bresler, G. (2019). Sample efficient active learning of causal trees. In Advances in Neural Information Processing Systems, volume 32. Curran Associates, Inc.

- Hauser and Bühlmann, (2012) Hauser, A. and Bühlmann, P. (2012). Characterization and greedy learning of interventional markov equivalence classes of directed acyclic graphs. The Journal of Machine Learning Research, 13(1):2409–2464.

- Hauser and Bühlmann, (2014) Hauser, A. and Bühlmann, P. (2014). Two optimal strategies for active learning of causal models from interventional data. International Journal of Approximate Reasoning, 55(4):926–939.

- He and Geng, (2008) He, Y.-B. and Geng, Z. (2008). Active learning of causal networks with intervention experiments and optimal designs. Journal of Machine Learning Research, 9(Nov):2523–2547.

- Hyttinen et al., (2012) Hyttinen, A., Eberhardt, F., and Hoyer, P. O. (2012). Learning linear cyclic causal models with latent variables. The Journal of Machine Learning Research, 13(1):3387–3439.

- Hyttinen et al., (2013) Hyttinen, A., Eberhardt, F., and Hoyer, P. O. (2013). Experiment selection for causal discovery. Journal of Machine Learning Research, 14:3041–3071.

- Jaber et al., (2020) Jaber, A., Kocaoglu, M., Shanmugam, K., and Bareinboim, E. (2020). Causal discovery from soft interventions with unknown targets: Characterization and learning. Advances in Neural Information Processing Systems, 33.

- Kocaoglu et al., (2017) Kocaoglu, M., Dimakis, A., and Vishwanath, S. (2017). Cost-optimal learning of causal graphs. In International Conference on Machine Learning, pages 1875–1884. PMLR.

- Koller and Friedman, (2009) Koller, D. and Friedman, N. (2009). Probabilistic graphical models: principles and techniques. MIT press.

- Krause et al., (2008) Krause, A., McMahan, H. B., Guestrin, C., and Gupta, A. (2008). Robust submodular observation selection. Journal of Machine Learning Research, 9(12).

- Lalou et al., (2018) Lalou, M., Tahraoui, M. A., and Kheddouci, H. (2018). The critical node detection problem in networks: A survey. Computer Science Review, 28:92–117.

- Lattimore et al., (2016) Lattimore, F., Lattimore, T., and Reid, M. D. (2016). Causal bandits: Learning good interventions via causal inference. In Advances in Neural Information Processing Systems, volume 29. Curran Associates, Inc.

- Lee and Bareinboim, (2018) Lee, S. and Bareinboim, E. (2018). Structural causal bandits: where to intervene? Advances in Neural Information Processing Systems 31, 31.

- Meek, (1995) Meek, C. (1995). Causal inference and causal explanation with background knowledge. In Proceedings of the Eleventh Conference on Uncertainty in Artificial Intelligence, UAI’95, page 403–410, San Francisco, CA, USA. Morgan Kaufmann Publishers Inc.

- Rackham et al., (2016) Rackham, O. J., Firas, J., Fang, H., Oates, M. E., Holmes, M. L., Knaupp, A. S., Suzuki, H., Nefzger, C. M., Daub, C. O., Shin, J. W., et al. (2016). A predictive computational framework for direct reprogramming between human cell types. Nature genetics, 48(3):331.

- Rothenhäusler et al., (2015) Rothenhäusler, D., Heinze, C., Peters, J., and Meinshausen, N. (2015). Backshift: Learning causal cyclic graphs from unknown shift interventions. In Advances in Neural Information Processing Systems, volume 28. Curran Associates, Inc.

- Shanmugam et al., (2015) Shanmugam, K., Kocaoglu, M., Dimakis, A. G., and Vishwanath, S. (2015). Learning causal graphs with small interventions. In Advances in Neural Information Processing Systems, volume 28. Curran Associates, Inc.

- Spirtes et al., (2000) Spirtes, P., Glymour, C. N., Scheines, R., and Heckerman, D. (2000). Causation, prediction, and search. MIT press.

- (32) Squires, C., Magliacane, S., Greenewald, K., Katz, D., Kocaoglu, M., and Shanmugam, K. (2020a). Active structure learning of causal dags via directed clique trees. In Advances in Neural Information Processing Systems, volume 33, pages 21500–21511. Curran Associates, Inc.

- (33) Squires, C., Wang, Y., and Uhler, C. (2020b). Permutation-based causal structure learning with unknown intervention targets. In Conference on Uncertainty in Artificial Intelligence, pages 1039–1048. PMLR.

- Touchette and Lloyd, (2004) Touchette, H. and Lloyd, S. (2004). Information-theoretic approach to the study of control systems. Physica A: Statistical Mechanics and its Applications, 331(1-2):140–172.

- Triantafillou et al., (2017) Triantafillou, S., Lagani, V., Heinze-Deml, C., Schmidt, A., Tegner, J., and Tsamardinos, I. (2017). Predicting causal relationships from biological data: Applying automated causal discovery on mass cytometry data of human immune cells. Scientific reports, 7(1):1–11.

- Verma and Pearl, (1991) Verma, T. and Pearl, J. (1991). Equivalence and synthesis of causal models. UCLA, Computer Science Department.

- Vierbuchen et al., (2010) Vierbuchen, T., Ostermeier, A., Pang, Z. P., Kokubu, Y., Südhof, T. C., and Wernig, M. (2010). Direct conversion of fibroblasts to functional neurons by defined factors. Nature, 463(7284):1035–1041.

- Wodo et al., (2015) Wodo, O., Zola, J., Pokuri, B. S. S., Du, P., and Ganapathysubramanian, B. (2015). Automated, high throughput exploration of process–structure–property relationships using the mapreduce paradigm. Materials discovery, 1:21–28.

- Yabe et al., (2018) Yabe, A., Hatano, D., Sumita, H., Ito, S., Kakimura, N., Fukunaga, T., and Kawarabayashi, K.-i. (2018). Causal bandits with propagating inference. In International Conference on Machine Learning, pages 5512–5520. PMLR.

- Yang et al., (2018) Yang, K., Katcoff, A., and Uhler, C. (2018). Characterizing and learning equivalence classes of causal dags under interventions. In International Conference on Machine Learning, pages 5541–5550. PMLR.

Appendix A Preliminaries

A.1 Meek Rules

Given any Markov equivalence class of DAGs with shared directed and undirected edges, the corresponding essential graph can be obtained using a set of logical relations known as Meek rules \citepappendixmeek2013causal+. The Meek rules are stated in the following proposition.

Proposition 1 (Meek Rules \citepappendixmeek2013causal+).

We can infer all directed edges in using the following four rules:

-

1.

If and is not adjacent to , then .

-

2.

If and , then .

-

3.

If and is not adjacent to , then .

-

4.

If and is not adjacent to , then .

Figure 6 illustrates these four rules.

Appendix B Proof of Exact Matching

Proof of Lemma 1.

Without loss of generality, assume is the topological order of the underlying DAG , i.e., implies . We will first construct such that , and then show that is unique.

Existence: Denote as the smallest such that . Witout loss of generality we assume that exists (if does not exists, then suffices since ).

Let be the shift intervention with perturbation target and shift values . Since and by definition, we have

Thus . Also for . Denote as the smallest such that . If does not exists, then suffices. Otherwise .

Let be the shift intervention with perturbation target and corresponding shift values and . We have and by definition, the topological order, and . Then

Thus . Also for . By iterating this process, we will reach for some such that there is no with . Taking suffices.

Uniqueness: If there exists such that , let be the smallest index such that either has different shift values in and , or is only in one intervention’s perturbation targets. In either case, we have by the topological order and for some . Thus contradicting . ∎

Appendix C Proof of Identifiability

C.1 Shift Interventional MEC

Proof of Lemma 2.

For any distribution that factorizes according to and shift intervention , let be any source w.r.t. . By definition, . Thus contains neither a member nor a descendant of , i.e., there does not exists and such that there is a direct path from to or . Hence we have , which gives

Therefore .

On the other hand, if is not a source w.r.t. , consider the following linear Gaussian model,

where are deterministic scalars and are i.i.d. random variables.

Since is not a source in , there exists a source in such that there is a directed path . From above, for . Consider setting , for , and the remaining edge weights to . For sufficiently small, we have that , i.e., we cannot have that . ∎

Proof of Theorem 1.

Denote . For , let and be the collection of source nodes in in and , respectively. From Definition 1, we know that and are in the same shift--MEC if and only if they are in the same -MEC and, for any pair that is -Markov w.r.t. both and , it satisfies

| (3) |

if and only if it also satisfies

| (4) |

By Lemma 2, we know that for all . Otherwise we can find a pair that violates (4) for . Similarly, we have . Therefore . In this case, (3) is equivalent to (4).

Hence, and are in the same shift--MEC if and only if they are in the same -MEC and they have the same source nodes of for every . From Theorem 3.9 in \citetappendixyang2018characterizing+, we know that and are in the same -MEC if and only if they share the same skeleton, -structures and directed edges . Therefore, and are in the same shift--MEC if and only if they have the same skeleton, -structures, directed edges , as well as source nodes of for every . ∎

Let be any DAG, suppose that and is the collection of source nodes in in for . Then as a direct corollary of Theorem 1, we can represent a shift interventional Markov equivalence class with a (general) interventional Markov equivalence class.

Corollary 1.

Let ; a DAG is shift--Markov equivalent to if and only if is -Markov equivalent to .

Proof.

The proof follws as a direct application of Theorem 1, Theorem 3.9 in \citetappendixyang2018characterizing+, and the fact that there are no edges between nodes in . ∎

C.2 Mean Interventional Faithfulness

Proof of Lemma 3.

If Assumption 1 holds, then for any , since , then and . Let such that there is an edge between and . Since , there is either or . Therefore if , then , a contradiction. Thus .

Conversely, if Assumption 1 does not hold, then there exists (i.e., ) such that either or . If , then since and Lemma 2, must not be a source in . Therefore we only need to discuss the case where and .

Let be a source of , then must also be a source of . Otherwise there is a directed path from to where and . By definition of ancestors, we know from that there is also . Therefore , which violates being a source of .

Using Lemma 3, we know that we can check the authenticity of Assumption 1 by looking at the orientation of edges between and , which is achievable by any (general) intervention on (or ).

Corollary 2.

Assumption 1 holds if and only if the -essential graph (or -essential graph) of has edges for all .

Appendix D Details of Algorithms

D.1 Decomposition of Shift Interventional Essential Graphs

Chain Graph Decomposition: \citetappendixhauser2014two+ showed that every interventional essential graph is a chain graph with undirected connected chordal chain components, where the orientations in one component do not affect any other components. This decomposition also holds for shift interventional essential graphs, since every shift interventional essential graph is also an interventional essential graph (Corollary 1). Below, we show an example of this decomposition (Figure 7).

Proof of Lemma 4.

Suppose an undirected connected chain component of the essential graph has two source nodes and w.r.t. . Since is connected, there is a path between and in ; let be the shortest among all these paths. Because and are sources of , there must be and . Therefore, such that (let and ). By the shortest path definition, there is no edge between and . Therefore there is a v-structure in induced by . Since all DAGs in the same shift interventional equivalence class share the same v-structures, must be oriented in the essential graph. This violates belonging to the same undirected chain component . Thus, combining this with the fact that must have one source node, we obtain that has exactly one source node w.r.t. .

Next we show that the source node of a chain component is also the source of if and only if there are no incoming edges to this component. Let be the source of the chain component . On one hand, must be the source of if there is no incoming edges to . On the other hand, if there is an incoming edge for some and , then since the essential graph is closed under Meek R1 and R2 (Proposition 1), we know that there must be an edge for all neighbors of . Following the same deduction and the fact that is connected, we obtain that for all (Figure 8). This means that as well. Therefore cannot be a source of .

∎

D.2 NP-completeness of MinMaxC

It was shown separately in \citetappendixshen2012exact and \citetappendixlalou2018critical+ that the MinMaxC problem is NP-complete for general graphs and split graphs. Split graphs are a subclass of chordal graphs, where the vertices can be separated into a clique and an independent set (isolated nodes after removing the clique). Thus, MinMaxC is also NP-complete for chordal graphs.

D.3 Clique Tree Strategy

The clique tree strategy takes inputs of an undirected connected chordal graph and the sparsity constraint , and outputs a shift intervention with no more than perturbation targets. If contains no more than nodes, then it returns any shift intervention with perturbation targets in . If contains more than nodes, it first constructs a clique tree of by the maximum-weight spanning tree algorithm \citepappendixkoller2009probabilistic+. Then it iterates through the nodes in (which are maximal cliques in ) to find a maximal clique that breaks into subtrees with sizes no more than half of the size of . If has no more than nodes, then it returns any shift intervention with perturbation targets in . Otherwise, it samples nodes from and returns any shift intervention with these nodes as perturbation targets. The following subroutine summarizes this procedure.

Complexity: Let represent the number of nodes in , i.e., . All the maximal cliques of the chordal graph can be found in time \citepappendixgalinier1995chordal. We use Kruskal’s algorithm for computing the maximum-weight spanning tree, which can be done in \citepappendixkruskal1956shortest. The remaining procedure of iterating through takes no more than since chordal graphs with nodes have no more than maximal cliques \citepappendixgalinier1995chordal and all subtree sizes can be obtained in . Therefore this subroutine can be computed in time.

D.4 Supermodular Strategy

The supermodular procedure takes as input an undirected connected chordal graph as well as the sparsity constraint , and outputs a shift intervention with perturbation targets by solving

| (5) |

with the SATURATE algorithm \citepappendixkrause2008robust+. Here represents nodes of and with defined in (2). Algorithm 3 summarizes this subroutine.

Supermodularity: First we give an example showing that defined in (1) is not supermodular for chordal graphs, although it is clearly monotonic decreasing.

Example 2.

Consider the chordal graph in Figure 9; we have . Therefore is not supermodular for this graph.

Next we prove that is supermodular and monotonic decreasing.

Proof.

Since , we only need to show that every is supermodular and monotonic decreasing. In the following, we refer to a path without cycles as a simple path.

For any , since is a subgraph of , then any simple path between and in must also be in . Hence , which means that

i.e., is monotonic decreasing.

For any , the difference is the number of simple paths in between and that pass through . Each of these paths must also be in , since is a subgraph of . Therefore,

which means that

i.e., is supermodular. ∎

SATURATE algorithm \citepappendixkrause2008robust+: Having shown that is monotonic supermodular, we solve the robust supermodular optimization problem in (5) with the SATURATE algorithm in \citepappendixkrause2008robust+. SATURATE performs a binary search for potential objective values and uses a greedy partial cover algorithm to check the feasibility of these objective values; for a detailed description of the algorithm, see \citetappendixkrause2008robust+.

Complexity: Let represent the number of nodes in , i.e., . SATURATE uses at most evaluations of supermodular functions \citepappendixkrause2008robust+. Each computes all the simple paths between and all other in . A modified depth-first search is used to calculated these paths \citepappendixsedgewick2001algorithms, which results in complexity. For general graphs, this problem is #P-complete \citepappendixvaliant1979complexity. However, this might be significantly reduced for chordal graphs. We are unaware of particular complexity results for chordal graphs, which would be an interesting direction for future work. The total runtime of this subroutine is thus bounded by .555For a more efficient implementation, one could replace the undirected graph with a DAG in its MEC (which can be found in linear time using L-BFS). All statements hold except that is no longer necessarily tight for tree graphs. This replacement results in a total complexity of for the subroutine, since directed simple paths can be counted in .

D.5 Violation of Faithfulness

From Corollary 2, we know that we can check whether Assumption 1 holds or not by any intervention on (or ). However, we can run Algorithm 1 to obtain without Assumption 1 because lines 2-14 in Algorithm 1 always return the correct .

Let be the resolved part of in line 2, i.e., it is a shift intervention constructed by taking a subset of perturbation targets of and their corresponding shift values. Let be the remaining shift intervention constructed by deleting in . Denote , which is returned by line 3. If , then we have solved . Otherwise we have:

Lemma 7.

The source nodes w.r.t. must be perturbation targets of and their corresponding shift values are (for source node ).

Proof.

Let be a source node w.r.t. and be its corresponding shift value. Since intervening on other nodes in does not change the marginal distribution of , we must have that . And because , we know that

From this, we also have that since . Therefore, all source nodes w.r.t. are in and their corresponding shift values are .

Let be a source w.r.t. , then must also be a source node w.r.t. . Since , must be a source node in or has a source node in as its ancestor. If it is the latter case, then since all source nodes in must be in , cannot be a source node w.r.t. , a contradiction. Therefore the source w.r.t. must also be the source w.r.t. . Combined with the result in the previous paragraph, we have that all source nodes w.r.t. are perturbation targets of and their corresponding shift values are . ∎

This lemma shows that obtained in lines 5-11 of Algorithm 1 must be the perturbation targets of and line 12 gives the correct shift values. Therefore Algorithm 1 must return the correct .

However, to be able to obtain the shift--EG of , we need mean interventional faithfulness to be satisfied by (replacing with and with in Assumption 1) as well as -faithfulness \citepappendixsquires2020permutation+ to be satisfied by with respect to .

Appendix E Proof of Worst-case Bounds

E.1 Proof of Lemma 5

To show Lemma 5, we need the following proposition, which states that we can orient any maximal clique of a chordal graph to be most-upstream without creating cycles and v-structures, and the orientation in this clique can be made arbitrary. It was pointed out in \citepappendixvandenberghe2015chordal using similar arguments that any clique of a chordal graph can be most-upstream. Here, we provide the complete proof.

Proposition 2.

Let be any undirected chordal graph and be any maximal clique of , for any permutation of the nodes in , there exists a topological order of the nodes in such that starts with and orienting according to does not create any v-structures.

Proof.

A topological order of a chordal graph , orienting according to which does not create v-structures, corresponds to the reverse of a perfect elimination order \citepappendixhauser2014two+. A perfect elimination order is an order of nodes in , such that all neighbors of in that appear after in this order must constitute a clique in . Any chordal graph has at least one perfect elimination order \citepappendixandersson1997characterization+. In the following, we will use the reverse of a perfect elimination order to refer to a topological order that does not create v-structures.

To prove Proposition 2, we first prove the following statement: if , then there exists a perfect elimination order of nodes in that starts with a node not in . To show this, by Proposition 6 in \citetappendixhauser2014two+, we only need to prove that if , then there is a node not in , whose neighbors in constitute a clique.

We use induction on the number of nodes in : Consider . Since is a maximal clique, . This statement holds trivially. Suppose the statement is true for chordal graphs with size . Consider . Since is a chordal graph, it must have a perfect elimination order. If this perfect elimination order starts with , then there is no edge between and any node . Otherwise, since it is a perfect elimination order starting with and is a clique, there must be edges for all . This is a contradiction to being a maximal clique.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/233ecfb6-eb81-4c5b-baee-cf9e0052fde1/afig-1.png)

Consider the chordal graph by deleting from , . Let be the maximal clique in containing . If , let be any node in . Since there is no edge , and is a clique. ’s neighbors in must also constitute a clique. If , then by induction, we know that there exists such that ’s neighbors in constitute a clique. Since there is no edge , ’s neighbors in must also constitute a clique. Thus the statement holds for chordal graphs of size . Therefore the statement holds.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/233ecfb6-eb81-4c5b-baee-cf9e0052fde1/afig-2a.png)

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/233ecfb6-eb81-4c5b-baee-cf9e0052fde1/afig-2b.png)

Now, we prove Proposition 2 by induction on the number of nodes in : Consider . Since is a maximal clique, . Thus Proposition 2 holds trivially.

Suppose Proposition 2 holds for chordal graphs of size . Consider . If , then Proposition 2 holds. If , then by the above statement, there exists , such that there exists a perfect elimination order of starting with . Let be the chordal graph obtained by deleting from . By induction, there exists , a reverse of perfect elimination order, that starts with . Let ; we must have that the reverse of is a perfect elimination order, since all neighbors of in constitute a clique. Therefore gives the wanted topological order and Proposition 2 holds for chordal graphs of size . This completes the proof of Proposition 2. ∎

Proof of Lemma 5.

Given any algorithm , let be the first shift interventions given by . By Proposition 2, we know that there exists a feasible orientation of such that the largest maximal clique of is most-upstream and that, for , is most-downstream of . For example, in the figure below, suppose algorithm chooses based on (a) and based on (b). There is a feasible orientation in (d) such that the largest clique is most-upstream and is most-downstream of , for .

Since and , in this worst case, it needs at least interventions to identify the source of , i.e., the source of (minus because in this case, if there is only one node left, then it must be the source). ∎

E.2 Proof of Lemma 6

Let be the clique obtained by lines 7-13 in Algorithm 2; when has more than nodes, we refer to as the central clique. To prove Lemma 6, we need the following proposition. This proposition shows that by looking at the undirected graph , we can find a node in the central clique satisfying certain properties, which will become useful in the proof of Lemma 6.

Proposition 3.

Let be the connected subtrees of after removing . For a node , let be the set of indices such that the tree is connected to only through the node . Let be the collection of all such subtrees. If there exists such that there is an edge between and , let be the one with the largest number of maximal cliques; otherwise let . Then there exists a node such that the number of maximal cliques in the subgraph induced by the subtrees and itself does not exceed .

Example 3.

As an example, the following figure shows the subtrees that are connected to only through node , indexed by (blue). The largest subtree in that is adjacent to node is denoted by (undimmed in green).

Proof of Proposition 3.

Notice the following facts.

Fact 1: Let be any subtree in ; then there must exist a node such that there is no edge between and .

Proof of Fact 1: For any two nodes , because is chordal and is connected, either the neighbors of in subset that of , or the the neighbors of in subset that of . Therefore we can order all nodes , where all neighbors of in subset that of that appear after . Then if the first node in this order has some neighbor , all nodes in have as neighbor, contradicting being a maximal clique.

Fact 2: Let be the collection of the subtrees where all edges connecting to are through a single node . We have that is the union of disjoint sets .

Proof of Fact 2: This follows directly from the definition of .

Fact 3: Let be the collection of non-empty . Then . Furthermore, for any subtree in , there is a node such that there is no edge between and this subtree.

Proof of Fact 3: This follows directly from the definition of and Fact 1.

Now we prove Proposition 3. If , then since contains at least two nodes (otherwise and the proposition holds trivially) and the number of maximal cliques in does not exceed , using Fact 2, we have at least one such that the number of maximal cliques in the subgraph induced by and itself does not exceed .

If . Let be the subtree with the largest number of maximal cliques in . Let be the node such that there is no edge between and the subtree ( exists because of Fact 3). Now suppose that the proposition does not hold. Then the number of maximal cliques in the subgraph induced by and itself must exceed . Also, the number of maximal cliques in the subgraph induced by and itself exceeds . Notice that . Therefore is connected to only through . Hence the sum of numbers of maximal cliques in and does not exceed . We cannot have both and having more than maximal cliques. Therefore the proposition must hold. ∎

Proof of Lemma 6.

For CliqueTree, we prove this lemma for a “less-adaptive” version for the sake of clearer discussions. In this “less-adaptive” version, instead of output intervention with perturbation targets sampled from the central clique (when it has more than nodes) in Algorithm 2, we directly output interventions with non-overlapping perturbation targets in . Each of these interventions has no more than perturbation targets and they contain at least nodes in altogether. Furthermore, we pick these interventions such that if they contain exactly nodes, then the remaining node satisfies Proposition 3.

After these interventions, we obtain a partially directed , which is a chain graph, with one of its chain components without incoming edges as input to CliqueTree in the next iteration of the inner-loop in Algorithm 1. Denote this chain component as . We show that has no more than maximal cliques each with no more than nodes. If , then and this trivially holds since the source of must be identified. In the following, we assume .

Size of maximal cliques: The maximal clique in must belong to a maximal clique in , and thus has no more than nodes.

Number of maximal cliques: If the source node is identified, then only has one node. This trivially holds. Now consider when the source node is not identified. We proceed in two cases.

Case I: if these interventions contain all nodes in , then they break the clique tree into subtrees each with no more than maximal cliques. must belong to one of these subtrees. Therefore it must have no more than maximal cliques.

Case II: if these interventions do not contain all nodes in , then there is exactly one node left in that is not a perturbation target, which satisfies Proposition 3. Denote this node as and the source node w.r.t. the intervened nodes as . From Theorem 1, we have that is identified and , the orientation of edge is identified.

If , then is the source w.r.t. : if is the source w.r.t. , then has no more than maximal cliques; otherwise, there is a unique subtree of after removing that has an edge pointing to in (it exists because is the source of but not the source of ; it is unique because there is no edge between subtrees and there is no v-structure at ), and therefore must belong to this subtree which has no more than maximal cliques.

If , then is the source w.r.t. : consider all the subtrees of after removing . We have the following two facts:

Fact 1: Let be a subtree such that there is an edge between and and all these edges are pointing towards . Then all edges between and must be oriented as . Thus .

Proof of Fact 1: Otherwise, suppose and . Let such that there is an edge between and . Since is connected, there must be a path from to in . Let be the shortest of these path. Since is shortest, there cannot be an edge between and with . And since all edges between and are pointing towards , there is an edge . Therefore to avoid v-structures, it must be . This creates a directed cycle , a contradiction.

Fact 2: There can be at most one subtree such that there is an edge pointing from to and also some such that or is unidentified. Therefore at most one subtree of this type can have .

Proof of Fact 2: Otherwise suppose there are two different subtrees such that . Since there is no edge , we have . Without loss of generality, suppose . Let be any node in with an edge , since is connected, let be the shortest path between and in . Let be the maximum in such that . If such does not exist, then . Since and there is no v-structure at , there must be an identified edge . Notice that there is no edge between and and , to avoid v-structure, it must be . The same deduction leads to identified edges . Since and there are no cycles, the edge must be identified. If exists, since is the shortest path and there is no v-structure, we must have . Furthermore, since is the largest, . By a similar deduction as in the case where does not exist, we must have an identified edge . Therefore . To avoid directed cycles, must be identified. Therefore all edges between and are identified as pointing to .

Using the above two facts, let be the unique subtree in Fact 2 (if it exists); if there is no edge between and , then must be in the subgraph induced by itself and in Proposition 3, which has no more than maximal cliques. If there is an edge between and , we know that must be in the joint set of , and . Since the number of maximal cliques in must be no more than that of in Proposition 3, we know that has no more than maximal cliques.

Therefore, after interventions, we reduce the number of maximal cliques to at most while maintaining the size of the largest maximal clique . Using this iteratively, we obtain that CliqueTree identifies the source node with at most interventions.

For Supermodular, we do not discuss the gap between and and how well SATURATE solves (5). In this case, it is always no worse than the CliqueTree in the worst case over the feasible orientations of , since it solves MinMaxC optimally without constraining to maximal cliques. Therefore, it also takes no more than to identify the source node. ∎

E.3 Proof of Theorem 2

Proof of Theorem 2.

This result follows from Lemma 5 and 6. Divide into such that is the source node of . Since shifting affects the marginal of subsequent with , any algorithm needs to identify sequentially in order to identify the exact shift values.

Suppose are learned. For , consider the chain components of the subgraph of the shift--EG induced by with no incoming edge. Applying Lemma 4 for } and Observation 1 for this subgraph and , we deduce that there are exactly such chain components and has exactly one member in each of these chain components. Let be the sizes of the largest maximal cliques in these chain components. By Lemma 5, we know that any algorithm needs at least number of interventions to identify in the worst case. However, since all these chain components contain no more than maximal cliques, by Lemma 6, we know that our strategies need at most to identify .

Applying this result for , we obtain that our strategies for solving the causal mean matching problem require at most times more interventions, compared to the optimal strategy, in the worse case over all feasible orientations. ∎

Appendix F Numerical Experiments

F.1 Experimental Setup

Graph Generation: We consider two random graph models: Erdös-Rényi graphs (Erdős and Rényi,, 1960) and Barabási–Albert graphs (Albert and Barabási,, 2002). The probability of edge creation in Erdös-Rényi graphs is set to ; the number of edges to attach from a new node to existing nodes in Barabási–Albert graphs is set to . We then tested on two types of structured chordal graphs: rooted tree with root randomly sampled from all the nodes in this tree, and moralized Erdös-Rényi graphs (Shanmugam et al.,, 2015) with the probability of edge creation set to .

Multiple Runs: For each instance in the settings of Barabási–Albert graphs with 100 nodes and in Figure 5(a), we ran the three non-deterministic strategies (UpstreamRand,CliqueTree, Supermodular) for five times and observed little differences across all instances. Therefore, we excluded the error bars when plotting the results as they are visually negligible and the strategies are robust in these settings.

Implementation: We implemented our algorithms using the NetworkX package \citepappendixhagberg2008exploring and the CausalDAG package https://github.com/uhlerlab/causaldag. All code is written in Python and run on AMD 2990wx CPU.

F.2 More Empirical Results

In the following, we present additional empirical result. The evaluations are the same as in Section 6. The following figures show that we observe similar behaviors as in Figure 5 across different settings.

Random graphs of size : Barabási–Albert and Erdös-Rényi graphs with number of nodes in .

Larger Barabási–Albert graphs of size :

Two types of structured chordal graphs:

Appendix G Discussion of the Noisy Setting

In the noisy setting, an intervention can be repeated many times to obtain an estimated essential graph. Each intervention results in a posterior update of the true DAG over all DAGs in the observational Markov equivalence class. For a tree graph , this corresponds to a probability over all possible roots. To be able to learn the edges, \citetappendixgreenewald2019sample+ proposed a bounded edge strength condition on the noise for binary variables. Under this condition, they showed that the root node of a tree graph can be learned in finite steps in expectation with high probability.

In our setting, to ensure that the source node w.r.t. an intervention can be learned, we need to repeat this intervention for enough times such that the expectation of each variable can be estimated. Furthermore, to ensure that the edges in the (general) interventional essential graph can be learned, we need a similar condition as in \citepappendixgreenewald2019sample+ for general chordal graphs and continuous variables.

apalike \bibliographyappendixmain