Massive MIMO As an Extreme Learning Machine

Abstract

This work shows that a massive multiple-input multiple-output (MIMO) system with low-resolution analog-to-digital converters (ADCs) forms a natural extreme learning machine (ELM). The receive antennas at the base station serve as the hidden nodes of the ELM, and the low-resolution ADCs act as the ELM activation function. By adding random biases to the received signals and optimizing the ELM output weights, the system can effectively tackle hardware impairments, such as the nonlinearity of power amplifiers and the low-resolution ADCs. Moreover, the fast adaptive capability of ELM allows the design of an adaptive receiver to address time-varying effects of MIMO channels. Simulations demonstrate the promising performance of the ELM-based receiver compared to conventional receivers in dealing with hardware impairments.

Index Terms:

Massive MIMO, ELM, signal detection, nonlinear distortion, low-resolution ADC, hardware impairments.

I Introduction

Massive multiple-input multiple-output (MIMO) systems, where the base station (BS) is equipped with a large number of antennas, are a promising technology for 5G and future generations of wireless communications [1]. However, the large number of required radio frequency (RF) chains leads to high power consumption. To address this challenge, various techniques have been proposed to reduce the number of RF chains [2], and low-resolution analog-to-digital converters (ADCs) have been considered [3], [4], [5]. In addition to low-resolution ADCs at the BS, hardware impairments of the user equipment may also need to be addressed. For example, cheap power amplifiers (PAs) can introduce severe nonlinear distortion into the transmitted signals [6, 7]. Previous work has shown that hardware impairments must be handled properly to avoid degrading system performance [3], [4], [5], [7]. Existing results consider impairments at either the BS or the user side, but not at both.

An extreme learning machine (ELM) is a single-hidden-layer feed-forward neural network (NN). In an ELM, the input weights and biases are randomly initialized and fixed, so that the only parameters to be learned are the output weights. Learning therefore reduces to solving a least squares (LS) problem, which makes ELM training fast [8]. In our previous works [9, 10], ELM receivers were designed to handle LED nonlinearity and/or cross-LED interference. These works show that ELM is very effective in dealing with nonlinear distortions and delivers much better performance than conventional polynomial-based techniques [9, 10]. ELM has also been used for channel estimation and data detection in OFDM systems [11, 12].

In this work, we address the hardware impairments at both the BS and the user side of a massive MIMO system using the concept of an ELM. Although deep NNs have attracted much attention for MIMO receiver design (e.g., [4, 13]), we focus on ELM (with a single hidden layer) due to its fast learning and adaptive capabilities. In particular, no back propagation is needed and the number of training samples required is relatively small. We consider the uplink of a massive MIMO system with low-resolution ADCs, where the transmitted signals of the users suffer from PA nonlinear distortion. We show that such a massive MIMO system forms a natural ELM. Specifically, the transmit antennas of the users are regarded as the input nodes of the ELM, the massive number of antennas at the BS serve as the hidden nodes so that the channel matrix functions as the ELM input weight matrix, and the low-resolution ADCs act as the activation function. We add random biases to the received signals before the ADCs and learn the output weights of the ELM from training signals. As optimizing the ELM output weights is simply an LS problem, an adaptive receiver can be designed to deal with time-varying channels. Simulation results demonstrate the promising performance of the ELM based system in handling hardware impairments compared to conventional techniques.

Notations: Boldface lower-case and upper-case letters denote vectors and matrices, respectively. The superscripts $(\cdot)^T$ and $(\cdot)^H$ represent the transpose and conjugate transpose operations, respectively. We use $|a|$ and $\|\mathbf{a}\|$ to denote the amplitude of a scalar $a$ and the norm of a vector $\mathbf{a}$, and $\Re(\cdot)$ and $\Im(\cdot)$ represent the real and imaginary parts of a complex number, respectively.

II Massive MIMO with Hardware Impairments

Consider the uplink transmission of a massive MIMO system with $K$ active users. Each user has a single antenna and the BS is equipped with $N$ antennas, where $N$ can be much larger than $K$. We consider two hardware impairments: PA nonlinear distortion at the transmitter (user) side and low-resolution ADCs at the receiver (BS) side.

The PA nonlinear distortion can be characterized by the amplitude to amplitude conversion and amplitude to phase conversion [14]:

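$$A(|x|) = \frac{\alpha_a |x|}{1 + \beta_a |x|^2}, \qquad \Phi(|x|) = \frac{\alpha_\phi |x|^2}{1 + \beta_\phi |x|^2}, \tag{1}$$

where $x$ is the signal input to the PA, and $\alpha_a$, $\beta_a$, $\alpha_\phi$ and $\beta_\phi$ are parameters. The distorted signal due to the PA nonlinearity is expressed as

$$\tilde{x} = A(|x|)\, e^{j\left(\angle x + \Phi(|x|)\right)}, \tag{2}$$

where $\angle x$ denotes the angle of the complex signal $x$.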
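To make (1) and (2) concrete, here is a minimal NumPy sketch of the PA model applied element-wise to complex baseband samples. The function name `saleh_pa` is hypothetical, and the default parameter values are the commonly quoted TWT fit from [14]; they are illustrative assumptions, not necessarily the setting used in Section V.

```python
import numpy as np

def saleh_pa(x, alpha_a=2.1587, beta_a=1.1517, alpha_phi=4.0033, beta_phi=9.1040):
    """Apply the AM/AM and AM/PM conversions (1)-(2) element-wise to complex x.

    Default parameters are illustrative assumptions (a commonly quoted TWT fit).
    """
    x = np.asarray(x, dtype=complex)
    r = np.abs(x)                                        # input amplitude |x|
    amp = alpha_a * r / (1.0 + beta_a * r**2)            # AM/AM conversion A(|x|)
    phase = alpha_phi * r**2 / (1.0 + beta_phi * r**2)   # AM/PM conversion Phi(|x|)
    return amp * np.exp(1j * (np.angle(x) + phase))      # distorted signal (2)

# 16-QAM alphabet normalized to unit average power (hypothetical example input)
levels = np.array([-3, -1, 1, 3]) / np.sqrt(10)
qam16 = (levels[:, None] + 1j * levels[None, :]).ravel()
distorted = saleh_pa(qam16)
```

With these defaults $A(|x|)$ peaks at $|x| = 1/\sqrt{\beta_a} \approx 0.93$, so the outer 16-QAM points (amplitude about 1.34) lie beyond the peak and are compressed most strongly.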

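The received signal at time instant $t$ is modeled as

$$\mathbf{y}_t = \mathbf{H}\tilde{\mathbf{x}}_t + \mathbf{n}_t, \tag{3}$$

where $\mathbf{H}$ is an $N \times K$ MIMO channel matrix, $\tilde{\mathbf{x}}_t = [\tilde{x}_{1,t}, \ldots, \tilde{x}_{K,t}]^T$ with $\tilde{x}_{k,t}$ being the (PA-distorted) signal transmitted by user $k$ at time instant $t$, and $\mathbf{n}_t$ denotes an additive white Gaussian noise vector. Conventionally, the received signal is quantized directly; in this work, however, a random bias is added to the received signal before quantization, i.e.,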

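$$\mathbf{r}_t = \mathcal{Q}\left(\Re(\mathbf{y}_t) + \mathbf{b}_R\right) + j\,\mathcal{Q}\left(\Im(\mathbf{y}_t) + \mathbf{b}_I\right), \tag{4}$$

where $\mathbf{b}_R$ and $\mathbf{b}_I$ represent the biases for the real part and imaginary part of $\mathbf{y}_t$, respectively, and $\mathcal{Q}(\cdot)$ denotes an element-wise quantization operation. For a uniform mid-rise quantizer, we have

$$\mathcal{Q}(u) = \Delta\left(\left\lfloor \frac{u}{\Delta} \right\rfloor + \frac{1}{2}\right), \tag{5}$$

where $u$ denotes the signal to be quantized, $\lfloor\cdot\rfloor$ is the floor function and $\Delta$ is the quantization step size. Due to the limited number of bits used for the ADCs, the input signal is clipped when its amplitude exceeds a threshold; for a $B$-bit ADC, the output of (5) is limited to $\pm(2^{B-1} - 1/2)\Delta$. The aim of the receiver is to recover the data symbols $\mathbf{x}_t = [x_{1,t}, \ldots, x_{K,t}]^T$ from $\mathbf{r}_t$.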
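The biased quantization (4) and the mid-rise rule (5) can be sketched as follows, assuming that clipping is implemented by saturating at the outermost reconstruction levels $\pm(2^{B-1} - 1/2)\Delta$ of a $B$-bit ADC. The function names and the step size $\Delta = 0.1$ are assumptions for illustration; the bias range $[-0.1, 0.1]$ follows Section V.

```python
import numpy as np

def midrise_quantize(u, bits, delta):
    """Uniform mid-rise quantizer (5), saturated to the 2**bits available levels."""
    q = delta * (np.floor(np.asarray(u, dtype=float) / delta) + 0.5)
    q_max = (2 ** (bits - 1) - 0.5) * delta      # outermost reconstruction level
    return np.clip(q, -q_max, q_max)             # clipping of large inputs

def biased_adc(y, b_re, b_im, bits, delta):
    """Biased quantization (4): Q(Re(y) + b_R) + j Q(Im(y) + b_I)."""
    return (midrise_quantize(np.real(y) + b_re, bits, delta)
            + 1j * midrise_quantize(np.imag(y) + b_im, bits, delta))

rng = np.random.default_rng(0)
bits, delta = 6, 0.1                                       # 6-bit ADC; delta is an assumption
y = rng.standard_normal(4) + 1j * rng.standard_normal(4)   # hypothetical received samples
b_re = rng.uniform(-0.1, 0.1, 4)                           # fixed random biases b_R
b_im = rng.uniform(-0.1, 0.1, 4)                           # fixed random biases b_I
r = biased_adc(y, b_re, b_im, bits, delta)
```

The biases are drawn once and then kept fixed, exactly like the random biases of an ELM hidden layer.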

III From ELM to ELM Based Massive MIMO System

III-A ELM Structure and Training

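The structure of an ELM with $M$ input nodes, $L$ hidden nodes and $P$ output nodes is shown in Fig. 1 (a). The input weights and biases are randomly initialized and fixed [8]. The parameters to be learned are the output weights $\{\beta_{l,p}\}$.

Suppose we have an input $\mathbf{z} \in \mathbb{R}^{M}$. The output of the hidden nodes of the ELM shown in Fig. 1 (a) can be expressed as

$$\mathbf{h} = \sigma(\mathbf{W}\mathbf{z} + \mathbf{b}), \tag{6}$$

where $\mathbf{W}$ is the $L \times M$ input weight matrix, whose $(l, m)$th element connects the $m$th input node to the $l$th hidden node, $\sigma(\cdot)$ is the activation function (applied element-wise), and $\mathbf{b} \in \mathbb{R}^{L}$ is the bias vector. The $p$th ELM output is then

$$o_p = \mathbf{h}^T\boldsymbol{\beta}_p, \tag{7}$$

where $\boldsymbol{\beta}_p = [\beta_{1,p}, \ldots, \beta_{L,p}]^T$, and $\beta_{l,p}$ denotes the output weight connecting the $l$th hidden node to the $p$th output node.

Suppose that there are $T$ training samples $\{(\mathbf{z}_t, \mathbf{d}_t)\}_{t=1}^{T}$, where $\mathbf{d}_t = [d_{1,t}, \ldots, d_{P,t}]^T$ denotes the expected output. Writing (7) for all training samples in matrix form gives

$$\mathbf{o}_p = \mathbf{G}\boldsymbol{\beta}_p, \tag{8}$$

with $\mathbf{o}_p = [o_{p,1}, \ldots, o_{p,T}]^T$, where $\mathbf{G}$ is a $T \times L$ matrix given by

$$\mathbf{G} = [\mathbf{h}_1, \ldots, \mathbf{h}_T]^T, \qquad \mathbf{h}_t = \sigma(\mathbf{W}\mathbf{z}_t + \mathbf{b}). \tag{9}$$

The output weight vector $\boldsymbol{\beta}_p$ is trained by minimizing the cost function $\|\mathbf{d}_p - \mathbf{G}\boldsymbol{\beta}_p\|^2$ based on the linear model (8), where $\mathbf{d}_p = [d_{p,1}, \ldots, d_{p,T}]^T$. Adding the penalty $\lambda\|\boldsymbol{\beta}_p\|^2$ gives the regularized LS solution [15]

$$\hat{\boldsymbol{\beta}}_p = \left(\mathbf{G}^T\mathbf{G} + \lambda\mathbf{I}\right)^{-1}\mathbf{G}^T\mathbf{d}_p, \tag{10}$$

where $\mathbf{I}$ is an $L \times L$ identity matrix and $\lambda$ is a regularization parameter.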
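A minimal sketch of ELM training as in (6) to (10), assuming a tanh activation and input weights and biases drawn uniformly from $[-1, 1]$ (both are illustrative choices, not prescribed by the text). Only the output weights are computed, via one regularized LS solve; `np.linalg.solve` is used instead of forming the inverse explicitly.

```python
import numpy as np

def elm_fit(Z, D, n_hidden, lam=1e-6, seed=0):
    """Z: (T, M) inputs, D: (T, P) expected outputs. Returns (W, b, beta)."""
    rng = np.random.default_rng(seed)
    W = rng.uniform(-1.0, 1.0, size=(n_hidden, Z.shape[1]))  # random input weights, fixed
    b = rng.uniform(-1.0, 1.0, size=n_hidden)                 # random biases, fixed
    G = np.tanh(Z @ W.T + b)            # (T, L) hidden-node output matrix, rows h_t^T as in (9)
    # Regularized LS (10): beta = (G^T G + lam I)^{-1} G^T D, one column per output node
    beta = np.linalg.solve(G.T @ G + lam * np.eye(n_hidden), G.T @ D)
    return W, b, beta

def elm_predict(Z, W, b, beta):
    """Outputs (7) for every row of Z."""
    return np.tanh(Z @ W.T + b) @ beta

# Toy regression: learn sin(z) on [-pi, pi] (hypothetical example task)
Z = np.linspace(-np.pi, np.pi, 200)[:, None]
D = np.sin(Z)
W, b, beta = elm_fit(Z, D, n_hidden=50)
mse = np.mean((elm_predict(Z, W, b, beta) - D) ** 2)
```

Here $M = 1$, $L = 50$ and $P = 1$; no back propagation is involved, only one linear solve.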

III-B Massive MIMO as an ELM

Our design is inspired by the facts that the BS is equipped with a large number of antennas (normally $N \gg K$) and that quantization is a nonlinear operation. As illustrated in Fig. 1, by biasing the received signals, a massive MIMO system naturally forms an ELM.

Comparing Fig. 1 (b) with Fig. 1 (a), we regard the transmit antennas as the input nodes of the ELM. The distorted signal $\tilde{\mathbf{x}}_t$ is transmitted over the air and received by the antennas at the BS. We treat the receive antennas as the hidden nodes of the ELM, so that the channel matrix $\mathbf{H}$ plays the role of the ELM input weight matrix. The received signals are biased before quantization, as shown in Fig. 1 (b). As the signals are complex-valued, we separate their real and imaginary parts. The quantized signals are then represented in vector form as

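$$\bar{\mathbf{r}}_t = \begin{bmatrix}\Re(\mathbf{r}_t)\\ \Im(\mathbf{r}_t)\end{bmatrix} = \mathcal{Q}\left(\bar{\mathbf{H}}\bar{\tilde{\mathbf{x}}}_t + \mathbf{b} + \bar{\mathbf{n}}_t\right), \tag{11}$$

where the length-$2N$ bias vector $\mathbf{b} = [\mathbf{b}_R^T, \mathbf{b}_I^T]^T$ is randomly generated and fixed,

$$\bar{\mathbf{H}} = \begin{bmatrix}\Re(\mathbf{H}) & -\Im(\mathbf{H})\\ \Im(\mathbf{H}) & \Re(\mathbf{H})\end{bmatrix}, \tag{12}$$

and $\bar{\tilde{\mathbf{x}}}_t = [\Re(\tilde{\mathbf{x}}_t)^T, \Im(\tilde{\mathbf{x}}_t)^T]^T$, $\bar{\mathbf{n}}_t = [\Re(\mathbf{n}_t)^T, \Im(\mathbf{n}_t)^T]^T$. Treating $\mathcal{Q}(\cdot)$ as the activation function, the only difference between (11) and (6) is the extra noise term $\bar{\mathbf{n}}_t$.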

If we ignore the noise term, then $\bar{\mathbf{r}}_t$ resembles the hidden-layer output vector $\mathbf{h}$ of the ELM. Following the ELM, the outputs of the ADCs are weighted to recover the signal at the output nodes in Fig. 1 (b). The transmitters, the massive MIMO channel and the BS receiver thus form a complete ELM, whose input is the distorted signal $\tilde{\mathbf{x}}_t$ and whose output is an estimate of $\mathbf{x}_t$ (more precisely, estimates of $\Re(\mathbf{x}_t)$ and $\Im(\mathbf{x}_t)$).
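The real-valued model (11) and (12) relies on the identity $\bar{\mathbf{H}}[\Re(\tilde{\mathbf{x}})^T, \Im(\tilde{\mathbf{x}})^T]^T = [\Re(\mathbf{H}\tilde{\mathbf{x}})^T, \Im(\mathbf{H}\tilde{\mathbf{x}})^T]^T$, i.e., the channel acts as a $2N \times 2K$ real input weight matrix. The sketch below checks this numerically on a small random channel; the helper names, the sizes and the i.i.d. Gaussian channel are assumptions for illustration only.

```python
import numpy as np

def real_channel(H):
    """Real-valued channel (12): [[Re H, -Im H], [Im H, Re H]]."""
    return np.block([[H.real, -H.imag], [H.imag, H.real]])

def stack_re_im(v):
    """Stack real and imaginary parts: [Re v; Im v]."""
    return np.concatenate([v.real, v.imag])

rng = np.random.default_rng(1)
N, K = 8, 2                          # hypothetical small sizes
H = (rng.standard_normal((N, K)) + 1j * rng.standard_normal((N, K))) / np.sqrt(2)
x_tilde = rng.standard_normal(K) + 1j * rng.standard_normal(K)
H_bar = real_channel(H)
lhs = H_bar @ stack_re_im(x_tilde)   # "input weights times input" of the ELM
rhs = stack_re_im(H @ x_tilde)       # real/imag parts of the noiseless received signal
```

Adding the bias vector and passing `lhs` (plus noise) through the quantizer then yields the hidden-node outputs $\bar{\mathbf{r}}_t$ of (11).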

III-B1 Receiver training

The ELM output weight vectors $\{\boldsymbol{\beta}_k^R, \boldsymbol{\beta}_k^I\}_{k=1}^{K}$, each pair corresponding to one user, can be learned using training signals $\{\mathbf{x}_t\}_{t=1}^{T}$. In the resulting ELM, the output vector of the hidden nodes in (11) is produced by signal transmission and low-resolution ADC quantization without any additional computation, which enables a low-complexity receiver. Collecting the outputs of the ADCs over the $T$ training instants, we get the hidden-node output matrix

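$$\mathbf{R} = [\bar{\mathbf{r}}_1, \ldots, \bar{\mathbf{r}}_T]^T \in \mathbb{R}^{T \times 2N}. \tag{13}$$

The values of $\boldsymbol{\beta}_k^R$ and $\boldsymbol{\beta}_k^I$ can then be obtained by solving two regularized LS problems, i.e.,

$$\boldsymbol{\beta}_k^R = \left(\mathbf{R}^T\mathbf{R} + \lambda\mathbf{I}\right)^{-1}\mathbf{R}^T\mathbf{d}_k^R, \tag{14}$$

$$\boldsymbol{\beta}_k^I = \left(\mathbf{R}^T\mathbf{R} + \lambda\mathbf{I}\right)^{-1}\mathbf{R}^T\mathbf{d}_k^I, \tag{15}$$

where

$$\mathbf{d}_k^R = [\Re(x_{k,1}), \ldots, \Re(x_{k,T})]^T, \tag{16}$$

$$\mathbf{d}_k^I = [\Im(x_{k,1}), \ldots, \Im(x_{k,T})]^T. \tag{17}$$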
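Training (13) to (17) amounts to $2K$ regularized LS problems that share the same matrix $\mathbf{R}^T\mathbf{R} + \lambda\mathbf{I}$, so a sketch can solve them together with one call to `np.linalg.solve` on a matrix of right-hand sides. The function name and the value of $\lambda$ are assumptions; the toy check uses synthetic, exactly linear targets rather than real ADC outputs.

```python
import numpy as np

def train_elm_receiver(R_bar, X, lam=1e-3):
    """R_bar: (T, 2N) real ADC outputs (rows as in (13)); X: (T, K) complex symbols.

    Returns B_re, B_im of shape (2N, K); column k holds beta_k^R and beta_k^I.
    """
    A = R_bar.T @ R_bar + lam * np.eye(R_bar.shape[1])   # shared by all users
    targets = np.hstack([X.real, X.imag])                 # columns d_k^R (16), d_k^I (17)
    B = np.linalg.solve(A, R_bar.T @ targets)             # (14) and (15) for every k at once
    K = X.shape[1]
    return B[:, :K], B[:, K:]

# Synthetic check: targets generated by known weights (hypothetical data)
rng = np.random.default_rng(2)
R_bar = rng.standard_normal((200, 10))                    # T = 200, 2N = 10
B_true_re, B_true_im = rng.standard_normal((10, 3)), rng.standard_normal((10, 3))
X = R_bar @ B_true_re + 1j * (R_bar @ B_true_im)          # K = 3 users
B_re, B_im = train_elm_receiver(R_bar, X, lam=1e-8)
```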

III-B2 Data detection

Once the output weights for the users are learned, they are applied to the received signals to estimate the transmitted data symbols via

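$$\hat{x}_{k,t} = \bar{\mathbf{r}}_t^T\boldsymbol{\beta}_k^R + j\,\bar{\mathbf{r}}_t^T\boldsymbol{\beta}_k^I, \tag{18}$$

where $\bar{\mathbf{r}}_t$ is the output of the ADCs at time instant $t$. A decision based on $\hat{x}_{k,t}$ is then made, i.e.,

$$\check{x}_{k,t} = \arg\min_{s \in \mathcal{S}} \left|\hat{x}_{k,t} - s\right|^2, \tag{19}$$

where $s$ belongs to the symbol alphabet $\mathcal{S}$.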
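Detection (18) and (19) reduces to two inner products per user followed by a nearest-point search over the alphabet. In the sketch below, the 16-QAM alphabet is normalized to unit average power, and the tiny weight matrices are hypothetical values chosen only so that the result is easy to check by hand.

```python
import numpy as np

def detect(r_bar, B_re, B_im, alphabet):
    """(18): soft estimates r_bar^T beta_k^R + j r_bar^T beta_k^I for all users k;
    (19): hard decisions by minimum Euclidean distance to the alphabet."""
    x_soft = r_bar @ B_re + 1j * (r_bar @ B_im)                     # shape (K,)
    idx = np.argmin(np.abs(x_soft[:, None] - alphabet[None, :]), axis=1)
    return x_soft, alphabet[idx]

levels = np.array([-3, -1, 1, 3]) / np.sqrt(10)
qam16 = (levels[:, None] + 1j * levels[None, :]).ravel()

# Hypothetical weights for 2N = 4 ADC outputs and K = 2 users
B_re = np.array([[1.0, 0.0], [0.0, 1.0], [0.0, 0.0], [0.0, 0.0]])
B_im = np.array([[0.0, 0.0], [0.0, 0.0], [1.0, 0.0], [0.0, 1.0]])
x_soft, x_hard = detect(np.array([0.3, -0.9, 0.35, 0.9]), B_re, B_im, qam16)
```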

III-C Comparisons with Other Receivers

III-C1 Conventional ZF and MMSE receiver

Given the channel matrix $\mathbf{H}$, the weight vector for user $k$ of the zero-forcing (ZF) receiver is

$$\mathbf{w}_k = \left[(\mathbf{H}^H\mathbf{H})^{-1}\mathbf{H}^H\right]_k, \tag{20}$$

where $[\cdot]_k$ denotes the $k$th row of a matrix. If the noise power, or equivalently the signal-to-noise power ratio (SNR), is known, we can use the minimum mean squared error (MMSE) receiver, whose weight vector for user $k$ is

$$\mathbf{w}_k = \left[\left(\mathbf{H}^H\mathbf{H} + \mathrm{SNR}^{-1}\mathbf{I}\right)^{-1}\mathbf{H}^H\right]_k. \tag{21}$$

In both cases, $x_{k,t}$ is estimated as $\mathbf{w}_k\mathbf{r}_t$. Both receivers ignore the nonlinear distortion at the transmitter side and the impact of the low-resolution ADCs at the receiver side, which results in very poor performance, as shown in Section V. Note also that both receivers require the channel matrix, which must first be estimated from training signals.
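For reference, the conventional weights (20) and (21) can be computed as below. This sketch assumes perfect knowledge of $\mathbf{H}$, unit-power symbols so that the MMSE regularizer is $\mathrm{SNR}^{-1}\mathbf{I}$, and, in the usage example, an idealized noiseless, unquantized and distortion-free link. In that idealized case ZF recovers the symbols exactly, which is precisely what fails once PA distortion and low-resolution ADCs are present.

```python
import numpy as np

def zf_weights(H):
    """Rows of (H^H H)^{-1} H^H; row k is the ZF weight vector w_k in (20)."""
    Hh = H.conj().T
    return np.linalg.solve(Hh @ H, Hh)

def mmse_weights(H, snr):
    """Rows of (H^H H + I/SNR)^{-1} H^H, the MMSE weights in (21) (linear SNR)."""
    Hh = H.conj().T
    return np.linalg.solve(Hh @ H + np.eye(H.shape[1]) / snr, Hh)

rng = np.random.default_rng(3)
N, K = 16, 4                                  # hypothetical small sizes
H = (rng.standard_normal((N, K)) + 1j * rng.standard_normal((N, K))) / np.sqrt(2)
x = rng.standard_normal(K) + 1j * rng.standard_normal(K)
y = H @ x                                     # idealized: no noise, PA or ADC
x_zf = zf_weights(H) @ y                      # all users at once
x_mmse = mmse_weights(H, snr=10.0) @ y
```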

III-C2 ZF receiver with training

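The ZF receiver can also be trained directly using training signals. In this case, the weight vector of the receiver for user $k$ can be expressed as

$$\mathbf{w}_k = \left(\mathbf{Y}_q^H\mathbf{Y}_q + \lambda\mathbf{I}\right)^{-1}\mathbf{Y}_q^H\mathbf{d}_k, \tag{22}$$

where $\mathbf{Y}_q = [\mathbf{r}^{0}_1, \ldots, \mathbf{r}^{0}_T]^T$ is the $T \times N$ matrix of the quantized signals $\mathbf{r}^{0}_t = \mathcal{Q}(\Re(\mathbf{y}_t)) + j\,\mathcal{Q}(\Im(\mathbf{y}_t))$ (no biasing before quantization), $\mathbf{d}_k = [x_{k,1}, \ldots, x_{k,T}]^T$, and $\lambda$ is a regularization parameter; $x_{k,t}$ is then estimated as $(\mathbf{r}^{0}_t)^T\mathbf{w}_k$. We can also separate the real and imaginary parts, in which case the weights are obtained similarly to (14) and (15). The only difference between the proposed receiver and the trained ZF receiver is that, in the proposed receiver, the received signals are biased before quantization. Interestingly, the directly trained detector performs better than the detectors with perfect channel knowledge (see Section V), because the hardware impairments are taken into account in training.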
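The trained ZF receiver (22) is a complex-valued regularized LS fit. The sketch below uses a hypothetical helper name; in the paper's setting `Yq` would hold the unbiased quantized signals, whereas the check here uses synthetic complex data with exactly linear targets.

```python
import numpy as np

def trained_zf_weights(Yq, d, lam):
    """(Yq^H Yq + lam I)^{-1} Yq^H d for complex Yq of shape (T, N) and targets d."""
    Yh = Yq.conj().T
    return np.linalg.solve(Yh @ Yq + lam * np.eye(Yq.shape[1]), Yh @ d)

rng = np.random.default_rng(6)
T, N = 300, 8                                   # hypothetical sizes
Yq = rng.standard_normal((T, N)) + 1j * rng.standard_normal((T, N))
w_true = rng.standard_normal(N) + 1j * rng.standard_normal(N)
d = Yq @ w_true                                 # noiseless synthetic targets
w = trained_zf_weights(Yq, d, lam=1e-9)
x_hat = Yq @ w                                  # estimates (r_t^0)^T w_k for all t
```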

III-C3 ELM receiver borrowed from [9]

In [9], we proposed an ELM receiver to handle both the LED nonlinearity and cross-LED interference in MIMO LED communications. The receiver can be readily extended to massive MIMO, as shown in Fig. 2. The input to the ELM is the quantized signal (the output of the ADCs), the number of hidden nodes is $L$, and the activation function is the sigmoid function. With the training signals, the output weights of this ELM can also be learned and then applied for data symbol detection, similarly to the proposed receiver. The proposed ELM based receiver in Fig. 1 (b) is very different: the multiplication of the input weight matrix with the input vector is accomplished naturally by signal transmission over the air, which makes the new receiver much less complex in both training and detection. In data detection, the proposed receiver only needs to carry out (18) and (19), whereas the ELM receiver borrowed from [9] must additionally multiply its input weight matrix by the quantized signal vector at every time instant. In addition, as shown in Section V, the proposed ELM based receiver performs considerably better.
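A rough count of real multiplications per received vector illustrates the detection-complexity argument above. It assumes that the receiver of [9] takes the $2N$ real ADC outputs as input, uses $L$ hidden nodes, and has separate real and imaginary output weights per user; activation evaluations and the decision (19) are ignored. The function name is hypothetical, and the example uses the Section V values $N = 256$, $K = 10$, $L = 512$.

```python
def detection_mults(N, K, L=None):
    """Approximate real multiplications per received vector for detection.

    Proposed receiver: (18) only, i.e., two length-2N inner products per user.
    ELM receiver of [9] (assumed structure): L x 2N input-weight product
    plus two length-L inner products per user.
    """
    proposed = K * 2 * (2 * N)
    borrowed = None if L is None else L * (2 * N) + K * 2 * L
    return proposed, borrowed

print(detection_mults(N=256, K=10, L=512))  # -> (10240, 272384)
```

Under these assumptions, the hidden-layer product dominates the cost of the receiver from [9], and it is exactly the operation that the proposed receiver obtains for free from the channel.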

IV ELM Based Adaptive Receiver Design

ELM is attractive in that it allows fast adaptive learning, as its output weights can be easily updated. This makes ELM well suited to dealing with time-varying massive MIMO channels. By leveraging the online sequential ELM (OS-ELM) [16], the ELM based receiver can be readily extended to an adaptive setting.

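The adaptive receiver consists of two learning phases: an initialization phase and a sequential learning phase. Suppose that we have $T_0$ training samples for initialization. The output weights for the users can be computed using (14) and (15); we denote them by $\boldsymbol{\beta}_{k,0}^R$ and $\boldsymbol{\beta}_{k,0}^I$ for user $k$. Define

$$\mathbf{P}_0 = \left(\mathbf{R}_0^T\mathbf{R}_0 + \lambda\mathbf{I}\right)^{-1}, \tag{23}$$

where $\mathbf{R}_0$ is the $T_0 \times 2N$ hidden-node output matrix (13) formed from the initialization samples.

In the subsequent sequential learning phase, new training samples arrive one by one and the adaptive receiver updates its weights recursively. Denote the $t$th new sample ($t = 1, 2, \ldots$) by $(\bar{\mathbf{r}}_t, \mathbf{x}_t)$. Taking $\boldsymbol{\beta}_k^R$ as an example, we have

$$\mathbf{g}_t = \frac{\mathbf{P}_{t-1}\bar{\mathbf{r}}_t}{\mu + \bar{\mathbf{r}}_t^T\mathbf{P}_{t-1}\bar{\mathbf{r}}_t}, \tag{24}$$

$$e_{k,t} = \Re(x_{k,t}) - \bar{\mathbf{r}}_t^T\boldsymbol{\beta}_{k,t-1}^R, \tag{25}$$

$$\boldsymbol{\beta}_{k,t}^R = \boldsymbol{\beta}_{k,t-1}^R + \mathbf{g}_t\, e_{k,t}, \tag{26}$$

$$\mathbf{P}_t = \frac{1}{\mu}\left(\mathbf{P}_{t-1} - \mathbf{g}_t\bar{\mathbf{r}}_t^T\mathbf{P}_{t-1}\right), \tag{27}$$

where $0 < \mu \le 1$ is a forgetting factor that adjusts the tracking capability and convergence rate. The update of $\boldsymbol{\beta}_k^I$ is identical with $\Re(x_{k,t})$ replaced by $\Im(x_{k,t})$, and $\mathbf{g}_t$ and $\mathbf{P}_t$ are shared by all users. The above sample-by-sample update can also be extended to a chunk-by-chunk update [17].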
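The recursion (23) to (27) is the exponentially weighted recursive least squares form of OS-ELM. A minimal sketch follows. The class name is hypothetical; the targets of all users are stacked as columns (e.g., $\Re(x_{k,t})$ and $\Im(x_{k,t})$ for each $k$) because $\mathbf{g}_t$ and $\mathbf{P}_t$ are shared; and the chunk update is derived here via the Woodbury identity as an illustration, not necessarily the exact form used in [17]. With $\mu = 1$ the recursion should reproduce the batch solution on all data, which the usage example checks.

```python
import numpy as np

class AdaptiveELMReceiver:
    """Output-weight tracking with forgetting factor mu; columns of B are outputs."""

    def __init__(self, R0, D0, lam=1e-3, mu=0.98):
        self.mu = mu
        self.P = np.linalg.inv(R0.T @ R0 + lam * np.eye(R0.shape[1]))  # (23)
        self.B = self.P @ (R0.T @ D0)              # initial weights as in (14)-(15)

    def update_sample(self, r, d):
        """One new pair: r of shape (2N,), d of shape (n_out,)."""
        Pr = self.P @ r
        g = Pr / (self.mu + r @ Pr)                # gain vector (24)
        e = d - r @ self.B                         # a-priori errors (25)
        self.B = self.B + np.outer(g, e)           # weight update (26)
        self.P = (self.P - np.outer(g, Pr)) / self.mu   # (27); P stays symmetric

    def update_chunk(self, Rc, Dc):
        """A chunk of n new pairs: Rc of shape (n, 2N), Dc of shape (n, n_out)."""
        PRt = self.P @ Rc.T
        S = self.mu * np.eye(Rc.shape[0]) + Rc @ PRt
        self.P = (self.P - PRt @ np.linalg.solve(S, PRt.T)) / self.mu
        self.B = self.B + self.P @ Rc.T @ (Dc - Rc @ self.B)

# Check with mu = 1 against the batch regularized LS solution (hypothetical data)
rng = np.random.default_rng(4)
R = rng.standard_normal((120, 6))      # stand-in for ADC outputs, 2N = 6
D = rng.standard_normal((120, 2))      # stand-in targets
lam = 0.5
rx = AdaptiveELMReceiver(R[:40], D[:40], lam=lam, mu=1.0)
for t in range(40, 80):
    rx.update_sample(R[t], D[t])       # sample-by-sample phase
rx.update_chunk(R[80:], D[80:])        # one chunk of 40 samples
batch = np.linalg.solve(R.T @ R + lam * np.eye(6), R.T @ D)
```

With $\mu < 1$, older samples (and the initial regularization) are down-weighted geometrically, which is what lets the receiver follow a time-varying channel.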

Although the adaptive receiver may require a relatively large number of training samples for initialization (though still fewer than deep NNs), a small number of training samples is sufficient for tracking. This makes the adaptive receiver very attractive for time-varying massive MIMO channels, as demonstrated in Section V.

Table I: Parameters of the massive MIMO channel

| Parameter | Value |
|---|---|
| Number of antennas at BS | 256 |
| Number of users | 10 |
| Carrier frequency | 2 GHz |
| Symbol duration | 1s |
| Mean value of AOA | [,] |
| Angular spread (AS) | |
| Number of rays per user | 5 |

V Simulation Results

Assume that the BS is equipped with a uniform linear array (ULA) of $N = 256$ antennas, the number of users is $K = 10$, and 16-QAM is used. The parameters of the PA nonlinearity are set as in [14]. 6-bit ADCs are assumed. The SNR is defined as $\sigma_s^2/\sigma_n^2$, where $\sigma_s^2$ is the signal power (per transmit antenna) and $\sigma_n^2$ is the noise power (per receive antenna). We employ the massive MIMO channel model in [18], [19] and [20], which considers both spatial and temporal correlations, and assume that the ULA has half-wavelength spacing. Table I lists the parameters used for massive MIMO channel generation. Small-scale fading is considered and the multiple rays of each user are normalized. The power angular spectrum is modeled using a truncated Laplacian distribution. We consider a typical mobility scenario, namely urban macro [20]. For the trained ZF receiver, the weights are computed with the real and imaginary parts separated. For the ELM receiver in [9], 512 hidden nodes are used, and its input weights and biases (as well as the biases of the new ELM based receiver) are drawn independently from a uniform distribution on [-0.1, 0.1].
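With the SNR defined as $\sigma_s^2/\sigma_n^2$, the noise power per receive antenna follows directly from the chosen SNR. The sketch below draws circularly symmetric complex Gaussian noise and the fixed biases. The function name is hypothetical, and whether $\sigma_s^2$ is measured before or after the PA is an assumption left to the caller via `signal_power`.

```python
import numpy as np

def awgn_for_snr(shape, snr_db, signal_power=1.0, rng=None):
    """Complex Gaussian noise with power sigma_n^2 = sigma_s^2 / SNR per entry."""
    rng = np.random.default_rng() if rng is None else rng
    noise_power = signal_power / 10 ** (snr_db / 10)     # sigma_n^2 from linear SNR
    scale = np.sqrt(noise_power / 2)                     # half the power in Re and in Im
    return scale * (rng.standard_normal(shape) + 1j * rng.standard_normal(shape))

rng = np.random.default_rng(5)
n = awgn_for_snr((256, 2000), snr_db=20, rng=rng)   # N = 256 antennas, 2000 instants
b_re = rng.uniform(-0.1, 0.1, 256)                  # fixed biases b_R, U[-0.1, 0.1]
b_im = rng.uniform(-0.1, 0.1, 256)                  # fixed biases b_I, U[-0.1, 0.1]
```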

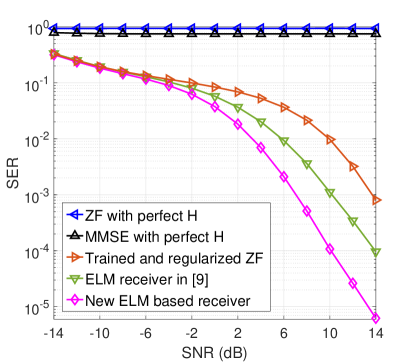

Figure 3 shows the symbol error rate (SER) of the new ELM based receiver, the ELM receiver borrowed from [9], and the ZF and MMSE receivers with perfect channel state information and noise power (note, however, that these are difficult to acquire when low-resolution ADCs are used). Quasi-static channels are assumed, i.e., user mobility is not considered, and the training length is 3000. It can be seen from Fig. 3 that the conventional ZF and MMSE receivers deliver poor performance because they cannot mitigate the PA nonlinearity. The trained and regularized ZF receiver performs better than both, as the impact of the nonlinearity is accounted for in training. In contrast, the ELM receiver in [9] and the new ELM based receiver can effectively handle the hardware impairments, and the new ELM based receiver delivers the best performance with significantly lower complexity than the ELM receiver in [9].

The difference between the trained and regularized ZF receiver and the new ELM receiver is that the received signals are biased before quantization in the new receiver. The large performance gap between them therefore reflects the impact of signal biasing. To further examine the impact of signal biasing and quantization, we consider two trained and regularized ZF receivers without quantization: one performs signal biasing and the other does not. The results are shown in Fig. 4. Interestingly, the trained ZF receiver with biasing performs better than the one without biasing. This indicates that, for a linear receiver, adding biases to the received signals helps to deal with the nonlinear distortions. However, the trained ZF receiver with biasing still performs much worse than the new ELM based receiver. This indicates that the low-resolution ADCs are helpful in dealing with the nonlinear distortion when they are exploited as the ELM activation function. Owing to clipping, the ADCs mimic a scaled version of commonly used activation functions such as tanh.
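The clipping argument can be checked numerically: within its input range, a $B$-bit mid-rise quantizer stays within $\Delta/2$ of the identity, and beyond it the output saturates, so the ADC behaves like a staircase version of a scaled hard-tanh, a piecewise-linear relative of tanh. The sketch below uses $B = 6$ bits as in Section V and a hypothetical step size $\Delta = 0.1$, and measures the largest gap between the two functions; the helper names are assumptions.

```python
import numpy as np

def midrise_quantize(u, bits, delta):
    """Uniform mid-rise quantizer (5), saturated to the 2**bits available levels."""
    q = delta * (np.floor(u / delta) + 0.5)
    q_max = (2 ** (bits - 1) - 0.5) * delta
    return np.clip(q, -q_max, q_max)

def hard_clip(u, bits, delta):
    """Scaled hard-tanh: identity for |u| <= 2**(bits-1)*delta, saturated beyond."""
    a = 2 ** (bits - 1) * delta
    return np.clip(u, -a, a)

bits, delta = 6, 0.1
u = np.linspace(-6.0, 6.0, 10001)            # inputs well beyond the clipping level 3.2
gap = np.max(np.abs(midrise_quantize(u, bits, delta) - hard_clip(u, bits, delta)))
```

The gap never exceeds $\Delta/2$, so as $\Delta$ shrinks the ADC approaches a clipped linear activation.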

Figure 5 shows the SER performance of the adaptive receiver over time-varying channels, where a mobile velocity of 100 km/h is assumed. After initialization with 3000 training symbols, only 300 training symbols in each subsequent frame are used to update the receiver. The forgetting factor is 0.98, and the chunk-by-chunk update is used. As a benchmark, we also show the performance of the receiver when 3000 training symbols are available for training in every frame. The adaptive receiver with training length 300 achieves almost the same performance as that with training length 3000, indicating that 300 training symbols per frame are sufficient for the receiver to track the channel.

Note that, in contrast to the conventional ZF and MMSE receivers, neither the new receiver nor the trained ZF receiver needs explicit channel estimation. Batch training of the new ELM receiver and of the trained ZF receiver has the same order of complexity, dominated by forming and inverting the regularized Gram matrix (real $2N \times 2N$ or complex $N \times N$), i.e., $\mathcal{O}(TN^2 + N^3)$ for $T$ training samples. For detection, the new ELM receiver has the same complexity as the ZF and MMSE receivers, i.e., $\mathcal{O}(N)$ per user.

VI Conclusion

We have shown that massive MIMO with low resolution ADCs can be treated as a natural ELM where the massive number of antennas serve as the hidden nodes and the ADCs act as the activation function of the ELM. By adding biases to the received signals and optimizing the ELM output weights, the receiver can effectively handle hardware impairments. An adaptive receiver is also designed and its capability of tracking time-varying channels is demonstrated.

References

- [1] J. G. Andrews, S. Buzzi, W. Choi, S. V. Hanly, A. Lozano, A. C. K. Soong, and J. C. Zhang, “What will 5G be?” IEEE J. Sel. Areas Commun., vol. 32, no. 6, pp. 1065–1082, June 2014.

- [2] S. S. Ioushua and Y. C. Eldar, “A family of hybrid analog–digital beamforming methods for massive MIMO systems,” IEEE Trans. Signal Process., vol. 67, no. 12, pp. 3243–3257, 2019.

- [3] S. Jacobsson, G. Durisi, M. Coldrey, U. Gustavsson, and C. Studer, “Throughput analysis of massive MIMO uplink with low-resolution ADCs,” IEEE Trans. Wireless Commun., vol. 16, no. 6, pp. 4038–4051, April 2017.

- [4] N. Shlezinger and Y. C. Eldar, “Task-based quantization with application to MIMO receivers,” Commun. Inf. Syst., vol. 20, pp. 131–162, 2020.

- [5] J. Singh, O. Dabeer, and U. Madhow, “On the limits of communication with low-precision analog-to-digital conversion at the receiver,” IEEE Trans. Commun., vol. 57, no. 12, pp. 3629–3639, December 2009.

- [6] E. Björnson, J. Hoydis, M. Kountouris, and M. Debbah, “Massive MIMO systems with non-ideal hardware: Energy efficiency, estimation, and capacity limits,” IEEE Trans. Inf. Theory, vol. 60, no. 11, pp. 7112–7139, Nov 2014.

- [7] L. Ding, G. T. Zhou, D. R. Morgan, Zhengxiang Ma, J. S. Kenney, Jaehyeong Kim, and C. R. Giardina, “A robust digital baseband predistorter constructed using memory polynomials,” IEEE Trans. Commun., vol. 52, no. 1, pp. 159–165, March 2004.

- [8] G.-B. Huang, Q.-Y. Zhu, and C.-K. Siew, “Extreme learning machine: theory and applications,” Neurocomputing, vol. 70, no. 1, pp. 489–501, Dec. 2006.

- [9] D. Gao and Q. Guo, “Extreme learning machine-based receiver for MIMO LED communications,” Digit. Signal Process., vol. 95, p. 102594, Dec. 2019.

- [10] D. Gao, Q. Guo, J. Tong, N. Wu, J. Xi, and Y. Yu, “Extreme-learning-machine-based noniterative and iterative nonlinearity mitigation for LED communication systems,” IEEE Syst. J., pp. 1–10, March 2020.

- [11] J. Liu, K. Mei, X. Zhang, D. Ma, and J. Wei, “Online extreme learning machine-based channel estimation and equalization for OFDM systems,” IEEE Commun. Lett., vol. 23, no. 7, pp. 1276–1279, 2019.

- [12] L. Yang, Q. Zhao, and Y. Jing, “Channel equalization and detection with ELM-based regressors for OFDM systems,” IEEE Commun. Lett., vol. 24, no. 1, pp. 86–89, Nov. 2020.

- [13] H. He, C. Wen, S. Jin, and G. Y. Li, “Model-driven deep learning for MIMO detection,” IEEE Trans. Signal Process., vol. 68, pp. 1702–1715, 2020.

- [14] A. A. M. Saleh, “Frequency-independent and frequency-dependent nonlinear models of TWT amplifiers,” IEEE Trans. Commun., vol. 29, no. 11, pp. 1715–1720, Nov. 1981.

- [15] W. Deng, Q. Zheng, and L. Chen, “Regularized extreme learning machine,” in Proc. IEEE Symp. CIDM, March 2009, pp. 389–395.

- [16] N. Liang, G. Huang, P. Saratchandran, and N. Sundararajan, “A fast and accurate online sequential learning algorithm for feedforward networks,” IEEE Trans. Neural Netw., vol. 17, no. 6, pp. 1411–1423, 2006.

- [17] J.-S. Lim, S. Lee, and H.-S. Pang, “Low complexity adaptive forgetting factor for online sequential extreme learning machine (OS-ELM) for application to nonstationary system estimations,” Neural Comput. Appl., vol. 22, no. 3-4, pp. 569–576, 2013.

- [18] J. Ma, S. Zhang, H. Li, F. Gao, and S. Jin, “Sparse Bayesian learning for the time-varying massive MIMO channels: Acquisition and tracking,” IEEE Trans. Wireless Commun., vol. 67, no. 3, pp. 1925–1938, 2019.

- [19] Ke Liu, V. Raghavan, and A. M. Sayeed, “Capacity scaling and spectral efficiency in wide-band correlated MIMO channels,” IEEE Trans. Inf. Theory, vol. 49, no. 10, pp. 2504–2526, 2003.

- [20] L. You, X. Gao, A. L. Swindlehurst, and W. Zhong, “Channel acquisition for massive MIMO-OFDM with adjustable phase shift pilots,” IEEE Trans. Signal Process., vol. 64, no. 6, pp. 1461–1476, 2016.