Masked GAN for Unsupervised Depth and Pose Prediction with Scale Consistency

Abstract

Previous work has shown that adversarial learning can be used for unsupervised monocular depth and visual odometry (VO) estimation, in which the adversarial loss and the geometric image reconstruction loss are utilized as the mainly supervisory signals to train the whole unsupervised framework. However, the performance of the adversarial framework and image reconstruction is usually limited by occlusions and the visual field changes between frames. This paper proposes a masked generative adversarial network (GAN) for unsupervised monocular depth and ego-motion estimation. The MaskNet and Boolean mask scheme are designed in this framework to eliminate the effects of occlusions and impacts of visual field changes on the reconstruction loss and adversarial loss, respectively. Furthermore, we also consider the scale consistency of our pose network by utilizing a new scale-consistency loss, and therefore, our pose network is capable of providing the full camera trajectory over a long monocular sequence. Extensive experiments on the KITTI dataset show that each component proposed in this paper contributes to the performance, and both our depth and trajectory predictions achieve competitive performance on the KITTI and Make3D datasets.

Index Terms:

Adversarial learning, unsupervised learning, depth estimation, visual odometry, GAN, scale consistency.I Introduction

Understanding the 3D structure of a scene and estimating the ego-motion are two basic tasks of autonomous robots [1, 2]. With the development in deep learning (DL) technology, DL-based depth and pose prediction have achieved outstanding results in both supervised [1] and unsupervised manners [2]. Because they are free from expensive training methods that involve ground truth during training, unsupervised methods have been widely studied [3, 4], in which depth and pose networks are jointly trained by monocular videos. The principle is that one can warp the image in one frame (source frame) to another frame (target frame) using the projection based on predicted depth and ego-motion [2], which is called image warping or view reconstruction, and the difference between the real-world and synthesized images is regarded as the main supervisory signal instead of the ground truth during training. However, occlusions as well as the visual field changes between frames influence the quality of the synthesized images, which will also affect the view reconstruction and the training of unsupervised frameworks. Recent studies have combined masks [2], semantic segmentation [5] or motion segmentation [6] networks for multi-task training framework, thereby further improving the performance of the networks.

Adversarial learning has shown strong capabilities in the field of image processing recently [7]. Recent studies have demonstrated that introducing adversarial learning for depth and pose estimation can significantly improve the accuracy of these two tasks [8, 9, 10, 11, 12]. In [8, 9, 13], adversarial learning is combined with the framework of unsupervised methods for depth and pose estimation based on monocular videos. In their frameworks [8, 9, 13], a discriminator is designed to distinguish between the real-world images and the synthesized images, and the min-max game between the generator and discriminator is used to improve the performance of both the depth and VO networks. However, because of the occlusions and the visual field changes between frames, the quality of synthesized images is affected, causing the unreconstructed regions in synthesized images, which are inevitable and shown in Figs. 1 and 2. If these inevitable distinguishing features are learned by the discriminator, then the min-max game between the generator and discriminator will be broken and the performance of the total framework is limited, which is overlooked by previous works [8, 9, 13]. Finally, the performance of the generator (depth and pose networks) will be heavily affected. In this paper, we focus on eliminating the effects of occlusions and the visual field changes between frames on adversarial loss and propose a Boolean mask processing step to improve the training of the adversarial framework. Our framework is shown in Fig. 1.

Compared with the traditional visual odometry (VO) or structure from motion (SfM) methods [14, 15], DL-based methods can estimate the 6-DOF (degrees of freedom) ego-motion and pixel-level dense depth maps in an end-to-end manner without back-end optimization process. Previous unsupervised methods, such as [2, 16, 6, 9], are trained by short monocular frame snippets. There is lack of a suitable loss function to constrain the scale consistency of the predicted results among the different snippets, , the scale factors of the poses and depth maps predicted by the networks in two different snippets are different. As a result, because of the scale-inconsistency among different image snippets, the global trajectory of the monocular videos cannot be provided by the pose network, and the scale-inconsistent depth maps cannot be used in practice. To have a consistent scale estimation of a pose network, Bian et al. [17] consider this challenge and propose a geometric consistency loss to align the scale factor of different depth maps. However, they only constrain the consistency of values between depth maps and ignore the consistency of structures, resulting in performance limited. Therefore, we utilize the structural similarity (SSIM) [18] to further constrain the structural similarity and scale-consistency and get a better result.

Motivated by the limitation in previous studies and the outstanding performance of adversarial learning, this paper proposes a novel unsupervised adversarial framework for monocular depth and ego-motion estimation. A MaskNet and a Boolean mask scheme are proposed to eliminate the impacts of occlusions and visual field changes between frames on reconstruction loss and especially adversarial loss. In addition, a novel adaptive loss function based on SSIM is also designed in this paper to strengthen the scale consistency of our pose network. Moreover, we conduct detailed ablation studies to clearly demonstrate the effectiveness of the proposed unsupervised adversarial framework. Comprehensive evaluation results on the KITTI[19] and Make3D datasets [20] show that our proposed framework obtains a competitive accuracy and transferability. Furthermore, our pose network has the ability to provide an accurate trajectory over a long monocular video.

In summary, our main contributions are as follows:

-

•

We introduce a masked GAN framework for pose and depth estimation, where the effects of occlusion and visual field changes on view reconstruction are considered.

-

•

We discuss the effect of unreconstructed regions on adversarial learning, which is ignored in previous work, and Boolean mask processing is proposed in this paper to eliminate this negative influence.

-

•

We consider the scale-inconsistent problem and propose a adaptive constraint for a better global trajectory prediction. At the same time, both the pose and depth networks proposed in this paper show competitive results on public datasets.

In this paper, previous work on monocular ego-motion and depth prediction are discussed in Section II. Section III introduces the proposed unsupervised masked GAN framework in detail. Section IV shows our experimental results using the proposed method on KITTI [19] and Make3D datasets [20]. Finally, this study is concluded in Section V.

II Related Work

Traditional 3D structure recovery is based on the triangulation algorithm [15], which must find a set of matching pixels between multiple frames. With the technological advancements in DL, convolutional neural networks (CNNs) have shown their superb ability in monocular depth estimation. In this section, we review the previous works on DL-based monocular depth and ego-motion estimation.

Learning from the ground truth. Eigen et al. [1] introduce CNNs into monocular depth estimation and predict the depth in an end-to-end manner. Alex et al. [21] design a PoseNet based on CNNs for 6-DOF pose regression. Recently, in [22, 23], recurrent neural networks are also adopted to extract temporal features and preserve accumulated information to improve the estimation accuracy. Although the above methods [22, 23] achieve satisfactory accuracy in pose and depth estimation, both of them rely on the ground truth as the supervisory signal, and the ground truth is difficult and expensive to acquire.

Recently, unsupervised monocular depth and ego-motion estimation has been well investigated because it is free from the ground truth. With regard to the training approaches, unsupervised depth learning methods can be divided into two types: learning from stereo image pairs [24, 25, 26] and learning from monocular videos [2, 16, 27]. Although the training process of these methods depends on the geometric constraints between multi-frame, the trained networks can predict depth maps from a single image independently during testing.

Learning from stereo image pairs. Garg et al. [24] prove that a depth network can be trained by stereo image pairs in an unsupervised manner. They utilize the inverse depth prediction and the geometry between the stereo image pairs to reconstruct (inverse warping) the left image from the right image. The difference between synthesized and real target images is used as a supervisory signal during training. Godard et al. [25] follow and expand this idea by using a left-right consistency constraint to achieve better performance. In addition, in [26], authors introduce pose estimation into the framework, and the networks are jointly trained on stereo sequences. The geometric constraints of the temporal (image sequences) and spatial (stereo image pairs) aspects are utilized to improve the performance of the ego-motion and depth estimation. Although the above method [26] can estimate the pose and depth with scale information, the accuracy relies heavily on having accurate calibration between the stereo cameras.

Learning from monocular videos. Considering the advantages of a single camera system, such as small size and low power consumption, unsupervised methods based on monocular sequences have been proposed to train the ego-motion and depth prediction networks. Zhou et al. [2] propose a framework in which the depth network is jointly trained with the pose network by using monocular videos. They reconstruct the current image from its adjacent frames by view reconstruction, which relies on the output of pose and depth networks. Then, the reconstruction loss between the reconstructed and raw images is computed as supervisory signals. Afterwards, several researchers follow and extend [2] this approach into multiple tasks [16, 6, 5, 28], and the intrinsic geometric constraints among the different tasks are utilized to strengthen the supervised signal and improve the training process. For example, Ranjan et al. [6] combine four fundamental problems (depth prediction, ego-motion estimation, optical flow and motion segmentation) through geometric constraints. In addition, a competitive training approach is proposed to balance the training process of each network. Various sensor data [29, 30, 31] are also used for depth and pose estimation. Nevertheless, due to the lack of appropriate scale consistent constraints, the pose network trained by unlabelled frame snippets cannot generate the full trajectory of a long video sequence. Therefore, Bian et al. [17] tackle this challenge by a geometric loss for scale consistency.

Learning with generative adversarial networks (GANs). Because of the outstanding performance of GANs on image processing, introducing adversarial learning into monocular depth estimation is becoming a hot topic. In the adversarial learning framework [32], a generator is designed to learn and mimic the distribution of real data, and a discriminator is designed to assess the quality of the generated data and promote the performance of the generator. Kumar et al. [8] apply GANs to monocular depth estimation. Their generator consists of depth and pose networks, and the outputs of the networks are used to reconstruct the images by view construction. At the same time, a discriminator is designed to distinguish between the synthesized and real images. Recently, Almalioglu et al. [9] combine a recurrent learning approach with GAN for pose and depth estimation in an unsupervised manner. They leverage a long short-term memory module (LSTM) to extract temporal information for pose estimation. At the same time, their generator estimates the depth map from a random vector , i.e., the depth network cannot predict the depth map from a single image in an end-to-end manner. The most similar work to this paper is proposed in [13]. Li et al. introduce a mask network to reduce the effects of dynamic objects and occlusions on reconstruction loss, and an LSTM module is used for depth estimation. However, their depth network takes one single image and sequence information extracted by the LSTM as input for the depth estimation, i.e., their depth network can only be used on monocular sequences and cannot estimate the depth map from a single image, which is different from the proposed depth network herein. In addition, they do not consider the effects of occlusions on adversarial loss, which results in limited performance. In this paper, we propose a novel unsupervised adversarial framework for monocular depth and ego-motion estimation. A Boolean mask scheme is proposed to eliminate the effects of occlusions on adversarial loss, thereby getting a better performance.

Moreover, the above GAN-based methods [8, 9, 13] ignore the influence of occlusions and the visual field changes between adjacent frames in the adversarial learning. The pixels between the target and source images do not correspond exactly because of the visual field changes caused by motion, and thus, the target images cannot be reconstructed completely from the source images by view reconstruction algorithms and bilinear interpolation, as shown in Fig. 2. Therefore, the data distribution of the unreconstructed regions in the synthesized images is unique and cannot be eliminated exactly by the training of the generator. These unique distributions will be learned by the discriminator, which will affect the adversarial learning process and the performance of the generator, as shown in the experiments.

In this paper, we present a novel loss function to constrain the scale-consistency of our pose and depth networks. At the same time, considering the influence of the unreconstructed regions on the discriminator, which is overlooked in previous work, we design a mask network to estimate these regions, and we introduce Boolean mask processing to eliminate their influence.

III Methodology

In this section, we will give a brief introduction to the network architecture proposed in this paper, the unsupervised training framework, and the overall supervisory signals.

III-A Architecture overview

The framework of our unsupervised network is shown in Fig. 3. The generator takes a short sequential frame snippet that consists of a target image and an adjacent source image ; in addition, the output of the generator contains a depth map, a 6-DOF pose, and the Boolean mask , which corresponds to the unreconstructed region in the synthesized images. Based on the predicted depth and pose, the view reconstruction algorithm that is widely used in previous unsupervised methods is applied to warp the image in the source frame to the target frame, which is shown as the synthesized target image. Because of the inconsistent visual information between the frames caused by motion and view-field changes, synthesized target images produced by view reconstruction are incomplete when compared with the real target image. These unreconstructed areas in the synthesized target images become a distinctive feature between the real and fake images for the discriminator, which will help the discriminator to distinguish them, thereby breaking the balance of the max-min game in adversarial learning. Moreover, because the visual field changes between the adjacent frames are inevitable, these distinctive features (unreconstructed regions) usually persist and cannot be eliminated by continuous training of the generator. In this paper, to eliminate the effects of the unreconstructed regions on the discriminator, we present a Boolean mask to preprocess the real target images and construct the same unreconstructed regions on the real target image as the synthesized target image, as shown in Fig. 3. Therefore, the synthesized and real target images contain the same data distribution of these unreconstructed regions, which will have a positive effect on the training of the discriminator and thus the adversarial training process.

III-B Unsupervised depth and pose estimation

Generator: Our generator consists of three networks for different tasks, as shown in Fig. 3; a DepthNet for monocular depth prediction, a PoseNet for regressing the ego-motion between the target and source image, and a MaskNet for mask estimation. The view reconstruction algorithm is utilized to reconstruct the target image from a source image based on the output of the pose and depth networks. The mask predicted by MaskNet is utilized to eliminate the impacts of incomplete reconstruction regions on reconstruction loss and adversarial loss. For reconstruction loss, the effectiveness of mask has been demonstrated in recent works [2, 13]. For adversarial loss, we tackle this challenge by converting the mask into the Boolean mask to preprocess the target images and synthesize the same unreconstructed regions on target images as that on synthesized images. We train the proposed architecture in an unsupervised manner, and the overall objective loss function is formulated as follows:

| (1) |

where , , and are the balance factors between the loss terms. refers to the proposed scale consistency loss, and is a regularization term to constrain the training of MaskNet, which is inspired by Zhou et al. [2]. denotes the adversarial loss. stands for the basic loss. The basic loss is used to assist the training process of the generator and consists of two parts, the traditional reconstruction loss and the smoothness loss :

| (2) |

where and are the balance factors.

Reconstruction Loss: With the output of DepthNet and PoseNet, the target images can be reconstructed from the source images through the view reconstruction algorithm, which is widely used in previous unsupervised monocular depth estimation frameworks [2, 16, 17]. The principle of this algorithm is based on the following projection function:

| (3) |

where stands for the camera intrinsics matrix. denotes the depth estimation of the target image , and represents the predicted 6-DOF transformation between the target image and the source image . The pixels of two images establish the correspondence by a projection function. Then, we synthesize by warping the image in source frame to target frame. Finally, the reconstruction loss is formulated as follows:

| (4) |

where refers to a balance factor, and SSIM [18] is an index that shows the structural similarity between and .

Smoothness Loss: To filter out erroneous predictions and promote the representation of the geometric structure, a smoothness loss is designed to constrain the smoothness of the predicted depth map. Inspired by [33], we adopt the second-order differential for improving the smoothness:

| (5) |

where denotes the second-order differential operator, and is the transpose operation.

Traditional mask for reconstruction loss: To eliminate the influence of the visual field changes and the dynamic objects on reconstruction loss, we use a MaskNet to predict these unreconstructed regions. During training, a MaskNet is designed to estimate the different regions between the real target image and the synthesized target image , formatted as , and a regularization term is used to constrain the MaskNet during training, which is similar to [2]. Our intention is to use the MaskNet to predict the regions that could not be reconstructed on synthesized images. Considering the effect of incomplete reconstruction on the reconstruction loss, the mask predicted by MaskNet is introduced into :

| (6) |

Our final basic model with the mask is formulated as follow:

| (7) |

Scale Consistency Loss: In unsupervised monocular frameworks, the depth and pose networks are trained by unlabelled short snippets. However, different snippets have different scale factors (compared with the ground truth) because there is no corresponding scale constraint loss applied. Therefore, previous pose and depth networks [2, 16] cannot provide scale-consistent results between different snippets. Bian et al. [17] consider the scale-consistency of pose networks for generating full trajectories over long monocular videos. Similarly, this paper proposes a novel adaptive loss for better constraint on geometric and scale consistency between snippets, which is formulated as follows:

| (8) |

| (9) |

where refers to a balance factor. is computed by the projection algorithm shown in Eq. (9), and it has the same scale information as . stands for the predicted depth map of the target image. is the predicted depth map of the source image (the target image of the next frame snippet). is reconstructed from by the warping algorithm, which is similar to the view reconstruction process in [2], and it contains the same scale information as . Then, SSIM loss [18] is adopted for the consistency of and in such a way that the scale between the snippets is aligned.

III-C Boolean mask processing for the masked GAN

Original GAN: consists of two components, a generator () and a discriminator () [32]. The is designed to distinguish the real data from synthesized data by learning the difference of data distribution. At the same time, the major task of the generator is to generate a set of data with the same distribution as the real data to fool the discriminator. Therefore, a max-min game is played between the generator and discriminator, and the loss function of the original GAN [32] is formulated as:

| (10) | ||||

where and stand for the data distribution of and , respectively. Finally, a mapping relationship between two data distributions is established through adversarial learning.

The unsupervised monocular depth estimation cannot feed the discriminator with a real depth map because there is no ground truth. To address this limitation, instead of distinguishing between the real and predicted depth map, the RGB images synthesized by the view reconstruction are sent to discriminator together with the real target images in the monocular framework combined with GANs [8, 9]:

| (11) | ||||

where and stand for the discriminator and generator of this paper.

Since the data distribution of the unreconstructed regions is unique to the synthetic images, which will affect the adversarial learning, we propose a Boolean mask to reduce the impact of the unreconstructed regions on the adversarial learning, and the same unreconstructed regions as synthesized images are produced on the target images.

Boolean mask processing: First, we transfer the floating-point mask predicted by MaskNet to a Boolean type by comparing it with a threshold :

| (12) |

where stands for the pixel index on the mask. Then, to eliminate the distinctive feature (unreconstructed regions in synthesized images) between the synthesized and real target images, we use the Boolean mask to reweight the target image and get similar unreconstructed regions as the synthesized image on the target image :

| (13) |

Besides, to prevent this Boolean mask processing step from introducing the new and particular noises into target images, which will also influence the training of discriminator, we do the same Boolean mask processing operation to the synthesized image :

| (14) |

Masked GAN: After preprocessing by the Boolean mask, the masked target image (real) and synthesized target image (fake) are sent to the discriminator, and based on [32], our final adversarial loss is formulated as follows:

| (15) | ||||

where and are calculated by Boolean mask processing, Eq.13 and Eq. 14.

Floating-point mask processing: To verify the effectiveness of Boolean mask processing, instead of transferring the mask to the Boolean one, the floating-point mask predicted by MaskNet is directly used to preprocess the synthesized and real target images (, ):

| (16) |

Therefore, based on [32], the adversarial loss combined with floating-point mask processing is formulated as:

| (17) | ||||

where and are calculated by floating-point mask processing, shown in Eq.(16).

| Lower is better | Higher is better | |||||||

| Ablation study based on different loss function | ||||||||

| Method | Resolution | Abs Rel | Sq Rel | RMSE | RMSE log | |||

| 416128 | 0.154 | 1.287 | 5.918 | 0.236 | 0.796 | 0.925 | 0.970 | |

| + | 416128 | 0.152 | 1.166 | 5.552 | 0.225 | 0.794 | 0.934 | 0.974 |

| + + | 416128 | 0.148 | 1.104 | 5.667 | 0.227 | 0.801 | 0.932 | 0.974 |

| + + + | 416128 | 0.145 | 1.095 | 5.601 | 0.223 | 0.803 | 0.932 | 0.975 |

| + + + | 416128 | 0.147 | 1.086 | 5.555 | 0.223 | 0.799 | 0.934 | 0.976 |

| + + + | 416128 | 0.146 | 1.084 | 5.445 | 0.221 | 0.807 | 0.936 | 0.976 |

| Comparison between different mask networks | ||||||||

| Mask network | Resolution | Abs Rel | Sq Rel | RMSE | RMSE log | |||

| MaskNet (Ours) | 416128 | 0.146 | 1.084 | 5.445 | 0.221 | 0.807 | 0.936 | 0.976 |

| Mask network ([2]) | 416128 | 0.149 | 1.121 | 5.580 | 0.225 | 0.799 | 0.932 | 0.974 |

| Parameter optimization | ||||||||

| Weight | Resolution | Abs Rel | Sq Rel | RMSE | RMSE log | |||

| 416128 | 0.150 | 1.107 | 5.594 | 0.227 | 0.799 | 0.931 | 0.974 | |

| 416128 | 0.148 | 1.091 | 5.536 | 0.223 | 0.802 | 0.934 | 0.976 | |

| 416128 | 0.146 | 1.081 | 5.544 | 0.222 | 0.805 | 0.934 | 0.975 | |

| 416128 | 0.147 | 1.103 | 5.520 | 0.224 | 0.805 | 0.934 | 0.975 | |

| 416128 | 0.146 | 1.081 | 5.544 | 0.222 | 0.805 | 0.934 | 0.975 | |

| 416128 | 0.146 | 1.084 | 5.445 | 0.221 | 0.807 | 0.936 | 0.976 | |

| 416128 | 0.147 | 1.091 | 5.448 | 0.222 | 0.804 | 0.936 | 0.976 | |

IV Experiments

IV-A Implementation Details

Network architecture: Our unsupervised method mainly consists of two modules, the generator and the discriminator, and the model can be divided into three subnetworks, the MaskNet coupled with the PoseNet, the DepthNet, and the discriminator network. For the DepthNet, we follow previous work [6, 17] and take the DispResNet [16] as our DepthNet. The DepthNet takes a single image as input and outputs a depth map in an end-to-end manner. For the PoseNet and MaskNet, we directly adopt the coupled framework proposed in [2, 13], while the main difference is that we add the skip-connections between the corresponding encoding and decoding layers to improve the performance of the mask prediction. This coupled framework takes a concatenated RGB image snippet as input, which consists of one target image and two or four source images. The PoseNet predicts the 6 DOF poses between the target and source images, and the MaskNet predicts the masks to handle the occlusions and visual field changes between the target and source images. The architecture of discriminator network is designed with reference to the encoder of DispNet [34]. The real target images and synthesized target images are both preprocessed by a Boolean mask processing step before being sent to the discriminator. During training, the discriminator outputs the probability that the input image is real or fake, and the adversarial learning between the generator and discriminator improves the training of the depth and pose networks.

Training detail: The proposed adversarial learning framework is implemented using TensorFlow [35]. For the depth prediction task, we train and test our DepthNet on the KITTI raw dataset [19] by using Eigen’s split [1]. For the pose prediction task, following [26], we train and test our PoseNet on the KITTI odometry dataset [19], where the sequences 00-08 are used for training and sequences 09-10 are used for testing. The setup of our training and testing sets is the same as the ones in previous related studies [2, 16, 17, 26]. We regard a snippet of three (for training PoseNet) or five (for training DepthNet) sequential video frames as a training sample, which consists of one target image (middle frame) and two or four source images. We have done experiments with two different resolutions, and . We train our framework in 50 epochs and obtain 10 randomly sampled batches in one epoch for validation, which is different from previous work in which the framework is trained in 100 epochs [36] or 200 epochs [17] and 1000 randomly sampled batches are obtained in one epoch for validation, and thus, it indicates that our model shows a good convergence rate. Following [17, 36], we also pre-train our networks on Cityscapes [37] and fine-tune it on KITTI [19], each for 50 epochs.

The training of our networks is based on a single RTX 8000 GPU. During training, the image resolution is resized to or , the weights of the generator and discriminator are optimized by ADAM optimizers [38] with , , and the learning rate is 0.0002. During training, we adopt , , , , , , , . Our parameters are set based on the experiments or related work [16], and we show some experimental results of hyper-parameter optimization in Table I.

| Lower is better | Higher is better | ||||||||

|---|---|---|---|---|---|---|---|---|---|

| Method | Resolution | SC | Abs Rel | Sq Rel | RMSE | RMSE log | |||

| Kumar et al. [8] | 384128 | 0.211 | 1.980 | 6.154 | 0.264 | 0.732 | 0.898 | 0.959 | |

| GANVO ∗ [9] | 416128 | 0.150 | 1.141 | 5.448 | 0.216 | 0.808 | 0.939 | 0.975 | |

| Li et al. ∗ [13] | 416128 | 0.150 | 1.127 | 5.564 | 0.229 | 0.823 | 0.936 | 0.974 | |

| Ours | 416128 | 0.146 | 1.084 | 5.445 | 0.221 | 0.807 | 0.936 | 0.976 | |

| Ours | 640192 | 0.139 | 1.034 | 5.264 | 0.214 | 0.821 | 0.942 | 0.978 | |

Evaluation metrics: For depth evaluation, the commonly used evaluation metrics proposed by Eigen et al. [1] are used in this paper to compare our method with others fairly; these include five evaluation indicators: RMSE, RMSE log, Abs Rel, Sq Rel, Accuracy:

-

•

,

-

•

,

-

•

,

-

•

,

-

•

Accuracy: of s.t. ,

where and denote the predicted depth of pixel and the corresponding ground truth, and denotes the total number of pixels that correspond to the ground truth depth value. denotes a threshold, which is always set to , , and .

For the trajectory evaluation, the generated full trajectory is evaluated by the standard evaluation metrics provided in the dataset [19], including a translation error metric and a rotation error metric . Compared with the 5-frame pose evaluation that proposed in [2], the evaluation metrics in this paper are more widely used in traditional VO methods and are more meaningful.

IV-B Monocular Depth Estimation

Ablation study: In this section, we first conduct a series of ablation experiments to validate the efficacy of our proposed framework, as shown in Table I. The “” denotes that our framework is trained by the basic loss (Eq. (2)), which consists of the reconstruction loss (, Eq. (4)) and smoothness loss (, Eq. (5)). Then, scale consistency loss (, Eq. (8)), adversarial learning (, Eq. (11)), masks for reconstruction loss (, Eq. (7), ), and Boolean mask processing (, Eq. (15)) are introduced sequentially into training. Although both the mask and Boolean mask processing (BMP) in Table I are based on the output of MaskNet, the mask is to reduce the effect of the unreconstructed regions on the reconstruction loss, while BMP is applied to eliminate the impact of the unreconstructed regions on the adversarial loss.

| Lower is better | Higher is better | ||||||||||

| Method | Dataset | Supervision | Resolution | Cap | Abs Rel | Sq Rel | RMSE | RMSE log | |||

| SfMLearner [2] | K | Mono. | 416128 | 80m | 0.208 | 1.768 | 6.865 | 0.283 | 0.678 | 0.885 | 0.957 |

| Yang et al. [39] | K | Mono. | 416128 | 80m | 0.182 | 1.481 | 6.501 | 0.267 | 0.725 | 0.906 | 0.963 |

| Vid2depth [40] | K | Mono. | 416128 | 80m | 0.163 | 1.240 | 6.220 | 0.250 | 0.762 | 0.916 | 0.968 |

| GeoNet-ResNet. [16] | K | Mono. | 416128 | 80m | 0.155 | 1.296 | 5.857 | 0.233 | 0.793 | 0.931 | 0.973 |

| Wang et al. [27] | K | Mono. | 416128 | 80m | 0.154 | 1.163 | 5.700 | 0.229 | 0.792 | 0.932 | 0.974 |

| SC-SfM-ResNet [17] | K | Mono. | 416128 | 80m | 0.149 | 1.137 | 5.771 | 0.230 | 0.799 | 0.932 | 0.973 |

| Xiong et al. [36] | K | Mono. | 416128 | 80m | 0.148 | 1.077 | 5.506 | 0.228 | 0.806 | 0.934 | 0.973 |

| Ours | K | Mono | 416128 | 80m | 0.146 | 1.084 | 5.445 | 0.221 | 0.807 | 0.936 | 0.976 |

| DF-Net [28] | K | Mono. | 576160 | 80m | 0.150 | 1.124 | 5.507 | 0.223 | 0.806 | 0.933 | 0.973 |

| CC [6] | K | Mono. | 832256 | 80m | 0.140 | 1.070 | 5.326 | 0.217 | 0.826 | 0.941 | 0.975 |

| SC-SfM [17] | K | Mono. | 832256 | 80m | 0.137 | 1.089 | 5.439 | 0.217 | 0.830 | 0.942 | 0.975 |

| Xiong et al. [36] | K | Mono. | 832256 | 80m | 0.140 | 1.061 | 5.309 | 0.219 | 0.823 | 0.940 | 0.976 |

| Ours | K | Mono. | 640192 | 80m | 0.139 | 1.034 | 5.264 | 0.214 | 0.821 | 0.942 | 0.978 |

| DF-Net [28] | CS+K | Mono. | 576160 | 80m | 0.146 | 1.182 | 5.215 | 0.213 | 0.818 | 0.943 | 0.978 |

| CC [6] | CS+K | Mono. | 832256 | 80m | 0.139 | 1.032 | 5.199 | 0.213 | 0.827 | 0.943 | 0.977 |

| SC-SfM [17] | CS+K | Mono. | 832256 | 80m | 0.128 | 1.047 | 5.234 | 0.208 | 0.846 | 0.947 | 0.976 |

| Xiong et al. [36] | CS+K | Mono. | 832256 | 80m | 0.126 | 0.902 | 5.052 | 0.205 | 0.851 | 0.950 | 0.979 |

| Ours | CS+K | Mono. | 640192 | 80m | 0.135 | 1.026 | 5.153 | 0.210 | 0.833 | 0.945 | 0.979 |

From the results shown in Table I, comparing lines 1 and 2 in the table, the proposed scale consistency loss is conducive to improvement in the depth estimation. Comparing lines 2 and 3, the introduction of adversarial learning (GAN) does not effectively improve the accuracy of the depth network, , the introduced adversarial learning does not play a key role in the training of DepthNet. In addition, we achieve a better result when the proposed is used to reduce the impact of the unreconstructed regions on adversarial learning. Therefore, we believe that the unreconstructed regions influence the performance of adversarial learning. Then, the mask predicted by MaskNet is introduced into the framework, which is shown as “ + + + ”. From the evaluation metrics, the depth estimation accuracy of “ + + + ” outperforms that of “ + + ”, which means that the adopted mask improves the training process of the network. With the qualitative results on the mask in Fig. 4, the unreconstructed regions of the synthesized images are accurately predicted in the masks. Therefore, the reason for accuracy improvement in the depth estimation is that the mask can significantly mitigate the influence of the unreconstructed regions on the reconstruction loss. Afterwards, we adopt the BMP in this paper to mask the real target images (real) and synthesized target images (fake) before sending them to the discriminator, which is shown as “ + + + ”, and the best result among the four cases is achieved. In addition, the qualitative results of the BMP shown in Fig. 4 also demonstrate that our proposed BMP can construct similar unreconstructed regions in target images, which helps to reduce the impact of the unreconstructed regions on the adversarial learning and plays a key role in adversarial learning. In summary, this ablation study indicates the effectiveness of our proposed modules.

Comparison of different mask networks: Since the mask predicted by the MaskNet is very important in this paper and plays a key role in the adversarial loss and reconstructing loss, we applied some modifications (skip connection between encoder and decoder) on the mask network proposed in [2] to improve its performance. As shown in Table I, the experimental result shows that these modifications effectively improve the performance of our framework, which also illustrates the importance of the MaskNet to our framework.

Comparison of different mask processing: To further verify the ability of the Boolean mask, we compare the GAN processed by the BMP () with cases of GANs processed by floating-point mask (FMP) () and the original GAN without mask processing (); the results are shown in lines 4, 5 and 6 of Table I. Although the FMP cannot create similar unreconstructed regions on real target images as synthesized target images, it can be seen from Table I that FMP plays a positive role in improving the performance of adversarial learning when compared with the original GAN. According to the examples of the different mask processing results shown in Fig. 5, the effect of the FMP is not as obvious as that of the BMP. In addition, the experimental results in Table I show that both the FMP and BMP are effective in accuracy improvement of the depth estimation, and the BMP works the best.

Comparison with the methods using GANs: We compare our method with the unsupervised monocular methods proposed in [8, 9, 13], which introduce adversarial learning into the training framework. Note that the GANVO [9] generates its depth prediction from a vector, which means that their depth network cannot be used independently and predict the depth in an end-to-end manner. At the same time, the depth network in [13] uses a single image as well as the temporal information for the depth estimation, and as a result, this network takes more information than ours on depth estimation. In addition, because of the LSTM module used in their framework in [13], the accuracy of the depth network depends on the image sequences, and the depth network cannot predict the depth map from only a single image, which limits its practical applications. Although our DepthNet is jointly trained with other networks in an unsupervised manner, it can be used independently during testing and has the ability to accurately generate depth maps from single images, which enables it to perform depth estimation on some independent images such as network images. The quantitative results are shown in Table II, and our method obtain the competitive results.

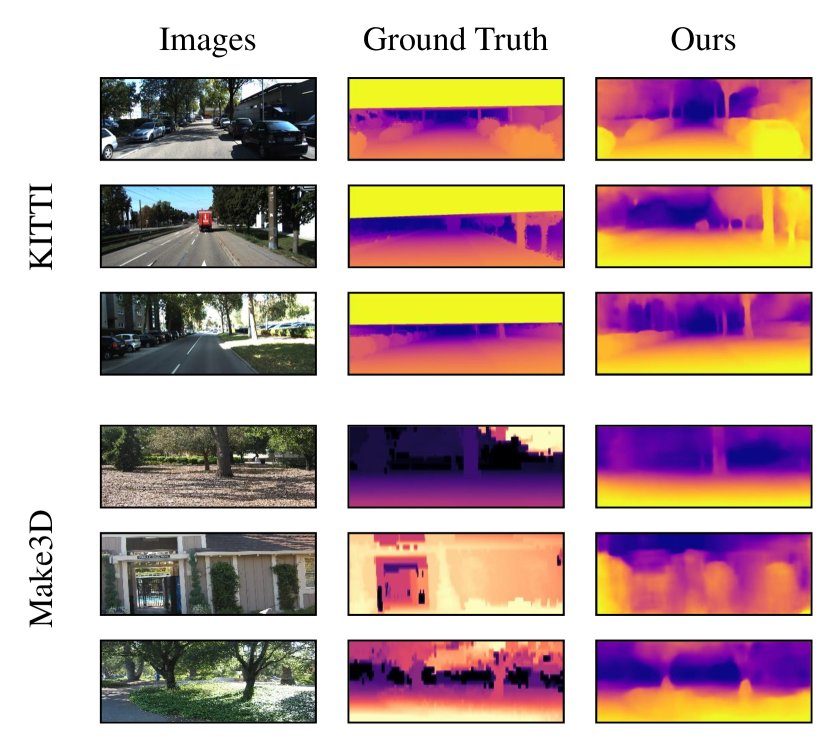

Comparison with previous work: The qualitative and quantitative results of our DepthNet are evaluated by public metrics and are shown in Fig. 6 and Table III. Comparing the ground truth with our predicted depth maps in Fig. 6, our deep network predicts the depth information of the geometric structures in the scenes, such as trees, streets, cars, and buildings. Note that higher resolutions include detailed geometric details, and thus, we divide the results of the different methods according to the resolution of their input images for fairness. We choose images with different resolutions as input to our DepthNet. As shown in Table III, we obtain competitive performance on end-to-end monocular depth prediction with different resolution, 416128 and 640192. Our results also prove that a high-resolution input is conducive to improvement in the accuracy.

Evaluation based on other datasets: Previous methods [16, 17, 6] have proved that depth networks will get better results if the models are pre-trained on the Cityscapes datasets [37] before training on KITTI dataset [19]. Following this approach, we first use the Cityscapes dataset to pre-train our total framework, and then the pre-trained model is further trained by the KITTI training set, which is shown as “CS+K” in Tables III and V.

To further evaluate the performance of our depth network on other data domains, following [36], we directly test our depth model trained by “CS+K” on the Make3D test set [20]. As shown in Table IV, our DepthNet also shows good performance when compare with previous methods, which means that our model has better transferability. Our qualitative results are illustrated in Fig. 6.

IV-C Trajectory Prediction

For PoseNet, following Zhan et al. [26] and Bian et al. [17], the standard evaluation tools provided by the dataset are used to evaluate the full predicted trajectories, which are different from previous monocular DL-based pose evaluation methods [2, 16, 6, 42]. Table V shows the average rotation and translation errors of the predicted trajectories on the KITTI odometry sequences 09-10. As shown in Table V, comparing the results of “Ours(Without )” and “Ours”, the proposed scale consistency loss is effective in constraining the scale consistency in the trajectory prediction. Although the method in [13] also considers the scale-inconsistency of pose estimation, the generated trajectories are neither evaluated in the article nor published online, and thus, we cannot make a quantitative comparison with their trajectories. The visual results are shown in Fig. 7, which are drawn by evo tools [43] with automatic scale alignment for the full trajectory. The method [26] trained with stereo image pairs does not have the problem of scale inconsistency because it learns the scale information from stereo image pairs during the training. Monocular methods [2, 16, 44] train their network with monocular sequence and suffer from per-pose scale ambiguity and inconsistency. At the same time, the scale information between the different snippets predicted by PoseNet is inconsistent in such a way that it cannot provide an accurate trajectory. Bian et al. tackle this problem by geometric alignment, and our framework obtains a better result than theirs. As shown in Table. V, our model, when trained on “CS+K”, does not obtain a large improvement similar to others [28, 17] when compared with the model trained on “K”.

| Seq. 09 | Seq. 10 | ||||

| Method | Supervision | ||||

| ORB-SLAM [15] | - | 15.30 | 0.26 | 3.68 | 0.48 |

| SfMLearner [2] | Mono./ K | 17.84 | 6.78 | 37.91 | 17.78 |

| GeoNet [16] | Mono./ K | 41.47 | 13.14 | 32.74 | 13.12 |

| Zhan et al. [26] | Stereo./ K | 11.93 | 3.91 | 12.45 | 3.46 |

| Bian et al. [17] | Mono./ K | 11.2 | 3.35 | 10.1 | 4.96 |

| Wang et al. [44] | Mono./ K | 9.88 | 3.40 | 12.24 | 5.20 |

| Ours(Without ) | Mono./ K | 12.83 | 3.87 | 13.58 | 4.33 |

| Ours | Mono./ K | 8.71 | 3.10 | 9.63 | 3.42 |

| Bian et al. [17] | Mono./ CS+K | 8.24 | 2.19 | 10.7 | 4.58 |

| Xiong et al. [36] | Mono./ CS+K | 5.85 | 1.73 | 10.11 | 3.89 |

| Ours | Mono./ CS+K | 8.13 | 2.64 | 9.74 | 3.58 |

In addition, as shown in Table V, because of the strong back-end optimization, ORB-SLAM [15] shows more powerful performance in position and orientation prediction than deep learning-based VO methods. Although there is still a large gap compared with the traditional VO method [15], our PoseNet has the ability to predict the full trajectory over a long monocular video in an end-to-end manner.

V Conclusions

In this paper, we present an unsupervised monocular depth and VO estimation framework that has scale consistency through adversarial learning methods. The proposed method considers the impact of incomplete reconstruction caused by dynamic objects, occlusions and visual field changes on the reconstruction loss and adversarial loss, which in turn affects the training of the discriminator and generator networks. This paper tackles these problems by introducing MaskNet. The mask predicted by MaskNet is used to reduce the impact of incomplete reconstruction on the reconstruction loss. In addition, we design a BMP to preprocess real images to produce the same data distribution as the unreconstructed areas on the synthesized images, thus restoring the balance between the generator and discriminator. Furthermore, we tackle the scale-inconsistency in pose and depth estimation by introducing an adaptive constraint. With the proposed adversarial learning framework, our depth model shows competitive results with state-of-the-art methods, and our pose model has the ability to provide a global trajectory over a long monocular sequence, which is meaningful for practical applications. In the future, we will improve the geometric constraints for more accurate trajectory prediction.

References

- [1] D. Eigen, C. Puhrsch, and R. Fergus, “Depth map prediction from a single image using a multi-scale deep network,” in Advances in Neural Information Processing Systems, 2014, pp. 2366–2374.

- [2] T. Zhou, M. Brown, N. Snavely, and D. G. Lowe, “Unsupervised learning of depth and ego-motion from video,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2017, pp. 1851–1858.

- [3] C. Zhao, Q. Sun, C. Zhang, Y. Tang, and F. Qian, “Monocular Depth Estimation Based On Deep Learning: An Overview,” Science China Technological Sciences, pp. 1612–1627, 2020.

- [4] C. Zhang, J. Wang, G. G. Yen, C. Zhao, Q. Sun, Y. Tang, F. Qian, and J. Kurths, “When autonomous systems meet accuracy and transferability through ai: A survey,” Patterns (Cell Press), vol. 1, no. 4, art. No. 100050, 2020.

- [5] P.-Y. Chen, A. H. Liu, Y.-C. Liu, and Y.-C. F. Wang, “Towards scene understanding: Unsupervised monocular depth estimation with semantic-aware representation,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2019, pp. 2624–2632.

- [6] A. Ranjan, V. Jampani, L. Balles, K. Kim, D. Sun, J. Wulff, and M. J. Black, “Competitive Collaboration: Joint Unsupervised Learning of Depth, Camera Motion, Optical Flow and Motion Segmentation,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2019, pp. 12 240–12 249.

- [7] J. Zhang and C. Li, “Adversarial examples: Opportunities and challenges,” IEEE Transactions on Neural Networks and Learning Systems, vol. 31, no. 7, pp. 2578–2593, 2020.

- [8] A. CS Kumar, S. M. Bhandarkar, and M. Prasad, “Monocular depth prediction using generative adversarial networks,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, 2018, pp. 300–308.

- [9] Y. Almalioglu, M. R. U. Saputra, P. P. de Gusmao, A. Markham, and N. Trigoni, “GANVO: Unsupervised deep monocular visual odometry and depth estimation with generative adversarial networks,” in 2019 International Conference on Robotics and Automation (ICRA). IEEE, 2019, pp. 5474–5480.

- [10] S. Zhao, H. Fu, M. Gong, and D. Tao, “Geometry-Aware Symmetric Domain Adaptation for Monocular Depth Estimation,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2019, pp. 9788–9798.

- [11] H. Jung, Y. Kim, D. Min, C. Oh, and K. Sohn, “Depth prediction from a single image with conditional adversarial networks,” in 2017 IEEE International Conference on Image Processing (ICIP). IEEE, 2017, pp. 1717–1721.

- [12] A. Pilzer, D. Xu, M. Puscas, E. Ricci, and N. Sebe, “Unsupervised adversarial depth estimation using cycled generative networks,” in 2018 International Conference on 3D Vision (3DV). IEEE, 2018, pp. 587–595.

- [13] S. Li, F. Xue, X. Wang, Z. Yan, and H. Zha, “Sequential adversarial learning for self-supervised deep visual odometry,” in Proceedings of the IEEE International Conference on Computer Vision, 2019, pp. 2851–2860.

- [14] J. Engel, V. Koltun, and D. Cremers, “Direct sparse odometry,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 40, no. 3, pp. 611–625, 2017.

- [15] R. Mur-Artal and J. D. Tardós, “ORB-SLAM2: An open-source slam system for monocular, stereo, and rgb-d cameras,” IEEE Transactions on Robotics, vol. 33, no. 5, pp. 1255–1262, 2017.

- [16] Z. Yin and J. Shi, “GeoNet: Unsupervised learning of dense depth, optical flow and camera pose,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2018, pp. 1983–1992.

- [17] J.-W. Bian, Z. Li, N. Wang, H. Zhan, C. Shen, M.-M. Cheng, and I. Reid, “Unsupervised Scale-consistent Depth and Ego-motion Learning from Monocular Video,” in Thirty-third Conference on Neural Information Processing Systems (NeurIPS), 2019, pp. 35–45.

- [18] Z. Wang, A. C. Bovik, H. R. Sheikh, E. P. Simoncelli et al., “Image quality assessment: from error visibility to structural similarity,” IEEE Transactions on Image Processing, vol. 13, no. 4, pp. 600–612, 2004.

- [19] A. Geiger, P. Lenz, C. Stiller, and R. Urtasun, “Vision meets robotics: The KITTI dataset,” The International Journal of Robotics Research, vol. 32, no. 11, pp. 1231–1237, 2013.

- [20] A. Saxena, M. Sun, and A. Y. Ng, “Make3d: Learning 3d scene structure from a single still image,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 31, no. 5, pp. 824–840, 2008.

- [21] A. Kendall, M. Grimes, and R. Cipolla, “Posenet: A convolutional network for real-time 6-dof camera relocalization,” in Proceedings of the IEEE International Conference on Computer Vision, 2015, pp. 2938–2946.

- [22] F. Xue, X. Wang, S. Li, Q. Wang, J. Wang, and H. Zha, “Beyond tracking: Selecting memory and refining poses for deep visual odometry,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2019, pp. 8575–8583.

- [23] A. CS Kumar, S. M. Bhandarkar, and M. Prasad, “Depthnet: A recurrent neural network architecture for monocular depth prediction,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, 2018, pp. 283–291.

- [24] R. Garg, V. K. BG, G. Carneiro, and I. Reid, “Unsupervised cnn for single view depth estimation: Geometry to the rescue,” in European Conference on Computer Vision. Springer, 2016, pp. 740–756.

- [25] C. Godard, O. Mac Aodha, and G. J. Brostow, “Unsupervised monocular depth estimation with left-right consistency,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2017, pp. 270–279.

- [26] H. Zhan, R. Garg, C. Saroj Weerasekera, K. Li, H. Agarwal, and I. Reid, “Unsupervised learning of monocular depth estimation and visual odometry with deep feature reconstruction,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2018, pp. 340–349.

- [27] G. Wang, H. Wang, Y. Liu, and W. Chen, “Unsupervised Learning of Monocular Depth and Ego-Motion Using Multiple Masks,” in 2019 International Conference on Robotics and Automation (ICRA). IEEE, 2019, pp. 4724–4730.

- [28] Y. Zou, Z. Luo, and J.-B. Huang, “Df-Net: Unsupervised joint learning of depth and flow using cross-task consistency,” in Proceedings of the European Conference on Computer Vision (ECCV), 2018, pp. 36–53.

- [29] B.-U. Lee, H.-G. Jeon, S. Im, and I. S. Kweon, “Depth completion with deep geometry and context guidance,” in 2019 International Conference on Robotics and Automation (ICRA). IEEE, 2019, pp. 3281–3287.

- [30] S. Imran, Y. Long, X. Liu, and D. Morris, “Depth coefficients for depth completion,” in 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). IEEE, 2019, pp. 12 438–12 447.

- [31] C. Chen, S. Rosa, Y. Miao, C. X. Lu, W. Wu, A. Markham, and N. Trigoni, “Selective sensor fusion for neural visual-inertial odometry,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2019, pp. 10 542–10 551.

- [32] I. Goodfellow, J. Pouget-Abadie, M. Mirza, B. Xu, D. Warde-Farley, S. Ozair, A. Courville, and Y. Bengio, “Generative adversarial nets,” in Advances in Neural Information Processing Systems, 2014, pp. 2672–2680.

- [33] Z. Yang, P. Wang, Y. Wang, W. Xu, and R. Nevatia, “LEGO: Learning Edge with Geometry all at Once by Watching Videos,” in 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2018, pp. 225–234.

- [34] N. Mayer, E. Ilg, P. Hausser, P. Fischer, D. Cremers, A. Dosovitskiy, and T. Brox, “A large dataset to train convolutional networks for disparity, optical flow, and scene flow estimation,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2016, pp. 4040–4048.

- [35] M. Abadi, A. Agarwal, P. Barham, E. Brevdo, Z. Chen, C. Citro, G. S. Corrado, A. Davis, J. Dean, M. Devin et al., “Tensorflow: Large-scale machine learning on heterogeneous distributed systems,” arXiv preprint arXiv:1603.04467, 2016.

- [36] M. Xiong, Z. Zhang, W. Zhong, J. Ji, J. Liu, and H. Xiong, “Self-supervised Monocular Depth and Visual Odometry Learning with Scale-consistent Geometric Constraints,” the Twenty-Ninth International Joint Conference on Artificial Intelligence (IJCAI-20), 2020.

- [37] M. Cordts, M. Omran, S. Ramos, T. Rehfeld, M. Enzweiler, R. Benenson, U. Franke, S. Roth, and B. Schiele, “The Cityscapes Dataset for Semantic Urban Scene Understanding,” in Proc. of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2016, pp. 3213–3223.

- [38] D. P. Kingma and J. Ba, “Adam: A method for stochastic optimization,” arXiv preprint arXiv:1412.6980, 2014.

- [39] Z. Yang, P. Wang, W. Xu, L. Zhao, and R. Nevatia, “Unsupervised learning of geometry with edge-aware depth-normal consistency,” arXiv preprint arXiv:1711.03665, 2017.

- [40] R. Mahjourian, M. Wicke, and A. Angelova, “Unsupervised learning of depth and ego-motion from monocular video using 3d geometric constraints,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2018, pp. 5667–5675.

- [41] C. Wang, J. Miguel Buenaposada, R. Zhu, and S. Lucey, “Learning depth from monocular videos using direct methods,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2018, pp. 2022–2030.

- [42] T. Feng and D. Gu, “Sganvo: Unsupervised deep visual odometry and depth estimation with stacked generative adversarial networks,” IEEE Robotics and Automation Letters, vol. 4, no. 4, pp. 4431–4437, 2019.

- [43] M. Grupp, “evo: Python package for the evaluation of odometry and SLAM.” https://github.com/MichaelGrupp/evo, 2017.

- [44] R. Wang, S. M. Pizer, and J.-M. Frahm, “Recurrent neural network for (un-) supervised learning of monocular video visual odometry and depth,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2019, pp. 5555–5564.