MarsSQE: Stereo Quality Enhancement for Martian Images Using Bi-level Cross-view Attention

Abstract

Stereo images captured by Mars rovers are transmitted after lossy compression due to the limited bandwidth between Mars and Earth. Unfortunately, this process results in undesirable compression artifacts. In this paper, we present a novel stereo quality enhancement approach for Martian images, named MarsSQE. First, we establish the first dataset of stereo Martian images. Through extensive analysis of this dataset, we observe that cross-view correlations in Martian images are notably high. Leveraging this insight, we design a bi-level cross-view attention-based quality enhancement network that fully exploits these inherent cross-view correlations. Specifically, our network integrates pixel-level attention for precise matching and patch-level attention for broader contextual information. Experimental results demonstrate the effectiveness of our MarsSQE approach.

Index Terms:

Stereo quality enhancement, Martian images, attention mechanisms.I Introduction

Driven by the rapid advancements in Mars exploration, rovers such as Perseverance [1] and Zhu Rong [2] have successfully landed on Mars and captured invaluable images showcasing the Martian surface. These images provide invaluable data for scientific research. However, the vast communication distance between Earth and Mars—reaching up to 400 million kilometers [3]—poses a major challenge for transmitting these images. To overcome this challenge, Martian images are typically subjected to lossy compression [4], which inevitably introduces compression artifacts and degrades image quality. This highlights the need for Martian image quality enhancement.

To the best of our knowledge, there is only one pioneering study on the quality enhancement of Martian images [5], which leverages semantic similarities among Martian images. In addition, approaches for enhancing Earth image quality offer straightforward solutions for improving Martian image quality. For instance, Dong et al. [6] introduced the first Convolutional Neural Network (CNN) for quality enhancement, proposing a four-layer network named the Artifacts Reduction CNN (AR-CNN). Zhang et al. [7] developed a Denoising CNN (DnCNN) capable of removing blocking effects caused by JPEG compression. Further advancements have focused on blind and resource-efficient quality enhancement [8, 9, 10, 11, 12].

The aforementioned quality enhancement approaches are all monocular-based, relying solely on single-view images. However, Mars rovers are equipped with stereo cameras to capture binocular images for depth estimation and navigation [13, 14]. These stereo images exhibit cross-view correlations unavailable in single-view images, making them valuable for quality enhancement tasks. In fact, studies on Earth image quality enhancement have demonstrated the potential of cross-view information exchange. For instance, PASSRnet [15] and iPASSR [16] utilize cross-view information for stereo super-resolution, achieving superior results compared to monocular approaches.

Despite this potential, stereo quality enhancement for Martian images remains unexplored. To address this gap, we establish the first stereo Martian image dataset for quality enhancement. Through extensive analysis, we confirm that these images exhibit notably high intra-view and cross-view correlations. Motivated by these insights, we propose a novel stereo quality enhancement approach for Martian images, named MarsSQE. Our approach incorporates a bi-level cross-view attention-based network that fully exploits these inherent correlations. In addition to employing pixel-level attention, commonly used for Earth images [15, 16], our network integrates patch-level attention to capture broader contextual information. This bi-level design is particularly important because the Martian surface is highly unstructured, featuring irregular gravel, rocks, soil, and dunes [17], which challenges precise pixel-level matching [18]. Moreover, accurate cross-view correspondence depends not only on individual pixels but also on their spatial relationships with surrounding pixels, as verified by [18].

Finally, we conduct extensive experiments and validate the effectiveness of our MarsSQE approach. The contributions of this paper are summarized as following:

-

•

We establish and analyze the first dataset of stereo Martian images, highlighting their high inherent intra-view and cross-view correlations.

-

•

We propose the first stereo quality enhancement approach for Martian images, integrating bi-level cross-view attention to effectively exploit these correlations.

II Stereo Martian Image Dataset

Dataset establishment

We establish the first stereo Martian image dataset for quality enhancement. Our dataset comprises 1,350 pairs of stereo Martian images captured by the Mast Camera Zoom (Mastcam-Z)—an imaging system consisting of a pair of RGB cameras mounted on the Perseverance Rover [14]. These image pairs are all binocular with a resolution of . The dataset covers four primary Martian landforms: rock, soil, sand, and sky, as shown in Figure 2. When establishing the dataset, we exclude images with severe occlusion, corruption, or insignificant parallax shifts. The images are of high quality without noticeable artifacts, serving as ground truth for our dataset111High-quality or even lossless Martian images are returned when downlink data volumes are high [14] and thus are available to us.. Following the Mastcam-Z procedure, these high-quality images are then compressed using JPEG to emulate the actual image transmission process, resulting in compressed stereo Martian images.

Intra-view and cross-view correlations of stereo Martian images

We compare Martian images from our dataset with Earth images from the Flickr1024 dataset [19]. Then we calculate the Correlation Coefficient (CC) and Mutual Information (MI), with higher values indicating greater similarity. Specifically, we pair patches within the same image to assess intra-view similarity, and between the left and right images to assess cross-view similarity. As shown in Table I, Martian images exhibit significantly higher CC results than Earth images, suggesting stronger correlations both within and across views. Additionally, Martian images show 21.66% higher MI result for intra-view and 20.61% higher for cross-view, indicating greater information gain in both contexts. These results highlight the significance of the stereo quality enhancement paradigm for Martian images, as it leverages complementary stereo information to improve image quality.

| Metric | Martian | Earth | |

|---|---|---|---|

| Intra-view | CC | 0.7719 | 0.0688 |

| MI | 1.1657 | 0.9582 | |

| Cross-view | CC | 0.7738 | 0.0793 |

| MI | 1.1657 | 0.9665 | |

III MarsSQE Approach

Overview

The overall framework of our MarsSQE approach is shown in Figure 1. Our framework includes three procedures: feature extraction, bi-level cross-view attention, and image reconstruction. Given the compressed image pair and as inputs, we first extract image features and send them to the proposed bi-level cross-view attention sub-network for enhancement. This sub-network employs two patch-level attention modules and one pixel-level attention module, such that details missed in the current view but reserved in the other view during compression can be discovered. Finally, we reconstruct images and from the enhanced features. In the subsequent paragraph, we describe the pipeline of the bi-level cross-view attention sub-network. Then, we detail the design of patch-level and pixel-level attention modules in this sub-network.

Bi-level cross-view attention

Due to the unique unstructured terrain of Mars, Martian images are highly similar, which causes difficulties in stereo image matching. Therefore, we first apply patch-level attention between two views with broader contextual information. As shown in the middle of Figure 1, the resulting feature is concatenated with the input feature and fused by a Channel Attention (CA) layer [20] and a convolution layer with a kernel size of . The fusion of features is then passed through a convolution layer with a kernel size of , followed by a sigmoid layer. After that, a pixel-level attention module is used for precise matching between two views, followed by a convolution layer with a kernel size of and two Residual Dense Blocks (RDBs) [21]. Finally, the feature is enhanced by another patch-level attention module.

Patch-level attention module

As shown in Figure 3 left, the patch-level attention module has two main operations: intra-view patch attention and cross-view patch attention . Mathematically, given input features and , we obtain the enhanced features and by the following processes:

| (1) | ||||

| (2) | ||||

| (3) |

Take the left-view enhancement as an example. Supposing , the input feature is divided into non-overlapping patches of size . In , self-attention is performed in every patch, generating for each view. Within the cross-view attention calculation, the left-view and the right-view take the following calculation:

| (4) |

Pixel-level attention module

As shown in Figure 3 right, we first employ cross-view pixel attention to the left-view feature and the right-view feature , and then employ intra-view pixel attention to each view. This way, we obtain the enhanced features and . The whole process can be formulated as:

| (5) | ||||

| (6) |

where and represent cross-view pixel attention and intra-view pixel attention, respectively.

IV Experiments

IV-A Experimental Settings

We randomly select 800 image pairs from our stereo Martian image dataset for training, and 100 image pairs for testing. Following the Mastcam-Z procedure, JPEG is utilized for Martian image compression, with the Quality Factor (QF) set to 30, 40, 50, and 60 separately. We choose a patch size of 16 in the patch-level attention module. The batch size is set to 4. We use the Adam optimizer [22] with and . The learning rate is set to initially and decreases by 0.9 times every three epochs. Our network is trained with a maximum of two NVIDIA 4090 GPUs using the PyTorch framework. The L1 loss is used for network training, which is formulated as:

| (7) |

where and represent enhanced left and right view images generated by our MarsSQE approach, and and represent their ground-truth raw images.

We compare our MarsSQE approach with several quality enhancement baselines, including AR-CNN [6], DnCNN [7], CBDNet [9], RBQE [10], and MarsQE [5]. We also compare it to super-resolution baselines PASSRnet [15] and iPASSR [16]. MarsQE is the only approach specifically designed for Martian images, while other approaches were originally proposed for Earth images. Among these approaches, PASSRnet and iPASSR handle binocular images, while others are applicable to monocular ones. All monocular approaches are retrained using 800 left-view and 800 right-view Martian images. PASSRnet and iPASSR are also retrained with the scale factor set to 1. Then we calculate the Peak Signal to Noise Ratio (PSNR) and Structural SIMilarity (SSIM) index for enhancement quality.

IV-B Evaluation

| View | QF | JPEG | AR-CNN | DnCNN | CBDNet | RBQE | MarsQE | PASSRnet | iPASSR | MarsSQE |

|---|---|---|---|---|---|---|---|---|---|---|

| Left | 30 | 30.44/0.823 | 31.30/0.838 | 31.60/0.845 | 31.50/0.843 | 31.66/0.847 | 31.68/0.849 | 31.64/0.847 | 31.76/0.850 | 31.86/0.852 |

| 40 | 31.06/0.843 | 31.89/0.856 | 32.14/0.862 | 32.29/0.866 | 32.38/0.868 | 32.40/0.869 | 32.32/0.867 | 32.41/0.868 | 32.50/0.870 | |

| 50 | 31.57/0.858 | 32.41/0.871 | 32.71/0.877 | 32.82/0.880 | 32.95/0.882 | 33.01/0.884 | 32.84/0.880 | 32.89/0.881 | 33.07/0.885 | |

| 60 | 32.14/0.873 | 33.00/0.885 | 33.27/0.891 | 33.46/0.894 | 33.55/0.896 | 33.61/0.897 | 33.30/0.892 | 33.55/0.896 | 33.66/0.898 | |

| Right | 30 | 30.57/0.828 | 31.44/0.844 | 31.74/0.851 | 31.63/0.849 | 31.83/0.853 | 31.84/0.854 | 31.81/0.853 | 31.93/0.855 | 32.04/0.858 |

| 40 | 31.21/0.847 | 32.05/0.862 | 32.29/0.867 | 32.43/0.871 | 32.56/0.873 | 32.58/0.875 | 32.50/0.872 | 32.59/0.874 | 32.69/0.876 | |

| 50 | 31.73/0.862 | 32.57/0.876 | 32.87/0.882 | 32.97/0.884 | 33.15/0.887 | 33.20/0.889 | 33.03/0.885 | 33.06/0.886 | 33.26/0.890 | |

| 60 | 32.29/0.876 | 33.15/0.889 | 33.43/0.895 | 33.61/0.898 | 33.74/0.900 | 33.79/0.901 | 33.48/0.896 | 33.74/0.900 | 33.84/0.902 | |

| Avg. | 30 | 30.50/0.825 | 31.37/0.841 | 31.67/0.848 | 31.57/0.846 | 31.74/0.850 | 31.76/0.851 | 31.73/0.850 | 31.84/0.852 | 31.95/0.855 |

| 40 | 31.14/0.845 | 31.97/0.859 | 32.21/0.865 | 32.36/0.869 | 32.47/0.870 | 32.49/0.872 | 32.41/0.869 | 32.50/0.871 | 32.59/0.873 | |

| 50 | 31.65/0.860 | 32.49/0.873 | 32.79/0.879 | 32.90/0.882 | 33.05/0.885 | 33.10/0.886 | 32.94/0.883 | 32.98/0.883 | 33.16/0.887 | |

| 60 | 32.21/0.875 | 33.08/0.887 | 33.35/0.893 | 33.53/0.896 | 33.65/0.898 | 33.70/0.899 | 33.39/0.894 | 33.64/0.898 | 33.75/0.900 |

| Approach | PSNR | SSIM | Params. |

|---|---|---|---|

| PASSRnet | 31.73 | 0.8502 | 1.36M |

| iPASSR | 31.84 | 0.8524 | 1.37M |

| MarsSQE-S | 31.90 | 0.8537 | 1.00M |

| MarsSQE-M | 31.92 | 0.8539 | 1.32M |

| MarsSQE-L | 31.95 | 0.8550 | 1.69M |

Quantitative performance

As shown in Table II, our MarsSQE approach achieves superior performance compared to other approaches. Specifically, MarsSQE achieves a PSNR of 31.95 dB under QF=30, which is 0.19 dB higher than the best monocular approach, MarsQE, and 0.11 dB higher than the best binocular approach, iPASSR. Similar trends are observed under QF=40, 50, and 60. Furthermore, MarsSQE achieves the best Rate-Distortion (RD) performance, as shown in Figure 4. In conclusion, MarsSQE outperforms both monocular and binocular approaches in quantitative evaluation.

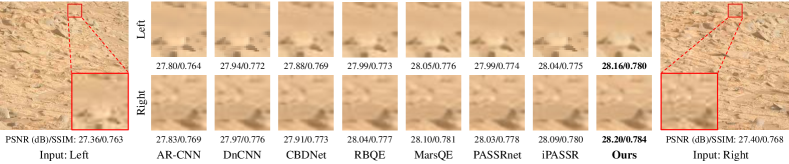

Qualitative performance

As shown in Figures 5a and 5b, the texture and boundary of rocks are blurred and noisy due to compression in both the left- and right-view images. MarsSQE successfully restores the texture and distinguishes the boundary, while other approaches (such as PASSRnet in Figure 5a and CBDNet in Figure 5b) fail to recover the complete rock. Additionally, some approaches introduce artifacts, such as AR-CNN and DnCNN in both images. In conclusion, MarsSQE provides the best qualitative enhancement performance.

Efficiency performance

We provide two lightweight variants, MarsSQE-M and MarsSQE-S, by reducing the number of main channels in MarsSQE(-L) from 64 to 48 and 32, respectively. As shown in Table III, MarsSQE-S surpasses iPASSR by 0.06 dB while requiring 27% fewer parameters. MarsSQE-M, with fewer parameters than both iPASSR and PASSRnet, provides a PSNR gain of 0.08 dB. Additionally, MarsSQE-L, despite having 23% more parameters than iPASSR, delivers a 0.11 dB higher PSNR. These results demonstrate the superior efficiency of MarsSQE compared to existing approaches.

IV-C Ablation Study

To verify the effectiveness of the core component of our MarsSQE approach, i.e., the bi-level cross-view attention, we perform an ablation study. Specifically, both levels of cross-attention are removed, and only several convolutional layers and residual dense blocks are maintained to extract and reconstruct compressed images. The results show that PSNR is reduced by 0.18 dB (but still higher than all monocular approaches), which proves that cross-view information is very effective for binocular image recovery. This result further demonstrates the importance of conducting stereo quality enhancement for Martian images.

V Conclusion

In this letter, we established the first stereo Martian image dataset. By evaluating the correlations between left and right views, we found that cross-view relationships are significantly stronger in Martian images compared to Earth images. This motivated us to propose a bi-level cross-view attention-based stereo quality enhancement network for Martian images. Our network integrates patch-level attention for broader contextual information and pixel-level attention for precise matching to fully exploit the inherent cross-view correlations. Experiments demonstrate that our MarsSQE approach achieves better performance in both quantitative and qualitative comparisons.

References

- [1] J. N. Maki, D. Gruel, C. McKinney, M. A. Ravine, M. Morales, D. Lee, R. Willson, D. Copley-Woods, M. Valvo, T. Goodsall, J. McGuire, R. G. Sellar, J. A. Schaffner, M. A. Caplinger, J. M. Shamah, A. E. Johnson, H. Ansari, K. Singh, T. Litwin, R. Deen, A. Culver, N. Ruoff, D. Petrizzo, D. Kessler, C. Basset, T. Estlin, F. Alibay, A. Nelessen, and S. Algermissen, “The mars 2020 engineering cameras and microphone on the perseverance rover: A next-generation imaging system for mars exploration,” Space Science Reviews, vol. 216, no. 8, p. 137, Nov. 2020.

- [2] X. Liang, W. Chen, Z. Cao, F. Wu, W. Lyu, Y. Song, D. Li, C. Yu, L. Zhang, and L. Wang, “The navigation and terrain cameras on the tianwen-1 mars rover,” Space Science Reviews, vol. 217, no. 3, p. 37, Mar. 2021.

- [3] D. Manzey, “Human missions to mars: New psychological challenges and research issues,” Acta Astronautica, vol. 55, no. 3, pp. 781–790, Aug. 2004.

- [4] A. G. Hayes, P. Corlies, C. Tate, M. Barrington, J. F. Bell, J. N. Maki, M. Caplinger, M. Ravine, K. M. Kinch, K. Herkenhoff, B. Horgan, J. Johnson, M. Lemmon, G. Paar, M. S. Rice, E. Jensen, T. M. Kubacki, E. Cloutis, R. Deen, B. L. Ehlmann, E. Lakdawalla, R. Sullivan, A. Winhold, A. Parkinson, Z. Bailey, J. van Beek, P. Caballo-Perucha, E. Cisneros, D. Dixon, C. Donaldson, O. B. Jensen, J. Kuik, K. Lapo, A. Magee, M. Merusi, J. Mollerup, N. Scudder, C. Seeger, E. Stanish, M. Starr, M. Thompson, N. Turenne, and K. Winchell, “Pre-flight calibration of the mars 2020 rover mastcam zoom (mastcam-z) multispectral, stereoscopic imager,” Space Science Reviews, vol. 217, no. 2, p. 29, Feb. 2021.

- [5] C. Liu, M. Xu, Q. Xing, and X. Zou, “Marsqe: Semantic-informed quality enhancement for compressed martian image,” 2024. [Online]. Available: https://arxiv.org/abs/2404.09433

- [6] C. Dong, Y. Deng, C. C. Loy, and X. Tang, “Compression artifacts reduction by a deep convolutional network,” in 2015 IEEE International Conference on Computer Vision, ICCV 2015, Santiago, Chile, December 7-13, 2015. Santiago, Chile: IEEE Computer Society, 2015-12-07/2015-12-13, pp. 576–584.

- [7] K. Zhang, W. Zuo, Y. Chen, D. Meng, and L. Zhang, “Beyond a gaussian denoiser: Residual learning of deep cnn for image denoising,” IEEE Transactions on Image Processing, vol. 26, no. 7, pp. 3142–3155, Jul. 2017.

- [8] X. Fu, Z.-J. Zha, F. Wu, X. Ding, and J. Paisley, “Jpeg artifacts reduction via deep convolutional sparse coding,” in Proceedings of the IEEE/CVF International Conference on Computer Vision. Seoul, Korea (South): IEEE, 2019, pp. 2501–2510.

- [9] S. Guo, Z. Yan, K. Zhang, W. Zuo, and L. Zhang, “Toward convolutional blind denoising of real photographs,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Long Beach, CA, USA: Computer Vision Foundation / IEEE, 2019-06-16/2019-06-20, pp. 1712–1722.

- [10] Q. Xing, M. Xu, T. Li, and Z. Guan, “Early exit or not: Resource-efficient blind quality enhancement for compressed images,” in Computer Vision – ECCV 2020 - 16th European Conference, ser. Lecture Notes in Computer Science, A. Vedaldi, H. Bischof, T. Brox, and J.-M. Frahm, Eds., vol. 12361. Glasgow,UK: Springer International Publishing, 2020-08-23/2020-08-28, pp. 275–292.

- [11] J. Li, Y. Wang, H. Xie, and K.-K. Ma, “Learning a single model with a wide range of quality factors for jpeg image artifacts removal,” IEEE Transactions on Image Processing, vol. 29, pp. 8842–8854, 2020.

- [12] Q. Xing, M. Xu, X. Deng, and Y. Guo, “Daqe: Enhancing the quality of compressed images by exploiting the inherent characteristic of defocus,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 45, no. 8, pp. 9611–9626, Aug. 2023.

- [13] J. F. Bell III, A. Godber, S. McNair, M. A. Caplinger, J. N. Maki, M. T. Lemmon, J. Van Beek, M. C. Malin, D. Wellington, K. M. Kinch, M. B. Madsen, C. Hardgrove, M. A. Ravine, E. Jensen, D. Harker, R. B. Anderson, K. E. Herkenhoff, R. V. Morris, E. Cisneros, and R. G. Deen, “The mars science laboratory curiosity rover mastcam instruments: Preflight and in-flight calibration, validation, and data archiving,” Earth and Space Science, vol. 4, no. 7, pp. 396–452, 2017.

- [14] J. F. Bell, J. N. Maki, G. L. Mehall, M. A. Ravine, M. A. Caplinger, Z. J. Bailey, S. Brylow, J. A. Schaffner, K. M. Kinch, M. B. Madsen, A. Winhold, A. G. Hayes, P. Corlies, C. Tate, M. Barrington, E. Cisneros, E. Jensen, K. Paris, K. Crawford, C. Rojas, L. Mehall, J. Joseph, J. B. Proton, N. Cluff, R. G. Deen, B. Betts, E. Cloutis, A. J. Coates, A. Colaprete, K. S. Edgett, B. L. Ehlmann, S. Fagents, J. P. Grotzinger, C. Hardgrove, K. E. Herkenhoff, B. Horgan, R. Jaumann, J. R. Johnson, M. Lemmon, G. Paar, M. Caballo-Perucha, S. Gupta, C. Traxler, F. Preusker, M. S. Rice, M. S. Robinson, N. Schmitz, R. Sullivan, and M. J. Wolff, “The mars 2020 perseverance rover mast camera zoom (mastcam-z) multispectral, stereoscopic imaging investigation,” Space Science Reviews, vol. 217, no. 1, p. 24, Feb. 2021.

- [15] L. Wang, Y. Wang, Z. Liang, Z. Lin, J. Yang, W. An, and Y. Guo, “Learning parallax attention for stereo image super-resolution,” in IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2019, Long Beach, CA, USA, June 16-20, 2019. Long Beach, CA, USA: Computer Vision Foundation / IEEE, 2019, pp. 12 250–12 259.

- [16] Y. Wang, X. Ying, L. Wang, J. Yang, W. An, and Y. Guo, “Symmetric parallax attention for stereo image super-resolution,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. virtual: Computer Vision Foundation / IEEE, 2021-06-19/2021-06-25, pp. 766–775.

- [17] H. Liu, M. Yao, X. Xiao, and H. Cui, “A hybrid attention semantic segmentation network for unstructured terrain on mars,” Acta Astronautica, vol. 204, pp. 492–499, Mar. 2023.

- [18] J. Sun, Z. Shen, Y. Wang, H. Bao, and X. Zhou, “Loftr: Detector-free local feature matching with transformers,” in IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2021, Virtual, June 19-25, 2021. virtual: Computer Vision Foundation / IEEE, 2021-06-19/2021-06-25, pp. 8922–8931.

- [19] Y. Wang, L. Wang, J. Yang, W. An, and Y. Guo, “Flickr1024: A large-scale dataset for stereo image super-resolution,” in 2019 IEEE/CVF International Conference on Computer Vision Workshops, ICCV Workshops 2019, Seoul, Korea (South), October 27-28, 2019, Seoul, Korea (South), Oct. 2019, pp. 3852–3857.

- [20] Y. Zhang, K. Li, K. Li, L. Wang, B. Zhong, and Y. Fu, “Image super-resolution using very deep residual channel attention networks,” in Computer Vision - ECCV 2018 - 15th European Conference, Munich, Germany, September 8-14, 2018, Proceedings, Part VII, ser. Lecture Notes in Computer Science, vol. 11211. Springer, 2018, pp. 294–310.

- [21] Y. Zhang, Y. Tian, Y. Kong, B. Zhong, and Y. Fu, “Residual dense network for image super-resolution,” in 2018 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2018, Salt Lake City, UT, USA, June 18-22, 2018. Salt Lake City, UT, USA: Computer Vision Foundation / IEEE Computer Society, 2018-06-18/2018-06-22, pp. 2472–2481.

- [22] D. P. Kingma and J. Ba, “Adam: A method for stochastic optimization,” in 3rd International Conference on Learning Representations, ICLR 2015, San Diego, CA, USA, May 7-9, 2015, Conference Track Proceedings, San Diego, CA, USA, 2015.