Market Directional Information Derived From

(Time, Execution Price, Shares Traded) Sequence of Transactions.

On The Impact From The Future.

Abstract

$Id: ImpactFromTheFuture.tex,v 1.269 2022/10/09 10:41:55 mal Exp $

An attempt to obtain market directional information from a non–stationary solution of the dynamic equation ‘‘future price tends to the value maximizing the number of shares traded per unit time’’ is presented. A remarkable feature of the approach is automatic time scale selection: the time scale is determined from the state of maximal execution flow calculated on past transactions. Both lagging and advancing prices are calculated.

The times of Pugachev’s Rebellion. The impostor addresses the people and speaks of the coming happiness, which will arrive in the form of a peasants’ kingdom. A captive officer asks: ‘‘Where will the money for all this bliss come from?’’ Pugachev answered: ‘‘Are you a fool? We will live off the treasury!’’

Folk legend, 1774.

I Introduction

The ultimate market dynamics problem introduced in [1], namely evidence of the existence (or a proof of the non–existence) of an automated trading machine that consistently makes positive P&L trading on a free market as an autonomous agent, can be formulated in weak and strong forms [2]: whether such an automated trading machine can exist with legally available data (weak form), and whether it can exist with transaction sequence triples (time, execution price, shares traded) as the only information available (strong form). In the latter case execution flow is the only available characteristic determining market dynamics.

Let us formulate the problem in a third, ‘‘superstrong’’, form: can the future value of price be predicted from the (time, execution price, shares traded) sequence of past transactions? Previously [3, 4] we thought this was not possible, and that only P&L, which includes not only price dynamics but also trader actions, could possibly be predicted. Recent results have changed our opinion.

There are two types of predicted price: ‘‘lagging’’ (retarded) and ‘‘advancing’’ (future). A lagging price corresponds to past observations; the future direction is determined by the difference between the last price and the lagging price. An example of a lagging price is a moving average. A common problem with a lagging price is that it typically assumes the existence of a time scale with which it is calculated, which gives an incorrect direction for market movements with time scales lower than that of the lagging price; however, making the time scale too low creates a large number of false signals. An advancing price predicts the actual value of the future price; the direction is determined by the difference between the advancing price and the last price. The advancing price is typically calculated from limit order book information, brokerage clients’ order flow timings, etc.

In this work both lagging and advancing prices are calculated from the (time, execution price, shares traded) sequence of past transactions. The key element is to determine the state of maximal execution flow (eigenvalue problem (10)), as experiments show its importance for market dynamics. The state found automatically selects the time scale, which makes the approach robust.

The lagging price found (49) is the price in state plus a trending term that suppresses false signals. The advancing price is obtained by considering the density matrix state corresponding to the state ‘‘since till now’’ and the experimentally observed fact that operators and have to be equal in state. This corresponds to the result of our previous works [3, 5]: execution flow (the number of shares traded per unit time), not trading volume (the number of shares traded), is the driving force of the market; asset price is much more sensitive to execution flow (dynamic impact) than to traded volume (regular impact).

This paper is concerned only with obtaining directional information from a sequence of past transactions in a ‘‘single asset universe’’, just for simplicity; see Section VIII below for a multi asset universe generalization. Whereas the dynamics theory of Section IV definitely requires additional research, the lagging indicator (49) of Section VI, see Fig. 8, can be practically applied to trading even in a single asset universe. In this work we do not implement any of the trading ideas of [3, 4], which introduced the concept of liquidity deficit trading: open a position at low execution flow, then close the already opened position at high execution flow, as this is the only strategy that avoids eventual catastrophic P&L losses. This paper is concerned only with obtaining the directional information required to determine on which side the position has to be opened on a liquidity deficit event.

II The State Of Maximal Execution Flow

Introduce a wavefunction as a linear combination of basis function :

| (1) |

Then an observable market–related value , corresponding to probability density , is calculated by averaging a time-series sample with the weight ; the expression corresponds to an estimation of the Radon–Nikodym derivative [6]:

| (2) | |||||

| (3) |

For averages we use the bra–ket notation of Paul Dirac: and . The (2) is a plain ratio of two moving averages, but the weight is not the regular decaying exponent from (55); it is the exponent multiplied by the wavefunction squared as , the defines how to average a time-series sample. Any function is defined by coefficients ; the value of an observable variable in state is a ratio of two quadratic forms on (3). As an example of a wavefunction see the localized state (13); it can be used for Radon–Nikodym interpolation: ; the familiar least squares interpolation is also available: .
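The ratio-of-two-moving-averages form described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the authors' implementation: the function name `psi_weighted_average`, the coefficient list `alpha`, the parameter `tau`, the monomial basis in x = (t - t_now)/tau, and the decaying weight exp(x) are all choices made here for the example.

```python
import math

def psi_weighted_average(times, values, alpha, tau):
    """Estimate <psi|f|psi> / <psi|psi> on a sample (t_i, f_i).

    Assumptions (illustrative only): monomial basis Q_k(x) = x**k in
    x = (t - t_now)/tau, decaying weight exp(x), and each sample weighted
    by the time elapsed since the previous sample.
    """
    t_now = times[-1]
    num = 0.0
    den = 0.0
    for i in range(1, len(times)):
        dt = times[i] - times[i - 1]
        x = (times[i] - t_now) / tau
        # wavefunction value: linear combination of basis functions
        psi = sum(a * x**k for k, a in enumerate(alpha))
        w = psi * psi * math.exp(x) * dt   # weight = psi^2 * decaying exponent
        num += w * values[i]
        den += w
    return num / den

# With alpha = [1.0] the wavefunction is constant and the result is a
# regular exponentially weighted moving average.
ema = psi_weighted_average([0.0, 1.0, 2.0, 3.0], [1.0, 2.0, 3.0, 4.0], [1.0], 2.0)
```

Because the weight is a square times a positive exponent, it is never negative, so the result always stays between the smallest and the largest sampled value.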

One can also consider a more general form of average, , where is an arbitrary polynomial, not just the square of a wavefunction. These states correspond to a density matrix average:

| (4) |

This average, the same as (2), is a ratio of two moving averages. For an algorithm to convert a polynomial to the density matrix see Theorem 3 of [7]. A useful application of the density matrix states is to study an average ‘‘since ’’; for example if corresponds to a past spike, then the polynomial ‘‘since till now’’ is with defined in (61); price change between ‘‘now’’ and the time of spike is , similarly, total traded volume on this interval is .

The main idea of [3] is to consider a wavefunction (1) then to construct (3) quadratic forms ratio. A generalized eigenvalue problem can be considered with the two matrices from (3). The most general case corresponds to two operators and . Consider an eigenvalue problem with the matrices and :

| (5) | ||||

| (6) | ||||

| (7) | ||||

| (8) | ||||

| (9) |

If at least one of these two matrices is positive definite, the problem has a unique solution (up to eigenvalue degeneracy). In the basis found the two matrices are simultaneously diagonal: (8) and (9). See (80) to convert an operator’s matrix from to basis and (81) to convert it from to basis.

In our previous work [3, 5, 1, 2] we considered various and operators, with the goal to find operators and states that are related to market dynamics. We established, that execution flow (the number of shares traded per unit time), not trading volume (the number of shares traded), is the driving force of the market: asset price is much more sensitive to execution flow (dynamic impact), rather than to traded volume (regular impact). This corresponds to the matrices and . These two matrices are volume- and time- averaged products of two basis functions. Generalized eigenvalue problem for operator is the equation to determine market dynamics:

| (10) | ||||

| (11) | ||||

| (12) | ||||

| (13) | ||||

| (14) |

The is the time ‘‘now’’, is a wavefunction localized at . Here and below we write instead of to simplify notations. The is the projection of the state of (10) eigenproblem to the state ‘‘now’’ .

Our analysis[3, 5, 1, 2] shows that among the states of the problem (10) the state corresponding to the maximal eigenvalue among all , , is the most important for market dynamics. Consider various observable characteristics in this state .

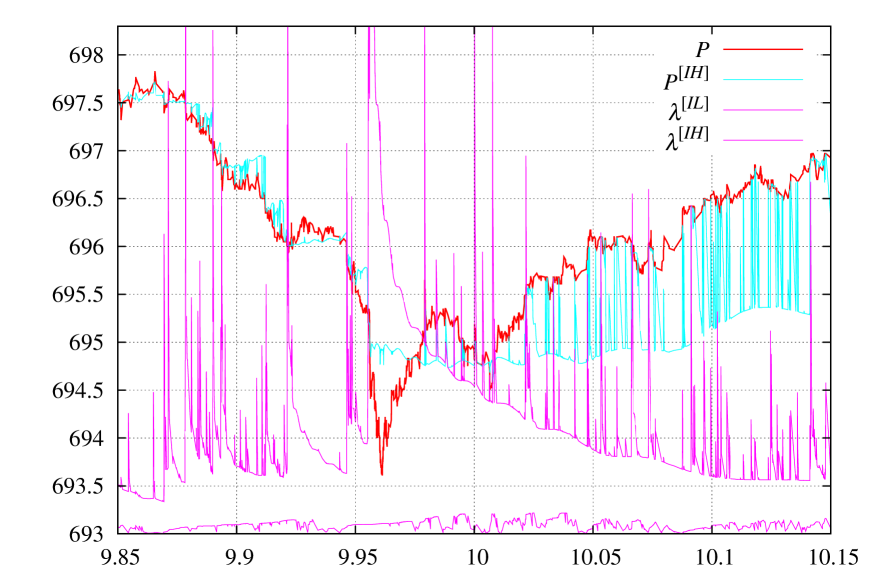

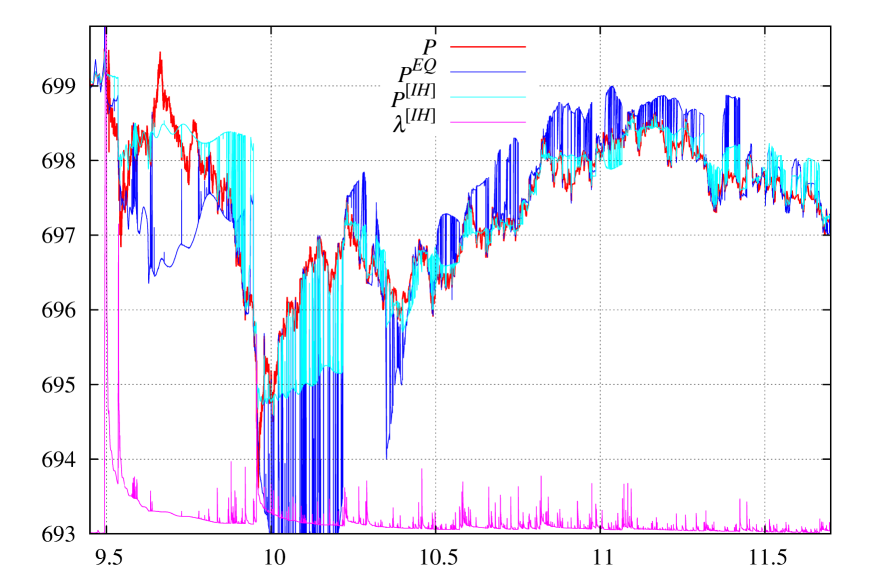

In Fig. 1 a demonstration of several observables: the price in state (15), maximal eigenvalue of (10) problem, and minimal eigenvalue (for completeness) are presented.

| (15) |

From these observables one can clearly see that singularities in cause singularities in price, and that a change in localization causes an immediate ‘‘switch’’ in an observable. This switch is caused by the presence of internal degrees of freedom ( coefficients, one less due to normalizing , Eq. (8)). Such a ‘‘switch’’ is not possible in a regular moving average (16) since it has no internal degrees of freedom; hence, all regular moving average dependencies are smooth.

The state that maximizes the number of shares traded per unit time on past observations sample is the main result of our initial work [3].
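As a hedged illustration of the eigenproblem for the state of maximal execution flow, the sketch below builds the volume-averaged and time-averaged matrices of products of two basis functions and finds the largest generalized eigenvalue in a two-dimensional basis. The basis (1 and x with x = (t - t_now)/tau), the weight exp(x), the function names, and the closed-form 2x2 solver are assumptions made for this example; the paper's software uses other bases and a general eigensolver.

```python
import math

def gram_matrices(times, volumes, tau):
    """Return 2x2 matrices A (volume-averaged) and B (time-averaged) of
    products Q_j*Q_k for the assumed basis Q_0 = 1, Q_1 = x,
    x = (t - t_now)/tau, with decaying weight exp(x).
    volumes[i] is the number of shares traded at times[i]; volumes[0] is unused."""
    t_now = times[-1]
    A = [[0.0, 0.0], [0.0, 0.0]]
    B = [[0.0, 0.0], [0.0, 0.0]]
    for i in range(1, len(times)):
        x = (times[i] - t_now) / tau
        q = (1.0, x)
        w = math.exp(x)
        dt = times[i] - times[i - 1]
        for j in range(2):
            for k in range(2):
                A[j][k] += q[j] * q[k] * w * volumes[i]  # integration over dV
                B[j][k] += q[j] * q[k] * w * dt          # integration over dt
    return A, B

def max_generalized_eigenvalue(A, B):
    """Largest root of det(A - lam*B) = 0 for symmetric 2x2 matrices,
    with B positive definite (so the quadratic has real roots)."""
    a = B[0][0] * B[1][1] - B[0][1] ** 2
    b = -(A[0][0] * B[1][1] + A[1][1] * B[0][0] - 2.0 * A[0][1] * B[0][1])
    c = A[0][0] * A[1][1] - A[0][1] ** 2
    disc = max(b * b - 4.0 * a * c, 0.0)  # guard against rounding below zero
    return (-b + math.sqrt(disc)) / (2.0 * a)

times = [0.0, 1.0, 2.0, 3.0, 4.0]
volumes = [0.0, 10.0, 100.0, 10.0, 10.0]      # a spike at t = 2
A, B = gram_matrices(times, volumes, tau=2.0)
flow_max = max_generalized_eigenvalue(A, B)    # execution flow in the maximal state
flow_avg = A[0][0] / B[0][0]                   # flow of a regular moving average
```

The maximal eigenvalue is never below the moving-average flow, since the latter is the same ratio of quadratic forms evaluated in the constant state.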

III On Time Scale Selection of a Trading Strategy

Financial markets have no intrinsic time scales, at least none that a market participant can take advantage of. (Trivial time scales such as seasonal, daily open/close, year end, etc. do exist but provide few trading opportunities.) For the US equity market, time-series data manifests the existence of time scales from microseconds to decades. For the NASDAQ ITCH [8] data feed the time discretization is one nanosecond. Whereas real markets typically have no intrinsic time scale, any trading strategy typically does have one. This time scale is determined by available data feeds, available execution, the trader’s personal preferences, etc. An implementation of trading strategies with time scales under one second requires a costly IT infrastructure for data feed and execution, and is hard to program algorithmically; moreover, market liquidity at such a low time scale is low: a situation in which a dozen HFT firms are chasing a single limit order of 100 shares is very common. For trading strategies with a large time scale the major difficulty is that a trader, observing missed opportunities post factum, often starts to ‘‘adjust’’ the strategy to lower time scales. For professional money managers (managing other people’s money), with the rare exception of ‘‘super-stars’’, the maximal possible time scale is one month: once a month a letter to investors explaining the fund’s performance has to be sent. There is no such ‘‘monthly’’ constraint for somebody managing their own money; for example, an individual crypto investor may be 50% down in April 2022, but for that investor this problem is not as big as it would be for a fund. For traders the most popular time scales are between ‘‘daily trading’’ and ‘‘monthly P&L’’; these time scales provide a sufficient number of opportunity events along with market data availability (e.g. Bloomberg). It is important that these time scales are ‘‘compatible’’ with human reaction time.

The major drawback of the trading systems the authors have observed among institutional investors, hedge funds, and individual investors is that all of them typically have only a few time scales, most often a single one. It may be explicit or implicit, but it almost always exists. The contradiction between the spectrum of time scales of financial markets and the single time scale of a trading system is the most common limitation in trading systems design.

Consider familiar demonstration with a moving average. Let be a regular exponential moving average. The average is calculated with the weight (55):

| (16) |

The averaging takes place between the past and using exponentially decaying weight . With increase, the interval contributing to the integral becomes larger and the moving average ‘‘shifts to the right’’ (-proportional time delay, lagging indicator). The (16) has no parameter that can ‘‘adjust’’ the time scale as do in (3) where . In (16) we have . Trading strategies that watch crossings between price and a moving average, or between two moving averages calculated with different values of , have the problem that the specific values of the time scales are preset. We personally observed a number of successful (and failed) traders who were constantly watching moving averages on Bloomberg; we can tell that their success comes from intuitively switching from one scale to another. If you ask such a person what he is doing, he cannot explain; but looking at him from the side it is clear that the person is trying to identify relevant time and price scales. Successful traders also frequently jump between assets of different classes; before placing a trade on GOOG it is common to observe DJI, AAPL, commodities, the power generating industry, and the chemical industry, all within less than a minute. If you go from a human (who selects the time scale based on intuition, market knowledge, news, personal communications, experience, etc.) to an ‘‘automated trading machine’’ that has none of that, the problem of selecting the time scale becomes very difficult. The problem of automatic time scale selection is crucial in trading systems design. Another critically important problem is to absorb information from different financial instruments. The theory presented below is perfectly applicable in a multi asset universe; the analysis and interpretation, however, become more complicated, see Section VIII below for a discussion. In this paper we will be concerned only with a single asset universe to demonstrate the main ideas; a detailed generalization of the theory to a multi asset universe will be published elsewhere.
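The time delay of a regular exponential moving average discussed above is easy to see numerically. The following minimal sketch uses a hypothetical helper `exp_moving_average` and the parameter name `tau` for the decay time, both chosen here for illustration. It averages a steadily trending price and measures how far the average trails the last price for two preset time scales.

```python
import math

def exp_moving_average(times, prices, tau):
    """Average of prices with weight exp(-(t_now - t)/tau), t_now = times[-1]."""
    t_now = times[-1]
    num = 0.0
    den = 0.0
    for t, p in zip(times, prices):
        w = math.exp(-(t_now - t) / tau)
        num += w * p
        den += w
    return num / den

times = [float(i) for i in range(1000)]
prices = times[:]   # a steady trend: the price rises by one unit per unit time

# Distance between the last price and the moving average, in price units
# (equal to time units for this particular trend).
lag_short = prices[-1] - exp_moving_average(times, prices, tau=10.0)
lag_long = prices[-1] - exp_moving_average(times, prices, tau=50.0)
```

For this trend the lag grows roughly in proportion to `tau`, which is the preset time scale problem: a large `tau` reacts late, while a small one follows every fluctuation.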

Whereas we still have no approach to price scale selection ([5] uses price basis as , but in the stationary case this is actually a time scale equivalent), we do have a practical method for automatic selection of the time scale.

The state considered in Section II above, the one that maximizes the number of shares traded per unit time on a sample of past observations, determines the time scale. Let us consider in this state not the price and execution flow as we studied before, but simply the time distance to ‘‘now’’ in state:

| (17) | ||||

| (18) |

here is a regular moving average. As all the values of time (future and past) are known, (17) carries information about localization. When the value is small, a large spike event happened very recently. When it is large, a large spike happened a substantial time ago; the value indicates when a large spike in took place.

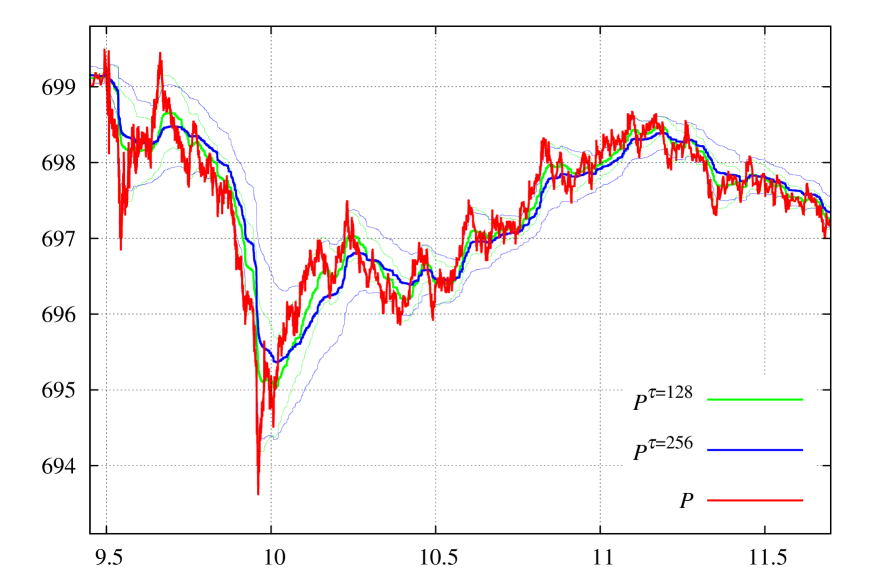

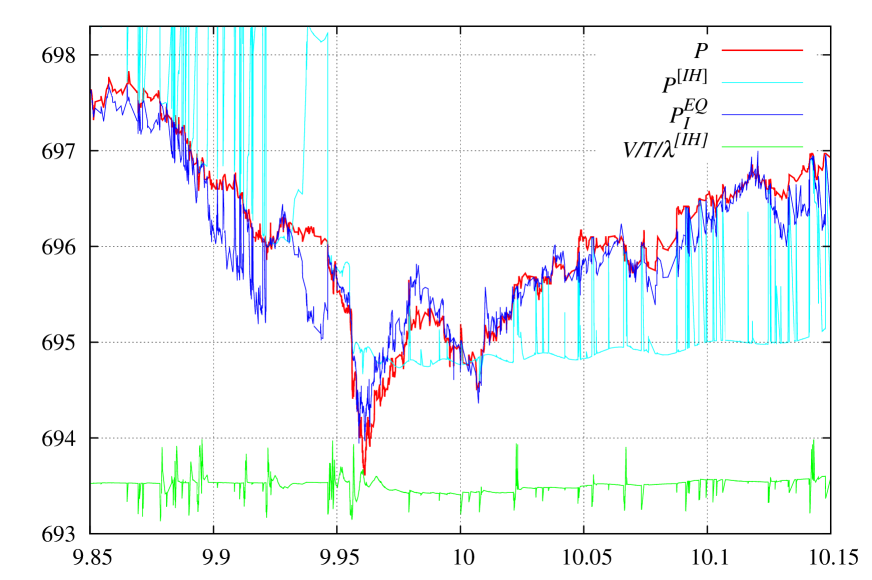

In Fig. 3 (top) the value of (scaled by the factor and shifted up to fit the chart) is presented for s and s. One can clearly see that there is no smooth transition between the states: the ‘‘switch’’ happens instantly, without the -proportional time delay that is typical for regular moving averages . A linear dependence of on time is also observed; this is an indication of the stability of state identification. The value of is the time scale; typically it is easier to work with the density matrix obtained from rather than with the time scale itself. A typical operation with a time scale is to calculate an average of some observable over the interval of time scale length till ‘‘now’’: the density matrix does exactly this.

We have tried a number of other operator pairs in generalized eigenvalue problem (6)

| eigenvalue meaning | | |
|---|---|---|
| (execution flow) | | |
| (aggregated execution flow) | | |
| (traded volume) | | |
| (price) | | |
| (price) | | |
| (market impact) | | |
| (aggregated market impact) | | |

among many others [1, 2]. An eigenproblem with an additional constraint was also considered, see ‘‘Appendix F’’ of [2] and, more generally, ‘‘Appendix G’’ of [6]. All price-related operators cause noisy behavior, with no ‘‘switching’’ whatsoever. Only the operator shows behavior similar to switching (but less pronounced); it is also more sensitive to selection. Time to max spike in is presented in Fig. 3 (bottom). See [2] about the properties of states: ‘‘Appendix C: The state of maximal aggregated execution flow ’’.

This leads us to conclude that the state that determines the time scale is the state that maximizes the number of shares traded per unit time on a sample of past observations. This state allows us to average an observable with the weights:

| meaning | measure | |
|---|---|---|
| ‘‘at spike’’ | | |
| variation ‘‘at spike’’ | | |
| ‘‘since spike till now’’ | ; | |
| ‘‘since since spike’’ | ; | |

The solution found automatically adjusts the averaging weight, which makes the value of parameter in (55) much less important. The ‘‘switch’’ happens instantly, without the -proportional time delay that a regular moving average would have.

IV On The Impact From The Future

The concept of the Impact From The Future was introduced in [1]. It predicts the value of future execution flow. Given currently observed (at ) value of execution flow we know with certainty that future value of execution flow will be greater than because more trading will definitely occur in the future. But how to estimate the value of ? The maximal eigenvalue of (10) is used as the estimation of future execution flow :

| (19) | ||||

| (20) | ||||

| (21) |

Whereas the is an ‘‘impact from the past’’ (already observed current execution flow), the is an ‘‘impact from the future’’ (not yet observed contribution to current execution flow); its value is non–negative by construction. A similar ideology (use the past maximal value as an estimator of the future value) is often applied by market practitioners to asset prices or their standard deviations. This is incorrect. Experimental observations show that this ideology is applicable only to execution flow , not to trading volume, asset price standard deviation, or any other observable.

A criterion of no information about the future can be formulated. If current is close to , this means that we have a ‘‘very dramatic market’’ right now and there is no information about the future of this market:

| (22) |

An alternative form of (22) is more convenient in practice because the value is bounded:

| (23) |

This means that and are the same (14). In practice a good value of the threshold is between instead of the maximal value of . In Fig. 1 one can clearly see the spikes in when (23) approaches ; for see Fig. 1 of [2], it is not presented in Fig. 1 to save space.

We have the state and the criterion (23) of no information about the future. How to obtain directional information? Previously [3] we considered the price (15) in the found state as an indicator related to market direction. The difference between and was used as a directional indicator. A typical result is presented in Fig. 1. The price is actually a moving average with positive weight having internal degrees of freedom. It determines the direction (and can possibly work for ‘‘reverse to the mean’’ type of strategy), but this is not the future price.

Consider a concept from classical mechanics. Let us introduce a Lagrangian–like function:

| (24) |

and try to vary it. Let us first take to be an exact differential (e.g. total energy in classical mechanics ). Then (24) carries no information about the dynamics, but we obtain two distinct terms and , whose difference we can consider to obtain the actual equation of motion. We implement this strategy below by considering various hypotheses for the action and testing them experimentally.

IV.1 Volume Driven Dynamics

Assume that price changes are caused by trading volume. Introduce

| (25) |

Then ‘‘exact differential action’’

| (26) |

To obtain ‘‘actual’’ action we have to change the sign in between:

| (27) |

These two terms can be considered as ‘‘kinetic’’ and ‘‘potential’’ energy. It is difficult to vary (27), so let us just find the price that makes these two terms equal (a similar concept in mechanics corresponds to finding the state in which kinetic energy equals potential energy; this gives an exact result for oscillators and an approximate result for many other systems, see the virial theorem):

| (28) | ||||

| (29) |

The dynamics with (27) action is a volume-driven dynamics. Let then both price and volume are linear functions of time, and , and the two terms in (27) are equal exactly in any state: . Taking into account that is typically localized (see Fig. 6 of Ref. [2]) obtain . Since is negative, (29) means trend following; the trend is determined in state. In this state we have price equals to and . The (29) means that the reference price will slowly follow the trend as trading proceeds. This trend following stops only with a state switch, which means that a new spike in has been observed and this new spike is now the ‘‘most dramatic market observed’’.

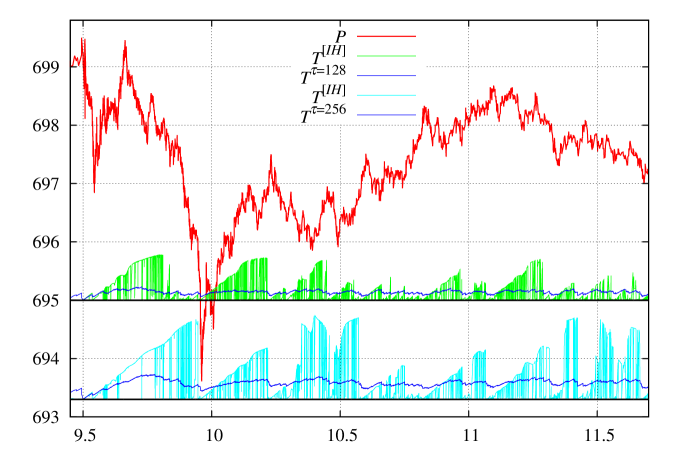

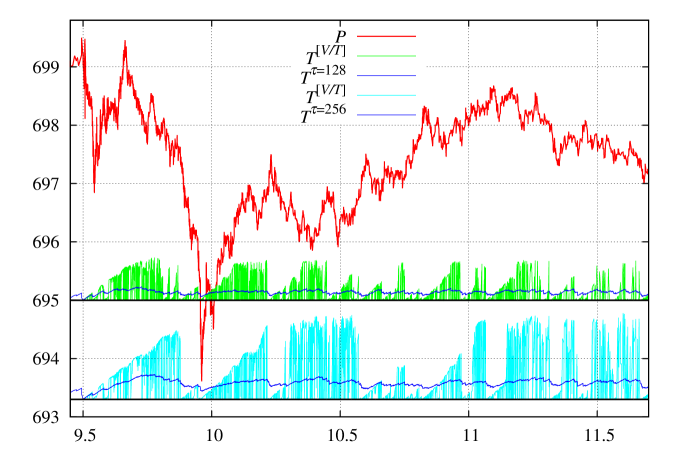

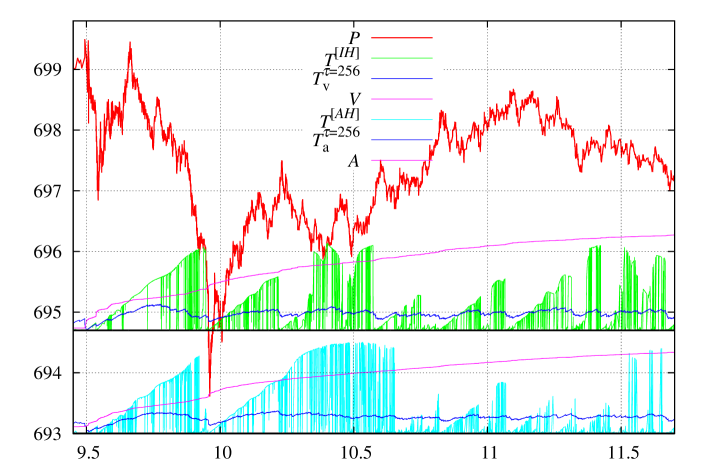

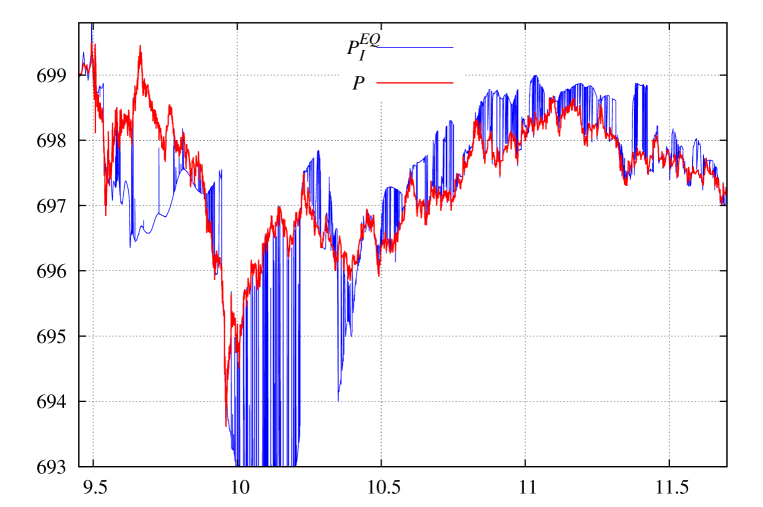

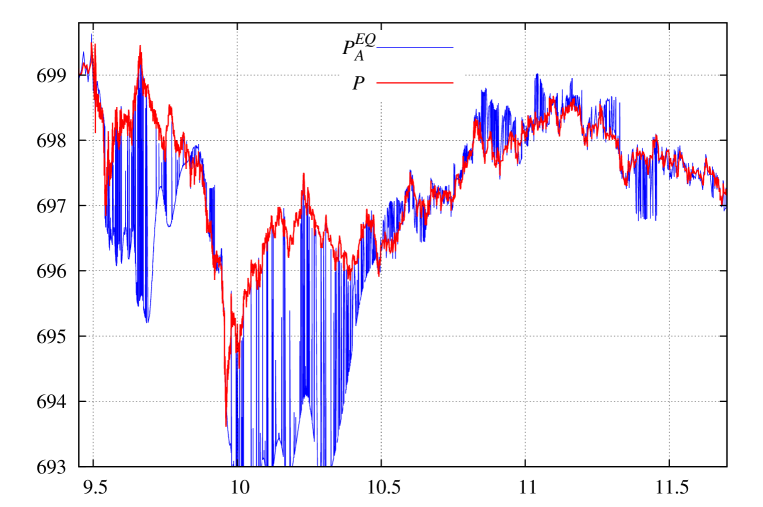

An important feature of is that (29) contains only moments that are calculated directly from the sample using (66): , , and . This makes all the calculations easy. In Fig. 4 we present the along with . In [3] the best directional indicator found was the difference between last price and , without the trending term. When the price reaches some trading band, it starts crossing multiple times, thus creating false signals. The has the great advantage of an extra trend following contribution in (29), which strongly suppresses false signals. The result is also stable in situations when the max ‘‘switch’’ is missed.

IV.2 Execution Flow Driven Dynamics

Consider ‘‘exact differential’’ action with term from (25):

| (30) |

Then the ‘‘actual’’ action is considered as a split into ‘‘kinetic’’ and ‘‘potential’’ terms; the kinetic term is defined as the one with first derivative of price in with . (Note that if one puts in (31) and instead of and , then (27) is obtained.)

| (31) |

Here and below is the density matrix corresponding to the state ‘‘since till now’’ (60), obtained from the polynomial (63) by applying Theorem 3 of [7]; see com/polytechnik/utils/BasisFunctionsMultipliable.java:getMomentsOfMeasureProducingPolynomialInKK_MQQM for a numerical implementation. The is the density matrix corresponding to the polynomial (64);

| (32) | ||||

| (33) |

see com/polytechnik/freemoney/IandDM.java:{QQDensityMatrix,QQDensityMatrix2} for numerical calculation of and from . The second term in (31) does not depend on price shift as with (19) boundary condition we have . The dynamics with (31) action is execution flow driven dynamics. Let then the volume is a linear function of time and the price is constant , both terms in (31) are zero in any state; moreover when the two terms are equal exactly for any . This is different from the dynamics defined by (27) action, where constant causes linear dependence of price on time. With (31) action all changes in price are caused by changes in execution flow. Our previous observations [3, 5] show that asset prices are much more sensitive to execution flow (dynamic impact) than to traded volume (regular impact).

Whereas the calculation of (27) action was easy because the moments were calculated directly from sample, the calculation of (31) is much more difficult. We can obtain directly from sample only operator and then, using (72), operator :

| (34) |

The problem left is to calculate the operator . Currently we do not have a method to obtain it exactly. The problem is simplified by the fact that we need not the operator per se, but just , which enables us to work with its approximation. See Appendix A below for several approximations for ; one can possibly try the secondary sampling approach of Appendix D as an alternative route. Different approximations give noticeably different results. Nevertheless, assume we know the value of on the past sample. Then, assume one more observation with price is coming. With the knowledge of future execution flow (19) we can set the ‘‘kinetic’’ and ‘‘potential’’ terms in (31) equal, and thus obtain the value of price at which (31) is zero (use (19) boundary condition with (72) expansion):

| (35) | ||||

| (36) | ||||

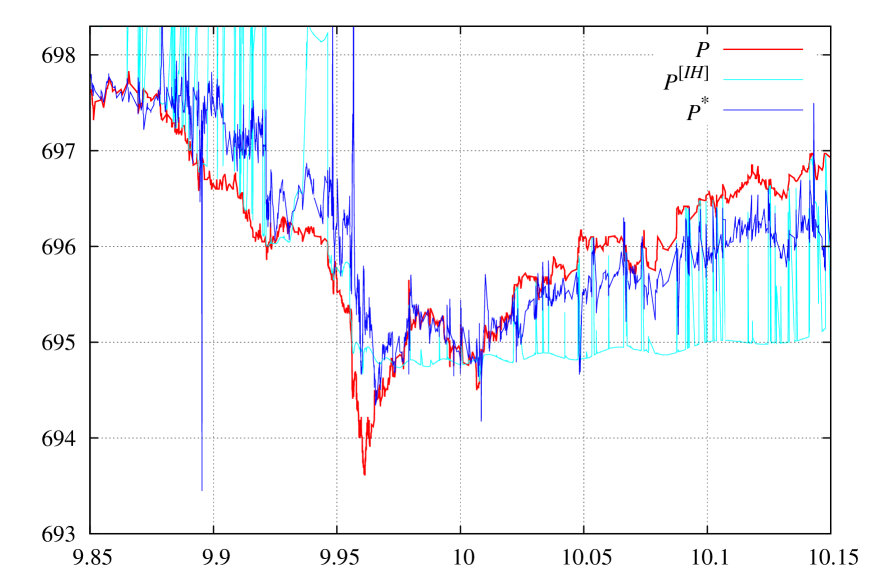

In (36) all ‘‘future’’ price contributions are moved to the left hand side and in the right hand side all the integration is performed till last observed point with . Technically (36) means: calculate the difference (35) on observed sample, and if it is not zero – the price will move on to compensate. Our experiments show that these two terms are very close to each other and the value of is small. One may also try other states, such as (33) to consider the operators in, but the property of operators and being equal in some density matrix state seems to be special to the state , see Appendix F below for a study of the state .

IV.3 Local Volume Driven Dynamics

Similarly to (30) one can consider the term from (25) to be an ‘‘exact differential’’ action:

| (37) |

Then ‘‘actual’’ action is:

| (38) |

The dynamics with (38) action is local volume driven dynamics. Let , then the volume is a linear function of time . Setting the two terms equal gives a quadratic dependence of price on time: ; then the two terms in (38) are equal exactly in any state: . The calculation of the derivatives poses a problem similar to the one considered above. Taking into account obtain:

| (39) |

As with (34) above the second term is obtained by applying (72) to the moments that are available directly from sample. Consider one more observation with price coming. With the knowledge of future execution flow (19) we can obtain the equilibrium price:

| (40) | ||||

| (41) |

Technically (41) means: calculate the difference (40) on observed sample, and if it is not zero – the price will move on to compensate.

IV.4 Total Lagrangian Driven Dynamics

The same as in Sections IV.2 and IV.3 above we can consider the entire from (25) in state to be an ‘‘exact differential’’ action:

| (42) |

This is actually the same expression as (26) above (with changed sign), but split differently into ‘‘kinetic’’ and ‘‘potential’’ energy terms. The ‘‘actual’’ action is then obtained by changing the sign of ‘‘potential’’ contributions:

| (43) |

The dynamics for can be obtained in a regular way. The volume is a linear function of time . Setting the ‘‘kinetic’’ and ‘‘potential’’ terms equal gives a cubic dependence of price on time: ; the terms in (43) are equal exactly in any state: .

The corresponds to the sum of (35) and (40):

| (44) | ||||

| (45) | ||||

| (46) |

Whereas this ‘‘Total Lagrangian Driven’’ dynamics and the ‘‘Volume Driven’’ dynamics of Section IV.1 above use the same ‘‘exact differential action’’, (42) and (26), they generate different dynamics because the action is split differently into ‘‘kinetic’’ and ‘‘potential’’ terms. The dynamics of Section IV.1 is of trend following type; the direction changes only when ‘‘switches’’. The direction of ‘‘Total Lagrangian Driven’’ dynamics takes into account a number of factors in (43), thus it may reverse even without a ‘‘switch’’ in .

V On Selection Of A Type Of The Dynamics

In Sections IV.1, IV.2, IV.3, and IV.4 we chose an ‘‘exact differential’’ action from which price dynamics was determined. The ‘‘volume driven’’ dynamics of Section IV.1 stands apart, as it is a trend–following model with the automatic time–scale selection of Section III; it is the simplest ‘‘practical’’ model. An important feature of the possible ‘‘actions’’ of the forms (31), (38), and (43) is that all of them include fluctuations of execution flow . Now we have to choose which one corresponds to market dynamics most closely. Technically, we have three calculated characteristics: and that are obtained exactly and that is obtained from an approximation, such as in Appendix A. Now we need to select from them the most appropriate linear combination to obtain the future price according to (35), (40), or (44).

Experiments show that only execution flow driven dynamics of (31) form can possibly work to determine the future price. This type of dynamics has only two contributing terms: and , that are very close to each other in state. The sum of them is equal exactly to (the result of [3]), and their difference determines (35) future price (a new result of this paper). In Fig. 5 a demonstration of (35) is presented (field com/polytechnik/freemoney/PFuture.java:PEQ_I_fromDPI, obtained with from (78)). The result is almost identical to (88) approximation, field com/polytechnik/freemoney/PFuture.java:PEQ_I_fromI2DtpDivI, and the is slightly lower with (77). This is the only ‘‘advancing’’ (not lagging!) indicator we have managed to obtain so far. A very important feature is that operators and from (34) are very close in state, and the difference determines future direction (35). This corresponds to ; the ratio of aggregated execution flow and execution flow is about in state, see green line in Fig. 5.

One may also consider other states, e.g. from [2]: ‘‘Appendix C: The state of maximal aggregated execution flow ’’, that corresponds to eigenvalue problem

| (47) |

and using this maximal in boundary condition (19). A remarkable feature of this state is that in it :

| (48) |

which allows us to consider the ‘‘double integrated’’ type of density matrix studied in Appendix F. In this state we typically have , and we have not managed to obtain any ‘‘advancing’’ information so far.

When asked about ‘‘direct application’’ of the solution presented in Fig. 5, an ‘‘advancing’’ indicator, to practical trading: it is not ready, for two reasons:

- The result strongly depends on the approximation used for , see Appendix A.
- In Fig. 5 we have shown only the interval where the predicted future price is substantially different from the last price. For large intervals of this trading session the difference between the predicted and the last price is very small; this means the relation holds almost exactly in state.

This leads us to conclude that the execution flow driven dynamics of Section IV.2, while very promising and the only one we obtained that can possibly provide an ‘‘advancing’’ indicator, still requires more work for practical applications. These two directions are the most promising:

1.

2. Select directional indicator. The best indicator we found so far is the (35).

VI On Practical Source Of Directional Information

Whereas our attempts above to obtain an ‘‘advancing’’ indicator were promising but are not yet ready for practical trading, the lagging directional indicator with automatic time–scale selection of Section IV.1 is ready and can be applied to trading. This indicator (29) is the price plus a trend-following factor that is proportional to in state:

| (49) |

The formula automatically selects the time scale from the interval (in Shifted Legendre basis) or from the interval (in Laguerre basis). The difference between last price and determines the trend. A trend ‘‘switch’’ occurs instantly as a ‘‘switch’’ in of (10) eigenproblem. In Fig. 8 below the (49) is presented in higher resolution than in Fig. 4 above. The situation when is close to corresponds to the no information about the future situation (23). Typically, following (23), all directional signals should be ignored when

| (50) |

as this corresponds to little information about the future available.

VII A Brief Description Of The Algorithm

Whereas the theory presented in this work may look rather complicated, its computer implementation is very straightforward. It is far simpler than the many diverse systems of other people that the authors have seen. Technically, an implementation of the theory requires an integration to calculate the moments from a time-series sample (66), polynomial multiplication (53) to calculate the matrices from the moments, and solving an eigenproblem (10) for the time scale. There is no magic; a simple and precise description of an algorithm implementing the theory is this. On each incoming (Time, Execution Price, Shares Traded) tick do the following:

1. Have an integrator that on each incoming tick recurrently updates its internal state to calculate the moments: , , , and ; see com/polytechnik/freemoney/CommonlyUsedMoments.java.

2.

3.

4.

5.

See Appendix C below with a description of the software implementing the algorithm. This software reads a sequence of (Time, Execution Price, Shares Traded) ticks (line after line, one tick per line), and for every tick read prints the results.
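The recurrent integrator of the first step can be illustrated with a deliberately reduced sketch. It keeps only exponentially decayed zeroth-order sums (shares, price times shares, elapsed time) and updates them tick by tick; the class name `DecayingIntegrator`, its fields, and the parameter `tau` are hypothetical names for this example. The referenced Java software calculates higher-order moments in a polynomial basis, which is not reproduced here.

```python
import math

class DecayingIntegrator:
    """Recurrently updated exponentially weighted sums over ticks
    (zeroth-order moments only; an assumption made for illustration)."""

    def __init__(self, tau):
        self.tau = tau
        self.t_last = None
        self.sum_v = 0.0    # decayed sum of shares traded
        self.sum_pv = 0.0   # decayed sum of price * shares
        self.sum_t = 0.0    # decayed elapsed time

    def on_tick(self, t, price, shares):
        if self.t_last is not None:
            decay = math.exp(-(t - self.t_last) / self.tau)
            self.sum_v *= decay
            self.sum_pv *= decay
            # exact integral of exp(-(t - s)/tau) ds over the new interval
            self.sum_t = self.sum_t * decay + self.tau * (1.0 - decay)
        self.t_last = t
        self.sum_v += shares
        self.sum_pv += price * shares

    def price(self):
        """Volume-weighted exponentially decayed average price."""
        return self.sum_pv / self.sum_v

    def execution_flow(self):
        """Decayed shares per decayed unit time; needs at least two ticks."""
        return self.sum_v / self.sum_t

integrator = DecayingIntegrator(tau=10.0)
for t, price, shares in [(0.0, 100.0, 5.0), (1.0, 100.0, 5.0), (2.0, 100.0, 5.0)]:
    integrator.on_tick(t, price, shares)   # one update per incoming tick
```

Each update costs a constant amount of work regardless of history length, which is what makes a tick-by-tick implementation practical.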

VIII Conclusion

An approach to obtain directional information from a sequence of past transactions with an automatic time–scale selection from execution flow is presented. Whereas a regular moving average has a built-in fixed time scale, the approach of this paper uses the state of maximal execution flow (10) to determine the time scale automatically. In contrast to a regular moving average, the developed approach has internal degrees of freedom to adjust the averaging weight according to spikes in execution flow . These internal degrees of freedom allow one to obtain an immediate ‘‘switch’’, which is not possible in a regular moving average: it is a lagging indicator that always has a -proportional time delay. For a problem of dimension in Shifted Legendre basis the system automatically selects the time scale from the interval , and in Laguerre basis from the interval . Among the unsolved problems we would note the selection of an optimal interpolation to obtain an advancing price from (35), see Appendix A, and the study of a possibility to ‘‘split’’ some average value based on some other operator spectrum, see Appendix E, Eq. (95). The software implementing the theory is available from the authors. Among the results directly applicable to trading we would note the price (49) that includes both ‘‘switching’’ and ‘‘tending’’ contributions, see Fig. 8.

A generalization of the developed theory to a multi asset universe creates a number of new opportunities. Now from a sequence of past transactions for financial instruments:

| (51) |

one can construct execution flow operators , each one with its own state of maximal and corresponding to it . In addition to this an operator of capital-flow index

| (52) |

can be constructed to determine market overall activity; it also has its own state of maximal [Footnote 4: One self-evident trading strategy: when the current value of is large, select the assets with currently low execution flow as ‘‘lagging’’ and soon to follow in the direction of the market.]. It is critically important that all these are in the same basis (the one of (56)) and their scalar products can be readily calculated. Technically this means we can independently use integrators com/polytechnik/freemoney/CommonlyUsedMoments.java, where each one calculates the moments of its own single asset , and then, from here, all the cross-asset characteristics can be calculated via projections! For example: how similar is the state of high execution flow of asset and the one of asset ? It is just a regular scalar product of two wavefunctions ; ‘‘correlated’’ assets are not the assets whose prices ‘‘go together’’ but the assets with simultaneous spikes in execution flow. In addition to the simultaneity criterion (projection), a criterion for ‘‘which one came earlier: a spike in or a spike in ’’ can be written in a similar way: obtained from directly sampled moments and , or as which does not require other moments. There are several alternative forms of ‘‘distance’’ to determine which happened earlier, see [1], ‘‘Appendix A: Time–Distance Between States’’.

In a multi asset universe the complexity of calculations grows linearly with , hence the value of can be very high even for realtime processing. Moreover, as every integrator com/polytechnik/freemoney/CommonlyUsedMoments.java works independently, the problem can be easily parallelized to run each integrator on a separate core. Then all the cross-asset characteristics can be obtained from individual asset data (the moments from a com/polytechnik/freemoney/CommonlyUsedMoments.java instance) with standard linear algebra operations such as projection (scalar product), taking the difference between two to determine the distance, or considering some other operator (e.g. capital-flow index (52)) in a state like , , or .
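The projection (scalar product) of two states expanded in the same basis can be sketched as follows. This is an illustration under assumptions, not the authors' code: the class and method names are hypothetical, each state is taken to be a real coefficient vector, and the basis is described by its Gram matrix of basis function products.

```java
/** Hypothetical sketch: similarity of two states given in the same (non-orthogonal) basis. */
final class StateOverlapSketch {

    /** Scalar product sum_{j,k} a[j] * gram[j][k] * b[k]. */
    static double dot(double[] a, double[][] gram, double[] b) {
        double sum = 0;
        for (int j = 0; j < a.length; j++) {
            for (int k = 0; k < b.length; k++) {
                sum += a[j] * gram[j][k] * b[k];
            }
        }
        return sum;
    }

    /** Squared normalized projection in [0, 1]: 1 for identical states, 0 for orthogonal ones. */
    static double overlapSquared(double[] a, double[][] gram, double[] b) {
        double ab = dot(a, gram, b);
        return ab * ab / (dot(a, gram, a) * dot(b, gram, b));
    }
}
```

With one integrator per asset, such a function would be called on the two per-asset state vectors after each tick; a value close to one would indicate simultaneous spikes in execution flow.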

We see the application of the theory of this paper to a multi asset universe as the most promising direction of future research. The simplest, but really good, indicator is the indicator (49) calculated for each asset , with all of them then summed with the weights chosen so that the terms in the sum have the dimension of capital flow.

Acknowledgements.

This research was supported by Autretech group[9], www.атретек.рф. We thank our colleagues from Autretech R&D department who provided insight and expertise that greatly assisted the research. Our grateful thanks are also extended to Mr. Gennady Belov for his methodological support in doing the data analysis.

Appendix A On Calculation of moments from sampled moments.

A theory developed in this paper works primarily with an observable and corresponding operator (matrix) , that is obtained by applying multiplication operator:

| (53) |

to sampled moments , . The moments are defined with being a polynomial of order and integration measure having the support :

| (54) |

In this paper we use: is decaying exponent and is either linear or exponential function on time:

| (55) | ||||

| (56) |

These two bases correspond to a more general (also analytically approachable) form , where and are both exponential functions on but with different time scales: and . Other forms can also be considered.

If we want to consider moments, then put it to (54) and do an integration by parts:

| (57) |

if is equal to the same weight multiplied by a polynomial: then the moments of can be obtained from the moments of according to (57). The key element is an existence of , a polynomial–to–polynomial mapping function (it is obtained as a derivative of a polynomial multiplied by the weight):

| (58) | ||||

| (59) |

where the time-derivative of a polynomial multiplied by a weight is represented by the same weight multiplied by other polynomial. The (57) corresponds to and .

For the two bases we consider in this paper it is also possible to obtain moments from moments using integration by parts, see [2], section ‘‘Basic Mathematics’’, about polynomial-to-polynomial mapping such that for an arbitrary polynomial :

| (60) |

For the bases we use such a polynomial-to-polynomial transform exists:

| (61) |

A remarkable feature of this transform is that since and is also a polynomial, thus an average with it can be converted to a density matrix average, any average can be represented as the spur from a product of operator and a density matrix :

| (62) |

This way any average of an observable can be calculated as operator averaged in some mixed state obtained from the polynomial . Note, that transform can be applied in chain:

| (63) | ||||

| (64) |

The moments of are usually obtained from direct sampling of all available observations in a timeserie:

| (65) |

the moments of a derivative can also be obtained from direct sampling:

| (66) |

See [1], section ‘‘Basis Selection’’, for one more basis: price basis: . It has no and operators available, but has similar sampling formula. Given a good choice of basis polynomials:

| (67) |

one can calculate (with double precision arithmetic) the moments to a very high order (limited by the divergence of multiplication coefficients (53)) in Laguerre basis, and (limited by poorly conditioned matrices) in shifted Legendre basis; Chebyshev polynomials also provide very stable calculations in shifted Legendre basis (Chebyshev polynomials have perfectly stable multiplication: all except , ). The result is invariant with respect to basis choice, and the ones from (67) give identical results, but numerical stability can be drastically different[10, 3].
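The stable multiplication of Chebyshev polynomials mentioned above rests on the standard identity T_j(x) T_k(x) = (T_{j+k}(x) + T_{|j-k|}(x)) / 2. A minimal sketch of how a matrix of basis function products could be assembled from a vector of moments using this identity is given below; the class and method names are hypothetical and the code is an illustration, not the authors' implementation of the multiplication operator (53).

```java
/** Hypothetical sketch: matrix of products from moments in the Chebyshev T_k basis. */
final class ChebyshevMultiplicationSketch {

    /**
     * Given moments m[l] of T_l for l = 0 .. 2n-2, returns the n x n matrix
     * whose (j, k) element is the moment of the product T_j * T_k.
     */
    static double[][] matrixFromMoments(double[] m, int n) {
        if (m.length < 2 * n - 1) {
            throw new IllegalArgumentException("need moments up to order 2n-2");
        }
        double[][] q = new double[n][n];
        for (int j = 0; j < n; j++) {
            for (int k = 0; k < n; k++) {
                // T_j T_k = (T_{j+k} + T_{|j-k|}) / 2: only two nonzero coefficients
                q[j][k] = 0.5 * (m[j + k] + m[Math.abs(j - k)]);
            }
        }
        return q;
    }

    /** Values T_0(x) .. T_{count-1}(x) from the three-term recurrence. */
    static double[] chebyshevT(double x, int count) {
        double[] t = new double[count];
        if (count > 0) t[0] = 1;
        if (count > 1) t[1] = x;
        for (int k = 2; k < count; k++) {
            t[k] = 2 * x * t[k - 1] - t[k - 2];
        }
        return t;
    }
}
```

For a measure concentrated at a single point x the moments are just T_l(x), so the resulting matrix must equal T_j(x) T_k(x); this gives a simple consistency check of the multiplication coefficients.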

Moments calculated from market data time series using Eqs. (65) and (66) are the cornerstone of our theory. The most important are the moments of execution flow , they are obtained from (66) by putting the volume as , thus the moments are obtained from a time series sample; the matrix is obtained from them using multiplication operator (53). The matrix is known analytically. These two matrices are volume- and time- averaged products of two basis functions. A generalized eigenvalue problem (5) is then formulated:

| (68) | ||||

| (69) | ||||

| (70) |

and solved. Whereas the calculation of the moments , , , , creates no problem whatsoever, an attempt to go beyond them turned out to be problematic. For example, any second order derivative (e.g. ) cannot be obtained directly from sample (66) and, at the same time, has singularities when applying an integration by parts (57), not to mention the difficulty of formulating a boundary condition at .
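For the smallest dimension (two basis functions, the value suggested in Appendix C for testing a concept) a generalized eigenvalue problem of this kind can be solved in closed form. The sketch below is an illustration under assumptions: the class name is hypothetical, the left-hand matrix is taken to be real symmetric and the right-hand (Gram) matrix symmetric positive definite, and the eigenvector is normalized to unit norm with respect to the Gram matrix. A production implementation would use a LAPACK-type solver.

```java
/** Hypothetical sketch: maximal eigenpair of A psi = lambda B psi for 2 x 2 matrices. */
final class GeneralizedEigen2x2Sketch {

    /** Returns {lambdaMax, psi0, psi1}; A symmetric, B symmetric positive definite. */
    static double[] maxEigenpair(double[][] a, double[][] b) {
        // det(A - lambda B) = qa*lambda^2 + qb*lambda + qc
        double qa = b[0][0] * b[1][1] - b[0][1] * b[0][1];
        double qb = -(a[0][0] * b[1][1] + a[1][1] * b[0][0] - 2 * a[0][1] * b[0][1]);
        double qc = a[0][0] * a[1][1] - a[0][1] * a[0][1];
        double disc = Math.sqrt(Math.max(0.0, qb * qb - 4 * qa * qc));
        double lambda = (-qb + disc) / (2 * qa);   // larger root, qa > 0

        // Null vector of (A - lambda B), taken orthogonal to its larger row
        double r00 = a[0][0] - lambda * b[0][0];
        double r01 = a[0][1] - lambda * b[0][1];
        double r11 = a[1][1] - lambda * b[1][1];
        double p0, p1;
        if (Math.abs(r00) >= Math.abs(r11)) {
            p0 = -r01;
            p1 = r00;
        } else {
            p0 = r11;
            p1 = -r01;
        }
        if (p0 == 0 && p1 == 0) {   // degenerate case: A is proportional to B
            p0 = 1;
        }
        double norm = Math.sqrt(b[0][0] * p0 * p0 + 2 * b[0][1] * p0 * p1 + b[1][1] * p1 * p1);
        return new double[] {lambda, p0 / norm, p1 / norm};
    }
}
```

The returned eigenvalue plays the role of the maximal value of the averaged observable, and the eigenvector gives the coefficients of the corresponding state in the chosen basis.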

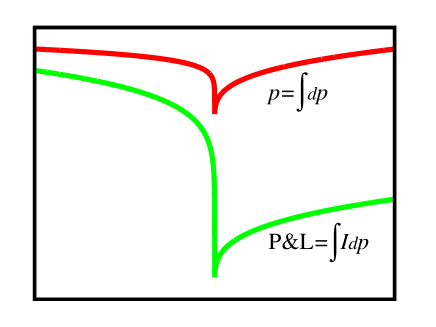

However, some of these characteristics are of great interest. The most important one is . The price provides information about the current market state, but little about possible trading opportunities. Assume at a given time interval we have some specific constant value of execution flow and some . During this interval shares were traded and the price change was . How much money can we potentially make during this ? Buy at the beginning of : shares at . Sell at the end of : shares at . Total potential P&L (assuming we can perfectly frontrun the market [Footnote 5: During every we hold shares, i.e. we always hold a position equal to execution flow , the , see [3], Section ‘‘P&L operator and trading strategy’’.]) is then . The tells us how much money can be potentially made (or lost) on market movements taking into account traded volume capacity. This is the same sum of price changes as for regular price , but not all are created equal. If occurred on a large execution flow, it contributes more; if on a small one, it contributes less [Footnote 6: The concept of is very different from the commonly studied market impact concept, that is, a price sensitivity to volume traded: ]. This creates a different way to study opportunities of market movements, see Fig. 6.
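The accounting in the paragraph above (buy a number of shares at the beginning of an interval, sell the same number at its end) can be illustrated numerically. The sketch is hypothetical and deliberately simplified: intervals are given as plain arrays, and the weight of each price change is taken to be the number of shares traded in that interval, which is an assumption about how the contributions are weighted.

```java
/** Hypothetical sketch: volume-weighted sum of price changes versus the plain sum. */
final class PotentialPnLSketch {

    /** Sum over intervals of (shares traded in the interval) * (price change over it). */
    static double weightedPriceChange(double[] shares, double[] priceChange) {
        double sum = 0;
        for (int l = 0; l < shares.length; l++) {
            sum += shares[l] * priceChange[l];
        }
        return sum;
    }

    /** Plain sum of price changes: last price minus first price. */
    static double plainPriceChange(double[] priceChange) {
        double sum = 0;
        for (double dp : priceChange) {
            sum += dp;
        }
        return sum;
    }
}
```

For three intervals with 100, 10 and 500 shares traded and price changes of 0.5, -0.2 and 0.1, the plain sum of price changes is 0.4, while the weighted sum is 98: the small adverse move on low volume barely matters, and the small favorable move on high volume contributes as much as the large one.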

The value of cannot be calculated directly as well as it cannot be calculated using integration by parts (57) in a general basis. However, if we change the basis and vary basis functions in the basis of (68) eigenproblem an approximate solution can be obtained. Consider the basis , it is orthogonal (8), (9) as:

| (71a) | |||

| (71b) | |||

what gives for an arbitrary . Then taking into account (57) and (58) put and . Obtain for matrix elements:

| (72) |

These are matrix elements of . We are going to modify them to obtain sought matrix elements , that should be zero when . For this reason the average in (72) should be reduced to an integral of an exact differential to be canceled with out of integral term. Taking into account (68) and (71) one can see that

| (73) |

satisfies exact differential condition. Then practically applicable expression is:

| (74) |

This is an expression of operator in the basis of (68). The reason why we were able to obtain this explicit expression is that we managed to combine differentiation of a product (58) with eigenvalues problem (68) to write, when , each matrix element as an exact differential. This approximation can also be viewed as operators multiplication factoring:

| (75) |

what gives

| (76) |

and two approximations for :

| (77) | ||||

| (78) |

Using an approximation the (77) result can be generalized to a –power , however this result is not very accurate for other than or , since it is obtained from the moments:

| (79) |

This method of calculation is implemented in com/polytechnik/freemoney/MatricesFromPI.java:getbQQIpowdpdtFromQQpi_withLastP; for see e.g. com/polytechnik/freemoney/PFuture.java:bQQ_DP. A matrix obtained in basis can be converted to basis in a regular way:

| (80) | ||||

| (81) |

where Gram matrix , and are (68) eigenvectors in basis, Eq. (70), see com/polytechnik/utils/EVXData.java. [Footnote 7: A question arises whether the obtained matrix , corresponds to a measure or not: can it be obtained from some moments by applying multiplication operator (53)? We have an algorithm to establish this fact, see Theorem 3 of [7]. Numerical experiments show that this is almost the case. A mismatch may be caused either by some numerical instability or by some degeneracy in the problem. A numerical instability is very unlikely because (62) holds exactly for both: 1) the original matrix (77) and 2) the matrix obtained by applying multiplication operator (53) to the moments obtained from Theorem 3 of [7] applied to the original matrix (77). A mismatch between these two matrices is observed starting with ; the difference is very small but clearly established numerically. This degeneracy (if it exists) does not create any problem in the calculation of the value of any observable as the density matrix used has the form: a state since spike.] The expression (77) is an approximation; a similar (and slightly better) approximation is the non–Hermitian matrix (78), as it has a single approximate product compared with two in (77).

Different approximations can be obtained using a general form of (75):

| (82) |

corresponding to an approximation . We can obtain a result applying it to other moments available directly from sample: , along with their derivatives , , , and obtained from integration by parts formula (57) with boundary conditions: 1). impact from the future (19) and 2). zero at ; traded volume is measured relatively , it is negative for past observations. A few useful approximations of operator :

| (83) | |||

| (84) | |||

| (85) |

Despite these operators being non-Hermitian this creates no problem as they are used only in calculation of with Hermitian density matrix such as in (45). The approximation (83) uses directly sampled moments , it is a product of two separately sampled operators with spikes, nevertheless it gives results very similar to (78), without spurious artifacts; sometimes, however, it is difficult to combine it with from (34), as in this case the moments from two different samplings are used together. The approximation (84) uses exact and operators matrix elements but the result is noisy. The (85) also uses exact values of and (obtained from (57) with zero boundary condition due to factor) operators, but it is completely useless due to singularities; however without the last term the operator gives results similar to . These two operators should probably be considered together as corresponding to and . In sufficiently localized states (e.g. ) we typically have .

Another possible approach to obtain that enters into (35) together with is to consider the operator . As above, let us take (72) and, assuming that changes in are much smaller than changes in , modify it to obtain matrix elements:

| (86) |

This expression satisfies limit case conditions. When the result exactly equals to , when the result exactly equals to , and when the result is a differential of a constant. With as per (19), the (86) also satisfies , the same as for . See com/polytechnik/freemoney/MatricesFromPI.java:getbQQDtpDivIFromQQpi_withLastP for numerical implementation. This approximation can be tried as a proxy to in (35), but the result is very poor. The problem is that (86) has an extra common factor . Similarly to (76) we can modify it by factor to remove from the denominator:

| (87) | ||||

| (88) |

but this creates a problem that the condition no longer holds, thus it cannot be applied in (35). One may try to adjust the value of in (88) to have this condition satisfied (put and take the Spur with of (88), set it equal to zero; the values of and ‘‘adjusted’’ are typically very close, the adjusted value is often slightly larger). An important difference between and is that the first one is an exact differential (thus its Spur with can be reduced to sub-differential expression in state and the boundary term), and the second one is not, thus it cannot be reduced to some observable in state.

Appendix B On Surrogate Volume

Another question of interest is whether all the developed theory and software of this work can be used without trading volume available. For a number of markets (such as: sovereign CDS, corporate fixed income, crypto exchanges, currency trading, etc.) it is quite common for the price to be very accurate and available almost in real time, while the traded volume is either not available at all or provided incorrectly (sometimes intentionally incorrectly). In such a case there is an option to use the absolute value of price change as if it were the volume; the calculations are the same: in (66) instead of one can use . The only problem with this approach is that market events without price change would not be taken into account as for them , hence the results will be less accurate. However, our past experiments, see [3], Fig. 6, show that the absolute value of a price tick as a ‘‘poor man volume’’ often provides quite similar results. A feed of ‘‘all price ticks’’ can be used as a surrogate volume. For a liquid asset it typically takes a few minutes for to exceed by several orders of magnitude; for the entire trading session in Fig. 7 a typical maximal price change is about , but the sum of all absolute price changes is about ; the total trading volume of this session reported by NASDAQ ITCH [8] is about shares. As this sequence is all positive, we also tried it with the market impact concept (that completely failed with actual price change ) in the hope that with this we may find an identifiable limit for . The result is also unsatisfactory: the is very similar to : it fluctuates by orders of magnitude and clearly has no stable limit at any time scale below 10 minutes (for the US equity market). However the is similar to and for liquid assets can be used as a ‘‘poor man volume’’ with the matrices and in (69). See com/polytechnik/freemoney/CommonlyUsedMoments.java:addObservationNoBasisShift for calculation of surrogate volume moments.

In Fig. 7 we present (17) average (along with regular moving average ) calculated for regular volume and surrogate volume . One can see similar behavior of localization ‘‘switches’’. However, surrogate volume states have some ‘‘switches’’ missed and the overall picture is less detailed. In Fig. 8 the price from (29) is presented. One can see a similar, but less detailed picture. This leads us to conclude that is a ‘‘poor man volume’’.
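The construction of a surrogate volume from a price-only feed can be sketched as follows. The class and method names are hypothetical; the only operation taken from the text is the replacement of the traded volume of a tick by the absolute value of the price change at that tick. The first tick has no predecessor and is assigned zero here, which is a convention of this sketch.

```java
/** Hypothetical sketch: absolute price change per tick used in place of traded volume. */
final class SurrogateVolumeSketch {

    /** Returns |p[l] - p[l-1]| for every tick; the first element is 0 by convention. */
    static double[] fromPrices(double[] prices) {
        double[] surrogate = new double[prices.length];
        for (int l = 1; l < prices.length; l++) {
            surrogate[l] = Math.abs(prices[l] - prices[l - 1]);
        }
        return surrogate;
    }

    /** Sum of all absolute price changes, the analogue of total traded volume. */
    static double total(double[] surrogate) {
        double sum = 0;
        for (double s : surrogate) {
            sum += s;
        }
        return sum;
    }
}
```

Ticks without a price change get zero surrogate volume, which is exactly the loss of information discussed above; the resulting array can be fed to the same moment integrator that would otherwise receive shares traded.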

Appendix C Software Usage Description

The software is written in Java. As with [1] follow the steps:

• Install java 19 or later.

• Download from [11] NASDAQ ITCH data file S092012-v41.txt.gz, and the archive AMuseOfCashFlowAndLiquidityDeficit.zip with the source code. There is also an alternative location of these files.

• Decompress and recompile the program:

    unzip AMuseOfCashFlowAndLiquidityDeficit.zip
    javac -g com/polytechnik/*/*java

• Run the command to test the program:

    java com/polytechnik/algorithms/TestCall_FreeMoneyForAll \
      --musein_file=dataexamples/aapl_old.csv.gz \
      --musein_cols=9:1:2:3 \
      --n=12 \
      --tau=256 \
      --measure=CommonlyUsedMomentsLegendreShifted \
      --museout_file=museout.dat

  Program parameters are:

  - --musein_file=aapl.csv: Input tab–separated file with a time series of (time, execution price, shares traded) triples. The file is possibly gzip-compressed.

  - --musein_cols=9:1:2:3: Out of total 9 columns of the dataexamples/aapl_old.csv.gz file, take column #1 as time (nanoseconds since midnight), #2 (execution price), and #3 (shares traded); column index is base 0.

  - --museout_file=museout.dat: Output file name is set to museout.dat.

  - --n=12: Basis dimension. Typical values are: 2 (for testing a concept), or some value about for more advanced use.

  - --tau=256: Exponent time (in seconds) for the measure used.

  - --measure=CommonlyUsedMomentsLegendreShifted: The measure. The values CommonlyUsedMomentsLaguerre, CommonlyUsedMomentsMonomials correspond to Laguerre measure and CommonlyUsedMomentsLegendreShifted corresponds to shifted Legendre measures. The results of CommonlyUsedMomentsMonomials (uses ) should be identical to CommonlyUsedMomentsLaguerre (uses ), as the measure is the same and all the calculations are –basis invariant (but numerical stability is worse for CommonlyUsedMomentsMonomials).

• The results are saved in the output file museout.dat. Among the values of interest are the following:

  - pFV.pv_average: Regular moving average from (16).

  - pFV.Tv_average: The moving average from (18).

  - pFV.totalVolume: Total volume traded up to current tick.

  - pFV.pv_M: The value of (15).

  - pFV.Tv_M: The value of (17), an indicator of localization of .

  - pFV.I.wH_squared: The value of (50), an indicator of applicability of any prediction.

  The output includes two versions: calculated with ‘‘actual’’ volume (prefixed with pFV.) and calculated with ‘‘surrogate volume’’ of Appendix B (prefixed with pFA.).

C.1 Software Code Structure

Provided software is located in several directories:

• com/polytechnik/utils/ General basis utilities including my Radon-Nikodym approach [6] to machine learning.

• com/polytechnik/lapack/ Ported to java LAPACK library.

• com/polytechnik/lapack/ NASDAQ ITCH [8] parsing.

• com/polytechnik/trading/ Both: ‘‘scaffolding’’ for new ideas and a ‘‘graveyard’’ for old ones. Also contains unit tests. One can run all unit tests (takes >10 hours) as

    java com/polytechnik/trading/QVM

  or three simple unit tests of the algorithms of this paper:

    java com/polytechnik/trading/PnLInPsiHstateLegendreShifted\$PnLInPsiHstateLegendreShiftedTest
    java com/polytechnik/trading/PnLInPsiHstateLaguerre\$PnLInPsiHstateLaguerreTest
    java com/polytechnik/trading/PnLInPsiHstateMonomials\$PnLInPsiHstateMonomialsTest

• com/polytechnik/freemoney/ The code implementing the theory of this paper. Most noticeable are:

  - com/polytechnik/freemoney/CommonlyUsedMoments.java Calculate the moments from (Time, Execution Price, Shares Traded) sequence of transactions using direct sampling.

  - com/polytechnik/freemoney/FreeMoneyForAll.java A wrapper to calculate the matrices from sampled moments . Secondary sampling of Appendix D is also included.

  - com/polytechnik/freemoney/PFuture.java To calculate all the theory of this paper.

• com/polytechnik/algorithms/ Drivers to call various algorithms.

Appendix D On Secondary Sampling

When direct sampling (66) of an observable is not available, an advanced technique of ‘‘secondary sampling’’[2] can be applied to calculate its moments. An example: assume that on every tick a moving average is calculated. Then this calculated value is used as if it were a new observable, and the moving average of this new observable is calculated. For trivial cases this gives nothing new: a moving average of a moving average is a moving average with a different weight (for exponential moving average it is the moving average with twice lower exponent time). However, a quantity calculated ‘‘as if it were an observable’’ can be a characteristic that describes an immanent property of the system. In [2] we applied this technique to the maximal eigenvalue of eigenproblem (10); we treated the tick change in (calculated value, see Fig. 1) as if it were a change in the observable and calculated the moments with as . The simplest application of this ‘‘calculated observable’’ is the sum of price changes corresponding to positive changes , which gives the scalp price[2]:

| (89) |

Normalization is typically used for scalp price. Regular price corresponds to no condition on . Whereas actual computation is performed in a single pass by highly optimized code using a recurrent relation for moments and in-place calculation of the value to be used as a ‘‘new observable’’, for understanding the concept one may think about secondary sampling as having two passes over the input time series: first, scan all time series observations and build a ‘‘new observable’’ for every point read; second, scan this time series once again treating the value calculated on the first pass as if it were a regular observable. This ‘‘secondary sampling’’ approach greatly extends the types of observable that can be studied. However, while being very powerful in calculation of moments that otherwise are not approachable at all, it has difficulties in interpretation of the results.
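The conceptual two-pass picture of secondary sampling can be sketched as follows. Everything here is a stand-in chosen for illustration: the class and method names are hypothetical, the ‘‘calculated observable’’ of the first pass is a simple per-tick exponential moving average rather than the maximal eigenvalue used in [2], and the second pass returns an unnormalized sum of price changes over the ticks where the calculated observable increased.

```java
/** Hypothetical sketch of two-pass secondary sampling with a stand-in calculated observable. */
final class SecondarySamplingSketch {

    /** First pass: build a calculated value for every tick (here a per-tick EMA). */
    static double[] firstPassEma(double[] observed, double alpha) {
        double[] calculated = new double[observed.length];
        for (int l = 0; l < observed.length; l++) {
            calculated[l] = (l == 0)
                    ? observed[0]
                    : (1 - alpha) * calculated[l - 1] + alpha * observed[l];
        }
        return calculated;
    }

    /**
     * Second pass: treat the calculated value as a regular observable and sum
     * only the price changes that coincide with its positive changes.
     */
    static double conditionalPriceSum(double[] price, double[] calculated) {
        double sum = 0;
        for (int l = 1; l < price.length; l++) {
            if (calculated[l] - calculated[l - 1] > 0) {
                sum += price[l] - price[l - 1];
            }
        }
        return sum;
    }
}
```

Dropping the condition in the second pass gives back the regular sum of all price changes, that is, the last price minus the first one.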

The implementation of this technique is available in com/polytechnik/freemoney/CommonlyUsedMoments.java. The method updateWithSingleObservation recurrently adjusts the basis and adds calculated contributions corresponding to regular measures , , as in (66). After this call all regular moments become available, and we have an option to calculate the value of some ‘‘secondary’’ observable from them, such as . When the calculation is completed, the method addIHObservationSecondarySampling(double IH) can be called. In addition to the moments already available from updateWithSingleObservation, it calculates, as in (66), three other moments corresponding to the measure , specifically: , , and . The IH can be a calculated characteristic of various meaning, and the moments of this characteristic are now obtained as if it were a regular observable. This technique is also very convenient for unit tests of moments calculation by using a regular observable as IH.

Appendix E On Separation of States Based On Sign

The value of future execution flow (19) allows us to obtain operator’s matrix elements. Using integration by parts (put in (72)) obtain:

| (90) |

This operator’s matrix elements cannot be obtained directly from sample (66), however the knowledge of the impact from the future allows us to apply an integration by parts. Actually this is the only operator for which integration by parts gives exact answer; for other operators (e.g ) the boundary value at is not known and matrix elements are typically obtained ‘‘within a boundary term’’. Only having determined the exact value of (that includes both: an ‘‘impact from the past’’ and an ‘‘impact from the future’’) it is possible to have the matrix elements that are accurate enough to consider an eigenproblem:

| (91) |

Values other than (19) can be used in the boundary term of (90), see [2] ‘‘Appendix E: On calculation of operator matrix elements from operator ’’ for a list of reasonable options for . The concept introduced in [2] is to treat low high and high low transitions separately, as they lead to a very different price behavior. These -transitions correspond to derivative of different signs; the corresponding operator always has eigenvalues of different signs: . Let us split the entire space into a direct sum of two subspaces [Footnote 8: Here we use matrix elements (90) that are calculated from directly sampled moments. An alternative is to split the states according to or sign using the ‘‘secondary sampling’’ of [2]. The simplest example of its application is the scalp price (89) that takes into account only ‘‘important’’ price changes (regular price is the sum of all price changes).]. Construct two projection operators:

| (92) | ||||

| (93) | ||||

| (94) |

This transform can be considered as eigenvalues adjustment technique[12] where the eigenvalues (not the eigenvectors!) are adjusted for an effective identification of weak hydroacoustic signals. The can be viewed as operator with all negative eigenvalues set to and all positive eigenvalues set to ; the same with for opposite sign. This technique is most easy to implement in (91) basis (where is diagonal), then to convert obtained projection operators back to the basis used applying (80). Alternatively one can convert all the matrices , , , , , to the basis of (91) eigenproblem applying (81). All the results will be identical as the theory is gauge invariant[6]. With projection operators (92) and (93) any density matrix average can be written in the form:

| (95) |

This split allows us to separate an average of in density matrix state to the ones corresponding to positive and negative .
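A minimal sketch of building two projection operators from the sign of the eigenvalues of a symmetric operator is given below. It makes simplifying assumptions that do not hold in the paper as written: the matrix is taken in an orthonormal basis (so an ordinary, not a generalized, eigenproblem is solved), a plain cyclic Jacobi iteration replaces a LAPACK routine, zero eigenvalues are assigned to the ‘‘minus’’ projector, and all names are hypothetical.

```java
/** Hypothetical sketch: projectors on positive and non-positive eigenvalue subspaces. */
final class SignProjectorsSketch {

    /** Cyclic Jacobi: diagonalizes symmetric a in place, returns eigenvectors as columns. */
    static double[][] jacobi(double[][] a) {
        int n = a.length;
        double[][] v = new double[n][n];
        for (int i = 0; i < n; i++) v[i][i] = 1;
        for (int sweep = 0; sweep < 50; sweep++) {
            double off = 0;
            for (int p = 0; p < n; p++)
                for (int q = p + 1; q < n; q++) off += a[p][q] * a[p][q];
            if (off < 1e-28) break;
            for (int p = 0; p < n - 1; p++) {
                for (int q = p + 1; q < n; q++) {
                    if (a[p][q] == 0.0) continue;
                    double theta = (a[q][q] - a[p][p]) / (2 * a[p][q]);
                    double sign = theta >= 0 ? 1 : -1;
                    double t = sign / (Math.abs(theta) + Math.sqrt(theta * theta + 1));
                    double c = 1 / Math.sqrt(t * t + 1), s = t * c;
                    for (int k = 0; k < n; k++) {   // a <- a * rotation (columns p, q)
                        double akp = a[k][p], akq = a[k][q];
                        a[k][p] = c * akp - s * akq;
                        a[k][q] = s * akp + c * akq;
                    }
                    for (int k = 0; k < n; k++) {   // a <- rotation^T * a (rows p, q)
                        double apk = a[p][k], aqk = a[q][k];
                        a[p][k] = c * apk - s * aqk;
                        a[q][k] = s * apk + c * aqk;
                    }
                    for (int k = 0; k < n; k++) {   // accumulate eigenvectors
                        double vkp = v[k][p], vkq = v[k][q];
                        v[k][p] = c * vkp - s * vkq;
                        v[k][q] = s * vkp + c * vkq;
                    }
                }
            }
        }
        return v;
    }

    /** Returns {pPlus, pMinus}: sums of v_i v_i^T over positive / non-positive eigenvalues. */
    static double[][][] projectors(double[][] symmetric) {
        int n = symmetric.length;
        double[][] a = new double[n][];
        for (int i = 0; i < n; i++) a[i] = symmetric[i].clone();
        double[][] v = jacobi(a);
        double[][] pPlus = new double[n][n], pMinus = new double[n][n];
        for (int i = 0; i < n; i++) {
            double[][] target = a[i][i] > 0 ? pPlus : pMinus;
            for (int j = 0; j < n; j++)
                for (int k = 0; k < n; k++) target[j][k] += v[j][i] * v[k][i];
        }
        return new double[][][] {pPlus, pMinus};
    }
}
```

The two projectors sum to the unit operator and each is idempotent, so inserting their sum into any trace with a density matrix does not change its total value; how the individual terms are grouped into positive and negative contributions is a modeling choice not fixed by this sketch.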

The first candidates for application of this technique are the terms from Total Lagrangian action (43) where the operators are calculated in the state of density matrix. Every that enters into the expression (44) can be split into contributions of different signs. There are several implementations of this technique, e.g. com/polytechnik/freemoney/SplitdIdt.java and several others. The result, however, is not that great and this projection operators approach requires more research to be performed.

The property that requires special attention is that while the density matrix is obtained from the polynomial with transform (60), and all the average relations hold exactly, the density matrix itself may not have all the eigenvalues positive. This creates no problem with the total but sometimes leads to spurious artifacts when combined with projection operators; the effect, however, is small. These small but negative eigenvalues of the density matrix, ‘‘Hermann Minkowski-style space’’, also require additional research.

E.1 Execution Flow Based Eigenvalues Adjustment Example

In the Appendix above we considered projection operators (94) to ‘‘split’’ or based on some other operator spectrum, e.g. . To demonstrate a simplified example of this eigenvalues adjustment technique let us apply it to the operator . Consider the state ‘‘since till now’’ and a trading strategy: buy at execution flow below aggregated execution flow with (110) and (111), and sell above it. The P&L position changes , see [3] Section ‘‘P&L operator and trading strategy’’, is:

| (96) |

Then the constraint is satisfied:

| (97) |

and, for this , the can be calculated:

| (98) |

If we want to consider (98) as a superposition of states, introduce an operator:

| (99) | ||||

| (100) | ||||

| (101) | ||||

| (102) |

The operator is actually the operator but with the eigenvalues instead of the [Footnote 9: The operator may not correspond to a measure, i.e. it does not necessarily correspond to moments from which to obtain using multiplication operator (53).]. Then (97) and (98) become (103) and (104) respectively:

| (103) | ||||

| (104) |

From these operators and one can obtain ‘‘equilibrium prices’’ and etc. The result is similar to the technique of ‘‘extra volume’’ and of the Appendix F below; no advancing information we managed to obtain from (99).

Appendix F On The States Of Double Integration

The density matrix state (32) was obtained from the pure state of maximal execution flow by applying transform (60) to the polynomial , a variant of integration by parts:

| (105) | |||

| (106) |

with (106) due to normalizing and due to basis choice obtain familiar ‘‘integration by parts’’ relation (63). The density matrix allows us to calculate ‘‘execution-flow’’-related values from operator. We already used this relation (a special case of (62)) to calculate e.g.

| (107) | ||||

| (108) | ||||

| (109) | ||||

| (110) | ||||

| (111) |

The (108) corresponds to (72), (109) with boundary condition (19) gives we used in (31), (110) and (111) are traded volume and time since spike till ‘‘now’’; typically we use normalizing and .

Now consider a density matrix (33) obtained from the pure state of maximal execution flow by applying transform (60) to the polynomial twice. This density matrix corresponds to integration by parts performed twice. Obtain from (64):

| (112) | ||||

| (113) |

The density matrix allows us to calculate ‘‘volume’’-related values from operator. One of the major results of this paper is the fact, established in Section IV.2, that in state the values of operators and are very close and their difference (if it exists) gives future price (36). Let us consider these operators not in state, but instead in the state . An important difference from state is that the condition of being zero in state no longer holds, as execution flow and aggregated execution flow are different in state. The (112) requires an ‘‘extra volume’’

| (114) |

to obtain proper value of operator ; an alternative is to use eigenvalues adjustment technique of Appendix E.1 above. Using (35) obtain

| (115) |

The difference from (35) is that there is an extra term caused by the difference between and . We can consider providing equal execution flow and aggregated execution flow, see [2]: ‘‘Appendix C: The state of maximal aggregated execution flow ’’, but these states give little improvement. Consider instead a simplistic approach: select the value of that makes equal to zero:

| (116) |

In Fig. 9 the is presented. We see no ‘‘advancing’’ property of the kind seen for (36); this is an indicator of the ‘‘lagging’’ type. This leads us to conclude that the state, while it has a number of interesting properties to research, does not immediately provide an ‘‘advancing’’ indicator. The is probably the only state in which and operators are very close and their difference (if it exists) gives the future price (36).

References

- Malyshkin [2017a] V. G. Malyshkin, Market Dynamics. On A Muse Of Cash Flow And Liquidity Deficit, ArXiv e-prints (2017a), arXiv:1709.06759 [q-fin.TR] .

- Malyshkin [2019a] V. G. Malyshkin, Market Dynamics: On Directional Information Derived From (Time, Execution Price, Shares Traded) Transaction Sequences., ArXiv e-prints (2019a), arXiv:1903.11530 [q-fin.TR] .

- Malyshkin and Bakhramov [2015] V. G. Malyshkin and R. Bakhramov, Mathematical Foundations of Realtime Equity Trading. Liquidity Deficit and Market Dynamics. Automated Trading Machines., ArXiv e-prints (2015), http://arxiv.org/abs/1510.05510, arXiv:1510.05510 [q-fin.CP] .

- Malyshkin and Bakhramov [2016] V. G. Malyshkin and R. Bakhramov, Market Dynamics vs. Statistics: Limit Order Book Example, ArXiv e-prints (2016), arXiv:1603.05313 [q-fin.TR] .

- Malyshkin [2016] V. G. Malyshkin, Market Dynamics. On Supply and Demand Concepts, ArXiv e-prints (2016), http://arxiv.org/abs/1602.04423, arXiv:1602.04423 .

- Malyshkin [2019b] V. G. Malyshkin, On The Radon–Nikodym Spectral Approach With Optimal Clustering, arXiv preprint arXiv:1906.00460 (2019b), arXiv:1906.00460 [cs.LG] .

- Malyshkin [2018] V. G. Malyshkin, On Lebesgue Integral Quadrature, ArXiv e-prints (2018), arXiv:1807.06007 [math.NA] .

- OMX [2014] N. OMX, NASDAQ TotalView-ITCH 4.1, Report (Nasdaq OMX, 2014). See data file samples at https://emi.nasdaq.com/ITCH/ and the newest version specification, TotalView-ITCH 5.0.

- [9] AUTRETECH LLC, www.атретек.рф, is a resident of the Skolkovo Technopark. In May 2019, the company became the winner of the Skolkovo Cybersecurity Challenge competition.

- Beckermann [1996] B. Beckermann, On the numerical condition of polynomial bases: estimates for the condition number of Vandermonde, Krylov and Hankel matrices, Ph.D. thesis, Habilitationsschrift, Universität Hannover (1996).

- Malyshkin [2014] V. G. Malyshkin, (2014), the code for polynomials calculation, http://www.ioffe.ru/LNEPS/malyshkin/code.html, there is also an alternative location.

- Malyshkin [2017b] G. S. Malyshkin, The comparative efficiency of classical and fast projection algorithms in the resolution of weak hydroacoustic signals (Сравнительная эффективность классических и быстрых проекционных алгоритмов при разрешении слабых гидроакустических сигналов), Acoustical Physics 63, 216 (2017b), doi:10.1134/S1063771017020099 (eng) ; doi:10.7868/S0320791917020095 (рус).