Marginal treatment effects in the absence of instrumental variables

Abstract

We propose a method for defining, identifying, and estimating the marginal treatment effect (MTE) without imposing the instrumental variable (IV) assumptions of independence, exclusion, and separability (or monotonicity). Under a new definition of the MTE based on reduced-form treatment error that is statistically independent of the covariates, we find that the relationship between the MTE and standard treatment parameters holds in the absence of IVs. We provide a set of sufficient conditions ensuring the identification of the defined MTE in an environment of essential heterogeneity. The key conditions include a linear restriction on potential outcome regression functions, a nonlinear restriction on the propensity score, and a conditional mean independence restriction which will lead to additive separability. We prove this identification using the notion of semiparametric identification based on functional form. And we provide an empirical application for the Head Start program to illustrate the usefulness of the proposed method in analyzing heterogenous causal effects when IVs are elusive.

Keywords: causal inference; treatment effect heterogeneity; point identification; Head Start

JEL Codes: C14, C31, C51

1 Introduction

The marginal treatment effect (MTE), which was developed in a series of seminal papers by Heckman and Vytlacil (1999, 2001, 2005), has become one of the most popular tools in social sciences for describing, interpreting, and analyzing the heterogeneity of the effect of a nonrandom treatment. The MTE is defined as the expected treatment effect conditional on the observed covariates and normalized error term consisting of unobservable determinants of the treatment status. Although the definition of MTE doesn’t necessitate an instrumental variable (IV), nearly all the existing identification strategies for the MTE rely heavily on valid instrumental variation which can induce otherwise similar individuals into different treatment choices. This is mainly because the MTE has been regarded as an extension of and supplement to the standard IV method in the causal inference literature. However, in practical applications, the validity of IVs is usually difficult to justify. In the most common case of just-identification in which only one IV is available, the exogeneity or randomness of the IV is fundamentally untestable. The exclusion restriction, which is another condition underlying the validity of IVs, has also been challenged more often than not (e.g., van den Berg, 2007; Jones, 2015).

Motivated by the potential invalidity of the available instruments, and inspired by the observation that instruments are unnecessary in the definition of MTE, we model, identify, and estimate the MTE without imposing the standard IV assumptions of conditional independence, exclusion, and separability. Namely, we allow all the observed covariates to be statistically correlated to the error term and have a direct impact on the outcome in addition to an indirect impact through the treatment variable. The cornerstone of our model is a normalized treatment equation, which determines treatment participation by the propensity score crossing a reduced-form error term that is statistically independent of (although functionally dependent on) all the covariates. This is comparable to the conventional IV model, in which normalization is performed with respect to only the non-IV covariates. The independence of the reduced-form treatment error from all the covariates can partly justify a conditional mean independence assumption on the potential outcome residuals, which can ensure the additive separability of the MTE into observables and unobservables. This separability then facilitates the semiparametric identification of the MTE, given a linear restriction on the potential outcome regression functions and a nonlinear restriction on the propensity score function. We prove the identification by representing the MTE as a known function of conditional moments of observed variables. Intuitively, the identification power comes from the excluded nonlinear variation in the propensity score, which plays the role of the IV in exogenously perturbing the treatment status. Our identification strategy closely resembles the semiparametric counterpart of identification based on functional form (Dong, 2010; Escanciano et al., 2016; Lewbel, 2019; Pan et al., 2022). We build our result on the notion of identification based on functional form mainly because (i) it can lead to point identification and estimation, (ii) the required assumptions are regular, and (iii) the resulting estimation procedures are the most compatible with the standard procedures in conventional IV-MTE models. After identification, the MTE can be semiparametrically estimated by implementing the kernel-weighted pairwise difference regression for the sample selection model (Ahn and Powell, 1993) for each treatment status.

We contribute to the literature on program evaluation under essential heterogeneity by proposing a method for defining, identifying, and estimating the MTE in the absence of IVs. The main value of this IV-free framework of the MTE is threefold. First, it provides an approach for consistently estimating heterogeneous causal effects when a valid IV is hard to find. Second, it suggests an easy-to-implement means for testing the validity of a candidate IV, because it nests the models that assume exclusion restrictions. For instance, in studies on returns to education, the validity of most instruments for educational attainment is suspect, such as parental education, distance to the school, and local labor market conditions (Kédagni and Mourifié, 2020; Mourifié et al., 2020). Our framework enables a reliable evaluation of returns to education without imposing IV assumptions on the candidate instruments and implies a simple test for exclusion restrictions by -testing the instruments’ coefficients in potential outcome equations. Third, even though the validity of the IV is verified, identification based on functional form can be invoked as a way to increase the efficiency of estimation or to check the robustness of the results to alternative identifying assumptions.

Early explorations of the identification of endogenous regression models when IVs are unavailable focused on linear systems of simultaneous equations, in which the lack of IVs is associated with hardly justifiable exclusion restrictions and insufficient moment conditions. The typical strategy for addressing this underidentification problem is to impose second- or higher-order moment restrictions to construct instruments by using the available exogenous covariates (e.g., Fisher, 1966; Lewbel, 1997; Klein and Vella, 2010). In particular, imposing restrictions on the correlation between the covariates and the variance matrix of the vector of model errors leads to identification based on heteroscedasticity (Lewbel, 2012). D’Haultfœuille et al. (2021) considered a more general nonseparable, nonparametric triangular model without the exclusion restriction, and established identification for the control function approach based on a local irrelevance condition. However, these results are restricted to the case of continuous endogenous variable or treatment. Lewbel (2018) showed that, in linear regression models, the moment conditions of identification based on heteroscedasticity can be satisfied when the endogenous treatment is binary, at the expense of strong restrictions on the model errors. Alternatively, Conley et al. (2012) and Van Kippersluis and Rietveld (2018) presented a sensitivity analysis approach for performing inference for linear IV models while relaxing the exclusion restriction to a certain extent. However, the linear models implicitly impose an undesirable homogeneity assumption on treatment effects.

The literature on sample selection models without the exclusion restriction is also closely related. A general solution to the problem of lack of excluded variables is partial identification and set estimation (Honoré and Hu, 2020, 2022; Westphal et al., 2022). The limitation of this approach is that the identified or estimated set may be too wide to be informative. Another solution is the approach of identification at infinity that uses the data only for large values of a special regressor (Chamberlain, 1986; Lewbel, 2007) or of the outcome (D’Haultfœuille and Maurel, 2013). Although identification at infinity can lead to point identification, it is typically featured as irregular identification (Khan and Tamer, 2010), and the derived estimate will converge at a rate slower than , where is the sample size. Heckman (1979) exploited nonlinearity in the selectivity correction function to achieve point identification and root- consistent estimation for the linear coefficients of a parametric sample selection model, which is the original version of identification based on functional form. However, Heckman’s model imposes a restrictive bivariate normality assumption on the error terms and thus poses the risk of model misspecification. Escanciano et al. (2016) extended Heckman’s approach to a general semiparametric model and established identification of the linear coefficients by exploiting nonlinearity elsewhere in the model. At the expense of the generality of their model, Escanciano et al. (2016)’s identification result was only up to scale and required a scale normalization assumption. This normalization would be innocuous if we know the sign of the normalized coefficient and are interested in only the sign, but not the magnitude, of the other coefficients. However, the magnitude of the linear coefficients is indispensable to the evaluation of a program or a policy. In addition, the identifying assumption about nonlinearity developed by Escanciano et al. (2016) implicitly rules out the case of the existence of two continuous covariates. We adapt the result of Escanciano et al. (2016) to the MTE model by amending the two defects. First, we take advantage of the specific model structure to relax the scale normalization and identify the magnitude of the linear coefficients. Moreover, the identifying assumption about nonlinearity will be simplified owing to the specific model structure. We give an intuitive interpretation of the new nonlinearity assumption. Second, we combine the nonlinearity assumption with a local irrelevance condition to allow for an arbitrary number of continuous covariates.

The rest of this paper is organized as follows. In Section 2, we introduce our model and the definition of MTE without IVs. In Section 3, we propose a possible set of sufficient conditions for the identification of the MTE, in place of the standard IV assumptions. The key conditions include semiparametric functional form restrictions and the conditional mean independence assumption, of which the latter implies the additive separability of the MTE into observed and unobserved components. Section 4 suggests consistent estimation procedures, and Section 5 provides an empirical application to Head Start. Section 6 concludes. Online appendices comprise (A) a proof of the primary identification result, (B) a discussion on variants of the nonlinearity assumption, and (C) an identification result for limited valued outcomes which entails a slight modification of the assumptions.

2 Model

In the following, we denote random variables or random vectors by capital letters, such as , and their possible realizations by the corresponding lowercase letters, such as . We denote as the cumulative distribution function (CDF) of and as the conditional CDF of given . Our analysis builds on the potential outcomes framework developed by Roy (1951) in econometrics and Rubin (1973a, b) in statistics. Specifically, we consider a binary treatment, denoted by , and let and denote the potential outcomes if the individuals are treated () or not treated (), respectively. The observed outcome is

and the quantity of interest is the counterfactual treatment effect . We suppose that the treatment status is determined by the following threshold crossing rule:

| (1) |

where is a vector of pretreatment covariates, is the indicator function of event , is an unknown function, and is a structural error term containing unobserved characteristics that may affect participation in the treatment, such as the opportunity costs or intangible benefits of the treatment.

Compared with the conventional MTE model, we relax the independence and separability assumptions in the treatment equation (1) to account for the absence of IVs. First, we don’t assume that is stochastically independent of ; that is, no exogenous covariate is needed. Second, we allow the treatment decision rule to be intrinsically nonseparable in observed and unobserved characteristics, which is equivalent to relaxing the monotonicity assumption in the IV model (Vytlacil, 2002, 2006). Specifically, let be the counterfactual error term denoting what would have been if had been externally set to , then the nonseparability of (1) means that at least two vectors and exist in the support of such that with positive probability. In particular, is allowed to depend functionally on , as in the following example.

Example 1.

We consider a latent index rule for treatment participation:

| (2) |

where the observed can be statistically correlated to the unobserved , and no restriction is imposed on the cross-partials of the index function . Without independence and additive separability, model (2) is completely vacuous, imposing no restrictions on the observed or counterfactual outcomes (Heckman and Vytlacil, 2001). This general latent index rule fits into the treatment equation (1) by taking and .

Example 1 illustrates that no generality is lost by modeling the treatment variable as equation (1) without imposing the independence and separability assumptions. We define the propensity score function as the conditional probability of receiving the treatment given the covariates,

and define the propensity score variable as . Under the regularity condition that is absolutely continuous with respect to the Lebesgue measure for all , the structural treatment equation (1) can be innocuously normalized into a reduced form:

| (3) |

where

is a normalized error term, which by definition follows standard uniform distribution conditional on and thus is stochastically independent of and . This independence, which seems counterintuitive due to the functional dependence of on , will be lost if we consider , the counterfactual variable of when is set to . In general, the conditional distribution of given for depends on , and the unconditional distribution of is not uniform.

Example 2.

We suppose that is a scalar, and

Through a property of the bivariate normal distribution, we obtain and , where , , and denotes the standard normal CDF. Hence,

We observe that because , but because

and is not uniformly distributed because .

Given this independence, we may consider the reduced-form treatment error as the orthogonalized unobservables with respect to the observables, or the unobservables that are projected onto the subspace orthogonal to the one spanned by the observables. Another interpretation of is the ranking of the structural error conditional on . For instance, represents a typical individual whose value ranks above 20% individuals with identical covariates. enters the normalized crossing rule on the right, making an individual less likely to receive treatment; thus, it refers to resistance or distaste regarding the treatment in the MTE literature. If is large, then the propensity score should be large to induce the individual to participate in the treatment. However, an individual with a value close to zero will participate even though is small.

In the above instrument-free model, we define the MTE as the expected treatment effect conditional on the observed and unobserved characteristics:

captures all the treatment effect heterogeneity that is consequential for selection bias by conditioning on the orthogonal coordinates of the observable and unobservable dimensions. Given and , the treatment status is fixed and thus independent of the treatment effect . Similar to the MTE in the IV model, can be used as a building block for constructing the commonly used causal parameters, such as the average treatment effect (ATE), the treatment effect on the treated (TT), the treatment effect on the untreated (TUT), and the local average treatment effect (LATE), which can be expressed as weighted averages of , as follows:

Somewhat surprisingly, compared with the weights on the conventional MTE (e.g., Heckman and Vytlacil, 2005, Table IB), which are generally difficult to estimate in practice, the weights on are simpler, more intuitive, and easier to compute.

Heckman and Vytlacil (2001, 2005) considered defining the MTE in a similar nonseparable setting by conditioning on the structural error, and showed how to integrate to generate other causal parameters. However, such an MTE cannot be identified even in the presence of IVs. Our definition, based instead on the reduced-form error, can effectively exploit the statistical independence between the observed and unobserved variables to facilitate identification of the MTE. Zhou and Xie (2019) proposed to redefine the MTE by conditioning on rather than covariates, which is a more parsimonious specification of all the relevant treatment effect heterogeneity for selection bias. The extension of our identification and estimation procedures to this alternative definition is straightforward.

3 Identification

Our identification strategy relies on a linearity restriction on the potential outcome equations and a nonlinearity restriction on the propensity score function. The intuition is that the propensity score minus the linear outcome index will provide the excluded variation that perturbs treatment status, which plays the role of a continuous IV. Furthermore, to ensure the exogeneity of the excluded variation, it is necessary to impose a certain form of independence between the potential outcome residuals and covariates. We denote and , , as the regression functions and residuals of potential outcomes.

Assumption CMI (Conditional Mean Independence). Assume that with probability one.

Assumption CMI is standard in the MTE literature and commonly referred to as separability or additive separability assumption (e.g., Brinch et al., 2017; Mogstad et al., 2018; Zhou and Xie, 2019), because it’s a necessary and sufficient condition for the MTE to be additively separable into observables and unobservables (Zhou and Xie, 2019, p.3074):

Namely, under Assumption CMI, the shape of the MTE curve with respect to will not vary with covariates. Assumption CMI is partly justified by and , which come directly from the definition. On this basis, it is sufficient for Assumption CMI to hold if the conditional covariance of and is independent of , which is a key assumption in the heteroscedasticity-based identification method as well (Lewbel, 2012). Assumption CMI is implied by and much weaker than the full independence frequently imposed (often implicitly) in applied work, where is the structural treatment error in (1). In particular, Assumption CMI doesn’t rule out the marginal dependence of or on , as illustrated by Example 3.

Example 3.

Suppose that is a scalar and

In this setting, is correlated to with an unconstrained correlation coefficient. Through a property of the multivariate normal distribution, we obtain

| (4) |

where and . Hence,

By Example 2, we have , so that . Consequently,

and Assumption CMI holds. More generally, to allow the dependence of on as well, we can set

in place of (4), where is the conditional variance of given . Since is irrelevant to the variance of according to the above analysis, Assumption CMI still holds in the presence of such heteroscedastic .

For the purpose of identifying the MTE, we first establish the relationship between and observed regression functions. Under Assumption CMI, the observed regression functions for treated and untreated individuals are additively separable in a similar way:

| (5) | |||||

where

By multiplying both sides of the above equations with or in order to eliminate the denominators and then differentiating with respect to , we obtain

where denotes the derivative function of . Therefore, the MTE can be represented as

| (6) |

and the identification of it can be achieved by identifying and from the regression equation (5).

Recall that , where is a deterministic function, albeit unknown. Given that the argument of in (5) exhibits no variation apart from that generated by , it is impossible to distinguish between and in the absence of additional assumptions. For example, one may choose to let absorb and set , or vice versa. Functional form restrictions that differentiate and from one another can address this issue and facilitate the identification of both and . To this end, we impose linearity on and nonlinearity on .

Assumption L (Linearity). Assume that with probability one for some fixed and , .

Assumption L imposes a linear restriction on the potential outcome regression functions, which is a common practice in empirical studies. The linear restriction may seem too strong compared with that in the existing identification strategies, which allow highly flexible (especially nonparametric) specifications on the potential outcomes. However, for the estimation of MTE, the linear specification has been nearly universally adopted for tractability and interpretability (e.g., Kirkeboen et al., 2016; Kline and Walters, 2016; Brinch et al., 2017; Heckman et al., 2018; Mogstad et al., 2021; Aryal et al., 2022; Mountjoy, 2022). Noting that Assumption L implicitly requires the potential outcomes to be continuously distributed and supported on the entire real line, we would like to point out that our identification strategy can also be adapted to the case of limited valued outcomes by specifying linear latent index. A detailed discussion is left to Appendix C. With a little abuse of notation, we redenote to absorb the intercept so that

and thus that the regression equation (5) and the MTE become

| (7) | |||||

| (8) |

To present the nonlinearity assumption, we let be partitioned as , where and consist of covariates that are continuously and discretely distributed, respectively. We denote , , and as the -th coordinates of , , and , respectively. And we denote as a generic element in the support of ; likewise for , , and . The nonlinearity assumption requires the propensity score function to be nonlinear in given a benchmark value of . Without loss of generality, we suppose that the vector of zeros is in the support of and is the benchmark value. We denote for notational convenience, where the discrete covariates are equal to zero.

Assumption NL (Non-Linearity). Assume that and , , are differentiable with respect to , and that the derivative of satisfies the following NL2 when or NL1 when

– NL2 (): there exist two vectors , in the support of and two elements , in set such that (i) , (ii) , (iii) , (iv) , and (v) , where is the partial derivative of with respect to the -th argument.

– NL1 (): there exists a constant in the support of such that , where is the univariate derivative of .

Assumption NL requires the propensity score function to be nonlinear in continuous covariates in a generalized sense. The combination of Assumptions L and NL will enable identification based on functional form in a semiparametric version, which can realize the identification of linear coefficients by exploiting nonlinearity elsewhere in the model. The nonlinearity assumption has two different forms, depending on the number of continuous covariates. When two or more continuous covariates are available, Assumption NL2 will require some variation in to distinguish it from a linear-index function. Concretely, NL2 will not hold if for a smooth function , because in this case, both sides of the inequality in (v) are equal to . Otherwise, however, it is difficult to construct examples that violate (v). In practice, (v) can be fulfilled even when is single-index specified, if interaction or/and quadratic terms are added, as shown in Example 4.

Example 4.

Consider the case of two continuous covariates. Suppose that for a smooth function , or . Then, we obtain for the interaction case, or for the quadratic case. In both cases, Assumption NL2.(v) will generally hold for and satisfying .

Assumption NL2 requires the existence of at least two continuous covariates. However, in empirical studies based on survey data, most of the demographic characteristics are documented as discrete or categorical variables, such as age, gender, race, marital status, educational attainment, and so on. Therefore, we also impose Assumption NL1, as a supplement to NL2, to take into account the situation in which only one continuous covariate is available. NL1 requires to have a stationary point, which implies that is necessarily nonlinear. NL1 will hold if the probability of receiving treatment is unaffected by some local change in the continuous covariate. It’s a differential version of the local irrelevance assumption imposed in nonseparable models for attaining point identification (e.g., Torgovitsky, 2015; D’Haultfœuille et al., 2021). NL1 can be extended to the case of no continuous covariate, as discussed in Appendix B. However, using only discrete covariates provides little identifying variation, which may lead to poor performance in the subsequent model estimation (Garlick and Hyman, 2022). Hence, we focus on the case of at least one continuous covariate.

We note that Assumption NL doesn’t exclude the widely specified linear-index treatment equation, as long as the structural error is not independent of covariates.

Example 5.

Suppose the treatment status is determined by a linear-index threshold crossing rule:

where is not independent of . Namely, suppose in the structural treatment equation (1). Consider a multiplicatively heteroscedastic such that , where is a positive function and is an idiosyncratic error independent of . The normalization derives

that is, the reduced-form error in (3) is equal to , and the propensity score function is equal to . In general, is a nonlinear function. Another specification we consider is a linear-index model with endogeneity in a certain component of , in which . Given that is generally highly nonlinear in , Assumption NL1 holds straightforwardly, and NL2 holds with .

Unlike in the binary response model, the ubiquitous heteroscedasticity and endogeneity benefit our results while inducing no trouble, because the identification of the MTE is irrelevant to the structural coefficients in . Our MTE is defined by the reduced-form treatment error; thus, all we need from the treatment equation is the propensity score, which has a reduced-form nature.

Assumption NL ensures identification of coefficients of continuous covariates. In order to identify coefficients of discrete covariates, we impose a mild support condition. For any , we denote as the vector with the -th coordinate being equal to and all the other coordinates being equal to zero.

Assumption S (Support). For each , assume for some in the support of that there exists in the support of such that is in the support of .

A sufficient condition for Assumption S to hold is that has a full support on the unit interval, or, more generally, that the support of is overlapped with those of for all . Otherwise, we have to find an for each such that the support of is overlapped with that of . The following theorem establishes our main identification result.

Theorem 1.

If Assumptions CMI, L, NL, and S hold, then and at all in the support of the propensity score are identified for .

The proof of Theorem 1 is grounded on the observed regression functions (7), which summarize the information from the data. As is unknown, we need to eliminate it through some subtraction to realize the identification of . When only one continuous covariate exists, the subtraction can be carried out locally around the point satisfying Assumption NL1. Otherwise, our strategy is to perturb two continuous covariates, such as and , in such a way that remains unchanged. Specifically, for each group , we increase by a small and simultaneously change by multiplied by , resulting in a perturbed value of the regression function. Subtracting the perturbed regression function from the original (7) will cancel out due to the equality of , giving rise to an equation for and . Note that the multiplier is the partial derivative of with respect to if we view as an implicit function of the other continuous covariates by equating to a constant. Accordingly, Assumption NL2.(v) implies two linearly independent equations, ensuring an exact solution (i.e., identification) of and . The detailed proof of Theorem 1 is presented in Appendix A. It is worth mentioning that our strategy naturally features overidentification in the sense that generally more than one pair of points in the support of satisfy Assumption NL2 or more than one point satisfies NL1, because is continuously distributed. Moreover, there may be more than one value of that satisfies Assumptions NL and S. Consequently, the identification can also be represented as the average of solutions over all points of satisfying Assumption NL and over all values of satisfying Assumptions NL and S.

According to (8), Theorem 1 implies the identification of MTE in the absence of IVs. Specifically, This result allows practitioners to include all the relevant observed characteristics into both treatment and outcome equations, without imposing any exclusion or full independence assumptions. Under Theorem 1, the conventional causal parameters can also be identified without instruments, provided that the support of contains 0 and/or 1 (which implies identifiability of and/or ):

| (9) | |||||

4 Estimation

Our identification strategy for the MTE implies a separate estimation procedure that works with the partially linear regression (7) by each treatment status. In particular, we recommend the kernel-weighted pairwise difference estimation method proposed by Ahn and Powell (1993) because of its computational simplicity, well-established asymptotic properties, and, most importantly, its capacity to effectively leverage the overidentifying information that underlies the data. Suppose that is a random sample of observations on . In the first step, we estimate the nonparametrically specified propensity score using the kernel method, that is,

| (10) |

and

| (11) |

where , , are bandwidths and is a univariate kernel function. If the dimension of is large, then a smoothed kernel for discrete covariates (Racine and Li, 2004) can be applied as a substitute for the indicator function, to alleviate the potential problem of inadequate observations in each data cell divided by the support of . If the number of continuous covariates is not small either, then the well-known curse of dimensionality will appear, and a linear-index specification may thus be practically more relevant when modeling the propensity score. The index should include a series of interaction terms and quadratic or even higher-order terms of continuous covariates to supply sufficient nonlinear variation. The linear-index propensity score can be estimated by parametric probit/logit or semiparametric methods (e.g., Powell et al., 1989; Ichimura, 1993; Klein and Spady, 1993; Lewbel, 2000), depending on the distributional assumption on the error term.

In the second step, we estimate for each through a weighted pairwise difference least squares regression:

| (12) | |||||

where the weights are given by

with and being the bandwidth and kernel function, respectively, which can be different from those in the first step. Given , the nonlinear function and its derivative function at any in the support of can be estimated by the local linear method, namely,

where

Finally, we plug , , , and into identification equations (8) and (9) to estimate the MTE and other causal parameters, as follows:

The kernel-weighted pairwise difference estimator has the advantage of having a closed-form expression, so we need not solve any formidable optimization problems. However, it faces the challenging problem of bandwidth selection, similar to most semiparametric estimation methods. Alternatively, we can consider imposing a parametric specification on the unobservable heterogeneity of the MTE such that for finite dimensional , e.g., the polynomial specification that or the normal polynomial specification that . In the latter, setting will match Heckman’s normal sample selection model. Under the parametric restriction, the second step becomes a global regression with parameterized selection bias correction term for each ; therefore, the tuning of and is circumvented. Another advantage of a parametrically specified second step is the lower computational burden compared with that of the pairwise difference estimator defined by double summation which requires a squared amount of calculation (Pan and Xie, 2023).

Notably, the local IV (LIV) estimation procedure can be adapted to our model as well, though it needs no IV. Unlike the separate estimation procedure, the adapted LIV approach is based on a whole-sample regression:

| (13) | |||||

where

Note that since

the MTE is equal to the derivative of the regression function (13) with respect to . As a consequence, it would be sufficient to estimate for and functions and . Given the estimated propensity score, we can likewise use the pairwise difference principle to obtain

where is an estimator for according to (13). Given and , we apply the local linear method as well, yielding

where is an estimator for . Then, we construct the instrument-free LIV estimators for the MTE and other treatment parameters as

This adapted LIV approach is computationally more convenient than the separate estimation procedure. Furthermore, if we accept a parametric specification on and thus on , the adapted LIV approach can be implemented through a parametric least squares regression.

In summary, the identification based on functional form can accommodate most of the frequently-used estimation procedures in a typical IV model.

5 Empirical application

In this section, we revisit the heterogeneous long-term effects of the Head Start program on educational attainment and labor market outcomes by using the proposed MTE method. Head Start, which began in 1965, is one of the largest early child care programs in the United States. The program is targeted at children from low-income families and can provide such children with preschool, health, and nutritional services. Currently, Head Start serves more than a million children, at an annual cost of 10 billion dollars. As a federally funded large-scale program, Head Start has encountered concerns about its effectiveness and thus spawned numerous studies to evaluate its educational and economic effects on the participants. Early studies focused mainly on short-term benefits and found that participation in Head Start is associated with improved test scores and reduced grade repetition at the beginning of primary schooling. However, such benefits seem to fade out during the upper primary grades (Currie, 2001). Garces et al. (2002) provided the first empirical evidence for the longer-term effects of Head Start on high school completion, college attendance, earnings, and crime. Since then, the literature has shifted its focus to the medium- and long-term or intergenerational (e.g., Barr and Gibbs, 2022) gains of Head Start enrollment.

However, despite the enormous policy interest, evidence for the long-term effectiveness of Head Start is not unified, as summarized in Figure 1 in De Haan and Leuven (2020). The lack of consistency between these results may be due to differences in the population or problems related to the empirical approach (Elango et al., 2016). For example, the LATE obtained by the family fixed-effects approach (e.g., Garces et al., 2002; Deming, 2009; Bauer and Schanzenbach, 2016) relies on families that differ from other Head Start families in size and in other observable dimensions. Moreover, the sibling comparison design underlying that approach is limited by endogeneity concerns. To reconcile the divergent evidence, De Haan and Leuven (2020) evaluated the heterogeneous long-term effects of Head Start by using a distributional treatment effect approach that relies on two weak stochastic dominance assumptions, instead of restrictive IV assumptions. The authors found substantial heterogeneity in the returns to Head Start. Specifically, they found that the program has positive and statistically significant effects on education and wage income for the lower end of the distribution of participants.

To produce a complete picture, we assess the causal heterogeneity of Head Start from another perspective; namely, we examine the effects across different levels of unobserved resistance to participation in the program, rather than across the distribution of long-term outcomes. By relating the treatment effects to participation decision, the MTE is informative about the nature of selection into treatment and allows the computation of various causal parameters, such as the ATE, TT, and TUT. Another feature of the MTE is that its description of the effect heterogeneity is irrelevant to the specific outcome variable. Instead, the MTE curve depicts the treatment effects on the unobserved determinants of the treatment. This is another advantage of the MTE in the case of multiple outcomes of interest, as in this application, where the interpretation of the heterogeneity is kept consistent across different outcomes. Finally, in contrast to the distributional treatment effects partially identified by De Haan and Leuven (2020), our MTE method can achieve point identification and estimation.

We use the data provided by De Haan and Leuven (2020), which are from Round 16 (1994 survey year) of the National Longitudinal Study of Youth 1979 (NLSY79). The sample is restricted to the 1960–64 cohorts, because the first cohort eligible for Head Start was born in 1960. In addition, the sample excludes individuals who participated in any preschool programs other than Head Start, implying that we estimate the returns to Head Start relative to informal care. The treatment variable is whether the respondents attended the Head Start program as a child, and the outcome variables are the respondents’ highest years of education and logarithmic yearly wage incomes in their early 30s (they were between 30 and 34 years old in 1994). The covariates are age, gender, race, parental education, and family income in 1978. We refer the reader to the original paper for additional details on the data, sample, and variables. For our analysis, since we apply nonparametric estimation in the first step, we recode parental education into two categories to reduce the number of the data cells or subsamples split by different values of discrete covariates. Specifically, we redefine parental education as a binary variable equal to one if at least one parent went to college or zero if both parents are high school graduates or lower.

We first verify the credibility of Assumptions S and NL for the Head Start data. Table 1 lists the support of the nonparametrically estimated propensity score for all data cells that are split by different values of discrete covariates. We find that the 34th data cell has a full support on the unit interval. Therefore, Assumption S must hold if we choose the corresponding values (32 years old, female, black race, parental education being college or higher) as the benchmark. We then verify Assumption NL for the 34th data cell. Note that only one continuous covariate exists in the data, that is, family income in 1978. Hence, we invoke Assumption NL1, which requires the propensity score function to have at least one stationary point. The left panel of Figure 1 plots the estimated propensity score as a univariate function of the continuous covariate for the 34th data cell. We find that the propensity score is highly nonlinear and nonmonotonic in family income in 1978, possibly owing to the parents’ various self-selection on participation in the program. So Assumption NL1 is fulfilled.

Furthermore, the largest data cell (i.e., the 29th data cell with 175 observations) can be considered an alternative candidate for the benchmark (32 years old, male, white race, parental education being high school or lower). Nevertheless, closer examination of Assumption S is necessary for this data cell, as the support of the propensity score is now limited to , which is totally separate from the supports for some other data cells. To this end, we need to find a distinct value of each discrete variable such that the propensity score has overlapping support with . For example, if the value of age is altered from 32 to 30 (or 31, 33, 34) while the other discrete variables remain unaltered, we will go to the 5th (or 17th, 41st, 53rd) data cell with support (or ), which is overlapped with as required. Altering the values of gender, race, and parental education leads to the 35th, 25th (or 27th), and 30th data cells with supports , (or ), and , respectively, which all meet the overlapping condition as well. Therefore, Assumption S holds for the largest data cell. The validation of Assumption NL1 for this data cell can be demonstrated as well by the existence of stationary points of the estimated propensity score function, as illustrated in the right panel of Figure 1.

We next turn to the estimation. In the first step, we nonparametrically regress the treatment variable (Head Start) on all of the covariates to generate propensity scores for the sample, by employing the kernel estimation method in (10) and (11) with the rule-of-thumb bandwidth. Figure 2 plots the frequency distribution of the estimated propensity score by treatment status. The figure shows that the propensity score in our sample follows a bimodal distribution, with the main peak being at approximately 0.5 for the participants and approximately 0.1 for the nonparticipants. To reduce the potential impact of outliers, we trim the observations of the 1% smallest and 1% largest propensity scores in the following steps. This trimming leads to a common support ranging from 0 to 0.6, as indicated by the two dashed vertical lines. Given the estimated propensity score, we then estimate in sequence the linear coeffecients, MTE, and summary treatment effect measures including ATE, TT, and TUT. We adopt the separate estimation procedure, since it generally exploits more identifying information behind the data than the LIV procedure (Brinch et al., 2017).

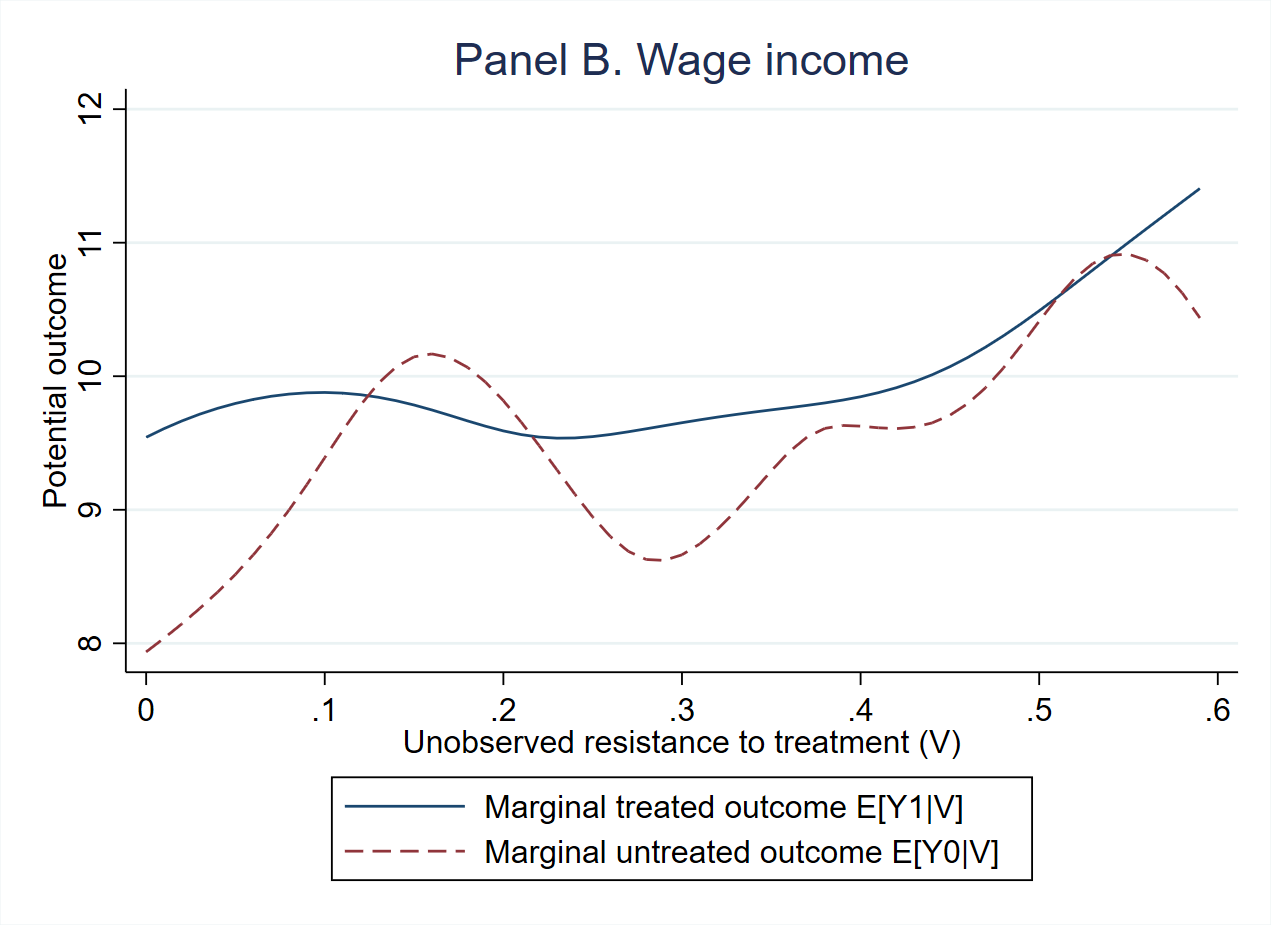

Figure 3 shows the estimated MTE under Heckman’s normal specification that for , where is the CDF of normal distribution, which can be derived by assuming that follows a bivariate normal distribution with covariance . The MTE curves, evaluated at the mean values of , relate the unobserved component of the treatment effect to the unobserved component of the treatment choice. A high value of implies a low probability of treatment; thus, we interpret as resistance to participation in Head Start. The MTE curve for wage income plotted in panel B decreases with resistance, revealing a pattern of selection on gains, as expected. In other words, based on the unobserved characteristics, the children who were most likely to enroll in Head Start benefitted the most from the program in terms of their labor market outcome. However, when the outcome is educational attainment in panel A, the positive slope of the MTE curve points to a pattern of reverse selection on gains. In consequence, the TUT exceeds the ATE, which in turn exceeds the TT. The same pattern was observed by Cornelissen et al. (2018) when estimating school-readiness return to a preschool program in Germany. This phenomenon may be attributed partly to the fact that parents have their own objectives in deciding childcare arrangements. Nevertheless, the disagreement between selection patterns for the two outcomes raises concern about the possible functional form misspecification of the normal MTE, which is strictly restricted to be monotonic in the resistance to treatment. Therefore, we consider a nonparametric specification for and implement a semiparametric separate estimation procedure.

Figure 4 plots the semiparametric MTE curves for education and labor market outcomes in panels A and B, respectively. Under the flexible specification, the MTE curves are no longer monotonic, and the clear pattern of selection disappears. In the case of education outcome, the curve is initially flat, then becomes an inverted U shape, with a statistically significant positive effect appearing in the region of the peak, corresponding to the children with resistance to treatment ranging from 0.32 to 0.42. The complex feature of this curve is hardly captured by any parsimonious parametric function. Similar observations are seen in the case of wage income, in which the MTE curve is nonmonotonic, with a complex shape, and significantly greater than zero for less than 10% of the children who were most likely to attend childcare early. A comparison of the summary treatment effect measures indicates weak selection on gains for both outcomes. Table 2 reports the semiparametric estimates for the effects of the covariates on potential outcomes and their difference based on (12). Columns 3 and 6 show that girls gain significantly more returns to Head Start attendance than boys. However, other than gender, no substantial observable heterogeneity exists in the treatment effects of the program, though parental education and family income in 1978 have a significantly positive effect on the respondents’ potential education and potential labor market outcomes in both the treated and untreated states.

Based on and reported in Table 2, and the separate estimates of for under the semiparametric specification, we can estimate the marginal structural functions

for potential outcomes and , which we plot in Figure 5. Panel A sheds light on the significantly positive effect of Head Start on education for the respondents with medium-level resistance to treatment, revealing that their gains from the program are driven mainly by the remarkably low educational attainment when untreated. Panel B leads to similar results for wage income, where significant gain emerges for low values of . Moreover, the relatively flat curve of potential wage income in the treated state implies that early childcare attendance serves as an equalizer that diminishes the intergroup difference in the labor market outcome.

In addition, we can semiparametrically estimate conditional expectations of the observed responses given the unobserved determinants of treatment choice, such as the marginal probability of participation

which mirrors the distribution of the propensity score, and the marginal observed outcome

where the last equality follows from the independence of and and from the conditional mean independence of and given . Plugging in proper estimates of each component in the above equations, we obtain the estimated marginal response functions and plot them in Figure 6. In particular, the marginal observed outcome curves relate the individuals with significant positive returns (with medium in Panel A and small in Panel B) to those with low education and low wage income, thereby bridging our results on the MTE and those of De Haan and Leuven (2020) on the distributional treatment effect.

6 Conclusion

We propose a novel method for defining, identifying, and estimating the MTE in the absence of IVs. Our MTE model allows all the covariates to be correlated to the structural treatment error. In this model, we define the MTE based on a reduced-form treatment error that (i) is uniformly distributed on the unit interval, (ii) is statistically independent of covariates, and (iii) has several economic meanings. The independence property facilitates the identification of our defined MTE. We provide sufficient conditions under which the MTE can be point identified based on functional form. The conditions are standard in a certain sense. The conditional mean independence assumption is equivalent to the separability assumption commonly imposed in the MTE literature, and is implied by and much weaker than the full independence assumption. The linearity and nonlinearity assumptions are the foundation of identification based on functional form, and can make sense to most empirical studies. We prove the identification by using a construction method. Our identification strategy allows the adaptation of most of the existing estimation procedures for conventional IV-MTE models, such as separate estimation, LIV estimation, parametric estimation, and semiparametric estimation. For the empirical application, we evaluate the MTE of the Head Start program on long-term education and labor market outcomes, in which an IV for Head Start participation is difficult to acquire. We find significant positive effects for individuals with medium-level or low resistance to treatment, and substantial heterogeneity exists.

References

- Ahn and Powell (1993) Ahn, H. and J. L. Powell (1993). Semiparametric estimation of censored selection models with a nonparametric selection mechanism. Journal of Econometrics 58(1-2), 3–29.

- Aryal et al. (2022) Aryal, G., M. Bhuller, and F. Lange (2022). Signaling and employer learning with instruments. American Economic Review 112(5), 1669–1702.

- Barr and Gibbs (2022) Barr, A. and C. R. Gibbs (2022). Breaking the cycle? intergenerational effects of an antipoverty program in early childhood. Journal of Political Economy 130(12), 3253–3285.

- Bauer and Schanzenbach (2016) Bauer, L. and D. W. Schanzenbach (2016). The Long-Term Impact of the Head Start Program. Washington DC: Hamilton Project.

- Brinch et al. (2017) Brinch, C. N., M. Mogstad, and M. Wiswall (2017). Beyond LATE with a discrete instrument. Journal of Political Economy 125(4), 985–1039.

- Chamberlain (1986) Chamberlain, G. (1986). Asymptotic efficiency in semi-parametric models with censoring. Journal of Econometrics 32(2), 189–218.

- Conley et al. (2012) Conley, T. G., C. B. Hansen, and P. E. Rossi (2012). Plausibly exogenous. Review of Economics and Statistics 94(1), 260–272.

- Cornelissen et al. (2018) Cornelissen, T., C. Dustmann, A. Raute, and U. Schönberg (2018). Who benefits from universal child care? estimating marginal returns to early child care attendance. Journal of Political Economy 126(6), 2356–2409.

- Currie (2001) Currie, J. (2001). Early childhood education programs. Journal of Economic Perspectives 15(2), 213–238.

- De Haan and Leuven (2020) De Haan, M. and E. Leuven (2020). Head start and the distribution of long-term education and labor market outcomes. Journal of Labor Economics 38(3), 727–765.

- Deming (2009) Deming, D. (2009). Early childhood intervention and life-cycle skill development: Evidence from head start. American Economic Journal: Applied Economics 1(3), 111–134.

- D’Haultfœuille et al. (2021) D’Haultfœuille, X., S. Hoderlein, and Y. Sasaki (2021). Testing and relaxing the exclusion restriction in the control function approach. Journal of Econometrics (in press). Available at https://doi.org/10.1016/j.jeconom.2020.09.012.

- D’Haultfœuille and Maurel (2013) D’Haultfœuille, X. and A. Maurel (2013). Another look at the identification at infinity of sample selection models. Econometric Theory 29(1), 213–224.

- Dong (2010) Dong, Y. (2010). Endogenous regressor binary choice models without instruments, with an application to migration. Economics Letters 107(1), 33–35.

- Elango et al. (2016) Elango, S., J. L. García, J. J. Heckman, and A. Hojman (2016). Early childhood education. In R. A. Moffitt (Ed.), Economics of Means-Tested Transfer Programs in the United States, Volume 2. Chicago: University of Chicago Press.

- Escanciano et al. (2016) Escanciano, J. C., D. Jacho-Chávez, and A. Lewbel (2016). Identification and estimation of semiparametric two-step models. Quantitative Economics 7(2), 561–589.

- Fisher (1966) Fisher, F. M. (1966). The Identification Problem in Econometrics. McGraw-Hill.

- Garces et al. (2002) Garces, E., D. Thomas, and J. Currie (2002). Longer-term effects of head start. American economic review 92(4), 999–1012.

- Garlick and Hyman (2022) Garlick, R. and J. Hyman (2022). Quasi-experimental evaluation of alternative sample selection corrections. Journal of Business & Economic Statistics 40(3), 950–964.

- Heckman (1979) Heckman, J. J. (1979). Sample selection bias as a specification error. Econometrica 47(1), 153–61.

- Heckman et al. (2018) Heckman, J. J., J. E. Humphries, and G. Veramendi (2018). Returns to education: The causal effects of education on earnings, health, and smoking. Journal of Political Economy 126(S1), S197–S246.

- Heckman and Vytlacil (1999) Heckman, J. J. and E. Vytlacil (1999). Local instrumental variables and latent variable models for identifying and bounding treatment effects. Proceedings of the national Academy of Sciences 96(8), 4730–4734.

- Heckman and Vytlacil (2001) Heckman, J. J. and E. Vytlacil (2001). Local instrumental variables. In C. Hsiao, K. Morimune, and J. L. Powell (Eds.), Nonlinear Statistical Modeling: Proceedings of the Thirteenth International Symposium in Economic Theory and Econometrics: Essays in Honor of Takeshi Amemiya, Chapter 1, pp. 1–46. Cambridge, U.K.: Cambridge University Press.

- Heckman and Vytlacil (2005) Heckman, J. J. and E. Vytlacil (2005). Structural equations, treatment effects, and econometric policy evaluation. Econometrica 73(3), 669–738.

- Honoré and Hu (2020) Honoré, B. E. and L. Hu (2020). Selection without exclusion. Econometrica 88(3), 1007–1029.

- Honoré and Hu (2022) Honoré, B. E. and L. Hu (2022). Sample selection models without exclusion restrictions: Parameter heterogeneity and partial identification. Journal of Econometrics (in press). Available at https://doi.org/10.1016/j.jeconom.2021.07.017.

- Ichimura (1993) Ichimura, H. (1993). Semiparametric least squares (SLS) and weighted SLS estimation of single-index models. Journal of Econometrics 58, 71–120.

- Jones (2015) Jones, D. (2015). The economics of exclusion restrictions in IV models. NBER Working Paper (No.21391). Available at https://doi.org/10.3386/w21391.

- Kédagni and Mourifié (2020) Kédagni, D. and I. Mourifié (2020). Generalized instrumental inequalities: testing the instrumental variable independence assumption. Biometrika 107(3), 661–675.

- Khan and Tamer (2010) Khan, S. and E. Tamer (2010). Irregular identification, support conditions, and inverse weight estimation. Econometrica 78(6), 2021–2042.

- Kirkeboen et al. (2016) Kirkeboen, L. J., E. Leuven, and M. Mogstad (2016). Field of study, earnings, and self-selection. The Quarterly Journal of Economics 131(3), 1057–1111.

- Klein and Vella (2010) Klein, R. and F. Vella (2010). Estimating a class of triangular simultaneous equations models without exclusion restrictions. Journal of Econometrics 154(2), 154–164.

- Klein and Spady (1993) Klein, R. W. and R. H. Spady (1993). An efficient semiparametric estimator for binary response models. Econometrica 61, 387–421.

- Kline and Walters (2016) Kline, P. and C. R. Walters (2016). Evaluating public programs with close substitutes: The case of Head Start. The Quarterly Journal of Economics 131(4), 1795–1848.

- Lewbel (1997) Lewbel, A. (1997). Constructing instruments for regressions with measurement error when no additional data are available, with an ppplication to patents and r&d. Econometrica 65(5), 1201–1214.

- Lewbel (2000) Lewbel, A. (2000). Semiparametric qualitative response model estimation with unknown heteroscedasticity or instrumental variables. Journal of Econometrics 97(1), 145–177.

- Lewbel (2007) Lewbel, A. (2007). Endogenous selection or treatment model estimation. Journal of Econometrics 141(2), 777–806.

- Lewbel (2012) Lewbel, A. (2012). Using heteroscedasticity to identify and estimate mismeasured and endogenous regressor models. Journal of Business & Economic Statistics 30(1), 67–80.

- Lewbel (2018) Lewbel, A. (2018). Identification and estimation using heteroscedasticity without instruments: The binary endogenous regressor case. Economics Letters 165, 10–12.

- Lewbel (2019) Lewbel, A. (2019). The identification zoo: Meanings of identification in econometrics. Journal of Economic Literature 57(4), 835–903.

- Mogstad et al. (2018) Mogstad, M., A. Santos, and A. Torgovitsky (2018). Using instrumental variables for inference about policy relevant treatment parameters. Econometrica 86(5), 1589–1619.

- Mogstad et al. (2021) Mogstad, M., A. Torgovitsky, and C. R. Walters (2021). The causal interpretation of two-stage least squares with multiple instrumental variables. American Economic Review 111(11), 3663–98.

- Mountjoy (2022) Mountjoy, J. (2022). Community colleges and upward mobility. American Economic Review 112(8), 2580–2630.

- Mourifié et al. (2020) Mourifié, I., M. Henry, and R. Méango (2020). Sharp bounds and testability of a Roy model of STEM major choices. Journal of Political Economy 128(8), 3220–3283.

- Pan and Xie (2023) Pan, Z. and J. Xie (2023). -penalized pairwise difference estimation for a high-dimensional censored regression model. Journal of Business & Economic Statistics 41(2), 283–297.

- Pan et al. (2022) Pan, Z., X. Zhou, and Y. Zhou (2022). Semiparametric estimation of a censored regression model subject to nonparametric sample selection. Journal of Business & Economic Statistics 40(1), 141–151.

- Powell et al. (1989) Powell, J. L., J. H. Stock, and T. M. Stoker (1989). Semiparametric estimation of index coefficients. Econometrica 57(6), 1403–1430.

- Racine and Li (2004) Racine, J. and Q. Li (2004). Nonparametric estimation of regression functions with both categorical and continuous data. Journal of Econometrics 119(1), 99–130.

- Roy (1951) Roy, A. D. (1951). Some thoughts on the distribution of earnings. Oxford Economic Papers 3(2), 135–146.

- Rubin (1973a) Rubin, D. B. (1973a). Matching to remove bias in observational studies. Biometrics 29(1), 159–183.

- Rubin (1973b) Rubin, D. B. (1973b). The use of matched sampling and regression adjustment to remove bias in observational studies. Biometrics 29(1), 184–203.

- Torgovitsky (2015) Torgovitsky, A. (2015). Identification of nonseparable models using instruments with small support. Econometrica 83(3), 1185–1197.

- van den Berg (2007) van den Berg, G. J. (2007). An economic analysis of exclusion restrictions for instrumental variable estimation. IZA Discussion Paper (No. 2585). Available at SSRN: https://ssrn.com/abstract=964965 or https://doi.org/10.2139/ssrn.964965.

- Van Kippersluis and Rietveld (2018) Van Kippersluis, H. and C. A. Rietveld (2018). Beyond plausibly exogenous. The Econometrics Journal 21(3), 316–331.

- Vytlacil (2002) Vytlacil, E. (2002). Independence, monotonicity, and latent index models: An equivalence result. Econometrica 70(1), 331–341.

- Vytlacil (2006) Vytlacil, E. (2006). A note on additive separability and latent index models of binary choice: Representation results. Oxford Bulletin of Economics and Statistics 68(4), 515–518.

- Westphal et al. (2022) Westphal, M., D. A. Kamhöfer, and H. Schmitz (2022). Marginal college wage premiums under selection into employment. The Economic Journal 132(646), 2231–2272.

- Zhou and Xie (2019) Zhou, X. and Y. Xie (2019). Marginal treatment effects from a propensity score perspective. Journal of Political Economy 127(6), 3070–3084.

| Data cell | Age | Gender | Race | Parental education | Observations | Support of |

|---|---|---|---|---|---|---|

| 1 | 30 | Male | Hispanic | High school or lower | 70 | [0, 0.208] |

| 2 | 30 | Male | Hispanic | College or higher | 10 | [0, 0.288] |

| 3 | 30 | Male | Black | High school or lower | 93 | [0, 0.588] |

| 4 | 30 | Male | Black | College or higher | 21 | [0, 0.813] |

| 5 | 30 | Male | White | High school or lower | 147 | [0, 0.177] |

| 6 | 30 | Male | White | College or higher | 78 | [0, 0.208] |

| 7 | 30 | Female | Hispanic | High school or lower | 65 | [0, 0.434] |

| 8 | 30 | Female | Hispanic | College or higher | 11 | 0 |

| 9 | 30 | Female | Black | High school or lower | 100 | [0, 0.671] |

| 10 | 30 | Female | Black | College or higher | 20 | [0, 0.641] |

| 11 | 30 | Female | White | High school or lower | 125 | [0, 0.759] |

| 12 | 30 | Female | White | College or higher | 73 | [0, 0.179] |

| 13 | 31 | Male | Hispanic | High school or lower | 87 | [0, 0.374] |

| 14 | 31 | Male | Hispanic | College or higher | 19 | [0, 0.442] |

| 15 | 31 | Male | Black | High school or lower | 112 | [0.030, 0.548] |

| 16 | 31 | Male | Black | College or higher | 24 | [0, 0.531] |

| 17 | 31 | Male | White | High school or lower | 148 | [0, 0.242] |

| 18 | 31 | Male | White | College or higher | 92 | [0, 0.125] |

| 19 | 31 | Female | Hispanic | High school or lower | 97 | [0, 0.737] |

| 20 | 31 | Female | Hispanic | College or higher | 14 | [0, 0.788] |

| 21 | 31 | Female | Black | High school or lower | 121 | [0.249, 0.817] |

| 22 | 31 | Female | Black | College or higher | 24 | [0, 0.639] |

| 23 | 31 | Female | White | High school or lower | 149 | [0, 0.178] |

| 24 | 31 | Female | White | College or higher | 75 | [0, 0.221] |

| 25 | 32 | Male | Hispanic | High school or lower | 65 | [0, 0.771] |

| 26 | 32 | Male | Hispanic | College or higher | 13 | 0 |

| 27 | 32 | Male | Black | High school or lower | 133 | [0, 0.623] |

| 28 | 32 | Male | Black | College or higher | 23 | [0, 0.949] |

| 29 | 32 | Male | White | High school or lower | 175 | [0, 0.194] |

| 30 | 32 | Male | White | College or higher | 90 | [0, 0.061] |

| Data cell | Age | Gender | Race | Parental education | Observations | Support of |

|---|---|---|---|---|---|---|

| 31 | 32 | Female | Hispanic | High school or lower | 94 | [0, 0.642] |

| 32 | 32 | Female | Hispanic | College or higher | 17 | [0, 0.351] |

| 33 | 32 | Female | Black | High school or lower | 130 | [0.021, 0.999] |

| 34 | 32 | Female | Black | College or higher | 34 | [0, 1] |

| 35 | 32 | Female | White | High school or lower | 155 | [0, 0.526] |

| 36 | 32 | Female | White | College or higher | 81 | [0, 0.118] |

| 37 | 33 | Male | Hispanic | High school or lower | 72 | [0, 0.567] |

| 38 | 33 | Male | Hispanic | College or higher | 15 | [0, 0.743] |

| 39 | 33 | Male | Black | High school or lower | 123 | [0, 0.818] |

| 40 | 33 | Male | Black | College or higher | 25 | [0, 0.596] |

| 41 | 33 | Male | White | High school or lower | 141 | [0, 0.154] |

| 42 | 33 | Male | White | College or higher | 95 | [0, 0.133] |

| 43 | 33 | Female | Hispanic | High school or lower | 83 | [0, 0.481] |

| 44 | 33 | Female | Hispanic | College or higher | 10 | [0, 0.447] |

| 45 | 33 | Female | Black | High school or lower | 125 | [0.258, 0.628] |

| 46 | 33 | Female | Black | College or higher | 27 | [0, 0.925] |

| 47 | 33 | Female | White | High school or lower | 152 | [0, 0.140] |

| 48 | 33 | Female | White | College or higher | 93 | [0, 0.350] |

| 49 | 34 | Male | Hispanic | High school or lower | 85 | [0, 0.864] |

| 50 | 34 | Male | Hispanic | College or higher | 16 | [0, 0.673] |

| 51 | 34 | Male | Black | High school or lower | 101 | [0.176, 1] |

| 52 | 34 | Male | Black | College or higher | 27 | [0, 0.790] |

| 53 | 34 | Male | White | High school or lower | 162 | [0, 0.204] |

| 54 | 34 | Male | White | College or higher | 85 | [0, 0.057] |

| 55 | 34 | Female | Hispanic | High school or lower | 73 | [0, 0.443] |

| 56 | 34 | Female | Hispanic | College or higher | 11 | 0 |

| 57 | 34 | Female | Black | High school or lower | 109 | [0.271, 0.586] |

| 58 | 34 | Female | Black | College or higher | 24 | [0, 0.856] |

| 59 | 34 | Female | White | High school or lower | 143 | [0, 0.5] |

| 60 | 34 | Female | White | College or higher | 76 | [0, 0.055] |

| Years of education | Wage income | ||||||

|---|---|---|---|---|---|---|---|

| Treated | Untreated | Difference | Treated | Untreated | Difference | ||

| (1) | (2) | (3) | (4) | (5) | (6) | ||

| Age | 0.000 | 0.000 | 0.000 | 0.005 | 0.007 | -0.002 | |

| (0.056) | (0.027) | (0.062) | (0.030) | (0.014) | (0.033) | ||

| Female | 0.558∗∗∗ | 0.254∗∗∗ | 0.304∗ | -0.321∗∗∗ | -0.503∗∗∗ | 0.182∗∗ | |

| (0.159) | (0.078) | (0.174) | (0.083) | (0.039) | (0.091) | ||

| Black | -0.378 | -0.226 | -0.152 | -0.015 | -0.404∗∗∗ | 0.389 | |

| (0.518) | (0.227) | (0.559) | (0.216) | (0.145) | (0.263) | ||

| Hispanic | -0.642 | -0.403∗∗∗ | -0.239 | -0.097 | -0.000 | -0.097 | |

| (0.408) | (0.145) | (0.430) | (0.169) | (0.068) | (0.183) | ||

| Parental education | 1.764∗∗∗ | 1.796∗∗∗ | -0.032 | 0.247∗∗ | 0.197∗∗∗ | 0.050 | |

| (0.254) | (0.108) | (0.271) | (0.096) | (0.050) | (0.107) | ||

| Family income 1978 | 0.053∗∗∗ | 0.047∗∗∗ | 0.006 | 0.021∗∗∗ | 0.014∗∗∗ | 0.007 | |

| (0.011) | (0.004) | (0.012) | (0.005) | (0.002) | (0.005) | ||

| ATE | 0.421 | 0.457∗∗ | |||||

| (0.492) | (0.228) | ||||||

| TT | 0.597 | 0.543 | |||||

| (1.047) | (0.484) | ||||||

| TUT | 0.376 | 0.438∗ | |||||

| (0.579) | (0.266) | ||||||

| Sample size | 4,554 | 3,589 | |||||

Notes: Columns 1 and 4 display the estimates of coefficients in the treated

state ( in Equation [8]), and columns 2 and 5

display the estimates of coefficients in the untreated state ().

Columns 3 and 6 display the difference in the estimates between the treated

and untreated states (), as well as the summary

causal parameters (i.e., ATE, TT, and TUT). Bootstrapped standard errors

from 1,000 replications are reported in parentheses.

∗ Significant at the 10% level

∗∗ Significant at the 5% level

∗∗∗ Significant at the 1% level

Online Appendices to “Marginal treatment effects in the absence of instrumental variables”

Appendix A Proof of Theorem 1

Denote and , . Note that and , and thus and , are identified functions because they are conditional expectations of observed variables. We first consider identifying , the coefficients of continuous covariates, from and . By equation (7), we have

| (A.1) |

When , by differentiating both sides of (A.1) at and invoking Assumption NL1, we obtain

which identifies for . When , for satisfying Assumption NL2, taking the partial derivatives of with respect to and yields that

It follows from Assumption NL2.(i)-(ii) that

so that

| (A.2) |

which is linear in and . The same equation is obtained if we evaluate the expression at another point that satisfies Assumption NL2.(iii)-(iv), which gives

| (A.3) |

where

Assumption NL2.(v) ensures that the determinant of is nonzero, which shows that is nonsingular. Therefore, equation (A.3) can be solved for and by inverting , thereby identifying and . Given identification of , we can then identify all other coefficient in by solving (A.2) with the subscript replaced by , which gives

Given the identification of , the function is identified on the support of by

Next, we consider identifying , the coefficients of discrete covariates. For each , we have

for any in the support of . By Assumption S, there exists in the support of such that is in the support of . It follows from the above identification result that is identified. Consequently, is identified by

This argument holds for each , thereby identifying . Finally, given the identification of , it follows from (7) that the function is identified on the support of by

for .

Appendix B Further discussion on the nonlinearity assumption

In light of the proof of Theorem 1, our identification strategy may work even when no continuous covariate is available in the data. In that case, we put a discretely distributed covariate in .

Theorem B.1.

Theorem 1 holds if Assumption NL1 is replaced by that there exist two different constants , in the support of such that .

Proof.

The assumption of Theorem B.1 in place of NL1 requires the univariate function to be not one-to-one, but imposes no other smoothness or continuity restriction on . Therefore, it doesn’t require to be continuously valued. In the extreme case of containing only one binary covariate, this assumption will be equivalent to the full irrelevance of to the treatment probability, which is a condition suggested by Chamberlain (1986) for identification of the sample selection model. The following two theorems show that Assumption NL1 and the assumption of Theorem B.1 can be extended to the case of multiple continuous covariates.

Theorem B.2.

Theorem 1 holds if Assumption NL is replaced by that (i) there are two points and in the support of and an index such that , , and , and (ii) there exists in the support of such that functions and are differentiable at , with .

Proof.

It follows from equation (A.1) and that

Hence, is identified by condition (i) as

For any index , taking the partial derivatives of with respect to and at yields that

| (B.1) | |||||

| (B.2) |

Since by condition (ii), we can find from (B.1) to be

and substitute it into (B.2) to identify as

Therefore, is identified. The remaining part of the proof is the same as the proof of Theorem 1.

Theorem B.3.

Theorem 1 holds if Assumption NL is replaced by that there exist two points and in the support of and an index such that functions and are differentiable at and , with and .

Proof.

Alternatively, the identification of coefficients of continuous covariates can be attained by exploiting the local irrelevance of the function .

Theorem B.4.

Theorem 1 holds if Assumption NL is replaced by the existence of a vector in the support of such that for , and that functions and are differentiable at .

Proof.

Appendix C Limited valued outcomes

To accommodate limited valued outcomes, we first generalize the linear additive model to a linear latent index model.

Assumption C.L (Linear index). Assume that , , holds for some known link function and unknown coefficients , where is unobserved error term of .

The generalized linear model applies to a variety of frequently encountered limited dependent variables, such as for binary , for censored (at zero) , for proportion-valued , for ordered with known thresholds , and so forth. Although setting reduces to the linear additive model, the following discussion doesn’t include Theorem 1 as a special case because here can be only identified up to scale for a general link function . Any changes in the scaling of can be freely absorbed into , and a scale normalization is needed for identification of and .

Assumption C.N (Normalization). Decompose , where and consist of covariates that are continuously and discretely distributed, respectively. Assume that , the first element of , equal to 1.

Assumption C.N imposes the convenient normalization that the first continuous covariate has a unit coefficient. This scaling of is arbitrary and is innocuous because our focus is on the identification of MTE, which is a difference in the conditional expectations of , rather than on separate identification of and . Since in the generalized linear model the potential outcomes are not necessarily additive in the error term, the mean independence assumption needs to be strengthened to a stricter distributional independence assumption.

Assumption C.CI (Conditional Independence). Assume that for , namely, is independent of conditional on , where is the reduced-form treatment error in equation (3).

Recalling that by definition, Assumption C.CI is equivalent to the full independence for , which implies that both the marginal distribution of and the copula of and are independent of . Under Assumptions C.L and C.CI, we have

The additive nonseparability of the observables and unobservables constitutes the primary difficulty in identifying MTE in the limited outcome case. Meanwhile, Assumptions C.L and C.CI lead to a double index form of the observable outcome regression functions for each treatment status, that is,

| (C.1) |

for , where

| (C.2) | |||||

| (C.3) |

Provided that is a nonlinear index, and thus can be identified based on functional form, which ensures identification of MTE since

| (C.4) |

where is the partial derivative of with respect to its second argument. Like in the case of unlimited outcomes, the key powers of this identification strategy are supplied by the nonlinearity of , as specified by the following assumption.

Assumption C.NL (Non-Linearity). Assume that the functions and are differentiable and denote their partial derivatives with respect to the -th argument as and , where and , , are defined in (C.1) and (C.1). Assume that there exist two vectors and on the support of and two elements and of the set such that (i) , (ii) , (iii) , (iv) , (v) for , and

| (vi) | ||||

Assumption C.NL.(i)-(ii) require to depend on the linear index, and (iii)-(vi) essentially require some nonlinear variation in under the scale normalization. As in Assumption NL, it is difficult to construct examples other than that violates Assumption C.NL. Finally, a support assumption and an invertibility assumption are imposed for technical reasons.

Assumption C.S (Support). For each , assume for some in the support of that there exists in the support of such that is in the support of and that is in the support of for .

Assumption C.I (Invertibility). Assume that the function is invertible on its first argument for .

For the case of a binary outcome, we have and , hence a sufficient condition for Assumption C.I to hold is that is continuously distributed with support , conditional on , for . Interestingly, the same continuous distribution condition also suffices for Assumption C.I in the censored case with , where and . Under the imposed assumptions, the following identification theorem for the generalized linear model follows immediately from Escanciano et al. (2016, Theorems 3.1-3.2).

Theorem C.1.

If Assumptions C.L, C.NL, C.CI, C.S, C.N, and C.I hold, then and at all points in the support of and are identified for .