MambaPro: Multi-Modal Object Re-Identification with Mamba Aggregation and Synergistic Prompt

Abstract

Multi-modal object Re-IDentification (ReID) aims to retrieve specific objects by utilizing complementary image information from different modalities. Recently, large-scale pre-trained models like CLIP have demonstrated impressive performance in traditional single-modal object ReID tasks. However, they remain unexplored for multi-modal object ReID. Furthermore, current multi-modal aggregation methods have obvious limitations in dealing with long sequences from different modalities. To address above issues, we introduce a novel framework called MambaPro for multi-modal object ReID. To be specific, we first employ a Parallel Feed-Forward Adapter (PFA) for adapting CLIP to multi-modal object ReID. Then, we propose the Synergistic Residual Prompt (SRP) to guide the joint learning of multi-modal features. Finally, leveraging Mamba’s superior scalability for long sequences, we introduce Mamba Aggregation (MA) to efficiently model interactions between different modalities. As a result, MambaPro could extract more robust features with lower complexity. Extensive experiments on three multi-modal object ReID benchmarks (i.e., RGBNT201, RGBNT100 and MSVR310) validate the effectiveness of our proposed methods. The source code is available at https://github.com/924973292/MambaPro.

Introduction

Object Re-IDentification (ReID) aims to re-identify specific objects across non-overlapping cameras. Due to its wide applications, object ReID has advanced significantly in recent years (Liu et al. 2023; Wang et al. 2024a; Liu et al. 2024a, 2021; Zhang et al. 2021; Yu et al. 2024a). However, single-modal object ReID has many limitations in challenging scenarios (Li et al. 2020; Zheng et al. 2021), such as lighting changes, shadows and low image resolutions. Under such extreme conditions, single-modal object ReID methods may extract misleading features (Li et al. 2020), resulting in the loss of discriminative information (Wang et al. 2023). Fortunately, multi-modal object ReID has demonstrated a promising capability in addressing these challenges (Wang et al. 2023; Shi et al. 2024a, b). With complementary image information from different modalities, multi-modal object ReID methods can obtain more robust feature representations (Zheng et al. 2023; Wang et al. 2023; Crawford et al. 2023; Yang, Chen, and Ye 2023, 2024). Meanwhile, with the widespread application of Transformers (Vaswani et al. 2017), the framework for multi-modal object ReID also changes from CNN-based methods (Wang et al. 2022; Zheng et al. 2023) to Transformer-based methods (Wang et al. 2023; Zhang et al. 2024). Although these methods show promising performance, they still face challenges in computational complexity as shown in the top of Fig. 1 (a).

Recently, large-scale pre-trained models like CLIP (Radford et al. 2021) have demonstrated strong generalization capabilities in downstream tasks, such as single-modal object ReID (Li, Sun, and Li 2023). However, full fine-tuning of CLIP leads to the high computational complexity and catastrophic forgetting (French 1999). To address above issues, Parameter-Efficient Fine-Tuning (PEFT) methods like adapter and prompt tuning show competitive performance with fewer parameters and FLOPs. Meanwhile, current multi-modal aggregation methods face challenges in managing the high computational complexity that arises from interactions between different modalities. As shown in Fig. 1 (b), Self-Attention (SA)-based aggregation methods (Wang et al. 2023; Yin et al. 2023; Pan et al. 2023) struggle with quadratic complexity when handling long sequences from multiple modalities. Fortunately, the Selective State Space Models (SSM) in Mamba (Gu and Dao 2023) offer an efficient solution. They provide a superior scalability for long sequences by reducing complexity to linear levels (Gu, Goel, and Ré 2021). Motivated by the above observations, we propose MambaPro, a novel framework that combines CLIP-driven synergistic prompt tuning with Mamba aggregation.

To be specific, our MambaPro consists of three key components: Parallel Feed-Forward Adapter (PFA), Synergistic Residual Prompt (SRP) and Mamba Aggregation (MA). Technically, we start by inserting the PFA into the frozen image encoder of CLIP to facilitate the transfer of pre-trained knowledge into the ReID task. To achieve this, the PFA is implemented as a parallel branch to the Feed-Forward Network (FFN). This parallel design not only maintains the original multi-modal feature representations but also supports flexible knowledge transfer across modalities. By combining features derived from the CLIP pre-trained model with refinements from the adapter, the framework can extract more robust representations for each modality. Then, we introduce the SRP to guide the joint learning of multi-modal features. To be specific, we propose a Synergistic Prompt (SP) to achieve the synergistic transfer with modality-specific prompts, facilitating effective exchanges of discriminative multi-modal information. Furthermore, we introduce a Residual Prompt (RP) to layer-wisely aggregate multi-modal information with residual refinements. As a result, our SRP can significantly enhance the information synergism. Finally, we propose the MA to efficiently model interactions between long sequences from different modalities. Specifically, the stacked MA blocks integrate complementary features from two aspects: intra-modality and inter-modality. With the cooperation of these two aspects, our MA can fully capture discriminative information with linear complexity. As a result, our framework can extract more robust multi-modal features with lower complexity. Experiments on three multi-modal object ReID benchmarks clearly demonstrate the effectiveness of our proposed methods.

In summary, our contributions are as follows:

-

•

We propose MambaPro, a novel framework for multi-modal object ReID. To our best knowledge, we are the first to introduce CLIP into multi-modal object ReID with Mamba aggregation and synergistic prompt tuning.

-

•

We develop a Synergistic Residual Prompt (SRP) to guide the joint learning of multi-modal features, which effectively facilitate knowledge transfer and modality interactions with fewer parameters and FLOPs.

-

•

We introduce a Mamba Aggregation (MA) to fully integrate the complementary information within and across different modalities with linear complexity.

-

•

Extensive experiments are performed on three multi-modal object ReID benchmarks. The results fully validate the effectiveness of our proposed methods.

Related Work

Multi-Modal Object ReID

Traditional object ReID aims to extract features with single-modal input, such as RGB images or depth maps. In the past few years, single-modal object ReID has achieved remarkable progress. However, relying solely on single-modal input, these methods may extract limited information in complex scenarios. Meanwhile, the generalization capability of multi-modal data has sparked increasing attention in multi-modal object ReID (Zheng et al. 2021; Li et al. 2020; Yin et al. 2023; He et al. 2023; Wang et al. 2023). However, multi-modal input introduces additional challenges, such as distribution gaps among different modalities (Liang et al. 2022) and modality laziness (Crawford et al. 2023). To address above challenges, Zheng et al. (Zheng et al. 2021) propose to learn robust features through a progressive fusion for multi-modal person ReID. For multi-modal vehicle ReID, Li et al. (Li et al. 2020) propose to learn balanced multi-modal features. Based on Transformers (Vaswani et al. 2017), Pan et al. (Pan et al. 2023) employ a feature hybrid mechanism to control modal-specific information. Recently, Zhang et al. (Zhang et al. 2024) propose to select diverse tokens with the cooperation of multi-modal information. Despite promising results, these methods still face challenges in generalization capability and model complexity. Especially for previous Transformer-based methods, full fine-tuning introduces high computational complexity. Thus, with the strong transfer capabilities of large-scale pre-trained models, we introduce the powerful CLIP into multi-modal object ReID with fewer trainable parameters and FLOPs.

Parameter-Efficient Fine-Tuning

With the development of foundational models (Yang et al. 2024), the transfer of pre-trained knowledge is attracting increasing attention (Diao et al. 2024a, b). Currently, mainstream PEFT methods can be divided into three categories: prompt tuning, adapter tuning and LoRA tuning. Prompt tuning (Lester, Al-Rfou, and Constant 2021) usually inserts layer-wise learnable tokens into the frozen backbone and guides the model to learn task-specific knowledge. Adapter tuning (Houlsby et al. 2019) introduces a plug-and-play module into the backbone, typically consisting of an MLP or attention module. LoRA tuning(Hu et al. 2021) uses a low-rank approximation as a side branch to reshape the backbone. With the above methods, PEFT demonstrates promising performance with limited resources. However, knowledge transfer for multi-modal fusion has been insufficiently explored, particularly in multi-modal object ReID. Therefore, we propose the PFA and SRP to leverage adapter tuning and prompt tuning for multi-modal object ReID. With the parallel adapters and synergistic transformations across multi-modal prompts, we can fully integrate the complementary information with lower complexity.

Visual State Space Models

Recently, Mamba (Gu and Dao 2023) draws considerable attention (Wan et al. 2024; Xu et al. 2024) with the superior scalability of State Space Models (SSM) (Gu et al. 2021). By making the Structured State Space Sequence Model (S4) (Gu, Goel, and Ré 2021) data-dependent with a selection mechanism, Mamba outperforms Transformers in handling long sequences with linear complexity. In computer vision, ViS4mer (Islam and Bertasius 2022) and TranS4mer (Islam et al. 2023) demonstrate the effectiveness of SSM. To fully model the interactions between image patches, Vision Mamba (Zhu et al. 2024) and VMamba (Liu et al. 2024b) employ different scanning strategies. However, most of these methods focus on single-modal tasks. Additionally, attention-based aggregation methods often face quadratic complexity. To address these issues, we design the intra-modality and inter-modality aggregation with Mamba, which together form the complete MA block. This approach integrates complementary information with linear complexity and complements the progressive fusion from PFA and SRP, enhancing feature robustness for ReID.

Method

As shown in Fig. 2, our framework comprises three main components: Parallel Feed-Forward Adapter (PFA), Synergistic Residual Prompt (SRP) and Mamba Aggregation (MA). With the frozen image encoder of CLIP as the shared backbone, we extract discriminative features from different modalities, i.e., RGB, Near Infrared (NIR) and Thermal Infrared (TIR). Details of the each component are as follows.

Parallel Feed-Forward Adapter

To transfer CLIP’s knowledge to ReID tasks, we introduce the Parallel Feed-Forward Adapter (PFA). Previous methods (Diao et al. 2024b) typically insert adapters sequentially into networks. However, this can disrupt the original information flow, leading to suboptimal feature transformations. Especially in multi-modal settings, it is crucial to maintain the integrity of input features. To address these issues, our PFA is designed as a parallel branch to the Feed-Forward Network (FFN) rather than as a sequential operation. Taking the NIR modality as an example, as shown in Fig. 2 (a), the tokenized features are first processed through the initial Transformer layer with the following equation:

| (1) |

where represents the Layer Normalization (LN) (Ba, Kiros, and Hinton 2016) and denotes the multi-head self-attention (Dosovitskiy et al. 2020). The output is then fed into the PFA, undergoing the following transformation:

| (2) |

Here, is a linear layer and is the GELU activation function (Hendrycks and Gimpel 2016). Finally, we integrate the output with the FFN, resulting in the feature :

| (3) |

where represents the FFN. This parallel structure preserves the original feature representations while enabling flexible and efficient knowledge transfer across different modalities. Additionally, PFA employs an ascending-then-descending pattern in linear layers. This design enhances its ability to adapt to complex multi-modal feature distributions, supporting more stable knowledge transfer.

Synergistic Residual Prompt

To promote the joint learning of multi-modal features, we introduce the Synergistic Residual Prompt (SRP).

Different from previous single modal prompt-based methods, our SRP is designed to integrate the modality-specific knowledge with synergistic transformations.

Besides, current multi-modal prompt-based methods (Li et al. 2023; Khattak et al. 2023) often discard prompts from earlier layers, potentially resulting in the loss of discriminative information.

To address these issues, our SRP introduces two key components: Synergistic Prompt (SP) and Residual Prompt (RP).

The SP transfers modality-specific knowledge across different modalities, while the RP aggregates multi-modal information across layers with residual refinements.

Synergistic Prompt.

Without loss of generality, we take the NIR modality as an example.

In Fig. 2 (b), the NIR image is first tokenized by the patch embedding (Vaswani et al. 2017).

The patch tokens are concatenated with the class token to form :

| (4) |

where is the concatenation operation. For the prompt tokens in NIR modality, they are composed of three parts: . Here, is the randomly initialized NIR-specific prompt, while and are transferred from RGB and TIR modalities, respectively. Finally, all of them will be concatenated to form the input of the first layer as follows:

| (5) |

Here, is the embedding dimension and is the number of patches. is the number of prompts in each modality.

| (6) |

where can be or . is the transfer block composed of linear layers and a GELU activation function. Then, the output of the first Transformer layer is calculated with the input with the following equation:

| (7) |

Other modalities undergo similar operations.

As a result, we can leverage SP to facilitate the transfer of discriminative modality-specific information across different modalities.

Residual Prompt.

As shown in Fig. 2 (c), we first extract prompt tokens from , with NIR modality as an example.

Then, we average these prompts to form the fused prompts .

Afterwords, we add the fused prompts with linear layers to the new NIR-specific prompt to form the final prompts for the second layer:

| (8) |

Finally, the input of the second layer is calculated as:

| (9) |

Other modalities and layers are processed in the same way:

| (10) |

| (11) |

| (12) |

where . With the synergistic transfer of modality-specific knowledge across different modalities and the residual refinements of multi-modal information, our SRP fully enhances the information synergism, promoting the feature discrimination. After the feature extraction from the backbone, we obtain for each modality. Then, we extract class tokens and concatenate them to form for supervision.

Mamba Aggregation

To efficiently model interactions between tokenized sequences from different modalities, we introduce Mamba Aggregation (MA) as a fusion strategy. As depicted in Fig. 3, MA is essentially composed of two parts: intra-modality MA and inter-modality MA. The intra-modality MA is designed to capture the discriminative information within each modality. While the inter-modality MA is introduced to fully integrate the complementary features across different modalities. In the intra-modality MA, we first extract patch tokens from , and , respectively. In the left part of Fig. 3, each of them will undergo the Mamba block composed of Depth-Wise Convolution (DWConv) (Chollet 2017) , Batch Normalization (BN) (Ioffe and Szegedy 2015) , SSM and SiLU activation function (Elfwing, Uchibe, and Doya 2018) with the following transformations:

| (13) |

| (14) |

Here, denotes the modality. Then, features from different Mamba blocks are concatenated along the token dimension. After passing through a linear layer, the aggregated features are split by modality for the intra-modality MA:

|

|

(15) |

where . Here, denotes the feature splitting along the token dimension. Thus, through a separable SSM for each modality, the intra-modality MA effectively captures interactions within each modality.

Furthermore, as shown in the right part of Fig. 3, , and will be sent to the inter-modality MA as follows:

|

|

(16) |

Here, represents the aggregated features obtained by modeling all modality tokens as the long sequences. Finally, we split the aggregated features into different modalities for the next MA block:

| (17) |

Through the cooperation of the intra-modality MA and inter-modality MA, MA fully models the interactions of patch tokens within and across modalities. After the stacked MA blocks, we concatenate the class tokens and the average of patch tokens of each modality. Then, we use a LN to stabilize the feature learning. With a linear reduction, we obtain the final features for each modality:

| (18) |

Here, represents the average operation. Finally, we concatenate the features from different modalities to form for the loss supervision:

| (19) |

With the above aggregation strategy, we can fully model the interactions across modalities with linear complexity.

Objective Functions

As illustrated in Fig. 2, our objective function comprises two parts: losses for the image encoder of CLIP and the MA. For the backbone and MA, they are both supervised by the label smoothing cross-entropy loss (Szegedy et al. 2016) and triplet loss (Hermans, Beyer, and Leibe 2017):

| (20) |

Finally, the total loss for our framework can be defined as:

| (21) |

Experiments

Experimental Setup

Datasets and Evaluation Protocols.

To fully evaluate the performance of our method, we conduct experiments on three multi-modal object ReID benchmarks.

Specifically, RGBNT201 (Zheng et al. 2021) is a multi-modal person ReID dataset comprising RGB, NIR and TIR images.

RGBNT100 (Li et al. 2020) is a large-scale multi-modal vehicle ReID dataset with diverse visual challenges, such as abnormal lighting, glaring and occlusion.

MSVR310 (Zheng et al. 2022) is a small-scale multi-modal vehicle ReID dataset with more complex visual challenges.

For evaluation metrics, we utilize the mean Average Precision (mAP) and Cumulative Matching Characteristics (CMC) at Rank-K ().

Meanwhile, we report the GPU memory, trainable parameters and FLOPs for complexity analysis.

Implementation Details.

Our model is implemented by using the PyTorch toolbox with one NVIDIA A100 GPU.

We employ the pre-trained image encoder of CLIP (Radford et al. 2021) as the backbone.

For the input resolution, images are resized to 256128 for RGBNT201 and 128256 for RGBNT100/MSVR310.

For data augmentation, we employ random horizontal flipping, cropping and erasing (Zhong et al. 2020).

For small-scale datasets (i.e., RGBNT201 and MSVR310), the mini-batch size is set to 64, with 4 images sampled for each identity and 16 identities sampled in a batch.

For the large-scale dataset, i.e., RGBNT100, the mini-batch size is set to 128, with 16 images sampled for each identity.

We set to 0.25 and to 1.0.

We use the Adam optimizer to fine-tune the model with a learning rate of 3.5.

The warmup strategy with a cosine decay is used for learning rate scheduling.

We set the total number of training epochs to 60 for RGBNT201/MVSR310 and 30 for RGBNT100, respectively.

| Methods | RGBNT201 | ||||

|---|---|---|---|---|---|

| mAP | R-1 | R-5 | R-10 | ||

| Single | MUDeep (Qian et al. 2017) | 23.8 | 19.7 | 33.1 | 44.3 |

| PCB (Sun et al. 2018) | 32.8 | 28.1 | 37.4 | 46.9 | |

| OSNet (Zhou et al. 2019) | 25.4 | 22.3 | 35.1 | 44.7 | |

| CAL (Rao et al. 2021) | 27.6 | 24.3 | 36.5 | 45.7 | |

| Multi | HAMNet (Li et al. 2020) | 27.7 | 26.3 | 41.5 | 51.7 |

| PFNet (Zheng et al. 2021) | 38.5 | 38.9 | 52.0 | 58.4 | |

| IEEE (Wang et al. 2022) | 49.5 | 48.4 | 59.1 | 65.6 | |

| DENet (Zheng et al. 2023) | 42.4 | 42.2 | 55.3 | 64.5 | |

| LRMM (Wu et al. 2025) | 52.3 | 53.4 | 64.6 | 73.2 | |

| UniCat∗ (Crawford et al. 2023) | 57.0 | 55.7 | - | - | |

| HTT∗ (Wang et al. 2024b) | 71.1 | 73.4 | 83.1 | 87.3 | |

| TOP-ReID∗ (Wang et al. 2023) | 72.3 | 76.6 | 84.7 | 89.4 | |

| EDITOR∗ (Zhang et al. 2024) | 66.5 | 68.3 | 81.1 | 88.2 | |

| RSCNet∗ (Yu et al. 2024b) | 68.2 | 72.5 | - | - | |

| MambaPro | 78.9 | 83.4 | 89.8 | 91.9 | |

Performance Comparison

Multi-modal Person ReID.

As shown in Tab. 1, we compare our proposed MambaPro with state-of-the-art methods on RGBNT201.

Within single-modal methods, PCB exhibits a remarkable mAP of 32.8%.

For multi-modal methods, CNN-based methods like IEEE (Wang et al. 2022) and LRMM (Wu et al. 2025) achieve competitive performance with mAPs of 49.5% and 52.3%, respectively.

However, Transformer-based methods outperform CNN-based methods.

Especially, TOP-ReID (Wang et al. 2023) achieves a mAP of 72.3%.

Moreover, our MambaPro achieves a 78.9% mAP and 83.4% Rank-1, surpassing TOP-ReID by 6.6% and 6.8%, respectively.

These performance improvements verify the effectiveness of our proposed method.

Multi-modal Vehicle ReID.

As depicted in Tab. 2, single-modal methods generally under-perform multi-modal methods.

In single-modal methods, CNN-based methods like BoT (Luo et al. 2019) achieve better results than some Transformer-based methods.

For multi-modal methods, LRMM (Wu et al. 2025) achieves a mAP of 78.6% on RGBNT100, showing the effectiveness of its low rank fusion strategy.

Meanwhile, Transformer-based methods exhibit their superiority on integrating multi-modal information.

Specifically, EDITOR (Zhang et al. 2024) achieves a mAP of 82.1%, surpassing LRMM by 3.5% on RGBNT100.

Our MambaPro achieves a mAP of 83.9% on RGBNT100, surpassing EDITOR by 1.8%.

Especially on the small-scale dataset MSVR310, our MambaPro achieves a mAP of 47.0%, surpassing EDITOR by 8.0%.

These results clearly verify the effectiveness of our proposed methods in multi-modal object ReID tasks.

| Methods | RGBNT100 | MSVR310 | |||

|---|---|---|---|---|---|

| mAP | R-1 | mAP | R-1 | ||

| single | PCB (Sun et al. 2018) | 57.2 | 83.5 | 23.2 | 42.9 |

| BoT (Luo et al. 2019) | 78.0 | 95.1 | 23.5 | 38.4 | |

| OSNet (Zhou et al. 2019) | 75.0 | 95.6 | 28.7 | 44.8 | |

| TransReID∗ (He et al. 2021) | 75.6 | 92.9 | 18.4 | 29.6 | |

| Multi | HAMNet (Li et al. 2020) | 74.5 | 93.3 | 27.1 | 42.3 |

| PFNet (Zheng et al. 2021) | 68.1 | 94.1 | 23.5 | 37.4 | |

| GAFNet (Guo et al. 2022) | 74.4 | 93.4 | - | - | |

| GPFNet (He et al. 2023) | 75.0 | 94.5 | - | - | |

| CCNet (Zheng et al. 2022) | 77.2 | 96.3 | 36.4 | 55.2 | |

| LRMM (Wu et al. 2025) | 78.6 | 96.7 | 36.7 | 49.7 | |

| GraFT∗ (Yin et al. 2023) | 76.6 | 94.3 | - | - | |

| PHT∗ (Pan et al. 2023) | 79.9 | 92.7 | - | - | |

| UniCat∗ (Crawford et al. 2023) | 79.4 | 96.2 | - | - | |

| HTT∗ (Wang et al. 2024b) | 75.7 | 92.6 | - | - | |

| TOP-ReID∗ (Wang et al. 2023) | 81.2 | 96.4 | 35.9 | 44.6 | |

| EDITOR∗ (Zhang et al. 2024) | 82.1 | 96.4 | 39.0 | 49.3 | |

| RSCNet∗ (Yu et al. 2024b) | 82.3 | 96.6 | 39.5 | 49.6 | |

| MambaPro | 83.9 | 94.7 | 47.0 | 56.5 | |

Ablation Study

We conduct ablation studies on RGBNT201 to validate the effectiveness of different components.

The locked image encoder of CLIP is denoted as CLIP(L) with frozen parameters.

Meanwhile, we refer to the fully fine-tuned CLIP as CLIP(F).

When MA is not applied, we use for retrieval.

Otherwise, we use for retrieval.

Effect of Key Components.

Tab. 3 shows the performance comparison with different components.

Comparing Model A with Model B, we observe an impressive mAP improvement by fully fine-tuning CLIP, demonstrating the necessity of fine-tuning.

With 32.9% of the trainable parameters compared with Model B, Model C outperforms the fully fine-tuned CLIP by 3.8% mAP, showing the effectiveness of PFA.

Meanwhile, Model D also surpasses Model B with minimal additional parameters and FLOPs.

Furthermore, through the combination of SRP and PFA, Model E achieves higher performance.

Besides, with the efficient modeling of multi-modal long sequences, Model F achieves the best mAP of 78.9%.

Model G, with the full fine-tuning of CLIP, achieves a comparable mAP of 78.7%.

Comparing the last two rows, our method achieves robust performance no matter whether the backbone is fully fine-tuned or not.

With our efficient learning framework, MambaPro transfers the knowledge from CLIP to multi-modal object ReID with superior performance and low complexity.

| Methods | Performance | Complexity | |||||||||

| CLIP(L) | CLIP(F) | PFA | SRP | MA | mAP | R-1 | R-5 | R-10 | Mem(GB) | Params(M) | |

| A | ✓ | ✕ | ✕ | ✕ | ✕ | 3.3 | 2.8 | 7.4 | 11.1 | 12.79 | 0 |

| B | ✕ | ✓ | ✕ | ✕ | ✕ | 69.4 | 71.5 | 81.8 | 87.4 | 16.85 | 86.14 |

| C | ✓ | ✕ | ✓ | ✕ | ✕ | 73.2 | 76.4 | 85.2 | 88.2 | 15.96 | 28.34 |

| D | ✓ | ✕ | ✕ | ✓ | ✕ | 71.3 | 74.5 | 83.4 | 87.9 | 13.82 | 28.48 |

| E | ✓ | ✕ | ✓ | ✓ | ✕ | 74.4 | 77.6 | 87.4 | 90.3 | 17.79 | 56.82 |

| F | ✓ | ✕ | ✓ | ✓ | ✓ | 78.9 | 83.4 | 89.8 | 91.9 | 20.89 | 74.20 |

| G | ✕ | ✓ | ✓ | ✓ | ✓ | 78.7 | 83.5 | 89.6 | 92.9 | 25.22 | 160.34 |

| Methods | Performance | Prompt Complexity | ||||

| mAP | R-1 | R-5 | R-10 | FLOPs(G) | Params(M) | |

| CLIP(L) + IP | 64.7 | 65.9 | 77.3 | 82.9 | 0.09 | 0.01 |

| CLIP(L) + SP | 68.9 | 70.7 | 80.9 | 85.2 | 0.28 | 1.78 |

| CLIP(L) + SRP(Separation) | 70.1 | 72.3 | 82.1 | 86.7 | 0.29 | 3.55 |

| CLIP(L) + SRP(Fusion) | 71.3 | 74.5 | 83.4 | 87.9 | 0.29 | 2.37 |

Effect of Different Prompt Mechanisms.

Fig. 4 illustrates the details of different prompt mechanisms.

Tab. 4 shows the corresponding performances.

The first row represents that each modality has its own prompts without synergistic transfer.

SP achieves better performance than Independent Prompt (IP), demonstrating the effectiveness of synergistic transformation between modalities.

Both SRP (Separation) and SRP (Fusion) outperform SP, underscoring the effectiveness of residual connections in multi-modal prompts.

The difference between SRP (Separation) and SRP (Fusion) lies in the comes from the prompts of separate modalities or the fusion of them.

SRP (Fusion) performs best by averaging tokens with the residual connections.

Effect of Different Adapters.

Tab. 5 shows the performance with different adapters.

Due to the weaker learning capability without non-linear transformations, LoRA generally achieves inferior performance compared with the Bottleneck Adapter (BNA).

Meanwhile, with acceptable complexity, our PFA outperforms BNA by 4.7% mAP, verifying the effectiveness in multi-modal object ReID.

Effect of Different Aggregation Methods.

In Tab. 6, we compare the performance with different aggregation methods.

The Sum and Concat are simple aggregation methods that yield inferior results.

The Transformer achieves better performance, demonstrating the effectiveness of attention-based aggregation.

Our Intra-MA and Inter-MA outperform Transformers, showcasing the effectiveness of modeling multi-modal sequences with Mamba.

Finally, our MA achieves the best performance, demonstrating the effectiveness of our proposed method.

| Methods | Performance | Adapter Complexity | ||||

| mAP | R-1 | R-5 | R-10 | FLOPs(G) | Params(M) | |

| CLIP(L) + LoRA-r(64) | 54.5 | 55.3 | 68.1 | 76.1 | 0.01 | 0.10 |

| CLIP(L) + LoRA-r(256) | 55.7 | 56.9 | 69.7 | 77.3 | 0.05 | 0.39 |

| CLIP(L) + LoRA-r(384) | 47.2 | 47.1 | 62.2 | 69.1 | 0.08 | 0.60 |

| CLIP(L) + BNA | 68.5 | 69.4 | 79.1 | 84.0 | 0.08 | 0.60 |

| CLIP(L) + PFA | 73.2 | 76.4 | 85.2 | 88.2 | 0.30 | 2.36 |

| Methods | Performance | Aggregation Complexity | ||||

| mAP | R-1 | R-5 | R-10 | FLOPs(G) | Params(M) | |

| Sum | 75.3 | 79.4 | 87.2 | 89.4 | 0.01 | 1.58 |

| Concat | 74.3 | 75.6 | 84.9 | 88.9 | 0.10 | 2.37 |

| Transformer | 76.5 | 80.2 | 87.7 | 90.6 | 1.36 | 4.73 |

| Intra-MA | 76.2 | 80.9 | 88.2 | 90.5 | 0.71 | 5.64 |

| Inter-MA | 77.5 | 81.2 | 88.6 | 90.9 | 0.71 | 5.41 |

| MA | 78.9 | 83.4 | 89.8 | 91.9 | 1.42 | 9.47 |

Visualization

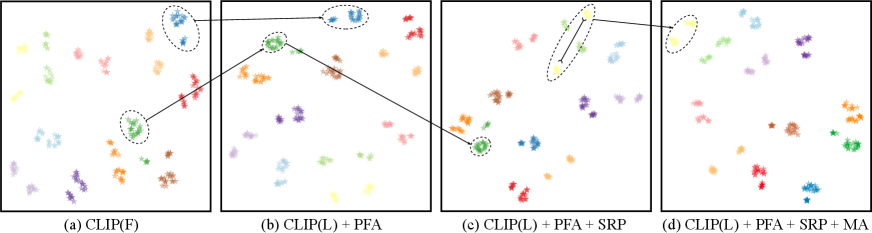

Feature Distributions.

In Fig. 5, we show the feature distributions with different modules.

Comparing Fig. 5 (a) and Fig. 5 (b), CLIP(L) with PFA leads to more compact distributions across different IDs.

Besides, with SRP, the gaps between different IDs in Fig. 5 (c) are further expanded.

Finally, with MA, the feature distributions become more separable as shown in Fig. 5 (d).

These visualizations strongly support the effectiveness of our proposed modules.

Feature Alignment of MA.

In Fig. 6, we visualize the similarity distributions of different modalities before and after MA.

The results clearly show that MA can fully align the distributions of different modalities, enhancing the feature aggregation.

More details are in the supplementary material.

Conclusion

In this paper, we propose a novel feature learning framework named MambaPro, for multi-modal object ReID. We first employ a Parallel Feed-Forward Adapter (PFA) for knowledge transfer from the pre-trained CLIP to the ReID task. To guide joint learning of multi-modal features, we introduce the Synergistic Residual Prompt (SRP) for interactions of modality-specific prompts. Meanwhile, we propose a Mamba Aggregation (MA) to efficiently aggregate tokenized sequences from different modalities. With linear complexity, the MA efficiently handles long sequences, outperforming existing attention-based methods with low consumption. Extensive experiments on three multi-modal object ReID benchmarks validate the effectiveness of our method and show the potentials of fundamental models.

Acknowledgments

This work was supported in part by the National Natural Science Foundation of China (No.62101092, 62476044, 62388101), Open Project of Anhui Provincial Key Laboratory of Multimodal Cognitive Computation, Anhui University (No.MMC202102, MMC202407) and Fundamental Research Funds for the Central Universities (No.DUT23BK050, DUT23YG232).

References

- Ba, Kiros, and Hinton (2016) Ba, J. L.; Kiros, J. R.; and Hinton, G. E. 2016. Layer normalization. arXiv preprint arXiv:1607.06450.

- Chang, Hospedales, and Xiang (2018) Chang, X.; Hospedales, T. M.; and Xiang, T. 2018. Multi-level factorisation net for person re-identification. In CVPR, 2109–2118.

- Chollet (2017) Chollet, F. 2017. Xception: Deep learning with depthwise separable convolutions. In CVPR, 1251–1258.

- Crawford et al. (2023) Crawford, J.; Yin, H.; McDermott, L.; and Cummings, D. 2023. UniCat: Crafting a Stronger Fusion Baseline for Multimodal Re-Identification. arXiv preprint arXiv:2310.18812.

- Diao et al. (2024a) Diao, H.; Wan, B.; Jia, X.; Zhuge, Y.; Zhang, Y.; Lu, H.; and Chen, L. 2024a. Sherl: Synthesizing high accuracy and efficient memory for resource-limited transfer learning. In ECCV, 75–95.

- Diao et al. (2024b) Diao, H.; Wan, B.; Zhang, Y.; Jia, X.; Lu, H.; and Chen, L. 2024b. Unipt: Universal parallel tuning for transfer learning with efficient parameter and memory. In CVPR, 28729–28740.

- Dosovitskiy et al. (2020) Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. 2020. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv preprint arXiv:2010.11929.

- Elfwing, Uchibe, and Doya (2018) Elfwing, S.; Uchibe, E.; and Doya, K. 2018. Sigmoid-weighted linear units for neural network function approximation in reinforcement learning. Neural networks, 107: 3–11.

- French (1999) French, R. M. 1999. Catastrophic forgetting in connectionist networks. Trends in cognitive sciences, 3(4): 128–135.

- Gu and Dao (2023) Gu, A.; and Dao, T. 2023. Mamba: Linear-time sequence modeling with selective state spaces. arXiv preprint arXiv:2312.00752.

- Gu, Goel, and Ré (2021) Gu, A.; Goel, K.; and Ré, C. 2021. Efficiently modeling long sequences with structured state spaces. arXiv preprint arXiv:2111.00396.

- Gu et al. (2021) Gu, A.; Johnson, I.; Goel, K.; Saab, K.; Dao, T.; Rudra, A.; and Ré, C. 2021. Combining recurrent, convolutional, and continuous-time models with linear state space layers. NeurIPS, 34: 572–585.

- Guo et al. (2022) Guo, J.; Zhang, X.; Liu, Z.; and Wang, Y. 2022. Generative and attentive fusion for multi-spectral vehicle re-identification. In ICSP, 1565–1572.

- He et al. (2023) He, Q.; Lu, Z.; Wang, Z.; and Hu, H. 2023. Graph-Based Progressive Fusion Network for Multi-Modality Vehicle Re-Identification. TITS, 1–17.

- He et al. (2021) He, S.; Luo, H.; Wang, P.; Wang, F.; Li, H.; and Jiang, W. 2021. Transreid: Transformer-based object re-identification. In ICCV, 15013–15022.

- Hendrycks and Gimpel (2016) Hendrycks, D.; and Gimpel, K. 2016. Gaussian error linear units (gelus). arXiv preprint arXiv:1606.08415.

- Hermans, Beyer, and Leibe (2017) Hermans, A.; Beyer, L.; and Leibe, B. 2017. In defense of the triplet loss for person re-identification. arXiv preprint arXiv:1703.07737.

- Houlsby et al. (2019) Houlsby, N.; Giurgiu, A.; Jastrzebski, S.; Morrone, B.; De Laroussilhe, Q.; Gesmundo, A.; Attariyan, M.; and Gelly, S. 2019. Parameter-efficient transfer learning for NLP. In ICML, 2790–2799.

- Hu et al. (2021) Hu, E. J.; Shen, Y.; Wallis, P.; Allen-Zhu, Z.; Li, Y.; Wang, S.; Wang, L.; and Chen, W. 2021. Lora: Low-rank adaptation of large language models. arXiv preprint arXiv:2106.09685.

- Ioffe and Szegedy (2015) Ioffe, S.; and Szegedy, C. 2015. Batch normalization: Accelerating deep network training by reducing internal covariate shift. In ICML, 448–456.

- Islam and Bertasius (2022) Islam, M. M.; and Bertasius, G. 2022. Long movie clip classification with state-space video models. In ECCV, 87–104.

- Islam et al. (2023) Islam, M. M.; Hasan, M.; Athrey, K. S.; Braskich, T.; and Bertasius, G. 2023. Efficient Movie Scene Detection using State-Space Transformers. In CVPR, 18749–18758.

- Khattak et al. (2023) Khattak, M. U.; Rasheed, H.; Maaz, M.; Khan, S.; and Khan, F. S. 2023. Maple: Multi-modal prompt learning. In CVPR, 19113–19122.

- Lester, Al-Rfou, and Constant (2021) Lester, B.; Al-Rfou, R.; and Constant, N. 2021. The power of scale for parameter-efficient prompt tuning. arXiv preprint arXiv:2104.08691.

- Li et al. (2020) Li, H.; Li, C.; Zhu, X.; Zheng, A.; and Luo, B. 2020. Multi-spectral vehicle re-identification: A challenge. In AAAI, volume 34, 11345–11353.

- Li, Sun, and Li (2023) Li, S.; Sun, L.; and Li, Q. 2023. CLIP-ReID: exploiting vision-language model for image re-identification without concrete text labels. In AAAI, volume 37, 1405–1413.

- Li, Zhu, and Gong (2018) Li, W.; Zhu, X.; and Gong, S. 2018. Harmonious attention network for person re-identification. In CVPR, 2285–2294.

- Li et al. (2023) Li, Y.; Liu, Z.; Yang, W.; Wang, Y.; Liao, Q.; et al. 2023. CLIP-based Synergistic Knowledge Transfer for Text-based Person Retrieval. arXiv preprint arXiv:2309.09496.

- Liang et al. (2022) Liang, V. W.; Zhang, Y.; Kwon, Y.; Yeung, S.; and Zou, J. Y. 2022. Mind the gap: Understanding the modality gap in multi-modal contrastive representation learning. NeurIPS, 35: 17612–17625.

- Liu et al. (2023) Liu, X.; Yu, C.; Zhang, P.; and Lu, H. 2023. Deeply coupled convolution–transformer with spatial–temporal complementary learning for video-based person re-identification. TNNLS.

- Liu et al. (2021) Liu, X.; Zhang, P.; Yu, C.; Lu, H.; and Yang, X. 2021. Watching you: Global-guided reciprocal learning for video-based person re-identification. In CVPR, 13334–13343.

- Liu et al. (2024a) Liu, X.; Zhang, P.; Yu, C.; Qian, X.; Yang, X.; and Lu, H. 2024a. A video is worth three views: Trigeminal transformers for video-based person re-identification. TITS, 25.

- Liu et al. (2024b) Liu, Y.; Tian, Y.; Zhao, Y.; Yu, H.; Xie, L.; Wang, Y.; Ye, Q.; and Liu, Y. 2024b. Vmamba: Visual state space model. arXiv preprint arXiv:2401.10166.

- Luo et al. (2019) Luo, H.; Gu, Y.; Liao, X.; Lai, S.; and Jiang, W. 2019. Bag of tricks and a strong baseline for deep person re-identification. In CVPRW, 1487–1495.

- Pan et al. (2023) Pan, W.; Huang, L.; Liang, J.; Hong, L.; and Zhu, J. 2023. Progressively Hybrid Transformer for Multi-Modal Vehicle Re-Identification. Sensors, 23(9): 4206.

- Qian et al. (2017) Qian, X.; Fu, Y.; Jiang, Y.-G.; Xiang, T.; and Xue, X. 2017. Multi-scale deep learning architectures for person re-identification. In ICCV, 5399–5408.

- Radford et al. (2021) Radford, A.; Kim, J. W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. 2021. Learning transferable visual models from natural language supervision. In ICML, 8748–8763.

- Rao et al. (2021) Rao, Y.; Chen, G.; Lu, J.; and Zhou, J. 2021. Counterfactual attention learning for fine-grained visual categorization and re-identification. In ICCV, 1025–1034.

- Shi et al. (2024a) Shi, J.; Yin, X.; Chen, Y.; Zhang, Y.; Zhang, Z.; Xie, Y.; and Qu, Y. 2024a. Multi-Memory Matching for Unsupervised Visible-Infrared Person Re-Identification. arXiv preprint arXiv:2401.06825.

- Shi et al. (2024b) Shi, J.; Yin, X.; Zhang, Y.; Xie, Y.; Qu, Y.; et al. 2024b. Learning commonality, divergence and variety for unsupervised visible-infrared person re-identification. In NeurIPS.

- Smith, Warrington, and Linderman (2022) Smith, J. T.; Warrington, A.; and Linderman, S. W. 2022. Simplified state space layers for sequence modeling. arXiv preprint arXiv:2208.04933.

- Sun et al. (2018) Sun, Y.; Zheng, L.; Yang, Y.; Tian, Q.; and Wang, S. 2018. Beyond part models: Person retrieval with refined part pooling (and a strong convolutional baseline). In ECCV, 480–496.

- Szegedy et al. (2016) Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; and Wojna, Z. 2016. Rethinking the inception architecture for computer vision. In CVPR, 2818–2826.

- Van der Maaten and Hinton (2008) Van der Maaten, L.; and Hinton, G. 2008. Visualizing data using t-SNE. JMLR, 9(11).

- Vaswani et al. (2017) Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A. N.; Kaiser, Ł.; and Polosukhin, I. 2017. Attention is all you need. NeurIPS, 30.

- Wan et al. (2024) Wan, Z.; Zhang, P.; Wang, Y.; Yong, S.; Stepputtis, S.; Sycara, K.; and Xie, Y. 2024. Sigma: Siamese mamba network for multi-modal semantic segmentation. arXiv preprint arXiv:2404.04256.

- Wang et al. (2023) Wang, Y.; Liu, X.; Zhang, P.; Lu, H.; Tu, Z.; and Lu, H. 2023. TOP-ReID: Multi-spectral Object Re-Identification with Token Permutation. arXiv preprint arXiv:2312.09612.

- Wang et al. (2024a) Wang, Y.; Zhang, P.; Wang, D.; and Lu, H. 2024a. Other tokens matter: Exploring global and local features of Vision Transformers for Object Re-Identification. CVIU, 244: 104030.

- Wang et al. (2024b) Wang, Z.; Huang, H.; Zheng, A.; and He, R. 2024b. Heterogeneous Test-Time Training for Multi-Modal Person Re-identification. In AAAI, volume 38, 5850–5858.

- Wang et al. (2022) Wang, Z.; Li, C.; Zheng, A.; He, R.; and Tang, J. 2022. Interact, embed, and enlarge: Boosting modality-specific representations for multi-modal person re-identification. In AAAI, volume 36, 2633–2641.

- Wu et al. (2025) Wu, D.; Liu, Z.; Chen, Z.; Gan, S.; Tan, K.; Wan, Q.; and Wang, Y. 2025. LRMM: Low rank multi-scale multi-modal fusion for person re-identification based on RGB-NI-TI. ESWA, 263: 125716.

- Xu et al. (2024) Xu, Z.; Tang, F.; Chen, Z.; Zhou, Z.; Wu, W.; Yang, Y.; Liang, Y.; Jiang, J.; Cai, X.; and Su, J. 2024. Polyp-Mamba: Polyp Segmentation with Visual Mamba. In MICCAI.

- Yang, Chen, and Ye (2023) Yang, B.; Chen, J.; and Ye, M. 2023. Towards Grand Unified Representation Learning for Unsupervised Visible-Infrared Person Re-Identification. In ICCV, 11069–11079.

- Yang, Chen, and Ye (2024) Yang, B.; Chen, J.; and Ye, M. 2024. Shallow-Deep Collaborative Learning for Unsupervised Visible-Infrared Person Re-Identification. In CVPR, 16870–16879.

- Yang et al. (2024) Yang, L.; Kang, B.; Huang, Z.; Xu, X.; Feng, J.; and Zhao, H. 2024. Depth anything: Unleashing the power of large-scale unlabeled data. arXiv preprint arXiv:2401.10891.

- Yin et al. (2023) Yin, H.; Li, J.; Schiller, E.; McDermott, L.; and Cummings, D. 2023. GraFT: Gradual Fusion Transformer for Multimodal Re-Identification. arXiv preprint arXiv:2310.16856.

- Yu et al. (2024a) Yu, C.; Liu, X.; Wang, Y.; Zhang, P.; and Lu, H. 2024a. TF-CLIP: Learning text-free CLIP for video-based person re-identification. In AAAI, volume 38, 6764–6772.

- Yu et al. (2024b) Yu, Z.; Huang, Z.; Hou, M.; Pei, J.; Yan, Y.; Liu, Y.; and Sun, D. 2024b. Representation Selective Coupling via Token Sparsification for Multi-Spectral Object Re-Identification. TCSVT.

- Zhang et al. (2021) Zhang, G.; Zhang, P.; Qi, J.; and Lu, H. 2021. Hat: Hierarchical aggregation transformers for person re-identification. In ACM MM, 516–525.

- Zhang et al. (2024) Zhang, P.; Wang, Y.; Liu, Y.; Tu, Z.; and Lu, H. 2024. Magic tokens: Select diverse tokens for multi-modal object re-identification. In CVPR, 17117–17126.

- Zheng et al. (2023) Zheng, A.; He, Z.; Wang, Z.; Li, C.; and Tang, J. 2023. Dynamic Enhancement Network for Partial Multi-modality Person Re-identification. arXiv preprint arXiv:2305.15762.

- Zheng et al. (2021) Zheng, A.; Wang, Z.; Chen, Z.; Li, C.; and Tang, J. 2021. Robust multi-modality person re-identification. In AAAI, volume 35, 3529–3537.

- Zheng et al. (2022) Zheng, A.; Zhu, X.; Ma, Z.; Li, C.; Tang, J.; and Ma, J. 2022. Multi-spectral vehicle re-identification with cross-directional consistency network and a high-quality benchmark. arXiv preprint arXiv:2208.00632.

- Zhong et al. (2020) Zhong, Z.; Zheng, L.; Kang, G.; Li, S.; and Yang, Y. 2020. Random erasing data augmentation. In AAAI, volume 34, 13001–13008.

- Zhou et al. (2019) Zhou, K.; Yang, Y.; Cavallaro, A.; and Xiang, T. 2019. Omni-scale feature learning for person re-identification. In ICCV, 3702–3712.

- Zhu et al. (2024) Zhu, L.; Liao, B.; Zhang, Q.; Wang, X.; Liu, W.; and Wang, X. 2024. Vision mamba: Efficient visual representation learning with bidirectional state space model. arXiv preprint arXiv:2401.09417.

A. Introduction

The supplementary material validates the effectiveness of our MambaPro with additional evidence. We provide a comprehensive analysis of the model’s performance, including the impact of various hyperparameters and the model’s generalization to vehicle datasets. To be specific, the supplementary material is composed of the following parts:

-

1.

More method details:

-

•

Background knowledge of Mamba

-

•

Workflow of our Synergistic Residual Prompt (SRP)

-

•

-

2.

More experimental details:

-

•

Implementation details

-

•

-

3.

Module validation and hyper-parameter analysis:

-

•

Model parameter comparison with other methods

-

•

Analysis of different structures and hyper-parameters

-

•

Generalization of the model on vehicle datasets

-

•

-

4.

Grad-CAM visualizations:

-

•

Visualizations for both person and vehicle ReID

-

•

These comprehensive experiments fully validate the effectiveness of our MambaPro.

B. Methodology Details

Background knowledge of Mamba

Mamba (Gu and Dao 2023) is a sequential modeling framework designed to effectively capture complex interactions within ultra-long sequences by leveraging State Space Models (SSMs). SSMs (Gu, Goel, and Ré 2021; Gu et al. 2021; Smith, Warrington, and Linderman 2022) are a type of sequence-to-sequence models inspired by continuous linear time-invariant (LTI) systems. With linear complexity, they can effectively capture the dynamics inherent in the system’s state variables, specifically defined as:

| (22) |

Here, means the input, denotes the hidden state and represents the output. stands for the time derivative of . Besides, , , and are the system matrices, where is the hidden state dimension. Meanwhile, we often need to process discrete sequences like image and text. Thus, SSMs utilize zero-order hold discretization to discretize the system matrices. Then, the input sequence can be mapped to the output sequence with the following equations:

| (23) |

Although effective for sequence modeling, SSMs still faces limitations for the LTI property. To address this issue, the Selective State Space Model (S6) (Gu and Dao 2023) is introduced to make SSMs data-dependent. With the selection mechanism, S6 can effectively model the complex interactions in ultra-long sequences with linear complexity. In this work, we specifically design the intra-modality MA and inter-modality MA with S6, which can fully integrate complementary information from multiple modalities.

| Methods | Params(M) | RGBNT201 | RGBNT100 | MSVR310 | |||

| mAP | Rank-1 | mAP | Rank-1 | mAP | Rank-1 | ||

| MUDeep (Qian et al. 2017) | 77.75 | 23.8 | 19.7 | - | - | - | - |

| HACNN (Li, Zhu, and Gong 2018) | 10.50 | 21.3 | 19.0 | - | - | - | - |

| MLFN (Chang, Hospedales, and Xiang 2018) | 95.57 | 26.1 | 24.2 | - | - | - | - |

| CAL (Rao et al. 2021) | 97.62 | 27.6 | 24.3 | - | - | - | - |

| PCB (Sun et al. 2018) | 72.33 | 32.8 | 28.1 | 57.2 | 83.5 | 23.2 | 42.9 |

| OSNet (Zhou et al. 2019) | 7.02 | 25.4 | 22.3 | 75.0 | 95.6 | 28.7 | 44.8 |

| HAMNet (Li et al. 2020) | 78.00 | 27.7 | 26.3 | 74.5 | 93.3 | 27.1 | 42.3 |

| CCNet (Zheng et al. 2022) | 74.60 | - | - | 77.2 | 96.3 | 36.4 | 55.2 |

| IEEE (Wang et al. 2022) | 109.22 | 49.5 | 48.4 | - | - | - | - |

| GAFNet (Guo et al. 2022) | 130.00 | - | - | 74.4 | 93.4 | - | - |

| LRMM (Wu et al. 2025) | 86.40 | 52.3 | 51.1 | 78.6 | 96.7 | 36.7 | 49.7 |

| TransReID∗ (He et al. 2021) | 278.23 | - | - | 75.6 | 92.9 | 18.4 | 29.6 |

| UniCat∗ (Crawford et al. 2023) | 259.02 | 57.0 | 55.7 | 79.4 | 96.2 | - | - |

| GraFT∗ (Yin et al. 2023) | 101.00 | - | - | 76.6 | 94.3 | - | - |

| TOP-ReID∗ (Wang et al. 2023) | 324.53 | 72.3 | 76.6 | 81.2 | 96.4 | 35.9 | 44.6 |

| EDITOR∗ (Zhang et al. 2024) | 118.55 | 66.5 | 68.3 | 82.1 | 96.4 | 39.0 | 49.3 |

| RSCNet∗ (Yu et al. 2024b) | 124.10 | 68.2 | 72.5 | 82.3 | 96.6 | 39.5 | 49.6 |

| MambaPro | 74.20 | 78.9 | 83.4 | 83.9 | 94.7 | 47.0 | 56.5 |

Workflow of the SRP

As shown in Fig. 7 (a), we take the NIR modality as an example to clarify it. In fact, SRP’s input consists of two parts: (1) prompt tokens from the output of the last Transformer layer; (2) prompt tokens to be added in the current layer. The output includes from RP and , from SP. As shown in Fig. 7 (b), SP utilizes the Transfer Block (TB) to transfer complementary information from prompts of other modalities. Each modality utilizes its specific TB. RP aggregates information from and injects the aggregated information into , forming with different levels of information. Finally, we concatenate the output of SRP with the class token and patch tokens, as the input of the current Transformer layer for further processing.

C. Experimental Details

Implementation Details

For the input resolution, images are resized to 256128 for RGBNT201 and 128256 for RGBNT100/MSVR310. For data augmentation, we employ random horizontal flipping, cropping and erasing (Zhong et al. 2020). A warmup strategy with a cosine decay is used for the learning rate scheduling. On the three datasets, we use a learning rate of 3.5 for PEFT. To compare with the fully fine-tuned CLIP, we use a learning rate of 5 on the CLIP backbone, while other parts are trained with 3.5. In the small-scale datasets RGBNT201 and MSVR310, the mini-batch size is set to 64, 4 images sampled for each identity and 16 identities sampled for each batch. Both of the two datasets are trained with 60 epochs and the warm up epoch is set to 10. For the large-scale RGBNT100 dataset, to better utilize the data, we set the mini-batch size to 128, with 16 images sampled for each identity. This leads to a more stable training. Besides, we train the model for 30 epochs with a 5-epoch warm-up.

D. Experimental Analysis

Parameter Comparisons

In Tab. 7, we compare the trainable parameters of our model with other methods, including both CNN-based methods (Zhou et al. 2019; Sun et al. 2018; Rao et al. 2021; Chang, Hospedales, and Xiang 2018; Li, Zhu, and Gong 2018; Qian et al. 2017; Li et al. 2020; Wang et al. 2022; Zheng et al. 2022; Guo et al. 2022) and Transformer-based methods (He et al. 2021; Wang et al. 2023; Crawford et al. 2023; Yin et al. 2023). In general, CNN-based methods have fewer parameters than Transformer-based methods. However, in Transformer-based methods, our MambaPro achieves the best performance with the fewest trainable parameters. In contrast, the parameter count of the other Transformer-based methods is at least 100M, with the highest reaching 324.53M. With comparable parameters as CNN-based methods, our MambaPro outperforms them by a large margin, verifying the effectiveness of our method.

Different Hyper-parameters and Structures

Effect of and in MA.

In Fig. 8, we compare the performance with different and in MA.

In fact, is the dimension of the hidden state , while is the rank when generating the discrete time steps in SSM.

As shown in Fig. 8 (a), the performance first rises and then falls with the increase of .

The best performance is achieved when is set to 16.

The reason is that a small is not able to capture the complex multi-modal correlations, while a large may lead to overfitting.

As for , the performance is relatively stable.

However, the performance exhibits an evident increase when is bigger than 32.

The bigger can avoid information loss, which is beneficial for the performance improvement.

Effect of Varying Depths of Proposed Modules.

In Fig. 9, we show the performance with different depths of PFA, SRP and MA.

Meanwhile, we also perform the analysis on the length of prompts.

Fig. 9 (a) shows that with more learnable prompts in each modality, the performance first increases and then decreases, achieving the best performance with .

In Fig. 9 (b), the performance shows a large improvement with the depth increasing from 9-12 to 6-12.

Furthermore, the best performance is achieved with SRP in every layer.

Similar trends can be observed in Fig. 9 (c), with the best performance achieved with all layers.

Meanwhile, the overall performance is higher than that of Fig. 9 (b), indicating the strong transfer capability of PFA.

For Fig. 9 (d), stacked MA blocks improve the performance, with a 79.5% mAP achieved with 4 MA blocks.

Considering the complexity, we choose 2 MA blocks as the best trade-off.

These results verify the effectiveness of our proposed modules.

Effect of the Inserted Position of PFA.

In Tab. 8, we compare the performance with different inserted positions of PFA.

Within a Transformer layer, the main components include the Multi-Head Self-Attention (MHSA) and the Feed-Forward Network (FFN).

Thus, we insert the PFA behind the FFN and the MHSA or on the side of the FFN and the MHSA, respectively.

We observe that the PFA inserted on the side of original components achieves better performance.

The main reason is that the stable structure of original components is not disturbed.

By introducing additional knowledge from special domains with our proposed PFA, the pre-trained knowledge can be better utilized.

| Methods | RGBNT201 | |||

| mAP | Rank-1 | Rank-5 | Rank-10 | |

| Side (FFN) | 73.2 | 76.4 | 85.2 | 88.2 |

| Behind (FFN) | 46.9 | 46.2 | 63.4 | 72.7 |

| Side (MHSA) | 66.3 | 67.2 | 79.7 | 84.2 |

| Behind (MHSA) | 52.0 | 52.3 | 66.4 | 72.8 |

| Methods | RGBNT201 | |||

| mAP | Rank-1 | Rank-5 | Rank-10 | |

| ViT (L) | 67.5 | 71.3 | 82.1 | 88.6 |

| ViT (F) | 67.6 | 70.2 | 83.1 | 87.4 |

| CLIP (L) | 78.9 | 83.4 | 89.8 | 91.9 |

| CLIP (F) | 78.7 | 83.5 | 89.6 | 92.9 |

Effect of the Pre-trained Backbone.

In fact, our framework can also change the backbone to ViT, which is pre-trained on the ImageNet.

Thus, we show the performance with different pre-trained backbones in Tab. 9.

Comparing the first line and the third line, we observe that CLIP (L) achieves better performance than ViT (L), which indicates the effectiveness of the CLIP pre-trained knowledge in multi-modal object ReID.

Meanwhile, ViT (F) achieves competitive performance, showcasing the effectiveness of our modules.

Comparison with CLIP-based TOP-ReID.

In Tab. 10, we compare our MambaPro with the CLIP-based TOP-ReID, with both models fully fine-tuned.

The results show that TOP-ReID with CLIP underperforms compared to our MambaPro.

Despite having nearly half the parameters, MambaPro achieves a 5.4% improvement in mAP and a 6.9% improvement in Rank-1.

These findings suggest that our MambaPro can better leverage CLIP’s knowledge.

| Methods | Params(M) | Pretrained | RGBNT201 | |||

| mAP | Rank-1 | Rank-5 | Rank-10 | |||

| TOP-ReID | 324.53 | CLIP | 73.3 | 77.2 | 85.9 | 90.1 |

| MambaPro | 160.34 | CLIP | 78.7 | 83.5 | 89.6 | 92.9 |

| Methods | Performance | Aggregation Complexity | ||||

| mAP | R-1 | R-5 | R-10 | FLOPs(G) | Params(M) | |

| MA(Trans) | 77.0 | 80.4 | 88.4 | 90.9 | 1.81 | 14.19 |

| MA(Mamba) | 78.9 | 83.4 | 89.8 | 91.9 | 1.42 | 9.47 |

Effect of Mamba Block in MA. To clarify, the complete MA module includes both intra-modal and inter-modal interactions. A fair comparison is shown in Tab. 6 between Row 3 (standard Transformer applied across all three modalities) and Row 5 (using Mamba blocks). Mamba achieves a 1% mAP increase with nearly half the FLOPs of the Transformer. To further enhance modeling capability, we add Intra-MA, and the final MA achieves a 3.4% mAP improvement with FLOPs and parameters comparable to the Transformer block. Additionally, in Tab. 11, we validate MA’s effectiveness by replacing Mamba blocks in both MA-inter and MA-intra with Transformers, denoted as MA(Trans), verifying the superiority of our proposed Mamba-based MA.

| Methods | RGBNT100 | Complexity | |||||||||

| CLIP(L) | CLIP(F) | PFA | SRP | MA | mAP | Rank-1 | Rank-5 | Rank-10 | Mem.(G) | Param.(M) | |

| A | ✓ | ✕ | ✕ | ✕ | ✕ | 17.9 | 40.9 | 50.5 | 56.0 | 21.79 | 0 |

| B | ✕ | ✓ | ✕ | ✕ | ✕ | 80.7 | 93.5 | 96.2 | 96.7 | 28.73 | 86.14 |

| C | ✓ | ✕ | ✓ | ✕ | ✕ | 81.6 | 93.9 | 95.0 | 95.6 | 28.21 | 28.34 |

| D | ✓ | ✕ | ✕ | ✓ | ✕ | 79.1 | 92.9 | 94.2 | 94.9 | 24.65 | 28.48 |

| E | ✓ | ✕ | ✓ | ✓ | ✕ | 82.0 | 94.0 | 95.3 | 95.8 | 32.05 | 56.82 |

| F | ✓ | ✕ | ✓ | ✓ | ✓ | 83.9 | 94.7 | 94.9 | 95.3 | 38.01 | 74.20 |

| G | ✕ | ✓ | ✓ | ✓ | ✓ | 83.1 | 94.1 | 94.9 | 95.6 | 45.36 | 160.34 |

Generalization to Vehicle Datasets

In Tab. 12, we validate the effectiveness of key components on the vehicle dataset RGBNT100. Here, the memory consumption is measured with a batch size of 128. Comparing Model A with Model B, we observe a remarkable improvement in mAP, showcasing the necessity of knowledge transfer. In Model C, PFA outperforms the fully fine-tuned CLIP, showcasing the effectiveness of parallel knowledge transfer. Meanwhile, Model D shows competitive performance, showing the effectiveness of SRP. Furthermore, through the combination of SRP and PFA, Model E achieves higher performance. Finally, with the MA module, Model F achieves the best performance. Similarly, we also compare our method with the fully fine-tuned CLIP, which has a much higher complexity. In contrast, our method achieves better performance with lower complexity, validating the effectiveness of our proposed method on vehicle datasets.

E. Visualization Results

In this part, we provide the Grad-CAM visualizations of . These features are the fully interacted features of different modalities after MA. To be specific, we visualize discriminative features of persons and vehicles in Fig. 10 and Fig. 11, respectively. As we can see, different modalities have different discriminative regions, which means the complementary information is effectively utilized. Meanwhile, in Fig. 10, the discriminative regions are consistent with the human perception, where fine-grained details like the head, clothes, shoes and bags are highlighted. In Fig. 11, we exhibit the samples with different visual scenarios and viewpoints. We observe that even certain modalities are completely invisible, the discriminative regions are still highlighted, showcasing the effectiveness of modality interaction. In conclusion, the Grad-CAM visualizations fully validate the effectiveness of our proposed method.