
MALI: A memory efficient and reverse accurate integrator for Neural ODEs

Abstract

Neural ordinary differential equations (Neural ODEs) are a new family of deep-learning models with continuous depth. However, the numerical estimation of the gradient in the continuous case is not well solved: existing implementations of the adjoint method suffer from inaccuracy in the reverse-time trajectory, while the naive method and the adaptive checkpoint adjoint method (ACA) have a memory cost that grows with integration time. In this project, based on the asynchronous leapfrog (ALF) solver, we propose the Memory-efficient ALF Integrator (MALI), which, like the adjoint method, has a constant memory cost w.r.t. the number of solver steps in integration, and which guarantees accuracy in the reverse-time trajectory (hence accuracy in gradient estimation). We validate MALI on various tasks: on image recognition tasks, to our knowledge, MALI is the first method to enable feasible training of a Neural ODE on ImageNet and to outperform a well-tuned ResNet, while existing methods fail due to either heavy memory burden or inaccuracy; for time-series modeling, MALI significantly outperforms the adjoint method; and for continuous generative models, MALI achieves new state-of-the-art performance. Code is available at https://github.com/juntang-zhuang/TorchDiffEqPack

1 Introduction

Recent research has built connections between continuous models and neural networks. The theory of dynamical systems has been applied to analyze the properties of neural networks or to guide their design (Weinan, 2017; Ruthotto & Haber, 2019; Lu et al., 2018). In these works, a residual block (He et al., 2016) is typically viewed as a one-step Euler discretization of an ODE; instead of directly analyzing the discretized neural network, it might be easier to analyze the ODE.

Another direction is the neural ordinary differential equation (Neural ODE) (Chen et al., 2018), which has continuous rather than discretized depth. The dynamics of a Neural ODE are typically approximated by numerical integration with adaptive ODE solvers. Neural ODEs have been applied to irregularly sampled time series (Rubanova et al., 2019), free-form continuous generative models (Grathwohl et al., 2018; Finlay et al., 2020), mean-field games (Ruthotto et al., 2020), stochastic differential equations (Li et al., 2020) and physically informed modeling (Sanchez-Gonzalez et al., 2019; Zhong et al., 2019).

Though the Neural ODE has been widely applied in practice, how to train it has not been extensively studied. The naive method directly backpropagates through an ODE solver, but tracking a continuous trajectory requires a large amount of memory. Chen et al. (2018) proposed to use the adjoint method to determine the gradient in the continuous case, which achieves a constant memory cost w.r.t. integration time; however, as pointed out by Zhuang et al. (2020), the adjoint method suffers from numerical errors due to inaccuracy in the reverse-time trajectory. Zhuang et al. (2020) proposed the adaptive checkpoint adjoint (ACA) method to achieve accurate gradient estimation at a much smaller memory cost than the naive method, yet the memory consumption of ACA still grows linearly with integration time. Because their memory cost is not constant, neither ACA nor the naive method is suitable for large-scale datasets (e.g. ImageNet) or high-dimensional Neural ODEs (e.g. FFJORD (Grathwohl et al., 2018)).

In this project, we propose the Memory-efficient Asynchronous Leapfrog Integrator (MALI) to combine the advantages of the adjoint method and ACA: a constant memory cost w.r.t. integration time and accuracy in the reverse-time trajectory. MALI is based on the asynchronous leapfrog (ALF) integrator (Mutze, 2013). With the ALF integrator, each numerical step forward in time is reversible. Therefore, MALI deletes the trajectory and keeps only the end-time states, hence achieving a constant memory cost w.r.t. integration time; using the reversibility, it accurately reconstructs the trajectory from the end-time value, hence achieving accurate gradients. Our contributions are:

1. We propose a new method (MALI) to solve Neural ODEs, which achieves a constant memory cost w.r.t. the number of solver steps in integration and accuracy in gradient estimation. We provide a theoretical analysis.

2. We validate our method with extensive experiments: (a) for image classification tasks, MALI enables a Neural ODE to achieve better accuracy than a well-tuned ResNet with the same number of parameters; to our knowledge, MALI is the first method to enable training of Neural ODEs on a large-scale dataset such as ImageNet, while existing methods fail due to either heavy memory burden or inaccuracy. (b) In time-series modeling, MALI achieves comparable or better results than other methods. (c) For generative modeling, an FFJORD model trained with MALI achieves new state-of-the-art results on MNIST and Cifar10.

2 Preliminaries

2.1 Numerical Integration Methods

An ordinary differential equation (ODE) typically takes the form

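$$\frac{dz(t)}{dt} = f_\theta(t, z(t)) \quad \text{s.t.} \quad z(t_0) = x, \quad t \in [t_0, T], \qquad \text{Loss} = L(z(T), y) \tag{1}$$

where $z(t)$ is the hidden state evolving with time, $T$ is the end time, $t_0$ is the start time (typically 0), and $x$ is the initial state. The derivative of $z(t)$ w.r.t. $t$ is defined by a function $f_\theta$, and $f_\theta$ is defined as a sequence of layers parameterized by $\theta$. The loss function is $L(z(T), y)$, where $y$ is the target variable. Eq. 1 is called the initial value problem (IVP) because only $z(t_0)$ is specified.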

Notations We summarize the notations following Zhuang et al. (2020).

- $z_i(t_i)$, $\bar{z}_i(\tau_i)$: hidden state in the forward/reverse-time trajectory at time $t_i$/$\tau_i$.
- $\psi_h(t_i, z_i)$: the numerical solution at time $t_i + h$, starting from $(t_i, z_i)$ with a stepsize $h$.
- $N_f$, $N_z$: $N_f$ is the number of layers in $f_\theta$ in Eq. 1, and $N_z$ is the dimension of $z$.
- $N_t$, $N_r$: number of discretized points (outer iterations in Algo. 1) in forward/reverse integration.
- $m$: average number of inner iterations in Algo. 1 to find an acceptable stepsize.

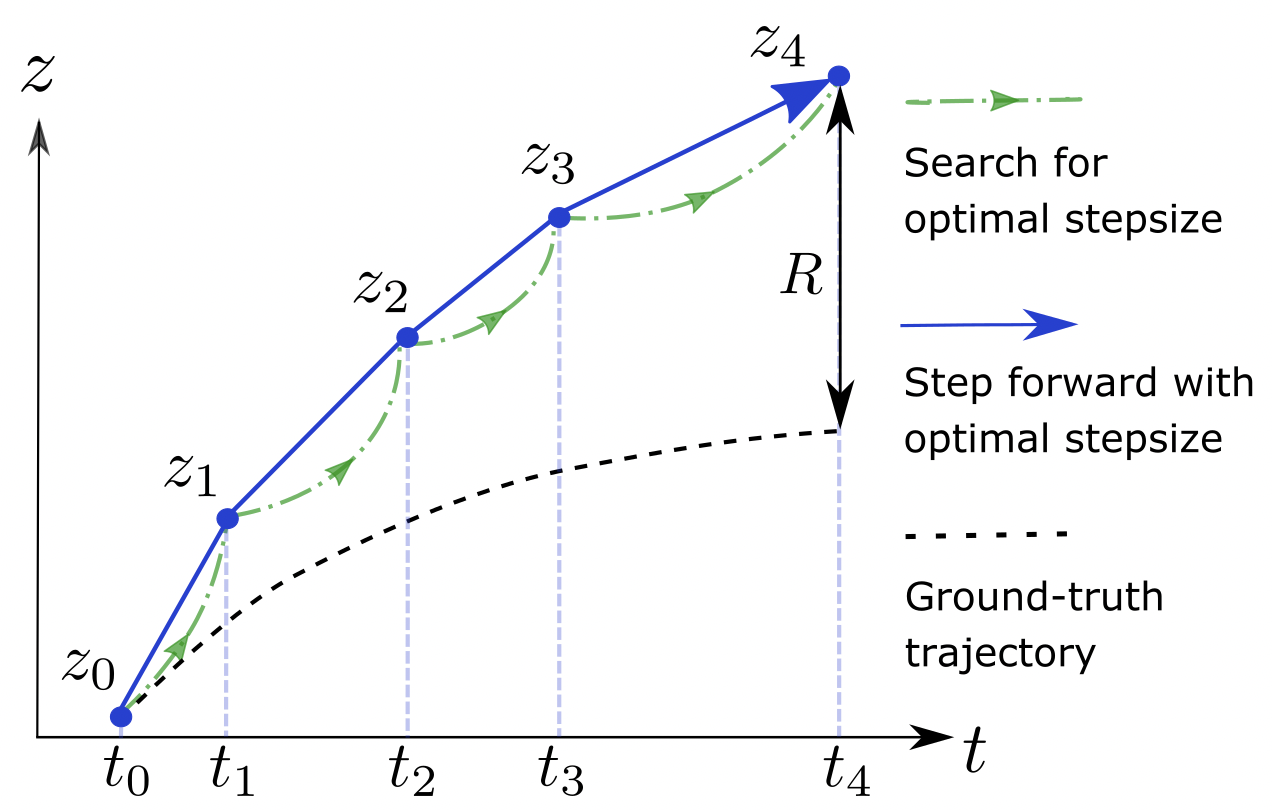

Numerical Integration The algorithm for general adaptive-stepsize numerical ODE solvers is summarized in Algo. 1 (Wanner & Hairer, 1996). The solver repeatedly advances in time by a step, which is the outer loop in Algo. 1 (blue curve in Fig. 1). For each step, the solver decreases the stepsize until the error estimate is lower than the tolerance, which is the inner loop in Algo. 1 (green curve in Fig. 1). For fixed-stepsize solvers, the inner loop is replaced with a single evaluation of $\psi_h(t, z)$ using a predefined stepsize $h$. Different methods use different $\psi$, for example different orders of the Runge-Kutta method (Runge, 1895).

2.2 Analytical form of gradient in continuous case

We first briefly introduce the analytical form of the gradient in the continuous case, then we compare different numerical implementations in the literature to estimate the gradient. The analytical form of the gradient in the continuous case is

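$$\frac{d\lambda(t)}{dt} + \left(\frac{\partial f_\theta(t, z(t))}{\partial z(t)}\right)^{\top} \lambda(t) = 0 \quad \forall t \in (t_0, T), \qquad \lambda(T) = \frac{\partial L}{\partial z(T)} \tag{2}$$

$$\frac{dL}{d\theta} = \int_{T}^{t_0} -\lambda(t)^{\top} \frac{\partial f_\theta(t, z(t))}{\partial \theta}\, dt \tag{3}$$

where $\lambda(t)$ is the "adjoint state". A detailed proof is given by Pontryagin (1962). In the next section we compare different numerical implementations of this analytical form.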

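Table 1: Comparison of the backward pass of different methods for gradient estimation in the continuous case.

| | Naive | Adjoint | ACA | MALI |
|---|---|---|---|---|
| Computation | $N_z \times N_f \times N_t \times m \times 2$ | $N_z \times N_f \times (N_t + N_r) \times m$ | $N_z \times N_f \times N_t \times (m + 1)$ | $N_z \times N_f \times N_t \times (m + 2)$ |
| Memory | $N_z \times N_f \times N_t \times m$ | $N_z \times N_f$ | $N_z \times (N_f + N_t)$ | $N_z \times (N_f + 1)$ |
| Computation graph depth | $N_f \times N_t \times m$ | $N_f \times N_r$ | $N_f \times N_t$ | $N_f \times N_t$ |
| Reverse accuracy | ✓ | ✗ | ✓ | ✓ |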
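To make the scaling in Table 1 concrete, the snippet below evaluates the cost expressions (constant factors ignored) for given $N_z$, $N_f$, $N_t$, $N_r$ and $m$. The function name and the example sizes are hypothetical; the point is only that doubling $N_t$ leaves the memory of the adjoint method and MALI unchanged, while the memory of the naive method and ACA grows.

```python
def table1_costs(Nz, Nf, Nt, Nr, m):
    """Backward-pass cost expressions from Table 1 (constant factors ignored)."""
    return {
        "naive": {
            "compute": Nz * Nf * Nt * m * 2,
            "memory": Nz * Nf * Nt * m,       # whole graph, including the stepsize search
            "depth": Nf * Nt * m,
        },
        "adjoint": {
            "compute": Nz * Nf * (Nt + Nr) * m,
            "memory": Nz * Nf,                # one evaluation of f_theta at a time
            "depth": Nf * Nr,
        },
        "aca": {
            "compute": Nz * Nf * Nt * (m + 1),
            "memory": Nz * (Nf + Nt),         # f_theta evaluation plus z(t_i) at each accepted step
            "depth": Nf * Nt,
        },
        "mali": {
            "compute": Nz * Nf * Nt * (m + 2),
            "memory": Nz * (Nf + 1),          # f_theta evaluation plus v_N
            "depth": Nf * Nt,
        },
    }


small = table1_costs(Nz=1000, Nf=10, Nt=20, Nr=20, m=3)
large = table1_costs(Nz=1000, Nf=10, Nt=40, Nr=40, m=3)
```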

2.3 Numerical implementations in the literature for the analytical form

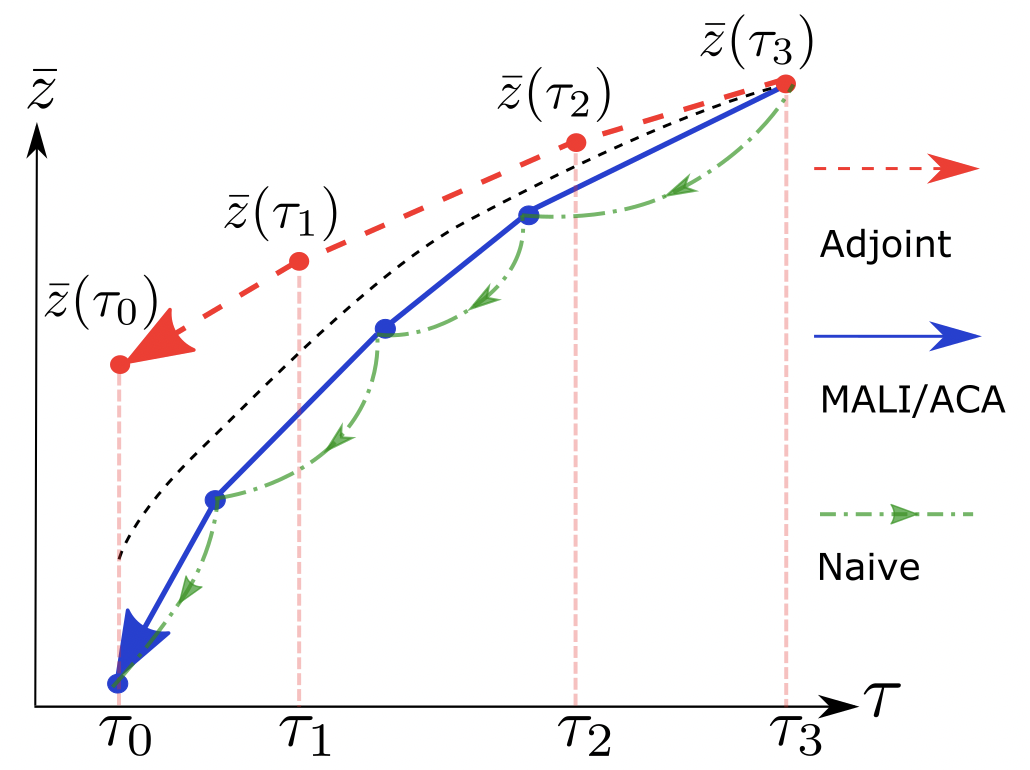

We compare different numerical implementations of the analytical form in this section. The forward and backward passes of different methods are illustrated in Fig. 1 and Fig. 2, respectively. The forward pass is similar for all methods; their backward passes are compared in Table 1. We explain the methods in the literature below.

Naive method The naive method saves the entire computation graph (including the search for the optimal stepsize, green curve in Fig. 2) in memory, and backpropagates through it. Hence the memory cost is $N_z \times N_f \times N_t \times m$, the depth of the computation graph is $N_f \times N_t \times m$, and the computation is doubled considering both forward and backward passes. Besides the large memory and computation cost, the deep computation graph might cause vanishing or exploding gradients (Pascanu et al., 2013).

Adjoint method Note that we use "adjoint state equation" to refer to the analytical form in Eq. 2 and 3, while we use "adjoint method" to refer to the numerical implementation by Chen et al. (2018). As shown in Fig. 1 and 2, the adjoint method forgets the forward-time trajectory (blue curve) to achieve a memory cost of $N_z \times N_f$, which is constant w.r.t. integration time; it takes the end-time state $z(T)$ (derived from forward-time integration) as the initial state, and solves a separate IVP (red curve) in reverse time.

Theorem 2.1.

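(Zhuang et al., 2020) For an ODE solver of order $p$, the error of the initial value reconstructed by the adjoint method is $\sum_{k=0}^{N-1}\left[ h_k^{p+1}\, D\Phi_{t_k}^{T}(z_k)\, l(t_k, z_k) + (-h_k)^{p+1}\, l\big(t_k, \Phi_{t_k}^{T}(z_k)\big) \right] + O(h^{p+1})$, where $\Phi$ is the ideal solution, $D\Phi$ is the Jacobian of $\Phi$, and $l(t, z)$ and $l(t, \Phi(z))$ are the local errors in forward-time and reverse-time integration respectively.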

Theorem 2.1 is stated as Theorem 3.2 in Zhuang et al. (2020); please see that paper for the detailed proof. To summarize, due to the inevitable errors of numerical ODE solvers, the reverse-time trajectory (red curve, $\bar{z}(\tau)$) cannot match the forward-time trajectory (blue curve, $z(t)$) accurately. The error in $\bar{z}$ propagates to $\lambda(t)$ through Eq. 2 and hence to $\frac{dL}{d\theta}$ through Eq. 3, which affects the accuracy of gradient estimation.
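As a small illustration of Theorem 2.1 (not an experiment from the paper), the sketch below integrates $dz/dt = -2z$ forward, discards the trajectory, and integrates back from $z(T)$ with the same solver and a negated stepsize, as the adjoint method does for its reverse-time IVP. The reconstructed initial value differs from $z(t_0)$ for both Euler and RK4; the higher-order solver only makes the gap smaller. The ODE, solvers and step count are illustrative assumptions.

```python
def euler_step(f, t, z, h):
    return z + h * f(t, z)


def rk4_step(f, t, z, h):
    k1 = f(t, z)
    k2 = f(t + h / 2, z + h / 2 * k1)
    k3 = f(t + h / 2, z + h / 2 * k2)
    k4 = f(t + h, z + h * k3)
    return z + h / 6 * (k1 + 2 * k2 + 2 * k3 + k4)


def reverse_reconstruction_error(step, f, z0, T, n):
    """Integrate forward, keep only z(T), integrate back with stepsize -h; return |z_bar(0) - z0|."""
    h = T / n
    z = z0
    for i in range(n):                     # forward-time trajectory (discarded)
        z = step(f, i * h, z, h)
    z_bar = z
    for i in range(n):                     # separate reverse-time IVP starting from z(T)
        z_bar = step(f, T - i * h, z_bar, -h)
    return abs(z_bar - z0)


def decay(t, z):
    return -2.0 * z


err_euler = reverse_reconstruction_error(euler_step, decay, 1.0, 1.0, 10)
err_rk4 = reverse_reconstruction_error(rk4_step, decay, 1.0, 1.0, 10)
```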

Adaptive checkpoint adjoint (ACA) To solve the inaccuracy of the adjoint method, Zhuang et al. (2020) proposed ACA. ACA stores the forward-time trajectory in memory for the backward pass, hence guarantees accuracy; ACA deletes the search process (green curve in Fig. 2) and only backpropagates through the accepted steps (blue curve in Fig. 2), hence has a shallower computation graph ($N_f \times N_t$ for ACA vs. $N_f \times N_t \times m$ for the naive method). ACA only stores $\{z(t_i)\}_{i=0}^{N_t}$ and deletes the computation graph for $\{f_\theta(t_i, z(t_i))\}_{i=0}^{N_t}$, hence its memory cost is $N_z \times (N_f + N_t)$. Though this memory cost is much smaller than that of the naive method, it grows linearly with $N_t$, so ACA cannot handle very high-dimensional models. In the following sections, we propose a method to overcome all these disadvantages of existing methods.

3 Methods

3.1 Asynchronous Leapfrog Integrator

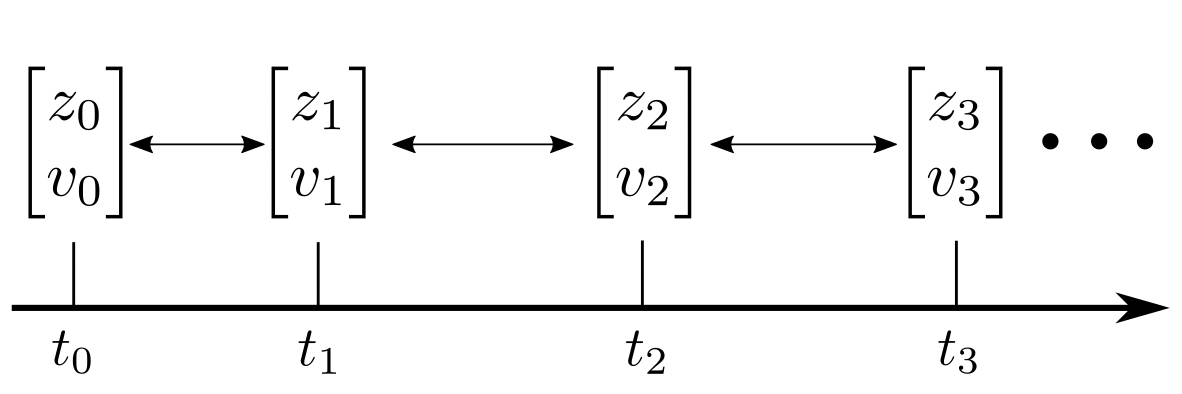

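In this section we give a brief introduction to the asynchronous leapfrog (ALF) method (Mutze, 2013), and we provide a theoretical analysis, which is missing in Mutze (2013). For general first-order ODEs in the form of Eq. 1, the tuple $(t, z)$ is sufficient for most ODE solvers to take a step numerically. For ALF, the required tuple is $(t, z, v)$, where $v$ is the "approximated derivative". Most numerical ODE solvers, such as the Runge-Kutta method (Runge, 1895), track the state $z$ evolving with time, while ALF tracks the "augmented state" $(z, v)$. We explain the details of ALF below.

Algorithm 2: Forward of $\psi$ in ALF

Input: $(z_{in}, v_{in}, s_{in}, h)$, where $s_{in}$ is the current time, $z_{in}$ and $v_{in}$ are the corresponding values at time $s_{in}$, and $h$ is the stepsize.

Forward:
$$\begin{aligned}
s_1 &= s_{in} + h/2 \\
k_1 &= z_{in} + v_{in} \times h/2 \\
u_1 &= f_\theta(s_1, k_1) \\
v_{out} &= v_{in} + 2(u_1 - v_{in}) \\
z_{out} &= k_1 + v_{out} \times h/2 \\
s_{out} &= s_1 + h/2
\end{aligned}$$

Output: $(z_{out}, v_{out}, s_{out}, h)$

Algorithm 3: Inverse of $\psi$ ($\psi^{-1}$) in ALF

Input: $(z_{out}, v_{out}, s_{out}, h)$, where $s_{out}$ is the current time, $z_{out}$ and $v_{out}$ are the corresponding values at $s_{out}$, and $h$ is the stepsize.

Inverse:
$$\begin{aligned}
s_1 &= s_{out} - h/2 \\
k_1 &= z_{out} - v_{out} \times h/2 \\
u_1 &= f_\theta(s_1, k_1) \\
v_{in} &= 2u_1 - v_{out} \\
z_{in} &= k_1 - v_{in} \times h/2 \\
s_{in} &= s_1 - h/2
\end{aligned}$$

Output: $(z_{in}, v_{in}, s_{in}, h)$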

Procedure of ALF Different ODE solvers have different $\psi$ in Algo. 1, hence we only summarize $\psi$ for ALF, in Algo. 2; for a complete integration algorithm with ALF, Algo. 2 is plugged into Algo. 1. Given stepsize $h$ and input $(z_{in}, v_{in}, s_{in})$, a single step of ALF outputs $(z_{out}, v_{out}, s_{out})$.
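The forward step (Algo. 2) and its inverse (Algo. 3) translate directly into code. The sketch below assumes a scalar state and a Python callable `f(t, z)` standing in for $f_\theta$; for vector states the same lines apply element-wise. It is an illustration under these assumptions, not the TorchDiffEqPack implementation.

```python
import math


def alf_forward(f, z_in, v_in, s_in, h):
    """Algo. 2: one ALF step (z_in, v_in, s_in) -> (z_out, v_out, s_out)."""
    s1 = s_in + h / 2
    k1 = z_in + v_in * h / 2
    u1 = f(s1, k1)
    v_out = v_in + 2 * (u1 - v_in)
    z_out = k1 + v_out * h / 2
    s_out = s1 + h / 2
    return z_out, v_out, s_out


def alf_inverse(f, z_out, v_out, s_out, h):
    """Algo. 3: recover (z_in, v_in, s_in) from the output of alf_forward, without storing the input."""
    s1 = s_out - h / 2
    k1 = z_out - v_out * h / 2
    u1 = f(s1, k1)
    v_in = 2 * u1 - v_out
    z_in = k1 - v_in * h / 2
    s_in = s1 - h / 2
    return z_in, v_in, s_in


def dynamics(t, z):                   # hypothetical nonlinear, time-dependent f
    return math.sin(t) - z ** 3


z0 = 0.7
v0 = dynamics(0.0, z0)                # initial value v0 = f(t0, z0)
z1, v1, t1 = alf_forward(dynamics, z0, v0, 0.0, 0.3)
z0_rec, v0_rec, t0_rec = alf_inverse(dynamics, z1, v1, t1, 0.3)
```

The inverse recomputes $u_1$ from the output alone, which is why no intermediate value of the step has to be kept; the recovered input matches the original up to floating-point round-off.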

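As in Fig. 3, given $(z_0, v_0, t_0)$, the numerical forward-time integration calls Algo. 2 iteratively:

$$(z_i, v_i) = \psi_{h_i}(t_{i-1}, z_{i-1}, v_{i-1}), \quad h_i = t_i - t_{i-1}, \quad i = 1, 2, \dots, N_t \tag{4}$$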

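Invertibility of ALF An interesting property of ALF is that $\psi$ defines a bijective mapping; therefore, we can reconstruct $(z_{in}, v_{in}, s_{in})$ from $(z_{out}, v_{out}, s_{out})$, as shown in Algo. 3. As in Fig. 3, we can reconstruct the entire trajectory given the state $(z_{N_t}, v_{N_t})$ at time $T = t_{N_t}$ and the discretized time points $\{t_0, \dots, t_{N_t}\}$. For example, given $(z_{N_t}, v_{N_t})$ and $\{t_i\}_{i=0}^{N_t}$, the trajectory of Eq. 4 is reconstructed as:

$$(z_{i-1}, v_{i-1}) = \psi^{-1}_{h_i}(t_i, z_i, v_i), \quad h_i = t_i - t_{i-1}, \quad i = N_t, N_t - 1, \dots, 1 \tag{5}$$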

In the following sections, we show that the invertibility of ALF is the key to maintaining accuracy at a constant memory cost when training Neural ODEs. Note that "inverse" refers to reconstructing the input from the output without computing the gradient, and is therefore different from "back-propagation".
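Composing the inverse step backward over the stored time points rebuilds the whole trajectory from the end-time state, as in Eq. 5. The sketch below uses the equivalent compact form of one ALF step, $z_{out} = z_{in} + h\,u_1$ and $v_{out} = 2u_1 - v_{in}$ with $u_1 = f(s_{in} + h/2,\; z_{in} + \frac{h}{2} v_{in})$ (obtained by substituting $k_1$ and $v_{out}$ into the update for $z_{out}$), and a fixed, hypothetical time grid instead of adaptively chosen points. After the forward pass, only $(z_{N_t}, v_{N_t})$ and the time points are kept.

```python
import math


def alf_step(f, t, z, v, h):
    """Compact ALF forward step from time t to t + h."""
    u1 = f(t + h / 2, z + h / 2 * v)
    return z + h * u1, 2 * u1 - v


def alf_step_inverse(f, t_out, z, v, h):
    """Compact ALF inverse step from time t_out back to t_out - h."""
    u1 = f(t_out - h / 2, z - h / 2 * v)
    return z - h * u1, 2 * u1 - v


def integrate(f, z0, times):
    """Forward pass that keeps only the end-time augmented state (z_N, v_N)."""
    z, v = z0, f(times[0], z0)               # initial value: v0 = f(t0, z0)
    for t_prev, t_next in zip(times[:-1], times[1:]):
        z, v = alf_step(f, t_prev, z, v, t_next - t_prev)
    return z, v


def reconstruct(f, zT, vT, times):
    """Rebuild [(z_0, v_0), ..., (z_N, v_N)] from the end-time state, as in Eq. 5."""
    states = [(zT, vT)]
    z, v = zT, vT
    for t_prev, t_next in zip(reversed(times[:-1]), reversed(times[1:])):
        z, v = alf_step_inverse(f, t_next, z, v, t_next - t_prev)
        states.append((z, v))
    return states[::-1]                      # ordered from t_0 to t_N


def forcing(t, z):                           # hypothetical example dynamics
    return math.cos(t) - 0.5 * z


grid = [0.1 * i for i in range(21)]          # hypothetical fixed time grid on [0, 2]
zT, vT = integrate(forcing, 1.0, grid)
trajectory = reconstruct(forcing, zT, vT, grid)
```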

Initial value For an initial value problem (IVP) such as Eq. 1, typically $z_0 = z(t_0)$ is given while $v_0$ is undetermined. We can construct $v_0 = f_\theta(t_0, z_0)$, so the initial augmented state is $(z_0, v_0)$.

Difference from the midpoint integrator The midpoint integrator (Süli & Mayers, 2003) is similar to Algo. 2, except that it recomputes $v_{in} = f_\theta(s_{in}, z_{in})$ at every step, while ALF directly uses the input $v_{in}$. Therefore, the midpoint method does not have an explicit inverse.

Local truncation error Theorem 3.1 indicates that the local truncation error of ALF is of order $O(h^3)$; this implies that the global error is $O(h^2)$. A detailed proof is in Appendix A.3.

Theorem 3.1.

For a single step in ALF with stepsize $h$, the local truncation error of $z$ is $O(h^3)$, and the local truncation error of $v$ is $O(h^2)$.
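Theorem 3.1 can be checked empirically. Starting one ALF step from the exact state of $dz/dt = -z$ (with $v$ set to the exact derivative), halving $h$ should shrink the error of $z$ by about $2^3$ and the error of $v$ by about $2^2$. The sketch below estimates these observed orders; the test problem and stepsize are illustrative choices.

```python
import math


def alf_step(f, t, z, v, h):
    """Compact ALF step: z_out = z + h*u1, v_out = 2*u1 - v with u1 = f(t + h/2, z + h/2*v)."""
    u1 = f(t + h / 2, z + h / 2 * v)
    return z + h * u1, 2 * u1 - v


def local_errors(h):
    """Errors of a single ALF step on dz/dt = -z, started from the exact state at t = 0."""
    def f(t, z):
        return -z

    z0 = 1.0
    v0 = f(0.0, z0)                        # exact derivative at t = 0
    z1, v1 = alf_step(f, 0.0, z0, v0, h)
    return abs(z1 - math.exp(-h)), abs(v1 + math.exp(-h))   # exact v(h) = -exp(-h)


def observed_orders(h=0.01):
    """log2 of the error ratio when h is halved: about 3 for z and about 2 for v."""
    ez, ev = local_errors(h)
    ez_half, ev_half = local_errors(h / 2)
    return math.log2(ez / ez_half), math.log2(ev / ev_half)
```

With the default $h = 0.01$, the two observed orders come out close to 3 and 2 respectively, matching the $O(h^3)$ and $O(h^2)$ local errors.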

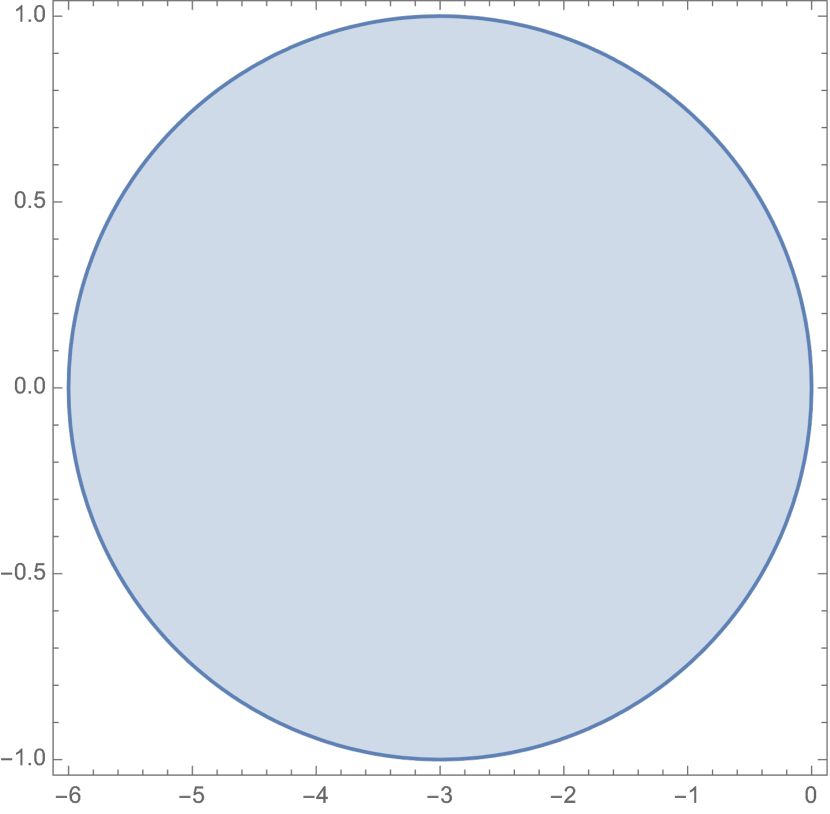

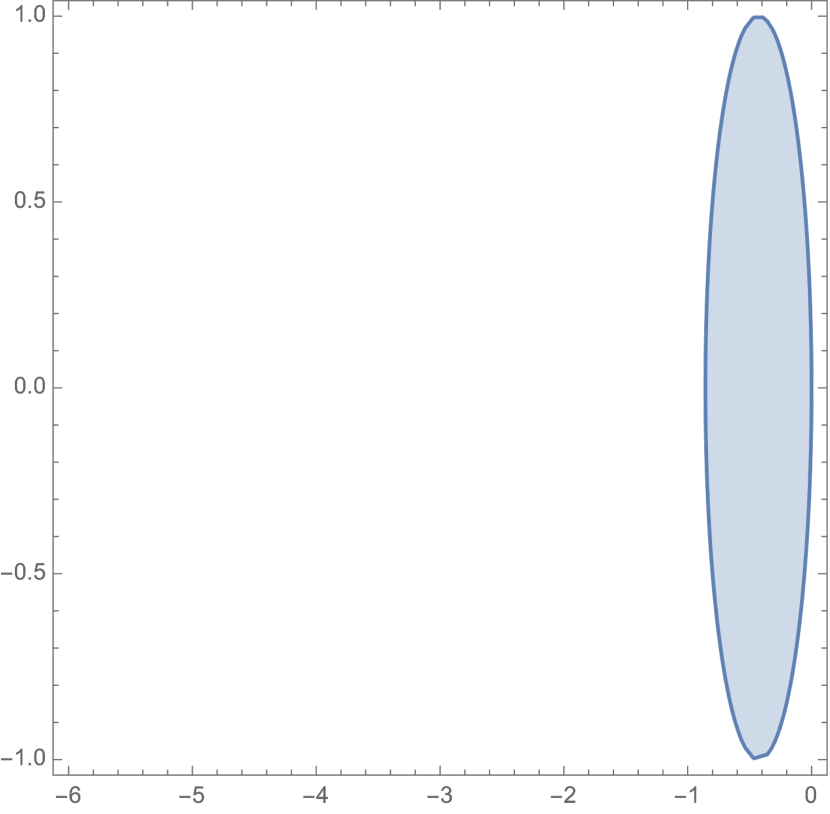

A-Stability The ALF solver has a limited stability region, but this can be remedied with damping. The damped ALF replaces the update of $v_{out}$ in Algo. 2 with $v_{out} = v_{in} + 2\eta(u_1 - v_{in})$, where $\eta$ is the "damping coefficient" between 0 and 1. We have the following theorem on its numerical stability.

Theorem 3.2.

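Consider the damped ALF integrator with stepsize $h$, and let $\sigma_i$ be the $i$-th eigenvalue of the Jacobian $\frac{\partial f_\theta}{\partial z}$. The solver is A-stable if

$$\left| 1 + \eta(h\sigma_i - 1) \pm \sqrt{\eta\left[2h\sigma_i + \eta(h\sigma_i - 1)^2\right]} \right| < 1, \quad \forall i.$$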

The proof is in Appendix A.4 and A.5. Theorem 3.2 implies the following: when $\eta = 1$, the damped ALF reduces to ALF and the stability region is empty; when $0 < \eta < 1$, the stability region is non-empty. However, stability describes the behaviour as $T$ goes to infinity; in practice we always use a bounded $T$, and ALF performs well. The inverse of the damped ALF is given in Appendix A.5.
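The stability statement can also be checked numerically. Assuming the damped update $v_{out} = v_{in} + 2\eta(u_1 - v_{in})$ and the linear test problem $f(t, z) = \sigma z$, one damped step is a linear map on $(z, v)$. The sketch below builds its 2x2 matrix by applying the step to two basis vectors and returns the largest eigenvalue magnitude; the step is stable when this is below 1. For $\eta = 1$ the determinant of this matrix works out to $-1$, so at least one eigenvalue has magnitude at least 1, consistent with the empty stability region of plain ALF. The function names are hypothetical.

```python
import cmath


def damped_alf_step(f, t, z, v, h, eta):
    """One damped ALF step; eta = 1 recovers the plain ALF update of Algo. 2."""
    k1 = z + v * h / 2
    u1 = f(t + h / 2, k1)
    v_out = v + 2 * eta * (u1 - v)
    z_out = k1 + v_out * h / 2
    return z_out, v_out


def spectral_radius(h_sigma, eta, h=1.0):
    """Largest |eigenvalue| of the one-step map for the linear test problem dz/dt = sigma * z."""
    sigma = h_sigma / h

    def f(t, z):
        return sigma * z

    a, c = damped_alf_step(f, 0.0, 1.0, 0.0, h, eta)   # image of (z, v) = (1, 0)
    b, d = damped_alf_step(f, 0.0, 0.0, 1.0, h, eta)   # image of (z, v) = (0, 1)
    trace, det = a + d, a * d - b * c
    disc = cmath.sqrt(trace * trace / 4 - det)
    return max(abs(trace / 2 + disc), abs(trace / 2 - disc))
```

For example, with $h\sigma = -0.5$ the radius exceeds 1 for $\eta = 1$ but is below 1 for $\eta = 0.5$ or $\eta = 0.7$.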

3.2 Memory-efficient ALF Integrator (MALI) for gradient estimation

An ideal solver for Neural ODEs should achieve two goals: accuracy in gradient estimation and constant memory cost w.r.t integration time. Yet none of the existing methods can achieve both goals. We propose a method based on the ALF solver, which to our knowledge is the first method to achieve the two goals simultaneously.

Procedure of MALI Details of MALI are summarized in Algo. 4. For the forward-pass, we only keep the end-time state and the accepted discretized time points (blue curves in Fig. 1 and 2). We ignore the search process for optimal stepsize (green curve in Fig. 1 and 2), and delete other variables to save memory. During the backward pass, we can reconstruct the forward-time trajectory as in Eq. 5, then calculate the gradient by numerical discretization of Eq. 2 and Eq. 3.
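The backward pass described above can be sketched on the scalar problem $dz/dt = a z$ with loss $L = z(T)^2$, assuming fixed stepsizes (so the time points are implicit) and hand-written chain rule in place of an autograd library; all function names are hypothetical. Each backward iteration first inverts one ALF step to recover the previous state, then backpropagates through that single accepted step. The result is the exact gradient of the discretized forward pass, so it can be checked against finite differences, and against the analytic value $2 T z_0^2 e^{2aT}$ up to discretization error.

```python
import math


def forward(a, z0, T, n):
    """Forward pass for dz/dt = a*z with fixed-step ALF; keeps only (z_N, v_N)."""
    h = T / n
    z, v = z0, a * z0                     # initial augmented state, v0 = f(z0)
    for _ in range(n):
        u1 = a * (z + h / 2 * v)          # u1 = f(k1)
        z, v = z + h * u1, 2 * u1 - v     # ALF step in compact form
    return z, v


def mali_backward(a, z0, T, n):
    """Return (L, dL/da) for L = z_N**2, reconstructing each state with the ALF inverse."""
    h = T / n
    z, v = forward(a, z0, T, n)           # only the end-time state is stored
    loss = z ** 2
    gz, gv, ga = 2 * z, 0.0, 0.0          # adjoints w.r.t. z_N, v_N and a
    for _ in range(n):
        # 1) Inverse step: recover (z_{i-1}, v_{i-1}) from (z_i, v_i).
        k1 = z - h / 2 * v
        u1 = a * k1
        v_prev = 2 * u1 - v
        z_prev = k1 - h / 2 * v_prev
        # 2) Backprop through z_i = k1 + h/2*v_i, v_i = 2*a*k1 - v_{i-1}, k1 = z_{i-1} + h/2*v_{i-1}.
        gv_total = gv + h / 2 * gz        # v_i reaches L directly and through z_i
        gk1 = gz + 2 * a * gv_total       # k1 reaches z_i directly and through u1 -> v_i
        ga += 2 * k1 * gv_total           # u1 = a * k1
        gz, gv = gk1, -gv_total + h / 2 * gk1
        z, v = z_prev, v_prev
    ga += gv * z0                         # v0 = a * z0 also depends on a
    return loss, ga


def analytic_grad(a, z0, T):
    return 2 * T * z0 ** 2 * math.exp(2 * a * T)
```

The loop only holds the current $(z, v)$ and the running adjoints, so its memory does not grow with the number of steps $n$; the price is one extra evaluation of $f$ per step for the inverse.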

Constant memory cost w.r.t. the number of solver steps in integration We delete the computation graph and only keep the end-time state to save memory. The memory cost is $N_z(N_f + 1)$, where $N_z N_f$ is due to evaluating $f_\theta$ and is irreducible for all methods. Compared with the adjoint method, MALI only requires an extra $N_z$ memory to record $v_{N_t}$, and likewise has a constant memory cost w.r.t. the number of time steps $N_t$.

Accuracy Our method guarantees the accuracy of the reverse-time trajectory (e.g. the blue curve in Fig. 2 matches the blue curve in Fig. 1), because ALF is explicitly invertible for a free-form $f_\theta$ (see Algo. 3). Therefore, the gradient estimation in MALI is more accurate than that of the adjoint method.

Computation cost Recall that on average it takes $m$ steps to find an acceptable stepsize whose error estimate is below the tolerance. Therefore, the forward pass with the search process has a computation burden of $N_z \times N_f \times N_t \times m$. Since we only reconstruct and backpropagate through the accepted steps and ignore the search process, the backward pass takes another $N_z \times N_f \times N_t \times 2$ computation. The overall computation burden is $N_z \times N_f \times N_t \times (m + 2)$, as in Table 1.

Shallow computation graph Similar to ACA, MALI only backpropagates through the accepted steps (blue curve in Fig. 2) and ignores the search process (green curve in Fig. 2), hence the depth of the computation graph is $N_f \times N_t$. The computation graph of MALI is much shallower than that of the naive method, hence MALI is more robust to vanishing and exploding gradients (Pascanu et al., 2013).

Summary The adjoint method suffers from inaccuracy in the reverse-time trajectory, the naive method suffers from exploding or vanishing gradients caused by its deep computation graph, and ACA strikes a balance, but its memory grows linearly with integration time. MALI achieves accuracy in the reverse-time trajectory, constant memory w.r.t. integration time, and a shallow computation graph.

4 Experiments

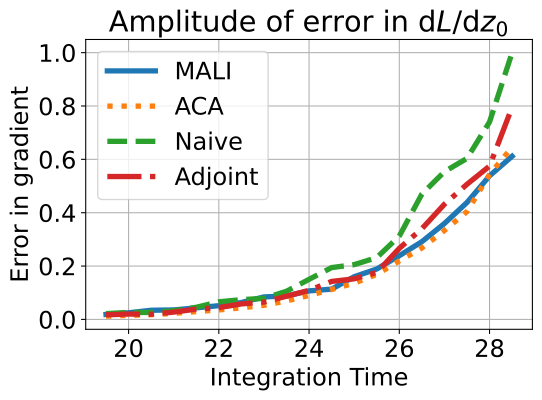

4.1 Validation on a toy example

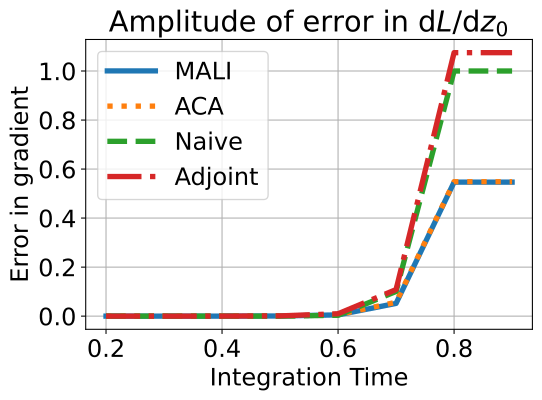

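We compare the performance of different methods on a toy example, defined as

$$\frac{dz(t)}{dt} = \alpha z(t), \quad z(0) = z_0, \qquad L(z(T)) = z(T)^2 \tag{6}$$

The analytical solution is

$$z(t) = z_0 e^{\alpha t}, \qquad \frac{dL}{dz_0} = 2 z_0 e^{2\alpha T}, \qquad \frac{dL}{d\alpha} = 2 T z_0^2 e^{2\alpha T} \tag{7}$$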

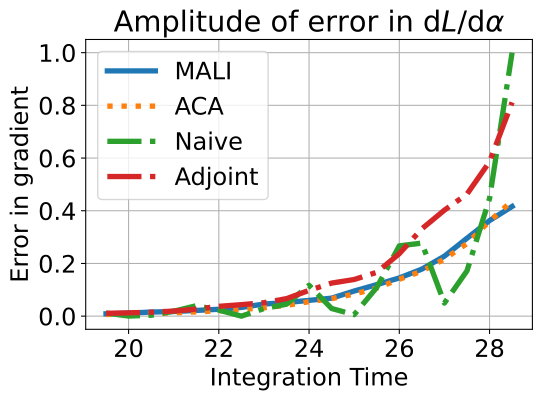

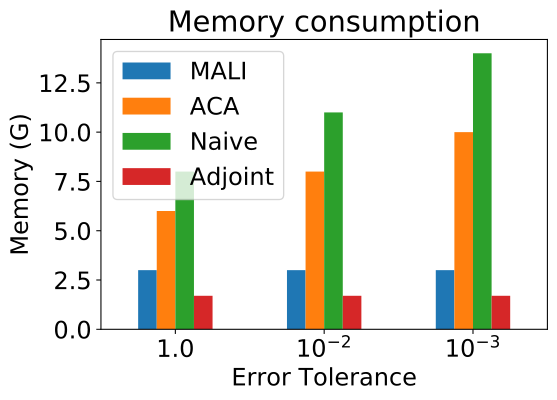

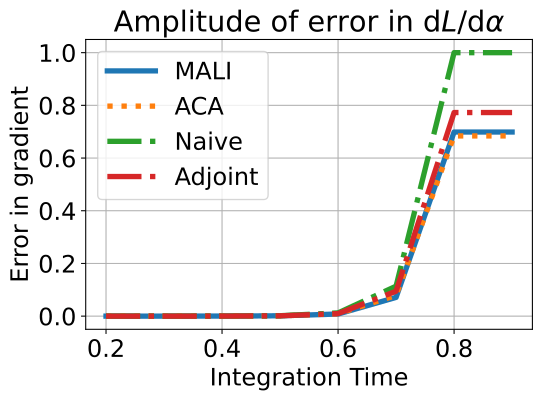

We plot the magnitude of the error between the numerical and analytical solutions as a function of $T$ (integrated under the same error tolerance) in Fig. 4. ACA and MALI have similar errors, both outperforming the other methods. We also plot the memory consumption of different methods on a Neural ODE with the same input in Fig. 4. As the error tolerance decreases, the solver evaluates more steps, hence the naive method and ACA increase their memory consumption, while MALI and the adjoint method have a constant memory cost. These results validate our analysis in Sec. 3.2 and Table 1, and show that MALI achieves accuracy at a constant memory cost.

Table 2: Top-1 accuracy (%) on ImageNet when the same trained model is evaluated with different solvers, without re-training.

| Model | Fixed-stepsize solver | Stepsize 1 | 0.5 | 0.25 | 0.15 | 0.1 | Adaptive-stepsize solver | Tolerance 1.00E+00 | 1.00E-01 | 1.00E-02 |
|---|---|---|---|---|---|---|---|---|---|---|
| Neural ODE | MALI | 42.33 | 66.4 | 69.59 | 70.17 | 69.94 | MALI | 62.56 | 69.89 | 69.87 |
| Neural ODE | Euler | 21.94 | 61.25 | 67.38 | 68.69 | 70.02 | Heun-Euler | 68.48 | 69.87 | 69.88 |
| Neural ODE | RK2 | 42.33 | 69 | 69.72 | 70.14 | 69.92 | RK23 | 50.77 | 69.89 | 69.93 |
| Neural ODE | RK4 | 12.6 | 69.99 | 69.91 | 70.21 | 69.96 | Dopri5 | 52.3 | 68.58 | 69.71 |
| ResNet | | 70.09 | | | | | | | | |

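Table 3: Top-1 accuracy (%) on ImageNet under FGSM attack. Rows give the solver used to derive the attack gradient; columns give the solver used for inference on the perturbed images, for two perturbation amplitudes (left and right groups of four columns).

| Model | Gradient solver | MALI | Heun-Euler | RK23 | Dopri5 | MALI | Heun-Euler | RK23 | Dopri5 |
|---|---|---|---|---|---|---|---|---|---|
| Neural ODE | MALI | 14.69 | 14.72 | 14.77 | 15.71 | 10.38 | 10.46 | 10.62 | 10.62 |
| Neural ODE | Heun-Euler | 14.77 | 14.75 | 14.80 | 15.74 | 10.63 | 10.47 | 10.44 | 10.49 |
| Neural ODE | RK23 | 14.82 | 14.77 | 14.79 | 15.69 | 10.78 | 10.53 | 10.48 | 10.56 |
| Neural ODE | Dopri5 | 14.82 | 14.78 | 14.79 | 15.15 | 10.76 | 10.49 | 10.48 | 10.51 |
| ResNet | | 13.02 | | | | 9.57 | | | |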

4.2 Image recognition with Neural ODE

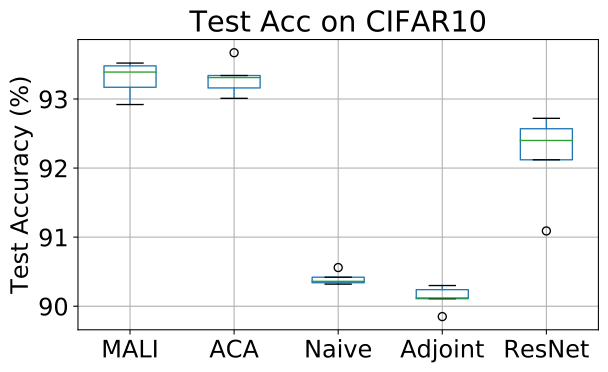

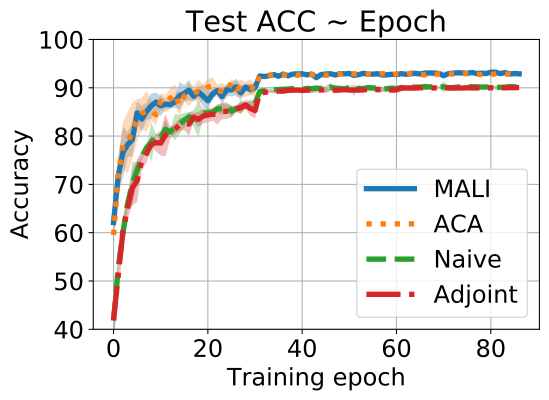

We validate MALI on image recognition tasks using the Cifar10 and ImageNet datasets. Similar to Zhuang et al. (2020), we modify a ResNet18 into its corresponding Neural ODE: the forward functions are $y = x + f_\theta(x)$ and $y = x + \int_0^T f_\theta(z(t))\,dt$ (with $z(0) = x$) for the residual block and the Neural ODE respectively, where the same $f_\theta$ is shared. We compare MALI with the naive method, the adjoint method and ACA.
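To make the two forward functions concrete, the sketch below uses a hypothetical scalar stand-in for $f_\theta$ (a single tanh unit, not the ResNet18 block) and approximates the integral with fixed-step Euler for simplicity; the experiments themselves use MALI as the solver. With a single step of size $T = 1$, the Neural ODE forward reduces exactly to the residual block, which is the one-step Euler view of ResNet (Haber & Ruthotto, 2017).

```python
import math


def f_theta(z, w=0.8, b=0.1):
    """Hypothetical scalar stand-in for the shared block f_theta."""
    return math.tanh(w * z + b)


def residual_block(x):
    """ResNet forward: y = x + f_theta(x)."""
    return x + f_theta(x)


def neural_ode_block(x, T=1.0, n_steps=100):
    """Neural ODE forward: y = x + integral_0^T f_theta(z(t)) dt with z(0) = x, via fixed-step Euler."""
    h = T / n_steps
    z = x
    for _ in range(n_steps):
        z = z + h * f_theta(z)
    return z
```

Increasing `n_steps` makes `neural_ode_block` converge to the continuous solution, whereas `residual_block` is fixed to one step of size 1.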

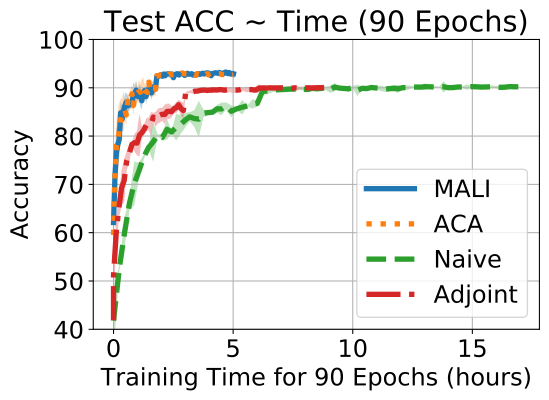

Results on Cifar10 Results of 5 independent runs on Cifar10 are summarized in Fig. 5. MALI achieves accuracy comparable to ACA, and both significantly outperform the naive and the adjoint methods. Furthermore, the training speed of MALI is similar to that of ACA, and both are almost two times faster than the adjoint method and three times faster than the naive method. This validates our analysis of accuracy and computation burden in Table 1.

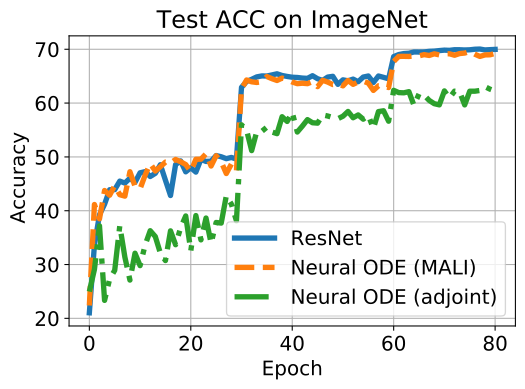

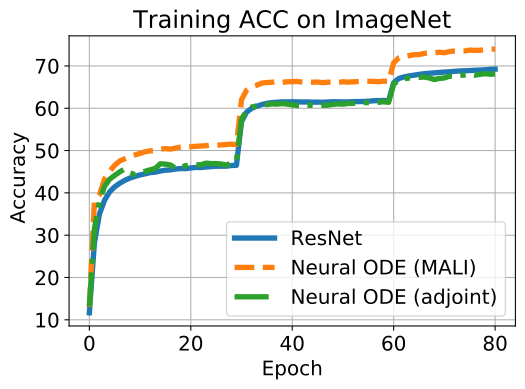

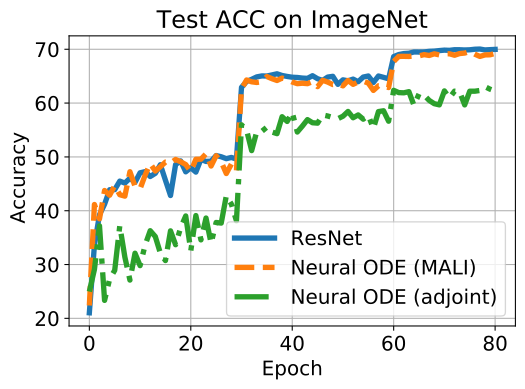

Accuracy on ImageNet Due to the heavy memory burden caused by large images, the naive method and ACA are unable to train a Neural ODE on ImageNet with 4 GPUs; only MALI and the adjoint method are feasible, thanks to their constant memory cost. We also compare the Neural ODE to a standard ResNet. As shown in Fig. 6, the accuracy of the Neural ODE trained with MALI closely follows ResNet, and significantly outperforms the adjoint method (top-1 validation accuracy: 70% vs. 63%).

Invariance to discretization scheme A continuous model should be invariant to the discretization scheme (e.g. different types of ODE solvers) as long as the discretization is sufficiently accurate. We test the Neural ODE using different solvers without re-training; since ResNet is often viewed as a one-step Euler discretization of an ODE (Haber & Ruthotto, 2017), we perform similar experiments on ResNet. As shown in Table 2, the Neural ODE consistently achieves high accuracy (about 70%), while ResNet drops to random guessing (0.1%), because ResNet as a one-step Euler discretization fails to be a meaningful dynamical system (Queiruga et al., 2020).

Robustness to adversarial attack Hanshu et al. (2019) demonstrated that Neural ODEs are more robust to adversarial attacks than ResNets on small-scale datasets such as Cifar10. We validate this result on the large-scale ImageNet dataset. The top-1 accuracy of the Neural ODE and ResNet under the FGSM attack (Goodfellow et al., 2014) is summarized in Table 3. For the Neural ODE, thanks to its invariance to the discretization scheme, we derive the gradient for the attack using one solver (row in Table 3) and run inference on the perturbed images using various solvers. For all combinations of solvers and perturbation amplitudes, the Neural ODE consistently outperforms ResNet.
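FGSM (Goodfellow et al., 2014) perturbs an input by $\epsilon\,\mathrm{sign}(\nabla_x L)$. The sketch below applies it to a hypothetical toy logistic classifier in pure Python, only to show the update rule; in the experiment above, $\nabla_x L$ for the Neural ODE is computed with one solver and the perturbed images are then classified with possibly another solver. Clipping to the valid pixel range, which is usually applied to images, is omitted here.

```python
import math


def fgsm(x, grad_x, epsilon):
    """FGSM: x_adv = x + epsilon * sign(dL/dx), applied element-wise."""
    def sign(g):
        return (g > 0) - (g < 0)
    return [xi + epsilon * sign(gi) for xi, gi in zip(x, grad_x)]


def logistic_loss_and_grad(x, w, y):
    """Hypothetical toy classifier p = sigmoid(w . x); returns cross-entropy loss and dL/dx."""
    logit = sum(wi * xi for wi, xi in zip(w, x))
    p = 1.0 / (1.0 + math.exp(-logit))
    loss = -(y * math.log(p) + (1 - y) * math.log(1 - p))
    grad_x = [(p - y) * wi for wi in w]
    return loss, grad_x
```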

Summary In image recognition tasks, we demonstrate that the Neural ODE is accurate, invariant to the discretization scheme, and more robust to adversarial attacks than ResNet. A detailed explanation of the robustness of Neural ODEs is beyond the scope of this paper; to our knowledge, MALI is the first method to enable training of Neural ODEs on large datasets, thanks to its constant memory cost.

4.3 Time-series modeling

We apply MALI to latent-ODE (Rubanova et al., 2019) and the Neural Controlled Differential Equation (Neural CDE) (Kidger et al., 2020a; b). Our experiments are based on the official implementations from the literature. We report the mean squared error (MSE) on the Mujoco test set, which is generated from the "Hopper" model using the DeepMind control suite (Tassa et al., 2018), in Table 4; for all experiments with different ratios of training data, MALI achieves MSE similar to ACA, and both outperform the adjoint and naive methods. We report the test accuracy on the Speech Commands dataset for Neural CDE in Table 5; MALI achieves a higher accuracy than competing methods.

4.4 Continuous generative models

We apply MALI to FFJORD (Grathwohl et al., 2018), a free-form continuous generative model, and compare with several variants in the literature (Finlay et al., 2020; Kidger et al., 2020a). Our experiment is based on the official implementation of Finlay et al. (2020); for a fair comparison, we train with MALI and test with the same solver as in the literature (Grathwohl et al., 2018; Finlay et al., 2020), namely the Dopri5 solver from the torchdiffeq package (Chen et al., 2018). Bits per dim (BPD, lower is better) on the validation set for various datasets are reported in Table 6. Among continuous models, MALI consistently achieves the lowest BPD, and outperforms the vanilla FFJORD (trained with the adjoint method), RNODE (regularized FFJORD) and the SemiNorm Adjoint (Kidger et al., 2020a). Furthermore, FFJORD trained with MALI achieves BPD comparable to state-of-the-art discrete-layer flow models in the literature. Please see Sec. B.3 for generated samples.

5 Related works

Besides ALF, symplectic integrators (Verlet, 1967; Yoshida, 1990) are also able to reconstruct the trajectory accurately, yet they are typically restricted to second-order Hamiltonian systems (De Almeida, 1990) and are unsuitable for general ODEs. Besides the aforementioned methods, there are other methods for gradient estimation, such as the interpolated adjoint (Daulbaev et al., 2020) and the spectral method (Quaglino et al., 2019), yet their implementations are involved and not publicly available. Other works focus on the theoretical properties of Neural ODEs (Dupont et al., 2019; Tabuada & Gharesifard, 2020; Massaroli et al., 2020). Neural ODEs have recently been applied to stochastic differential equations (Li et al., 2020), jump differential equations (Jia & Benson, 2019) and auto-regressive models (Wehenkel & Louppe, 2019).

Superscripts in Tables 4 to 6 indicate the source of each reported result: 1. Rubanova et al. (2019); 2. Zhuang et al. (2020); 3. Kidger et al. (2020a); 4. Chen et al. (2018); 5. Finlay et al. (2020); 6. Dinh et al. (2016); 7. Behrmann et al. (2019); 8. Kingma & Dhariwal (2018); 9. Ho et al. (2019); 10. Chen et al. (2019).

Table 4: Test MSE on the Mujoco dataset for different percentages of training data.

| Percentage of training data | RNN¹ | RNN-GRU¹ | Latent-ODE (Adjoint¹) | Latent-ODE (Naive²) | Latent-ODE (ACA²) | Latent-ODE (MALI) |
|---|---|---|---|---|---|---|
| 10% | 2.45¹ | 1.97² | 0.47¹ | 0.36² | 0.31² | 0.35 |
| 20% | 1.71¹ | 1.42¹ | 0.44¹ | 0.30² | 0.27² | 0.27 |
| 50% | 0.79¹ | 0.75¹ | 0.40¹ | 0.29² | 0.26² | 0.26 |

Table 5: Test accuracy (%) of Neural CDE on the Speech Commands dataset.

| Method | Accuracy (%) |
|---|---|
| Adjoint³ | |
| SemiNorm³ | |
| Naive | |
| ACA | |
| MALI | |

Table 6: Bits per dim (BPD, lower is better) on the validation set. Vanilla, RNODE, SemiNorm and MALI are continuous flows (FFJORD); RealNVP, i-ResNet, Glow, Flow++ and Residual Flow are discrete flows.

| Dataset | Vanilla⁴ | RNODE⁵ | SemiNorm³ | MALI | RealNVP⁶ | i-ResNet⁷ | Glow⁸ | Flow++⁹ | Residual Flow¹⁰ |
|---|---|---|---|---|---|---|---|---|---|
| MNIST | 0.99⁴ | 0.97⁵ | 0.96³ | 0.87 | 1.06⁶ | 1.05⁷ | 1.05⁸ | - | 0.97¹⁰ |
| CIFAR10 | 3.40⁴ | 3.38⁵ | 3.35³ | 3.27 | 3.49⁶ | 3.45⁷ | 3.35⁸ | 3.28⁹ | 3.28¹⁰ |
| ImageNet64 | - | 3.83⁵ | - | 3.71 | 3.98⁶ | - | 3.81⁸ | - | 3.76¹⁰ |

6 Conclusion

Based on the asynchronous leapfrog integrator, we propose MALI to estimate the gradient for Neural ODEs. To our knowledge, our method is the first to achieve accuracy, fast speed and a constant memory cost simultaneously. We provide a comprehensive theoretical analysis of its properties. We validate MALI with extensive experiments, achieving new state-of-the-art results in various tasks, including image recognition, continuous generative modeling, and time-series modeling.

References

- Behrmann et al. (2019) Jens Behrmann, Will Grathwohl, Ricky TQ Chen, David Duvenaud, and Jörn-Henrik Jacobsen. Invertible residual networks. In International Conference on Machine Learning, pp. 573–582, 2019.

- Chen et al. (2018) Ricky TQ Chen, Yulia Rubanova, Jesse Bettencourt, and David K Duvenaud. Neural ordinary differential equations. In Advances in Neural Information Processing Systems, pp. 6571–6583, 2018.

- Chen et al. (2019) Ricky TQ Chen, Jens Behrmann, David K Duvenaud, and Jörn-Henrik Jacobsen. Residual flows for invertible generative modeling. In Advances in Neural Information Processing Systems, pp. 9916–9926, 2019.

- Daulbaev et al. (2020) Talgat Daulbaev, Alexandr Katrutsa, Larisa Markeeva, Julia Gusak, Andrzej Cichocki, and Ivan Oseledets. Interpolated adjoint method for neural odes. arXiv preprint arXiv:2003.05271, 2020.

- De Almeida (1990) Alfredo M Ozorio De Almeida. Hamiltonian systems: chaos and quantization. Cambridge University Press, 1990.

- Dinh et al. (2016) Laurent Dinh, Jascha Sohl-Dickstein, and Samy Bengio. Density estimation using real nvp. arXiv preprint arXiv:1605.08803, 2016.

- Dupont et al. (2019) Emilien Dupont, Arnaud Doucet, and Yee Whye Teh. Augmented neural odes. In Advances in Neural Information Processing Systems, pp. 3140–3150, 2019.

- Finlay et al. (2020) Chris Finlay, Jörn-Henrik Jacobsen, Levon Nurbekyan, and Adam M Oberman. How to train your neural ode: the world of jacobian and kinetic regularization. In International Conference on Machine Learning, 2020.

- Goodfellow et al. (2014) Ian J Goodfellow, Jonathon Shlens, and Christian Szegedy. Explaining and harnessing adversarial examples. arXiv preprint arXiv:1412.6572, 2014.

- Grathwohl et al. (2018) Will Grathwohl, Ricky TQ Chen, Jesse Bettencourt, Ilya Sutskever, and David Duvenaud. Ffjord: Free-form continuous dynamics for scalable reversible generative models. arXiv preprint arXiv:1810.01367, 2018.

- Haber & Ruthotto (2017) Eldad Haber and Lars Ruthotto. Stable architectures for deep neural networks. Inverse Problems, 34(1):014004, 2017.

- Hanshu et al. (2019) YAN Hanshu, DU Jiawei, TAN Vincent, and FENG Jiashi. On robustness of neural ordinary differential equations. In International Conference on Learning Representations, 2019.

- He et al. (2016) Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 770–778, 2016.

- Ho et al. (2019) Jonathan Ho, Xi Chen, Aravind Srinivas, Yan Duan, and Pieter Abbeel. Flow++: Improving flow-based generative models with variational dequantization and architecture design. arXiv preprint arXiv:1902.00275, 2019.

- Jia & Benson (2019) Junteng Jia and Austin R Benson. Neural jump stochastic differential equations. In Advances in Neural Information Processing Systems, pp. 9847–9858, 2019.

- Kidger et al. (2020a) Patrick Kidger, Ricky T. Q. Chen, and Terry Lyons. “Hey, that’s not an ODE”: Faster ODE Adjoints with 12 Lines of Code. arXiv:2009.09457, 2020a.

- Kidger et al. (2020b) Patrick Kidger, James Morrill, James Foster, and Terry Lyons. Neural controlled differential equations for irregular time series. arXiv preprint arXiv:2005.08926, 2020b.

- Kingma & Dhariwal (2018) Durk P Kingma and Prafulla Dhariwal. Glow: Generative flow with invertible 1x1 convolutions. In Advances in Neural Information Processing Systems, pp. 10215–10224, 2018.

- Li et al. (2020) Xuechen Li, Ting-Kam Leonard Wong, Ricky TQ Chen, and David Duvenaud. Scalable gradients for stochastic differential equations. arXiv preprint arXiv:2001.01328, 2020.

- Liu (2017) Kuang Liu. Train cifar10 with pytorch. 2017. URL https://github.com/kuangliu/pytorch-cifar.

- Lu et al. (2018) Yiping Lu, Aoxiao Zhong, Quanzheng Li, and Bin Dong. Beyond finite layer neural networks: Bridging deep architectures and numerical differential equations. In International Conference on Machine Learning, pp. 3276–3285. PMLR, 2018.

- Massaroli et al. (2020) Stefano Massaroli, Michael Poli, Jinkyoo Park, Atsushi Yamashita, and Hajime Asama. Dissecting neural odes. arXiv preprint arXiv:2002.08071, 2020.

- Mutze (2013) Ulrich Mutze. An asynchronous leapfrog method ii. arXiv preprint arXiv:1311.6602, 2013.

- Pascanu et al. (2013) Razvan Pascanu, Tomas Mikolov, and Yoshua Bengio. On the difficulty of training recurrent neural networks. In International conference on machine learning, pp. 1310–1318, 2013.

- Pontryagin (1962) Lev Semenovich Pontryagin. Mathematical theory of optimal processes. Routledge, 1962.

- Quaglino et al. (2019) Alessio Quaglino, Marco Gallieri, Jonathan Masci, and Jan Koutník. Snode: Spectral discretization of neural odes for system identification. arXiv preprint arXiv:1906.07038, 2019.

- Queiruga et al. (2020) Alejandro F Queiruga, N Benjamin Erichson, Dane Taylor, and Michael W Mahoney. Continuous-in-depth neural networks. arXiv preprint arXiv:2008.02389, 2020.

- Rubanova et al. (2019) Yulia Rubanova, Ricky TQ Chen, and David K Duvenaud. Latent ordinary differential equations for irregularly-sampled time series. In Advances in Neural Information Processing Systems, pp. 5320–5330, 2019.

- Runge (1895) Carl Runge. Über die numerische auflösung von differentialgleichungen. Mathematische Annalen, 46(2):167–178, 1895.

- Ruthotto & Haber (2019) Lars Ruthotto and Eldad Haber. Deep neural networks motivated by partial differential equations. Journal of Mathematical Imaging and Vision, pp. 1–13, 2019.

- Ruthotto et al. (2020) Lars Ruthotto, Stanley J Osher, Wuchen Li, Levon Nurbekyan, and Samy Wu Fung. A machine learning framework for solving high-dimensional mean field game and mean field control problems. Proceedings of the National Academy of Sciences, 117(17):9183–9193, 2020.

- Sanchez-Gonzalez et al. (2019) Alvaro Sanchez-Gonzalez, Victor Bapst, Kyle Cranmer, and Peter Battaglia. Hamiltonian graph networks with ode integrators. arXiv preprint arXiv:1909.12790, 2019.

- Silvester (2000) John R Silvester. Determinants of block matrices. The Mathematical Gazette, 84(501):460–467, 2000.

- Süli & Mayers (2003) Endre Süli and David F Mayers. An introduction to numerical analysis. Cambridge university press, 2003.

- Tabuada & Gharesifard (2020) Paulo Tabuada and Bahman Gharesifard. Universal approximation power of deep neural networks via nonlinear control theory. arXiv preprint arXiv:2007.06007, 2020.

- Tassa et al. (2018) Yuval Tassa, Yotam Doron, Alistair Muldal, Tom Erez, Yazhe Li, Diego de Las Casas, David Budden, Abbas Abdolmaleki, Josh Merel, Andrew Lefrancq, et al. Deepmind control suite. arXiv preprint arXiv:1801.00690, 2018.

- Verlet (1967) Loup Verlet. Computer "experiments" on classical fluids. I. Thermodynamical properties of Lennard-Jones molecules. Physical Review, 159(1):98, 1967.

- Wanner & Hairer (1996) Gerhard Wanner and Ernst Hairer. Solving ordinary differential equations II. Springer Berlin Heidelberg, 1996.

- Wehenkel & Louppe (2019) Antoine Wehenkel and Gilles Louppe. Unconstrained monotonic neural networks. In Advances in Neural Information Processing Systems, pp. 1545–1555, 2019.

- Weinan (2017) E Weinan. A proposal on machine learning via dynamical systems. Communications in Mathematics and Statistics, 5(1):1–11, 2017.

- Yoshida (1990) Haruo Yoshida. Construction of higher order symplectic integrators. Physics Letters A, 150(5-7):262–268, 1990.

- Zhong et al. (2019) Yaofeng Desmond Zhong, Biswadip Dey, and Amit Chakraborty. Symplectic ODE-Net: Learning Hamiltonian dynamics with control. arXiv preprint arXiv:1909.12077, 2019.

- Zhuang et al. (2020) Juntang Zhuang, Nicha Dvornek, Xiaoxiao Li, Sekhar Tatikonda, Xenophon Papademetris, and James Duncan. Adaptive checkpoint adjoint method for gradient estimation in neural ODE. International Conference on Machine Learning, 2020.

Appendix A Theoretical properties of ALF integrator

A.1 Algorithm of ALF

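For ease of reading, we restate the forward step $\psi$ of ALF below (Algo. 5). It is the same as Algo. 2 in the main paper, but uses slightly different notation for ease of analysis. The input is $(z_0, v_0, t_0, h)$, where $z_0$ is the state at time $t_0$, $v_0$ is the accompanying estimate of $dz/dt$, and $h$ is the stepsize. One step computes

$$
\begin{aligned}
t_{1/2} &= t_0 + \tfrac{h}{2} && (1)\\
k_1 &= z_0 + \tfrac{h}{2}\, v_0 && (2)\\
u_1 &= f(k_1, t_{1/2}) && (3)\\
v_1 &= v_0 + 2\,(u_1 - v_0) && (4)\\
z_1 &= k_1 + \tfrac{h}{2}\, v_1 && (5)\\
t_1 &= t_{1/2} + \tfrac{h}{2} && (6)
\end{aligned}
$$

and outputs $(z_1, v_1, t_1, h)$.

For simplicity, we can rewrite the forward step of ALF as

$$
\begin{bmatrix} z_1 \\ v_1 \end{bmatrix} = \psi(z_0, v_0) =
\begin{bmatrix} z_0 + h\, f\!\left(z_0 + \tfrac{h}{2} v_0,\ t_0 + \tfrac{h}{2}\right) \\ 2\, f\!\left(z_0 + \tfrac{h}{2} v_0,\ t_0 + \tfrac{h}{2}\right) - v_0 \end{bmatrix} \qquad (7)
$$

Similarly, the inverse of ALF can be written as

$$
\begin{bmatrix} z_0 \\ v_0 \end{bmatrix} = \psi^{-1}(z_1, v_1) =
\begin{bmatrix} z_1 - h\, f\!\left(z_1 - \tfrac{h}{2} v_1,\ t_1 - \tfrac{h}{2}\right) \\ 2\, f\!\left(z_1 - \tfrac{h}{2} v_1,\ t_1 - \tfrac{h}{2}\right) - v_1 \end{bmatrix} \qquad (8)
$$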
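The forward step and its inverse fit in a few lines of NumPy. This is a minimal sketch of Eqs. 7 and 8 under our own assumptions, not the TorchDiffEqPack implementation. The names `alf_forward` and `alf_inverse` are hypothetical, `f` is any callable `f(z, t)`, and `v` is initialized as `f(z0, t0)`. The round trip at the end shows that running the inverse recovers the initial state up to floating-point round-off. This is the property that lets MALI reconstruct the trajectory in reverse time instead of storing it.

```python
import numpy as np

def alf_forward(f, z0, v0, t0, h):
    """One ALF step psi (Eq. 7): maps (z0, v0, t0) to (z1, v1, t1 = t0 + h)."""
    t_half = t0 + 0.5 * h
    k1 = z0 + 0.5 * h * v0        # half step in z using the current v
    u1 = f(k1, t_half)            # derivative at the midpoint
    v1 = 2.0 * u1 - v0            # same as v0 + 2 * (u1 - v0)
    z1 = k1 + 0.5 * h * v1        # equals z0 + h * u1
    return z1, v1, t_half + 0.5 * h

def alf_inverse(f, z1, v1, t1, h):
    """Inverse step psi^{-1} (Eq. 8): recovers (z0, v0, t0) from (z1, v1, t1)."""
    t_half = t1 - 0.5 * h
    k1 = z1 - 0.5 * h * v1        # the same midpoint state as in the forward step
    u1 = f(k1, t_half)
    v0 = 2.0 * u1 - v1
    z0 = k1 - 0.5 * h * v0
    return z0, v0, t_half - 0.5 * h

# Round trip on an illustrative forced linear ODE
A = np.array([[0.0, 1.0], [-1.0, -0.1]])
f = lambda z, t: A @ z + np.sin(t)
z0, t0, h = np.array([1.0, 0.0]), 0.0, 0.1
v0 = f(z0, t0)                    # initialization v0 = f(z0, t0)
z, v, t = z0, v0, t0
for _ in range(20):
    z, v, t = alf_forward(f, z, v, t, h)
for _ in range(20):
    z, v, t = alf_inverse(f, z, v, t, h)
print(np.max(np.abs(z - z0)), np.max(np.abs(v - v0)), abs(t - t0))
```

No intermediate states are kept between the forward and backward loops. Only the final `(z, v, t)` is needed to walk back.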

A.2 Preliminaries

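For an ODE of the form

$$\frac{dz(t)}{dt} = f(z(t), t), \qquad (9)$$

we have

$$\frac{d^2 z(t)}{dt^2} = \frac{\partial f}{\partial z}\,\frac{dz(t)}{dt} + \frac{\partial f}{\partial t} = \frac{\partial f}{\partial z}\, f(z(t), t) + \frac{\partial f}{\partial t}. \qquad (10)$$

For ease of notation, we rewrite Eq. 10 as

$$\frac{d^2 z}{dt^2} = f_z f + f_t, \qquad (11)$$

where $f_z$ and $f_t$ denote the partial derivatives of $f$ with respect to $z$ and $t$, respectively.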

A.3 Local truncation error of ALF

Theorem A.1 (Theorem 3.1 in the main paper).

For a single step in ALF with stepsize $h$, the local truncation error of $z$ is $O(h^3)$, and the local truncation error of $v$ is $O(h^2)$.

Proof.

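Under the same notation as Algo. 5, denote the ground-truth states of $z$ and $v$ starting from $(z_0, t_0)$ as $\bar z$ and $\bar v$, respectively. Then the local truncation error in $z$ is

$$\tau_z = \bar z(t_0 + h) - z_1. \qquad (12)$$

We estimate $\bar z(t_0 + h)$ and $z_1$ as polynomials in $h$.

We make the mild assumption that $f$ is smooth up to second order almost everywhere. This is typically satisfied by neural networks with bounded weights, so the Taylor expansion of $f$ is meaningful. By Eq. 11, the Taylor expansion of $\bar z$ around the point $(z_0, t_0)$ is

$$
\begin{aligned}
\bar z(t_0 + h) &= z_0 + h \frac{dz}{dt}\Big|_{(z_0, t_0)} + \frac{h^2}{2} \frac{d^2 z}{dt^2}\Big|_{(z_0, t_0)} + O(h^3) && (13)\\
&= z_0 + h f + \frac{h^2}{2}\left(f_z f + f_t\right) + O(h^3), && (14)
\end{aligned}
$$

where here and below $f$, $f_z$ and $f_t$ without arguments are evaluated at $(z_0, t_0)$.

Next, we analyze the accuracy of the numerical approximation. For simplicity, we analyze Eq. 7 directly by performing a Taylor expansion of $f$:

$$f\!\left(z_0 + \tfrac{h}{2} v_0,\ t_0 + \tfrac{h}{2}\right) = f + \frac{h}{2} f_z v_0 + \frac{h}{2} f_t + O(h^2), \qquad (15)$$

$$z_1 = z_0 + h f + \frac{h^2}{2}\left(f_z v_0 + f_t\right) + O(h^3). \qquad (16)$$

Plugging Eq. 14, Eq. 15 and Eq. 16 into the definition of $\tau_z$, we get

$$
\begin{aligned}
\tau_z &= \bar z(t_0 + h) - z_1 && (17)\\
&= \left[z_0 + h f + \frac{h^2}{2}(f_z f + f_t)\right] - \left[z_0 + h f + \frac{h^2}{2}(f_z v_0 + f_t)\right] + O(h^3) && (18)\\
&= \frac{h^2}{2} f_z \left(f - v_0\right) + O(h^3). && (19)
\end{aligned}
$$

Therefore, if $f - v_0$ is of order $O(1)$, then $\tau_z$ is of order $O(h^2)$. If $f - v_0$ is of order $O(h)$ or smaller, then $\tau_z$ is of order $O(h^3)$. In particular, at the start time of integration we have $v_0 = f(z_0, t_0)$, so by induction $\tau_z$ at the end time is $O(h^3)$.

Next we analyze the local truncation error in $v$, denoted as $\tau_v$. Denoting the ground truth as $\bar v$, we have

$$
\begin{aligned}
\bar v(t_0 + h) &= f\!\left(\bar z(t_0 + h),\ t_0 + h\right) && (20)\\
&= f + f_z\left(\bar z(t_0 + h) - z_0\right) + h f_t + O(h^2). && (21)
\end{aligned}
$$

Next we analyze the error in the numerical approximation. Plugging Eq. 15 into Eq. 7 gives

$$
\begin{aligned}
v_1 &= 2 f\!\left(z_0 + \tfrac{h}{2} v_0,\ t_0 + \tfrac{h}{2}\right) - v_0 && (22)\\
&= 2 f - v_0 + h\left(f_z v_0 + f_t\right) + O(h^2). && (23)
\end{aligned}
$$

From Eq. 14, Eq. 21 and Eq. 23, we have

$$
\begin{aligned}
\tau_v &= \bar v(t_0 + h) - v_1 && (24)\\
&= \left[f + f_z\left(\bar z(t_0 + h) - z_0\right) + h f_t\right] - \left[2 f - v_0 + h\left(f_z v_0 + f_t\right)\right] + O(h^2) && (25)\\
&= \left(I - h f_z\right)\left(v_0 - f\right) + O(h^2). && (26)
\end{aligned}
$$

The last equation is derived by plugging in Eq. 14, i.e., $\bar z(t_0 + h) - z_0 = h f + O(h^2)$. Note that Eq. 26 holds for every single step forward in time. At the start time of integration we have $v_0 = f(z_0, t_0)$ due to our initialization as in Sec. 3.1 of the main paper. Therefore, by induction, $\tau_v$ is of order $O(h^2)$ for consecutive steps. ∎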
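Theorem A.1 can be sanity-checked numerically. Take an ODE with a closed-form solution and run a single ALF step from $v_0 = f(z_0, t_0)$ with stepsizes $h$ and $h/2$. The observed order then follows from the ratio of the errors. The test problem $dz/dt = -z + t$ below is our own illustrative choice. The observed orders should come out close to 3 for $z$ and 2 for $v$.

```python
import math

def f(z, t):
    # Illustrative test ODE dz/dt = -z + t (our choice, not from the paper)
    return -z + t

def exact(z0, t):
    # Closed-form solution with z(0) = z0
    return t - 1.0 + (z0 + 1.0) * math.exp(-t)

def one_step_errors(h, z0=1.0):
    t0 = 0.0
    v0 = f(z0, t0)                            # initialization v0 = f(z0, t0)
    u1 = f(z0 + 0.5 * h * v0, t0 + 0.5 * h)   # midpoint derivative, Eq. 7
    z1, v1 = z0 + h * u1, 2.0 * u1 - v0
    z_true = exact(z0, t0 + h)
    v_true = f(z_true, t0 + h)                # ground-truth v is dz/dt on the exact path
    return abs(z_true - z1), abs(v_true - v1)

ez_h, ev_h = one_step_errors(0.1)
ez_h2, ev_h2 = one_step_errors(0.05)
order_z = math.log2(ez_h / ez_h2)   # close to 3, i.e. tau_z = O(h^3)
order_v = math.log2(ev_h / ev_h2)   # close to 2, i.e. tau_v = O(h^2)
print(round(order_z, 2), round(order_v, 2))
```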

A.4 Stability analysis

Lemma A.1.1.

For a matrix of the form $M = \begin{bmatrix} A & B \\ C & D \end{bmatrix}$, if $A, B, C, D$ are square matrices of the same shape and $CD = DC$, then we have $\det M = \det(AD - BC)$.

Proof.

See (Silvester, 2000) for a detailed proof. ∎
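Lemma A.1.1 can be checked numerically with a short sketch. Choosing $C$ as a polynomial in $D$ guarantees $CD = DC$, and then $\det M$ and $\det(AD - BC)$ agree up to round-off. In the proof of Theorem A.2 the lemma is applied with $C = 2 f_z$ and $D = h f_z - (1+\lambda) I$, which commute for the same reason. The matrix size and random seed below are arbitrary.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 4
A = rng.standard_normal((n, n))
B = rng.standard_normal((n, n))
D = rng.standard_normal((n, n))
C = 2.0 * D @ D - 3.0 * D + np.eye(n)   # a polynomial in D, hence CD = DC

M = np.block([[A, B], [C, D]])
det_block = np.linalg.det(M)
det_lemma = np.linalg.det(A @ D - B @ C)
print(det_block, det_lemma)             # equal up to round-off
```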

Theorem A.2.

For the ALF integrator with stepsize $h$, let $\sigma_i$ be the $i$-th eigenvalue of the Jacobian $\partial f / \partial z$. If $h\sigma_i$ is 0 or is imaginary with norm no larger than 1, then the solver is on the critical boundary of A-stability; otherwise, the solver is not A-stable.

Proof.

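A solver is A-stable if and only if all eigenvalues of the Jacobian of its one-step map $\psi$ have norm below 1. We calculate these eigenvalues below.

For the function $\psi$ defined by Eq. 7, the Jacobian is

$$
J_\psi = \frac{\partial (z_1, v_1)}{\partial (z_0, v_0)} = \begin{bmatrix} I + h f_z & \frac{h^2}{2} f_z \\ 2 f_z & h f_z - I \end{bmatrix}, \qquad (27)
$$

where $f_z$ is evaluated at the midpoint $\left(z_0 + \tfrac{h}{2} v_0,\ t_0 + \tfrac{h}{2}\right)$.

We determine the eigenvalues $\lambda$ of $J_\psi$ by solving the equation

$$
\det\left(J_\psi - \lambda I\right) = \det \begin{bmatrix} (1 - \lambda) I + h f_z & \frac{h^2}{2} f_z \\ 2 f_z & h f_z - (1 + \lambda) I \end{bmatrix} = 0. \qquad (28)
$$

This block matrix satisfies the conditions of Lemma A.1.1, since $2 f_z$ commutes with $h f_z - (1 + \lambda) I$. Therefore, we have

$$
\begin{aligned}
&\det\left( \left[(1 - \lambda) I + h f_z\right]\left[h f_z - (1 + \lambda) I\right] - \frac{h^2}{2} f_z \cdot 2 f_z \right) = 0 && (29)\\
\Longleftrightarrow\ &\det\left( (\lambda^2 - 1) I - 2 \lambda h f_z \right) = 0. && (30)
\end{aligned}
$$

Suppose the eigen-decomposition of $f_z$ can be written as

$$ f_z = Q \Lambda Q^{-1}, \qquad \Lambda = \operatorname{diag}(\sigma_1, \ldots, \sigma_N), \qquad (31)$$

where $N$ is the dimension of $z$. Note that $I = Q Q^{-1}$ and $\det Q \det Q^{-1} = 1$, hence we have

$$
\begin{aligned}
&\det\left( Q \left[ (\lambda^2 - 1) I - 2 \lambda h \Lambda \right] Q^{-1} \right) = 0 && (32)\\
\Longleftrightarrow\ &\prod_{i=1}^{N} \left( \lambda^2 - 2 h \sigma_i \lambda - 1 \right) = 0. && (33)
\end{aligned}
$$

Hence the eigenvalues are

$$ \lambda_i^{\pm} = h\sigma_i \pm \sqrt{h^2 \sigma_i^2 + 1}. \qquad (34)$$

A-stability requires $|\lambda_i^{\pm}| < 1$, which has no solution. By Eq. 33, $\lambda_i^{+} \lambda_i^{-} = -1$, so the two roots cannot both have norm below 1.

The critical boundary is $|\lambda_i^{+}| = |\lambda_i^{-}| = 1$. Its solution is that $h\sigma_i$ is 0 or lies on the imaginary axis with norm no larger than 1. ∎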
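The eigenvalue formula in Eq. 34 can be checked numerically by building the block Jacobian of Eq. 27 for a stand-in matrix $f_z$. This sketch assumes a symmetric random $f_z$ so that its eigenvalues $\sigma_i$ are real. The matrix size and stepsize are arbitrary choices. The check also confirms two things:

- $\lambda_i^{+}\lambda_i^{-} = -1$, so at least one root of every pair has norm at least 1.
- A purely imaginary $h\sigma$ with norm at most 1 lands exactly on the unit circle.

```python
import numpy as np

rng = np.random.default_rng(0)
n, h = 3, 0.1
S = rng.standard_normal((n, n))
f_z = 0.5 * (S + S.T)                 # symmetric stand-in for df/dz (real eigenvalues)
I = np.eye(n)

# Block Jacobian of the ALF step psi, Eq. 27
J_psi = np.block([[I + h * f_z, 0.5 * h**2 * f_z],
                  [2.0 * f_z,   h * f_z - I]])
lam_numeric = np.sort(np.linalg.eigvals(J_psi).real)

# Closed form, Eq. 34
sigma = np.linalg.eigvalsh(f_z)
root = np.sqrt(h**2 * sigma**2 + 1.0)
lam_plus, lam_minus = h * sigma + root, h * sigma - root
lam_formula = np.sort(np.concatenate([lam_plus, lam_minus]))

print(np.max(np.abs(lam_numeric - lam_formula)))   # round-off level
print(lam_plus * lam_minus)                         # each product equals -1

# A purely imaginary h*sigma with |h*sigma| <= 1 sits on the critical boundary
a = 0.6j
on_circle = np.abs(np.array([a + np.sqrt(a**2 + 1), a - np.sqrt(a**2 + 1)]))
print(on_circle)                                    # both norms equal 1
```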

A.5 Damped ALF

| (35) | ||||

| (36) | ||||

| (37) | ||||

| (38) | ||||

| (39) | ||||

| (40) |

| (41) | ||||

| (42) | ||||

| (43) | ||||

| (44) | ||||

| (45) | ||||

| (46) | ||||

| (47) |

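The main difference between ALF and Damped ALF is marked in blue in Algo. 6. In ALF, the update of $v$ is $v_1 = v_0 + 2(u_1 - v_0)$, where $u_1$ is the derivative $f$ evaluated at the midpoint. In Damped ALF, the update is scaled by a factor $\eta$ between 0 and 1, so the update is $v_1 = v_0 + 2\eta(u_1 - v_0)$. When $\eta = 1$, Damped ALF reduces to ALF.

Similar to Sec. A.1, we can rewrite the forward step of Damped ALF as

$$
\begin{bmatrix} z_1 \\ v_1 \end{bmatrix} = \psi_\eta(z_0, v_0) =
\begin{bmatrix} z_0 + h\left[(1 - \eta) v_0 + \eta\, f\!\left(z_0 + \tfrac{h}{2} v_0,\ t_0 + \tfrac{h}{2}\right)\right] \\ (1 - 2\eta) v_0 + 2\eta\, f\!\left(z_0 + \tfrac{h}{2} v_0,\ t_0 + \tfrac{h}{2}\right) \end{bmatrix}. \qquad (48)
$$

Similarly, for $\eta \neq \tfrac{1}{2}$, the inverse of Damped ALF can be written as

$$
\begin{bmatrix} z_0 \\ v_0 \end{bmatrix} = \psi_\eta^{-1}(z_1, v_1) =
\begin{bmatrix} z_1 - \tfrac{h}{2} v_1 - \tfrac{h}{2}\, \dfrac{v_1 - 2\eta u_1}{1 - 2\eta} \\ \dfrac{v_1 - 2\eta u_1}{1 - 2\eta} \end{bmatrix}, \qquad u_1 = f\!\left(z_1 - \tfrac{h}{2} v_1,\ t_1 - \tfrac{h}{2}\right), \qquad (49)
$$

where $t_1 = t_0 + h$.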
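The damped step changes only the $v$-update. The NumPy sketch below is our own illustration of Eqs. 48 and 49. The function names `damped_alf_forward` and `damped_alf_inverse` are hypothetical, and `eta` is the damping factor $\eta$. Inverting the $v$-update divides by $1 - 2\eta$, so this inverse is only defined for $\eta \neq 1/2$.

```python
import numpy as np

def damped_alf_forward(f, z0, v0, t0, h, eta):
    """One Damped ALF step (Eq. 48); eta = 1 recovers plain ALF."""
    t_half = t0 + 0.5 * h
    k1 = z0 + 0.5 * h * v0
    u1 = f(k1, t_half)
    v1 = v0 + 2.0 * eta * (u1 - v0)      # damped v-update
    z1 = k1 + 0.5 * h * v1
    return z1, v1, t_half + 0.5 * h

def damped_alf_inverse(f, z1, v1, t1, h, eta):
    """Inverse step (Eq. 49); requires eta != 0.5 because we divide by 1 - 2*eta."""
    t_half = t1 - 0.5 * h
    k1 = z1 - 0.5 * h * v1               # recovers the forward midpoint state
    u1 = f(k1, t_half)
    v0 = (v1 - 2.0 * eta * u1) / (1.0 - 2.0 * eta)
    z0 = k1 - 0.5 * h * v0
    return z0, v0, t_half - 0.5 * h

# Round trip with illustrative dynamics f(z, t) = tanh(z) * cos(t)
f = lambda z, t: np.tanh(z) * np.cos(t)
z0, t0 = np.array([0.5, -1.0]), 0.0
v0 = f(z0, t0)
z, v, t = z0, v0, t0
for _ in range(10):
    z, v, t = damped_alf_forward(f, z, v, t, 0.1, eta=0.9)
for _ in range(10):
    z, v, t = damped_alf_inverse(f, z, v, t, 0.1, eta=0.9)
print(np.max(np.abs(z - z0)), np.max(np.abs(v - v0)))
```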

Theorem A.3.

For a single step in Damped ALF with stepsize $h$, the local truncation error of $z$ is $O(h^2)$, and the local truncation error of $v$ is $O(h)$.

Proof.

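The proof is similar to that of Thm. A.1. By similar calculations using the Taylor expansions in Eq. 15 and Eq. 14, we have

$$
\begin{aligned}
\tau_z &= \left[z_0 + h f + \frac{h^2}{2}(f_z f + f_t)\right] - \left[z_0 + h(1 - \eta) v_0 + h \eta f + \frac{h^2}{2}\eta (f_z v_0 + f_t)\right] + O(h^3) && (50)\\
&= h (1 - \eta)(f - v_0) + \frac{h^2}{2}\left[(1 - \eta)(f_z f + f_t) + \eta f_z (f - v_0)\right] + O(h^3). && (51)
\end{aligned}
$$

Using Eq. 21, Eq. 15 and Eq. 14, we have

$$
\begin{aligned}
\tau_v &= \left[f + h(f_z f + f_t)\right] - \left[(1 - 2\eta) v_0 + 2\eta f + \eta h (f_z v_0 + f_t)\right] + O(h^2) && (52)\\
&= \left[(1 - 2\eta) I + \eta h f_z\right](f - v_0) + h(1 - \eta)(f_z f + f_t) + O(h^2). && (53)
\end{aligned}
$$

Note that when $\eta = 1$, Eq. 51 reduces to Eq. 19, and Eq. 53 reduces to Eq. 26. By initialization, we have $v_0 = f(z_0, t_0)$ at the initial time. Hence, by induction, the local truncation error for $z$ is $O(h^2)$. The local truncation error for $v$ is $O(h)$ when $\eta < 1$, and $O(h^2)$ when $\eta = 1$. ∎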

Theorem A.4 (Theorem 3.2 in the main paper).

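For the Damped ALF integrator with stepsize $h$, let $\sigma_i$ be the $i$-th eigenvalue of the Jacobian $\partial f / \partial z$. The solver is A-stable if $\left|1 + \eta(h\sigma_i - 1) \pm \sqrt{\eta\left(2h\sigma_i + \eta(h\sigma_i - 1)^2\right)}\right| < 1$ for all $i$.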

Proof.

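The Jacobian of the forward pass of a single Damped ALF step is

$$
J_{\psi_\eta} = \begin{bmatrix} I + \eta h f_z & (1 - \eta) h I + \frac{\eta h^2}{2} f_z \\ 2 \eta f_z & (1 - 2\eta) I + \eta h f_z \end{bmatrix}. \qquad (54)
$$

When $\eta = 1$, $J_{\psi_\eta}$ reduces to Eq. 27. We can determine the eigenvalues of $J_{\psi_\eta}$ using similar techniques. Let $\lambda$ denote an eigenvalue of $J_{\psi_\eta}$. Applying Lemma A.1.1 (here $2\eta f_z$ commutes with $(1 - 2\eta - \lambda) I + \eta h f_z$) and the eigen-decomposition in Eq. 31, we have

$$
\begin{aligned}
&\det \begin{bmatrix} (1 - \lambda) I + \eta h f_z & (1 - \eta) h I + \frac{\eta h^2}{2} f_z \\ 2 \eta f_z & (1 - 2\eta - \lambda) I + \eta h f_z \end{bmatrix} = 0 && (55)\\
\Longleftrightarrow\ &\prod_{i=1}^{N} \left( \lambda^2 - 2\left[1 + \eta(h\sigma_i - 1)\right]\lambda + 1 - 2\eta \right) = 0, && (56)
\end{aligned}
$$

whose roots are

$$ \lambda_i^{\pm} = 1 + \eta(h\sigma_i - 1) \pm \sqrt{\eta\left(2h\sigma_i + \eta(h\sigma_i - 1)^2\right)}. \qquad (57)$$

When $\eta < 1$, it is easy to check that $|\lambda_i^{\pm}| < 1$ has non-empty solutions for $h\sigma_i$. ∎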
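Eq. 57 can be cross-checked against a direct eigenvalue computation of the Jacobian in Eq. 54. To keep the sketch short, we assume a single eigen-direction: $f_z$ is replaced by one (possibly complex) eigenvalue $\sigma$, which turns Eq. 54 into a $2 \times 2$ matrix. The specific values of $\sigma$, $h$ and $\eta$ are arbitrary. The last line exhibits one stable choice for $\eta < 1$, in line with the final statement of the proof.

```python
import cmath
import numpy as np

def damped_jacobian(sigma, h, eta):
    """Eq. 54 restricted to one eigen-direction of f_z (f_z -> sigma)."""
    a = h * sigma
    return np.array([[1 + eta * a,     (1 - eta) * h + 0.5 * eta * h * a],
                     [2 * eta * sigma, 1 - 2 * eta + eta * a]], dtype=complex)

def damped_eigs(sigma, h, eta):
    """Closed-form eigenvalues from Eq. 57."""
    a = h * sigma
    center = 1 + eta * (a - 1)
    root = cmath.sqrt(eta * (2 * a + eta * (a - 1) ** 2))
    return np.array([center + root, center - root])

max_gap = 0.0
for sigma in [-2.0, -0.5 + 1.0j, 0.3j, 1.0]:
    for eta in [0.8, 1.0]:
        numeric = np.linalg.eigvals(damped_jacobian(sigma, 0.5, eta))
        for lam in damped_eigs(sigma, 0.5, eta):
            # distance from each closed-form root to the nearest numerical eigenvalue
            max_gap = max(max_gap, np.min(np.abs(numeric - lam)))
print(max_gap)                                   # round-off level

# With eta = 0.8, h = 1 and sigma = -0.3, both roots have norm below 1
print(np.abs(damped_eigs(-0.3, 1.0, 0.8)))
```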

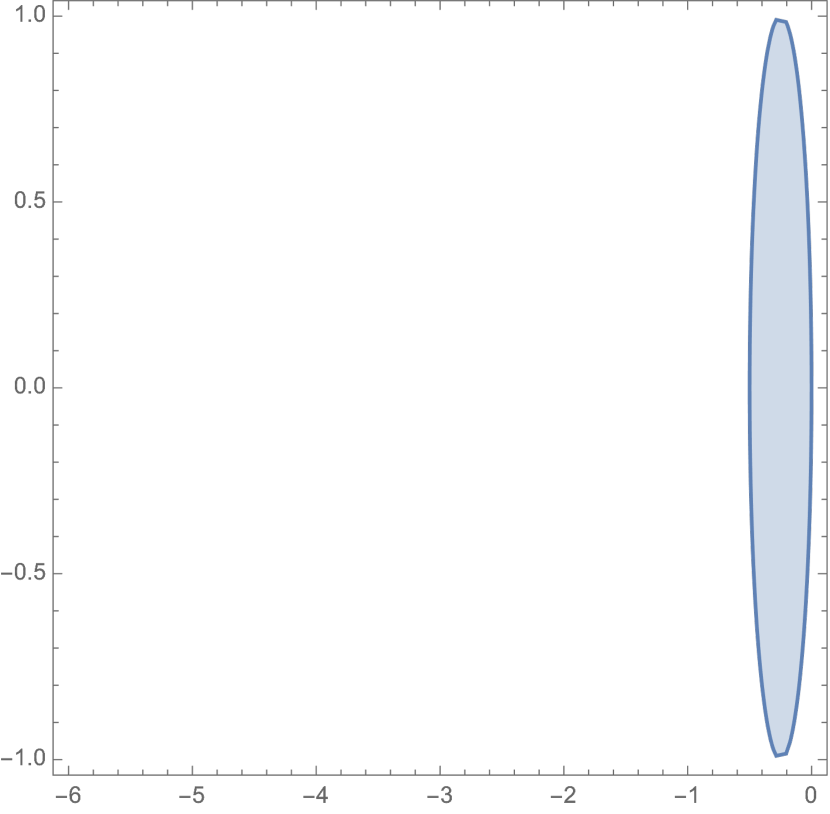

For a quick validation, we plot the region of A-stability in the complex plane for a single eigenvalue $h\sigma$ in Fig. 1. As $\eta$ increases, the area of stability decreases. When $\eta = 1$, the system is nowhere A-stable, and the boundary of A-stability is the segment of the imaginary axis between $-i$ and $i$, where $i$ is the imaginary unit.
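The shrinking stability region can also be reproduced without plotting. The sketch below is our own illustration, not the code behind Fig. 1. It evaluates the closed-form roots of Eq. 57 on a grid of $a = h\sigma$ values in the complex plane and counts the grid cells where both roots have norm below 1. The grid bounds and resolution are arbitrary assumptions. A small margin below 1 keeps round-off on the critical boundary from being counted as stable, so the estimated area for $\eta = 1$ is exactly zero.

```python
import numpy as np

def stable_area(eta, step=0.005):
    """Approximate area of {a = h*sigma : both roots of Eq. 57 have norm < 1}."""
    x = np.arange(-1.5, 0.5, step)
    y = np.arange(-1.2, 1.2, step)
    a = x[None, :] + 1j * y[:, None]              # grid over the complex plane
    center = 1 + eta * (a - 1)
    root = np.sqrt(eta * (2 * a + eta * (a - 1) ** 2))
    rho = np.maximum(np.abs(center + root), np.abs(center - root))
    # Strict margin so points on the critical boundary are not counted as stable
    return np.count_nonzero(rho < 1 - 1e-9) * step ** 2

etas = [0.6, 0.8, 0.95, 1.0]
areas = [stable_area(e) for e in etas]
for e, area in zip(etas, areas):
    print(f"eta = {e}: stable area ~ {area:.3f}")
```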

Appendix B Experimental Details

B.1 Image Recognition

B.1.1 Experiment on Cifar10

We directly modify a ResNet18 into a Neural ODE. The forward of a residual block ($y = x + f_\theta(x)$) and the forward of an ODE block ($y = z(T)$, where $z(0) = x$ and $dz(t)/dt = f_\theta(z(t), t)$) share the same parameterization $f_\theta$, hence they have the same number of parameters. Our experiment is based on the official implementation by Zhuang et al. (2020) and an open-source repository (Liu, 2017).
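To make the shared parameterization concrete, the NumPy sketch below uses one function `f_theta` in two roles:

- as the residual branch, $y = x + f_\theta(x)$;
- as the dynamics of an ODE block integrated with fixed-step ALF from $t = 0$ to an assumed end time $T = 1$.

Here `f_theta` is a hypothetical single-layer `tanh` map with weights `W`, `b`. The sketch only illustrates that the two blocks have identical parameters. The actual experiments use convolutional ResNet18 blocks and the solvers described in the next paragraph.

```python
import numpy as np

rng = np.random.default_rng(0)
W = 0.3 * rng.standard_normal((4, 4))   # hypothetical shared parameters theta = (W, b)
b = 0.1 * rng.standard_normal(4)

def f_theta(z, t):
    # One parameterization used by both blocks; t is unused (autonomous dynamics)
    return np.tanh(W @ z + b)

def residual_block(x):
    # y = x + f_theta(x): a single explicit Euler step of size 1
    return x + f_theta(x, 0.0)

def ode_block(x, T=1.0, n_steps=20):
    # y = z(T) with z(0) = x, integrated with fixed-step ALF (T = 1 is an assumption)
    h = T / n_steps
    z, v, t = x, f_theta(x, 0.0), 0.0
    for _ in range(n_steps):
        u = f_theta(z + 0.5 * h * v, t + 0.5 * h)
        z, v, t = z + h * u, 2.0 * u - v, t + h
    return z

x = rng.standard_normal(4)
y_res = residual_block(x)
y_ode = ode_block(x)
print(y_res, y_ode)   # same parameters, different depth semantics
```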

All models are trained with the SGD optimizer for 90 epochs, with an initial learning rate of 0.01 decayed by a factor of 10 at the 30th and 60th epochs. The training scheme is the same for all models: ResNet, and Neural ODE trained with the adjoint, naive, ACA and MALI methods. For ACA, we follow the settings in (Zhuang et al., 2020), use the official implementation torch_ACA (https://github.com/juntang-zhuang/torch_ACA), and use a Heun-Euler solver with during training. For MALI, we use an adaptive version and set . For the naive and adjoint methods, we use the default Dopri5 solver from the torchdiffeq package (https://github.com/rtqichen/torchdiffeq) with . We train all models for 5 independent runs, and report the mean and standard deviation across runs.
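The step schedule above can be written as a small function. This sketch assumes 0-indexed epochs, with the decays taking effect at epochs 30 and 60. In PyTorch, the same schedule is usually obtained with `torch.optim.lr_scheduler.MultiStepLR(optimizer, milestones=[30, 60], gamma=0.1)` stepped once per epoch.

```python
def cifar10_lr(epoch, base_lr=0.01, milestones=(30, 60), gamma=0.1):
    """Learning rate for a 0-indexed epoch: divided by 10 from epoch 30 and again from epoch 60."""
    n_decays = sum(epoch >= m for m in milestones)
    return base_lr * gamma ** n_decays

schedule = [cifar10_lr(e) for e in range(90)]
print(schedule[0], schedule[30], schedule[60])
```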

B.1.2 Experiments on ImageNet

Training scheme

We conduct experiments on ImageNet with ResNet18 and Neural-ODE18. All models are trained on 4 GTX-1080Ti GPUs with a batch size of 256 for 80 epochs. The initial learning rate is 0.1, decayed by a factor of 10 at the 30th and 60th epochs. Due to the large input size, the naive method and ACA require a huge amount of memory and are infeasible to train. MALI and the adjoint method have a constant memory cost and hence are suitable for large-scale experiments. For both MALI and the adjoint method, we use a fixed stepsize of 0.25, and integrate from 0 to . As shown in Table 2 in the main paper, a stepsize of 0.25 is sufficiently small to train a meaningful continuous model that is robust to the discretization scheme.

Invariance to discretization scheme

To test the influence of the discretization scheme, we evaluate our Neural ODE with different solvers without re-training. For fixed-stepsize solvers, we tested various step sizes including ; for adaptive solvers, we set rtol=0.1, atol=0.01 for MALI and the Heun-Euler method, set for the RK23 solver, and set for the Dopri5 solver. As shown in Table 2, the Neural ODE trained with MALI is robust to the discretization scheme. MALI also significantly outperforms the adjoint method in accuracy (70% vs. 63% top-1 accuracy on the validation dataset). An interesting finding concerns a model trained with MALI, which is a second-order solver, and tested with a higher-order solver (e.g., RK4). This combination achieves 70.21% top-1 accuracy. That is higher than both the training solver (MALI, 69.59% accuracy) and ResNet18 (70.09% accuracy).
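Evaluating a trained model with solvers other than the training solver can be sketched with a fixed dynamics function. Below, `f` is a hypothetical stand-in for trained dynamics (a small `tanh` layer). The final state $z(1)$ is computed with Euler, RK4 and ALF at several step sizes and compared with a fine-step RK4 reference. For a model that behaves like a genuine continuous system, these discretizations should agree closely. The solvers and step sizes here are illustrative and do not reproduce Table 2.

```python
import numpy as np

rng = np.random.default_rng(1)
W = 0.5 * rng.standard_normal((3, 3))

def f(z, t):
    return np.tanh(W @ z)            # stand-in for trained dynamics

def euler(z, T, n):
    h = T / n
    for i in range(n):
        z = z + h * f(z, i * h)
    return z

def rk4(z, T, n):
    h = T / n
    for i in range(n):
        t = i * h
        k1 = f(z, t)
        k2 = f(z + 0.5 * h * k1, t + 0.5 * h)
        k3 = f(z + 0.5 * h * k2, t + 0.5 * h)
        k4 = f(z + h * k3, t + h)
        z = z + (h / 6.0) * (k1 + 2.0 * k2 + 2.0 * k3 + k4)
    return z

def alf(z, T, n):
    h = T / n
    v = f(z, 0.0)                    # initialization v0 = f(z0, t0)
    for i in range(n):
        u = f(z + 0.5 * h * v, i * h + 0.5 * h)
        z, v = z + h * u, 2.0 * u - v
    return z

z0 = rng.standard_normal(3)
reference = rk4(z0, 1.0, 1000)
results = {
    "euler_h0.25": euler(z0, 1.0, 4),
    "euler_h0.05": euler(z0, 1.0, 20),
    "rk4_h0.25": rk4(z0, 1.0, 4),
    "alf_h0.25": alf(z0, 1.0, 4),
}
errors = {name: float(np.max(np.abs(z - reference))) for name, z in results.items()}
print(errors)
```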

Furthermore, many papers claim that a ResNet approximates an ODE (Lu et al., 2018). However, Queiruga et al. (2020) argue that many numerical discretizations fail to be meaningful dynamical systems. Our experiments, in contrast, demonstrate that our model is continuous and hence invariant to discretization schemes.

Adversarial robustness

Besides high accuracy and robustness to the discretization scheme, another advantage of Neural ODEs is their robustness to adversarial attacks. The adversarial robustness of Neural ODEs is studied extensively in (Hanshu et al., 2019), but only validated on small-scale datasets such as Cifar10. To our knowledge, our method is the first to enable effective training of a Neural ODE with high accuracy on large-scale datasets such as ImageNet. We are also the first to validate the robustness of Neural ODEs on ImageNet. We use the advertorch toolbox (https://github.com/BorealisAI/advertorch) to perform adversarial attacks, and test the performance of ResNet and Neural ODE under the FGSM attack. To make the comparison more convincing, we use the pretrained ResNet18 provided by the official PyTorch website (https://pytorch.org/docs/stable/torchvision/models.html). Since the Neural ODE is invariant to the discretization scheme, the gradient for the attack can be derived with one ODE solver while inference on the perturbed image uses another. As summarized in Table 3, the Neural ODE consistently achieves a higher accuracy than ResNet under the same attack.
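FGSM perturbs an input by one step of size $\epsilon$ along the sign of the input gradient of the loss, then clips to the valid input range: $x_{adv} = \mathrm{clip}(x + \epsilon\,\mathrm{sign}(\nabla_x L), 0, 1)$. The experiments use advertorch's implementation. The NumPy sketch below does not use advertorch. Instead, it applies the same update to a toy logistic-regression classifier whose input gradient has a closed form. The classifier, the data and $\epsilon = 0.03$ are illustrative assumptions. For a Neural ODE, $\nabla_x L$ would come from backpropagating through the ODE solver. As noted above, the solver used for the gradient may differ from the one used for inference.

```python
import numpy as np

def sigmoid(s):
    return 1.0 / (1.0 + np.exp(-s))

def bce_loss(x, y, w, b):
    p = sigmoid(x @ w + b)
    return -np.mean(y * np.log(p) + (1.0 - y) * np.log(1.0 - p))

def fgsm(x, y, w, b, eps):
    """x_adv = clip(x + eps * sign(grad_x loss), 0, 1) for p(y=1|x) = sigmoid(w.x + b)."""
    p = sigmoid(x @ w + b)
    grad_x = (p - y)[:, None] * w[None, :]   # per-example gradient of the cross-entropy
    return np.clip(x + eps * np.sign(grad_x), 0.0, 1.0)

rng = np.random.default_rng(0)
x = rng.uniform(0.0, 1.0, size=(8, 5))       # toy "images" with pixels in [0, 1]
y = rng.integers(0, 2, size=8).astype(float)
w, b = rng.standard_normal(5), 0.0
x_adv = fgsm(x, y, w, b, eps=0.03)
print(bce_loss(x, y, w, b), bce_loss(x_adv, y, w, b))   # loss does not decrease
```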

B.2 Time series modeling

We conduct experiments on Latent-ODE models (Rubanova et al., 2019) and Neural CDE (controlled differential equation) models (Kidger et al., 2020a). For all experiments, we use the official implementation and only replace the solver with MALI. The Latent-ODE model is trained on the Mujoco dataset processed with code provided by the official implementation. We experiment with different ratios (10%, 20%, 50%) of training data as described in (Rubanova et al., 2019). All models are trained for 300 epochs with the Adamax optimizer, with an initial learning rate of 0.01 scaled by 0.999 after each epoch. For the Neural CDE model, we perform 5 independent runs for the naive method, ACA and MALI, and report the mean and standard deviation. Results for the adjoint and seminorm adjoint methods are taken from (Kidger et al., 2020a). For Neural CDE, we use MALI with the ALF solver at a fixed stepsize of 0.25, and train the model for 100 epochs with an initial learning rate of 0.004.
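The Latent-ODE learning-rate schedule is a simple exponential decay. This sketch assumes 0-indexed epochs. In PyTorch, it would typically be `torch.optim.Adamax(params, lr=0.01)` combined with `torch.optim.lr_scheduler.ExponentialLR(optimizer, gamma=0.999)` stepped once per epoch.

```python
def latent_ode_lr(epoch, base_lr=0.01, decay=0.999):
    """Learning rate for a 0-indexed epoch: multiplied by 0.999 after every epoch."""
    return base_lr * decay ** epoch

lrs = [latent_ode_lr(e) for e in range(300)]
print(lrs[0], lrs[-1])   # the rate shrinks by about 26% over 300 epochs
```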

B.3 Continuous generative models

B.3.1 Training details

Our experiment is based on the official implementation of (Finlay et al., 2020), differing only in the ODE solver. For a fair comparison, we only use MALI for training, and use the Dopri5 solver from the torchdiffeq package (Chen et al., 2018) with . For MALI, we use the adaptive ALF solver with , and use an initial stepsize of 0.25. Integration time is from 0 to 1.

On the MNIST and CIFAR datasets, we set the regularization coefficients for the kinetic energy and for the Frobenius norm of the Jacobian of the derivative function to 0.05. We train the model for 50 epochs with an initial learning rate of 0.001.
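Following Finlay et al. (2020), the two regularizers are:

- the kinetic energy $\int_0^T \|f(z(t), t)\|_2^2\,dt$;
- the squared Frobenius norm of the Jacobian, $\int_0^T \|\partial f/\partial z\|_F^2\,dt$.

The second term is commonly estimated with Gaussian probes $\epsilon$ using $\mathbb{E}\,\|\epsilon^\top \partial f/\partial z\|_2^2 = \|\partial f/\partial z\|_F^2$. The NumPy sketch below is a simplification under our own assumptions. It accumulates both integrals with a left Riemann sum along a fixed-step ALF trajectory of toy `tanh` dynamics, whose exact Jacobian is available for comparison. In practice, these terms are typically integrated alongside the ODE state by the solver. The coefficients 0.05 are the values stated above, and the task loss is omitted.

```python
import numpy as np

rng = np.random.default_rng(0)
W = 0.5 * rng.standard_normal((4, 4))

def f(z, t):
    return np.tanh(W @ z)                       # toy autonomous dynamics

def jacobian(z):
    # Exact Jacobian of tanh(W z): diag(1 - tanh(W z)^2) @ W
    return (1.0 - np.tanh(W @ z) ** 2)[:, None] * W

def regularizers(z0, T=1.0, n_steps=20, n_probe=500, probe_rng=None):
    """Left Riemann sums of the kinetic energy and Jacobian Frobenius terms along a fixed-step ALF path."""
    h = T / n_steps
    z, v = z0, f(z0, 0.0)
    kinetic = frob_exact = frob_probe = 0.0
    for i in range(n_steps):
        t = i * h
        fz, J = f(z, t), jacobian(z)
        kinetic += h * (fz @ fz)
        frob_exact += h * np.sum(J ** 2)
        if probe_rng is not None:
            eps = probe_rng.standard_normal((n_probe, z.size))          # Gaussian probes
            frob_probe += h * np.mean(np.sum((eps @ J) ** 2, axis=1))   # E||eps^T J||^2 = ||J||_F^2
        u = f(z + 0.5 * h * v, t + 0.5 * h)                             # ALF step (Eq. 7)
        z, v = z + h * u, 2.0 * u - v
    return kinetic, frob_exact, frob_probe

z0 = rng.standard_normal(4)
kinetic, frob, frob_est = regularizers(z0, probe_rng=np.random.default_rng(1))
penalty = 0.05 * kinetic + 0.05 * frob   # added to the task loss, which is omitted here
print(kinetic, frob, frob_est, penalty)
```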

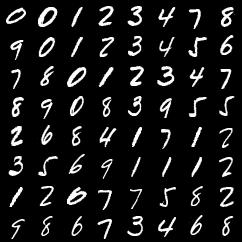

B.3.2 Additional results

We show generated examples on MNIST dataset in Fig. 3, results for Cifar10 dataset in Fig. 4, and results for ImageNet64 in Fig. 5.

B.4 Error in gradient estimation for toy examples with integration time smaller than 1

We plot the error in gradient estimation for the toy example defined by Eq. 6 in the main paper in Fig. 6. Note that the integration time here is set smaller than 1, whereas in the main paper it is larger than 20. We observe the same results: MALI and ACA generate smaller errors than the adjoint and the naive methods.

B.5 Results of damped MALI

For all experiments in the main paper, we set $\eta = 1$ and did not use damping. For completeness, we experimented with damped MALI using different values of $\eta$. As shown in Table 7, MALI is robust to different $\eta$ values.

| Test MSE of latent ODE on Mujoco (lower is better) | $\eta$ = 1.0 | $\eta$ = 0.95 | $\eta$ = 0.9 | $\eta$ = 0.85 |
|---|---|---|---|---|
| 10% training data | 0.35 | 0.36 | 0.33 | 0.33 |
| 20% training data | 0.27 | 0.25 | 0.26 | 0.27 |