Magnetic Resonance Parameter Mapping using Self-supervised Deep Learning with Model Reinforcement

Abstract

This paper proposes a novel self-supervised learning method, RELAX-MORE, for quantitative MRI (qMRI) reconstruction. The proposed method uses an optimization algorithm to unroll a model-based qMRI reconstruction into a deep learning framework, enabling the generation of highly accurate and robust MR parameter maps at imaging acceleration. Unlike conventional deep learning methods requiring a large amount of training data, RELAX-MORE is a subject-specific method that can be trained on single-subject data through self-supervised learning, making it accessible and practically applicable to many qMRI studies. Using the quantitative mapping as an example at different brain, knee and phantom experiments, the proposed method demonstrates excellent performance in reconstructing MR parameters, correcting imaging artifacts, removing noises, and recovering image features at imperfect imaging conditions. Compared with other state-of-the-art conventional and deep learning methods, RELAX-MORE significantly improves efficiency, accuracy, robustness, and generalizability for rapid MR parameter mapping. This work demonstrates the feasibility of a new self-supervised learning method for rapid MR parameter mapping, with great potential to enhance the clinical translation of qMRI.

Quantitative MRI, Self-supervised learning, Model reinforcement, Optimization

1 Introduction

Deep learning has had a profound impact on medical imaging, including MRI. The breadth of its impact includes disease diagnosis, prognosis, image analysis, and processing [1, 2]. In particular, deep learning methods for MRI reconstruction are becoming increasingly popular due to their capability to learn important image features directly from large datasets [3]. One popular method is estimating unacquired k-space data through training end-to-end convolutional neural networks (CNNs), using undersampled data as input and corresponding fully sampled data as a reference [4, 5, 6, 7, 8, 9, 10]. This enables the networks to learn the mapping function between the undersampled and fully sampled k-space data pairs. A well-trained network can then reconstruct undersampled k-space data for accelerated MRI.

Despite recent advances in deep learning MRI reconstruction [4, 5, 6, 7, 8, 9, 3, 10, 11, 12], several challenges remain, including limited training data access. Acquiring fully sampled k-space data can be time-consuming and expensive, and the data collection challenge for quantitative MRI is even more pronounced. Quantitative MRI is an advanced method to quantify tissue MR parameters by modeling the acquired MR signal. For example, a popular qMRI method called variable Flip Angle (vFA) [13] to quantify tissue spin-lattice relaxation time () requires acquisition for multiple repeated scans at different flip angles. Since each acquisition can take several minutes, the repeated scan time can be non-trivial. There has been some recent progress using deep learning-based methods to accelerate qMRI [14, 15, 16, 17, 18, 19, 20, 21, 22, 23], including MANTIS by Liu et al. [18, 19], DeepDTI by Tian et al. [20], MoDL-QSM by Feng et al. [21], and DOPAMINE by Jun et al. [22], all of which use supervised learning to enable rapid MR parameter mapping from undersampled k-space data.

Unsupervised or self-supervised learning methods have recently gained increasing attention due to their nature of reduced data demand. In unsupervised learning methods, the algorithm can identify patterns and structures of the input data without explicit reference pair. Self-supervised learning methods involve training a model to learn the properties and features of the input data through self-instruction. Liu et al. recently [23] proposed a self-supervised learning framework that incorporates an end-to-end CNN mapping and an MRI physics model to guide the generation of MR parameter maps. This method, referred to as REference-free LAtent map eXtraction (RELAX), has shown excellent performance in reconstructing undersampled qMRI data for and spin-spin relaxation time () mapping in the brain and knee. Notably, RELAX only trains on undersampled k-space datasets without the need for fully sampled k-space for reference, greatly alleviating the data limitation. The end-to-end CNN mapping in RELAX is critical in converting the undersampled k-space data into MR parameter maps through cross-domain learning, despite the end-to-end mapping (e.g., deep U-Net) requires high computing resources such as GPU memory, needs diverse training datasets to provide sufficient image features and lacks convergence guarantees due to its nonlinear network operations and deep structures.

Optimization algorithms can be used to unroll deep neural networks and have been applied for MRI reconstruction [24, 25, 26]. Those algorithms stem from classic mathematical methods to solve constrained optimization problems and often have well-defined solutions. For example, Variational Network (VN) [4] unrolls a variational model by iteratively updating along the gradient descent direction. ADMM-net [27] learns to unroll the alternating direction method of multipliers (ADMM). ISTA-net [28] unrolls iterative shrinkage thresholding algorithm (ISTA) to solve the reconstruction problem with regularizations. PD-net [29] iterates the learnable primal-dual hybrid gradient algorithm (PDHG) and learns the primal and dual variables in an alternative fashion.

This paper proposes a new deep learning method for qMRI reconstruction by marrying self-supervised learning and an optimization algorithm to unroll the learning process. This proposed method is an extension of RELAX [23], thus referred to as RELAX with MOdel REinforcement (RELAX-MORE), which jointly enforces the optimization and MR physics models for rapid MR parameter mapping. RELAX-MORE aims to enable efficient, accurate, and robust MR parameter estimation while achieving subject-specific learning, featuring training on single-subject data for further addressing the data limitation.

The rest of the paper is organized as follows: Section 2 introduces the theory and a bi-level optimization scheme of RELAX-MORE. Section 3 details the implementation of lower level and upper level optimization and describes the experiment setup. Section 4 provides the results and discussion. Section 5 concludes the paper.

2 Theory

2.1 Supervised Learning and Self-supervised Learning for Accelerated MRI Reconstruction

Let be the measured MR image with a resolution , where are spatial dimensions and denotes the number of coil elements in a typical multi-coil MRI reconstruction scenario. The corresponding undersampled k-space measurement and incoproates the MRI encoding process, where is an undersampling mask for accelerated acquisition, is the discrete Fourier transform, denotes the coil sensitivity map and is the signal noise during data acquisition, and are k-space dimensions.

Supervised learning uses the fully sampled image as reference and the goal is to restore the image content from undersampled k-space data by learning network parameters through minimizing the following loss function:

| (1) |

The loss function assesses the difference between the fully sampled and the network output as the reconstructed image . Once trained with a large amount of data pairs , the network can learn the mapping between undersampled measurements and fully sampled data for further inference.

In self-supervised learning, the network is trained to learn the mapping from undersampled k-space data to itself. However, the MR physics model for image formation must be incorporated into the learning pipeline to guide the training process. The loss function in Equation (1) can be reformulated as:

| (2) |

Notably, as minimizing Equation (2) involves no fully sampled data and solely relies on learning the intrinsic structure and properties of the undersampled data itself, making it a powerful concept for deep learning methods with limited data [30, 31, 11].

2.2 Self-supervised Learning for Accelerated qMRI Reconstruction

To extend from MRI reconstruction to qMRI reconstruction, the MR parameter maps can be denoted as where represents each MR parameter and is the total number of MR parameters to be estimated. Given the MR signal model , which is a function of , and the fully sampled image , the MR parameters can be estimated by minimizing the following problem:

| (3) |

This is also referred to as model-based qMRI reconstruction. Here, the MR image and its undersampled k-space data need to include measurements at varying imaging parameters dependent on individual qMRI method. The Equation (3) can be formulated as:

| (4) |

which incorporates the MRI encoding operators.

The above minimization problem (4) can be solved in self-supervised learning by minimizing the following loss function:

| (5) |

where the quantitative MR parameter are parametrized from deep neural networks with learnable network parameters. In RELAX, minimizing Equation (5) with respect to trainable network parameters is to train a deep neural network via network backpropagation for an end-to-end mapping network.

2.3 The RELAX-MORE Mechanism

The proposed RELAX-MORE extends the RELAX framework by further considering unrolling the mapping network using an optimization algorithm. This new method is designed to solve a bi-level optimization problem, where the mapping network learning and quantitative MR parameter estimation can be jointly reformulated as:

| (6a) | ||||

| (6b) | ||||

| where | (6c) | |||

Like RELAX, the upper level (6a) is a backward training process to optimize network parameters by minimizing loss function via normal network backpropagation. However, unlike RELAX, the lower level (6b) can be implemented as a forward process to solve for the optimal solution of via a gradient descent based method. The objective function is a regularized least square model for quantitative MR parameter optimization. The weighted regularization provides prior information pertinent to the desired MR parameter maps. Hand-crafted regularizations can be employed, such as total variation (TV) ( ) or norm () to promote different aspects of image features. Recent studies show that regularization using CNNs can improve feature characterization, suppressing the hand-crafted priors [32, 33]. The weight can be learned and integrated into . The objective function depends on the network parameters that learned from regularizers, which can be parametrized as the summation of CNNs with norm:

| (7) |

Each learns to extract the prior features of different MR parameters , for each . Therefore, the RELAX-MORE for qMRI reconstruction can be mathematically described as solving a CNN regularized bi-level optimization problem:

| (8a) | ||||

| (8b) | ||||

where data fidelity term .

3 Methods

3.1 The Lower Level Optimization: Forward Unrolling of A Learnable Descent Algorithm

Proximal gradient descent, a widely used iterative algorithm, is implemented to unroll the lower level optimization (8b) in RELAX-MORE. For each unrolled iterative phase , the following steps is used to update MR parameter for each :

| (9a) | ||||

| (9b) | ||||

where the proximal operator for regularization is defined as:

| (10) |

This step involves implementing the proximal operation for regularization , which is equivalent to finding the maximum-a-posteriori solution for the Gaussian denoising problem at a noise level [34, 35], thus the proximal operator can be interpreted as a Gaussian denoiser. However, because the proximal operator in the objective function (8b) does not admit closed form solution, a CNN is used to substitute , where the network is constructed as a residual learning network . Network is composed with CNN , which reflects Equation (7), and its adjoint with symmetric but reversed architecture to increase the network capability, and a soft shrinkage operator is applied in between. All the substitutions are mathematically proven to be effective by [28, 36]. Then step (9b) becomes

| (11a) | ||||

| (11b) | ||||

where the soft thresholding operator is for vector and is a soft thresholding parameter that is updated at each phase . In summary, the forward learnable proximal gradient descent algorithm to achieve unrolled lower level optimization in RELAX-MORE can be detailed in Algorithm:

The soft thresholding parameter and step size are learnable and updating during each phase. The final outputs of the algorithm are estimated intermediate MR images for all iterative phases and the reconstructed MR parameter maps of the last phase.

3.2 Initial Input of the Algorithm

Using an initial MR parameter input that is closer to the optimal solution of the Algorithm can lead to better results and eliminate the need for excessive iterations in gradient descent. To achieve a favorable input, initialization networks are used to learn from undersampled k-space data. First, the undersampled k-space data is converted into the image domain, followed by a Coil Combination Network to combine all coil-wise images. coil-combined images are produced to represent measurements during qMRI acquisition. Next, all images are concatenated together as input into the -initialization Network to obtain . The initial MR images can also be obtained from the signal model using , which will be subsequently used in the loss function for training the overall network framework in upper level optimization in Equation (8a).

3.3 The Upper Level Optimization: Backward Training of Overall Network

The loss function (8a) for RELAX-MORE is a summation of the weighted mean squared error (MSE) between undersampled k-space measurement and the k-space representation of estimated MR images for all unrolled phases. Therefore, the overall network training objective in the upper level optimization is to minimize the loss function explicitly expressed as

| (12) |

where the are the weighting factors for the MSE in order of the importance of the output from each phase and the initial .

The detailed framework of the proposed RELAX-MORE is illustrated in Fig. 1 The Coil combination Network and -initialization Network applied 4 repeats of Conv( kernel size, 32 kernels) followed by a ReLU activation function. The last convolution layer has a single kernel Conv(, 1). The CNN , applied 8 Conv(, 64) with a ReLU and the last convolution also has a single kernel Conv(, 1). The CNN applied a reversed network structure.

3.4 Experiment Setup

To investigate the feasibility of RELAX-MORE method for reconstructing accelerated qMRI, we used the widely studied mapping through the vFA method [13] as an example. Herein, the MR signal model can be expressed as:

| (13) |

where several MR images are acquired at multiple flip angles for . are the spin-lattice relaxation time map and proton density map, respectively, reflecting the imaged tissue relaxation properties, with value sensitive to many brain and knee joint diseases. The set of MR parameters estimated in this model are . The flip angles and other imaging parameters, such as repetition time TR are pre-determined.

The experiments include in-vivo studies on the brain and knee of healthy volunteers and ex-vivo phantom studies, all of which were carried out on a Siemens 3T Prisma scanner. For the brain study, the purpose is to investigate the efficiency and performance of RELAX-MORE and compare it with other state-of-the-art reconstruction methods. The vFA on the brain of five subjects was performed in the sagittal plane using a spoiled gradient echo sequence at imaging parameters TE/TR = ms, FA = , FOV = mm and matrix size = with a dedicated 20-channel head coil. For the knee study, the purpose is to investigate the robustness of RELAX-MORE against severe noise contamination and image imperfection. Therefore, the vFA on one knee was performed using a 4-channel receiving-only flex coil at parameters TE/TR = ms, FA = , FOV = mm and matrix size . For the phantom study, the purpose is to investigate the reconstruction accuracy of RELAX-MORE. The vFA phantom data was acquired along the coronal plane with sequence parameters TE/TR = ms, FA = , FOV = mm and matrix size using the 20-channel head coil. In all 3 studies, to minimize bias on estimation due to inhomogeneity and imperfect spoiling, maps were separately acquired for compensation [37], and linear RF phase increments between subsequent repetition cycles and strong gradient spoilers were applied to minimize the impact of imperfect spoiling [38].

The fully acquired MRI k-space data were undersampled retrospectively using two undersampling schemes: 1) 1D Cartesian variable density undersampling at acceleration factor AF = with the 16 central k-space lines fully sampled (Fig. 2(a)) and 2) 2D Poisson disk undersampling at AF = with the central k-space portion fully sampled (Fig. 2(b)). The undersampling patterns were varied for each flip angle, like in previous studies [18, 23]. The coil sensitivity maps were estimated from ESPIRiT [39].

The selection of network hyperparameters was non-trivial and optimized by empirically searching on a reasonably large range. More specifically, we used initial for brain images; initial for knee images; and for phantom images. The loss function applied at and for other phase steps. The network parameters were initialized using Xavier initialization [40]. Because RELAX-MORE trains on single-subject data, the batch size was set to include the entire image volume. The network was trained for epochs using the Adam optimizer [41]. The learning rate, which controls the step size taken in each iteration of optimizing the loss function, was set to for brain and knee images, and for the phantom image. It should be noted that, unlike conventional deep learning reconstruction methods where training and testing are two separate steps, in RELAX-MORE with subject-specific self-supervised learning, the reconstruction has readily concluded once the training has converged for one subject. All the programming in this study was implemented using Python language and PyTorch package, and experiments were conducted on one NVIDIA A100 80GB GPU and an Intel Xeon 6338 CPU at Centos Linux system.

4 Results

4.1 Impact of Unrolling Gradient Descent Algorithm

The reconstruction performance of RELAX-MORE is affected by the degree of unrolling the gradient descent algorithm. Fig. 3 demonstrates the evolution of the estimated maps from fully sampled data at different total unrolling phase numbers from to of the gradient descent algorithm. The larger reflects deeper unrolling operation and thus requires more computing resources. Referring to the zoom-in views of Fig. 3, one can observe that with increasing total phase number, the algorithm starts to better distinguish the values from white matter (WM), grey matter (GM), and cerebrospinal fluid (CSF) regions. Initially blurry at a lower , the tissue details start to sharpen with increasing , resembling better the fully sampled map obtained using the standard pixel-wise fitting. However, the differences between phase and are negligible, indicating that performance gain can reach a plateau with a sufficient depth of unrolling steps. Therefore, to ensure consistent experiment setup and balance the trade-off between algorithm complexity and reconstruction performance, the number of was set to for all the experiments thereafter. This result illustrates the effectiveness of unrolling gradient descent algorithm in the RELAX-MORE framework.

4.2 Comparison with State-of-the-Art Methods

RELAX-MORE was compared with two state-of-the-art non-deep learning qMRI reconstruction methods and one self-supervised deep learning method. These methods include 1) Locally Low Rank (LLR) [42], where image reconstruction was first performed, followed by pixel-wise parameter fitting. LLR exploits the rank-deficiency of local image regions along the acquisition parameter dimension to accelerate parameter mapping; 2) Model-TGV [43], a model-based qMRI reconstruction method that improves the piece-wise constant region restriction of total variation through a generalization of the total variation theory and 3) RELAX [23], an end-to-end self-supervised deep learning method for rapid MR parameter mapping. LLR and Model-TGV were implemented using the recommended optimization parameters in their original papers and code. Contrary to the original RELAX implementation, which was trained using many subjects, in the interest of a fair comparison, our implementation of RELAX was carried out on a single-subject training using an Adam optimizer with epochs.

maps estimated from 1D Cartesian variable density undersampling using the different methods are presented in Fig. 4. As can be observed in Fig. 4(a), the zero-filled map obtained by pixel-wise fitting the undersampled images is noisy, displaying ripple artifacts due to aliasing. Although LLR can partially remove these artifacts, it retains the noisy signature. Model-TGV averages out the noise, producing a cleaner tissue appearance and better contrast, but it is over-smoothed, resulting in blurry maps. On the other hand, RELAX removes noises and artifacts and produces sharpened maps but remains somewhat blocky. This is hypothesized due to the difficulty of converging the end-to-end network using single-subject data. RELAX-MORE produces artifact-free maps with anticipated excellent performance for noise removal, resulting in a good performance in appearance and contrast. This is further witnessed in the zoom-in view of Fig. 4(b), where the zero-filled, LLR, and even fully sampled images cannot reliably estimate in the cervical spine where the signal is low due to insufficient head coil coverage. Those pixel-wise fitting methods are typically prone to image noise contamination. Although Model-TGV and RELAX can better estimate in these low SNR regions, the blurry Model-TGV results make it difficult to distinguish between the spinal cord and subarachnoid space, whereas RELAX shows a disconnect in the subarachnoid space which may be due to over-sharpening. However, RELAX-MORE shows remarkably good performance in maintaining contrast and tissue details at those regions, enabling clear distinction between the spinal cord and subarachnoid space regions. In Fig. 4(c) of the cerebrum zoom-in view, RELAX-MORE consistently exhibits the best reconstruction performance in correcting artifacts, removing noises, and preserving tissue contrast and details. Referring to the error maps (Fig. 4(d)), which is the absolute difference between the estimated maps in Fig. 4(c) and the fully sampled map, overall, zero-filled shows the most significant error followed by LLR. Model-TGV and RELAX exhibit similar error maps, whereas RELAX-MORE produces the least error.

maps estimated from 2D Poisson disk undersampling are shown in Fig. 5. Comparing the images in Fig. 5(a), the overall signature differences are like the 1D undersampling case except for the undersampling artifacts being noise-like due to 2D undersampling. Referring to the zoom-in view and comparing the estimated maps again show similar differences to the 1D undersampling case. However, compared with other methods, RELAX-MORE can clearly distinguish between WM and GM of the cerebellum in Fig. 5(b), particularly in the posterior part. The absolute difference maps taken between the estimated maps in Fig. 5(c) and the fully sampled map, shown in Fig. 5(d), exhibit similar results to the 1D undersampling case with RELAX-MORE showing the least error. This is likely achieved through integrating deep learning capability and unrolling gradient descent algorithm with proximal operator prioritizing noise suppression without compromising the fidelity and clarity of the underlying tissue structure.

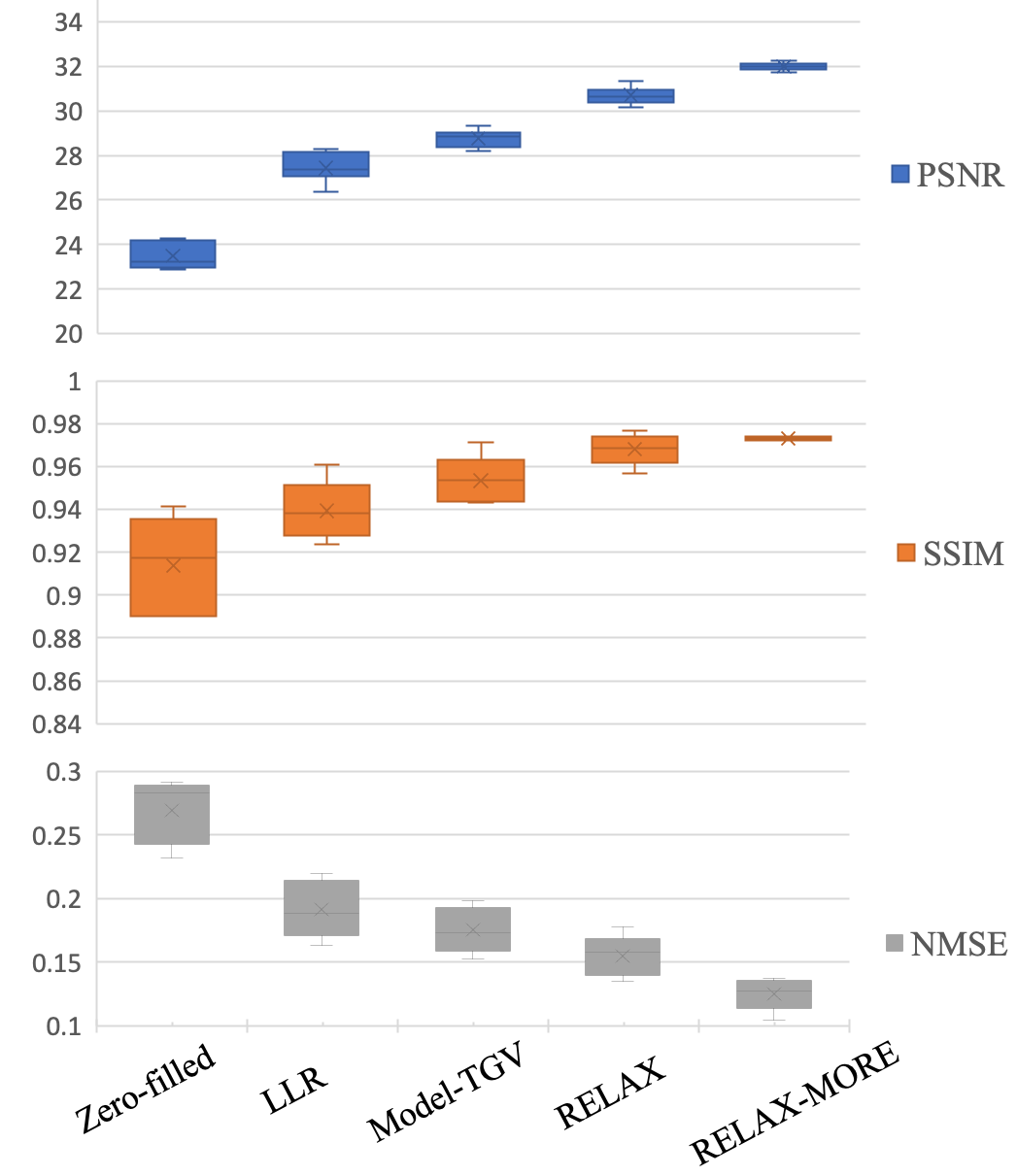

Further performance comparison was carried out using peak-signal-to-noise-ratio (PSNR), structural similarity index measure (SSIM), and normalized mean squared error (NMSE) as evaluation metrics for maps. PSNR is a measbure of the quality of the reconstruction, while SSIM is a measure of the similarity between two maps. PSNR, SSIM, and NMSE are defined in the following:

| (14) |

| (15) |

| (16) |

where represent the estimated map and reference map. are local means of pixel intensity, are the standard deviations and is covariance between and , are two constants that avoid denominator to be zero, and . is the largest pixel value of the magnitude of image.

maps obtained from fully sampled data were used for , as a reference to compare the performance among different methods. The metric calculations were carried out on results from 1D Cartesian variable density undersampling, using the brain regions from all five subjects.

The analysis results are presented in Fig. 6, where the average values of PSNR, SSIM and NMSE along with corresponding standard deviations among five subjects are presented using box-whisker plots. For both PSNR and SSIM, RELAX-MORE produces the highest mean value, followed by RELAX, Model-TGV and LLR. Both PSNR and SSIM of RELAX-MORE show the highest consistency, as evidenced by its small standard deviation compared to other methods. Zero-filled shows the lowest mean values for PSNR and SSIM with the largest standard deviation, which can be attributed to aliasing artifacts. RELAX-MORE shows the lowest mean NMSE, followed by RELAX, Model-TGV and LLR. Zero-filled shows the highest mean NMSE. Overall, in agreement with the qualitative observation in Figs. 4 and 5, RELAX-MORE performs superiorly in terms of reconstruction fidelity, structure and texture preservation, and noise suppression, outperforming other state-of-the-art methods.

4.3 Ablation Study: Accuracy and Robustness

An ablation study was carried out further to evaluate the parameter estimation accuracy of the proposed method. To analyze RELAX-MORE in discrete tissue environment, it was applied to undersampled data from a phantom composed of five 20 mL vials consisting of peanut oil and Agar dissolved in DI water and boiled egg white, all immersed in a 200 M water bath (Fig. 7(a)). From left to right, Fig. 7(b) shows the maps obtained from zero-filled data, RELAX-MORE and fully sampled data. Zero-filled map exhibits ripple artifacts due to the undersampling, whereas these artifacts are eliminated in RELAX-MORE. Comparing the line profile indicated by the line in Fig. 7(b) and shown in Fig. 7(c), zero-filled profile resembles amplitude modulation, where the periodic amplitude fluctuation stems from aliasing effects and the higher frequency fluctuation reflects noise. In good agreement with the fully sampled profile, RELAX-MORE’s profile not only removes the periodic amplitude fluctuation but also smoothens the oscillations, demonstrating its ability to average out noise, remove aliasing artifacts while maintaining high accuracy for parameter estimation in a wide range.

The ablation study was also carried out to evaluate the parameter estimation robustness of RELAX-MORE against severe imaging imperfection and the generalizability of RELAX-MORE on different anatomies such as knee joints. Fig. 8 shows the maps from a representative sagittal slice of the knee obtained from zero-filled, RELAX-MORE, and fully sampled data. The MRI data was purposely acquired using a 4-channel receiving-only flex coil, which provides insufficient coil coverage and a poor signal-to-noise ratio for knee MRI. As evident in Fig. 8, the fully sampled map presents enormous noises at bone, cartilage, ligament, meniscus, and muscle and the fine structure, such as the epiphyseal plate (highlighted by a white arrow) is contaminated by noises. The zero-filled map from 1D Cartesian acceleration also presents severe undersampling artifacts combined with the noise rendering erroneous quantification. However, RELAX-MORE successfully removes all the artifacts, suppresses the unwanted image noises in quantification, and provides a surprisingly favorable quantification of different knee joint structures. RELAX-MORE demonstrates its high robustness and generalizability for reconstructing the in-vivo brain and in-vivo knee joint, making it a widely applicable method for different anatomies.

4.4 Transfer Learning: Computing Time Efficiency

RELAX-MORE is a subject-specific method that utilizes self-supervised learning for efficient qMRI reconstruction. While RELAX-MORE can perform well using single-subject data, as shown in all other experiments, the reconstruction process (e.g., network training) needs to be conducted for each subject data. In this experiment, we investigated transfer learning to improve the computing time efficiency for the training/reconstruction of RELAX-MORE. Using transfer learning, the network weights after training on one brain data were then applied as the starting point to train the brain data of another subject.

In Fig. 9, the performance of RELAX-MORE with and without transfer learning is presented by plotting the SSIMs for estimations as a function of epoch number at 1D Cartesian acceleration. Comparing the two SSIM curves in Fig. 9(a), it is observed that transfer learning starts at a higher initial SSIM value during the initial training phase and increases faster compared to the case without transfer learning. This implies that transfer learning benefits the model to converge more efficiently due to commencing training with pre-trained weights. This is further confirmed by comparing the maps generated with (Fig. 9(b) top row) and without (Fig. 9(b) bottom row) transfer learning, where at low epoch values (500, 1000), transfer learning generated maps show better clarity and contrast between different tissue types compared to its non-transfer learning counterpart. As the training epoch increases, both SSIM curves flatten, indicating that the model has reached stable performance. The results suggest that transfer learning can improve the computing time efficiency of RELAX-MORE with reduced training/reconstruction time. At approximately 0.5 seconds per epoch using our GPU device for training the 3D brain data, transfer learning can reduce the reconstruction time to less than 10 min to reach good performance. With the advance of new techniques [44], transfer learning can be a practical approach to further improve the reconstruction timing efficiency for RELAX-MORE.

5 Conclusion

This paper proposes a novel self-supervised learning method, RELAX-MORE, for qMRI reconstruction. This proposed method uses an optimization algorithm to unroll a model-based qMRI reconstruction into a deep learning framework, enabling the generation of highly accurate and robust MR parameter maps at imaging acceleration. Unlike conventional deep learning methods requiring a large amount of training data, RELAX-MORE is a subject-specific method that can be trained on single-subject data through self-supervised learning. Furthermore, in our experiments, RELAX-MORE outperforms several state-of-the-art conventional and deep learning methods for accelerated maps. This work also demonstrates several superior aspects of RELAX-MORE in overall performance, accuracy, robustness, generalizability, and computing timing efficiency, making this method a promising candidate for advancing accelerated qMRI for many clinical applications.

References

- [1] G. Litjens, T. Kooi, B. E. Bejnordi, A. A. A. Setio, F. Ciompi, M. Ghafoorian, J. A. Van Der Laak, B. Van Ginneken, and C. I. Sánchez, “A survey on deep learning in medical image analysis,” Medical image analysis, vol. 42, pp. 60–88, 2017.

- [2] D. Shen, G. Wu, and H.-I. Suk, “Deep learning in medical image analysis,” Annual review of biomedical engineering, vol. 19, pp. 221–248, 2017.

- [3] F. Knoll, K. Hammernik, C. Zhang, S. Moeller, T. Pock, D. K. Sodickson, and M. Akcakaya, “Deep-learning methods for parallel magnetic resonance imaging reconstruction: A survey of the current approaches, trends, and issues,” IEEE signal processing magazine, vol. 37, no. 1, pp. 128–140, 2020.

- [4] K. Hammernik, T. Klatzer, E. Kobler, M. P. Recht, D. K. Sodickson, T. Pock, and F. Knoll, “Learning a variational network for reconstruction of accelerated mri data,” Magnetic resonance in medicine, vol. 79, no. 6, pp. 3055–3071, 2018.

- [5] J. Schlemper, J. Caballero, J. V. Hajnal, A. Price, and D. Rueckert, “A deep cascade of convolutional neural networks for mr image reconstruction,” in Information Processing in Medical Imaging: 25th International Conference, IPMI 2017, Boone, NC, USA, June 25-30, 2017, Proceedings 25. Springer, 2017, pp. 647–658.

- [6] Y. Han, J. Yoo, H. H. Kim, H. J. Shin, K. Sung, and J. C. Ye, “Deep learning with domain adaptation for accelerated projection-reconstruction mr,” Magnetic resonance in medicine, vol. 80, no. 3, pp. 1189–1205, 2018.

- [7] B. Zhu, J. Z. Liu, S. F. Cauley, B. R. Rosen, and M. S. Rosen, “Image reconstruction by domain-transform manifold learning,” Nature, vol. 555, no. 7697, pp. 487–492, 2018.

- [8] G. Yang, S. Yu, H. Dong, G. Slabaugh, P. L. Dragotti, X. Ye, F. Liu, S. Arridge, J. Keegan, Y. Guo et al., “Dagan: deep de-aliasing generative adversarial networks for fast compressed sensing mri reconstruction,” IEEE transactions on medical imaging, vol. 37, no. 6, pp. 1310–1321, 2017.

- [9] D. Lee, J. Yoo, S. Tak, and J. C. Ye, “Deep residual learning for accelerated mri using magnitude and phase networks,” IEEE Transactions on Biomedical Engineering, vol. 65, no. 9, pp. 1985–1995, 2018.

- [10] F. Liu, A. Samsonov, L. Chen, R. Kijowski, and L. Feng, “Santis: sampling-augmented neural network with incoherent structure for mr image reconstruction,” Magnetic resonance in medicine, vol. 82, no. 5, pp. 1890–1904, 2019.

- [11] B. Yaman, S. A. H. Hosseini, S. Moeller, J. Ellermann, K. Uğurbil, and M. Akçakaya, “Self-supervised learning of physics-guided reconstruction neural networks without fully sampled reference data,” Magnetic resonance in medicine, vol. 84, no. 6, pp. 3172–3191, 2020.

- [12] M. Blumenthal, G. Luo, M. Schilling, M. Haltmeier, and M. Uecker, “Nlinv-net: Self-supervised end-2-end learning for reconstructing undersampled radial cardiac real-time data,” in ISMRM annual meeting, 2022.

- [13] H. Z. Wang, S. J. Riederer, and J. N. Lee, “Optimizing the precision in t1 relaxation estimation using limited flip angles,” Magnetic resonance in medicine, vol. 5, no. 5, pp. 399–416, 1987.

- [14] Y. Zhu, J. Cheng, Z.-X. Cui, Q. Zhu, L. Ying, and D. Liang, “Physics-driven deep learning methods for fast quantitative magnetic resonance imaging: Performance improvements through integration with deep neural networks,” IEEE Signal Processing Magazine, vol. 40, no. 2, pp. 116–128, 2023.

- [15] C. Cai, C. Wang, Y. Zeng, S. Cai, D. Liang, Y. Wu, Z. Chen, X. Ding, and J. Zhong, “Single-shot t2 mapping using overlapping-echo detachment planar imaging and a deep convolutional neural network,” Magnetic resonance in medicine, vol. 80, no. 5, pp. 2202–2214, 2018.

- [16] O. Cohen, B. Zhu, and M. S. Rosen, “Mr fingerprinting deep reconstruction network (drone),” Magnetic resonance in medicine, vol. 80, no. 3, pp. 885–894, 2018.

- [17] H. M. Luu, D.-H. Kim, J.-W. Kim, S.-H. Choi, and S.-H. Park, “qmtnet: Accelerated quantitative magnetization transfer imaging with artificial neural networks,” Magnetic Resonance in Medicine, vol. 85, no. 1, pp. 298–308, 2021.

- [18] F. Liu, L. Feng, and R. Kijowski, “Mantis: model-augmented neural network with incoherent k-space sampling for efficient mr parameter mapping,” Magnetic resonance in medicine, vol. 82, no. 1, pp. 174–188, 2019.

- [19] F. Liu, R. Kijowski, L. Feng, and G. El Fakhri, “High-performance rapid mr parameter mapping using model-based deep adversarial learning,” Magnetic resonance imaging, vol. 74, pp. 152–160, 2020.

- [20] Q. Tian, B. Bilgic, Q. Fan, C. Liao, C. Ngamsombat, Y. Hu, T. Witzel, K. Setsompop, J. R. Polimeni, and S. Y. Huang, “Deepdti: High-fidelity six-direction diffusion tensor imaging using deep learning,” NeuroImage, vol. 219, p. 117017, 2020.

- [21] R. Feng, J. Zhao, H. Wang, B. Yang, J. Feng, Y. Shi, M. Zhang, C. Liu, Y. Zhang, J. Zhuang et al., “Modl-qsm: Model-based deep learning for quantitative susceptibility mapping,” NeuroImage, vol. 240, p. 118376, 2021.

- [22] Y. Jun, H. Shin, T. Eo, T. Kim, and D. Hwang, “Deep model-based magnetic resonance parameter mapping network (dopamine) for fast t1 mapping using variable flip angle method,” Medical Image Analysis, vol. 70, p. 102017, 2021.

- [23] F. Liu, R. Kijowski, G. El Fakhri, and L. Feng, “Magnetic resonance parameter mapping using model-guided self-supervised deep learning,” Magnetic resonance in medicine, vol. 85, no. 6, pp. 3211–3226, 2021.

- [24] D. Liang, J. Cheng, Z. Ke, and L. Ying, “Deep magnetic resonance image reconstruction: Inverse problems meet neural networks,” IEEE Signal Processing Magazine, vol. 37, no. 1, pp. 141–151, 2020.

- [25] V. Monga, Y. Li, and Y. C. Eldar, “Algorithm unrolling: Interpretable, efficient deep learning for signal and image processing,” IEEE Signal Processing Magazine, vol. 38, no. 2, pp. 18–44, 2021.

- [26] M. T. McCann, K. H. Jin, and M. Unser, “Convolutional neural networks for inverse problems in imaging: A review,” IEEE Signal Processing Magazine, vol. 34, no. 6, pp. 85–95, 2017.

- [27] Y. Yang, J. Sun, H. Li, and Z. Xu, “Deep admm-net for compressive sensing mri,” in Advances in Neural Information Processing Systems, vol. 29. Curran Associates, Inc., 2016.

- [28] J. Zhang and B. Ghanem, “Ista-net: Interpretable optimization-inspired deep network for image compressive sensing,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2018, pp. 1828–1837.

- [29] J. Adler and O. Öktem, “Learned primal-dual reconstruction,” IEEE transactions on medical imaging, vol. 37, no. 6, pp. 1322–1332, 2018.

- [30] B. Yaman, S. A. H. Hosseini, and M. Akcakaya, “Zero-shot self-supervised learning for MRI reconstruction,” in International Conference on Learning Representations, 2022.

- [31] M. Akçakaya, S. Moeller, S. Weingärtner, and K. Uğurbil, “Scan-specific robust artificial-neural-networks for k-space interpolation (raki) reconstruction: Database-free deep learning for fast imaging,” Magnetic resonance in medicine, vol. 81, no. 1, pp. 439–453, 2019.

- [32] H. K. Aggarwal, M. P. Mani, and M. Jacob, “Modl: Model-based deep learning architecture for inverse problems,” IEEE transactions on medical imaging, vol. 38, no. 2, pp. 394–405, 2018.

- [33] S. Diamond, V. Sitzmann, F. Heide, and G. Wetzstein, “Unrolled optimization with deep priors,” arXiv preprint arXiv:1705.08041, 2017.

- [34] F. Heide, M. Steinberger, Y.-T. Tsai, M. Rouf, D. Pajak, D. Reddy, O. Gallo, J. Liu, W. Heidrich, K. Egiazarian et al., “Flexisp: A flexible camera image processing framework,” ACM Transactions on Graphics (ToG), vol. 33, no. 6, pp. 1–13, 2014.

- [35] S. V. Venkatakrishnan, C. A. Bouman, and B. Wohlberg, “Plug-and-play priors for model based reconstruction,” in 2013 IEEE Global Conference on Signal and Information Processing. IEEE, 2013, pp. 945–948.

- [36] W. Bian, Y. Chen, and X. Ye, “Deep parallel mri reconstruction network without coil sensitivities,” in Machine Learning for Medical Image Reconstruction: Third International Workshop, MLMIR 2020, Held in Conjunction with MICCAI 2020, Lima, Peru, October 8, 2020, Proceedings 3. Springer, 2020, pp. 17–26.

- [37] L. I. Sacolick, F. Wiesinger, I. Hancu, and M. W. Vogel, “B1 mapping by bloch-siegert shift,” Magnetic resonance in medicine, vol. 63, no. 5, pp. 1315–1322, 2010.

- [38] C. Preibisch and R. Deichmann, “Influence of rf spoiling on the stability and accuracy of t1 mapping based on spoiled flash with varying flip angles,” Magnetic Resonance in Medicine: An Official Journal of the International Society for Magnetic Resonance in Medicine, vol. 61, no. 1, pp. 125–135, 2009.

- [39] M. Uecker, P. Lai, M. J. Murphy, P. Virtue, M. Elad, J. M. Pauly, S. S. Vasanawala, and M. Lustig, “Espirit—an eigenvalue approach to autocalibrating parallel mri: where sense meets grappa,” Magnetic resonance in medicine, vol. 71, no. 3, pp. 990–1001, 2014.

- [40] X. Glorot and Y. Bengio, “Understanding the difficulty of training deep feedforward neural networks,” in Proceedings of the thirteenth international conference on artificial intelligence and statistics. JMLR Workshop and Conference Proceedings, 2010, pp. 249–256.

- [41] D. P. Kingma and J. Ba, “Adam: A method for stochastic optimization,” in 3rd International Conference on Learning Representations, ICLR 2015, San Diego, CA, USA, May 7-9, 2015, Conference Track Proceedings, Y. Bengio and Y. LeCun, Eds., 2015.

- [42] T. Zhang, J. M. Pauly, and I. R. Levesque, “Accelerating parameter mapping with a locally low rank constraint,” Magnetic resonance in medicine, vol. 73, no. 2, pp. 655–661, 2015.

- [43] O. Maier, J. Schoormans, M. Schloegl, G. J. Strijkers, A. Lesch, T. Benkert, T. Block, B. F. Coolen, K. Bredies, and R. Stollberger, “Rapid t1 quantification from high resolution 3d data with model-based reconstruction,” Magnetic resonance in medicine, vol. 81, no. 3, pp. 2072–2089, 2019.

- [44] F. Zhuang, Z. Qi, K. Duan, D. Xi, Y. Zhu, H. Zhu, H. Xiong, and Q. He, “A comprehensive survey on transfer learning,” Proceedings of the IEEE, vol. 109, no. 1, pp. 43–76, 2020.