MAFA: Managing False Negatives for Vision-Language Pre-training

Abstract

We consider a critical issue of false negatives in Vision-Language Pre-training (VLP), a challenge that arises from the inherent many-to-many correspondence of image-text pairs in large-scale web-crawled datasets. The presence of false negatives can impede achieving optimal performance and even lead to a significant performance drop. To address this challenge, we propose MAFA (MAnaging FAlse negatives), which consists of two pivotal components building upon the recently developed GRouped mIni-baTch sampling (GRIT) strategy: 1) an efficient connection mining process that identifies and converts false negatives into positives, and 2) label smoothing for the image-text contrastive (ITC) loss. Our comprehensive experiments verify the effectiveness of MAFA across multiple downstream tasks, emphasizing the crucial role of addressing false negatives in VLP, potentially even surpassing the importance of addressing false positives. In addition, the compatibility of MAFA with the recent BLIP-family model is also demonstrated. Code is available at https://github.com/jaeseokbyun/MAFA.

1 Introduction

With large-scale web-crawled datasets [54, 4, 52, 53], majorities of vision-language pre-training (VLP) models are trained in a self-supervised learning manner using the combination of several pre-tasks and losses [35, 36, 3, 65, 67]: e.g., masked language modeling (MLM), image-text contrastive (ITC), and image-text matching (ITM) losses. Despite their promising results, one of the pressing challenges they face is the presence of noisy captions in image-text pairs that often provide incomplete or even incorrect descriptions [49, 43, 15, 61, 46, 66, 10]. Several recent works have focused on addressing such issue of noisy correspondence in image-text pairs [23, 20, 12, 21, 49, 36]. Notably, BLIP [36] introduced a caption refinement process by leveraging an image captioning model and a filter to generate synthetic clean captions and remove noisy captions. Such process can be seen as correcting the false positives that were injected by the noisy captions.

Contrastively, we note that there is another type of challenge for VLP that stems from the nature of many-to-many correspondence of image-text pairs. Namely, it is common for an image (resp. text) to have additional positive connections (blue lines in Figure 1) with other texts (resp. images), which are paired with their corresponding images (resp. texts). This is due to the fact that the existing image-text datasets are constructed by only collecting paired image-text instances, hence the information regarding non-paired but semantically close image-text combinations can be missed. Consequently, for each image (resp. text), the text (resp. image) that is given as the pair with the image (resp. text) is treated as the only positive sample during pre-training, while the other texts (resp. images) are all treated as negatives. This setup inevitably leads to the prevalence of false negatives during computing ITC and ITM losses and confuses the learning process. A naive solution would be to identify missing positive connections by examining all possible image-text combinations in the dataset. However, it is cleaerly infeasible for both manual and model-based evaluations due to prohibitive complexity. For example, even for a dataset of moderate size, e.g., containing 5 million image-text pairs, the number of combinations that need to be examined is , which amounts to approximately trillion.

We note such issue of false negatives has been more or less overlooked in recent studies [36, 65, 3], which incorporated the in-batch hard negative sampling for the ITM task as a standard tool for VLP. This sampling technique, initially proposed in ALBEF [35], involves selecting hard negative samples from the mini-batch based on the image-text similarity scores computed from the ITC task. More recently, GRIT-VLP [3] significantly improved the performance by proposing an improved hard negative sampling by first grouping the similar image-text pairs together before forming a mini-batch. Ideally, if all semantically close image-text pairs were correctly labeled, hard negative mining could effectively identify only informative hard negatives. However, in a typical VLP setting where such information is absent, the hard negatives in fact frequently become false negatives, resulting in sub-optimal model performance.

To address this challenge of false negatives, particularly prevalent when hard negative sampling is in action, we have implemented two significant enhancements. Firstly, we devise an Efficient Connection Mining (ECM) process that identifies missing positive connections between non-paired but semantically close images and texts. Rather than reviewing all possible combinations, ECM strategically extracts the plausible candidates which are selected as hard negatives. These candidates are inspected by a pre-trained discriminator, which determines their potential to be converted into positives. The candidates identified as positives by the discriminator are then incorporated as additional positives for calculating ITC, ITM, and MLM losses during the training process. Secondly, we introduce Smoothed ITC (S-ITC) which is based on the principle of label smoothing [58]. This approach is specifically designed to mitigate the over-penalization of false negative samples within grouped mini-batches, without incurring any additional memory or computational overhead.

Our experimental results demonstrate that the proposed method, dubbed as MAFA (MAnaging FAlse negatives), can substantially improve the VLP performance. For example, a model trained with MAFA on a standard 4M dataset (i.e., 4M-Noisy) can almost achieve the performance of a baseline model trained on a much larger 14M dataset, without exploiting any additional information such as bounding boxes or object tags. Our systematic ablation analyses demonstrate that such performance enhancement primarily results from mitigating effect of false negatives. Another finding from our experiments is that converting false negatives into additional positives is more advantageous than merely eliminating them. Moreover, we also demonstrate that the impact of addressing the false negative issue is orthogonal to and may outweigh that of addressing the false positive issue in VLP, which is done by comparing and combining MAFA with the BLIP [36] framework. Finally, we show MAFA is also compatible with recently proposed BLIP-2 [37], underscoring the generality of our method in VLP.

2 Related Work

Vision-language pre-training (VLP).

Initial VLP models [6, 69, 7, 39, 31, 16, 22, 41, 56, 42, 24, 62] which utilized a single multi-modal encoder, primarily employed random negative sampling during the ITM task.

Recently, ALBEF [35] incorporated the ITC loss and in-batch hard negative sampling strategy for ITM by leveraging image-text contrastive similarity scores.

Subsequently, the in-batch hard negative sampling strategy for ITM became an implicit rule for the BLIP-family models [65, 67, 3, 36, 29, 2, 37, 28] which adopt both ITC and ITM as training objectives.

While the significance of hard negative sampling for the ITM task has been highlighted in GRIT-VLP [3], limited attention has been given to addressing the issue of false negatives arising from the hard negative samples.

Existing studies have primarily focused on tackling false negatives only in the context of contrastive learning [9, 55, 35, 3] with particular emphasis on the vision domain [26, 8, 5, 51].

To that end, we highlight the need for effective strategies to address false negatives in VLP and demonstrate that false negatives can be managed.

Label smoothing. Label smoothing [58] is a widely adopted technique for improving generalization in various classification tasks. It converts the one-hot target labels into soft labels by mixing them with uniform distribution. This simple technique has demonstrated its efficacy in both visual [45, 38, 33] and language domains [17, 34]. Its benefits have also led to its incorporation as a supplementary technique to enhance the fine-tuning of image-text models like CLIP [50] for image classification tasks [27, 18, 63]. However, the application of label smoothing within VLP and its ability to address false negatives have not been thoroughly explored. Recently, some studies [35, 3] have introduced model-generated soft labels in the VLP domain. However, we show that such soft labels are insufficient for effectively addressing false negatives, justifying the need to incorporate label smoothing to the contrastive loss when the hard negative sampling is employed.

2.1 GRIT-VLP [3]

GRIT-VLP uses ITC, ITM with in-batch hard negative sampling, and MLM as the objectives proposed in ALBEF, except the utilization of the momentum encoder in the pre-training. However, it significantly extends ALBEF by implementing two key components as follows:

(a) GRouped mIni-baTch (GRIT) sampling aims to construct mini-batches containing highly similar example groups. This facilitates the selection of informative hard negative samples during in-batch hard negative sampling. To avoid excessive memory or computational overhead, the procedure of constructing grouped mini-batches for the next epoch is performed concurrently with the loss calculation at each epoch.

For this, additional queues are used to collect and search for the most similar examples, one by one, in which the similarity is measured by the ITC scores. These queues serve as the search space and are significantly larger than the mini-batch size . Thus, the size of the queue, denoted as search space , controls the level of hardness in selecting hard negative samples.

(b) ITC with consistency loss

attempts to address the issue of over-penalization in ITC that arises when GRIT is combined.

Contrary to ALBEF, similar examples are gathered in the GRIT-sampled mini-batch.

Thus, when one-hot labels are used for ITC, they result in equal penalization of all negatives, and it has been observed that the representations of similar samples may unintentionally drift apart.

To mitigate this, GRIT-VLP incorporates soft pseudo-targets generated from the same pre-trained model as a mean of regularization.

3 Motivation

In order to quantify the tendency of the number of false negatives in the ITM task, we report a quantitative analysis result in Table 1. We estimated the number of false negative pairs during a single epoch while training the ITM task, employing two distinct mini-batch sampling strategies: random sampling and GRIT sampling. These strategies were evaluated on both the original 4M dataset (4M-Noisy) and the BLIP-generated clean dataset (4M-Clean). Given the infeasibility of manually examining every negative pair to determine whether it is a false negative, we utilized a strong ITM model pre-trained on a large-scale 129M dataset from BLIP — i.e., a negative pair is regarded as false negative if the strong ITM model predicts it is “matched”. While the ITM model does not always classify false negatives with perfect accuracy, its reliability is deemed adequate for approximating the trend in false negative counts. More details and analyses regarding counting the number of false negatives can be found in the Supplementary Material (S.M).

| Dataset | Sampling | FN w.r.t. image | FN w.r.t. text |

|---|---|---|---|

| 4M-Noisy | Random | 127,130 (2.5%) | 118,080 (2.3%) |

| GRIT | 817,991 (16.4%) | 8111,145 (16.2%) | |

| 4M-Clean | Random | 153,006 (3.1%) | 148,729 (3.0%) |

| GRIT | 1,114,851 (23.2%) | 1,096,485 (22.2%) |

From the table, we observe that GRIT sampling exhibits significantly more false negatives than random sampling, as mentioned in the Introduction. The reason is that GRIT sampling generates challenging in-batch hard negatives, which are beneficial for learning fine-grained representations, but they also often end up being false negatives. Moreover, we observe this trend exacerbates in the 4M-Clean dataset.

In Figure 2, we examine the impact of an increasing number of false negatives on the downstream performance of GRIT-VLP. Specifically, we measured the average IRTR score of GRIT-VLP across different search space (queue) sizes , while keeping the batch size constant. We first clearly observe that the number of false negatives rises as increases. This is expected since expanding the search space for GRIT sampling leads to more similar examples being grouped together in a mini-batch, thereby generating more false negatives. In terms of the downstream performance of GRIT-VLP, we notice a decline when the value of exceeds a certain threshold (). We attribute this decline to the introduction of “noise” caused by the increasing presence of false negatives, which subsequently hampers the effectiveness of hard negative sampling in GRIT-VLP. Based on this analysis, we anticipate that effectively addressing the issue of false negatives while leveraging the potential of hard negative samples will be crucial for enhancing VLP models even further. In S.M, we further explore the impact of varying batch sizes on the occurrence of false negatives under the random sampling scenario, which highlights the significance of handling false negatives even in the typical VLP setting (large batch size under random sampling).

In response to this challenge, we propose MAFA, which effectively addresses the issue of false negatives and improves the downstream performance significantly compared to GRIT-VLP. The preview of the performance of MAFA is also shown in Figure 2 — it is evident that the IRTR score of MAFA continues to improve despite an increase in the number of false negatives.

4 Main Method: MAFA

Our MAFA consists of two integral components: we first present the intuition and details of the Efficient Connection Mining (ECM), then delineate the Smoothed ITC (S-ITC).

4.1 Efficient Connection Mining (ECM)

As outlined in the Introduction, the issue of false negatives originates from missing positive connections within a paired dataset, a challenge that is computationally infeasible to address naively. To tackle this, ECM process is strategically designed to exclusively examine hard negatives with significantly higher likelihoods of being false negatives. Namely, as described in Figure 4 and Algorithm 1 in S.M, the training model selects the hardest negatives for each anchor based on ITC similarity in the GRIT-sampled mini-batches. Once hard negatives are selected, a separate pre-trained ITM model, Connection Discriminator (Con-D), is employed to determine whether these hard negatives are true (hard) negatives or false negatives. If Con-D assigns a probability to a candidate image-text pair of being positive higher than a threshold (which was set to ), that candidate is adopted as a new positive pair in the pre-training losses.

We note that ECM process can be seamlessly integrated with the training process of BLIP-family models (ALBEF [35], BLIP [36], BLIP-2 [37]) as well by adopting GRIT sampling and Con-D, given that ITC and ITM are used as their training objectives. Moreover, due to the inherent randomness of mini-batches, Con-D encounters a variety of hard negatives in each batch and epoch, which enables ECM to create diverse positive connections during training.

Now, we will elaborate on the details of the three pre-training losses (ITC, ITM, and MLM) utilized in our model, and then explain how false negatives identified by ECM are integrated into these losses. Briefly, for ITC and ITM, the labels for identified false negatives are revised from negatives to positives. Moreover, these new positives are additionally used as inputs for MLM.

[ITC with ECM] In ITC, to measure the similarity between images and texts, the [CLS] tokens from the uni-modal encoders are utilized, as illustrated in Figure 3. We denote the cosine similarity between image and text as , where and are linear projections for [CLS] tokens of image and text embeddings, respectively. The objective of ITC is to maximize the similarity of positive pairs while minimizing that of negative pairs; hence, the loss becomes

| (1) |

in which and stand for the one-hot vectors for the correct sample pairs for image and text , respectively. CE denotes the cross-entropy loss. The softmax-normalized image-to-text and text-to-image similarities between image and text , and , are defined as

| (2) |

in which is the temperature and is the number of considered texts and images.

To incorporate missing positive connections identified by Con-D, the one-hot label is adjusted to . For example, for an anchor image , a single text is picked by in-batch hard negative sampling. Then, if is recognized as a new positive by Con-D, the -th element of the one-hot vector changes from to . Simultaneously, the original label value of in becomes , ensuring that the sum remains . If a positive connection is not newly established, the label remains unchanged. The above process is applied identically for text and image . Now, we denote the new ITC loss equipped with as .

[ITM with ECM] ITM task aims to predict whether the provided pair is matched or not. Similar to ITC, the labels for ITM are revised based on the missing positive connections identified by Con-D. We employ a re-sampling strategy for the ambiguous samples, which are uncertain whether they are false negatives or not (those with a probability of being positive between and ). These ambiguous samples are discarded, and the second hardest text (or image) is re-sampled for the anchor image (or text) to obtain a more certain negative for ITM. Thus, the form of the ITM loss is as follows:

| (3) |

in which represents the corrected one-hot label from Con-D.

[MLM with ECM] For MLM, the model is asked to predict masked tokens in the caption using unmasked text tokens and visual information. In addition to the original positive pairs in the dataset, new positive pairs detected by Con-D are additionally used:

| (4) |

in which denotes the set of newly constructed pairs, represents the one-hot label for the masked token, and indicates the model’s prediction for the masked token.

Remark: Con-D is pre-trained with the following pre-training objectives: S-ITC, MLM, and ITM with GRIT sampling. Then, it is fine-tuned on the Karpathy training split of MS-COCO [40], and the output of ITM head of Con-D serves as the probability for a candidate image-text pair to be a positive pair. The additional computation overhead of ECM during training depends only on the number of samples provided to Con-D and the number of new positives given to the multi-modal encoder of the training model for MLM. Since only labels are corrected for ITC and ITM, it does not require additional forward passes for the model being trained. Thus, despite the inclusion of additional forwarding passes for ECM, the extra overhead introduced by ECM is relatively low compared to the momentum distillation technique employed in ALBEF and BLIP. Detailed information regarding the computational cost is described in S.M.

4.2 Smoothed ITC (S-ITC)

To overcome the challenge of false negatives in ITC under GRIT sampling, we additionally introduce a computation-free approach named S-ITC, which employs label smoothing to contrastive loss, which has not been extensively explored in VLP. Specifically, we take the following loss form:

| (5) |

in which represents a mixing parameter, and denotes all-one vector.

We emphasize that label smoothing has not been widely adopted in typical VLP settings since it has not been very effective. As we show in Table 6 (Section 5), performance is significantly degraded when S-ITC is applied under the random sampling scenario. This decline is largely due to the detrimental effect of providing soft labels for the examples in the randomly sampled batch where true negatives are prevalent. In contrast, under GRIT sampling where each mini-batch is predominantly composed of samples that are likely to be false negatives, we observe that S-ITC, which ensures relatively high soft labels for all negatives, becomes highly effective.

There also have been other attempts to address the issue of false negatives in ITC, such as momentum distillation [35] and consistency loss [3]. Here, we explain only I2T-related terms for simplicity; T2I-related terms are similarly computed. Momentum distillation replaces by , where is the stop gradient operator, and denotes the probability obtained from the momentum encoder. Here, is equal to batch-size since the model is accompanied by a queue of size that stores embeddings to provide additional negatives. However, this approach suffers from inefficiency due to the additional forwarding of the momentum model, and it results in increasing the model size. In consistency loss, is substituted with , where is computed by the model itself. Here, is the same as since it does not involve a queue.

| Method | Sum of soft labels | ||

| Top 1 5 | Top | Top | |

| S-ITC | 0.5260 | 0.4740 | |

| Consistency Loss | 0.9822 | 0.0178 | |

| Momentum Distillation | 0.6746 | 0.0009 | 0.3245 |

However, as shown in Table 6 (in Section 5), the effectiveness of momentum distillation and consistency loss is limited. To explore the reason behind this, we examine the soft labels from the above methods in the GRIT sampling scenario as reported in Table 2, aiming to uncover the distribution shapes of the soft labels. The values in the table are obtained through the following process: the soft labels are sorted in descending order, and then averaged across all samples. Further details on the computation process are described in S.M. We observe that momentum distillation continues to assign almost zero labels to negative samples, which are likely to be false negatives under GRIT sampling. This result may stem from the large number of negatives in the queue, which prevents each negative sample from receiving non-negligible labels. On the other hand, consistency loss assigns comparatively higher soft labels (0.0178) than momentum distillation (0.0009) but overly concentrates on a few pairs, resulting in negligible labels for most negatives. In S.M, we provide an analysis that this phenomenon cannot be resolved by merely tuning .

Given that both consistency loss and the momentum distillation fail to achieve the intended objective of assigning non-negligible soft labels to the majority of negatives, we argue that S-ITC, which explicitly assigns higher soft labels to all negatives, can be a simple but effective solution. In S.M, we include an analysis of its robustness to .

Consequently, as illustrated in Figure 3 and Algorithm 1 in S.M, we adopt the following pre-training objective:

| (6) |

where represents the integrated ITC loss of and , which adopts the target labels as .

| Method | Pre-train # Images | COCO R@1 | Flickr R@1 | NLVR2 | VQA | ||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| TR | IR | TR | IR | dev | test-P | test-dev | test-std | ||||||

| UNITER [6] | 4M | 65.7 | 52.9 | 87.3 | 75.6 | 77.18 | 77.85 | 72.70 | 72.91 | ||||

| VILLA [16] | 4M | - | - | 87.9 | 76.3 | 78.39 | 79.30 | 73.59 | 73.67 | ||||

| OSCAR [39] | 4M | 70.0 | 54.0 | - | - | 78.07 | 78.36 | 73.16 | 73.44 | ||||

| ALBEF [35] | 4M | 73.1 | 56.8 | 94.3 | 82.8 | 80.24 | 80.50 | 74.54 | 74.70 | ||||

| TCL [65] | 4M | 75.6 | 59.0 | 94.9 | 84.0 | 80.54 | 81.33 | 74.90 | 74.92 | ||||

| BLIP* (4M-Clean) [36] | 4M | 75.5 | 58.9 | 94.3 | 82.6 | 79.70 | 80.87 | 75.50 | 75.76 | ||||

| GRIT-VLP* [3] | 4M | 76.6 | 59.6 | 95.5 | 82.9 | 81.40 | 81.23 | 75.26 | 75.32 | ||||

| MAFA | 4M | 78.0 | 61.2 | 96.1 | 84.9 | 82.52 | 82.08 | 75.55 | 75.75 | ||||

| MAFA (4M-Clean) | 4M | 79.4 | 61.6 | 96.2 | 84.6 | 82.66 | 82.16 | 75.91 | 75.93 | ||||

| ALBEF | 14M | 77.6 | 60.7 | 95.9 | 85.6 | 82.55 | 83.14 | 75.85 | 76.04 | ||||

| BLIP | 14M | 80.6 | 63.1 | 96.6 | 87.2 | 82.67 | 82.30 | 77.54 | 77.62 | ||||

| Pre-train dataset | MAFA | COCO R@1 | Flickr R@1 | NLVR2 | VQA | ||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| S-ITC | ECM | TR | IR | TR | IR | dev | test-P | test-dev | test-std | ||||

| 4M-Noisy | ✗ | ✗ | 76.6 | 59.6 | 95.5 | 82.9 | 81.40 | 81.23 | 75.26 | 75.32 | |||

| ✗ | ✓ | 77.4 | 60.2 | 95.5 | 83.3 | 82.03 | 81.76 | 75.39 | 75.52 | ||||

| ✓ | ✗ | 77.5 | 60.5 | 96.1 | 84.2 | 81.74 | 81.33 | 75.42 | 75.51 | ||||

| ✓ | ✓ | 78.0 | 61.2 | 96.1 | 84.9 | 82.52 | 82.08 | 75.55 | 75.75 | ||||

| 4M-Clean | ✗ | ✗ | 77.7 | 60.7 | 95.2 | 84.2 | 81.44 | 81.39 | 75.50 | 75.57 | |||

| ✓ | ✓ | 79.4 | 61.6 | 96.2 | 84.6 | 82.66 | 82.16 | 75.91 | 75.93 | ||||

5 Experimental Results

5.1 Data and experimental settings

During our training process, we utilize four datasets (MS-COCO [40], Visual Genome [32], Conceptual Captions [54], and SBU Captions [47]) with a total of 4M unique images (5M image-text pairs), as proposed by ALBEF [35] and UNITER [6]. We refer to this collective dataset as the “4M-Noisy” dataset due to a significant number of captions that offer either incomplete or incorrect descriptions, which can be seen as false positives. To analyze the impact of our approach in handling false negatives relative to the effect of removing false positives, we construct an additional same-sized training set named “4M-Clean” which is composed of clean image-text pairs, refined by the BLIP captioner [36]. Note that all the models are pre-trained with the “4M-Noisy” unless specifically stated as “4M-Clean” in our results table below. Further details on constructing the 4M-Clean dataset are in S.M.

Following ALBEF, we adopt our image encoder as a 12-layer Vision Transformer [13] with 86 million parameters, pre-trained on ImageNet-1k [59]. Both the text and multi-modal encoders utilize a 6-layer Transformer [60], initializing the former with the first 6 layers and the latter with the last 6 layers of BERT-base model (123.7M parameters) [11]. We use the same data augmentation method used in ALBEF and train our model for 20 epochs using 4 NVIDIA A100 GPUs, but excluding the momentum encoder in ALBEF. For Con-D, we use the exact same model architecture as the training model. Unless otherwise noted, we set and for GRIT sampling, and for all other hyper-parameter settings, we follow GRIT-VLP [3]. More details on the dataset, training, and hyperparameters are in S.M.

5.2 Downstream vision and language tasks

After completing the pre-training phase, we proceed to fine-tune our model on three downstream vision and language tasks: image-text retrieval (IRTR) [40], visual question answering (VQA) [19], and natural language for visual reasoning (NLVR2) [57]. For IRTR, we utilize the MS-COCO [40] and Flickr30K (F30K) [48] datasets, with F30K being re-splitted according to [30]. Following BLIP [36], we exclude the SNLI-VE dataset [64] due to reported noise in the data. Our fine-tuning and evaluation process mostly follows that of GRIT-VLP. More details of downstream tasks are in S.M.

5.3 Comparison with baselines

In Table 3, we observe that our approach consistently outperforms other baselines in multiple downstream tasks (IRTR, VQA, NLVR2). Notably, MAFA even surpasses ALBEF (14M) and competes with BLIP (14M) on certain metrics, despite being trained on a significantly smaller dataset. Specifically, MAFA achieves significant improvements over GRIT-VLP, with a substantial margin of IR/R@1, TR/R@1 on MS-COCO and on NLVR2 dev. These results clearly show the significance of addressing false negatives when leveraging hard negative mining. Additionally, we believe that the comparison between BLIP (4M-Clean) and our MAFA shows that the effectiveness of managing false negatives may surpass the impact of mitigating false positives. Furthermore, the enhanced performance of MAFA (4M-Clean) over MAFA shows the synergistic effect of addressing both false positives and negatives.

5.4 Ablation studies

Table 4 presents the effectiveness of two proposed components: efficient connection mining (ECM) and smoothed ITC (S-ITC). Here, all model variants adopt GRIT sampling, with row 1 representing the original GRIT-VLP model. The results clearly demonstrate that applying either the S-ITC (row 3) or the ECM (row 2) individually leads to performance improvements compared to a model that does not consider false negatives (row 1). By combining both S-ITC and ECM in our final model (row 4), we observe significant performance enhancements on the 4M-Noisy dataset. This tendency is validated again in the 4M-Clean dataset, confirming the consistent effectiveness of the proposed components (row 6). Moreover, by comparing the performance gap between MAFA trained on the noisy dataset (row 4) and GRIT-VLP trained on the clean dataset (row 5), we reaffirm that addressing false negatives outweighs the impact of handling false positives. Beyond the 4M dataset, we present additional results across a broader range of data scales (1M, 2M, and 14M) in S.M, demonstrating the robustness of MAFA with respect to data scale variations.

| Method | COCO R@1 | NLVR2 | VQA | ||||

|---|---|---|---|---|---|---|---|

| GRIT | MAFA | IR | TR | dev | test-P | test-dev | test-std |

| ✗ | ✗ | 74.4 | 57.6 | 79.75 | 79.94 | 74.49 | 74.67 |

| ✗ | ✓ | 74.3 | 57.8 | 81.20 | 81.03 | 74.61 | 74.78 |

| ✓ | ✗ | 76.6 | 59.6 | 81.40 | 81.23 | 75.26 | 75.32 |

| ✓ | ✓(ECM-E) | 77.1 | 61.1 | 82.33 | 81.95 | 75.50 | 75.54 |

| ✓ | ✓ | 78.0 | 61.2 | 82.52 | 82.08 | 75.55 | 75.75 |

Table 5 provides an additional comparative analysis on the effectiveness of MAFA, based on whether GRIT sampling and ECM are either applied or not. Here, row 1 denotes the ALBEF model without momentum distillation. Since S-ITC is ineffective under random sampling (as we show in Table 6 below), S-ITC is excluded when GRIT sampling is not utilized (row2). We observe that MAFA enhances the performance for both random and GRIT sampling. However, the effect of MAFA is much more vivid for GRIT sampling (row 4, 5), underscoring the critical role of managing false negatives in hard negative sampling. Moreover, our experiments reveal that converting false negatives into additional positives (row 5) is considerably more beneficial than merely removing them (row 4), which highlights the effect of leveraging new positive connections constructed by the model within the dataset itself.

Furthermore, in Table 6, we provide a comparative analysis on S-ITC, which supports our discussion in Section 4.2; S-ITC brings out a unique synergy only when combined with GRIT-sampling. Namely, in random sampling, we observe that S-ITC rather detrimentally affects performance (row 2). Conversely, under GRIT sampling, we verify that assigning relatively high nonzero labels to most negatives enhances performance. Namely, consistency loss (row 4), which assigns relatively higher soft labels to samples in a batch, outperforms momentum distillation (row 5). S-ITC significantly outperforms the other two variants, which highlights the importance of assigning substantial labels to the majority of negatives, rather than just a few.

5.5 Compatibility of MAFA with BLIP-2 [37]

In Tables 7 and 8, we demonstrate that our MAFA can be successfully integrated with the recent BLIP-2 [37], which is quite a successful vision-language pre-training framework. As described in S.M., the stage-1 of BLIP-2, which adopts ITC, ITM, and (auto-regressive) LM losses as objectives, closely resembles the pre-training procedures of both BLIP and ALBEF. Thus, MAFA can be effortlessly incorporated into stage-1 of BLIP-2, following the identical way described in Section 4.

In Table 7, we observe the performance of BLIP-2+GRIT is significantly degraded (row 2), which indicates that solely applying GRIT sampling leads to a failure of learning. We believe this performance degradation primarily stems from more frequent occurrences of false negatives in BLIP-2. In BLIP-2, due to the significantly enhanced capacity of the model, GRIT sampling, which mines hard negatives based on contrastive similarities calculated from the training model, includes a substantially higher number of false negatives in each batch. The integration of MAFA with BLIP-2 leads to enhanced performance, highlighting the importance of managing false negatives to increase the stability of the training process.

| Method | COCO R@1 | Flickr R@1 | |||

|---|---|---|---|---|---|

| GRIT | Soft labeling | TR | IR | TR | IR |

| ✗ | ✗ | 74.4 | 57.6 | 93.5 | 81.7 |

| Momentum Distillation | 74.2 | 57.4 | 93.5 | 81.9 | |

| S-ITC | 73.5 | 56.1 | 92.9 | 79.9 | |

| ✓ | Consistency Loss | 76.6 | 59.6 | 95.5 | 82.9 |

| Momentum Distillation | 76.1 | 58.9 | 94.4 | 82.7 | |

| S-ITC | 77.5 | 60.5 | 96.1 | 84.2 | |

| Model | TR | IR | ||||

|---|---|---|---|---|---|---|

| R@1 | R@5 | R@10 | R@1 | R@5 | R@10 | |

| BLIP-2 | 82.6 | 96.3 | 98.2 | 66.1 | 86.8 | 92.0 |

| BLIP-2 + GRIT | 65.9 | 89.1 | 95.0 | 52.5 | 79.4 | 87.3 |

| BLIP-2 + MAFA | 83.7 | 96.6 | 98.4 | 66.7 | 86.8 | 91.9 |

We further explore whether the integration of MAFA in stage-1 leads to improved generative learning capabilities after additional stage-2 training where the model is connected to a frozen LLM and pre-trained only with LM loss. We evaluate the performance of models in various zero-shot visual question answering benchmark datasets including GQA [25], OKVQA [44], and VQA [19]. Moreover, we assess the zero-shot image captioning ability on the Karpathy test split of MS-COCO [40]. Table 8 shows that MAFA significantly improves zero-shot performance across various VQA and image captioning tasks. This result not only underscores the compatibility of MAFA with BLIP-2 but also emphasizes that the exclusive integration of MAFA in stage-1 is also beneficial in generative learning capability (stage-2) as well. More detailed results, including those from fine-tuned image captioning and an analysis on how extra positive examples from ECM influence the BLIP-2 stage-2 performance, are provided in S.M.

| Model | VQAv2 | OK-VQA | GQA |

|

|||

| val | test | test-dev | BLEU@4 | CIDEr | |||

| BLIP-2 | 46.6 | 23.8 | 29.1 | 35.6 | 118.8 | ||

| BLIP-2 + MAFA | 50.8 | 29.0 | 31.8 | 37.6 | 125.4 | ||

6 Concluding Remarks

We introduce MAFA, a novel approach equipped with two key components (ECM, S-ITC), specifically designed to tackle the prevalent issue of false negatives in VLP. Our comprehensive experiments demonstrate that addressing false negatives plays a crucial role in VLP. Moreover, we believe that the concept of converting false negatives into additional positives paves the way for future research that leverages the inherent missing positive connections within a dataset.

Acknowledgment

This work was supported in part by the National Research Foundation of Korea (NRF) grant [No.2021R1A2C2007884] and by Institute of Information & communications Technology Planning & Evaluation (IITP) grants [No.2021-0-01343, No.2021-0-02068, No.2022-0-00113, No.2022-0-00959] funded by the Korean government (MSIT). It was also supported by SNU-Naver Hyperscale AI Center.

References

- Agrawal et al. [2019] Harsh Agrawal, Karan Desai, Yufei Wang, Xinlei Chen, Rishabh Jain, Mark Johnson, Dhruv Batra, Devi Parikh, Stefan Lee, and Peter Anderson. nocaps: novel object captioning at scale. In ICCV, 2019.

- Bi et al. [2023] Junyu Bi, Daixuan Cheng, Ping Yao, Bochen Pang, Yuefeng Zhan, Chuanguang Yang, Yujing Wang, Hao Sun, Weiwei Deng, and Qi Zhang. VL-Match: Enhancing vision-language pretraining with token-level and instance-level matching. In ICCV, 2023.

- Byun et al. [2022] Jaeseok Byun, Taebaek Hwang, Jianlong Fu, and Taesup Moon. GRIT-VLP: Grouped mini-batch sampling for efficient vision and language pre-training. In ECCV, 2022.

- Changpinyo et al. [2021] Soravit Changpinyo, Piyush Sharma, Nan Ding, and Radu Soricut. Conceptual 12M: Pushing web-scale image-text pre-training to recognize long-tail visual concepts. In CVPR, 2021.

- Chen et al. [2021] Tsai-Shien Chen, Wei-Chih Hung, Hung-Yu Tseng, Shao-Yi Chien, and Ming-Hsuan Yang. Incremental false negative detection for contrastive learning. arXiv preprint arXiv:2106.03719, 2021.

- Chen et al. [2020] Yen-Chun Chen, Linjie Li, Licheng Yu, Ahmed El Kholy, Faisal Ahmed, Zhe Gan, Yu Cheng, and Jingjing Liu. UNITER: Universal image-text representation learning. In ECCV, 2020.

- Cho et al. [2021] Jaemin Cho, Jie Lei, Hao Tan, and Mohit Bansal. Unifying vision-and-language tasks via text generation. In ICML, 2021.

- Chuang et al. [2020] Ching-Yao Chuang, Joshua Robinson, Yen-Chen Lin, Antonio Torralba, and Stefanie Jegelka. Debiased contrastive learning. In NeurIPS, 2020.

- Chun et al. [2021] Sanghyuk Chun, Seong Joon Oh, Rafael Sampaio De Rezende, Yannis Kalantidis, and Diane Larlus. Probabilistic embeddings for cross-modal retrieval. In CVPR, 2021.

- Chun et al. [2022] Sanghyuk Chun, Wonjae Kim, Song Park, Minsuk Chang, and Seong Joon Oh. ECCV Caption: Correcting false negatives by collecting machine-and-human-verified image-caption associations for ms-coco. In ECCV, 2022.

- Devlin et al. [2018] Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. BERT: Pre-training of deep bidirectional transformers for language understanding. arXiv preprint arXiv:1810.04805, 2018.

- Diao et al. [2021] Haiwen Diao, Ying Zhang, Lin Ma, and Huchuan Lu. Similarity reasoning and filtration for image-text matching. In AAAI, 2021.

- Dosovitskiy et al. [2020] Alexey Dosovitskiy, Lucas Beyer, Alexander Kolesnikov, Dirk Weissenborn, Xiaohua Zhai, Thomas Unterthiner, Mostafa Dehghani, Matthias Minderer, Georg Heigold, Sylvain Gelly, et al. An Image is Worth 16x16 Words: Transformers for image recognition at scale. arXiv preprint arXiv:2010.11929, 2020.

- Fang et al. [2023] Yuxin Fang, Wen Wang, Binhui Xie, Quan Sun, Ledell Wu, Xinggang Wang, Tiejun Huang, Xinlong Wang, and Yue Cao. EVA: Exploring the limits of masked visual representation learning at scale. In CVPR, 2023.

- Gadre et al. [2024] Samir Yitzhak Gadre, Gabriel Ilharco, Alex Fang, Jonathan Hayase, Georgios Smyrnis, Thao Nguyen, Ryan Marten, Mitchell Wortsman, Dhruba Ghosh, Jieyu Zhang, et al. DataComp: In search of the next generation of multimodal datasets. In NeurIPS, 2024.

- Gan et al. [2020] Zhe Gan, Yen-Chun Chen, Linjie Li, Chen Zhu, Yu Cheng, and Jingjing Liu. Large-scale adversarial training for vision-and-language representation learning. In NeurIPS, 2020.

- Ghoshal et al. [2021] Asish Ghoshal, Xilun Chen, Sonal Gupta, Luke Zettlemoyer, and Yashar Mehdad. Learning better structured representations using low-rank adaptive label smoothing. In ICLR, 2021.

- Goyal et al. [2023] Sachin Goyal, Ananya Kumar, Sankalp Garg, Zico Kolter, and Aditi Raghunathan. Finetune like you pretrain: Improved finetuning of zero-shot vision models. In NeurIPS, 2023.

- Goyal et al. [2017] Yash Goyal, Tejas Khot, Douglas Summers-Stay, Dhruv Batra, and Devi Parikh. Making the V in VQA Matter: Elevating the role of image understanding in visual question answering. In CVPR, 2017.

- Hu et al. [2021] Peng Hu, Xi Peng, Hongyuan Zhu, Liangli Zhen, and Jie Lin. Learning cross-modal retrieval with noisy labels. In CVPR, 2021.

- Huang et al. [2022] Runhui Huang, Yanxin Long, Jianhua Han, Hang Xu, Xiwen Liang, Chunjing Xu, and Xiaodan Liang. NLIP: Noise-robust language-image pre-training. arXiv preprint arXiv:2212.07086, 2022.

- Huang et al. [2020] Zhicheng Huang, Zhaoyang Zeng, Bei Liu, Dongmei Fu, and Jianlong Fu. Pixel-BERT: Aligning image pixels with text by deep multi-modal transformers. arXiv preprint arXiv:2004.00849, 2020.

- Huang et al. [2021a] Zhenyu Huang, Guocheng Niu, Xiao Liu, Wenbiao Ding, Xinyan Xiao, Hua Wu, and Xi Peng. Learning with noisy correspondence for cross-modal matching. In NeurIPS, 2021a.

- Huang et al. [2021b] Zhicheng Huang, Zhaoyang Zeng, Yupan Huang, Bei Liu, Dongmei Fu, and Jianlong Fu. Seeing Out of tHe bOx: End-to-end pre-training for vision-language representation learning. In CVPR, 2021b.

- Hudson and Manning [2019] Drew A Hudson and Christopher D Manning. GQA: A new dataset for real-world visual reasoning and compositional question answering. In CVPR, 2019.

- Huynh et al. [2022] Tri Huynh, Simon Kornblith, Matthew R Walter, Michael Maire, and Maryam Khademi. Boosting contrastive self-supervised learning with false negative cancellation. In WACV, 2022.

- Ilharco et al. [2022] Gabriel Ilharco, Mitchell Wortsman, Samir Yitzhak Gadre, Shuran Song, Hannaneh Hajishirzi, Simon Kornblith, Ali Farhadi, and Ludwig Schmidt. Patching open-vocabulary models by interpolating weights. In NeurIPS, 2022.

- Jian et al. [2023] Yiren Jian, Chongyang Gao, and Soroush Vosoughi. Bootstrapping vision-language learning with decoupled language pre-training. arXiv preprint arXiv:2307.07063, 2023.

- Jiang et al. [2023] Chaoya Jiang, Haiyang Xu, Wei Ye, Qinghao Ye, Chenliang Li, Ming Yan, Bin Bi, Shikun Zhang, Fei Huang, and Songfang Huang. BUS: Efficient and effective vision-language pre-training with bottom-up patch summarization. In ICCV, 2023.

- Karpathy and Fei-Fei [2015] Andrej Karpathy and Li Fei-Fei. Deep visual-semantic alignments for generating image descriptions. In CVPR, 2015.

- Kim et al. [2021] Wonjae Kim, Bokyung Son, and Ildoo Kim. ViLT: Vision-and-language transformer without convolution or region supervision. arXiv preprint arXiv:2102.03334, 2021.

- Krishna et al. [2017] Ranjay Krishna, Yuke Zhu, Oliver Groth, Justin Johnson, Kenji Hata, Joshua Kravitz, Stephanie Chen, Yannis Kalantidis, Li-Jia Li, David A Shamma, et al. Visual Genome: Connecting language and vision using crowdsourced dense image annotations. IJCV, 2017.

- Krothapalli and Abbott [2020] Ujwal Krothapalli and A Lynn Abbott. Adaptive label smoothing. arXiv preprint arXiv:2009.06432, 2020.

- Lee et al. [2022] Dongkyu Lee, Ka Chun Cheung, and Nevin L Zhang. Adaptive label smoothing with self-knowledge in natural language generation. In EMNLP, 2022.

- Li et al. [2021] Junnan Li, Ramprasaath Selvaraju, Akhilesh Gotmare, Shafiq Joty, Caiming Xiong, and Steven Chu Hong Hoi. Align before Fuse: Vision and language representation learning with momentum distillation. In NeurIPS, 2021.

- Li et al. [2022] Junnan Li, Dongxu Li, Caiming Xiong, and Steven Hoi. BLIP: Bootstrapping language-image pre-training for unified vision-language understanding and generation. In ICML, 2022.

- Li et al. [2023] Junnan Li, Dongxu Li, Silvio Savarese, and Steven Hoi. BLIP-2: Bootstrapping language-image pre-training with frozen image encoders and large language models. arXiv preprint arXiv:2301.12597, 2023.

- Li et al. [2020a] Weizhi Li, Gautam Dasarathy, and Visar Berisha. Regularization via structural label smoothing. In AISTATS, 2020a.

- Li et al. [2020b] Xiujun Li, Xi Yin, Chunyuan Li, Pengchuan Zhang, Xiaowei Hu, Lei Zhang, Lijuan Wang, Houdong Hu, Li Dong, Furu Wei, et al. Oscar: Object-semantics aligned pre-training for vision-language tasks. In ECCV, 2020b.

- Lin et al. [2014] Tsung-Yi Lin, Michael Maire, Serge Belongie, James Hays, Pietro Perona, Deva Ramanan, Piotr Dollár, and C Lawrence Zitnick. Microsoft COCO: Common objects in context. In ECCV, 2014.

- Lu et al. [2019] Jiasen Lu, Dhruv Batra, Devi Parikh, and Stefan Lee. ViLBERT: Pretraining task-agnostic visiolinguistic representations for vision-and-language tasks. arXiv preprint arXiv:1908.02265, 2019.

- Lu et al. [2020] Jiasen Lu, Vedanuj Goswami, Marcus Rohrbach, Devi Parikh, and Stefan Lee. 12-in-1: Multi-task vision and language representation learning. In CVPR, 2020.

- Maini et al. [2023] Pratyush Maini, Sachin Goyal, Zachary C Lipton, J Zico Kolter, and Aditi Raghunathan. T-MARS: Improving visual representations by circumventing text feature learning. arXiv preprint arXiv:2307.03132, 2023.

- Marino et al. [2019] Kenneth Marino, Mohammad Rastegari, Ali Farhadi, and Roozbeh Mottaghi. OK-VQA: A visual question answering benchmark requiring external knowledge. In CVPR, 2019.

- Müller et al. [2019] Rafael Müller, Simon Kornblith, and Geoffrey E Hinton. When does label smoothing help? In NeurIPS, 2019.

- Nguyen et al. [2024] Thao Nguyen, Samir Yitzhak Gadre, Gabriel Ilharco, Sewoong Oh, and Ludwig Schmidt. Improving multimodal datasets with image captioning. In NeurIPS, 2024.

- Ordonez et al. [2011] Vicente Ordonez, Girish Kulkarni, and Tamara Berg. Im2Text: Describing images using 1 million captioned photographs. In NeurIPS, 2011.

- Plummer et al. [2015] Bryan A Plummer, Liwei Wang, Chris M Cervantes, Juan C Caicedo, Julia Hockenmaier, and Svetlana Lazebnik. Flickr30k Entities: Collecting region-to-phrase correspondences for richer image-to-sentence models. In ICCV, 2015.

- Radenovic et al. [2023] Filip Radenovic, Abhimanyu Dubey, Abhishek Kadian, Todor Mihaylov, Simon Vandenhende, Yash Patel, Yi Wen, Vignesh Ramanathan, and Dhruv Mahajan. Filtering, distillation, and hard negatives for vision-language pre-training. arXiv preprint arXiv:2301.02280, 2023.

- Radford et al. [2021] Alec Radford, Jong Wook Kim, Chris Hallacy, Aditya Ramesh, Gabriel Goh, Sandhini Agarwal, Girish Sastry, Amanda Askell, Pamela Mishkin, Jack Clark, et al. Learning transferable visual models from natural language supervision. arXiv preprint arXiv:2103.00020, 2021.

- Robinson et al. [2020] Joshua Robinson, Ching-Yao Chuang, Suvrit Sra, and Stefanie Jegelka. Contrastive learning with hard negative samples. arXiv preprint arXiv:2010.04592, 2020.

- Schuhmann et al. [2021] Christoph Schuhmann, Richard Vencu, Romain Beaumont, Robert Kaczmarczyk, Clayton Mullis, Aarush Katta, Theo Coombes, Jenia Jitsev, and Aran Komatsuzaki. LAION-400M: Open dataset of clip-filtered 400 million image-text pairs. arXiv preprint arXiv:2111.02114, 2021.

- Schuhmann et al. [2022] Christoph Schuhmann, Romain Beaumont, Richard Vencu, Cade Gordon, Ross Wightman, Mehdi Cherti, Theo Coombes, Aarush Katta, Clayton Mullis, Mitchell Wortsman, et al. LAION-5B: An open large-scale dataset for training next generation image-text models. arXiv preprint arXiv:2210.08402, 2022.

- Sharma et al. [2018] Piyush Sharma, Nan Ding, Sebastian Goodman, and Radu Soricut. Conceptual Captions: A cleaned, hypernymed, image alt-text dataset for automatic image captioning. In ACL, 2018.

- Song and Soleymani [2019] Yale Song and Mohammad Soleymani. Polysemous visual-semantic embedding for cross-modal retrieval. In CVPR, 2019.

- Su et al. [2019] Weijie Su, Xizhou Zhu, Yue Cao, Bin Li, Lewei Lu, Furu Wei, and Jifeng Dai. VL-BERT: Pre-training of generic visual-linguistic representations. arXiv preprint arXiv:1908.08530, 2019.

- Suhr et al. [2018] Alane Suhr, Stephanie Zhou, Ally Zhang, Iris Zhang, Huajun Bai, and Yoav Artzi. A corpus for reasoning about natural language grounded in photographs. arXiv preprint arXiv:1811.00491, 2018.

- Szegedy et al. [2016] Christian Szegedy, Vincent Vanhoucke, Sergey Ioffe, Jon Shlens, and Zbigniew Wojna. Rethinking the inception architecture for computer vision. In CVPR, 2016.

- Touvron et al. [2021] Hugo Touvron, Matthieu Cord, Matthijs Douze, Francisco Massa, Alexandre Sablayrolles, and Hervé Jégou. Training data-efficient image transformers & distillation through attention. In ICML, 2021.

- Vaswani et al. [2017] Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N Gomez, Łukasz Kaiser, and Illia Polosukhin. Attention is all you need. In NeurIPS, 2017.

- Wang et al. [2023] Alex Jinpeng Wang, Kevin Qinghong Lin, David Junhao Zhang, Stan Weixian Lei, and Mike Zheng Shou. Too Large; data reduction for vision-language pre-training. In ICCV, 2023.

- Wang et al. [2021] Zirui Wang, Jiahui Yu, Adams Wei Yu, Zihang Dai, Yulia Tsvetkov, and Yuan Cao. SimVLM: Simple visual language model pretraining with weak supervision. arXiv preprint arXiv:2108.10904, 2021.

- Wortsman et al. [2022] Mitchell Wortsman, Gabriel Ilharco, Jong Wook Kim, Mike Li, Simon Kornblith, Rebecca Roelofs, Raphael Gontijo Lopes, Hannaneh Hajishirzi, Ali Farhadi, Hongseok Namkoong, et al. Robust fine-tuning of zero-shot models. In CVPR, 2022.

- Xie et al. [2019] Ning Xie, Farley Lai, Derek Doran, and Asim Kadav. Visual Entailment: A novel task for fine-grained image understanding. arXiv preprint arXiv:1901.06706, 2019.

- Yang et al. [2022] Jinyu Yang, Jiali Duan, Son Tran, Yi Xu, Sampath Chanda, Liqun Chen, Belinda Zeng, Trishul Chilimbi, and Junzhou Huang. Vision-language pre-training with triple contrastive learning. In CVPR, 2022.

- Yuksekgonul et al. [2022] Mert Yuksekgonul, Federico Bianchi, Pratyusha Kalluri, Dan Jurafsky, and James Zou. When and why vision-language models behave like bags-of-words, and what to do about it? In ICLR, 2022.

- Zeng et al. [2022] Yan Zeng, Xinsong Zhang, and Hang Li. Multi-Grained Vision Language Pre-Training: Aligning texts with visual concepts. In ICML, 2022.

- Zhang et al. [2022] Susan Zhang, Stephen Roller, Naman Goyal, Mikel Artetxe, Moya Chen, Shuohui Chen, Christopher Dewan, Mona Diab, Xian Li, Xi Victoria Lin, et al. OPT: Open pre-trained transformer language models. arXiv preprint arXiv:2205.01068, 2022.

- Zhou et al. [2020] Luowei Zhou, Hamid Palangi, Lei Zhang, Houdong Hu, Jason Corso, and Jianfeng Gao. Unified vision-language pre-training for image captioning and vqa. In AAAI, 2020.

Appendix A Data and Implementation Details

In this section, we provide information about the software and the dataset used in our study. We conducted experiments with four NVIDIA A100 GPUS with Python 3.8 and Pytorch with CUDA 11.1.

To construct the 4M-Clean dataset, we download the dataset corpus (CC3M+CC12M+SBU) from the official github of BLIP. The dataset corpus is generated and filtered by a model equipped with VIT-B/16 as its image transformer. From this corpus, we selectively utilize image-text pairs from the (CC3M+SBU) to align with the 4M-Noisy dataset. Note that the dataset refinement process (generating clean captions, and filtering noisy image-text pairs) is exclusively done with the web-crawled dataset (CC3M+SBU), and (COCO+VG) dataset is incorporated in the 4M-Clean dataset without any modification.

As described in the manuscript (Section 5), we mainly use a synthetically generated and filtered set from this dataset corpus. To ensure that the size of the 4M-Clean dataset is nearly identical to the 4M-Noisy dataset, we additionally use the small number of among the (CC3M+SBU) dataset due to the synthetically generated and filtered set being slightly smaller than the 4M-Noisy dataset. The total number of image-text pairs in the 4M-Noisy set and the 4M-Clean set is and , respectively.

Appendix B Details on counting false negatives in Section 3 (manuscript)

Here, we first clarify the purpose of using the pre-trained BLIP (129M) model in quantitative analysis in Section 3 (manuscript) for better understanding. The quantitative analysis represented in Table 1 and Figure 2 (manuscript) includes the estimated count of false negative pairs during the ITM task of training. The main goal is to compare the number of false negatives arising from randomly constructed mini-batches (typical VLP setting) to those from GRIT-sampled mini-batches that group similar pairs in each mini-batch. Here, to identify false negative pairs with perfect accuracy, it is essential to employ a human evaluation process, requiring manual examination of each individual negative pair (constructed for performing ITM task) to determine if it is matched or not. However, given the infeasibility of manually checking all negative pairs, we have opted to leverage the pre-trained BLIP (129M) model, a strong ITM model, as an alternative to human evaluation. While the BLIP (129M) does not always classify with perfect accuracy, it is deemed sufficiently reliable for approximating the tendency of the number of false negatives.

To validate this, we additionally conduct a human evaluation on randomly sampled 200 false negatives in the 4M-Clean dataset during training which are filtered by BLIP (129M) filter. For this, two ML researchers manually check whether each false negative is genuinely a false negative or not. In this analysis, among the samples classified as false negatives by BLIP (129M), over 83% are also determined to be false negatives upon human evaluation. While it’s not 100% accurate, we believe that using BLIP (129M) is reasonable for approximating the number of false negatives during training and, consequently, for comparing the occurrence of false negatives between random sampling and GRIT sampling. To report representative numbers, the values provided in Table 1 (manuscript) are obtained by multiplying the actual values from the BLIP (129M) model by 0.83, assuming that human correction is statistically significant. The raw values prior to multiplication are presented in rows 1 and 2 of Table 9.

Additionally, we examine the number of false negatives using other discriminators, as detailed in Table 9. Unlike the values in Table 1 (manuscript), these values are not multiplied by 0.83. ALBEF (14M) denotes the ALBEF model pre-trained on the 14M dataset and fine-tuned on the MS-COCO dataset, while BLIP-2 represents the BLIP-2 model (equipped with ViT-g) pre-trained on 129M and fine-tuned on the MS-COCO dataset. These results also indicate that the tendency of false negative ratios remains consistent across different discriminators.

| Discriminator | Dataset | Sampling | FN w.r.t. image | FN w.r.t. text |

|---|---|---|---|---|

| BLIP (129M) | 4M-Noisy | Random | 146,127 (2.9%) | 142,265 (2.8%) |

| GRIT | 985,531 (19.7%) | 977,283 (19.5%) | ||

| 4M-Clean | Random | 184,345 (3.7%) | 179,191 (3.6%) | |

| GRIT | 1,383,752 (28.0%) | 1,321,066 (26.8%) | ||

| ALBEF (14M) | 4M-Clean | Random | 216,179 (4.4%) | 210,976 (4.3%) |

| GRIT | 1,565,023 (31.7%) | 1,477,597 (29.9%) | ||

| BLIP-2 | 4M-Clean | Random | 118,118 (2.4%) | 115,281 (2.3%) |

| GRIT | 939,797 (19.0%) | 859,356 (17.4%) |

Appendix C Additional analyses on false negative ratio (FNR)

We quantify the FNR w.r.t. batch size under a random sampling scenario. As shown in Figure 5, increasing the batch size on 4M-Clean apparently results in a higher FNR. This finding suggests the presence of a significant number of false negatives even in a random sampling scenario with a large batch size, which is a common practice in recent VLP training schemes such as BLIP-2. Consequently, this result also underscores the applicability of MAFA in settings where hard negative sampling like GRIT is not employed.

We also measure the FNR using a considerably larger, web-crawled CC12M-Clean dataset with a default batch size of 96. In the GRIT sampling scenario, the FNR w.r.t. images and text is 22.2% and 20.6%, respectively, remaining notably high. This result can serve as a proxy for the FNR on larger-scale datasets (e.g., LAION400M [52]) since they share a similar dataset construction pipeline: i.e., randomly sourced from the web. Therefore, these findings highlight the significance of addressing false negatives in recent large-scale models and the broad applicability of MAFA.

Appendix D Detailed explanation on Table 2 (manuscript)

In Table 2 (manuscript), we present the shape of soft labels of three soft labeling methods: momentum distillation, consistency loss, and S-ITC. In this section, we explain the detailed process of constructing this table. The values in the table are obtained in the last epoch of the training with the following procedure.

-

1.

For each anchor image (resp. text ), a soft label is given to each text (resp. image ) in a batch. For the batch-size , is from to . For momentum distillation with queue size , is from to . The set of these soft labels can be represented as a (or )-dimensional vector, which is denoted as for anchor .

-

2.

(or ) values of are sorted in descending order, and it is denoted as .

-

3.

Given that the model sees anchors during an epoch of training, all s are averaged, and it is denoted as .

-

4.

Now, (or ) values in are categorized into three groups: Top, Top, and Top.

-

5.

The sum of the values within each category is written in the table.

Appendix E Pseudocode of MAFA

We provide pseudocode in Algorithm 1 for a thorough understanding of the MAFA framework. Although the pseudocode mostly follows the notation of the manuscript, there are some modified notations to explain the process in more detail. Specifically, and are used instead of . Also, in the manuscript, is a -dim one-hot vector for an image-text sample. If the sample is treated as a positive in ITM loss, is equal to . If not, it is equal to . On the other hand, in the pseudocode, and are -dim vectors in which the -th element represents whether the corresponding sample is treated as positive () or not () in ITM loss.

| Method | Pre-train # Images | MSCOCO (5K test set) | Flickr30K (1K test set) | ||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| TR | TR | TR | IR | ||||||||||||

| R@1 | R@5 | R@10 | R@1 | R@5 | R@10 | R@1 | R@5 | R@10 | R@1 | R@5 | R@10 | ||||

| UNITER | 4M | 65.7 | 88.6 | 93.8 | 52.9 | 79.9 | 88.0 | 87.3 | 98.0 | 99.2 | 75.6 | 94.1 | 96.8 | ||

| VILLA | 4M | - | - | - | - | - | - | 87.9 | 79.30 | 76.3 | 73.67 | ||||

| OSCAR | 4M | 70.0 | 91.1 | 95.5 | 54.0 | 80.8 | 88.5 | - | - | - | - | - | - | ||

| ALBEF | 4M | 73.1 | 91.4 | 96.0 | 56.8 | 81.5 | 89.2 | 94.3 | 99.4 | 99.8 | 82.8 | 96.7 | 98.4 | ||

| TCL | 4M | 75.6 | 92.8 | 96.7 | 59.0 | 83.2 | 89.9 | 94.9 | 99.5 | 99.8 | 84 | 96.7 | 98.5 | ||

| BLIP* (4M-Clean) | 4M | 76.5 | 93.2 | 96.8 | 58.9 | 83.1 | 89.6 | 94.3 | 99.4 | 99.9 | 82.6 | 96.2 | 98.3 | ||

| GRIT-VLP* | 4M | 76.6 | 93.4 | 96.9 | 59.6 | 83.3 | 89.9 | 95.5 | 99.6 | 99.8 | 82.9 | 96.2 | 97.9 | ||

| MAFA | 4M | 78.0 | 94.1 | 97.2 | 61.2 | 84.3 | 90.3 | 96.1 | 99.8 | 100 | 84.9 | 96.5 | 98.0 | ||

| MAFA (4M-Clean) | 4M | 79.4 | 94.4 | 97.5 | 61.6 | 84.5 | 90.4 | 96.2 | 99.9 | 100 | 84.6 | 96.4 | 98.1 | ||

| ALBEF | 14M | 77.6 | 94.3 | 97.2 | 60.7 | 84.3 | 90.5 | 95.9 | 99.8 | 100 | 85.6 | 97.5 | 98.9 | ||

| BLIP | 14M | 80.6 | 95.2 | 97.6 | 63.1 | 85.3 | 91.1 | 96.6 | 99.8 | 100 | 87.2 | 97.5 | 98.8 | ||

| Pre-train dataset | MAFA | MSCOCO (5K test set) | Flickr30K (1K test set) | ||||||||||||

| TR | IR | TR | IR | ||||||||||||

| S-ITC | ECM | R@1 | R@5 | R@10 | R@1 | R@5 | R@10 | R@1 | R@5 | R@10 | R@1 | R@5 | R@10 | ||

| 4M-Noisy | ✗ | ✗ | 76.6 | 93.4 | 96.9 | 59.6 | 83.3 | 89.9 | 95.5 | 99.6 | 99.8 | 82.9 | 96.2 | 97.9 | |

| ✗ | ✓ | 77.4 | 93.9 | 96.9 | 60.2 | 83.8 | 90.4 | 95.5 | 99.5 | 99.8 | 83.7 | 96.3 | 98.0 | ||

| ✓ | ✗ | 77.5 | 94.3 | 97.2 | 60.5 | 83.7 | 90.2 | 96.1 | 99.8 | 99.9 | 84.2 | 96.3 | 98.1 | ||

| ✓ | ✓ | 78.0 | 94.1 | 97.2 | 61.2 | 84.3 | 90.3 | 96.1 | 99.8 | 100.0 | 84.9 | 96.5 | 98.0 | ||

| 4M-Clean | ✗ | ✗ | 77.7 | 93.5 | 96.9 | 60.7 | 83.3 | 90.1 | 95.2 | 99.6 | 99.9 | 84.2 | 95.8 | 98.0 | |

| ✓ | ✓ | 79.4 | 94.4 | 97.5 | 61.6 | 84.5 | 90.4 | 96.2 | 99.9 | 100.0 | 84.6 | 96.4 | 98.1 | ||

Appendix F Details on Downstream tasks

In the Supplementary Materials, the term “total batch size” refers to the overall mini-batch size. Specifically, it represents the product of the “number of GPUs” and the “mini-batch size per GPU,” which is calculated as .

We primarily adhere to the implementation details of GRIT-VLP when performing fine-tuning.

During fine-tuning, we employ randomly cropped images with a resolution of 384 × 384.

Conversely, during the inference stage, we resize the images without cropping.

Additionally, we apply the exact same RandAugment, optimizer selection, cosine learning rate decay, and weight decay with GRIT-VLP.

Following GRIT-VLP, we do not utilize a momentum encoder in the pre-training phase.

Consequently, the momentum distillation (MD) technique is not employed for all downstream tasks,

[Image-Text Retrieval (IRTR)] IRTR aims to find the most similar image to a given text or text to a given image.

Following GRIT-VLP, we do not use the momentum distillation for ITC, but use the queue and negatives from the momentum encoder when calculating ITC loss in the fine-tuning step.

For model fine-tuning, we use the COCO and Flickr-30K datasets.

Specifically, the COCO dataset, comprising 113,000 training images, 5,000 for validation, and another 5,000 for testing, is fine-tuned over 5 epochs. Conversely, the Flickr-30K dataset, with 29,000 training images, 1,000 for validation, and 1,000 for testing, undergoes a longer fine-tuning phase of 10 epochs.

For evaluation, we use a 5K COCO test set and Flickr-1K set following previous works.

During the fine-tuning phase, we use a total batch size of 256 and an initial learning rate of 1e-5 for both datasets.

Following ALBEF, during evaluation, we employ a two-step process. First, we retrieve the top- candidates by calculating image-text contrastive similarities only using uni-modal encoders. Then, we re-rank them with ITM scores. Here, is set to and for COCO and Flickr, respectively

[Visual Reasoning (NLVR2)] NLVR2 is a classification task based on one caption and two images. Since the model architecture should be changed to get two images as an input, the fine-tuning step of NLVR2 requires an additional pre-training phase with the 4M-Noisy dataset for 1 epoch.

For this pre-training phase, we employ a batch size of 256 and set the learning rate to , and the image resolution is set as ().

After the single epoch pre-training phase, we fine-tune the model for 10 epochs while using a total batch size of .

[Visual Question Answering (VQA)] VQA is a task to obtain an answer given image and question pair. We perform experiments on the VQA2.0 dataset [19], which is divided into training, validation, and test sets with 83,000, 41,000, and 81,000, respectively.

Both the training and validation set are utilized for training. Following GRIT-VLP and ALBEF, we also include additional pairs from Visual Genome. Fine-tuning is conducted for epochs, employing a total batch size of and an initial learning rate of .

For a fair comparison, the decoder only generates answers from candidates.

| Model | Time per epoch | Parameters | Queue for MD | Queue for GRIT |

| ALBEF | 3h 10m | 210M (MD: 210M) | 65536 | - |

| BLIP | 3h 30m | 252M (MD: 252M) | 57600 | - |

| GRIT-VLP | 2h 30m | 210M | - | 48000 |

| MAFA | 3h 06m | 210M (Con-D: 210M) | - | 48000 |

Appendix G Additional Experimental Results

G.1 Experiments on diverse data scales

We carry out additional experiments on various data scales under GRIT-sampling. For a large-scale dataset, we utilize the 14M-Clean dataset (CC12M-Clean + 4M-Clean), and for small-scale datasets, we use 1M and 2M subsets randomly selected from the 4M-Clean dataset. To compare the overall performance, we compute the accuracy of each of the four tasks (COCO-IRTR, Flickr-IRTR, NLVR2, VQA) by averaging their respective metrics: TR/R@1, TR/R@5, TR/R@10, IR/R@1, IR/R@5, IR/R@10 for IRTR, dev and test-P for NLVR2, and text-dev and test-std for VQA. Then, we sum up the four averaged values. In Figure 6, we observe that MAFA consistently achieves considerable performance improvements across all data scales compared to GRIT-VLP, again demonstrating the robustness of MAFA w.r.t. data scale variations.

G.2 Computation comparison

We describe the computational cost for pre-training. Table 12 shows the pre-training time per epoch, the number of parameters of the model, and queue size. Here, the “MD” denotes additional model parameters for the momentum model, and “Con-D” denotes additional model parameters for Con-D in the pre-training phase. As pointed out in the manuscript (Section 4), although MAFA becomes relatively slower than the GRIT-VLP, it is still competitive with ALBEF, and faster than BLIP.

G.3 Detailed experiments on overall framework

We present a comprehensive comparison of various baselines on image-text retrieval tasks in Table 10. We observe a similar tendency in the manuscript (Section 5). Namely, MAFA mostly surpasses other baselines in performance when pre-trained on the 4M-Noisy dataset, outperforming even ALBEF pre-trained on the much larger dataset (14M). Moreover, in the case of the 4M-Clean dataset, MAFA again shows a significant improvement over BLIP, which is pre-trained on the same dataset.

G.4 Analysis on search space

When applying GRIT sampling, the degree of similarity between samples within a batch is influenced by the search space since GRIT groups together similar samples within that specific search space. Consequently, an increase in the search space leads to a higher number of false negatives as shown in Figure 2 (manuscript). We observe that the average IRTR performance of GRIT-VLP is decreased as the search space increases, as shown in Table 13. In contrast, MAFA shows a performance improvement with larger search spaces and consistently outperforms GRIT-VLP regardless of the search space. We believe this result shows the effectiveness of MAFA in managing false negatives.

| Method | MSCOCO (5K test set) | |||||||

|---|---|---|---|---|---|---|---|---|

| TR | IR | Avg | ||||||

| R@1 | R@5 | R@10 | R@1 | R@5 | R@10 | |||

| GRIT-VLP | 960 | 76.7 | 93.9 | 97.2 | 59.6 | 83.5 | 90.1 | 83.5 |

| 4800 | 76.6 | 93.4 | 96.9 | 59.6 | 83.3 | 89.9 | 83.3 | |

| 48000 | 77.0 | 93.1 | 96.6 | 59.2 | 83.1 | 89.8 | 83.1 | |

| MAFA | 960 | 77.6 | 94.4 | 97.1 | 60.7 | 84.1 | 90.2 | 84.0 |

| 4800 | 78.0 | 94.1 | 97.2 | 61.2 | 84.3 | 90.3 | 84.2 | |

| 48000 | 78.3 | 94.2 | 97.3 | 61.4 | 84.4 | 90.5 | 84.4 | |

G.5 Detailed experiments on ECM

[Comparative analysis with ECM variants] We conduct a comparative analysis of different uses of new missing positives constructed from ECM. Namely, we assess the performance of models when new positives are exclusively incorporated in ITC, ITM, and MLM, respectively. As can be verified in Table 14, the usage of new positives is effective for each of these objectives. We believe the relatively marginal impact of new positives in ITC arises from the possible inclusion of additional false negatives in the mini-batch, which highlights the necessity of S-ITC. Moreover, their combined usage across three objectives leads to more improvements. We believe this result clearly shows the benefits of using new missing positives constructed from ECM, across all objectives. Note that all variants do not use S-ITC as objectives here.

| ECM | MSCOCO (5K test set) | ||||||||

|---|---|---|---|---|---|---|---|---|---|

| TR | IR | ||||||||

| ITC | ITM | MLM | R@1 | R@5 | R@10 | R@1 | R@5 | R@10 | Avg |

| ✗ | ✗ | ✗ | 76.6 | 93.4 | 96.9 | 59.6 | 83.3 | 89.9 | 83.3 |

| ✓ | ✗ | ✗ | 77.1 | 93.5 | 96.8 | 59.7 | 83.4 | 90.1 | 83.4 |

| ✗ | ✓ | ✗ | 77.1 | 93.5 | 97.3 | 59.9 | 83.7 | 90.0 | 83.6 |

| ✗ | ✗ | ✓ | 77.4 | 93.7 | 97.0 | 59.9 | 83.2 | 89.9 | 83.5 |

| ✓ | ✓ | ✓ | 77.4 | 93.9 | 96.9 | 60.2 | 83.8 | 90.4 | 83.8 |

| COCO R@1 | NLVR2 | VQA | ||||

|---|---|---|---|---|---|---|

| IR | TR | dev | test-P | test-dev | test-std | |

| 0.5 | 78.0 | 61.2 | 82.06 | 82.35 | 75.60 | 75.78 |

| 0.8 | 78.0 | 61.2 | 82.52 | 82.08 | 75.55 | 75.77 |

[Robustness of MAFA for varying threshold ] We evaluate the impact of choosing different thresholds for Con-D. Table 15 demonstrates that ECM process exhibits stability across different thresholds. Here, if is set to , the re-sampling strategy for ITM is omitted.

[Comparison with oracle Con-D] We measure the performance of MAFA using different Con-D: one pre-trained on the 4M-Noisy dataset and the other obtained from BLIP (pre-trained with 129M dataset). In Table 16, we observe that MAFA exhibits only a little performance gap with the MAFA (Oracle). We believe this result shows that the Con-D constructed with a 4M-Noisy dataset is sufficient to reliably identify false negatives within that particular dataset. This aligns with the findings of BLIP, where it was shown that a filter trained with a noisy dataset of the same scale can effectively handle false positives within that specific dataset.

| Dataset | Method | COCO R@1 | Flickr R@1 | ||

|---|---|---|---|---|---|

| TR | IR | TR | IR | ||

| 4M-Noisy | GRIT | 76.6 | 59.6 | 95.5 | 82.9 |

| MAFA | 78.0 | 61.2 | 96.1 | 84.9 | |

| MAFA (Oracle) | 78.5 | 61.2 | 95.8 | 84.2 | |

G.6 Additional analyses on ITC

[Effect of Queue in Momentum Distillation] In this section, we present additional experiments and analyses that delve deeper into the topics discussed in the manuscript. Given the significance of larger batch sizes in contrastive learning, it is standard practice to maintain a sufficient number of negative samples by employing a queue. To investigate the impact of the queue, we compare the performance of momentum distillation with a queue (MD) and without a queue (MDNQ).

Table 17 shows the results of our observations regarding the effect of the queue in the GRIT sampling scenario. We observe that the queue has a detrimental effect on performance in this particular setting. This can be attributed to the substantial increase in the number of negatives, which consequently leads to significantly smaller labels assigned to negatives (compared to the case without a queue). The impact of the queue highlights the crucial requirement of non-zero soft labels in the GRIT sampling scenario.

| Method | Label smoothing | COCO R@1 | Flickr R@1 | ||

|---|---|---|---|---|---|

| TR | IR | TR | IR | ||

| ALBEF | X | 74.4 | 57.6 | 93.5 | 81.7 |

| MDNQ | 73.8 | 57.9 | 93.3 | 81.5 | |

| MD | 74.2 | 57.4 | 93.5 | 81.9 | |

| S-ITC | 73.5 | 56.1 | 92.9 | 79.9 | |

| GRIT-VLP | CS | 76.6 | 59.6 | 95.5 | 82.9 |

| MDNQ | 77.1 | 59.8 | 95.5 | 83.8 | |

| MD | 76.1 | 58.9 | 94.4 | 82.7 | |

| S-ITC | 77.5 | 60.5 | 96.1 | 84.2 | |

[Effect of Mixing parameter ] As depicted in Table 2 (manuscript), the failure of MD and CS to provide soft labels can be attributed to different reasons. MD fails due to the large size of the queue (48000), while CS excessively concentrates only on a few similar samples. Adjusting the parameter alone does not resolve this issue, as the underlying problem lies in the inclination of the model to favor the closest few samples. Moreover, naively increasing the value of poses another challenge in training the model which amplifies the portion of uncertain labels of the momentum model (or the model itself) rather than ground-truth labels.

On the contrary, as shown in Table 18, the S-ITC method demonstrates robustness across a wide range of values from 0.1 to 0.7. This highlights the distinct approach of S-ITC, which deviates from the inclination of the model towards the closest samples and effectively assigns non-zero labels to majorities of the negatives in the GRIT sampling scenario.

| MSCOCO (5K test set) | |||||||

|---|---|---|---|---|---|---|---|

| TR | IR | ||||||

| R@1 | R@5 | R@10 | R@1 | R@5 | R@10 | Avg | |

| 0.1 | 75.5 | 93.3 | 96.9 | 58.4 | 82.7 | 89.3 | 82.7 |

| 0.3 | 75.8 | 93.0 | 96.4 | 58.8 | 82.7 | 89.4 | 82.7 |

| 0.5 | 75.6 | 92.9 | 96.6 | 59.0 | 82.8 | 89.6 | 82.7 |

| 0.7 | 75.3 | 93.1 | 96.7 | 58.3 | 82.6 | 89.3 | 82.6 |

| 0.9 | 73.0 | 92.1 | 96.2 | 56.4 | 81.3 | 88.6 | 81.3 |

| Model | NoCaps Zero-shot (validation set) | COCO Fine-tuned Karpathy test | ||||||||

| in-domain | near-domain | out-domain | overall | |||||||

| C | S | C | S | C | S | C | S | B@4 | C | |

| BLIP-2 | 109.55 | 14.46 | 105.83 | 14.26 | 106.33 | 13.51 | 106.47 | 14.14 | 40.7 | 139.7 |

| BLIP-2+MAFA | 114.67 | 15.19 | 111.74 | 14.88 | 113.29 | 14.27 | 112.48 | 14.80 | 41.6 | 142.5 |

G.7 Details on experiments with BLIP-2

BLIP-2 aims to propose an efficient vision-language pre-training framework that connects off-the-shelf frozen pre-trained image encoders and frozen large language models. To bridge the modality gap, BLIP-2 adopts a lightweight Querying Transformer (Q-Former), which is pre-trained in two stages. In stage 1, Q-Former is pre-trained to extract visual features relevant to text from a frozen image encoder with ITC, ITM, and (autoregressive) LM objectives. Thus, the training objectives are almost the same as those of ALBEF [35] and BLIP [36]. In stage-2, Q-Former is connected to a frozen LLM and pre-trained with LM loss to generate the text conditioned on the visual representation from Q-Former.

We mainly follow the default implementation setting of BLIP-2. Namely, we use ViT-G/14 from EVA-CLIP [14] as the vision encoder and OPT-2.7B [68] as a language decoder. Moreover, Q-Former is initialized with BERT-base [11] and has 32 learnable queries per single representation. For training and evaluation of BLIP-2, we use 8 A100 GPUs. For stage 1, we use the total batch size as which is the same as the previous setting (in the manuscript). For stage 2, we use the total batch size as . For image-text retrieval fine-tuning, we use as the total batch size. For other hyper-parameters, we use exactly the same as the default setting from BLIP-2 [37]. For GRIT sampling, we choose as search space, which is times of the batch size. Since BLIP-2 uses 32 queries per image, we extract a single query representation that has a maximum similarity with its corresponding text and then use this representation to find similar examples for GRIT sampling.

For MAFA, to accelerate the efficiency of the experiment, we omit the re-sampling strategy in ITM and usage of additional positives, and we set as for S-ITC. Note that GRIT sampling and MAFA are only applied in stage-1. In both two stages, we use the 4M-Noisy dataset. Moreover, as mentioned in Section 5 (manuscript), since the exclusive use of GRIT sampling causes the failure of learning, we omit the results of “BLIP-2+GRIT” in stage 2. In addition, to adopt MAFA, we utilize the original BLIP-2 model for Con-D, which is pre-trained with the 4M-Noisy dataset and then fine-tuned with the COCO dataset. For zero-shot VQA tasks, following BLIP-2, we utilize the prompt “Question: Answer:” and beam search with beam width 5 and set length-penalty to -1 for all models.

G.8 Additional results with BLIP-2

[Results on fine-tuned image captioning] We fine-tune models on COCO with epochs with a total batch size of . We use the prompt “a photo of” as the initial input for the LLM decoder (OPT-2.7B model) and train with autoregressive LM loss. For all other hyper-parameters, we use the exact same hyper-parameters as BLIP-2. In fine-tuning, the parameters of the Q-Former and image encoder are only updated while those of LLM are kept frozen. We evaluate models on both the Karpathy test split of MSCOCO and zero-shot transfer ability to NoCaps dataset [1]. The results, which can be observed in Table 19, indicate a similar trend in zero-shot ability. Namely, MAFA significantly enhances the captioning ability. Note that MAFA is exclusively used in stage 1 and not used in stage 2 and the fine-tuning stage.

| Model | VQAv2 | OK-VQA | GQA |

|

Sum | |||

| val | test | test-dev | BLEU@4 | CIDEr | ||||

| 4M | 46.6 | 23.8 | 29.1 | 35.6 | 118.8 | 253.9 | ||

| repeated-6M | 47.9 | 24.9 | 30.8 | 35.8 | 118.3 | 257.7 | ||

| ECM-6M | 47.4 | 27.0 | 30.9 | 37.5 | 123.6 | 266.4 | ||

[Results of stage-2 with extra positives from ECM] To further investigate the wider applicability of ECM in VLP models (w/o ITC and ITM), we conduct additional experiments on BLIP-2 stage-2 model with extra positives generated by ECM. Here, in contrast to the results in Tables 7, 8 and 19 (manuscript), stage-1 model is trained without MAFA. Namely, before training stage-2 model, by applying GRIT-sampling and using the frozen BLIP-2 stage-1 model as our Con-D, we generate 2M additional positives with a single forward pass, augmenting the original 4M-Noisy dataset. As reported in Table 20, we observe the stage-2 model, which is trained on this “ECM-6M” (4M-Noisy + ECM-generated 2M) dataset, significantly outperforms the baseline trained on “repeated-6M” (4MNoisy + 2M sampled from the same 4M-Noisy) dataset. The scores are 266.4 vs. 257.7 in which the zero-shot results across VQA, OK-VQA, GQA, and COCO captioning tasks are summed. We believe this result validates the effectiveness of ECM-generated positives and underscores the general applicability of our framework.

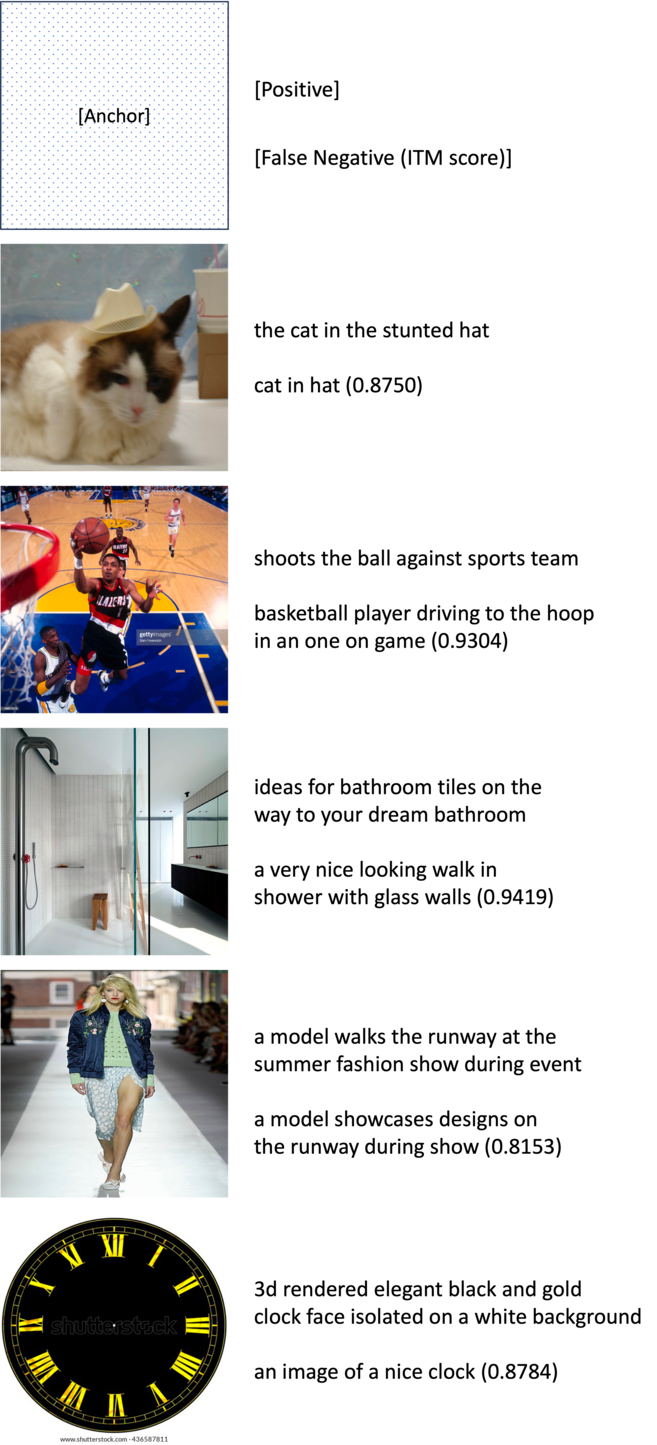

Appendix H Additional examples of new positive connections by ECM in training

We provide additional examples of new positive connections by ECM during training. Figures 7 and 8 show anchors ([Anchor]), their corresponding pairs ([Positive]), and new positives ([False Negative]) constructed by ECM during training. The number in parentheses indicates the ITM score between the anchor and the false negative computed by Con-D.