MachMap: End-to-End Vectorized Solution for Compact HD-Map Construction

Abstract

This report introduces the place winning solution for the Autonomous Driving Challenge - Online HD-map Construction♭. By delving into the vectorization pipeline, we elaborate an effective architecture, termed as MachMap, which formulates the task of HD-map construction as the point detection paradigm in the bird-eye-view space with an end-to-end manner. Firstly, we introduce a novel map-compaction scheme into our framework, leading to reducing the number of vectorized points by % without any expression performance degradation. Build upon the above process, we then follow the general query-based paradigm and propose a strong baseline with integrating a powerful CNN-based backbone like InternImage, a temporal-based instance decoder and a well-designed point-mask coupling head. Additionally, an extra optional ensemble stage is utilized to refine model predictions for better performance. Our MachMap-tiny with IN-K initialization achieves a mAP of on the Argoverse benchmark and the further improved MachMap-huge reaches the best mAP of , outperforming all the other online HD-map construction approaches on the final leaderboard with a distinct performance margin ( mAP at least).

1 Introduction

As one of the fundamental modules in the autonomous-driving, high-definition map (HD-map) provides centimeter level environment information for ego-vehicle navigation, including detailed geometric-topology relationships and semantic map categories, e.g. -, - and -. Recently, with the development of deep neural network, online construction of local HD-map from onboard sensors (cameras) has gradually become a more advantageous and potential solution.

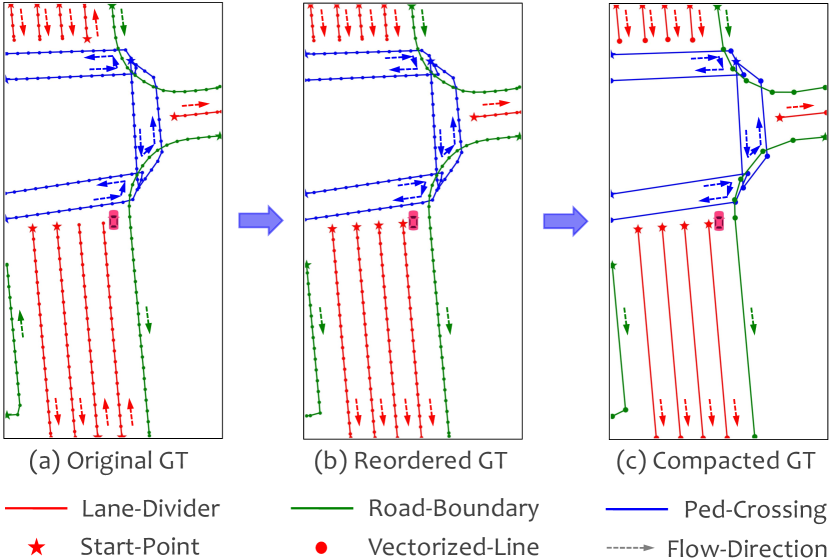

The Online HD-map Construction Track aims to dynamically construct local HD-map from onboard surrounding camera images. In this task, a local HD-map ground truth in Fig.1 (a) is described by a set of map elements with three semantic categories and each element is designed to a polyline, which consists of a set of ordered points, to deal with complicated and even irregular road structures. Our method mainly focuses on three aspects to handle the competition,

(1) map modeling principles. We propose the principles of inter-element direction consistency and intra-element sequence compactness to reduce the intrinsic redundancy of polyline-based map modeling. Concretely, without losing any expression performance, the flow directions of point sequences between different elements should be as consistent as possible, and the point sequences within the same map element should be reserved with as few points as possible.

(2) temporal-fusion instance decoder. Based on the multi-cameras features from image backbone, we then employ a temporal-fusion based bird-eye-view (BEV) feature decoder for view-transformation and a bottom-up point-wise instance decoder to extract point descriptor.

(3) point-mask coupling head. Considering that different map elements have distinct shape priors, e.g. - is usually polyline and - is convex polygon, we equip each semantic map category with both segmentation and detection heads under the MaskDINO [6] framework, which greatly improves the flexibility and scalability of our model. Furthermore, the above multi-task training strategy also accelerates the model convergence performance.

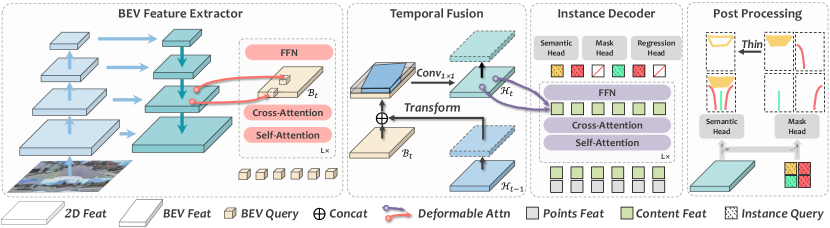

Inspired by the above motivations, we propose an end-to-end vectorized HD-map construction architecture, named as MachMap. The entire framework is illustrated in Fig.2 and all technical details are presented in the next section.

2 Method

This section introduces the details of our winning method. We first present the map compaction pipeline, which significantly reduces the difficulty of model training and makes the inference results more compact and efficient. Next the design scheme of each module is presented, and some task-specific improvements are integrated into some off-the-shelf methods. Lastly, we introduce our novel ensemble ideas, which can further enhance our approach.

2.1 Map Compaction Pipeline

Different from rasterized scheme, vectorized HD-maps in the given annotations explicitly express the spatial relation between map elements and instance information in their respective categories. Following the newly proposed map modeling principles, we compact the original evenly sampled map representation in two steps, namely orientation rearrangement and redundancy removal.

(1) inter-element direction consistency. The directions of elements in original map annotations are in a state of chaos, such as lane-dividers of moving forward from front-to-back or back-to-front as shown in Fig.1 (a). We noticed that the inconsistency of directions can negatively affect the training of the model. To reduce the discreteness of map organization, we follow a certain strategy to make the orientation of map elements as orderly as possible, and guarantee that this process does not lose any details of the map. Specifically, under the principle of conforming to the observation order of human eyes, a simple and intuitive strategy is to reorganize all polylines according to the rules from-front-to-back and from-left-to-right in bird-eye-view space.

(2) intra-element sequence compactness. Vectorized maps with evenly-distributed points have redundant semantic information, while compacted-points representation is sparse, which is more suitable for expression and storage of maps. To this end, we extract keypoints for all elements to supervise model training. Concretely, we adopt Douglas-Peucker algorithm [11] and Visvalingam algorithm [12] to condense a polyline composed of line segments to a similar polyline with fewer points. For these methods, points are removed in order of least to most importance, with importance related to the distance and triangular area respectively.

2.2 MachMap Architecture

We follow the general query-based design paradigm [5, 10], as illustrated in the Fig.2, where the overall structure can be roughly divided into three parts: BEV feature extractor, temporal-fusion instance decoder, and point-mask coupling head. Afterwards, we introduce each module sequentially according to the flow of information.

Backbone. Giving a list of D images , extracting unified textures representation within images is a top-priority task. With regards to this, we utilize a shared InternImage [13] as strong backbone to extract image features, which employs deformable convolutions [1] as its core operator and has been meticulously designed. During the downsampling process, a series of feature maps in varying scales are generated and then aggregated by the Bi-directional Feature Pyramid Network, i.e. BiFPN [16].

Multi-view Encoder. Since the map vectors we ultimately need to predict lay in D space, it is necessary to elevate surrounding features from camera-view to D ego-view space. Rather than direct transformation to D-view, we predefine a set of reference points and arrange them in a BEV raster. After that, we employ the camera intrinsics and extrinsics to project them onto several images and then aggregate the surrounding features. By averaging on the z-axis, we obtain the final bird-eye-view features .

Temporal Fusion Module. The provided dataset is collected and organized chronologically, with precise poses for each sample. This makes it possible to align current features with previous ones by poses, resulting in a larger real-world perception range beyond the current position. We follow the long-term fusion strategy proposed in VideoBEV [3], which affines the previous hidden state of BEV feature into the current one using vehicle ego pose. The latter is concatenated with the current BEV feature in the channel dimension and fused by a convolutional layer as,

| (1) | ||||

| (2) |

where denotes the concatenation operator. The fused features are cached as the next hidden state and used as input for subsequent instance decoder. In practice, since the timestamp offset between adjacent frame is too small, we group the timestamps at specific intervals to expand the performance gain brought by this temporal-fusion module.

Instance Decoder. To benefit from multi-task loss, we opt for the MaskDINO [6] framework, which conducts object detection and segmentation tasks simultaneously. Each query consists of content and position vectors, with the former is utilized to generate instance masks, while the latter undergoes iterative updates to yield normalized coordinates directly. Yet, due to the hierarchical relationship between map elements and their corresponding points sets, we adopt the query design paradigm in MapTR [7] for better adaptation to map element modeling. This implies that the query is point-wise, and a set of which can be aggregated to form a single instance and obtain its corresponding instance mask.

Output Head. Using only coordinates from point regression has some drawbacks. Firstly, there is a keypoint mismatching issue, where a well predicted instance may occur a mismatched point which belongs to other instance, as a result, a single bad apple spoils the whole bunch. Secondly, for -, there exists a strong geometric prior, which is difficult to depict through vectors. However, masks not only can effectively constrain the geometry shape of instances, but it also impose a significant penalty on mismatched points during training. Empirically, we obtain - and - through post-processing of instance masks, while point regression is employed only for -. As the common practice, we adopt cross-entropy and dice loss [9] for masks and L loss for point regression. In addition, we also add semantic loss to the BEV features as auxiliary supervision, and our final loss as,

| (3) |

where is the balance weight for different losses.

2.3 Ensemble Strategy

The predicted map vectors of our model are represented in normalized coordinates, which are then rescaled to the actual range in the ego coordinate system during the post-processing stage. Yet the actual visible content from images greatly exceeds this range, which often leads to ambiguities in the existence of certain elements at the border position of exact map region that may be ignored by a single model. Accordingly, the use of ensemble techniques can mitigate prediction variability and curb overfitting by summarizing multiple models together.

By utilizing chamfer distance as a metric for measuring the similarity between instances, we present the ensemble algorithm in the Algorithm 1. Given a base set and a list of proposals, which are derived from multiple other predictions and sorted by confidence in descending order, we can compare each proposal with the base set one by one. If their similarity is low, we can consider them as missed true positives and add them to the base set. In addition to multi-model ensemble, we also conduct multi-frame ensemble. Despite the utilization of temporal fusion module, some instances are still absent, which were accurately recalled in previous frames. This inspires us to compensate some erratic predictions by ensemble with predictions from previous frames. It’s worth noting that the integration of multi-frame and multi-model can share the same algorithm, with only modifying the source of candidate proposal list.

| Category | # images | # instances | # points (raw) | # points (compacted) | AP0.2m | AP0.3m | AP0.4m | AP0.5m |

|---|---|---|---|---|---|---|---|---|

| - | 19523 | 55686 | 3593548 | 252219 () | 98.33 | 99.46 | 99.92 | 100.00 |

| - | 26222 | 133186 | 7426425 | 335534 () | 99.91 | 99.98 | 99.99 | 100.00 |

| - | 27283 | 84384 | 7018193 | 469533 () | 97.38 | 99.70 | 99.92 | 100.00 |

| ID | Data | Backbone | PreTrain | # Epochs | w/o Opt. | AP | AP | AP | mAP |

| train | tiny | ADEK | 6 | ✗ | 61.01 | 65.87 | 65.70 | 64.19 | |

| train | tiny | ADEK | 72 | ✗ | 76.75 | 73.51 | 74.68 | 74.98 | |

| train+val | tiny | ADEK | 72 | ✗ | 78.34 | 74.74 | 76.02 | 76.37 | |

| train+val | tiny | 6 | ✓ | 84.82 | 79.66 | 80.63 | 81.70 | ||

| train+val | huge | ADEK | 36 | ✗ | 81.45 | 75.34 | 77.14 | 77.98 | |

| train+val | huge | from - | 12 | ✓ | 86.66 | 81.54 | 82.29 | 83.50 | |

| train+val | tiny | IN-K | 72 | ✗ | 76.46 | 72.32 | 75.91 | 74.90 | |

| train+val | tiny | from - | 6 | ✓ | 82.01 | 76.23 | 79.10 | 79.11 |

3 Experiments

3.1 Existing Benchmarks

The Argoverse [14] contains , and video clips in the training, validation, and testing sets respectively. Each sequence has -DOF map-aligned pose and seven ring views with the image resolution of or pixels. The given data from challenge is a subset of Argoverse. We utilize all frames from the challenge training set to verify the effect of different ablations but finally all frames from training and validation sets are used to reach better performance. We focus on three categories, i.e. -, - and -.

3.2 Implementation Details

Training Setup. We adopt common data augmentation, e.g. random scaling, cropping, and flipping. At the same time, an IDA [4] matrix is updated to record view transformation to maintain spatial consistency. Then the final input shape is fixed at , as this aspect ratio is close to the front view, i.e. , which contains the most abundant visual map information. For BEV features, the default spatial shape of BEV queries is , which corresponds to the perception ranges in lidar coordinate system are for the Y-axis and for the X-axis. Note all map masks are interpolated to to ensure that distinct elements can be easily distinguished without occupying too much memory. As for the hyperparameters of loss function, we set , , , to , , , and respectively.

| Rank | Team | AP | AP | AP | mAP |

|---|---|---|---|---|---|

| Mach (ours) | 86.66 | 81.54 | 82.29 | 83.50 | |

| MapNeXt | 68.94 | 76.66 | 75.34 | 73.65 | |

| SCR | 70.37 | 75.08 | 74.73 | 73.39 | |

| LTS | 72.67 | 73.20 | 71.80 | 72.56 | |

| USTC-VGG | 69.05 | 73.24 | 70.76 | 71.02 |

Training Strategy. We train our model with a total batch of on GPUs. The AdamW [8] optimizer is employed with a weight decay of and a learning rate of . Our training process consists of two stages: base training and fine-tuning. Firstly, we initialize the InternImage [13] with public pretrained weights [2, 15] and then train our model for epochs without any tricks except a multi-step schedule with milestone and . Afterward, we apply all proposed improving techniques to fine-tune the model for extra epochs with a learning rate of .

3.3 Experimental Results

Table 1. Our statistical results show the compacted map can reduce more than points without expression performance losing under the threshold of , even it can still maintain more than performance under stricter .

Table 2. Comparing the results in row-, training more epochs brings a performance gain of more than points, which shows that accelerating the convergence speed is still a vital future work. Compared with row-, using the proposed improving techniques can always bring more than points of increase. Moreover, even starting with IN-K as pretrained weights, our model still achieves 79.1.

Table 3. We succeed the championship with a performance advantage of mAP over the second place, demonstrating the effectiveness of our proposed MachMap method.

References

- [1] Jifeng Dai, Haozhi Qi, Yuwen Xiong, Yi Li, Guodong Zhang, Han Hu, and Yichen Wei. Deformable convolutional networks. In Proceedings of the IEEE international conference on computer vision, pages 764–773, 2017.

- [2] Jia Deng, Wei Dong, Richard Socher, Li-Jia Li, Kai Li, and Li Fei-Fei. Imagenet: A large-scale hierarchical image database. In 2009 IEEE conference on computer vision and pattern recognition, pages 248–255. Ieee, 2009.

- [3] Chunrui Han, Jianjian Sun, Zheng Ge, Jinrong Yang, Runpei Dong, Hongyu Zhou, Weixin Mao, Yuang Peng, and Xiangyu Zhang. Exploring recurrent long-term temporal fusion for multi-view 3d perception. arXiv, 2023.

- [4] Junjie Huang, Guan Huang, Zheng Zhu, Ye Yun, and Dalong Du. Bevdet: High-performance multi-camera 3d object detection in bird-eye-view. arXiv preprint arXiv:2112.11790, 2021.

- [5] Feng Li, Hao Zhang, Shilong Liu, Jian Guo, Lionel M Ni, and Lei Zhang. Dn-detr: Accelerate detr training by introducing query denoising. In CVPR, 2022.

- [6] Feng Li, Hao Zhang, Huaizhe Xu, Shilong Liu, Lei Zhang, Lionel M Ni, and Heung-Yeung Shum. Mask dino: Towards a unified transformer-based framework for object detection and segmentation. In CVPR, 2023.

- [7] Bencheng Liao, Shaoyu Chen, Xinggang Wang, Tianheng Cheng, Qian Zhang, Wenyu Liu, and Chang Huang. Maptr: Structured modeling and learning for online vectorized hd map construction. arXiv preprint arXiv:2208.14437, 2022.

- [8] Ilya Loshchilov and Frank Hutter. Decoupled weight decay regularization. 2019.

- [9] F. Milletari, N. Navab, and S. A. Ahmadi. V-net: Fully convolutional neural networks for volumetric medical image segmentation. IEEE, 2016.

- [10] Limeng Qiao, Wenjie Ding, Xi Qiu, and Chi Zhang. End-to-end vectorized hd-map construction with piecewise bezier curve. In CVPR, 2023.

- [11] Mahes Visvalingam and J Duncan Whyatt. The douglas-peucker algorithm for line simplification: re-evaluation through visualization. In Computer Graphics Forum, volume 9, pages 213–225. Wiley Online Library, 1990.

- [12] Mahes Visvalingam and Peter J Williamson. Simplification and generalization of large scale data for roads: a comparison of two filtering algorithms. Cartography and Geographic Information Systems, 22(4):264–275, 1995.

- [13] Wenhai Wang, Jifeng Dai, Zhe Chen, Zhenhang Huang, Zhiqi Li, Xizhou Zhu, Xiaowei Hu, Tong Lu, Lewei Lu, Hongsheng Li, et al. Internimage: Exploring large-scale vision foundation models with deformable convolutions. In CVPR, 2023.

- [14] B. Wilson, W. Qi, T. Agarwal, J. Lambert, J. Singh, S. Khandelwal, B. Pan, R. Kumar, A. Hartnett, and J. K. Pontes. Argoverse 2: Next generation datasets for self-driving perception and forecasting. In Neural Information Processing Systems, 2021.

- [15] Bolei Zhou, Hang Zhao, Xavier Puig, Sanja Fidler, Adela Barriuso, and Antonio Torralba. Scene parsing through ade20k dataset. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 633–641, 2017.

- [16] Lei Zhu, Zijun Deng, Xiaowei Hu, Chi-Wing Fu, Xuemiao Xu, Jing Qin, and Pheng-Ann Heng. Bidirectional feature pyramid network with recurrent attention residual modules for shadow detection. In ECCV, 2018.