Machine learning the nuclear mass

Abstract

Background: The masses of about 2500 nuclei have been measured experimentally, however more than 7000 isotopes are predicted to exist in the nuclear landscape from H (Z=1) to Og (Z=118) based on various theoretical calculations. Exploring the mass of the remains is a hot topic in nuclear physics. Machine learning has been served as a powerful tool in learning complex representations of big data in many fields. Purpose: We use Light Gradient Boosting Machine (LightGBM) which is a highly efficient machine learning algorithm to predict the masses of unknown nuclei and to explore the nuclear landscape in neutron-rich side from learning the measured nuclear masses. Methods: Several characteristic quantities (e.g., mass number, proton number) are fed into LightGBM algorithm to mimic the patterns of the residual between the experimental binding energy and the theoretical one given by the liquid-drop model (LDM), Duflo-Zucker (DZ) mass model, finite-range droplet model (FRDM), as well as the Weizsäcker-Skyrme (WS4) model, so as to refine these mass models. Results: By using the experimental data of 80 percent of known nuclei as the training dataset, the root mean square deviation (RMSD) between the predicted and the experimental binding energy of the remaining 20% is about 0.2340.022 MeV, 0.2130.018 MeV, 0.1700.011 MeV, and 0.2220.016 MeV for the LightGBM-refined LDM, DZ, WS4, and FRDM models, respectively. These values are of about 90%, 65%, 40%, and 60% smaller than the corresponding origin mass models. The RMSD for 66 newly measured nuclei that appeared in AME2020 is also significantly improved on the same foot. One-neutron and two-neutron separation energies predicted by these refined models are in consistence with several theoretical predictions based on various physical models. In addition, the two-neutron separation energy of several newly measured nuclei (e.g., some isotopes of Ca, Ti, Pm, Sm) predicted with LightGBM-refined mass models are also in good agreement with the latest experimental data. Conclusions: LightGBM can be used to refine theoretical nuclear mass models so as to predict the binding energy of unknown nuclei. Moreover, the correlation between the input characteristic quantities and the output can be interpreted by SHapley Additive exPlanations (SHAP, a popular explainable artificial intelligence tool), this may provide new insights on developing theoretical nuclear mass models.

I Introduction

The mass of nuclei, which is of fundamental importance to explore nuclear landscape and properties of nuclear force, plays a crucial role in understanding many issues in both the fields of nuclear physics and astrophysics 1 ; 2 ; 3 ; 4 ; Niu:2018olp ; 44 . It is known that more than 7000 nuclei in the nuclear landscape from H (Z=1) to Og (Z=118) are predicted to be existed according to various theoretical models, while about 3000 nuclei have been found or synthesized in experimental and the masses of about 2500 nuclei have been measured accurately 5 ; 6 . Exploring the masses of the remains is of particular interest for both nuclear experimental and theoretical community. On the experimental side, facilities, such as HIRFL-CSR in China, RIBF at RIKEN in Japan, cooler-storage ring ESR and SHIPTRAP at GSI in Germany, CPT at Argonne and LEBIT at MSU in US, ISOLTRAP at CERN, JYFLTRAP at Jyväskylä in Finland, TITAN at TRIUMPH in Canada, are partly dedicated to measuring the nuclear mass, especially for nuclei round the driplines. On the theoretical side, various models have been developed to study nuclear mass by considering different physics, such as finite-range droplet model (FRDM) 7 ; 8 , the Weizsäcker-Skyrme (WS) model 9 , Hartree-Fock-Bogoliubov (HFB) mass models 10 ; 11 ; 12 , the relativistic mean-field (RMF) model Geng:2005yu , relativistic continuum Hartree-Bogoliubov (RCHB) theory Xia:2017zka . Though tremendous progress has been made in both experimental and theoretical sides, exploring mass of nuclei around dirplines is still a great challenge for both sides.

Machine learning which is the subset of artificial intelligence has been widely applied for analyzing data in many branches of science, such as in physics, e.g., Refs. ROMP ; Nature1 . In nuclear physics, a Bayesian neural network (BNN) has been applied to reduce the mass residuals between theory and experiment, and a significant improvement in the mass predictions of several theoretical models was obtained after BNN refinement Utama:2015hva ; Utama:2017wqe ; Niu:2018csp , e.g., the root mean square deviation (RMSD) of the liquid-drop model (LDM) was reduced from about 3 MeV to 0.8 MeV. Later on, BNN approach is also applied to study nuclear charge radii Utama:2016tcl , -decay half-lives Niu:2018trk , fission product yield PJC , fragment Production in spallation reaction Ma:2020bic ; Ma:2020mbd . Besides BNN, other machine learning or deep learning algorithms also have been employed in studying of nuclear reactions, e.g., Refs. PLG ; Du:2019civ ; Steinheimer:2019iso ; Song:2021rmm ; Wang:2020tgb ; Li:2020qqn . Focusing on nuclear mass, besides BNN in Refs. Utama:2015hva ; Utama:2017wqe ; Niu:2018csp , the Levenberg-Marquardt neural network approach Zhang:2017zvb , Gaussian processes shelley ; Neufcourt:2018syo , decision tree algorithm Carnini:2020lvr , the Multilayer Perceptron (MLP) algorithm MLP also have been applied to refine nuclear mass models.

Indeed, studying nuclear mass with machine learning algorithms is not a new topic and it can be traced back to at least 1993, see e.g., Refs. Gernoth:1993dqa ; Athanassopoulos:2003qe ; Clark:2006ua and reference therein. In Ref. Gernoth:1993dqa , the capability of multilayer feedforward neural networks for learning the systematics of atomic masses and nuclear spins and parities with high accuracy has been found. This topic is flourishing again because of the rapid development of computer science and artificial intelligence. In 2016, Light Gradient Boosting Machine (LightGBM) which is a tree based learning algorithm was developed by Microsoft LightGBM . It is a state-of-the-art machine learning algorithm which has achieved better performances in many machine learning tasks. Therefore, it would be interesting to explore whether LightGBM algorithm can achieve better accuracy than BNN on the task of predicting nuclear mass.

II LightGBM and the input features

LightGBM refers to a recent improvement of gradient boosting decision tree (GBDT) that provides efficient implementation of gradient boosting algorithms. It is becoming more popular by the day due to its efficiency and capability of handling large amounts of data. LightGBM has leaf-wise growth of trees, rather than a level-wise growth. After the first partition, the next split is performed only on the leaf node that adds more to the information gain.

The primary advantage of LightGBM is the change in training algorithm that speeds up the optimization process dramatically and results in a more effective model in many cases. More concretely, to speed up the training process, LightGBM uses a histogram-based methodology to select the best segmentation. For any continuous variable, instead of using individual values, these are divided into bins or buckets, which can accelerate the training process and reduce memory usage. In addition, LightGBM contains two novel techniques: Gradient bases One-Side Sampling (GOSS), which keeps all the instances of large gradient and performs random sampling on the instances with small gradient, and Exclusive Feature Bundling (EFB), which helps to bundle multiple features into a single feature without losing any information. Furthermore, as a decision tree-based algorithm, LightGBM also has high level of interpretability, allowing the results obtained in machine learning model to be checked against previous knowledge regarding nuclear mass. For example, one can find which feature is more important for predicting nuclear mass, this would be helpful to further improve nuclear mass model.

In this work, the binding energies of 2408 nuclei between 16O and 270Ds from the atomic mass evaluation (AME2016) 6 are employed as the training and testing dataset. LightGBM is trained to learn the residual between the theoretical prediction and the experimental binding energy, . Four theoretical mass models are adopted in this work to obtain , including the LDM Zhang:2017zvb , DZ Duflo:1995ep , FRDM 7 ; 8 , and WS4 9 . After LightGBM learns the behaviour of the residual , the binding energy of an unknown mass nucleus can be obtained via =+. It is found that the RMSD of these four theoretical mass models can be significantly improved after LightGBM refinement.

For the LDM model, nucleus is regarded as a non-compressible droplet, which contains the volume energy, surface energy, Coulomb energy of proton repulsion, the symmetry energy related to the ratio of neutrons to protons, and the pairing energy of the neutron-proton pairing effect. It can be described as follows:

| (1) | ||||

Where is the pairing energy given by the following expression:

| (2) |

In the above formula, A, Z, N and are the mass number, proton number, neutron number and the third component of isospin , , , , , , , , , are adjustable parameters with the values given in Table 1. Based on these parameters the binding energy of 2408 nuclei theoretical and experimental values as an RMSD is MeV.

| Parameter | Value (MeV) |

|---|---|

| -15.4963 | |

| 17.7937 | |

| -1.8232 | |

| -2.2593 | |

| 0.7093 | |

| -1.2739 | |

| 4.6919 | |

| 4.7230 | |

| -6.4920 |

This work mainly aims to find the relationship between the feature quantity of each nucleus and with the LightGBM model. For each nucleus, we selected 10 physical quantities (cf. Table 2) as the input features. It is known that nuclear binding energy and nuclear structure are linked, therefore, we selected four physical quantities related to the shell structure, among which and are the shells where the last proton and neutron are located, and the level of the shell is given by the magic numbers. The number of protons between 8, 20, 50, 82 and 126 corresponds to of 1, 2, 3, 4, and the number of neutrons between 8, 20, 50, 82, 126 and 184 corresponds to of 1, 2, 3, 4, 5. In addition, and are the absolute values of the difference between the number of protons, the number of neutrons, and the nearest magic number, respectively, which represent the distance between the number of protons, the number of neutrons, and the nearest magic number. N pair is an index that considers the proton-neutron pairing effect, the odd-odd nucleus is 0, the odd-even nucleus or even-odd nucleus is 1, and the even-even nucleus is 2.

| Features | Description |

|---|---|

| A | mass number |

| Z | proton number |

| N | neutron number |

| N/Z | ratio of neutron to proton |

| theoretical value from LDM | |

| = 0,1,2 | dependence on pair effect; |

| for odd-odd,odd-even,even-even | |

| = 1,2 | shell of the last proton; |

| for ,, | |

| = 1,2 | shell of the last neutron; |

| for ,, | |

| the distance between the proton number | |

| and the nearest magic number; | |

| the distance between the neutron number | |

| and the nearest magic number; | |

In this work, the value of num_boost_round (maximum number of decision trees allowed) is 50000, num_leaves (maximum number of leaves allowed per tree) is 10, max_depth (maximum depth allowed per tree) is -1 and other parameters are basically set as their default values of the LightGBM model. Varying these parameters would not alter the results significantly. During the training process, LightGBM will generate a decision tree based on the relevant information between the features of the training set and . 10-fold cross-validation, which is a technique to evaluate models by partitioning the original dataset into 10 equal size subsamples., is also applied to prevent overfitting and selection bias. After training, the model will make predictions on the testing set. Each nucleus in the testing set traverses the decision tree grown during model training. Each decision tree will give its contribution to the predicted value according to the feature quantity of each nucleus. The sum of the contributions of all decision trees is the predicted value given by the final model.

III Result

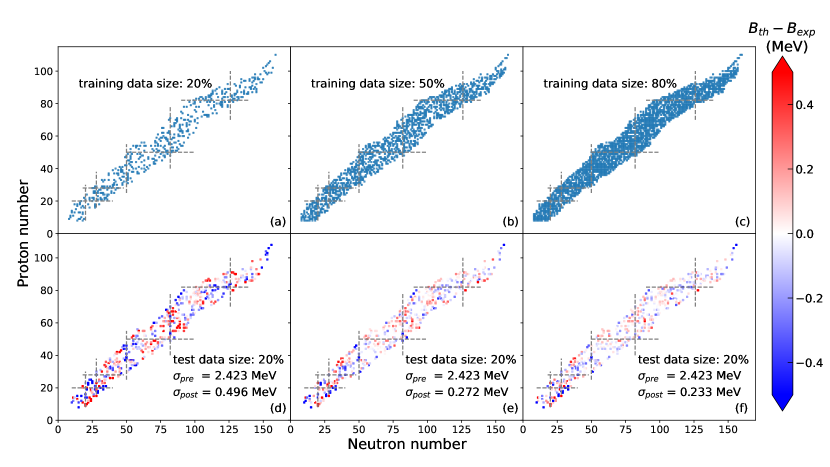

III.1 Predictions on the binding energy based on LDM

In this section, LightGBM is trained to learn the residual between the LDM and experimental binding energies. For this purpose, binding energies of the 2408 nuclei between 16O and 270Ds from AME2016 are split into training and testing data sets. We note here that, nuclei with proton (neutron) number smaller than 8 and with relatively large experimental uncertainties in AME2016 are not used. First, the influence of training size on the predicted binding energy is examined, as displayed in Fig.1. We randomly select 482 (about 20% of the 2408 nuclei) nuclei to constitute the training set. The RMSD of LDM for the selected 482 nuclei is about 2.458 MeV, after the LightGBM refinement, the RMSD is reduced to 0.496, 0.272, 0.233 MeV when 482, 1204, and 1926 nuclei are used to train the LightGBM, respectively. This means that LightGBM has been able to capture the missing physics of the LDM and to decode the correlation between the input features and the residual, so as to further improve the agreement with experimental data.

In addition, it can be seen that the deviation between the experimental and LightGBM-refined LDM predictions for nuclei with the small number of proton and neutron is usually larger, this may due to the fact that the microstructure effect in the light-mass nuclei is strong, and there are less data on the light-mass nuclei in the training set. The value of the above mentioned RMSD will fluctuate when the training and test data sets are randomly selected, because for some of nuclei (i.e., nuclei around magic number) are large and some of are small. To evaluate this issue, we randomly split the 2408 nuclei into training and testing data sets 500 times with each ratio (i.e., 4:1, 1:1, and 1:4), the RMSD and its density distribution are plotted in Fig.2 and Fig.3. As observed in Fig.2, fluctuation in the RMSD is the largest of all when the ratio of training size to testing size is 1:4. The RMSD for 1926 nuclei predicted by LightGBM-refined LDM with learning the binding energy of 482 nuclei is about 0.5080.035 MeV, this result is comparable to many physical mass models. With the training data set is built from 1926 nuclei and the remaining 482 nuclei constitute the testing data set, the RMSD is obtained to be 0.2340.022 MeV, this performance is better than many physical mass models.

Fig. 4 shows the residual obtained from the LDM and LightGBM-refined LDM. The results from nine runs with randomly selected 80% of 2408 nuclei as the training set and the remaining 20% as the testing set are displayed. It can be seen that the residual obtained with the original LDM is large, especially for nuclei around magic number, due to the absence of shell effect in the LDM. After the refinement of LightGBM, is considerably reduced, especially for nuclei with mass number larger than 60. The performance of LightGBM for nuclei with mass number smaller than 60 is not as good as that for nuclei with large mass number, the same as we already observed in Fig.1. This could be improved by feeding more relevant features to LightGBM.

III.2 Predictions on the binding energy based on different mass models

| LDM | DZ | WS4 | FRDM | |||

| Training set | ||||||

| LMNN by H. F. Zhang Zhang:2017zvb | 0.235 | 0.325 | — | 0.348 | ||

| BNN by Z. M. Niu Niu:2018csp | — | — | 0.176 | 0.187 | ||

| NN by R. Utama Utama:2015hva | 0.466 | 0.274 | — | 0.342 | ||

| NN by A. Pastore Pastore:2019lco | — | 0.324 | — | — | ||

| Trees by M. Carnini Carnini:2020lvr | 2.070 | 0.471 | — | — | ||

| LightGBM in this work | ||||||

| Testing set | ||||||

| LMNN by H. F. Zhang | 0.256 | 0.329 | — | 0.368 | ||

| BNN by Z. M. Niu | — | — | 0.212 | 0.252 | ||

| NN by R. Utama | 0.486 | 0.278 | — | 0.352 | ||

| NN by A. Pastore | — | 0.358 | — | — | ||

| Trees by M. Carnini | 2.881 | 0.569 | — | — | ||

| LightGBM in this work |

In the previous section, the capability of LightGBM to refine LDM has been exhibited. In this section, besides LDM, three popular mass models, i.e., DZ, WS4 and FRDM, are tested as well. To do so, the between experimental binding energy and the one obtained from each mass model is fed to LightGBM, and we randomly split the 2408 nuclei into training and testing groups with the ratio of 4:1, and run 500 times for each mass model. The distribution of RMSD on the training and testing data sets are displayed in Fig.5. In Tab. 3, the performance of serval ML refined mass models are compared. It can be seen that the typical value of RMSD on the training data set is only about 0.05-0.1 MeV, which is the smallest of all, to our best knowledge, the highest performance mass model. The typical value of RMSD on the testing data set is bout 0.2 MeV, which is also smaller than others. In general, significant improvements of about 90%, 65%, 40%, and 60% after the LightGBM refinement on the LDM, DZ, WS4, and FRDM are obtained, indicating the strong capability of LightGBM to improve theoretical nuclear mass models.

Very recently, the AME2020 was published, thus it is interesting to see whether the LightGBM-refined mass models also work well for newly measured nuclei that appeared in the AME2020 mass evaluation. The comparison of the binding energy obtained with LDM, DZ, WS4, and FRDM and LightGBM-refined mass models on the 66 newly measured nuclei that appeared in the AME2020 are illustrated in Fig.6. The RMSD of the original mass models on these newly measured nuclei are 2.468, 0.821, 0.350, and 0.778 MeV for the LDM, DZ, WS4, and FRDM, respectively. After the refinement of LightGBM, the RMSD of the above four mass models is significantly reduced to 0.452, 0.320, 0.222, and 0.292 MeV.

III.3 Extrapolation of Neutron Separation Energy

Single and two-neutron separation energies are of particular interest, because they provide information relevant to shell and subshell structure, nuclear deformation, paring effects, as well as the boundary of the nuclear landscape. They can be calculated by the following formula:

| (3) |

Good performance of the LightGBM-refined mass models on the prediction of nuclear binding has been shown, it is interesting to see whether the single and two-neutron separation energies also can be reproduced well on the same foot. Fig.7 compares the single neutron separation energy of Ca, Zr, Sn, and Pb isotopic chains given by different theoretical models and the experimental data from AME2016. All predictions are in good agreement with experimental data whenever there has data, while discrepancy appears as the increase of neutron number. The general trend of the as a function of neutron number obtained with LightGBM-refined LDM and WS4 are similar as that obtained with other nuclear mass models, e.g., the odd-even staggering also can be observed.

The latest experimental measurements of the two-neutron separation energy of the four elements (Ca, Ti, Pm, Sm) are compared with various theoretical model calculations in Fig. 8. It can be seen that the newly measured can be well reproduced by LightGBM-refined LDM and WS4 models, particularly, obtained with LightGBM-refined LDM lies much more closely to the experimental data than that obtained with LDM. For example, the sharp decrease of around magic number cannot be reproduced by LDM, while this issue can be fixed after the refinement of LightGBM. Good performance of LightGBM-refined mass models on both and indicating again the strong capacity of LightGMB on refining nuclear mass model.

III.4 Interpretability of the model

As a decision-tree based algorithm, one of advantage of LightGBM is its excellent degree of explainability. This is important because, as physicist, one expecting ML algorithm not only has a good performance on refining nuclear mass models, but also can provide some underlying physics that is absent from the original nuclear mass models. Understanding what happens when ML algorithms make predictions that could help us further improving our knowledge about the relationship between the input feature quantity and the predicted value. One of the possible way to understand how the LightGBM algorithm gives a particular prediction is to appreciate the most important features that drive the model. For this purpose, SHapley Additive exPlanation (SHAP) shap , which is one of the most popular feature attribution methods, is applied to obtain the contributions of each feature value to the prediction. Fig.10 illustrates the ranking of importance of the input 10 features. The top is the most important feature, while the bottom is the most irrelevant feature, to predict the residual between the experimental and theoretical binding energy. It can be seen that the importance ranks of input features are different for different mass models. Because shell effects are not included in LDM, the residual around magic numbers are usually larger (also can be seen in Fig.4). As a result, and are more important to predict the between LDM calculation and experimental data. To demonstrate the meaning of SHAP value, residual obtained from LDM and SHAP value are shown in Fig.10. In the upper panel of Fig.10, around magic number, i.e., is close to 0, larger difference between LDM calculated and experimental binding energy is existed, especially for nuclei with larger neutron number. While very similar behavior for SHAP value can be seen in the lower panel. It implies that by adding a -related term in the LDM, the accuracy of LDM on calculating nuclear binding energy can be improved to some extend. For FRDM, the neutron number stands for the most relevant feature and the SHAP value for smaller is usually larger. Indeed, the residual for nuclei with smaller neutron number is larger has already been observed in FRDM paper, i.e., Fig. 6 of Ref. Moller:2015fba . In addition, one sees that , , and are three of the most irrelevant features to predict the residual , it means that the performance on predicting may not be influenced if they are removed from input features.

IV Conclusion and outlook

To summarize, several features are fed into the LightGBM algorithm to study the residual between the theoretical and experimental binding energies, it turns out that LightGBM algorithm can mimic the patterns of with high accuracy, so as to refine theoretical mass models. In this work, significant reductions on the RMSD of about 90%, 65%, 40%, and 60% after the LightGBM refinement on the LDM, DZ, WS4, and FRDM are obtained, indicating the strong capability of LightGBM to improve theoretical nuclear mass models. In addition, the RMSD for various mass models with respect to the 66 newly measured nuclei that appeared in AME2020 (compared with AME2016) is reduced on the same level as well. Furthermore, it is found that single and two-neutron separation energies obtained with the LightGBM-refined mass models are in good agreement with the newly appeared experimental data. By using the SHAP package, the most relevant input features to predict the residual for each mass model are found out, which may provide guidance for the further developments of nuclear mass models.

The good performance of machine learning method on refining the nuclear mass model gives us a new tool to further investigate other properties of nuclei that we are interested in, such as, superheavy nuclei, halo nuclei, and nuclei around drip-line. In addition, with the development of the interpretable machine learning methods, more physical hints can be obtained thereby improving our understanding of present nuclear models.

Acknowledgements.

Fruitful discussions with Prof. Jie Meng, Prof. Hongfei Zhang, Prof. Yumin Zhao, Dr. Nana Ma are greatly appreciated. The authors acknowledge support by the computing server C3S2 in Huzhou University. The work is supported in part by the National Science Foundation of China Nos. U2032145, 11875125, and 12047568, and the National Key Research and Development Program of China under Grant No. 2020YFE0202002, and the “Ten Thousand Talent Program" of Zhejiang province (No. 2018R52017). The mass table for the LightGBM-refined mass models is available in the Supplemental Material.References

- (1) D. Lunney, J. M. Pearson and C. Thibault, Rev. Mod. Phys. 75, 1021-1082 (2003). doi:10.1103/RevModPhys.75.1021

- (2) K. Blaum, Phys. Rep. 425, 1 (2006).

- (3) F. Wienholtz, D. Beck, K. Blaum, C. Borgmann, M. Breitenfeldt, R. B. Cakirli, S. George, F. Herfurth, J. D. Holt and M. Kowalska, et al. Nature 498, no.7454, 346-349 (2013) doi:10.1038/nature12226

- (4) K. Blaum, J. Dilling and W. Nortershauser, Phys. Scripta T 152, 014017 (2013) doi:10.1088/0031-8949/2013/T152/014017 [arXiv:1210.4045 [physics.atom-ph]].

- (5) Z. Niu, H. Liang, B. Sun, Y. Niu, J. Guo and J. Meng, Sci. Bull. 63, 759-764 (2018) doi:10.1016/j.scib.2018.05.009 [arXiv:1807.05535 [nucl-th]].

- (6) Wang M, Zhang Y H, Zhou X H. Nuclear mass measurements (in Chinese). Sci Sin-Phys Mech Astron, 2020, 50: 052006, doi: 10.1360/SSPMA-2019- 0308

- (7) C. Ma, M. Bao, Z. M. Niu, Y. M. Zhao and A. Arima, Phys. Rev. C 101, no.4, 045204 (2020) doi:10.1103/PhysRevC.101.045204

- (8) M. Wang, G, Audi, F. G. Kondev, W. J. Huang, S. Naimi, and X. Xu, Chinese Physics C(2017).

- (9) P. Möller, W. D. Myers, H. Sagawa and S. Yoshida, Phys. Rev. Lett. 108, no.5, 052501 (2012) doi:10.1103/PhysRevLett.108.052501

- (10) P. Möller, J. R. Nix, W. D. Myers and W. J. Swiatecki, Atom. Data Nucl. Data Tabl. 59, 185-381 (1995) doi:10.1006/adnd.1995.1002 [arXiv:nucl-th/9308022 [nucl-th]].

- (11) N. Wang, M. Liu, X. Wu and J. Meng, Phys. Lett. B 734, 215-219 (2014) doi:10.1016/j.physletb.2014.05.049 [arXiv:1405.2616 [nucl-th]].

- (12) S. Goriely, N. Chamel and J. M. Pearson, Phys. Rev. C 93, no.3, 034337 (2016) doi:10.1103/PhysRevC.93.034337

- (13) S. Goriely, N. Chamel and J. M. Pearson, Phys. Rev. Lett. 102, 152503 (2009) doi:10.1103/PhysRevLett.102.152503 [arXiv:0906.2607 [nucl-th]].

- (14) Y. Aboussir, J. M. Pearson, A. K. Dutta and F. Tondeur, Atom. Data Nucl. Data Tabl. 61, 127-176 (1995) doi:10.1016/S0092-640X(95)90014-4

- (15) L. S. Geng, H. Toki and J. Meng, Prog. Theor. Phys. 113, 785-800 (2005) doi:10.1143/PTP.113.785 [arXiv:nucl-th/0503086 [nucl-th]].

- (16) X. W. Xia, Y. Lim, P. W. Zhao, H. Z. Liang, X. Y. Qu, Y. Chen, H. Liu, L. F. Zhang, S. Q. Zhang and Y. Kim, et al. Atom. Data Nucl. Data Tabl. 121-122, 1-215 (2018) doi:10.1016/j.adt.2017.09.001 [arXiv:1704.08906 [nucl-th]].

- (17) C. Giuseppe, C. Ignacio, C. Kyle et al., Rev. Mod. Phys. 91, 045002 (2019).

- (18) Radovic. A, Williams, Rousseau. D et al., Nature 560, 41 (2018).

- (19) R. Utama, J. Piekarewicz and H. B. Prosper, Phys. Rev. C 93, no.1, 014311 (2016) doi:10.1103/PhysRevC.93.014311 [arXiv:1508.06263 [nucl-th]].

- (20) R. Utama and J. Piekarewicz, Phys. Rev. C 96, no.4, 044308 (2017) doi:10.1103/PhysRevC.96.044308 [arXiv:1704.06632 [nucl-th]].

- (21) Z. M. Niu and H. Z. Liang, Phys. Lett. B 778, 48-53 (2018) doi:10.1016/j.physletb.2018.01.002 [arXiv:1801.04411 [nucl-th]].

- (22) R. Utama, W. C. Chen and J. Piekarewicz, J. Phys. G 43, no.11, 114002 (2016).

- (23) Z. A. Wang, J. C. Pei, Y. Liu, Phys. Rev. Lett 123, 122501 (2019).

- (24) C. W. Ma, D. Peng, H. L. Wei, Y. T. Wang and J. Pu, Chin. Phys. C 44, no.12, 124107 (2020).

- (25) C. W. Ma, D. Peng, H. L. Wei, Z. M. Niu, Y. T. Wang and R. Wada, Chin. Phys. C 44, no.1, 014104 (2020).

- (26) Z. M. Niu, H. Z. Liang, B. H. Sun, W. H. Long and Y. F. Niu, Phys. Rev. C 99, no.6, 064307 (2019) doi:10.1103/PhysRevC.99.064307 [arXiv:1810.03156 [nucl-th]].

- (27) L. G. Pang, K. Zhou, N. Su et al., Nat. Commun 9 210 (2018).

- (28) Y. L. Du, K. Zhou, J. Steinheimer, L. G. Pang, A. Motornenko, H. S. Zong, X. N. Wang and H. Stöcker, Eur. Phys. J. C 80, no.6, 516 (2020) doi:10.1140/epjc/s10052-020-8030-7 [arXiv:1910.11530 [hep-ph]].

- (29) J. Steinheimer, L. Pang, K. Zhou, V. Koch, J. Randrup and H. Stoecker, JHEP 12, 122 (2019).

- (30) Y. D. Song, R. Wang, Y. G. Ma, X. G. Deng and H. L. Liu, Phys. Lett. B 814, 136084 (2021) doi:10.1016/j.physletb.2021.136084 [arXiv:2101.10613 [nucl-th]].

- (31) R. Wang, Y. G. Ma, R. Wada, L. W. Chen, W. B. He, H. L. Liu and K. J. Sun, Phys. Rev. Res. 2, no.4, 043202 (2020)

- (32) F. Li, Y. Wang, H. Lü, P. Li, Q. Li and F. Liu, J. Phys. G 47, no.11, 115104 (2020).

- (33) H. F. Zhang, L. H. Wang, J. P. Yin, P. H. Chen and H. F. Zhang, J. Phys. G 44, no.4, 045110 (2017) doi:10.1088/1361-6471/aa5d78

- (34) M. Shelley and A. Pastore, arXiv:2102.07497 (2021).

- (35) L. Neufcourt, Y. Cao, W. Nazarewicz and F. Viens, Phys. Rev. C 98, no.3, 034318 (2018) doi:10.1103/PhysRevC.98.034318 [arXiv:1806.00552 [nucl-th]].

- (36) M. Carnini and A. Pastore, J. Phys. G 47, no.8, 082001 (2020) doi:10.1088/1361-6471/ab92e3 [arXiv:2002.10290 [nucl-th]].

- (37) Esra Yüksel, Derya Soydaner, and Hüseyin Bahtiyar, arXiv:2101.12117v1 (2021).

- (38) K. A. Gernoth, J. W. Clark, J. S. Prater and H. Bohr, Phys. Lett. B 300, 1-7 (1993) doi:10.1016/0370-2693(93)90738-4

- (39) S. Athanassopoulos, E. Mavrommatis, K. A. Gernoth and J. W. Clark, Nucl. Phys. A 743, 222-235 (2004) doi:10.1016/j.nuclphysa.2004.08.006 [arXiv:nucl-th/0307117 [nucl-th]].

- (40) J. W. Clark and H. Li, Int. J. Mod. Phys. B 20, no.30n31, 5015-5029 (2006) doi:10.1142/S0217979206036053 [arXiv:nucl-th/0603037 [nucl-th]].

- (41) J. Duflo and A. P. Zuker, Phys. Rev. C 52, R23 (1995) doi:10.1103/PhysRevC.52.R23 [arXiv:nucl-th/9505011 [nucl-th]].

- (42) A. Pastore, D. Neill, H. Powell, K. Medler and C. Barton, Phys. Rev. C 101, no.3, 035804 (2020) doi:10.1103/PhysRevC.101.035804 [arXiv:1912.11365 [nucl-th]].

- (43) N. N. Ma, H. F. Zhang, X. J. Bao and H. F. Zhang, Chin. Phys. C 43, no.4, 044105 (2019) doi:10.1088/1674-1137/43/4/044105

- (44) H. C. Yu, M. Q. Lin, M. Bao, Y. M. Zhao and A. Arima, Phys. Rev. C 100, no.1, 014314 (2019) doi:10.1103/PhysRevC.100.014314

- (45) S. Michimasa, M. Kobayashi, Y. Kiyokawa, S. Ota, R. Yokoyama, D. Nishimura, D. S. Ahn, H. Baba, G. P. A. Berg and M. Dozono, et al. Phys. Rev. Lett. 125, no.12, 122501 (2020) doi:10.1103/PhysRevLett.125.122501

- (46) M. Vilen, J. M. Kelly, A. Kankainen, M. Brodeur, A. Aprahamian, L. Canete, T. Eronen, A. Jokinen, T. Kuta and I. D. Moore, et al. Phys. Rev. Lett. 120, no.26, 262701 (2018) [erratum: Phys. Rev. Lett. 124, no.12, 129901 (2020)] doi:10.1103/PhysRevLett.120.262701 [arXiv:1801.08940 [nucl-ex]].

- (47) S. Lundberg, S. I. Lee. arXiv:1705.07874 (2017) [cs.AI].

- (48) P. Möller, A. J. Sierk, T. Ichikawa and H. Sagawa, Atom. Data Nucl. Data Tabl. 109-110, 1-204 (2016) doi:10.1016/j.adt.2015.10.002 [arXiv:1508.06294 [nucl-th]].

- (49) Guolin Ke, Qi Meng, Thomas Finley, Taifeng Wang, Wei Chen, Weidong Ma, Qiwei Ye, Tie-Yan Liu. “LightGBM: A Highly Efficient Gradient Boosting Decision Tree.” Advances in Neural Information Processing Systems 30 (NIPS 2017), pp. 3149-3157.