Machine Learning Percolation Model

Abstract

Recent advances in machine learning have become increasingly popular in the applications of phase transitions and critical phenomena. By machine learning approaches, we try to identify the physical characteristics in the two-dimensional percolation model. To achieve this, we adopt Monte Carlo simulation to generate dataset at first, and then we employ several approaches to analyze the dataset. Four kinds of convolutional neural networks (CNNs), one variational autoencoder (VAE), one convolutional VAE (cVAE), one principal component analysis (PCA), and one -means are used for identifying order parameter, the permeability, and the critical transition point. The former three kinds of CNNs can simulate the two order parameters and the permeability with high accuracy, and good extrapolating performance. The former two kinds of CNNs have high anti-noise ability. To validate the robustness of the former three kinds of CNNs, we also use the VAE and the cVAE to generate new percolating configurations to add perturbations into the raw configurations. We find that there is no difference by using the raw or the perturbed configurations to identify the physical characteristics, under the prerequisite of corresponding labels. In the case of lacking labels, we use unsupervised learning to detect the physical characteristics. The PCA, a classical unsupervised learning, performs well when identifying the permeability but fails to deduce order parameter. Hence, we apply the fourth kinds of CNNs with different preset thresholds, and identify a new order parameter and the critical transition point. Our findings indicate that the effectiveness of machine learning still needs to be evaluated in the applications of phase transitions and critical phenomena.

I Introduction

Machine learning methods have rapidly become pervasive instruments due to better fitting quality and predictive quality in comparison with traditional models in terms of phase transitions and critical phenomena. Usually, machine learning can be divided into supervised and unsupervised learning. In the former, the machine receives a set of inputs and labels. Supervised learning models are trained with high accuracy to predict labels. The effectiveness of supervised learning has been examined by many predecessors on Ising models [1, 2, 3, 4, 5, 6, 7, 8], Kitaev chain models [3], disordered quantum spin chain models [3], Bose-Hubbard models [6], SSH models [6], SU(2) lattice gauge theory [7], topological states models [9], -state Potts models [10, 11], uncorrelated configuration models [12], Hubbard models [13, 12], and models [14], ect.

On the other hand, in unsupervised learning models, there are no labels. Unsupervised learning can be used as meaningful analysis tools, such as sample generation, feature extraction, cluster analysis. Principal component analysis (PCA) is one of the unsupervised learning techniques. Recently investigators have examined the PCA’s effectiveness for exploring physical features without labels in the applications of phase transitions and critical phenomena [15, 14, 8, 13, 16, 17]. Variational autoencoder (VAE) and convolutional VAE (cVAE), another two classical unsupervised learning technique, incorporated into generative neural networks, are used for data reconstruction and dimensional reduction in respect of phase transitions and critical phenomena [8, 18].

Although machine learning approaches have been applied successfully in phase transitions and critical phenomena, there is only one study on the percolation model [17]. Motivated by predecessors, we conduct much more comprehensive studies, which combine supervised learning with unsupervised learning, to detect the physical characteristics in the percolation model.

Our work is considered from the following several aspects. First, we use the former three kinds of deep convolutional neural networks (CNNs) to deduce the two order parameters and the permeabilities in the two-dimensional percolation model. our inspiration and method come from [2, 3], whose study both focus on Ising model.

Nevertheless, the above CNNs are trained on the known configurations from the dataset obtained by Monte Carlo simulation. [8, 18] find that VAE and cVAE can reconstruct samples in Ising model. Hence, we use the VAE and the cVAE to generate new configurations that are out of the dataset. After generating the new configurations, we pour them into the former three kinds of CNNs, respectively.

Having explored supervised learning, we now move on to unsupervised learning. Here we try to identify physical characteristics without labels in the two-dimensional percolation model. [15] takes the first principal component obtained by PCA as the order parameter in Ising model. In contrast to [15], by using preprocessing on the unpercolating clusters, [17] also successfully finds the order parameter in percolation model by PCA. In this study, we try to use the PCA to extract relevant low-dimensional representations to discover physical characteristics.

In an actual situation, we may not know the labels when identifying order parameter. To overcome the difficulty associated with missing labels, [12] changes the preset threshold between the labels zero and one so as to make incorrect labels between the preset and the true thresholds. Hence, we deliberately change the preset thresholds, determined by -means, between the labels zero and one. Here we use the fourth kinds of CNNs which receives the raw configurations as input and the labels determined by the preset thresholds.

This paper is organized as follows. In Sec. II, we describe the two-dimensional percolation model and the dataset from Monte Carlo simulation. In Sec. III, we give a brief introduction to CNNs, VAE and cVAE, and PCA. Next, we provide dozens of machine learning models to capture the physical characteristics and discuss the results in Sec. IV. Finally, we conclude with a summary in Sec. V.

II The two-dimensional Percolation model

For percolation models, what we need to do is to capture the physical characteristics. A suitable dataset should be constructed to fulfill this objective. Various models in physical dynamics can be simulated mathematically by the Monte Carlo method, and it has been proved to be valid for using the Monte Carlo simulation to capture different physical features in phase transitions and critical phenomena [15, 14, 8].

In this study, the Monte Carlo simulation for the two-dimensional percolation model is carried out as follows. First, 40 values of permeability range from 0.41 to 0.80 with an interval of 0.01. For each permeability, the initial samples consist of 1000 percolating configurations. To train the machine learning models, the matrix with the size of (see Eq. 6) is used for storing 40,000 raw percolating configurations.

| (6) |

In Eq. (6), , , and . and represent the number of the configurations and the lattices, respectively. Each row () in the matrix is a configuration with one dimension, can be reshaped as the matrix () with the size of (see Eq. 10). Furthermore, each column () in the matrix represents one lattice with different configurations. Moreover, the element () in matrix and the element () in matrix take 0 when the corresponding lattice is occupied and take 1 otherwise.

| (10) |

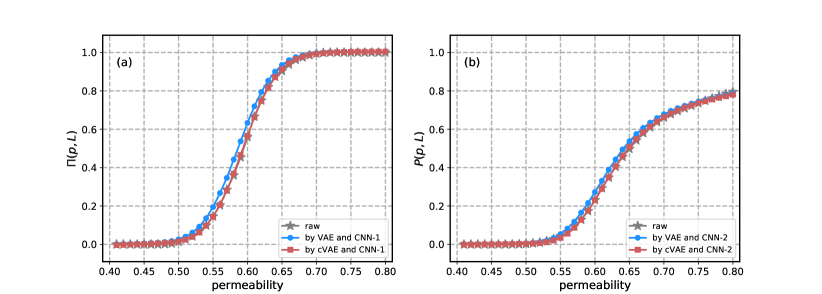

Except for the raw configurations, the dataset also encompasses order parameter. In the two-dimensional percolation model, order parameter includes the percolation probability and the density of the spanning cluster . refers to the probability that there is one connected path from one side to another in . That is to say, is a function of the permeability in the system with the size of (see Fig. 1(a)). With a connectivity of 4, we can identify the cluster for each lattice . The clusters are marked sequentially with an unique index. Note that the two lattices having the same index belong to the same cluster. If there are more than one cluster, the greatest cluster is chosen as the result. In this way, we can count up how many times the configurations are percolated for given and . For each permeability , is expressed in Eq. (11).

| (11) |

Where refers that the two-dimensional configurations for the permeability is percolated or not. Clearly, takes 0 if the corresponding lattice is occupied and takes 1 otherwise. , , , and .

Another representation of order parameter is the density of the spanning cluster . In contrast to the , is associated with spanning cluster. Therefore, for all configurations , is characterized by that whether or not each lattice belongs to the total spanning cluster. Similarly, is a function of the permeability in the system with the size of (see Fig. 1(b)). For each permeability , is expressed in Eq. (12).

| (12) |

Where counts up the total number of lattices that belong to the spanning cluster for each configuration in for the permeability . Obviously, , , , , and .

III Machine learning methods

III.1 CNNs

In this section, we will focus on the two-dimensional percolation model and the dataset obtained by the Monte Carlo simulation. This section will discuss several machine learning approaches, including CNNs, VAE and cVAE, and PCA, to deduce physical characteristics.

Let us first introduce CNNs. CNNs, supervised learning methods, are particularly useful in solving realistic problem for many disciplines, such as physics[3], chemistry [19], medicine [20], economics [21], biology [22], and geophysics [23, 24], ect. In the applications of phase transitions and critical phenomena, many predecessors utilize CNNs to detect physical features, especially order parameter [1, 25, 26, 27, 10, 5, 2, 6]. In this study, the four kinds of CNNs are not only used to detect the two order parameters ( and ), but also to detect the permeability .

Next, we demonstrate the architecture of the CNNs (see Fig. 2). The structure of the CNNs has four layers, including two convolution layers and two fully connected layers. The percolating configurations are taken as inputs. Consequently, the CNNs receive the corresponding order parameters ( and ) or the permeability as outputs.

III.2 VAE and cVAE

In the former section (see Sec. III.1), the raw configuration at different permeability is generated by Monte Carlo simulation. However, what if configurations are not in the raw configurations, can we still identify the physical features as well? Here we consider to use the VAE and the cVAE to generate new configuration and , respectively.

VAE (see Fig. 3), a generative network, bases on the variational Bayes inference proposed by [28]. Contracted with traditional AE (see Fig. S. 1), the VAE describes latent variables with probability. From that point, the VAE shows great values in data generation. Just like AE, the VAE is composed of an encoder and a decoder. The VAE uses two different CNNs as two probability density distributions. The encoder in the VAE, called the inference network , can generate the latent variables . And the decoder in the VAE, called the generating network , reconstructs the raw configuration . Unlike AE, the encoder and the decoder in VAE are constrained by the two probability density distributions.

To better reconstruct the raw two-dimensional configuration , the fully connected layer in the VAE is replaced by the convolution layer. Now we have got the cVAE. The architecture of the cVAE is shown in Fig. 3. Usually, the performance of the cVAE is better than the VAE due to the configuration with spatial attribute. In our work, the VAE and the cVAE are both used for generating THE new configuration .

III.3 PCA

The Sec. III.1 and Sec. III.2 focus on supervised learning, which hypothesize that the labels exist for the raw configuration on the percolation model. However, though we can detect the permeability values and the two order parameters ( and ) by supervised learning, label dearth often occurs. Thus, it is imperative to identify the labels, such as , , and . Some recent studies have shown that the first principal component obtained by PCA can be regarded as typical physical quantities [15, 8, 14, 17]. Base on these studies, we explore the meaning of the first principal component on the percolation model.

As it is well-known, PCA can reduce the dimension of the matrix . First, we compute the mean value for each column in the matrix . Then we get the centered matrix that is expressed as . After obtaining , by an orthogonal linear transformation expressed as , we extract the eigenvectors and the eigenvalues . The eigenvectors are composed with . The eigenvalues are sorted in the descending order, i.e., . The normalized eigenvalues are expressed as . The row in matrix can be transformed into . Eq. 13 represents the statistic average every 40 intervals for each permeability . This process is quite similar to the process of calculating the two order parameters, i.e., and . Table 1 shows the procedure of PCA algorithm.

| (13) |

| Require: the raw configuration |

|---|

| 1. Compute the mean value for the column in the matrix ; |

| 2. Get the centered matrix ; |

| 3. Compute the eigenvectors and the eigenvalues by an orthogonal linear transformation; |

| 4. Transform into ; |

| 5. Get the statistic average with every 40 intervals for each permeability . |

IV Results and Discussion

IV.1 Simulate the two order parameters by two CNNs

In this section, we consider to use the approches in Sec. III to capture the physic features. First we make use of TensorFlow 2.2 library to perform the CNNs with four layers. To predict the two order parameters ( and ), two kinds of CNNs (CNNs-I and CNNs-II) are constructed. The first two layers of the former two kinds of CNNs are composed of two convolution layers (“Con1” and “Con2”), both of which possessed “filter1”=32 and “filter2”=64 filters with the size of , and a stride of 1. Each convolution layer is followed by a max-pooling layer with the size of . The final convolution layer “Con2” is strongly interlinked to a fully connected layer “FC” with 128 variables. The output layer “Output”, following by “FC”, is a fully connected layer. For the two convolution layers and the fully connected layer “FC”, a rectified linear unit (ReLU) [29] is chosen as activation function due to its reliability and validity. However, the output layer has no activation function.

After determining the framework of CNNs-I and CNNs-II, here we mention how to train CNNs-I and CNNs-II for deducing and . First, we carry out an Adam algorithm [30] as an optimizer to update parameters, i.e., weights and biases. Then, a mini batch size of 256 and a learning rate of are selected for its timesaving. Following this treatment, CNNs-I and CNNs-II are trained on 1000 epochs for 40,000 uncorrelated and shuffled configurations, respectively. Before training, we split , and into 32,000 training set and 8,000 testing set. While training, we monitor three indicators, including the loss function (i.e., mean squared error (MSE, see Eq. 14)), mean average error (MAE, see Eq. 15), and root mean squared error (RMSE, see Eq. 16), for training and testing set [24]. In Eq. 14-16, and refer to / and its predictions. If the loss function in testing set reaches the minimum, then the optimal CNNs-I and CNNs-II will be obtained. As can be seen from Fig. S. 2, these indicators gradually decrease. In Table. S. 3, the errors of the optimal CNNs-I and CNNs-II are very small. What stands out in Fig. S. 2 is that CNNs-I and CNNs-II have high stability, consistency, and faster convergence rate.

| (14) |

| (15) |

| (16) |

Before assess CNNs-I and CNNs-II, we have to explain what is meant by statistic average. Statistic average can be defined as the averages of CNNs-I’s or CNNs-II’s outputs for each permeability . As shown in Fig. 5, there is a clear trend of phase transition between the permeability values and /. The two grey lines in Fig. 5, which are the same as the two blue lines in Fig. 1, represent the relationship between the permeability values and the raw or . Likewise, the two blue lines in Fig. 5, represent the relationship between the permeability values and the statistic average from the outputs of CNNs-I and CNNs-II. The overlapping of the two kinds of lines shows that CNNs-I and CNNs-II can be used to deduce the two order parameters and the process of phase transition.

To overcome the difficulty associated with the percolation model being near the critical transition point, we truncate the dataset. Specifically, we remove the data near the critical transition point, and only retain the data far away from the critical transition point. Here we take the simulation of as an example. The retained data with the raw rangs from 0 to 0.1, and 0.9 to 1. As shown in Fig. S. 3 and the middle red points in Fig. 6, we find that CNNs-I can extrapolate to missing data by learning the retained data.

This section demonstrate that CNNs-I and CNNs-II can be two effective tools for detecting and , respectively. Additional test should be made to verify that whether or not CNNs-I and CNNs-II are robust against noise. To address this issue, we deliberately invert a proportion, i.e., 5%, 10%, and 20%, of the labels for the raw and and verify that whether or not the “artificial” noises can affect the predicted and . Fig. 7 and Fig. S. 4 demonstrate that CNNs-I and CNNs-II are robust against noise. As the labeling error rates increase, the same trend is evident in the outputs of CNNs-I and CNNs-II within a relatively small difference (see Fig. 7). Therefore, we draw the conclusion that noises have little effect on detecting and .

IV.2 Simulate the permeability by one CNNs

Just like we use CNNs-I and CNNs-II in Sec. IV.1, here we use the same structure for CNNs-III and strategies for training the permeability . The only distinction between CNNs-III and CNNs-I/CNNs-II is that the outputs for CNNs-III are the permeability instead of the two order parameters. Fig. S. 5 shows the performance of CNNs-III. With successive increases in epochs, the MSE, MAE, and RMSE continue to decrease until no longer dropping.

Another measure of CNNs-III’s performance is concerned with the difference between the raw permeability and its prediction . The blue circles in Fig. 8 show that there is a strong positive correlation between the raw permeability and its prediction . Further statistical tests reveal that most of the gap between the raw permeability and its prediction is less than 0.1. The result proves that CNNs-III has the advantage of convenient use and high precision.

On the other hand, extrapolation ability can also reflect the performance of CNNs-III. To do this, we use Monte Carlo simulation to generate new dataset. The new permeability not only ranges from 0.01 to 0.40, but also from 0.81 to 1.00, with an interval of 0.01. Just like Sec. II, we perform 1050 Monte Carlo steps and keep the last 1000 steps. As a consequence, 60,000 configurations are generated. The red circles in Fig. 8 exhibit the extrapolation ability of CNNs-III. Our results show that no significant correlation between the extrapolated permeability ranging from 0.81 to 1.00 and their prediction . However, in Fig. 8, the results indicate that CNNs-III has good extrapolation ability for the extrapolated permeability from 0.01 to 0.40.

IV.3 Generate new configurations by one VAE and one cVAE

Though CNNs-I, CNNs-II, and CNNs-III are valid when detecting the two order parameters ( and ) and the permeability , the validity of these three CNNs is unkown for percolating configurations outside of the dataset. As is shown in Fig. 3 and Fig. 4, we use the same network structures for the VAE and the cVAE to generate new configurations ( and ). Actually, and can be regarded as adding some noise into the raw configuration .

Let us first consider VAE. Just like AE (see Fig. S. 1), the VAE is also composed of an encoder and a decoder. The encoder of the VAE owns two fully connected layers, both of which follows with a ReLU activation function. The first layer of the encoder possess “size1”=512 neurons. Another 512 neurons, including 256 mean “” and 256 variance “”, are taken into account for the second layer of the encoder. By resampling from the Gaussian distribution with the mean “” and the variance “”, we obtain 256 latent variables “” which are the inputs of the decoder. For the decoder of the VAE, two fully connected layers follow with the outputs of the encoder. For symmetry, the first layer of the decoder also contains “size1”=512 neurons and follows with a ReLU activation function. And the output layer of the decoder contains 784 neurons which are used to reconstruct the raw configuration . Thus, the neurons in the output layer are the same as that in the input layer.

Moving on now to consider cVAE. The encoder of the cVAE is composed of one input layer, two hidden convolution layers with “filter1”=32 and “filter2”=64 filters with the size of 3 and a stride of 2, and a ReLU activation function. The output layer in the encoder is a fully connected flatten layer with 800 neurons (400 mean “” and 400 variance “”) without activation function. By resampling from the Gaussian distribution with the mean “” and the variance “”, we obtain 400 latent variables “”. The reason why latent variables in the cVAE is more than the VAE is that the cVAE needs to consider more complex spatial characteristic. The decoder of the cVAE is composed of an input layer with 400 latent variables “”. A fully connected layer “FC” with 1,568 neurons is followed by “”. After reshaping the outputs of “FC” into three dimension, we feed the data into two transposed convolution layers (“Conv3” and “Conv4”) and one output layer “Output”. The filters in these deconvolution layers are 64, 32 and 1 with the size of 3 and the stride of 2. After excluding the output layer “Output” without activation functions, there exist two ReLU activation functions followed by “Conv3” and “Conv4”, respectively.

We train the VAE and the cVAE over epochs using the Adam optimizer, a learning rate of , and a mini batch size of 256. To train the VAE and the cVAE, we use the sum of binary cross-entropy (see Eq. 17) and the Kullback-Leibler (KL) divergence (see Eq. 18) as the loss function [18]. In Eq. 17-18, and represent each raw configuration with one/two dimension and its prediction. As shown in Fig. S. 6, the loss function, the binary cross-entropy, and the KL divergence vary with epochs for the VAE and the cVAE. Here we focus on the minimum value of loss function. From Fig. S. 6, the optimal cVAE performs better than the optimal VAE.

| (17) |

| (18) |

For a more visual comparison, we show the snapshots of the raw configurations , and compare them to the VAE-generated and cVAE-generated configurations in Fig. 9. As we can see from Fig. 9, the configurations from the Monte Carlo simulation, the VAE and the cVAE are very close to each other.

After reconstructing the configurations ( and ) through the VAE and the cVAE and pouring and into CNNs-I, CNNs-II, and CNNs-III, we can detect , , and the permeability . Fig. 10 shows the relationships between the permeability values and the statistic average from the outputs in CNNs-I and CNNs-II, respectively. From the red and purple lines in Fig. 10, by using and , we can obtain the two order parameters as well. From the Fig. 11, the raw permeability is remarkably correlated linearly with its prediction through the VAE/cVAE and CNNs-III. Thus, subtle change in the raw configurations does not effect the catch of physical features.

IV.4 Identify the characteristic of the first principal component by one PCA

This section will discuss how to capture the physic characteristics without labels. Various studies suggest to use PCA to identify order parameters [15, 8, 14, 17]. Therefore, we try to verify the feasibility of PCA in capturing order parameter on the percolation model.

First, we perform the PCA to the raw configuration . Fig. 12 exhibit the normalized eigenvalues . is also called as the explained variance ratios. The most noteworthy information in Fig. 12 is that there is one dominant principal component , whcih is the largest one among and much larger than other explained variance ratios. Thus, plays a key role when dealing with dimension reduction. Based on , the raw configuration are mapped to another matrix .

In Fig 13, we construct the matrix by the first two eigenvalues and their eigenvectors. We use 40,000 blue scatter points to plot the the relationship between and on 40 permeability values ranging from 0.41 to 0.8. Just like in Fig. 12, there is only one dominant representation on the percolation model due to the first principal component is much more important than the second principal component.

Having analysed the importance of the first principal component, we now move on to discuss the meaning of the first principal component. In Fig. 14, we focus on the quantified first principal component as a function of the permeability and the two order parameters, i.e., and . From Fig. 14, we can see that there is a strong linear correlation between the quantified first principal component and the permeability . And the relationships between the quantified first principal component and two order parameters ( and ) are similar to the relationships between the permeability values and the two order parameters. Our results are significant different from former study which demonstrates that the quantified first principal component can be taken as order parameter by data preprocessing [17]. A possible explanation may be that [17] evaluates the first principal component by removing the raw dataset with certain attributes on the percolation model. Therefore, we assume that the quantified first principal component obtained by PCA may not be taken as order parameter for various physical models. Another possible explanation is that, under certain conditions, the quantified first principal component can be regarded as order parameter.

IV.5 Identify physic characteristics by one -means and one CNNs

From Sec. IV.4, no significant corresponding is found between the normalized quantified first principal component and the two order parameters. Here we eagerly wonder how to identify order parameter. And another physical characteristics we desire to explore is the critical transition point. So we try to find a way to capture order parameter from the raw configurations and their permeability on the percolation model.

As it is well-known, for the two-dimensional percolating configurations, the critical transition point is equal to 0.593 in theoretical calculation. Though the critical transition point is already known, we wonder that whether or not the critical transition point can be found by machine. To do this, we first use a cluster analysis algorithm named -means [31] to separate the raw configurations into two categories. The minimum and maximum value of their permeability are 0.55 and 0.80 in the first cluster, and 0.41 and 0.65 in the second cluster. Note that there is an overlapping interval between 0.55 and 0.65 for the two categories. According to the overlapping interval, we hypothesize that the critical threshold is set to be 11 values, i.e., 0.55, 0.56, , and 0.65. For each raw configuration, if the permeability is smaller than , there will exist no percolating cluster and its label will be marked as 0; otherwise, there will exist at least one percolating cluster and its label will be marked as 1.

To detect physic characteristics, we use the fourth kinds of CNNs (CNNs-IV) with the structure in Fig. 2 for 11 preset critical thresholds from 0.55 to 0.65. Note that the output layer use a sigmoid activation function expressed as , to make sure the outputs are between 0 and 1. Another critical thing to pay attention is that the He normal distribution initializer [32] and L2 regularization [33] are used in the layer of “Conv1”, “Conv2”, and “FC” on CNNs-IV. To avoid overfiting, in addition to L2 regularization, we also use a dropout layer with a dropout rate of 0.5 on “FC”. A Mini batch size of 512 and a learning rate of are chosen while training CNNs-IV. The binary cross-entropy (see Eq. 17) is taken as the loss function on CNNs-IV. Another metric, used to measure the performance of CNNs-IV, is the binary accuracy (see Eq. 19). The other hyper-parameters are the same as the CNNs-I, CNNs-II, and CNNs-III.

| (19) |

Turning now to the experimental evidence on the inference ability of capturing relevant physic features. After obtaining the well-trained CNNs-IV with high accuracy (see Fig. S. 7), we obtain the outputs by pouring the raw 40,000 configurations into CNNs-IV. The statistical average of the outputs is calculated according to the 40 independent permeability values . The results of the correlational analysis are shown in Fig. 15. We set the horizontal dashed line as the threshold value 0.5. Hence, each curve is divided into two parts by the horizontal dashed line. The lower part indicates that the percolation system is not penetrated; while the upper part implies that the percolation system is penetrated. The crosspoint, where the horizontal dashed line and the red curve are intersected, has a permeability value of 0.594, which is very close to the theoretical value of 0.593 that is marked by the vertical dashed line in Fig. 15. Remarkably, the critical transition point can be calculated by CNNs-IV with the preset value of 0.60 for the sampling interval of 0.01. Therefore, CNNs-IV with the preset threshold value of 0.60 is the most effective model. In further studies, the preset threshold value may need to be enhanced by smaller sampling intervals for higher precision.

V Conclusions

As machine learning approaches have become increasingly popular in phase transitions and critical phenomena, predecessors have pointed out that these approaches can capture physic characteristics. However, previous studies about identifying physical characteristics, especially order parameter and critical threshold, need to be further mutually validated. To highlight the possibility of effectiveness by machine learning methods, we conduct a much more comprehensive research than predecessors to reassess the machine learning approaches in phase transitions and critical phenomena.

Our results show the effectiveness of machine learning approaches in phase transitions and critical phenomena than previous researchers. Precisely, we use CNNs-I, CNNs-II and CNNs-III to simulate the two order parameters, and the permeability values. To identify whether or not CNNs-I and CNNs-II are robust against noise, we add a proportion of the noises for the two order parameters. To validate the robustness of CNNs-I, CNNs-II and CNNs-III, we also use VAE and cVAE to generate new configurations that are slightly different from their raw configurations. After pouring the new configurations into the CNNs-I, CNNs-II, and CNNs-III, we achieve the results that these models are robust against noise.

However, after we use PCA to reduce the dimension of the raw configurations and make a statistically significant linear correlation between the first principal component and the permeability values, no statistically significant linear correlations are found between the first principal component and the two order parameters. Clearly, the first principal component fails to be regarded as an order parameter in the two-dimensional percolation model. To identify order parameter, we use the fourth kinds of CNNs, i.e., CNNs-IV. The results show that CNNs-IV can identify new order parameter when the preset threshold value is 0.60. Surprisingly, we find that the critical transition point value is 0.594 by CNNs-IV.

Although these machine learning methods are valid to explore the physical characteristics in the percolation model, the current study may still have some inevitable limitations that prevent us from making an overall judgement by these methods on the other models of phase transitions and critical phenomena. In other words, it must be acknowledged that this research is based on the two-dimensional percolation model.We are not sure of the usefulness of applying our methods to the other models. Consequently, our methods in this study may open an opportunity to other models on phase transitions and critical phenomena for further research.

Acknowledgements.

The authors gratefully thank Yicun Guo for revising the manuscript. We also thank Jie-Ping Zheng and Li-Ying Yu for helpful discussions and comments. The work of S.Cheng, H.Zhang and Y.-L.Shi is supported by Joint Funds of the National Natural Science Foundation of China (U1839207). The work of F.He and K.-D.Zhu is supported by the National Natural Science Foundation of China (No.11274230 and No.11574206) and Natural Science Foundation of Shanghai (No.20ZR1429900).References

- Tanaka and Tomiya [2017] A. Tanaka and A. Tomiya, Detection of phase transition via convolutional neural networks, Journal of the Physical Society of Japan 86, 063001 (2017).

- Carrasquilla and Melko [2017] J. Carrasquilla and R. G. Melko, Machine learning phases of matter, Nature Physics 13, 431 (2017).

- Van Nieuwenburg et al. [2017] E. P. Van Nieuwenburg, Y.-H. Liu, and S. D. Huber, Learning phase transitions by confusion, Nature Physics 13, 435 (2017).

- Shiina et al. [2020] K. Shiina, H. Mori, Y. Okabe, and H. K. Lee, Machine-learning studies on spin models, Scientific reports 10, 1 (2020).

- Suchsland and Wessel [2018] P. Suchsland and S. Wessel, Parameter diagnostics of phases and phase transition learning by neural networks, Physical Review B 97, 174435 (2018).

- Huembeli et al. [2018] P. Huembeli, A. Dauphin, and P. Wittek, Identifying quantum phase transitions with adversarial neural networks, Physical Review B 97, 134109 (2018).

- Wetzel and Scherzer [2017] S. Wetzel and M. Scherzer, Machine learning of explicit order parameters: From the ising model to su(2) lattice gauge theory, Physical Review B 96 (2017).

- Wetzel [2001] S. J. Wetzel, Unsupervised learning of phase transitions: From principal component analysis to variational autoencoders, Physical Review E 96, 022140 (2001).

- Deng et al. [2017] D.-L. Deng, X. Li, and S. D. Sarma, Machine learning topological states, Physical Review B 96, 195145 (2017).

- Bachtis et al. [2020] D. Bachtis, G. Aarts, and B. Lucini, Mapping distinct phase transitions to a neural network, Physical Review E 102, 053306 (2020).

- Zhao and Fu [2019] X. Zhao and L. Fu, Machine learning phase transition: An iterative proposal, Annals of Physics 410, 167938 (2019).

- Ni et al. [2019] Q. Ni, M. Tang, Y. Liu, and Y.-C. Lai, Machine learning dynamical phase transitions in complex networks, Physical Review E 100, 052312 (2019).

- Ch’ng et al. [2018] K. Ch’ng, N. Vazquez, and E. Khatami, Unsupervised machine learning account of magnetic transitions in the hubbard model, Physical Review E 97, 013306 (2018).

- Wetzel [2017] S. J. Wetzel, Discovering phases, phase transitions, and crossovers through unsupervised machine learning: A critical examination, Physical Review E 95, 062122 (2017).

- Wang [2016] L. Wang, Discovering phase transitions with unsupervised learning, Physical Review B 94, 195105 (2016).

- Zhang et al. [2018] W. Zhang, J. Liu, and T.-C. Wei, Machine learning of phase transitions in the percolation and xy models, Physical Review E 99 (2018).

- Yu and Lyu [2020] W. Yu and P. Lyu, Unsupervised machine learning of phase transition in percolation, Physica A: Statistical Mechanics and its Applications 559, 125065 (2020).

- D’Angelo and Böttcher [2020] F. D’Angelo and L. Böttcher, Learning the ising model with generative neural networks, Physical Review Research 2, 023266 (2020).

- Janet et al. [2020] J. P. Janet, H. Kulik, Y. Morency, and M. Caucci, Machine Learning in Chemistry (2020).

- Plant and Barton [2020] D. Plant and A. Barton, Machine learning in precision medicine: lessons to learn, Nature Reviews Rheumatology 17 (2020).

- Hull [2021] I. Hull, Machine learning and economics (2021) pp. 61–86.

- Buchanan [2020] M. Buchanan, Machines learn from biology, Nature Physics 16, 238 (2020).

- Poorvadevi et al. [2020] R. Poorvadevi, G. Sravani, and V. Sathyanarayana, An effective mechanism for detecting crime rate in chennai location using supervised machine learning approach, International Journal of Scientific Research in Computer Science, Engineering and Information Technology , 326 (2020).

- Cheng et al. [2021] S. Cheng, X. Qiao, Y. Shi, and D. Wang, Machine learning for predicting discharge fluctuation of a karst spring in north china, Acta Geophysica , 1 (2021).

- Kashiwa et al. [2019] K. Kashiwa, Y. Kikuchi, and A. Tomiya, Phase transition encoded in neural network, Progress of Theoretical and Experimental Physics 2019, 083A04 (2019).

- Arai et al. [2018] S. Arai, M. Ohzeki, and K. Tanaka, Deep neural network detects quantum phase transition, Journal of the Physical Society of Japan 87, 033001 (2018).

- Xu et al. [2019] R. Xu, W. Fu, and H. Zhao, A new strategy in applying the learning machine to study phase transitions, arXiv preprint arXiv:1901.00774 (2019).

- Kingma and Welling [2014] D. P. Kingma and M. Welling, Auto-encoding variational bayes, CoRR abs/1312.6114 (2014).

- Agarap [2018] A. F. Agarap, Deep learning using rectified linear units (relu), arXiv preprint arXiv:1803.08375 (2018).

- Kingma and Ba [2014] D. P. Kingma and J. Ba, Adam: A method for stochastic optimization, arXiv preprint arXiv:1412.6980 (2014).

- Hartigan and Wong [1979] J. A. Hartigan and M. A. Wong, Algorithm as 136: A k-means clustering algorithm, Journal of the royal statistical society. series c (applied statistics) 28, 100 (1979).

- [32] https://www.tensorflow.org/api_docs/python/tf/keras/initializers/HeNormal.

- L [2] https://www.tensorflow.org/api_docs/python/tf/keras/regularizers/L2.