
Machine Learning in NextG Networks via Generative Adversarial Networks

Abstract

Generative Adversarial Networks (GANs) are Machine Learning (ML) algorithms that can address competitive resource allocation problems as well as the detection and mitigation of anomalous behavior. In this paper, we investigate their use in next-generation (NextG) communications within the context of cognitive networks to address i) spectrum sharing, ii) detecting anomalies, and iii) mitigating security attacks. GANs have the following advantages. First, they can learn and synthesize field data, which can be costly, time consuming, and nonrepeatable to collect. Second, they enable pre-training classifiers by using semi-supervised data. Third, they facilitate increased resolution. Fourth, they enable the recovery of corrupted bits in the spectrum. The paper provides the basics of GANs, a comparative discussion on different kinds of GANs, performance measures for GANs in computer vision and image processing as well as wireless applications, a number of datasets for wireless applications, performance measures for general classifiers, a survey of the literature on GANs for i)–iii) above, and future research directions. As a use case of GANs for NextG communications, we show that a GAN can be effectively applied for anomaly detection in signal classification (e.g., user authentication), outperforming the autoencoder, another state-of-the-art ML technique.

Generative adversarial networks (GANs), conditional GANs, generative modeling, spectrum sharing, anomaly detection, outlier detection, wireless security, unsupervised learning.

1 Introduction

The number of radios and their use are increasing exponentially. Over the last several years, wireless data transmission has grown by approximately 50% per year [1, 2]. This increase in demand has largely been driven by novel applications such as streaming video and social media on smart devices, and has strained the ability of the limited available wireless spectrum to support it. The coming era of the Internet-of-Things (IoT) and its anticipated goal of connecting tens of billions of devices wirelessly will make this situation even more challenging for next-generation wireless communication networks (NextG). Classical methods for medium access control and physical layer operation, designed to handle a relatively small number of users in a given space-time-frequency resource, will soon be overwhelmed and alternatives must be sought.

Wireless systems of the future are anticipated to share the available spectrum rather than operating with exclusively assigned frequencies. It is generally expected that this sharing will be across all possible dimensions, including space, time, and frequency, and will involve a huge quantity of interactions among a very large number of radios. Flexible methods will be needed to efficiently use the limited available resources, quickly adapting themselves to changing environments and Quality-of-Service (QoS) requirements. This will be true even as wireless systems move to higher frequencies (such as the anticipated mmWave and THz bands) where available bandwidth is more abundant. Due to shorter propagation distances and the increased prevalence of blockages, operation at these frequencies will require networks to continually reconfigure themselves via handoffs, cooperative relaying, beamforming, and so on. Given the exponentially increasing demand for wireless connectivity and the inevitable increase in the complexity of the networks that will supply it, it is no surprise that research is turning towards data-driven Artificial Intelligence (AI) and Machine Learning (ML) techniques that address these issues by learning from spectrum data and solving complex tasks.

The Defense Advanced Research Projects Agency (DARPA) recently built a testbed named Colosseum for testing shared-spectrum communication systems [1, 2]. The vision is to emulate more than 65,000 unique interactions, such as text messages or video streams, among 128 radios at once. This testbed is built with the full expectation that the type of complicated spectrum sharing scenario described above is likely and can be efficiently managed by AI [1, 2].

There are two possible ways of sharing spectrum. One is an open access model, which is similar to an unlicensed band, such as the Industrial, Scientific, and Medical (ISM) band. A more desirable model from an interference viewpoint is known as the hierarchical access model, or the cognitive radio network. When the cognitive radio concept was first proposed, learning was already considered to be essential for its operation [3, 4]. There are three reasons why ML is important for cognitive radio. First, as discussed in [1, 2], due to the expected numbers of wireless devices and the complexity of the services they provide, the learning process suggested in [3, 4] cannot be managed by classical model-based techniques, and will need to employ ML algorithms. Second, for complex radios with many inputs and many outputs, a very large number of actions will be necessary to account for all possible radio states. In the past, most cognitive radio research focused on radios that are hard-coded to manage these states. ML provides an opportunity for a learning engine to auto-generate these actions, rather than pre-programming them [5]. Third, ML provides a framework in which to incorporate memory from past actions and results into current operations, and thus to adapt more quickly to changing conditions in the future [5].

As ML techniques rely on the availability of representative data, it has become imperative to generate and curate data samples for training and testing of spectrum operations. To that end, synthetic data can be generated to help with ML training and testing processes when there is an insufficient number of real data samples. Building upon recent advances in deep neural networks, Generative Adversarial Networks (GANs) were originally introduced to generate synthetic data that cannot be distinguished from real data [6]. One direct use case of GANs is to generate synthetic training data samples for data augmentation purposes to strengthen the training process of ML algorithms. Later, the use of GANs was extended to support domain adaptation, defend against adversarial attacks, enable unsupervised learning of data, detect anomalies embedded in rich data representations, and support security applications. As far as defending against adversarial attacks is concerned, GANs are capable of generating adversarial samples. These can be used for network attacks that the ML detector may miss. Alternatively, they can be added to the training set to help the ML detector adjust its classification boundary in order to acquire better detection capabilities.

While these use cases also apply in wireless communication systems, their effective application hinges upon careful consideration of the characteristics of wireless communications:

1. Training data for wireless communications is typically limited. There are sensing limitations regarding sampling rate (due to hardware effects) and time spent for sensing (balancing the sensing-communication tradeoff). Therefore, GANs can be used to augment the training data for wireless applications, such as spectrum sensing and wireless signal detection and classification (e.g., jammer identification) when the Radio Frequency (RF) data typically involves uncertainties due to noise, channel, traffic, and interference effects [7].

2. Wireless data is heavily environment dependent. For example, the training data collected in a laboratory environment (e.g., indoor) does not necessarily match channel characteristics in test time (e.g., outdoor). Therefore, the adaptation of test or training data to channel conditions raises the need for domain adaptation with GANs [8].

3.

4. Wireless communication systems are subject to attacks due to the open nature of wireless spectrum and anomalies due to hardware impairments and intended/unintended interference. GANs can be used to protect wireless communications against attacks and detect anomalies.

The unique characteristics of wireless communication systems that need to be considered for the GAN applications are summarized as follows.

1. The input to the GAN is (real) RF data that is highly complex and dynamic, subject to noise, channel, traffic, and interference effects. Statistical modeling may not be effective to represent the spectrum data. Therefore, the complex structures of deep neural networks in the GAN formulation can be effectively used to represent the spectrum data [11, 12, 13].

2. The format of the RF data is not universal (like pixels in computer vision) and may vary from time series of in-phase/quadrature components (I/Qs) or Received Signal Strength Indicators (RSSIs) to frequency domain representations.

3. The generator and the discriminator of the GAN may need to be distributed at transmitter and receiver, respectively, as data transmission and the corresponding reception need to be separated through a wireless channel in online applications. In this context, the message exchange between the generator and the discriminator may be executed over noisy wireless channels.

4.

Contributions of the paper are as follows. Sec. 2 introduces the basics of GANs and a number of alternative GAN structures, together with their underlying mathematics. GAN structures were originally developed for computer vision and image processing. This paper is on adopting them for applications in NextG wireless communications. To that end, Sec. 3 first discusses performance measures used in computer vision and image processing. It introduces performance measures that can be used in wireless applications since the former measures cannot be used in this field. It introduces public datasets that can be employed in wireless applications. It also discusses performance measures for general classifiers, including wireless classifiers. It discusses the use of GANs to extend a given dataset, called data augmentation. Furthermore, Sec. 3 introduces a number of GAN techniques that have been used in spectrum sharing, anomaly detection, and security for NextG wireless. A summary of these is available in Table 7. Sec. 4 presents an anomaly detection problem for the modulation classification task and presents our results where we solve this problem using two unsupervised deep learning methods, a GAN-based and an autoencoder-based algorithm. We also provide interpretations of the results, tradeoffs between the two approaches, and implementation details. Sec. 5 provides a discussion of a number of potential future research directions, including their challenges. Finally, Sec. 6 provides our conclusions.

2 Preliminaries on Generative Adversarial Networks

GANs were introduced in 2014 [6, 14], and belong to the class of deep-learning-based generative models. Generative modeling is an unsupervised ML task that involves automatically discovering the regularities or patterns in input data in such a way that the model can be used to generate new samples that cannot be discriminated from the original dataset. Yann LeCun, Facebook’s Director of Artificial Intelligence Research, famously said in June 2016 that “GANs and the variations that are now being proposed is the most interesting idea in the last 10 years in ML, in my opinion.” Given the many subsequent applications of GANs in different fields, some of which we discuss below, it is difficult to argue with this opinion.

GANs train a generative model by decomposing the problem into two sub-models, as depicted in Fig. 1. The first is a generator network trained to generate new examples, while the second is a discriminator network that tries to classify examples as either real (from the domain) or fake (generated). The two models are trained jointly, and training continues until the generator network learns to synthesize plausible samples that cannot be distinguished from real data.

GANs and their variations have been used in a variety of applications. A few examples include creation of synthetic human faces [15, 16, 17] and transforming images from one domain (e.g., real scenery) to another domain (paintings by famous painters) [18]. Other examples of image-to-image translation in [18] include conversion of horse pictures to pictures of zebras and vice versa, pictures of summer scenery to pictures of the same scenery in winter, etc. Further applications include face aging [19], creation of super-resolution images from those of low resolution [20], music generation [21], audio synthesis [22], and video synthesis [23]. More relevant to this paper, GANs have been applied to problems in spectrum sensing and security in wireless networks, e.g., [8, 24, 25, 9, 26, 27, 11], and these will be discussed in more detail below. A list of the many different applications of GANs is available in [28].

To describe how GANs are trained, let $x$ belong to the manifold of the real input data with distribution $p_{\text{data}}(x)$, and let $z$ belong to the latent or noise prior space with distribution $p_z(z)$. Further, let $G$ be a differentiable function representing the generator with input $z$, and let $D$ be a differentiable function representing the discriminator with input $x$ or $G(z)$, where the output of $D$ is mapped to the interval $[0, 1]$. Now consider the function

$$V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}(x)}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z(z)}\left[\log\left(1 - D(G(z))\right)\right]. \tag{1}$$

The first term increases when the real samples are more correctly classified, while the second term increases as the discriminator more successfully identifies the generated samples as fake. Thus, the discriminator $D$ acts to maximize (1). On the other hand, $G$ attempts to minimize (1). This is equivalent to minimizing just the second term, and doing so increases the likelihood that the discriminator is fooled. This leads to a minimax optimization in which $G$ and $D$ work against each other to achieve the equivalent of a Nash equilibrium point [6, 29]:

$$\min_{G} \max_{D} V(D, G). \tag{2}$$

The two optimizations in (2) are carried out by employing neural networks and backpropagation via gradient ascent and gradient descent, which is possible since $D$ and $G$ are differentiable.
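As a brief worked step, a standard result from [6] makes the equilibrium of the minimax problem concrete. The symbol $p_g$, denoting the distribution of the generated samples $G(z)$, and the abbreviation JSD for the Jensen-Shannon divergence are introduced here only for illustration.

```latex
% For a fixed generator G, maximizing the value function pointwise over D(x) gives
D^{*}(x) = \frac{p_{\text{data}}(x)}{p_{\text{data}}(x) + p_{g}(x)} .

% Substituting D^{*} back into the value function yields
V(D^{*}, G) = -\log 4 + 2\,\mathrm{JSD}\!\left(p_{\text{data}} \,\middle\|\, p_{g}\right) .

% Since JSD >= 0, with equality if and only if p_g = p_data, the minimum over G
% equals -log 4 and is attained when D^{*}(x) = 1/2 for every x.
```

In other words, at the equilibrium the discriminator can do no better than guessing, which matches the informal description of a generator whose samples cannot be distinguished from real data.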

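Given a batch of training data $\{x_1, \ldots, x_m\}$ and samples $\{z_1, \ldots, z_m\}$ from the latent space, we can convert the optimization expressed via (1) and (2) into the optimization of two cost functions, for $D$ and $G$, respectively, as

$$\max_{D} \; \frac{1}{m} \sum_{i=1}^{m} \left[\log D(x_i) + \log\left(1 - D(G(z_i))\right)\right], \qquad \min_{G} \; \frac{1}{m} \sum_{i=1}^{m} \log\left(1 - D(G(z_i))\right). \tag{3}$$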

Details on implementing (3) with a neural network and gradient ascent and descent to train the GAN can be found in [6, Algorithm 1]. Note that by substituting the criterion in (1)–(2) with that in (3), an implicit assumption of ergodicity, or ensemble averages being equal to time averages, is made. This assumption, common in signal processing and communications, will be made again in the sequel.
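The batch form of the two cost functions can be sketched in a few lines. This is a minimal illustration, not the training procedure of [6]: the function names are hypothetical, and the discriminator outputs are passed in as plain lists of probabilities rather than produced by a neural network.

```python
import math

def discriminator_cost(d_real, d_fake):
    """Batch estimate of the discriminator objective (to be maximized).

    d_real: outputs D(x_i) on real samples, each strictly between 0 and 1.
    d_fake: outputs D(G(z_i)) on generated samples, each strictly between 0 and 1.
    """
    real_term = sum(math.log(d) for d in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - d) for d in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_cost(d_fake):
    """Batch estimate of the generator objective (to be minimized)."""
    return sum(math.log(1.0 - d) for d in d_fake) / len(d_fake)

# A discriminator that separates real from fake well scores higher than one
# that outputs 1/2 everywhere (the value expected at equilibrium).
confident = discriminator_cost([0.9, 0.8], [0.1, 0.2])
confused = discriminator_cost([0.5, 0.5], [0.5, 0.5])
```

The generator cost decreases as the discriminator assigns higher probabilities to generated samples, which is the sense in which the generator tries to fool the discriminator.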

Additional information that is correlated with the input data, such as class labels, can be used to improve GAN performance, either in the form of more stable or faster training, or generated images that have better quality. Such conditional GANs (CGANs) are trained in such a way that both the generator and the discriminator models are conditioned on the class label, so that when the trained generator is used as a standalone model to generate samples in the domain, samples of a given type can be generated [30]. For example, in the synthesis of faces one could focus on generating a female face, or one could convert a summer scene into a winter scene, etc. Popular face aging applications are also based on this principle. To describe this approach, let $y$ represent extra information on which the generator and discriminator are conditioned. One can perform the conditioning by feeding $y$ into both the discriminator and generator as an additional input layer. As mentioned above, the information could be in the form of a class label, leading to a so-called class-conditional GAN, or some other kind of input such as a single image, in the case the GAN is performing an image-to-image translation task. Letting $y$ represent a potentially multidimensional label from a distribution $p_y(y)$, the optimization in (1) and (2) becomes

$$\min_{G} \max_{D} V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}(x)}\left[\log D(x \mid y)\right] + \mathbb{E}_{z \sim p_z(z)}\left[\log\left(1 - D(G(z \mid y))\right)\right] \tag{4}$$

for CGANs, with (3) updated in a straightforward fashion based on (4).

A number of other GAN formulations exist beyond those mentioned above. For example, in the Least-Squares GAN (LSGAN) [31], the objective functions become

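$$\begin{aligned} \min_{D} V_{\text{LSGAN}}(D) &= \tfrac{1}{2}\, \mathbb{E}_{x \sim p_{\text{data}}(x)}\left[(D(x) - b)^2\right] + \tfrac{1}{2}\, \mathbb{E}_{z \sim p_z(z)}\left[(D(G(z)) - a)^2\right], \\ \min_{G} V_{\text{LSGAN}}(G) &= \tfrac{1}{2}\, \mathbb{E}_{z \sim p_z(z)}\left[(D(G(z)) - c)^2\right], \end{aligned} \tag{5}$$

where $b$ and $a$ represent the labels for real data and fake data, respectively, and $c$ is the value that $G$ wants $D$ to believe for fake data. Reference [27] employs an LSGAN with a particular choice of these constants. Another variation is known as the Wasserstein GAN (WGAN) [32], which employs the loss function

$$\min_{G} \max_{D \in \mathcal{D}} \; \mathbb{E}_{x \sim p_{\text{data}}(x)}\left[D(x)\right] - \mathbb{E}_{z \sim p_z(z)}\left[D(G(z))\right], \tag{6}$$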

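where $\mathcal{D}$ is the set of 1-Lipschitz functions. Reference [33] removes this condition but adds an additional term for stability, repeatability, and predictable behavior during the training process. Reference [11] employs a WGAN with the additional term proposed in [33]. An alternative way to enforce the Lipschitz constraint is to add a penalty on the gradient norm for random samples $\hat{x} \sim p_{\hat{x}}$, and the updated loss function, which the discriminator minimizes, can be expressed as

$$L = \mathbb{E}_{z \sim p_z(z)}\left[D(G(z))\right] - \mathbb{E}_{x \sim p_{\text{data}}(x)}\left[D(x)\right] + \lambda\, \mathbb{E}_{\hat{x} \sim p_{\hat{x}}}\left[\left(\left\|\nabla_{\hat{x}} D(\hat{x})\right\|_2 - 1\right)^2\right], \tag{7}$$

where $\lambda$ is the penalty coefficient. This approach is known as WGAN with gradient penalty (WGAN-GP) [33] and it provides stability and predictable behavior during the GAN training process.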
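To make the gradient penalty concrete, the following sketch evaluates a WGAN-GP style discriminator loss on a small batch. It is an illustration under several assumptions: the function names are hypothetical, the discriminator is any Python callable on a list of floats, the gradient is estimated by central differences instead of backpropagation, the penalty points are drawn uniformly on the segment between a real and a generated sample, and the penalty coefficient defaults to 10.

```python
import math
import random

def gradient_norm(critic, x, eps=1e-6):
    """Central-difference estimate of the l2 norm of the critic's gradient at x."""
    squared = 0.0
    for k in range(len(x)):
        up, down = list(x), list(x)
        up[k] += eps
        down[k] -= eps
        squared += ((critic(up) - critic(down)) / (2.0 * eps)) ** 2
    return math.sqrt(squared)

def wgan_gp_critic_loss(critic, real, fake, lam=10.0, seed=0):
    """Mean critic output on fake minus real, plus lam times the gradient penalty.

    real and fake are equally long lists of samples (lists of floats).
    """
    rng = random.Random(seed)
    wasserstein_term = (sum(critic(f) for f in fake) / len(fake)
                        - sum(critic(r) for r in real) / len(real))
    penalty = 0.0
    for r, f in zip(real, fake):
        t = rng.random()  # random point on the segment between r and f
        x_hat = [t * a + (1.0 - t) * b for a, b in zip(r, f)]
        penalty += (gradient_norm(critic, x_hat) - 1.0) ** 2
    return wasserstein_term + lam * penalty / len(real)

def linear_critic(w):
    """Toy critic D(x) = w . x, whose gradient is w everywhere."""
    return lambda x: sum(wi * xi for wi, xi in zip(w, x))

real = [[1.0, 1.0], [2.0, 0.0]]
fake = [[0.0, 0.0], [0.0, 1.0]]
loss_unit = wgan_gp_critic_loss(linear_critic([0.6, 0.8]), real, fake)   # gradient norm 1
loss_steep = wgan_gp_critic_loss(linear_critic([3.0, 4.0]), real, fake)  # gradient norm 5
```

The critic with unit gradient norm pays no penalty, whereas the steeper critic is heavily penalized even though it separates the two batches by a larger margin.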

2.1 Anomaly Detection by Using GANs

One area of particular importance where GANs have been applied is anomaly detection, which is the task of discovering anomalies, or patterns in the data that do not conform to “normal behavior.” The use of GANs is based on modeling normal behavior using the adversarial training process; an anomaly score based on this model can then be calculated and employed for anomaly detection. Several GAN-based approaches for anomaly detection have been published, all of which employ the idea of Adversarial Feature Learning [34]. This idea makes use of a novel architecture referred to as bidirectional GANs, or BiGANs.

BiGANs add an inverse mapping from the data space to the latent distribution, from which regular GANs generate artificial samples. The overall model is depicted in Fig. 2. A BiGAN includes an encoder $E$ which maps data $x$ to latent representations $z$, in addition to the generator $G$ employed by the standard GAN architecture [34]. The BiGAN discriminator $D$ discriminates not only in data space ($x$ versus $G(z)$), but jointly in both the data and latent space (tuples $(x, E(x))$ versus $(G(z), z)$), where the latent component is either an encoder output $E(x)$ or a generator input $z$.

The two modules $E$ and $G$ do not directly “communicate” with one another: the encoder never “sees” generator outputs ($E(G(z))$ is not computed), and vice versa. However, it is shown in [34] that the encoder and generator must learn to invert one another in order to fool the BiGAN discriminator. In other words, $E$ learns the inverse of the generator $G$. The encoder is a nonlinear parametric function in the same way as $G$ and $D$, and can be trained using a standard learning algorithm such as gradient descent. A latent representation $z$ may be thought of as a “label” for $x$, but one which came for “free,” without the need for supervision. As discussed in [34], BiGANs make no assumptions about the structure or type of data to which they are applied.

In this paper, we will focus on three GAN architectures that conceptually employ the inverse generator concept of BiGANs. These are the AnoGAN [35], Efficient GAN-Based Anomaly Detection (EGBAD) [36], and a GAN + autoencoder approach [37]. AnoGAN is a deep convolutional GAN that learns a manifold of normal variability, together with a scoring scheme that labels anomalies based on the mapping from the image to the latent space. For results on medical imaging data, see [35]. Given a query image $x$, the algorithm iterates through points in the latent space to find a representation $G(z)$ that is close to $x$. The algorithm begins by choosing a random point $z_1$ in the latent space, and generates a data sample $G(z_1)$. The algorithm then proceeds through a number of points $z_2, \ldots, z_\Gamma$ based on the following loss function:

$$L(z_\gamma) = (1 - \lambda)\, L_R(z_\gamma) + \lambda\, L_D(z_\gamma), \tag{8}$$

where

$$L_R(z_\gamma) = \left\| x - G(z_\gamma) \right\|_1 \tag{9}$$

is the residual loss, and

$$L_D(z_\gamma) = \left\| f(x) - f(G(z_\gamma)) \right\|_1 \tag{10}$$

is the discrimination loss, where $f(\cdot)$ is the output of one of the layers of the multilayer perceptron and $\lambda$ is an interpolation coefficient. The residual loss enforces similarity between the query data $x$ and the generated data $G(z_\gamma)$, while the discrimination loss constrains the generated data $G(z_\gamma)$ to lie near the learned manifold [38]. For each $x$, $z_\Gamma$ is calculated by iteratively minimizing (8) via $\gamma = 1, 2, \ldots, \Gamma$ backpropagation steps. The iteration is on $z_\gamma$; the coefficients of $G$ and $D$ are not changed. The approach of using a linear combination of two loss functions (9) and (10) that employ the $\ell_1$ norm for training a deep neural network is different from the approaches discussed earlier for training GANs and CGANs. This approach originated with [39] and was adapted in [35].

Implementing the AnoGAN optimization over $\Gamma$ steps results in a relatively high computational load. The EGBAD approach [36] was developed as a more efficient alternative and is based on the methods in [34, 40], which enable learning an encoder by mapping input samples to their latent representation during adversarial training. GANomaly [37] is designed to be an improvement over [34, 35, 36] in terms of both performance and speed. The algorithm trains a generator network to learn the manifold of the input samples while at the same time training an autoencoder to encode the data in their latent representation. This approach uses a discriminator, a decoder, and two encoders, but the encoders have the same architecture. We describe this approach in more detail below.
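The AnoGAN latent search can be illustrated with a toy example, which also shows why the per-query iteration is costly. Everything in this sketch is an assumption made for illustration: the generator and the feature map are hand-written stand-ins for trained networks, the latent space is one-dimensional, the gradient with respect to the latent point is estimated by finite differences rather than backpropagation, and the weighting coefficient, step size, and number of steps are arbitrary choices.

```python
import random

def generator(z):
    """Toy stand-in for a trained generator: scalar latent z to a 2-D sample."""
    return [z, 2.0 * z]

def features(x):
    """Toy stand-in for the intermediate-layer features f(.) of the discriminator."""
    return [x[0] + x[1]]

def anogan_loss(x, z, lam=0.1):
    """Weighted sum of the l1 residual loss and the l1 discrimination loss."""
    g = generator(z)
    residual = sum(abs(a - b) for a, b in zip(x, g))
    discrimination = sum(abs(a - b) for a, b in zip(features(x), features(g)))
    return (1.0 - lam) * residual + lam * discrimination

def anomaly_score(x, steps=500, lr=0.01, eps=1e-4, seed=0):
    """Run `steps` gradient steps on z only; the generator is never updated."""
    z = random.Random(seed).uniform(-1.0, 1.0)  # random starting point
    for _ in range(steps):
        grad = (anogan_loss(x, z + eps) - anogan_loss(x, z - eps)) / (2.0 * eps)
        z -= lr * grad
    return anogan_loss(x, z)

normal_score = anomaly_score(generator(1.5))  # query on the toy manifold
anomalous_score = anomaly_score([3.0, 0.0])   # query off the toy manifold
```

A query that the generator can reproduce ends with a loss near zero, while a query off the learned manifold keeps a large loss no matter how long the search runs. Each query needs its own inner loop, which is the computational burden that encoder-based methods avoid.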

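As shown in Fig. 3–4, the generator network has three elements in series: an encoder $G_E$, a decoder $G_D$, and another encoder $E$. The combination of $G_E$ and $G_D$ forms an autoencoder that functions as the generator $G$. The encoder $E$ has the same structure as $G_E$. $G_E$ takes the data sample $x$ and generates an encoded version $z = G_E(x)$ in the latent space. Then $G$ employs $G_D$ to create $\hat{x} = G_D(z)$, which is a reconstructed form of $x$. Finally, $E$ is used to generate another point in the latent space, $\hat{z} = E(\hat{x})$. Three loss functions are defined, which are combined to generate the overall generator loss. The first is the adversarial loss $L_{\text{adv}}$,

$$L_{\text{adv}} = \mathbb{E}_{x \sim p_{\text{data}}(x)} \left\| f(x) - \mathbb{E}_{x \sim p_{\text{data}}(x)} f(G(x)) \right\|_2, \tag{11}$$

where $f(\cdot)$ is the output of an intermediate layer in the multi-layer perceptron. The second loss function is the contextual loss and is given by

$$L_{\text{con}} = \mathbb{E}_{x \sim p_{\text{data}}(x)} \left\| x - G(x) \right\|_1, \tag{12}$$

and the third is the encoder loss

$$L_{\text{enc}} = \mathbb{E}_{x \sim p_{\text{data}}(x)} \left\| G_E(x) - E(G(x)) \right\|_2. \tag{13}$$

Finally, the generator loss is given as

$$L = w_{\text{adv}} L_{\text{adv}} + w_{\text{con}} L_{\text{con}} + w_{\text{enc}} L_{\text{enc}}, \tag{14}$$

where $w_{\text{adv}}$, $w_{\text{con}}$, and $w_{\text{enc}}$ are coefficients between 0 and 1 that sum to 1. During the test stage, the model uses $L_{\text{enc}}$ given in (13) for scoring the abnormality of a given image:

$$A(x) = \left\| G_E(x) - E(G(x)) \right\|_1. \tag{15}$$

In order to make the anomaly score easier to interpret, [37] proposes to compute it for every sample $x_i$ in the test set to obtain the set of individual anomaly scores $\mathcal{S} = \{ s_i = A(x_i) \}$, and then apply a scaling to force the scores to lie within the range $[0, 1]$:

$$s_i' = \frac{s_i - \min(\mathcal{S})}{\max(\mathcal{S}) - \min(\mathcal{S})}. \tag{16}$$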
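The scoring and scaling steps at test time are simple enough to sketch directly. The function names below are hypothetical, the latent codes are given as plain lists rather than produced by trained encoders, and the score is taken as the $\ell_1$ distance between the two latent codes of a sample, which is an assumption about the norm used.

```python
def latent_anomaly_score(z, z_hat):
    """l1 distance between the latent code of a sample and that of its reconstruction."""
    return sum(abs(a - b) for a, b in zip(z, z_hat))

def scale_scores(scores):
    """Min-max scaling of a set of anomaly scores to the range [0, 1]."""
    lo, hi = min(scores), max(scores)
    if hi == lo:
        # All scores identical: nothing to rank, so map everything to 0.
        return [0.0 for _ in scores]
    return [(s - lo) / (hi - lo) for s in scores]

# Hypothetical latent codes (z, z_hat) for four test samples.
pairs = [
    ([0.0, 1.0], [1.0, 2.0]),
    ([1.0, 1.0], [3.0, 4.0]),
    ([2.0, 0.0], [0.5, 2.0]),
    ([0.0, 0.0], [4.0, 4.0]),
]
raw = [latent_anomaly_score(z, z_hat) for z, z_hat in pairs]
scaled = scale_scores(raw)
```

After scaling, the least anomalous test sample has score 0 and the most anomalous has score 1, so a single threshold in $[0, 1]$ can be swept to trade detection against false alarms.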

| Abbreviation | Application | Comment | Reference |
|---|---|---|---|
| GAN | General | Employs (1)-(3). Structure in Fig. 1. | [6], [14] |
| CGAN | General | Conditional GAN. Employs (4). | [30] |
| LSGAN | General | Least Squares GAN. Employs (5). | [31] |
| WGAN | General | Wasserstein GAN. Employs (6). | [32] |
| WGAN-GP | General | Gradient Penalty WGAN. Employs (7). | [33] |
| BiGAN | Anomaly Detection | Bidirectional GAN. Structure in Fig. 2. | [34] |
| AnoGAN | Anomaly Detection | Anomaly GAN. Employs (8)-(10). | [35] |
| EGBAD | Anomaly Detection | Efficient GAN-Based Anomaly Detection. | [36] |
| GANomaly | Anomaly Detection | GAN+Autoencoder. Employs (11)-(16). Structure in Fig. 3. | [37] |

Fig. 4 shows a comparison of the architectures of the three GAN-based anomaly detection algorithms discussed above. Experiments show that EGBAD is faster than AnoGAN, and that GANomaly achieves better performance and speed than EGBAD [37, 41]. Table 1 provides a summary of GAN versions used for computer vision and image processing applications.

3 Application of GANs to 5G and Beyond

In what follows, we will first discuss, in Sec. 3.1, measures of performance and specific issues that have to do with applying GANs first to computer vision and image processing and then to wireless applications. We will discuss public datasets available for enabling the training and benchmarking the performance of GANs in the wireless domain. In the same section, we will discuss measures for general classifier performance. We will specifically use these measures in wireless classifier applications. In Sec. 3.2, we will discuss the technique of data augmentation by using GANs, which can be employed in the following three sections. Then, in Sec. 3.3–Sec. 3.5, we will discuss fast, accurate, and robust methods for analyzing large quantities of spectrum data in order to identify opportunities for i) spectrum sharing, ii) detecting anomalies, and iii) mitigating security attacks, respectively.

3.1 Performance Measures for GANs and General Classifiers

We will now discuss performance measures for GANs, first for computer vision and image processing, then for wireless applications, and finally for general classifiers. Two measures are commonly used to assess the quality of images generated by GANs in computer vision and image processing applications: the Inception Score (IS) [38] and the Fréchet Inception Distance (FID) [42, 43]. IS offers a way to objectively and quantitatively evaluate the quality of images generated by a GAN. It is generally considered that this score is well-correlated with scores from human observers. Similarly, FID is used to evaluate the quality of images generated by GANs. FID compares the mean and covariance of the activations of one of the deeper layers in a Convolutional Neural Network (CNN) named Inception-v3, computed for real and for generated images. These layers are closer to output nodes and are believed to mimic human perception of similarity in images. Because both measures rely on a network trained on natural images, they are not meaningful for wireless applications and need to be replaced with measures that are meaningful in the context of wireless communications.

For wireless applications, the goal is to calculate the similarity of two probability densities. A commonly used measure towards that end is the Jensen-Shannon distance, which is the square root of the Jensen-Shannon divergence [44]. Jensen-Shannon divergence, on the other hand, is a symmetric form of the well-known Kullback-Leibler divergence [45].
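As a small illustration of these quantities, the sketch below computes the Jensen-Shannon divergence and distance for two discrete distributions, for example normalized histograms of real and generated signal features. The function names are hypothetical, logarithms are taken to base 2 (so the distance lies between 0 and 1), and both inputs are assumed to be probability vectors of equal length.

```python
import math

def kl_divergence(p, q):
    """Kullback-Leibler divergence D(p || q) in bits; terms with p_i = 0 contribute 0."""
    return sum(pi * math.log2(pi / qi) for pi, qi in zip(p, q) if pi > 0.0)

def js_divergence(p, q):
    """Jensen-Shannon divergence: average KL divergence to the mixture m = (p + q) / 2."""
    m = [(pi + qi) / 2.0 for pi, qi in zip(p, q)]
    return 0.5 * kl_divergence(p, m) + 0.5 * kl_divergence(q, m)

def js_distance(p, q):
    """Jensen-Shannon distance: square root of the divergence (clipped at 0 for rounding)."""
    return math.sqrt(max(0.0, js_divergence(p, q)))

identical = js_distance([0.5, 0.5], [0.5, 0.5])
disjoint = js_distance([1.0, 0.0], [0.0, 1.0])
partial = js_distance([0.7, 0.3], [0.4, 0.6])
```

Unlike the Kullback-Leibler divergence, the result is symmetric in its two arguments and remains finite even when the two distributions do not overlap.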

We note in passing that a growing number of public datasets are available to facilitate the training of GANs in the wireless domain and to benchmark their performance. Examples of such sets are RADIOML 2016.04C, RADIOML 2016.10A, RADIOML 2018.01A by DeepSig [46], RFMLS 2016a by DARPA [47], CBRS by NIST [48], and synthetic data generated by GNU Radio [49].

To measure the performance of any classifier, the Probability of Detection ($P_D$) and the Probability of False Alarm ($P_{FA}$) are calculated. Then, the Receiver Operating Characteristic (ROC) curve, which plots $P_D$ against $P_{FA}$, is drawn [50]. A commonly used measure is the Area Under the Curve of the ROC (AUROC). Accuracy (ACC) is defined as the ratio of the correct classifications to the total classifications. It can be calculated as Accuracy = (True Positives + True Negatives) / (True Positives + False Positives + True Negatives + False Negatives). Furthermore, the variables Precision, Recall, and F1 Score determine the success of a binary classification problem. Precision equals the ratio of the true positives to total (true and false) classified positives. Recall equals the ratio of the true positives to the sum of true positives and false negatives. Precision is a good measure to employ when the cost of a false positive is high, whereas Recall is a good measure when the cost of a false negative is high. F1 Score, the harmonic mean [51] of Precision and Recall [52], is employed when it is desired to have a balance between Precision and Recall. All three measures take values between 0 and 1. For a given classification system, it is desirable that each be as close to 1 as possible. Please see Sec. 4 for combinations of these quantities and the definitions of Density and Coverage in [53].
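The definitions above translate directly into code. The following sketch computes them from the four entries of a binary confusion matrix; the function name and the example counts are hypothetical, and all denominators are assumed to be nonzero.

```python
def classification_metrics(tp, fp, tn, fn):
    """Accuracy, Precision, Recall, F1 Score, and false alarm rate from raw counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)  # also the empirical probability of detection
    return {
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
        "precision": precision,
        "recall": recall,
        "f1": 2.0 * precision * recall / (precision + recall),  # harmonic mean
        "false_alarm": fp / (fp + tn),  # empirical probability of false alarm
    }

# Hypothetical outcome of testing a detector on 100 labeled samples.
metrics = classification_metrics(tp=40, fp=10, tn=45, fn=5)
```

Sweeping the decision threshold of a detector and recording the recall and false alarm rate at each setting gives the points of the ROC curve.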

An important lesson learned in this subsection is that while it is desirable to employ GAN structures from computer vision and image processing for NextG wireless applications, the performance measures in the two fields are different.

3.2 Data Augmentation via GANs for NextG

Recognizing that supervised ML requires a significant number of training data samples, and that it is expensive and likely even infeasible to collect a sufficiently comprehensive and representative set of training data, [8] augments the training data by adding synthetic data to an existing training set. This is achieved by a workflow of three steps. In the first step, a CGAN is trained using real training samples. In this step, the generator learns to synthesize new data samples. In the second step, the synthetic data samples are used along with the real samples to train a classifier. In the third step, as new data comes in, the classifier is used for classification purposes. The authors of [8] show that this approach significantly improves the adaptation time and accuracy of the resulting spectrum sensing.
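The three-step workflow can be outlined with a deliberately simplified stand-in. To keep the sketch self-contained, a per-class Gaussian sampler replaces the trained CGAN generator and a nearest-centroid rule replaces the classifier; neither is the method of [8]. The class names and the received-power values (in dBm) are hypothetical.

```python
import random
from statistics import mean, stdev

def fit_sampler(samples):
    """Step 1 stand-in: fit a class-conditional sampler (Gaussian, not a CGAN)."""
    mu, sigma = mean(samples), stdev(samples)
    return lambda rng: rng.gauss(mu, sigma)

def augment(real_by_class, n_synthetic, seed=0):
    """Add n_synthetic generated samples per class to the real training set."""
    rng = random.Random(seed)
    augmented = {}
    for label, samples in real_by_class.items():
        sampler = fit_sampler(samples)
        synthetic = [sampler(rng) for _ in range(n_synthetic)]
        augmented[label] = list(samples) + synthetic
    return augmented

def train_centroids(data_by_class):
    """Step 2 stand-in: train a nearest-centroid classifier on real plus synthetic data."""
    return {label: mean(samples) for label, samples in data_by_class.items()}

def classify(centroids, x):
    """Step 3: classify a new measurement by its closest class centroid."""
    return min(centroids, key=lambda label: abs(x - centroids[label]))

real = {"idle": [-80.2, -79.5, -81.0], "busy": [-50.3, -49.1, -51.2]}
augmented = augment(real, n_synthetic=20)
centroids = train_centroids(augmented)
decision = classify(centroids, -78.0)
```

The structure is the point here: the generative model is fit once on the scarce real data, and the classifier is then trained on the enlarged set before being applied to incoming measurements.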

To obtain a good modulation recognition technique, [54] employs Auxiliary Classifier GANs (AC-GANs) [55] after a density transformation of the signal called Contour Stellar Image to enhance the performance of CNNs. Although it employs a training sequence only 10% as long as that of another algorithm, [54] achieves a gain of up to 6% in recognition performance on the test sequence.

Reference [13] investigates training data augmentation for deep learning RF systems, concentrating on the Automatic Modulation Classification (AMC) problem, by asking basic questions about the augmentation process. These questions are: i) how useful a synthetically trained system will be when deployed without considering the environment within the synthesis, ii) how augmentation can be leveraged within the RF ML domain, and iii) how knowledge of the degradations to the signal caused by the transmission channel contributes to the performance of a system. The results show, first, that generating data with higher fidelity requires investigating and modeling the propagation path from the Digital-to-Analog Converter (DAC) to the Analog-to-Digital Converter (ADC). Second, although augmentation provides savings in terms of time and money, the authors suggest a cost analysis to achieve a balance between the two. Finally, a methodology is established for determining the quantity of data needed.

A lesson that should be derived here is that GANs can generate synthetic training data and thus augment the training set in ML. We will discuss this topic in more detail in Sec. 5.

3.3 Spectrum Sharing

As NextG networks rely on the co-existence of heterogeneous networks such as cellular and non-terrestrial networks, and radar systems, spectrum sharing is expected to play a major role in NextG communications and radar systems, while relying on ML techniques to identify and make use of spectrum opportunities. One particular example is Citizens Broadband Radio Service (CBRS) band at 3.5 GHz where the cellular wireless system needs to share the spectrum with the tactical radar while avoiding mutual interference [56]. As such, the use of ML for spectrum sensing problems is currently a growing area of interest, as evidenced in the survey [57] which summarizes results from [58, 59, 60, 61, 62, 63, 64, 65, 66, 67]. The work reported in [58, 59, 60, 61] focuses on ML issues including optimization of supervised classifiers, reduction of the feature space dimension, reduction of interference, and minimization of the number of sensors, respectively. Attacker detection and minimization of sensing time, delay, and operations in collaborative spectrum sensing are discussed in [62, 63]. Techniques that target Spectrum Sensing Data Falsification (SSDF) are developed in [64, 65, 66, 67]. Furthermore, [68, 69, 70, 71] discuss the use of neural networks for spectrum sensing under low Signal-to-Noise Ratio (SNR) conditions. References [72, 73] discuss employing neural networks for spectrum sensing in the face of parameter uncertainties in the transmitted signals.

Spectrum sharing techniques can be broadly characterized as belonging to open sharing or hierarchical access models [74, 75, 76]. In the open sharing model, each network accesses the same spectrum without any interference constraint from one network to its peers. The unlicensed band is an example of this model. The hierarchical or cognitive radio model consists of a primary network and a secondary network that accesses the primary spectrum without interfering with the Primary Users (PUs). We will focus on the concept of cognitive radio networks in order to achieve efficient spectrum sharing via ML, using the classification from [77] to organize potential approaches to spectrum sharing in cognitive radio networks. In this classification, spectrum sharing techniques are categorized as either underlay, overlay, or interweave.

| Underlay | Overlay | Interweave |
|---|---|---|
| SU knows the channel strengths of PU | SU knows the channel gains, codebooks, and messages of PU | When PU is not using the spectrum, SU knows the spectral holes in space, time, or frequency |
| As long as the interference is below an acceptable limit, SU can simultaneously transmit with PU | SU can simultaneously transmit with PU; interference to PU can be offset by using part of SU’s power to relay PU’s message | SU can simultaneously transmit with PU only in the case of false spectral hole detection |
| SU’s transmit power is limited by the interference constraint | SU can transmit at any power; the interference to PU can be offset by relaying PU’s message | SU’s power is limited by the range of its spectral holes |

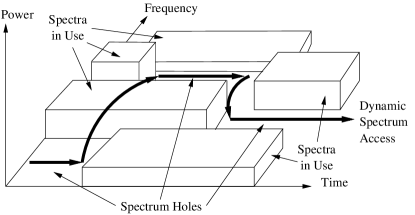

Table 2 summarizes the basic characteristics of underlay, overlay, and interweave techniques [77]. In the underlay technique, a Secondary User (SU) needs to be aware of the channels for all active PUs in order to know if the interference it generates will be below an acceptable limit. This consideration limits its transmit power. For the overlay technique, in addition to the PU channel characteristics, the SU also knows the codebooks and messages of the active PUs. The SU can transmit at any power, but to offset the interference it causes to the PUs, it relays their transmitted messages. In the interweave technique, the goal is to opportunistically communicate in the spectrum holes, or more generally the space-time-frequency voids that are not in use by either licensed or unlicensed users. As depicted in Fig. 6, these voids change with frequency and time. The interweave technique requires periodic monitoring of the spectrum for detection of user activity, so that the SU can transmit opportunistically over the space-time-frequency voids with minimal interference. All of the above approaches can benefit from SUs employing directional antennas or beamforming for more flexible control of the interference. Fig. 6 depicts spectrum sensing by a Software Defined Radio (SDR) in an actual setting.
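Returning to the underlay technique, the power limit imposed by the interference constraint can be illustrated with a short sketch. The function name, the use of linear-scale channel power gains, and the numerical values are assumptions made here for illustration; they are not taken from [77].

```python
def max_underlay_power(gains_to_pus, interference_limit, power_budget):
    """Largest SU transmit power (linear scale) such that
    power * gain <= interference_limit at every active PU receiver."""
    if not gains_to_pus:
        # No active PU: only the SU's own power budget applies.
        return power_budget
    worst_gain = max(gains_to_pus)  # the strongest SU-to-PU channel is the binding one
    if worst_gain <= 0.0:
        return power_budget
    return min(power_budget, interference_limit / worst_gain)


# Hypothetical values: three PU receivers, 1e-9 W interference limit, 0.1 W budget.
print(max_underlay_power([1e-8, 5e-9, 2e-8], 1e-9, 0.1))
```

The strongest channel from the SU to any PU determines the limit, which is why the SU needs channel knowledge for all active PUs in this technique.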

For this research topic, implementing the spectrum sensing task using a GAN-based technique as in [8] is an interesting and potentially useful first step. It provides domain adaptation by creating synthetic data that enables the classifier to quickly adapt to changes in the spectrum environment. The approach in [8] can be extended to exploit voids in all possible dimensions including space, time, and frequency, which is of particular importance to interweave cognitive radios. To extend the research in this direction, an approach based on CGANs, conditioned on specific subsets of space, time, and frequency chosen using domain knowledge, can be employed. Such a CGAN will be able to generate synthetic data that is specific to certain “labels” that correspond, for example, to known locations (e.g., hot spots), time periods (e.g., rush hour), or occupancy patterns of certain frequency bands. A further step in this direction is the investigation of the performance of the algorithms in the presence of low SNR as in [68, 69, 70, 71] and parameter uncertainties as in [72, 73].

The approach in [8] consists of the following three steps: i) training a GAN using real training samples so that the generator learns to synthesize new samples, ii) training of a classifier with augmented data so that the original and limited training set is expanded with synthetic data, and, iii) regular operation by classifying data using the trained model. It is possible to extend this approach by training a CGAN for more typical scenarios, such as known locations, time periods, and occupancy patterns of certain bands, as discussed in the previous paragraph. In this context, unlike [8], it is possible to make a comparison of this ML-based approach with conventional narrowband spectrum sensing techniques based on energy detection, cyclostationary feature detection, matched filter detection, and wideband spectrum sensing techniques such as compressed sensing [79, 57] in terms of performance and complexity, specifically in the three areas discussed in Sec. 1.
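For reference, the conventional energy detection baseline mentioned above can be sketched as follows. This is a minimal illustration under stated assumptions, not the method of [8] or [57]: the noise is taken to be circularly symmetric complex Gaussian with known variance, the threshold uses the Gaussian (central limit) approximation of the test statistic, and the function names, sample sizes, and the unit-power QPSK stand-in for a PU signal are all hypothetical.

```python
import numpy as np
from statistics import NormalDist


def energy_threshold(noise_var, n_samples, p_fa):
    """Threshold on the average energy for a target false-alarm probability.

    Under noise only, the average energy of n_samples complex Gaussian samples
    has mean noise_var and variance noise_var**2 / n_samples; the threshold
    follows from approximating its distribution as Gaussian.
    """
    q_inv = NormalDist().inv_cdf(1.0 - p_fa)  # inverse Q-function at p_fa
    return noise_var * (1.0 + q_inv / np.sqrt(n_samples))


def energy_detect(samples, threshold):
    """Boolean decision per row: True if the average energy exceeds the threshold."""
    statistic = np.mean(np.abs(samples) ** 2, axis=-1)
    return statistic > threshold


rng = np.random.default_rng(0)
n_trials, n_samples, noise_var, p_fa = 2000, 1000, 1.0, 0.05
thr = energy_threshold(noise_var, n_samples, p_fa)

# Complex Gaussian noise with variance noise_var per sample.
noise = np.sqrt(noise_var / 2) * (rng.standard_normal((n_trials, n_samples))
                                  + 1j * rng.standard_normal((n_trials, n_samples)))
# Hypothetical PU signal: unit-power QPSK symbols, i.e., 0 dB SNR.
phases = np.pi / 4 + np.pi / 2 * rng.integers(0, 4, (n_trials, n_samples))
symbols = np.exp(1j * phases)

false_alarm_rate = np.mean(energy_detect(noise, thr))
detection_rate = np.mean(energy_detect(symbols + noise, thr))
print(f"false alarm rate: {false_alarm_rate:.3f}, detection rate: {detection_rate:.3f}")
```

A detector of this kind needs no training data but relies on knowledge of the noise variance, which is one axis along which a comparison with the GAN-based approach could be made.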

In addition to [13] discussed in Sec. 3.2, another work on the AMC problem is provided in [80]. This work uses a modified version of CGAN and AC-GAN, which is operated in a semi-supervised (not unsupervised) mode. The modifications are needed to solve the following problems: i) the complexity of the modulation signals causes non-convergence due to the mapping from the high-dimensional parameter space to the low-dimensional classification space, and ii) the lack of diversity of the generated samples causes mode collapse. Mode collapse is defined as the difficulty in convergence, especially during the training process, due to conditional constraints. The modifications consist of an encoder block, a transform block, and the splitting of the classification and discrimination functions of the conventional discriminator by adding an explicit classifier [80, Fig. 1]. The encoder and the transform are inspired by Conditional Variational Autoencoder (CVAE) [81] and Spatial Transformer Network (STN) [82], respectively. The definition of the loss functions involving all the blocks and their optimization expressions are given in [80, Eq. (10)-(20)]. To evaluate the performance of the system, the synthetic dataset in [49] is used. This dataset contains signals of eleven modulation modes at SNRs ranging from -20 dB to 20 dB, with channel distortion, frequency offset, phase offset, and Gaussian noise added. Comparisons with a number of well-known deep learning methods show gains in classification accuracy of up to 12%.

An important implementation technique for NextG networks is Cooperative Spectrum Sensing (CSS), where a number of SUs cooperate to improve sensing performance. CSS can be implemented using either a centralized or a distributed approach. In centralized sensing, the SUs send their sensing information to a Fusion Center (FC) that makes the sensing decision and broadcasts this information back to all SUs. In distributed sensing, the SUs share sensing information among each other and make independent sensing decisions locally. In both approaches, the SUs can either transmit their individual sensing decisions or soft information in the form of partial statistics. The information combining schemes used by the FC or SUs can be categorized as either hard or soft combining schemes. The most common hard combining algorithms are AND combining, OR combining, $K$-out-of-$N$ combining, and quantized hard combining, while soft combining algorithms include selection combining, maximal ratio combining, equal gain combining, and square law combining [83]. The soft combining approach provides the best detection performance, but at the cost of additional control channel overhead.
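The combining rules listed above can be made concrete with a short sketch. The function names, the five hypothetical SU reports, and the threshold value are assumptions for illustration; the equal gain rule is shown in its simplest form, as an unweighted sum of the reported energy statistics.

```python
def k_out_of_n(decisions, k):
    """Hard combining: declare the band occupied if at least k of the
    binary SU decisions (1 = PU present, 0 = PU absent) report a PU."""
    return int(sum(decisions) >= k)


def and_rule(decisions):
    # AND combining: all SUs must report a PU.
    return k_out_of_n(decisions, len(decisions))


def or_rule(decisions):
    # OR combining: a single positive report suffices.
    return k_out_of_n(decisions, 1)


def equal_gain_combining(energies, threshold):
    """Soft combining: sum the reported energy statistics with equal
    weights and compare the sum against a threshold."""
    return int(sum(energies) > threshold)


decisions = [1, 0, 1, 1, 0]            # hypothetical local decisions of five SUs
energies = [1.4, 0.9, 1.6, 1.3, 1.0]   # hypothetical local energy statistics
print(and_rule(decisions), or_rule(decisions), k_out_of_n(decisions, 3))
print(equal_gain_combining(energies, threshold=5.5))
```

The hard rules need one bit per SU, whereas the soft rule requires each SU to report a real-valued statistic, which is the source of the additional control channel overhead.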

It is desirable to extend the technique described above to include CSS. In one implementation, GANs can be placed at the SUs and classifications can be combined at the FC. An alternative is to have the SUs transmit partial statistics to be used by a single GAN at the FC. In this regard, it is worthwhile to investigate schemes that will employ combinations of centralized versus distributed CSS with hard or soft decisions. The number of combinations is high but it should be possible to reach quick conclusions. The main interest in this activity is to determine the optimal placement for GANs in interweave networks, and how much performance improvement they can provide.

There is an important lesson that can be drawn from this subsection. Due to the tremendous number of users and applications in NextG networks, spectrum sharing will need to be carried out in a dynamic environment employing principles of cognitive networking. The goal is to determine the voids based on time, frequency, and space and exploit them to accommodate more users. Two specific techniques for spectrum sharing based on GANs are discussed in [8, 80] and summarized in this subsection.

3.4 Detecting Anomalies

In a GAN, the generator captures the distribution of the training data, and the discriminator can distinguish fake from real, making a GAN an attractive ML technique for anomaly detection. This observation has led to a number of anomaly detection techniques employing GANs [84, 85, 86, 87, 88, 89, 90, 91, 92, 93]. These applications are typically for various forms of image processing. There are some recent applications of adversarial networks for anomaly detection in cyber-physical systems [94, 95], fraud detection in banking [96, 97, 98, 99], driver assistance systems [100], air surveillance [101], prognostics and health management in the aeronautics industry [102], and network traffic anomaly detection [103].

We will briefly discuss [103] since it is related to network anomaly detection. This work proposes BiGAN in conjunction with Principal Component Analysis (PCA) for network anomaly detection using the KDDCUP-99 dataset [104]. This dataset includes a wide variety of intrusions simulated in a military network environment. It was used in a competition, held during a conference, to build a network intrusion detector, i.e., a predictive model capable of distinguishing between “bad” connections, called intrusions or attacks, and “good” normal connections [104].

| Model | Precision | Recall | F1 Score |
|---|---|---|---|
| OC-SVM [105] | 0.7457 | 0.8523 | 0.7954 |
| DSEBM-r [105] | 0.8521 | 0.6472 | 0.7328 |
| DSEBM-e [105] | 0.8619 | 0.6446 | 0.7399 |
| DAGMM-NVI [106] | 0.9290 | 0.9447 | 0.9368 |
| BiGAN [36] | 0.9363 | 0.9512 | 0.9437 |
| PCA+BiGAN [103] | 0.9442 | 0.9592 | 0.9516 |

A number of works exist that study anomaly detection in cognitive radio networks with conventional methods [107, 108, 109, 110, 111, 112, 113] and with ML [114, 115, 116, 117, 118, 119, 120]. We refer the reader to [120] for brief descriptions of [115, 116, 117, 118, 119]. These works provide an introduction to this field, with the important observation that GAN-based methods tend to provide better results in general. However, existing research in this area is still limited, and this provides an opportunity for significant contributions.

In [114], an adversarial autoencoder was implemented for wireless spectrum anomaly detection [121], which uses ideas similar to a GAN. We will go beyond this approach and leverage the advantages provided by AnoGAN, EGBAD, and GANomaly. We provide details here on an approach using GANomaly, although similar anomaly scores can be defined for other architectures. Consider a collection of received data vectors $x$, whose elements are, for example, the I and Q components of a digitally modulated signal, or vectors of the sampled power spectral density. We seek a model that learns the source distribution $p_x$, so that we can detect when a vector’s distribution is different from $p_x$. This is a hypothesis testing problem, where for each vector $x$ in the test dataset, the two hypotheses are

$$\mathcal{H}_0:\ x \sim p_x \ \text{(normal)}, \qquad \mathcal{H}_1:\ x \not\sim p_x \ \text{(anomalous)}. \tag{17}$$

We have three assumptions: first, the probability of anomalous behavior in the dataset is very low; second, no explicit anomaly labeling is done on the test dataset; and third, no feature extraction is performed before feeding the data to the model. The key insight in employing GAN-based anomaly detection is to bring the data to the latent space, which captures relevant features that can be used to reconstruct the actual input data, with a small anomaly score for normal data. A GAN is trained using (1) and (2), and during the test and operation stage, the expressions from the adversarial loss through (15) are used. Once the training process is complete, the model weights are frozen and new data are input into the model. Anomalies are detected based on the anomaly score or the reconstruction loss of the model.
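The final detection step, in which an anomaly score is compared against a threshold, can be sketched as follows. This is an illustration under stated assumptions: the scores are synthetic stand-ins for those produced by a frozen, trained model, and setting the threshold as a quantile of scores on held-out normal data is one common choice rather than the procedure of any cited work. All names are hypothetical.

```python
import numpy as np


def fit_threshold(normal_scores, target_false_alarm=0.01):
    """Pick the threshold as the (1 - target_false_alarm) quantile of the
    anomaly scores computed on held-out normal data."""
    return float(np.quantile(normal_scores, 1.0 - target_false_alarm))


def detect(scores, threshold):
    """Decide 'anomalous' when the score exceeds the threshold, 'normal' otherwise."""
    return np.asarray(scores) > threshold


# Synthetic stand-in scores; in practice they come from the trained model.
rng = np.random.default_rng(1)
normal_scores = rng.gamma(shape=2.0, scale=0.05, size=5000)  # small scores for normal data
test_scores = np.array([0.04, 0.10, 0.95, 0.07, 1.30])

thr = fit_threshold(normal_scores, target_false_alarm=0.01)
print(thr, detect(test_scores, thr))
```

Because the threshold is fitted on normal data only, this step is consistent with the assumption that no anomaly labels are available.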

The term anomaly covers a very broad range of possibilities in the transmitted signal. Since the field of ML progresses based primarily on experimentation, a number of datasets should be created to study the performance of the algorithms under consideration. These can be in the form of time series corresponding to I/Q data from a radio receiver after downshifting in frequency and mixing with the local oscillator. This signal includes the effects of fading and multipath. To create anomalous test signals, there are a wide variety of possibilities. Reference [114] considered four types of normal signals and four types of anomalies. The normal signals were generated as either i) a single continuous signal with random bandwidth, SNR, and center frequency, ii) pulsed signals with parameters similar to i), iii) multiple continuous signals with possible frequency overlap, or iv) signals with random bandwidths and SNR with deterministic shifts/hops in frequency. The anomalous signals were chosen as either i) the same as normal signals in i) above, ii) random pulsed transmissions in the given band, iii) pulsed wideband signals covering the entire frequency band, or iv) signals from other classes in the synthetic dataset. Normal and anomalous signals such as these can be considered, as well as other random signal types with arbitrary power spectral densities.

Anomaly detection within the context of cognitive radio in the mmWave band is studied in [120], where the authors generate their own datasets with a number of anomalies and evaluate three methods, including two based on GANs, for their detection. As mmWave radios are vulnerable to malicious users due to the shared access medium and the complex radio environment, this opens up new threat possibilities. Moreover, since several security critical applications in the NextG networks such as the Vehicle-to-Everything (V2X) networks are based on mmWave communications, this problem is particularly important. In addressing this problem, [120] makes use of three ML techniques: Variational Autoencoder (VAE) [122], CGAN [30], and AC-GAN [55]. This work created its own dataset by using mmWave equipment in a laboratory environment with implementation using Field Programmable Gate Arrays (FPGAs) and building blocks such as local oscillators, intermediate frequency modules, mmWave radio heads, horn antennas, etc. The operation is at 28 GHz by using Cyclic-Prefix Orthogonal Frequency Division Multiplexing (CP-OFDM) with BPSK, QPSK, 16-QAM, and 64-QAM. Complex I/Q data is collected at baseband after the downconversion process. There are eight channels with 100 MHz bandwidth. The normal behavior is a fixed signal which occupies channel 4 and a sequentially moving signal among channels 8, 6, 3, and 1. This normal behavior is used in the training phase. For testing, three modalities are introduced. Modality 1 is a fixed signal in channel 4 and a moving signal that jumps among channels 5, 7, 2, and 5. Modality 2 is a fixed signal in channel 4 and a moving signal that jumps among channels 7, 5, 2, and 1. Modality 3 is a fixed signal in channel 4 and a moving signal that jumps among channels 5, 7, 6, and 5. Performance results are reported in terms of AUROC and ACC and are given in Table 5.

| Model | Modality | AUROC | ACC |
|---|---|---|---|
| VAE | 1 | 0.9365 | 0.9356 |
| VAE | 2 | 0.9577 | 0.9551 |
| VAE | 3 | 0.9232 | 0.9382 |
| CGAN | 1 | 0.9566 | 0.9657 |
| CGAN | 2 | 0.9737 | 0.9696 |
| CGAN | 3 | 0.9545 | 0.9668 |
| AC-GAN | 1 | 0.9741 | 0.9804 |
| AC-GAN | 2 | 0.9751 | 0.9757 |
| AC-GAN | 3 | 0.9742 | 0.9660 |

| Model | Training [mm:ss] | Testing [mm:ss] |
|---|---|---|
| VAE | 15:09 | 01:00 |
| CGAN | 15:16 | 01:36 |
| AC-GAN | 30:42 | 03:16 |

CGAN provides better performance than VAE, and AC-GAN provides better performance than CGAN: VAE achieves an AUROC between 0.9232 and 0.9577, CGAN between 0.9545 and 0.9737, and AC-GAN between 0.9741 and 0.9751. Reference [120] attributes the better performance of GANs to the fact that they learn the relation between random noise and generated data such that the generated data is close to real data, and as a result, they can capture the dynamics in the real data. VAEs, in contrast, return the posterior probability that an observation belongs to a cluster. They do so by learning the latent vector corresponding to an input, and as a result, all the dynamics in the real data that VAEs have access to are limited to the latent vectors already modeled. Because GANs have more degrees of freedom, it is generally considered that they can have better performance. Computational times for the three algorithms, performed on an NVIDIA GeForce GTX 1080 Ti GPU, are given in Table 5. Clearly, there is a big price paid for the better performance of AC-GAN: its training takes about 30 minutes, compared with about 15 minutes for VAE and CGAN.
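For readers who wish to reproduce metrics of this kind, the sketch below computes AUROC and ACC from anomaly scores and binary labels. It uses the pairwise-ranking interpretation of AUROC; the function names and the four-sample example are hypothetical and are not taken from [120].

```python
import numpy as np


def auroc(scores, labels):
    """AUROC as the probability that a randomly chosen anomalous sample
    (label 1) scores higher than a randomly chosen normal sample (label 0);
    ties count as one half."""
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=bool)
    pos, neg = scores[labels], scores[~labels]
    greater = (pos[:, None] > neg[None, :]).sum()
    ties = (pos[:, None] == neg[None, :]).sum()
    return float((greater + 0.5 * ties) / (len(pos) * len(neg)))


def accuracy(scores, labels, threshold):
    """Fraction of samples whose thresholded decision matches the label."""
    decisions = np.asarray(scores) > threshold
    return float(np.mean(decisions == np.asarray(labels, dtype=bool)))


scores = [0.1, 0.4, 0.35, 0.8]  # hypothetical anomaly scores
labels = [0, 0, 1, 1]           # 1 = anomalous
print(auroc(scores, labels), accuracy(scores, labels, threshold=0.5))
```

AUROC is independent of any particular threshold, whereas ACC depends on the chosen threshold, so the two metrics need not rank the methods identically.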

In [123], a deep learning based signal or modulation classification solution is described, where i) signal types may change over time, ii) some signal types are not known a priori, and therefore there is no training data available, iii) signals are potentially spoofed, e.g., by smart jammers replaying other signal types, and iv) different signals may be superimposed due to interference from concurrent transmissions. The authors present a CNN that classifies the received I/Q samples as idle, in-network signal, jammer signal, or out-network signal. Traditional approaches for signal classification require expert design or knowledge of the signal. Modulations are classified into i) idle, ii) in-network user signal, iii) jamming signals, and iv) out-network user signals where there are 3, 4, and 3 different modulation techniques corresponding to ii)–iv). There are in-network users who try to access the channel opportunistically (SUs), out-network users with priority channel access (PUs), and jammers that all coexist. The authors use the dataset in [124]. There are ten modulations with SNRs from -20 dB to 18 dB in 2 dB increments. Radio fingerprinting via radio hardware imperfections such as I/Q imbalance, time or frequency drift, and power amplifier effects is used to identify the type of a transmitter. In addition to a CNN structure, Minimum Covariance Determinant (MCD), $k$-means clustering, and Independent Component Analysis (ICA) techniques are employed. Results demonstrate the feasibility of using deep learning to classify RF signals with high accuracy in unknown and dynamic spectrum environments. By using the signal classification results, a distributed scheduling protocol is developed where SUs share the spectrum with each other while avoiding interference imposed to PUs and received from jammers.

The use of GANs that exploit the spatial domain to identify anomalous signal sources can be considered, using data received from antennas in different locations. In many applications, normal network operations are consistent with users transmitting from specific locations, such as in a stadium setting, a large hall, a shopping mall, etc. A GAN trained in this setting with an array of antennas or widely separated receivers can be used to differentiate between normal network traffic and signals that arrive from anomalous directions, even if their spectral characteristics are identical to normal users. A similar approach can be used to detect differences between mobile and stationary sources, even if no detectable Doppler shift can be measured. Mobile sources typically have lower power, reduced persistence, a time-varying polarization, as well as time-varying ranges and azimuth/elevation angles. Further differentiation is possible between ground-based and airborne sources. A particularly important direction is to investigate whether incorporating the spatial dimension together with the time and frequency dimensions can significantly improve the ability of GANs to detect anomalous network behavior.

In passing, we note that more research is needed to understand the tradeoff between performance and computational complexity of autoencoders for anomaly detection [125, 126, 127, 128, 129], especially their performance for a given computational complexity. The performance and computation time results in Table 5 provide an interesting comparison in this regard.

The most important lesson learned from this subsection is that the use of GANs results in powerful techniques for anomaly detection in wireless networks. In Sec. 4, we will expand on this observation and provide more evidence for it by adopting a GAN-based anomaly detection algorithm for NextG wireless applications.

3.5 Security Applications of GANs in NextG

Another important application area of GANs is wireless security. In these applications, ML can be used to create an attack, defend against an attack, or both. Some specific cases are discussed below.

In [25], a laboratory study is carried out with eight Universal Software Radio Peripheral (USRP) radios as trusted transmitters. The goal is to use the imbalance in the detected I/Q components due to unique hardware differences in each transmitter for fingerprinting, i.e., identifying which transmitter is which. The authors use the generator of a conventional GAN to create fake transmitters, and then the GAN classifier attempts to distinguish between real and fake transmitters, achieving an accuracy of 99.9%. The authors also develop approaches to distinguish between the legitimate transmitters, one based on a CNN that obtains an accuracy of 81.6%, and another based on a Deep Neural Network (DNN) that scores 96.6%. Although this is a simple experiment, it shows the power of ML in wireless security.

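A wireless spoofing algorithm based on a GAN architecture is proposed in [9], split between an adversary transmitter $A_T$ and an adversary receiver $A_R$ placed close to the actual receiver $R$. Feedback from $A_R$ based on its location allows $A_T$ to know the channel between the actual transmitter $T$ and $R$ as well as between $A_T$ and $R$ with sufficiently high accuracy. Then, a GAN is implemented between $A_T$ and $A_R$ in which the generator $G$ is trained at $A_T$ and the discriminator $D$ is implemented at $A_R$. $A_T$ in turn adjusts its transmission parameters such that its signals will appear to $R$ as if they come from $T$. In this process, $A_R$ trains the discriminator $D$ such that the classification error is minimized, i.e., it attempts to achieve

$$\max_{D}\ \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_{z}}\left[\log\left(1 - D(G(z))\right)\right]. \tag{18}$$

At the same time, $A_T$ trains the generator $G$ such that the classification error is maximized, i.e., it attempts to achieve

$$\min_{G}\ \mathbb{E}_{z \sim p_{z}}\left[\log\left(1 - D(G(z))\right)\right]. \tag{19}$$

The process is continued until convergence. Thus, although they are at different locations, $A_T$ and $A_R$ train $G$ and $D$ while playing the following minimax game

$$\min_{G}\max_{D}\ \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_{z}}\left[\log\left(1 - D(G(z))\right)\right]. \tag{20}$$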

Simulation results show that, while the probability of successful spoofing is only 7.9% for random signals and 36.2% in an amplify-and-forward architecture, the GAN is able to achieve a success rate of 76.2%.
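To make the minimax game above concrete, the sketch below evaluates the two objectives from discriminator outputs. It assumes the standard GAN value function, in which the discriminator outputs the probability that a signal is genuine; the function names and the numerical values are hypothetical and are not taken from [9].

```python
import numpy as np


def discriminator_objective(d_real, d_fake):
    """Value maximized by the discriminator:
    mean log D(x) + mean log(1 - D(G(z)))."""
    return float(np.mean(np.log(d_real)) + np.mean(np.log(1.0 - d_fake)))


def generator_objective(d_fake):
    """Value minimized by the generator: mean log(1 - D(G(z)))."""
    return float(np.mean(np.log(1.0 - d_fake)))


# Hypothetical discriminator outputs (probability that a signal is genuine).
d_real = np.array([0.9, 0.8, 0.95])  # on signals from the actual transmitter
d_fake = np.array([0.2, 0.1, 0.3])   # on spoofed signals early in training
print(discriminator_objective(d_real, d_fake), generator_objective(d_fake))

# When the spoofed signals are indistinguishable from genuine ones, the best
# the discriminator can do is output 1/2 everywhere, giving the value -log 4.
half = np.full(3, 0.5)
print(discriminator_objective(half, half), -np.log(4.0))
```

As spoofing improves, the discriminator outputs on spoofed signals move toward those on genuine signals and the value of the game approaches -log 4.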

The problem of thwarting an Intrusion Detection System (IDS) is studied in [26]. While this work is not specifically about wireless networks, the basic results are still applicable to wireless systems. While many ML approaches have been proposed for IDS, the authors of [26] show that such systems are vulnerable to an attack employing a GAN. On the other hand, the paper also shows that if the IDS system based on conventional ML is replaced by one based on GANs, it can be made more robust against adversarial perturbations.

In [27], an interesting problem is described in which two Unmanned Aerial Vehicles (UAVs) try to communicate in the presence of an active jammer that eavesdrops on their transmissions and jams only when the two are communicating in the same frequency band. A three-way GAN is developed with the generator present at the jammer and two classifiers present, one at each UAV. The generator operates without access to the classifiers. For reasons of stability, [27] does not employ the conventional $\log$-based formulation for driving the backpropagation algorithm, but instead, another version based on a least squares formulation called LSGAN [31]. The performance of the algorithm is compared against two GAN-based algorithms with three players and one non-GAN-based game theoretic approach. The algorithm in [27] is shown to outperform the other three in terms of average connection latency, attack probability, and packet delivery ratio in the presence of channel switching and jamming. This paper shows the sophistication of GAN-based approaches in wireless applications.
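For comparison with the logarithmic losses, a least squares formulation can be sketched as follows. The target coding used here (1 for real data, 0 for generated data, and 1 as the generator's target) is a common choice and an assumption of this sketch; it is not necessarily the exact variant used in [27], and the function names are hypothetical.

```python
import numpy as np


def lsgan_discriminator_loss(d_real, d_fake):
    """Least squares discriminator loss with targets 1 (real) and 0 (generated)."""
    return float(0.5 * np.mean((d_real - 1.0) ** 2) + 0.5 * np.mean(d_fake ** 2))


def lsgan_generator_loss(d_fake):
    """Least squares generator loss: push D's output on generated data toward 1."""
    return float(0.5 * np.mean((d_fake - 1.0) ** 2))


# Hypothetical discriminator outputs (unbounded real values are allowed here).
d_real = np.array([0.9, 1.1, 0.8])
d_fake = np.array([0.2, -0.1, 0.3])
print(lsgan_discriminator_loss(d_real, d_fake), lsgan_generator_loss(d_fake))
```

Unlike the logarithmic loss, the quadratic penalty stays finite and keeps a nonzero gradient for confidently classified samples, which is the usual argument for its better training stability.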

In addition to the GAN-based wireless security work described above, there are many other papers in the literature that cover various aspects of wireless security by employing other ML techniques, in terms of both designing attacks and ways to mitigate them. A sample listing is [130, 131, 132, 133, 134, 135, 136, 137, 138, 139, 140, 141, 142, 143, 7, 144, 145, 146, 147, 148, 149, 150, 151, 10].

For spectrum sharing applications, not only traditional security threats such as receiver jamming, but also newly emerged cognitive-radio-specific security threats such as PU Emulation Attacks (PUEA), SSDF, common control channel jamming, selfish users, and intruding nodes should be considered. A detailed description of these threats can be found in [152, 153, 154, 155, 156].

Cognitive radio networks rely on a trustworthy spectrum sensing process, the key to which is the ability to distinguish PU signals from SU signals in a robust way. The use of licensed spectrum bands by PUs may be sporadic, so an SU must constantly monitor for the presence of PUs in candidate bands. If an SU detects the presence of a PU in the band under observation, it cannot use the current band. If there is no PU currently active in the band, then SUs employ a medium access control mechanism to share the band. In general, it is expected that no change will be required on the part of the PU transmission system or air interface in order to accommodate the SUs, e.g., [157], and thus it is the responsibility of the SUs to correctly identify PUs with their existing air interface. As a result, an SU’s ability to correctly identify PUs becomes very important not only for avoiding interference to the PUs, but also to be able to increase their own throughput. Distinguishing whether or not a user is a PU is nontrivial, especially when rogue users modify their air interface in order to mimic a PU’s signal characteristics. This is known as a PUEA [158].

In order to mitigate the PUEA problem, it is possible to draw parallels with a phenomenon known for adversarial attacks against classifiers that employ neural networks. This phenomenon became apparent in classification problems in conventional fields such as image processing, where it was observed that certain minor variations in the input, undetectable by humans, can make a classifier fail, see e.g., [159, 160]. An example that appeared in [160] is very well-known: a 22-layer deep CNN correctly identifies an image of a panda with 57.7% confidence, but after a slight amount of noise is added, it classifies the image as a gibbon with 99.3% confidence. This vulnerability of deep neural networks to adversarial attacks has been well-documented, see e.g., [161, 162, 163, 164, 165].

A number of approaches exist to deal with the problem of adversarial attacks in the context of image processing; a good review is available in [166]. There is a recent study which finds that generative classifiers, such as GANs, are actually more robust to adversarial attacks [167]. Given the success of ML techniques developed for image processing and successfully adapted to problems in wireless networking, it is possible to leverage some of this prior work in the context of designing GAN-based ML systems to counteract adversarial attacks. The first such technique is described in [168], and is referred to as Defense-GAN. In this technique, the GAN is trained to model the distribution of unperturbed data. At inference time, it finds an output that does not contain any adversarial changes that is “close” in some sense to the given input data. This output is then fed to the classifier, which can employ any model, and the result is used as the classification of the data. This technique does not assume knowledge of the process for generating the adversarial examples. Reference [168] empirically shows that Defense-GAN is consistently effective against different attack methods and improves on existing defense strategies.

Fig. 7 illustrates the basic idea in [168]. For a given input observation x, the system finds the closest element (in the $\ell_2$ norm) of the data space that can be produced by the generator operating on an element of the latent space. The resulting element of the data space, presumably free from the effects of the adversarial perturbation, is then classified in the regular way. Intuitively, if x is not perturbed, then the GAN can generate a sufficiently close local copy of it to be classified as “real.” On the other hand, a perturbed x will not be among the possible outputs of the generator trained by using unperturbed data, and the classifier will declare it as “fake.” There is a mathematical development that justifies this intuition in [168]. The paper shows that the Defense-GAN technique is very effective against both black-box and white-box attacks, i.e., attacks that have no knowledge of the generator and the classifier, and attacks that have full knowledge of both, respectively. Other works that use a similar concept based on GANs are available in [169, 170].
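The projection step described above, which searches the latent space for the generator output closest to the observation, can be sketched with plain gradient descent from a few random starting points. To keep the sketch self-contained, a fixed linear map stands in for a trained generator; this stand-in, the function names, the step size rule, and all numerical values are assumptions made here and are not the implementation of [168].

```python
import numpy as np


def project_onto_generator(x, generator, grad_fn, latent_dim,
                           step_size, steps=200, restarts=3, seed=0):
    """Search for z minimizing ||G(z) - x||_2^2 by gradient descent from
    several random starting points; return the best G(z) and its z."""
    rng = np.random.default_rng(seed)
    best_err, best_z = np.inf, None
    for _ in range(restarts):
        z = rng.standard_normal(latent_dim)
        for _ in range(steps):
            z = z - step_size * grad_fn(z, x)
        err = float(np.sum((generator(z) - x) ** 2))
        if err < best_err:
            best_err, best_z = err, z
    return generator(best_z), best_z


# Toy stand-in for a trained generator: a fixed linear map G(z) = W z.
rng = np.random.default_rng(42)
Q, _ = np.linalg.qr(rng.standard_normal((8, 2)))  # 8 x 2 with orthonormal columns
W = Q * np.array([2.0, 1.0])                       # scale the two columns


def generator(z):
    return W @ z


def grad_fn(z, x):
    # Gradient of ||W z - x||^2 with respect to z.
    return 2.0 * W.T @ (W @ z - x)


clean = generator(np.array([0.5, -1.0]))          # a point the generator can produce
perturbed = clean + 0.1 * rng.standard_normal(8)  # hypothetical adversarial perturbation
step = 0.5 / np.linalg.norm(W, 2) ** 2            # safe step size for this quadratic
x_hat, z_hat = project_onto_generator(perturbed, generator, grad_fn, 2, step)
print(np.linalg.norm(perturbed - clean), np.linalg.norm(x_hat - clean))
```

In this toy setting the projection removes the part of the perturbation that lies outside the range of the generator, so the reconstructed input is at least as close to the clean sample as the perturbed one. With a nonlinear generator the search is non-convex, which is why several random starting points are used.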

In [10], the effects of a GAN-based spoofing attack that generates synthetic wireless signals that cannot be statistically distinguished from intended transmissions are studied. The spoofing attack can be used for various adversarial purposes such as emulating PUs in cognitive radio networks and fooling signal authentication systems to intrude protected wireless networks. The adversary is modeled as a transmitter-receiver pair that builds the generator and the discriminator of a GAN, respectively. The adversary transmitter trains a deep neural network to generate the best spoofing signals and fool the best defense trained as another deep neural network at the adversary receiver. Thus, the generator and the discriminator of the GAN are at different locations, collaborating over the air such that the GAN-generated signals cannot be reliably discriminated from intended signals. The adversary and the defender may have multiple transmitter or receiver antennas. Spoofing is accomplished by jointly capturing waveform, channel, and radio hardware effects inherent to wireless signals under attack. It is demonstrated in [10] that a GAN-based spoofing attack can be successfully performed, and its performance is compared with a random signal attack and a replay attack. An important observation is that as the attacker’s transmitter gets closer to the legitimate transmitter, the likelihood that the attacker generates high-fidelity synthetic signals for the spoofing attack increases. In summary, the success probability of the GAN-based spoofing attack is very high. This holds for different network topologies and when node locations change from training to test time. This probability improves further when multiple antennas are used at the transmitter.

It is possible to devise defensive strategies against such attacks. A simple strategy is to change the idle and busy labels, so that the legitimate transmitter may not transmit if it senses the channel as idle, or it may transmit even if the channel is sensed as busy [134, 7], assuming that the unobserved transmitter is not a PU. This can be done over a subset of the transmit opportunities. For example, by introducing 10% of false labels, the legitimate transmitter, as the defender, can increase the attacker’s misdetection rate from 3.95% to 20.79% and its false alarm rate from 18.10% to 25.16%. The results for a single-input single-output (SISO) scenario from [134] are shown in Table 6. Clearly, this simple algorithm can result in a significant improvement.

| # of defense operations / # of all samples | Attacker misdetection | Attacker false alarm | Transmitter throughput | Transmitter success ratio |
|---|---|---|---|---|
| 0% (no defense) | 3.95% | 18.10% | 0.012 | 2.91% |
| 10% | 20.79% | 25.16% | 0.074 | 17.13% |
| 20% | 33.88% | 40.69% | 0.124 | 28.84% |
| 30% | 40.09% | 44.79% | 0.170 | 35.42% |
| 40% | 45.18% | 43.75% | 0.206 | 41.53% |
| 50% | 41.63% | 45.10% | 0.204 | 39.23% |
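The label-flipping defense described above can be sketched as follows. This is a minimal illustration and not the algorithm of [134, 7]: it assumes that the defender picks the transmit opportunities on which to act against its own sensing result uniformly at random, and the function name `apply_label_flip_defense` is hypothetical.

```python
import numpy as np

def apply_label_flip_defense(sensed_busy, defense_fraction, rng):
    """Decide when to transmit, acting against the sensing result on a
    randomly chosen fraction of the transmit opportunities (assumption)."""
    sensed_busy = np.asarray(sensed_busy, dtype=bool)
    n_flip = int(round(defense_fraction * sensed_busy.size))
    flip_idx = rng.choice(sensed_busy.size, size=n_flip, replace=False)
    # Without defense, the transmitter transmits exactly when the channel is idle.
    transmit = ~sensed_busy
    # Defense: stay silent on some idle slots and transmit on some busy slots.
    transmit[flip_idx] = ~transmit[flip_idx]
    return transmit, np.sort(flip_idx)

rng = np.random.default_rng(0)
sensed_busy = rng.random(100) < 0.5      # hypothetical sensing results
transmit, flipped = apply_label_flip_defense(sensed_busy, 0.10, rng)
print(len(flipped), "of", sensed_busy.size, "decisions flipped")
```

An attacker that learns from the observed transmit behavior then receives misleading labels on the flipped opportunities, at the cost of some wasted or collided transmissions by the defender.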

It is possible to extend this work to the case where NACKs, in addition to ACKs, are employed. ACKs are only positive acknowledgements; their absence does not reveal whether there was a transmission. NACKs state that there was a transmission but that it was not received correctly, possibly due to a collision, but also potentially due to other effects such as fading. This increases the state space of the learning algorithm and may improve the performance of the attacker. A potential direction is to investigate whether there is a simple algorithm, similar to the one above, that provides a defense against such attacks.

For the case of cooperative spectrum sensing, a recent study makes adversarial use of ML to construct a surrogate of the fusion center’s decision model [171]. The authors then propose an algorithm to create malicious sensing data and show that this type of attack is very effective. Reference [171] shows, via experiments, that the attack can achieve a success ratio of up to 82% against existing defenses while manipulating only a small number of malicious nodes. Then, a mechanism referred to as an influence-limiting policy is introduced, which reduces the disruption ratio of the attacks introduced in [171] by up to 80%. An important observation that can be made based on this work is that, as in many security problems, specific types of attacks require specific types of solutions. It is possible to pursue attacks similar to those in [171] and attempt to develop relevant solutions. However, it should be emphasized that a key aspect of any defense against a particular attack is first detecting that an attack has occurred, which is exactly the anomaly detection problem discussed above. Strong anomaly detection is the first step in mitigating attacks in spectrum sensing problems, both cooperative and noncooperative. To that end, it is worthwhile to investigate the effectiveness of the GAN-based anomaly detection techniques discussed in Sec. 2.1 against a number of different attacks such as those described above.

While many conventional countermeasures against security attacks in cognitive radio networks exist [172], there is very limited published work on ML techniques for this purpose [173]. Thus, this is a potentially very fruitful research area.

The most important lesson learned from this subsection is that GANs can be used both to launch security attacks and as a mechanism for defense against them. We have discussed seven works from the literature towards both ends.

| Reference | Application | Comment |
|---|---|---|
| [8] | Spectrum Sharing | Spectrum sensing with domain adaptation and data augmentation. |
| [80] | Spectrum Sharing | AMC solution via modified CGAN and semi-supervised AC-GAN. |
| [103] | Anomaly Detection | Use of BiGAN and PCA for anomaly detection. Comparisons in Table 3. |
| [114] | Anomaly Detection | Unsupervised spectrum anomaly detection via adversarial autoencoder. |
| [120] | Anomaly Detection | For mmWave. Comparison of VAE, CGAN, AC-GAN in Tables 4-5. |
| [123] | Anomaly Detection | Modulation classification via CNN: Idle, in- or out-network, jammer. |
| [25] | Security | Create and then classify fake transmitters via GAN. 99.9% accurate. |
| [9] | Security | Spoofing with a GAN whose generator and discriminator are not colocated. High success rate. |
| [26] | Security | Intrusion detection with improved attack or defense by GANs. |
| [27] | Security | Three-way GAN among a jammer and two UAVs trying to communicate. |
| [168] | Security | Defense-GAN (Fig. 7). Effective against both black- and white-box attacks. |
| [10] | Security | Effects of GAN-based spoofing to generate synthetic signals like real ones. |
| [7] | Security | Strategy based on changing labels against GAN-based spoofing attacks. Table 6. |

4 Simulation Results

The problem of outlier detection in signal classification is a critically challenging one for NextG networks. Signal classification is needed for various NextG applications, including user equipment (UE) identification, spectrum sharing and coexistence (as in the CBRS band), and jammer (interference) detection. The signal classification problem becomes even more challenging when there is no data about unknown waveforms, which can be considered as outliers. In this case, outlier detection algorithms are generally trained using only inlier waveforms and tested with both inliers and outliers. Recent studies have shown that GANs can help solve the outlier detection problem. AnoGAN has been proposed as an unsupervised anomaly detection algorithm that calculates an anomaly score [35]. Despite its good performance and its relaxed assumption that labeled data are not required, it suffers from instabilities in GAN training. In addition, AnoGAN is an iterative algorithm, which hinders its real-time application. Fast AnoGAN (f-AnoGAN) extends AnoGAN by using a more stable GAN architecture, WGAN, and it does not require iterations (only one forward pass) [174]. f-AnoGAN uses an encoder to map an input sample to the latent space of the generator, and the resulting latent features are input to the generator to reconstruct the sample. The anomaly score is calculated as the mean squared error (MSE) between the input sample and its reconstruction, plus the MSE between the discriminator features of the original signal and those of the reconstructed signal, weighted by a scaling factor.
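The anomaly score described above can be sketched in a few lines. This is an illustration under stated assumptions: `encoder`, `generator`, and `disc_features` are hypothetical callables standing in for the trained networks, and the name `kappa` for the scaling factor is a label chosen here, not a symbol recovered from the text.

```python
import numpy as np

def anomaly_score(x, encoder, generator, disc_features, kappa=1.0):
    """MSE(x, G(E(x))) + kappa * MSE(f(x), f(G(E(x)))), with f the
    discriminator feature map. All three callables are placeholders."""
    x = np.asarray(x, dtype=float)
    x_hat = generator(encoder(x))                      # single forward pass
    residual = np.mean((x - x_hat) ** 2)               # sample-space error
    feat_residual = np.mean((disc_features(x) - disc_features(x_hat)) ** 2)
    return float(residual + kappa * feat_residual)

# Toy stand-ins: the "encoder" keeps two coordinates, the "generator" pads
# them back with zeros, and the "feature map" simply scales its input.
toy_encoder = lambda v: v[:2]
toy_generator = lambda z: np.concatenate([z, np.zeros(2)])
toy_features = lambda v: 2.0 * v

inlier = np.array([1.0, 2.0, 0.0, 0.0])    # reconstructed exactly
outlier = np.array([1.0, 2.0, 3.0, 0.0])   # third coordinate is lost
print(anomaly_score(inlier, toy_encoder, toy_generator, toy_features, 0.5))
print(anomaly_score(outlier, toy_encoder, toy_generator, toy_features, 0.5))
```

Samples that the encoder-generator pair reconstructs well receive a low score, whereas samples outside the learned distribution receive a higher one, and no iterative search over the latent space is needed.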

This approach can be applied to anomaly detection in RF spectrum data. As an initial result, we used the RFMLS 2016a dataset [124], which includes eleven different modulations collected over a wide SNR range between -20 dB and 18 dB. The f-AnoGAN algorithm is trained on only one (known) modulation and evaluated using all eleven modulations. We expect the model to label samples from the known (trained) modulation as inliers and samples from all others as outliers. In contrast to [33], which uses WGAN, we used WGAN-GP, which is known to be more stable. The f-AnoGAN model outputs an anomaly score per sample, from which the AUROC values are calculated.
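As a brief illustration of how per-sample anomaly scores translate into an AUROC (area under the receiver operating characteristic curve) value, the sketch below uses the rank-based interpretation: the AUROC equals the probability that a randomly chosen outlier receives a higher score than a randomly chosen inlier, with ties counted as one half. The function name `auroc` and the toy scores are illustrative and are not part of the reported experiments.

```python
import numpy as np

def auroc(scores, is_outlier):
    """AUROC via pairwise comparison of outlier and inlier scores."""
    scores = np.asarray(scores, dtype=float)
    is_outlier = np.asarray(is_outlier, dtype=bool)
    pos = scores[is_outlier]        # scores of true outliers
    neg = scores[~is_outlier]       # scores of true inliers
    greater = np.sum(pos[:, None] > neg[None, :])
    ties = np.sum(pos[:, None] == neg[None, :])
    return float((greater + 0.5 * ties) / (pos.size * neg.size))

labels = [0, 0, 1, 1]                            # 1 marks an outlier
print(auroc([0.1, 0.2, 0.8, 0.9], labels))       # outliers always score higher
print(auroc([0.1, 0.8, 0.2, 0.9], labels))       # one inlier outranks an outlier
```

A value of 0.5 corresponds to scores that carry no information about the label, and a value below 0.5 means that outliers tend to receive lower scores than inliers.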

In our evaluations, the f-AnoGAN model is trained in three steps:

1. First, we trained the WGAN-GP, i.e., the generator and the discriminator.
2. Second, we trained the encoder (architecture presented in Table 11 in the Appendix).
3. Finally, we evaluated the performance of the anomaly detection using the anomaly score.

This three-step procedure closely follows the f-AnoGAN implementation of [174] (https://github.com/tSchlegl/f-AnoGAN).

In evaluating the fidelity and diversity of the generative models, we compared the use of five different measures [53]: i) sum of recall and precision (S-RP), ii) harmonic mean of recall and precision (H-RP), iii) sum of density and coverage (S-DC), iv) harmonic mean of density and coverage (H-DC), and v) Jensen-Shannon distance (JSD) [175]. During GAN training, the selected measure is evaluated every 10 epochs, and the model is saved if the measure has improved. For the JSD metric, a lower value indicates a better model, whereas for the remaining four measures, a higher value indicates a better model. We used 500 epochs for each of the GAN and encoder training steps. For all three networks, we used the Adam optimizer with a learning rate of 0.0002 [176].
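The model-selection rule described above can be sketched as follows. This is a minimal illustration: the helper names (`harmonic_mean`, `has_improved`, `saved_epochs`) and the example values are hypothetical, and the computation of precision, recall, density, coverage, and JSD themselves is not shown.

```python
def harmonic_mean(a, b):
    """Harmonic mean of two non-negative measures (e.g., recall and precision)."""
    return 0.0 if (a + b) == 0 else 2.0 * a * b / (a + b)

# Only the JSD is minimized; the other four measures are maximized.
LOWER_IS_BETTER = {"JSD"}

def has_improved(measure, new_value, best_value):
    """True if new_value is better than best_value for the given measure."""
    if best_value is None:
        return True
    if measure in LOWER_IS_BETTER:
        return new_value < best_value
    return new_value > best_value

def saved_epochs(measure, value_by_epoch, eval_every=10):
    """Epochs at which a checkpoint would be written."""
    best, saved = None, []
    for epoch in sorted(value_by_epoch):
        if epoch % eval_every != 0:
            continue                      # measure is evaluated every 10 epochs
        value = value_by_epoch[epoch]
        if has_improved(measure, value, best):
            best = value
            saved.append(epoch)           # here the model would be saved
    return saved

history = {10: 0.5, 20: 0.4, 30: 0.6}     # hypothetical measure values
print(saved_epochs("H-RP", history))
print(saved_epochs("JSD", history))
```

The same history leads to different checkpoints depending on the measure, which is one way in which the choice of fidelity/diversity measure can affect the final anomaly detection performance.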

As a comparison, we evaluated the performance of a convolutional autoencoder (CAE) system, which is again trained only on the inlier modulation. The CAE architecture is presented in Table 12 in the Appendix. We measured the mean and standard deviation of the reconstruction loss on the training dataset. A test sample is labeled as an outlier if its reconstruction loss is larger than a fixed threshold (i.e., the mean reconstruction loss plus one standard deviation), and as an inlier if its reconstruction loss is smaller than or equal to the threshold. Note that, to have a fair assessment between performance measures and to minimize the effects of network architecture, we have used a CAE model that is very similar to the AE model of the f-AnoGAN.
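The thresholding rule used for the CAE baseline can be sketched as follows. The function names and the toy loss values are illustrative, and the use of the population standard deviation (NumPy's default) is an assumption, since the text does not specify which estimator was used.

```python
import numpy as np

def fit_threshold(train_losses):
    """Threshold = mean training reconstruction loss + one standard deviation."""
    train_losses = np.asarray(train_losses, dtype=float)
    # np.std defaults to the population standard deviation (ddof=0): assumption.
    return float(train_losses.mean() + train_losses.std())

def label_outliers(test_losses, threshold):
    """True marks an outlier (loss strictly above the threshold)."""
    return np.asarray(test_losses, dtype=float) > threshold

threshold = fit_threshold([1.0, 2.0, 3.0])       # toy training losses
print(threshold)
print(label_outliers([2.5, 2.9], threshold))
```

Samples whose loss equals the threshold are labeled as inliers, which matches the "smaller than or equal to" rule stated above.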

Table 8 presents the AUROC values for the anomaly detection problem employing different modulations. We observe that the performance of the anomaly detection model depends significantly on the trained (inlier) modulation in both the GAN-based and CAE-based approaches. There are several reasons for this. First, unlike image datasets, this dataset (like many other RF datasets) includes the effects of channel, noise, and hardware impairments, which make the classification problem fundamentally challenging. Second, some modulations in this dataset are subsets of each other in the constellation plot. For example, the AM-SSB modulation is a subset of the AM-DSB modulation. In comparing the results in Table 8, we see that the H-RP, JSD, and S-RP measures perform well across different modulations, whereas the S-DC and H-DC measures do not provide good performance. We observe that anomalies can be precisely detected (achieving an AUROC score of more than 0.90) when f-AnoGAN models are trained on modulations such as AM-DSB, CPFSK, GFSK, and WBFM using any of the S-RP, H-RP, and JSD measures. For these modulations, the f-AnoGAN method outperforms the CAE method. However, modulations such as AM-SSB and QAM16 present challenging problems as they are very similar to other classes (AM-DSB and QAM64, respectively). For these modulations, the f-AnoGAN method fails to reliably distinguish the anomalies from inliers, while the CAE model performs only as well as a random classifier.

| Mod. | S-RP | H-RP | S-DC | H-DC | JSD | CAE |
|---|---|---|---|---|---|---|
| AM-DSB | 0.963 | 0.964 | 0.962 | 0.965 | 0.968 | 0.899 |
| AM-SSB | 0.088 | 0.088 | 0.088 | 0.088 | 0.273 | 0.502 |
| BPSK | 0.797 | 0.777 | 0.349 | 0.716 | 0.439 | 0.529 |
| 8PSK | 0.558 | 0.597 | 0.540 | 0.540 | 0.636 | 0.528 |
| CPFSK | 0.968 | 0.970 | 0.853 | 0.732 | 0.939 | 0.533 |
| GFSK | 0.983 | 0.980 | 0.821 | 0.816 | 0.917 | 0.561 |
| PAM4 | 0.692 | 0.728 | 0.041 | 0.707 | 0.731 | 0.587 |
| QAM16 | 0.419 | 0.424 | 0.300 | 0.300 | 0.447 | 0.600 |
| QAM64 | 0.549 | 0.624 | 0.285 | 0.285 | 0.545 | 0.597 |
| QPSK | 0.664 | 0.678 | 0.547 | 0.547 | 0.559 | 0.530 |
| WBFM | 0.937 | 0.938 | 0.934 | 0.933 | 0.939 | 0.824 |
| Average | 0.693 | 0.706 | 0.520 | 0.603 | 0.672 | 0.608 |

Next, we discuss some implementation details. For the WGAN-GP training, we tuned two important parameters. The first is the number of discriminator (critic) iterations per generator iteration. We tested 1, 3, and 5 iterations and found that updating the generator once every 3 discriminator updates provided the best results. The second is the gradient penalty coefficient. We tested several values of this coefficient and found that it had a profound effect on the performance, which emphasizes the importance of the gradient penalty. For the f-AnoGAN model, the scaling factor in the anomaly score is a parameter that can be tuned. We tested several values and selected the one that provided the best results. Finally, as the f-AnoGAN approach requires only one forward pass and does not need any iterations to score a sample, we can obtain the average inference time on hardware, which is an important measure for embedded implementation. Note that this is in contrast to iterative algorithms such as AnoGAN, which require setting parameters such as the maximum number of iterations to obtain precise timing measures. To this end, we measured the average end-to-end inference time on hardware, which includes the forward pass of the input sample through the encoder, generator, and discriminator networks and the calculation of the respective losses to obtain the anomaly score. The results were obtained on a system with an NVIDIA GeForce RTX 3060 GPU. The average inference time is 0.005784 seconds, which enables us to process 172.9 samples per second without any parallel processing.
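The relation between the average inference time and the throughput quoted above can be checked with a short sketch. The helper names are hypothetical, and `infer` stands for any per-sample scoring callable. The timing loop is a CPU-side illustration only; for GPU inference the device would typically have to be synchronized before reading the clock, which is not shown here.

```python
import time

def average_inference_time(infer, samples):
    """Average wall-clock seconds per sample, processing one sample at a time."""
    start = time.perf_counter()
    for sample in samples:
        infer(sample)                     # one forward pass per sample
    elapsed = time.perf_counter() - start
    return elapsed / len(samples)

def throughput(avg_seconds_per_sample):
    """Samples per second for sequential processing without parallelism."""
    return 1.0 / avg_seconds_per_sample

# Reported average inference time of 0.005784 s per sample:
print(round(throughput(0.005784), 1))     # → 172.9

# Timing a placeholder scoring function on placeholder inputs:
avg = average_inference_time(lambda s: sum(s), [[0.0] * 256] * 1000)
print(avg)
```

Because each sample requires a fixed amount of computation, the average measured this way does not depend on a stopping criterion, unlike for iterative methods.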

In concluding this section, we would like to emphasize that our simulations should be considered only as preliminary results. Reaching more in-depth conclusions will require substantially more work by the research community.

5 Future Research Directions for GANs in NextG Communication Systems