Machine Learning for Detecting Data Exfiltration: A Review

Abstract

Context: Research at the intersection of cybersecurity, Machine Learning (ML), and Software Engineering (SE) has recently taken significant steps in proposing countermeasures for detecting sophisticated data exfiltration attacks. It is important to systematically review and synthesize the ML-based data exfiltration countermeasures for building a body of knowledge on this important topic. Objective: This paper aims at systematically reviewing ML-based data exfiltration countermeasures to identify and classify ML approaches, feature engineering techniques, evaluation datasets, and performance metrics used for these countermeasures. This review also aims at identifying gaps in research on ML-based data exfiltration countermeasures. Method: We used Systematic Literature Review (SLR) method to select and review 92 papers. Results: The review has enabled us to (a) classify the ML approaches used in the countermeasures into data-driven, and behaviour-driven approaches, (b) categorize features into six types: behavioral, content-based, statistical, syntactical, spatial and temporal, (c) classify the evaluation datasets into simulated, synthesized, and real datasets and (d) identify 11 performance measures used by these studies. Conclusion: We conclude that: (i) the integration of data-driven and behaviour-driven approaches should be explored; (ii) There is a need of developing high quality and large size evaluation datasets; (iii) Incremental ML model training should be incorporated in countermeasures; (iv) resilience to adversarial learning should be considered and explored during the development of countermeasures to avoid poisoning attacks; and (v) the use of automated feature engineering should be encouraged for efficiently detecting data exfiltration attacks.

Index Terms:

Data exfiltration, Data leakage, Data breach, Advanced persistent threat, Machine learningI Introduction

Data exfiltration is the process of retrieving, copying, and transferring an individual’s or company’s sensitive data from a computer, a server, or another device without authorization [1]. It is also called data theft, data extrusion, and data leakage. Data exfiltration is either achieved through physical means or network. In physical means, an attacker has physical access to a computer from where an attacker exfiltrates data through various means such as copying to a USB device or emailing to a specific address. In network-based exfiltration, an attacker uses cyber techniques such as DNS tunneling or phishing email to exfiltrate data. For instance, a recent survey shows that hackers often exploit the DNS port 53, if left open, to exfiltrate data [2]. The typical targets of data exfiltration include usernames, passwords, strategic decisions, security numbers, and cryptographic keys [3, 4] leading to data breaches. These breaches have been on the rise with 779 in 2015 to 1632 in 2017 [5]. In 2018, incidents of data exfiltration were reported out of which were successful [6]. The victims included tech giants like Google, Facebook, and Tesla [5]. Hackers successfully exploited a coding vulnerability to expose the accounts of 50 million Facebook users. Similarly, a security bug was exploited to breach data of around 53 million users of Google+.

Data exfiltration can be carried out by both external and internal actors of an organization. According to Verizon report [7] published in 2019, external and internal actors were responsible for 69% and 34% of data exfiltration incidents respectively. For the last few years, the actors carrying out data exfiltration have transformed from individuals to organized groups at times even supported by a nation-state with high budget and resources. For example, the data exfiltration incidents affiliated with nation-state actors has increased from 12% in 2018 to 23% in 2019 [6, 7].

Data exfiltration affects individuals, private firms, and government organizations, costing around $3.86 million per incident on average [8]. It can also profoundly impact the reputation of an enterprise. Moreover, losing critical data (e.g., sales opportunities and new products’ data) to a rival company can become a threat to the survival of a company. Since incidents related to nation-state actors supported with high budget and resources have been increasing (e.g., from 12% in 2018 to 23% in 2019 [6, 7]), it can also reveal national security secrets. or example, the Shadow network attack conducted over eight months targeted confidential, secret, and restricted data in the Indian Defense Ministry [9]. Hence, detecting these attacks is essential.

Traditionally, signature-based solutions (such as firewalls and intrusion detection systems) are employed for detecting data exfiltration. A signature-based system uses predefined attack signatures (rules) to detect data exfiltration attacks. For example, rules are defined that stop any outgoing network traffic that contains predefined security numbers or credit card numbers. Although these systems are accurate for detecting known data exfiltration attacks, they fail to detect novel (zero-day) attacks. For instance, if a rule is defined to restrict the movement of data containing a sensitive project’s name, then it will only restrict the exfiltration of data containing a specific project’s name. However, it will fail to detect the data leakage if an attacker leaks the information of the project without/concealing the name of the project. Hence, detecting data exfiltration can become a daunting task.

Detecting data exfiltration can be extremely challenging during sophisticated attacks. It is because

-

1.

The network traffic carrying data exfiltration information resembles normal network traffic. For example, hackers exploit existing tools (e.g., Remote Access Tools (RAT)) available in the environment. Hence, the security analytics not only need to monitor the access attempts but also the intent behind the access that is very much similar to usual business activity.

-

2.

The network traffic that is an excellent source for security analytics is mainly encrypted, making it hard to detect data exfiltration. For example, 32% of data exfiltration uses data encryption techniques [10].

-

3.

Internal trusted employees of an organization can also act as actors for Data exfiltration. SunTrust Bank Data Breach [11] and Tesla Insider Saboteur [12] are the two recent examples of insider attacks where trusted employees exfiltered personal data of millions of users. Whilst detecting data exfiltration from external hackers is challenging, monitoring and detecting the activities of an internal employee is even more challenging.

Given the frequency and severity of data exfiltration incidents, researchers and industry have been investing significant amount of resources in developing and deploying countermeasures systems that collect and analyze outgoing network traffic for detecting, preventing, and investigating data exfiltration [1]. Unlike traditional cybersecurity systems, these systems can analyze both the content (e.g., names and passwords) and the context (e.g. metadata such as source and destination addresses) of the data. ’at rest’ (e.g., data being leaked from a database), ’in motion’ (e.g., data being leaked while it is moving through a network) and ’in use’ (e.g., data being leaked while it is under processing in a computational server).

Furthermore, they detect the exfiltration of data available in different states – in rest (e.g., data being leaked from a database), in motion (e.g., data being leaked while it is moving through a network), and in use (e.g., data being leaked while it is under processing in a computational server). The deployment of data exfiltration countermeasures also varies with respect to the three states of data. For ’at rest’ data, the countermeasures are deployed on data hosting server and aims to protect known data by restricting access to the data; for data ’in motion’, they are deployed on the exit point of an organizational network; similarly, to protect ’in use’ data, they are installed on the data processing node to restrict access to the application that uses the confidential data, block copy and pasting of the data to CDs and USBs, and even block the printing of the data.

Based on the attack detection criteria, there are two main types of data exfiltration countermeasure systems: signature-based and Machine Learning (ML) based data exfiltration countermeasure [3]. Given these challenges, several Machine Learning (ML) based data exfiltration countermeasures have been proposed. ML-based systems are trained to learn the difference between benign and malicious outgoing network traffic (classification) and detect data exfiltration using the learnt model (prediction). They can also be trained to learn the normal patterns of outgoing network traffic and detect an attack based on deviation from the pattern (anomaly-based detection). To illustrate this mechanism, let’s suppose an organization’s normal behavior is to attach files of 1 MB -10 MB with outgoing emails. An ML-based system can learn this pattern during training. Now if an employee of an organization tries to send an email with a 20 MB file attachment, the system can detect an anomaly and flag an alert to the security operator of the organization. Depending on the organizational policy, the security operator can either stop or approve the transmission of the file. As such, they can detect previously unseen attacks and can be customized for different resources (e.g., hardware and software). Attacks detected through ML-based data exfiltration countermeasures can also be used to update signatures for signature-based data exfiltration countermeasures.

Over the last years, research communities have shown significant interest in ML-based data exfiltration countermeasures. However, a systematic review of the growing body of literature in this area is still lacking. This study aims to fulfil this gap and synthesize the current-state-of-the-art of these countermeasures to identify their limitations and the areas of further research opportunities.

We have conducted a Systematic Literature Review (SLR) of ML-based data exfiltration countermeasures. Based on an SLR of 92 papers, this article provides an in-depth understanding of ML based data exfiltration countermeasures. Furthermore, this review also identifies the datasets and the evaluation metrics used for evaluating ML-based data exfiltration countermeasures. The findings of this SLR purports to serve as guidelines for practitioners to become aware of commonly used and better performing ML models and feature engineering techniques. For researchers, this SLR identifies several areas for future research. The identified datasets and metrics can serve as a guide to evaluate existing and new ML-based data exfiltration countermeasures. This review makes the following contributions:

-

•

Development of a taxonomy of ML-based data exfiltration countermeasures.

-

•

Classification of feature engineering techniques used in these countermeasures.

-

•

Categorization of datasets used for evaluating the countermeasures.

-

•

Analytical review of evaluation metrics used for evaluating the countermeasures.

-

•

Mapping of ML models, feature engineering techniques, evaluation datasets, and performance metrics.

-

•

List of open issues to stimulate future research in this field.

Significance

The findings of our review are beneficial for both researchers and practitioners. For researchers, our review highlights several research gaps for future research. The taxonomies of ML approaches and feature engineering techniques can be utilized for positioning the existing and future research in this area. The identification of the most widely used evaluation datasets and performance metrics can serve as a guide for researchers to evaluate the ML-based data exfiltration countermeasures. Practitioners can use the findings to select suitable ML approaches and feature engineering techniques according to their requirements. The quantitative analysis of ML approaches, feature engineering techniques, evaluation datasets, and performance metrics guide practitioners on the most common industry-relevant practices.

The rest of this paper is organized as follows. Section II reports the background and the related work to position the novelty of this paper. Section III presents the research method used for conducting this SLR. Section IV presents our findings followed by Section V that reflects on the findings to recommend best practices and highlight the areas for future research. Section VI outlines the threats to the validity of our findings. Finally, Section VII concludes the paper.

II Foundation

This section presents the background of data exfiltration and Machine Learning (ML). It also provides a detailed comparison of our SLR with the related literature reviews.

II-A Background

This section briefly explains the Data Exfiltration Life Cycle (DELC) and ML workflow in order to contextualize the main motivators and findings of this SLR.

II-A1 Data Exfiltration Life Cycle (DELC)

According to several studies [1, 13, 14, 15], Data Exfiltration Life Cycle (DELC), also known as cyber kill-chain [16], has seven common stages. The first stage is Reconnassance; an attacker collects information about a target individual or organization system to identify the vulnerabilities in their system. After that, an attacker pairs the remote access malware or construct a phishing email in the Weaponization stage. Rest of the stages use various targeted attack vectors [1, 13, 17] to exfiltrate data from a victim. Fig 1 shows these stages and their mapping with attack vectors. The weapon created in the Weaponization stage such as an email or USB, is then delivered to a victim in the Delivery stage. In this stage, an attacker uses different attack vectors to steal information from a target. These include: Phishing [18], Cross Site Scripting (XSS) and SQL Injection attacks. In Phishing, an attacker uses social engineering techniques to convince a target that the weapon (email or social media message) comes from an official trusted source and fool naive users to give away their sensitive information or download malware to their systems. In XSS, an attacker injects malicious code in the trusted source which is executed on a user browser to steal information. SQL Injection is an attack in which an attacker injects SQL statements in an input string to steal information from the backend filesystem such as database. Delivery stage can result in either stealing the user information directly or downloading malicious software on a victims system. In Exploitation stage, the downloaded malware Root Access Toolkit (RAT) [19] is executed. The malware gains the root access and establishes a Command and Control Channel (C&C) with an attacker server. Through C&C, an attacker can control and exfiltrate data from a victim system remotely. C&C channels use Malicious domains [20] to connect to an attacker server. Different Overt channels [21, 13] such as HTTP post or Peer-to-Peer communication are used to steal data from a victim in C&C stage. Exploration stage often follows C&C stage in which an attacker uses remote access to perform lateral movement [22] and privilege escalation [23] attacks that aid an attacker to move deeper into the target organization cyberinfrastructure in search of sensitive and confidential information. The last stage is Concealment; in this stage, an attacker uses hidden channels to exfiltrate data and avoid detection. The attack vectors Data Tunneling, Side Channel, Timing Channel and Stenography represent this stage. Data Tunneling attacks use unconventional (that are not developed for sending information) channels such as Domain Name Server (DNS) to steal information from a victim, while in Side Channel [24], and Timing channel [25] an attacker gains information from physical parameters of a system such as cache, memory or CPU and time for executing a program to steal information from a victim respectively. Lastly, in Stenography attack, an attacker hides a victim’s data in images, audio or video to avoid detection. Two attack vectors: Insider attack and Advanced Persistent Attack (APT) uses multiple stages of DELC to exfiltrate data. In Insider attack, an insider (company employee or partner) leaks the sensitive information for personal gains. APT attacks are sophisticated attacks in which an attacker persists for a long time within a victim system and uses all the stages of DELC to exfiltrate data.

II-A2 Machine Learning Life Cycle

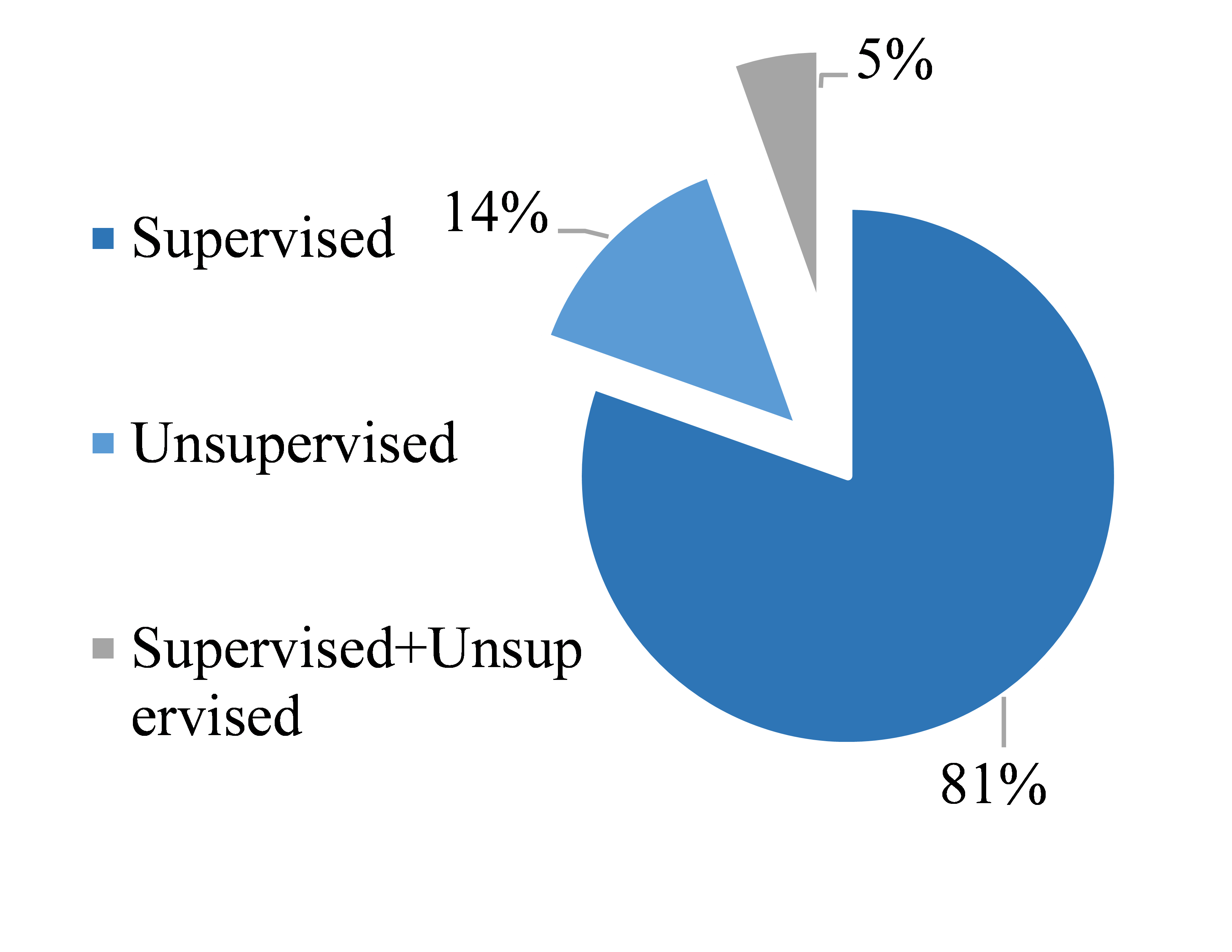

Fig 2 shows the Machine Learning Life Cycle. Each stage is briefly discussed below (refer to [26] for details). Data collection: In this phase, a dataset composed of historical data is acquired from different sources such as simulation tools, data provider companies, and the Internet to solve a problem (such as classifying malicious versus legitimate software). Data cleaning [20] is performed after data collection to ensure the quality of data and to remove noise and outliers. Data cleaning is also called pre-processing. Feature Engineering: This phase purports to extract useful patterns from data in order to segregate different classes (such as malicious versus legitimate) by using manual or automated approaches [27]. In Feature selection, various techniques (e.g., information gain, Chisquare test [28]) are applied to choose the best discriminant features from an initial set of features. Modelling: This phase has four main elements. Modelling technique: There are two main types of modelling techniques: anomaly detection and classification [29]; Each modelling technique uses various classifiers (learning algorithms) that learns a threshold or decision boundary to discriminate two or more than two classes. Anomaly-based detection learns the normal distribution of data and detects the deviation as an anomaly or an attack. The common anomaly classifiers include: K-mean [30] (Kmean), One Class SVM [31] (OCSVM), Gaussian Mixture Model [32] (GMM) and Hidden Markov Model [33] (HMM). Classification algorithms learns data distribution of both malicious and benign to model a decision boundary that segregates two or more than two classes. ML classifiers can be categorized into two main types: traditional ML classifiers and deep learning classifiers.The common Traditional ML classifiers include: Support Vector Machines [34] (SVM), Naïve Bayes [35] (NB), K-Nearest Neighbor [36] (KNN), Decision Trees [37] (DT) and Logistic Regression [38] (LR). Most popular traditional ensemble classifiers (combination of two or more classifiers) include Random Forest Trees [39] (RFT), Gradient Boosting (GB) and Ada-boost [40] classifiers. The Deep Learning (DL) classifiers include Neural Network [41] (NN), NN with Multi-layered Perception [42] (MLP), Convolutional NN [43] (CNN), Recurrent NN [44] (RNN), Gated RNN [45] (GRU) and Long Short Term Memory [46] (LSTM). In the rest of this paper, we will use these acronyms instead of their full names. Learning type [26] represents how a classifier learns from data. It has four main types: supervised, unsupervised, semi-supervised and re-enforcement learning [47]. The training phase is responsible for building an optimum model (the model resulting in best performance over the validation set) while a validation method is a technique used to divide data into training and validation (unseen testing data) set [48]; and Performance Metrics [26] evaluate how well a model performs. Various measures are employed to evaluate the performance of classifiers. The most popular metrics are Accuracy, Recall, True Positive Rate (TPR) or Sensitivity, False Positive Rate (FPR), True Negative Rate (TNR) or Specificity, False Negative Rate (FNR), Precision, F1-Score, Error-Rate, Area Under the Curve (AUC), Receiver operating characteristic Time to Train, Prediction Time and confusion matrix (please refer to [26, 49] for details of each metric).

II-B Existing Literature Reviews

The literature on data exfiltration has been studied from various perspectives. In [3], the authors identified several challenges (e.g., lots of leakage channels and human factor) in detecting and preventing data exfiltration. They also presented data exfiltration countermeasures. The authors of [4] identified and categorized 37 data exfiltration countermeasures. They provided a detailed discussion on the rationale behind data exfiltration attempts and vulnerabilities that lead to data exfiltration. In [50], the authors discussed the capabilities of data exfiltration countermeasures (e.g., discovering and monitoring how data is stored), techniques employed in countermeasures (e.g., content matching and learning), and various data states such as data in motion, data in rest, and data in use. The authors of [51] primarily focused on the challenges in detecting and preventing data exfiltration. They highlighted encryption, lack of collaborative approach among industry, and weak access controls as the main challenges. They also proposed social network analysis and test clustering as the main approaches for efficiently detecting data exfiltration. In [52], the authors highlighted the channels used for exfiltrating data from cloud environments, the vulnerabilities leading to data exfiltration, and the types of data most vulnerable to exfiltration attacks. The authors of [53] reported different states of data, challenges (e.g., encryption and access control) in detecting data exfiltration, detection approaches (e.g., secure keys), and limitations/future research areas in data exfiltration. In [1], the authors provided a comprehensive review of the data exfiltration caused by external entities (e.g., hackers). They presented the attack vectors for exfiltrating data and classified around 108 data exfiltration countermeasures into detective, investigative, and preventive countermeasures.

II-B1 Comparison with existing literature reviews

Compared to previous reviews, we focus on ML-based data exfiltration countermeasures. The bibliographic details of reviewed papers are given in Appendix LABEL:appendix. Our review included papers, most of which have not been reviewed in previous reviews. The summary of the comparison against previous works is shown in Table I.

| Topic Covered | [3] | [4] | [50] | [51] | [52] | [53] | [1] | Our Survey |

| Attack Vectors and their classification | ||||||||

| Data states with respect to data exfiltration | ||||||||

| Challenges in detecting data exfiltration | ||||||||

| Vulnerabilities leading to data exfiltration | ||||||||

| Channels used for data exfiltration | ||||||||

| ML/DM based classification of countermeasures | ||||||||

| Feature engineering for data exfiltration countermeasures | ||||||||

| Dataset used for evaluating data exfiltration countermeasures | ||||||||

| Metrics used for evaluating data exfiltration countermeasures | ||||||||

| Future Research Challenges |

II-B2 Comparison based on Objectives

All of the previous reviews focus on identifying the challenges (e.g., encryption and human factors) in detecting and preventing data exfiltration. Unlike previous reviews, our review focuses only on data exfiltration countermeasures. Some of the existing reviews [1, 3, 4], highlight data exfiltration countermeasures such as data identification and behavioral analysis. Unlike the previous reviews, our review mainly focuses on ML-based data exfiltration countermeasures.

II-B3 Comparison based on included papers

Our review included papers most of which have not been reviewed by the previous reviews; for example, there were only 3, 4, 1, and 6 papers that have been previously reviewed in [3], [4],[53] and [1] respectively Unlike the previous reviews, we used the Systematic Literature Review (SLR) method guidelines. There are approximately 61 papers published after the latest review (i.e., [1]) on data exfiltration.

II-B4 Comparison based on results

Our literature review covers various aspects of ML-based data exfiltration countermeasures as compared to the previous literature reviews that included a variety of topics such as the data states, challenges, and attack vectors. Whilst all of the previous reviews have identified the areas for future research, e.g., accidental data leakage and fingerprinting, the future research areas presented in our paper are different from others. Similar to our paper, one review [1] has also identified response time as being an important quality measure for data exfiltration countermeasures. However, unlike [1], our paper advocates the need for reporting assessment measures (e.g., training and prediction time) for data exfiltration countermeasures

II-B5 Our novel contributions

In comparison to previous reviews, our paper makes the following unique contributions:

-

•

Presents an in-depth and critical analysis of 92 ML-based data exfiltration countermeasures.

-

•

Provides rigorous analyses of the feature engineering techniques used in ML-based countermeasures.

-

•

Reports and discusses the datasets used for evaluating ML-based data exfiltration countermeasures.

-

•

Highlights the evaluation metrics used for assessing ML-based data exfiltration countermeasures.

-

•

Identifies several distinct research areas for future research in ML-based data exfiltration countermeasures.

II-B6 Research Significance

The findings of our review are beneficial for both researchers and practitioners. For researchers, our review has identified several research gaps for future research. The identification of the most widely used evaluation datasets and performance metrics along with their relative strengths and weaknesses, serve as a guide for researchers to evaluate the ML-based data exfiltration countermeasures. The taxonomies of ML approaches and feature engineering techniques can be used for positioning the existing and future research in this area. The practitioners can use the findings to select suitable ML approaches and feature engineering techniques according to their requirements. The quantitative analysis of ML approaches, feature engineering techniques, evaluation datasets, and performance metrics guide practitioners on what are the most common industry-relevant practices.

III Research Methodology

This section reports the literature review protocol that comprises of defining the research questions, search strategy, inclusion and exclusion conditions, study selection procedure and data extraction and synthesis.

III-A Research Questions and Motivation

This study aims to analyse, assess and summarise the current state-of-the-art Machine Learning countermeasures employed to detect data exfiltration attacks. We achieve this by answering five Research Questions (RQs) summarised in Table II.

| ID | Research Questions | Motivation |

|---|---|---|

| RQ1 | What ML-based countermeasures are used | The purpose is to identify the types of ML countermeasure used for detecting attack |

| to detect data exfiltration? | vector in DELC and recognize their relative strength and limitations. | |

| RQ2 | The goal here is to identify feature-engineering methods, feature selection techniques | |

| What constitutes the feature-engineering | feature type, and number of features used to detect DE attacks. Such an analysis is | |

| stage of these countermeasures? | beneficial for providing insights to practitioners and researcher about what types of | |

| feature engineering should be used in data exfiltration countermeasures. | ||

| RQ3 | Which datasets are used by these | The goal here is to identify the type of dataset used by these solutions to train and |

| countermeasures? | validate the ML models. This knowledge will provide comprehension to practitioners | |

| and researchers on available datasets for ML-based countermeasures. | ||

| RQ4 | Which modelling techniques have been | The motivation behind this is to determine what type of modelling technique, |

| used by these countermeasures? | learning and classifiers are used by ML-based data exfiltration countermeasures. | |

| RQ5 | How these countermeasures are validated | The objective here is to identify how these countermeasures are validated and how |

| and evaluated? | they relatively perform in relevance to an attack vectors and classifier used. |

III-B Search Strategy

We formulated our search strategy for searching the papers based on the guidelines provided in [54]. The strategy was composed of the following two steps:

Search Method

Search String

A set of search keywords were selected based on the research questions. The set was composed of three main parts: data exfiltration, detection, and machine learning. To ensure full coverage of our research objectives, all the identified attack vectors (section II-A1), relevant synonyms used for ”data exfiltration”, ”detection” and ”Machine learning” were incorporated. The search terms were then combined using logical operators (AND and OR) to create a complete search string. The final search string was attained by conducting multiple pilot searches on two databases (IEEE and ACM) until the search string returned the papers already known to us. These two databases were selected because they are considered highly relevant databases for computer science literature [56].

During one of the pilot searches, it was noticed that exfiltration synonym like ”leakage” and ”theft” resulted in too many unrelated papers (mostly related to electricity or power leakage or data mining leakage and theft). To ensure that none of the related papers was missed by the omission of these words, we searched the IEEE and ACM with a pilot search string ”((data AND (theft OR leakage)) AND (”machine learning” OR “data mining ”))”. We inspected all the titles of the returned papers (411 IEEE and 610 ACM), we found none of these papers matched to our inclusion criteria; hence, these terms were dropped in our final search string. Furthermore, for the infinitive verbs in the search string, we used the stem of the word for example tunnelling to tunnel* and stealing to steal* and we found that it did not make any difference in our search results. The complete search string is shown in Fig 3. The quotation marks used in the search string indicate phases (words that appear together).

III-C Data Sources

Six most well-known digital databases [57] were queried based on our search string. These databases include: IEEE, ACM, Wiley, ScienceDirect, Springer Link and Scopus. The search string was only matched with the title, abstract and keywords. The data sources were searched by keeping the time-frame intact, i.e., filter was applied on the year of the publication and only papers from 1st January 2009 to 31st December 2019 were considered. This time-frame was considered because machine learning-based methods for cyber-security and especially data exfiltration gained popularity after 2008 [58, 59].

III-D Inclusion and Exclusion Criteria

The assessment of the identified papers was done based on the inclusion and exclusion conditions. The selection process was steered by using these criteria to a set of ten arbitrarily selected papers. The selection conditions were refined based on the results of the pilot searches. Our inclusion and exclusion criteria are presented in Table III. The papers that provided countermeasures for detecting physical theft or dumpster diving [1] such as hard disk theft, copying data physically or printing data were not considered. Low-quality studies, that were published before 2017 and have a citation count of less than five or H-index of conference or journal is less than ten, were excluded. The three-year margin was considered because both quality metrics (citation count and H-index) are based on the citation and for papers less than three years old it is hard to compute their quality using these measures.

| Criteria | ID | Description |

| Inclusion | I1 | Studies that use ML techniques (e.g., supervised learning or unsupervised learning or anomaly-based detection) |

| to detect any stage or sequence of stages of data exfiltration attack. | ||

| I2 | The studies that are peer-reviewed. | |

| I3 | Studies that report contextual data (i.e., ML model, feature engineering techniques, evaluation datasets, and evaluation metrics). | |

| I4 | Published after 2008 and before 2020. | |

| Exclusion | E1 | Studies that use other than ML techniques (e.g., access control mechanism) to detect any stage of data exfiltration attack. |

| E2 | Studies based on physical attacks (e.g., physical theft or dumpster diving) | |

| E3 | Short papers less than five pages. | |

| E4 | Non-peer reviewed studies such as editorial, keynotes, panel discussions and whitepapers. | |

| E5 | Papers in languages other than English. | |

| E6 | Studies that are published before 2016 and have citation count less than 5, journal, or conference H-index less than 10. |

III-E Selection of Primary Studies

Fig 4 shows the steps we followed to select the primary studies. After initial data-driven search 32678 papers were retrieved i.e., IEEE (3112), ACM (26128), Wiley (325), Science Direct (794), Springer Link (2011) and Scopus (309). After following the complete process and repeatedly applying inclusion and exclusion criteria (Table III), 92 studies were selected for the review. Fig 5 shows the statistical distribution of the selected papers with respect to data sources, publication type, publication year and H-index. It can be seen from Fig 5(b) that ML countermeasures to detect DELC have gained popularity over the last four years, with a significant increase in the number of studies especially in the year 2017 and 2019.

III-F Data Extraction and Synthesis

Data Extraction

The data extraction form was devised to collect data from the reviewed papers. Table IV shows the data extraction form. A pilot study with eight papers was done to judge the comprehensiveness and applicability of the data extraction form. The data extraction form was inspired by [60] and consisted of three parts. Qualitative Data (D1 to D10): For each paper, a short critical summary was written to present the contribution and identify the strengths and weaknesses of each paper. Demographic information was extracted to ensure the quality of the reviewed papers. Context Data (D11 to D21): Context of a paper included the details in terms of the stage of data exfiltration, attack vector the study presented countermeasure for, dataset source, type of features, type of feature engineering methods, validation method, and modelling technique. Quantitative Data (D22-D24): The quantitative data such as the performance measure, dataset size and a number of features reported in each paper were extracted.

Data Synthesis

Each paper was analysed based on three types of data mentioned in Table IV as well as the research questions. The qualitative data (D1-D10) and context-based data (D11 to D21) were examined using thematic analysis [61] while quantitative data were synthesised by using box-plots [62] as inspired by [60, 63].

| Data Type | Id | Data Item | Description |

| Qualitative Data | D1 | Title | The title of the study. |

| D2 | Author | The author(s) of the study. | |

| D3 | Venue | Name of the conference or journal where the paper is published. | |

| D4 | Year Published | Publication year of the paper. | |

| D5 | Publisher | The publisher of the paper. | |

| D6 | Country | The location of the conference. | |

| D7 | Article Type | Publication type i.e., book chapter, conference, journal. | |

| D8 | H-index | Metric to quantify the impact of the publication. | |

| D9 | Citation Count | How many citations the paper has according to google scholar. | |

| D10 | Summary | A brief summary of the paper along with the major strengths and weaknesses | |

| Context (RQ1) | D11 | Stage | Data exfiltration stage the study is targeting (section 2.1.2). |

| D12 | Attack Vector | Data exfiltration attack vector the study is detecting (section II-A1). | |

| Context (RQ2) | D13 | Feature Type | Type of features used by the primary studies. |

| D14 | Feature Engineering Method | Type of feature engineering methods used. | |

| D15 | Feature Selection Method | The feature selection method used in the study. | |

| D16 | Dataset used | The dataset(s) used for the evaluation of the data exfiltration countermeasure. | |

| Context (RQ3) | D17 | Classifier Chosen | Which classifier is chosen after the experimentation by the study (Table 1) |

| D18 | Type of Classifier | What is the type of classifier? (section II-A2). | |

| D19 | Modelling Technique | What type of modelling technique is used (Fig 2). | |

| D20 | Type of Learning | Type of learning used by the study (Fig 2). | |

| D21 | Validation Technique | Type of validation method used by the study. | |

| Quantitative Data | D22 | Number of Features | Total number of features/dimensions used by the study |

| D23 | Dataset Size | Number of instances in the dataset | |

| (RQ2, RQ4) | D24 | Performance Measures | Accuracy, Sensitivity /TPR /Recall,Specificity/ TNR/Selectivity, |

| FPR (%), FNR (%), AUC/ROC,F-measure/F-Score, Precision/Positive | |||

| Predictive Value, Time-to-Train the Model, Prediction-Time, Error-Rate |

IV Results

This section presents the outcomes of our data synthesis based on RQs. Each section reports the result of the analysis performed followed by a summary of the findings.

IV-A RQ1: ML-based Countermeasures for Detecting Data Exfiltration

In this section, we present the classification of the reviewed studies followed by the mapping of the proposed taxonomy based on DELC.

IV-A1 Classification of ML-based Countermeasures

Since machine learning is a data-driven approach [64] and the success of ML-based systems is highly dependent on the type of data analysis performed to extract useful features. We classify the countermeasures presented in the reviewed studies into two main types based on data type and data analysis technique: Data-driven and Behavior-driven approaches.

Data-driven approaches

These approaches examine the content of data irrespective of data source i.e., network, system, or web application. They are further divided into three sub-classes: Direct, Distribution and Context inspection. In Direct Inspection, an analyst directly scans the content of data or consult external sources to extract useful features from data. For example, an analyst may look for lexical patterns like the presence of @ sign or not, count of hyperlinks, age of URL to extract useful features. The Distribution Inspection approach considers data as a distribution and performs different analyses such as statistical or temporal to extract useful features from data. These features cannot be directly obtained from data without additional computation such as average, entropy, standard deviation, and Term-frequency Inverse Document Frequency (TF-IDF). The Context Inspection approach analyses the structure and sequential relationship between multiple bytes, words or parts of speech to extract semantics or context of data. This type of analysis can unveil the hidden semantics and sequential dependencies between data automatically such as identifying words or bytes that appear together (n-grams).

Behavior-driven approaches

These approaches analyze the behavior exhibited by a particular entity such as system, network, or resource to detect data exfiltration. They are further classified into five sub-classes Event-based, Flow-based, Resource-usage based, Propagation-based, and Hybrid approaches. To detect the behavior (malicious or legitimate) of an entity (such as file and user), an Event-based approach approach studies the actions of an entity by analyzing the sequence and semantics of system calls made by them e.g., login event or file deletion event. The relationship between incoming and outgoing network traffic flow is considered by Flow-based approach approach, e.g., ratio of incoming to outgoing payload size or the correlation of DNS request and response packets. Resource usage-based approach approach aims to study the usage behavior of a particular resource, e.g., cache access pattern and CPU usage pattern. Propagation-based approach approach considers multiple stages of data exfiltration across multi-host to detect data exfiltration, e.g., several hosts affected or many hosts behaving similarly. Hybrid approach approach utilizes both event-based and flow-based approaches to detect the behavior of the overall system. Table V shows the classification of the reviewed studies (the numeral enclosed in a bracket depicts the number of papers classified under each category) and their strengths and weaknesses. The primary studies column shows the identification number of the study enlisted in Appendix LABEL:appendix.

|

Type |

Sub Type |

Strengths | Weakness | Primary Studies |

| Data-driven Approaches (44) | Direct Inspection (15) | • Do not require complex calculations to | ||

| obtain features. | • Time-consuming and labour-intensive as it | |||

| • Computationally faster as models are | requires domain knowledge. | |||

| trained over more focused and smaller | • Error-prone as it relies on human expertise. | [S5, S19, S25, S26, | ||

| number of features. | • Cannot extract hidden structural, | S28, S37, S46, S47, | ||

| • Can be implemented on client-side. | distributional, and sequential patterns. | S50, S51, S60, S69, | ||

| • Easy to comprehend the decision boundary | • Higher risk of performance degradation over | S70, S71, S86] | ||

| based on features values. | time due to concept drift and adversarial | |||

| • Flexible to adapt to new problems as not | manipulation. | |||

| dependent on type of data such as network or | ||||

| logs. | ||||

| Distribution Inspection (12) | • Capable of extracting patterns and | |||

| correlations that are not directly visible in | • Requires complex computations. | |||

| data. | • Requires larger amounts of data to be | [S3, S9, S10, S14, | ||

| • More reliable when trained over large | generalizable | S15, S17, S18, S20, | ||

| amounts of data. | • Cannot extract the structural and sequential | S22, S23, S55, S77] | ||

| • Easy to comprehend decision boundary | patterns in data. | |||

| by visualizing features. | ||||

| • Flexible to adapt to new problems. | ||||

| Context Inspection (17) | • Computationally expensive to train due to | |||

| • Capable of comprehending the structural, | complex models. | [S36, S53, S54, S58, | ||

| sequential relationship in data. | • Resource-intensive | S59, S62, S64, S66, | ||

| • Supports automation | • Requires large amounts of data to be | S67, S68, S75, S76, | ||

| • Flexible to adapt to new problems | generalizable. | S78, S82, S87, S88, | ||

| • Hard to comprehend the decision boundary. | S89] | |||

| Behaviour-driven Approaches (48) | Event -based Approach (16) | • Capable of capturing the malicious | • Sensitive to the role of user and type of | |

| activities by monitoring events on a | application e.g., the system interaction of a | [S1, S6, S7, S12, | ||

| host/server. | developer may deviate from manager or system | S13, S31, S35, S48, | ||

| • Capable of detecting insider and malware | administrator behavior. | S49, S61, S72, S73, | ||

| attacks. | • Require domain knowledge to identify critical | S74, S84, S90, S91] | ||

| events. | ||||

| Flow -based Approach (19) | • Capable of extracting correlation between | • Dependent on the window size to detect | [S11, S21, S27, S29, | |

| inbound and outbound traffic. | exfiltration. | S32, S33, S34, S38, | ||

| • Can detect network attacks such as data | • Cannot detect long term data exfiltration as it | S39, S40, S41, S42, | ||

| tunnelling and overt channels | requires large window sizes that is | S43, S44, S65, S80, | ||

| computationally expensive. | S81, S83, S92] | |||

| Resource usage-based Approach (4) | ||||

| • Can detect the misuse of a resource to | • Highly sensitive to the type of application | [S8, S52, S56, S63] | ||

| detect data exfiltration. | i.e., for video streaming program may | |||

| consume more memory and CPU than other | ||||

| legitimate programs. | ||||

| Propagation based Approach (3) | [S2, S16, S24] | |||

| • Capable for detecting data exfiltration as | • Complex and time-consuming | |||

| large scale i.e., enterprise level. | • Hard to visualize and comprehend | |||

| Hybrid Approach (6) | • Capable of detecting long term | • Data collection is time consuming as it | ||

| data exfiltration. | requires monitoring of both host and | |||

| • Capable of detecting complete DELC and | network traffic. | [S4, S30, S45, S57, | ||

| complex attacks like APT. | • Suffers from the limitations of both event and. | S79, S85] | ||

| flow-based approaches |

Although other criteria can be applied for classification, such as supervised versus unsupervised learning, automated versus manual feature engineering, classification versus anomaly-based detection and base-classifier versus ensemble classifier. These types of categorization fail to create semantically coherent, uniform and non-overlapping distribution of the selected primary studies as depicted in Fig 6. Consequently, they limit the opportunity to inspect the state-of-the-art in a more fine-grained level. Furthermore, our proposed classification increases the semantic coherence and uniformity between the multi-stage primary studies as depicted in Table VI. Hence, we conclude that our proposed classification is a suitable choice for analysing ML-based data exfiltration countermeasures.

| Stage | Attack Vector |

Direct Inspection |

Context Inspection |

Distribution Inspection |

Event-based Approach |

Flow-based Approach |

Hybrid Approach |

Propagation based Approach |

Resource usage -based Approach |

Study Ref |

| Delivery(32) | Phishing (24) | 12 | 9 | 2 | 1 | - | - | - | - | [S5, S17, S19, S25, S26, S28, S31, S36, S37, S46, S47, S53, S54, S58, S60, S69, S71, S75, S76,S77, S82, S86, S87, S88] |

| SQL Injection (5) | 1 | 3 | 1 | - | - | - | - | - | [S55, S59, S62, S70, S89] | |

| Cross Site Scripting (3) | 2 | - | - | 1 | - | - | - | - | [S50, S51, S84] | |

| Exploitation(7) | Malware/RAT (7) | - | - | - | 4 | 1 | 1 | 1 | - | [S1, S13, S16, S45, S48, S61, S81] |

| Command & Control(15) | Malicious Domain (5) | - | 3 | - | - | 2 | - | - | - | [S11, S40, S64, S66,S78] |

| Overt Channels (10) | - | - | 1 | 1 | 6 | 2 | - | - | [S22, S39, S41, S42, S43, S44, S49, S57, S83, S85] | |

| Exploration(2) | Lateral Movement (1) | - | - | - | - | - | - | 1 | - | [S24] |

| Privilege Escalation (0) | - | - | - | - | - | - | - | - | ||

| Concealment(21) | Data Tunneling (12) | - | 2 | 3 | - | 7 | - | - | - | [S14, S15, S18, S21, S29, S32, S33, S34, S65, S67, S68, S80] |

| Timing Channel (3) | - | - | 1 | - | 2 | - | - | - | [S23, S27, S92] | |

| Side Channel (3) | - | - | - | - | - | - | - | 3 | [S8, S52, S63] | |

| Steganography (3) | - | - | 3 | - | - | - | - | - | [S3, S9, S20] | |

| Multi-Stage(16) | Insider Attack (11) | - | - | 1 | 8 | - | 1 | - | 1 | [S4, S6, S7, S10, S12, S35, S56, S72, S74, S90, S91] |

| APT (5) | - | - | - | 1 | 1 | 2 | 1 | - | [S2, S30, S38, S73, S79] |

IV-A2 Mapping of proposed taxonomy with respect to DELC

Table VI shows the mapping of the proposed taxonomy with respect to DELC. It can be seen that 48% of the reviewed papers report data-driven approaches. These approaches are most frequently (i.e., 30/48 studies) used in detecting the Delivery stage of DELC. In this stage, they are primarily (i.e., 12/32 and 5/5 studies respectively) employed in detecting Phishing and SQL injection attacks. Furthermore, these approaches are also applied in detecting Concealment stage with 9/21 studies classified under this category. However, they are rarely (i.e., 5/44) used in detecting other stages of DELC.

Among data-driven approaches, Direct inspection approach is only applied to detect the Delivery stage in the reviewed studies, in particularly Phishing (12/24) attacks. One rationale behind it can be the presence of common guidelines identified by domain experts that differentiate normal website from phishing ones [18]. For example, in [S5, S37] examined the set of URLs and identified the presence of “@” symbol as one of the feature with an argument that legitimate URLs don’t use “@” symbol. Subsequently, Context Inspection approach is applied by 17/48 data-driven studies to detect Phishing (9/24), SQL Injection (3/5), Malicious Domain (3/5) and more recently in Data tunneling. For example, the authors in [S67] used bytes to represent the DNS packets and trained CNN using the sequence of bytes as input. This representation captured the full structural and sequential information of the DNS packets to detect DNS tunnels. Distribution Inspection on the other hand is commonly used to detect the Concealment stage (7/21) specifically Steganography (3/3) and Data tunnelling (3/12) attacks. The reason behind it is that both of these attacks use a different type of data encoding or wrapping to evade detection. The normal and encoded data have different probability distributions that are imperceptible in direct or context inspection [65]. For instance, [S20] used inter and intra-block correlation among Discrete Cosine Transform (DCT) of the JPEG coefficient of the image to detect Steganography.

52% of the primary studies are based on Behavior-driven approaches. In contrast to data-driven approaches, these approaches are recurrently used in detecting all the other stages of DELC except Delivery. This suggests that once the data exfiltration attack vector is delivered the analysis of the behavior exhibited by it is more significant than its content analysis. An interesting observation is that these approaches are capable to detect multi-stage and sophisticated attacks like APT (5/5) and Insider threat (10/11). One reason behind it is that they comprehend the behavior of a system in a particular context to create a logical inference that can be extended to manage complex scenarios, e.g., how a user interacts with a system or how many resources are utilized by a program? Among behavior-driven approaches, Event-based approach is most evident in detecting RAT (4/7) and insider threat (8/11) detection. This is because both of these attacks execute critical system events such as plugging a USB device or acquiring root access. The time or sequence of the event execution is leveraged by this approach to detect data exfiltration. For example, [S7] analyses the “logon” event based on working days, working hours, non-working hours, non-working days to detect Insider attack.

Flow-based Approach is notable in detecting data tunnelling (7/12) and Overt channels (6/10) attacks. The reason behind it is both of these attacks are used for exfiltrating data through the network. The only difference is the nature of the channel used. Overt channels transfer data using the conventional network protocols such as Peer to Peer or HTTP post while Data tunneling channels use channels that are not designed for data transfer such as DNS. Additionally, in contrast to Distribution inspection which is also used to detect these attacks, this approach manually monitors the relationship between the inbound and outbound flow. For example, [S43] used the ratio of the size of outbound packets with inbound packets to detect Overt channels attack. 6/48 behavior-driven studies utilize Hybrid-based approach. This approach is used in detecting APT and Overt Channels. It captures both the system and network behavior to detect data exfiltration. For example, [S30] detected an APT attack by monitoring both the system and network behavior using the frequency of system calls and the frequency of IP addresses in a session. The authors claimed that using the hybrid approach can be used to prevent long term data exfiltration. Propagation based approach is an interesting countermeasure because it examines multiple stages of DELC across multi-host to detect data exfiltration. For instance, [S2] identifies multiple stages of DELC to detect the APT attack. First, the approach detects the exploitation stage by using a threat detection module, then it uses malicious SSL certificate, malicious IP address, and domain flux detection to discover the Command and Control (C&C) communication. After that, it monitors the inbound and outbound traffic to disclose lateral movement, and finally it uses scanning and Tor connection [66] detection to notice the asset under threat and data exfiltration respectively. Lastly, Resource usage-based approach is used by side-channel (3/3) and Insider (1/1) attack detection. A reason behind it is that for Side-channel attacks, we only found studies that were detecting cache-based side-channel attacks. In this type of attack, an attacker exploits the cache usage pattern [67] to exfiltrate data. Hence, this approach monitors the cache usage behavior to detect Side-channel attacks. For example, [S52] used a ratio of cache misses and hit as one of the features to detect Side-channel attack.

Summary: ML countermeasures can be classified into data-driven and behavior-driven approaches. Data-driven approaches are frequently reported to detect Delivery stage attacks while behavior-driven approaches are employed to detect sophisticated attacks such as APT and insider threat.

IV-B RQ2: Features Extraction Process

Feature engineering produces the core input for ML models. We have analyzed data items: D13 to D14 and D21-D22 given in Table IV to answer this RQ. This question sheds light on three main techniques used in the feature extraction process, i.e., feature type, feature engineering method, and feature selection.

IV-B1 Feature Type and Feature engineering method

This section discusses the type of features and feature engineering methods used by the primary studies. The features used by the studies can be classified into six types: statistical, content-based, behavioral, syntactical, spatial and temporal. Table VII briefly describes these base features and enlist their strengths and limitations. While Table VIII describes the feature engineering methods [27] with their strength and weakness. These features are mined single-handedly or collectively as hybrid features by different studies, as shown in Fig 7(a). Furthermore, Fig 7(b) shows the distribution of these features types with respect to ML countermeasures and feature engineering method.

| Features | Description | Strengths | Weaknesses |

| Statistical | These features represent the statistical information | • Unveil hidden mathematical patterns. | • Computationally expensive to |

| About data, e.g., average, standard deviation, entropy, | • Reliable when extracted from large | compute [68]. | |

| median, and ranking of a webpage. | population. | • Sensitive to noise [65] | |

| Content | • Easy to extract. | • Requires security analyst to extract features. | |

| These features denote the information present in or | • Difficult to identify unless data | ||

| about data and do not require any complex computation. | is linear or simple in nature [65]. | ||

| -based | For example, presence of @ sign in a URL, IP address, | • Only suitable for data with regularly | |

| number of hyperlinks and steal keyword. | repeated patterns. | ||

| • Short-lived and suffer from concept | |||

| drift over time [69]. | |||

| Behavioural | These features signify the behaviour of an application, | ||

| user or data flow. They include a sequence of API calls, | • Offer good coverage to detect | • Unable to capture run-time attacks | |

| is_upload flag enabled or presence of POST method in | malicious events. [70] | [71] and [70]. | |

| request packet, connection successful or not, record type | |||

| in DNS flow and system calls made by users. | |||

| Syntactical | These features embody both syntax and semantics of | • Captures the sequential, structural, | • Produce sparse, noisy and high- |

| data. For example, grammatical rules like verb-object | and semantical information from | dimension feature vectors. | |

| pairs, n-grams, topic modelling, stylometric | data. | • Mostly based on n-grams, which | |

| and word or character embeddings features. | may result in arbitrary elements | ||

| [72]. | |||

| Spatial | Instead of a single host in a network, these features | • Suffer from high complexity because | |

| signify the information across multiple hosts. For | • Highly useful to detect multi-stage | they are extracted in a distributed | |

| example, the number of infected hosts or community | attack. | manner and evidence collection is | |

| maliciousness. | dependent on multi-hosts | ||

| Temporal | • Can fail in real-time because of the | ||

| These features represent the attributes of data that are | • Effective in capturing the temporal | volume of network stream and | |

| time dependent. For example, time interval between | patterns in data | computational effort required to | |

| two packets, time to live and the age of a domain, time between query | compute the features in a particular | ||

| and response,timestamp of an action, most frequent | time frame [68]. | ||

| interval between logged in time. |

| Technique | Description | Strengths | Limitations |

| Manual | • Return smaller number | • Time-consuming and labour-intensive. | |

| It requires domain | of meaningful features. | • Error prone. | |

| expert to analyze data to | • Suitable for homogeneous | • Not adaptable to new datasets as | |

| extract meaningful features. | data with linear patterns. | this approach extracts problem-dependent | |

| features and must be re-written for new dataset. | |||

| Automated | • Reduce Machine Learning | ||

| Advance ML techniques are | development time | Sparse and high dimensional feature vector. | |

| used to extract the features | • Can adapt to new dataset. | • Computationally expensive. | |

| from data automatically. | • Suitable for complex data with | • Require large amount of data to produce | |

| structural and sequential | good quality features. | ||

| dependencies such as network attack | |||

| detection. | |||

| Semi- | These techniques use both | • Suitable for complex task such | |

| manual and automated | as APT and lateral movement attack | • More time-consuming then automated. | |

| Automated | techniques to extract features | detection where human intervention | • Computationally expensive than manual. |

| (Both) | from data. | is mandatory. |

Statistical Features

Statistical features are the most commonly used features (i.e., 45/92 studies) among all the primary studies. It is because the statistical analysis can yield hidden patterns in data [73]. These features are used as base features by 13 studies, while 32 studies used them together with other types of features. (34/45) reviewed studies mined these features manually to detect data exfiltration attack. Whilst, it is intuitive to assume that Distribution inspection countermeasures utilize these features, this hypothesis is untrue. For example, [S17] used TF-IDF [74] to identify the most common keywords in data. However, instead of the frequency of keywords, direct keywords (content-based features) are used as features to train a classifier. Moreover, statistical features are not limited to Distribution inspection but are also used in Context inspection as shown in Fig 7. For instance, [S53] automatically extracts features from host, path and query part of URL. These features contain the probability of summation of each part per (for n=1 to 4) n-grams. The n-gram [75] is a context inspection approach; however, the study used this analysis to calculate the probability of summation of n-gram frequency. The probability was then used as a feature instead of n-grams.

Content-based Features

41/92 studies employ content-based features to detect data exfiltration attacks. The reason behind their extensive usage is the ease to extract them. Unlike the statistical features, these features do not require complex computation and can be extracted directly from data. These features are used as base features by ten studies while 31 studies used them with other features. These features are usually manually extracted (40/41) studies from data by using domain expertise. Only one study [S17] extracted these features automatically by using TF-IDF to mine the most common keywords and then scanned these keywords directly from the content as features. These features include IP address, gender, HTTP count, number of dots, brand name in URL and special symbols in URL. 80% of direct inspection countermeasures used these features. Content-based features are mostly (18 studies) combined with statistical and temporal features to extract more fine-grained features from data as illustrated in Fig 7.

Behavioral Features

These features are utilized mostly by (29/92 studies) behavior-driven approaches because they are capable of providing a better understanding of how an attack is implemented or executed. Only two studies [S48, S73] used these feature solely. [S48] extracted Malicious instruction sequence pattern to detect RAT malware whereas [S73] sequence of event codes as features to train LSTM classifier for detecting APT attacks. Both of these studies used automatic feature engineering techniques to extract these patterns. In [S48], a technique named malicious sequential mining is proposed to automatically extract features from Portable Executable (PE) files using instruction set sequence in assembly language. Similarly, in [S73] event logs is given as input to LSTM, which is then responsible for extracting the context of the features automatically. These features are usually combined with temporal features (12 studies) to represent time-based behavioral variation. For example, in [S12] attributes like logon during non-working hours, USB connected, or email sent in non-working hours depict these features. Also, behavioral features are often combined with content-based and statistical features (13 studies) to depict the activities of a system in terms of data distribution and static structure, as shown in Fig 7(b). For example, in [S13], features like the address of entry point, the base of code, section name represent content-based features. In contrast, number of sections, entropy denote statistical features whereas imported DLL files, imported functions type indicate behavioral features. Another interesting study is [S91], which combines these features with syntactical and temporal features. For obtaining a trainable corpus, the authors first transform the security logs into the word2vec [76] representation, which are then fed to auto-encoders to detect insider threat.

Syntactical Features

These are used as standalone features by 11, whereas eight studies used them combined with other features. These features are mostly (17/19) automatically or semi-automatically extracted as can be seen in Fig 7(b). These features are used by three event-based and one hybrid approach to extract the sequence of operations performed by a system, executable or user. For example, in [S61], two types of features were extracted: n-grams from executable file unpacked to binary codes using PE tools [77] and behavioral features, i.e., Window API Calls extracted using IDA Pro [78]. Whilst these features are well suited for extracting sequential structural or semantic information from data; they can result in sparse, noisy and high-dimension feature vectors. Most of these features are based on n-grams, which may result in arbitrary elements [72]. A high-dimensional and noisy feature vector can be detrimental to detection performance.

Spatial Features

Spatial features are used by flow-based (2), propagation-based (2/3) and three hybrid approaches. These features are manually extracted by all these studies. In [S16], the authors used a Fraction of malicious locations, number of malicious locations, files, malware, and community maliciousness as spatial features to detect RAT malware. These features include destination diversity, the fraction of malicious locations, number of malicious locations, files, malware, community maliciousness, number of lateral movement graph passing through a node, frequency of IP, MAC, and ARP modulation. Spatial features are advanced features that can be utilized to detect complex scenarios like APT. Besides, the spatial features are not used alone by any study but are extracted with other features as depicted in Fig 7(a).

Temporal Features

These features act as supportive features because no study has used them alone. However, these features are essential to reveal the hidden temporal pattern in data that is not evident via other features. 29 studies, along with other features, use these features. These features extracted manually by 28/29 studies except for [S91] that transformed the security logs from four weeks in a sequence into sentence template and trained word2vec to detect Insider attack.

IV-B2 Feature Engineering

Feature engineering process extracts features from data. Feature engineering methods are classified as manual, automated, and semi-automated as described in Table 10. 51 studies used manual, and four studies used a fully automated. In comparison, eight studies used semi-automated feature engineering method to extract features. Fig 10 shows the mapping of feature type with feature engineering method. It is evident that the studies based on syntactical features either used an automated or semi-automated feature engineering method. It was predictable as syntactical features are based on text structure and NLP techniques can be utilized to extract them automatically. However, it was unanticipated that one study utilizing behavioural features used this technique. In [S48], a technique named malicious sequential mining is proposed to automatically extract features from Portable Executable (PE) files using instruction set sequence in assembly language. Other studies that used automated feature engineering method used semantic inspection countermeasure but extracted statistical or Content-based features to train the classifiers.

IV-B3 Feature Selection

Feature selection is important, but an optional step in feature engineering process [79]. Table IX describes, and provides strengths and weaknesses of five different methods used by our reviewed study to select the discriminant features from the initial set of features.

| Methods | Description | Strengths | Weaknesses |

| Filter [79] | These approaches select the best features by | • Computational fast speed [79], | • Ignore classifier biases and |

| analyzing the statistical relationship between | • Simple to implement and comprehend | heuristics. | |

| features and their label. Popular filter methods | classifier independent | • Low performance [28] as not | |

| include information gain, correlation analysis and | classifier dependent | ||

| Chi-square test. | • Requires manually setting the | ||

| thresholds. | |||

| Wrapper [80] | These approaches consider the classifier used | • Good performance for specific | |

| for classification to select the best features. | model. | • Classifier dependent | |

| Popular wrapper method includes genetic | • Consider features dependencies | • Computationally expensive | |

| algorithm and sequential search. | |||

| Embedded [79] | These techniques use both advantages of filter and | • Learn good features in parallel | • Need for fine-grain |

| wrapper method to select the best features. Popular | to learning the decision | understanding of classifier | |

| embedded methods include Random Forest Trees, | boundary. | implementation | |

| XG-Boost, Ada-boost. | • Less expensive than wrappers. | • Computationally expensive | |

| than filter. | |||

| • Classifier specific | |||

| Dimension Reduction [81] | These techniques select the optimal feature set to | • Computational fast speed [79] | • Not interpretable. |

| decrease the dimensions of the feature vector. | • Transform features to low | • Manually setting for threshold | |

| Popular methods include PCA, LDA and Iso-maps. | dimension. | is required. | |

| • Classifier independent. | |||

| Attention [82] | In 2017 a mechanism for Deep Learning (DL) | • Decrease development time | • Not reliable [83, 84]. |

| approaches that focus on certain factors than | • of DL methods. | ||

| other when processing data [85]. | • Automatically learns relevant features | ||

| • without manual thresholds. | |||

| • Yield a significant performance gain [86]. |

The feature selection techniques used by the reviewed studies with respect to ML-based countermeasure, feature-engineering technique and the number of the features used are shown in Fig 8. This analysis is helpful to understand the relationship of feature selection techniques with data analysis and feature engineering process. It shows that 52% studies utilized feature selection methods. Among this filter is the most popular (16 studies) method. Almost all the approaches apply it except for hybrid, propagation-based and resource usage-based countermeasures. Additionally, this approach is not only applied to features extracted manually but also applied to automatic and semi-automatically (3 studies each) extracted features. For example, in [S1], the authors used semi-automatically extracted features i.e., Opcodes n-grams (automated)and gray-scale images (manual), and the frequency of Dynamic Link Library (DLL) in import function (manual) to classify known versus unknown malware families. The feature set dimensionality is then reduced and used information gain [87] a filter method to reduce the dimensions of the feature vector. Similarly, [S5] extracts 16 features manually based on frequency analysis assessment criteria to detect phishing websites. Chi-Square [87] feature selection algorithm a filter method is incorporated to select the features.

The second most popular technique is Dimensionality reduction is used by six studies. It is applied by all approaches except for context inspection and hybrid approaches. Five studies employ this technique over manually extracted features while only one study [S61] used it for semi-automatically extracted features. In [S61], two types of features were extracted n-grams from executable file and Window API Calls. Principle Component Analysis (PCA) is used to reduce the n-gram feature dimension, while class-wise document frequency [88] is utilized to select the top API call. Wrapper method has been reported in six studies. It is mostly used (i.e., 5/6) in data-driven countermeasures and is employed by 4 and 2 studies that extract features manually and semi-automatically, respectively. For instance, the study [S60] used two types of features webpage features and website embedded trojan (7) features. For feature selection, two wrapper methods: RFT based Gini Coefficient [89] and stability selection [90] are employed. Another interesting but relatively new feature selection method is Attention [86]. Although, it is not primarily known as a feature selection method because this algorithm is used to select most relevant features while training DNNs, we have treated it as a feature selection method, as also suggested by [86]. Attention is used by three studies, out of which two [S87, S88] used it on automatically extracted features as a part of DNN training whereas [S74] used it on manually extracted features that are fed as input to DNN classifier. In [S74], users action sequence are manually extracted from log files and feed into Attention-based LSTM that help a classifier to pay more or less focus to individual user actions. Lastly, the embedding method is just explored by two flow-based studies [S11, S65]. In [S11], an ensemble anomaly detector of Global Abnormal trees was constructed based on weights of feature computed by information gain (filter method). We believe that one reason for not using the embedding method is the need for fine-grain understanding of how classifier is constructed [91]. Most of the selected studies only apply ML classifiers as a black box.

In Fig 8b, Fig 8c, we analysed the number of features used viz-a-viz the ML countermeasure and types of features selection methods. The y-axis shows the log of the number of features used by the reviewed studies. We have used this because the total number of features used by the studies have high variance (ranging from 2 to 8000) and hence useful visualization was not possible with direct linear dimensions. The circular points show the outliers in the distribution while the solid line shows the quartile range and median based on the log of the number of features used by the studies. Fig 8b analysis is based on 69/92 studies that reported the total number of features used for training the classifier. Among the remaining 23 studies, 16 studies either extracted feature automatically or semi-automatically and 12 studies belong to context inspection ML countermeasure. It may be because these studies mostly rely on Natural Language Processing (NLP) techniques that produce high dimensional feature vectors [92]. Furthermore, the low median of context inspection approach is just based on the four studies that reported the number of features used. However, for other ML countermeasures, it can be seen that the distribution inspection studies use high feature dimensions (ranging from 258-8000). While other countermeasure use relatively low number of features, e.g., (11/16) event-based studies utilized only , all the direct inspection approaches use features while flow, hybrid, resource-usage and propagation-based approaches used features. Fig 8c shows the relationship between the number of features with feature selection methods. From the analysis it is inferred that those studies which did not use any feature selection technique and reported features dimensions (41 studies) already had low feature dimensions, i.e., having a log median of . Among these only 4/41 of these studies used features. Furthermore, the studies that apply dimensionality reduction methods and wrapper still result in a relatively high dimensional space (log median is approximately equal to 100).

Summary: In ML countermeasures to detect data exfiltration, statistical and content-based features, manual feature engineering process and filter feature selection method are most prevalent.

IV-C RQ3: Datasets

This question provides insight into the type of dataset used by the reviewed studies. We classify them as: 1) real, 2) simulated and 3) synthetic datasets as briefly described in Table X. These datasets can either be public or private. Fig 9 depicts the distribution of the type of dataset and their availability (public or private) with the type of ML countermeasure and attack vectors.

| Type | Description | Strengths | Weaknesses |

|---|---|---|---|

| Real Datasets | The datasets obtained from real data, | • Provides true distribution of | • Can suffer privacy and confidentiality issues. |

| e.g., public repositories containing | data. | • Heterogeneous especially for network | |

| actual Phishing-URLs like Phish-Tank | attacks. | ||

| [93] or original collection of spam | • Cannot depict all the misuse scenarios. | ||

| emails or real malware files. Both data | • Can suffer from imbalance | ||

| and environment are real. | |||

| Simulated Datasets | The dataset generated by software | • Able to reproduce balanced | |

| tools in a controlled environment to | datasets. | ||

| simulate an attack, e.g., collected from | • Able to generate rare misuse | • Tool specific | |

| some software tools to generate an | events. | • May not depict real distribution of data. | |

| attack scenario. Data is real (e.g., | • Useful for attacks for which | • Not a representation of real heterogeneous | |

| network packets) but environment is | real data is not available. | environment. | |

| controlled (i.e., network packets are | |||

| produced by a tunnelling tool). | |||

| Synthetic Datasets | The dataset produced by using | • Able to generate complex | • Not a true representation of real |

| mathematical knowledge of the | attack scenarios. | heterogeneous environment. | |

| required data. Both data and | • Useful for complex multi- | • Requires domain expertise to generate these | |

| environment is controlled (i.e., data | stage attacks with multiple | datasets. | |

| produced by a function f=sin(x)). | hosts. | • Hard to adapt new variations in data. |

| Attack Vector |

Type |

Dataset | Description | Study |

| Phishing | Real | CLAIR collection of | This dataset contains a real collection of ”Nigerian” Fraud emails from 1998 | [S17] |

| fraud email [94] | to 2007. It contains more than 2500 fraud emails. | |||

| URL-Dataset [95] | The dataset is composed of approximately URLs and features. | [S26] | ||

| URLs are collected from Phish-Tank [93], Open-Phish [96] feeds in 2009 . | ||||

| UCI-Repository | This dataset had 11055 data samples with 30 features extracted from 4898 | [S86] | ||

| [97, 98] | legitimate and 6157 phishing websites. | |||

| Spam URL | This dataset contains 7.7 million webpages from 11,400 hosts based on web | [S31] | ||

| Corpus [99] | crawling done on .uk domain on May 2007. Over 3,000 webpages are labelled | |||

| by at least two domain experts as “Spam”, “Borderline” or “Not Spam”. | ||||

| Enron Email | This dataset consists of emails from about hundred and fifty users, mostly | [S36] | ||

| Corpus [100, 101] | experienced management of Enron. A total of about 0.5M emails are present in | |||