Machine Learning for Cataract Classification/Grading on Ophthalmic Imaging Modalities: A Survey

Abstract

Cataracts are the leading cause of visual impairment and blindness globally. Over the years, researchers have achieved significant progress in developing state-of-the-art machine learning techniques for automatic cataract classification and grading, aiming to prevent cataracts early and improve clinicians’ diagnosis efficiency. This paper provides a comprehensive survey of recent advances in machine learning techniques for cataract classification/grading based on ophthalmic images. We summarize the existing literature from two research directions: conventional machine learning methods and deep learning methods. We also provide insights into both the merits and limitations of existing works. In addition, we discuss several challenges of automatic cataract classification/grading based on machine learning techniques and present possible solutions to these challenges for future research.

Keywords:

Cataract, classification and grading, ophthalmic image, machine learning, deep learning

1 Introduction

According to the World Health Organization (WHO) Bourne et al. (2017); Pascolini and Mariotti (2012), approximately 2.2 billion people suffer from visual impairment. Cataract accounts for about 33% of visual impairment and is the number one cause of blindness (over 50%) worldwide. Through early intervention and cataract surgery, cataract patients can improve their vision and quality of life; these are efficient ways to reduce both the blindness ratio and the cataract-blindness burden on society.

Clinically, a cataract is a loss of crystalline lens transparency, which occurs when the protein inside the lens clumps together Asbell et al. (2005). Cataracts are associated with many factors Liu et al. (2017b), such as developmental abnormalities, trauma, metabolic disorders, genetics, drug-induced changes, and age. Genetics and aging are two of the most important factors. According to their causes, cataracts can be categorized as age-related cataract, pediatric cataract (PC), and secondary cataract Asbell et al. (2005); Liu et al. (2017b). According to the location of the crystalline lens opacity, they can be grouped into nuclear cataract (NC), cortical cataract (CC), and posterior subcapsular cataract (PSC) Li et al. (2009, 2010b). NC denotes the gradual clouding and progressive hardening of the nuclear region. CC takes the form of white, wedge-shaped, radially oriented opacities that develop from the outside edge of the lens toward the center in a spoke-like fashion Liu et al. (2017b); Chew et al. (2012). PSC consists of granular opacities that resemble small breadcrumbs or sand particles sprinkled beneath the lens capsule Li et al. (2010b).

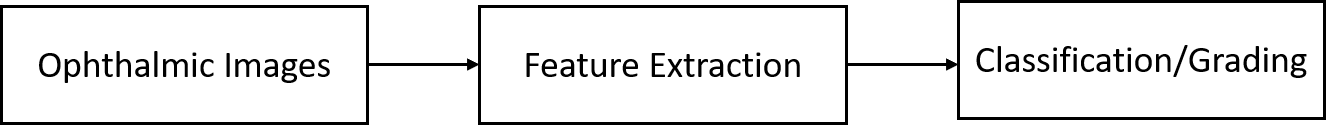

Over the past years, ophthalmologists have used several ophthalmic imaging modalities to diagnose cataract based on their experience and clinical training. This manual diagnosis mode is error-prone, time-consuming, subjective, and costly, which is a great challenge in developing countries and rural communities, where experienced clinicians are scarce. To prevent cataract early and improve the precision and efficiency of cataract diagnosis, researchers have made great efforts in developing computer-aided diagnosis (CAD) techniques for automatic cataract classification/grading Long et al. (2017) on different ophthalmic images, including conventional machine learning methods and deep learning methods. A conventional machine learning method combines feature extraction with classification/grading. In the feature extraction stage, a variety of image processing methods have been proposed to obtain visual features of cataract from different ophthalmic images, such as the density-based statistics method, density histogram method, bag-of-features (BOF) method, Gabor wavelet transform, gray level co-occurrence matrix (GLCM), and Haar wavelet transform Huang et al. (2009b); Xu et al. (2016); Li et al. (2010b); Xu et al. (2013); Gao et al. (2011); Srivastava et al. (2014); Patwari et al. (2011); Fuadah et al. (2015); Pathak and Kumar (2016). In the classification/grading stage, classifiers such as the support vector machine (SVM) are applied to recognize different cataract severity levels Khan et al. (2018); Yang et al. (2016); Qiao et al. (2017). Over the past ten years, deep learning, which can be viewed as a representation learning approach, has achieved great success in various fields, including medical image analysis. It can learn low-, mid-, and high-level feature representations from raw data (e.g., ophthalmic images) in an end-to-end manner.
Recently, various deep neural networks have been utilized to tackle cataract classification/grading tasks, such as convolutional neural networks (CNNs), attention-based networks, Faster R-CNN, and multilayer perceptron (MLP) neural networks. For example, Zhang et al. Zhang et al. (2022c) proposed a multi-region fusion attention network to recognize nuclear cataract severity levels.

Previous surveys have summarized cataract types, cataract classification/grading systems, and ophthalmic imaging modalities, respectively Zhang et al. (2014); Liu et al. (2017b); Shaheen and Tariq (2019); Lopez et al. (2016); Zafar et al. (2018); Gali et al. (2019); Goh et al. (2020); however, none has systematically summarized ML techniques based on ophthalmic imaging modalities for automatic cataract classification/grading. To the best of our knowledge, this is the first survey that systematically summarizes recent advances in ML techniques for automatic cataract classification/grading. It focuses on ML techniques for cataract classification/grading, comprising conventional ML methods and deep learning methods. We collected the surveyed papers from the Web of Science (WoS), Scopus, and Google Scholar databases. Fig. 1 provides a general organization framework for this survey according to the collected papers, our summary, and discussions with experienced ophthalmologists. To make this survey easier to follow, we also briefly review ophthalmic imaging modalities, cataract grading systems, and commonly-used evaluation measures. Then we introduce ML techniques step by step. We hope this survey can provide a valuable summary of current works and present potential research directions of ML-based cataract classification/grading in the future.

2 Ophthalmic imaging modalities for cataract classification/grading

To the best of our knowledge, this survey is the first to introduce six different ophthalmic images used for cataract classification/grading: slit lamp image, retroillumination image, ultrasonic image, fundus image, digital camera image, and anterior segment optical coherence tomography (AS-OCT) image, as shown in Fig. 2. In the following subsections, we introduce each ophthalmic image type step by step and then discuss their advantages and disadvantages.

2.1 Slit lamp image

The slit lamp camera Fercher et al. (1993); Waltman and Kaufman (1970) is a high-intensity light source instrument comprising a corneal microscope and a slit lamp. Slit lamp images are acquired with the slit lamp camera, which is usually used to examine the anterior and posterior segment structures of the human eye, such as the eyelid, sclera, conjunctiva, iris, crystalline lens, and cornea. Fig. 3 offers four representative slit lamp images for four different cataract severity levels.

2.2 Retroillumination image

A retroillumination image is a non-stereoscopic medical image acquired with the crystalline lens camera Vivino et al. (1995); Gershenzon and Robman (1999). It can be used to diagnose CC and PSC in the crystalline lens region. Two types of retroillumination images can be obtained with the crystalline lens camera: an anterior image focused on the iris, which corresponds to the anterior cortex of the lens, and a posterior image focused 3-5 mm more posteriorly, which is intended to image PSC opacities.

2.3 Ultrasonic image

In clinical cataract diagnosis, the ultrasound image is a commonly-used ophthalmic imaging modality for objectively evaluating the hardness of the cataract lens Huang et al. (2009a). Frequently applied ultrasound imaging techniques are based on measuring ultrasonic attenuation and sound speed, both of which may increase with the hardness of the cataract lens Huang et al. (2007). High-frequency ultrasound B-mode imaging can be used to monitor local cataract formation, but it cannot measure the lens hardness accurately Tsui and Chang (2007). To make up for this B-scan deficiency, an ultrasound imaging technique built on the Nakagami statistical model, called ultrasonic Nakagami imaging Tsui et al. (2010, 2007, 2016), was developed, which can be used to visualize local scatterer concentrations in biological tissues.
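
To make the idea behind Nakagami imaging concrete, the following is a minimal sketch, not the implementation used in the cited works. It assumes the widely used moment-based estimator of the Nakagami parameters from the echo envelope R, namely Ω = E[R²] and m = (E[R²])² / Var(R²), and it assumes a square sliding window; the function names and the window size are illustrative choices.

```python
from statistics import fmean


def nakagami_parameters(envelope):
    """Moment-based estimates of the Nakagami shape m and scale omega."""
    squared = [r * r for r in envelope]
    omega = fmean(squared)  # omega = E[R^2]
    variance = fmean([(s - omega) ** 2 for s in squared])  # Var(R^2)
    if variance == 0:
        raise ValueError("constant envelope: m is undefined")
    return omega * omega / variance, omega


def nakagami_map(envelope_image, window=3):
    """Slide a square window over the envelope image and store the local m."""
    rows, cols = len(envelope_image), len(envelope_image[0])
    result = []
    for top in range(rows - window + 1):
        line = []
        for left in range(cols - window + 1):
            # Collect the envelope samples covered by the current window.
            patch = [envelope_image[top + i][left + j]
                     for i in range(window) for j in range(window)]
            line.append(nakagami_parameters(patch)[0])
        result.append(line)
    return result
```

The resulting map of local m values is what a parametric image of this kind would display; the window size trades spatial resolution against the stability of the estimate.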

2.4 Fundus image

The fundus camera Plesch et al. (1987); Pomerantzeff et al. (1979) is a specialized camera combined with a low-power microscope; it is usually operated by ophthalmologists or professional operators to capture fundus images. A fundus image is a highly specialized form of eye imaging that captures the eye’s inner lining and the structures at the back of the eye. Fig. 4 shows four fundus images of different cataract severity levels.

2.5 Digital camera image

Digital camera images can be acquired with commonly used digital cameras, such as smartphone cameras. Compared with the fundus camera and slit lamp device, the digital camera is easily available and easy to use. Hence, using digital cameras for cataract screening has great potential, especially in developing countries and rural areas, where people have limited access to expensive ophthalmology equipment and experienced ophthalmologists.

2.6 Anterior segment optical coherence tomography image

Anterior segment optical coherence tomography (AS-OCT) Ang et al. (2018) is one of the optical coherence tomography (OCT) imaging techniques. It can be used to visualize and assess anterior segment ocular features, such as the tear film, cornea, conjunctiva, sclera, rectus muscles, anterior chamber angle structures, and lens Werkmeister et al. (2017); Hirnschall et al. (2013); Yamazaki et al. (2014); Hirnschall et al. (2017). AS-OCT images can provide high-resolution, real-time, in vivo visualization of the crystalline lens without impacting the tissue, which can help ophthalmologists obtain different information about the crystalline lens through the circumferential scanning mode. Recent works have suggested that AS-OCT images can be used to locate the lens region and to characterize opacities of different cataract types accurately and quantitatively Grulkowski et al. (2018); Pawliczek et al. (2020). Fig. 5 offers an AS-OCT image, which gives a quick overview of the crystalline lens structure.

Discussion: Though six different ophthalmic images are used for cataract diagnosis, slit lamp images and fundus images are the most commonly-used ophthalmic images for clinical cataract diagnosis and scientific research purposes. This is because existing cataract classification/grading systems are built on them. Slit lamp images can capture the lens region but cannot distinguish the boundaries between nuclear, cortical, and capsular regions. Hence, it is difficult for clinicians to diagnose different cataract types accurately based on slit lamp images. Fundus images contain only opacity information of cataract and no location information; hence, they are mainly applied to cataract screening.

Retroillumination images are usually used to diagnose CC and PSC clinically, but they have not been widely studied. Digital camera images are ideal ophthalmic images for cataract screening because they can be collected through mobile phones, which are easy and cheap for most people to use. Like fundus images, digital camera images only have opacity information of cataract and do not contain location information of different cataract types. Ultrasonic images can capture the lens region and evaluate the hardness of the cataract lens, but they cannot distinguish different sub-regions, e.g., the cortex region. The AS-OCT image is a new ophthalmic image that can distinguish different sub-regions, e.g., the cortex and nucleus regions, which is significant for cataract surgery planning and cataract diagnosis. However, there is no cataract classification/grading system built on AS-OCT images; thus, it is urgent to develop a clinical cataract classification/grading system based on them. Moreover, automatic AS-OCT image-based cataract classification has rarely been studied.

3 Cataract classification/grading systems

To classify or grade the severity levels of cataract (lens opacities) accurately and quantitatively, it is crucial and necessary to build standard/gold cataract classification/grading systems for clinical practice and scientific research purposes. This section briefly introduces six existing cataract classification/grading systems.

3.1 Lens opacity classification system

The lens opacity classification system (LOCS) was first introduced in 1988 and has developed from LOCS I to LOCS III Chylack et al. (1993, 1988, 1989). LOCS III is widely used for clinical diagnosis and scientific research. As shown in Fig. 6, LOCS III uses six representative slit lamp images for nuclear cataract grading based on nuclear color and nuclear opalescence, five representative retroillumination images for cortical cataract grading, and five representative retroillumination images for posterior subcapsular cataract grading. The cataract severity level is graded on a decimal scale with regularly spaced intervals.

3.2 Wisconsin grading system

The Wisconsin grading system was proposed by the Wisconsin Survey Research Laboratory in 1990 Klein et al. (1990); Group et al. (2001); Fan et al. (2003). It contains four standard photographs for grading cataract severity levels. The grades are as follows: grade 1, as clear or clearer than Standard 1; grade 2, not as clear as Standard 1 but as clear or clearer than Standard 2; grade 3, not as clear as Standard 2 but as clear or clearer than Standard 3; grade 4, not as clear as Standard 3 but as clear or clearer than Standard 4; grade 5, at least as severe as Standard 4; and grades 6, 7, and 8, cannot grade due to severe opacities of the lens (see Klein et al. (1990) for a detailed introduction to the Wisconsin grading system). The Wisconsin grading system also uses a decimal grade for cataract grading with a 0.1-unit interval, ranging from 0.1 to 4.9.

3.3 Oxford clinical cataract classification and grading system

The Oxford Clinical Cataract Classification and Grading System (OCCCGS) is also a slit-lamp image-based cataract grading system Sparrow et al. (1986); Hall et al. (1997). Unlike LOCS III, which uses photographic transparencies of the lens as cataract grading standards, it adopts standard diagrams and Munsell color samples to grade the severity of cortical, posterior subcapsular, and nuclear cataract Hall et al. (1997). In the OCCCGS, five standard grading levels are used for evaluating the severity level of cataract based on cataract features, such as cortical features, nuclear features, and morphological features Sparrow et al. (1986). For example, the severity levels of nuclear cataract are graded as follows: Grade 0: no yellow detectable; Grade 1: yellow just detectable; Grade 2: definite yellow; Grade 3: orange yellow; Grade 4: reddish brown; Grade 5: blackish brown Sparrow et al. (1986).

3.4 Johns Hopkins system

The Johns Hopkins system (JHS) was first proposed in the 1980s West et al. (1988). It has four standard slit lamp images, which denote the severity level of cataract based on the opalescence of the lens. For nuclear cataract, Grade 1: opacities that are definitely present but not thought to reduce visual acuity; Grade 2: opacities consistent with visual acuity between 20/20 and 20/30; Grade 3: opacities consistent with visual acuity between 20/40 and 20/100; Grade 4: opacities consistent with visual acuity of 20/200 or less.

3.5 WHO cataract grading system

The WHO cataract grading system was developed by a group of WHO experts Thylefors et al. (2002); for the Prevention of Blindness and Group (2001). It aims to enable relatively inexperienced observers to grade the most common types of cataracts reliably and efficiently. It uses four severity levels for grading NC, CC, and PSC based on four standard images accordingly.

3.6 Fundus image-based cataract classification system

Xu et al. Xu et al. (2010) proposed a fundus image-based cataract classification system (FCS) based on the observed blur level. They used five levels to evaluate the blur of fundus images: grade 0: clear; grade 1: the small vessel region was blurred; grade 2: the larger branches of the retinal vein or artery were blurred; grade 3: the optic disc region was blurred; grade 4: the whole fundus image was blurred.
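
The FCS grading rule can be read as a lookup from the most severe blurred structure to a grade. The sketch below encodes that reading; the region names (`small_vessels`, `large_vessels`, `optic_disc`, `whole_image`) are hypothetical labels introduced here for illustration and do not come from Xu et al. (2010).

```python
# Grades ordered from most to least severe; region names are hypothetical labels.
FCS_RULES = [
    (4, "whole_image"),
    (3, "optic_disc"),
    (2, "large_vessels"),
    (1, "small_vessels"),
]


def fcs_grade(blurred_regions):
    """Return the FCS grade implied by the set of structures judged blurred."""
    for grade, region in FCS_RULES:
        if region in blurred_regions:
            return grade
    return 0  # nothing blurred: clear fundus image
```

For example, an image in which both the small vessels and the optic disc are judged blurred would receive grade 3 under this reading.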

Discussion: Of the six existing cataract classification/grading systems described above, five are built on slit lamp images and one is built on fundus images, which explains why most existing cataract works are based on these two ophthalmic imaging modalities. However, these cataract classification/grading systems are subjective due to the limitations of these two imaging devices. Furthermore, to improve the precision of cataract diagnosis and the efficiency of cataract surgery, it is necessary to develop new and objective cataract classification systems on other ophthalmic image modalities, e.g., AS-OCT images.

4 Datasets

In this section, we introduce ophthalmic image datasets used for cataract classification/grading, which can be grouped into private datasets and public datasets.

4.1 Private datasets

ACHIKO-NC dataset Liu et al. (2013): ACHIKO-NC is a slit lamp lens image dataset selected from the SiMES I database and used to grade nuclear cataracts. It comprises 5378 images with decimal grading scores (0.3 to 5.0). Professional clinicians determined the grading score of each slit lamp image. According to existing works, ACHIKO-NC is a widely used dataset for automatic nuclear cataract grading.

ACHIKO-Retro dataset Liu et al. (2013): ACHIKO-Retro is the retro-illumination lens image dataset selected from SiMES I database, used to grade CC and PSC. Each lens has two eye image types: anterior image and posterior image. The anterior image focuses on the plane centered in the anterior cortex region, and the posterior image focuses on the posterior capsule region. Most previous CC and PSC grading works were conducted on the ACHIKO-Retro dataset.

CC-Cruiser dataset Jiang et al. (2021a): CC-Cruiser is the slit lamp image dataset collected from Zhongshan Ophthalmic Center (ZOC) of Sun Yat-Sen University, which is used for cataract screening. It is comprised of 476 normal images and 410 infantile cataract images.

Multicenter dataset Jiang et al. (2021a): Multicenter is the slit lamp image dataset, which is comprised of 336 normal images and 421 infantile cataract images. It was collected from four clinical institutions: the Central Hospital of Wuhan, Shenzhen Eye Hospital, Kaifeng Eye Hospital, and the Second Affiliated Hospital of Fujian Medical University.

4.2 Public datasets

EyePACS dataset Cuadros and Bresnick (2009): EyePACS is a fundus image dataset collected from EyePACS, LLC, a free platform for retinopathy screening, and is used to classify different levels of cataract. It is made available by the California Healthcare Foundation. The dataset comprises 88,702 fundus retinal images, of which 1000 non-cataract images and 1441 cataract images are provided.

HRF dataset Pratap and Kokil (2019): The high-resolution fundus (HRF) image database is selected from different open-access datasets: structured analysis of the retina (STARE) Hoover et al. (2000), standard diabetic retinopathy database (DIARETDB0) Kauppi et al. (2006), e-ophtha Decenciere et al. (2013), methods to evaluate segmentation and indexing techniques in the field of retinal ophthalmology (MESSIDOR) database Decencière et al. (2014), digital retinal images for vessel extraction (DRIVE) database Staal et al. (2004), fundus image registration (FIRE) Hernandez-Matas et al. (2017) dataset, digital retinal images for optic nerve segmentation database (DRIONS-DB) Carmona et al. (2008), Indian diabetic retinopathy image dataset (IDRiD) Porwal et al. (2018), available datasets released by Dr. Hossein Rabbani Mahmudi et al. (2014), and other Internet sources.

5 Machine learning techniques

This section reviews the ML techniques developed for cataract classification/grading over the years, which comprise conventional ML methods and deep learning methods.

| Literature | Method | Image Type | Year | Application | Cataract Type |
|---|---|---|---|---|---|
| Li et al. (2009) | ASM + SVR | Slit Lamp Image | 2009 | Grading | NC |
| Huang et al. (2009b) | ASM + Ranking | Slit Lamp Image | 2009 | Grading | NC |
| Li et al. (2010a) | ASM + SVR | Slit Lamp Image | 2010 | Grading | NC |
| Huang et al. (2010) | ASM + Ranking | Slit Lamp Image | 2010 | Grading | NC |
| Li et al. (2007) | ASM + LR | Slit Lamp Image | 2007 | Grading | NC |
| Xu et al. (2016) | SF + SWLR | Slit Lamp Image | 2016 | Grading | NC |
| Xu et al. (2013) | BOF + GSR | Slit Lamp Image | 2013 | Grading | NC |
| Caixinha et al. (2016) | SVM | Slit Lamp Image | 2016 | Grading | NC |
| Jiang et al. (2017) | SVM | Slit Lamp Image | 2017 | Grading | NC |
| Wang et al. (2017) | SVM | Slit Lamp Image | 2017 | Grading | NC |
| Srivastava et al. (2014) | IGB | Slit Lamp Image | 2014 | Grading | NC |
| Jiang et al. (2017) | RF | Slit Lamp Image | 2017 | Classification | NC |
| Cheng (2018) | SRCL | Slit Lamp Image | 2018 | Grading | NC |
| Jagadale et al. (2019) | Hough Circular Transform | Slit Lamp Image | 2019 | Classification | NC |
| Kai et al. (2019) | RF | Slit Lamp Image | 2019 | Classification | PC |
| Kai et al. (2019) | NB | Slit Lamp Image | 2019 | Classification | PC |
| Li et al. (2010b) | Canny + Spatial Filter | Retroillumination Image | 2010 | Classification | PSC |
| Li et al. (2008b) | EF + PCT | Retroillumination Image | 2008 | Classification | PSC |
| Chow et al. (2011) | Canny | Retroillumination Image | 2011 | Classification | PSC |
| Zhang and Li (2017) | MRF | Retroillumination Image | 2017 | Classification | PSC |
| Gao et al. (2011) | LDA | Retroillumination Image | 2011 | Classification | PSC & CC |
| Li et al. (2008a) | Radial-edge & Region-growing | Retroillumination Image | 2008 | Classification | CC |
| Caxinha et al. (2015) | PCA | Ultrasonic Image | 2015 | Classification | Cataract |
| Caxinha et al. (2015) | Bayes | Ultrasonic Image | 2015 | Classification | Cataract |
| Caxinha et al. (2015) | KNN | Ultrasonic Image | 2015 | Classification | Cataract |
| Caxinha et al. (2015) | SVM | Ultrasonic Image | 2015 | Classification | Cataract |
| Caxinha et al. (2015) | FLD | Ultrasonic Image | 2015 | Classification | Cataract |
| Caixinha et al. (2016) | SVM | Ultrasonic Image | 2016 | Classification | Cataract |
| Caixinha et al. (2016) | RF | Ultrasonic Image | 2016 | Classification | Cataract |
| Caixinha et al. (2016) | Bayes Network | Ultrasonic Image | 2016 | Classification | Cataract |
| Caixinha et al. (2014b) | PCA + SVM | Ultrasonic Image | 2014 | Classification | Cataract |
| Caixinha et al. (2014a) | Nakagami Distribution + CRT | Ultrasonic Image | 2014 | Classification | Cataract |
| Jesus et al. (2013) | SVM | Ultrasonic Image | 2013 | Classification | Cataract |
| Fuadah et al. (2015) | GLCM + KNN | Digital Camera Image | 2015 | Classification | Cataract |
| Fuadah et al. (2015) | GLCM + KNN | Digital Camera Image | 2015 | Classification | Cataract |
| Pathak and Kumar (2016) | K-Means | Digital Camera Image | 2016 | Classification | Cataract |
| Patwari et al. (2011) | IMF | Digital Camera Image | 2016 | Classification | Cataract |
| Khan et al. (2018) | SVM | Digital Camera Image | 2016 | Classification | Cataract |
| Guo et al. (2015) | WT + MDA | Fundus Image | 2015 | Classification | Cataract |
| Yang et al. (2016) | Wavelet-SVM | Fundus Image | 2015 | Classification | Cataract |
| Yang et al. (2016) | Texture-SVM | Fundus Image | 2015 | Classification | Cataract |
| Yang et al. (2016) | Stacking | Fundus Image | 2015 | Classification | Cataract |
| Fan et al. (2015) | PCA + Bagging | Fundus Image | 2015 | Classification | Cataract |
| Fan et al. (2015) | PCA + RF | Fundus Image | 2015 | Classification | Cataract |
| Zhang et al. (2019a) | Multi-feature Fusion & Stacking | Fundus Image | 2019 | Classification | Cataract |
| Fan et al. (2015) | PCA + GBDT | Fundus Image | 2015 | Classification | Cataract |
| Fan et al. (2015) | PCA + SVM | Fundus Image | 2015 | Classification | Cataract |
| Cao et al. (2020b) | Haar Wavelet + Voting | Fundus Image | 2019 | Classification | Cataract |
| Qiao et al. (2017) | SVM + GA | Fundus Image | 2017 | Classification | Cataract |
| Huo et al. (2019) | AWM + SVM | Fundus Image | 2019 | Classification | Cataract |
| Song et al. (2016) | DT | Fundus Image | 2016 | Classification | Cataract |
| Song et al. (2016) | Bayesian Network | Fundus Image | 2016 | Classification | Cataract |
| Song et al. (2019) | DWT + SVM | Fundus Image | 2019 | Classification | Cataract |
| Song et al. (2019) | SSL | Fundus Image | 2019 | Classification | Cataract |
| Zhang et al. (2022a) | RF | AS-OCT Image | 2021 | Classification | NC |
| Zhang et al. (2022a) | SVM | AS-OCT Image | 2021 | Classification | NC |

5.1 Conventional machine learning methods

Over the past years, scholars have developed a large number of state-of-the-art conventional ML methods to automatically classify/grade cataract severity levels, aiming to assist clinicians in diagnosing cataract efficiently and accurately. These methods consist of feature extraction and classification/grading, as shown in Fig. 7. Table 1 summarizes conventional ML methods for cataract classification/grading based on different ophthalmic images.

5.1.1 Feature extraction

Considering the characteristics of different imaging techniques and cataract types, we introduce feature extraction methods based on ophthalmic image modalities.

Slit lamp image: The procedures to extract features from slit lamp images are comprised of the lens structure detection and feature extraction.

Fig. 8 offers a representative slit lamp image-based feature extraction flowchart. First, because the background of slit lamp images is relatively even, the foreground of the lens is detected by pixel thresholding according to the histogram analysis results. Afterward, the profile along the horizontal median line of the image is analyzed: the largest cluster on the line is detected as the lens, and the centroid of the cluster is taken as the horizontal coordinate of the lens center. Then, the profile along the vertical line through this point is obtained. Finally, the center of the lens is estimated, and the lens is further approximated as an ellipse whose semi-axes are estimated from the horizontal and vertical profiles.
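
The localization steps above can be sketched as follows. This is a simplified illustration under several assumptions: the image is a list of rows of grayscale values, the lens is brighter than the background, the threshold is supplied by the caller rather than derived from histogram analysis, and the function names are our own.

```python
def largest_run(flags):
    """Return (start, stop) of the longest run of True values; stop is exclusive."""
    best_start, best_stop, start = 0, 0, None
    for index, flag in enumerate(list(flags) + [False]):
        if flag and start is None:
            start = index
        elif not flag and start is not None:
            if index - start > best_stop - best_start:
                best_start, best_stop = start, index
            start = None
    return best_start, best_stop


def locate_lens(image, threshold):
    """Estimate the lens center and ellipse semi-axes from two line profiles."""
    # Step 1: foreground detection by pixel thresholding.
    mask = [[pixel > threshold for pixel in row] for row in image]
    # Step 2: largest foreground cluster on the horizontal median line.
    x0, x1 = largest_run(mask[len(mask) // 2])
    if x0 == x1:
        raise ValueError("no foreground on the horizontal median line")
    center_x = (x0 + x1 - 1) / 2  # centroid of the cluster
    # Step 3: profile on the vertical line through that point.
    y0, y1 = largest_run([row[int(center_x)] for row in mask])
    center_y = (y0 + y1 - 1) / 2
    # Step 4: approximate the lens as an ellipse.
    return {"center": (center_x, center_y),
            "semi_axes": ((x1 - x0) / 2, (y1 - y0) / 2)}
```

The returned ellipse is only a coarse initialization; a contour model would normally refine it.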

After the lens is located, its contour or shape needs to be captured. Researchers commonly use the active shape model (ASM) method for lens contour detection Li et al. (2010a, b, 2008b) and have achieved 95% accuracy in lens structure detection. The ASM describes the object shape through an iterative refinement procedure that fits an example of the object to a new image based on statistical models Li et al. (2007). Based on the detected lens contour, many feature extraction methods have been proposed to extract informative features, such as the bag-of-features (BOF) method, grading protocol-based method, semantic reconstruction-based method, and statistical texture analysis Huang et al. (2009b); Xu et al. (2016); Li et al. (2010b); Xu et al. (2013); Gao et al. (2011); Srivastava et al. (2014).

Retroillumination image: Feature extraction from retroillumination images also consists of two stages: pupil detection and opacity detection Li et al. (2008b, 2010b); Chow et al. (2011); Gao et al. (2011), as shown in Fig. 9. In the pupil detection stage, researchers usually combine the Canny edge detection method, the Laplacian method, and the convex hull method to detect edge pixels, and then fit an ellipse to the detected pixels with a non-linear least-squares fitting method. In the opacity detection stage, the input image is first transformed into polar coordinates. Classical image processing methods, such as global thresholding, local thresholding, edge detection, and region growing, are then applied in the polar domain to detect opacity. Apart from the above methods, literature Zhang and Li (2017) uses the watershed method and Markov random fields (MRF) to detect lens opacity, and the results showed that the proposed framework achieved competitive PSC detection results.
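
As an illustration of the opacity detection stage, the sketch below resamples a circular pupil region into polar coordinates and applies a global threshold. It is a simplified stand-in rather than any cited pipeline: the pupil is assumed to be a circle with known center and radius lying fully inside the image, sampling is nearest-neighbor, and opacities are assumed to appear darker than the surrounding reflex.

```python
import math


def to_polar(image, center, radius, n_radii, n_angles):
    """Resample a circular region into an (n_radii x n_angles) grid."""
    cx, cy = center
    polar = []
    for i in range(n_radii):
        r = radius * i / n_radii
        row = []
        for j in range(n_angles):
            theta = 2 * math.pi * j / n_angles
            # Nearest-neighbor lookup of the Cartesian pixel.
            x = int(round(cx + r * math.cos(theta)))
            y = int(round(cy + r * math.sin(theta)))
            row.append(image[y][x])
        polar.append(row)
    return polar


def opacity_fraction(polar, threshold):
    """Global threshold: share of polar samples darker than the threshold."""
    samples = [value for row in polar for value in row]
    return sum(value < threshold for value in samples) / len(samples)
```

In the polar grid, radially oriented structures become roughly axis-aligned, which is one reason the polar domain is convenient for the subsequent thresholding and edge detection.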

Ultrasound Images & Digital Camera Images & AS-OCT Images: For ultrasound images, researchers adopt the Fourier Transform (FT) method, textural analysis method, and probability density to extract features Caixinha et al. (2016, 2014b, 2014a).

The procedure for extracting features from digital camera images is the same as that for slit lamp images, but different image processing methods are used, such as the Gabor wavelet transform, gray level co-occurrence matrix (GLCM), morphological image features, and Gaussian filter Patwari et al. (2011); Fuadah et al. (2015); Pathak and Kumar (2016).
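
To show what GLCM-based texture features look like in practice, here is a minimal sketch for a single pixel offset. It assumes the image has already been quantized to integer gray levels in `[0, levels)`; the three features computed (contrast, energy, and homogeneity in its inverse-difference-moment form) are common choices and are not necessarily those used in the cited works.

```python
def glcm(image, levels, dx=1, dy=0):
    """Normalized gray level co-occurrence matrix for one offset (dx, dy)."""
    counts = [[0] * levels for _ in range(levels)]
    rows, cols = len(image), len(image[0])
    total = 0
    for y in range(rows):
        for x in range(cols):
            ny, nx = y + dy, x + dx
            if 0 <= ny < rows and 0 <= nx < cols:
                counts[image[y][x]][image[ny][nx]] += 1
                total += 1
    return [[count / total for count in row] for row in counts]


def glcm_features(p):
    """Contrast, energy, and homogeneity of a normalized GLCM."""
    cells = [(i, j, p[i][j]) for i in range(len(p)) for j in range(len(p))]
    return {
        "contrast": sum(v * (i - j) ** 2 for i, j, v in cells),
        "energy": sum(v * v for _, _, v in cells),
        "homogeneity": sum(v / (1 + (i - j) ** 2) for i, j, v in cells),
    }
```

The resulting feature dictionary would then be passed to a classifier such as KNN or SVM.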

The steps to detect lens region for AS-OCT images are also similar to slit lamp images. Literature Zhang et al. (2022a) uses intensity-based statistics method and intensity histogram method to extract image features from AS-OCT images.
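
A minimal sketch of intensity-based statistics and an intensity histogram for a lens region is given below. The particular statistics, the number of bins, and the 8-bit intensity range are assumptions made for illustration, not the settings reported in Zhang et al. (2022a).

```python
from statistics import fmean, pstdev


def intensity_features(region, bins=4, max_value=255):
    """Basic intensity statistics plus a normalized intensity histogram."""
    pixels = [pixel for row in region for pixel in row]
    histogram = [0] * bins
    for pixel in pixels:
        # Map the integer intensity to one of `bins` equal-width bins.
        histogram[min(pixel * bins // (max_value + 1), bins - 1)] += 1
    return {
        "mean": fmean(pixels),
        "std": pstdev(pixels),
        "min": min(pixels),
        "max": max(pixels),
        "histogram": [count / len(pixels) for count in histogram],
    }
```

Here `region` is assumed to hold only the pixels of the segmented lens sub-region, for example the nucleus.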

Fundus image: Over the years, researchers have developed various wavelet and related transform methods to preprocess fundus images and extract valuable features, as shown in Fig. 10, such as the discrete wavelet transform (DWT), discrete cosine transform (DCT), Haar wavelet transform, and top-bottom hat transformation Zhou et al. (2019); Guo et al. (2015); Cao et al. (2020b).
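
As a small illustration of wavelet-based features, the sketch below computes one level of the 2D orthonormal Haar transform and the share of energy in the detail bands. It assumes an image with even height and width; the band names and the energy-ratio feature are our own illustrative choices, motivated by the general observation that blur attenuates high-frequency content.

```python
def haar_level1(image):
    """One level of the 2D orthonormal Haar transform (even-sized image)."""
    names = ("approximation", "detail_columns", "detail_rows", "detail_diagonal")
    bands = {name: [] for name in names}
    for r in range(0, len(image), 2):
        rows = {name: [] for name in names}
        for c in range(0, len(image[0]), 2):
            a, b = image[r][c], image[r][c + 1]
            d, e = image[r + 1][c], image[r + 1][c + 1]
            rows["approximation"].append((a + b + d + e) / 2)
            rows["detail_columns"].append((a - b + d - e) / 2)  # left minus right
            rows["detail_rows"].append((a + b - d - e) / 2)  # top minus bottom
            rows["detail_diagonal"].append((a - b - d + e) / 2)
        for name in names:
            bands[name].append(rows[name])
    return bands


def detail_energy_ratio(bands):
    """Share of the total energy carried by the three detail bands."""
    energy = {name: sum(v * v for row in band for v in row)
              for name, band in bands.items()}
    total = sum(energy.values())
    return 0.0 if total == 0 else (total - energy["approximation"]) / total
```

A lower detail-energy ratio would be expected for blurrier images, which is the kind of cue a downstream classifier could use.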

5.1.2 Classification & grading

In this section, we mainly introduce conventional machine learning methods for cataract classification/grading.

Support vector machine: The support vector machine (SVM) is a classical supervised machine learning technique that has been widely used for classification and regression tasks. It is a popular and efficient learning method for medical imaging applications. For the cataract grading task, Li et al. Li et al. (2009, 2010a) utilized support vector machine regression (SVR) to grade the severity level of cataract and achieved good grading results based on slit lamp images. The SVM classifier is widely used on different ophthalmic images for the cataract classification task. E.g., literature Jiang et al. (2017); Wang et al. (2017) applies SVM to classify cataract severity levels based on slit lamp images. For other ophthalmic image types, SVM also achieves good results based on extracted features Khan et al. (2018); Yang et al. (2016); Qiao et al. (2017).
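
For readers unfamiliar with how an SVM classifier is fitted to extracted features, the following is a minimal sketch of a linear soft-margin SVM trained by subgradient descent on the L2-regularized hinge loss. The surveyed works do not specify their solvers, and in practice an established library would be used; the hyperparameters and the binary labels (-1 for normal, +1 for cataract) are assumptions for illustration.

```python
def train_linear_svm(features, labels, epochs=200, learning_rate=0.1, reg=0.01):
    """Linear soft-margin SVM via subgradient descent on the hinge loss."""
    weights = [0.0] * len(features[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(features, labels):  # labels must be -1 or +1
            margin = y * (sum(w * xi for w, xi in zip(weights, x)) + bias)
            # L2 regularization shrinks the weights at every step.
            weights = [w * (1 - learning_rate * reg) for w in weights]
            if margin < 1:  # sample is misclassified or inside the margin
                weights = [w + learning_rate * y * xi
                           for w, xi in zip(weights, x)]
                bias += learning_rate * y
    return weights, bias


def predict(weights, bias, x):
    """Return +1 or -1 according to the side of the decision boundary."""
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias >= 0 else -1
```

Each row of `features` would hold the descriptors extracted from one image, for example texture or wavelet features.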

Linear regression: Linear regression (LR) is one of the most well-known ML methods and has been used to address different learning tasks. The concept of LR is still a basis for other advanced techniques, like deep neural networks. In LR, the model is a linear function whose parameters are learned from data by training. Literature Li et al. (2007) first studies automatic cataract grading with LR on slit lamp images and achieves good grading results. Following literature Li et al. (2007), Xu et al. Xu et al. (2013, 2016) proposed group sparsity regression (GSR) and similarity weighted linear reconstruction (SWLR) for cataract grading and achieved better grading results.
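
A minimal sketch of LR-based grading with a single feature is shown below, using the closed-form ordinary least-squares solution. The feature (a hypothetical per-image nucleus intensity score) and the toy grades are illustrative assumptions, not data from the cited works.

```python
def fit_line(xs, ys):
    """Ordinary least squares for one feature: returns (slope, intercept)."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def predict_grade(model, x):
    """Predict a decimal grade from the feature value x."""
    slope, intercept = model
    return slope * x + intercept


# Hypothetical feature values and clinician-assigned decimal grades.
model = fit_line([1.0, 2.0, 3.0, 4.0], [1.5, 2.5, 3.5, 4.5])
```

With several features, the same idea extends to multiple linear regression, which is where sparsity-inducing variants such as GSR come in.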

K-nearest neighbors: The K-nearest neighbors (KNN) method is a simple, easy-to-implement supervised machine learning method used for classification and regression tasks. It uses similarity measures to classify new cases based on stored instances. Fuadah et al. Fuadah et al. (2015) used KNN to detect cataract on digital camera images and achieved 97.2% accuracy. Literature Caxinha et al. (2015) also uses KNN for cataract classification on ultrasonic images, which were collected from an animal model.
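To make the "classify new cases based on stored instances" idea concrete, the following is a minimal sketch of KNN classification in plain Python. The feature vectors, labels, and the function name `knn_predict` are hypothetical and chosen only for illustration; they are not taken from the cited works.

```python
import math
from collections import Counter

def knn_predict(train_x, train_y, query, k=3):
    """Classify `query` by majority vote among its k nearest training samples."""
    # Euclidean distance from the query to every stored feature vector.
    dists = sorted(
        (math.dist(x, query), label) for x, label in zip(train_x, train_y)
    )
    top_labels = [label for _, label in dists[:k]]
    return Counter(top_labels).most_common(1)[0][0]

# Hypothetical 2-D features (e.g., mean intensity and texture contrast).
features = [(0.20, 0.10), (0.25, 0.15), (0.30, 0.12),
            (0.80, 0.70), (0.85, 0.75), (0.90, 0.65)]
labels = ["normal", "normal", "normal", "cataract", "cataract", "cataract"]

prediction = knn_predict(features, labels, (0.82, 0.68), k=3)
```

In practice the choice of k and of the distance measure would be tuned on validation data.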

Ensemble learning method: An ensemble learning method combines multiple machine learning methods to solve the same problem and usually obtains better classification performance. Researchers Yang et al. (2016); Zhang et al. (2019a); Cao et al. (2020b) have used several ensemble learning methods for cataract classification, such as Stacking, Bagging, and Voting. Ensemble learning methods achieved better cataract grading results than single machine learning methods.
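As an illustration of the Voting strategy, the sketch below combines the predictions of several base classifiers by majority vote. The three prediction lists are made-up placeholders standing in for the outputs of arbitrary base models; ties are broken in favor of the label seen first, which is an arbitrary choice of this sketch.

```python
from collections import Counter

def majority_vote(predictions):
    """Combine per-classifier label lists into one label per sample."""
    n_samples = len(predictions[0])
    combined = []
    for i in range(n_samples):
        votes = Counter(p[i] for p in predictions)
        # most_common keeps first-seen order among equally frequent labels.
        combined.append(votes.most_common(1)[0][0])
    return combined

# Hypothetical outputs of three base classifiers on four images (grades 0-2).
svm_out = [0, 1, 2, 1]
knn_out = [0, 2, 2, 1]
tree_out = [1, 1, 2, 0]

ensemble_out = majority_vote([svm_out, knn_out, tree_out])
```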

Ranking: Ranking orders the items of a list in ascending or descending order. Researchers Huang et al. (2009b, 2010) applied the ranking strategy to automatic cataract grading by computing a score for each image with a learned ranking function, such as RankBoost or Ranking SVM, and achieved competitive performance.

Other machine learning methods: Apart from the above-mentioned conventional ML methods, other ML methods have also been proposed for automatic cataract classification/grading, such as Markov random field (MRF), random forest (RF), Bayesian network, linear discriminant analysis (LDA), k-means, and decision tree (DT). E.g., literature Zhang and Li (2017) applies MRF to automatic CC classification and achieves good results. Song et al. (2019) uses a semi-supervised learning (SSL) framework for cataract classification based on fundus images and achieves competitive performance.

Furthermore, we can draw the following conclusions:

- For feature extraction, various image processing techniques have been developed according to the characteristics of ophthalmic images to extract useful features, such as edge detection, wavelet transform, and texture extraction methods. However, no previous work systematically compares these feature extraction methods on the same ophthalmic images, which would provide a standard benchmark for other researchers. Furthermore, existing works have not verified the effectiveness of a classical feature extraction method on different ophthalmic images, which is significant for assessing the generalization ability of a feature extraction method and for building commonly used feature extraction baselines for cataract classification/grading tasks.

- For classification/grading, researchers have made great efforts in developing state-of-the-art ML methods for recognizing cataract severity levels on ophthalmic images and demonstrated that ML methods can achieve competitive performance on extracted features. We found that no existing research has comprehensively compared ML methods on the same ophthalmic image type or across different ophthalmic image types; thus, it is necessary to build conventional ML baselines for cataract classification/grading, which can help researchers reproduce previous works and promote the development of cataract classification/grading tasks.

5.2 Deep learning methods

In recent years, with the rapid development of deep learning techniques, many deep learning methods, ranging from the artificial neural network (ANN), multilayer perceptron (MLP) neural network, backpropagation neural network (BPNN), convolutional neural network (CNN), and recurrent neural network (RNN) to attention mechanisms and Transformer-based methods, have been applied to solve different learning tasks such as image classification and medical image segmentation. In this survey, we mainly pay attention to deep learning methods for cataract classification tasks, and Table 2 provides a summary of deep learning methods for cataract classification/grading based on different ophthalmic images.

| Literature | Method | Image Type | Year | Application | Cataract Type |
|---|---|---|---|---|---|
| Gao et al. (2015) | CNN+RNN | Slit Lamp Image | 2015 | Grading | NC |
| Liu et al. (2017a) | CNN | Slit Lamp Image | 2017 | Classification | PCO |
| Jiang et al. (2017) | CNN | Slit Lamp Image | 2017 | Classification | PCO |
| Long et al. (2017) | CNN | Slit Lamp Image | 2017 | Classification | Cataract |
| Zhang et al. (2018a) | CNN | Slit Lamp Image | 2018 | Classification | Cataract |
| Jiang et al. (2018) | CNN+RNN | Slit Lamp Image | 2018 | Classification | PCO |
| Xu et al. (2019a) | Faster R-CNN | Slit Lamp Image | 2019 | Grading | NC |
| Ting et al. (2019) | CNN | Slit Lamp Image | 2019 | Classification | NC |
| Jun et al. (2019) | CNN | Slit Lamp Image | 2019 | Classification | NC |
| Wu et al. (2019) | CNN | Slit Lamp Image | 2019 | Classification | NC |
| Hu et al. (2020) | CNN | Slit Lamp Image | 2020 | Classification | NC |
| Jiang et al. (2021b) | CNN | Slit Lamp Image | 2021 | Classification | Cataract |
| Jiang et al. (2021a) | R-CNN | Slit Lamp Image | 2021 | Classification | PCO |
| Hu et al. (2021) | CNN | Slit Lamp Image | 2021 | Classification | Cataract |
| Tawfik et al. (2018) | Wavelet + ANN | Digital Camera Image | 2016 | Classification | Cataract |
| Caixinha et al. (2016) | MLP | Ultrasonic Image | 2016 | Classification | Cataract |
| Zhang et al. (2020a) | Attention | Ultrasonic Image | 2020 | Classification | Cataract |
| Wu et al. (2021) | CNN | Ultrasonic Image | 2021 | Classification | Cataract |
| Yang et al. (2016) | BPNN | Fundus Image | 2015 | Classification | Cataract |
| Linglin Zhang et al. (2017) | CNN | Fundus Image | 2017 | Classification | Cataract |
| Dong et al. (2017) | CNN | Fundus Image | 2017 | Classification | Cataract |
| Zhang et al. (2019a) | CNN | Fundus Image | 2019 | Classification | Cataract |
| Zhou et al. (2019) | MLP | Fundus Image | 2019 | Classification | Cataract |
| Zhou et al. (2019) | CNN | Fundus Image | 2019 | Classification | Cataract |
| Zhang et al. (2017) | CNN | Fundus Image | 2019 | Classification | Cataract |
| Pratap and Kokil (2019) | CNN | Fundus Image | 2019 | Classification | Cataract |
| Xu et al. (2019b) | CNN | Fundus Image | 2020 | Classification | Cataract |
| Pratap and Kokil (2021) | CNN | Fundus Image | 2021 | Classification | Cataract |
| Imran et al. (2021) | CNN+RNN | Fundus Image | 2021 | Classification | Cataract |
| Junayed et al. (2021) | CNN | Fundus Image | 2021 | Classification | Cataract |
| Tham et al. (2022) | CNN | Fundus Image | 2022 | Classification | Cataract |
| Zhang et al. (2020b) | CNN | AS-OCT Image | 2020 | Classification | NC |
| Xiao et al. (2021a) | CNN | AS-OCT Image | 2021 | Classification | NC |
| Xiao et al. (2021b) | Attention | AS-OCT Image | 2021 | Classification | NC |
| Zhang et al. (2022c) | Attention | AS-OCT Image | 2022 | Classification | NC |
| Zhang et al. (2022b) | Attention | AS-OCT Image | 2022 | Classification | NC |

5.2.1 Multilayer perceptron neural networks

Multilayer perceptron (MLP) neural network is one type of artificial neural network (ANN) composed of multiple hidden layers. Researchers often combine the MLP with hand-engineered feature extraction methods for cataract classification to obtain the expected performance. Zhou et al. Zhou et al. (2019) combined a shallow MLP model with feature extraction methods to classify cataract severity levels automatically. Caixinha et al. Caixinha et al. (2014a) utilized the MLP model for cataract classification and achieved 96.7% accuracy.

Deep neural networks (DNNs) comprise many hidden layers and are capable of capturing more informative feature representations from ophthalmic images than MLPs. DNNs are usually used as the dense (fully-connected) layers of CNN models. Literature Zhang et al. (2017); Dong et al. (2017); Zhang et al. (2019a) uses DNN models for cataract classification and achieves accuracy over 90% on fundus images.

Recently, several works Tolstikhin et al. (2021); Lian et al. (2021); Mansour et al. (2021); Touvron et al. (2021); Zheng et al. (2022) have demonstrated that deep network architectures built purely on multi-layer perceptrons (MLPs) can achieve competitive performance on the ImageNet classification task by using spatial and channel-wise token mixing to aggregate spatial information and build dependencies among visual features. These MLP-based models have shown promising results in classical computer vision tasks like image classification, semantic segmentation, and image reconstruction. However, to the best of our knowledge, MLP-based models have not been used to tackle ocular disease tasks, including cataract, on different ophthalmic images, which can be an emerging research direction for cataract classification/grading in the future.

5.2.2 Convolutional neural networks

Convolutional neural networks (CNNs) have been widely used in the ophthalmic image processing field and have achieved promising performance Szegedy et al. (2015); Krizhevsky et al. (2012); Chen et al. (2015); Fu et al. (2016); Abràmoff et al. (2016); Litjens et al. (2017); Mansoor et al. (2016); Xie et al. (2017); Szegedy et al. (2016); Huang et al. (2017); Zhang et al. (2018b). A CNN consists of an input layer, multiple convolutional layers, multiple pooling layers, multiple fully-connected layers, and an output layer. The function of the convolutional layers is to learn low-, middle-, and high-level feature representations from the input images through massive convolution operations in different stages.
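The two building blocks named above, convolution and pooling, can be sketched in a few lines of plain Python. This is only a toy illustration on a hand-made 5x5 image with a fixed vertical-edge kernel; in a real CNN the kernel weights are learned and the operations run on optimized tensor libraries.

```python
def conv2d(image, kernel):
    """Valid 2-D cross-correlation (what deep learning libraries call convolution)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b]
                for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

def relu(fmap):
    """Element-wise rectified linear unit."""
    return [[max(0, v) for v in row] for row in fmap]

def max_pool2d(fmap, size=2):
    """Non-overlapping max pooling; rows/columns that do not fit are dropped."""
    return [
        [
            max(fmap[i + a][j + b] for a in range(size) for b in range(size))
            for j in range(0, len(fmap[0]) - size + 1, size)
        ]
        for i in range(0, len(fmap) - size + 1, size)
    ]

# Toy image: dark on the left, bright on the right.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hand-picked vertical-edge kernel (a learned kernel would replace this).
edge_kernel = [[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]]

feature_map = relu(conv2d(image, edge_kernel))
pooled = max_pool2d(feature_map, size=2)
```

The feature map responds strongly where the window straddles the dark-to-bright edge and is zero in the uniform region, which is the sense in which early convolutional layers capture low-level features.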

For slit lamp images, previous works usually used classical image processing methods to localize the region of interest (ROI) of the lens, and the lens ROI is then used as the input of CNN models Liu et al. (2017a); Grewal et al. (2018); Xu et al. (2019a). E.g., Liu et al. Liu et al. (2017a) proposed a CNN model to detect and grade the severity levels of posterior capsular opacification (PCO), which used the lens ROI as the input of the CNN. Literature Xu et al. (2019a) uses original images as inputs for CNN models to detect cataract automatically through Faster R-CNN. Literature Long et al. (2017) develops an artificial intelligence platform for congenital cataract diagnosis and obtains good diagnosis results. Literature Wu et al. (2019) proposes a universal artificial intelligence platform for nuclear cataract management and achieves good results.

For fundus images, literature Xu et al. (2019b); Zhang et al. (2017); Dong et al. (2017); Zhang et al. (2019a) achieves competitive classification results with deep CNNs. Zhou et al. Zhou et al. (2019) proposed the EDST-ResNet model for cataract classification based on fundus images, in which they used a discrete state transition function Deng et al. (2018) as the activation function to improve the interpretability of CNN models. Fig. 12 provides a representative CNN framework for cataract classification on fundus images, which can help readers understand this task easily.

For AS-OCT images, literature Yin et al. (2018); Zhang et al. (2019b); Cao et al. (2020a) proposes CNN-based segmentation frameworks for automatic lens region segmentation based on AS-OCT images, which can help ophthalmologists localize and diagnose different types of cataract efficiently. Zhang et al. Zhang et al. (2020b) proposed a novel CNN model named GraNet for nuclear cataract classification on AS-OCT images but achieved poor results. Xiao et al. (2021a) uses a 3D ResNet architecture for cataract screening based on 3D AS-OCT images. Zhang et al. Zhang et al. (2022b) tested the NC classification performance of state-of-the-art CNNs like ResNet, VGG, and GoogleNet on AS-OCT images, and the results showed that EfficientNet achieved the best performance.

5.2.3 Recurrent neural networks

Recurrent neural network (RNN) is a class of neural network architectures in which connections between nodes form a directed or undirected graph along a temporal sequence, so that, unlike feedforward networks, the output at one step can influence later steps. RNNs are well suited to processing sequential data for various learning tasks Hu et al. (2020), e.g., speech recognition. Over the past decades, many RNN variants have been developed to address different tasks, among which the long short-term memory (LSTM) network is the most representative one. However, researchers have not yet used a pure RNN architecture to classify cataract severity levels, which can be a research direction for automatic cataract classification.

5.2.4 Attention mechanisms

Over the past years, attention mechanisms have been proven useful in various fields, such as computer vision Choi et al. (2020), natural language processing (NLP) Vaswani et al. (2017), and medical data processing Zhang et al. (2019c). Generally, an attention mechanism can be regarded as an adaptive weighting process applied in a local-global manner according to the feature representations of feature maps. In computer vision, attention can be classified into five categories: channel attention, spatial attention, temporal attention, branch attention, and attention combinations such as channel & spatial attention Guo et al. (2021). Each attention category has a different influence on the computer vision field. Researchers have recently used attention-based CNN models for cataract classification on different ophthalmic images. Zhang et al. Zhang et al. (2020a) proposed a residual attention-based network for cataract detection on ultrasound images. Xiao et al. Xiao et al. (2021b) applied a gated attention network (GCA-Net) to classify nuclear cataract severity levels on AS-OCT images and obtained good performance. Zhang et al. (2022b) presents a mixed pyramid attention network for AS-OCT image-based nuclear cataract classification, in which they construct the mixed pyramid attention (MPA) block by considering the relative significance of local-global feature representations and different feature representation types, as shown in Fig. 12.
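To illustrate what "adaptive weighting" means for the channel attention category, the following is a minimal sketch in the style of a squeeze-and-excitation block, written in plain Python. It is not the GCA or MPA block of the cited works; the two weight matrices are hand-picked placeholders, whereas in a real network they would be learned.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def channel_attention(feature_maps, w_reduce, w_expand):
    """SE-style channel attention on a list of C feature maps (each H x W)."""
    # Squeeze: global average pooling gives one descriptor per channel.
    squeezed = [
        sum(sum(row) for row in fmap) / (len(fmap) * len(fmap[0]))
        for fmap in feature_maps
    ]
    # Excitation: bottleneck MLP (ReLU, then sigmoid) yields a weight in (0, 1).
    hidden = [max(0.0, sum(w * s for w, s in zip(row, squeezed)))
              for row in w_reduce]
    weights = [sigmoid(sum(w * h for w, h in zip(row, hidden)))
               for row in w_expand]
    # Scale: reweight every channel by its attention weight.
    scaled = [
        [[v * wt for v in row] for row in fmap]
        for fmap, wt in zip(feature_maps, weights)
    ]
    return scaled, weights

# Two 2x2 channels; the weights below are illustrative, not learned values.
maps = [[[1.0, 1.0], [1.0, 1.0]], [[4.0, 4.0], [4.0, 4.0]]]
w_reduce = [[0.5, 0.5]]        # C = 2 -> hidden = 1
w_expand = [[-1.0], [1.0]]     # hidden = 1 -> C = 2

scaled_maps, channel_weights = channel_attention(maps, w_reduce, w_expand)
```

With these placeholder weights the second channel receives the larger attention weight, so its responses are preserved while the first channel is suppressed.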

In particular, self-attention is one representative kind of attention mechanism. Owing to its generality and its effectiveness in capturing long-range dependencies, it has been playing an increasingly important role in a variety of learning tasks Vaswani et al. (2017); Wang et al. (2018); Yu et al. (2021). Many deep self-attention networks (e.g., Transformer) have achieved state-of-the-art performance compared with mainstream CNNs on visual tasks. Vision Transformer (ViT) Dosovitskiy et al. (2020) is the first pure transformer architecture proposed for image classification and achieves promising performance. Recently, researchers have also extended transformer-based models to different medical image analysis tasks He et al. (2022); however, no current research work has utilized transformer-based architectures to recognize cataract severity levels.
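The way self-attention relates every token to every other token, and hence captures long-range dependencies, can be sketched as single-head scaled dot-product attention in plain Python. The token values and the identity projection matrices are illustrative assumptions; a real Transformer learns the projections and uses multiple heads.

```python
import math

def softmax(row):
    m = max(row)  # subtract the maximum for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def matmul(a, b):
    """Multiply matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]

def self_attention(tokens, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over token vectors."""
    q, k, v = matmul(tokens, w_q), matmul(tokens, w_k), matmul(tokens, w_v)
    d_k = len(k[0])
    k_t = [list(col) for col in zip(*k)]
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(q, k_t)]
    attn = [softmax(row) for row in scores]   # each row sums to 1
    return matmul(attn, v), attn

# Three toy tokens (e.g., image patches) of dimension 2.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]   # placeholder for learned projections

context, attention = self_attention(tokens, identity, identity, identity)
```

Each output token is a weighted average of all value vectors, with weights determined by query-key similarity rather than spatial proximity.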

5.2.5 Hybrid neural networks

A hybrid neural network is a neural network composed of two or more deep neural network types. In recent years, researchers have increasingly used hybrid neural networks to address different learning tasks Gulati et al. (2020), owing to their ability to inherit the advantages of different neural network architectures, such as CNN, MLP, and transformer. Literature Jiang et al. (2018); Gao et al. (2015); Imran et al. (2021) proposes hybrid neural network models for cataract classification/grading by combining the characteristics of RNN and CNN models. In the future, we believe that more advanced hybrid neural network models will be designed for cataract classification/grading based on different ophthalmic image modalities.

- Ophthalmic image perspective: Slit lamp images and fundus images account for most automatic cataract classification/grading works because these two ophthalmic image modalities are easy to access and have clinical gold standards. Apart from ultrasound images, which were collected from both animal models and human subjects, five other ophthalmic imaging modalities have been used for automatic cataract classification/grading.

- Cataract classification/grading perspective: Existing works mainly focused on cataract screening, and most of them achieved over 80% accuracy. There are more cataract classification works than cataract grading works because discrete labels are easier to obtain and confirm than continuous labels.

- Publication year perspective: Conventional machine learning methods were first used to classify or grade cataract automatically. With the emergence of deep learning, researchers have gradually constructed advanced deep neural network architectures to automatically predict cataract severity levels owing to their strong performance. However, the interpretability of deep learning methods is worse than that of conventional machine learning methods.

- Learning paradigm perspective: Most previous machine learning methods are supervised learning methods, and only two existing methods used semi-supervised learning. Although unsupervised and semi-supervised learning methods have achieved competitive performance in computer vision and NLP, they have not been widely applied to automatic cataract diagnosis.

- Deep neural network architecture perspective: According to Table 2, CNNs account for over 50% of the deep neural network architectures. Two reasons can explain this: 1) ophthalmic images are the most commonly used means of cataract diagnosis by clinicians; 2) compared with RNNs and MLPs, CNNs are better suited to processing image data. To enhance the precision of cataract diagnosis, it is better to combine image data with non-imaging data, e.g., age.

- Performance comparison on private/public datasets: Pratap et al. Pratap and Kokil (2021) compared AlexNet, GoogleNet, ResNet, ResNet50, and SqueezeNet for cataract classification on the public EyePACS dataset; the results showed that all CNN models obtained over 86% accuracy and that AlexNet achieved the best performance. Zhang et al. (2022b) reports the NC classification results of attention-based CNNs (CBAM, ECA, GCA, SPA, and SE) on a private NC dataset, and the results showed that SE obtained better performance than the other strong attention methods. Furthermore, Xu et al. (2019b, a); Pratap and Kokil (2021, 2019) used pre-trained CNNs for automatic cataract classification on the EyePACS dataset following the transfer learning strategy, and they concluded that fine-tuning pre-trained CNNs yielded better cataract classification performance than training CNNs from scratch.

- Data augmentation techniques: Researchers commonly augment ophthalmic image data for deep neural networks in cataract classification/grading with methods such as flipping, cropping, rotation, and noise injection. To further validate or enhance the generalization ability of deep neural network models on cataract classification/grading tasks, other data augmentation techniques should be considered, such as translation, color space transformations, random erasing, adversarial training, and meta-learning Shorten and Khoshgoftaar (2019).
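The basic augmentations listed in the last point (flipping, cropping, rotation, and noise injection) can be sketched in plain Python on an image stored as a list of rows. This is a minimal illustration assuming grayscale intensities in [0, 1]; the function names and the toy image are hypothetical, and real pipelines would typically rely on an image library.

```python
import random

def horizontal_flip(img):
    return [row[::-1] for row in img]

def vertical_flip(img):
    return [row[:] for row in img[::-1]]

def rotate90(img):
    """Rotate clockwise by 90 degrees."""
    return [list(col) for col in zip(*img[::-1])]

def random_crop(img, size, rng):
    """Cut a random size x size window out of the image."""
    top = rng.randint(0, len(img) - size)
    left = rng.randint(0, len(img[0]) - size)
    return [row[left:left + size] for row in img[top:top + size]]

def add_gaussian_noise(img, sigma, rng):
    """Add zero-mean Gaussian noise and clip to the [0, 1] intensity range."""
    return [[min(1.0, max(0.0, v + rng.gauss(0.0, sigma))) for v in row]
            for row in img]

# Toy 3x3 grayscale image.
img = [[0.0, 0.1, 0.2], [0.3, 0.4, 0.5], [0.6, 0.7, 0.8]]
rng = random.Random(0)   # fixed seed so the augmentations are reproducible

augmented = [
    horizontal_flip(img),
    vertical_flip(img),
    rotate90(img),
    random_crop(img, 2, rng),
    add_gaussian_noise(img, 0.05, rng),
]
```

Whether a given transformation preserves the label is a clinical question; for example, a flip may be harmless for screening but not for tasks that depend on laterality.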

6 Evaluation measures

This section introduces evaluation measures to assess the performance of cataract classification/grading. In this survey, classification denotes that the cataract labels used for learning are discrete, e.g., 1, 2, 3, 4, while grading denotes that the cataract labels are continuous, such as 0.1, 0.5, 1.0, 1.6, and 3.3.

For cataract classification, accuracy (ACC), sensitivity (recall), specificity, precision, F1-measure (F1), and G-mean are commonly used to evaluate the classification performance Guo et al. (2015); Cao et al. (2020b); Jiang et al. (2018).

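$$\mathrm{ACC} = \frac{TP + TN}{TP + TN + FP + FN} \tag{1}$$

$$\mathrm{Sensitivity} = \frac{TP}{TP + FN} \tag{2}$$

$$\mathrm{Specificity} = \frac{TN}{TN + FP} \tag{3}$$

$$\mathrm{Precision} = \frac{TP}{TP + FP} \tag{4}$$

$$\mathrm{F1} = \frac{2 \times \mathrm{Precision} \times \mathrm{Sensitivity}}{\mathrm{Precision} + \mathrm{Sensitivity}} \tag{5}$$

$$\mathrm{G\text{-}mean} = \sqrt{\mathrm{Sensitivity} \times \mathrm{Specificity}} \tag{6}$$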

where TP, FP, TN, and FN denote the numbers of true positives, false positives, true negatives, and false negatives, respectively. Other evaluation measures like receiver operating characteristic curve (ROC), the area under the ROC curve (AUC), and kappa coefficient Cao et al. (2020b) are also applied to measure the overall performance.
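The classification measures named above can be computed directly from the four confusion-matrix counts, as in the following sketch. The standard textbook definitions are used; in particular, G-mean is taken here as the geometric mean of sensitivity and specificity, which is a common definition but an assumption of this sketch. The counts in the example are made up.

```python
import math

def classification_metrics(tp, fp, tn, fn):
    """Binary metrics from confusion-matrix counts (denominators assumed non-zero)."""
    sensitivity = tp / (tp + fn)      # also called recall
    specificity = tn / (tn + fp)
    precision = tp / (tp + fp)
    return {
        "ACC": (tp + tn) / (tp + fp + tn + fn),
        "Sensitivity": sensitivity,
        "Specificity": specificity,
        "Precision": precision,
        "F1": 2 * precision * sensitivity / (precision + sensitivity),
        "G-mean": math.sqrt(sensitivity * specificity),
    }

# Hypothetical screening result on 200 images.
metrics = classification_metrics(tp=80, fp=10, tn=90, fn=20)
```

For multi-class severity levels, these measures are usually computed per class in a one-vs-rest fashion and then averaged.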

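For cataract grading, the following evaluation measures are used to evaluate the overall performance: the exact integral agreement ratio $R_0$, the percentage of decimal grading errors $\le 0.5$ ($R_{e0.5}$), the percentage of decimal grading errors $\le 1.0$ ($R_{e1.0}$), and the mean absolute error $\varepsilon$ Li et al. (2007); Cheung et al. (2011); Xu et al. (2016); Huiqi Li et al. (2008); Huang et al. (2010).

$$R_0 = \frac{\left| \lceil G_{gt} \rceil = \lceil G_{pr} \rceil \right|_0}{N} \tag{7}$$

$$R_{e0.5} = \frac{\left| \, |G_{gt} - G_{pr}| \le 0.5 \, \right|_0}{N} \tag{8}$$

$$R_{e1.0} = \frac{\left| \, |G_{gt} - G_{pr}| \le 1.0 \, \right|_0}{N} \tag{9}$$

$$\varepsilon = \frac{\sum |G_{gt} - G_{pr}|}{N} \tag{10}$$

where $G_{gt}$ and $G_{pr}$ denote the ground-truth grade and the predicted grade, respectively. $\lceil \cdot \rceil$ is the ceiling function, $|\cdot|$ is the absolute value function, $|\cdot|_0$ is a function that counts the number of non-zero values, and $N$ denotes the number of images.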
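The four grading measures can be sketched in plain Python as follows. The sketch assumes that the two decimal-error thresholds are 0.5 and 1.0 and that integral agreement is checked after applying the ceiling function to both grades; the key names and the predicted grades are illustrative, while the ground-truth grades reuse the example values given at the start of this section.

```python
import math

def grading_metrics(ground_truth, predicted):
    """Exact integral agreement, decimal-error ratios, and mean absolute error."""
    n = len(ground_truth)
    errors = [abs(g - p) for g, p in zip(ground_truth, predicted)]
    return {
        # Fraction of images whose grades agree after rounding up.
        "R0": sum(math.ceil(g) == math.ceil(p)
                  for g, p in zip(ground_truth, predicted)) / n,
        # Fraction of images with absolute error at most 0.5 / 1.0.
        "Re0.5": sum(e <= 0.5 for e in errors) / n,
        "Re1.0": sum(e <= 1.0 for e in errors) / n,
        "MAE": sum(errors) / n,
    }

gt = [0.1, 0.5, 1.0, 1.6, 3.3]
pred = [0.3, 1.2, 1.4, 1.5, 2.1]   # hypothetical model outputs

scores = grading_metrics(gt, pred)
```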

7 Challenges and possible solutions

Although researchers have made significant progress in automatic cataract classification/grading over the years, this field still faces challenges. This section presents these challenges and gives possible solutions.

7.1 Lacking public cataract datasets

Public cataract datasets are critical for cataract classification/grading. Although previous works have made tremendous progress in automatic cataract classification/grading Xu et al. (2013); Foong et al. (2007); Caixinha et al. (2014b); Guo et al. (2015), there is no public and standard ophthalmology image dataset available except for public challenge datasets and multiple ocular disease datasets. Hence, it is difficult for researchers to follow previous works because the cataract datasets are unavailable. To address this problem, it is necessary and significant to build public and standard ophthalmology image datasets based on standardized medical data collection and storage protocols. Public cataract datasets can be collected from hospitals and clinics with ophthalmologists’ help. This dataset collection mode can ensure the quality and diversity of cataract data and help researchers develop more advanced ML methods.

7.2 Developing standard cataract classification/grading protocols based on new ophthalmic imaging modalities

Most existing works used the LOCS III as the clinical gold standard to grade/classify cataract severity levels for scientific research purposes and clinical practice. However, the LOCS III was developed based on slit lamp images and may not work well for other ophthalmic images, such as fundus images, AS-OCT images, and ultrasonic images. To solve this problem, researchers have made much effort in constructing new cataract classification/grading standards for other ophthalmic image types. E.g., researchers referred to the WHO Cataract Grading System and developed a cataract classification/grading protocol for fundus images Xu et al. (2010) based on clinical research and practice, which is widely used in automatic fundus-based cataract classification Xu et al. (2019b); Zhang et al. (2017); Dong et al. (2017); Zhang et al. (2019a).

To address the issue of developing standard cataract classification/grading protocols for new eye images, e.g., AS-OCT images, we propose two possible solutions for reference as follows.

- Developing a cataract grading protocol based on clinical verification. Literature Grulkowski et al. (2018); Pawliczek et al. (2020) uses AS-OCT images to observe the lens opacities of patients based on the LOCS III in clinical practice, and statistical results from inter-class and intra-class analysis showed a high correlation between cataract severity levels and clinical features. This clinical finding may provide clinical support and reference. Hence, it is feasible to develop a reference cataract classification/grading protocol for AS-OCT images based on a clinical verification method like that of the LOCS III.

- Building the mapping relationship between two ophthalmic imaging modalities. Lens opacity is an important indicator of the severity level of cataracts, and it is presented in different forms on different ophthalmic images in clinical research. Therefore, it is feasible to construct the mapping relationship between two different ophthalmic imaging modalities and develop a new cataract classification/grading protocol by comparing the lens opacities, e.g., relating the fundus image-based cataract classification system to the WHO Cataract Grading System.

Furthermore, to verify the effectiveness of new standard cataract grading protocols, we must collect multi-center data from hospitals in different regions.

7.3 How to annotate cataract images accurately

Data annotation is a challenging problem for the medical image analysis field, including cataract image analysis, since it is the basis for accurate ML-based disease diagnosis. However, clinicians cannot label massive numbers of cataract images manually Resnikoff et al. (2020, 2012) because manual labeling is expensive, time-consuming, and subjective. To address this challenge, we offer the following solutions:

- Semi-supervised learning: Song et al. (2016, 2019) uses the semi-supervised learning strategy to recognize cataract severity levels on fundus images and achieves the expected performance. It is feasible to utilize weakly supervised learning methods to learn useful information from labeled cataract images and then let the method automatically label unlabeled cataract images according to the learned information.

- Unsupervised learning: Recent works have shown that deep clustering/unsupervised learning techniques can help researchers acquire labels actively rather than passively Ravi and Larochelle (2016); Grira et al. (2004); Fogel et al. (2019); Guo et al. (2017b); Hsu and Kira (2015). Thus, we can apply deep clustering/unsupervised learning methods to label cataract images in the future.

- Content-based image retrieval: The content-based image retrieval (CBIR) technique Ramamurthy and Chandran (2011); Sivakamasundari et al. (2014); Fathabad and Balafar (2012); Fang et al. (2020) has been widely used for different tasks based on different image features, and it can also be utilized to annotate cataract images by comparing testing images with standard images.
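The idea shared by these solutions, learning from a few labeled images and then labeling the remaining ones automatically, can be sketched as a simple pseudo-labeling step. The sketch below uses a nearest-centroid rule with a confidence margin purely for illustration; it is not the method of the cited works, and the features, labels, and `margin` value are hypothetical.

```python
import math

def centroids(samples, labels):
    """Mean feature vector per class."""
    groups = {}
    for x, y in zip(samples, labels):
        groups.setdefault(y, []).append(x)
    return {y: [sum(c) / len(xs) for c in zip(*xs)] for y, xs in groups.items()}

def pseudo_label(labeled_x, labeled_y, unlabeled_x, margin=0.2):
    """Label samples whose nearest centroid wins by at least `margin`."""
    cents = centroids(labeled_x, labeled_y)
    accepted, deferred = [], []
    for x in unlabeled_x:
        ranked = sorted((math.dist(x, c), y) for y, c in cents.items())
        if len(ranked) == 1 or ranked[1][0] - ranked[0][0] >= margin:
            accepted.append((x, ranked[0][1]))
        else:
            deferred.append(x)   # ambiguous case: leave for a clinician
    return accepted, deferred

# Hypothetical 2-D features for four labeled and three unlabeled images.
labeled_x = [(0.1, 0.2), (0.2, 0.1), (0.9, 0.8), (0.8, 0.9)]
labeled_y = [0, 0, 1, 1]
unlabeled_x = [(0.2, 0.2), (0.8, 0.7), (0.5, 0.5)]

accepted, deferred = pseudo_label(labeled_x, labeled_y, unlabeled_x)
```

Deferring ambiguous samples to a human annotator keeps label noise low while still reducing the manual workload.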

7.4 How to classify/grade cataract accurately for precise cataract diagnosis

Most previous works focused on cataract screening, and few works considered clinical cataract diagnosis, especially for cataract surgery planning. Clinically, different cataract severity levels and cataract types require different treatments. Hence, it is necessary to develop state-of-the-art methods to classify cataract severity levels accurately, and this survey provides the following research directions.

- Clinical prior knowledge injection: Cataracts are associated with various factors Lin et al. (2020), e.g., sub-lens regions Xu et al. (2016); Gao et al. (2011); Caxinha et al. (2015); Grulkowski et al. (2018); Pawliczek et al. (2020), which can be considered as domain knowledge of cataract. Thus, we can infuse this domain knowledge into deep networks for automatic cataract classification/grading according to the characteristics of ophthalmic images. E.g., Zhang et al. (2022b) incorporates clinical features into attention-based network design for classification.

- Multi-task learning for classification and segmentation: Over the past decades, multi-task learning techniques have been successfully applied to various fields, including medical image analysis. Xu et al. Xu et al. (2019a) used the Faster R-CNN framework to detect the lens region and grade nuclear cataract severity levels on slit lamp images and achieved competitive performance. Literature Yin et al. (2018); Zhang et al. (2019b) proposes deep segmentation network frameworks for automatic lens subregion segmentation based on AS-OCT images, which is a significant basis for cataract diagnosis and cataract surgery planning. More multi-task learning frameworks should be developed for cataract classification and lens segmentation, considering that a multi-task learning framework usually keeps a good balance between performance and complexity.

- Transfer learning: Over the years, researchers have used transfer learning with pre-trained CNNs to improve cataract classification performance Xu et al. (2019b, a). As previous works have demonstrated, large deep neural network models usually perform better than small ones when massive data are available. However, it is challenging to collect massive data in the medical field; thus, it is vital to develop transfer learning strategies that make full use of the pre-trained parameters of large deep neural network models to further improve the performance of cataract-related tasks.

- Multimodality learning: Previous works used only a single ophthalmic image type for cataract diagnosis Xu et al. (2016); Gao et al. (2011); Caxinha et al. (2015); Yang et al. (2013). Multimodality learning Cardoso et al. (2017); Ma et al. (2019); Guo et al. (2019); Cheng et al. (2015) has been utilized to tackle different medical image tasks and can also be used for automatic cataract classification/grading based on multiple ophthalmic image types or on the combination of ophthalmic images and non-image data. Multimodality data can be divided into image data and non-image data. However, it is challenging to use multimodality images for automatic cataract classification for two reasons: 1) only slit lamp images and fundus images have standard cataract classification systems, and no correlation between the two systems has been established; 2) existing classification systems are subjective, so it is challenging for clinicians to label cataract severity levels consistently across different ophthalmic images. In contrast, image data and non-image data can be combined for automatic cataract diagnosis because non-image data associated with cataracts, such as age and sex, are easy to collect. Furthermore, recent studies Mohammadi et al. (2012); Lin et al. (2020) have used non-image data for PC and PCO diagnosis, which demonstrates the potential of multimodality data for improving the precision of cataract diagnosis.

- Image denoising: Image noise is an important factor affecting automatic cataract diagnosis on ophthalmic images. Researchers have proposed different methods to remove noise from the images based on the characteristics of ophthalmic images, such as the Gaussian filter, discrete wavelet transform (DWT), discrete cosine transform (DCT), Haar wavelet transform, and its variants Zhou et al. (2019); Guo et al. (2015); Fuadah et al. (2015). Additionally, recent research has begun to use GAN models for medical image denoising and achieved good results.

7.5 Improving the interpretability of deep learning methods

Deep learning methods have been widely used for cataract classification/grading. However, deep learning methods are still considered black boxes that do not explain why they make good or poor predictions. Therefore, it is necessary to give reliable explanations for the predicted results of deep learning methods. Literature Zhou et al. (2019) visualizes the weight distributions of deep convolutional layers and dense layers to explain the predicted results of deep learning methods. It is also possible to analyze the similarities between the weights of convolutional layers to describe the predicted results.

Zhang et al. (2019b) indicated that infusing the domain knowledge into networks can improve the cataract segmentation results based on AS-OCT images and enhance the interpretability. It is promising for researchers to combine deep learning methods with different domain knowledge to enhance the interpretability of deep networks.

Modern neural networks are usually poorly calibrated Guo et al. (2017a). Müller et al. (2019) indicated that improving the confidence of the predicted probability can help explain the predicted results. Enhancing the confidence of the predicted probability is therefore a potential way for researchers to enhance interpretability.
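One common way to quantify how well predicted confidences match observed accuracy is the expected calibration error (ECE). The sketch below is a plain-Python illustration with equal-width confidence bins; the choice of ECE, the number of bins, and the example values are assumptions of this sketch and are not necessarily what the cited works report.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """ECE: bin-size-weighted mean gap between accuracy and average confidence."""
    n = len(confidences)
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        # Equal-width bins [i/n_bins, (i+1)/n_bins); conf == 1.0 goes to the last bin.
        idx = min(int(conf * n_bins), n_bins - 1)
        bins[idx].append((conf, ok))
    ece = 0.0
    for items in bins:
        if not items:
            continue
        avg_conf = sum(c for c, _ in items) / len(items)
        accuracy = sum(1 for _, ok in items if ok) / len(items)
        ece += len(items) / n * abs(accuracy - avg_conf)
    return ece

# Overconfident model: 95% confidence but only half of the predictions are right.
overconfident = expected_calibration_error(
    [0.95, 0.95, 0.95, 0.95], [True, True, False, False])
# Well-calibrated model: 75% confidence and three out of four correct.
calibrated = expected_calibration_error(
    [0.75, 0.75, 0.75, 0.75], [True, True, True, False])
```

A lower ECE means that a reported confidence of, say, 90% can be read as roughly a 90% chance of a correct prediction, which is what clinicians need when deciding how much to trust a prediction.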

Moreover, researchers have made great progress on the interpretability of deep learning methods in the computer vision field. Zhou et al. (2016); Selvaraju et al. (2017); Guidotti et al. (2018); Lu et al. (2017) used heat maps to localize the discriminative regions that CNNs learned. Kornblith et al. (2019) proposed a correlation analysis technique to analyze the similarities of neural network representations and thereby explain what neural networks had learned. These interpretability techniques can also be applied to deep learning-based cataract classification to improve interpretability.
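To make the heat-map idea concrete, the following minimal sketch builds a class activation map in the style of Zhou et al. (2016): the feature maps of the last convolutional layer are summed, each weighted by the classifier weight that links it to the target class. The function name `class_activation_map`, the nested-list representation, the min-max rescaling, and the toy numbers are illustrative assumptions.

```python
def class_activation_map(feature_maps, class_weights):
    """Weighted sum of feature maps, rescaled to [0, 1].

    feature_maps:  K maps, each an H x W nested list, from the last conv layer.
    class_weights: K weights linking each pooled map to the target class.
    """
    height = len(feature_maps[0])
    width = len(feature_maps[0][0])
    cam = [[0.0] * width for _ in range(height)]
    for fmap, weight in zip(feature_maps, class_weights):
        for y in range(height):
            for x in range(width):
                cam[y][x] += weight * fmap[y][x]

    # Min-max normalize so the map can be overlaid on the image as a heat map.
    lo = min(min(row) for row in cam)
    hi = max(max(row) for row in cam)
    span = hi - lo
    if span == 0:
        return [[0.0] * width for _ in range(height)]
    return [[(v - lo) / span for v in row] for row in cam]


# Toy example: two 2x2 feature maps and hypothetical weights for one class.
maps = [
    [[1.0, 0.0], [0.0, 0.0]],   # responds to the top-left region
    [[0.0, 0.0], [0.0, 1.0]],   # responds to the bottom-right region
]
weights = [2.0, -1.0]
heat = class_activation_map(maps, weights)
```

In practice the resulting map is upsampled to the input resolution and overlaid on the ophthalmic image, so that a clinician can check whether the highlighted region coincides with the lens opacity. Gradient-based variants such as that of Selvaraju et al. (2017) replace the classifier weights with pooled gradients.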

7.6 Mobile cataract screening

In the past years, researchers have used digital camera images for cataract screening based on ML techniques and have achieved good results Supriyanti and Ramadhani (2011); Patwari et al. (2011); Fuadah et al. (2015); Supriyanti and Ramadhani (2012); Pathak and Kumar (2016); Rana and Galib (2017). Digital camera images can be acquired with mobile phones, which are widely used worldwide. Thus, mobile phones with powerful built-in digital cameras have great potential for cataract screening. Although deep learning methods can achieve excellent cataract screening results on digital camera images, they contain too many parameters. One possible solution is to develop lightweight network models. Researchers have made significant progress in designing lightweight deep learning methods over the years Cheng et al. (2017); Jiang et al. (2019); He et al. (2017), demonstrating that deep networks can keep a good balance between accuracy and speed, which is important for developing lightweight deep networks for cataract screening.
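One way to see why lightweight designs matter is to count parameters. The sketch below compares a standard convolution with a depthwise separable convolution, a building block commonly used in lightweight networks. This choice of building block, the helper names, and the layer sizes are illustrative assumptions and are not taken from the cited works.

```python
def conv_params(in_channels, out_channels, kernel_size):
    """Weights in a standard k x k convolution (bias terms ignored)."""
    return kernel_size * kernel_size * in_channels * out_channels


def depthwise_separable_params(in_channels, out_channels, kernel_size):
    """Depthwise k x k convolution followed by a 1 x 1 pointwise convolution."""
    depthwise = kernel_size * kernel_size * in_channels
    pointwise = in_channels * out_channels
    return depthwise + pointwise


# Hypothetical layer: 3 x 3 kernel mapping 256 channels to 256 channels.
standard = conv_params(256, 256, 3)
separable = depthwise_separable_params(256, 256, 3)
reduction = standard / separable
```

For this hypothetical layer the count drops from 589,824 to 67,840 weights, roughly 8.7 times fewer, which indicates the kind of saving that can make on-device screening feasible. Whether accuracy is preserved has to be verified empirically for each task.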

7.7 How to evaluate the generalization ability of machine learning methods for other eye disease classification tasks

Researchers have proposed many machine learning techniques for automatic cataract classification/grading, including conventional machine learning methods and deep learning methods, and have obtained promising performance on different eye images. However, only Zhang et al. (2022b) validated the generalization ability of their proposed attention-based network on both cataract and age-related macular degeneration (AMD) classification tasks, while others focused purely on the cataract classification task. The following reasons can explain this: 1) Due to the characteristics of eye images, it is difficult to validate the effectiveness of a method on different eye disease classification tasks, especially for conventional machine learning methods. 2) Most existing works focused on specific eye disease tasks, such as cataract and glaucoma, rather than on universal methods for medical image analysis. 3) Appropriate open-access medical datasets are rare. 4) No previous work has systematically summarized the existing methods and built standard baselines.

This survey offers the following solutions:

- More open-access medical datasets should be released.

- It is important to build baselines for different eye disease tasks, which can provide standard benchmarks for others to follow.

7.8 Others

Apart from the above-mentioned challenges, medical image analysis, including cataract analysis, faces other challenges, such as label noise Song et al. (2022), domain distribution shift across different datasets, and how to train more robust models. However, these challenges have not yet been studied in the cataract classification/grading field. Considering real diagnosis requirements, it is necessary to address them with advanced machine learning methods, such as graph convolution methods Zhong et al. (2019), knowledge distillation, and adversarial learning.

8 Conclusion

Automatic and objective cataract classification/grading is an important and active research topic that helps reduce the blindness ratio and improve quality of life. In this survey, we review recent advances in ML-based cataract classification/grading on ophthalmic imaging modalities and highlight two research directions: conventional ML methods and deep learning methods. Although ML techniques have made significant progress in cataract classification and grading, there is still room for improvement. First, public cataract ophthalmic image datasets are needed, which can help build ML baselines. Second, automatic ophthalmic image annotation has not been studied seriously and is a potential research direction. Third, developing accurate and objective ML methods for clinical cataract diagnosis is an efficient way to help more people improve their vision. Fourth, it is necessary to enhance the interpretability of deep learning, which can promote its application in cataract diagnosis. Finally, previous works have used digital camera images for cataract screening; devising lightweight ML techniques for this setting is a promising way to address the cataract screening problem in developing countries with limited access to expensive ophthalmic devices.

Abbreviations

CAD: computer-aided diagnosis; ML: machine learning; WHO: World Health Organization; NC: nuclear cataract; CC: cortical cataract; PSC: posterior subcapsular cataract; LR: linear regression; RF: random forest; SVR: support vector machine regression; ASM: active shape model; NLP: natural language processing; OCT: optical coherence tomography; LOCS: Lens Opacity Classification System; OCCCGS: Oxford Clinical Cataract Classification and Grading System; SVM: support vector machine; AI: artificial intelligence; BOF: bag-of-features; PCA: principal component analysis; DWT: discrete wavelet transform; DCT: discrete cosine transform; KNN: K-nearest neighbor; ANN: artificial neural network; BPNN: back propagation neural network; CNN: convolutional neural network; RNN: recurrent neural network; DNN: deep neural network; MLP: multilayer perceptron; ROI: region of interest; AE: autoencoder; GAN: generative adversarial network; CBIR: content-based image retrieval; AS-OCT: anterior segment optical coherence tomography; 3D: three-dimensional; 2D: two-dimensional; SSL: semi-supervised learning; AWM: adaptive window model; GSR: group sparsity regression; GLCM: gray level co-occurrence matrix; IMF: image morphological feature; SWLR: similarity weighted linear reconstruction; CBAM: convolutional block attention module; ECA: efficient channel attention; SE: squeeze-and-excitation; SPA: spatial pyramid attention.

References

- Abràmoff et al. (2016) Abràmoff MD, Lou Y, Erginay A, Clarida W, Amelon R, Folk JC, Niemeijer M (2016) Improved automated detection of diabetic retinopathy on a publicly available dataset through integration of deep learning. Investigative ophthalmology & visual science 57(13):5200–5206

- Ang et al. (2018) Ang M, Baskaran M, Werkmeister RM, Chua J, Schmidl D, dos Santos VA, Garhöfer G, Mehta JS, Schmetterer L (2018) Anterior segment optical coherence tomography. Progress in Retinal and Eye Research 66:132–156, DOI https://doi.org/10.1016/j.preteyeres.2018.04.002, URL http://www.sciencedirect.com/science/article/pii/S135094621730085X

- Asbell et al. (2005) Asbell PA, Dualan I, Mindel J, Brocks D, Ahmad M, Epstein S (2005) Age-related cataract. The Lancet 365(9459):599–609

- for the Prevention of Blindness and Group (2001) for the Prevention of Blindness WP, Group WCG (2001) A simplified cataract grading system

- Bourne et al. (2017) Bourne RR, Flaxman SR, Braithwaite T, Cicinelli MV, Das A, Jonas JB, Keeffe J, Kempen JH, Leasher J, Limburg H, et al. (2017) Magnitude, temporal trends, and projections of the global prevalence of blindness and distance and near vision impairment: a systematic review and meta-analysis. The Lancet Global Health 5(9):e888–e897

- Caixinha et al. (2014a) Caixinha M, Jesus DA, Velte E, Santos MJ, Santos JB (2014a) Using ultrasound backscattering signals and nakagami statistical distribution to assess regional cataract hardness. IEEE Transactions on Biomedical Engineering 61(12):2921–2929

- Caixinha et al. (2014b) Caixinha M, Velte E, Santos M, Santos JB (2014b) New approach for objective cataract classification based on ultrasound techniques using multiclass svm classifiers. In: 2014 IEEE International Ultrasonics Symposium, IEEE, pp 2402–2405

- Caixinha et al. (2016) Caixinha M, Amaro J, Santos M, Perdigão F, Gomes M, Santos J (2016) In-vivo automatic nuclear cataract detection and classification in an animal model by ultrasounds. IEEE Transactions on Biomedical Engineering 63(11):2326–2335

- Cao et al. (2020a) Cao G, Zhao W, Higashita R, Liu J, Chen W, Yuan J, Zhang Y, Yang M (2020a) An efficient lens structures segmentation method on as-oct images. In: 2020 42nd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), IEEE, pp 1646–1649

- Cao et al. (2020b) Cao L, Li H, Zhang Y, Zhang L, Xu L (2020b) Hierarchical method for cataract grading based on retinal images using improved haar wavelet. Information Fusion 53:196–208

- Cardoso et al. (2017) Cardoso MJ, Arbel T, Carneiro G, Syeda-Mahmood T, Tavares JMR, Moradi M, Bradley A, Greenspan H, Papa JP, Madabhushi A, et al. (2017) Deep learning in medical image analysis and multimodal learning for clinical decision support

- Carmona et al. (2008) Carmona EJ, Rincón M, García-Feijoó J, Martínez-de-la Casa JM (2008) Identification of the optic nerve head with genetic algorithms. Artificial Intelligence in Medicine 43(3):243–259

- Caxinha et al. (2015) Caxinha M, Velte E, Santos M, Perdigão F, Amaro J, Gomes M, Santos J (2015) Automatic cataract classification based on ultrasound technique using machine learning: a comparative study. Physics Procedia 70:1221–1224

- Chen et al. (2015) Chen H, Dou Q, Ni D, Cheng JZ, Qin J, Li S, Heng PA (2015) Automatic fetal ultrasound standard plane detection using knowledge transferred recurrent neural networks. In: International Conference on Medical Image Computing and Computer-Assisted Intervention, Springer, pp 507–514

- Cheng et al. (2015) Cheng B, Liu M, Suk HI, Shen D, Zhang D, Initiative ADN, et al. (2015) Multimodal manifold-regularized transfer learning for mci conversion prediction. Brain imaging and behavior 9(4):913–926

- Cheng (2018) Cheng J (2018) Sparse range-constrained learning and its application for medical image grading. IEEE Transactions on Medical Imaging 37(12):2729–2738

- Cheng et al. (2017) Cheng Y, Wang D, Zhou P, Zhang T (2017) A survey of model compression and acceleration for deep neural networks. arXiv preprint arXiv:1710.09282

- Cheung et al. (2011) Cheung CYl, Li H, Lamoureux EL, Mitchell P, Wang JJ, Tan AG, Johari LK, Liu J, Lim JH, Aung T, et al. (2011) Validity of a new computer-aided diagnosis imaging program to quantify nuclear cataract from slit-lamp photographs. Investigative ophthalmology & visual science 52(3):1314–1319

- Chew et al. (2012) Chew M, Chiang PPC, Zheng Y, Lavanya R, Wu R, Saw SM, Wong TY, Lamoureux EL (2012) The impact of cataract, cataract types, and cataract grades on vision-specific functioning using rasch analysis. American journal of Ophthalmology 154(1):29–38

- Choi et al. (2020) Choi M, Kim H, Han B, Xu N, Lee KM (2020) Channel attention is all you need for video frame interpolation. In: Proceedings of the AAAI Conference on Artificial Intelligence, vol 34, pp 10663–10671

- Chow et al. (2011) Chow YC, Gao X, Li H, Lim JH, Sun Y, Wong TY (2011) Automatic detection of cortical and psc cataracts using texture and intensity analysis on retro-illumination lens images. In: 2011 Annual International Conference of the IEEE Engineering in Medicine and Biology Society, IEEE, pp 5044–5047

- Chylack et al. (1988) Chylack LT, Leske MC, Sperduto R, Khu P, McCarthy D (1988) Lens opacities classification system. Archives of Ophthalmology 106(3):330–334

- Chylack et al. (1989) Chylack LT, Leske MC, McCarthy D, Khu P, Kashiwagi T, Sperduto R (1989) Lens opacities classification system ii (locs ii). Archives of ophthalmology 107(7):991–997

- Chylack et al. (1993) Chylack LT, Wolfe JK, Singer DM, Leske MC, Bullimore MA, Bailey IL, Friend J, McCarthy D, Wu SY (1993) The lens opacities classification system iii. Archives of ophthalmology 111(6):831–836

- Cuadros and Bresnick (2009) Cuadros J, Bresnick G (2009) Eyepacs: an adaptable telemedicine system for diabetic retinopathy screening. Journal of diabetes science and technology 3(3):509–516

- Decenciere et al. (2013) Decenciere E, Cazuguel G, Zhang X, Thibault G, Klein JC, Meyer F, Marcotegui B, Quellec G, Lamard M, Danno R, et al. (2013) Teleophta: Machine learning and image processing methods for teleophthalmology. Irbm 34(2):196–203

- Decencière et al. (2014) Decencière E, Zhang X, Cazuguel G, Lay B, Cochener B, Trone C, Gain P, Ordonez R, Massin P, Erginay A, et al. (2014) Feedback on a publicly distributed image database: the messidor database. Image Analysis & Stereology 33(3):231–234

- Deng et al. (2018) Deng L, Jiao P, Pei J, Wu Z, Li G (2018) Gxnor-net: Training deep neural networks with ternary weights and activations without full-precision memory under a unified discretization framework. Neural Networks 100:49–58

- Dong et al. (2017) Dong Y, Zhang Q, Qiao Z, Yang JJ (2017) Classification of cataract fundus image based on deep learning. In: 2017 IEEE International Conference on Imaging Systems and Techniques (IST), IEEE, pp 1–5

- Dosovitskiy et al. (2020) Dosovitskiy A, Beyer L, Kolesnikov A, Weissenborn D, Zhai X, Unterthiner T, Dehghani M, Minderer M, Heigold G, Gelly S, et al. (2020) An image is worth 16x16 words: Transformers for image recognition at scale. arXiv preprint arXiv:2010.11929

- Fan et al. (2003) Fan S, Dyer CR, Hubbard L, Klein B (2003) An automatic system for classification of nuclear sclerosis from slit-lamp photographs. In: Ellis RE, Peters TM (eds) Medical Image Computing and Computer-Assisted Intervention - MICCAI 2003, Springer Berlin Heidelberg, Berlin, Heidelberg, pp 592–601