
M2fNet: Multi-modal Forest Monitoring Network on Large-scale Virtual Dataset

Abstract

Forest monitoring and education are key to forest protection and management, and they offer an effective way to measure progress on a country’s forest and climate commitments. Due to the lack of a large-scale wild forest monitoring benchmark, the common practice is to train a model on a general outdoor benchmark (e.g., KITTI) and evaluate it on real forest datasets (e.g., CanaTree100). However, the large domain gap in this setting makes evaluation and deployment difficult. In this paper, we propose a new photorealistic virtual forest dataset and a multimodal transformer-based algorithm for tree detection and instance segmentation. To the best of our knowledge, this is the first time that a multimodal detection and segmentation algorithm has been applied to large-scale forest scenes. We believe that the proposed dataset and method will inspire the simulation, computer vision, education, and forestry communities towards a more comprehensive multi-modal understanding.

CCS Concepts: Computing methodologies → Modeling and simulation → Simulation support systems → Simulation environments; Computing methodologies → Artificial intelligence → Computer vision → Image segmentation / Object detection.

1 Introduction

Forest monitoring and simulation (e.g., tree detection, counting, segmentation and reconstruction) are highly active fields in forestry, education and computer science [10, 21, 6, 7]. Existing datasets for natural forest monitoring (e.g., FinnWoodlands [14], FOR-instance [26]) are limited in scale and diversity due to the high costs and logistical challenges inherent in collecting ample annotated data within natural forest environments. Because manual annotation relies on human labelers, it also inevitably introduces subjective errors, biases, and noise into the ground-truth labels, especially for trees with limited or complicated features. Given these limitations, an ideal dataset would circumvent the need for manual annotation. Indeed, it is possible to automatically generate photo-realistic training data with error-free labels by utilizing large-scale procedural synthesis.

In this project, we propose a multi-modal forest monitoring network (M2fNet) and train the model on the newly simulated virtual forest dataset to evaluate its performance (see the introductory figure). To rigorously validate the utility of the proposed dataset and model architecture, we benchmarked instance-level detection and segmentation methods with and without the inclusion of the simulated data, and compared our approach against recent baseline methods. Our project page and full dataset will be available at https://forestvrw.github.io/M2fNet/.

Leveraging our proposed data synthesis and learning framework, interdisciplinary researchers from diverse backgrounds can utilize this system for collaborative analysis of multiple tree attributes (e.g., density, species, etc.). In addition to quantitative benchmarking on segmentation IoU metrics, we conducted a user study engaging domain experts across computer graphics, computer science, design, and forestry. Results validate the efficacy of the overall pipeline for supporting flexible forest monitoring and interactions. The remainder of this paper is organized as follows:

- Section 2 reviews related research and core methodological background.
- Section 3 presents our virtual forest dataset generation workflow, the multi-modal forest monitoring network (M2fNet) model architecture, and evaluation protocols.
- Section 4 highlights potential applications enabled by our approach.
- Section 5 discusses future work.
- Section 6 discusses limitations and social impacts.
- Section 7 concludes with a summary of key contributions.

2 Background

This literature survey provides an overview of important advances in forest measurement and algorithm training. More specifically, we first summarize the work related to forest measurement and surveying, and then focus specifically on the introduction of vision-based tree detection and segmentation algorithms.

2.1 Forest measurement and surveying

Precision forestry relies on accurate measurements of forest biometrics (e.g., diameter, height, form, etc.). Conventional field-based forest inventories are limited in spatial coverage and can be prohibitively time- and labor-intensive [2]. Recent studies have sought to automate the acquisition and quantification of key forest biometrics through remote sensing techniques.

Airborne laser scanning (ALS) has been extensively used for area-based estimation of aboveground biomass (AGB) using regression models on metrics like canopy height [29, 31]. While verified against field measurements, ALS coverage can remain spatially sparse. Satellite imaging provides complementary wide-area spectral data, which can predict AGB via empirical model fitting [9, 1]. However, resolution limits the diagnosis of fine-grained forest properties. To capture intra-stand details, terrestrial laser scanning (TLS), structure-from-motion (SfM), and neural radiance fields (NeRF) are alternative approaches to construct 3D point clouds of sample plots [15, 23, 18, 16]. Though robust, plot-based sampling suffers from generalization and edge artifacts [22]. Meanwhile, unmanned aerial vehicles (UAVs) enable rapid, flexible surveys, but remain constrained by flight endurance and line-of-sight restrictions [25, 28].

Our work contributes a large-scale virtual forest dataset with dense, perfectly annotated data that can be used to train a variety of deep learning models. We demonstrate applications from individual tree delineation to property prediction using diverse synthesized sensing modalities.

2.2 Vision-based Tree Detection and Segmentation

Reliable detection and segmentation of individual trees from imagery is a fundamental task in forest monitoring. Previous work in tree crown segmentation relies on hand-crafted features and morphological processing on color aerial imagery [4, 24]. With the advent of deep convolutional neural networks (CNNs), data-driven approaches have demonstrated superior performance.

For tree detection, recent methods fine-tune object detection networks such as Faster R-CNN using RGB imagery to generate 2D bounding boxes around tree crowns [27, 20]. Light detection and ranging (LiDAR) point clouds have also been used to detect individual trees represented as 3D bounding cylinders [5]. Meanwhile, semantic segmentation formulations can produce pixel-wise masks that label tree trunks and canopy extensions in RGB-D data [13, 19].

However, existing datasets for training and evaluating such models are limited in diversity, scalability, and annotation quality [12, 30]. Here, we introduce a large-scale photorealistic synthetic forest rendering engine to automatically generate dense ground-truth data for tree detection and segmentation tasks. We demonstrate the value of our simulation-to-reality paradigm on benchmark models.

3 Design of the Simulated Dataset

Manual annotation of vegetation in natural environments is time-intensive and laborious, particularly in densely forested areas. Furthermore, delineating tree boundaries and accounting for occlusion inevitably introduces errors. To address these challenges, we constructed a simulated virtual forest dataset for efficiently training machine learning algorithms on forestry tasks. The dataset incorporates high-fidelity, photorealistic models of 19 tree species (e.g., Bucida buceras, Conocarpus erectus, Eucalyptus gunnii, Melia azedarach, Populus alba, Quercus robur, Schinus molle, Tilia, etc.) rendered in Unreal Engine, with customizable terrain (existing landscape models or terrain built in the Unreal Engine landscape editor), weather (dynamic conditions such as sunset, rain, etc.), and lighting (e.g., directional, sky, spot, point, and rect lights) parameters.

3.1 Dataset Creation

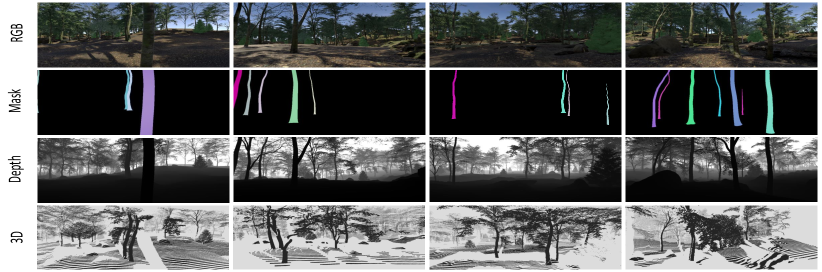

To precisely segment the tree trunk regions of interest in the collected high-quality tree models, Autodesk Maya was used to separate each tree model (in FBX or OBJ format) into its constituent trunk, branch, and leaf components. Only the trunk portions were rendered for the segmentation camera. Individual tree models of different species were populated within the designated terrain map with randomly assigned trunk densities and locations. Within this same terrain, a set of camera trajectories was defined, and the multi-sensor camera was moved along these trajectories to obtain synchronized multimodal collections (e.g., RGB, depth, mask) at each timestamp. This ensured proper alignment of the multimodal image sets. As a post-processing step to generate the COCO annotation files, OpenCV was employed to automatically detect object contours and bounding boxes, which were then exported as the final outputs. As a result, 50,000 video frames were obtained, of which 3,000 are reserved for testing. Figure 1 gives some example data from the generated dataset.
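The annotation step above can be illustrated with a minimal sketch. It assumes each rendered instance mask is available as a binary grid and derives a COCO-style record (box and pixel area) from it. The pipeline described in the text uses OpenCV for contour and box extraction (for example `cv2.findContours` and `cv2.boundingRect`); this sketch swaps in plain Python so that it stays self-contained, and it omits the polygon `segmentation` field. The function name `mask_to_coco_annotation` and the default `category_id` are hypothetical.

```python
from typing import Dict, List, Optional

Mask = List[List[int]]  # binary instance mask: rows of 0/1 values


def mask_to_coco_annotation(mask: Mask, image_id: int, ann_id: int,
                            category_id: int = 1) -> Optional[Dict]:
    """Build one COCO-style annotation (bbox + area) from a binary mask.

    Hypothetical helper for illustration; not the authors' exporter.
    """
    ys = [y for y, row in enumerate(mask) if any(row)]
    xs = [x for row in mask for x, v in enumerate(row) if v]
    if not ys:
        return None  # empty mask: the instance is not visible in this frame

    x_min, x_max = min(xs), max(xs)
    y_min, y_max = min(ys), max(ys)
    area = sum(1 for row in mask for v in row if v)

    return {
        "id": ann_id,
        "image_id": image_id,
        "category_id": category_id,  # assumed single "tree trunk" class
        # COCO boxes are stored as [x, y, width, height] in pixels
        "bbox": [x_min, y_min, x_max - x_min + 1, y_max - y_min + 1],
        "area": area,
        "iscrowd": 0,
    }
```

Because the masks are rendered rather than hand-drawn, a record produced this way inherits the pixel-exact boundaries of the simulation.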

Figure 2 shows our project setup, based on Unreal Engine 4, for automated data generation within a forest environment. The virtual landscape features adjustable elements such as trees, stones, and grass. A camera is placed in the scene to capture it, which is key for creating diverse datasets. The interface indicates that the lighting still needs to be rebuilt, a pending update that will enhance realism. This setup facilitates the generation of varied scenarios for simulation purposes.

Figure 3 outlines a three-step data generation process using Unreal Engine. In the Model Preparation phase, raw models are imported into Unreal Engine for setup. Scene Generation follows, utilizing a random generation algorithm and a moving camera within the environment to create diverse scenarios. Finally, the Data Generation step outputs various data types, such as RGB images, masks, depth maps, and LiDAR. This structured approach enables the creation of a comprehensive dataset for simulation and analysis.

Figure 4 displays the selection process for tree models in the simulation environment, showcasing the variety available for scene generation. The blueprint, BP_Tree, is designed to randomly select from an array of tree species, ensuring randomness and diversity in the virtual landscape. This collection of different tree models allows for a more realistic and varied representation of a forest ecosystem within the simulation.
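The random scene generation described above can be sketched outside the engine as follows. This is an illustration only, not the BP_Tree blueprint itself: it assumes a rectangular terrain, a target stem density in trees per hectare, and uniform sampling of positions. The function `populate_terrain`, the `yaw_deg` field, and the three-species list are hypothetical.

```python
import random
from typing import Dict, List

# Small illustrative subset of the species named in the text.
SPECIES = ["Populus alba", "Quercus robur", "Tilia"]


def populate_terrain(width_m: float, depth_m: float,
                     trees_per_hectare: float, seed: int = 0) -> List[Dict]:
    """Sample tree placements (species, position, heading) on a flat rectangle."""
    rng = random.Random(seed)  # seeded so a scene can be regenerated exactly
    area_ha = width_m * depth_m / 10_000.0  # 1 hectare = 10,000 square metres
    n_trees = round(trees_per_hectare * area_ha)
    return [
        {
            "species": rng.choice(SPECIES),      # random species per tree
            "x": rng.uniform(0.0, width_m),      # position on the terrain
            "y": rng.uniform(0.0, depth_m),
            "yaw_deg": rng.uniform(0.0, 360.0),  # assumed random heading
        }
        for _ in range(n_trees)
    ]
```

Fixing the seed keeps a generated scene reproducible, which is convenient when the same layout has to be rendered again under different weather or lighting settings.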

3.2 Overview of M2fNet

An overview of the proposed multi-modal forest monitoring network is shown in the introductory figure. The proposed M2fNet uses two independent encoders, both hierarchical vision transformers with shifted windows (Swin) [17], to extract RGB and depth features. RGB and depth images emphasize different aspects of the scene in their feature representations, and both are helpful for instance-level segmentation and detection. As the encoding stage increases, the extracted features become more abstract. We then pass the features from the two domains through a fusion module, which consists of a concatenation, a layer normalization, and a convolution layer. The output of the RGB encoder is added to the output of the fusion module to improve the segmentation performance on small trees and fine tree boundaries.
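The fusion step described above can be summarized in a single expression. This is a sketch under the assumption that the three operations are applied in the order listed and separately at each encoder stage $i$; the symbols $F^{i}_{\mathrm{rgb}}$, $F^{i}_{\mathrm{depth}}$, and $F^{i}_{\mathrm{fused}}$ are illustrative notation introduced here, not taken from the original formulation:

$$
F^{i}_{\mathrm{fused}} = \mathrm{Conv}\big(\mathrm{LN}\big(\mathrm{Concat}(F^{i}_{\mathrm{rgb}}, F^{i}_{\mathrm{depth}})\big)\big) + F^{i}_{\mathrm{rgb}}
$$

Under this reading, the convolution must map the concatenated channels back to the channel width of the RGB features so that the residual addition is well defined.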

The fused features are then fed into a pixel decoder that transforms the low-resolution features into high-resolution feature embeddings. Similar to Mask2Former [3], we employ a query-based Transformer decoder with masked attention blocks to learn semantic-level features from the tree objects of interest. Specifically, the tree query is updated by collecting the local tree features and context information within the foreground regions through self-attention. To deal with small and distant tree instances, high-resolution features from the pixel decoder are fed into the Transformer decoder layer. After obtaining the aggregated output query and the high-resolution feature from the pixel decoder, we predict the tree masks by multiplying the outputs from the two residual structures and adding simple linear layers for the different prediction heads. The predicted mask can be formulated as $M = \mathrm{MLP}(Q) \cdot F$, where $Q$ is the aggregated output query, $F$ is the high-resolution feature from the pixel decoder, and $\mathrm{MLP}$ is the multi-layer projection.

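The loss combines the binary cross-entropy loss and the dice loss for mask prediction with the smooth L1 loss for box regression. The total loss can be written as:

$$
\mathcal{L} = \lambda_{\mathrm{bce}} \mathcal{L}_{\mathrm{bce}} + \lambda_{\mathrm{dice}} \mathcal{L}_{\mathrm{dice}} + \lambda_{\mathrm{box}} \mathcal{L}_{\mathrm{smoothL1}} \qquad (1)
$$

where the $\lambda$ terms weight the individual losses.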
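To make the three loss terms named above concrete, the following minimal sketch computes each on flat Python lists and combines them in a weighted sum. The weights `w_bce`, `w_dice`, and `w_box`, the smoothing constant, and the `beta` threshold are assumptions chosen for illustration; the text does not state the values used for training, and a real implementation would operate on tensors in a deep learning framework.

```python
import math
from typing import Sequence


def bce_loss(probs: Sequence[float], targets: Sequence[float],
             eps: float = 1e-7) -> float:
    """Mean binary cross-entropy over mask pixels (probs in [0, 1])."""
    total = 0.0
    for p, t in zip(probs, targets):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        total -= t * math.log(p) + (1.0 - t) * math.log(1.0 - p)
    return total / len(probs)


def dice_loss(probs: Sequence[float], targets: Sequence[float],
              smooth: float = 1.0) -> float:
    """One minus the (smoothed) Dice overlap between prediction and target."""
    inter = sum(p * t for p, t in zip(probs, targets))
    denom = sum(probs) + sum(targets)
    return 1.0 - (2.0 * inter + smooth) / (denom + smooth)


def smooth_l1_loss(pred_box: Sequence[float], gt_box: Sequence[float],
                   beta: float = 1.0) -> float:
    """Mean smooth L1: quadratic below beta, linear above it."""
    total = 0.0
    for p, g in zip(pred_box, gt_box):
        d = abs(p - g)
        total += 0.5 * d * d / beta if d < beta else d - 0.5 * beta
    return total / len(pred_box)


def total_loss(probs, targets, pred_box, gt_box,
               w_bce: float = 1.0, w_dice: float = 1.0,
               w_box: float = 1.0) -> float:
    """Weighted sum of the three terms; the weights are assumed, not sourced."""
    return (w_bce * bce_loss(probs, targets)
            + w_dice * dice_loss(probs, targets)
            + w_box * smooth_l1_loss(pred_box, gt_box))
```

The cross-entropy term penalizes each pixel independently, while the dice term measures region overlap and is less sensitive to the strong foreground and background imbalance that thin trunks produce.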

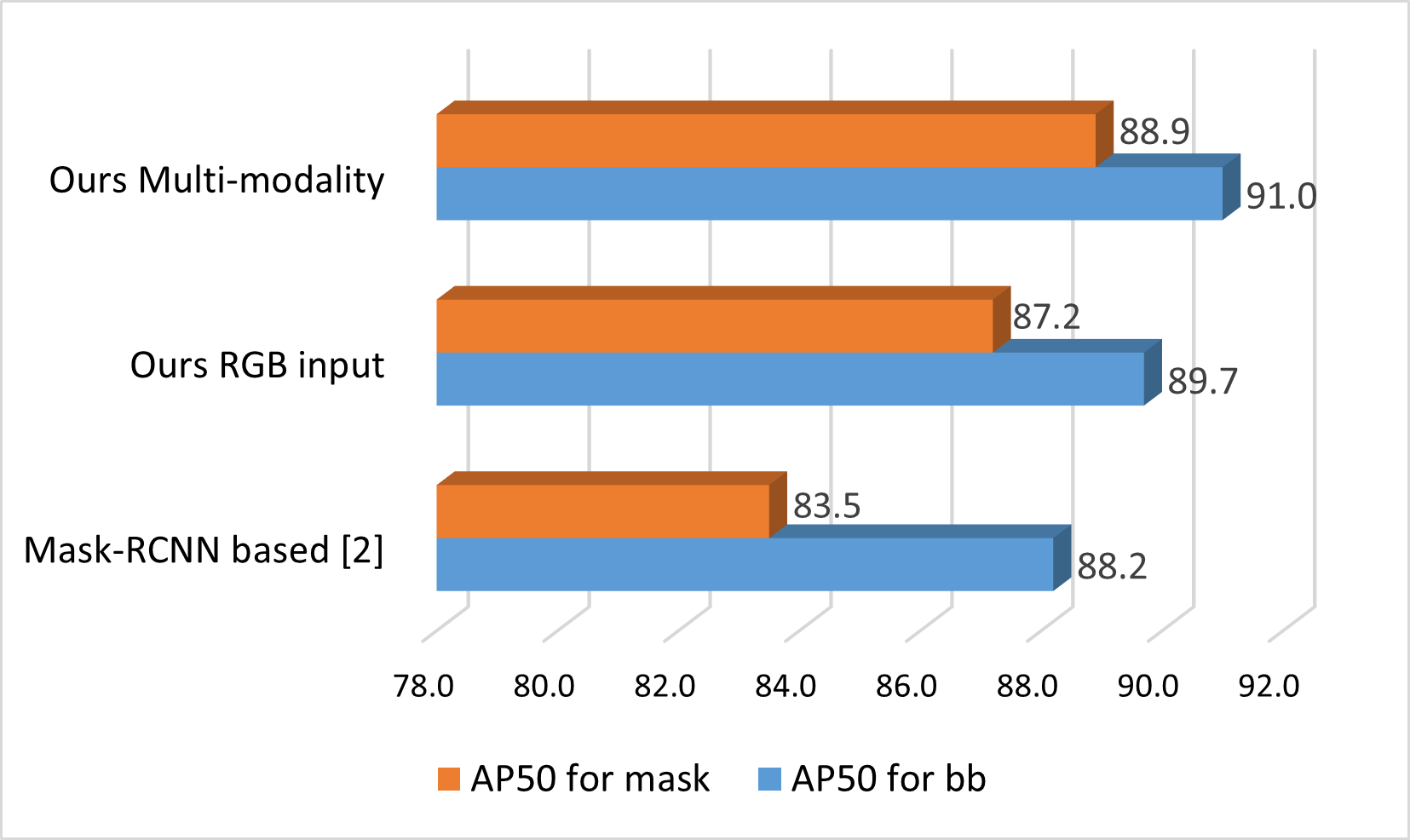

3.3 Metrics and Evaluations

To quantify the efficacy of the proposed M2fNet architecture using the created simulation data, we employ standard evaluation metrics including mean average precision (mAP) at an IoU threshold of 50%. As evident in the preliminary results depicted in Figure 5, employing our dataset for training precipitates considerable improvements in real-world segmentation and detection performance, especially for tree trunk masks. Furthermore, the multi-modal M2fNet outperforms networks relying solely on RGB imagery as input. Collectively, these outcomes underscore the potential for advancing machine learning algorithms, including low-level vision and multimodal frameworks, in unstructured forest environments and other analogous scenarios, by capitalizing on synthetic, controllable datasets.
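For readers less familiar with the metric, the sketch below shows how average precision at an IoU threshold of 0.5 can be computed for a single class on a single image. It is a simplified illustration, not the official COCO evaluation: it uses boxes rather than masks, greedy matching in score order, and all-point integration of the precision-recall curve, whereas the COCO toolkit samples 101 recall points and aggregates over a whole dataset. The function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Box = Sequence[float]  # [x_min, y_min, x_max, y_max]


def box_iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0


def average_precision_50(preds: List[Tuple[float, Box]], gts: List[Box],
                         iou_thr: float = 0.5) -> float:
    """AP for one class: preds are (score, box) pairs, gts are boxes."""
    if not gts:
        return 0.0
    matched = set()
    tp_flags = []
    # Visit predictions from most to least confident.
    for _, box in sorted(preds, key=lambda p: p[0], reverse=True):
        best_iou, best_j = 0.0, -1
        for j, gt in enumerate(gts):
            if j in matched:
                continue  # each ground-truth tree can be claimed only once
            iou = box_iou(box, gt)
            if iou > best_iou:
                best_iou, best_j = iou, j
        if best_iou >= iou_thr:
            matched.add(best_j)
            tp_flags.append(True)
        else:
            tp_flags.append(False)

    precisions, recalls, tp = [], [], 0
    for k, flag in enumerate(tp_flags, start=1):
        tp += int(flag)
        precisions.append(tp / k)
        recalls.append(tp / len(gts))

    # Replace each precision by the best precision at equal or higher recall.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])

    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap
```

With a single tree class, the mean over classes in mAP reduces to this per-class value.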

In addition to the quantitative evaluation described above, we conducted a user study to investigate whether the simulated forestry scenes are realistic enough to serve as useful pre-training material for subsequent forestry education and research. While user studies have been used to evaluate VR training systems in the past [8, 11], to the best of our knowledge they have rarely been used to evaluate forestry simulators. More specifically, the study includes simulated forest scenes at different levels of fidelity, ranging from the most fake to the most realistic (real-world captured forest imagery), to cover a broad range of situations. Fifteen participants with different backgrounds (computer graphics, design, engineering, forestry) were invited to answer two questions, each rated on a scale from 1 to 5. The questionnaire for measuring the fidelity of our simulated data is given in Table 1.

Table 1: Questionnaire for measuring the fidelity of the simulated data.

| Question | Rating scale |
| --- | --- |
| RQ1: Please rate your sense of the fidelity of the rendered images from the simulated forest environment. Which type did you think the reference image came closest to? | 1 - Most fake; 5 - Most realistic |
| RQ2: How do you feel when the virtual forest scenes become real for you? | 1 - Not at all; 5 - Very much |

4 Usage Scenarios

In addition to tree detection and segmentation, the proposed M2fNet framework and simulated dataset facilitate the training and evaluation of data-intensive algorithms for various forestry analytical tasks. By leveraging large-scale simulated data, models can mitigate overfitting issues commonly encountered when training on limited real data. Furthermore, the physically-based ground truth generation process provides highly credible labels, enabling robust quantitative analysis and validation of model performance across multiple forestry understanding and education objectives.

Forest DBH Measurement: Diameter at breast height (DBH) measurement constitutes a fundamental metric within forestry practices. Utilizing RGB and/or depth imagery, M2fNet employs a multi-modal Transformer architecture trained on the proposed simulated dataset for instance segmentation of individual trees. DBH estimation is subsequently performed by leveraging the predicted segmentation masks in conjunction with depth maps. In contrast to conventional manual techniques (e.g., tape measurements) that often introduce noise into the DBH labels, our framework derives accurate ground truth DBH from 3D tree reconstructions. This facilitates a robust quantitative analysis and validation of the model’s DBH predictions, thereby enhancing the overall reliability and validity of the results.
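One way the mask and depth map could be combined for DBH estimation is sketched below. It is an assumption-laden illustration rather than the authors' procedure: it presumes a pinhole camera with known horizontal focal length `fx_pixels`, an image row `breast_row` already known to correspond to breast height (roughly 1.3 m above ground), a trunk roughly facing the camera, and it ignores the curvature of the trunk surface. The function name is hypothetical.

```python
from statistics import median
from typing import List, Optional


def estimate_dbh_m(mask: List[List[int]], depth_m: List[List[float]],
                   breast_row: int, fx_pixels: float) -> Optional[float]:
    """Approximate trunk diameter in metres on one image row.

    Pinhole model: metric width = pixel width * depth / focal length.
    """
    cols = [x for x, v in enumerate(mask[breast_row]) if v]
    if not cols:
        return None  # the trunk mask does not cross this row

    width_px = max(cols) - min(cols) + 1
    # Median depth over the trunk pixels is robust to a few bad depth values.
    z = median(depth_m[breast_row][x] for x in cols)
    return width_px * z / fx_pixels
```

For example, a trunk that spans 20 pixels at a depth of 5 m, seen by a camera with a focal length of 500 pixels, gives 20 × 5 / 500 = 0.2 m.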

Video-level Tree Tracking: Advances in machine learning allow automated video analysis to identify and track individual trees in wild forest environments. Segmentation results from M2fNet can isolate each tree crown from the images in video frames. Tracking algorithms can then match the segmented tree regions across successive frames, tracking a single tree over time even as the camera angle changes. This allows long-term monitoring of specific trees. Researchers can use tracking to build complex tree inventories, measure growth metrics such as crown diameter and height, and detect subtle changes in foliage that may indicate disease. The tree-level data can be scaled up to assess forest population dynamics and health trends. Video-level tree monitoring overcomes many of the limitations of manual surveys, such as small sample sizes. It also reduces costs and labor compared to field measurements.
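The frame-to-frame matching step can be illustrated with a simple greedy association on box overlap. This is a generic baseline sketched for illustration, not a tracker described in the text: it assumes each segmented tree is summarized by its bounding box, pairs tracks and detections in order of decreasing IoU, and starts a new track for any unmatched detection. The function `associate` and the threshold of 0.3 are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple

Box = Sequence[float]  # [x_min, y_min, x_max, y_max]


def box_iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0


def associate(tracks: Dict[int, Box], detections: List[Box], next_id: int,
              iou_thr: float = 0.3) -> Tuple[Dict[int, Box], int]:
    """Greedy IoU matching of existing tracks to the current frame.

    Returns the updated {track_id: box} map and the next unused id.
    Tracks without a match are dropped in this simplified version.
    """
    pairs = sorted(
        ((box_iou(tb, db), tid, j)
         for tid, tb in tracks.items()
         for j, db in enumerate(detections)),
        reverse=True,
    )
    updated: Dict[int, Box] = {}
    used = set()
    for iou, tid, j in pairs:
        if iou < iou_thr:
            break  # remaining pairs overlap too little to be the same tree
        if tid in updated or j in used:
            continue
        updated[tid] = detections[j]
        used.add(j)

    # Any detection left over starts a new track.
    for j, db in enumerate(detections):
        if j not in used:
            updated[next_id] = db
            next_id += 1
    return updated, next_id
```

Greedy matching is adequate when trees are well separated between frames; in dense stands, an optimal assignment or appearance cues would likely be needed.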

Forest Mapping and Reconstruction: Mapping and reconstruction of forests is imperative for obtaining detailed data on tree species distribution, spatial coordinates, and health indicators. However, prevalent forestry inventory techniques, particularly in large-scale forests and those with dense canopy cover obstructing global navigation satellite systems, often cannot acquire high-quality, reliable measurements. Our simulated dataset offers a robust solution by providing meticulously-recorded camera parameters across trajectory viewpoints and dense point clouds aggregated from forest scenes. This dataset promotes the development and assessment of computational algorithms. Furthermore, the intentionally controlled and varied forest simulation settings (e.g., landscapes and lighting) present in the dataset enable more sophisticated algorithmic comprehension and interpretation of intricate real-world forest environments.

5 Future work

In the emerging field of digital forestry, our work develops a pioneering multi-modal forest monitoring network leveraging our large-scale photo-realistic simulations. We constructed a large-scale virtual forest dataset using the Unreal Engine, enabling the generation of synthetic data across modalities like LiDAR, spectral imagery, and semantics. This allows training data-hungry deep learning models for tasks like tree detection, species classification, biomass estimation, and forest mapping. Our simulated forest system provides an efficient and scalable platform to prototype and validate AI systems for precision forestry. Looking ahead, such digital twins of forests could transform forestry education, allowing immersive training through simulations. Our virtual forest system is a stepping stone toward digital replicas of natural forests, unlocking new capabilities in forest monitoring and sustainable forest management. By bridging the physical and virtual worlds, this research direction holds promise for understanding complex forest dynamics.

6 Limitations and social impacts

The methods developed on our virtual forest dataset could generalize well to other natural environments such as mountains and beaches. However, other settings such as streets and indoor environments differ substantially in visual appearance from our training focus, and the methods therefore do not perform well there. A possible solution is to adapt to different simulated environments such as cities and rooms. The proposed method assumes RGB or RGB with depth as input; however, it need not be limited to visual modalities and could be extended to others, such as text.

For social impact, the virtual datasets may not accurately represent the diversity of real forests in terms of species composition and ecosystem variability, which may lead to biased decision-making in real-world applications. In addition, the use of virtual datasets may inadvertently neglect social aspects of forest management, such as impacts on local communities and indigenous knowledge.

7 Conclusion

In this work, we propose a new simulated large-scale forest dataset and a multimodal forest monitoring approach. The dataset contains high-quality, photorealistic rendered data with diverse and controllable properties, taking advantage of the powerful Unreal Engine. The developed multimodal forest monitoring network allows precise tree detection and segmentation, enabling a wide range of forestry applications, including tree counting, localization, measurement, and 3D mapping. According to the evaluation, using our new dataset and pipeline leads to a considerable increase in detection and segmentation accuracy. We hope that our efforts will facilitate further research and progress in the fields of computer vision, forestry, computer graphics, and educational training.

8 Acknowledgments

This ongoing work is supported by the U.S. Department of Agriculture (USDA) under grant No. 20236801238992.

References

- [1] V. Avitabile, M. Herold, G. B. Heuvelink, S. L. Lewis, O. L. Phillips, G. P. Asner, J. Armston, P. S. Ashton, L. Banin, N. Bayol, et al. An integrated pan-tropical biomass map using multiple reference datasets. Global change biology, 22(4):1406–1420, 2016.

- [2] J. Chave. Measuring wood density for tropical forest trees: a field manual. Sixth Framework Programme, 6, 2006.

- [3] B. Cheng, I. Misra, A. G. Schwing, A. Kirillov, and R. Girdhar. Masked-attention mask transformer for universal image segmentation. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp. 1290–1299, 2022.

- [4] D. S. Culvenor. Tida: an algorithm for the delineation of tree crowns in high spatial resolution remotely sensed imagery. Computers & Geosciences, 28(1):33–44, 2002.

- [5] W. Dai, B. Yang, Z. Dong, and A. Shaker. A new method for 3d individual tree extraction using multispectral airborne lidar point clouds. ISPRS journal of photogrammetry and remote sensing, 144:400–411, 2018.

- [6] A. Firoze, C. Wingren, R. A. Yeh, B. Benes, and D. Aliaga. Tree instance segmentation with temporal contour graph. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 2193–2202, 2023.

- [7] V. Grondin, J.-M. Fortin, F. Pomerleau, and P. Giguère. Tree detection and diameter estimation based on deep learning. Forestry, 96(2):264–276, 2023.

- [8] X. Guo, K. Kumar Nerella, J. Dong, Z. Qian, and Y. Chen. Understanding pitfalls and opportunities of applying heuristic evaluation methods to vr training systems: An empirical study. International Journal of Human–Computer Interaction, pp. 1–17, 2023.

- [9] M. C. Hansen, P. V. Potapov, R. Moore, M. Hancher, S. A. Turubanova, A. Tyukavina, D. Thau, S. V. Stehman, S. J. Goetz, T. R. Loveland, et al. High-resolution global maps of 21st-century forest cover change. science, 342(6160):850–853, 2013.

- [10] K. Itakura and F. Hosoi. Automatic tree detection from three-dimensional images reconstructed from 360 spherical camera using yolo v2. Remote Sensing, 12(6):988, 2020.

- [11] S. Jin, Y. Wang, L.-H. Lee, X. Luo, and P. Hui. Development of an immersive simulator for improving student chemistry learning efficiency. In Proceedings of the 16th International Symposium on Visual Information Communication and Interaction, 2023.

- [12] H. Kaartinen, J. Hyyppä, X. Yu, M. Vastaranta, H. Hyyppä, A. Kukko, M. Holopainen, C. Heipke, M. Hirschmugl, F. Morsdorf, et al. An international comparison of individual tree detection and extraction using airborne laser scanning. Remote Sensing, 4(4):950–974, 2012.

- [13] A. Kharroubi, F. Poux, Z. Ballouch, R. Hajji, and R. Billen. Three dimensional change detection using point clouds: A review. Geomatics, 2(4):457–485, 2022.

- [14] J. Lagos, U. Lempiö, and E. Rahtu. Finnwoodlands dataset. In Scandinavian Conference on Image Analysis, pp. 95–110. Springer, 2023.

- [15] X. Liang, V. Kankare, J. Hyyppä, Y. Wang, A. Kukko, H. Haggrén, X. Yu, H. Kaartinen, A. Jaakkola, F. Guan, et al. Terrestrial laser scanning in forest inventories. ISPRS Journal of Photogrammetry and Remote Sensing, 115:63–77, 2016.

- [16] L. Ling, Y. Sheng, Z. Tu, W. Zhao, C. Xin, K. Wan, L. Yu, Q. Guo, Z. Yu, Y. Lu, et al. Dl3dv-10k: A large-scale scene dataset for deep learning-based 3d vision. arXiv preprint arXiv:2312.16256, 2023.

- [17] Z. Liu, Y. Lin, Y. Cao, H. Hu, Y. Wei, Z. Zhang, S. Lin, and B. Guo. Swin transformer: Hierarchical vision transformer using shifted windows. In ICCV, 2021.

- [18] Y. Lu, Y. Wang, Z. Chen, A. Khan, C. Salvaggio, and G. Lu. 3d plant root system reconstruction based on fusion of deep structure-from-motion and imu. Multimedia Tools and Applications, 80:17315–17331, 2021.

- [19] Y. Lu, J. Zhang, S. Sun, Q. Guo, Z. Cao, S. Fei, B. Yang, and Y. Chen. Label-efficient video object segmentation with motion clues. IEEE Transactions on Circuits and Systems for Video Technology, 2023.

- [20] M. Luo, Y. Tian, S. Zhang, L. Huang, H. Wang, Z. Liu, and L. Yang. Individual tree detection in coal mine afforestation area based on improved faster rcnn in uav rgb images. Remote Sensing, 14(21):5545, 2022.

- [21] Y. Luo, H. Huang, and A. Roques. Early monitoring of forest wood-boring pests with remote sensing. Annual Review of Entomology, 68:277–298, 2023.

- [22] R. Näsi, E. Honkavaara, P. Lyytikäinen-Saarenmaa, M. Blomqvist, P. Litkey, T. Hakala, N. Viljanen, T. Kantola, T. Tanhuanpää, and M. Holopainen. Using uav-based photogrammetry and hyperspectral imaging for mapping bark beetle damage at tree-level. Remote Sensing, 7(11):15467–15493, 2015.

- [23] V. Nasiri, A. A. Darvishsefat, H. Arefi, M. Pierrot-Deseilligny, M. Namiranian, and A. Le Bris. Unmanned aerial vehicles (uav)-based canopy height modeling under leaf-on and leaf-off conditions for determining tree height and crown diameter (case study: Hyrcanian mixed forest). Canadian Journal of Forest Research, 51(7):962–971, 2021.

- [24] D. Pouliot, D. King, F. Bell, and D. Pitt. Automated tree crown detection and delineation in high-resolution digital camera imagery of coniferous forest regeneration. Remote sensing of environment, 82(2-3):322–334, 2002.

- [25] S. Puliti, H. O. Ørka, T. Gobakken, and E. Næsset. Inventory of small forest areas using an unmanned aerial system. Remote Sensing, 7(8):9632–9654, 2015.

- [26] S. Puliti, G. Pearse, P. Surovỳ, L. Wallace, M. Hollaus, M. Wielgosz, and R. Astrup. For-instance: a uav laser scanning benchmark dataset for semantic and instance segmentation of individual trees. arXiv preprint arXiv:2309.01279, 2023.

- [27] A. A. d. Santos, J. Marcato Junior, M. S. Araújo, D. R. Di Martini, E. C. Tetila, H. L. Siqueira, C. Aoki, A. Eltner, E. T. Matsubara, H. Pistori, et al. Assessment of cnn-based methods for individual tree detection on images captured by rgb cameras attached to uavs. Sensors, 19(16):3595, 2019.

- [28] C. Torresan, F. Carotenuto, U. Chiavetta, F. Miglietta, A. Zaldei, and B. Gioli. Individual tree crown segmentation in two-layered dense mixed forests from uav lidar data. Drones, 4(2):10, 2020.

- [29] M. Vehmas, K. Eerikäinen, J. Peuhkurinen, P. Packalén, and M. Maltamo. Airborne laser scanning for the site type identification of mature boreal forest stands. Remote Sensing, 3(1):100–116, 2011.

- [30] B. Wu, B. Yu, Q. Wu, Y. Huang, Z. Chen, and J. Wu. Individual tree crown delineation using localized contour tree method and airborne lidar data in coniferous forests. International Journal of Applied Earth Observation and Geoinformation, 52:82–94, 2016.

- [31] T. Yin, B. D. Cook, and D. C. Morton. Three-dimensional estimation of deciduous forest canopy structure and leaf area using multi-directional, leaf-on and leaf-off airborne lidar data. Agricultural and Forest Meteorology, 314:108781, 2022.