LSVOS Challenge 3rd Place Report: SAM2- and Cutie-Based VOS

Abstract

Video Object Segmentation (VOS) presents several challenges, including object occlusion and fragmentation, the disappearance and reappearance of objects, and tracking specific objects within crowded scenes. In this work, we combine the strengths of the state-of-the-art (SOTA) models SAM2 and Cutie to address these challenges. We also explore the impact of various hyperparameters on video object segmentation performance. Our approach achieves a J&F score of 0.7952 in the testing phase of the LSVOS challenge VOS track, ranking third overall.

1 Introduction

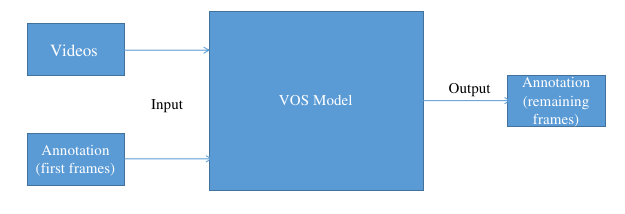

Video Object Segmentation (VOS) involves identifying and segmenting target objects throughout a video sequence, given mask annotations in the first frame. The task is important in domains such as autonomous driving, augmented reality, robotics, interactive video editing, and data annotation, where the volume of video content is growing rapidly, and VOS can be integrated with Segment Anything Models (SAMs) [7] for universal video segmentation. However, VOS faces significant challenges, such as drastic variations in object appearance, occlusions, and identity confusion caused by similar objects and background clutter. These issues are especially severe in long videos, where maintaining accurate tracking and segmentation becomes even more difficult. Figure 1 illustrates the general framework of our VOS approach: a memory module stores previously segmented frames, and pixel-level matching together with object queries is used to segment the target accurately across all frames, even in complex scenarios.

Recent VOS methods predominantly follow a memory-based approach. They compute a memory representation from previously segmented frames, whether provided as input or predicted by the model itself, and each new query frame reads from this memory to retrieve the features needed for segmentation. Memory retrieval typically relies on pixel-level matching: each query pixel is independently matched to a weighted combination of memory pixels, usually through an attention mechanism. Because every pixel is matched on its own, this matching lacks high-level consistency and is vulnerable to noise, particularly in scenes with frequent occlusions and distractors. The limitation shows in the much lower performance of these methods on complex datasets such as MOSE, where they can score more than 20 points lower in J&F than on simpler datasets such as DAVIS-2017. Methods designed specifically for long videos exist, but they often compress high-resolution features when inserting them into memory, which reduces segmentation accuracy. Some approaches additionally use memory modules to adapt to changes in target appearance over time, and object queries to distinguish between multiple objects, thereby reducing identity confusion.
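The pixel-level matching described above can be sketched in a few lines. This is a minimal illustration, not the readout of any specific method: it assumes dot-product similarity and a plain softmax (real methods differ in the similarity function and add learned projections), and the function names are hypothetical. The structural point is that each query pixel's readout depends only on its own key, so nothing enforces consistency between neighboring pixels.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def pixel_memory_readout(query_keys, memory_keys, memory_values):
    """Return one readout vector per query pixel.

    query_keys:    key vectors, one per pixel of the query frame
    memory_keys:   key vectors, one per pixel stored in memory
    memory_values: value vectors aligned with memory_keys
    """
    dim = len(memory_values[0])
    readouts = []
    for q in query_keys:
        # Similarity of this query pixel to every memory pixel (dot product).
        scores = [sum(a * b for a, b in zip(q, k)) for k in memory_keys]
        weights = softmax(scores)
        # Weighted combination of memory values; no other query pixel is consulted.
        readouts.append(
            [sum(w * v[d] for w, v in zip(weights, memory_values)) for d in range(dim)]
        )
    return readouts


# Two memory pixels: one resembling the target, one resembling the background.
memory_keys = [[4.0, 0.0], [0.0, 4.0]]
memory_values = [[1.0], [0.0]]  # 1.0 = "target" feature, 0.0 = "background" feature
query_keys = [[4.0, 0.0], [0.0, 4.0], [1.0, 1.0]]
print(pixel_memory_readout(query_keys, memory_keys, memory_values))
```

The third query pixel is equally similar to both memory pixels and receives an even mix of target and background features; a distractor that resembles the target would be handled the same way, which is the weakness the object-level methods below try to address.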

This year's LSVOS Challenge features two tracks: the Referring Video Object Segmentation (RVOS) track and the Video Object Segmentation (VOS) track. The RVOS track has moved from the Refer-YouTube-VOS dataset to the newly introduced MeViS [3] dataset, which contains more challenging motion-guided language expressions and complex video scenarios. Similarly, the VOS track now uses the MOSE [4] dataset instead of YouTube-VOS. MOSE adds complexity through objects that disappear and reappear, small and hard-to-detect objects, and heavy occlusions, making this year's challenge significantly harder than previous editions. LVOS [5, 6], by contrast, focuses on long-term videos characterized by intricate object motion and long-term object reappearance. As participants in the VOS track, we are required to segment specific object instances across entire video sequences given only the first-frame mask.

2 Method

Our approach is inspired by recent advances in video object segmentation, specifically SAM 2 (Segment Anything in Images and Videos) from Meta [8] and the Cutie framework by Cheng et al. [1].

SAM2 is a unified model designed for both image and video segmentation, where an image is treated as a single-frame video. It generates segmentation masks for the object of interest, not only in single images but also consistently across video frames. A key feature of SAM2 is its memory module, which stores information about the object and past interactions. This memory allows SAM2 to generate and refine mask predictions throughout the video, leveraging the stored context from previously observed frames.

The Cutie framework, on the other hand, operates in a semi-supervised video object segmentation (VOS) setting. It begins with a first-frame segmentation and then sequentially processes the following frames. Cutie is designed to handle challenging scenarios by combining high-level top-down queries with pixel-level bottom-up features, ensuring robust video object segmentation. Moreover, Cutie extends masked attention mechanisms to incorporate both foreground and background elements, enhancing feature richness and ensuring a clear semantic separation between the target object and distractors. Additionally, Cutie constructs a compact object memory that summarizes object features over the long term. During the querying process, this memory is retrieved as a target-specific object-level representation, which aids in maintaining segmentation accuracy across the video.
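The foreground-background masked attention can be illustrated with a small sketch. It assumes, as a simplification of the idea rather than Cutie's implementation, that the first half of the object queries attends only to foreground pixels and the second half only to background pixels, with pixel features serving as both keys and values; learned projections, multiple heads, and residual connections are omitted, and all names are hypothetical.

```python
import math


def attend(query, keys, values):
    """Softmax dot-product attention of one query over the given keys/values."""
    scores = [sum(a * b for a, b in zip(query, k)) for k in keys]
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    dim = len(values[0])
    return [sum(e * v[d] for e, v in zip(exps, values)) / total for d in range(dim)]


def fg_bg_masked_attention(queries, pixel_feats, fg_mask):
    """One round of foreground/background masked attention.

    queries:     object-query vectors; the first half are foreground queries,
                 the second half background queries (hypothetical split rule)
    pixel_feats: per-pixel feature vectors, used here as both keys and values
    fg_mask:     per-pixel flags, truthy where the pixel belongs to the target
    """
    half = len(queries) // 2
    fg = [f for f, m in zip(pixel_feats, fg_mask) if m]
    bg = [f for f, m in zip(pixel_feats, fg_mask) if not m]
    updated = []
    for i, q in enumerate(queries):
        visible = fg if i < half else bg
        if not visible:
            # No pixel passes the mask: leave the query unchanged (our choice).
            updated.append(list(q))
        else:
            # Foreground queries never see background pixels and vice versa.
            updated.append(attend(q, visible, visible))
    return updated
```

Because the two query groups never see each other's pixels, the background half can summarize distractors separately instead of mixing them into the target representation, which is the semantic separation the paragraph above describes.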

As shown in Figure 2, the SAM2 model uses a memory-based approach for video segmentation, while Figure 3 demonstrates how the Cutie framework incorporates object queries for improved accuracy.

3 Experiment

3.1 Inference

In our inference pipeline, we used the SAM2 model, specifically the sam2-hiera-large variant, which offers a good balance between model size (224.4M parameters) and speed (30.2 FPS). To speed up inference, we compiled the image encoder by setting compile_image_encoder: True in the configuration; input images are processed at a resolution of 1024×1024 pixels.

The configuration included several settings intended to improve segmentation. We set num_maskmem to 7, the number of frames kept in the mask memory (in SAM2, the conditioning frame plus the six most recent frames). We also applied a scaled sigmoid to the mask logits before they are passed to the memory encoder, with sigmoid_scale_for_mem_enc: 20.0 and sigmoid_bias_for_mem_enc: -10.0. Additionally, with use_mask_input_as_output_without_sam: true, on frames that receive a mask as input (such as the first frame), the model outputs that mask directly instead of passing it through the SAM prompt encoder and mask decoder.
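The effect of the two memory-encoder settings can be written as $m_i = 20\,\sigma(z_i) - 10$, where $z_i$ is the mask logit of pixel $i$ and $\sigma$ is the sigmoid. The sketch below assumes, based on our reading of the public SAM 2 code, that the memory encoder receives the sigmoid probability multiplied by sigmoid_scale_for_mem_enc and then shifted by sigmoid_bias_for_mem_enc; the function and constant names are our own.

```python
import math

# Values reported in our SAM2 configuration.
SIGMOID_SCALE_FOR_MEM_ENC = 20.0
SIGMOID_BIAS_FOR_MEM_ENC = -10.0


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def mask_for_memory(logits,
                    scale=SIGMOID_SCALE_FOR_MEM_ENC,
                    bias=SIGMOID_BIAS_FOR_MEM_ENC):
    """Turn per-pixel mask logits into the scaled values given to the memory encoder.

    Probabilities in [0, 1] are mapped linearly to [bias, scale + bias],
    i.e. [-10, 10] with the reported settings.
    """
    return [scale * sigmoid(z) + bias for z in logits]
```

With these values, a confident background pixel maps to about -10, a maximally uncertain pixel (logit 0) to exactly 0, and a confident foreground pixel to about +10, so the memory encoder sees a symmetric, larger-magnitude signal than raw probabilities.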

For memory handling, directly_add_no_mem_embed: true means that on the first frame, when no memory is available yet, a learned no-memory embedding is added directly to the image features instead of running them through memory attention. We enabled use_high_res_features_in_sam: true to feed high-resolution features into the SAM mask decoder, which improves the accuracy of mask predictions. Moreover, multimask_output_in_sam: true lets the model output three masks upon the first interaction on the initial conditioning frames.

For object tracking and occlusion handling, we set use_obj_ptrs_in_encoder: true, which enables cross-attention to object pointers from other frames during encoding. Combined with pred_obj_scores: true (predicting whether the object is present in a frame) and fixed_no_obj_ptr: true (using a fixed pointer when the object is absent), this supports occlusion prediction and tracking across the video sequence. Finally, we set multimask_output_for_tracking: true so that multimask outputs are also used on tracked frames, not only on the initial conditioning frame, with the aim of refining segmentation over time.
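For reference, the SAM2 settings listed in this section can be collected in one place. The sketch below is an illustration under assumptions: it holds the reported values in a Python dict and formats them as Hydra-style `++model.<key>=<value>` override strings, assuming these keys live under the `model` node of the YAML config. To our understanding, the public sam2 package's `build_sam2_video_predictor` accepts such a list through a `hydra_overrides_extra` argument, but this should be checked against the installed version; `SAM2_SETTINGS` and `to_hydra_overrides` are hypothetical names.

```python
# Settings reported in Section 3.1 for sam2-hiera-large.
SAM2_SETTINGS = {
    "compile_image_encoder": True,
    "image_size": 1024,
    "num_maskmem": 7,
    "sigmoid_scale_for_mem_enc": 20.0,
    "sigmoid_bias_for_mem_enc": -10.0,
    "use_mask_input_as_output_without_sam": True,
    "directly_add_no_mem_embed": True,
    "use_high_res_features_in_sam": True,
    "multimask_output_in_sam": True,
    "use_obj_ptrs_in_encoder": True,
    "pred_obj_scores": True,
    "fixed_no_obj_ptr": True,
    "multimask_output_for_tracking": True,
}


def to_hydra_overrides(settings, prefix="model"):
    """Format settings as Hydra '++<prefix>.<key>=<value>' override strings."""

    def fmt(value):
        # bool must be checked first: in Python, bool is a subclass of int.
        if isinstance(value, bool):
            return "true" if value else "false"
        return str(value)

    return [f"++{prefix}.{key}={fmt(value)}" for key, value in settings.items()]


for override in to_hydra_overrides(SAM2_SETTINGS):
    print(override)
```

Keeping the values in one dict makes hyperparameter sweeps (such as varying num_maskmem) a matter of editing a single entry rather than the YAML file.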

In parallel, we configured the Cutie framework for efficient video segmentation at a standard testing resolution of 720p. For memory frame encoding, we update both the pixel memory and the object memory every third frame, the default interval, following the configuration used in XMem [2]. Beyond the first frame, memory frames are managed first-in-first-out (FIFO): the most recent frames are retained and older ones are dropped, which keeps the memory footprint bounded and focused on the most relevant frames. We cap the total number of memory frames at 15 as a practical compromise between limiting memory usage and maintaining real-time performance on one hand, and capturing the temporal evolution of the scene on the other. A 15-frame history is generally adequate for exploiting temporal correlations in VOS, giving enough context for object tracking and appearance prediction without excessive computational overhead; extending the limit further could bring diminishing returns while increasing computation.

In the final testing phase, we applied test-time augmentation (TTA) to improve robustness and accuracy. Specifically, we used flip-based augmentation, horizontally flipping the input frames during inference. This simple technique helped the model generalize by accounting for variations in object orientation. Because video frames can vary considerably with camera and object motion, flip-based TTA yielded more consistent and reliable segmentation across the video sequence.
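The Cutie memory schedule and the flip-based TTA can be sketched as follows. Both are illustrations under stated assumptions, not the exact code we ran. The schedule assumes that frame 0 is kept permanently, that a frame is written to memory when its index is a multiple of the interval (3), and that the permanent frame counts toward the 15-frame limit. The TTA sketch assumes that predictions on the original and flipped frames are combined by averaging per-pixel probabilities, which the description above does not specify. `MemorySchedule`, `hflip`, and `flip_tta` are hypothetical names.

```python
from collections import deque


class MemorySchedule:
    """Tracks which frame indices are held in memory.

    Assumptions (ours): the first frame seen is kept permanently, a frame is
    inserted when frame_idx % mem_every == 0, and the permanent frame counts
    toward max_frames.
    """

    def __init__(self, mem_every=3, max_frames=15):
        self.mem_every = mem_every
        self.first = None
        # FIFO buffer: appending beyond maxlen drops the oldest entry.
        self.recent = deque(maxlen=max_frames - 1)

    def step(self, frame_idx):
        if self.first is None:
            self.first = frame_idx  # first (annotated) frame is never evicted
        elif frame_idx % self.mem_every == 0:
            self.recent.append(frame_idx)

    def frames(self):
        return ([] if self.first is None else [self.first]) + list(self.recent)


def hflip(image):
    """Horizontally flip an image stored as a list of rows."""
    return [list(reversed(row)) for row in image]


def flip_tta(predict, image):
    """Average per-pixel probabilities over the original and the flipped frame.

    predict(image) must return a probability map with the same layout as image.
    """
    probs = predict(image)
    probs_flipped = hflip(predict(hflip(image)))  # flip the prediction back
    return [
        [(a + b) / 2 for a, b in zip(row, row_f)]
        for row, row_f in zip(probs, probs_flipped)
    ]
```

After 100 frames, the schedule above holds frame 0 plus the 14 most recent multiples of 3. For the TTA, flipping the second prediction back before averaging is what makes the two views comparable pixel by pixel; a predictor biased toward one side of the image is pulled toward agreement between the two views.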

3.2 Evaluation Metrics

To evaluate the performance of our model, we compute the Jaccard value (J), the F-Measure (F), and the mean of J and F.

Jaccard Value (J). The Jaccard value, also known as Intersection over Union (IoU), measures the similarity between two sets. For a predicted segmentation mask $M$ and a ground truth segmentation mask $G$, the Jaccard value is defined as:

$$
J = \frac{|M \cap G|}{|M \cup G|} = \frac{\sum_{i} M_i G_i}{\sum_{i} \left( M_i + G_i - M_i G_i \right)} \qquad (1)
$$

where $M_i$ and $G_i$ denote the value (0 or 1) of the $i$-th pixel in the predicted and ground truth masks, respectively. The Jaccard value ranges from 0 to 1, with higher values indicating better performance.

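F-Measure (F). The F-Measure combines Precision ($P$) and Recall ($R$) and is commonly used to evaluate binary classification models. It is calculated as follows:

$$
F = \frac{2 \cdot P \cdot R}{P + R} \qquad (2)
$$

where

$$
P = \frac{TP}{TP + FP} \qquad (3)
$$

and

$$
R = \frac{TP}{TP + FN} \qquad (4)
$$

Here $TP$, $FP$, and $FN$ are the numbers of true positives, false positives, and false negatives. In the DAVIS-style evaluation protocol used by VOS benchmarks, these counts are taken over the boundary pixels of the predicted and ground truth masks, so F measures contour accuracy. The F-Measure also ranges from 0 to 1, with higher values indicating better performance.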

Mean of J and F. To evaluate the model's overall performance, we compute the mean of the Jaccard value (J) and the F-Measure (F):

$$
J\&F = \frac{J + F}{2} \qquad (5)
$$

These metrics together provide a robust assessment of the segmentation model’s accuracy and consistency, offering insights into its performance in predicting segmentation masks.
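The three metrics can be computed for a single frame as in the sketch below. It is a simplified illustration, not the official benchmark evaluation code: boundaries are taken as foreground pixels with a background 4-neighbor, a boundary pixel counts as matched if it lies within a fixed tolerance of `tol` pixels of the other mask's boundary (official DAVIS-style toolkits derive the tolerance from the image size), and the conventions for empty masks are our own. Masks are lists of 0/1 rows; the function names are hypothetical.

```python
def jaccard(pred, gt):
    """Eq. (1): region similarity J (IoU) of two binary masks."""
    pairs = [(p, g) for rp, rg in zip(pred, gt) for p, g in zip(rp, rg)]
    inter = sum(1 for p, g in pairs if p and g)
    union = sum(1 for p, g in pairs if p or g)
    return 1.0 if union == 0 else inter / union  # both empty: perfect match


def boundary(mask):
    """Foreground pixels with at least one 4-neighbor that is background or off-image."""
    h, w = len(mask), len(mask[0])
    pts = set()
    for y in range(h):
        for x in range(w):
            if not mask[y][x]:
                continue
            for dy, dx in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                ny, nx = y + dy, x + dx
                if not (0 <= ny < h and 0 <= nx < w) or not mask[ny][nx]:
                    pts.add((y, x))
                    break
    return pts


def matched_fraction(src, dst, tol):
    """Share of points in src within Chebyshev distance tol of some point in dst."""
    hits = sum(
        1 for (y, x) in src
        if any(max(abs(y - v), abs(x - u)) <= tol for (v, u) in dst)
    )
    return hits / len(src)


def f_measure(pred, gt, tol=1):
    """Simplified boundary F-measure with a fixed pixel tolerance."""
    bp, bg = boundary(pred), boundary(gt)
    if not bp and not bg:
        return 1.0
    if not bp or not bg:
        return 0.0
    precision = matched_fraction(bp, bg, tol)  # Eq. (3) on boundary pixels
    recall = matched_fraction(bg, bp, tol)     # Eq. (4) on boundary pixels
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)  # Eq. (2)


def j_and_f(pred, gt, tol=1):
    """Eq. (5): mean of region similarity J and boundary F-measure."""
    return (jaccard(pred, gt) + f_measure(pred, gt, tol)) / 2
```

On a benchmark, such per-frame values are aggregated over frames and objects before J, F, and J&F are reported.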

In the 6th Large-Scale Video Object Segmentation (LSVOS) challenge, our method (Xy-unu) performed strongly in both the development and test phases. The test-phase leaderboard is presented in Table 1. Our method achieved J and F scores higher than those of most other participants. Specifically, in the test phase, it attained a J&F score of 0.7952, with a Jaccard value (J) of 0.7516 and an F-Measure (F) of 0.8388; consistent with Eq. (5), 0.7952 is the mean of the two. These results indicate that our approach is effective and robust.

We also present some of our qualitative results in Fig. 4. They show that our method can segment small targets and distinguish between similar objects in challenging scenarios, including large variations in object appearance and scenes where multiple similar or small objects cause confusion.

Table 1: Test-phase leaderboard of the LSVOS Challenge VOS track (rank in each metric in parentheses).

| User | J&F | J | F |
|---|---|---|---|
| yahooo | 0.8090 (1) | 0.7616 (2) | 0.8563 (1) |
| yuanjie | 0.8084 (2) | 0.7642 (1) | 0.8526 (3) |
| Xy-unu | 0.7952 (4) | 0.7516 (4) | 0.8388 (4) |
| Sch89.89 | 0.7635 (7) | 0.7194 (7) | 0.8076 (7) |
| Phan | 0.7579 (9) | 0.7125 (10) | 0.8033 (9) |

Figure 4: Qualitative results on video 10d24fa6. Panels: frame #0 with the ground-truth mask (GT), followed by frames #3, #5, #7, and #9.

4 Conclusion

In this work, we propose a video object segmentation (VOS) inference solution that integrates the SAM2 and Cutie frameworks, leveraging the strengths of both models to handle video data effectively. Our solution achieved a J&F score of 0.7952 in the LSVOS challenge VOS track, placing third. This result indicates that our method is robust and accurate on complex video segmentation tasks.

References

- [1] Cheng, H.K., Oh, S.W., Price, B., Lee, J.Y., Schwing, A.: Putting the object back into video object segmentation. arXiv preprint (2023)

- [2] Cheng, H.K., Schwing, A.G.: XMem: Long-term video object segmentation with an Atkinson-Shiffrin memory model. In: ECCV (2022)

- [3] Ding, H., Liu, C., He, S., Jiang, X., Loy, C.C.: MeViS: A large-scale benchmark for video segmentation with motion expressions. In: ICCV (2023)

- [4] Ding, H., Liu, C., He, S., Jiang, X., Torr, P.H., Bai, S.: MOSE: A new dataset for video object segmentation in complex scenes. In: ICCV (2023)

- [5] Hong, L., Chen, W., Liu, Z., Zhang, W., Guo, P., Chen, Z., Zhang, W.: LVOS: A benchmark for long-term video object segmentation. In: ICCV. pp. 13480–13492 (2023)

- [6] Hong, L., Liu, Z., Chen, W., Tan, C., Feng, Y., Zhou, X., Guo, P., Li, J., Chen, Z., Gao, S., et al.: LVOS: A benchmark for large-scale long-term video object segmentation. arXiv preprint arXiv:2404.19326 (2024)

- [7] Kirillov, A., Mintun, E., Ravi, N., Mao, H., Rolland, C., Gustafson, L., Xiao, T., Whitehead, S., Berg, A.C., Lo, W.Y., Dollár, P., Girshick, R.: Segment anything. arXiv preprint arXiv:2304.02643 (2023)

- [8] Ravi, N., Gabeur, V., Hu, Y.T., Hu, R., Ryali, C., Ma, T., Khedr, H., Rädle, R., Rolland, C., Gustafson, L., Mintun, E., Pan, J., Alwala, K.V., Carion, N., Wu, C.Y., Girshick, R., Dollár, P., Feichtenhofer, C.: SAM 2: Segment anything in images and videos. arXiv preprint arXiv:2408.00714 (2024), https://arxiv.org/abs/2408.00714