LowCon: A design-based subsampling approach in a misspecified linear model

Abstract

We consider a measurement constrained supervised learning problem, that is, (1) full sample of the predictors are given; (2) the response observations are unavailable and expensive to measure. Thus, it is ideal to select a subsample of predictor observations, measure the corresponding responses, and then fit the supervised learning model on the subsample of the predictors and responses. However, model fitting is a trial and error process, and a postulated model for the data could be misspecified. Our empirical studies demonstrate that most of the existing subsampling methods have unsatisfactory performances when the models are misspecified. In this paper, we develop a novel subsampling method, called “LowCon”, which outperforms the competing methods when the working linear model is misspecified. Our method uses orthogonal Latin hypercube designs to achieve a robust estimation. We show that the proposed design-based estimator approximately minimizes the so-called “worst-case” bias with respect to many possible misspecification terms. Both the simulated and real-data analyses demonstrate the proposed estimator is more robust than several subsample least squares estimators obtained by state-of-the-art subsampling methods.

Keywords: Least squares estimation; Experimental design; Condition number; Worst-case MSE.

1 Introduction

Measurement constrained supervised learning is an emerging problem in machine learning [35, 50, 9]. In this problem, the predictor observations (also called unlabeled data points in machine learning literature) are collected, but the response observations are unavailable and difficult or expensive to obtain. Considering speech recognition as an example, one may easily get plenty of unlabeled audio data, but the accurate labeling of speech utterances is extremely time-consuming and requires trained linguists. For an unlabeled speech of one minute, it can take up to ten minutes for the word-level annotation and nearly seven hours for the phoneme-level annotation [57]. A more concrete example is the task of predicting the soil functional property, i.e., the property related to a soil’s capacity to support essential ecosystem service [16]. Suppose one wants to model the relationship between the soil functional property and some predictors that can be easily derived from remote sensing data. To get the response, the accurate measurement of the soil property, a sample of soil from the target area, is needed. The response thus can be extremely time-consuming or even impractical to obtain, especially when the target area is off the beaten path. Thus, it is ideal to select a subsample of predictor observations, measure the corresponding responses, and then fit a supervised learning model on the subsample of the predictors and responses.

In this paper, we study the subsampling method and postulate a general linear model for linking the response and predictors. One of the natural subsampling methods is the uniform subsampling method (also called the simple random subsampling method), i.e., selecting a subsample with the uniform sampling probability. For many problems, uniform subsampling method performs poorly [8, 41]. Motivated by the poor performance of uniform sampling, there has been a large number of work dedicated to developing non-uniform random subsampling methods that select a subsample with a data-dependent non-uniform sampling probability [25]. One popular choice of the sampling probability is the normalized statistical leverage scores, leading to the algorithmic leveraging approach [23, 28, 56, 24]. Such an approach has already yielded impressive algorithmic and theoretical benefits in linear regression models [25, 10, 22]. Besides linear models, the idea of algorithmic leveraging is also widely applied in generalized linear regression [49, 2, 54], quantile regression [1, 45], streaming time series [52], and the Nystrm method [3].

Different from random subsampling methods, there also exist some deterministic subsampling methods which select the subsample based on certain rules, especially optimality criteria developed in the design of experiments [33], e.g., -, - and -optimality. wang2017computationally [50] proposed a computationally tractable subsampling approach based on the -optimality criterion. -optimality criterion was considered in wang2018information [48].

|

While the existing subsampling methods have already shown extraordinary performance on coefficient estimation and model prediction, their performance highly relies on the model specification. However, the model specification is a trial and error process, during which a postulated model could be misspecified. When the model is misspecified, most subsampling methods may lead to unacceptable results. We now demonstrate the issue of model misspecification using a toy example. In this example, data are generated from the model , , where are the i.i.d. standard normal errors. In Figure 1, the data points (gray points) and the true function (the gray curve) are shown in the left panel. The right panel shows the full-sample linear regression line (the solid line) based on only, without the nonlinear term. We postulate a linear model without the nonlinear term and randomly select a subsample of size ten (black dots) using the leverage subsampling method [22]. The subsample linear regression line (the dashed line) deviates severely from the solid line. Such an observation suggests that the performance of a subsample least squares estimator may deteriorate significantly when the model is misspecified. The poor performance under model misspecifications is not unique to random subsampling methods. The success of different deterministic subsampling methods depends on the optimality criteria being used. The optimality criteria, however, differ from model to model. An optimality criterion derived from a postulated model does not necessarily lead to a decent subsampling method for the true model. We provide more discussion of this example in the Supplementary Material.

In practice, the true underlying model is almost always unknown to practitioners. The subsampling hence is highly desirable to be robust to possible model misspecification. To achieve the goal, tsao2012subsampling [43] proposed to construct a robust estimator using bootstrap. One limitation of this method is that it can not be applied to the measurement-constrained setting since the response value for every predictor is needed in this method to compute the estimator. Another related approach is pena1999fast [32], which aims to carefully select some observations to generate starting points to compute a robust estimator. The literature on subsampling methods that yield robust estimations in the measurement-constrained setting is still meager.

In this paper, we bridge the gap by proposing a statistical analysis of the subsampling method in a linear model containing unknown misspecification. We do so in the context of coefficient estimation via the least-squares on a subsample taken from the full sample. Our major theoretical contribution is to provide an analytic framework for evaluating the mean squared error (MSE) of the subsample least squares (SLS) estimator in a misspecified linear model. Within this framework, we show that it is very easy to construct a “worst-case” sample and a misspecification term for which an SLS estimator will have an arbitrarily large mean squared error. We also show that an SLS estimator is robust if the information matrix of the subsample has a relatively low condition number, a traditional concept in numerical linear algebra [42].

Based on these theoretical results, we propose and analyze a novel subsampling algorithm, called “LowCon”. LowCon is designed to select a subsample, which balances the trade-off between bias and variance, to yield a robust estimation of coefficients. This algorithm involves selecting the subsample, which approximates a set of orthogonal Latin hypercube design points [53]. We show the proposed SLS estimator has a finite upper bound of the mean squared error, and it approximately minimizes the “worst-case” bias, with respect to all the possible misspecification terms. Our main empirical contribution is to provide a detailed evaluation of the robustness of the SLS estimators on both synthetic and real datasets. The empirical results indicate the proposed estimator is the only one, among all cutting-edge subsampling methods, that is robust to various types of misspecification terms.

The remainder of the paper is organized as follows. We start in Section 2 by introducing the misspecified linear model and deriving the so-called “worst-case” MSE. In Section 3, we present the proposed LowCon subsampling algorithm and its theoretical properties. We examine the performance of the proposed SLS estimator through extensive simulation and two real-world examples in Sections 4 and 5, respectively. Section 6 concludes the paper, and the technical proofs are relegated to the Supplementary Material.

2 Model Setup

In this section, we first introduce the linear model that contains unknown misspecification. We then consider the subsample least squares estimator and derive the mean squared error of these estimators under this model. We show that an SLS estimator is robust if the information matrix of the selected subsample has a relatively low condition number.

Throughout this paper, represents the Euclidean norm. Let and be the smallest and the largest eigenvalue of a matrix, and and be the corresponding eigenvectors, respectively. We use and to denote the largest and the smallest non-zero singular value of a matrix with columns, respectively.

2.1 Misspecified Linear Model

Suppose the underlying true model has the form

| (1) |

where ’s are the responses, ’s are the predictors, () is the vector of unknown coefficients, the random errors are independently distributed, and follows a non-centered normal distribution , . Let be the design space. In this paper, we assume that the unknown multivariate function satisfies

| (2) |

where is a finite positive constant. When has finite values, some examples of include and . Let be the response vector, be the predictor matrix, and be the misspecification term. For model identifiability, we assume the matrix has a full column rank. Under this assumption, we exclude the case that is a linear function of , i.e., cannot be a linear combination of .

We consider the scenario that practitioners have no prior information on the true model (1) and postulate a classical linear model,

| (3) |

where the random errors are i.i.d. and follow a normal distribution with mean zero and constant variance , i.e., . Model (3) is thus a misspecified linear model of the true model (1). Fitting model (3) without taking into account the model misspecification may result in the degenerated performance of the coefficient estimation and model prediction. For example, the full-sample ordinary least squares (OLS) estimate, known as the best linear unbiased estimate, is a biased estimate of the true coefficient when the model is misspecified [5]. More discussion on misspecified linear models can be found in kiefer1975optimal [20] and sacks1978linear [34].

In our measurement-constrained setting, practitioners are given the full sample of predictors . The responses in model (1), however, are hidden unless explicitly requested. Practitioners are then allowed to reveal a subset of , denoted by , where . The goal is to estimate the true coefficient using , where , and is the corresponding predictor for . A natural estimator for the coefficient is the subsample least squares estimator [50],

where . We derive the mean squared error (MSE) and the worst-case MSE of this estimator, in the next subsection.

2.2 Worst-case MSE

Let and . The MSE of the estimator (conditional on ) thus can be decomposed as

| (4) |

where the bias term is associated with the model misspecification. Note that when the bias term vanishes, , i.e., when the model is correctly specified, minimizing MSE is equivalent to minimizing the variance term . Further discussion following this line of thinking can be found in wang2017computationally [50] and wang2018information [48], in which the authors focused on selecting the subsample that minimizes the variance term. In our setting, where the model is misspecified, however, minimizing the variance term does not necessarily lead to a small MSE.

Recall that our goal is to select a subsample such that the corresponding SLS estimator is robust to various model misspecification. Since the misspecification term is unknown to practitioners, a natural and intuitive approach is to find the “minimax” subsample that minimizes the so-called “worst-case” MSE, i.e., the maximum value of with respect to all the possible choices of the misspecification term . The following lemma gives an explicit form of the worst-case MSE; the proof can be found in the Supplementary Material.

Lemma 2.1 (Worst-case MSE).

Two conclusions can be made from Lemma 2.1. First, the worst-case MSE of an SLS estimator can be inflated to arbitrarily large values by a very small value of . It is thus very easy to construct a “worst-case” sample and a misspecification term for which an SLS estimator will have unacceptable performance. Second, is the most robust SLS estimator if the selected subsample minimizes the worst-case MSE. Such a subsample, however, is impossible to obtain in real practice, since both values of and are unknown to practitioners.

In this paper, we are more interested in the setting where the misspecified term is large enough. In particular, the value of is large enough such that, on the right-hand side of the Inequality (5), the second term dominates the first term. Under this setting, the desired subsample should yield a relatively small value of . Notice that

| (6) |

where the equality holds when the condition number of the subsample information matrix, i.e., , takes the minimum value . Inequality (6) thus suggests the desired subsample is the one with a relatively small value of .

We now give another intuition about how is related to the robustness of the SLS estimator. casella1985condition [6] showed that

where and are perturbations of and respectively. Analogously, one can also show that

| (7) |

Inequality (7) thus suggests that a smaller value of associates with a more robust estimator .

It is worth noting that, if the subsample matrix minimizes the worst-case MSE, it does not necessarily minimize simultaneously since both the value of and are not available in practice. A robust subsample should at least yield a relatively small value of and balance the trade-off between the bias and the variance in the Equation (4). Following this line of thinking, we propose a novel subsampling algorithm, the details of which are presented in the next section.

3 LowCon Algorithm

In this section, we present our main algorithm, called “Low condition number pursuit” or “LowCon.” In Section 3.1, we introduce the notion of orthogonal Latin hypercube designs (OLHD) and how these can be used to generate a design matrix such that has a relatively small value. In Section 3.2, we present the detail of the proposed algorithm, which incorporates the idea of OLHD. In Section 3.3, we present the theoretical property of the proposed SLS estimator, which is obtained by the LowCon algorithm. We show that the proposed estimator has a relatively small upper bound of the MSE.

3.1 Orthogonal Latin Hypercube Design

Taking a subsample with some specific characteristics has many similarities to the design of experiments, which aims to place design points in a continuous design space, so that resulting design points have certain properties [51]. The theory and methods in the design of experiments are potentially useful for solving subsampling problems. The fundamental difference between the design of experiments and the subsampling is that, in subsampling, the selected points cannot be freely designed in a continuous space as the design of experiments but must be taken from the given finite sample . To borrow the strength of the design of experiments, we focus on space-filling designs, which aims to place the design points that cover a continuous design space as uniformly as possible [11, 21, 18, 29, 47]. In other words, for any point in the experimental region, space-filling designs have a design point close to it. We thus propose to round the design point to its nearest neighbor in the sample. Details are provided in Section 3.2.

We now introduce a specific space-filling design that is of interest, the Latin hypercube design (LHD) [37, 26, 46].

Definition 3.1 (Latin hypercube design).

Given the design space , is called a Latin hypercube design matrix if each column of is a random permutation of [38].

Intuitively, if one divides the design space into equally-sized slices in the th () dimension, a Latin hypercube design ensures that there is exactly one design point in each slice. The left panel of Figure 2 shows an example of a set of Latin hypercube design points (black dots). Although uniformly distributed on the marginal, the Latin hypercube design points do not necessarily spread out in the whole design space. That is to say, a set of LHD points may not be “space-filling” enough. To improve the “space-filling” property of LHD, various methods have been developed [40, 31, 12, 19]. Of particular interest in this paper is the orthogonal Latin hypercube design (OLHD), which achieves the goal by reducing the pairwise correlations of LHD [53]; see the right panel of Figure 2 for an example.

|

|

Consider the information matrix , where is an OLHD matrix. Intuitively, the matrix has a relatively small condition number, since all of the diagonal elements of are the same and all of the off-diagonal elements of have relatively small absolute value. Although there is a lack of theoretical guarantee, empirically, it is known that is in general no greater than 1.13 [7]. Such a fact motivates us to select the subsample that approximates a set of orthogonal Latin hypercube design points.

3.2 LowCon Subsampling Algorithm

Without loss of generality, we assume the data points are first scaled to . The proposed algorithm works as follows. We first generate a set of orthogonal Latin hypercube design points from a design space . We then search and select the nearest neighbor from the sample for every design point.

The key to success is that the selected subsample can well-represent the set of design points, i.e., each selected subsample point is close-enough to its nearest design point, respectively. We provide more discussion in Section 3.3 about when such a requirement is met in practice. Empirically, we find may not be a good choice for the design space . This is because, in such a scenario, the design points, which are close to the boundary of , may be too far away from their nearest neighbors, especially when the population density function has a heavy tail. As a result, a design space that is slightly smaller than would be a safer choice. We opt to set the design space as , where and are the -percentile and -percentile of the th column of the scaled data points, respectively. The algorithm is summarized below.

-

1.

Data normalization: The data points are first scaled to .

-

2.

Generate OLHD points: Given the parameter and the design space , generate a set of orthogonal Latin hypercube design points .

-

3.

Nearest neighbor search: Select the nearest neighbor for each design point from , denoted by . The selected subsample is thus given by .

Figure 3 illustrates LowCon algorithm. The synthetic data points in the left panel were generated from a bivariate normal distribution and are scaled to . A set of orthogonal Latin hypercube design points are then generated, labeled as black triangles in the middle panel. For each design point, the nearest data point is selected, marked as black dots in the right panel. The selected points can well-approximate the design points.

Note that the set of design points generated by the orthogonal Latin hypercube design technique is not unique; different sets of design points may result in different subsamples. Algorithm 1 thus is a random subsampling method instead of a deterministic subsampling method. In practice, the set of design points in Algorithm 1 can be randomly generated.

3.3 Theoretical Results

We now present the theoretical property of the proposed subsample least squares estimator, obtained by the LowCon algorithm. Some notations are needed before we show our main theorem. Recall that represents an orthogonal Latin hypercube design matrix. Let be the subsample matrix obtained by the proposed algorithm. One thus can decompose into a sum of the design matrix and a matrix , i.e., .

Following the notations in Algorithm 1, one can write and , where and represent the th design point and its corresponding nearest neighbor from the sample, respectively. One thus has , for . Intuitively, is a random perturbation matrix, and the selected data points can well-approximate the design points if is “negligible”. In such a case, , which is a function of , can be expanded around through Taylor expansion. From this, we can establish our main theorem below; the proof is relegated to the appendix.

Theorem 3.1.

Suppose the data follow the model (1) and the regularity condition (2) is satisfied. Assume , where and represent the largest and the smallest singular value of a matrix of columns, respectively. A Taylor expansion of around the point yields the following upper bound,

| (8) |

Here, is the Taylor expansion remainder.

When the Taylor expansion in Theorem 3.1 is valid, three significant conclusions can be made. First, the theorem indicates that the MSE of the proposed estimator is finite. Specifically, following the Definition 3.1, we have

Moreover, the value of is in general no greater than 1.13, as discussed in Section 3.1. Combining these two facts yields an informal but finite upper bound for , i.e.,

Recall that Lemma 2.1 shows that the worst-case MSE of an SLS estimator can be inflated to an arbitrarily large value by a very small value of . The fact that the proposed estimator has a finite MSE thus indicates the proposed estimator is robust.

Second, the upper bound of the squared bias of the proposed estimator, which equals , is very close to the minimum value of the worst-case squared bias. This is because the worst-case squared bias has the minimum value of , and the value of is close to 1. Consider the common situation when the value of is large enough such that, in Inequality (5), the bias term dominates the variance term. Under such a situation, the second conclusion thus indicates the proposed estimator is very close to the “most robust” estimator, which minimizes the worst-case squared bias.

Third, the proposed estimator has a finite variance. Recall that in Algorithm 1, sometimes we may choose a design space . The value of will decrease in such cases, compared to the case when the design space equals . The variance of the proposed estimator thus will increase in such cases. Nevertheless, the variance term will not be inflated to be arbitrarily large, as long as the design space is not too small. More discussion on the impact of the design space to the Inequality (8) is relegated to the Supplementary Material.

There are two essential assumptions in Theorem 3.1. One is that , and the other is that the Taylor expansion is valid, i.e., when is “small”. Although we will evaluate the quality of the proposed estimator empirically in the next section, a precise theoretical characterization of when these two assumptions are valid is currently not available. Here, we simply give an example such that converges to zero as goes to infinity, in which case the desired Taylor expansion is apparently valid. The assumption is also satisfied in such a case, as goes to infinity, since the value of is not relevant to . Consider the case when the non-zero support of the population distribution is , i.e., the sample and the design points have the same domain. In such a case, the distance between each design point and its nearest neighbor converges to zero, as goes to infinity. As a result, each entry of the matrix converges to zero, and thus converges to zero as well, as goes to infinity. Consequently, the desired Taylor expansion is valid in such a case.

4 Simulation Results

To show the effectiveness of the proposed LowCon algorithm in misspecified linear models, we compare it with the existing subsampling methods in terms of MSE. The subsampling methods considered here are uniform subsampling (UNIF), basic leverage subsampling (BLEV), shrinkage leverage subsampling (SLEV), unweighted-leverage subsampling (LEVUNW) [22, 23], and information-based optimal subset selection (IBOSS) [48]. The shrinkage parameter for SLEV is set as 0.9, as suggested in ma2015statistical [22]. Through all the experiments in this paper, we set . More simulation results with other values of can be found in the Supplementary Material.

We simulate the data from the model (1) with , and . Three different distributions are used to generate the matrix,

D1. ;

D2. ;

D3. ,

where the th element of is set to be for . For the coefficient , the first 20% and the last 20% entries are set to be 1, and the rest of them are set to be 0.1. To show the robustness of the proposed estimator under various misspecification terms, we consider five different ’s,

H1. ;

H2. ;

H3. ;

H4. ;

H5. ,

where the constants , and are selected so that , i.e., the response is not dominated by the misspecification term. Note that H1 does not have any misspecified terms. Figure 4 shows the heatmap of the misspecified terms from H2 to H5, where matrix is generated from D1. Only the third and eighth predictors are shown.

|

We illustrate the subsamples selected by different subsampling methods in Figure 5. The LEVUNW method is omitted here since the subsample identified by LEVUNW is the same as the subsample identified by BLEV. The data points (gray dots) are generated from distribution D3 with and , where only the third and the eighth predictors are shown. In each panel, a subsample of size 40 is selected (black dots). Figure 5 reveals some interesting facts. We first observe the subsamples selected by BLEV and SLEV are more dispersed than the subsample selected by UNIF. Such an observation can be attributed to the fact that BLEV and SLEV give more weight to the high-leverage-score data points. For the IBOSS method, the selected subsample includes all the “extreme” data points from all predictors. Such a subsample is most informative when the linear model assumption is valid. Finally, we observe that the subsample chosen by the proposed LowCon algorithm is most “uniformly distributed” among all. Intuitively, such a pattern indicates the selected subsample yields an information matrix that has a relatively small condition number.

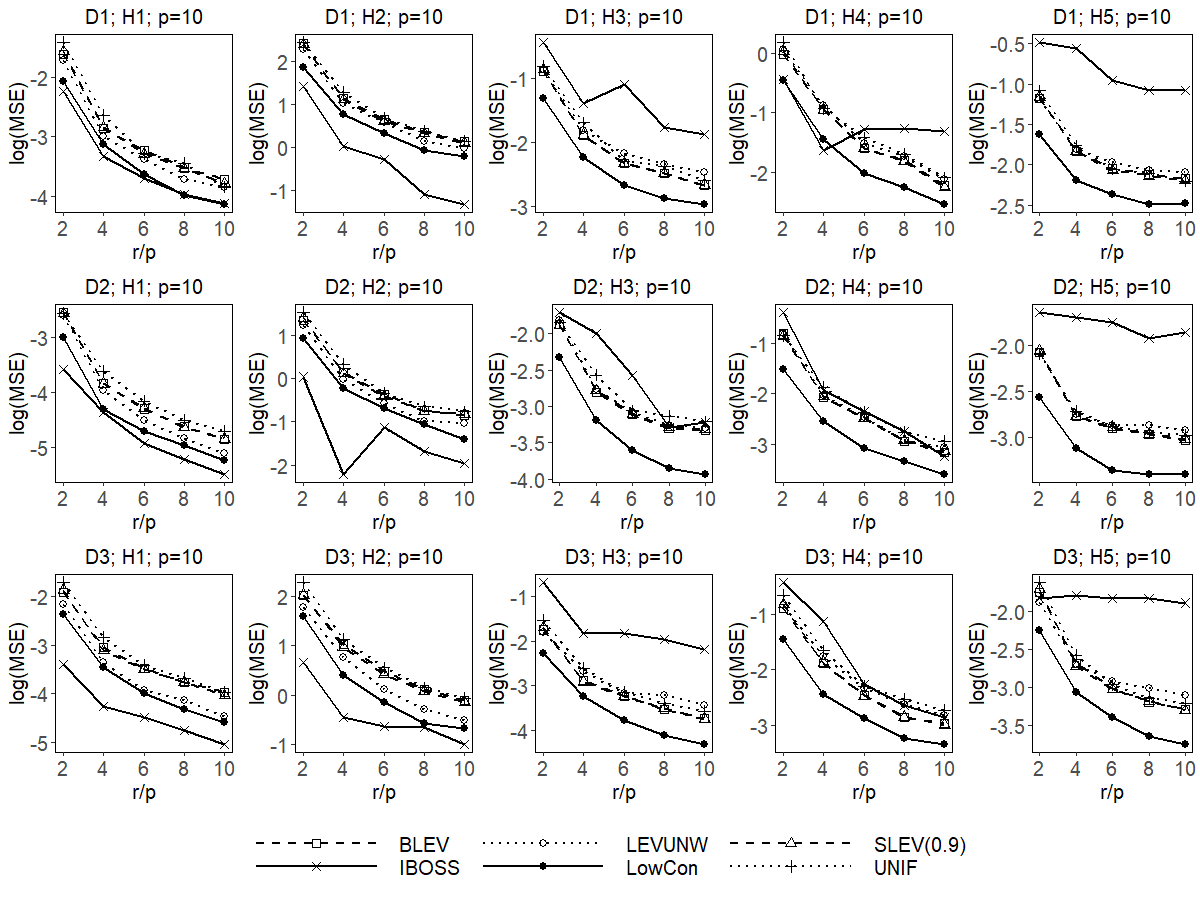

To compare the performance for different SLS estimators, we calculate the MSE for each of the SLS estimators based on 100 replicates, /100, where represents the SLS estimator in the th replication. Figure 6 and Figure 7 show the log(MSE) versus different subsample size under various settings, when and , respectively. In both figures, each row represents a particular data distribution D1D3 and each column represents a particular misspecification term H1H5.

In Figures 6 and 7, we first observe that UNIF, as expected, does not perform well. As two random subsampling methods, BLEV and SLEV perform similarly, and both perform better than UNIF in most of the cases. Such a phenomenon is attributed to the fact that both methods tend to select the data points with high leverage-scores, and these points are more informative for estimating the coefficient, compared to randomly selected points.

Next, we find both LEVUNW and IBOSS have decent performance when the misspecification term equals zero (the leftmost column). Their performance, however, is inconsistent when the non-zero misspecification term exists, i.e., they perform well in some cases and perform poorly on others. Note that these two methods, occasionally, are even inferior to the UNIF method. Such an observation indicates that these two methods are effective when the linear model assumption is correct, but are not robust when the model is misspecified. We attribute this observation to the fact that the most informative data points derived under the postulated model do not necessarily lead to a decent estimator when the postulated model is incorrect. In fact, the selected subsample can even be misleading and may dramatically deteriorate the performance of the subsample estimator.

Finally, we observe that the proposed LowCon method is consistently better than the UNIF method. Furthermore, LowCon has a decent performance in most of the cases, especially when the model is misspecified. This observation indicates LowCon is able to give a robust estimator under various misspecified linear models. Such success can be attributed to the fact that the proposed estimator has a relatively small upper bound for the worst-case MSE.

5 Real Data Analysis

We now evaluate the performance of different SLS estimators on two real-world datasets. One problem in real data analysis is that one does not know the true coefficient. It is thus impossible to calculate the mean squared error of a coefficient estimate. To overcome this problem, we consider the full-sample OLS estimator and the following three estimators as the surrogates for the true coefficient . One of them is the M-estimator , which is a well-known estimator in robust linear regression [27]. M-estimators can be calculated by using iterated re-weighted least squares, and it is known that such an estimator is more robust to the potential outliers in the data, compared to the OLS estimator [4]. We compute the M-estimator using the R package MASS with default parameters. We also consider the estimator yielded by the cellwise robust M regression method (CRM), denoted by [14]. Such a method improves the ordinary M-estimator by automatically identifying and replacing the outliers, resulting in a more robust estimator. We implement the CRM method using the R package crmReg. The results for the CRM method, however, are omitted in the second dataset, since the code did not stop within a reasonable amount of time. The last estimator we considered is the cubic smoothing spline estimator for the “null space” [44, 15, 55], denoted by . We now briefly introduce the cubic smoothing spline estimator in the following.

Suppose the response and the vector of predictors are related through the unknown functions such that , where . A widely used approach for estimating is via minimizing the penalized likelihood function,

| (9) |

where is the tuning parameter and is a penalty term. We refer to [44, 15, 39] for how to select the tuning parameter and how to construct the penalty term. The standard formulation of cubic smoothing splines performs the minimization of (9) in a reproducing kernel Hilbert space . In this case, the well-known representer theorem [44] states that there exist vectors and such that the minimizer of (9) is given by Here, the bivariate function is related to the reproducing kernel of , and we refer to [15] for technical details. Let be an matrix where the -th element equals . By construction of , one has [15]. Solving the minimization problem in (9) thus is equivalent to solving

| (10) |

We could then view the estimated in (10) as the “corrected” estimate of the true coefficient that takes into consideration the misspecified terms quantified by . We calculate such an estimate using the R package gss with the default parameters.

To compare the performance of different SLS estimators, we calculate the empirical MSE (EMSE) through one hundred replicates. In the th replicate, each subsampling method selects a subsample leading to an SLS estimator . For each of the four full-sample estimators (, , , and ), the corresponding EMSE is then calculated as

We emphasize that none of these full-sample estimators can be regarded as the gold standard. However, a robust SLS estimator should at least be relatively “close” to all of these estimators. That is to say, intuitively, a robust SLS estimator yields relatively small values of EMSEOLS, EMSEM, EMSECRM, and EMSESS.

Throughout this section, we set the parameter for the proposed LowCon method as 1. We opt to choose the subsample size as , and . The results in this section show that the proposed SLS estimator yields the smallest empirical mean squared error in almost all of the scenarios.

5.1 Africa Soil Property Prediction

Soil functional properties refer to the properties related to a soil’s capacity to support essential ecosystem services, which include primary productivity, nutrient and water retention, and resistance to soil erosion [16]. The soil functional properties are thus important for planning sustainable agricultural intensification and natural resource management. To measure the soil functional properties in a target area, a natural paradigm is to first collect a sample of soil in this area, then analyze the sample using the technique of diffuse reflectance infrared spectroscopy [36]. Such a paradigm might be time-consuming or even impractical if the desired sample of soil from the target area is difficult to obtain. Predicting the soil functional properties is thus a measurement-constrained problem.

With the help of greater availability of Earth remote sensing data, the practitioners are provided new opportunities to predict soil functional properties at unsampled locations. One of the Earth remote sensing databases is provided by the Shuttle Radar Topography Mission (SRTM), which aims to generate the most complete high-resolution digital topographic database of Earth [13]. In this section, we consider the Africa Soil Property Prediction dataset, which contains the soil samples from 1157 different areas (). We aim to analyze the relationship between the sand content, one of the soil functional properties, and the five features () derived from the SRTM data. The features include compound topographic index calculated from SRTM elevation data (CTI), SRTM elevation data (ELEV), topographic Relief calculated from SRTM elevation data (RELI), mean annual precipitation of average long-term Tropical Rainfall Monitoring Mission data (TMAP), and modified Fournier index of average long-term Tropical Rainfall Monitoring Mission data (TMFI). We assume the data follow the model,

| (11) |

where the random errors are i.i.d. and follow a non-centered normal distribution . Here, and represents a multivariate function that is unknown to the practitioner. The postulated model is thus a misspecified linear model. In our measurement-constrained setting, we assume the response vector is hidden unless explicitly requested. We then estimate the true coefficient of Model (11), i.e., , using subsampling methods.

The subsampling methods considered here are uniform subsampling (UNIF), basic leverage subsampling (BLEV), shrinkage leverage subsampling (SLEV) with parameter , unweighted-leverage subsampling (LEVUNW) [22, 23], information-based optimal subset selection (IBOSS) [48] and the proposed LowCon method. Table 1 summarizes the EMSEs for all six SLS estimators, and the best result in each row is in bold letter. We observe that the proposed LowCon method yields the best result in every row.

| UNIF | BLEV | SLEV | LEVUNW | IBOSS | LowCon | ||

|---|---|---|---|---|---|---|---|

| EMSEOLS | 5.39 | 2.92 | 3.44 | 2.09 | 34.87 | ||

| EMSEM | 5.38 | 2.97 | 3.50 | 2.13 | 34.07 | ||

| EMSECRM | 5.32 | 3.01 | 3.52 | 2.20 | 34.98 | ||

| EMSESS | 9.82 | 6.71 | 7.31 | 5.71 | 43.59 | ||

| EMSEOLS | 1.34 | 1.13 | 1.37 | 0.88 | 18.62 | ||

| EMSEM | 1.36 | 1.17 | 1.35 | 0.92 | 17.97 | ||

| EMSECRM | 1.38 | 1.21 | 1.37 | 1.00 | 18.51 | ||

| EMSESS | 5.49 | 5.04 | 5.71 | 4.55 | 27.09 | ||

| EMSEOLS | 0.61 | 0.45 | 0.64 | 0.38 | 2.84 | ||

| EMSEM | 0.62 | 0.44 | 0.65 | 0.39 | 2.64 | ||

| EMSECRM | 0.66 | 0.47 | 0.68 | 0.47 | 2.90 | ||

| EMSESS | 4.68 | 4.71 | 4.72 | 4.25 | 8.30 |

5.2 Diamond Price Prediction

The second real-data example we consider is the Diamond Price Prediction dataset 111The dataset can be downloaded from https://www.kaggle.com/shivam2503/diamonds., which contains the prices and the features of around 54,000 diamonds. Of interest is to analyze the relationship between the price of the diamond, and three continuous features (=3): weight of the diamond (caret), total depth percentage (depth), and width of top of diamond relative to widest point (table).

As the same setting used in Section 5.1, we assume the data follow a misspecified linear model,

Here, the random errors are i.i.d. and follow a non-centered normal distribution , where , and is a multivariate function that is unknown to the practitioner. Note that the price of a diamond might be time-consuming or even impossible to obtain if the diamond has not been on the market yet. We thus assume the value of the response vector is hidden unless explicitly requested, and we estimate the true coefficient using subsampling methods.

Table 2 summarizes the EMSEs for all the subsample estimators, and the best result in each row is in bold letter. From Table 2, we observe that the proposed LowCon algorithm yields decent performance in all the cases and the best result in most of the cases.

| UNIF | BLEV | SLEV | LEVUNW | IBOSS | LowCon | ||

|---|---|---|---|---|---|---|---|

| EMSEOLS | 7.01 | 4.24 | 5.29 | 4.67 | 8.96 | ||

| EMSEM | 7.08 | 4.52 | 5.60 | 4.96 | 6.07 | ||

| EMSESS | 11.16 | 9.13 | 10.13 | 9.69 | 10.32 | ||

| EMSEOLS | 2.54 | 2.09 | 1.76 | 2.68 | 8.68 | ||

| EMSEM | 2.88 | 2.37 | 2.89 | 5.82 | 2.19 | ||

| EMSESS | 7.41 | 6.83 | 6.53 | 7.54 | 10.18 | ||

| EMSEOLS | 1.36 | 0.83 | 1.03 | 1.28 | 8.16 | ||

| EMSEM | 1.72 | 1.40 | 1.32 | 5.38 | 1.33 | ||

| EMSESS | 6.27 | 5.50 | 5.91 | 5.67 | 9.56 |

6 Concluding Remarks

We considered the problem of estimating the coefficients in a misspecified linear model, under the measurement-constrained setting. When the model is correctly specified, various subsampling methods have been proposed to solve this problem. When the model is misspecified, however, we found the worst-case bias for a subsample least squares estimator can be inflated to be arbitrarily large. To overcome this problem, we aim to find a robust SLS estimator whose variance is bounded, and the worst-case bias is relatively small. We found such a goal can be achieved by selecting a subsample whose information matrix has a relatively small condition number. Motivated by this, we proposed the LowCon subsampling algorithm, which utilizes the orthogonal Latin hypercube design to identify sampling points. We proved the proposed estimator based on the subsample has a finite mean squared error. Furthermore, the bias of the proposed estimator has an upper bound, which approximately achieves the minimum value of the worst-case bias. We evaluated the performance of the proposed estimator through extensive simulation and real data analysis. Consistent with the theorem, the empirical results showed the proposed method has a robust performance.

The proposed algorithm can be easily extended to the cases when the predictor variables are categorical or are a mixture of categorical and continuous variables. The key idea is to replace the OLHD in Algorithm 1 by a proper design in a categorical (or mixture) design space. We refer to [30] and the reference therein for more discussion of such designs. Intuitively, utilizing such designs in Algorithm 1 will result in a subsample in a categorical (or mixture) design space with relatively low “condition number”.

Acknowledgment

The authors thank the associate editor and two anonymous reviewers for provided helpful comments on earlier drafts of the manuscript. The authors would like to acknowledge the support from the U.S. National Science Foundation under grants DMS-1903226, DMS-1925066, the U.S. National Institute of Health under grant R01GM122080.

SUPPLEMENTARY MATERIALS

- Title:

-

Proofs of theoretical results and related materials.

- More Discussion of Figure 1:

-

More discussion on the performance of the subsampling methods in the example shown in Figure 1.

- More Simulation Results:

-

We illustrate the impact of different choices of the parameter through more simulation results.

- Proof of Lemma 2.1:

-

A proof of the lemma stating the upper bound of the worst-case MSE.

- Proof of Theorem 3.1:

-

A proof of the main theorem stating the property of the proposed subsample least squares estimator.

- More discussion on Theorem 3.1:

-

A discussion of the impact of on Theorem 3.1.

- R-code:

-

A package containing code to perform the methods described in the article, the simulation studies, and the real data analysis.

- Dataset:

-

Data are publicly available.

Africa soil property prediction dataset: https://www.kaggle.com/c/afsis-soil-properties/data

Diamond price prediction dataset: https://www.kaggle.com/shivam2503/diamonds

Appendix A More Discussion of Figure 1

In the example shown in Figure 1, one may wonder about the chances of poor performances of the existing subsampling methods. To answer this question, we compare the proposed method (LowCon) with the uniform subsampling (UNIF) and the basic leverage subsampling method (BLEV) in terms of estimation error. We consider the mean squared error for each of the subsample least squares (SLS) based on one hundred replicates, /100, where represents the SLS estimator in the th replication. We consider different subsample sizes from ten to fifty. Table 3 summarizes the results, and the best result in each row is in bold letters. We observe that although the BLEV method performs better than UNIF, it still yields pretty large MSE. In other words, both UNIF and BLEV may result in unacceptable performance, especially when is small. We also observe the proposed LowCon method yields the best result in every row, indicating the performance of LowCon is robust to the misspecification term.

| UNIF | BLEV | LowCon | |

|---|---|---|---|

| 0.148 | 0.091 | ||

| 0.118 | 0.075 | ||

| 0.111 | 0.068 | ||

| 0.108 | 0.067 | ||

| 0.105 | 0.060 |

Appendix B More Simulation Results

Recall that the design space in Algorithm 1 is set to be , where and are the -percentile and -percentile of the th column of the scaled data points, respectively. Throughout Section 4, we set . In this section, we illustrate the impact of different choices of the parameter . We consider three different choices of , i.e., . Here, means the design space . We let the dimension . Other simulation settings are the same as the ones we used in Section 4. The results are shown in Figure 8, Figure 9, and Figure 10, respectively.

Consider the cases when . First, we observe that LowCon gives the best of the results in most of the cases when the model is correctly-specified, as shown in the leftmost column. Such an observation is expected since when , the LowCon method tends to select more data points with large leverage scores, resulting in a better estimation. We then observe that the performance of LowCon when is not as good as its performance when , indicating that a positive value of is essential for LowCon to work well in misspecified models. Consider the cases when . We observe LowCon yields unacceptable performance in many cases. Such an observation indicates the choice yields a large sampling bias to LowCon, resulting in poor performance. Finally, when , we observe LowCon yields reasonably well performance in most of the cases.

In summary, it is essential to select a that is neither too large nor too small for LowCon to perform well in misspecified models. In practice, we find works reasonably well in most of the cases.

Appendix C Proofs of Theoretical Results

C.1 Proof of Lemma 2.1

Proof.

Inequality (2) yields One thus has

| (12) | ||||

| (13) |

C.2 Proof of Theorem 3.1

The following Weyl’s inequalities are needed in the proof.

Theorem C.1.

Weyl’s inequalities [17] Let and be two matrices and . Let , and be the singular values of , and , respectively. Then

Proof of Theorem 3.1.

Let ; the Weyl’s inequalities yield

| (14) |

Let ; Weyl’s inequalities yield

| (15) |

Recall that, in Theorem 3.1, we assume . Combining Inequality (14) and Inequality (15) thus yields

| (16) |

Performing a Taylor expansion of the right-hand side of Inequality (16), which can be viewed as a function of , around the point yields

| (17) |

where is the remainder. Plugging Inequality (17) back into (16) yields

| (18) |

We now derive an upper bound for the first term on the right-hand side of Inequality (4). Note that

| (19) |

where Inequality (15) is used in the last step.

Appendix D More discussion on Theorem 3.1

We now discuss the impact of on Theorem 3.1. Recall that we have . For simplicity, we assume all the marginal distributions of the probability density function are symmetric, i.e., , for . Note that the data are first scaled to , and thus we have . Let to denote the design matrix generated from . By the definition of orthogonal Latin hypercube design, it is easy to check that . We also have

In summary, we have

| (22) |

Inequality (D) indicates that a large is associated with a larger upper bound of . Furthermore, for fixed , a “heavy-tailed” probability density function also yields a larger upper bound of .

∎

References

- [1] M. Ai, F. Wang, J. Yu, and H. Zhang. Optimal subsampling for large-scale quantile regression. Journal of Complexity, pages in press, DOI:10.1016/j.jco.2020.101512, 2020.

- [2] M. Ai, J. Yu, H. Zhang, and H. Wang. Optimal subsampling algorithms for big data regressions. Statistica Sinica, pages in press, DOI:10.5705/ss.202018.0439, 2019.

- [3] A. Alaoui and M. W. Mahoney. Fast randomized kernel ridge regression with statistical guarantees. In Advances in Neural Information Processing Systems, pages 775–783, 2015.

- [4] R. Andersen. Modern Methods for Robust Regression. SAGE, 2007.

- [5] G. E. Box and N. R. Draper. A basis for the selection of a response surface design. Journal of the American Statistical Association, 54(287):622–654, 1959.

- [6] G. Casella. Condition numbers and minimax ridge regression estimators. Journal of the American Statistical Association, 80(391):753–758, 1985.

- [7] T. M. Cioppa and T. W. Lucas. Efficient nearly orthogonal and space-filling Latin hypercubes. Technometrics, 49(1):45–55, 2007.

- [8] W. G. Cochran. Sampling Techniques. John Wiley & Sons, 2007.

- [9] M. Derezinski, M. K. Warmuth, and D. J. Hsu. Leveraged volume sampling for linear regression. In Advances in Neural Information Processing Systems, pages 2510–2519, 2018.

- [10] P. Drineas, M. Magdon-Ismail, M. W. Mahoney, and D. P. Woodruff. Fast approximation of matrix coherence and statistical leverage. Journal of Machine Learning Research, 13:3475–3506, 2012.

- [11] K.-T. Fang, R. Li, and A. Sudjianto. Design and Modeling for Computer Experiments. Chapman and Hall/CRC, 2005.

- [12] K.-T. Fang, C.-X. Ma, and P. Winker. Centered L2-discrepancy of random sampling and Latin hypercube design, and construction of uniform designs. Mathematics of Computation, 71(237):275–296, 2002.

- [13] T. G. Farr, P. A. Rosen, E. Caro, R. Crippen, R. Duren, S. Hensley, M. Kobrick, M. Paller, E. Rodriguez, L. Roth, et al. The shuttle radar topography mission. Reviews of Geophysics, 45(2), 2007.

- [14] P. Filzmoser, S. Höppner, I. Ortner, S. Serneels, and T. Verdonck. Cellwise robust m regression. Computational Statistics & Data Analysis, page 106944, 2020.

- [15] C. Gu. Smoothing Spline ANOVA Models. Springer Science & Business Media, 2013.

- [16] T. Hengl, G. B. Heuvelink, B. Kempen, J. G. Leenaars, M. G. Walsh, K. D. Shepherd, A. Sila, R. A. MacMillan, J. M. de Jesus, L. Tamene, et al. Mapping soil properties of Africa at 250 m resolution: random forests significantly improve current predictions. PLoS ONE, 10(6), 2015.

- [17] R. A. Horn and C. R. Johnson. Matrix Analysis. Cambridge University Press, 1990.

- [18] V. R. Joseph. Space-filling designs for computer experiments: A review. Quality Engineering, 28(1):28–35, 2016.

- [19] V. R. Joseph and Y. Hung. Orthogonal-maximin Latin hypercube designs. Statistica Sinica, pages 171–186, 2008.

- [20] J. Kiefer. Optimal design: Variation in structure and performance under change of criterion. Biometrika, 62(2):277–288, 1975.

- [21] J. P. Kleijnen. Design and Analysis of Simulation Experiments. Springer, 2008.

- [22] P. Ma, M. W. Mahoney, and B. Yu. A statistical perspective on algorithmic leveraging. Journal of Machine Learning Research, 16:861–911, 2015.

- [23] P. Ma and X. Sun. Leveraging for big data regression. Wiley Interdisciplinary Reviews: Computational Statistics, 7(1):70–76, 2015.

- [24] P. Ma, X. Zhang, X. Xing, J. Ma, and M. W. Mahoney. Asymptotic analysis of sampling estimators for randomized numerical linear algebra algorithms. The 23nd International Conference on Artificial Intelligence and Statistics. 2020, 2020.

- [25] M. W. Mahoney et al. Randomized algorithms for matrices and data. Foundations and Trends® in Machine Learning, 3(2):123–224, 2011.

- [26] M. D. McKay, R. J. Beckman, and W. J. Conover. A comparison of three methods for selecting values of input variables in the analysis of output from a computer code. Technometrics, 42(1):55–61, 2000.

- [27] P. Meer, D. Mintz, A. Rosenfeld, and D. Y. Kim. Robust regression methods for computer vision: A review. International journal of computer vision, 6(1):59–70, 1991.

- [28] C. Meng, Y. Wang, X. Zhang, A. Mandal, P. Ma, and W. Zhong. Effective statistical methods for big data analytics. Handbook of Research on Applied Cybernetics and Systems Science, page 280, 2017.

- [29] C. Meng, X. Zhang, J. Zhang, W. Zhong, and P. Ma. More efficient approximation of smoothing splines via space-filling basis selection. Biometrika, 107:723–735, 2020.

- [30] B. Minasny and A. B. McBratney. A conditioned Latin hypercube method for sampling in the presence of ancillary information. Computers & Geosciences, 32(9):1378–1388, 2006.

- [31] J.-S. Park. Optimal Latin hypercube designs for computer experiments. Journal of Statistical Planning and Inference, 39(1):95–111, 1994.

- [32] D. Pena and V. Yohai. A fast procedure for outlier diagnostics in large regression problems. Journal of the American Statistical Association, 94(446):434–445, 1999.

- [33] F. Pukelsheim. Optimal Design of Experiments. SIAM, 2006.

- [34] J. Sacks and D. Ylvisaker. Linear estimation for approximately linear models. The Annals of Statistics, pages 1122–1137, 1978.

- [35] B. Settles. Active learning. Synthesis Lectures on Artificial Intelligence and Machine Learning, 6(1):1–114, 2012.

- [36] K. D. Shepherd and M. G. Walsh. Development of reflectance spectral libraries for characterization of soil properties. Soil Science Society of America Journal, 66(3):988–998, 2002.

- [37] M. Stein. Large sample properties of simulations using Latin hypercube sampling. Technometrics, 29(2):143–151, 1987.

- [38] D. M. Steinberg and D. K. Lin. A construction method for orthogonal Latin hypercube designs. Biometrika, pages 279–288, 2006.

- [39] X. Sun, W. Zhong, and P. Ma. An asympirical smoothing parameters selection approach for smoothing spline anova models in large samples. arXiv preprint arXiv:2004.10271, 2020.

- [40] B. Tang. Orthogonal array-based Latin hypercubes. Journal of the American Statistical Association, 88(424):1392–1397, 1993.

- [41] S. K. Thompson. Simple random sampling. Sampling, Third Edition, pages 9–37, 2012.

- [42] L. N. Trefethen and D. Bau. Numerical Linear Algebra. SIAM, 1997.

- [43] M. Tsao and X. Ling. Subsampling method for robust estimation of regression models. Open Journal of Statistics, 2(03):281, 2012.

- [44] G. Wahba. Spline Models for Observational Data. SIAM, 1990.

- [45] H. Wang and Y. Ma. Optimal subsampling for quantile regression in big data. arXiv preprint arXiv:2001.10168, 2020.

- [46] H. Wang, Q. Xiao, and A. Mandal. Lhd: An R package for efficient Latin hypercube designs with flexible sizes. arXiv preprint arXiv:2010.09154, 2020.

- [47] H. Wang, Q. Xiao, and A. Mandal. Lhd: Latin hypercube designs (LHDs) algorithms. 2020. R package version 1.1.0.

- [48] H. Wang, M. Yang, and J. Stufken. Information-based optimal subdata selection for big data linear regression. Journal of the American Statistical Association, pages 1–13, 2018.

- [49] H. Wang, R. Zhu, and P. Ma. Optimal subsampling for large sample logistic regression. Journal of the American Statistical Association, 113(522):829–844, 2018.

- [50] Y. Wang, A. W. Yu, and A. Singh. On computationally tractable selection of experiments in measurement-constrained regression models. The Journal of Machine Learning Research, 18(1):5238–5278, 2017.

- [51] C. F. J. Wu and M. S. Hamada. Experiments: Planning, Analysis, and Optimization. John Wiley & Sons, 2011.

- [52] R. Xie, Z. Wang, S. Bai, P. Ma, and W. Zhong. Online decentralized leverage score sampling for streaming multidimensional time series. In The 22nd International Conference on Artificial Intelligence and Statistics, pages 2301–2311, 2019.

- [53] K. Q. Ye. Orthogonal column Latin hypercubes and their application in computer experiments. Journal of the American Statistical Association, 93(444):1430–1439, 1998.

- [54] J. Yu, H. Wang, M. Ai, and H. Zhang. Optimal distributed subsampling for maximum quasi-likelihood estimators with massive data. Journal of the American Statistical Association, pages in press, DOI: 10.1080/01621459.2020.1773832, 2020.

- [55] J. Zhang, H. Jin, Y. Wang, X. Sun, P. Ma, and W. Zhong. Smoothing spline ANOVA models and their applications in complex and massive datasets. Topics in Splines and Applications, page 63, 2018.

- [56] X. Zhang, R. Xie, and P. Ma. Statistical leveraging methods in big data. In Handbook of Big Data Analytics, pages 51–74. Springer, 2018.

- [57] X. Zhu, J. Lafferty, and R. Rosenfeld. Semi-supervised learning with graphs. PhD thesis, Carnegie Mellon University, Language Technologies Institute, School of Computer Science, 2005.