Low-rank Tensor Learning with

Nonconvex

Overlapped Nuclear Norm

Regularization

Abstract

Nonconvex regularization has been popularly used in low-rank matrix learning. However, extending it for low-rank tensor learning is still computationally expensive. To address this problem, we develop an efficient solver for use with a nonconvex extension of the overlapped nuclear norm regularizer. Based on the proximal average algorithm, the proposed algorithm can avoid expensive tensor folding/unfolding operations. A special “sparse plus low-rank” structure is maintained throughout the iterations, and allows fast computation of the individual proximal steps. Empirical convergence is further improved with the use of adaptive momentum. We provide convergence guarantees to critical points on smooth losses and also on objectives satisfying the Kurdyka-Łojasiewicz condition. While the optimization problem is nonconvex and nonsmooth, we show that its critical points still have good statistical performance on the tensor completion problem. Experiments on various synthetic and real-world data sets show that the proposed algorithm is efficient in both time and space and more accurate than the existing state-of-the-art.

Keywords: Low-rank tensor, Proximal algorithm, Proximal average algorithm, Nonconvex regularization, Overlapped nuclear norm.

1 Introduction

Tensors can be seen as high-order matrices and are widely used for describing multilinear relationships in the data. They have been popularly applied in areas such as computer vision, data mining and machine learning (Kolda and Bader, 2009; Zhao et al., 2016; Song et al., 2017; Papalexakis et al., 2017; Hong et al., 2020; Janzamin et al., 2020). For example, color images (Liu et al., 2013), hyper-spectral images (Signoretto et al., 2011; He et al., 2019), and knowledge graphs (Nickel et al., 2015; Lacroix et al., 2018) can be naturally represented as third-order tensors, while color videos can be seen as 4-order tensors (Candès et al., 2011; Bengua et al., 2017). In YouTube, users can follow each other and belong to the same subscribed channels. By treating channel as the third dimension, the users’ co-subscription network can also be represented as a third-order tensor (Lei et al., 2009).

In many applications, only a few entries in the tensor are observed. For example, each YouTube user usually only interacts with a few other users (Lei et al., 2009; Davis et al., 2011), and in knowledge graphs, we can only have a few labeled edges describing the relations between entities (Nickel et al., 2015; Lacroix et al., 2018). Tensor completion, which aims at filling in this partially observed tensor, has attracted a lot of recent interest (Rendle and Schmidt-Thieme, 2010; Signoretto et al., 2011; Bahadori et al., 2014; Cichocki et al., 2015).

In the related task of matrix completion, the underlying matrix is often assumed to be low-rank (Candès and Recht, 2009), as its rows/columns share similar characteristics. The nuclear norm, which is the tightest convex envelope of the matrix rank (Boyd and Vandenberghe, 2009), is popularly used as its surrogate in low-rank matrix completion (Cai et al., 2010; Mazumder et al., 2010). In tensor completion, the low-rank assumption also captures relatedness in the different tensor dimensions (Tomioka et al., 2010; Acar et al., 2011; Song et al., 2017; Hong et al., 2020). However, tensors are more complicated than matrices. Indeed, even the computation of tensor rank is NP-hard (Hillar and Lim, 2013). In recent years, many convex relaxations based on the matrix nuclear norm have been proposed for tensors. Examples include the tensor trace norm (Cheng et al., 2016), overlapped nuclear norm (Tomioka et al., 2010; Gandy et al., 2011), and latent nuclear norm (Tomioka et al., 2010). Among these convex relaxations, the overlapped nuclear norm is the most popular as it (i) can be evaluated exactly by performing SVD on the unfolded matrices (Cheng et al., 2016), (ii) has better low-rank approximation (Tomioka et al., 2010), and (iii) can lead to exact recovery (Tomioka et al., 2011; Tomioka and Suzuki, 2013; Mu et al., 2014).

The (overlapped) nuclear norm equally penalizes all singular values. Intuitively, larger singular values are more informative and should be less penalized (Mazumder et al., 2010; Lu et al., 2016b; Yao et al., 2019b). To alleviate this problem in low-rank matrix learning, various adaptive nonconvex regularizers have been recently introduced. Examples include the capped- norm (Zhang, 2010b), log-sum-penalty (LSP) (Candès et al., 2008), truncated nuclear norm (TNN) (Hu et al., 2013), smoothed-capped-absolute-deviation (SCAD) (Fan and Li, 2001) and minimax concave penalty (MCP) (Zhang, 2010a). All these assign smaller penalties to the larger singular values. This leads to better recovery performance in many applications such as image recovery (Lu et al., 2016b; Gu et al., 2017) and collaborative filtering (Yao et al., 2019b), and lower statistical errors of various matrix completion and regression problems (Gui et al., 2016; Mazumder et al., 2020).

Motivated by the success of adaptive nonconvex regularizers in low-rank matrix learning, there are recent works that apply nonconvex regularization in learning low-rank tensors. For example, the TNN regularizer is used with the overlapped nuclear norm regularizer on video processing (Xue et al., 2018) and traffic data processing (Chen et al., 2020). In this paper, we propose a general nonconvex variant of the overlapped nuclear norm regularizer for low-rank tensor completion. Unlike the standard convex tensor completion problem, the resulting optimization problem is nonconvex and more difficult to solve. Previous algorithms in (Xue et al., 2018; Chen et al., 2020) are computationally expensive, and have neither convergence results nor statistical guarantees.

To solve this issue, based on the proximal average algorithm (Bauschke et al., 2008), we develop an efficient solver with much smaller time and space complexities. The keys to its success are on (i) avoiding expensive tensor folding/unfolding, (ii) maintaining a “sparse plus low-rank” structure on the iterates, and (iii) incorporating the adaptive momentum (Li and Lin, 2015; Li et al., 2017; Yao et al., 2017). Convergence guarantees to critical points are provided under the usual smoothness assumption for the loss and further Kurdyka-Łojasiewicz (Attouch et al., 2013) condition on the whole learning objective.

Besides, we study its statistical guarantees, and show that critical points of the proposed objective can have small statistical errors under the restricted strong convexity condition (Agarwal et al., 2010). Informally, for tensor completion with unknown noise, we show that the recovery error can be bounded as (see Theorem 16), where omits constant terms, (resp. ) is the ground-truth (resp. recovered) tensor, is the tensor order and is the rank of unfolding matrix on the th mode. When Gaussian additive noise is assumed, we show that the recovery error also depends linearly with the noise level (see Corollary 17) and where is the tensor size and is the number of observed elements (see Corollary 18).

We further extend it for use with Laplacian regularizer as in spatial-temporal analysis and non-smooth losses as in robust tensor completion. Experiments on a variety of synthetic and real-world data sets (including images, videos, hyper-spectral images, social networks, knowledge graphs and spatial-temporal climate observation records) show that the proposed algorithm is more efficient and has much better empirical performance than other low-rank tensor regularization and decomposition methods.

Difference with the Conference Version

A preliminary conference version of this work (Yao et al., 2019a) was published in ICML-2019. The main differences with this conference version are as follows.

- 1).

-

2).

Statistical guarantee of the proposed model for the tensor completion problem is added in Section 3.5, which shows that tensors that are not too spiky can be recovered. We also show how the recovery performance can depend on noise level, tensor ranks, and the number of observations.

-

3).

In Section 4, we enable the proposed method work with robust loss function (which is nonconvex and nonsmooth) and Laplacian regularizer. These enable the proposed algorithm to be applied to a wider range of application scenarios such as knowledge graph completion, spatial-temporal analysis and robust video recovery.

- 4).

Notation

Vectors are denoted by lowercase boldface, matrices by uppercase boldface, and tensors by Euler.

For a matrix (without loss of generality, we assume that ), denotes its th singular, its nuclear norm is ; returns its maximum singular.

For tensors, we follow the notation in (Kolda and Bader, 2009). For a -order tensor (without loss of generality, we assume ), its th entry is . One can unfold along its th mode to obtain the matrix with , whose entry is with . One can also fold a matrix back to a tensor , with , and as defined above. A slice in a tensor is a matrix obtained by fixing all but two ’s indices. The inner product of two -order tensors and is , the Frobenius norm is , returns the value of the element in with the maximum absolute value.

For a proper and lower-semi-continuous function , denotes its Frechet subdifferential (Attouch et al., 2013).

Finally, is the observer operator, i.e., given a binary tensor and an arbitrary tensor , if and otherwise.

2 Related Works

2.1 Low-Rank Matrix Learning

Learning of a low-rank matrix can be formulated as the following optimization problem:

| (1) |

where is a low-rank regularizer, is a hyperparameter, and is a loss function that is -Lipschitz smooth111In other words, for any .. Existing methods for low-rank matrix learning generally fall into three types: (i) nuclear norm minimization; (ii) nonconvex regularization; and (iii) matrix factorization.

2.1.1 Nuclear Norm Minimization

A common choice for is the nuclear norm regularizer. Using the proximal algorithm (Parikh and Boyd, 2013) on (1), the iterate at iteration is given by , where

| (2) |

Here, controls the stepsize (), and

| (3) |

is the proximal step. The following Lemma shows that can be obtained by shrinking the singular values of , which encourages to be low-rank.

Lemma 1

(Cai et al., 2010) , where is the SVD of , and .

A special class of low-rank matrix learning problems is matrix completion, which attempts to find a low-rank matrix that agrees with the observations in data :

| (4) |

Here, positions of the observed elements in are indicated by ’s in the binary matrix . Setting in (1), in (2) becomes:

| (5) |

Note that is low-rank and is sparse. thus has a “sparse plus low-rank” structure. This allows the SVD computation in Lemma 1 to be much more efficient (Mazumder et al., 2010). Specifically, on using the power method to compute ’s SVD, most effort is spent on multiplications of the forms and (where and ). Let in (5) be low-rank factorized as , where and with rank . Computing

| (6) |

takes time. Usually, and . Thus, this is much faster than directly multiplying and , which takes time. The same holds for computing . The proximal step in (3) takes a total of time, while a direct computation without utilizing the “sparse plus low-rank” structure takes time. Besides, as only and the factorized form of need to be kept, the space complexity is reduced from to .

2.1.2 Nonconvex Low-Rank Regularizer

Instead of using a convex in (1), the following nonconvex regularizer has been commonly used (Gui et al., 2016; Lu et al., 2016b; Gu et al., 2017; Yao et al., 2019b):

| (7) |

where is nonconvex and possibly nonsmooth. We assume the following on .

Assumption 1

is a concave and non-decreasing function on , with and for a positive constant .

Table 1 shows the ’s corresponding to the popular nonconvex regularizers of capped- penalty (Zhang, 2010b), log-sum-penalty (LSP) (Candès et al., 2008), truncated nuclear norm (TNN) (Hu et al., 2013), smoothed-capped-absolute-deviation (SCAD) (Fan and Li, 2001), and minimax concave penalty (MCP) (Zhang, 2010a). These nonconvex regularizers have similar statistical guarantees (Gui et al., 2016), and perform empirically better than the convex nuclear norm regularizer (Lu et al., 2016b; Yao et al., 2019b). The proximal algorithm can also be used, and converges to a critical point (Attouch et al., 2013). Analogous to Lemma 1, the underlying proximal step

| (8) |

can be obtained as follows.

Lemma 2

(Lu et al., 2016b) , where is the SVD of , and .

2.1.3 Matrix Factorization

Note that the aforementioned regularizers require access to individual singular values. As computing the singular values of a matrix (with ) via SVD takes time, this can be costly for a large matrix. Even when rank- truncated SVD is used, the computation cost is still . To reduce the computational burden, factored low-rank regularizers are proposed (Srebro et al., 2005; Mazumder et al., 2010). Specifically, equation (1) is rewritten into a factored form as

| (9) |

where is factorized as with and , is a regularizer on and , and is a hyperparameter. When , this reduces to matrix factorization (Vandereycken, 2013; Boumal and Absil, 2015; Tu et al., 2016; Wang et al., 2017). After factorization, gradient descent or alternative minimization are usually used for optimization. When certain conditions (such as proper initialization, restricted strong convexity (RSC) (Negahban and Wainwright, 2012), or restricted isometry property (RIP) (Candès and Tao, 2005)) are met, statistical guarantees can be obtained (Zheng and Lafferty, 2015; Tu et al., 2016; Wang et al., 2017).

Note that in Table 1, the nuclear norm is the only regularizer that has an equivalent factored form . For a matrix with ground-truth rank no larger than , it has been shown that the nuclear norm can be rewritten in a factored form as (Srebro et al., 2005)

However, the other nonconvex regularizers need to penalize individual singular values, and so cannot be written in factored form.

2.2 Low-Rank Tensor Learning

A -order tensor has rank one if it can be written as the outer product of vectors, i.e., where denotes the outer product (i.e., ). In general, the rank of a tensor is the smallest number of rank-one tensors that generate as their sum (Kolda and Bader, 2009).

To impose a low-rank structure on tensors, factorization methods (such as the Tucker / CP (Kolda and Bader, 2009; Hong et al., 2020), tensor-train (Oseledets, 2011) and tensor ring (Zhao et al., 2016) decompositions) have been used for low-rank tensor learning. These methods assume that the tensor can be decomposed into low-rank factor matrices (Kolda and Bader, 2009), which are then learned by alternating least squares or coordinate descent (Acar et al., 2011; Xu et al., 2013; Balazevic et al., 2019). Kressner et al. (2014) proposed to utilize the Riemannian structure on the manifold of tensors with fixed multilinear rank, and then perform nonlinear conjugate gradient descent. It can be speeded up by preconditioning (Kasai and Mishra, 2016). However, these models suffer from the problem of local minimum, and have no theoretical guarantee on the convergence rate. Moreover, its per-iteration cost depends on the product of all the mode ranks, and so can be expensive. Thus, they may lead to worse approximations and inferior performance (Tomioka et al., 2011; Liu et al., 2013; Guo et al., 2017).

Due to the above limitations, the nuclear norm, which has been commonly used in low-rank matrix learning, has been extended to the learning of low-rank tensors (Tomioka et al., 2010; Signoretto et al., 2011; Gu et al., 2014; Yuan and Zhang, 2016; Zhang and Aeron, 2017). The most commonly used low-rank tensor regularizer is the following (convex) overlapped nuclear norm:

Definition 3

(Tomioka et al., 2010) The overlapped nuclear norm of a -order tensor is , where are hyperparameters.

Note that the nuclear norm is a convex envelop of the matrix rank (Candès and Recht, 2009). Similarly, is a convex envelop of the tensor rank (Tomioka et al., 2010, 2011). Empirically, has better performance than the other nuclear norm variants in many tensor applications such as image inpainting (Liu et al., 2013) and multi-relational link prediction (Guo et al., 2017). On the theoretical side, let be the ground-truth tensor, and be the tensor obtained by solving the overlapped nuclear norm regularized problem. The statistical error between and has been established in tensor decomposition (Tomioka et al., 2011) and robust tensor decomposition problems (Gu et al., 2014). Specifically, under the restricted strong convexity (RSC) condition (Negahban and Wainwright, 2012), can be bounded by , where is the noise level and is the rank of . Furthermore, we can see that when (no noise), exactly recovery can be guaranteed.

2.3 Proximal Average (PA) Algorithm

Let be a Hilbert space of , which can be a scalar/vector/matrix/tensor variable. Consider the following optimization problem:

| (10) |

where is the loss and each is a regularizer with hyper-parameter . Often, is complicated, and its proximal step does not have a simple solution. Hence, the proximal algorithm cannot be efficiently used. However, it is possible that the proximal step for each individual can be easy obtained. For example, let and . The closed-form solution on the proximal step for (resp. ) is given by the soft-thresholding operator (Efron et al., 2004) (resp. singular value thresholding operator (Cai et al., 2010)). However, the closed-form solution does not exist for the proximal step with .

In this case, the proximal average (PA) algorithm (Bauschke et al., 2008) can be used instead. The PA algorithm generates ’s as

| (11) | ||||

| (12) | ||||

| (13) |

As each individual proximal step in (13) is easy, the PA algorithm can be significantly faster than the proximal algorithm (Yu, 2013; Zhong and Kwok, 2014; Yu et al., 2015; Shen et al., 2017). When both and are convex, the PA algorithm converges to an optimal solution of (10) with a proper choice of stepsize (Yu, 2013; Shen et al., 2017). Recently, the PA algorithm has also been extended to nonconvex and ’s (Zhong and Kwok, 2014; Yu et al., 2015). Moreover, can be adaptively changed to obtain an empirically faster convergence (Shen et al., 2017).

3 Proposed Algorithm

Analogous to the low-rank matrix completion problem in (1), we consider the following low-rank tensor completion problem with a nonconvex extension of the overlapped nuclear norm:

| (14) |

Here, the observed elements are in , is the tensor to be recovered, is a smooth loss function, and is a nonconvex regularizer in the form in (7). Unlike the overlapped nuclear norm in Definition 3, here we only sum over modes. This is useful when some modes are already small (e.g., the number of bands in color images), and so do not need to be low-rank regularized. When and in (7), problem (14) reduces to (convex) overlapped nuclear norm regularization. When and is the square loss, (14) reduces to the matrix completion problem:

which can be solved by the proximal algorithm as in (Lu et al., 2016b; Yao et al., 2019b). In the sequel, we only consider .

3.1 Issues with Existing Solvers

First, consider the case where in (7) is convex. While may not be equal to , it can be easily shown that existing optimization solvers in (Tomioka et al., 2010; Boyd et al., 2011; Liu et al., 2013) can still be used. However, when is nonconvex, the fast low-rank tensor completion (FaLRTC) solver (Liu et al., 2013) cannot be applied, as the dual of (14) cannot be derived. Tomioka et al. (2010) used the alternating direction of multiple multipliers (ADMM) (Boyd et al., 2011) solver for the overlapped nuclear norm. Recently, it is used in (Chen et al., 2020) to solve a special case of (14), in which is the truncated nuclear norm (TNN) regularizer (see Table 1). Specifically, (14) is first reformulated as

Using ADMM, it then generates iterates as

| (15) | ||||

| (16) | ||||

| (17) |

where ’s are the dual variables, and . The proximal step in (16) can be computed from Lemma 2. Convergence of this ADMM procedure is guaranteed in (Hong et al., 2016; Wang et al., 2019). However, it does not utilize the sparsity induced by . Moreover, as the tensor needs to be folded and unfolded repeatedly, the iterative procedure is expensive, taking space and time per iteration.

On the other hand, the proximal algorithm (Section 2.1) cannot be easily used, as the proximal step for is not simple in general.

3.2 Structure-aware Proximal Average Iterations

Note that in (7) admits a difference-of-convex decomposition (Hartman, 1959; Le Thi and Tao, 2005), i.e., can be decomposed as where and are convex (Yao et al., 2019b). The proximal average (PA) algorithm (Section 2.3) has been recently extended for nonconvex and ’s, where each admits a difference-of-convex decomposition (Zhong and Kwok, 2014). Hence, as (14) is in the form in (10), one can generate the PA iterates as:

| (18) | |||||

| (19) | |||||

| (20) |

where is a sparse tensor with

| (21) |

In (20), each individual proximal step can be computed using Lemma 2. However, tensor folding and unfolding are still required. A direct application of the PA algorithm is as expensive as using ADMM (see Table 2).

In the following, we show that by utilizing the “sparse plus low-rank” structure, the PA iterations can be computed efficiently without tensor folding/unfolding. In the earlier conference version (Yao et al., 2019a), we only considered the case . Here, we extend this to by noting that the coordinate format of sparse tensors can naturally handle tensors with arbitrary orders (Section 3.2.1) and the proximal step can be performed without tensor folding/unfolding (Section 3.2.2).

3.2.1 Efficient Computations of and in (18), (19)

First, rewrite (20) as , where and . Recall that obtained from the proximal step is low-rank. Let its rank be . Hence, can be represented as , where and . In each PA iteration, we avoid constructing the dense by storing the above low-rank factorized form of instead. Similarly, we also avoid constructing in (18) by storing it implicitly as

| (22) |

in (19) can then be rewritten as

| (23) |

The sparse tensor in (21) can be constructed efficiently by using the coordinate format222For a sparse -order tensor, its th nonzero element is represented in the coordinate format as , where are indices on each mode and is the value. (Bader and Kolda, 2007). Using (22), each can be computed by finding the corresponding rows in as shown in Algorithm 1. This takes time.

3.2.2 Efficient Computation of in (20)

Recall that the proximal step in (20) requires SVD, which involves matrix multiplications in the form (where ) and (where ). Using the “sparse plus low-rank” structure in (23), these can be computed as

| (24) |

and

| (25) |

The first terms in (24) and (25) can be easily computed in space and time. The last terms ( and ) are sparse, and can be computed in space and time by using sparse tensor packages such as the Tensor Toolbox (Bader and Kolda, 2007). However, a direct computation of the and terms involves tensor folding/unfolding, and is expensive. By examining how elements are ordered by folding/unfolding, the following shows that these multiplications can indeed be computed efficiently without explicit folding/unfolding.

Proposition 4

Let , , and (resp.) be the th column of (resp.). For any , and , we have

| (26) | ||||

| (27) |

where is the Kronecker product, , and reshapes a vector of length into a matrix of size .

In the earlier conference version (Yao et al., 2019a), Proposition 3.2 there (not the proposed algorithm) limits the usage to . Without Proposition 4, the algorithm can suffer from expensive computation cost, and thus has no efficiency advantage over the simple use of the PA algorithm. Specifically, when mapping the vector back to a matrix, we do not need to take a special treatment on the size of matrix. The reason is that, has elements and we just need to map it back to a matrix of size . Thus, we do not have parameters for mat operation in the conference version. However, when , has elements, we need to check whether ideas used in the conference version can be done in a similar way. As a result, we have two more parameters for the mat operation here, which customize reshaping matrix to a proper size.

3.2.3 Time and Space Complexities

A direct computation of takes time and space. By using the computation in Proposition 4, these are reduced to time and space. This is also the case for . Details are in the following.

| operation | time | space | |

| reshaping | |||

| multiplication | |||

| Kronecker product | |||

| summation | |||

| total for (26) | |||

| operation | time | space | |

| reshaping | |||

| multiplication | |||

| reshaping | |||

| multiplication | |||

| summation | |||

| total for (27) | |||

Combining the above, and noting that we have to keep the factorized form of , computing all the proximal steps in (20) takes

| (28) |

space and

| (29) |

time. Empirically, as will be seen in the experimental results in Section 5.1.2, , . Hence, (28) and (29) are much smaller than the complexities with a direct usage of PA and ADMM in Section 3.1 (Table 2).

3.3 Use of Adaptive Momentum

The PA algorithm uses only first-order information, and can be slow to converge empirically (Parikh and Boyd, 2013). To address this problem, we adopt adaptive momentum, which uses historical iterates to speed up convergence. This has been popularly used in stochastic gradient descent (Duchi et al., 2011; Kingma and Ba, 2014), proximal algorithms (Li and Lin, 2015; Yao et al., 2017; Li et al., 2017), cubic regularization (Wang et al., 2020), and zero-order black-box optimization (Chen et al., 2019). Here, we adopt the adaptive scheme in (Li et al., 2017).

The resultant procedure, which will be called NOnconvex Regularized Tensor (NORT), is shown in Algorithm 2. When the extrapolation step achieves a lower function value (step 4), the momentum is increased to further exploit the opportunity of acceleration; otherwise, is decayed (step 7). When step 5 is performed, . in step 9 becomes

| (30) |

which still has the “sparse plus low-rank” structure. When step 7 is performed, , and obviously the resultant is “sparse plus low-rank”. Thus, the more efficient reformulations in Proposition 4 can be applied in computing the proximal steps at step 11. Note that the rank of in step 11 is determined implicitly by the proximal step. As and are implicitly represented in factorized forms, and (in step 3) do not need to be explicitly constructed. As a result, the resultant time and space complexities are the same as those in Section 3.2.3.

3.4 Convergence Properties

In this section, we analyze the convergence properties of the proposed algorithm. As can be seen from (14), we have here. Moreover, throughout this section, we assume that the loss is (Lipschitz-)smooth.

Note that existing proofs for PA algorithm (Yu, 2013; Zhong and Kwok, 2014; Yu et al., 2015) cannot be directly used, as adaptive momentum has not been used with the PA algorithm on nonconvex problems (see Table 2), and also that they do not involve tensor folding/unfolding operations. Our proof strategy will still follow the three main steps in proving convergence of PA:

-

1.

Show that the proximal average step with ’s in (13) corresponds to a regularizer;

-

2.

Show that this regularizer, when combined with the loss in (10), serves as a good approximation of the original objective .

-

3.

Show that the proposed algorithm finds critical points of this approximate optimization problem.

First, the following Proposition shows that the average step in (18) and proximal steps in (20) together correspond to a new regularizer .

Proposition 6

For any , , where

Analogous to (10), let the objective corresponding to regularizer be

| (31) |

The following bounds the difference between the optimal values ( and , respectively) of the objectives in (10) and . It thus shows that serves as an approximation to , which is controlled by .

Proposition 7

, where is defined in Assumption 1.

Before showing the convergence of the proposed algorithm, the following Proposition first shows the condition of being critical points of .

Proposition 8

If there exists such that , then is a critical point of .

Finally, we show how convergence to critical points can be ensured by the proposed algorithm under smooth assumption of loss (Section 3.4.1) and Kurdyka-Łojasiewicz condition for the approximated objective (Section 3.4.2).

3.4.1 With Smoothness Assumption on Loss

Theorem 9

The sequence generated from Algorithm 2 has at least one limit point, and all limits points are critical points of .

Proof [Sketch, details are in Appendix B.5.] The main idea is as follows. First, we show that (i) if step 5 is performed, ; (ii) if step 7 is performed, we have . Combining the above two conditions, we obtain

where and are partitions of such that when step 5 is performed, and when step 7 is performed. Finally, when , we discuss three cases: (i) is finite, is infinite; (ii) is infinite, is finite; and (iii) both and are infinite. Let be a limit point of , and be a subsequence that converges to . In all three cases, we show that

Thus,

we must have

is also a critical point

based on Proposition 8.

It is easy to see that

we have not made any specifications

on the limit points.

Thus, all limit points are also critical points.

Recall that is generated from in steps 9-12 and indicates convergence to a critical point (Proposition 8). Thus, we can measure convergence of Algorithm 2 by . Corollary 10 shows that a rate of can be obtained, which is also the best possible rate for first-order methods on general nonconvex problems (Nesterov, 2013; Ghadimi and Lan, 2016).

Corollary 10

, where .

3.4.2 With Kurdyka-Łojasiewicz Condition on Approximated Objective

In Section 3.4.1, we showed the convergence results when is smooth and is of the form in (7). In this section, we consider using the Kurdyka-Łojasiewicz (KL) condition (Attouch et al., 2013; Bolte et al., 2014) on , which has been popularly used in nonconvex optimization, particularly in gradient descent (Attouch et al., 2013) and proximal gradient algorithms (Bolte et al., 2014; Li and Lin, 2015; Li et al., 2017). For example, the class of semi-algebraic functions satisfy the KL condition. More examples can be found in (Bolte et al., 2010, 2014).

Definition 12

A function : has the uniformized KL property if for every compact set on which is a constant, there exist , such that for all and all , one has , where for some , and .

Since the KL property (Attouch et al., 2013; Bolte et al., 2014) does not require to be smooth or convex, it thus allows convergence analysis under the nonconvex and nonsmooth setting. However, such a property cannot replace the smoothness assumption in Section 3.4.1, as there are example functions which are smooth but fail to meet the KL condition (Section 4.3 of (Bolte et al., 2010)).

The following Theorem extends Algorithm 2 to be used with the uniformized KL property.

Theorem 13

Proof [Sketch, details are in Appendix B.7.] The proof idea generally follows that for (Bolte et al., 2014) with a special treatment for here. First, we show

Next, using the KL condition, we have

Then, let . From its definition, we have

Combining the above three inequalities, we obtain

Since

, then .

The above inequality becomes

,

where .

It is shown in (Bolte et al., 2014; Li and Lin, 2015; Li et al., 2017) that

for the sequence satisfying the above inequality, we have convergence to zero

with the

different rates stated in the Theorem.

In Corollary 10 and Theorem 13, the convergence rates do not depend on , and thus do not demonstrate the effect of momentum. Empirically, the proposed algorithm does have faster convergence when momentum is used, and will be shown in Section 5. This also agrees with previous studies in (Duchi et al., 2011; Kingma and Ba, 2014; Li and Lin, 2015; Li et al., 2017; Yao et al., 2017).

3.5 Statistical Guarantees

Existing statistical analysis on nonconvex regularization has been studied in the context of sparse and low-rank matrix learning. For example, the SCAD (Fan and Li, 2001), MCP (Zhang, 2010a) and capped- (Zhang, 2010b) penalties have shown to be better than the convex -regularizer on sparse learning problems; and SCAD, MCP and LSP have shown to be better than the convex nuclear norm in matrix completion (Gui et al., 2016). However, these results cannot be extended to the tensor completion problem here as the nonconvex overlapped nuclear norm regularizer in (10) is not separable. Statistical analysis on tensor completion has been studied with CP and Tucker decompositions (Mu et al., 2014), tensor ring decomposition (Huang et al., 2020), convex overlapped nuclear norm (Tomioka et al., 2011), and tensor nuclear norm (Yuan and Zhang, 2016; Cheng et al., 2016). They show that tensor completion is possible under the incoherence condition when the number of observations is sufficiently large. In comparison, in this section, we will (i) use the restricted strong convexity condition (Agarwal et al., 2010; Negahban et al., 2012)) instead of the incoherence condition, and (ii) study nonconvex overlapped nuclear norm regularization.

3.5.1 Controlling the Spikiness and Rank

In the following, we assume that elements in are drawn i.i.d. from the uniform distribution. However, when the sample size , tensor completion is not always possible. Take the special case of matrix completion as an example. If is an almost-zero matrix with only one element being , it cannot be recovered unless the nonzero element is observed. However, when gets larger, there is a vanishing probability of observing the nonzero element, and so with high probability (Candès and Recht, 2009; Negahban and Wainwright, 2012).

To exclude tensors that are too “spiky” and allow tensor completion, we introduce

| (32) |

which is an extension of the measure in (Negahban and Wainwright, 2012; Gu et al., 2014) for matrices. Note that is invariant to the scale of and . Moreover, when all elements in have the same value (least spiky); and when has only one nonzero element (spikiest). Similarly, to measure how close is to low-rank, we use

| (33) |

where ’s are pre-defined constants depending on the penalty strength. This is also extended from the measure in (Negahban and Wainwright, 2012; Gu et al., 2014) on matrices. Note that , with equality holds when all nonzero singular values of ’s are the same. The target tensor should thus have small and . In (14), assume for simplicity that and for . We then have the following constrained version of (14):

| (34) |

where encourages to be low-rank (i.e., small ), and the constraint on avoids to be spiky (i.e., small ).

3.5.2 Restricted Strong Convexity (RSC)

Following (Tomioka et al., 2011; Negahban and Wainwright, 2012; Loh and Wainwright, 2015; Zhu et al., 2018), we introduce the restricted strong convexity (RSC) condition.

Definition 14

(Restricted strong convexity (RSC) condition (Agarwal et al., 2010)) Let be an arbitrary -order tensor. It satisfies the RSC condition if there exist constants and such that

| (35) |

Let for . Consider the set of tensors parameterized by :

where is a positive constant. The following Lemma shows that the RSC condition holds when the low-rank tensor is not too spiky. If the RSC condition does not hold, the tensor can be too hard to be recovered.

Lemma 15

There exists , , , such that , where , we have

| (36) |

with a high probability of at least .

Another condition commonly used in low-rank matrix/tensor learning is incoherence (Candès and Recht, 2009; Mu et al., 2014; Yuan and Zhang, 2016), which prevents information of the row/column spaces of the matrix/tensor from being too concentrated in a few rows/columns. However, as discussed in (Negahban and Wainwright, 2012), the RSC condition is less restrictive than the incoherence condition, and can better describe “spikiness” (details are in Appendix A). Thus, we adopt the RSC instead of the incoherence condition here.

3.5.3 Main results

Let be the ground-truth tensor, and be an estimate of obtained as a critical point of (34). The following bounds the distance between and .

Theorem 16

Assume that is differentiable, and the RSC condition holds with . Assume that there exists positive constant such that , and satisfies

| (37) |

where . Then,

| (38) |

where , , and is the rank of .

Proof [Sketch, details are in Appendix B.9.2.] The general idea of this proof is inspired from (Loh and Wainwright, 2015).333Note, however, that Loh and Wainwright (2015) use different mathematical tools as they consider sparse vectors with separable dimensions, while we consider overlapped tensor regularization with coupled singular values. There are three main steps:

-

•

Let . We prove by contradiction that . Thus, we only need to consider the first condition in (35).

- •

- •

Since in (34), we have (as for a rank- matrix ). Thus, in Theorem 16, we can take , which is finite and cannot be arbitrarily large. While we do not have access to the ground-truth in practice, Theorem 16 shows that the critical point can be bounded by a finite distance from , which means that an arbitrary critical point may not be bad. From (38), we can also see that the error increases with the tensor order and rank . This is reasonable as tensors with higher orders or larger ranks are usually harder to estimate. Besides, recall that in Assumption 1 reflects how nonconvex the function is; while in Definition 14 measures strong convexity. Thus, these two quantities play opposing roles in (38). Specifically, a larger leads to a larger , and subsequently smaller ; whereas a larger leads to a larger , and subsequently larger .

Finally, note that the range for is (37) can be empty, which means there can be no to ensure Theorem 16. To understand when this can happen, consider the two extreme cases:

-

C1.

There is no noise in the observations, i.e., : In this case, (37) reduces to

Thus, such a may not exist when the number of observations is too small.

- C2.

Overall, when does not exist, it is likely that the tensor completion problem is too hard to have good recovery performance.

On the other hand, there are cases that always exists. For example, when , we have . The requirement on is then , and such a always exists.

3.5.4 Dependencies on Noise Level and Number of Observations

In this section, we demonstrate how the noise level affects (38). We assume that the observations are contaminated by additive Gaussian noise, i.e.,

| (39) |

where is a random variable following the normal distribution . The effects of the noise level and number of observations in are shown in Corollaries 17 and 18, respectively, which can be derived from Theorem 16.

Corollary 17

Let and . When is sufficiently large and (to ensure satisfies (37)), then .

Corollary 17 shows that the recovery error decreases as the noise level gets smaller, and we can expect an exact recovery when , which is empirically verified in Section 5.1.4. When , becomes the convex overlapping nuclear norm. In this case, Theorem 2 in (Tomioka et al., 2011) shows that the recovery error can be bounded as . Thus, Corollary 17 can be seen as an extension of Theorem 2 in (Tomioka et al., 2011) to the nonconvex case.

Corollary 18

Let . Suppose that the noise level is sufficiently small and (to ensure satisfies (37)). Then, .

Corollary 18 shows that the recovery error decays as gets larger. Such a dependency on the number of observed elements is the same as in matrix completion problems with nonconvex regularization (Gui et al., 2016). Corollary 18 can be seen as an extension of Corollary 3.6 in (Gui et al., 2016) to the tensor case.

4 Extensions

In this section, we show how the proposed NORT algorithm in Section 3 can be extended for robust tensor completion (Section 4.1) and tensor completion with graph Laplacian regularization (Section 4.2).

4.1 Robust Tensor Completion

In tensor completion applications such as video recovery and shadow removal, the observed data often have outliers (Candès et al., 2011; Lu et al., 2016a). Instead of using the square loss, more robust losses like the loss (Candès et al., 2011; Lu et al., 2013; Gu et al., 2014) and capped- loss (Jiang et al., 2015), are preferred.

In the following, we assume that the loss is of the form , where is smooth and satisfies Assumption 1. The optimization problem then becomes

| (40) |

Since is non-differentiable at , Algorithm 2 cannot be directly used. Motivated by smoothing the loss with the Huber loss (Huber, 1964) and the difference-of-convex decomposition of (Le Thi and Tao, 2005; Yao and Kwok, 2018), we propose to smoothly approximate by

| (41) |

where is in Assumption 1, is a smoothing parameter, and is the Huber loss (Huber, 1964):

The following Proposition shows that is smooth, and a small ensures that it is a close approximation to .

Proposition 19

is differentiable and .

Problem (40) is then transformed to

| (42) |

In Algorithm 3, we gradually reduce the smoothing factor in step 3, and use Algorithm 2 to solve the smoothed problem (42) in each iteration.

Convergence of Algorithm 3 is ensured in Theorem 20. However, the statistical guarantee in Section 3.5 does not hold as the robust loss is not smooth.

Theorem 20

The sequence generated from Algorithm 3 has at least one limit point, and all limits points are critical points of .

4.2 Tensor Completion with Graph Laplacian Regularization

The graph Laplacian regularizer is often used in tensor completion (Narita et al., 2012; Song et al., 2017). For example, in Section 5.5, we will consider an application in spatial-temporal analysis (Bahadori et al., 2014), namely, climate prediction based on meteorological records. The spatial-temporal data is represented by a 3-order tensor , where is the number of locations, is the number of time stamps, and is the number of variables corresponding to climate observations (such as temperature and precipitation). Usually, observations are only available at a few stations, and slices in corresponding to the unobserved locations are missing. Learning these entries can then be formulated as a tensor completion problem. To allow generalization to the unobserved locations, correlations among locations have to be leveraged. This can be achieved by using the graph Laplacian regularizer (Belkin et al., 2006) on a graph with nodes being the locations (Bahadori et al., 2014). Let the affinity matrix of be , and the corresponding graph Laplacian matrix be , where . As the spatial locations are stored along the tensor’s first dimension, the graph Laplacian regularizer is defined as , which encourages nearby stations to have similar observations. When , it reduces to the commonly used Frobenius-norm regularizer (Hsieh et al., 2015). With regularizer , problem (14) is then extended to:

| (43) |

where is a hyperparameter.

Using the PA algorithm, it can be easily seen that the updates in (18)-(20) for and remain the same, but that for becomes

To maintain efficiency of NORT, the key is to exploit the low-rank structures. Using (22), can be written as

| (44) |

can also be rewritten in low-rank form as

For matrix multiplications of the forms and involved in the SVD of the proximal step, we have

| (45) |

and

| (46) |

Thus, one can still leverage the efficient computational procedures in Proposition 4 to compute , where in (45), and in (46).

5 Experiments

In this section, experiments are performed on both synthetic (Section 5.1) and real-world data sets (Sections 5.2-5.5), using a PC with Intel-i9 CPU and 32GB memory. To reduce statistical variation, all results are averaged over five repetitions.

5.1 Synthetic Data

We follow the setup in (Song et al., 2017). First, we generate a 3-order tensor (i.e., ) , where , and , denotes the outer product (i.e., ). denotes the ground-truth rank and is set to 5, with all ’s equal to . All elements in ’s, ’s, ’s and ’s are sampled independently from the standard normal distribution. Each element of is then corrupted by noise from to form . A total of random elements are observed from . We use of them for training, and the remaining for validation. Testing is evaluated on the unobserved elements in .

We use the square loss and three nonconvex penalties: capped- (Zhang, 2010a), LSP (Candès et al., 2008) and TNN (Hu et al., 2013). The following methods are compared:

-

•

PA-APG (Yu, 2013), which solves the convex overlapped nuclear norm minimization problem;

- •

- •

- •

All algorithms are implemented in Matlab, with sparse tensor and matrix operations performed via Mex files in C. All hypeprparamters (including the ’s in (14) and hyperparameter in the baselines) are tuned by grid search using the validation set. We early stop training if the relative change of objective in consecutive iterations is smaller than or reaching the maximum of iterations.

5.1.1 Recovery Performance Comparison

In this experiment, we set , where and . Following (Lu et al., 2016b; Yao et al., 2017, 2019b), performance is evaluated by the (i) root-mean-square-error on the unobserved elements: , where is the low-rank tensor recovered, and contains the unobserved elements in ; (ii) CPU time; and (iii) space, which is measured as the memory used by MATLAB when running each algorithm.

Results on RMSE and space are shown in Table 3. We can see that the nonconvex regularizers (capped-, LSP and TNN, with methods GDPAN, LRTC, sNORT and NORT) all yield almost the same RMSE, which is much lower than that of using the convex nuclear norm regularizer in PA-APG. As for the space required, sNORT and NORT take orders of magnitude smaller space than the others. LRTC takes the largest space due to the use of multiple auxiliary and dual variables. Convergence of the optimization objective is shown in Figure 1. As can be seen, NORT is the fastest, followed by sNORT and GDPAN, while LRTC is the slowest. These demonstrate the benefits of avoiding repeated tensor folding/unfolding operations and faster convergence of the proximal average algorithm.

| (sparsity:) | (sparsity:) | ||||

| RMSE | space (MB) | RMSE | space (MB) | ||

| convex | PA-APG | 0.01100.0007 | 600.870.4 | 0.00980.0001 | 4804.5598.2 |

| GDPAN | 0.00100.0001 | 423.111.4 | 0.00060.0001 | 3243.3489.6 | |

| nonconvex | LRTC | 0.00100.0001 | 698.921.5 | 0.00060.0001 | 5870.6514.0 |

| (capped-) | sNORT | 0.00100.0001 | 10.10.1 | 0.00060.0001 | 44.60.3 |

| NORT | 0.00090.0001 | 14.40.1 | 0.00060.0001 | 66.30.6 | |

| GDPAN | 0.00100.0001 | 426.99.7 | 0.00060.0001 | 3009.3376.2 | |

| nonconvex | LRTC | 0.00100.0001 | 714.024.1 | 0.00060.0001 | 5867.7529.1 |

| (LSP) | sNORT | 0.00100.0001 | 10.80.1 | 0.00060.0001 | 44.60.2 |

| NORT | 0.00100.0001 | 14.00.1 | 0.00060.0001 | 62.10.5 | |

| GDPAN | 0.00100.0001 | 427.310.1 | 0.00060.0001 | 3009.2412.2 | |

| nonconvex | LRTC | 0.00100.0001 | 759.024.3 | 0.00060.0001 | 5865.5519.3 |

| (TNN) | sNORT | 0.00100.0001 | 10.20.1 | 0.00060.0001 | 44.70.2 |

| NORT | 0.00100.0001 | 14.40.2 | 0.00060.0001 | 63.10.6 | |

5.1.2 Ranks during Iteration

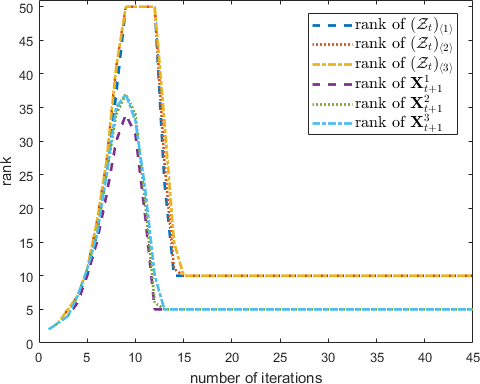

Unlike factorization methods which explicitly constrain the iterate’s rank, in NORT (Algorithm 2), this is only implicitly controlled by the nonconvex regularizer. As shown in Table 2, having a large rank during the iteration may affect the efficiency of NORT. Figure 2 shows the ranks of and at step 11 of Algorithm 2. As can be seen, the ranks of the iterates remain small compared with the tensor size (). Moreover, the ranks of , , and all converge to the true rank (i.e., ) of the ground-truth tensor.

5.1.3 Quality of Critical Points

In this experiment, we empirically validate the statistical performance of critical points analysed in Theorem 16. Note that and are initialized as the zero tensor in Algorithm 2, and is implicitly stored by a summation of factorized matrices in (22). We randomly generate , where elements in ’s and ’s follow . The statistical error is measured as the RMSE between during iterating of NORT (Algorithm 2) and the underlying ground-truth (i.e., ), while the optimization error is measured as the RMSE between iterate and the globally optimal solution of (14) (i.e., ). We use the same experimental setup as in Section 5.1.1. As the exact is not known, it is approximated by the which obtains the lowest training objective value over 20 repetitions.

Figure 3 shows the statistical error versus optimization error obtained by NORT with the (smooth) LSP regularizer and (nonsmooth) capped- regularizer. While both the statistical and optimization errors decrease with more iterations, the statistical error is generally larger than the optimization error since we may not have exact recovery when noise is present. Moreover, the optimization errors for different runs terminate at different values, indicating that NORT indeed converges to different local solutions. However, all these have similar statistical errors, which validates Theorem 16. Finally, while the capped- regularizer does not satisfy Assumption 1 (which is required by Theorem 16), Figure 3(b) still shows a similar pattern as Figure 3(a). This helps explain the good empirical performance obtained by the capped- regularizer (Jiang et al., 2015; Lu et al., 2016b; Yao et al., 2019b).

5.1.4 Effects of Noise Level and Number of Observations

In this section, we show the effects of noise level and number of observed elements on the testing RMSE and training time. We use the same experimental setup as in Section 5.1.1. Since PA-APG is much worse (see Table 3) while LRTC and sNORT are slower than NORT (see Figure 1), we only use GDPAN as comparison baseline.

Figure 4(a) shows the testing RMSE with at different ’s (here, we plot ). As can be seen, the curves show a linear dependency on when is sufficiently large, which agrees with Corollary 17. Figure 4(b) shows the testing RMSE versus at different ’s. As can be seen, there is a linear dependency when the noise level is small, which agrees with Corollary 18. Finally, note that NORT and GDPAN obtain very similar testing RMSEs as both solve the same objective (but with different algorithms).

Figure 5 shows the effects of noise level on the convergence of testing RMSE versus (training) CPU time. As can be seen, testing RMSEs generally terminates at a higher level when the noise level gets larger, and NORT is much faster than GDPAN under all noise level.

Figure 6 shows the effects of numbers of observations on the convergence of testing RMSE versus (training) CPU time. First, we can see that NORT is much faster than GDPAN under various numbers of observations. Then, when gets smaller and the tensor completion problem is more ill-posed, more iterations are needed by both NORT and GDPAN, which makes them take more time to converge.

5.1.5 Effects of Tensor Order and Rank

In this experiment, we use a similar experimental setup as in Section 5.1.1, except that the tensor order is varied from to . As high-order tensors have large memory requirements, while we always set , we set when and when . Figure 7(a) shows the testing RMSE versus . As can be seen, the error grows almost linearly, which agrees with Theorem 16. Moreover, note that at , GDPAN runs out of memory because it needs to maintain dense tensors in each iteration.

Figure 7(b) shows the testing RMSE w.r.t. (where is the ground-truth tensor rank). As can be seen, the error grows linearly w.r.t. , which again agrees with Theorem 16.

Figure 8(a) shows the convergence of testing RMSE versus (training) CPU time at different tensor orders. As can be seen, while both GDPAN and NORT need more time to converge for higher-order tensors, NORT is consistently faster than GDPAN. Figure 8(b) shows the convergence of testing RMSE at different ground-truth ranks. As can be seen, while NORT is still faster than GDPAN at different ground-truth tensor ranks (), the relative speedup gets smaller when gets larger. This is because NORT needs to construct sparse tensors (e.g., Algorithm 1) before using them for multiplications, and empirically, the handling of sparse tensors requires more time on memory addressing as the rank increases (Bader and Kolda, 2007).

5.2 Tensor Completion Applications

| algorithm | model | basic solver | |

|---|---|---|---|

| convex | ADMM (Tomioka et al., 2010) | ADMM | |

| FaLRTC (Liu et al., 2013) | overlapped nuclear norm | accelerated proximal algorithm on dual problem | |

| PA-APG (Yu, 2013) | accelerated PA algorithm | ||

| FFW (Guo et al., 2017) | latent nuclear norm | efficient Frank-Wolfe algorithm | |

| TR-MM (Nimishakavi et al., 2018) | squared latent nuclear norm | Riemannian optimization on dual problem | |

| TenNN (Zhang and Aeron, 2017) | tensor-SVD | ADMM | |

| factorization | RP (Kasai and Mishra, 2016) | Turker decomposition | Riemannian preconditioning |

| TMac (Xu et al., 2013) | multiple matrices factorization | alternative minimization | |

| CP-WOPT (Hong et al., 2020) | CP decomposition | nonlinear conjugate gradient | |

| TMac-TT (Bengua et al., 2017) | tensor-train decomposition | alternative minimization | |

| TRLRF (Yuan et al., 2019) | tensor-ring decomposition | ADMM | |

| non-convex | GDPAN (Zhong and Kwok, 2014) | nonconvex PA algorithm | |

| LRTC (Chen et al., 2020) | nonconvex overlapped nuclear norm regularization | ADMM | |

| NORT (Algorithm 2) | proposed algorithm |

In this section, we use the square loss. As different nonconvex regularizers have similar performance, we will only use LSP in the sequel. The proposed NORT algorithm is compared with:444We used our own implementations of LRTC, PA-APG and GDPAN as their codes are not publicly available.

-

(i)

algorithms for various convex regularizers including: ADMM (Boyd et al., 2011)555https://web.stanford.edu/~boyd/papers/admm/, PA-APG (Yu, 2013), FaLRTC (Liu et al., 2013)666https://github.com/andrewssobral/mctc4bmi/tree/master/algs_tc/LRTC, FFW (Guo et al., 2017)777https://github.com/quanmingyao/FFWTensor, TR-MM (Nimishakavi et al., 2018)888https://github.com/madhavcsa/Low-Rank-Tensor-Completion, and TenNN (Zhang and Aeron, 2017)999http://www.ece.tufts.edu/~shuchin/software.html;

-

(ii)

factorization-based algorithms including: RP (Kasai and Mishra, 2016)101010https://bamdevmishra.in/codes/tensorcompletion/, TMac (Xu et al., 2013)111111http://www.math.ucla.edu/~wotaoyin/papers/tmac_tensor_recovery.html, CP-WOPT (Hong et al., 2020)121212https://www.sandia.gov/~tgkolda/TensorToolbox/, TMac-TT (Bengua et al., 2017)131313https://sites.google.com/site/jbengua/home/projects/efficient-tensor-completion- for-color-image-and-video-recovery-low-rank-tensor-train, and TRLRF (Yuan et al., 2019)141414https://github.com/yuanlonghao/TRLRF;

- (iii)

More details are in Table 4. We do not compare with (i) sNORT, as it has already been shown to be slower than NORT; (ii) iterative hard thresholding (Rauhut et al., 2017), as its code is not publicly available and the more recent TMac-TT solves the same problem; (iii) the method in (Bahadori et al., 2014), as it can only deal with cokriging and forecasting problems.

Unless otherwise specified, performance is evaluated by (i) root-mean-squared-error on the unobserved elements: , where is the low-rank tensor recovered, and contains the unobserved elements in ; and (ii) CPU time.

5.2.1 Color Images

We use the Windows, Tree and Rice images from (Hu et al., 2013), which are resized to (Figure 9). Each pixel is normalized to . We randomly sample 5% of the pixels for training, which are then corrupted by Gaussian noise ; and another 5% clean pixels are used for validation. The remaining unseen clean pixels are used for testing. Hyperparameters of the various methods are tuned by using the validation set.

Table 5 shows the RMSE results. As can be seen, the best convex methods (PA-APG and FaLRTC) are based on the overlapped nuclear norm. This agrees with our motivation to build a nonconvex regularizer based on the overlapped nuclear norm. GDPAN, LRTC and NORT have similar RMSEs, which are lower than those by convex regularization and the factorization approach. Convergence of the testing RMSE is shown in Figure 10. As can be seen, while ADMM solves the same convex model as PA-APG and FaLRTC, it has slower convergence. FFW, RP and TR-MM are very fast but their testing RMSEs are higher than that of NORT. By utilizing the “sparse plus low-rank” structure and adaptive momentum, NORT is more efficient than GDPAN and LRTC.

| dataset | Rice | Tree | Windows | |

| convex | ADMM | 0.06800.0003 | 0.09150.0005 | 0.07090.0004 |

| PA-APG | 0.05830.0016 | 0.04880.0007 | 0.05850.0002 | |

| FaLRTC | 0.05760.0004 | 0.04940.0011 | 0.05670.0005 | |

| FFW | 0.06340.0003 | 0.05990.0005 | 0.07720.0004 | |

| TR-MM | 0.05960.0005 | 0.05150.0011 | 0.06340.0002 | |

| TenNN | 0.06470.0004 | 0.05620.0004 | 0.05860.0003 | |

| factorization | RP | 0.05410.0011 | 0.05750.0010 | 0.03880.0026 |

| TMac | 0.19230.0005 | 0.17500.0006 | 0.13130.0005 | |

| CP-WOPT | 0.09120.0086 | 0.07500.0060 | 0.09640.0102 | |

| TMac-TT | 0.07290.0022 | 0.06650.0147 | 0.10450.0107 | |

| TRLRF | 0.06400.0004 | 0.07800.0048 | 0.05880.0035 | |

| nonconvex | GDPAN | 0.04670.0002 | 0.03940.0006 | 0.03060.0007 |

| LRTC | 0.04680.0001 | 0.03920.0006 | 0.03040.0008 | |

| NORT | 0.04680.0001 | 0.03860.0009 | 0.02970.0007 | |

Finally, Table 6 compares NORT with PA-APG and RP, which are the best convex-regularization-based and factorization-based algorithms, respectively, as observed in Table 5. Table 6 shows the testing RMSEs at different noise levels ’s. As can be seen, the testing RMSEs of all methods increase as increases. NORT has lower RMSEs at all settings. This is because natural images may not be exactly low-rank, and adaptive penalization of the singular values can better preserve the spectrum. A similar observation has also been made for nonconvex regularization on images (Yao et al., 2019b; Lu et al., 2016b). However, when the noise level becomes very high ( with pixel values in ), though NORT is still the best, its testing RMSE is not small.

| (convex) | PA-APG | 0.0149 (35.8%) | 0.0488 (24.6%) | 0.1749 (18.6% ) |

| (factorization) | RP | 0.0139 (26.0%) | 0.0575 (15.6%) | 0.1623 (10.1% ) |

| (nonconvex) | NORT | 0.0110 | 0.0386 | 0.1474 |

5.2.2 Remote Sensing Data

Experiments are performed on three hyper-spectral images (Figure 11): Cabbage (131243249), Scene (131295149) and Female (592409148).151515Cabbage and Scene images are from https://sites.google.com/site/hyperspectralcolorimaging/dataset, while the Female images are downloaded from http://www.imageval.com/scene-database-4-faces-3-meters/. : The third dimension is for the bands of images.

We use the same setup as in Section 5.2.1, and hyperparameters are tuned on the validation set. ADMM, TenNN, GDPAN, LRTC, TMac-TT and TRLRF are slow and so not compared. Results are shown in Table 7. Again, NORT achieves much lower testing RMSE than convex regularization and factorization approach. Figure 12 shows convergence of the testing RMSE. As can be seen, NORT is the fastest.

| Cabbage | Scene | Female | ||

| convex | PA-APG | 0.09130.0006 | 0.19650.0002 | 0.11570.0003 |

| FaLRTC | 0.09090.0002 | 0.19200.0001 | 0.11330.0004 | |

| FFW | 0.09620.0004 | 0.20370.0002 | 0.20960.0006 | |

| TR-MM | 0.09590.0001 | 0.19650.0002 | 0.13970.0006 | |

| factorization | RP | 0.04910.0011 | 0.18040.0005 | 0.06470.0003 |

| TMac | 0.49190.0059 | 0.59700.0029 | 1.98970.0006 | |

| CP-WOPT | 0.18460.0514 | 0.48110.0082 | 0.18680.0013 | |

| nonconvex | NORT | 0.03760.0004 | 0.17140.0012 | 0.05920.0002 |

5.2.3 Social Networks

In this experiment, we consider multi-relational link prediction (Guo et al., 2017) as a tensor completion problem. Experiment is performed on the YouTube data set161616http://leitang.net/data/youtube-data.tar.gz (Lei et al., 2009), which contains 15,088 users and five types of user interactions. Thus, it forms a 15088150885 tensor, with a total of 27,257,790 nonzero elements. Besides the full set, we also experiment with a YouTube subset obtained by randomly selecting 1,000 users (leading to 12,101 observations). We use of the observations for training, another for validation and the rest for testing. Table 8 shows the testing RMSE, and Figure 13 shows the convergence. As can be seen, NORT achieves smaller RMSE and is also much faster.

| subset | full set | ||

|---|---|---|---|

| convex | FaLRTC | 0.6570.060 | — |

| PA-APG | 0.6510.047 | — | |

| FFW | 0.6970.054 | 0.3950.001 | |

| TR-MM | 0.6700.098 | — | |

| factorization | RP | 0.5220.038 | 0.4100.001 |

| TMac | 0.7950.033 | 0.6110.007 | |

| CP-WOPT | 0.7850.040 | — | |

| nonconvex | NORT | 0.4820.030 | 0.3700.001 |

5.3 Link Prediction in Knowledge Graph

Knowledge Graph (KG) (Nickel et al., 2015; Toutanova et al., 2015) is an active research topic in data mining and machine learning. Let be the entity set and be the relation set. In a KG, nodes are the entities, while edges are relations representing the triplets , where is the head entity, is the tail entity, and is the relation between and .

KGs have many downstream applications, such as link prediction and triplet classification. It is common to store KGs as tensors, and solve the KG learning tasks with tensor methods (Lacroix et al., 2018; Balazevic et al., 2019). Take link prediction as an example. The KG can be seen as a 3-order incomplete tensor , where and . when entities and have the relation , and otherwise. Let be a mask tensor denoting the observed values in , i.e., if is observed and otherwise. The task is to predict elements in which are not observed. Since is binary, it is common to use the log loss as in (14). The objective then becomes:

| (47) |

In step 9 of Algorithm 2, it is easy to see that

Experiments are performed on two benchmark data sets: WN18RR171717https://github.com/TimDettmers/ConvE (Dettmers et al., 2018) and FB15k-237181818https://www.microsoft.com/en-us/download/details.aspx?id=52312 (Toutanova et al., 2015), which are subsets of WN18 and FB15k (Bordes et al., 2013), respectively. WN18 is a subset of WordNet (Miller, 1995), and FB15k is a subset of the Freebase database (Bollacker et al., 2008). To avoid test leakage, WN18RR and FB15k-237 do not contain near-duplicate and inverse-duplicate relations. Hence, link prediction on WN18RR and FB15k-237 is harder but more recommended than that on WN18 and FB15k (Dettmers et al., 2018). To form the entity set , we keep the top 500 (head and tail) entities that appear most frequently in the relations (’s). Relations that do not link to any of these 500 entities are removed, and those remained form the relation set . Following the public splits on entities in and relations in (Han et al., 2018), we split the observed triplets in into a training set , validation set and testing set . For each observed triplet , we sample a negative triplet from . We avoid duplicate negative triplets during sampling. We then represent the KG’s by tensors ’s of size for WN18RR, and for FB15k-237 with corresponding mask tensors ’s.

Following (Bordes et al., 2013; Dettmers et al., 2018), performance is evaluated on the testing triplets in by the following metrics: (i) mean reciprocal ranking: , where is the ranking of score among with in descending order; (ii) Hits, where is the indicator function which returns 1 if the constraint is satisfied and 0 otherwise; and (iii) Hits. For these three metrics, the higher the better.

The aforementioned algorithms are designed for the square loss, but not for the log loss in (47). We adapt the gradient-based algorithms including PA-APG, ADMM and CP-WOPT, as we only need to change the gradient calculation for (47). As a further baseline, we implement the classic Tucker decomposition (Tucker, 1966; Kolda and Bader, 2009) to optimize (47). While RP (Kasai and Mishra, 2016) is the state-of-the-art Tucker-type algorithm, it uses Riemannian preconditioning and cannot be easily modified to handle nonsmooth loss.

Results on WN18RR and FB15k-237 are shown in Tables 9 and 10, respectively. As can be seen, NORT again obtains the best ranking results. Figure 14 shows convergence of MRR with CPU time, and NORT is about two orders of magnitude faster than the other methods.

| MRR | Hits@1 | Hits@3 | ||

| convex | ADMM | 0.3620.029 | 0.1560.024 | 0.4220.038 |

| PA-APG | 0.3990.017 | 0.2030.023 | 0.5000.038 | |

| factorization | Tucker | 0.4390.013 | 0.3090.016 | 0.4380.026 |

| CP-WOPT | 0.4170.018 | 0.2660.027 | 0.4530.019 | |

| nonconvex | NORT | 0.5230.022 | 0.3750.033 | 0.5780.024 |

| MRR | Hits@1 | Hits@3 | ||

| convex | ADMM | 0.4660.006 | 0.4110.006 | 0.4520.011 |

| PA-APG | 0.5140.013 | 0.4630.015 | 0.5900.016 | |

| factorization | Tucker | 0.4710.018 | 0.3550.017 | 0.4650.015 |

| CP-WOPT | 0.4200.021 | 0.3730.015 | 0.4880.014 | |

| nonconvex | NORT | 0.6770.007 | 0.6090.007 | 0.6980.011 |

5.4 Robust Tensor Completion

In this section, we apply the proposed method on robust video tensor completion. Three videos (Eagle191919http://youtu.be/ufnf_q_3Ofg, Friends202020http://youtu.be/xmLZsEfXEgE and Logo212121http://youtu.be/L5HQoFIaT4I) from (Indyk et al., 2019) are used. Example frames are shown in Figure 15. For each video, consecutive frames are downloaded from Youtube, and the pixel values are normalized to . Each video can then be represented as a fourth-order tensor with size . Each element of is normalized to . This clean tensor is corrupted by a noise tensor to form . is a sparse random tensor with approximately 1% nonzero elements. Each entry is first drawn uniformly from the interval , and then multiplied by times the maximum value of . Hyperparameters are chosen based on performance on the first 100 noisy frames. Denoising performance is measured by the RMSE between the clean tensor and reconstructed tensor on the last 100 frames.

For the robust tensor completion, we take RTDGC (Gu et al., 2014) as the baseline, which adopts the loss and overlapped nuclear norm in (40) (i.e., and is the nuclear norm). As this is non-smooth and non-differentiable, RTDGC uses ADMM (Boyd et al., 2011) for the optimization, which handles the robust loss and low-rank regularizer separately. As discussed in Section 4.1, we use the smoothing NORT (Algorithm 3, with ) to optimize (42), the smoothed version of (40). Table 11 shows the RMSE results. As can be seen, NORT obtains better denoising performance than RTDGC. This again validates the efficacy of nonconvex low-rank learning. Figure 16 shows convergence of the testing RMSE. As shown, NORT leads to a lower RMSE and converges much faster as folding/unfolding are avoided.

| Eagle | Friends | Logo | ||

|---|---|---|---|---|

| convex | RTDGC | 0.1220.007 | 0.1280.005 | 0.1120.008 |

| nonconvex | NORT | 0.0900.003 | 0.0750.002 | 0.0880.004 |

5.5 Spatial-temporal Data

In this experiment, we predict climate observations for locations that do not have any records. This is formulated as a regularized tensor completion problem in (43). We use the square loss with a graph Laplacian regularizer constructed as in (43).

We use the CCDS and USHCN data sets from (Bahadori et al., 2014). CCDS222222https://viterbi-web.usc.edu/~liu32/data/NA-1990-2002-Monthly.csv contains monthly observations of 17 variables (such as carbon dioxide and temperature) in 125 stations from January 1990 to December 2001. USHCN232323http://www.ncdc.noaa.gov/oa/climate/research/ushcn contains monthly observations of 4 variables (minimum, maximum, average temperature and total precipitation) in 1218 stations from from January 1919 to November 2019. As discussed in Section 4.2, these records are collectively represented by a 3-order tensor , where is the number of locations, is the number of recorded time stamps, and is the number of variables corresponding to climate observations. Consequently, CCDS is represented as a tensor and USHCN is represented as a tensor. The affinity matrix is denoted , with being the similarity between locations and ( is the Haversine distance between and ). Following (Bahadori et al., 2014), we normalize the data to zero mean and unit variance, then randomly sample 10% of the locations for training, another 10% for validation, and the rest for testing.

Algorithms FaLRTC, FFW, TR-MM, RP and TMac cannot be directly used for this graph Laplacian regularized tensor completion problem, while PA-APG, ADMM, Tucker and CP-WOPT can be adapted by modifying the gradient calculation. Hence we adapt and implement PA-APG, ADMM, Tucker and CP-WOPT as baselines in this section. In addition, we compare with a greedy algorithm (denoted “Greedy”)242424This method is denoted “ORTHOGONAL” in (Bahadori et al., 2014) and obtains the best results there. from (Bahadori et al., 2014), which successively adds a rank-1 matrix to approximate the mode- unfolding with the rank constraint. For the factorization-based algorithms Tucker and CP-WOPT, the graph Laplacian regularizer takes the corresponding factor matrix rather than as the input. Specifically, recall that Tucker factorizes into , where , , , and ’s are hyperparameters. When and is superdiagonal, this reduces to the CP-WOPT decomposition. The graph Laplacian regularizer is then constructed as to leverage location proximity. As an additional baseline, we also experiment with a NORT variant that does not use the Laplacian regularizer (denoted “NORT-no-Lap”).

| CCDS | USHCN | ||

|---|---|---|---|

| convex | ADMM | 0.8900.016 | 0.6910.005 |

| PA-APG | 0.8660.014 | 0.6800.009 | |

| factorization | Tucker | 0.8560.026 | 0.6470.006 |

| CP-WOPT | 0.8870.018 | 0.6880.009 | |

| rank constraint | Greedy | 0.8710.008 | 0.6580.012 |

| nonconvex | NORT-no-Lap | 0.9970.001 | 1.3910.001 |

| NORT | 0.7930.002 | 0.5830.012 | |

Table 12 shows the RMSE results. Again, NORT obtains the lowest testing RMSEs. Moreover, when the Laplacian regularizer is not used, the testing RMSE is much higher, demonstrating that the missing slices cannot be reliably completed. Figure 17 shows the convergence. As can be seen, NORT is orders of magnitude faster than the other algorithms. The gaps on the performance and speed between NORT and the other baselines are more obvious on the larger USHCN data set. Further, note from Figures 17(a) and 17(b) that though NORT-no-Lap has converged, it cannot decrease the testing RMSE during learning (Figures 17(c) and 17(d)). This validates the efficacy of the graph Laplacian regularizer.

6 Conclusion

In this paper, we propose a low-rank tensor completion model with nonconvex regularization. An efficient nonconvex proximal average algorithm is developed, which maintains the “sparse plus low-rank” structure throughout the iterations and incorporates adaptive momentum. Convergence to critical points is guaranteed, and the obtained critical points can have small statistical errors. The algorithm is also extended for nonsmooth losses and additional regularization, demonstrating broad applicability of the proposed algorithm. Experiments on a variety of synthetic and real data sets are performed. Results show that the proposed algorithm is more efficient and more accurate than existing state-of-the-art.

In the future, we will extend the proposed algorithm to simultaneous completion of multiple tensors, e.g., collaborative tensor completion (Zheng et al., 2013) and coupled tensor completion (Wimalawarne et al., 2018). Besides, it is also interesting to study how the proposed algorithm can be efficiently parallelized on GPUs and distributed computing environments (Phipps and Kolda, 2019).

Acknowledgement

BH was supported by the RGC Early Career Scheme No.22200720 and NSFC Young Scientists Fund No. 62006202.

Appendix

Appendix A Comparison with Incoherence Condition

The matrix incoherence condition (Candès and Recht, 2009; Candès et al., 2011; Negahban and Wainwright, 2012) is in form of the singular value decomposition , where (resp. ) contains the left (resp. right) singular vectors and is the diagonal matrix containing singular values. The purpose of this condition is to enforce that the left and right singular vectors should not be aligned with the standard basis (i.e., vector ’s with the th dimension being and others being ). Typically, this condition is stated as

| (48) |

for some constant . Note that (48) does not depend on the singular values of . However, this condition can be restrictive in realistic settings, where the underlying matrix is contaminated by noise. In this case, the observed matrix can have small singular values. Therefore, we need to impose conditions related to the singular values, and (32) shows such a dependency. An example matrix satisfying the matrix RSC condition but not the incoherence condition is in Section 3.4.2 of (Negahban and Wainwright, 2012). As a result, the RSC condition, which involves singular values, is less restrictive than the incoherence condition, and can better describe “spikiness”.

Appendix B Proofs

B.1 Proposition 4

Proof For simplicity, we consider the case where (resp. ) has only one single column (resp. ). We need to fold along with the th mode and then unfold it along its th mode. Let us consider the structure of , we can express it as

where with each . When unfolding with the th mode, the unfolding matrix is

| (49) |

Thus,

| (50) | |||||

Similarly, let , where each . From (49), we have

| (51) | |||||

When (resp. ) has columns,

combining with the fact that

with (50) and (51),

we obtain (26) and (27).

B.2 Proposition 6

Proof Define , then

| (52) |

Next, we introduce an extra parameter as , and express (52) as

| (53) |

We transform the above equation as

where is defined as

| (54) | ||||

Thus, there exists such that

.

B.3 Proposition 7

Let . Before proving Proposition 7, we first extend Proposition 2 in (Zhong and Kwok, 2014) in the following auxiliary Lemma.

B.3.1 Auxiliary Lemma

Lemma 21

.

Proof From the definition of in (54), if , we have

Thus, . Next, we prove the “” part in the Lemma. Note that

| (55) |

Since is -Lipschitz continuous, let , we have

| (56) |

Next, we have

| (57) | ||||

| (58) | ||||

| (59) |

Note that (57) comes from the fact that

then (58) is from the definition of in Proposition 6,

and (59) is from (56).

B.3.2 Proof of Proposition 7

B.4 Proposition 8

Proof The proof of this proposition can also be found in (Zhong and Kwok, 2014), we add one here for the completeness. Recall that

Let . Thus,

When ,

we have

.

Thus,

is a critical point of .

B.5 Theorem 9

First, we introduce the following Lemmas, which are basic properties for the proximal step.

Lemma 22

(Parikh and Boyd, 2013) Let and . Then, .

Lemma 23

(Parikh and Boyd, 2013) If , then is a critical point of .

Lemma 24

(Hare and Sagastizábal, 2009) The proximal map is continuous.

Proof (of Theorem 9) Recall that . From Lemma 22,

-

•

If step 7 is performed, we have

(60) -

•

If step 5 is performed,

(61)

Combining (60) and (61), we have

| (62) |

where and are a partition of such that when step 5 is performed, and when step 7 is performed. As is bounded from below and , taking in (62), we have

where is a positive constant. Thus, the sequence is bounded, and it must have limit points. Besides, one of the following three cases must hold.

- 1.

-

2.

is infinite, is finite. Let be a limit point of , and be a subsequence that converges to . In this case, we have

Thus, , and is a critical point of from Lemma 23.

-

3.

Both and are infinite. From the above cases, we can see that either or is infinite, and limit points are also the critical points of .

Thus, all limit points of are critical points of .

B.6 Corollary 10

This corollary can be easily derived from the proof of Theorem 9.

B.7 Theorem 13

Lemma 25

For iterations in Algorithm 2, we have .

Proof Since is generated from the proximal step, i.e., , from its optimality condition, we have

Let . We have

Thus,

.

Proof (of Theorem 13). From Theorem 9, we have . Then, from Lemma 25, we have

Thus, for any , and where is a sufficiently large positive integer, we have

Then, the uniformized KL property implies for all ,

| (63) |

Moreover, from Lemma 22, we have

| (64) |

Let , we have

| (65) |

Combine (63), (64) and (65), we have

| (66) |

Since

, then ,

(66) becomes

,

where .

Finally,

it is shown in (Bolte et al., 2014; Li and Lin, 2015; Li et al., 2017) that

the sequence satisfying the above inequality, convergence to zero with

different rates stated in the Theorem.

B.8 Lemma 15

First, we introduce the following Lemma.

Lemma 26 (Theorem 1 in (Negahban and Wainwright, 2012))