Low-rank Bayesian matrix completion via geodesic Hamiltonian Monte Carlo on Stiefel manifolds

Abstract.

We present a new sampling-based approach for enabling efficient computation of low-rank Bayesian matrix completion and quantifying the associated uncertainty. Firstly, we design a new prior model based on the singular-value-decomposition (SVD) parametrization of low-rank matrices. Our prior is analogous to the seminal nuclear-norm regularization used in non-Bayesian setting and enforces orthogonality in the factor matrices by constraining them to Stiefel manifolds. Then, we design a geodesic Hamiltonian Monte Carlo (-within-Gibbs) algorithm for generating posterior samples of the SVD factor matrices. We demonstrate that our approach resolves the sampling difficulties encountered by standard Gibbs samplers for the common two-matrix factorization used in matrix completion. More importantly, the geodesic Hamiltonian sampler allows for sampling in cases with more general likelihoods than the typical Gaussian likelihood and Gaussian prior assumptions adopted in most of the existing Bayesian matrix completion literature. We demonstrate an applications of our approach to fit the categorical data of a mice protein dataset and the MovieLens recommendation problem. Numerical examples demonstrate superior sampling performance, including better mixing and faster convergence to a stationary distribution. Moreover, they demonstrate improved accuracy on the two real-world benchmark problems we considered.

Keywords. Matrix Completion, Markov Chain Monte Carlo, Low-rank matrices

1. Introduction

We consider the problem of Bayesian low rank matrix completion. The matrix completion problem considers reconstructing a matrix through indirect and noisy evaluations of a subset of its elements. A low rank matrix completion seeks to reduce the ill-posed nature of this problem by assuming that the underlying matrix has an approximately low rank factorization. A low rank factorization of a matrix matrix is defined by the couple , where and with and

| (1) |

Recall that the best rank approximation is provided by the singular value decomposition

| (2) |

where and are the first columns of the left and right singular vectors of ; is an ordered set of the largest singular values; and where we can identify and

Bayesian low-rank matrix completion

We consider the Bayesian setting aiming to identify distributions over the factors and to account for uncertainty stemming from an ability to uniquely identify the correct matrix. Data consists of pairs of element indices and values where denotes the rows and denotes the columns . We use the index set to denote the set of indices corresponding to the observed data points and let be its cardinality. The full data vector is denoted by . We consider the following likelihood models: a Gaussian likelihood

| (3) |

where is an observation function, e.g., identity or a soft-plus function to ensure positivity, and a Binomial likelihood

| (4) |

where is the number of possible trials and denotes the success probability. Given the low rank factorization of Equation (1), here is obtained by multiplying the th row of with the th row of . The Binomial likelihood is applied in categorical settings where the categories might have a natural ordering. However, we emphasize that our proposed sampling methodology is more broadly applicable to other likelihood formulations.

Challenge

One challenge to Bayesian matrix completion specifically, is the non-uniqueness of the low-rank representation. This challenge is pictorally represented in Figure 1, which demonstrates the geometry of posteriors that are prevalent in the problem. It is typically quite challenging to design a sampling scheme that is able to sample such posteriors, and even variational approaches are challenging to tune here. This challenge arises due to the following symmetry in the problem. A low-rank factorization can be obtained by an arbitrary linear transformation of the factors via

| (5) |

for any invertible . The precise ramifications of this symmetry in the Gaussian likelihood case was extensively documented in [7].

Contribution

We develop an approach to address these issues in two ways: (i) we alter the factorization format that constrains the factor matrices on Stiefel manifolds; and (ii) we develop a geodesic Markov Chain Monte Carlo approach that specifically target sampling distributions that lie on the manifold. Specifically, we propose to parameterize the low-rank matrix in the SVD format

| (6) |

where we seek to learn semi-orthogonal matrices and and a positive diagonal matrix . Within a Bayesian sampling context, we develop a geodesic Hamiltonian Monte Carlo (HMC) [4] within Gibbs algorithm to enable efficient sampling of the semi-orthogonal factor matrices.

This approach is closely related to the SVD models used in optimization-based methods [5] that solve a nuclear norm minimization problem

| (7) |

where is an operator that subselects the observed elements of and flattens them into a vector of equal size to . Moreover, an SVD parameterization is commonly used in this optimization procedure. From this perspective, the optimization problem can be seen as equivalently seeking a MAP estimate with a uniform prior over semi-orthogonal matrices. Prior work in Bayesian matrix completion has not used this equivalent prior to our knowledge. As a result, we provide the first Bayesian analogue of this extremely popular low-rank matrix characterization.

Related work

A large amount of work has addressed the low-rank Bayesian matrix completion problem in the variational setting. For example, [18] and [25] each apply variational Bayes approximations of this inference model to analyze the Netflix prize challenge [3] to great success. Further, [21] and [22] develop a theoretical framework to analyze the variational Bayes low-rank matrix factorization. One of the issues with these existing approaches is the mean field-type approxiamtion that assumes independence of the factors. Sampling-based approaches target the full posterior and, while more expensive, have shown that this more complete characterization can outer-perform variational inference performance [27]. This approach was later extended to other settings [6, 1], and have also been extended to the case of recovering low-rank tensor factorizations [24, 28, 29].

The majority of these algorithms leverage the bilinear nature of the low-rank factorization to develop Gibbs samplers via conditional distributions for each factor. In this setting each factor is endowed with an independent Gaussian prior, and the variance of this prior is endowed with a hyperprior. This approach is mainly used to try to circumvent the sampling difficulties previously described, but comes at the cost of increased uncertainty. This approach uses a Gaussian log likelihood function

| (8) |

The prior on the factors assigns i.i.d. zero mean Gaussians for entries of the matrices and and assumes the prior variance is unknown. This leads to a hierarchical prior, conditioned on the variance

where is some unknown hyperparameter that controls the prior variance. We endow with a Gamma prior. Finally, Gibbs sampling is used to estimate both the factors and , the prior variance and the likeihood standard deviation .

Outline

This paper is structured as follows. Section 2 provides background on sampling on the Stiefel manifold and our proposed HMC within Gibbs algorithm. Section 3 provides numerical results that compare our proposed approach to Bayesian approach described above. The comparisons are made both on synthetic examples, to gain intuition, and on two real-world data sets that consider positivity constraints on the matrix elements and categorical valued matrices, respectively.

2. Sampling on low-rank manifolds

In this section we first describe the geodesic Monte Carlo and then describe our Gibbs sampling procedure. The factor matrices we learn lie on the Stiefel manifold. Traditional Markov Chain Monte Carlo approaches like Hamiltonian Monte Carlo or Riemannian-Manifold Hamiltonian Monte Carlo are not directly applicable to distributions for parameters that are on such manifolds embedded in the Euclidean space. Instead so-called geodesic Monte Carlo approaches propose modifications of HMC and related methods to directly apply to distributions in the embedded space [4].

2.1. Operations on the Stiefel manifold

We begin with description of operations on the Stiefel manifold. Let denote an dimensional manifold that is embedded in a higher dimensional Euclidean space At every point there exists bijective mapping denoting a coordinate system on the manifold. The union of all possible coordinate systems across the manifold is called an atlas for a manifold. For our low-rank reconstruction purposes, we consider the Stiefel manifold of semi-orthogonal matrices

The main challenge is describing motion along the manifold since standard Euclidean vector-space addition does not apply. Instead, one must follow curves on the manifolds between points. A curve on the manifold over a time interval starting at some point is defined by , with . At each point , the tangent vector is an equivalence class of these curves111Two curves and are equivalent if and , and the set of tangent vectors form a tangent vector space [2].

These tangent vectors are time derivatives of the curves Every point on the Stiefel manifold statisfies , so differentiation in time yields Therefore the tangent space is

The geodesic Monte Carlo algorithm makes use of the space normal to the manifold. This normal space is the orthogonal complement to , and therefore a definition of an inner product is needed. For the Stiefel manifold this inner product is defined through the trace One can then verify that for any on the manifold, the orthogonal projection of onto the normal space is

| (9) |

and the orthogonal projection of onto the tangent space becomes . Finally we will require geodesics, the curve of shortest length, between two points on the manifold. Since the Steifel manifold is embedded in a Euclidean space, the geodesic can be defined by the fact that the acceleration vector at each point is normal to the manifold as long as the curve is traced with uniform speed. For Stiefel manifolds we are able to obtain the following closed form expression [11]

| (10) |

where and

2.2. Sampling on the Stiefel manifold

While the Lebesgue measure is the reference measure for probability distributions in the Euclidean space, the Hausdorff measure can be used as the reference measure on manifolds [8]. It will be useful to move between an -dimensional Hausdorff measure and the -dimensional Lebesgue measure . In the context of Riemannian manifolds, such as the Stiefel manifold, the relation between these measures is provided by the following formula [12]

| (11) |

Where is the Riemannian metric provided by the trace. Using the metric and the target density with respect to the Lebesgue measure in the coordinate system provided by , we can form the non-separable Hamiltonian function [13]

| (12) |

where is the target density with respect to the Lebesgue measure in the coordinate system provided by , and is an auxiliary momentum variable following . Note that defines a unnormalized joint probability density over the pair , in which the marginal density over recovers the target density. As shown by the landmark paper of [10], the Hamiltonian system

implicitly defines a time-reversible and one-to-one mapping from the state at time , , to the state at time , . This mapping leaves the target distribution invariant. Thus, discretizing the Hamiltonian system using numerical integrators naturally leads MCMC proposal distributions that in general can reduce sample correlations in the resulting Markov chain. Moreover, using time-reversible and symplectic numerical integrators often yields simplified and more dimension-scalable MCMC proposals. See [23] and references therein.

To define HMC on Riemannian manifold [13] , we can use the relation (11) to write the Hamiltonian function (12) with respect to the Hausdorff measure as The corresponding Hamiltonian system evolves the pair according to

| (13) |

A key innovation of [4] is the introduction of a time-reversible and symplectic integrator that integrates this non-separable Hamiltonian system via splitting, where and The corresponding systems are

| (14) | ||||||

| (15) |

respectively. The integrator first solves (14) for a timestep of , then solves (15) for a timestep of , and finally propagates (14) again for a time-step of . The solution of the first system (14) is given simply by , and the solution to the second system(15) is given by the geodesic flow to enforce it on the manifold. If we denote as the embedded parameter and momentum, rather than , the final HMC algorithm, made specific to the case of the Stiefel manifold, is provided in Algorithm 1. Note that the Metropolis-Hastings rejection rule is applied at the end of the time integration in Algorithm 1 to endure the HMC generates Markov chains that have the target distribution as the correct invariant distribution.

2.3. Application to low-rank matrix completion

We utilize geodesic HMC within Gibbs to solve the matrix completion problem. The pseudocode is provided in Algorithm 2. Here we see that the HMC on the Stiefel manifold is used to sample the left and right singular vectors, while a standard HMC approach is used for the singular values. In all cases we use the No-U-Turn-Sampler variant of HMC [17] to adaptively tune the number of steps.

Prior

For all the SVD based matrix-completion problems in this work, we consider independent prior distributions for the matrices and . The priors of the left and right factors are defined as uniform in the Hausdorff measure, whereas the prior of the singular values are defined as the independent exponential distributions in a Euclidean space. This way, we have the prior

| (16) |

for some positive rate parameter . The exponential prior can be interpreted as the exponential of the negative nuclear norm of the underlying matrix , i.e., , which is a convex envelope of used in the optimization literature to promote low-rankness in matrix estimation problems [5, 20, 15]. Thus, the exponential prior can be viewed as a rank revealing prior. We provide further justifications and numerical illustrations in Section C of the supplementary material.

In the rest of this section, we describe three likelihood models used for the numerical experiments: (1) real-valued data, (2) positive real-valued data for the mice protein expression example, and (3) positive integer-valued rating data for the movie recommendation.

Model SVD:

In this model, we treat the observed data as real-valued, and consider the Gaussian likelihood arising from the model

| (17) |

where The log likelihood becomes

Model S-SVD:

In this model, we treat the observed data as positive real-valued, and consider the Gaussian likelihood arising from the model

| (18) |

where The log likelihood becomes

For Model SVD and Model S-SVD, the maximum a-posteriori (MAP) of the posterior becomes equivalent to a penalized version of the nuclear norm minimization problem (7). This equivalence is obtained by taking the log of the prior (16) and adding either of the log likelihoods described above.

Model B-SVD:

In this model, we treat the observed data as positive integer-valued, and consider the Binomial likelihood,

| (19) |

This yields the log likelihood

3. Numerical examples

We now demonstrate the efficacy of the proposed SVD-based models and the geodesic HMC sampling methods on three examples, including partially observed synthetic matrices, the mice protein expression data set [16], and the MovieLens data set [14].

Problem setups

Example 1 includes the following synthetic test cases that aim to benchmark the proposed methods.

-

Case #1:

We construct a true matrix , where entries of are drawn from i.i.d. standard Gaussian, and then partially observed data are given by randomly selecting a subset of matrix indices.

-

Case #2:

We construct a true positive matrix , where entries of are drawn from i.i.d. and entries of are drawn from i.i.d. . Then partially observed data are given by randomly selecting a subset of matrix indices.

-

Case #3:

We construct a true matrix , where entries of are drawn from i.i.d. standard Gaussian. Then partially observed data are drawn from the Binomial model, i.e., , where the indices are randomly chosen.

For each of the cases, we use matrices with rank . We conduct two sets of experiments that respectively select and of matrix entries as training data. The cross validation data sets are chosen as of matrix entries that do not overlap with the training data sets.

Example 2 is the mice protein expression data set, which consists of protein expressions, measured in terms of nuclear fractions, from mice specimens. The matrix contains positive real-valued entries. This data set is openly available at the UCI Machine Learning Repository [9]. We use rank in the training. Here we also conduct two sets of experiments that respectively select and of matrix entries at random as training data. The cross validation data sets are chosen as of matrix entries at random that do not overlap with the training data sets.

Example 3 uses the ml-latest-small of the MovieLens data set, which contains non-negative ratings across movies rated by 610 users. This gives a matrix with partially observed rating data222The data set is openly available at https://files.grouplens.org/datasets/movielens/. For the rating data, each of the observed data entries is a categorical variable, which takes value from a finite sequence in the range with increment , i.e., . To apply the Binomial likelihood, we scale the rating data by a factor of two, so they become integer-valued in the ranging with increment . We use rank in the training. In this example, we randomly select of the data in the data set as the training data, and use the rest of as cross validation data.

Here Case #1 of the synthetic example aims to benchmark the expression power and the sampling efficiency of the SVD model in comparison with the model. For this reason, the ground truth is generated from the prior distribution of the model. The synthetic cases 2 and 3 mimic the behavior of the mice protein expression data set [16] and the MovieLens data set [14], respectively.

| MCMC mixing | quantiles of MADs | ||||

| Gibbs | HMC | SVD | -SVD | ||

| Case #1 | ✗ | ✓ | - | ||

| Case #2 | ✗ | ✓ | |||

| Case #3 | ✗ | ✓ | |||

| Mice | ✗ | ✓ | |||

| MovieLens | ✗ | ✓ | |||

A summary of the sampling performance and the prediction accuracy of various methods is provided in Table 1. The table specifies if the sampling method converges to the posterior distribution within 10000 iterations including 2000 iterations of burnin, and also reports the estimated median absolute deviations (MAD) on the cross validation data sets and the standard deviations of the MADs. Since both the HMC and the Gibbs sampling algorithms discussed here are asymptotically converging methods when they are simulated for an infinite number of iterations [19, 26], here we only determine the converging behavior with finite iterations using sample traces. For Markov chains with reasonable mixing, we then compute the autocorrelation time to benchmark the rate of convergence [19]. More details about the sampling performance and the prediction accuracy of various models applied to the abovementioned examples are provided in the rest of this section.

Sampling performance

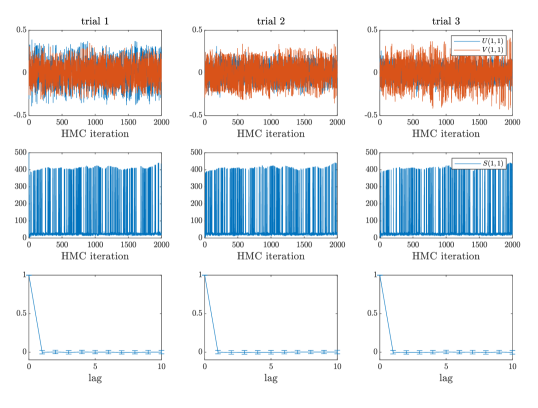

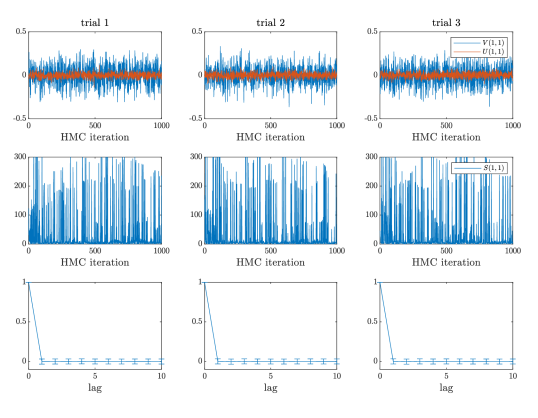

Overall, the Gibbs sampling on the model demonstrates a poor mixing behavior in our examples. The Markov chains often do not converge to the stationary distribution after many iterations. In comparison, all Markov chains generated by the HMC on the SVD-based model demonstrate a superior mixing property. For brevity, we only report the mixing of Markov chains in Case #1 of the synthetic example here, in which the ground truth is generated by the model. As shown in Figure 2, after sweeps of Gibbs updates on the parameters , the traces of the Markov chain cannot reach the stationary distribution. In comparison, the traces of the geodesic HMC can reach the stationary distribution very quickly. To further demonstrate the sampling efficiency of HMC, we reported the estimated average autocorrelation time over all parameters in Figure 2 (right), which shows that the autocorrelation of the Markov chains generated by HMC decreases to zero after one iteration. We observe similar mixing behavior in all other test cases in the synthetic example and also in examples 2 and 3. See the appendices for the mixing diagnostics of the rest of examples.

We observe that each iteration of the geodesic HMC requires more computational effort compared to one sweep of the Gibbs. For Case #1 of the synthetic example, the CPU time per iteration of the geodesic HMC is about times of that of the Gibbs. For positive matrices (Case #2 and the mice protein data), the CPU time per iteration of the geodesic HMC is about times of that of the Gibbs. For the rating data (Case #3 and the MovieLens), the CPU time per iteration of the geodesic HMC is about times of that of the Gibbs. However, the rather high computational cost of the geodesic HMC clearly yields a superior sampling performance.

Prediction accuracy

For all the examples, we report the histograms of the posterior MADs (compared to the cross validation data sets) generated by various models. For all the synthetic test cases, the uncertainty intervals on the histogram are computed using 30 random experiments. For examples 2 and 3, the uncertainty intervals are computed using 10 random experiments.

Figure 3 shows the MADs for Case #1 of the synthetic example. For both data sampling rates, the SVD model and the model produce comparable MADs in this case.

Figure 4 shows the MADs for Case #2 of the synthetic example and the mice protein data set. Both examples here involve matrices with positive entries. We apply the S-SVD model (which preserves the positivity), the SVD model and the model. For Case #2, Figure 4 (a) shows that with a high data sampling rate (), all three models produce comparable MADs. However, with a low data sampling rate (), the S-SVD model and the SVD model can still generate similar results compared to the data sampling rate case, whereas the MADs of the model exhibits some heavy-tail behavior. For the mice protein data set, Figure 4 (b) shows that the S-SVD model outperforms the SVD model and the model in the low data sampling rate case, while all three models have similar performance in the high data sampling rate case (note that the S-SVD model has the smallest uncertainty interval). Overall, the S-SVD model demonstrates a clear advantage.

Figure 5 shows the MADs for Case #3 of the synthetic example and the MovieLens data set. Both examples here involve rating data. We apply the B-SVD model (which uses the Binomial likelihood), the SVD model and the model. For Case #3, Figure 5 (a) shows that for both data sampling rates, the two SVD-based models generate comparable results and are both more accurate compared to that of the model.

For the MovieLens case, Figure 5 (b) shows that the S-SVD model outperforms the SVD model and the model in the low data sampling rate case. All three models have similar performance in the high data sampling rate case. Furthermore, the S-SVD model has the smallest uncertainty interval. Overall, the S-SVD model demonstrates a clear advantage in the prediction accuracy.

4. Acknowledgments

T. Cui acknowledges support from the Australian Research Council Discovery Project DP210103092. A. Gorodetsky acknowledges support from the Department of Energy Office of Scientific Research, ASCR under grant DE-SC0020364.

Appendix A Vectorization of conditional posteriors of SVD-based models

In this section we derive the conditional posteriors of , , and in the singular value decomposition model with a Gaussian likelihood. The same procedure can be undertaken for the posteriors associated with all the proposed likelihood models.

As a starting point, we let to denote the precision parameter of the Gaussian observation noise and assign it a conjugate Gamma prior with . We have the full posterior distribution

| (20) |

where and are uniform prior densities in the Hausdorff measure and is some prior density for the singular values. Given , the conditional posterior distribution for is

| (21) |

where . In the implementation it is easier to work directly with rather than repeated indexing into its rows. To this end, it is convenient to reorder according to the row indices so that we have

| (22) |

where is the number of observed entries in the -th row of the data matrix so that ; is a matrix consisting of vertically concatenated blocks of corresponding to the columns of the observed entries in row ; is each row of ; and is a block-diagonal matrix. Together with the uniform prior in the Hausdorff measure, this results in the following conditional posterior

| (23) |

This density does not correspond to that of a Gaussian distribution over Euclidean space because the matrix must satisfy orthogonality properties. Thus there is no closed-form approach for sampling from this posterior, and some MCMC method is needed. The conditional posterior for can be obtained in a similar way.

Next we consider the conditional posterior of the singular values lying along the diagonal of the matrix . We can assign any prior with positive support for the diagonal elements of this matrix. In this paper we choose an exponential prior , which draws an analogy with the nuclear norm regularization. Then, Equation (20) leads to the conditional posterior

| (24) |

where is the hyperparameters of the exponential distribution. To further simplify the computation, we can also express the above conditional posterior in the form of

| (25) |

where the vector is the same as the one defined in (22); maps the diagonal entries of the matrix into a vector in consisting of all the singular values; and the matrix is given as

where denotes the Hadamard product.

The computational complexity of evaluating the conditional densities (23) and (25) and their gradient are governed by the cost of matrix-vector-products with and , which are both .

Finally, the conditional posterior of the precision parameter takes the form

| (26) |

which is another Gamma distribution that can be sampled directly.

Appendix B Gibbs model

In this section we provide the pseudocode of the Gibbs sampler used for sampling the model. Recall that the unknown parameters are the low-rank factors , the measurement noise variance , and the prior variance of the factors . Similar to the SVD model, we use the precision parameters and and assign conjugate Gamma distributions and , respectively. Here all the rate and shape parameters of the Gamma prior is set to . Together these facts lead to the following full posterior

| (27) |

The conditional distributions for and are both Gaussian distributions and so samples can be drawn using Gibbs sampling. However, directly sampling those Gaussian distributions needs to factorize rather large covariance matrices, e.g., a matrix with dimension for . This can have superlinear computational complexity, for instance, cubic complexity if directly applying the Cholesky factorization. One way to reduce the computational cost is to apply Gibbs sampling to each of and row-by-row. Denoting the -th row of and the matrix containing remaining rows of , the conditional posterior of given is

where is the index set containing all the column indices such that for a given row index . Note the second conditional refers to the situation where row of the matrix is not observed at all, in which case sampling proceeds from the prior. The conditional posterior of can be derived in a similar way. Each of the conditional Gaussian distributions has dimension , and thus can be computationally much cheaper to simulate. The pseudocode is provided in Algorithm 3.

Appendix C Rank revealing of SVD-based models

Here we demonstrate the rank revealing property of the SVD-based models. Recall that the low-rank matrix is parametrized in the SVD format

The nuclear norm of is given as the summation of its singular values, i.e.,

Since the nuclear norm is a convex envelope of the rank of a matrix, , it often used as a regularization term in optimization problems to estimate low-rank matrices. See [5, 15, 20] and references therein for further discussions. Drawing an analogy with the nuclear norm regularization, a natural choice of the prior distribution for the singular values of the SVD-based model is to use the exponential of the negative of the nuclear norm of , which leads to

where is a penalty parameter.

Connection with nuclear norm regularization

Given a likelihood function , the maximum a posteriori (MAP) estimate can be expressed As

where and are uniform in the Hausdorff measure and implicitly imply the constraints and on Stiefel manifolds. In this way, the MAP estimate can be equivalently expressed as

The above formulation explains that the MAP estimate of our proposed SVD-based model is equivalent to a matrix estimation problem regularized by the nuclear norm, which is a widely-accepted way to impose low-rank properties.

Numerical demonstration

The above justification hinted that the SVD-based model together with the nuclear-norm-type prior can be capable of determining the effective rank of a Bayesian matrix estimation problem. To demonstrate this rank revealing property, we use the Case #1 of the synthetic example presented in Section 3 of the paper. Here we construct a true matrix , where entries of are drawn from i.i.d. standard Gaussian, and partially observed data are given by randomly selecting a subset of of matrix indices.

The true matrix is rank by construction, we impose a maximum rank in the parameterization and run the proposed geodesic HMC algorithm to sample the posterior distribution. The quantile, median and quantile of the posterior singular values are reported in Figure 6. Here, we observe that beyond rank 10, the estimated singular values are close to zero and the sizes of the corresponding credible intervals are near zero. This is a clear indication that the actual rank of the estimated matrix is about . Using our proposed SVD-based models, the columns of and that do not contribute to the estimation problem will be automatically assigned near zero singular values in this example.

Appendix D Sampling efficiency

In this section, we provide a detailed summary of the sampling efficiency of the geodesic HMC and Gibbs sampling respectively applied to SVD-based models and the model. To preserve anonymity, the git repositories containing the code for reproducing the results will be made public after the completion of the review process. URLs and instructions for running the code will also be provided in the final submission.

Table 2 summarizes the CPU time per iteration. Here the number of steps and the time step used by geodesic HMC are automatically tuned by the No-U-Turn-Sampler variant of HMC. Each iteration of Gibbs consists of a full sweep of updates of . The CPU times are measured on a DELL workstation with dual Intel Xeon(R) E5-2680 v4 CPU.

| meanstandard deviation of CPU time (in second) | |||

|---|---|---|---|

| SVD | -SVD | ||

| Case #1 | - | ||

| Case #2 | |||

| Case #3 | |||

| Mice | |||

| MovieLens | |||

For each of the examples summarized in Table 2, we also show the MCMC traces generated by the corresponding samplers in Figures 7–20. For each of examples, we provide the traces of three random experiments. For all examples, the Gibbs sampling applied to the model do not have satisfactory mixing property—after several thousands iterations, the traces of Markov chains clearly suggest that the chains are still not in the stationary phase. In comparison, all the geodesic HMC counterparts can produce well mixed chains after several hundreds iterations. Although each iteration of the geodesic HMC is computationally more expensive than that of the Gibbs, the geodesic HMC is able to significantly improve the convergence of the MCMC sampling in these examples.

References

- [1] Sungjin Ahn, Anoop Korattikara, Nathan Liu, Suju Rajan, and Max Welling. Large-scale distributed bayesian matrix factorization using stochastic gradient mcmc. In Proceedings of the 21th ACM SIGKDD international conference on knowledge discovery and data mining, 2015.

- [2] Vladimir Igorevich Arnol’d. Mathematical methods of classical mechanics, volume 60. Springer Science & Business Media, 2013.

- [3] James Bennett, Stan Lanning, et al. The netflix prize. In Proceedings of KDD cup and workshop, 2007.

- [4] Simon Byrne and Mark Girolami. Geodesic monte carlo on embedded manifolds. Scandinavian Journal of Statistics, 40(4):825–845, 2013.

- [5] Emmanuel J Candès and Terence Tao. The power of convex relaxation: Near-optimal matrix completion. IEEE Transactions on Information Theory, 56(5):2053–2080, 2010.

- [6] Tianqi Chen, Emily Fox, and Carlos Guestrin. Stochastic gradient hamiltonian monte carlo. In International conference on machine learning, 2014.

- [7] Saibal De, Hadi Salehi, and Alex Gorodetsky. Efficient mcmc sampling for bayesian matrix factorization by breaking posterior symmetries. arXiv preprint arXiv:2006.04295, 2020.

- [8] Persi Diaconis, Susan Holmes, and Mehrdad Shahshahani. Sampling from a manifold. In Advances in modern statistical theory and applications: a Festschrift in honor of Morris L. Eaton, pages 102–125. Institute of Mathematical Statistics, 2013.

- [9] Dheeru Dua and Casey Graff. UCI machine learning repository, 2017.

- [10] Simon Duane, Anthony D Kennedy, Brian J Pendleton, and Duncan Roweth. Hybrid monte carlo. Physics letters B, 195(2):216–222, 1987.

- [11] Alan Edelman, Tomás A Arias, and Steven T Smith. The geometry of algorithms with orthogonality constraints. SIAM journal on Matrix Analysis and Applications, 20(2):303–353, 1998.

- [12] Herbert Federer. Geometric measure theory. Springer, 2014.

- [13] M. Girolami and B. Calderhead. Riemann manifold Langevin and Hamiltonian Monte Carlo methods. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 73(2):123–214, 2011.

- [14] F Maxwell Harper and Joseph A Konstan. The movielens datasets: History and context. ACM transactions on interactive intelligent systems (TIIS), 5(4):1–19, 2015.

- [15] Trevor Hastie, Rahul Mazumder, Jason D Lee, and Reza Zadeh. Matrix completion and low-rank svd via fast alternating least squares. The Journal of Machine Learning Research, 16(1):3367–3402, 2015.

- [16] Clara Higuera, Katheleen J Gardiner, and Krzysztof J Cios. Self-organizing feature maps identify proteins critical to learning in a mouse model of down syndrome. PloS one, 10, 2015.

- [17] Matthew D Hoffman, Andrew Gelman, et al. The no-u-turn sampler: adaptively setting path lengths in hamiltonian monte carlo. J. Mach. Learn. Res., 15(1):1593–1623, 2014.

- [18] Yew Jin Lim and Yee Whye Teh. Variational bayesian approach to movie rating prediction. In Proceedings of KDD cup and workshop, 2007.

- [19] Jun S Liu. Monte Carlo strategies in scientific computing, volume 10. Springer, 2001.

- [20] Rahul Mazumder, Trevor Hastie, and Robert Tibshirani. Spectral regularization algorithms for learning large incomplete matrices. The Journal of Machine Learning Research, 11:2287–2322, 2010.

- [21] Shinichi Nakajima and Masashi Sugiyama. Theoretical analysis of bayesian matrix factorization. Journal of Machine Learning Research, 12, 2011.

- [22] Shinichi Nakajima, Masashi Sugiyama, S. Derin Babacan, and Ryota Tomioka. Global analytic solution of fully-observed variational bayesian matrix factorization. Journal of Machine Learning Research, 14, 2013.

- [23] Radford M Neal et al. Mcmc using hamiltonian dynamics. Handbook of markov chain monte carlo, 2(11):2, 2011.

- [24] Piyush Rai, Yingjian Wang, Shengbo Guo, Gary Chen, David Dunson, and Lawrence Carin. Scalable bayesian low-rank decomposition of incomplete multiway tensors. In International Conference on Machine Learning, 2014.

- [25] Tapani Raiko, Alexander Ilin, and Juha Karhunen. Principal component analysis for large scale problems with lots of missing values. In European Conference on Machine Learning, 2007.

- [26] Christian P Robert and George Casella. Monte Carlo statistical methods, volume 2. Springer, 1999.

- [27] Ruslan Salakhutdinov and Andriy Mnih. Bayesian probabilistic matrix factorization using markov chain monte carlo. In Proceedings of the 25th international conference on Machine learning, 2008.

- [28] Qibin Zhao, Liqing Zhang, and Andrzej Cichocki. Bayesian cp factorization of incomplete tensors with automatic rank determination. IEEE transactions on pattern analysis and machine intelligence, 37, 2015.

- [29] Qibin Zhao, Guoxu Zhou, Liqing Zhang, Andrzej Cichocki, and Shun-Ichi Amari. Bayesian robust tensor factorization for incomplete multiway data. IEEE transactions on neural networks and learning systems, 27, 2015.