Low-Light Image and Video Enhancement: A Comprehensive Survey and Beyond

Abstract

This paper presents a comprehensive survey of low-light image and video enhancement, addressing two primary challenges in the field. The first challenge is the prevalence of mixed over-/under-exposed images, which are not adequately addressed by existing methods. In response, this work introduces two enhanced variants of the SICE dataset: SICE_Grad and SICE_Mix, designed to better represent these complexities. The second challenge is the scarcity of suitable low-light video datasets for training and testing. To address this, the paper introduces the Night Wenzhou dataset, a large-scale, high-resolution video collection that features challenging fast-moving aerial scenes and streetscapes with varied illuminations and degradation. This study also conducts an extensive analysis of key techniques and performs comparative experiments using the proposed and current benchmark datasets. The survey concludes by highlighting emerging applications, discussing unresolved challenges, and suggesting future research directions within the LLIE community. The datasets are available at https://github.com/ShenZheng2000/LLIE_Survey.

Index Terms:

Low-Light Image and Video Enhancement, Low-Level Vision, Deep Learning, Computational Photography.

I Introduction

Images are often captured under sub-optimal illumination conditions. Due to environmental factors (e.g., poor lighting, incorrect beam angle) or technical constraints (e.g., low ISO, short exposure) [1], these images can suffer from degraded features and low contrast (shown in Fig. 1), which not only lowers their low-level perceptual quality but also degrades high-level vision tasks such as object detection [2], semantic segmentation [3], and depth estimation [4].

A natural way to address this problem is from the camera side. Raising the ISO or lengthening the exposure brightens the image. However, boosting ISO amplifies noise, whereas prolonged exposure produces motion blur [21], which can make the images look even worse. The other viable option is to enhance the visual appeal of low-light images using image editing tools like Photoshop or Lightroom. However, both tools demand artistic skill and are time-consuming on large datasets.

In contrast to camera and software approaches that require manual effort, Low-Light Image Enhancement (LLIE) is designed to autonomously enhance the visibility of images captured in low-light conditions. It is an active research field that is related to various system-level applications, such as visual surveillance [40], autonomous driving [41], unmanned aerial vehicles [42], photography [43], remote sensing [44], microscopic imaging [45], and underwater imaging [46].

Before the deep learning era, traditional approaches were the only option for LLIE. Most traditional LLIE methods utilize Histogram Equalization [47, 48, 49, 50, 51, 52, 53], Retinex theory [54, 55, 56, 57, 58, 59, 60], Dehazing [61, 62, 63, 64], or Statistical methods [65, 66, 67, 52, 68]. While these traditional approaches have solid theoretical foundations, in practice they often deliver unsatisfactory results.

The popularity of deep learning LLIE approaches can be attributed to their superior effectiveness, efficiency, and generalizability. Deep learning-based LLIE methods can be divided into the following categories: supervised learning [17, 18, 19, 69, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39], unsupervised learning [8, 9], semi-supervised learning [10], and zero-shot learning [11, 12, 13, 14, 15, 16] methods. In the past five years, there have been a number of publications on deep learning-based LLIE (see Fig. 2 and Tab. I). Each of these learning strategies has its own strengths and limitations. For instance, unsupervised and zero-shot learning generalize better to unseen datasets, whereas supervised learning achieves state-of-the-art performance on benchmark datasets. It is crucial to carefully examine previous developments since they can offer detailed knowledge, highlight present challenges, and suggest potential research directions for the LLIE community.

| Name | Publications | Network Structure | Loss Functions | Evaluation Metrics | Training Dataset | Testing Dataset | One-line Summary |
|---|---|---|---|---|---|---|---|
| PIE [6] | TIP | - | - | | - | Custom Dataset | Probabilistic method for image enhancement via illumination & reflectance estimation. |
| LIME [7] | TIP | - | - | LOE | - | LIME | LLIE using illumination map. |
| LLNet [17] | PR | U-Net | L2, KL | PSNR, SSIM | NORB | CVG-UGR | Deep autoencoder for adaptive high dynamic range image brightening. |
| MBLLEN [18] | BMVC | Multi-scale | SSIM, Region, Perceptual | | Custom Dataset | Custom Dataset | Multi-branch fusion network for LLIE/LLVE. |
| LightenNet [19] | PRL | Others | L2 | | Custom Dataset | Custom Dataset | Retinex-based CNN for enhancing weakly illuminated images. |
| Retinex-Net [69] | BMVC | Multi-scale | | NIQE | LOL | | Retinex network for LLIE via decomposition & illumination adjustment. |
| SID [21] | CVPR | U-Net, Multi-scale | L1 | PSNR, SSIM | SID | SID | End-to-end trained FCN for low-light image processing. |
| DeepUPE [22] | CVPR | Others | | PSNR, SSIM, US | Custom Dataset | MIT, Custom Dataset | Enhancement of underexposed images using constrained intermediate illumination. |
| EEMEFN [23] | AAAI | U-Net | L1, Edge | PSNR, SSIM | SID | SID | Edge-Enhanced Multi-Exposure Fusion Network for enhancing extreme low-light images. |
| ExCNet [11] | ACMMM | Others | Energy Minimization | CDIQA, LOD, US | Custom Dataset | IEpsD | Zero-shot CNN for back-lit image restoration via 'S-curve' estimation. |
| KinD [24] | ACMMM | U-Net | | | LOL | | Retinex network with light adjustment & degradation removal for LLIE. |
| Zero-DCE [12] | CVPR | Others | | | SICE | | Zero-shot network for LLIE using high-order curves & dynamic range adjustment. |
| DRBN [10] | CVPR | U-Net, Recursive | Perceptual, Detail, Quality | | LOL | LOL | Semi-supervised deep recursive band network using band decomposition for LLIE. |
| Xu et al. [25] | CVPR | U-Net, Multi-scale | L2, Perceptual | PSNR, SSIM | Custom Dataset | SID, Custom Dataset | Frequency-based decomposition model for LLIE. |
| DLN [26] | TIP | Others | SSIM, TV | | Custom Dataset | Custom Dataset | Deep Lightening Network with Lightening Back-Projection blocks for LLIE. |
| DeepLPF [27] | CVPR | U-Net | L1, SSIM | | MIT, SID | MIT, SID | Deep Local Parametric Filters model with spatially localized filters for LLIE. |
| EnlightenGAN [8] | TIP | U-Net, Multi-scale | | NIQE, US, Accuracy | Custom Dataset | | Unsupervised GAN with global-local discriminator & attention for LLIE. |
| KinD++ [28] | IJCV | U-Net | | | LOL | | KinD extension. |
| Zero-DCE++ [13] | TPAMI | Others | | | SICE | SICE | Zero-DCE extension. |
| Zhang et al. [29] | CVPR | U-Net, Optical Flow | L1, Consistency | | Custom Dataset | Custom Dataset | Optical flow model for temporal stability in LLVE. |
| RUAS [14] | CVPR | NAS, Unfolding | | | MIT, LOL | | Retinex-inspired model with Neural Architecture Search for LLIE. |
| UTVNet [30] | ICCV | U-Net, Unfolding | TV | | sRGB-SID | sRGB-SID | Adaptive unfolding TV network with noise level approximation for LLIE. |
| SDSD [31] | ICCV | Others | | PSNR, SSIM, US | SDSD, SMID | SDSD, SMID | Retinex method with self-supervised noise reduction for LLVE. |
| RetinexDIP [15] | TCSVT | U-Net | | NIQE, NIQMC, CPCQI | - | | Retinex zero-shot method using 'generative' decomposition for LLIE. |
| SGZ [16] | WACV | U-Net, Recurrent | | | SICE | | Zero-shot LLIE/LLVE network via light deficiency estimation & semantic segmentation. |
| LLFlow [32] | AAAI | Normalizing Flow | NLL | PSNR, SSIM, LPIPS | LOL, VE-LOL | LOL, VE-LOL | Normalizing flow-based model conditioned on low-light images/features. |
| SNR-Aware [33] | CVPR | Transformer | | PSNR, SSIM, US | | | Signal-to-Noise-Ratio aware transformer for LLIE. |
| SCI [9] | CVPR | Others | Fidelity, Smoothness | | | | Self-Calibrated Illumination learning for LLIE. |
| URetinex-Net [34] | CVPR | Unfolding | | | LOL | LOL, SICE, MEF | Retinex-based Deep Unfolding Network with unfolding optimization for LLIE. |
| Dong et al. [35] | CVPR | U-Net | L1 | PSNR, SSIM | MCR, SID | MCR, SID | Fusion of colored & synthesized monochrome raw image for LLIE. |
| MAXIM [36] | CVPR | Transformer, Multi-scale | | PSNR, #Params, FLOPs | MIT, LOL | MIT, LOL | Multi-axis MLP transformer for low-level image processing tasks. |
| BIPNet [37] | CVPR | U-Net, Multi-scale | L1 | PSNR, SSIM, LPIPS | SID | SID | Burst image enhancement using pseudo-burst features. |
| LCDPNet [38] | ECCV | U-Net, Multi-scale | | PSNR, SSIM | Custom Dataset | MSEC, Custom Dataset | Over-/under-exposed region localization and enhancement via local color distribution. |
| IAT [39] | BMVC | Transformer | L1 | | | | Illumination Adaptive Transformer with ISP parameter adjustment. |

Three recent surveys have been conducted on LLIE. Wang et al. [70] provide an overview of traditional learning-based LLIE techniques. Liu et al. [71] propose a new LLIE dataset named VE-LOL, review LLIE methods, and introduce a joint image enhancement and face detection network named EDTNet. Li et al. [1] unveil a new LLIE dataset named LLIV-Phone, review deep learning-based LLIE methods, and design an online demo platform for LLIE methods.

The existing surveys have the following limitations.

- Their proposed datasets contain single images that are either overexposed or underexposed. This assumption is at odds with real-world images, which frequently contain both overexposed and underexposed regions.
- Their proposed datasets contain few videos, and even these videos are filmed from fixed shooting positions. This simplification is also inconsistent with real-world videos, which are often captured in motion.
- These studies emphasize low-level perceptual quality and high-level vision tasks while neglecting system-level applications, which are essential when LLIE approaches are implemented in real-world products.

This paper makes the following contributions to existing LLIE surveys:

- We present the most recent comprehensive survey on low-light image and video enhancement. In particular, we conduct an extensive qualitative and quantitative comparison with various full-reference and non-reference evaluation metrics and provide a modularized discussion focusing on structures and strategies. Based on these analyses, we identify the emerging system-level applications, point out the open challenges, and suggest directions for future work.
- We introduce two image datasets named SICE_Grad and SICE_Mix. They are the first datasets that include both overexposure and underexposure in single images. This preliminary effort highlights the LLIE community’s unresolved mixed over- and underexposure challenge.
- We propose Night Wenzhou, a large-scale high-resolution video dataset. Night Wenzhou is captured during fast motion and contains diverse illuminations, various landscapes, and miscellaneous degradation. It will facilitate the application of LLIE methods to real-world challenges like autonomous driving and UAVs.

The rest of the paper is organized as follows. Section II provides a systematic overview of existing LLIE methods (Figs. 3 and 6). Section III introduces the benchmark datasets and the proposed datasets. Section IV presents an empirical analysis and comparison of representative LLIE methods. Section V identifies the system-level applications. Section VI discusses the open challenges and the corresponding future work. Section VII provides the concluding remarks.

II Methods Review

II-A Selection Criteria

We select the LLIE methods according to the following rubrics.

- We focus on LLIE methods from the past five years (2018-2022) and emphasize deep learning-based LLIE methods from the past two years (2021-2022) because of their rapid development.
- We pick LLIE methods published in prestigious conferences (e.g., CVPR) and journals (e.g., TIP) with official code to ensure credibility and authenticity.
- For papers published in the same year, we prefer works with more citations and GitHub stars.
- We include LLIE methods that significantly surpass the previous state of the art on benchmark LLIE datasets.

II-B Learning Strategies

Before 2017, traditional learning-based (TL) methods were the de facto solution for LLIE. As shown in Fig. 4, mainstream traditional learning methods in LLIE utilize Histogram Equalization, Retinex, Dehazing, or Statistical techniques. Since 2017, deep learning-based (DL) methods have started to dominate this field. Supervised learning (67.6 %) is so far the most popular strategy. Since 2019, several methods have used zero-shot learning (17.6 %), unsupervised learning (5.9 %), or semi-supervised learning (2.9 %), as shown in Fig. 5.

(TL) Histogram Equalization: Histogram Equalization (HE)-based methods spread out the frequent intensity values of an image to improve its global contrast. In this way, the low-contrast regions of an image gain higher contrast, and visibility improves. The original HE-based method [47] considers global adjustment only, leading to poor local illumination and amplified degradation (e.g., noise, blur, and artifacts). The follow-up works attempt to address these issues using different priors and constraints. For example, Pizer et al. [48] perform HE on partitioned regions with local contrast constraints to suppress noise. Ibrahim et al. [72] utilize mean brightness preservation to prevent visual deterioration, whereas Arici et al. [49] additionally integrate contrast adjustment, noise robustness, and white/black stretching. Later works integrate gray-level differences [50], depth information [51], a weighting matrix [52], and visual importance [53] to guide low-light image enhancement towards better fine-grained details. On the flip side, the performance gains of HE-based methods are predominantly attributed to manually designed constraints on top of vanilla histogram equalization.
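
As an illustration of the global adjustment described above, the following is a minimal NumPy sketch of vanilla histogram equalization for an 8-bit grayscale image. It is not the implementation of any cited method; the function name `equalize_histogram` and the synthetic test image are assumptions made for this example.

```python
import numpy as np

def equalize_histogram(gray: np.ndarray) -> np.ndarray:
    """Global histogram equalization for an 8-bit grayscale image."""
    hist = np.bincount(gray.ravel(), minlength=256)
    cdf = hist.cumsum()
    cdf_min = cdf[cdf > 0][0]      # CDF value at the darkest occupied level
    total = cdf[-1]
    if total == cdf_min:           # constant image: nothing to stretch
        return gray.copy()
    # Map each gray level through the normalized CDF, rescaled to [0, 255].
    lut = np.round((cdf - cdf_min) / (total - cdf_min) * 255.0)
    lut = np.clip(lut, 0, 255).astype(np.uint8)
    return lut[gray]

# Hypothetical low-contrast "dark" image confined to gray levels 10..59.
rng = np.random.default_rng(0)
dark = rng.integers(10, 60, size=(64, 64), dtype=np.uint8)
enhanced = equalize_histogram(dark)
```

Because a single lookup table is applied to every pixel, the mapping cannot adapt to local illumination, which is the weakness that the region-based and constrained variants try to address.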

(TL) Retinex: Retinex-based methods build on the Retinex theory of color vision [54, 55], which assumes that an image can be decomposed into a reflectance map and an illumination map. The enhanced image is obtained by recombining the enhanced illumination map with the reflectance map. Lee et al. [56] is the pioneering work incorporating Retinex theory into image enhancement. After that, Wang et al. [57] utilize lightness-order error and a bi-log transform to improve naturalness and detail during enhancement. Another work by Wang et al. [58] leverages Gibbs distributions as priors for the reflectance and illumination and gamma distributions as priors for the network parameters. Fu et al. [59] design a weighted variational model (instead of a logarithmic transform) for better prior modeling and edge preservation. Guo et al. [7] introduce a structure prior to refine the initial illumination map. Cai et al. [60] propose a shape prior for structural information preservation, a texture prior for reflectance estimation, and an illumination prior for luminous modeling. Still, Retinex-based models rely extensively on hand-crafted priors to achieve satisfactory image enhancement.
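
The decompose, adjust, and recombine pipeline can be sketched in a few lines. The example below is a simplified illustration under stated assumptions, not any cited method: it takes the per-pixel channel maximum as a crude illumination estimate, brightens it with a gamma curve, and omits the priors that the works above use to refine the maps. The name `retinex_enhance` and the values of `gamma` and `eps` are hypothetical.

```python
import numpy as np

def retinex_enhance(img: np.ndarray, gamma: float = 0.5, eps: float = 1e-3) -> np.ndarray:
    """Brighten an RGB image in [0, 1] by gamma-correcting a crude illumination map."""
    # Crude illumination estimate: per-pixel maximum over the color channels.
    illum = np.maximum(img.max(axis=2, keepdims=True), eps)
    reflectance = img / illum                  # I = R * L  =>  R = I / L
    enhanced = reflectance * illum ** gamma    # recombine with the brightened illumination
    return np.clip(enhanced, 0.0, 1.0)

# Hypothetical dark image with intensities in [0, 0.2].
rng = np.random.default_rng(0)
low = rng.uniform(0.0, 0.2, size=(32, 32, 3))
out = retinex_enhance(low)
```

Since the illumination lies in (0, 1], any `gamma` below 1 raises it, so the output is never darker than the input while the reflectance (and hence the color ratios) is left unchanged.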

(TL) Dehazing: Dehazing-based methods treat inverted low-light images as hazy images and then apply dehazing algorithms to enhance them [61]. Instead of attending to the whole image, Li et al. [62] decompose images into base layers and detail layers and use a dehazing process guided by the dark channel prior [73] for image enhancement. Although less prevalent in LLIE, dehazing-based methods have been widely used in underwater image enhancement. For example, Chiang et al. [63] leverage wavelength compensation, depth map estimation, and dehazing. Another work by Li et al. [64] builds an underwater image enhancement pipeline using the minimum information loss principle, a histogram prior, and dehazing. Nonetheless, dehazing-based methods depend largely on dehazing assumptions that do not hold firmly for low-light images.
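
The invert, dehaze, and invert-back idea can be sketched as follows. This is a rough illustration that pairs the inversion trick with a basic dark-channel-style dehazing step; it is not the pipeline of any cited paper. The helper names, the patch size, `omega`, `t0`, and the synthetic gradient image are all assumptions of this sketch.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def dark_channel(img: np.ndarray, patch: int = 7) -> np.ndarray:
    """Minimum over color channels and over a patch x patch neighborhood (patch is odd)."""
    pad = patch // 2
    min_rgb = np.pad(img.min(axis=2), pad, mode="edge")
    return sliding_window_view(min_rgb, (patch, patch)).min(axis=(2, 3))

def enhance_by_dehazing(low: np.ndarray, omega: float = 0.8, t0: float = 0.1) -> np.ndarray:
    """Invert a low-light RGB image in [0, 1], dehaze it, and invert the result back."""
    hazy = 1.0 - low
    dark = dark_channel(hazy)
    # Atmospheric light: mean color of the 0.1% brightest dark-channel pixels.
    n_top = max(1, dark.size // 1000)
    idx = np.argsort(dark.ravel())[-n_top:]
    airlight = np.maximum(hazy.reshape(-1, 3)[idx].mean(axis=0), 1e-6)
    # Transmission from the dark channel of the airlight-normalized image.
    trans = 1.0 - omega * dark_channel(hazy / airlight)
    trans = np.maximum(trans, t0)[..., None]
    dehazed = (hazy - airlight) / trans + airlight
    return np.clip(1.0 - dehazed, 0.0, 1.0)

# Hypothetical dark gray ramp: intensity rises from 0.02 to 0.2 left to right.
ramp = np.linspace(0.02, 0.2, 64)
low = np.tile(ramp[None, :, None], (64, 1, 3))
out = enhance_by_dehazing(low)
```

Dark regions of the input become bright, haze-like regions after inversion, so removing the "haze" and inverting back brightens them; whether the haze model is a faithful description of low-light degradation is exactly the assumption questioned above.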

(TL) Non-HE Statistical: Statistical methods involve statistical models and physical properties for image processing and enhancement. Compared with other traditional learning-based methods, statistical methods require a solid mathematical foundation and expert domain knowledge. The pioneering statistical method by Celik et al. [65] performs contrast adjustment using 2-D interpixel contextual information. Similar to HE-based approaches, the follow-up works improve upon previous research using additional constraints and assumptions. For example, Liang et al. [66] propose a variational model based on discrete total variation for local contrast adjustment. Yu et al. [67] utilize a Gaussian surround function for light estimation, followed by light-scattering attenuation with an information loss constraint for light refinement. Ying et al. [52] utilize a weighting matrix for illumination estimation and a camera response model for finding the best exposure ratio. Su et al. [68] exploit a noise level function and a just-noticeable-difference model for noise suppression during image enhancement. Nevertheless, statistical methods rely on computationally expensive optimization processes to improve performance.

(TL) Hybrid Methods: Hybrid traditional learning methods aim to synergize the strengths of techniques like HE, Retinex, Dehazing, and Statistical (Non-HE) methods for enhanced performance. HE-MSR-COM [74] combines histogram equalization and multiscale Retinex to extract illumination from low-frequency HE-enhanced images and edge details from high-frequency Retinex-enhanced images for image enhancement. Li et al. [75] present an underwater image enhancement method that integrates dehazing, color correction, histogram equalization, saturation, intensity stretching, and bilateral filtering. Galdran et al. [76] explore the connection between Retinex and dehazing by applying Retinex to hazy images with inverted intensities. However, hybrid methods, in attempting to merge multiple techniques, can sometimes accumulate the weaknesses of each approach and may fail to deliver the expected synergistic benefits.

(DL) Supervised Learning: In LLIE, supervised learning refers to the learning strategy with paired images. For example, supervised learning may use one dataset with 1,000 low-exposure images and another with 1,000 normal-exposure images of the same scenes that differ only in illumination. The pioneering work LLNet [17] utilizes a stacked sparse denoising autoencoder to exploit multi-scale information for image enhancement. The subsequent work MBLLEN [18] is the first to apply low-light image enhancement techniques to videos. Later, KinD [24] and KinD++ [28] combine model-based Retinex theory with data-driven image enhancement to cope with light adjustment and degradation removal. After that, Zhang et al. [29] exploit optical flow to promote stability in low-light video enhancement, whereas LLFlow [32] leverages normalizing flow for illumination adjustment and noise suppression. The recent work IAT [39] proposes a lightweight illumination adaptive transformer for exposure correction and image enhancement. It is worth mentioning that supervised learning methods have achieved state-of-the-art results on benchmark datasets.

(DL) Unsupervised Learning: In LLIE, unsupervised learning refers to the learning strategy without paired images. For instance, unsupervised learning may use a dataset with low-exposure images and another with normal-exposure images that differ in more than illumination. Two unsupervised learning methods appear among the representative LLIE methods. EnlightenGAN [8] is an unsupervised generative adversarial network (GAN) that regularizes unpaired learning using a multi-scale discriminator, a self-regularized perceptual loss, and an attention mechanism. SCI [9] introduces a self-calibrated illumination framework that utilizes a cascaded illumination learning process with weight sharing. Compared with supervised learning methods, unsupervised learning approaches avoid the tedious work of collecting paired training images.

(DL) Semi-supervised learning: In LLIE, semi-supervised learning is a learning strategy with a small quantity of paired images and many unpaired images. An example would be a dataset with low-exposure images and another with normal-exposure images, where most images differ in more than illumination and only a few differ solely in illumination. To our knowledge, semi-supervised learning has been used by only one representative LLIE method, DRBN [10], which is based upon a recursive neural network with band decomposition and recomposition. Compared with supervised and unsupervised learning techniques, the potential of semi-supervised learning remains to be explored.

(DL) Zero-shot learning: In LLIE, zero-shot learning is a learning strategy that requires neither paired nor unpaired training data. Instead, zero-shot learning learns image enhancement at test time using data-free loss functions such as exposure loss or color loss. For example, ExCNet [11] introduces a zero-shot CNN based on estimating the "S-curve" that best fits the exposure of back-lit images. Zero-DCE [12] and Zero-DCE++ [13] utilize zero-reference deep curve estimation and dynamic range adjustment. RUAS [14] develops a Retinex-inspired unrolling model with Neural Architecture Search (NAS). RetinexDIP [15] proposes a Retinex-based zero-shot method using generative decomposition and latent component estimation. SGZ [16] leverages pixel-wise light deficiency estimation and unsupervised semantic segmentation. Thanks to the zero-reference loss functions, zero-shot learning methods have outstanding generalization ability, require few parameters, and have fast inference speed.
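
To make the notion of a data-free loss concrete, the sketch below scores an image by how far its local average intensity is from a target exposure level and uses that score alone to pick the strength of a brightening curve at test time. It is loosely modeled on curve-estimation approaches and is not the implementation of any cited method: the quadratic curve, the target level 0.6, the 16-pixel patches, and the grid search over a single scalar `alpha` (in place of a network predicting per-pixel parameters) are all assumptions of this example.

```python
import numpy as np

def apply_curve(img: np.ndarray, alpha: float, n_iter: int = 8) -> np.ndarray:
    """Iteratively apply the quadratic curve x <- x + alpha * x * (1 - x)."""
    out = img
    for _ in range(n_iter):
        out = out + alpha * out * (1.0 - out)
    return out

def exposure_loss(img: np.ndarray, target: float = 0.6, patch: int = 16) -> float:
    """Mean absolute gap between local average intensity and a target exposure level."""
    gray = img.mean(axis=2)
    h, w = gray.shape
    h, w = h - h % patch, w - w % patch           # drop ragged borders
    blocks = gray[:h, :w].reshape(h // patch, patch, w // patch, patch)
    local_mean = blocks.mean(axis=(1, 3))
    return float(np.mean(np.abs(local_mean - target)))

# Hypothetical dark test image; no reference image is used anywhere below.
rng = np.random.default_rng(0)
dark = rng.uniform(0.0, 0.2, size=(64, 64, 3))
alphas = np.linspace(0.0, 1.0, 21)
losses = [exposure_loss(apply_curve(dark, a)) for a in alphas]
best_alpha = float(alphas[int(np.argmin(losses))])
enhanced = apply_curve(dark, best_alpha)
```

For inputs in [0, 1] and `alpha` in [0, 1] the curve keeps values in [0, 1], so no clipping is needed. A single exposure term cannot capture color or noise behavior, which is why practical zero-reference objectives combine several such terms.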

Discussion: The aforementioned traditional and deep learning strategies for LLIE have the following limitations.

- Traditional learning methods lag behind deep learning methods in performance, despite their handcrafted priors and intricate optimization steps, which also result in poor inference latency.
- Supervised learning methods rely heavily on paired training datasets, but none of the approaches for obtaining such a dataset is straightforward. Specifically, it is difficult to capture image pairs that differ only in illumination; it is hard to synthesize images that fit complex real-world scenes; and it is expensive and time-consuming to retouch large-scale low-light images.

- •

- Semi-supervised learning methods inherit the limitations of both supervised and unsupervised learning methods without fully utilizing their strengths. This may explain why semi-supervised learning has been used by only one representative LLIE method, DRBN [10].
- Zero-shot learning methods require elaborate designs for the data-free loss functions. Even so, these losses cannot cover all the necessary properties of real-world low-light images. Besides, their performance lags behind supervised learning methods like LLFlow [32] on benchmark datasets.

II-C Network Structures

Many LLIE methods utilize a U-Net-like (37.2 %) structure or multi-scale information (18.6 %). Some methods use transformers (7.0 %) or unfolding networks (7.0 %). A few methods (2.3 % for each) use Neural Architecture Search (NAS), Normalizing Flow, Optical Flow, Recurrent Network, or Recursive Network.

U-Net and Multi-Scale: The U-Net-like [77] structure is the most popular in LLIE since it preserves both high-resolution features rich in detail and low-resolution features rich in semantics, which are both essential for LLIE. Similarly, other structures that use multi-scale information are also widely adopted in LLIE.

Transformers: Recently, transformer-based [78] methods have surged in computer vision, especially in high-level vision tasks, due to their ability to track long-range dependencies and capture global information in an image.

Unfolding and NAS: The unfolding network (a.k.a. unrolling network) [79] has been used by several methods because it combines the wisdom of model-based and data-based approaches. NAS [80] automates the design of a neural network to obtain the best result on a given dataset.

Normalizing Flow: Normalizing flow-based [81] methods transform a simple probability distribution into a complex distribution with a sequence of invertible mappings.
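
For reference, the standard change-of-variables identity behind this construction is given below. The symbols are assumptions introduced for this illustration and are not taken from the surveyed papers: $x$ is a data sample, $z$ a latent variable with a simple density $p_Z$, and $f = f_K \circ \dots \circ f_1$ an invertible map from data to latent space with intermediate states $h_0 = x$ and $h_k = f_k(h_{k-1})$.

```latex
\log p_X(x) \;=\; \log p_Z\bigl(f(x)\bigr)
  \;+\; \sum_{k=1}^{K} \log \left| \det \frac{\partial f_k(h_{k-1})}{\partial h_{k-1}} \right|,
\qquad
\mathcal{L}_{\mathrm{NLL}}(x) \;=\; -\log p_X(x).
```

Training minimizes the negative log-likelihood $\mathcal{L}_{\mathrm{NLL}}$, and invertibility lets samples be drawn by pushing $z \sim p_Z$ through $f^{-1}$.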

Optical Flow: Optical flow-based [82] methods estimate the pixel-level motions of adjacent video frames.

Recurrent and Recursive: A recurrent network [83] is a type of neural network that repeatedly processes the input in chain structures, whereas a recursive network [84] is a variant of the recurrent network that processes the input in hierarchical structures.

Discussion: The aforementioned network structures for LLIE have the following limitations.

- Transformers are currently uncommon in low-level vision tasks like LLIE. Perhaps this is due to their inability to integrate local and non-local attention and their inefficiency at processing high-resolution images.
- The unfolding strategy requires elaborate network design, whereas NAS requires expensive parameter learning.
- The normalizing flow and optical flow-based methods have poor computational efficiency.
- The recurrent network suffers from the vanishing gradient problem on large-scale data [85], whereas the recursive network relies on the inductive bias of a hierarchical distribution, which is unrealistic.

II-D Loss Functions

The choice of loss functions is highly diverse among LLIE methods. 55.2 % of the LLIE methods use non-mainstream loss functions. Among mainstream loss functions, L1 (11.5 %) is the most popular, whereas L2 (6.9 %), Negative SSIM (6.9 %), and Illumination Smoothness (6.9 %) are also popular. A small number of methods use Total Variation (5.7 %), Perceptual (3.4 %), or Illumination Adjustment (3.4 %).

Full-Reference loss: L1 loss, L2 loss, Negative SSIM loss, Perceptual loss [86], and Illumination adjustment loss [24] are full-reference loss functions (i.e., losses that require paired images). L1 loss targets the absolute difference between image pairs, whereas L2 loss targets the squared difference. Therefore, L2 loss is rigid for large errors but tolerant for small errors, whereas L1 loss does the opposite. Like other low-level vision tasks [87], L1 loss in LLIE is more popular than L2 loss. Negative SSIM loss is based upon the negative SSIM score. Essentially, it reflects the difference between image pairs in terms of luminance, contrast, and structure. However, Negative SSIM loss is uncommon in LLIE. That is different from other low-level vision tasks like image deraining, where it has gained great popularity [88, 89, 90]. Perceptual loss is the difference between image pairs based on their features extracted from a pretrained convolutional neural network (e.g., VGG-16 [91]). It is popular in low-level vision tasks like style transfer [86, 92] but is less explored in LLIE. Illumination adjustment loss measures the difference in illumination and illumination gradients between image pairs. Due to its task-specific nature, it has only been applied in LLIE algorithms [24, 28, 34].
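
The different treatment of small and large errors by the absolute-difference (L1) and squared-difference (L2) losses can be checked numerically. The following is a minimal sketch with hypothetical function names and toy error values chosen for illustration.

```python
import numpy as np

def l1_loss(pred: np.ndarray, target: np.ndarray) -> float:
    """Mean absolute difference between a prediction and its paired reference."""
    return float(np.mean(np.abs(pred - target)))

def l2_loss(pred: np.ndarray, target: np.ndarray) -> float:
    """Mean squared difference between a prediction and its paired reference."""
    return float(np.mean((pred - target) ** 2))

target = np.zeros(4)
small_err = np.full(4, 0.01)   # every pixel off by 0.01
large_err = np.full(4, 0.5)    # every pixel off by 0.5

# How much more each loss penalizes the large error than the small one.
l1_ratio = l1_loss(large_err, target) / l1_loss(small_err, target)
l2_ratio = l2_loss(large_err, target) / l2_loss(small_err, target)
```

An error 50 times larger costs 50 times more under L1 but 2,500 times more under L2, which is the sense in which the squared loss is strict on large errors and lenient on small ones.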

Non-Reference loss: Total Variation (TV) loss [93] and illumination smoothness loss [24] are non-reference loss functions (i.e., losses that do not require paired images). TV loss measures the sum of the absolute differences between adjacent pixels in the vertical and horizontal directions of an image. Therefore, TV loss suppresses irregular patterns like noise and promotes smoothness in the image. Illumination smoothness loss is similar to TV loss since it is written as the norm of illumination divided by the maximum variation. Despite their success in other low-level vision tasks like denoising [94] and deblurring [95], variation-based methods have been less explored in LLIE.
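
A minimal sketch of an anisotropic TV loss for a single-channel image follows. The function name `tv_loss` and the test images are assumptions of this example; practical implementations often normalize by the number of pixels or use squared differences instead.

```python
import numpy as np

def tv_loss(img: np.ndarray) -> float:
    """Sum of absolute differences between vertically and horizontally adjacent pixels."""
    dh = np.abs(np.diff(img, axis=0))   # vertical neighbors
    dw = np.abs(np.diff(img, axis=1))   # horizontal neighbors
    return float(dh.sum() + dw.sum())

# Hypothetical test images: a smooth horizontal ramp and a noisy copy of it.
rng = np.random.default_rng(0)
smooth = np.tile(np.linspace(0.0, 1.0, 32), (32, 1))
noisy = smooth + rng.normal(0.0, 0.1, size=smooth.shape)

tv_smooth = tv_loss(smooth)
tv_noisy = tv_loss(noisy)
```

The ramp rises by 1.0 along each of its 32 rows and is constant along columns, so its TV is 32, while the noisy copy scores far higher. Minimizing this term therefore pushes a network toward smooth outputs without needing a reference image.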

II-E Evaluation Metrics

Many LLIE methods choose PSNR (18.8 %) or SSIM (18.1 %) as the evaluation metrics. Apart from PSNR and SSIM, the User Study (US) (8.1 %) is a popular method. Several methods use NIQE (5.4 %), Inference Time (5.4 %), #Params (4.7 %), FLOPs (4.0 %), LOE (4.0 %), or LPIPS (3.4 %) as the evaluation metrics.

Full-Reference Metrics: Peak Signal-to-Noise Ratio (PSNR), Structural Similarity Index (SSIM), and Learned Perceptual Image Patch Similarity (LPIPS) [96] are full-reference image quality evaluation metrics. PSNR measures the pixel-level similarity between image pairs, whereas SSIM measures the similarity according to luminance, contrast, and structure. LPIPS measures the patch-level difference between two images using a pretrained neural network. Higher PSNR and SSIM and lower LPIPS indicate better visual quality.
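
As a concrete example of a full-reference metric, PSNR can be computed from the mean squared error between an enhanced image and its reference. The sketch below assumes images scaled to [0, 1] (hence `max_val = 1.0`); the function name and the toy images are hypothetical.

```python
import numpy as np

def psnr(reference: np.ndarray, test: np.ndarray, max_val: float = 1.0) -> float:
    """Peak Signal-to-Noise Ratio in dB between a reference image and a test image."""
    diff = reference.astype(np.float64) - test.astype(np.float64)
    mse = np.mean(diff ** 2)
    if mse == 0:
        return float("inf")     # identical images
    return float(10.0 * np.log10(max_val ** 2 / mse))

reference = np.full((16, 16), 0.5)
psnr_coarse = psnr(reference, reference + 0.1)    # uniform error of 0.1
psnr_fine = psnr(reference, reference + 0.01)     # uniform error of 0.01
```

A uniform error of 0.1 gives an MSE of 0.01 and thus about 20 dB, while an error of 0.01 gives about 40 dB: each tenfold reduction in error adds 20 dB.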

Non-Reference Metrics: Natural Image Quality Evaluator (NIQE) [97] and Lightness Order Error (LOE) [57] are non-reference image quality evaluation metrics. Specifically, NIQE is based on the naturalness score for an image using a model trained with natural scenes, whereas LOE indicates the lightness-order errors for that image. Lower NIQE and LOE indicate better visual quality.

Subjective Metrics: User study is the only subjective metric used for representative LLIE methods. Typically, the user study score is the mean opinion score from a group of participants. A higher user study score means better perceptual quality from a human perspective.

Efficiency Metrics: Efficiency metrics include inference time, Number of Parameters (#Params), and Floating Point Operations (FLOPs). A shorter inference time and smaller #Params and FLOPs indicate better efficiency.
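
To show how #Params and operation counts are typically derived, the sketch below counts them for plain 2-D convolution layers. The three-layer network, its channel widths, and the 256 × 256 input are hypothetical and do not correspond to any surveyed method. Counting conventions also differ between papers: some report multiply-accumulate operations (MACs) as FLOPs, while others count each MAC as two FLOPs, so this sketch reports MACs.

```python
def conv2d_params(c_in: int, c_out: int, k: int, bias: bool = True) -> int:
    """Learnable parameters of a k x k convolution layer."""
    return c_out * (c_in * k * k + (1 if bias else 0))

def conv2d_macs(c_in: int, c_out: int, k: int, h_out: int, w_out: int) -> int:
    """Multiply-accumulate operations of a k x k convolution on an h_out x w_out output."""
    return c_out * c_in * k * k * h_out * w_out

# Hypothetical network: 3 -> 32 -> 32 -> 3 channels, 3 x 3 kernels, 'same' padding.
layers = [(3, 32), (32, 32), (32, 3)]
height, width = 256, 256

total_params = sum(conv2d_params(c_in, c_out, 3) for c_in, c_out in layers)
total_macs = sum(conv2d_macs(c_in, c_out, 3, height, width) for c_in, c_out in layers)
```

The parameter count is independent of image size, whereas the operation count grows with the output resolution, which is why both are usually reported together with the input size used for measurement.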

II-F Training and Testing Data

Popular benchmark training data for LLIE include LOL (20.8 %), SID (12.5 %), and MIT-Adobe FiveK (8.3 %). Alternative choices include SICE (6.2 %), ACDC (4.2 %), SDSD (4.2 %), and SMID (4.2 %). Meanwhile, many methods utilize their custom dataset (16.7 %). Popular benchmark testing data for LLIE include LOL (11.0 %) and LIME (8.0 %). Some utilize MEF (7.0 %), NPE (7.0 %), and SID (7.0 %), while others use DICM (6.0 %), MIT-Adobe FiveK (5.0 %), VV (5.0 %), ExDark (4.0 %), or SICE (4.0 %). As with the training data, many methods utilize their custom dataset (7.0 %) for testing. A detailed discussion of the training and testing datasets is given in Section IV.

| Topics | Yes (%) | No (%) |
|---|---|---|
| RGB | 81.8 | 18.2 |
| Limitations | 64.7 | 35.3 |
| New datasets | 44.1 | 55.9 |
| Retinex | 41.2 | 58.8 |
| Applications | 20.6 | 79.4 |
| Video | 11.8 | 88.2 |

II-G Others

New Dataset: The number of LLIE methods that introduce new datasets (55.9 %) surpasses the number of LLIE methods that only use existing datasets (44.1 %). This reflects the importance of data for LLIE.

Limitations: Most LLIE methods (64.7 %) do not mention their limitations and future work. This makes it hard for future researchers to improve upon their work.

Applications: Most LLIE methods (79.4 %) do not relate low-level image enhancement to high-level applications like detection or segmentation. Therefore, the practical value of these methods remains in question.

Retinex: Many methods (41.2 %) utilize Retinex theory for LLIE. However, most LLIE methods (58.8 %) do not utilize Retinex theory. Hence, Retinex theory remains a popular but non-dominant choice for LLIE.

Video: A majority of LLIE methods (88.2 %) do not consider Low-Light Video Enhancement (LLVE) tasks. Unfortunately, most real-world low-light visual data are stored in video format.

RGB: A majority of LLIE methods (81.8 %) use RGB data for training. This is a welcome trend since RGB is much more common than RAW on modern digital devices like laptops or smartphones.

| Dataset | Number | Resolution | Type | Train | Paired | Task |
|---|---|---|---|---|---|---|
| NPE [57] | 8 | Various | Real | N | N | N |
| LIME [7] | 10 | Various | Real | N | N | N |
| MEF [98] | 17 | Various | Real | N | N | N |
| DICM [50] | 64 | Various | Real | N | N | N |
| VV | 24 | Various | Real | N | N | N |
| LOL [69] | 500 | 400 × 600 | Real | Y | Y | N |
| VE-LOL [71] | 13,440 | Various | Both | B | Y | Y |
| ACDC [99] | 4,006 | 1080 × 1920 | Real | Y | N | Y |
| DCS [16] | 150 | 1024 × 2048 | Syn | N | Y | Y |
| DarkFace [100] | 6,000 | 720 × 1080 | Real | Y | N | Y |
| ExDark [2] | 7,363 | Various | Real | Y | N | Y |
| MIT [101] | 5,000 | Various | Both | Y | Y | N |
| MCR [35] | 3,944 | 1024 × 1280 | Both | Y | Y | N |
| LSRW [102] | 5,650 | Various | Real | Y | Y | N |
| TYOL [103] | 5,991 | Various | Syn | Y | Y | N |
| SID [21] | 5,094 | Various | Real | Y | Y | N |
| SDSD [31] | 37,500 | 1080 × 1920 | Real | Y | Y | N |
| SMID [104] | 22,220 | 3672 × 5496 | Real | Y | Y | N |
| SICE [5] | 4,800 | Various | Both | Y | Y | N |
| SICE_Grad | 589 | 600 × 900 | Both | Y | Y | N |
| SICE_Mix | 589 | 600 × 900 | Both | Y | Y | N |

III Datasets

III-A Benchmark Datasets

NPE [57], LIME [7], MEF [98], and DICM [50] contain 8, 10, 17, and 64 real low-light images of various resolutions, respectively. They contain indoor items and decorations, outdoor buildings, streetscapes, and natural landscapes, and they are all intended for testing.

VV (https://sites.google.com/site/vonikakis/datasets) contains 24 real multi-exposure images of various resolutions. It contains travel photos of indoor and outdoor scenes with people and natural landscapes, and it is intended for testing.

LOL [69] contains 500 pairs of real low-light images of resolution 400 × 600. It only contains indoor items and is divided into 485 training pairs and 15 testing pairs.

VE-LOL [71] contains 13,440 real and synthetic low-light images and image pairs of various resolutions. It has diversified scenes, such as natural landscapes, streetscapes, buildings, human faces, etc. The paired portion VE-LOL-L has 2,100 pairs for training and 400 pairs for testing, whereas the unpaired portion VE-LOL-H has 6,940 images for training and 4,000 for testing. Additionally, the VE-LOL-H portion contains detection labels for high-level object detection tasks.

ACDC [99] contains 4,006 real low-light images of resolution 1,080 × 1,920. It includes autonomous driving scenes with adverse conditions (1,000 foggy, 1,000 snowy, 1,000 rainy, and 1,006 nighttime) and has 19 classes. In particular, the ACDC nighttime subset contains 400 training images, 106 validation images, and 500 test images. In addition, ACDC contains semantic segmentation labels, which allow high-level semantic segmentation tasks.

DCS [16], short for Dark CityScape, contains 150 synthetic low-light images of resolution 1,024 × 2,048. Specifically, it is synthesized by applying gamma correction to the original CityScape [105] dataset, and it contains urban scenes with fine segmentation labels (30 classes). Therefore, it permits high-level instance segmentation, semantic segmentation, and panoptic segmentation tasks. The DCS dataset is intended for testing only.

DarkFace [100] contains 10,000 real low-light images of resolution 720 × 1,080. It contains nighttime streetscapes with many human faces in each image. It consists of 6,000 training and validation images and 4,000 testing images. With object detection labels, it can be applied to high-level object detection tasks.

ExDark [2] contains 7,363 real low-light images of various resolutions. It contains images with diversified indoor and outdoor scenes under 10 illumination conditions with 12 object classes. It is split into 4,800 training images and 2,563 testing images. It contains object detection labels and can be applied to high-level object detection tasks.

SICE [5] contains 4,800 real and synthetic multi-exposure images of various resolutions. It contains images with diversified indoor and outdoor scenes with different exposure levels. The train/val/test follows a 7:1:2 ratio. In particular, SICE contains both normal-exposed and ill-exposed images. Therefore, it can be used for supervised, unsupervised, and zero-shot learning.

SID [21] contains 5,094 real short-exposure images, each with a matched long-exposure reference image. The resolution is 4,240 × 2,832 for Sony images and 6,000 × 4,000 for Fuji images. The train/val/test split follows a 7:1:2 ratio. It contains indoor and outdoor images, where the illuminance of the outdoor scenes is between 0.2 lux and 5 lux, and the illuminance of the indoor scenes is between 0.03 lux and 0.3 lux.

SDSD [31] contains 37,500 real images of resolution 1,080 × 1,920. It is the first high-quality paired video dataset for dynamic scenarios, containing identical scenes and motion in high-resolution video pairs in both low- and normal-light conditions.

SMID [104] contains 22,220 real low-light images of resolution 3,672 × 5,496. The dataset is randomly divided into 3 groups: training (64 %), validation (12 %), and testing (24 %). The scenes cover various lighting setups, including light sources with different color temperatures, levels of illumination, and placements.

MIT Adobe FiveK [101] contains 5,000 real and synthetic images in diverse light conditions in various resolutions, including the RAW images taken directly from the camera and the edited versions created by 5 professional photographers. The dataset is divided into 80 % for training and 20 % for testing.

MCR [35] contains 3,944 real and synthetic short-exposure and long-exposure images of resolution 1,024 × 1,280 with monochrome and color raw pairs. The dataset is divided into train and test sets with a 9:1 ratio. The images are collected both at fixed indoor positions and on indoor/outdoor sliding platforms.

LSRW [102] contains 5,650 real low-light paired images of various resolutions with indoor and outdoor scenes. 5,600 paired images are selected for training and the remaining 50 pairs are for testing.

TYOL [103] contains 5,991 synthetic images and most images conform to VGA resolution. It splits into 2,562 training images, 1,680 test images, and 1,669 test targets. The subset of TYOL, TYO-L (Toyota Light) contains texture-mapped 3D models of 12 objects with a wide range of sizes.

Discussion: The current benchmark datasets for LLIE have the following limitations.

- Many datasets use synthetic images to meet the paired image requirement for supervised learning methods. These image synthesis techniques often rely on simple gamma correction or exposure adjustment, which does not match the diverse illuminations in the real world. Consequently, methods trained with these synthetic images generalize poorly to real-world images.

- The existing datasets consider single images rather than both images and videos. This is because high-quality low-light videos are hard to capture and many methods cannot process high-resolution video frames in real time. However, real-world applications (e.g., visual surveillance, autonomous driving, and UAV) depend heavily on videos rather than single images. Hence, the lack of low-light video datasets greatly undermines the benefits of LLIE for these fields.

- The existing datasets either consider underexposure or overexposure only, or consider underexposure and overexposure in separate images in a dataset. There is no dataset that contains mixed under-/overexposure in single images. See the detailed discussion in Subsection III-C.

III-B New Image Dataset

We synthesize two new datasets, dubbed SICE_Grad and SICE_Mix, based on the SICE [5] dataset. To obtain these two datasets, we first resize the original SICE images to a resolution of 600 × 900. After that, we obtain panels from images in SICE with the same background but different exposures. The next step differs for SICE_Grad and SICE_Mix. For SICE_Grad, we arrange the panels from low exposure to high exposure. To make it more challenging, we randomly place some normally exposed panels at the end instead of in the middle. For SICE_Mix, we permute all panels at random. Example images for the original SICE and the proposed SICE_Grad and SICE_Mix are in Fig. 7. The panel width is of the image width. The table summary for SICE_Grad and SICE_Mix is shown in Tab. III.
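The panel composition described above can be illustrated with a rough sketch. It is not the authors' released code: it assumes vertical panels of equal width and one panel per exposure level, and the helper names (`split_panels`, `compose`, `make_grad`, `make_mix`) are hypothetical. It also omits the step of moving some normally exposed panels to the end for SICE_Grad.

```python
import numpy as np

def split_panels(width, num_panels):
    """Return (start, stop) column ranges of equal-width vertical panels (assumed layout)."""
    edges = np.linspace(0, width, num_panels + 1).astype(int)
    return [(edges[i], edges[i + 1]) for i in range(num_panels)]

def compose(exposures, order):
    """Build one image whose i-th panel is cut from exposures[order[i]].

    exposures: list of H x W x C arrays of the same scene, resized to a
    common resolution (e.g., 600 x 900) and sorted from darkest to brightest.
    """
    spans = split_panels(exposures[0].shape[1], len(order))
    out = np.empty_like(exposures[0])
    for (start, stop), idx in zip(spans, order):
        out[:, start:stop] = exposures[idx][:, start:stop]
    return out

def make_grad(exposures):
    # Gradual variant: panels ordered from low to high exposure.
    return compose(exposures, list(range(len(exposures))))

def make_mix(exposures, rng):
    # Mixed variant: panels taken in a random permutation of exposures.
    return compose(exposures, list(rng.permutation(len(exposures))))
```

Because every panel is cut from the same column range of its source image, the scene content stays spatially aligned and only the exposure changes from panel to panel.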

Our SICE_Grad and SICE_Mix datasets have two distinct advantages over existing datasets. (1) SICE_Grad and SICE_Mix are synthesized by permuting the panels within the SICE dataset, which enables their use in conjunction with the SICE dataset for training supervised learning methods. Meanwhile, they are also suitable as testing datasets. (2) SICE_Grad and SICE_Mix exhibit extremely uneven exposure within individual images, a common occurrence in real-world scenarios like UAV.

III-C Exposure Analysis

We conduct an exposure analysis to study the overexposure and underexposure for benchmark datasets with paired images. Specifically, we pick DCS [16], LOL [69], VE-LOL (Syn) [71], VE-LOL (Real) [71], and SICE [5] to compare with the proposed SICE_Grad and SICE_Mix.

Fig. 8 presents our exposure analysis using pixel-to-pixel scatter plots. The horizontal axis shows pixel values of input images, and the vertical axis represents ground truth images. Key characteristics include: (1) Each color-coded curve corresponds to an image pair. (2) Uniformly concave or convex plots indicate exclusively under or over-exposed input images. (3) Plots featuring both curve types signify a mix of under and over-exposure in the dataset. (4) Individual curves with both concave and convex segments suggest mixed exposure within single images.
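One way to approximate such a pixel-to-pixel curve for an image pair is sketched below. This is an illustrative simplification, not the procedure used to draw Fig. 8: it assumes 8-bit single-channel inputs, averages the ground-truth values per input intensity, and uses the position of the curve relative to the diagonal as a stand-in for the concave/convex reading. The names `exposure_curve`, `classify_exposure`, and the tolerance `tol` are hypothetical.

```python
import numpy as np

def exposure_curve(inp, gt, bins=256):
    """Mean ground-truth value for each input intensity (NaN where unseen)."""
    inp = np.asarray(inp).ravel().astype(np.int64)
    gt = np.asarray(gt).ravel().astype(np.float64)
    counts = np.bincount(inp, minlength=bins)
    sums = np.bincount(inp, weights=gt, minlength=bins)
    curve = np.full(bins, np.nan)
    seen = counts > 0
    curve[seen] = sums[seen] / counts[seen]
    return curve

def classify_exposure(inp, gt, tol=1.0):
    """Label a pair by where its curve lies relative to the diagonal."""
    curve = exposure_curve(inp, gt)
    levels = np.arange(curve.size)
    seen = ~np.isnan(curve)
    diff = curve[seen] - levels[seen]
    under = bool(np.any(diff > tol))   # ground truth brighter than input
    over = bool(np.any(diff < -tol))   # ground truth darker than input
    if under and over:
        return "mixed"
    if under:
        return "under-exposed"
    if over:
        return "over-exposed"
    return "well-exposed"
```

A curve that lies above the diagonal means the ground truth is brighter than the input, which corresponds to an under-exposed input; a curve below the diagonal corresponds to an over-exposed input; a curve that crosses the diagonal indicates mixed exposure within a single image.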

We notice that DCS [16], LOL [69], VE-LOL (Syn) [71], and VE-LOL (Real) [71] contain under-exposed images only. SICE (low-exposure) has a majority of under-exposed images and a minority of over-exposed images, whereas SICE (high-exposure) has a majority of over-exposed images and a minority of under-exposed images. SICE_Grad and SICE_Mix are unique because they not only have over-exposed and under-exposed images across the whole dataset but also mixed overexposure and underexposure in single images. These characteristics make SICE_Grad and SICE_Mix particularly challenging for image enhancement. Our experiments in Section IV show that no current representative LLIE method achieves satisfactory results on SICE_Grad or SICE_Mix.

III-D New Video Dataset

We collect a large-scale dataset named Night Wenzhou to comprehensively analyze the performance of existing methods in real-world low-light conditions. In particular, our dataset contains aerial videos captured with a DJI Mini 2 and streetscapes captured with a GoPro HERO7 Silver. All videos are taken at nighttime in Wenzhou, Zhejiang, China, and have a frame rate of 30 FPS. The table summary for Night Wenzhou is displayed in Tab. IV. As we can see, Night Wenzhou contains 2 hours and 3 minutes of video and has a size of 26.144 GB.

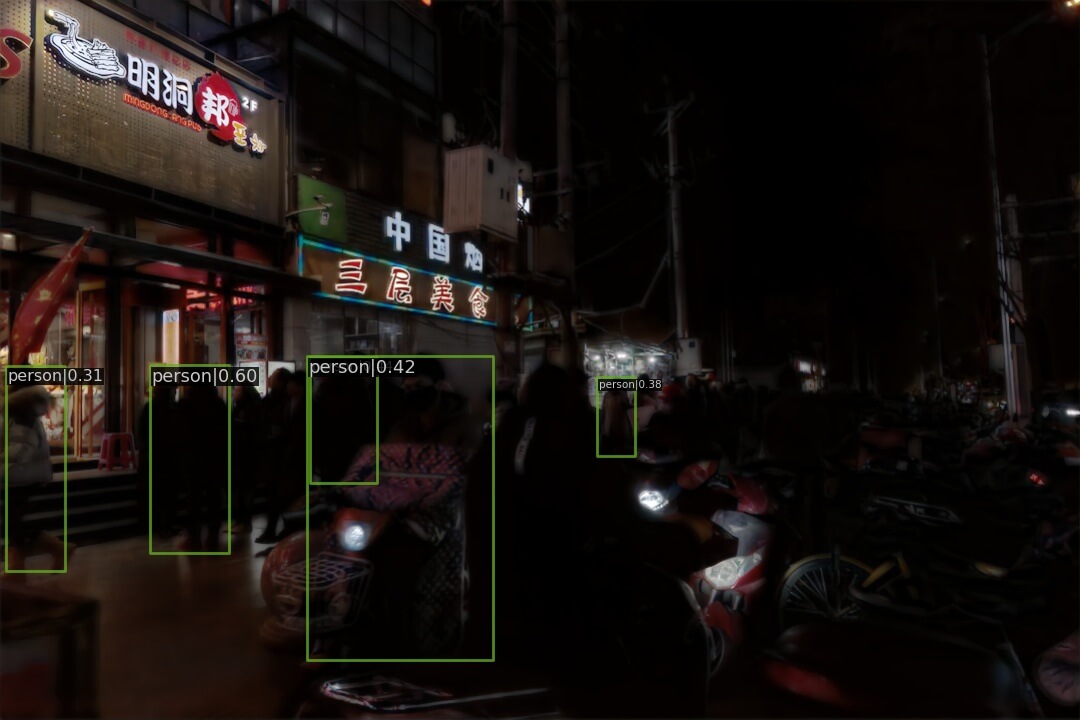

Our Night Wenzhou dataset is challenging because (1) it contains large-scale high-resolution videos of diverse illumination conditions (e.g., extremely dark, underexposure, moonlight, uneven illumination, etc.), and (2) it features various degradations (e.g., noise, blur, shadows, artifacts) commonly seen in real-world applications like autonomous driving. Our Night Wenzhou dataset can be used to train unsupervised and zero-shot learning methods and to test LLIE methods with any learning strategy. Sample images for our Night Wenzhou dataset are in Fig. 9.

| Device | Resolution (H × W) | Duration (h:m:s) | Size (GB) |
|---|---|---|---|
| GoPro | 1440 × 1920 | 0:09:08 | 1.871 |
| GoPro | 1440 × 1920 | 0:13:04 | 2.675 |
| GoPro | 1440 × 1920 | 0:17:41 | 3.727 |
| GoPro | 1440 × 1920 | 0:17:40 | 3.727 |
| GoPro | 1440 × 1920 | 0:17:43 | 3.727 |
| GoPro | 1440 × 1920 | 0:11:15 | 2.316 |
| GoPro | 1440 × 1920 | 0:05:14 | 1.029 |
| GoPro | 1440 × 1920 | 0:17:57 | 3.727 |
| GoPro | 1440 × 1920 | 0:02:25 | 0.526 |
| GoPro Total | | 1:52:07 | 23.325 |
| DJI | 1530 × 2720 | 0:01:47 | 0.500 |
| DJI | 1530 × 2720 | 0:00:27 | 0.126 |
| DJI | 1530 × 2720 | 0:00:42 | 0.198 |
| DJI | 1530 × 2720 | 0:00:42 | 0.177 |
| DJI | 1530 × 2720 | 0:00:27 | 0.127 |
| DJI | 1530 × 2720 | 0:06:21 | 1.604 |
| DJI | 1530 × 2720 | 0:00:27 | 0.087 |
| DJI Total | | 0:10:53 | 2.819 |
| Total | | 2:03:00 | 26.144 |
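The totals in the table can be cross-checked with a few lines of arithmetic. The sketch below sums the per-clip durations and derives an approximate frame count, assuming a constant 30 FPS with no dropped frames; the frame count is a derived estimate rather than a figure reported for the dataset, and the helper names are hypothetical.

```python
def to_seconds(hms):
    """Convert an 'h:m:s' string to seconds."""
    h, m, s = (int(part) for part in hms.split(":"))
    return 3600 * h + 60 * m + s

def to_hms(seconds):
    """Convert seconds back to an 'h:mm:ss' string."""
    return f"{seconds // 3600}:{seconds % 3600 // 60:02d}:{seconds % 60:02d}"

# Per-clip durations copied from the table above.
gopro = ["0:09:08", "0:13:04", "0:17:41", "0:17:40", "0:17:43",
         "0:11:15", "0:05:14", "0:17:57", "0:02:25"]
dji = ["0:01:47", "0:00:27", "0:00:42", "0:00:42", "0:00:27",
       "0:06:21", "0:00:27"]

gopro_seconds = sum(to_seconds(d) for d in gopro)
dji_seconds = sum(to_seconds(d) for d in dji)
total_seconds = gopro_seconds + dji_seconds

FPS = 30  # frame rate stated for all videos
approx_frames = total_seconds * FPS  # assumes no dropped frames
```

Under these assumptions the clips sum to 7,380 seconds (2:03:00), which corresponds to roughly 221,400 frames.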

IV Evaluations

IV-A Quantitative Comparisons

In this subsection, we use full-reference metrics including PSNR, SSIM, and LPIPS [106], and no-reference metrics including UNIQUE [107], BRISQUE [108], and SPAQ [109]. Note that some entries are ‘-’ due to out-of-memory errors during inference.
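For readers unfamiliar with the full-reference metrics, the standard PSNR definition can be sketched as follows. This is a generic textbook formulation for 8-bit images and may differ in detail (e.g., color space or value range) from the evaluation code used for the tables; the function name `psnr` is our own.

```python
import numpy as np

def psnr(reference, enhanced, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two same-sized images."""
    ref = np.asarray(reference, dtype=np.float64)
    out = np.asarray(enhanced, dtype=np.float64)
    mse = np.mean((ref - out) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return 10.0 * np.log10(max_value ** 2 / mse)
```

A higher PSNR means the enhanced image is closer to the ground truth in the mean-squared-error sense.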

Results on Synthetic Datasets: Tab. V shows the quantitative comparison on LOL [69], DCS [16], VE-LOL [71], SICE_Mix, and SICE_Grad. We observe that LLFlow [32] demonstrates superior performance: it achieves the best PSNR, SSIM, and LPIPS on LOL [69] and VE-LOL (Real) [71], and the best PSNR and SSIM on DCS [16]. KinD++ [28] exhibits excellent performance with the best PSNR, SSIM, and LPIPS on VE-LOL (Syn) and SICE_Mix, and the best PSNR and LPIPS on SICE_Grad. Furthermore, Zero-DCE [12] has the best LPIPS on DCS [16], whereas KinD [24] has the best SSIM on SICE_Grad. No other method achieves the best score on any metric.

Results on Simple Real-World Datasets: Tab. VI displays the quantitative comparison on NPE [57], LIME [7], MEF [98], DICM [50], and VV. We note that the competition in Tab. VI is much more intense than in Tab. V. The only method with 4 best scores is Zero-DCE [12], whereas the only method with 3 best scores is KinD++ [28]. The methods with 2 best scores are RetinexNet [69] and SGZ [16]. Furthermore, the methods with only 1 best score include KinD [24], LLFlow [32], URetinexNet [34], and SCI [9]. RUAS [14] is the only method that does not perform the best on any metric.

Results on Complex Real-World Datasets: Tab. VII presents the quantitative comparison on DarkFace [100] and ExDark [2]. We notice that RUAS [14] earns the best UNIQUE and BRISQUE on DarkFace [100], whereas RetinexNet [69] scores the best SPAQ on DarkFace [100] and ExDark [2]. Additionally, LLFlow [32] obtains the best UNIQUE on ExDark [2].

Results for Model Efficiency: Tab. VIII presents the quantitative comparison of model efficiency. We choose ACDC [99] as the benchmark for efficiency comparison since it contains images of 2K resolution (i.e., 1080 × 1920), which is closer to real-world applications such as autonomous driving, UAV, and photography. SGZ [16] obtains the best FLOPs and inference time, whereas SCI [9] has the fewest parameters. It is also worth mentioning that Zero-DCE [12], RUAS [14], SGZ [16], and SCI [9] achieve real-time processing on a single GPU.

Results for Semantic Segmentation: Tab. IX shows the quantitative comparison of semantic segmentation. For both ACDC [99] and DCS [16], we feed the enhanced image into a semantic segmentation model named PSPNet [110] and calculate the mPA and mIoU score with the default thresholds. On ACDC [99], LLFlow [32] leads to the best mPA, whereas SCI [9] results in the best mIoU. On DCS [16], SGZ [16] contributes to the best mPA and mIoU.
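The two segmentation metrics can be computed from a class confusion matrix, as in the sketch below. It follows the common definitions of mean pixel accuracy and mean intersection over union and is not the exact evaluation script behind Tab. IX; in particular, it does not handle ignore labels, and the function names are our own.

```python
import numpy as np

def confusion_matrix(gt, pred, num_classes):
    """Rows index ground-truth classes, columns index predicted classes."""
    gt = np.asarray(gt).ravel().astype(np.int64)
    pred = np.asarray(pred).ravel().astype(np.int64)
    idx = gt * num_classes + pred
    counts = np.bincount(idx, minlength=num_classes ** 2)
    return counts.reshape(num_classes, num_classes)

def mpa_miou(cm):
    """Return (mean pixel accuracy, mean IoU), skipping classes that never occur."""
    tp = np.diag(cm).astype(np.float64)
    gt_total = cm.sum(axis=1)
    pred_total = cm.sum(axis=0)
    union = gt_total + pred_total - tp
    with np.errstate(divide="ignore", invalid="ignore"):
        pixel_acc = tp / gt_total   # per-class pixel accuracy
        iou = tp / union            # per-class intersection over union
    return float(np.nanmean(pixel_acc)), float(np.nanmean(iou))
```

For a toy example with ground truth `[0, 0, 1, 1]` and prediction `[0, 1, 1, 1]`, the per-class accuracies are 0.5 and 1.0 (mPA 0.75) and the per-class IoUs are 1/2 and 2/3 (mIoU about 0.583).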

Results for Object Detection: Tab. X displays the quantitative comparison of object detection. In particular, we feed the enhanced image into a face detection model named DSFD [111] and calculate the IoU score with different IoU thresholds (0.5, 0.6, and 0.7). It is shown that LLFlow [32] yields the best IoU with all given thresholds.
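The role of the IoU threshold can be illustrated with a small sketch: a predicted box counts as a match only if its overlap with a ground-truth box reaches the threshold. The code assumes axis-aligned boxes in `(x1, y1, x2, y2)` format; the names `box_iou` and `is_match` are hypothetical, and the sketch does not reproduce how the scores in Tab. X are aggregated.

```python
def box_iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def is_match(pred_box, gt_box, threshold):
    """A detection is counted as correct if its IoU reaches the threshold."""
    return box_iou(pred_box, gt_box) >= threshold
```

For instance, a 10 × 10 prediction shifted horizontally by 2 pixels from its ground-truth box has an IoU of 80/120 (about 0.667), so it matches at thresholds 0.5 and 0.6 but not at 0.7. This is why scores drop as the threshold becomes stricter.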

| Method | LOL [69] PSNR | LOL [69] SSIM | LOL [69] LPIPS | DCS [16] PSNR | DCS [16] SSIM | DCS [16] LPIPS | VE-LOL (Syn) [71] PSNR | VE-LOL (Syn) [71] SSIM | VE-LOL (Syn) [71] LPIPS | VE-LOL (Real) [71] PSNR | VE-LOL (Real) [71] SSIM | VE-LOL (Real) [71] LPIPS | SICE_Mix PSNR | SICE_Mix SSIM | SICE_Mix LPIPS | SICE_Grad PSNR | SICE_Grad SSIM | SICE_Grad LPIPS |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| RetinexNet [69] | 17.559 | 0.645 | 0.381 | - | - | - | 15.606 | 0.449 | 0.769 | 17.676 | 0.642 | 0.441 | 12.397 | 0.606 | 0.407 | 12.450 | 0.619 | 0.364 |
| KinD [24] | 15.867 | 0.637 | 0.341 | 13.145 | 0.720 | 0.304 | 16.259 | 0.591 | 0.432 | 20.588 | 0.818 | 0.143 | 12.986 | 0.656 | 0.346 | 13.144 | 0.668 | 0.302 |
| Zero-DCE [12] | 14.861 | 0.562 | 0.330 | 16.224 | 0.849 | 0.172 | 14.071 | 0.369 | 0.652 | 18.059 | 0.580 | 0.308 | 12.428 | 0.633 | 0.362 | 12.475 | 0.644 | 0.314 |
| KinD++ [28] | 15.724 | 0.621 | 0.363 | - | - | - | 16.523 | 0.613 | 0.411 | 17.660 | 0.761 | 0.218 | 13.196 | 0.657 | 0.334 | 13.235 | 0.666 | 0.295 |
| RUAS [14] | 11.309 | 0.435 | 0.377 | 11.601 | 0.412 | 0.449 | 12.386 | 0.357 | 0.642 | 13.975 | 0.469 | 0.329 | 8.684 | 0.493 | 0.525 | 8.628 | 0.494 | 0.499 |
| SGZ [16] | 15.345 | 0.573 | 0.334 | 16.369 | 0.854 | 0.204 | 13.830 | 0.385 | 0.664 | 18.582 | 0.584 | 0.309 | 10.866 | 0.607 | 0.415 | 10.987 | 0.621 | 0.364 |
| LLFlow [32] | 19.341 | 0.839 | 0.142 | 20.385 | 0.897 | 0.240 | 15.440 | 0.476 | 0.517 | 24.152 | 0.895 | 0.098 | 12.737 | 0.617 | 0.388 | 12.737 | 0.617 | 0.388 |
| URetinexNet [34] | 17.278 | 0.688 | 0.302 | 16.009 | 0.755 | 0.369 | 15.273 | 0.466 | 0.591 | 21.093 | 0.858 | 0.103 | 10.903 | 0.600 | 0.402 | 10.894 | 0.610 | 0.356 |
| SCI [9] | 14.784 | 0.525 | 0.333 | 14.264 | 0.689 | 0.249 | 12.542 | 0.373 | 0.681 | 17.304 | 0.540 | 0.307 | 8.644 | 0.529 | 0.511 | 8.559 | 0.532 | 0.484 |

| Method | NPE [57] UNI | NPE [57] BRI | NPE [57] SPAQ | LIME [7] UNI | LIME [7] BRI | LIME [7] SPAQ | MEF [98] UNI | MEF [98] BRI | MEF [98] SPAQ | DICM [50] UNI | DICM [50] BRI | DICM [50] SPAQ | VV UNI | VV BRI | VV SPAQ |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| RetinexNet [69] | 0.801 | 16.533 | 71.264 | 0.787 | 24.310 | 70.468 | 0.742 | 14.583 | 69.333 | 0.778 | 22.877 | 62.550 | - | - | - |
| KinD [24] | 0.792 | 20.239 | 70.444 | 0.766 | 39.783 | 67.180 | 0.747 | 32.019 | 63.266 | 0.776 | 33.092 | 59.946 | 0.814 | 29.439 | 61.453 |
| Zero-DCE [12] | 0.814 | 17.456 | 72.945 | 0.811 | 20.437 | 67.736 | 0.762 | 17.321 | 66.864 | 0.777 | 27.560 | 57.402 | 0.836 | 34.656 | 60.716 |
| KinD++ [28] | 0.801 | 19.507 | 71.742 | 0.748 | 19.954 | 73.414 | 0.732 | 27.781 | 67.831 | 0.774 | 27.573 | 62.744 | - | - | - |
| RUAS [14] | 0.706 | 47.852 | 61.598 | 0.783 | 27.589 | 62.076 | 0.713 | 23.677 | 60.701 | 0.710 | 38.747 | 47.781 | 0.770 | 38.370 | 47.443 |
| SGZ [16] | 0.783 | 14.615 | 72.367 | 0.789 | 20.046 | 67.735 | 0.755 | 14.463 | 66.134 | 0.777 | 25.646 | 55.934 | 0.824 | 31.402 | 58.789 |
| LLFlow [32] | 0.791 | 28.861 | 67.926 | 0.805 | 27.060 | 66.816 | 0.710 | 30.267 | 67.019 | 0.807 | 26.361 | 61.132 | 0.800 | 31.673 | 61.252 |
| URetinexNet [34] | 0.737 | 25.570 | 70.066 | 0.816 | 24.222 | 67.423 | 0.715 | 22.346 | 66.310 | 0.765 | 26.453 | 59.856 | 0.801 | 30.085 | 55.399 |
| SCI [9] | 0.702 | 28.948 | 64.054 | 0.747 | 23.344 | 64.574 | 0.733 | 15.335 | 64.616 | 0.720 | 31.263 | 48.506 | 0.779 | 26.132 | 48.667 |

| Method | DarkFace [100] UNI | DarkFace [100] BRI | DarkFace [100] SPAQ | ExDark [2] UNI | ExDark [2] SPAQ |
|---|---|---|---|---|---|
| RetinexNet [69] | 0.737 | 18.574 | 54.966 | 0.708 | 66.330 |
| KinD [24] | 0.737 | 48.311 | 41.070 | 0.728 | 55.690 |
| Zero-DCE [12] | 0.720 | 26.194 | 47.868 | 0.729 | 52.700 |
| KinD++ [28] | 0.719 | 32.492 | 52.905 | 0.723 | 61.036 |
| RUAS [14] | 0.740 | 13.770 | 42.329 | 0.712 | 47.785 |
| SGZ [16] | 0.713 | 24.647 | 47.392 | 0.729 | 51.236 |
| LLFlow [32] | 0.708 | 22.284 | 51.544 | 0.735 | 56.116 |
| URetinexNet [34] | 0.739 | 15.148 | 51.290 | 0.722 | 57.291 |
| SCI [9] | 0.719 | 19.511 | 46.046 | 0.709 | 50.618 |

| Method | FLOPs | #Params | Time |
|---|---|---|---|
| RetinexNet [69] | - | 0.5550 | - |
| KinD [24] | 1103.9117 | 8.1600 | 3.5288 |
| Zero-DCE [12] | 164.2291 | 0.0794 | 0.0281 |
| KinD++ [28] | - | 8.2750 | - |
| RUAS [14] | 6.7745 | 0.0034 | 0.0280 |
| SGZ [16] | 0.2135 | 0.0106 | 0.0026 |
| LLFlow [32] | 892.7097 | 1.7014 | 0.3926 |
| URetinexNet [34] | 1801.4110 | 0.3401 | 0.2934 |
| SCI [9] | 0.7465 | 0.0003 | 0.0058 |

| Method (ACDC [99]) | mPA | mIoU | Method (DCS [16]) | mPA | mIoU |
|---|---|---|---|---|---|
| KinD [24] | 60.79 | 49.18 | PIE [6] | 68.89 | 61.97 |
| Zero-DCE [12] | 59.00 | 49.51 | RetinexNet [69] | 66.76 | 57.96 |
| RUAS [14] | 50.42 | 44.48 | MBLLEN [18] | 59.06 | 51.98 |
| SGZ [16] | 61.65 | 49.50 | KinD [24] | 71.69 | 63.42 |
| LLFlow [32] | 62.68 | 49.30 | Zero-DCE [12] | 74.20 | 64.36 |
| URetinexNet [34] | 62.32 | 48.71 | Zero-DCE++ [13] | 74.43 | 65.51 |
| SCI [9] | 57.52 | 49.66 | SGZ [16] | 74.50 | 65.87 |

| Learning | Method | IoU threshold 0.5 | IoU threshold 0.6 | IoU threshold 0.7 |
|---|---|---|---|---|
| TL | LIME [7] | 0.244 | 0.083 | 0.010 |
| SL | LLNet [17] | 0.228 | 0.063 | 0.006 |
| SL | LightenNet [19] | 0.270 | 0.085 | 0.011 |
| SL | MBLLEN [18] | 0.269 | 0.092 | 0.012 |
| SL | KinD [24] | 0.255 | 0.081 | 0.010 |
| SL | KinD++ [28] | 0.271 | 0.090 | 0.011 |
| SL | URetinexNet [34] | 0.283 | 0.101 | 0.015 |
| SL | LLFlow [32] | 0.290 | 0.103 | 0.016 |
| UL | EnlightenGAN [8] | 0.261 | 0.088 | 0.012 |
| ZSL | ExCNet [11] | 0.276 | 0.092 | 0.010 |
| ZSL | Zero-DCE [12] | 0.281 | 0.092 | 0.013 |
| ZSL | Zero-DCE++ [13] | 0.278 | 0.090 | 0.012 |
| ZSL | SGZ [16] | 0.279 | 0.092 | 0.012 |

IV-B User Studies

Since there are few effective metrics to evaluate the visual quality of low-light video enhancement, we conduct a user study to assess the performance of different methods on the proposed Night Wenzhou dataset. Specifically, we ask 100 adult participants to watch the enhancement results of 7 models, including EnlightenGAN [8], KinD [24], KinD++ [28], MBLLEN [18], RetinexNet [69], SGZ [16], and Zero-DCE [12]. They are asked to give each method a score from ‘1’ to ‘5’, where ‘1’ indicates the worst performance and ‘5’ indicates the best.

We show a stacked bar graph in Fig. 10 with the category-wise information for different methods. It can be seen that RetinexNet [69] receives the largest share (37 %) of ‘1’s; Zero-DCE [12] receives the largest share (33 %) of ‘2’s; EnlightenGAN [8] receives the largest share (40 %) of ‘3’s; MBLLEN [18] receives the largest share (45 %) of ‘4’s; and SGZ [16] receives the largest share (39 %) of ‘5’s. Therefore, RetinexNet [69] is voted to have the worst performance, whereas SGZ [16] is voted to have the best performance.

IV-C Qualitative Comparisons

Results on the LOL Dataset: Fig. 11 presents the qualitative comparison on an image from the LOL [69] dataset. Our findings are as follows: 1) RUAS [14] produces an over-exposed result. 2) MBLLEN [18] over-smooths the image. 3) PIE [6], LIME [7], Zero-DCE [12], SGZ [16], and SCI [9] yield noise. 4) The results of KinD [24], LLFlow [32], and URetinexNet [34] are close to the GT.

Results on the VV Dataset: Fig. 12 shows the qualitative comparison for an image from the VV dataset. Our findings are as follows: 1) RUAS [14] produces under-exposed trees. 2) RUAS [14], SCI [9], and URetinexNet [34] render over-exposed skies. 3) LIME [7], Zero-DCE [12], and LLFlow [32] generate artifacts. 4) MBLLEN [18] oversmooths the image. 5) LLFlow [32] yields color distortion. 6) PIE [6], KinD [24], and SGZ [16] have promising perceptual quality.

Results on the SICE_Grad and SICE_Mix Dataset: Fig. 13 and Fig. 14 display the qualitative comparison for an image from our SICE_Grad dataset and the SICE_Mix dataset, respectively. We find that no method yields a faithful result on SICE_Grad or SICE_Mix. In particular, most methods successfully enhance the under-exposed regions but make the over-exposed regions even brighter. The lack of contrast from the homogeneous over-exposure makes it hard to distinguish any detail in these enhanced regions.

Results on the DarkFace Dataset: Fig. 15 shows the qualitative comparison (w/ object detection) for an image from the DarkFace dataset [100]. In particular, the bounding box in the figure is annotated with the predicted class and probability. Our findings are as follows: 1) KinD [24], Zero-DCE [12], RUAS [14], SGZ [16], and SCI [9] produce under-exposed images, especially in the right half. Therefore, many objects in their enhanced images are not detected. 2) KinD [24] produces an oversmoothed result, which is why the object detector is far off target in its enhanced image. 3) KinD++ [28], URetinexNet [34], and LLFlow [32] perform well in terms of image enhancement. However, both KinD++ [28] and URetinexNet [34] yield artifacts, which is why LLFlow’s [32] enhancement yields better object detection results.

Results on the DCS Dataset: Fig. 16 displays the qualitative comparison (w/ semantic segmentation) for an image from the DCS dataset [16]. Particularly, different colors are used to distinguish pixels from different predicted categories. Our findings are as follows: 1) RetinexNet [69], MBLLEN [18], and KinD [24] cause large areas of incorrect segmentation on pedestrians and sidewalks. 2) Zero-DCE [12] and SGZ [16] are close to the GT. However, SGZ [16] results in better segmentation of the objects in the distance.

Results on the Night Wenzhou Dataset: Fig. 17 presents the qualitative comparison for a video frame from our Night Wenzhou dataset. Our findings are as follows: 1) RetinexNet [69], KinD [24], and Zero-DCE [12] produce images with poor contrast, extreme color deviation, oversmoothed details, and significant noise, blur, and artifacts. 2) EnlightenGAN [8] produces over-exposed images. 3) MBLLEN [18], KinD++ [28], and SGZ [16] produce images with good exposure. However, MBLLEN [18] oversmooths the details, whereas KinD++ [28] generates artifacts and has more color deviation than SGZ [16].

V Applications

V-A Visual Surveillance

Visual surveillance systems have to operate 24 hours per day to capture all the essential information. However, in low-light conditions like night or dusk, it is challenging for visual surveillance systems to collect images or videos with good contrast and sufficient details [40]. This may lead to a failure to record illegal or criminal acts, or to identify the party responsible for a specific accident. Over the past years, Kinect depth map [112], enhanced CNN-enabled learning [113], and SVD-DWT [114] have been used to address the visual surveillance challenges in low-light conditions.

V-B Autonomous Driving

Real-world autonomous driving often has to operate at nighttime in low-light conditions. Autonomous vehicles perform poorly in low-light conditions because their visual recognition algorithms are trained with visual data captured in good illumination conditions (e.g., a sunny day). For example, an autonomous vehicle may fail to recognize (and possibly collide with) a moving pedestrian who is wearing black pants and a black jacket on a road without street lights. Recently, Retinex-Net [20], LE-Net [41], and SFNet-N [3] have been developed to improve the recognition performance of autonomous vehicles in low-light conditions.

V-C Unmanned Aerial Vehicle

Unmanned Aerial Vehicles (UAVs) are often used in aerial photography and military reconnaissance. Both tasks are usually performed in low-light conditions (e.g., dawn, dusk, or night) for better framing or concealment. For the same reason as in autonomous driving, UAVs perform poorly in these low-light conditions. For instance, a UAV flying in low-light conditions may collide with a non-luminous building or a tree with dark leaves. Recently, HighlightNet [115], LighterGAN [116], LLNet [17], and the IOU-predictor network [117] have been developed to improve the recognition performance of UAVs in low-light conditions.

V-D Photography

Photography is often done at dawn and dusk, because the light during these periods is gentler and can help emphasize the shapes of objects. However, the low-light conditions at dawn and dusk significantly degrade the quality of images captured during these periods. In particular, a higher ISO and a longer exposure time will not only enhance the image brightness but also introduce noise, blur, and artifacts [43]. Recent methods like MorphNet [118], RNN [119], and a learning-based auto white balancing algorithm [120] have been shown to be helpful for photography in low-light conditions.

V-E Remote Sensing

Remote sensing operates in all weather conditions and is often used for climate change detection, urban 3D modeling, and global surface monitoring [44]. However, low-light conditions make remote sensing challenging, since object visibility is degraded, especially at the long distances from which remote satellites observe. For example, it is difficult for remote sensing satellites to accurately detect the intensity shift of human activities in regions with few artificial light sources. Methods like RICNN [21], SR [121], and RSCNN [122] can help improve the quality of remote sensing images, which boosts their interpretability.

V-F Microscopic Imaging

Microscopic imaging has been widely applied to automatic cell segmentation, fluorescence labeling tracking, and high-throughput experiments [45]. However, microscopic imaging is challenging in low-light conditions due to the poor visibility of micro-level details. For instance, it is difficult to identify cell structures with a microscope under low-light conditions. VELM [123], SalienceNet [124], and the CARE network [125] can be applied to improve the visibility outcomes of optical microscopy and the video documentation of organelle motion processes in low-light conditions.

V-G Underwater Imaging

Underwater imaging often occurs in low-light conditions, since the lighting in deep water is very weak. This is a challenge for underwater imaging, since the visibility in these conditions is very poor, especially for objects in the far distance [46]. For example, with existing camera technology, it is difficult to clearly capture the details of coral reefs 30 meters below sea level [126]. L2UWE [127], UIE-Net [128], and WaterGAN [129] have been proven useful for underwater imaging in low-light conditions.

VI Future Prospects

VI-A Uneven Exposure

In the Low-Light Image Enhancement (LLIE) domain, addressing uneven exposure remains a pressing issue. While current strategies proficiently brighten under-exposed regions, they tend to over-amplify already bright areas. The ideal LLIE technique would concurrently amplify dim sections and reduce brightness in over-illuminated zones. Future investigations could chart a promising course by creating a comprehensive real-world dataset, spotlighting images with both over- and under-exposure. Our contributions with SICE_Grad and SICE_Mix are initial steps in this direction, but there’s a pressing need for more encompassing datasets.

Moving forward, frameworks such as the Laplacian Pyramid, exemplified in DSLR [130], or the multi-branch fusion approach from TBEFN [131], can help capture exposure variations at multiple scales. Additionally, the emerging realm of vision transformers, notably highlighted in [78], offers a unique perspective with their superior capacity to model global exposure compared to traditional CNNs. The preliminary work of IAT [39] in this space underscores potential avenues for refining techniques to model intricate exposure scenarios.

VI-B Preserving and Utilizing Semantic Information

Brightening low-light images without sacrificing their semantic information is a pivotal challenge in low-light image enhancement (LLIE). This careful balance affects both human interpretation and the performance of high-level algorithms. The significance of semantic information is evident in Yang et al.’s work [132], where semantic priors aid in navigation tasks. Similarly, Xie et al. [133] underscore the potential mishaps in domain adaptation when semantic details are overlooked. SAPNet [90] combines image processing with semantic segmentation, emphasizing the importance of retaining semantic details.

Going forward, we envision the development of datasets enriched with semantic annotations to guide LLIE models. Additionally, the integration of segmentation techniques with LLIE promises images that are both luminous and semantically robust. The convergence of these strategies could pave the way for the next generation of LLIE solutions.

VI-C Low-Light Video Enhancement

Low-light video enhancement (LLVE) presents unique challenges not found in image enhancement. Real-time processing is pivotal for videos, but many existing methods struggle to meet this requirement. Even when real-time requirements are met, these methods can introduce temporal inconsistencies such as flickering artifacts [1]. Chen et al. [104] ventured into extreme low-light video enhancement, underscoring the challenge of obtaining ground truth for dynamic scenes. Triantafyllidou et al. [134] proposed a synthetic approach that creates dynamic video pairs to mitigate data collection hurdles. Jiang et al. [135] focused on temporal consistency, but their solution relies on specific preconditions or synthetic datasets. Zhang et al. [29] explored inferring motion from single images to remedy temporal inconsistencies, an indication that ensuring stability across diverse scenes remains difficult.

In essence, while advancements address aspects of temporal consistency, achieving it robustly in varied scenes remains an open challenge. The focus should shift toward enabling real-time processing, capturing diverse scene intricacies, and managing motion-related complexities. Future strategies might also benefit from lightweight architectures [136] and more comprehensive low-light video datasets.
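
As a rough illustration of how the flickering artifacts mentioned above might be quantified, the sketch below measures the average change in mean luminance between consecutive frames. This is a crude proxy introduced here for illustration, not a metric taken from the cited works: it ignores motion and will also respond to legitimate scene changes. Frames are assumed to be flat lists of grayscale values, and `flicker_score` is a hypothetical name.

```python
def mean_luminance(frame):
    """Average intensity of one frame given as a flat list of pixel values."""
    return sum(frame) / len(frame)


def flicker_score(frames):
    """Mean absolute change in average luminance between consecutive frames.

    0.0 means global brightness is perfectly stable over time; larger
    values indicate stronger frame-to-frame brightness fluctuation.
    """
    if len(frames) < 2:
        raise ValueError("need at least two frames")
    means = [mean_luminance(f) for f in frames]
    diffs = [abs(b - a) for a, b in zip(means, means[1:])]
    return sum(diffs) / len(diffs)


# Enhancing each frame independently can yield unstable global brightness.
stable_video = [[100.0] * 4, [100.0] * 4, [100.0] * 4]
flickering_video = [[100.0] * 4, [120.0] * 4, [100.0] * 4]
```

A practical measure would typically compensate for motion first, for example by warping frames with estimated optical flow before comparing them.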

VI-D Benchmark Datasets

At present, there is no universally accepted benchmark dataset for LLIE. This poses challenges on two fronts. First, many LLIE models are trained on proprietary datasets in a supervised way, which can lead to domain-specific solutions that struggle to generalize. Second, testing on custom datasets can introduce bias, because such datasets may be overly tailored to a specific method and therefore do not offer a level playing field for comparisons.

Drawing inspiration from the success of CityScapes [105] and BDD100k [137], a reliable benchmark should include diverse real-world images and videos, ensure a balanced train-test split, and come with comprehensive annotations. Importantly for LLIE, this dataset should also span a wide range of lighting conditions and exposures to truly test the robustness and versatility of enhancement methods.

VI-E Better Evaluation Metrics

Current evaluation metrics for LLIE, such as PSNR and SSIM, often fail to capture the nuances important for human perceptual judgments. As a result, many researchers turn to user studies, which provide a more realistic assessment of image quality but are expensive and time-consuming. Deep learning-based metrics, harnessing the advanced perceptual capabilities of architectures like CNNs or transformers, offer an efficient alternative.

For instance, transformer-based metrics such as MUSIQ [138] and MANIQA [139], and CNN-based metrics like HyperIQA [140] and KonIQ++ [141], suggest a path forward. Future work should aim to develop metrics of this kind that align with human judgments while remaining efficient and scalable for LLIE evaluation.
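
The limitation of pixel-wise metrics can be seen in a small example. The sketch below implements the standard PSNR definition, 10 log10(MAX^2 / MSE), in plain Python and applies it to two toy distortions constructed here for illustration: a uniform brightness shift and a stronger error confined to a few pixels. The toy images and variable names are assumptions, not data from the experiments in this survey.

```python
import math


def mse(reference, distorted):
    """Mean squared error between two equally sized flat pixel lists."""
    if len(reference) != len(distorted):
        raise ValueError("images must have the same number of pixels")
    return sum((r - d) ** 2 for r, d in zip(reference, distorted)) / len(reference)


def psnr(reference, distorted, max_value=255.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(max_value^2 / MSE)."""
    error = mse(reference, distorted)
    if error == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / error)


reference = [100.0] * 16
# Every pixel is off by 10 gray levels (MSE = 100).
uniform_shift = [p + 10.0 for p in reference]
# Only the first 4 pixels are off, but by 20 gray levels (MSE = 100).
local_damage = [p + 20.0 if i < 4 else p for i, p in enumerate(reference)]

psnr_uniform = psnr(reference, uniform_shift)
psnr_local = psnr(reference, local_damage)
```

Both distortions have the same mean squared error and therefore the same PSNR, even though a viewer may judge a mild global shift and a concentrated local artifact quite differently. This is the kind of gap that learned perceptual metrics aim to close.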

VI-F Low-Light and Adverse Weather

Vision in joint adverse weather and low-light conditions is inherently challenging. When combined, these conditions introduce unique complexities, as the effects of limited lighting intertwine with disturbances from bad weather such as rain, snow, and haze. A few pioneering works have ventured into this domain. Li et al. [142] propose a fast haze removal method for nighttime images, leveraging a novel maximum reflectance prior to estimate ambient illumination and restore clear images. ForkGAN [143] introduces a fork-shaped generator for task-agnostic image translation, enhancing multiple vision tasks in rainy night scenes without explicit supervision.

Current challenges in this field encompass handling multiple degradations at once, the lack of diverse datasets for all conditions, and the need for real-time processing, especially in applications like autonomous driving. Future research is likely to focus on developing versatile models, enriching datasets to reflect real-world adversities, fine-tuning network structures, and using domain adaptation to bridge knowledge across varying conditions.

VI-G Near-Infrared (NIR) Light Techniques

Emerging methods underscore the transformative role of NIR in low-light image enhancement. Wang et al. [144] utilize a dual-camera system combining conventional RGB and near-infrared/near-ultraviolet captures to produce low-noise images without visible flash disturbances. Wan et al. [145] employ NIR enlightened guidance and disentanglement to refine low-light images by separating structure and color components.

Despite these innovations, challenges remain: seamless hardware integration for synchronized RGB-NIR capture, precise image fusion without quality compromise, and the development of robust dual-spectrum datasets. Looking forward, research should optimize camera configurations, advance fusion algorithms, and curate comprehensive datasets. Addressing these can unlock NIR’s full potential in low-light imaging.

VI-H Noise Distribution Modeling

Recent work on noise distribution modeling has made significant strides in low-light denoising. Wei et al. [146] present a model that emulates the real noise from CMOS photosensors, focusing on synthesizing more realistic training samples. Feng et al. [147] respond to the learnability constraints of limited real data, introducing shot noise augmentation and dark shading correction. Lastly, Monakhova et al. [148] push the boundaries with a GAN-tuned physics-based noise model, capturing photorealistic video under starlight.

However, the contemporary domain still grapples with refining noise models, enhancing the richness of training datasets, and managing the complexities of noise distributions in varied scenarios. Future endeavors should concentrate on improving noise modeling accuracy, expanding diverse training datasets, and integrating physics-based and data-driven techniques.
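
For readers unfamiliar with physics-based noise synthesis, the sketch below shows the textbook Poisson-Gaussian approximation: signal-dependent shot noise plus signal-independent read noise. It is deliberately much simpler than the models of [146], [147], and [148], which account for additional noise sources and are calibrated against real sensors. The `gain` and `read_std` values and the function names are assumptions for illustration only.

```python
import math
import random


def sample_poisson(lam, rng):
    """Draw from Poisson(lam) with Knuth's algorithm.

    Adequate for the small photon counts typical of low light; it becomes
    slow and numerically fragile for very large lam.
    """
    threshold = math.exp(-lam)
    k, p = 0, 1.0
    while True:
        p *= rng.random()
        if p <= threshold:
            return k
        k += 1


def simulate_raw_noise(clean_electrons, gain=2.0, read_std=1.5, seed=0):
    """Turn expected photo-electron counts into noisy sensor readings.

    gain and read_std are illustrative values, not calibrated parameters.
    """
    rng = random.Random(seed)
    noisy = []
    for electrons in clean_electrons:
        shot = sample_poisson(electrons, rng)   # signal-dependent shot noise
        read = rng.gauss(0.0, read_std)         # signal-independent read noise
        noisy.append(gain * shot + read)
    return noisy


# A flat, very dark patch: about 5 photo-electrons expected per pixel.
patch = [5.0] * 20000
noisy_patch = simulate_raw_noise(patch)
```

Under this model the expected reading is `gain * electrons` and the variance is `gain**2 * electrons + read_std**2`, so noise grows with signal level. Pairs of clean and synthetic noisy data of this kind can be used to train denoisers when real paired captures are scarce.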

VI-I Joint Enhancement and Detection

Joint enhancement and detection in low-light conditions is gaining traction in the research community. Wang et al. [149] have proposed an adaptation technique to train face detectors for low-light scenarios without the need for specific low-light annotations. Ma et al. [150] focus on a parallel architecture, using an illumination allocator to achieve simultaneous low-light enhancement and object detection.

The current challenge lies in effectively transferring knowledge from normal lighting to low-light conditions and ensuring these models generalize well in diverse scenarios. Future work should focus on optimizing architectures for both enhancement and detection tasks, improving adaptability, and further exploring joint preprocessing techniques, as seen in other fields like deblurring [151] and denoising [152].

VII Conclusion

This paper presents a comprehensive survey of low-light image and video enhancement. First, we propose SICE_Grad and SICE_Mix to simulate the challenging mixed over-/under-exposed scenes on which current LLIE methods underperform. Second, we introduce Night Wenzhou, a large-scale, high-resolution video dataset with varied illuminations and degradation. Third, we analyze the critical components of LLIE methods, including learning strategies, network structures, loss functions, evaluation metrics, and training and testing datasets. We also discuss the emerging system-level applications for low-light image and video enhancement algorithms. Finally, we conduct qualitative and quantitative comparisons of LLIE methods on the benchmark datasets and the proposed datasets to identify the open challenges and suggest future directions.

References

- [1] C. Li, C. Guo, L.-H. Han, J. Jiang, M.-M. Cheng, J. Gu, and C. C. Loy, “Low-light image and video enhancement using deep learning: A survey,” IEEE Transactions on Pattern Analysis and Machine Intelligence, 2021.

- [2] Y. P. Loh and C. S. Chan, “Getting to know low-light images with the exclusively dark dataset,” Computer Vision and Image Understanding, vol. 178, pp. 30–42, 2019.

- [3] H. Wang, Y. Chen, Y. Cai, L. Chen, Y. Li, M. A. Sotelo, and Z. Li, “Sfnet-n: An improved sfnet algorithm for semantic segmentation of low-light autonomous driving road scenes,” IEEE Transactions on Intelligent Transportation Systems, 2022.

- [4] M. Lamba, K. K. Rachavarapu, and K. Mitra, “Harnessing multi-view perspective of light fields for low-light imaging,” IEEE Transactions on Image Processing, vol. 30, pp. 1501–1513, 2020.

- [5] J. Cai, S. Gu, and L. Zhang, “Learning a deep single image contrast enhancer from multi-exposure images,” IEEE Transactions on Image Processing, vol. 27, no. 4, pp. 2049–2062, 2018.

- [6] X. Fu, Y. Liao, D. Zeng, Y. Huang, X.-P. Zhang, and X. Ding, “A probabilistic method for image enhancement with simultaneous illumination and reflectance estimation,” IEEE Transactions on Image Processing, vol. 24, no. 12, pp. 4965–4977, 2015.

- [7] X. Guo, Y. Li, and H. Ling, “Lime: Low-light image enhancement via illumination map estimation,” IEEE Transactions on Image Processing, vol. 26, no. 2, pp. 982–993, 2016.

- [8] Y. Jiang, X. Gong, D. Liu, Y. Cheng, C. Fang, X. Shen, J. Yang, P. Zhou, and Z. Wang, “Enlightengan: Deep light enhancement without paired supervision,” IEEE Transactions on Image Processing, vol. 30, pp. 2340–2349, 2021.

- [9] L. Ma, T. Ma, R. Liu, X. Fan, and Z. Luo, “Toward fast, flexible, and robust low-light image enhancement,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 5637–5646.

- [10] W. Yang, S. Wang, Y. Fang, Y. Wang, and J. Liu, “From fidelity to perceptual quality: A semi-supervised approach for low-light image enhancement,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020, pp. 3063–3072.

- [11] L. Zhang, L. Zhang, X. Liu, Y. Shen, S. Zhang, and S. Zhao, “Zero-shot restoration of back-lit images using deep internal learning,” in Proceedings of the 27th ACM International Conference on Multimedia, 2019, pp. 1623–1631.

- [12] C. Guo, C. Li, J. Guo, C. C. Loy, J. Hou, S. Kwong, and R. Cong, “Zero-reference deep curve estimation for low-light image enhancement,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020, pp. 1780–1789.

- [13] C. Li, C. Guo, and C. C. Loy, “Learning to enhance low-light image via zero-reference deep curve estimation,” arXiv preprint arXiv:2103.00860, 2021.

- [14] R. Liu, L. Ma, J. Zhang, X. Fan, and Z. Luo, “Retinex-inspired unrolling with cooperative prior architecture search for low-light image enhancement,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021, pp. 10561–10570.

- [15] Z. Zhao, B. Xiong, L. Wang, Q. Ou, L. Yu, and F. Kuang, “Retinexdip: A unified deep framework for low-light image enhancement,” IEEE Transactions on Circuits and Systems for Video Technology, vol. 32, no. 3, pp. 1076–1088, 2021.

- [16] S. Zheng and G. Gupta, “Semantic-guided zero-shot learning for low-light image/video enhancement,” in Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, 2022, pp. 581–590.

- [17] K. G. Lore, A. Akintayo, and S. Sarkar, “Llnet: A deep autoencoder approach to natural low-light image enhancement,” Pattern Recognition, vol. 61, pp. 650–662, 2017.

- [18] F. Lv, F. Lu, J. Wu, and C. Lim, “Mbllen: Low-light image/video enhancement using cnns,” in BMVC, vol. 220, no. 1, 2018, p. 4.

- [19] C. Li, J. Guo, F. Porikli, and Y. Pang, “Lightennet: A convolutional neural network for weakly illuminated image enhancement,” Pattern Recognition Letters, vol. 104, pp. 15–22, 2018.

- [20] L. H. Pham, D. N.-N. Tran, and J. W. Jeon, “Low-light image enhancement for autonomous driving systems using driveretinex-net,” in 2020 IEEE International Conference on Consumer Electronics-Asia (ICCE-Asia). IEEE, 2020, pp. 1–5.

- [21] G. Cheng, P. Zhou, and J. Han, “Learning rotation-invariant convolutional neural networks for object detection in vhr optical remote sensing images,” IEEE Transactions on Geoscience and Remote Sensing, vol. 54, no. 12, pp. 7405–7415, 2016.

- [22] R. Wang, Q. Zhang, C.-W. Fu, X. Shen, W.-S. Zheng, and J. Jia, “Underexposed photo enhancement using deep illumination estimation,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2019, pp. 6849–6857.

- [23] M. Zhu, P. Pan, W. Chen, and Y. Yang, “Eemefn: Low-light image enhancement via edge-enhanced multi-exposure fusion network,” in Proceedings of the AAAI Conference on Artificial Intelligence, vol. 34, no. 07, 2020, pp. 13106–13113.

- [24] Y. Zhang, J. Zhang, and X. Guo, “Kindling the darkness: A practical low-light image enhancer,” in Proceedings of the 27th ACM International Conference on Multimedia, 2019, pp. 1632–1640.

- [25] K. Xu, X. Yang, B. Yin, and R. W. Lau, “Learning to restore low-light images via decomposition-and-enhancement,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020, pp. 2281–2290.

- [26] L.-W. Wang, Z.-S. Liu, W.-C. Siu, and D. P. Lun, “Lightening network for low-light image enhancement,” IEEE Transactions on Image Processing, vol. 29, pp. 7984–7996, 2020.

- [27] S. Moran, P. Marza, S. McDonagh, S. Parisot, and G. Slabaugh, “Deeplpf: Deep local parametric filters for image enhancement,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020, pp. 12826–12835.