Low-Energy Electron-Track Imaging for a Liquid Argon Time-Projection-Chamber Telescope Concept using Probabilistic Deep Learning

Abstract

The GammaTPC is an MeV-scale single-phase liquid argon time-projection-chamber gamma-ray telescope concept with a novel dual-scale pixel-based charge-readout system. It promises to enable a significant improvement in sensitivity to MeV-scale gamma-rays over previous telescopes. The novel pixel-based charge readout allows for imaging of the tracks of electrons scattered by Compton interactions of incident gamma-rays. The two primary contributors to the accuracy of a Compton telescope in reconstructing an incident gamma-ray’s original direction are its energy and position resolution. In this work, we focus on using deep learning to optimize the reconstruction of the initial position and direction of electrons scattered in Compton interactions, including using probabilistic models to estimate predictive uncertainty. We show that the deep learning models are able to predict locations of Compton scatters of MeV-scale gamma-rays from simulated 500 m pixel-based data to better than 1 mm root-mean-squared error, and are sensitive to the initial direction of the scattered electron. We compare and contrast different deep learning uncertainty estimation algorithms for reconstruction applications. Additionally, we show that event-by-event estimates of the uncertainty of the locations of the Compton scatters can be used to select those events that were reconstructed most accurately, leading to improvement in locating the origin of gamma-ray sources on the sky.

1 Introduction

Astrophysical sources that produce MeV-scale gamma-rays are some of the least well characterized over the entire electromagnetic spectrum (see, for instance Caputo et al. (2019)). Such photons Compton scatter multiple times over a relatively large volume (Figure 1), and imaging with Compton telescopes requires an accurate measurement of the energy and locations of these scatters with minimal loss of energy in inert material. This can be a formidable instrumentation challenge, but if accomplished, enables kinematic reconstruction of the path, and hence initial direction, of the gamma-ray as we describe in more detail in Section 2.

We are developing a detector concept, GammaTPC, that is based on liquid argon (LAr) time-projection-chamber (TPC) technology (Aramaki et al., 2022). The energy and spatial resolution promises to be comparable, if not better, than that of the central silicon tracking detectors proposed for missions such as AMEGO-X (Fleischhack, 2021). That is, energy depositions measured at a few percent level near 1 MeV, and, as discussed in this paper, the location of scatter interactions measured to better than mm, and a powerful determination of the electron track direction. Moreover, the TPC readout potentially allows this capability over a much larger instrument for the same cost, with a highly uniform response over the active volume. The science opportunities for such an instrument would be substantial. The most complete catalogs of MeV sources include only dozens of sources (V. Schönfelder, 2000), as compared to the thousands of sources seen by Fermi-LAT (Abdollahi et al., 2020), or the billions of sources seen or expected by optical surveys such as the Dark Energy Survey (Abbott et al., 2018), Kilo-Degree Survey (Kuijken et al., 2019), and Vera Rubin Observatory/Legacy Survey of Space and Time (Ivezić et al., 2019). Extrapolations from X-ray and higher-energy gamma-ray data suggest that many source types are expected to have spectral energy density peaks in the MeV range. An instrument with a very large effective area, large field of view, and high efficiency — which is the goal of our design — will be key in observing a large number of transients, including the electromagnetic counterparts to gravitational-wave detections of compact-object mergers, such as GW170817 (Abbott et al., 2017). It will also open a new window to search for signatures of decaying dark matter (Bartels et al., 2017). Furthermore, given the presence of nuclear transition lines, as well as the 511 keV electron-positron annihilation line, the bright diffuse galactic signal observed in the MeV band (see, e.g., Weidenspointner et al. (2000)) can provide otherwise unobtainable information about the evolution and particle content of our galaxy.

A key and novel feature of the GammaTPC concept is a high-fidelity pixel-based readout of the electron-recoil tracks that result from each Compton scatter of the gamma-ray, as shown in the right panel of Figure 1. To make optimal use of this feature, we need methods to accurately determine both the interaction locations (the heads of the tracks) and their initial directions from the pixel-based data. This is possible at these electron energies because the large amount of energy deposited in the Bragg peak at the end of the track makes the tail distinguishable from the head. Electron track shapes vary widely due to the random nature of the multiple scattering that electrons undergo, which makes algorithmic reconstruction of the initial scattering position and direction nontrivial. Bellazzini et al. (2003) and Black et al. (2007) used moment-based methods to do this, which were effective for detecting the distribution of polarized X-rays. Li et al. (2017) developed a graph-based technique and showed that it significantly outperformed the moment-based techniques, and Yoneda et al. (2018) applied a modified version of it to a proposed Compton camera detector.

Since the development of the moment-based techniques, the field of deep learning has advanced dramatically, most importantly for this case in the area of computer vision. Because a human can usually identify the head and tail of an electron track just from visual inspection based on the Bragg peak (e.g. in Figure 1), modern deep learning computer vision techniques have the potential to perform well in locating the initial location and direction of a Compton-scattered electron. Indeed, Ikeda et al. (2021) have already developed a model based on Convolutional Neural Networks (CNNs) for the Electron Tracking Compton Camera (ETCC), while Peirson et al. (2021) have developed a model for the IXPE detector making use of ensembles of deterministic neural networks for uncertainty estimation of the electron track direction.

In contrast to the ETCC and IXPE, GammaTPC would be a liquid-based detector, and therefore best able to reconstruct electrons with energies of (100 keV) or above, while the techniques referenced above were all designed for electrons of (10 keV). Furthermore, our detector would provide full 3D images of electron tracks, unlike strip-based detectors which create two 2D images to represent 3D information, or pixel detectors like IXPE that integrate the charge over each pixel for the entire event, also producing a 2D image. Therefore, while some of the same basic concepts that informed Ikeda et al. (2021) also underpin our approach, our models are designed differently to accommodate these distinctions. We show that we are able to achieve good accuracy in electron track head and initial direction reconstruction with two deterministic 3D CNNs, with mean absolute error in position less than 0.8 mm, and information on initial track direction for electron energies as low as 300 keV.

Additionally, having an estimate of the uncertainty in the position and initial direction of Compton-scattered electrons allows us to select data for which the predictive uncertainty is low, which we show improves accuracy in determining the direction of incident gamma-rays. We also expect that reconstruction of the correct sequence of gamma-ray interactions will improve with an uncertainty estimate, although we have not yet investigated this and intend to do so in future work.

Commonly used deterministic deep learning models do not produce prediction intervals or uncertainties for their predictions, making it impossible to tell when a prediction may be reliable. As an example, state of the art deterministic neural networks are frequently unable to recognize out of sample examples and habitually make incorrect predictions for such cases (Amodei et al., 2016; Nguyen et al., 2015; Hendrycks & Gimpel, 2016). To this end, we compare and contrast different deep learning uncertainty quantification (UQ) approaches for predicting the initial location and direction of Compton-scattered electrons. We select the best performing UQ method and apply it to the reconstruction problem. We hope this will provide a guide-map for subsequent investigators, for instance in similar reconstruction tasks involving particle tracking data.

2 The GammaTPC Instrument Concept and Compton Event Reconstruction

Liquid-noble TPC technology has undergone tremendous development in recent years, having a transformative impact in direct dark matter searches (Aprile et al., 2018; Akerib et al., 2020). It is now the chosen technology for the massive DUNE neutrino program (Abi et al., 2020), showing the scale of investment the particle physics community is making into this technology. In an upcoming article (Shutt et al., 2023), we will discuss in detail how this technology will be implemented for GammaTPC. Here we provide a description of how the parts of the instrument relevant to the imaging of electron tracks will function.

The GammaTPC instrument concept is shown schematically in Figure 2. Particle interactions in the liquid argon target create scintillation light and free electrons. An applied electric field drifts electrons to the anode readout planes where their collection on the pixels is digitized, enabling measurement of their locations, effectively pixelating the charge readout in 3 dimensions. Measurement of the light by silicon photomultipliers on the cathode planes establishes the depth of events, , as the time difference between the prompt scintillation signal (with 6 ns and 1.6 s time constants) and the arrival of the slower (170 s over 20 cm) drifting electrons. The event energy is inferred from a combination of the charge and scintillation signals. The high particle rate in low earth orbit combined with the relatively slow charge drift requires vertical segmentation of approximately 20 cm. The curved geometry provides a very large field of view with relatively uniform response, and also minimizes the mass of the carbon-fiber pressure vessel. A 10 kV voltage difference between anode and cathode establishes a 0.5 kV/cm charge drift-field. The lateral segmentation is provided by thin reflecting walls. The overall detector thickness, here 40 cm, is chosen to have a high interaction efficiency for gamma-rays up to 10 MeV.

The core advantage of a TPC is that it provides 3D readout of a uniform target volume with sensors deployed on only a portion of the 2D surfaces. This enables a large instrument with relatively few channels, and hence low cost and power. This in turn allows a high-granularity readout, which directly leads to good angular resolution, while the minimal interior dead material maximizes event reconstruction efficiency. In our case, the readout, which builds on recent advances in cryogenic complementary metal-oxide semiconductor (CMOS) charge-readout (Pena-Perez et al., 2020; Dwyer et al., 2018; Adams et al., 2020), uses a triggered, ultra low power sub-mm pixel readout to provide sub-mm 3D sampling of the tracks. The trigger for pixel readout is provided by a separate set of “coarse grid” wire electrodes with a nominal 1 cm pitch. This wide spacing avoids loss of signal due to diffusion spreading the signal over several channels and thus will provide the charge integral. When read out with cryogenic CMOS technology, we expect noise of 20 electrons per wire of 2-3 pF capacitance (Deng et al., 2018), with the signal shared amongst several wires. Initial studies show an energy threshold per scatter set by this coarse grid readout near 10-20 keV. The event energy comes from the summed coarse grid charge signals from all scatters, combined with the integral light signal. The pixel readout, by contrast, is used primarily to image the electron tracks for extraction of the location and direction of Compton electron recoils, the analysis of which is the focus of this paper. The pixels have substantially lower capacitance than the coarse wires, but also shorter shaping times, and we estimate their noise to be 20-30 electrons.

A key event reconstruction challenge for this technology is to correctly determine the true sequence of the interactions, or at minimum, correctly establish the first two interactions. This is primarily done by kinematic testing of possible sequences, with the precision of the measurements of the energies and positions of the interactions driving the efficiency of selecting the correct sequence. The direction of the original gamma-ray then lies at an angle relative to the vector established by the locations of the first two Compton scatters, as seen in Figure 1. This angle is determined by the energy lost by the gamma-ray in the first Compton interaction. This creates a ring on the sky of possible locations for the original gamma-ray. For an ensemble of gamma-rays from a given source, the overlap of these rings gives the specific location of the source. Because neither the energies nor interaction locations are perfectly measured, there is an effective width to these rings, and therefore an uncertainty in the reconstructed source position. Minimizing this width, i.e. achieving the best possible pointing, is a key driver of the overall instrument sensitivity, and is achieved by having the most precise and accurate measurements of the energies and locations of the Compton interactions, which also maximizes the efficiency and power of the kinematic tests used to reconstruct the event. It is also important to choose a low Z material to minimize Doppler broadening (Zoglauer & Kanbach, 2003).

If the initial track direction can also be estimated, the ring reduces to an arc on the sky, depending on the precision of the measurement. We also expect that the initial track direction estimate will improve the quality of kinematic tests used for reconstruction, though the power of this has not yet been quantified. This would have the strong benefit of reducing the number of gamma-rays needed to locate a source.

3 Machine Learning Based Electron Track Reconstruction

We now turn to the application of data driven modeling techniques to electron track reconstruction and the estimation of predictive uncertainty, which is the primary focus of this work. There are a variety of different modeling algorithms that can be used for the electron track reconstruction task. A guiding metric for selection amongst them would be the degree to which the inductive bias of each algorithm agrees with the nature of the problem. Inductive bias refers to the set of assumptions a learning algorithm uses to generalize beyond its training data (Mitchell, 1980; Baxter, 2000). For the estimation of the track head and initial direction, we require translational invariance. In deep learning architectures, convolutional layers are equivariant to spatial translation. When coupled with pooling layers, they are approximately translation invariant. Consequently, CNN-based feature extraction is not affected by the absolute position of the feature in the feature map. Thus, we use a CNN-based model for estimation, treating the pixelated electron track as a 3D image. We use this model to estimate the track head, the initial scattering direction of each electron, as well as the uncertainty on each estimate produced by the model.111Jupyter notebooks that will reproduce the results in this paper can be found at https://gitlab.com/probabilistic-uncertainty-for-gammatpc. Other code used in this study, such as the detector response model, can be made available upon request.

3.1 Data simulation and model evaluation technique

We prepared the training data used to develop the models presented in this paper in a two-step process. We used the PENetration and Energy LOss of Positrons and Electrons (PENELOPE) code (NEA, 2019) to generate raw electron tracks, and then subsequently applied detector-response effects with a custom code. PENELOPE provides a detailed microphysical simulation of electron energy loss processes in matter, including simulations of elastic and inelastic scattering off of other particles in the media, bremsstrahlung emission, and generation of delta-rays. The custom code voxelizes the raw-track energy depositions, simulating digitized pixel readout with a specified pixel pitch determining the pixel spacing. The spacing is determined by the specified electric field, electron mobility, and digitization frequency. The electric field is set to 500 V/cm, and the electron mobility is calculated according to Equation 21 from Li et al. (2016). The digitization frequency is then set to achieve an effective spacing equal to the spacing. For the track head reconstruction, we simulated data with a 500 m pixel pitch at 4 different drift depths (1 cm, 5 cm, 10 cm, and 20 cm)222These parameters are a good starting point based on initial instrument design considerations, but may eventually change as the design evolves.. The training data for the track direction reconstruction also uses a 500 m pitch, and is simulated at a drift depth of 5 cm. Each sample therefore consists of a 3D array of floating-point numbers, representing a simulated pixel readout at each position in a 3D grid. The size of this grid in each training data set is given by the bounding box of the largest sample in that data set. All other simulated charge depositions are zero-padded symmetrically to match that dimension.

All simulations compute detected quanta from first principles based on raw energy depositions in a detailed manner similar to that of the Noble Element Simulation Technique (NEST) (Szydagis et al., 2011). All simulations also have a transverse diffusion coefficient of 12 cm2/s, taken from Li et al. (2016). The longitudinal diffusion coefficient is computed from Equation 23 of Li et al. (2016), and is approximately 6 cm2/s, giving 0.4 mm root-mean-squared (RMS) diffusion over a 10 cm drift. We also apply an effective electronic noise of approximately 30 electrons, and a simulated digitization threshold of 3 above noise. We simulated a number of electrons equal to within 6% at 5 energies (50, 300, 500, 750, and 1,000 keV), obtaining 24,472 simulated electrons in total. We then split the data randomly into training and testing sets in a 90:10 ratio for the track head and direction deterministic models, and a 92.5:7.5 ratio for the track head uncertainty quantification model.

We assess subsequent performance of the trained models not only with standard measures such as accuracy and coverage, but also with figures-of-merit for the quality of the overall Compton event reconstruction. We do this using data simulated with the Medium-Energy Gamma-ray Astronomy library, or MEGAlib (Zoglauer, 2019). After implementing the detector geometry using MEGAlib’s built-in geometry specification, we generate 1,000 keV gamma-rays originating at 64 degrees from the zenith using the MEGAlib FarFieldPointSource generator. We then process the generated energy depositions with custom code that applies the detector response, including a parameterization of the interaction locations (with uncertainties) based on the output of the trained neural networks on test electron track simulations. We present a description of this parameterization in Sections A.1 and A.2. We then feed this simulated detector output, in the form of a MEGAlib-specific file type, into the MEGAlib reconstruction code revan, which determines the time-ordering of the Compton scatters. At this point we remove any events where revan was unable to determine the first two Compton scatters correctly. Because events with an incorrect reconstruction of the scatter order tend to have significantly larger pointing error, this selection will produce a smaller pointing error than would occur in a real experiment. However, since we are primarily concerned with understanding specifically the behavior of the electron scatter position reconstruction, and not the Compton ordering reconstruction, we choose to do this so as to eliminate that possibly confounding source of error from our assessment of the performance of our models. These events are then then used by a modified version of the ComPair Python library (Moiseev et al., 2015) to compute the location of and uncertainty in the gamma-ray origin.

3.2 Electron Track Head Reconstruction

In the first model, we use a deterministic CNN-based model to predict the location of the electron track head. We report the architecture of the CNN used for electron track head reconstruction in Figure 3. The input to the model is a 3D image of an electron track from the detector. We include spatial dropout (Tompson et al., 2015) (as opposed to conventional dropout (Srivastava et al., 2014)) and Batch Normalization layers after each convolutional layer to ameliorate overfitting. We use a 3-dimensional global average pooling operation at the end of the feature extraction stage, as opposed to a conventional flattening operation, to reduce the parameters in the ensuing fully connected layers and improve generalization. The final output from the fully connected layers is a 3-dimensional regression prediction for the location of the electron track head. The model architecture and ancillary hyperparameters were established based on Bayesian Optimization (O’Malley et al., 2019), followed by subsequent manual fine tuning. This model was implemented with Keras (Chollet et al., 2015), using the Adam optimizer (Kingma & Ba, 2014), a mean-squared-error loss function, and was trained for 200 epochs on an NVIDIA Tesla A100 GPU at the SLAC Scientific Data Facility, which took about 10 minutes.

Since the model predicts the location of the , , and components of the electron track head independently, and treats each physical dimension identically, we can combine the predictions of all 3 dimensions and analyze them in the same distribution. The distribution of errors on the test data set in all 3 dimensions is shown in Figure 4, broken down by initial electron energy. However, from an applications perspective, the goal is a sub-mm accuracy of the electron track head location across all three spatial dimensions. Therefore, we add in quadrature the , , and error for each prediction to obtain the absolute error (i.e. the Euclidean distance between the true and predicted electron track head position) for each sample in the test data set. We show this distribution in Figure 5. Clearly the distribution of errors is wider for higher energy electron tracks. Because the beginning of an electron track tends to become straighter as the electron’s initial energy increases (this effect is illustrated in more detail in Section 3.3), one might expect that the beginning of the track should therefore become easier to identify, not harder. We hope to investigate this with a future interpretability study.

We plot the mean of each of these distributions in Figure 6, as a function of drift depth. As expected, the RMS error increases for all energies with drift depth, consistent with diffusion effects spreading out the signal and making it harder to pinpoint the track head location. However it is important to note that this increase with drift length is modest, and we readily achieve sub-mm accuracy up to at least a 20 cm drift length. There are no error bars given for the points in Figure 6 because the statistical errors are negligible, and the deterministic approach does not provide a good way of estimating a systematic uncertainty. We approach this problem with uncertainty estimation techniques in Section 3.4.

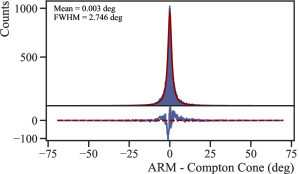

In Figure 7 we present an assessment of the deterministic model using the MEGAlib-simulated performance of the GammaTPC instrument. We apply a parameterization of the deterministic model output to the simulated data produced by MEGAlib and our custom detector-effects code, which is described in detail in Appendix Section A.1. The distribution of the Angular Resolution Measure (ARM), which is the angular difference between the true and reconstructed origin of gamma-rays, is peaked at zero degrees, and is shown fitted to a Lorentz distribution333We also attempted a fit to a Voigt profile, but the best fit contained no Gaussian component and was therefore also a Lorentzian.. The Full-Width-at-Half-Maximum (FWHM) of this distribution for a 5 cm drift depth (as shown) is 2.746°.

3.3 Electron Scatter Direction Reconstruction

We also developed a model to reconstruct the direction of the initial electron scatter. Obtaining the direction is very useful, because, as explained in Section 2, breaking the axial degeneracy of the reconstructed gamma-ray origin can greatly improve the pointing accuracy of a well-reconstructed event.

The model is mostly identical to that used in Section 3.2. The difference is that the final 3 outputs shown Figure 3 are concatenated with the predicted electron track head position produced by the trained model from Section 3.2, and then reduced with a feed-forward layer back to 3 numbers which predict the , , and components of the scattered electron’s initial direction unit vector. In principle the initial direction vector has only two independent variables. Removing the degeneracy of the third fitted parameter may further improve the direction reconstruction in future studies. The model was implemented with Keras, using the Adam optimizer, a mean-squared-error loss function, and was trained for 20 epochs on an NVIDIA Tesla A100 GPU at the SLAC Scientific Data Facility, which took about 1 minute. In Figure 8 we show the performance of this model using a histogram of the cosine of the angle between the predicted electron direction and the true electron direction. A model that can reconstruct the direction with perfect accuracy would present as a delta function at 1 in this plot, while random guessing would produce a flat distribution. Since the distribution is skewed towards higher values of , this indicates it is able to extract useful information on the initial electron direction from the pixel data.

It can be seen in Figure 8 that the quality of the direction reconstruction increases with electron energy, as the distributions peak more towards 1. Specifically, we find that the median angle between the true and predicted initial electron direction is 87°, 71°, 59°, 50°, and 48° for 50, 300, 500, 750, and 1,000 keV electrons respectively.444A median angle of 90° would indicate no ability to reconstruct the direction, while a median angle of 0° would indicate perfect reconstruction. This could be because high energy electrons travel in a straighter line initially, making it easier to establish the initial direction the electron was traveling. This effect is visible in Figure 9, which shows the cosine of the angle between each electron’s current and initial direction of travel, vs. the distance between its current and original positions. By comparing the 1,000 keV electrons (purple) and the 300 keV electrons (green), we see that the 1,000 keV electrons travel further before starting to deviate from their initial direction (i.e. moving away from a -value of 1). This trend of higher-energy electrons traveling straight initially for longer than low-energy electrons holds up as a general trend in this energy regime. This runs counter to the effect of energy on position reconstruction, where lower-energy scatters create more localized charge clouds, which facilitate better position reconstruction. The fact that these effects offset is encouraging, as it suggests that superior electron direction reconstruction for high-energy electrons could counteract lower-quality interaction position reconstruction.

3.4 Uncertainty Quantification for Deep Learning Based Electron Track Head Reconstruction

Deep learning models are often considered to be high-fidelity models with accurate predictive powers. However, even predictions from a well-trained neural network may contain substantial errors and uncertainties due to bias, noise and complexity inherent in the data, the volume of the training data, the error minimum chosen during optimization, etc. In this light, it is important to be able to construct reliable prediction intervals in addition to point predictions. This can enable otherwise unavailable techniques when the data-driven model is to be used in scientific discovery. Reliable uncertainty quantification for deep learning has been identified as a priority for scientific machine learning (SciML) applications (Baker et al., 2019; Ihme et al., 2022). In our case, since electron tracks are highly random, they will have great diversity in shape, particularly at higher energies. It is reasonable to assume that the accuracy of a machine learning model may vary as a function of electron energy and/or track shape. The ability to select only tracks where the model has high confidence in its reconstruction should improve the overall pointing accuracy of the instrument.

There exist different algorithms for uncertainty quantification for deep learning models. These include purely Bayesian approaches, non-Bayesian approaches and hybrid methodologies. A priori, one is typically unable to determine the best algorithm for a specific application. As of yet, there have been very few studies focusing on evaluating, comparing and contrasting these approaches for scientific problems. This is exacerbated by the fact that most computer science studies comparing these algorithms have focused on classification problems, as opposed to problems requiring regression as is the case in many science applications, including this one. To this end, we evaluate different deep learning uncertainty quantification approaches on the data sets for electron track head reconstruction. These include Bootstrapped Ensembles of neural networks, Monte Carlo Dropout, Probabilistic Neural Networks (PNNs), and Evidential Deep Learning, a brief description of which follows. Additionally, we also outline the hyperparameters of the algorithm and their selection using the validation dataset.

Bootstrapped ensembles use bootstrap aggregation for uncertainty estimation. Bootstrap data sets of samples each are produced for training each individual model in the ensemble by sampling uniformly with replacement from the original training data set of unique samples. Individual neural networks in the model ensemble are trained separately on each of these bootstrap data sets. The predictions of the individual trained models in the ensemble are averaged to get a mean prediction. The variance in predictions provides a measure of the predictive uncertainty. Boostrapped ensembles are very commonly used in uncertainty quantification for scientific problems (Manly, 2018; Jeong & Maddala, 1993). Herein, the key hyperparameter is the number of constituent neural network models in the ensemble. In this investigation, the number of neural network models in the ensemble was increased until the coverage and MPIW became constant over the validation dataset, beyond a value of individual neural network models in the ensemble. Thus, the comparison and the comparative results correspond to a bootstrapped ensemble with neural network models.

Monte Carlo Dropout is a Bayesian technique introduced in Gal & Ghahramani (2016), where one approximates the network’s posterior distribution of class predictions by collecting samples obtained from multiple forward passes of dropout regularized networks. Dropout regularization (Srivastava et al., 2014) involves random omissions of feature vector dimensions during training time, which is equivalent to masking rows of weight matrices. Inclusion of dropout layers mitigates model overfitting and is empirically known to improve model accuracy (Srivastava et al., 2014). A key observation of Gal & Ghahramani (2016) is that under suitable assumptions on the Bayesian neural network prior and training procedure, sampling predictions from the BNN’s posterior is equivalent to performing stochastic forward passes with dropout layers fully activated. In this manner, the full posterior distribution may be approximated by Monte Carlo integration of the posterior predicted probability vector. Monte Carlo Dropout networks may also be interpreted as a form of ensemble learning (Srivastava et al., 2014), where each stochastic forward pass corresponds to a different realization of a trained neural network. In the usage of MC Dropout, key hyperparameters include the placement and number of dropout layers, the strength of the dropout rate (Kendall et al., 2015; DeVries & Taylor, 2018), the number of forward passes sampled during prediction, etc. During model selection, the best performance over the validation set was engendered by the architecture utilizing a dropout layer after each convolutional block and a single dropout layer after the global average pooling operation. Explicitly, in this architecture, no dropout was performed between the input and the first convolutional layer and neither between the last dense layer and the output. Additionally, in between the convolutional layers the best dropout rate was and in the dense layers the best dropout rate was . All the hyperparameters were selected using cross-validation. While using higher dropout rates led to larger prediction intervals, they also had a deleterious effect on the model accuracy leading to poorer calibration of the uncertainty estimates. Beyond 50 samples for prediction, we did not observe any improvement in the metrics and thus, 50 samples in the prediction were used.

Probabilistic Neural Networks (PNNs) use a proper scoring rule (Gneiting & Raftery, 2007) to evaluate their performance. For regression tasks, this scoring rule is the negative log likelihood (NLL). Consequently, the training of the model ascertains network parameters that give an optimal mapping from the space of raw features to the mean and standard deviations of the predictions, so as to minimize the NLL over the training data set. Strictly speaking, this approach entails no additional hyperparameters and only requires a change to the cardinality of the output and the usage of the NLL as the loss function to be optimized. However, the learning rate of the Adam optimizer was adjusted to ensure optimal convergence for this algorithm as well.

Evidential Deep Learning (EDL) (Amini et al., 2020), refers to a class of deep neural networks that exploit conjugate-prior relationships to model the posterior distribution analytically. EDL methods have the immediate advantage of requiring only one single pass to access the full posterior distribution, at the price of restricting the space of possible posterior functions to that containing only those which have the appropriate conjugate prior forms. They also only require one to modify the loss function and the final layer of its deterministic baseline, which allows flexible integration with complex, hierarchical deep neural architectures. Under the assumption that the input data are drawn from a Gaussian distribution of unknown mean and standard deviation, the conjugate prior is then a normal-inverse-gamma distribution. In our case, we place an independent prior on each Cartesian coordinate of the electron track head position. For EDL-based regression, the hyperparameter to be determined is the coefficient for the regularization loss defined by Equation 9 of Amini et al. (2020) (thus, for EDL, total loss is the sum of the NLL loss and the product of the regularization coefficient with the regularization loss). While comparing the EDL model to the other UQ models, the regularization coefficient was utilized at a value of 1.

To ascertain the quality of the uncertainty estimates from the different algorithms, we compute three metrics: accuracy, coverage and sharpness. Accuracy denotes the fidelity of the mean prediction, evaluated via the RMS error on the test data set. Coverage (Romano et al., 2019) measures the calibration of the uncertainty estimates. The prediction interval is the estimate provided by a model of the range in which the target value will lie with a certain probability, given the input features. If the actual value lies in the predicted interval, it is said to be covered by the interval. Coverage measures the ratio of test samples that lie in the prediction interval of a specific quantile. A critical consideration for prediction intervals is their reliability at reflecting the quantiles in the distribution of the target quantity. As an illustration, a prediction interval that subsumes less than of the target samples will lead to predictions with higher error than expected, which could have significant consequences, depending on the application. Prediction intervals with incorrect coverage indicate poorly calibrated models, where calibration here indicates the degree to which the predicted uncertainty matches the true underlying uncertainty in the data. In our comparison between algorithms in Table 1, we utilize a uniform prediction interval of for all the algorithms and report the coverage at this prediction interval. Finally, sharpness measures the size of the prediction interval, at a fixed value of coverage. We measure the sharpness of the prediction intervals using the Mean Prediction Interval Width (MPIW) (Pearce et al., 2018), which is the average size of the prediction intervals over the test data set. We require that the prediction intervals provide a minimum value of the coverage, while maintaining the MPIW to low values. To ensure a fair comparison, we compute the MPIW at a fixed coverage, by increasing the prediction interval until a set coverage is reached before making the calculation. Specifically, we measure MPIW values at prediction intervals that ensure coverage over the test data set.

The evaluation of deep learning uncertainty quantification algorithms for the electron head reconstruction is reported in Table 1. We observe that the ensemble-based approaches lead to a decrease in prediction error over the single deterministic model. This is due to the aggregation over decorrelated individual models and forms the basis of bagging-based meta-models (Zhou, 2021). However, the variance between the predictions of individual models in the ensemble is not a reliable indicator of predictive uncertainty. As an illustration, we find that the Bootstrapped ensembles are leading to a highly overconfident estimate of the predictive uncertainty with a coverage of only for a prediction interval. To this end, we would caution against reliance on uncertainties from Bootstrapped ensembles in use cases similar to this one. The Evidential Deep Learning approach gives well calibrated uncertainties, where the coverage is for a prediction interval. The mean prediction interval width generated by the Evidential Deep Learning models is moderated as well. This indicates that the EDL models provide sharpness of the predicted intervals. Thus, based on this empirical study, we utilize the Evidential Deep Learning approach for uncertainty estimation in this investigation. After selection of the EDL approach, we tuned the regularization coefficient to 0.02 to enforce correct coverage. The model uses the Evidential Deep Learning for Regression framework (Amini et al., 2020). Herein the model architecture is identical as the prior model in Section 3.2. However, the final layer outputs the parameters of the posterior distribution on the position of the head, as opposed to the actual estimate for the position of the head. The final model was trained using the Adam optimizer with a learning rate of over 1500 epochs. It was trained at the SLAC Scientific Data Facility on an NVIDIA Tesla A100 GPU, which took about 90 minutes.

| Algorithm | RMSE | Coverage | MPIW |

|---|---|---|---|

| Single Deterministic Model | 0.43 | – | – |

| Bootstrapped Ensembles | 0.39 | 0.22 | 4.75 |

| MC Dropout | 0.40 | 0.46 | 4.33 |

| PNN | 0.42 | 0.41 | 4.47 |

| Evidential Deep Learning | 0.40 | 0.79 | 3.35 |

Figure 10 shows several examples of simulated 1,000 keV electron tracks. The amount of charge in each pixel is given by the colored scatter plot of circles, where the size and color of each circle is proportional to the charge signal. The true and reconstructed origin of the electron track are given by the orange and green dots respectively, with an ellipsoid around the reconstructed origin representing the predicted uncertainty. The examples have been chosen to illustrate different possible outcomes of the reconstruction. Panels 10a, 10b, and 10c show three different views of the event with the largest absolute reconstruction error. Here the model appears to have identified the wrong end of the track as the head. However, it has compensated for this mistake somewhat by correctly estimating a large error on the dimension. While perhaps not obvious from these examples, it is the case that the uncertainty ellipsoids have no diagonal components. This is because the 3 cardinal directions are treated independently by the model, and so it lacks the ability to include non-diagonal components in the covariance matrix for the uncertainty, leading to ellipsoids with axes always aligned with the cardinal axes. Figure 10d shows the 20 cm drift track with the smallest reconstruction error. Here we clearly see the Bragg peak in the lower center of the image (larger/lighter circles), and the model has correctly identified that the opposite end of the track is the true track head.

To verify the model’s uncertainty calibration, it is instructive to plot the output of the model in a 2D space: the model-predicted squared error on the electron track head position against the true prediction error (i.e. the difference between the true and predicted position of the electron track head). As can be seen in Figure 11, these two parameters have a parabolic relationship, and achieve a small non-zero minimum value for the predicted squared error at a true error of zero. This is expected, since the posterior distribution of the predicted squared error is inverse-gamma, which approaches zero exponentially as its variable approaches zero. This can be seen in the marginal Y-axes of Figure 11. As can also be seen in the marginal X-axes of Figure 11, the 1D distribution of the true error is normally distributed, as expected given the normal-inverse-gamma posterior distribution of the EDL loss function.

Similar to Figure 5, Figure 12 shows the distribution of absolute errors for the trained EDL model at a 5 cm drift depth, broken down by initial electron energy. We show the dependence on drift depth of the RMS error for each initial electron energy in Figure 13. Since the EDL approach provides us with a modeled uncertainty, we can turn that into an estimate of the systematic error on the points in Figure 13. The error bars on the points in Figure 13 are an estimate of the variation in electron track shapes, leading to incorrect location predictions, that is not modeled by the EDL approach. Unmodeled track shape variation can arise not only from imperfect design/training of the model, but also from variation at a scale too small to be distinguishable by the model, once the simulated drift and detector response has been applied. While there does appear to be a clear increasing trend of the RMSE as the drift length increases, the fluctuations about that trend mostly seem to be within the estimated systematic uncertainties. For each point, its error bars are constructed by 1) fitting inverse-gamma distributions to slices of the version of Figure 11 with the appropriate initial electron energy and drift depth, 2) computing the standard deviation of those inverse-gamma distributions, 3) computing the mean standard deviation across the slices, weighted by the number of samples in each slice, 4) using that mean standard deviation to compute the uncertainty on the mean squared error by dividing it by where is the number of samples, and finally 5) propagating the uncertainty on the mean squared error through to the root mean squared error. The statistical uncertainty on the points in Figure 13 is negligible. The RMS error increases as a function of drift depth for all electron energies.

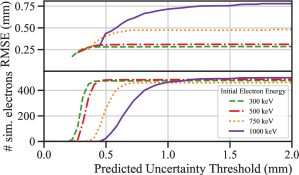

Figure 14 shows the RMS error as function of an applied threshold in the predicted uncertainty, meaning that electron tracks with an uncertainty on the reconstructed track head position greater than the threshold are excluded from the calculation of the RMS error. The top panel shows the effect of excluding these tracks on the RMS error, while the bottom panel shows how many events are retained as a function of the uncertainty threshold. For reference (as seen in Figure 6), in the deterministic model the RMS error for 1,000 keV electron tracks at a 5 cm drift depth is slightly more than 0.6 mm. According to Figure 14, an RMS error similar to that of the deterministic model can be obtained by placing a threshold of approximately 0.6 mm on the predicted uncertainty. However, this requires rejecting approximately 75% of all 1,000 keV electron tracks.

Having verified the model’s calibration, we know that removing events with high predicted uncertainty from the analysis should improve the pointing accuracy of the instrument. This effect can be seen in Figure 15. Figure 15a shows the true error of the reconstructed gamma-ray initial direction against the component of the pointing uncertainty that is attributable to the position uncertainty predicted by our EDL model555This is computed using Equations 6-8 of Boggs, S. E. & Jean, P. (2000), for the simulated gamma source described in Section 3.1 using a parameterization of the EDL model output described in detail in Appendix Section A.2. The solid blue curve in Figure 15b plots the FWHM of a Lorentz distribution fitted to the projection of Figure 15a onto the -axis, with a cut on the position-related pointing uncertainty given by the -axis value. The dashed green curve gives the fraction of total events remaining after applying such a cut. With no uncertainty cut (i.e. the -value of the solid blue curve at the right edge of Figure 15b), the FWHM of this distribution is 2.788 degrees, very close to the 2.746 degrees for the deterministic model (Figure 7). We intend to study the extent to which a cut on this parameter can be used in concert with other standard acceptance criteria (requiring sufficiently large separation between Compton scatters, choosing low-angle Compton scatters, etc.) to improve the overall pointing performance of the instrument.

4 Conclusions

The GammaTPC is a telescope concept based on a single-phase liquid argon time-projection chamber with a novel dual-scale pixel-based readout system to enable a significant improvement in sensitivity to MeV-scale gamma-rays over previous telescopes. The novel pixel-based charge readout allows for imaging of tracks of electrons scattered by Compton interactions of incident gamma-rays. The field of image recognition using deep neural networks is quite advanced, and we take advantage of that to explore several different network designs for reconstruction and uncertainty quantification of the initial position and direction of scattered electrons. We use a CNN for a deterministic reconstruction of the electron track head, and a similar CNN for a deterministic reconstruction of the initial scattering direction of the electron. We find that the deterministic model is able to locate electron-track heads with sub-millimeter precision, and is able to effectively reconstruct the initial scatter direction as well. We explore several options for uncertainty quantification of the electron-track head location reconstruction, focusing most of our attention on Evidential Deep Learning (EDL) (Amini et al., 2020). We find that an EDL model is able to accurately estimate its uncertainty, but that this comes at some cost in the overall accuracy in track head location estimation. An accuracy matching that of the deterministic model can be achieved by sacrificing approximately 75% of the data. However, we further find that the EDL model produces a pointing accuracy close to that of the deterministic model, given by the Angular Resolution Measurement (ARM). Removing events with large uncertainty in pointing due to large uncertainties in position location can improve the ARM further.

There are some likely avenues for improvement. We did not emphasize optimization of training hyperparameters and procedures in this analysis, so it is feasible that changes there could improve the performance of this model. A larger data set would also likely improve the training, and open up the possibility of a deeper model, which could also improve performance. We also envision refinements in both the base model and the uncertainty estimation approach. The base model at present uses 3D convolutions as feature extractors. This operation treats the data as embedded in a space with a Euclidean metric and tends to favor shorter range spatial interactions. Furthermore, most of the voxels in each sample are empty, leading to sparse data, which CNNs were not originally designed for. In this context, we intend to apply Graph Neural Networks (GNNs) as the base model, which have shown success in reconstruction tasks for neutrino physics applications (Ju et al., 2020; Thais et al., 2022). With respect to the uncertainty estimation, the absence of the off-diagonal terms in the predicted covariance between the different spatial directions likely led to a tendency towards larger prediction intervals than would have existed if off-diagonal terms were included. To address this issue, we intend to utilize Variational-Inference based Bayesian Neural Networks and a posteriori Laplace’s-Approximation based uncertainty estimation. Finally, a model that can predict both the Compton-scattered electron’s initial direction and an uncertainty estimate would enable us to perform a similar operation, placing a threshold on that uncertainty estimate. Given that, as we showed in Section 3.3, higher-energy tracks tend to have superior direction reconstruction (as opposed to the opposite effect with position reconstruction), combining these two estimates could be powerful. We intend to develop an uncertainty-estimating model for the direction reconstruction going forward.

Appendix A Parameterization of model outputs for use with revan

A.1 Deterministic track head location only model

To simulate the model response on the MEGAlib data set of Compton scatters, we interpolate in electron energy between the fitted standard deviations in Figure 4 to get an effective Gaussian error distribution as a function of initial electron energy, and sample from those distributions for each energy deposition. We then apply this sampled reconstruction error to all 3 dimensions (each one independently sampled) for a given Compton scatter to simulate the reconstruction error that would arise from using this model. We do not simulate incident gamma-rays with energies above 1,000 keV, therefore there are never electrons with energies higher than the highest value in our training data set, which is also 1,000 keV. Compton scattered electrons with energies below 50 keV are treated with the same error distribution as the 50 keV electron data, as at those energies the electron track is point-like on the scale of the pixel readout. For simplicity, and since the final detector configuration not yet determined (including the full drift length), we use the 5 cm drift model for all scatters. Regardless, the RMS error of the model predictions does not change much between 5 and 10 cm drift depth (see Figure 5), and furthermore this part of the analysis is only relevant for Figure 7.

A.2 Evidential Deep Learning model

As we established in Section 3.4, the predicted squared error and the true error follow a parabolic relationship. Figure 16 presents a slice of the 2D distribution from the top panel of Figure 11 at a constant true error of 0.3 cm (or a constant true squared error of 0.09 cm2), showing that the predicted squared error is indeed inverse-gamma distributed. The means and modes of these inverse-gamma distributions at each bin in true error can be reasonably approximated by parabolas centered at , seen in Figure 17. We can then use these parabolas to obtain shape parameters for the appropriate inverse-gamma distribution values for a given true error.

The steps for sampling a pair of true error and predicted uncertainty for a Compton scatter at a given energy are then as follows: First, we obtain the standard deviation of the Gaussian distribution describing the true error for a given energy by interpolating between the true error distributions fitted at the training energies. These distributions are shown in Figure 18. We again round up to 50 keV any scatters with an energy less than 50 keV. We next use our sampled error to draw a predicted uncertainty from an inverse-gamma distribution. To do this, we interpolate in energy parameters for parabolas characterizing the mean and mode of the inverse-gamma distribution based on the values fitted at the training energies. We then use the two parabolas to get the mean and mode of our inverse-gamma distribution at the value of our true error sample. We then draw a random value from the inverse-gamma distribution for the predicted squared error (e.g. Figure 16), and take its square root to obtain the predicted uncertainty. We again use the results of the 5 cm drift model for all scatters for the same reasons stated in Section A.2. This parameterization is used only to produce Figure 15.

References

- Abbott et al. (2017) Abbott, B., et al. 2017, Astrophys. J. Lett., 848, L12, doi: 10.3847/2041-8213/aa91c9

- Abbott et al. (2018) Abbott, T., et al. 2018, Astrophys. J. Suppl., 239, 18, doi: 10.3847/1538-4365/aae9f0

- Abdollahi et al. (2020) Abdollahi, S., et al. 2020, Astrophys. J. Suppl., 247, 33, doi: 10.3847/1538-4365/ab6bcb

- Abi et al. (2020) Abi, B., et al. 2020, Deep Underground Neutrino Experiment (DUNE), Far Detector Technical Design Report, Volume I: Introduction to DUNE. https://arxiv.org/abs/2002.02967

- Adams et al. (2020) Adams, D., Bass, M., Bishai, M., et al. 2020, Journal of Instrumentation, 15, P06017, doi: 10.1088/1748-0221/15/06/p06017

- Akerib et al. (2020) Akerib, D., et al. 2020, Nuclear Instruments and Methods in Physics Research Section A: Accelerators, Spectrometers, Detectors and Associated Equipment, 953, 163047, doi: 10.1016/j.nima.2019.163047

- Amini et al. (2020) Amini, A., Schwarting, W., Soleimany, A., & Rus, D. 2020, Advances in Neural Information Processing Systems, 33, 14927

- Amodei et al. (2016) Amodei, D., Olah, C., Steinhardt, J., et al. 2016, arXiv preprint arXiv:1606.06565

- Aprile et al. (2018) Aprile, E., et al. 2018, Physical Review Letters, 121, doi: 10.1103/physrevlett.121.111302

- Aramaki et al. (2022) Aramaki, T., Boezio, M., Buckley, J., et al. 2022, arXiv preprint arXiv:2203.06894

- Baker et al. (2019) Baker, N., Alexander, F., Bremer, T., et al. 2019, Workshop report on basic research needs for scientific machine learning: Core technologies for artificial intelligence, Tech. rep., USDOE Office of Science (SC), Washington, DC (United States)

- Bartels et al. (2017) Bartels, R., Gaggero, D., & Weniger, C. 2017, JCAP, 05, 001, doi: 10.1088/1475-7516/2017/05/001

- Baxter (2000) Baxter, J. 2000, J. Artif. Intell. Res., 12, 149

- Bellazzini et al. (2003) Bellazzini, R., Angelini, F., Baldini, L., et al. 2003, in Polarimetry in Astronomy, ed. S. Fineschi, Vol. 4843, International Society for Optics and Photonics (SPIE), 383 – 393, doi: 10.1117/12.459381

- Black et al. (2007) Black, J., Baker, R., Deines-Jones, P., Hill, J., & Jahoda, K. 2007, Nuclear Instruments and Methods in Physics Research Section A: Accelerators, Spectrometers, Detectors and Associated Equipment, 581, 755, doi: https://doi.org/10.1016/j.nima.2007.08.144

- Boggs, S. E. & Jean, P. (2000) Boggs, S. E., & Jean, P. 2000, Astron. Astrophys. Suppl. Ser., 145, 311, doi: 10.1051/aas:2000107

- Caputo et al. (2019) Caputo, R., et al. 2019, Astro2020 White Paper. https://arxiv.org/abs/1907.07558

- Chollet et al. (2015) Chollet, F., et al. 2015, Keras, https://keras.io

- Deng et al. (2018) Deng, Z., He, L., Liu, F., et al. 2018, Journal of Instrumentation, 13, P08019, doi: 10.1088/1748-0221/13/08/p08019

- DeVries & Taylor (2018) DeVries, T., & Taylor, G. W. 2018, arXiv preprint arXiv:1807.00502

- Dwyer et al. (2018) Dwyer, D., Garcia-Sciveres, M., Gnani, D., et al. 2018, Journal of Instrumentation, 13, P10007, doi: 10.1088/1748-0221/13/10/p10007

- Fleischhack (2021) Fleischhack, H. 2021, arXiv e-prints, arXiv:2108.02860. https://arxiv.org/abs/2108.02860

- Gal & Ghahramani (2016) Gal, Y., & Ghahramani, Z. 2016, in International Conference on Machine Learning, PMLR, 1050–1059

- Gneiting & Raftery (2007) Gneiting, T., & Raftery, A. E. 2007, Journal of the American statistical Association, 102, 359

- Hendrycks & Gimpel (2016) Hendrycks, D., & Gimpel, K. 2016, arXiv preprint arXiv:1610.02136

- Ihme et al. (2022) Ihme, M., Chung, W. T., & Mishra, A. A. 2022, Progress in Energy and Combustion Science, 91, 101010

- Ikeda et al. (2021) Ikeda, T., Takada, A., Abe, M., et al. 2021, Progress of Theoretical and Experimental Physics, 2021, doi: 10.1093/ptep/ptab091

- Ivezić et al. (2019) Ivezić, v. Z., et al. 2019, Astrophys. J., 873, 111, doi: 10.3847/1538-4357/ab042c

- Jeong & Maddala (1993) Jeong, J., & Maddala, G. 1993, Handbook of Statistics, 11, 573

- Ju et al. (2020) Ju, X., Farrell, S., Calafiura, P., et al. 2020, arXiv preprint arXiv:2003.11603

- Kendall et al. (2015) Kendall, A., Badrinarayanan, V., & Cipolla, R. 2015, arXiv preprint arXiv:1511.02680

- Kingma & Ba (2014) Kingma, D. P., & Ba, J. 2014, Adam: A Method for Stochastic Optimization, arXiv, doi: 10.48550/ARXIV.1412.6980

- Kuijken et al. (2019) Kuijken, K., et al. 2019, Astron. Astrophys., 625, A2, doi: 10.1051/0004-6361/201834918

- Li et al. (2017) Li, T., Zeng, M., Feng, H., et al. 2017, Nuclear Instruments and Methods in Physics Research Section A: Accelerators, Spectrometers, Detectors and Associated Equipment, 858, 62, doi: https://doi.org/10.1016/j.nima.2017.03.050

- Li et al. (2016) Li, Y., Tsang, T., Thorn, C., et al. 2016, Nuclear Instruments and Methods in Physics Research Section A: Accelerators, Spectrometers, Detectors and Associated Equipment, 816, 160, doi: https://doi.org/10.1016/j.nima.2016.01.094

- Manly (2018) Manly, B. F. 2018, Randomization, Bootstrap and Monte Carlo Methods in Biology: Texts in Statistical Science (chapman and hall/CRC)

- Mitchell (1980) Mitchell, T. M. 1980, The need for biases in learning generalizations, Cbm-tr-117, Computer Science Department, Rutgers University

- Moiseev et al. (2015) Moiseev, A. A., Ajello, M., Buckley, J. H., et al. 2015, Compton-Pair Production Space Telescope (ComPair) for MeV Gamma-ray Astronomy, arXiv, doi: 10.48550/ARXIV.1508.07349

- NEA (2019) NEA. 2019, PENELOPE 2018: A code system for Monte Carlo simulation of electron and photon transport: Workshop Proceedings, Barcelona, Spain, 28 January – 1 February 2019, (OECD Publishing, Paris), doi: https://doi.org/10.1787/32da5043-en

- Nguyen et al. (2015) Nguyen, A., Yosinski, J., & Clune, J. 2015, in Proceedings of the IEEE conference on computer vision and pattern recognition, 427–436

- O’Malley et al. (2019) O’Malley, T., Bursztein, E., Long, J., et al. 2019, Keras Tuner, https://github.com/keras-team/keras-tuner

- Pearce et al. (2018) Pearce, T., Brintrup, A., Zaki, M., & Neely, A. 2018, in International Conference on Machine Learning, PMLR, 4075–4084

- Peirson et al. (2021) Peirson, A., Romani, R., Marshall, H., Steiner, J., & Baldini, L. 2021, Nuclear Instruments and Methods in Physics Research Section A: Accelerators, Spectrometers, Detectors and Associated Equipment, 986, 164740, doi: https://doi.org/10.1016/j.nima.2020.164740

- Pena-Perez et al. (2020) Pena-Perez, A., Doering, D., Gupta, A., et al. 2020, in 2020 IEEE Nuclear Science Symposium and Medical Imaging Conference Record (NSS/MIC), 1–2, doi: 10.1109/NSS/MIC42677.2020.9507812

- Romano et al. (2019) Romano, Y., Patterson, E., & Candès, E. J. 2019, arXiv preprint arXiv:1905.03222

- Seabold & Perktold (2010) Seabold, S., & Perktold, J. 2010, in 9th Python in Science Conference

- Shutt et al. (2023) Shutt, T., et al. 2023, in preparation

- Srivastava et al. (2014) Srivastava, N., Hinton, G., Krizhevsky, A., Sutskever, I., & Salakhutdinov, R. 2014, The Journal of Machine Learning Research, 15, 1929

- Szydagis et al. (2011) Szydagis, M., Barry, N., Kazkaz, K., et al. 2011, Journal of Instrumentation, 6, P10002, doi: 10.1088/1748-0221/6/10/p10002

- Thais et al. (2022) Thais, S., Calafiura, P., Chachamis, G., et al. 2022, arXiv preprint arXiv:2203.12852

- Tompson et al. (2015) Tompson, J., Goroshin, R., Jain, A., LeCun, Y., & Bregler, C. 2015, in Proceedings of the IEEE conference on computer vision and pattern recognition, 648–656

- V. Schönfelder (2000) V. Schönfelder, e. a. 2000, Astronomy and Astrophysics Supplement Series, 143, 145, doi: 10.1051/aas:2000101

- Weidenspointner et al. (2000) Weidenspointner, G., Varendorff, M., Kappadath, S. C., et al. 2000, AIP Conference Proceedings, 510, 467, doi: 10.1063/1.1307028

- Yoneda et al. (2018) Yoneda, H., Saito, S., Watanabe, S., Ikeda, H., & Takahashi, T. 2018, Nuclear Instruments and Methods in Physics Research Section A: Accelerators, Spectrometers, Detectors and Associated Equipment, 912, 269, doi: https://doi.org/10.1016/j.nima.2017.11.078

- Zhou (2021) Zhou, Z.-H. 2021, in Machine learning (Springer), 181–210

- Zoglauer (2019) Zoglauer, A. 2019, MEGAlib: Medium Energy Gamma-ray Astronomy library. http://ascl.net/1906.018

- Zoglauer & Kanbach (2003) Zoglauer, A., & Kanbach, G. 2003, in X-Ray and Gamma-Ray Telescopes and Instruments for Astronomy, ed. J. E. Truemper & H. D. Tananbaum, Vol. 4851, International Society for Optics and Photonics (SPIE), 1302 – 1309, doi: 10.1117/12.461177