Low-Dimensional Gradient Helps Out-of-Distribution Detection

Abstract

Detecting out-of-distribution (OOD) samples is essential for ensuring the reliability of deep neural networks (DNNs) in real-world scenarios. While previous research has predominantly investigated the disparity between in-distribution (ID) and OOD data through forward information analysis, the discrepancy in parameter gradients during the backward process of DNNs has received insufficient attention. Existing studies on gradient disparities mainly focus on the utilization of gradient norms, neglecting the wealth of information embedded in gradient directions. To bridge this gap, in this paper, we conduct a comprehensive investigation into leveraging the entirety of gradient information for OOD detection. The primary challenge arises from the high dimensionality of gradients due to the large number of network parameters. To solve this problem, we propose performing linear dimension reduction on the gradient using a designated subspace that comprises principal components. This innovative technique enables us to obtain a low-dimensional representation of the gradient with minimal information loss. Subsequently, by integrating the reduced gradient with various existing detection score functions, our approach demonstrates superior performance across a wide range of detection tasks. For instance, on the ImageNet benchmark with ResNet50 model, our method achieves an average reduction of 11.15 in the false positive rate at 95 recall (FPR95) compared to the current state-of-the-art approach. The code would be released.

Index Terms:

out-of-distribution detection, gradient dimension reduction, deep neural networks1 Introduction

In an open-world setting, a reliable system should not only provide accurate predictions but also issue appropriate warnings when encountering unknown data. Consequently, the field of out-of-distribution (OOD) detection has emerged as an essential area of research alongside the rapid development of deep neural networks (DNNs). Typically, DNNs are assumed to be trained and tested on datasets drawn from the same distribution. This assumption allows DNNs to generate precise predictions on test data, known as in-distribution (ID) data. However, in open-world scenarios, this assumption is frequently invalidated. For instance, in autonomous driving, it is impractical for the training dataset to encompass all potential scenarios. Therefore, the presence of OOD inputs necessitates the ability to recognize them for a DNN to be reliable. In this context, the development of algorithms for OOD detection holds substantial practical significance.

A rich line of research has been developed for OOD detection. For instance, output-based methods have revealed that the prediction confidence of ID data is generally higher than that of OOD data. Consequently, multiple score functions have been devised based on model outputs [1, 2, 3]. Additionally, several studies have investigated the disparities in intermediate features between ID and OOD data [4, 5, 6, 7]. These studies have found that features of ID data tend to cluster together and differ significantly from those of OOD data. Building upon this observation, various score functions based on features, such as Maha [6] and KNN [7], have been proposed for OOD detection.

All the aforementioned methods utilize forward information, i.e., information generated in the forward process, to detect OOD inputs. However, the disparities between ID and OOD data are also significant in the backward process of DNNs [8, 9, 10, 11, 12, 13]. For instance, the parameter gradient magnitude for ID data is generally smaller than that for OOD data, given the well-trained nature of DNNs on ID data. This observation has inspired several intriguing OOD detection methods, such as GradNorm [9], which uses the gradient norm as its score function. However, a comprehensive investigation into the divergence of parameter gradients between ID and OOD data is still lacking. The primary challenge arises from the high dimensionality of parameter gradients in modern DNNs. The distance measurement within the high dimensional space is inherently unreliable due to the ”curse of dimensionality” [14], which refers to the tendency for distances between data points to become increasingly similar as the number of dimensions increases, thereby rendering the distinction between ID and OOD data difficult to discern in such space.

| Method | Type | Simple Modification | FPR95 | AUROC |

|---|---|---|---|---|

| MSP [1] | Output-based | An additional linear network | 32.28 (32.38) | 91.87 (9.05) |

| Energy [2] | Output-based | An additional linear network | 27.90 (29.57) | 93.09 (6.04) |

| ReAct [4] | Feature-based | Adaptive threshold | 23.03 (7.67) | 95.45 (2.15) |

| BTAS [5] | Feature-based | Adaptive threshold | 23.42 (34.13) | 95.40 (8.36) |

| Maha [6] | Feature-based | None | 87.30 (5.79) | 49.31 (14.78) |

| KNN [7] | Feature-based | None | 41.18 (15.12) | 88.10 (2.84) |

| Ensemble | - | - | 19.55(11.15) | 96.12(2.82) |

To address the challenge posed by high dimensionality, we propose to perform dimension reduction on the gradient. Admittedly, dimension reduction involves the potential loss of crucial information associated with significant dimensions, which may undermine its efficacy in OOD detection. Nevertheless, recent studies indicate that the gradient of ID data may primarily fall into a relatively low-dimensional space. The related theoretical discussions and empirical evaluations include:

- •

-

•

Low-rank adaptation [17]: The change of network parameters for a fine-tuning task is a low-rank adaptation matrix;

- •

These discussions shed light on an important insight: parameter gradients lie in a relatively low-dimensional space, offering the possibility of linear dimension reduction while preserving significant information for effective OOD detection. The key problem now revolves around identifying the subspace where the principal components of gradients reside. One approach we use comes from NFK [21], which employs an efficient algorithm to compute the top eigenvectors of a gradient covariance matrix. However, this method incurs significant computational costs due to the high dimensionality of gradients. To mitigate this burden, we propose an alternative method to estimate the PCA subspace. Building on the observation that gradients of samples from the same class exhibit a pronounced degree of directional similarity [19, 22], we suggest using the average gradient of each class to compose the subspace. This approach is easier to implement, and our empirical results in Sec. 3.2 demonstrate that it can represent the top-C (C is the number of classes of ID dataset) principal components of PCA. In practical applications, users can flexibly choose the above two approaches based on data volume and model size considering that PCA subspace is more accurate but requires longer computational time. Once the subspace is determined, the gradients can be projected onto it, yielding low-dimensional representations. These representations can then be effectively utilized to distinguish between ID and OOD samples.

Given that the reduced gradients serve as a distinctive form of data representation [23, 13], the approaches that use forward embeddings can be seamlessly integrated with our reduced gradients. The possible choices include MSP[1] and Energy[2], which utilize output differences; ReAct[4] and BATS[5], which employ feature distribution differences; and Mahalanobis [6] and KNN [7], which use pairwise distance information. With simple modifications to these approaches (see Table I), our reduced gradients can be effectively applied on them and show remarkable improvements in detection performance. Additionally, we discover that an ensemble of forward and backward information can further improve our detection performance since data samples exhibiting minimal disparities in the forward features may exhibit substantial variations in the backward gradients.

The overall framework of our method is illustrated in Figure 1. To evaluate its effectiveness, we conduct experiments on two widely used benchmark datasets: CIFAR10 [24] and ImageNet [25], along with seven OOD datasets and six different architectures. We evaluate the performance of the methods [1, 2, 4, 5, 7, 6] mentioned above based on forward features and backward gradients respectively. The experimental results demonstrate the superior performance of our low-dimensional gradients on both benchmarks, see Table I. Notably, on the large-scale ImageNet benchmark with ResNet50 model, our method outperforms the best baseline by in terms of False Positive Rate at True Positive Rate (FPR95), and by in terms of Area Under the Receiver Operating Characteristic Curve (AUROC). The contributions of this paper can be summarized as follows:

-

•

We present a pioneering investigation into the utilization of complete gradient information for OOD detection, offering novel insights into this field.

-

•

Our proposed gradient dimension reduction algorithm offers a valuable technical contribution, providing a solid foundation for future studies on leveraging gradients in OOD detection.

-

•

We demonstrate the remarkable performance of our low-dimensional gradients across a diverse range of OOD detection tasks.

2 Related Work

The existing OOD detection approaches can be categorized into three types: 1) density-based; 2) post-hoc; 3) confidence enhancement.

Density-based methods explicitly model the ID data with some probabilistic models and flag samples in low-density regions as OOD data. For example, Mahalanobis [6] uses class-conditional Gaussian distribution to model the ID data and detect OOD samples with their likelihoods. Flow-based methods [26, 27, 28, 29] are also effective in probabilistic modeling for OOD detection. Besides, researchers also investigate the effect of an ensemble of multiple density models [30]. However, due to the difficulty of optimizing generative models, density-based methods are hardly used in practice.

Post-hoc methods are the most popular in OOD detection because of their portability and effectiveness. These approaches aim to design a score function that assigns high scores to ID data and low scores to OOD data, enabling the separation of OOD samples by applying an appropriate threshold. Based on the way of calculating scores, these methods can be broadly divided into three types: 1) output-based; 2) feature-based; and 3) gradient-based. Classical output-based methods, such as MSP[1], ODIN[3], and Energy[2], design score functions based on the observation that classification probabilities of OOD samples tend to be lower than those of normal data. In pursuit of enhanced detection performance, ReAct [4] and BATS [5] propose to truncate the abnormal values of intermediate features, thereby amplifying the disparities between ID and OOD data in the output space. Feature-based approaches, as exemplified by KNN [7], Maha [6], and other related works [31, 32], primarily leverage the aggregation property of ID features to identify OOD samples. For instance, Maha [6] utilizes the Mahalanobis distance between the feature of inputs and the mean feature of corresponding classes as its score function for OOD detection. Similarly, KNN [7] employs the Euclidean distance to the -th nearest neighborhood as a measurement to detect OOD data. Furthermore, several studies [31, 32] leverage the inherent low-dimensional characteristics of features and introduce reconstruction errors as detection scores to identify OOD data. Gradient-based approaches, such as GradNorm [9], Purview [10], GraN [33], and others [12, 11, 8], mainly focus on utilizing the norm of parameter gradients to detect OOD samples. For instance, GradNorm [9] shows that the gradient norm calculated from the categorical cross-entropy loss is generally lower on OOD data, thereby designing detection functions based on the norm. Other studies employ the gradient norm in different ways, like introducing binary networks [12], etc [10, 33, 11, 8]. Apart from the above-mentioned approaches, Vim [34] empirically demonstrates that a combination of output and feature information can further improve detection performance. Our proposed method also belongs to the post-hoc type, which is plug-and-play in practice.

Confidence enhancement methods design various regularization terms to amplify the difference between ID and OOD data. One type of them is to utilize auxiliary OOD datasets to fine-tune the model with designed training losses that explicitly enlarge the output difference between ID and auxiliary OOD data. The most classical method is outlier exposure [35], which encourages the output of OOD data to be uniformly distributed. Another type is to apply contrastive losses on ID dataset to promote stronger ID-OOD separability, like SimCLR [36], SupCon [37], and Cider[38]. However, confidence enhancement methods typically require model retraining, and in some cases, even necessitate the acquisition of additional OOD data. These requirements render such methods less practical for real-world applications.

3 Method

3.1 Preliminary

The framework of OOD detection can be described as follows. We consider a classification problem with classes, where stands for the input space and for the label space. The joint data distribution over is denoted as . Let : be a model trained on samples drawn i.i.d. from with parameter . Then the distribution of ID data is denoted as , which is the marginal distribution of over . The distribution of OOD data is presented as , whose label set has no intersection with . The goal of OOD detection is to decide whether a test input is from or . For post hoc methods, the decision is made through a score function as follows:

where is a threshold and samples with scores higher than are classified as ID data. The threshold is usually set based on ID data to guarantee that a high fraction of ID data (e.g. 95) is correctly identified as ID samples.

3.2 Low-dimensional Gradient Extraction

In this section, we progressively answer two questions: 1) how to calculate gradients in the label-agnostic condition? 2) how to reduce the gradient dimension and simultaneously retain as much information as possible? In the context of OOD detection, input labels are inaccessible to users, and thus the normal cross-entropy loss used in the training process is not applicable to calculating gradients for test data. Borrowing the idea from JEM [39], we induce a label-free energy function that has the same conditional probability as the original model:

| (1) |

It essentially reframes a conditional distribution over given to an induced unconditional distribution over . With the energy-based model , we can calculate the gradient given any input without as follows:

| (2) |

It is equivalent to a weighted average of the derivative of outputs for all labels. To eliminate the scale discrepancies among different dimensions, we normalize it using the mean and variance matrix calculated from the training data:

where and are the mean and variance matrix, and is the normalized gradient of sample . After obtaining the label-agnostic gradient, the next crucial problem is reducing its dimension to facilitate its practical utilization. Recent empirical studies, such as LoRA [17] and DLDR [15], have shown that the gradient of ID data resides in a low-dimensional subspace. The low-rank spectrum analysis [18, 19, 20] of neural tangent kernel (NTK, [40]) has also provided theoretical evidence explaining the above phenomenon. Therefore, a simple linear dimension reduction method is sufficient for extracting a low-dimensional representation of the gradient. The most commonly used algorithm is principal component analysis (PCA, [41]), which employs the top-K eigenvectors of the gradient covariance matrix as its dimension reduction matrix. Denote the eigendecomposition as follows:

| (3) |

where is the gradient covariance matrix computed on all the training data. Based on the diagonal values of , PCA selects column vectors of corresponding to the first large eigenvalues. Then the low-dimensional representation is calculated as follows:

Because of the high dimensionality of gradients, the above eigen decomposition cannot be calculated directly in practice. Hence, we adopt a power iteration method [42, 43] to obtain the eigenvectors under reasonable computation costs, following the idea in NFK [21]. Specific algorithms are in Appendix A.

In addition to the PCA algorithm, in this paper, we also propose an alternative approach to estimate the low-dimensional subspace encompassing principal components. Drawing upon the observation that the parameter update induced by a sample has a more pronounced influence on samples belonging to the same class while exerting minimal influence on samples from other classes [44, 45, 46], it is reasonable to infer that parameter gradients from samples within the same class tend to align in similar directions. Previous empirical studies [19, 22] support the above inference by demonstrating that parameter gradients from samples of the same class exhibit a high degree of direction similarity. Moreover, our empirical experiments, detailed in Appendix B, further validate the above claim by revealing a high cosine similarity between the gradients of samples from the same class and their corresponding gradient centers. Based on these findings, we propose utilizing the average gradient (AG) of each class as an alternative subspace estimation:

| (4) |

In this way, the extraction of low-dimensional subspace becomes easy to implement. However, this subspace is inaccurate compared to the PCA one. Specifically, we measure the reconstruction error of gradients of ID training and test data to the PCA and AG subspace, which reflects the amount of information lost of gradients after projecting them into the low-dimensional subspace. The results in Table II indicate that the gradient information is partially lost in the AG subspace while almost completely retained in the PCA subspace. Furthermore, the similar error of AG subspace and 10-dimensional PCA subspace implies that the average gradient direction is consistent with the top-C (C is the number of classes of ID dataset) principal components of PCA, which demonstrates the rationality of using AG subspace to reduce the gradient dimension. Considering the computational cost of PCA, the AG subspace is more suitable for scenarios involving large volumes of data and complex models.

| Subspace | Dim | Train | Test |

|---|---|---|---|

| Avg Grad | 10 | 0.6569±0.0163 | 0.6791±0.0237 |

| PCA | 10 | 0.6004±0.0141 | 0.6165±0.0225 |

| PCA | 200 | 0.0790±0.0116 | 0.0841±0.0214 |

Based on the above two subspace extraction methodologies, the overall algorithm for calculating the low-dimensional gradient is shown in Alg 1. It is worth noticing that although the subspace extraction process takes some computing time, the follow-up projection process can be fast with efficient parallel calculation. Specifically, the subspace can be partitioned into multiple lower-dimensional subspaces, each of which can be stored on different GPUs. Then, the projection process can be independently executed on each GPU. By subsequently concatenating the outcomes obtained from each GPU, the final low-dimensional gradient can be obtained efficiently. Specific analysis about the computation time can be seen in Sec 5.4.

3.3 Score Function Design

This section elucidates the application of low-dimensional gradients in OOD detection. To design effective score functions based on the gradients, we first analyze their properties to figure out the divergence between ID and OOD data. The visualization of our low-dimensional gradients presented in Figure 2 reveals a discernible phenomenon: the low-dimensional gradients derived from ID data tend to exhibit clustering, forming cohesive groups, while simultaneously manifesting a distinctive separation from the gradients originating from OOD data. This observation demonstrates a striking parallel to the clustering and separation patterns observed in forward features. Therefore, prior distance-based methods such as Maha [6] and KNN [7] can be seamlessly adapted to operate on our gradients. Furthermore, since our gradients show the separability between samples of different classes, they can serve as a distinctive form of data representation and be used to classify samples. Thus, output-based methods [1, 2] can also be combined with our gradients. Apart from the visualization analysis, we also investigate the distribution of our gradients to dig up their distinctions between ID and OOD data. As Figure 3 shows, the values of OOD gradients fall within the range of ID gradients in the top-n dimensions (n is a number close to the number of classes ), while in the rest dimensions, their values are noticeably larger. Hence, we can employ previous feature rectification methods [4, 5] on the rest dimensions of our gradients to obtain improved detection performance compared to the output-based methods.

Subsequently, we provide detailed explanations regarding the integration of our gradients with output-based methods [1, 2], feature rectification methods [4, 5], and distance-based methods [6, 7]. Besides, inspired by the ensemble strategy proposed in Vim [48], we also explore a simple ensemble technique that combines forward and backward information to achieve better OOD detection performance.

3.3.1 Combination with output-based methods

Output-based approaches aim to capture the dissimilarities in feature representations between ID and OOD data by modulating the network output using a linear layer. Consequently, we introduce an auxiliary linear network, trained on our reduced gradient, to generate the necessary output for computing score functions. The network architecture is:

| (5) |

The input is a low-dimensional gradient and the output is a -dimensional tensor that indicates the prediction probability of each class ( is the number of categories of ID data). To train the network, we adopt a common training setting, where low-dimensional gradients of ID training data as the training dataset, the cross-entropy loss with the label of training data as the loss function, and the stochastic gradient descent (SGD) as the optimization approach. The simplicity of the network enables a rapid training process. Once the linear network has been effectively trained, we can leverage its output to discriminate between ID and OOD samples. The basic score function is the maximum softmax confidence [1], i.e.,

| (6) |

Furthermore, the energy [2] score function is proved to be more efficient in OOD detection, which quantifies the free energy function associated with each sample:

| (7) |

Both and exhibit large values on ID data and vice versa.

3.3.2 Combination with feature rectification methods

To further amplify the output difference between ID and OOD data, prior studies have explored the distribution of penultimate features and proposed feature rectification techniques, as OOD data is observed to exhibit larger feature values [4, 5]. However, our experimental findings indicate that applying these methods directly to our reduced gradients yields inferior performance compared to output-based approaches. Based on the distribution observation in Figure 3, we propose to exclusively rectify our low-dimensional gradients in certain dimensions and then feed them into the aforementioned linear network in (5). The overall process can be expressed as:

| (8) |

where and we denote as the last- dimensional vector of . Different rectification approaches employ different Clip functions to truncate the values of gradients. For example, ReAct [4] method truncates activations above a threshold to limit the effect of noises:

where a threshold that is determined based on the -th percentile of estimated on ID data. For example, when , it indicates that 90 percent of ID gradients are less than . Apart from ReAct approach, BATS [5] method proposes to rectify extreme activations to the boundary values of typical sets with the guidance of batch normalization:

where and are the learnable bias and weight of batch normalization layer, respectively, and is a hyperparameter. The typical set is defined as the interval [, ], within which all activations are constrained to reside. After the rectification of gradients, both the ReAct and BATS methods utilize the energy score function (Eq. (7)) to detect OOD samples.

3.3.3 Combination with distance-based methods

Features of ID data tend to cluster together and be away from those of OOD data; thus, previous works designed various distance measurements as score functions to detect OOD data. For our low-dimensional gradients, these methods should be equally effective since gradients share the same aggregation and separation properties as features, as shown in Figure 2. One typical measurement is the Mahalanobis distance [6] as follows:

| (9) |

where and are the empirical class mean and covariance of training samples. The Mahalanobis distance-based method imposes a class-conditional Gaussian distribution assumption about the underlying gradient space, while another distance-based approach named KNN[7] is more flexible and general without any distributional assumptions. It utilizes the Euclidean distance to the -th nearest neighbor of training data as its score function, which can be expressed as follows:

| (10) |

Both and functions exhibit larger values on ID data and smaller values on OOD data since they take the negative value of the distance measurements.

3.3.4 Ensemble of forward and backward information

Drawing inspiration from the concept of integrating multiple sources of information to enhance detection performance, as demonstrated in [48], our work also tries to combine the scores obtained from the feature/output space with those derived from our reduced gradients. The algorithm for this combination is mathematically expressed as follows:

| (11) |

where is the weight coefficient to ensure that and are of similar magnitude. In this paper, we set as 1 in our experiments.

4 Experiments

In this section, we conduct a comparative analysis of the performance attained by employing gradients and features independently across six distinct detection methods. The analysis is performed on two widely recognized benchmark datasets, namely CIFAR10 [24] and ImageNet [25], with six different network architectures. Additionally, we evaluate another three advanced detection approaches to validate our superior performance in OOD detection. Moreover, we assess the detection performance of utilizing the combined information from both forward and backward sources, which further reduces FPR95 and improves AUROC. At the end of this section, we investigate the impact of reduced dimensionality on our detection performance as well as the influence of various hyperparameters in the underlying methods. Through a comprehensive series of experiments, we provide compelling evidence for the effectiveness of employing low-dimensional gradients in OOD detection, thereby offering novel insights for future research endeavors. The detailed experimental configurations and settings are presented below.

OOD Datasets

We evaluate our method on two popular benchmarks: CIFAR10 [24] and ImageNet [25]. When using CIFAR10 as ID data, we consider four OOD datasets commonly used in literature [7, 49, 4, 50, 51, 1, 2]: Textures [52], SVHN [53], LSUN-C [54], and iSUN [55]. As for the evaluation on ImageNet, we choose four commonly used OOD datasets that are subsets of: iNaturalist [56], SUN [57], Places [58], and Texture [52] with non-overlapping categories w.r.t. ImageNet.

Evaluation Metrics

Two classical metrics are reported in this paper: 1) FPR95: the false positive rate of OOD samples when the true positive rate of ID samples is at 95. 2) AUROC: the area under the receiver operating characteristic curve. The smaller the FPR95 and the higher the AUROC, the better the performance.

Models and Hyper-parameters

In the case of CIFAR10, we respectively adopt the ResNet18 [59], MobileNet-v2 [60], DenseNet121 [61] and Wideresnet-28-10 [62] as our base model.

We train them using the SGD algorithm with weight decay 0.0005, momentum 0.9, cosine schedule, initial learning rate 0.1, epoch 200, and batch size 128. For MobileNet-v2 architecture, we change its stride from 2 to 1 to make it more suitable for CIFAR10 dataset. Noticing the high ratio of infinite values in the variance matrix for MobileNet-v2 and DenseNet121, we excluded from gradient normalization for these models. The pivotal hyper-parameter of our low-dimensional gradient is the reduced dimensionality, denoted as . For the ResNet18, MobileNet-v2 and DenseNet architectures, we adopt the PCA subspace with to reduce the gradient dimension. For the Wideresnet-28-10 model, we adopt the average gradient subspace considering that it has much more parameters.

Regrading the ImageNet dataset, we use the pre-trained model in Pytorch [63] with different architectures including ResNet50 [59], MobileNet-v2 [60], DenseNet121 [61] and ViT-B16 [64]. The top-1 test accuracy of them are respectively , , and . We adopt the average gradient subspace for all the models to obtain the low-dimensional gradient.

Baseline Methods

In this paper, we apply gradients and features separately within six different detection methods, including two output-based methods, namely MSP [1] and Energy [2], two feature rectification methods, namely ReAct [4] and BATS [5], and two distance-based methods, namely Mahanobias [6] and KNN [7]. Additionally, we validate the superiority of our proposed approach by comparing it with three other advanced detection methods, namely ODIN [3], GradNorm [9], and Vim [48]. Notably, all of the aforementioned approaches employ pre-trained networks in a post hoc manner.

4.1 Evaluation on Large-scale ImageNet Benchmark

We first evaluate our method on the large-scale ImageNet benchmark, which poses significant challenges due to the substantial volume of training data and model parameters. The comparison results are presented in Table III, where we evaluate six different detection methods on four networks using either features or our low-dimensional gradients as inputs. Given the poor performance of the Mahalanobis method [6] on the ResNet50 model, we do not evaluate it on other networks. Additionally, since ViT uses layer normalization instead of batch normalization, we exclude the BATS method [5] from testing on the ViT model. To control our computational overhead, we randomly select 50000 training samples as the training dataset for our detection algorithms. We train the linear network in Eq.(5) with learning rate, batch size, and epochs, achieving a test accuracy of on ResNet50, on MobileNet-v2, on DenseNet121 and on ViT. For ReAct and BATS, we choose to truncate the last 50 dimensions of the 1000-dimensional gradients with and . Regarding the KNN method, we set for both forward and backward embeddings.

It is worth noticing that our reduced gradient integrated with the simplest detection algorithm MSP always achieves remarkable performance. For example, on the ResNet50 architecture, our method has FPR95 of and AUROC of , reducing the FPR95 by and improving the AUROC by compared to the original MSP. Moreover, combing with feature rectification methods like ReAct [4] and BATS [5], our performance can be further improved. For instance, on ResNet50 model, our approach achieves SOTA average performance with FPR95 of and AUROC of based on ReAct method, surpassing the best baseline by in FPR95 and in AUROC. One expectation is for SUN dataset, where ReAct works better for features, indicating that the choice of embedding is indeed task-dependent. But overall our low-dimensional gradients are quite promising for distinguishing ID and OOD data.

There is an impeccable performance of our method on the Places OOD dataset with ResNet50 model, which implies a significant disparity in the gradient space between the ImageNet and Places datasets. To gain further insight into this disparity, we randomly choose an OOD sample in the Places dataset and an ID sample in the ImageNet dataset and analyze the density distribution of their forward features and reduced gradients. The result presented in Figure 4 demonstrates that their distinctions are marginal in the feature space but pronounced in the gradient space, which aligns with the performance we achieved on the Places dataset.

| Methods | Embedding | iNaturalist | SUN | Places | Texture | Average | |||||

| FPR95 | AUROC | FPR95 | AUROC | FPR95 | AUROC | FPR95 | AUROC | FPR95 | AUROC | ||

| ResNet50, ID Acc | |||||||||||

| MSP | Feature | 52.77 | 88.42 | 68.58 | 81.75 | 71.57 | 80.63 | 66.13 | 80.46 | 64.76 | 82.82 |

| Gradient | 42.20 | 90.05 | 67.82 | 81.45 | 0.00 | 99.99 | 19.50 | 95.98 | 32.38 | 91.87 | |

| Energy | Feature | 53.93 | 90.59 | 58.27 | 86.73 | 65.40 | 84.13 | 52.29 | 86.73 | 57.47 | 87.05 |

| Gradient | 28.79 | 93.39 | 67.52 | 82.26 | 0.00 | 100.00 | 15.28 | 96.70 | 27.90 | 93.09 | |

| ReAct | Feature | 19.49 | 96.40 | 24.07 | 94.40 | 33.48 | 91.92 | 45.74 | 90.47 | 30.70 | 93.30 |

| Gradient | 19.87 | 95.77 | 45.34 | 90.52 | 0.00 | 100.00 | 26.90 | 95.50 | 23.03 | 95.45 | |

| BATS | Feature | 54.12 | 90.59 | 58.31 | 86.74 | 65.39 | 84.14 | 52.38 | 86.70 | 57.55 | 87.04 |

| Gradient | 20.23 | 95.69 | 45.40 | 90.53 | 0.00 | 100.00 | 28.05 | 95.38 | 23.42 | 95.40 | |

| Maha | Feature | 93.70 | 65.81 | 97.56 | 49.91 | 97.63 | 49.44 | 37.13 | 91.18 | 81.51 | 64.09 |

| Gradient | 95.41 | 47.93 | 96.88 | 52.66 | 100.00 | 37.87 | 56.90 | 58.78 | 87.30 | 49.31 | |

| KNN | Feature | 63.85 | 85.40 | 70.46 | 81.63 | 76.27 | 77.52 | 14.63 | 96.49 | 56.30 | 85.26 |

| Gradient | 42.76 | 91.20 | 86.98 | 66.92 | 33.06 | 94.94 | 10.90 | 97.30 | 43.43 | 87.59 | |

| MobileNet-v2, ID Acc | |||||||||||

| MSP | Feature | 59.83 | 86.72 | 74.18 | 78.88 | 76.88 | 78.14 | 70.99 | 78.95 | 70.47 | 80.67 |

| Gradient | 42.94 | 91.27 | 61.20 | 85.72 | 67.94 | 82.84 | 61.45 | 80.77 | 58.38 | 85.15 | |

| Energy | Feature | 55.31 | 90.34 | 59.36 | 86.24 | 66.27 | 83.21 | 54.52 | 86.58 | 58.87 | 86.59 |

| Gradient | 39.65 | 92.81 | 60.77 | 86.71 | 66.66 | 83.93 | 53.12 | 86.45 | 55.05 | 87.48 | |

| ReAct | Feature | 45.16 | 92.40 | 53.24 | 87.58 | 60.95 | 84.39 | 41.08 | 90.85 | 50.11 | 88.81 |

| Gradient | 34.80 | 93.22 | 50.09 | 89.44 | 57.05 | 87.02 | 40.19 | 90.91 | 45.53 | 90.15 | |

| BATS | Feature | 55.31 | 90.34 | 59.36 | 86.24 | 66.27 | 83.21 | 54.52 | 86.58 | 58.87 | 86.59 |

| Gradient | 32.42 | 93.35 | 46.96 | 89.72 | 53.65 | 87.30 | 39.60 | 90.93 | 43.16 | 90.33 | |

| KNN | Feature | 99.81 | 29.23 | 98.90 | 46.94 | 99.42 | 42.40 | 47.85 | 85.47 | 86.50 | 51.01 |

| Gradient | 50.68 | 89.44 | 74.61 | 76.74 | 78.88 | 73.59 | 57.09 | 88.94 | 65.32 | 82.18 | |

| DenseNet121, ID Acc | |||||||||||

| MSP | Feature | 49.26 | 89.05 | 67.04 | 81.54 | 69.22 | 81.05 | 67.07 | 79.19 | 63.15 | 82.71 |

| Gradient | 38.20 | 89.96 | 67.90 | 78.91 | 50.54 | 86.17 | 54.60 | 82.75 | 52.81 | 84.45 | |

| Energy | Feature | 39.69 | 92.66 | 51.98 | 87.40 | 57.84 | 85.17 | 52.11 | 85.42 | 50.41 | 87.66 |

| Gradient | 34.50 | 91.34 | 67.70 | 79.96 | 48.12 | 88.40 | 51.90 | 85.73 | 50.56 | 86.36 | |

| ReAct | Feature | 28.08 | 94.51 | 45.34 | 90.27 | 52.70 | 87.18 | 47.75 | 89.31 | 43.47 | 90.32 |

| Gradient | 24.40 | 95.46 | 58.50 | 85.56 | 39.03 | 91.97 | 40.71 | 91.88 | 40.66 | 91.22 | |

| BATS | Feature | 39.69 | 92.66 | 51.98 | 87.40 | 57.84 | 85.17 | 52.11 | 85.42 | 50.41 | 87.66 |

| Gradient | 22.14 | 95.78 | 54.30 | 85.69 | 35.29 | 92.08 | 40.10 | 92.01 | 37.96 | 91.39 | |

| KNN | Feature | 99.95 | 26.91 | 98.99 | 42.09 | 99.38 | 39.14 | 57.13 | 80.13 | 88.86 | 47.07 |

| Gradient | 58.39 | 90.20 | 97.30 | 36.94 | 70.56 | 83.31 | 53.76 | 85.94 | 70.00 | 74.10 | |

| ViT-base-p16-384, ID Acc | |||||||||||

| MSP | Feature | 19.04 | 96.11 | 56.74 | 86.10 | 60.08 | 85.04 | 48.55 | 87.10 | 46.10 | 88.59 |

| Gradient | 13.80 | 96.96 | 56.60 | 84.10 | 50.60 | 90.61 | 43.10 | 92.34 | 41.03 | 91.00 | |

| Energy | Feature | 6.16 | 98.66 | 36.93 | 91.68 | 45.38 | 89.18 | 28.22 | 93.39 | 29.17 | 93.23 |

| Gradient | 5.80 | 98.42 | 35.82 | 91.79 | 43.76 | 91.31 | 22.14 | 94.99 | 26.88 | 94.13 | |

| ReAct | Feature | 5.58 | 98.63 | 43.36 | 90.33 | 50.99 | 87.85 | 33.23 | 90.98 | 33.29 | 91.95 |

| Gradient | 5.40 | 98.56 | 31.40 | 95.63 | 37.00 | 94.05 | 21.20 | 94.48 | 23.75 | 95.68 | |

| KNN | Feature | 48.70 | 91.33 | 77.50 | 77.61 | 72.50 | 78.87 | 43.00 | 88.64 | 60.43 | 84.11 |

| Gradient | 40.30 | 91.53 | 74.40 | 77.36 | 68.40 | 80.25 | 41.91 | 89.02 | 56.25 | 84.54 | |

4.2 Evaluation on CIFAR10 Benchmark

To further demonstrate the effectiveness of our low-dimensional gradients, we also evaluate our method on the CIFAR10 benchmark. The comparison results are presented in Table IV. The hyper-parameters of baseline methods are set to the recommended values, i.e., for Energy, for ReAct, for BATS, and for KNN. The Mahalanobis method we tested solely relies on the original distance formulation in Eq.(9) without incorporating any input pre-processing techniques or feature ensemble approaches. Thus, its performance is expected to be inferior to what has been reported in previous works where such enhancements were employed. Comparing all the results, our proposed gradients integrated with the KNN [7] method demonstrate superior performance on diverse models, e.g., achieving the smallest FPR95 of and the highest AUROC of on ResNet18. Notably, the Mahalanobis method shows a terrible performance on the MobileNet-v2 model, which implies its high sensitivity to network architectures. In contrast, the performance of our method is consistently outstanding across different models.

| Methods | MSP | Energy | ReAct | BATS | Maha | KNN | Ours |

|---|---|---|---|---|---|---|---|

| ResNet18, ID Acc | |||||||

| FPR95 | 33.08 | 29.47 | 36.46 | 21.66 | 22.27 | 27.59 | 21.55 |

| AUROC | 90.06 | 89.40 | 83.34 | 96.21 | 96.31 | 95.84 | 96.33 |

| MobileNet-v2, ID Acc | |||||||

| FPR95 | 69.49 | 48.26 | 49.29 | 48.26 | 95.40 | 56.42 | 36.83 |

| AUROC | 87.51 | 91.00 | 91.58 | 91.00 | 40.92 | 88.95 | 92.81 |

| DenseNet121, ID Acc | |||||||

| FPR95 | 63.88 | 55.33 | 66.81 | 55.33 | 54.69 | 55.80 | 53.90 |

| AUROC | 82.13 | 77.83 | 78.08 | 77.83 | 90.11 | 90.06 | 90.61 |

| Wideresnet-28-10, ID Acc | |||||||

| FPR95 | 32.48 | 33.69 | 40.21 | 33.69 | 23.34 | 23.00 | 22.63 |

| AUROC | 81.87 | 79.12 | 84.08 | 79.12 | 96.30 | 96.13 | 96.74 |

| Methods | MSP | Energy | ReAct | BATS | Maha | KNN | Ours |

|---|---|---|---|---|---|---|---|

| CIFAR100 as OOD data and CIFAR10 as ID data | |||||||

| FPR95 | 50.42 | 47.89 | 54.45 | 43.34 | 58.19 | 47.96 | 41.28 |

| AUROC | 81.94 | 80.87 | 72.94 | 91.15 | 84.97 | 90.45 | 91.54 |

| Tiny-ImageNet as OOD data and CIFAR10 as ID data | |||||||

| FPR95 | 48.03 | 44.90 | 50.78 | 41.97 | 56.10 | 44.23 | 40.45 |

| AUROC | 82.82 | 81.93 | 75.73 | 90.47 | 86.07 | 90.66 | 90.92 |

4.3 Hard OOD detection

In addition to the commonly-used OOD datasets, we further conduct experiments under the near-OOD setting proposed in [65]. Specifically, in the setting of CIFAR10 as ID dataset, we evaluate our method on two hard OOD datasets including CIFAR100 [66] and Tiny-ImageNet [67] with ResNet18 model. We adopt the reduced dimension and KNN basic score function [7], the same as our common settings on CIFAR10 benchmark. The results are shown in Table V, where our method still achieves surpassing performance compared to other approaches. Specifically, our method exceeds the best baseline in terms of FPR95 by on CIFAR100 dataset and on Tiny-ImageNet dataset.

4.4 Comparison with Other Methods

In addition to the aforementioned approaches, there are other detection algorithms, such as ODIN [3], GradNorm [9], and Vim [48], that demonstrate outstanding performance but are not suitable for integration with our low-dimensional gradients. In this section, we compare our method with these algorithms to demonstrate our superior performance in OOD detection.

ODIN is an output-based method that incorporates the gradient of inputs as a data enhancement mechanism to obtain good detection performance. However, since that our low-dimensional gradients are not normalized to a scale of 0-1, it is difficult to determine an appropriate enhancement hyper-parameter. Hence, we do not combine ODIN with our reduced gradients.

GradNorm leverages the norm of parameter gradients as its scoring function to effectively identify OOD samples, exhibiting outstanding performance on the ImageNet benchmark dataset. Nevertheless, in our specific setting, where gradients are used as inputs to our linear network, GradNorm is not suitable.

Vim, a novel approach, capitalizes on the low dimensionality of forward features and introduces a fusion strategy that combines the reconstruction error and energy score function as its detection function. However, the high dimensionality of gradients makes it computationally intensive to calculate the reconstruction error, thereby rendering this method inappropriate to apply to the gradients. Instead, we draw inspiration from the fusion concept and propose an ensemble strategy to detect OOD samples in Section 4.5.

The comparison results are presented in Figure 5, where we use ResNet18 and ResNet50 respectively on CIFAR10 and ImageNet. Our method demonstrates comparable performance on CIFAR10 benchmark and superior performance on ImageNet benchmark, surpassing the best baseline by in AUROC.

4.5 Ensemble of forward and backward information

To further improve our detection performance, we explore strategies for integrating forward and backward information, as depicted in Eq.(11). We select the best-performing baseline method using our reduced gradients to conduct this ensemble approach. The experimental results are presented in Table VI, revealing the effectiveness of information ensemble on both CIFAR10 and ImageNet benchmarks. Particularly noteworthy is the further improvement in our performance on the ImageNet dataset, achieving an FPR95 of and an AUROC of .

| Dataset | Base Method | Metric | Embeddings | ||

|---|---|---|---|---|---|

| Feature | Gradient | Ensemble | |||

| CIFAR10 | KNN | FPR95 | 27.59 | 21.55 | 20.24 (7.35) |

| AUROC | 95.84 | 96.33 | 96.79 (0.95) | ||

| ImageNet | ReAct | FPR95 | 30.70 | 23.03 | 19.55 (11.15) |

| AUROC | 93.30 | 95.45 | 96.12 (2.82) | ||

4.6 Hyper-parameter study

In this section, we provide further analysis of the influence of hyper-parameters on our detection performance to demonstrate the stability of our approach. Specifically, we study the influence of the reduced dimension on our detection performance and accordingly propose the strategy of choosing . Besides, since our reduced gradients are applied in different methods, we also investigate how the hyper-parameters in these methods impact our detection performance.

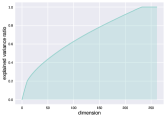

Effect of K. In Figure 6, we systematically analyze the effect of on six baseline methods. We vary the number of 10, 30, 50, 70, 90, 110, 130, 150, 170, 190, 210, 230, 250 for CIFAR10 and 50, 100, 150, 200, 250, 300, 350, 400, 450, 500, 550, 600, 650, 700, 750, 800, 850, 900, 950, 1000 for ImageNet. Our analysis reveals that the performance of our gradient-based methods improves as the dimension increases, both for the CIFAR10 and ImageNet datasets, except that the FPR95 of the KNN method reaches its lowest value when and exhibits a slight increase when on CIFAR10 benchmark.

Choice of . The exception result of KNN score on is actually consistent with our ratio analysis presented in Appendix A, where we observe that the first 230 principal components account for nearly of the total variance. If the dimension is lower than , the information of ID gradients cannot be covered. If the dimension is higher than , the information on residual dimensions may be unexpectedly included, which accounts for a large proportion of OOD gradients while negligible in ID gradients. Since the difference between ID and OOD gradients can be more significant if magnitudes of OOD gradients are filtered out while magnitudes of ID gradients are retained, we do not expect to induce larger values for OOD gradients as the dimension increases. Therefore, the optimal reduction dimension should be the least dimension that can account for the total variance of ID gradients. Our experiments on CIFAR10 benchmark demonstrate that is a suitable dimension that is widely applicable on different architectures.

Effect of Hyper-parameters in Baseline Methods. We analyze how the following hyper-parameters influence our detection performance on their corresponding methods:

-

•

The temperature in Energy [2]

-

•

The percent in ReAct [4]

-

•

The tuning parameter in BATS [5]

-

•

The sort in KNN [7]

Our experiments are conducted on ImageNet with a fixed reduced dimension of . The results are presented in Figure 7. For the BATS and KNN methods, we observe that our performance remains stable with the change of hyper-parameters. In the case of the Energy method, the performance deteriorates with an increase in temperature, aligning with the observation in the original Energy [2] paper. Regarding the ReAct method, we observe a notable performance improvement when the percent changes from 0.5 to 0.6, which is expected since the gradients of ID data undergo a substantial impact when is small. These results provide valuable insights into the sensitivity and stability of our approach with respect to specific hyper-parameters in different baseline methods.

5 Discussion

5.1 Benefit of Dimensionality Reduction

Beyond addressing the curse of dimensionality [14], in this part, we take a deeper look at the benefits of dimensionality reduction for OOD detection. Firstly, we evaluate the reconstruction error of gradients to analyze the influence of dimensionality reduction on ID and OOD data. The reconstruction error is defined as , which reflects the ratio of the gradient component in the residual space to the whole gradient magnitude. Experiments are conducted on CIFAR10 benchmark with ResNet18 model, using SVHN [53] as OOD dataset. The result is reported in Table VIII, which reveals that gradients of ID data approximately cluster in the low-dimensional space while gradients of OOD data do not fall into it. This difference induces a larger distance between ID and OOD gradients in the low-dimensional space compared to the whole space, which is verified by our empirical results in Table IX. Therefore, the benefits of our method are not limited to utilizing complete gradient information, but also to widening the difference between ID and OOD gradients in the low-dimensional space.

| Subspace | Dim | SVHN | Texture | LSUN | iSUN | Average | |||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| FPR95 | AUROC | FPR95 | AUROC | FPR95 | AUROC | FPR95 | AUROC | FPR95 | AUROC | ||

| PCA | 200 | 12.72 | 97.51 | 32.20 | 94.62 | 13.36 | 97.41 | 27.91 | 95.79 | 21.55 | 96.33 |

| PCA | 10 | 33.18 | 95.47 | 48.12 | 92.95 | 35.18 | 95.66 | 28.00 | 95.40 | 36.12 | 94.87 |

| AG | 10 | 36.60 | 95.16 | 49.57 | 92.52 | 42.39 | 95.21 | 28.01 | 95.39 | 39.14 | 94.57 |

| Data | Test | OOD |

| Reconstruction Error | 0.08 | 0.87 |

| Metric | In whole space | In low-dimensional space | ||||

|---|---|---|---|---|---|---|

| Train-Test | Train-OOD | Diff | Train-Test | Train-OOD | Diff | |

| Distance | 1.32 | 1.87 | 0.55 | 0.49 | 1.84 | 1.35 |

5.2 Comparison between PCA and Average Subspace

In this part, we compare the detection performance of using PCA subspace and the alternative cheaper one (average gradient subspace, AG) on the CIFAR10 benchmark with ResNet18 model. The results are shown in Table VII, where we adopt the KNN [7] as our basic score function. From it, we can find that the 200-dimensional PCA subspace significantly outperforms the AG subspace. Meanwhile, the performance of the 10-dimensional PCA subspace slightly exceeds that of AG, consistent with our analysis in Sec 3.2. According to the result, using PCA subspace for dimensionality reduction is a better choice. But in practice, we can flexibly choose the subspace considering the trade-off between detection performance and computational overhead, since our analysis in Sec 5.4 reveals that computing the average gradient only requires little time overhead.

5.3 Impact of Outlier Exposure

Our previous experiments have verified that in vanilla training, gradient provides a more significant difference between ID and OOD data compared to output/feature information. To study the universal applicability of our approach, we explore the influence of outlier exposure (OE, [35]), a model fine-tuning method using auxiliary OOD data, on the gradients. We follow the common training setting in OE [35] to fine-tune a vanilla ResNet18 model with CIFAR10 [24] as ID dataset and Tiny Images [68] as auxiliary OOD Dataset. Specific settings are in Appendix C. Based on the OE-trained model, we evaluate the detection performance of common post-hoc scores including MSP [1], Energy [2], ReAct [4], BATS [5] and KNN [7] on the forward feature, and our detection performance based on the low-dimensional gradient with MSP score. The first four scores [1, 2, 4, 5] are calculated based on model outputs, and the KNN score [7] is computed using the k-th nearest distance defined on feature space. Experiment results are reported in Table X, from which we can see that OE makes the difference between ID and OOD more significant for both forward and backward information. Notice here we directly used the existing OE method that is designed for output-based scores, which hence explicitly enlarges the discrepancy of output space. In this case, our low-dimensional gradient achieves comparable performance, fully demonstrating the versatility of our approach. We believe that there is potential to further improve our detection performance by designing a suitable training loss using auxiliary OOD data to explicitly enlarge the discrepancy between ID and OOD gradients.

| Methods | MSP | Energy | ReAct | BATS | KNN | Ours |

|---|---|---|---|---|---|---|

| FPR95 | 2.46 | 2.35 | 2.35 | 4.06 | 8.60 | 2.36 |

| AUROC | 98.57 | 98.55 | 98.55 | 98.40 | 94.73 | 97.37 |

5.4 Time Overhead

In this part, we report the specific time cost of our approach. All the experiments are conducted on four NVIDIA GeForce RTX 2080Ti GPUs. Generally, our method consists of two stages: offline subspace extraction and online score calculation. The offline stage can be pre-computed and saved using the in-distribution (ID) training dataset, meaning it only needs to be performed once. The results presented in Table XI and Table XII exhibit the computation time of each stage. We can observe that the PCA-based subspace extraction takes a longer time compared to the average gradient approach (Table XI). However, as to the inference cost, the computation time of our method is almost equal to the time required for a single backward propagation as shown in Table XII, which is considered acceptable for practical applications.

| Dataset | Architecture | Param | Subspace Type | Dim | Time(h) |

|---|---|---|---|---|---|

| CIFAR10 | ResNet18 | 11.17M | AVG | 10 | 0.006 |

| PCA | 200 | 14.04 | |||

| ImageNet | ResNet50 | 25.56M | AVG | 1000 | 0.75 |

| Architecture | Param | Dim | Time(ms) | Overall(ms) | ||

|---|---|---|---|---|---|---|

| Backward | Projection | Prediction | ||||

| ResNet18 | 11.17M | 10 | ||||

| 200 | ||||||

| ResNet50 | 25.56M | 1000 | 747.22 | 0.32 | 0.70 | 748.24 |

6 Limitation

Our method involves certain computational overhead for both the offline subspace extraction and online score calculation stages. In practice, it is necessary to consider the available computing resources and optimize the implementation for efficient execution of these processes. One possible way to accelerate our subspace extraction is by random sampling [69, 70], i.e., employing a subset of the training data to calculate the principal components. Moreover, while our results are promising, there are some cases where feature-based methods may yield better performance compared to gradient-based ones. As the primary focus of this paper is to investigate the feasibility of utilizing gradient embedding, we leave further improvements and comparative studies for future research.

7 Conclusion

This paper presents a pioneering study exploring the utilization of complete parameter gradient information for OOD detection. To solve the problem arising from the high dimensionality of gradients, we propose to conduct dimension reduction on gradients using our designated subspace, which comprises the principal components of gradients. With the low-dimensional representations of gradients, we subsequently explore their integration with various detection algorithms. Our extensive experiments demonstrate that our low-dimensional gradients can notably improve performance across a wide range of detection tasks. We hope our work inspires further research on leveraging parameter gradients for OOD detection.

Acknowledgment

The authors would like to thank the anonymous reviewers for their insightful comments and valuable suggestions.

References

- [1] D. Hendrycks and K. Gimpel, “A baseline for detecting misclassified and out-of-distribution examples in neural networks,” arXiv preprint arXiv:1610.02136, 2016.

- [2] W. Liu, X. Wang, J. Owens, and Y. Li, “Energy-based out-of-distribution detection,” Advances in neural information processing systems, vol. 33, pp. 21 464–21 475, 2020.

- [3] S. Liang, Y. Li, and R. Srikant, “Enhancing the reliability of out-of-distribution image detection in neural networks,” arXiv preprint arXiv:1706.02690, 2017.

- [4] Y. Sun, C. Guo, and Y. Li, “React: Out-of-distribution detection with rectified activations,” Advances in Neural Information Processing Systems, vol. 34, pp. 144–157, 2021.

- [5] Y. Zhu, Y. Chen, C. Xie, X. Li, R. Zhang, H. Xue, X. Tian, Y. Chen et al., “Boosting out-of-distribution detection with typical features,” arXiv preprint arXiv:2210.04200, 2022.

- [6] K. Lee, K. Lee, H. Lee, and J. Shin, “A simple unified framework for detecting out-of-distribution samples and adversarial attacks,” Advances in neural information processing systems, vol. 31, 2018.

- [7] Y. Sun, Y. Ming, X. Zhu, and Y. Li, “Out-of-distribution detection with deep nearest neighbors,” in International Conference on Machine Learning. PMLR, 2022, pp. 20 827–20 840.

- [8] C. Igoe, Y. Chung, I. Char, and J. Schneider, “How useful are gradients for ood detection really?” arXiv preprint arXiv:2205.10439, 2022.

- [9] R. Huang, A. Geng, and Y. Li, “On the importance of gradients for detecting distributional shifts in the wild,” Advances in Neural Information Processing Systems, vol. 34, pp. 677–689, 2021.

- [10] J. Lee, C. Lehman, M. Prabhushankar, and G. AlRegib, “Probing the purview of neural networks via gradient analysis,” IEEE Access, vol. 11, pp. 32 716–32 732, 2023.

- [11] J. Sun, L. Yang, J. Zhang, F. Liu, M. Halappanavar, D. Fan, and Y. Cao, “Gradient-based novelty detection boosted by self-supervised binary classification,” in Proceedings of the AAAI Conference on Artificial Intelligence, vol. 36, no. 8, 2022, pp. 8370–8377.

- [12] J. Lee, M. Prabhushankar, and G. AlRegib, “Gradient-based adversarial and out-of-distribution detection,” arXiv preprint arXiv:2206.08255, 2022.

- [13] V. Szolnoky, V. Andersson, B. Kulcsar, and R. Jörnsten, “On the interpretability of regularisation for neural networks through model gradient similarity,” Advances in Neural Information Processing Systems, vol. 35, pp. 16 319–16 330, 2022.

- [14] A. Zimek, E. Schubert, and H.-P. Kriegel, “A survey on unsupervised outlier detection in high-dimensional numerical data,” Statistical Analysis and Data Mining: The ASA Data Science Journal, vol. 5, no. 5, pp. 363–387, 2012.

- [15] T. Li, L. Tan, Z. Huang, Q. Tao, Y. Liu, and X. Huang, “Low dimensional trajectory hypothesis is true: Dnns can be trained in tiny subspaces,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 45, no. 3, pp. 3411–3420, 2022.

- [16] G. Gur-Ari, D. A. Roberts, and E. Dyer, “Gradient descent happens in a tiny subspace,” arXiv preprint arXiv:1812.04754, 2018.

- [17] E. J. Hu, Y. Shen, P. Wallis, Z. Allen-Zhu, Y. Li, S. Wang, L. Wang, and W. Chen, “Lora: Low-rank adaptation of large language models,” arXiv preprint arXiv:2106.09685, 2021.

- [18] A. Baratin, T. George, C. Laurent, R. D. Hjelm, G. Lajoie, P. Vincent, and S. Lacoste-Julien, “Implicit regularization via neural feature alignment,” in International Conference on Artificial Intelligence and Statistics. PMLR, 2021, pp. 2269–2277.

- [19] V. Papyan, “Traces of class/cross-class structure pervade deep learning spectra,” The Journal of Machine Learning Research, vol. 21, no. 1, pp. 10 197–10 260, 2020.

- [20] A. Canatar, B. Bordelon, and C. Pehlevan, “Spectral bias and task-model alignment explain generalization in kernel regression and infinitely wide neural networks,” Nature communications, vol. 12, no. 1, p. 2914, 2021.

- [21] R. ZHANG, S. Zhai, E. Littwin, and J. M. Susskind, “Learning representation from neural fisher kernel with low-rank approximation,” in International Conference on Learning Representations, 2021.

- [22] S. Fort and S. Ganguli, “Emergent properties of the local geometry of neural loss landscapes,” arXiv preprint arXiv:1910.05929, 2019.

- [23] F. Mu, Y. Liang, and Y. Li, “Gradients as features for deep representation learning,” in International Conference on Learning Representations, 2019.

- [24] A. Krizhevsky, G. Hinton et al., “Learning multiple layers of features from tiny images,” 2009.

- [25] J. Deng, W. Dong, R. Socher, L.-J. Li, K. Li, and L. Fei-Fei, “Imagenet: A large-scale hierarchical image database,” in 2009 IEEE conference on computer vision and pattern recognition. Ieee, 2009, pp. 248–255.

- [26] I. Kobyzev, S. J. Prince, and M. A. Brubaker, “Normalizing flows: An introduction and review of current methods,” IEEE transactions on pattern analysis and machine intelligence, vol. 43, no. 11, pp. 3964–3979, 2020.

- [27] E. Zisselman and A. Tamar, “Deep residual flow for out of distribution detection,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020, pp. 13 994–14 003.

- [28] D. P. Kingma and P. Dhariwal, “Glow: Generative flow with invertible 1x1 convolutions,” Advances in neural information processing systems, vol. 31, 2018.

- [29] D. Jiang, S. Sun, and Y. Yu, “Revisiting flow generative models for out-of-distribution detection,” in International Conference on Learning Representations, 2021.

- [30] H. Choi, E. Jang, and A. A. Alemi, “Waic, but why? generative ensembles for robust anomaly detection,” arXiv preprint arXiv:1810.01392, 2018.

- [31] I. Ndiour, N. Ahuja, and O. Tickoo, “Out-of-distribution detection with subspace techniques and probabilistic modeling of features,” arXiv preprint arXiv:2012.04250, 2020.

- [32] M. Cook, A. Zare, and P. Gader, “Outlier detection through null space analysis of neural networks,” arXiv preprint arXiv:2007.01263, 2020.

- [33] J. Lust and A. P. Condurache, “Gran: An efficient gradient-norm based detector for adversarial and misclassified examples,” arXiv preprint arXiv:2004.09179, 2020.

- [34] H. Wang, Z. Li, L. Feng, and W. Zhang, “Vim: Out-of-distribution with virtual-logit matching,” in Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2022, pp. 4921–4930.

- [35] D. Hendrycks, M. Mazeika, and T. Dietterich, “Deep anomaly detection with outlier exposure,” arXiv preprint arXiv:1812.04606, 2018.

- [36] T. Chen, S. Kornblith, M. Norouzi, and G. Hinton, “A simple framework for contrastive learning of visual representations,” in International conference on machine learning. PMLR, 2020, pp. 1597–1607.

- [37] P. Khosla, P. Teterwak, C. Wang, A. Sarna, Y. Tian, P. Isola, A. Maschinot, C. Liu, and D. Krishnan, “Supervised contrastive learning,” Advances in neural information processing systems, vol. 33, pp. 18 661–18 673, 2020.

- [38] Y. Ming, Y. Sun, O. Dia, and Y. Li, “Cider: Exploiting hyperspherical embeddings for out-of-distribution detection,” arXiv preprint arXiv:2203.04450, vol. 7, no. 10, 2022.

- [39] W. Grathwohl, K.-C. Wang, J.-H. Jacobsen, D. Duvenaud, M. Norouzi, and K. Swersky, “Your classifier is secretly an energy based model and you should treat it like one,” arXiv preprint arXiv:1912.03263, 2019.

- [40] A. Jacot, F. Gabriel, and C. Hongler, “Neural tangent kernel: Convergence and generalization in neural networks,” Advances in neural information processing systems, vol. 31, 2018.

- [41] S. Wold, K. Esbensen, and P. Geladi, “Principal component analysis,” Chemometrics and intelligent laboratory systems, vol. 2, no. 1-3, pp. 37–52, 1987.

- [42] K.-J. Bathe, Solution methods for large generalized eigenvalue problems in structural engineering. National Technical Information Service, US Department of Commerce, 1971.

- [43] G. H. Golub and H. A. Van der Vorst, “Eigenvalue computation in the 20th century,” Journal of Computational and Applied Mathematics, vol. 123, no. 1-2, pp. 35–65, 2000.

- [44] G. Charpiat, N. Girard, L. Felardos, and Y. Tarabalka, “Input similarity from the neural network perspective,” Advances in Neural Information Processing Systems, vol. 32, 2019.

- [45] H. He and W. J. Su, “The local elasticity of neural networks,” arXiv preprint arXiv:1910.06943, 2019.

- [46] S. Fort, P. K. Nowak, S. Jastrzebski, and S. Narayanan, “Stiffness: A new perspective on generalization in neural networks,” arXiv preprint arXiv:1901.09491, 2019.

- [47] L. Van der Maaten and G. Hinton, “Visualizing data using t-sne.” Journal of machine learning research, vol. 9, no. 11, 2008.

- [48] H. Wang, Z. Li, L. Feng, and W. Zhang, “Vim: Out-of-distribution with virtual-logit matching,” in Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2022, pp. 4921–4930.

- [49] Y. Ming, Y. Sun, O. Dia, and Y. Li, “How to exploit hyperspherical embeddings for out-of-distribution detection?” arXiv preprint arXiv:2203.04450, 2022.

- [50] Q. Wang, Z. Fang, Y. Zhang, F. Liu, Y. Li, and B. Han, “Learning to augment distributions for out-of-distribution detection,” Advances in Neural Information Processing Systems, vol. 36, 2024.

- [51] J. Li, X. Zhou, P. Guo, Y. Sun, Y. Huang, W. Ge, and W. Zhang, “Hierarchical visual categories modeling: A joint representation learning and density estimation framework for out-of-distribution detection,” in Proceedings of the IEEE/CVF International Conference on Computer Vision, 2023, pp. 23 425–23 435.

- [52] M. Cimpoi, S. Maji, I. Kokkinos, S. Mohamed, and A. Vedaldi, “Describing textures in the wild,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2014, pp. 3606–3613.

- [53] Y. Netzer, T. Wang, A. Coates, A. Bissacco, B. Wu, and A. Y. Ng, “Reading digits in natural images with unsupervised feature learning,” 2011.

- [54] F. Yu, A. Seff, Y. Zhang, S. Song, T. Funkhouser, and J. Xiao, “Lsun: Construction of a large-scale image dataset using deep learning with humans in the loop,” arXiv preprint arXiv:1506.03365, 2015.

- [55] P. Xu, K. A. Ehinger, Y. Zhang, A. Finkelstein, S. R. Kulkarni, and J. Xiao, “Turkergaze: Crowdsourcing saliency with webcam based eye tracking,” arXiv preprint arXiv:1504.06755, 2015.

- [56] G. Van Horn, O. Mac Aodha, Y. Song, Y. Cui, C. Sun, A. Shepard, H. Adam, P. Perona, and S. Belongie, “The inaturalist species classification and detection dataset,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2018, pp. 8769–8778.

- [57] J. Xiao, J. Hays, K. A. Ehinger, A. Oliva, and A. Torralba, “Sun database: Large-scale scene recognition from abbey to zoo,” in 2010 IEEE computer society conference on computer vision and pattern recognition. IEEE, 2010, pp. 3485–3492.

- [58] B. Zhou, A. Lapedriza, A. Khosla, A. Oliva, and A. Torralba, “Places: A 10 million image database for scene recognition,” IEEE transactions on pattern analysis and machine intelligence, vol. 40, no. 6, pp. 1452–1464, 2017.

- [59] K. He, X. Zhang, S. Ren, and J. Sun, “Deep residual learning for image recognition,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2016, pp. 770–778.

- [60] M. Sandler, A. Howard, M. Zhu, A. Zhmoginov, and L.-C. Chen, “Mobilenetv2: Inverted residuals and linear bottlenecks,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2018, pp. 4510–4520.

- [61] G. Huang, Z. Liu, L. Van Der Maaten, and K. Q. Weinberger, “Densely connected convolutional networks,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2017, pp. 4700–4708.

- [62] S. Zagoruyko and N. Komodakis, “Wide residual networks,” in British Machine Vision Conference 2016. British Machine Vision Association, 2016.

- [63] A. Paszke, S. Gross, S. Chintala, G. Chanan, E. Yang, Z. DeVito, Z. Lin, A. Desmaison, L. Antiga, and A. Lerer, “Automatic differentiation in pytorch,” 2017.

- [64] A. Dosovitskiy, L. Beyer, A. Kolesnikov, D. Weissenborn, X. Zhai, T. Unterthiner, M. Dehghani, M. Minderer, G. Heigold, S. Gelly et al., “An image is worth 16x16 words: Transformers for image recognition at scale,” arXiv preprint arXiv:2010.11929, 2020.

- [65] J. Yang, K. Zhou, Y. Li, and Z. Liu, “Generalized out-of-distribution detection: A survey,” arXiv preprint arXiv:2110.11334, 2021.

- [66] A. Krizhevsky, V. Nair, and G. Hinton, “Cifar-10 and cifar-100 datasets,” URl: https://www. cs. toronto. edu/kriz/cifar. html, vol. 6, no. 1, p. 1, 2009.

- [67] A. Krizhevsky, I. Sutskever, and G. E. Hinton, “Imagenet classification with deep convolutional neural networks,” Communications of the ACM, vol. 60, no. 6, pp. 84–90, 2017.

- [68] A. Torralba, R. Fergus, and W. T. Freeman, “80 million tiny images: A large data set for nonparametric object and scene recognition,” IEEE transactions on pattern analysis and machine intelligence, vol. 30, no. 11, pp. 1958–1970, 2008.

- [69] K. Tsuyuzaki, H. Sato, K. Sato, and I. Nikaido, “Benchmarking principal component analysis for large-scale single-cell rna-sequencing,” Genome biology, vol. 21, no. 1, p. 9, 2020.

- [70] G. Abraham and M. Inouye, “Fast principal component analysis of large-scale genome-wide data,” PloS one, vol. 9, no. 4, p. e93766, 2014.

Appendix A Efficient PCA for DNNs

Let us denote the gradient over the training dataset as , where denotes the sample number and denotes the parameter number. To extract the low-dimensional subspace where the gradient principal components reside, we need to compute the top-K eigenvector of the gradient covariance . The main difficulties lie in two aspects: 1. The gradient matrix is too large to calculate and save since both and are enormous for modern DNNs. 2. Even if and are obtained, the eigen decomposition of cannot be directly calculated because of its high dimensionality.

To solve the above problems, we employ the power iteration method [42, 43] to efficiently calculate the top- eigenvectors of , while ensuring computational feasibility. Noticing that the covariance matrix is a dot product of and , each iteration step of the power method can be decomposed into two steps, as shown in Algorithm 2. And for each decomposed step, there exist efficient implementations in Pytorch [63] called Jacobian Vector Product (JVP) and Vector Jacobian Product (VJP), respectively, calculated at the same order of computational costs of one vanilla backward-pass and forward-pass of DNNs. Specific algorithms are shown in Algorithm 3 and Algorithm 4, where we integrate dataloaders into the algorithm. With this practical subspace extraction algorithm, we can obtain a low-dimensional subspace denoted by that encompasses the principal components of gradients.

Figure 8a shows the magnitude of the eigenvalues of the gradient covariance matrix calculated by our algorithm. It can be observed that the eigenvalues tend to be concentrated within the initial 200 dimensions, with subsequent eigenvalues abruptly diminishing to zero. Additionally, we present Figure 8b, which illustrates the explained variance ratio as a function of the number of components. The results reveal that the top-200 principal components account for approximately 90 of the total variance. The above findings provide empirical validation for the low-dimensional nature of gradients.

Appendix B Gradient Directional Similarity

We examine the cosine similarity between the parameter gradient of a sample and its corresponding class-mean gradient on both the CIFAR10 and ImageNet datasets. In a high-dimensional space, the cosine similarity between two random vectors tends to approach zero, as verified in [14]. However, the average cosine similarity values presented in Table XIII are significantly higher than zero, which implies that gradients of samples belonging to the same class tend to align in similar directions.

| Dataset | Training Set | Test Set |

|---|---|---|

| CIFAR10 | 0.59 | 0.47 |

| ImageNet | 0.51 | 0.45 |

Appendix C Training Setting for Outlier Exposure

The outlier exposure method [35], which utilizes auxiliary OOD data to fine-tune the model, has shown excellent performance in OOD detection. It can be formalize as the following optimization problem:

| (12) |

where is the model hypothesis, is the cross-entropy loss on ID data, and is the outlier exposure loss on auxiliary OOD data defined as the cross-entropy between OOD outputs and uniformly distributed labels for classification problem. The is a balance hyper-parameter, usually set to in experiments. In the following, we present the detailed experimental settings.

OOD Datasets. We randomly choose 300K samples from the 80 Million Tiny Images [68] as our auxiliary OOD dataset.

Pre-training Setups. We employ ResNet18 [59] trained for 200 epochs, with batch size 128, init learning rate 0.1, momentum 0.9, weight decay 0.0005, and cosine schedule.

Fine-tuning Setups. We adopt the model parameter of the 99th epoch in the pre-training process as our initial network parameters, and then add auxiliary OOD data to train the model for 50 epochs with ID batch size 128, OOD batch size 256, initial learning rate 0.07, momentum 0.9, weight decay 0.0005 and cosine schedule.