Long-Term Visitation Value for Deep Exploration

in Sparse-Reward Reinforcement Learning

Abstract

Reinforcement learning with sparse rewards is still an open challenge. Classic methods rely on getting feedback via extrinsic rewards to train the agent, and in situations where this occurs very rarely the agent learns slowly or cannot learn at all. Similarly, if the agent receives also rewards that create suboptimal modes of the objective function, it will likely prematurely stop exploring.

More recent methods add auxiliary intrinsic rewards to encourage exploration.

However, auxiliary rewards lead to a non-stationary target for the Q-function.

In this paper, we present a novel approach that (1) plans exploration actions far into the future by using a long-term visitation count, and (2) decouples exploration and exploitation by learning a separate function assessing the exploration value of the actions.

Contrary to existing methods which use models of reward and dynamics, our approach is off-policy and model-free.

We further propose new tabular environments for benchmarking exploration in reinforcement learning.

Empirical results on classic and novel benchmarks show that the proposed approach outperforms existing methods in environments with sparse rewards, especially in the presence of rewards that create suboptimal modes of the objective function. Results also suggest that our approach scales gracefully with the size of the environment.

The source code is available at https://github.com/sparisi/visit-value-explore

Keywords: reinforcement learning, sparse reward, exploration, upper confidence bound, off-policy

1 Introduction

Reinforcement learning (RL) is a process where an agent learns how to behave in an environment by trial and error. The agent performs actions and, in turn, the environment may provide a reward, i.e., a feedback assessing the quality of the action. The goal of RL is then to learn a policy, i.e., a function producing a sequence of actions yielding the maximum cumulative reward. Despite its simplicity, RL achieved impressive results, such as learning to play Atari videogames from pixels (Mnih et al., 2013; Schulman et al., 2017), or beating world-class champions at Chess, Go and Shogi (Silver et al., 2017). However, a high-quality reward signal is typically necessary to learn the optimal policy, and without it RL algorithms may perform poorly even in small environments (Osband et al., 2019).

Characteristics of high-quality rewards.

One important factor that defines the quality of the reward signal is the frequency at which rewards are emitted. Frequently emitted rewards are called “dense”, in contrast to infrequent emissions which are called “sparse”. Since improving the policy relies on getting feedback via rewards, the policy cannot be improved until a reward is obtained. In situations where this occurs very rarely, the agent learns slowly or cannot learn at all.

Furthermore, reinforcement signals should encourage the agent to find the best actions to solve the given task. However, the environment may also provide distracting rewards, i.e., rewards that create suboptimal modes of the objective function. In this case, the agent should be able to extensively explore the environment without prematurely converge to locally optimal solutions caused by distracting rewards.

Reward engineering.

In the presence of sparse distracting rewards, efficiently exploring the environment to learn the optimal policy is challenging.

Therefore, many RL algorithms rely on well-shaped reward functions such as quadratic costs.

For example, in a collect-and-deliver task, we could design an additional reward function that rewards proximity to the items to be collected.

These well-shaped functions help to guide the agent towards good solutions and avoid bad local optima.

However, from the perspective of autonomous learning, this so-called reward engineering is unacceptable for three reasons.

First, the commonly utilized reward functions heavily restrict the solution space and may prevent the agent from learning optimal behavior (especially if no solution to the task is known).

Second, it is easy to misspecify the reward function and cause unexpected behavior. For instance, by getting excessively high rewards for proximity to the items the agent may never learn to deliver them.

Third, reward engineering requires manual tuning of the reward function and the manual addition of constraints. This process requires expertise and the resulting reward function and constraints may not transfer to different tasks or environments.

In this paper, we address the problem of reinforcement learning with sparse rewards without relying on reward engineering, or requiring prior knowledge about the environment or the task to solve. In particular, we highlight the problem of learning in the presence of rewards that create suboptimal modes of the objective function, which we call “distractors”.

The exploration-exploitation dilemma. Learning when rewards are sparse and possibly distracting challenges classic RL algorithms. Initially, the agent has no knowledge about the environment and needs to explore it in search for feedback. As it gathers rewards, the agent develops a better understanding of the environment and can estimate which actions are “good”, i.e., yield positive rewards, and which are not.

Then, at any point, it needs to decide whether it should explore the environment in search for better rewards by executing new actions, or exploit its knowledge and execute known good actions.

This “exploration-exploitation dilemma” is frequently addressed naively by dithering (Mnih et al., 2013; Lillicrap et al., 2016). In continuous action spaces Gaussian noise is added to the action, while in discrete spaces actions are chosen -greedily, i.e., optimally with probability and randomly with probability . Typically, the initial noise and are large and then decay over time.

These approaches work in environments where random sequences of actions are likely to cause positive rewards. However, if rewards are sparse or are given only for specific sequences of actions, it is very unlikely that the agent will receive any feedback. In this case, the worst-case sample complexity for learning the optimal policy with dithering exploration is exponential in the number of states and actions (Kakade, 2003; Szepesvari, 2010; Osband et al., 2016b). Furthermore, if distractor rewards are easily reachable, the agent may prematurely converge to local optima. An example of this problem is depicted in Figure 1.

Deep exploration.

Well-performing exploration strategies should solve long-horizon problems with sparse and possibly distracting rewards in large state-action spaces while remaining computationally tractable. This can be achieved if the agent takes several coherent actions to explore unknown states instead of just locally choosing the most promising actions. These behaviors are usually referred as “deep exploration” and “myopic exploration”, respectively (Osband et al., 2016a).

RL literature has a long history of algorithms for deep exploration, even though the term “deep” became popular only in recent years.

The first algorithms to guarantee full exploration of tabular environments date back to Kearns and Singh (2002) and Brafman and Tennenholtz (2002). These algorithms learn a model of the environment, i.e., the transition and reward functions, and plan actions accordingly to a state-action visitation count in order to favor less visited states.

Since then, other model-based (Jaksch et al., 2010; Hester and Stone, 2013) as well as model-free algorithms (Strehl et al., 2006; Bellemare et al., 2016; Jin et al., 2018; Dong et al., 2020) have been proposed. However, despite their strong guarantees, these algorithms either require to learn the complete model of the environment, rely on optimistic initialization, or have impractically high sample complexity.

In continuous environments, intrinsic motivation and bootstrapping are the most prominent approaches.

The former defines an additional intrinsic reward added to the environment extrinsic reward. If the extrinsic reward is sparse, the intrinsic reward can fill the gaps between the sparse signals, possibly giving the agent quality feedback at every timestep. This approach can be used in conjunction with any RL algorithm by just providing the modified reward signal.

However, the quality of exploration strongly depends on the intrinsic reward, which may not scale gracefully with the extrinsic reward and therefore needs hand-tuning. Furthermore, combining exploration and exploitation by summing intrinsic and extrinsic rewards can result in undesired behavior due to the non-stationarity of the augmented reward function.

Moreover, convergence is harder to guarantee (Kolter and Ng, 2009), and in general, there is no agreement on the definition of the best intrinsic reward (Houthooft et al., 2016; Pathak et al., 2017).

Bootstrapping, instead, is used to estimate the actions value posterior distribution over the environment, which then drives exploration. Because actions are selected considering the level of uncertainty associated with their value estimates, bootstrapping incentivizes experimentation with actions of highly uncertain value and, thus, induces exploration (Osband et al., 2019).

However, these methods rely on approximated posteriors and usually lack guarantees of convergence, unless either the environment model is learned or a time-dependent policy is used (Osband et al., 2019).

Deep exploration via long-term visitation value.

In this paper, we present a novel approach that (1) plans exploration actions far into the future using a long-term visitation count, and (2) decouples exploration and exploitation by learning a separate function assessing the exploration value of the actions.

Contrary to existing methods that use models of reward and dynamics, our approach is off-policy and model-free.

We further comprehensively benchmark our approach against existing algorithms on several environments, stressing the challenges of learning with sparse and distracting rewards. Empirical results show that the proposed approach outperforms existing algorithms, and suggest that it scales gracefully with the size of the environment.

2 Preliminaries

We start with a description of the RL framework, providing the reader with the notation used in the remainder of the paper. Subsequently, we review the most prominent exploration strategies in literature, discuss their shortcomings, and identify open challenges.

2.1 Reinforcement Learning and Markov Decision Processes

We consider RL in an environment governed by a Markov Decision Process (MDP). An MDP is described by the tuple , where is the state space, is the action space, defines a Markovian transition probability density between the current and the next state under action , is the reward function, and is initial distribution for state . The state and action spaces could be finite, i.e., and , or continuous, i.e., and . The former case is often called tabular MDP, as a table can be used to store and query all state-action pairs if the size of the state-action space is not too large. If the model of the environment is known, these MDPs can be solved exactly with dynamic programming. The latter, instead, requires function approximation, and algorithms typically guarantee convergence to local optima. In the remainder of the paper, we will consider only tabular MDPs and only model-free RL, i.e., the model of the environment is neither known nor learned.

Given an MDP, we want to learn to act. Formally, we want to find a policy to take an appropriate action when the agent is in state . By following such a policy starting at initial state , we obtain a sequence of states, actions and rewards , where is the reward at time , and is the total timesteps also called horizon, which can possibly be infinite. We refer to such sequences as trajectories or episodes. Our goal is thus to find a policy that maximizes the expected return

| (1) | ||||

| (2) |

where is the (discounted) state distribution under , i.e., the probability of visiting state under , and is the discount factor which assigns weights to rewards at different timesteps. is the action-state value function (or Q-function) which is the expected return obtained by executing in state and then following afterwards. In tabular MDPs, the Q-function can be stored in a table, commonly referred as Q-table, and in stationary infinite-horizon MDPs the greedy policy is always optimal (Puterman, 1994). However, the Q-function of the current policy is initially unknown and has to be learned.

Q-learning.

For tabular MDPs, Q-learning by Watkins and Dayan (1992) was one of the most important breakthroughs in RL, and it is still at the core of recent successful algorithms. At each training step , the agent chooses an action according to a behavior policy , i.e., , and then updates the Q-table according to

| (3) | ||||

| (4) |

where is the learning rate and is the temporal difference (TD) error. The blue term is often referred to as TD target. This approach is called off-policy because the policy used to explore the environment is different from the target greedy policy .

The behavior policy and the “exploration-exploitation dilemma”.

As discussed in Section 1, an important challenge in designing the behavior policy is to account for the exploration-exploitation dilemma. Exploration is a long-term endeavor where the agent tries to maximize the possibility of finding better rewards in states not yet visited. On the contrary, exploitation maximizes the expected rewards according to the current knowledge of the environment. Typically, in continuous action spaces Gaussian noise is added to the action, while in discrete spaces actions are chosen -greedily, i.e., optimally with probability and randomly with probability . In this case, the exploration-exploitation dilemma is simply addressed by having larger noise and at the beginning, and then decaying them over time. This naive dithering exploration is extremely inefficient in MDPs with sparse rewards, especially if some of them are “distractors”, i.e., rewards that create suboptimal modes of the objective function. In the remainder of this section, we review more sophisticated approaches addressing exploration with sparse rewards. We first show theoretically grounded ideas for tabular or small MDP settings which are generally computationally intractable for large MDPs. We then give an overview of methods that try to apply similar principles to large domains by making approximations.

2.2 Related Work

Since finding high-value states is crucial for successful

RL, a large body of work has been devoted to

efficient exploration in the past years. We begin by discussing an optimal solution to exploration, then continue with confidence-bound-based approaches. Then, we discuss methods based on intrinsic motivation, and describe posterior optimality value-distribution-based methods. We conclude by

stating the main differences between our approach and these

methods.

Optimal solution to exploration. In this paper, we consider model-free RL, i.e., the agent does not know the MDP dynamics.

It is well-known that in model-free RL the optimal solution to exploration can be found by a Bayesian approach. First,

assign an initial (possibly uninformative) prior distribution over

possible unknown MDPs. Then, at each time step the posterior belief

distribution over possible MDPs can be computed using the Bayes’ theorem

based on the current prior distribution, executed action, and made

observation. The action that optimally explores is then found

by planning over possible future posterior distributions, e.g., using tree search, sufficiently far forward. Unfortunately,

optimal exploration is intractable even for very small

tasks (Szepesvari, 2010; Strens, 2000; Poupart et al., 2006). The worst-case computational effort is exponential w.r.t. the planning horizon in tabular MDPs, and can be even more challenging with continuous state-action spaces.

Optimism.

In tabular MDPs, many of the provably efficient algorithms are

based on optimism in the face of uncertainty (OFU)

(Lai and Robbins, 1985). In OFU, the agent acts greedily

w.r.t. an optimistic action value estimate composed of the value

estimate and a bonus term that is proportional to the uncertainty of

the value estimate. After executing an optimistic action, the agent

then either experiences a high reward learning that the value of the action was indeed high, or the agent experiences a low reward and learns that the action was not optimal. After visiting a state-action pair, the exploration bonus is reduced. This approach is superior to naive approaches in that it avoids actions where low value and low information gain are possible. Under the assumption that the agent can visit every

state-action pair infinitely many times, the overestimation will

decrease and almost optimal behavior can be obtained

(Kakade, 2003). Most algorithms are optimal up to a

polynomial amount of states, actions, or horizon length. RL literature provides many variations of these algorithms which use

bounds with varying efficacy or different simplifying assumptions, such as (Kearns and Singh, 2002; Brafman and Tennenholtz, 2002; Kakade, 2003; Auer and Ortner, 2007; Jaksch et al., 2010; Dann and Brunskill, 2015).

Upper confidence bound.

Perhaps the best well-known OFU algorithm is the upper confidence

bound (UCB) algorithm (Auer et al., 2002). UCB chooses actions based on a

bound computed from visitation counts: the lower the count, the higher the bonus. Recently, Jin et al. (2018)

proved that episodic Q-learning with UCB exploration converges to a

regret proportional to the square root of states, actions, and timesteps, and to the square root of the cube of the episode

length. Dong et al. (2020) gave a similar proof for infinite horizon

MDPs with sample complexity proportional to the number of states and

actions. In our experiments, we compare our approach to Q-learning

with UCB exploration as defined by Auer et al. (2002).

Intrinsic motivation.

The previously discussed algorithms are often computationally intractable,

or their guarantees no longer apply in continuous state-action

spaces, or when the state-action spaces are too

large. Nevertheless, these provably efficient algorithms inspired more practical algorithms. Commonly, exploration is conducted by adding a bonus reward,

also called auxiliary reward, to interesting states. This is often

referred to as intrinsic motivation (Ryan and Deci, 2000). For

example, the bonus can be similar to the

UCB (Strehl and Littman, 2008; Bellemare et al., 2016), thus encouraging actions of high uncertainty or of low visitation count.

Recent methods, instead, compute the impact of actions based on state embeddings and reward the agent for performing actions changing the environment (Raileanu and Rocktäschel, 2020).

Other forms of bonus are based on the prediction error of some

quantity. For example, the agent may learn a model of the dynamics and try to predict the next state (Stadie et al., 2015; Houthooft et al., 2016; Pathak et al., 2017; Schmidhuber, 1991, 2006). By giving a bonus proportional to the prediction error, the agent is incentivized to explore unpredictable states. Unfortunately, in the presence of noise, unpredictable states are not necessarily interesting states.

Recent work addresses this issue by training an ensemble of models (Pathak et al., 2019) or predicting the output of a random neural network (Burda et al., 2019).

As we will discuss in Section 3.2, our approach has

similarities with the use of auxiliary reward. However, the

use of auxiliary rewards can be inefficient for long-horizon

exploration. Our approach addresses this issue by using a decoupled

long-horizon exploration policy and, as the experiments in

Section 4 show, it outperforms auxiliary-reward-based

approaches (Strehl and Littman, 2008).

Thompson sampling.

Another principled well-known technique for exploration is

Thompson sampling (Thompson, 1933). Thompson sampling

samples actions from a posterior distribution which specifies the

probability for each action to be optimal. Similarly to UCB-based methods, Thompson sampling is guaranteed to converge to an optimal policy in multi-armed bandit problems (Kaufmann et al., 2012; Agrawal and Goyal, 2013), and has shown

strong empirical performance (Scott, 2010; Chapelle and Li, 2011). For a discussion of known

shortcomings of Thompson sampling, we refer to (Russo and Van Roy, 2014; Russo et al., 2017, 2018).

Inspired by these successes, recent methods have followed

the principle of Thompson sampling in

Q-learning (Osband et al., 2013, 2019; D’Eramo et al., 2019).

These methods

assume that an empirical distribution

over Q-functions –an ensemble of randomized Q-functions– is similar to the

distribution over action optimalities used in Thompson sampling. Actions are thus sampled from such ensemble. Since the

Q-functions in the ensemble become similar when updated with new samples,

there is a conceptual similarity with the action optimality

distribution used in Thompson sampling for which variance also

decreases with new samples. While there are no general proofs for randomized Q-function approaches, Osband et al. (2019) proved a bound on the Bayesian regret of an algorithm based on

randomized Q-functions in tabular time-inhomogeneous MDPs with a

transition kernel drawn from a Dirichlet prior, providing a starting

point for more general proofs.

Other exploration methods approximating a distribution over action

optimalities include for example the work of Fortunato et al. (2017) and Plappert et al. (2018), who apply noise to the parameters of the model. This may be interpreted as approximately inferring a posterior

distribution (Gal and Ghahramani, 2016), although this is not without contention (Osband, 2016). Osband et al. (2016a) more

directly approximates the posterior over Q-functions through

bootstrapping (Efron, 1982). The lack of a proper prior

is an issue when rewards are sparse, as it causes the uncertainty of

the posterior to vanish quickly. Osband et al. (2018) and

Osband et al. (2019) try to address this by enforcing a prior

via regularization or by adding randomly initialized but fixed neural

network on top of each ensemble member, respectively.

Methods based on posterior distributions have yielded high performance in some tasks. However,

because of the strong approximations needed to model posterior optimality

distributions instead of maintaining visitation counts or explicit

uncertainty estimates, these approaches may often have problems in

assessing the state uncertainty correctly.

In our experiments, the proposed approach outperforms state-of-the-art methods based on approximate posterior distributions, namely the algorithms proposed by Osband et al. (2016a, 2019) and D’Eramo et al. (2019).

Above, we discussed state-of-the-art exploration methods that (1) use confidence bounds or distribution over action optimalities to take the exploration into account in a principled way, (2) modify the reward by adding an intrinsic reward signal to encourage exploration, and (3) approximate a posterior distribution over value functions. The main challenge that pertains to all these methods is that they do not take long-term exploration into account explicitly. Contrary to this, similarly to how value iteration propagates state values, we propose an approach that uses dynamic programming to explicitly propagate visitation information backward in time. This allows our approach to efficiently find the most promising parts of the state space that have not been visited before, and avoid getting stuck in suboptimal regions that at first appear promising.

3 Long-Term Visitation Value for Deep Exploration

In this section, we present our novel off-policy method for improving exploration with sparse and distracting rewards. We start by motivating our approach with a toy example, showing the limitation of current count-based algorithms and the need for “long-term visitation counts”. Subsequently, we formally define the proposed method, and show how it solves the toy example.

3.1 Example of the Limitations of Existing Immediate Count Methods

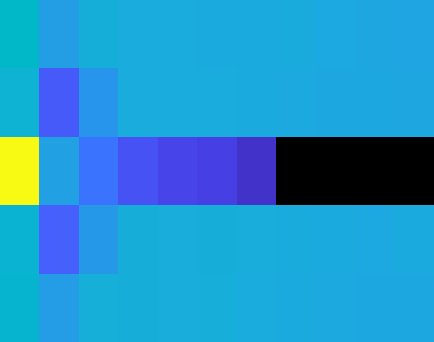

Consider the toy problem of an agent moving in a grid, shown in Figure 2. Reward is 0 everywhere except in and , where it is 1 and 2, respectively. The reward is given for executing an action in the state, i.e., on transitions from reward states. The agent initial position is fixed in and the episode ends when a reward is collected or after five steps. Any action in the prison cells has only an arbitrarily small probability of success. If it fails, the agent stays in . In practice, the prison cell almost completely prevents any further exploration. Despite its simplicity, this domain highlights two important challenges for exploration in RL with sparse rewards. First, there is a locally optimal policy collecting the lesser reward, which acts as distractor. Second, the prison state is particularly dangerous as it severely hinders exploration.

Assume that the agent has already explored the environment for four episodes performing the following actions: (1) left, down, left, down, down (reward 1 collected); (2) up, down, right, right (fail), right (fail); (3) right, left, right, down, down (fail); (4) right, down, left (fail), up (fail), right (fail). The resulting state-action visitation count is shown in Figure 3(a). Given this data, we initialize to zero, train it with Q-learning with until convergence, and then derive three greedy policies. The first simply maximizes . The second maximizes the behavior based on UCB1 (Auer et al., 2002), defined as

| (5) |

where is the state-action count, and is a scaling coefficient111In classic UCB, the reward is usually bounded in and no coefficient is needed. This is not usually the case for MDPs, where Q-values can be larger/smaller than 1/0. We thus need to scale the square root with , which upper-bounds the difference between the largest and smallest possible Q-value.. The third maximizes an augmented Q-function trained by adding the intrinsic reward based on the immediate count (Strehl and Littman, 2008), i.e.,

| (6) |

where is a scaling coefficient set to as in (Strehl and Littman, 2008; Bellemare et al., 2016). Figure 3(a) shows the state-action count after the above trajectories, and Figures 3(b), 3(c), and 3(d) depict the respective Q-functions (colormap) and policies (arrows). Given the state-action count, consider what the agent has done and knows so far.

-

a)

It has executed all actions at least once in (1,3) and (2,2) (the prison cell),

-

b)

It still did not execute “up” in (1,2),

-

c)

It visited (1,3) once and experienced the reward of 1, and

-

d)

It still did not execute “up” and “right” in (2,1).

In terms of exploration, the best decision would be to select actions not executed yet, such as “up” in (1,2). But what should the agent do in states where it has already executed all actions at least once? Ideally, it should select actions driving it to unvisited states, or to states where it has not executed all actions yet. However, none of the policies above exhibits such behavior.

-

a)

The greedy policy (Figure 3(b)), as expected, points to the only reward collected so far.

-

b)

The UCB1 policy (Figure 3(c)) yields Q-values of much larger magnitude. Most of the actions have been executed few times, thus their UCB is larger than their Q-value. The policy correctly selects unexecuted actions in (1,2) and (2,3), but fails (1,3). There it also selects the least executed actions (‘up” and “left”) which, however, have already been executed once. The agent should know that these actions do not bring it anywhere (the agent hits the environment boundaries and does not move) and should avoid them. However, it selects them because the policy is based on the immediate visitation count. Only when the count will be equal for all actions, the policy will select a random action. This behavior is clearly myopic and extremely inefficient.

-

c)

The policy learned using the auxiliary bonus (Figure 3(d)) is even worse, as it acts badly not only in (1,3) but also in (1,2) and (2,3). There, it chooses “left”, which was already selected once, instead of “up” (or “right in (2,3)), which instead has not been selected yet. The reason for such behavior is that this auxiliary reward requires optimistic initialization (Strehl and Littman, 2008). The agent, in fact, is positively rewarded for simply executing any action, with value proportional to the visitation count. However, if the Q-function is initialized to zero, the positive feedback will make the agent believe that the action is good. This mechanism encourages the agent to execute the same action again and hinders exploration. This problem is typical of all methods using an auxiliary reward based on visitation count, unless optimistic initialization of the Q-table is used (Strehl and Littman, 2008). More recent methods use more sophisticated rewards (Jin et al., 2018; Dong et al., 2020). However, despite their strong theoretical convergence guarantees, for large-size problems these methods may require an impractical number of samples and are often outperformed by standard algorithms.

3.2 The Long-Term Visitation Value Approach

Despite its simplicity, the above toy problem highlights the limitations of current exploration strategies based on the immediate count. More specifically, (1) considering the immediate count may result in shallow exploration, and (2) directly augmenting the reward to train the Q-function, i.e., coupling exploration and exploitation, can have undesired effects due to the non-stationarity of the augmented reward function. To address these limitations, we propose an approach that (1) plans exploration actions far into the future by using a long-term visitation count, and (2) decouples exploration and exploitation by learning a separate function assessing the exploration value of the actions. Contrary to existing methods that use models of reward and dynamics (Hester and Stone, 2013), which can be hard to come by, our approach is off-policy and model-free.

Visitation value.

The key idea of our approach is to train a visitation value function using the temporal difference principle. The function, called W-function and denoted by , approximates the cumulative sum of an intrinsic reward based on the visitation count, i.e.,

| (7) |

where is the visitation count discount factor. The higher the discount, the more in the future the visitation count will look. In practice, we estimate using TD learning on the samples produced using the behavior policy . Therefore, we use the following update

| (8) |

where is the learning rate, and is the W-function TD error, which depends on the reward (can be either maximized or minimized). Subsequently, following the well-known upper confidence bound (UCB) algorithm (Lai and Robbins, 1985), the behavior policy is greedy w.r.t. the sum of the Q-function and an upper confidence bound, i.e.,

| (9) |

where is an upper confidence bound based on , and is the same exploration constant used by UCB1 in Eq. (5). This proposed approach (1) considers long-term visitation count by employing the W-function, which is trained to maximize the cumulative –not the immediate– count. Intuitively, the W-function encourages the agent to explore state-actions that have not been visited before. Given the current state , in fact, specifies how much exploration we can expect on (discount-weighted) average in future states if we choose action . Furthermore, since the Q-function is still trained greedily w.r.t. the extrinsic reward as in Q-learning, while the W-function is trained greedily on the intrinsic reward and favors less-visited states, our approach (2) effectively decouples exploration and exploitation. This allows us to more efficiently prevent underestimation of the visitation count exploration term. Below, we propose two versions of this approach based on two different rewards and upper bounds .

W-function as long-term UCB.

The visitation reward is the UCB of the state-action count at the current time, i.e.,

| (10) | ||||

| This W-function represents the discount-weighted average UCB along the trajectory, i.e., | ||||

| (11) | ||||

| and its TD error is | ||||

| (12) | ||||

Notice how we distinguish between terminal and non-terminal states for the reward in Eq. (10). The visitation value of terminal states, in fact, is equal to the visitation reward alone, as denoted by the TD target in Eq. (10).

Consequently, the visitation value of terminal states could be significantly smaller than the one of other states, and the agent would not visit them again after the first time. This, however, may be detrimental for learning the Q-function, especially if terminal states yield high rewards. For this reason, we assume that the agent stays in terminal states forever, getting the same visitation reward at each time step. The limit of the sum of the discounted reward is the reward in Eq. (10).

Furthermore, given proper initialization of and under some assumptions, the target of non-terminal states will still be higher than the one of terminal states despite the different visitation reward, if the count of the former is smaller.

We will discuss this in more detail later in this section.

Another key aspect of this W-function regards the well-know overestimation bias of TD learning.

Since we can only update the W-function approximately based on limited samples, it is beneficial to overestimate its target with the operator. While it is known that in highly stochastic environments the overestimation can degrade the performance of value-based algorithm (van Hasselt, 2010; D’Eramo et al., 2017), it has been shown that underestimation does not perform well when the exploration is challenging (Tateo et al., 2017), and for a wide class of environments, overestimation allows finding the optimal solution due to self-correction (Sutton and Barto, 2018).

Assuming identical visitation over state-action pairs, the W-function becomes the discounted immediate UCB, i.e.,

| (13) | ||||

| thus, we can use directly in the behavior policy | ||||

| (14) | ||||

In Section 3.3, we discuss how to initialize . Finally, notice that when , Eq. (13) is the classic UCB, and Eq. (14) is equivalent to the UCB1 policy in Eq. (5).

W-function as long-term count.

The visitation reward is the state-action count at the current time, i.e.,

| (15) | ||||

| This W-function represents the discount-weighted average count along the trajectory, i.e., | ||||

| (16) | ||||

| and its TD error is | ||||

| (17) | ||||

Once again we distinguish between terminal and non-terminal states, but in the TD error the operator has been replaced by the operator.

Contrary to the previous case, in fact, the lower the W-function the better, as we want to incentivize the visit of lower-count states.

If we would use only the immediate count as reward for terminal states, the agent would be constantly driven there. Therefore, similarly to the UCB-based reward, we assume that the agent stays in terminal states forever getting the same reward.

In the TD target, since we want to prioritize low-count state-action pairs, the operator yields an optimistic estimate of the W-function, because the lower the W-function the more optimistic the exploration is.

Assuming identical visitation over state-action pairs, the W-function becomes the discounted cumulative count, i.e.,

| (18) | ||||

| We then compute the pseudocount and use it in the UCB policy | ||||

| (19) | ||||

When the counts are zero, , thus we need to initialize it to zero. We discuss how to avoid numerical problem in the square root in Section 3.3. Finally notice that when , Eq. (19) is equivalent to the UCB1 policy in Eq. (5).

Example of Long-Term Exploration with the W-function.

Consider again the toy problem presented in Section 3.1. Figure 4 shows the behavior policies of Eq. (14) and Eq. (19) with . The policies are identical and both drive the agent to unvisited states, or to states where it has not executed all actions yet, successfully exhibiting long-term exploration. The only difference is the magnitude of the state-action pair values (the colormap, especially in unvisited states) due to their different initialization.

-

a)

In (1,3), they both select “down” despite “up” and “left” having lower count. The agent, in fact, knows that from (1,2) it can go “down” to (1,1) where it executed only one action. Thus, it knows that there it can try new actions. On the other hand, the only thing it knows about (2,3) is that it leads to the prison state, where the agent already executed all actions. Thus, (1,2) has higher long-term exploration value than (2,3).

-

b)

In (1,2) both select “up”, overturning the greedy action “down” (Figure 3(b)). This is important, because to fully explore the environment we need to select unexecuted actions.

-

c)

The same happens in (2,3), where actions with count zero are selected, rather than the greedy ones.

-

d)

None of the policies lead the agent to the prison state, because it has already been visited and all actions have been executed in there.

-

e)

They both do not select “right” in (2,2) because it has been executed one more time than other actions. Nonetheless, the difference in the action value is minimal, as shown by the action colormap (almost uniform).

3.3 W-function Initialization and Bounds

In UCB bandits, it is assumed that each arm/action will be executed once before actions are executed twice. In order to enforce this, we need to make sure that the upper confidence bound is high enough so that an action cannot be executed twice before all other actions are executed once. Below, we discuss how to initialize the W-functions and set bounds in order to achieve the same behavior. For the sake of simplicity, below we drop subscripts and superscripts, i.e., we write in place of , in place of in the first part, and in place of in the second part.

upper bound.

To compute the initialization value we consider the worst-case scenario, that is, in state all actions but have been executed. In this scenario, we want that is an upper bound of . Following Eq. (14) and (12), we have

| (20) | ||||

| where the blue term is the TD target for non-terminal states, and is the immediate visitation reward for executing in the worst-case scenario (all other actions have been executed, and has not been executed yet). In this scenario, is an upper bound of under the assumption of uniform count, i.e., | ||||

| (21) | ||||

| We can then write Eq. (20) as | ||||

| (22) | ||||

In order to guarantee to select , which has not been select yet, we need to initialize such that the following is satisfied

| (23) | ||||

| where denotes the smallest possible Q-value. We get | ||||

| (24) | ||||

Initializing the W-function according to Eq. (24) guarantees to select .

upper bound.

Since this W-function represents the discounted cumulative count, it is initialized to zero. However, numerical problems may occur in the square root of Eq. (19) because the pseudocount can be smaller than one or even zero. In these cases, the square root of negative numbers and the division by zero are not defined. To address these issues, we add +1 inside the logarithm and replace the square root with an upper bound when . Similarly to the previous section, the bound needs to be high enough so that an action cannot be executed twice before all other actions are executed once. Following Eq. (19), we need to ensure that

| (25) |

However, this time assuming uniform count does not guarantee a uniform pseudocount, i.e.,

| (26) |

To illustrate this issue, consider the chain MDP in Figure 5. The agent always starts in and can move left, right, and down’ The episode ends after eight steps, or when terminal state is reached. The goal of this example is to explore all states uniformly. In the first episode, the agent follows the blue trajectory (). In the second episode, it follows the red trajectory (). In both trajectories, an action with count zero was always selected first. At the beginning of the third episode, all state-action pairs have been executed once, except for “left” in , i.e., . Thus, we need to enforce the agent to select it. However, the discounted cumulative count is not uniform. For example with , and .

More in general, the worst-case scenario we need to consider to satisfy Eq. (25) is the one where (1) action has not been executed yet, (2) an action has been executed once and has the smallest pseudocount, and (3) all other actions have been executed once and have the highest pseudocount. In this scenario, to select we need to guarantee . Since we assume that counts are uniform and no action has been executed twice, the smallest pseudocount is , while the highest is 1. Then, Eq. (25) becomes

| (27) |

Replacing in Eq. (19) with the above bound when guarantees to select .

Example of exploration behavior under uniform count.

Consider our toy problem again. This time, all state-action pairs have been executed once except for “left” in (1,3). The Q-table is optimal for visited state-action pairs, thus the greedy policy simply brings the agent to (3,1) where the highest reward lies. Figure 6 shows baseline and proposed behavior policies in two scenarios, depending on whether reward states are terminal (upper row) or not (bottom row).

-

a)

The augmented reward policy (Figure 6(b)) has no interest in trying “left” in (1,3), and its value is the lowest. The agent, in fact, cannot get the visitation bonus without first executing the action. This shows once more that this passive way of exploration is highly inefficient.

-

b)

UCB1 policy (Figure 6(c)) acts greedily everywhere except in (1,3), where it correctly selects the unexecuted action. However, since UCB1 considers only the immediate visitation, it has no way of propagating this information to other state-action pairs.

-

c)

By contrast, for the proposed W-function policies (Figure 6(d) and 6(e)) the unexecuted state-action pair is the most interesting, and the policy brings the agent there from any state. Thanks to the separate W-function, we can propagate the value of unexecuted actions to other states, always prioritizing the exploration of new state-action pairs. Under uniform count , then the agent acts greedily w.r.t. the Q-function.

Finally, if the count is uniform for all state-action pairs, including for “left” in (1,3), then all policies look like the greedy one (Figure 6(a)).

Terminal

Non-Term.

3.4 Final Remarks

In this section, we discuss the uniform count assumption used to derive the bounds, the differences between the proposed W-functions and intrinsic motivation, the benefit of using the count-based one, and potential issues in stochastic environments and continuous MDPs.

Uniform count assumption.

In Section 3.3 we derived an initialization for and a bound for to guarantee that an action is not executed twice before all other actions are executed once. For that, we assumed the visitation count to be uniform for all state-action pairs. We acknowledge that this assumption is not true in practice. First, it may be necessary to revisit old states in order to visit new ones. Second, when an episode is over the state is reset and a new episode starts. This is common practice also for infinite horizon MDPs, when usually the environment is reset after some steps. Thus, the agent’s initial state will naturally have a higher visitation count. Nonetheless, in Section 4.2.1, we will empirically show that our approach allows the agent to explore the environment as uniformly as possible.

W-function vs auxiliary rewards.

Using an auxiliary reward such as an exploration bonus or an intrinsic motivation signal has similarities with the proposed approach, especially with , but the two methods are not equivalent. Consider augmenting the reward with the same visitation reward used to train the W-function, i.e., . Using TD learning, the augmented Q-function used for exploration can be rewritten as222For the sake of simplicity, we consider only non-terminal states.

| (28) | ||||

| Then consider the behavior policy derived from in Eq. (14), and decompose it as well | ||||

| (29) | ||||

where we considered . The red terms are equivalent, but the blue terms are not because of the operator, since .

This explicit separation between exploitation () and exploration () has two important consequences. First, it is easy to implement the proposed behavior policy for any off-policy algorithm, as the exploration term is separate and independent from the Q-function estimates. This implies that we can propagate the exploration value independently from the

action-value function estimate. With this choice we can select a

discount factor which differs from the MDP discount . By choosing a high exploration

discount factor , we do long-term exploration allowing us to find the

optimal policy when exploration is crucial. By choosing a low exploration

discount factor , instead, we perform short-term exploration which may converge faster to a greedy policy. In the evaluation in Section 4.2, we show experiments for which the discount factors are the same, as well as experiments where they differ. Furthermore, we investigate the effect of changing the W-function discount while keeping the Q-function discount fixed.

Second, auxiliary rewards are non-stationary, as the visitation count changes at every step. This leads to a non-stationary target for the Q-function. With our approach, by decoupling exploration and exploitation, we have stationary target for the Q-function while moving all the non-stationarity to the W-function.

Terminal states and stochasticity.

The visitation rewards for training the W-functions penalize terminal state-action pairs (Eq. (10) and (15)). Consequently, once visited, the agent will avoid visiting them again. One may think that this would lead to poor exploration in stochastic environments, where the same state-action pair can lead to both terminal and non-terminal states. In this scenario, trying the same action again in the future may yield better exploration depending on the stochastic transition function. However, as we empirically show in Section 4.2.5, stochasticity in the transition function does not harm our approach.

Pseudo-counts and continuous MDPs.

In the formulation of our method –and later in the experiments section– we have considered only tabular MDPs, i.e., with discrete states and actions. This makes it easy to exactly count state-action pairs and accurately compute visitation rewards and value functions. We acknowledge that the use of counts may be limited to tabular MDPs, as real-world problems are often characterized by continuous states and actions. Nonetheless, pseudo-counts (Tang et al., 2017) have shown competitive performance in continuous MDPs and can be used in place of exact counts without any change to our method. Alternatively, it is possible to replace counts with density models (Ostrovski et al., 2017) with little modifications to our method. Finally, if neural networks would be used to learn the visitation-value function , it may be necessary to introduce target networks similarly to deep Q-networks (Mnih et al., 2013).

4 Evaluation

In this section, we evaluate the proposed method against state-of-the-art methods for exploration in model-free RL on several domains.

The experiments are designed to highlight the challenges of learning with sparse rewards, and are split into two parts. In the first, we present the environments and we address (1) learning when only few states give a reward, (2) learning when a sequence of correct actions is needed to receive a reward, (3) exploring efficiently when few steps are allowed per training episode, (4) avoiding local optima and distracting rewards, and (5) avoiding dangerous states that stop the agent preventing exploration.

In the second, we further investigate (6) the visitation count at convergence, (7) the empirical sample complexity, (8) the impact of the visitation value hyperparameter , (9) the performance in the infinite horizon scenario, and (10) with stochastic transition function.

To ensure reproducibility, the experiments are performed over fixed random seeds. For deterministic MDPs, the seeds are . For stochastic MDPs, the seeds are .

Pseudocode and further details of the hyperparameters are given in Appendix A.

The source code is available at https://github.com/sparisi/visit-value-explore

Baseline Algorithms.

The evaluated algorithms all use Q-learning (Watkins and Dayan, 1992) and share the same hyperparameters, e.g., learning rate and discount factor. In most of the experiments, we use infinite replay memory as presented by Osband et al. (2019).

In Appendix A.5 we also present an evaluation without replay memory on some domains.

The algorithms all learn two separate Q-tables, a behavior one for exploration, and a target one for testing the quality of the greedy policy.

The difference among the algorithms is how the behavior policy performs exploration. In this regard, we compare against the following exploration strategies: random, -greedy, bootstrapped, count-based.

For bootstrapping, we evaluate the original version proposed by Osband et al. (2016a), as well as the more recent versions of D’Eramo et al. (2019) and Osband et al. (2019).

These methods all share the same core idea, i.e., to use an ensemble of Q-tables, each initialized differently and trained on different samples, to approximate a distribution over Q-values via bootstrapping.

The differences are the following. Osband et al. (2016a) select a random Q-table at the beginning of each episodes, and use it until the episode is over. Osband et al. (2019) further regularize the Q-tables using the squared -norm to “prior tables” representing a prior over the Q-function.

D’Eramo et al. (2019) instead select the Q-table randomly within the ensemble at each step to approximate Thompson sampling, but do not use any prior.

For count-based algorithms, we compare against UCB1 exploration (Auer et al., 2002) on the immediate state-action count as in Eq. (5), and against augmenting the reward with the exploration bonus proposed by Strehl and Littman (2008) as in Eq. (6). Notice that Strehl and Littman (2008) apply the exploration bonus to a model-based algorithm, but the same bonus has since then been used by many model-free algorithms (Bellemare et al., 2016; Dong et al., 2020).

More recent methods use more sophisticated bonus to derive strong convergence guarantees (Jin et al., 2018; Dong et al., 2020).

However, for large-size problems these methods may require an impractical number of samples and are often outperformed by standard algorithms.

4.1 Part 1: Discounted Return and States Discovered

We use the following environments: deep sea (Osband et al., 2018, 2019), taxi driver (Asadi and Littman, 2017), and four novel benchmark gridworlds. All the environments have sparse discounted rewards, and each has peculiar characteristics making it challenging to solve.

Evaluation Criteria.

For each environment, we evaluate the algorithms on varying two different conditions: optimistic vs zero initialization of the behavior Q-tables, and short vs long horizon, for a total of four scenarios. For each of them, we report the expected discounted return of , which is greedy over , and the number of visited states over training samples. In all environments, the initial state is fixed.

Optimistic initialization (Lai and Robbins, 1985) consists of initializing the Q-tables to a large value. After visiting a state-action pair, either the agent experiences a high reward confirming his belief about its quality, or the agent experiences a low reward and learns that the action was not optimal and will not execute it again in that state. By initializing the Q-tables to the upper bound we are guaranteed to explore all the state-action pairs even with just an -greedy policy. However, this requires prior knowledge of the reward bounds, and in the case of continuous states and function approximation the notion of a universally “optimistic prior” does not carry over from the tabular setting (Osband et al., 2019). Thus, it is important that an algorithm is able to explore and learn even with a simple zero or random initialization.

The length of the horizon is also an important factor for exploration. If the agent can perform a limited number of actions during an episode, it has to carefully choose them in order to explore as efficiently as possible. Prominent examples in this regard are real-world tasks such as videogames, where the player has limited time to fulfill the objective before the game is over, or robotics, where the autonomy of the robot is limited by the hardware. Thus, it is important that an algorithm is able to explore and learn even when the training episode horizon is short. In the “short horizon” scenario, we allow the agent to explore for a number of steps slightly higher than the ones needed to find the highest reward. For example, if the highest reward is eight steps away from the initial position, the “short horizon” is ten steps. The “long horizon” scenario is instead always twice as long.

Results are first presented in plots showing the number of states discovered and discounted return against training steps averaged over 20 seeds. Due to the large number of evaluated algorithms, confidence intervals are not reported in plots. At the end of the section, a recap table summarizes the results and reports the success of an algorithm, i.e., the number of seeds the algorithm learned the optimal policy, and the percentage of states visited at the end of the learning, both with 95% confidence interval.

4.1.1 Deep Sea

In this environment (Figure 7), the agent (the ship) always starts at the top-left corner of a gridworld of size , and has to descend through the grid to a chest in the bottom-right corner. At the beginning of the episode, either a treasure or a bomb appear inside the chest, with a 50-50. The outcome is known to the agent, as the state consists of the ship position and a flag denoting if the bomb or the treasure has appeared. At every step, the ship descends by one step and must choose to turn left or right. If it hits the left boundary, it will descend without turning. For example, from the starting position , doing “left” will move the ship to , while doing “right” will move it to . The episode ends when the ship reaches the bottom of the grid, and thus the horizon is fixed to . The reward is 1 if the ship finds the treasure, -1 if it finds the bomb, and if the ship goes “right” in any cell along the diagonal (denoted by sea waves). The optimal policy selects always “right” if there is the treasure, otherwise “left” at the initial state and then can act randomly.

The challenge of this environment lies in having to select the same action (“right”) times in a row in order to receive either 1 or -1. Doing “left” even only once prevents the ship from reaching the chest. At the same time, the agent is discouraged from doing “right”, since doing so along the diagonal yields an immediate penalty. Consequently, an -greedy policy without optimistic initialization would perform poorly, as the only short-term reward it receives would discourage it from doing “right”. A random policy is also highly unlikely to reach the chest. In particular, the probability such a policy reaches it in any given episode is . Thus, the expected number of episodes before observing either the bomb or the treasure is . Even for a moderate value of , this is an impractical number of episodes (Osband et al., 2019).

Results.

Figure 8 shows the results on a grid of depth . Since this domain has a fixed horizon, the evaluation does not include the “short vs long horizon” comparison. With zero initialization, only our algorithms and bootstrapping by Osband et al. (2016a) and Osband et al. (2019) discover all states and converge to the optimal policy within steps limit. Both proposed visitation-count-based (blue and orange) outperform the other two, converging twice or more as faster. Bootstrapped exploration with random prior (green) comes second, reproducing the results reported by Osband et al. (2018) and Osband et al. (2019). Results with optimistic initialization are similar, with the main difference being that also approximate Thompson sampling by D’Eramo et al. (2019) (purple) converges. Bootstrapping by Osband et al. (2018) (green) matches the performance of visitation-count-based with UCB (blue), and the two lines almost overlap. For more details about the percentage of successful trials and the percentage of states discovered by each algorithm with confidence intervals, we refer to Table 1.

This evaluation shows that the proposed exploration outperforms state-of-the-art bootstrapping on a domain with a fixed horizon and no local optima. In the next sections, we investigate domains with absorbing states, i.e., that can prematurely end the episode, and distractor reward. In Section 4.2.3, we also present an empirical study on sample complexity on varying the deep sea depth . Furthermore, in Section 4.2.1, we show the state visitation count at the end of the learning for the deep sea and other domains, discussing why the proposed visitation-value-based exploration achieves new state-of-the-art results.

4.1.2 Multi-Passenger Taxi Driver

In this environment (Figure 9), the agent (the taxi) starts at the top-left corner of a grid and has to pick up three passengers and drop them off at a goal (the flag). It can carry multiple passengers at the same time. If one, two, or all three passengers reach the goal, the agent is rewarded with 1, 3, or 15, respectively. Otherwise the reward is 0. The state consists of the taxi position and three booleans denoting if a passenger is in the taxi. The agent can move left, right, up, or down. Black cells cannot be visited. An episode ends if the taxi goes to the flag, even without passengers. The optimal policy picks up all passengers and drops them off in 29 steps33328 steps to pick all passengers and reach the goal, plus an additional action to get the reward..

This domain is challenging for two reasons. First, it admits many locally optimal policies, depending on how many passengers reach the goal. Second, with a short horizon learning to pick up and drop off all passengers is difficult and requires effective exploration.

Results.

In this domain, the “short horizon” consists of 33 steps444Our algorithms could learn also with a horizon of only 29 steps. However, with zero initialization other algorithms performed extremely poorly. We thus decided to give the agent 33 steps., while the “long” of 66. As shown in Figure 10, bootstrapped algorithms perform significantly worse than before. None of them, in fact, learned to pick all passengers if Q-tables are initialized to zero, and the “long horizon” does not help. In particular, random prior bootstrapping (green) converged prematurely due to the presence of local optima (the algorithm does not discover new states after 750-1,000 steps). The proposed algorithms (blue and orange), instead, perform extremely well, quickly discovering all states and then learning the optimal policy. Other algorithms learned to pick one or two passengers, and only the auxiliary visitation bonus (pink) learned to pick all passengers in some seeds but only with a long horizon. With optimistic initialization, most of the algorithms match the performance of our proposed ones. Unsurprisingly, -greedy (gray) learns slowly. Bonus-based exploration (pink) is also slow, either because the small bonus coefficient (see Eq. (6)), or due to the issues discussed in Section 3.1. Only random exploration (light green) still cannot pick all passengers. For the percentage of successful trials and the percentage of states discovered by each algorithm with confidence intervals, we refer to Table 1.

This evaluation shows that the proposed exploration outperforms existing algorithms in the presence of local optima and that its performance is not affected by the length of the horizon. Next, we investigate what happens when we combine the challenges of the deep sea and the taxi.

4.1.3 Deep Gridworld

The first gridworld we propose is inspired by the deep sea domain. In this environment (Figure 11), the agent navigates in a grid by moving left, right, up, or down. All states can be visited, but the blue corridor can be entered only from its left side. The agent can exit the corridor anytime by moving either “up” or “down”. A treasure of value 2 lies at the end of the corridor, while two treasures of value 1 serve as distractors next to the corridor entrance. The corridor is filled with puddles giving a penalty of -0.01. The agent always starts next to the entrance and the episode ends when the agent collects any of the treasures. The optimal policy consists of going always “right”.

The deep gridworld combines the challenges of both the deep sea and the taxi domains. Like the former, to discover the highest reward the agent needs to execute the action “right” multiple times in a row, receiving negative immediate rewards due to the puddles. However, doing a different action does not prevent reaching the end of the corridor, because the agent can still go back to the entrance and try again within the same episode. At the same time, the presence of the two distractor treasures results in local optima, as in the taxi driver domain.

Results.

Because it is possible to exit the corridor without ending the episode, we set a longer horizon compared to the previous domains, i.e., 55 steps for the “short” scenario and 110 for the “long” one. Results shown in Figure 12 confirm previous results. The proposed exploration quickly discovers all states and learns to navigate through the corridor. By contrast, other algorithms, including bootstrapping, get stuck in local optima and learn to collect one of the two lesser treasures. Similarly to the taxi domain, with optimistic initialization all algorithms –but random exploration (light green)– learn to navigate through the corridor.

This evaluation shows that distractor rewards are challenging for existing algorithms but not for ours. Next, we increase the difficulty by adding more distractors and new special states.

The Gridworlds Environments

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/a4e94b5d-0269-413a-8b5c-bd27af2a8fe0/x30.png) Figure 13: The “toy” gridworld.

Figure 13: The “toy” gridworld.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/a4e94b5d-0269-413a-8b5c-bd27af2a8fe0/x31.png) Figure 14: The “prison” gridworld.

Figure 14: The “prison” gridworld.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/a4e94b5d-0269-413a-8b5c-bd27af2a8fe0/x32.png) Figure 15: The “wall” gridworld.

Figure 15: The “wall” gridworld.

4.1.4 Gridworlds

These environments (Figure 15, 15, and 15) share the same structure. The agent navigates in a grid by moving left, right, up, or down. Black cells cannot be visited, while any action in the prison has only an arbitrarily small probability of success. If it fails, the agent does not move. In practice, the prison almost completely prevents any further exploration. The reward is 0 everywhere except in treasure or penalty cells and is given for executing an action in the state, i.e., on transitions. Treasure cells (denoted by a money bag or a green number) give a bonus reward of different magnitude and end the episode. Penalty cells (denoted by a red number) give a penalty reward and end the episode. The agent also gets a small penalty of -0.01 at each step. The goal, thus, is to find the biggest treasure using as few steps as possible. The initial position is fixed such that it is far from the biggest treasure and close to the smallest one.

Similarly to the deep gridworld, these domains are designed to highlight the difficulty of learning with many distractors (the smaller treasures) and, thus, of many local optima. The addition of the constant penalty at each step further discourages exploration and makes the distractors more appealing, since ending the episode will stop receiving penalties. Each domain has increasing difficulty and introduces additional challenges, as we explain below.

The “toy” gridworld. We start with a simple gridworld with one single reward of value 1, as shown in Figure 15. The focus of this domain is learning with sparse reward, without distractors or additional difficulties. The optimal policy takes only nine steps to reach the treasure, and the “short horizon” scenario ends after eleven steps.

The “prison” gridworld. Shown in Figure 15, this domain increases the value of the furthest reward to 5, adds two distractors of value 1 and 2, and adds a prison cell. The first distractor is close to the initial position, while the second is close to the goal reward. The prison cell is also close to the goal. The optimal policy navigates around the first distractor, then right below the prison, and finally up to the goal. The prison

highlights the importance of seeking states with low long-term visitation count, rather than low immediate count. As discussed in Section 3.1, in fact, current count-based algorithms cannot deal with these kinds of states efficiently.

The “wall” gridworld. Shown in Figure 15, the last gridworld is characterized by increased grid size () and reward magnitude, and by the wall separating the grid into two areas.

The first area, where the agent starts, has six small rewards (both treasures and penalties). The second area has two bigger treasures in the upper-right and bottom-right corners, of value 500 and 10,000, respectively. The optimal policy brings the agent beyond the wall and then to the bottom right corner, where the highest reward lies. The wall significantly increases the difficulty, due to the narrow passage that the agent needs to find in order to visit the second area of the grid. Learning is even harder when the horizon is short, as the agent cannot afford to lose time randomly looking for new states. To increase the chance of success of all algorithms, we set the “short horizon” to 330 steps, and 135 are needed to reach the reward of 10,000.

Results.

The gridworld environments confirm previous results. Without distractors (toy gridworld, Figure 16), bootstrapped algorithms (green and red) perform well, and so does using the auxiliary visitation bonus (pink). Neither, however, match ours (blue and orange, almost overlapping). Increasing the horizon helps the remainder algorithms, including random exploration (light green), except for approximate Thompson sampling (purple).

In the next gridworld, however, the distractors and the prison cell substantially harm all algorithms except ours (Figure 17). Without optimistic initialization, in fact, existing algorithms cannot find the highest reward even with a long horizon, and all converge to local optima. This behavior was expected, given the study of Section 3.1. Finally, the wall gridworld results emphasize even more the superiority of our algorithms (Figure 18). With zero initialization, in fact, every other algorithm cannot go beyond the wall and find even the reward of 500. The results also stress that using the UCB reward visitation value (blue) over the count reward (orange) performs slightly best.

This evaluation strengthens the findings of previous experiments. First, it stresses how difficult it is for existing algorithms to learn with distracting rewards and a short horizon. Second, it shows that our proposed approach overcomes these challenges. Next, we show how efficiently the algorithms explore in terms of final visitation count and sample complexity.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/a4e94b5d-0269-413a-8b5c-bd27af2a8fe0/legend_hor.png)

| Algorithm | Discovery (%) | Success (%) | |

|---|---|---|---|

| Ours (UCB Reward) | |||

| Ours (Count Reward) | |||

| Rand. Prior (Osband 2019) | |||

| Bootstr. (Osband 2016a) | |||

| Deep Sea | Bootstr. (D’Eramo 2019) | ||

| UCB1 (Auer 2002) | |||

| Expl. Bonus (Strehl 2008) | |||

| -greedy | |||

| Random | |||

| Ours (UCB Reward) | |||

| Ours (Count Reward) | |||

| Rand. Prior (Osband 2019) | |||

| Bootstr. (Osband 2016a) | |||

| Taxi | Bootstr. (D’Eramo 2019) | ||

| UCB1 (Auer 2002) | |||

| Expl. Bonus (Strehl 2008) | |||

| -greedy | |||

| Random | |||

| Ours (UCB Reward) | |||

| Ours (Count Reward) | |||

| Rand. Prior (Osband 2019) | |||

| Bootstr. (Osband 2016a) | |||

| Deep Gridworld | Bootstr. (D’Eramo 2019) | ||

| UCB1 (Auer 2002) | |||

| Expl. Bonus (Strehl 2008) | |||

| -greedy | |||

| Random | |||

| Ours (UCB Reward) | |||

| Ours (Count Reward) | |||

| Rand. Prior (Osband 2019) | |||

| Bootstr. (Osband 2016a) | |||

| Gridworld (Toy) | Bootstr. (D’Eramo 2019) | ||

| UCB1 (Auer 2002) | |||

| Expl. Bonus (Strehl 2008) | |||

| -greedy | |||

| Random | |||

| Ours (UCB Reward) | |||

| Ours (Count Reward) | |||

| Rand. Prior (Osband 2019) | |||

| Bootstr. (Osband 2016a) | |||

| Gridworld (Prison) | Bootstr. (D’Eramo 2019) | ||

| UCB1 (Auer 2002) | |||

| Expl. Bonus (Strehl 2008) | |||

| -greedy | |||

| Random | |||

| Ours (UCB Reward) | |||

| Ours (Count Reward) | |||

| Rand. Prior (Osband 2019) | |||

| Bootstr. (Osband 2016a) | |||

| Gridworld (Wall) | Bootstr. (D’Eramo 2019) | ||

| UCB1 (Auer 2002) | |||

| Expl. Bonus (Strehl 2008) | |||

| -greedy | |||

| Random |

4.2 Part 2: In-Depth Investigation

In this section, we present an in-depth analysis of issues associated with learning with sparse rewards, explaining in more detail why our approach outperformed existing algorithms in the previous evaluation. We start by reporting the visitation count at the end of the learning, showing that our algorithms explore the environment uniformly. We continue with a study on the impact of the visitation discount , showing how it affects the performance of the W-function. Next, we present the empirical sample complexity analysis of all algorithms on the deep sea domain, showing that our approach scales gracefully with the number of states. Finally, we evaluate the algorithms in the infinite horizon setting and in stochastic MDPs, evaluating the accuracy of the Q-function and the empirical sample complexity. Even in these scenarios, our approach outperforms existing algorithms.

Deep Sea

Deep Grid

Grid (Prison)

Grid (Wall)

Ours

Bootstr.

UCB1

Expl. Bonus

-greedy

4.2.1 Visitation Count at the End of the Learning

In Section 3.3 and 3.4, we discussed that the proposed behavior policies guarantee that an action is not executed twice before all actions are executed once, under the uniform count assumption. We also acknowledged that this assumption cannot hold in practice because of state resets, or because the agent may need to revisit the same state to explore new ones. For example, in the deep sea, the agent needs to traverse diagonal states multiple times to visit every state. Nonetheless, in this section, we empirically show that our approach allows the agent to explore the environment as uniformly as possible.

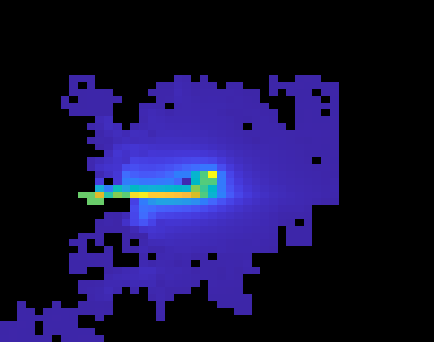

Figure 19 reports the state visitation count at the end of learning in the “short-horizon zero-initialization” scenario for some domains.

Only the proposed method (first column) uniformly explores all states. Recall, in fact, that episodes reset after some steps or when terminal states are reached. Thus initial states (which are fixed) are naturally visited more often. The count in our method uniformly decreases proportionally to the distance from the initial states. This denotes that at each episode, starting from the same state, the agent followed different paths, exploring new regions of the environment uniformly.

Other algorithms suffer from distractors and local optima.

In particular, UCB1 (third column) explores very slowly. The reason is that the agent myopically selects actions based on the immediate count, which makes exploration highly inefficient. By selecting the action with the lowest immediate count, the agent does not take into account where the action will lead it, i.e., if future states have already been visited or not.

By contrast, our approach achieves deep exploration by taking into account the long-term visitation count of future states.

The performance of bootstrapping (second column) is peculiar. It immediately converges to the first reward found in the gridworlds, barely exploring afterward, but performs very well on the deep sea.

This hints that bootstrapping may be sensitive to any kind of reward, including negative penalties.

In the deep sea, in fact, diagonal states provide the only intermediate feedback besides the final reward/penalty. Coincidentally, traversing the diagonal also leads to the only positive reward.

The agent may thus be guided by the reward received on the diagonal, even if it is a negative penalty.

This behavior can be explained by recalling that Osband et al. (2019) regularize TD learning with the -distance from a prior Q-table. The agent may therefore be attracted to any state providing some kind of feedback in order to minimize the regularization.

4.2.2 Impact of Visitation Value Discount Factor

Here, we investigate the effect of the visitation discount factor on the “toy” gridworld (Figure 15). Figure 20 shows that the higher the discount, the better. As expected, with the algorithms behave very similarly to UCB1. In this case, in fact, the proposed approach and UCB1 are equivalent. However, the learning curves are not exactly the same because the W-function is updated with TD learning at every step.

The better exploration of our approach is also confirmed by Figure 21, showing the count at the end of the learning. The higher , the more uniform the exploration is because with a higher discount the agent will look further in the future for visitation rewards. With smaller , instead, the agent rarely discovers states far from the initial position, as it does not look for future rewards. As a rule of thumb, it is appropriate to have equal or larger than .

Grid (Toy)

4.2.3 Empirical Sample Complexity on the Deep Sea

Here, we propose the same evaluation presented by Osband et al. (2018) to investigate the empirical sample complexity of the proposed algorithms, and to assess how they scale to large problems. Figure 22 plots training steps to learn the optimal policy as a function of the environment size . Only our approach (blue and orange) scales gracefully to large problem sizes. Results suggest an empirical scaling of for the visitation-value-based with count reward, and even smaller for UCB reward. Bootstrapping attains an empirical complexity of , confirming the findings of Osband et al. (2019). However, in many cases () there was one seed for which bootstrapping either did not learn within the step limit (green dashed line, empty dots) due to premature convergence, or learned after substantially more steps than the average (red dots, large error bar). Missing algorithms (random, -greedy, UCB1, approximate Thompson sampling) performed extremely poorly, often not learning within 500,000 steps even for small , and are thus not reported.

Timestep

Timestep

State

State

State

4.2.4 Infinite Horizon Stochastic Chainworld

This evaluation investigates how the algorithms perform in a stochastic MDP with infinite horizon. In particular, we are interested in (1) the mean squared value error (MSVE) at each timestep, (2) the sample complexity when varying the number of states, and (3) how behavior policies explore and if they converge to the greedy policy.

Evaluation Criteria.

The MSVE is computed between the true value function of the optimal policy and the learned value function of the current policy , i.e.,

| (30) |

The value function is computed according to the Bellman expectation equation in matrix form, i.e., , where is the identity matrix, and and are the transition and reward functions induced by the policy, respectively (Sutton and Barto, 2018). This error indicates how much the learned greedy policy deviates from the optimal policy.

Similarly, the sample complexity is defined as the number of timesteps such that the non-stationary policy at time is not -optimal for current state , i.e, (Strehl and Littman, 2008; Dong et al., 2020).

For the behavior of exploration policies and their empirical convergence, we show how the visitation count changes over time.

MDP Characteristics.