Local Reweighting for Adversarial Training

Local Reweighting for Adversarial Training

Ruize Gao1,† Feng Liu2,† Kaiwen Zhou1 Gang Niu3 Bo Han4 James Cheng1

1Department of Computer Science and Engineering, The Chinese University of Hong Kong, HKSAR, China

2DeSI Lab, Australian AI Institute, University of Technology Sydney, Sydney, Australia

3RIKEN Center for

Advanced Intelligence Project (AIP), Tokyo, Japan

4Department of Computer Science, Hong Kong Baptist University, HKSAR, China

†Equal Contribution

Emails: [email protected], [email protected], [email protected], [email protected],

[email protected], [email protected]

Abstract

Instances-reweighted adversarial training (IRAT) can significantly boost the robustness of trained models, where data being less/more vulnerable to the given attack are assigned smaller/larger weights during training. However, when tested on attacks different from the given attack simulated in training, the robustness may drop significantly (e.g., even worse than no reweighting). In this paper, we study this problem and propose our solution—locally reweighted adversarial training (LRAT). The rationale behind IRAT is that we do not need to pay much attention to an instance that is already safe under the attack. We argue that the safeness should be attack-dependent, so that for the same instance, its weight can change given different attacks based on the same model. Thus, if the attack simulated in training is mis-specified, the weights of IRAT are misleading. To this end, LRAT pairs each instance with its adversarial variants and performs local reweighting inside each pair, while performing no global reweighting—the rationale is to fit the instance itself if it is immune to the attack, but not to skip the pair, in order to passively defend different attacks in future. Experiments show that LRAT works better than both IRAT (i.e., global reweighting) and the standard AT (i.e., no reweighting) when trained with an attack and tested on different attacks.

1 Introduction

A growing body of research shows that neural networks are vulnerable to adversarial examples, i.e., test inputs that are modified slightly yet strategically to cause misclassification [4, 11, 12, 16, 20, 26, 30, 39]. It is crucial to train a robust neural network to defend against such examples for security-critical computer vision systems, such as autonomous driving and medical diagnostics [7, 17, 19, 20, 26]. To mitigate this issue, adversarial training methods have been proposed in recent years [2, 13, 18, 25, 29, 32]. By injecting adversarial examples into training data, adversarial training methods seek to train an adversarial-robust deep network whose predictions are locally invariant in a small neighborhood of its inputs [1, 15, 22, 23, 27, 34].

Due to the diversity and complexity of adversarial data, over-parameterized deep networks have insufficient model capacity in adversarial training (AT) [38]. To obtain a robust model given fixed model capacity, Zhang et al. [38] suggest that we do not need to pay much attention to an instance that is already safe under the attack, and propose instance-reweighted adversarial training (IRAT), which performs global reweighting with a given attack. To identify safe/non-robustness instances, they propose a geometric distance between natural data points and current class boundary. Instances being closer to/farther from the class boundary is more/less vulnerable to the given attack, and should be assigned larger/smaller weights during AT. This geometry-aware IRAT (GAIRAT) significantly boosts the robustness of the trained models when facing the given attack.

However, when tested on attacks that are different from the given attack simulated in IRAT, the robustness of IRAT drops significantly (e.g., even worse than no reweighting). First, we find that a large number of instances are actually overlooked during IRAT. Figure 1(a) shows that, for approximately four-fifths of the instances, their corresponding adversarial variants are assigned very low weights (less than ). Second, we find that the robustness of the IRAT-trained classifier drops significantly when facing an unseen attack. Figures 1(b) and 1(c) show that the classifier trained by GAIRAT (with projected gradient descent (PGD) attack [18]) has lower robustness when attacked by the unseen Carlini and Wagner attack (CW) [5] compared with the robustness of standard adversarial training (SAT) [18].

In this paper, we investigate the reasons behind this phenomenon. Unlike the common scenario of the classification problem where the training and testing data are fixed, there are different adversarial variants for the same instance in AT, e.g., PGD-based or CW-based adversarial variants. A natural question comes with this—whether there are inconsistent vulnerabilities in the view of different attacks? The answer is affirmative. Figure 2 visualizes this phenomenon using t-SNE [28]. The subfigures in Figure 2 visualize inconsistent vulnerable instances in different views using t-SNE. The red dots in all subfigures represent consistently vulnerable instances between different views, while the blue dots represent the inconsistent vulnerable instances. From both the SAT-trained classifier (Figure 2(a)-2(d)) and GAIRAT-trained classifier (Figure 2(e)-2(h)), we can clearly see that a large number of vulnerable instances are inconsistent (the blue dots dominate in all the subfigures).

Given the above investigation, we argue that the safeness of instances is attack-dependent, that is, for the same instance, its weight can change given different attacks based on the same model. Thus, if the attack simulated in training is mis-specified, the weights of IRAT are misleading. In order to ameliorate this pessimism of IRAT, we propose our solution—locally reweighted adversarial training (LRAT). As shown in Figure 3, LRAT pairs each instance with its adversarial variants and performs local reweighting inside each pair, while performing no global reweighting. The rationale of LRAT is to fit the instance itself if it is immune to the attack, and in order to passively defend different attacks in future, LRAT does not skip the pair. For the realization of LRAT, we propose a general vulnerability-based reweighting strategy that is applicable to various attacks instead of the geometric distance that is only compatible with the PGD attack [38].

Our experimental results show that LRAT works better than both SAT (i.e., no reweighting) and IRAT (i.e., global reweighting) when trained with an attack but tested on different attacks. For other existing adversarial training methods, e.g., TRADES [36], we also design LRAT for TRADES [36] (i.e., LRAT-TRADES). Our results also show that LRAT-TRADES works better than both TRADES and IRAT-TRADES.

2 Adversarial Training

In this section, we briefly review existing adversarial training methods [18, 38]. Let be the input feature space with a metric , and be the closed ball of radius centered at in . The dataset , where , . We use to denote a deep neural network parameterized by . Specifically, predicts the label of an input instance via:

| (1) |

where denotes the predicted probability (softmax on logits) of belonging to class .

2.1 Standard Adversarial Training

The objective function of SAT [18] is

| (2) |

where . The selected is the most adversarial variant within the -ball center at . The loss function is a composition of a base loss (e.g., the cross-entropy loss) and an inverse link function (e.g., the soft-max activation), where is the corresponding probalility simplex. Namely, . PGD [18] is the most common approximation method for searching the most adversarial variant. Starting from , PGD (with step size ) works as follows:

| (3) |

where is the number of iterations; refers to the starting point that natural instance (or a natural instance perturbed by a small Gaussian or uniformly random noise); is the corresponding label for ; is the adversarial variant at step ; is the projection function that projects the adversarial variant back into the -ball centered at if necessary.

2.2 Geometry-Aware Instance-Reweighted Adversarial Training

GAIRAT is a typical IRAT proposed by Zhang et al. [38]. GAIRAT argues that natural training data farther from/close to the decision boundary are safe/non-robustness, and should be assigned with smaller/larger weights. Let be the geometry-aware weight assignment function on the loss of the adversarial variant , where the generation of follows SAT. GAIRAT aims to

| (4) |

Eq. (4) rescales the loss using a function . This function is non-increasing w.r.t. GD, which is defined as the least steps that the PGD method needs to successfully attack the natural instances. The method then normalizes to ensure that and . Finally GAIRAT employs a bootstrap period in the initial part of the training by setting , thereby performing regular training and ignoring the geometric-distance of input .

3 The Limitation of IRAT: Inconsistent Vulnerability in Different Views

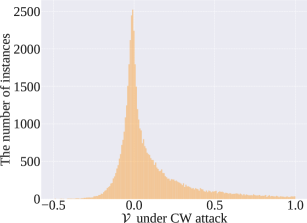

The difference between Eq. (2) and Eq. (4) is the addition of the geometry-aware weight . According to GAIRAT [38], more vulnerable instances should be assigned larger weights. However, the relative vulnerability between instances may vary in different situations, such as for different adversarial variants. As shown in Figure 4, represents the selected variable to measure the vulnerability between the classifier and adversarial variants, and the smaller , the more vulnerable is the instance, which is formally defined in Section 4.3. The dark yellow and dark blue are top-20% vulnerable instances in the view of PGD and CW, respectively. The frequency distribution of dark yellow in Figure 4(b) and dark blue in Figure 4(c) is clearly different, and the frequency distribution of dark blue in Figure 4(e) and dark yellow in Figure 4(f) is different. Namely, the most vulnerable 10,000 PGD adversarial variants and the most vulnerable 10,000 CW adversarial variants are not from the same 10,000 instances. As a consequence of this inconsistency, if the attack simulated in training is mis-specified, the weights of IRAT are misleading.

4 Locally Reweighted Adversarial Training

To break the limitation of IRAT and train a robust classifier against various attacks, we propose LRAT in this section, for which we perform local reweighting instead of global/no reweighting.

4.1 Motivation of LRAT

The Reweighting is Beneficial. As suggested by GAIRAT [38], the global reweighting indeed improves the robustness when tested on the given attack simulated in training. Figures 1(b) and 1(c) also show that, as a global reweighting, GAIRAT improves the robustness against PGD (when PGD is simulated in training). Thus, when training and testing on the same attack, the rationale that we do not need to pay much attention to an already-safe instance under the attack is significant.

Local Reweighting can Take Care of Various Attacks Simultaneously. As introduced in Section 3, there is inconsistent vulnerability between instances in different views. Thus, we should perform local reweighting inside each pair, while performing no global reweighting—the rationale is to fit the instance itself if it is immune to the attack, but not to skip the pair, in order to passively defend different attacks in future. In addition, it is inefficient (practically impossible) to simulate all attacks in training, so a gentle lower bound on the instance weights is necessary to defend against potentially adaptive adversarial attacks.

4.2 Learning Objective of LRAT

Let be the weight assignment function on the loss of adversarial variant . The inner optimization for generating depends on attacks, such as PGD (Eq. (2)). The outer minimization is:

| (5) |

where is the number of instances in one mini-batch; is the number of used attacks; is a constant representing the minimum weight sum of each instance; the notation stands for . We impose two constraints on our objective Eq. (5): the first constraint ensures that and the second constraint ensures that . The non-negative coefficient assigns some weight to the natural data term, which serves as a gentle lower bound to avoid discarding instances during training. It can also be seen that different weights are assigned to different adversarial variants, respectively. LRAT pairs each instance with its adversarial variants and performs local reweighting inside each pair. Figure 3 provides an illustrative schematic of the learning objective of LRAT. If , , and is generated by PGD, LRAT recovers the SAT [18], which assigns equal weights to the losses of PGD adversarial variant.

4.3 Realization of LRAT

The objective in Eq. (5) implies the optimization process of an adversarially robust network, with one step generating adversarial variants from natural counterparts and then reweighting loss on them, and one step minimizing the reweighted loss w.r.t. the model parameters .

It is still an open question how to calculate the optimal for different variants. Zhang et al. [38] heuristically design some non-increasing functions , such as:

| (6) |

where , , and . is the GD defined as the least steps that the PGD method needs to successfully attack the natural instance. is the maximally allowed steps.

However, the efficacy of this heuristic reweighting function is limited to PGD. When CW is simulated in training, Figure 5 shows the same decrease as in Figure 1 (c) when tested on CW.

Therefore, in this section, we propose a general vulnerability-based and attack-dependent reweighting strategy to calculate the weights of the corresponding variants:

| (7) |

where is a predetermined function that measures the vulnerability between the classifier and a certain adversarial variant, and is a decreasing function of the variable .

The generations of adversarial variants follow different rules under different attacks. For example, the adversarial variant generated by PGD misleads the classifier with the lowest probability of predicting the correct label [18]. In contrast, the adversarial variant generated by CW misleads the classifier with the highest probability of predicting a wrong label [5]. Motivated by these different generating processes, we define the vulnerability in the view of PGD and CW in the following.

Definition 1 (Vulnerability in the view of PGD).

In the view of PGD, the vulnerability regarding (generated by PGD) is defined as

| (8) |

where denotes the predicted probability (softmax on logits) of belonging to the true class .

For the PGD-based adversarial variant, the lower predicted probability it has on true class, the smaller in Eq. (8).

Definition 2 (Vulnerability in the view of CW).

In the view of CW, the vulnerability regarding (generated by CW) is defined as

| (9) |

where denotes the predicted probability (softmax on logits) of (CW) belonging to the true class . The denotes the maximum predicted probability (softmax on logits) of (CW) belonging to the false class ().

For the CW-based adversarial variant, the relatively higher predicted probability it has on the false class, the larger in Eq. (9). In this paper, we consider the decreasing function with the form

| (10) |

where and are hyper-parameters of each attack. In Eq. (10), adversarial variants with higher are given lower weights. We present our locally reweighted adversarial training in Algorithm 1. LRAT uses different given attacks (e.g., PGD, Algorithm 2 in Appendix A) to obtain different adversarial variants, and leverages the attack-dependent reweighting strategy for obtaining their corresponding weights. For each mini-batch, LRAT reweights the loss of different adversarial variants according to our vulnerability-based reweighting strategy, and then updates the model parameters by minimizing the sum of the reweighted loss on each instance.

| Methods | Natural | PGD | CW | AA |

| ResNet-18 | ||||

| AT | 82.88 | 51.16 0.13 | 49.74 0.17 | 48.57 0.11 |

| GAIRAT | 80.97 | 56.29 0.19 | 45.77 0.13 | 32.57 0.15 |

| LRAT | 82.80 | 53.01 0.13 | 50.49 0.16 | 48.60 0.20 |

| WRN-32-10 | ||||

| AT | 83.42 | 53.13 0.18 | 52.26 0.14 | 46.21 0.14 |

| GAIRAT | 82.11 | 62.74 0.08 | 44.63 0.18 | 44.63 0.18 |

| LRAT | 83.02 | 55.01 0.19 | 53.72 0.26 | 46.13 0.15 |

| Methods | Natural | PGD | CW | AA | ||||

| ResNet-18 | ||||||||

| Epoch | 60th | 100th | 60th | 100th | 60th | 100th | 60th | 100th |

| TRADES | 78.90 | 83.15 | 53.26 0.17 | 52.62 0.19 | 51.74 0.16 | 50.38 0.18 | 48.15 0.12 | 47.80 0.11 |

| GAIR-TRADES | 78.55 | 82.19 | 60.90 0.18 | 58.17 0.13 | 43.39 0.16 | 41.27 0.19 | 35.29 0.14 | 33.24 0.18 |

| LRAT-TRADES | 78.83 | 82.91 | 55.21 0.15 | 54.77 0.17 | 52.97 0.23 | 52.09 0.14 | 48.24 0.19 | 47.81 0.20 |

| WRN-32-10 | ||||||||

| Epoch | 60th | 100th | 60th | 100th | 60th | 100th | 60th | 100th |

| TRADES | 82.96 | 86.21 | 55.11 0.16 | 54.27 0.18 | 54.19 0.19 | 53.09 0.13 | 52.14 0.12 | 51.70 0.15 |

| GAIR-TRADES | 82.20 | 85.35 | 63.34 0.17 | 61.27 0.19 | 45.31 0.12 | 43.32 0.14 | 37.82 0.13 | 35.88 0.09 |

| LRAT-TRADES | 82.74 | 85.99 | 57.68 0.13 | 56.89 0.19 | 55.12 0.23 | 54.27 0.17 | 52.17 0.14 | 51.78 0.24 |

5 Experiments

In this section, we justify the efficacy of LRAT using networks with various model capacity. In the experiments, we consider -norm bounded perturbation that in both training and evaluations. All images of the CIFAR-10 are normalized into [0,1].

5.1 Baselines

We compare LRAT with the no-reweighting strategy (i.e., SAT [18]) and the global-reweighting strategy (i.e., GAIRAT [38]). Rice et al. [24] show that, unlike in standard training, overfitting in robust adversarial training decays test set performance during training. Thus, as suggested by Rice et al. [24], we compare different methods on the performance of the best checkpoint model (the early stopping results at epoch 60). Besides, we also design LRAT for TRADES [36], denoted as (LRAT-TRADES), and the details of the algorithm are in Appendix B. Accordingly, we also compare LRAT-TRADES with TRADES [36] and GAIR-TRADES [38]. Trades-based methods effectively mitigate the overfitting Rice et al. [24], so we compare different methods on both the best checkpoint model and the last checkpoint model (used by Madry et al. [18]), respectively.

5.2 Experimental Setup

We employ the small-capacity network, ResNet (ResNet-18) [14], and the large-capacity network, Wide ResNet (WRN-32-10) [35]. Our experimental setup follows previous works [18, 31, 38]. All networks are trained for 100 epochs using SGD with momentum. The initial learning rate is , divided by at epoch and , respectively. The weight decay is 0.0035. For generating the PGD adversarial data for updating the network, -norm bounded perturbation ; the maximum PGD step ; step size . For generating the CW adversarial data [5], we follow the setup in [3, 37], where the confidence , and other hyper-parameters are the same as that of PGD above. Robustness to adversarial data is the main evaluation indicator in adversarial training [6, 8, 9, 10, 21, 33, 40]. Thus, we evaluate the robust models based on four evaluation metrics, i.e., standard test accuracy on natural data (Natural), robust test accuracy on adversarial data generated by projected gradient descent attack (PGD) [18], Carlini and Wagner attack (CW) [5] and AutoAttack (AA). In testing, -norm bounded perturbation , the maximum PGD step , and step size . There is a random start in training and testing, i.e., uniformly random perturbations ( and ) added to natural instances. Due to the random start, we test our methods and baselines five times with different random seeds.

5.3 Performance Evaluation

Tables 1 and 2 report the medians and standard deviations of the results. In our experiments of LRAT, we simulate the PGD and CW in training ( in Eq. (5)). For adversarial variants, we use our vulnerability-based reweighting strategy (Eq. (7)) to obtain their corresponding weights, where follows Eq. (8) and follows Eq. (9). We choose the three hyper-parameters ( in Eq. (5), in Eq. (10)) that , , and , and we analyze it in Appendix C.

Compared with SAT, LRAT significantly boosts adversarial robustness under PGD and CW, and the efficacy of LRAT does not diminish under AA. The results show that our local-reweighting strategy is superior to the no-reweighting strategy. Compared with GAIRAT, LRAT has a great improvement under CW and AA. Although GAIRAT improves the performance under PGD, it is not enough to be an effective adversarial defense. The adversarial defense could be described as a barrel Effect. For example, if there is a short slab, the barrel would leak. Similarly, if there is a weakness in defending against some attacks, the classifier would fail to predict. In contrast, LRAT reduces the threat of any potential attacks as an effective defense. Thus, the results also show that our local-reweighting strategy is superior to the global-reweighting strategy. In general, the results affirmatively confirm the efficacy of LRAT. We admit that LRAT has minor improvement under AA (AA does not simulate in training), and the reason behind the limited improvement is the inconsistent vulnerability in different views. Since it is impractical to simulate all attacks in training, and thus we recommend that practitioners simulate multiple attacks and assign some weight to the natural (or PGD adversarial) data term for each instance during training.

| Symbol | Objective function | Symbol | Objective function |

| (P) | ([N]) | ||

| (C) | ([P]) |

| Ablation | Natural | PGD | CW | AA |

| (P) | 80.21 | 52.66 0.14 | 49.20 0.22 | 47.72 0.09 |

| (C) | 79.55 | 50.57 0.10 | 51.13 0.16 | 47.81 0.12 |

| (P+C) | 82.40 | 53.52 0.15 | 50.71 0.08 | 47.80 0.17 |

| ([N]+P+C) | 82.80 | 53.01 0.13 | 50.49 0.16 | 48.60 0.20 |

| ([P]+P+C) | 82.08 | 53.33 0.18 | 50.60 0.19 | 48.90 0.12 |

5.4 Ablation Study

This subsection validates that each component in LRAT can improve the adversarial robustness. P (or C) represents that only PGD (or CW) with its corresponding reweighting strategy is simulated during training. [N] (or [P]) represents assigning some weights to the natural (or PGD adversarial) data, which serves as a gentle lower bound to avoid discarding instances during training. The objective functions are in Table 3, where is the adversarial data under PGD, and is the adversarial data under CW. The results are reported in Table 4. Results show that the robustness of global reweighting (P) and (C) is lower than that of the other three local reweighting when tested on attacks different from the given attack simulated during training. Results also show that the robustness of ([N]+P+C) and ([P]+P+C) is higher than that of the other three with no lower bounds when tested on AA, which confirms that a gentle lower bound on instance weights is useful for defending against potentially adaptive adversarial attacks.

6 Conclusion

It has been showing great potential to improve adversarial robustness by reweighting adversarial variants during AT. This paper provides a new perspective to this promising direction and aims to train a robust classifier to defend against various attacks. Our proposal, locally reweighted adversarial training (LRAT), pairs each instance with its adversarial variants and performs local reweighting inside each pair. LRAT will not skip any pairs during adversarial training such that it can passively defend against different attacks in future. Experiments show that LRAT works better than both IRAT (i.e., global reweighting) and the standard AT (i.e., no reweighting) when trained with an attack and tested on different attacks. As a general framework, LRAT provides insights on how to design powerful reweighted adversarial training under any potentially adversarial attacks.

References

- Bai et al. [2019] Y. Bai, Y. Feng, Y. Wang, T. Dai, S.-T. Xia, and Y. Jiang. Hilbert-based generative defense for adversarial examples. In ICCV, 2019.

- Balunovic and Vechev [2019] M. Balunovic and M. Vechev. Adversarial training and provable defenses: Bridging the gap. In ICLR, 2019.

- Cai et al. [2018] Q.-Z. Cai, M. Du, C. Liu, and D. Song. Curriculum adversarial training. In IJCAI, 2018.

- Carlini and Wagner [2017a] N. Carlini and D. Wagner. Adversarial examples are not easily detected: Bypassing ten detection methods. In Proceedings of the 10th ACM Workshop on Artificial Intelligence and Security, 2017a.

- Carlini and Wagner [2017b] N. Carlini and D. Wagner. Towards evaluating the robustness of neural networks. In CVPR, 2017b.

- Carlini et al. [2019] N. Carlini, A. Athalye, N. Papernot, W. Brendel, J. Rauber, D. Tsipras, I. Goodfellow, A. Madry, and A. Kurakin. On evaluating adversarial robustness. arXiv preprint arXiv:1902.06705, 2019.

- Chen et al. [2015] C. Chen, A. Seff, A. Kornhauser, and J. Xiao. Deepdriving: Learning affordance for direct perception in autonomous driving. In ICCV, 2015.

- Chen et al. [2020] T. Chen, S. Liu, S. Chang, Y. Cheng, L. Amini, and Z. Wang. Adversarial robustness: From self-supervised pre-training to fine-tuning. In CVPR, 2020.

- Cohen et al. [2019] J. Cohen, E. Rosenfeld, and Z. Kolter. Certified adversarial robustness via randomized smoothing. In ICML, 2019.

- Du et al. [2021] X. Du, J. Zhang, B. Han, T. Liu, Y. Rong, G. Niu, J. Huang, and M. Sugiyama. Learning diverse-structured networks for adversarial robustness. In ICML, 2021.

- Finlayson et al. [2019] S. G. Finlayson, J. D. Bowers, J. Ito, J. L. Zittrain, A. L. Beam, and I. S. Kohane. Adversarial attacks on medical machine learning. Science, 2019.

- Gao et al. [2021] R. Gao, F. Liu, J. Zhang, B. Han, T. Liu, G. Niu, and M. Sugiyama. Maximum mean discrepancy is aware of adversarial attacks. In ICML, 2021.

- Goodfellow et al. [2015] I. J. Goodfellow, J. Shlens, and C. Szegedy. Explaining and harnessing adversarial examples. In ICLR, 2015.

- He et al. [2016] K. He, X. Zhang, S. Ren, and J. Sun. Deep residual learning for image recognition. In CVPR, 2016.

- He et al. [2018] W. He, B. Li, and D. Song. Decision boundary analysis of adversarial examples. In ICLR, 2018.

- Kurakin et al. [2017] A. Kurakin, I. Goodfellow, S. Bengio, et al. Adversarial examples in the physical world. In ICLR, 2017.

- Ma et al. [2021] X. Ma, Y. Niu, L. Gu, Y. Wang, Y. Zhao, J. Bailey, and F. Lu. Understanding adversarial attacks on deep learning based medical image analysis systems. Pattern Recognition, 2021.

- Madry et al. [2018] A. Madry, A. Makelov, L. Schmidt, D. Tsipras, and A. Vladu. Towards deep learning models resistant to adversarial attacks. In ICLR, 2018.

- Miyato et al. [2017] T. Miyato, A. M. Dai, and I. Goodfellow. Adversarial training methods for semi-supervised text classification. In ICLR, 2017.

- Nguyen et al. [2015] A. Nguyen, J. Yosinski, and J. Clune. Deep neural networks are easily fooled: High confidence predictions for unrecognizable images. In CVPR, 2015.

- Pang et al. [2019] T. Pang, K. Xu, C. Du, N. Chen, and J. Zhu. Improving adversarial robustness via promoting ensemble diversity. In ICML, 2019.

- Papernot et al. [2016] N. Papernot, P. McDaniel, A. Sinha, and M. Wellman. Towards the science of security and privacy in machine learning. arXiv:1611.03814, 2016.

- Raghunathan et al. [2020] A. Raghunathan, S. M. Xie, F. Yang, J. Duchi, and P. Liang. Understanding and mitigating the tradeoff between robustness and accuracy. In ICML, 2020.

- Rice et al. [2020] L. Rice, E. Wong, and Z. Kolter. Overfitting in adversarially robust deep learning. In ICML, 2020.

- Shafahi et al. [2020] A. Shafahi, M. Najibi, Z. Xu, J. Dickerson, L. S. Davis, and T. Goldstein. Universal adversarial training. In AAAI, 2020.

- Szegedy et al. [2014] C. Szegedy, W. Zaremba, I. Sutskever, J. Bruna, D. Erhan, I. Goodfellow, and R. Fergus. Intriguing properties of neural networks. In ICLR, 2014.

- Tsipras et al. [2019] D. Tsipras, S. Santurkar, L. Engstrom, A. Turner, and A. Madry. Robustness may be at odds with accuracy. In ICLR, 2019.

- Van der Maaten and Hinton [2008] L. Van der Maaten and G. Hinton. Visualizing data using t-sne. Journal of machine learning research, 2008.

- Wang et al. [2020a] H. Wang, T. Chen, S. Gui, T.-K. Hu, J. Liu, and Z. Wang. Once-for-all adversarial training: In-situ tradeoff between robustness and accuracy for free. In NeurIPS, 2020a.

- Wang et al. [2019] Y. Wang, X. Ma, J. Bailey, J. Yi, B. Zhou, and Q. Gu. On the convergence and robustness of adversarial training. In ICML, 2019.

- Wang et al. [2020b] Y. Wang, D. Zou, J. Yi, J. Bailey, X. Ma, and Q. Gu. Improving adversarial robustness requires revisiting misclassified examples. In ICLR, 2020b.

- Wu et al. [2020] D. Wu, S.-T. Xia, and Y. Wang. Adversarial weight perturbation helps robust generalization. In NeurIPS, 2020.

- Yang et al. [2021] S. Yang, T. Guo, Y. Wang, and C. Xu. Adversarial robustness through disentangled representations. In AAAI, 2021.

- Yang et al. [2020] Y.-Y. Yang, C. Rashtchian, H. Zhang, R. Salakhutdinov, and K. Chaudhuri. A closer look at accuracy vs. robustness. In NeurIPS, 2020.

- Zagoruyko and Komodakis [2016] S. Zagoruyko and N. Komodakis. Wide residual networks. In BMVC, 2016.

- Zhang et al. [2019] H. Zhang, Y. Yu, J. Jiao, E. P. Xing, L. E. Ghaoui, and M. I. Jordan. Theoretically principled trade-off between robustness and accuracy. In ICML, 2019.

- Zhang et al. [2020a] J. Zhang, X. Xu, B. Han, G. Niu, L. Cui, M. Sugiyama, and M. Kankanhalli. Attacks which do not kill training make adversarial learning stronger. In ICML, 2020a.

- Zhang et al. [2021] J. Zhang, J. Zhu, G. Niu, B. Han, M. Sugiyama, and M. Kankanhalli. Geometry-aware instance-reweighted adversarial training. In ICLR, 2021.

- Zhang et al. [2020b] Y. Zhang, Y. Li, T. Liu, and X. Tian. Dual-path distillation: A unified framework to improve black-box attacks. In ICML, 2020b.

- Zhu et al. [2021] J. Zhu, J. Zhang, B. Han, T. Liu, G. Niu, H. Yang, M. Kankanhalli, and M. Sugiyama. Understanding the interaction of adversarial training with noisy labels. arXiv preprint arXiv:2102.03482, 2021.

Appendix A Adversarial Attack

Appendix B TRadeoff-inspired Adversarial DEfense via Surrogate-loss Minimization

The objective function of TRadeoff-inspired Adversarial DEfense via Surrogate-loss minimization (TRADES) [36] is

| (13) |

where

| (14) |

is a regularization parameter. Others remain the same with standard adversarial training. The approximation method for searching adversarial data in TRADES is as follows:

| (15) |

Instead of the loss function in Eq. (3), TRADES use that the divergence between the prediction of natural data and their adversarial variants, i.e.:

| (16) |

TRADES generates the adversatial data with a maximum predicted probability distribution difference from the natural data, instead of generating the most adversarial data in AT. We also design LRAT for TRADES that LRAT-TRADES in Algorithm 4.

Appendix C Experimental Details

Weighting Normalization. In our experiments, we impose another constraint on our objective Eq. (5):

| (17) |

to implement a fair comparison with baselines.

Selection of Hyper-parameters. It is an open question how to define the decreasing function in Eq. (7). (or given the definition of in Eq. (10), how to select the hyper-parameters under different situations.) In our experiments, given an alternative value set , we choose in Eq. (10) that , , and given an alternative value set , we choose in Eq. (5) that . Our experiments show that the performance has a minor variation between different hyper-parameters, and we choose the optimal hyper-parameters depending on whose robustness attacked by PGD is the best.

Natural Data Term. To avoid discarding instances during training, we impose the non-negative coefficient in Eq. (5) to assign some weight to the natural data term. The definition of the natural data term can vary as required by different tasks. When practitioners only focus on the robustness under attacks simulated in training, this term can be eliminated. When practitioners focus on the robustness under attacks simulated in training and the accuracy on natural data, this term can be defined as the loss of the natural instance. When practitioners focus on the robustness under attacks both simulated and unseen in training, this term can be defined as the loss of the adversarial variant. In our experiments, natural data term in LRAT (the ([N]+P+C) in our ablation study) is the loss sum of the natural instance and the PGD adversarial variant, which aims to achieve robustness and accuracy.

Appendix D Experimental Resources

We implement all methods on Python (Pytorch ) with a NVIDIA GeForce RTX 3090 GPU with AMD Ryzen Threadripper 3960X 24 Core Processor. The CIFAR-10 dataset and the SVHN dataset can be downloaded via Pytorch. See the codes submitted. Given the images from the CIFAR-10 training set and digits from the SVHN training set, we conduct the adversarial training on ResNet-18 and Wide ResNet-32 for classification. DNNS are trained using SGD with momentum, the initial learning rate of and the batch size of for epochs.

Appendix E Additional Experiments on the SVHN

We also justify the efficacy of LRAT on the SVHN. In the experiments, we employ ResNet-18 and consider -norm bounded perturbation that in both training and evaluations. All images of the SVHN are normalized into [0,1]. Tables 5 reports the medians and standard deviations of the results. Compared with SAT, LRAT significantly boosts adversarial robustness under PGD and CW, and the efficacy of LRAT does not diminish under AA. Compared with GAIRAT, LRAT has a great improvement under CW and AA.

| Methods | Natural | PGD | CW | AA |

| AT | 92.38 | 55.97 0.18 | 52.90 0.21 | 47.84 0.17 |

| GAIRAT | 90.31 | 62.96 0.10 | 47.17 0.17 | 38.74 0.19 |

| LRAT | 92.30 | 59.76 0.14 | 55.47 0.18 | 47.65 0.27 |

Appendix F Discussions on the defense against unseen attacks

As a general framework, LRAT provides insights on how to design powerful reweighting adversarial training under different adversarial attacks. Duo to the inconsistent vulnerability in different views, reweighting adversarial training has the risk of weakening the ability to defend against unseen attacks. Thus, we recommend that practitioners simulate diverse attacks during training. Note that it does not mean that practitioners should use different attacks indiscriminately—for instance, during standard adversarial training, mixing some weak adversarial data into PGD adversarial data will weaken the robustness on the contrary. The recommended diversity is diverse information focused on during adversarial data generation, such as the difference between misleading the classifier with the lowest probability of predicting the correct label (PGD) and misleading the classifier with the highest probability of predicting a wrong label (CW).