Local Graph Embeddings Based on Neighbors Degree Frequency of Nodes

Abstract.

We propose a local-to-global strategy for graph machine learning and network analysis by defining certain local features and vector representations of nodes and then using them to learn globally defined metrics and properties of the nodes by means of deep neural networks. By extending the notion of the degree of a node via Breath-First Search, a general family of parametric centrality functions is defined which are able to reveal the importance of nodes. We introduce the neighbors degree frequency (NDF), as a locally defined embedding of nodes of undirected graphs into euclidean spaces. This gives rise to a vectorized labeling of nodes which encodes the structure of local neighborhoods of nodes and can be used for graph isomorphism testing. We add flexibility to our construction so that it can handle dynamic graphs as well. Afterwards, the Breadth-First Search is used to extend NDF vector representations into two different matrix representations of nodes which contain higher order information about the neighborhoods of nodes. Our matrix representations of nodes provide us with a new way of visualizing the shape of the neighborhood of a node. Furthermore, we use these matrix representations to obtain feature vectors, which are suitable for typical deep learning algorithms. To demonstrate these node embeddings actually contain some information about the nodes, in a series of examples, we show that PageRank and closeness centrality can be learned by applying deep learning to these local features. Our constructions are flexible enough to handle evolving graphs. In fact, after training a model on a graph and then modifying the graph slightly, one can still use the model on the new graph. Moreover our methods are inductive, meaning that one can train a model based on a known graph and then apply the trained model on another graph with a similar structure. Finally, we explain how to adapt our constructions for directed graphs. We propose several methods of feature engineering based on higher order NDF embeddings and test their performance in several specific machine learning algorithms on graphs.

Key words and phrases:

Graph Embeddings, Vector Representation of Nodes, Matrix Representation of Nodes, Local Features of Graphs, Centrality Measures, Closeness Centrality, PageRank, Parametric Centrality Measures, Dynamic Graphs, Inductive Graph Deep Learning Models, Graph Machine Learning, Graph Feature Engineering.1. Introduction

A fundamental challenge in graph machine learning is that graphs in real world applications (aka networks) have a large and varying number of nodes (vertices) and edges (links or relationships). Therefore machine learning models on evolving graphs require a considerable time for feature extraction, training and inference. This limits their practical usage in real time applications concerning dynamic graphs. On the other hand, many graph algorithms and machine learning algorithms on graphs rely on operations involving the adjacency matrix or the Laplacian matrix of graphs which are computationally very expensive on large graphs. Time complexity problem also arises in the computation of various globally defined centrality measures, such as closeness centrality. Therefore, for machine learning on large dynamic graphs and generally for large network analytics, one wishes for two things: feature engineering based on local structures of graphs and inductive algorithms suitable for dealing with dynamic graphs.

In this work, we try to fulfill both these wishes by proposing various locally defined embeddings of nodes into euclidean vector spaces and then designing inductive algorithms to learn globally defined features and properties of nodes of graphs. We only use the adjacency lists of graphs and never get involved with complexity of matrix computations on adjacency matrices or Laplacian matrices of graphs. Among other benefits, this makes our methods more suitable for parallel and distributed computations, although we do not propose any parallel algorithm in this article.

The adjective “local” in our nomenclature has a very specific and concrete meaning. It means we use the Breadth-First Search (BFS) algorithm to traverse around the neighborhood of a node and collect the local information. Therefore, implementations of our methods may include a natural number parameter to determine how many layers of the BFS should be performed. Another basic concept that we use is the degree of nodes. Only by combining these two very basic notions, we are able to design several ruled based node representations that contain a great deal of information about the local structure of the neighborhoods of nodes.

These representations of nodes have several theoretical and machine learning applications. They can be used in graph isomorphism testing. They gives rise to a local approximation of neighborhoods of nodes by simple trees. They provide a simple way of visualizing the neighborhoods of nodes as color maps. They can be considered as feature vectors to be fed into deep learning algorithms to learn global properties of nodes. Finally we show how one can perform feature engineering on them to extract richer feature vectors suitable for specific downstream tasks. These applications are just the tip of iceberg and we expect our methods of graph representation find more applications in other areas of machine learning on graphs and network analysis.

We begin with exploiting the BFS and the degrees of nodes to define a general family of parametric centrality functions on undirected graphs in Section 2. These centrality functions are defined as certain summations. We demonstrate the prospect of these parametric centrality functions on very small graphs appearing in network science. However, they are not our primary objective, but they serve us mainly as the simple prototypes and introductory constructions that guide us to more sophisticated methods and constructions.

To achieve our aforementioned goals in machine learning on huge dynamic graphs, we propose a decomposition of the degrees of nodes into vectors in Section 3. Our way of decomposing the degrees of nodes, which is the main source of our various vector representations, is the counting of occurrences of different degrees among the neighbors of nodes, hence the name neighbors degree frequency or shortly NDF. It is a vector representation of nodes, namely a mapping that maps a node to its NDF vector inside a euclidean vector space. In other words, it is a node embedding. The NDF vector of a node contains not only the information of its degree, but also the information of the degrees of its neighbors. This two layers of information naturally lays out a perspective of the neighborhood of the node and it can be interpreted and utilized in several ways. For instance, we show that the NDF vector of a node amounts to an approximation of its neighborhood by means of a tree of height 2. We also interpret the NDF vectors as a labeling of nodes that needs to be preserved by isomorphisms of graphs. In this way, NDF vectors and their higher order generalizations find application in graph isomorphism testing. The details of these topics are presented in Subsections 3.2 and 5.2.

Whenever there exit a larger number of different degrees in the graph, bookkeeping of the frequency of degrees among neighbors of nodes can increase the space complexity of algorithms. On the other hand, the set of occurring degrees in a dynamic graph can change with every node/edge added or removed from the graph. Therefore we propose a form of aggregation in the degree frequency counting in Section 4. This gives rise to a diverse family of dynamic NDF vector representations which are capable of both reducing the dimension of the NDF vectors and handling changes in dynamic graphs easily. Dynamic NDF vectors depend on how we partition the set of possible degrees in a graph, so we discuss several practical and intuitively reasonable ways of implementing these partitions.

Two layers of information about each node does not seem enough for employing NDF vectors as feature vectors to fed into deep learning algorithms. Therefore we extend the definition of NDF vector representations into two different higher order NDF matrix representations in Section 5. Every row of these matrix representations of nodes is an aggregation of NDF vectors of layers of the BFS (NDFC matrices) or the degree frequency of layers (CDF matrices). Besides using these matrix representations as feature vectors in deep learning algorithms, they have other applications. Like NDF vectors, higher order NDF matrices can be used in graph isomorphism testing. They perform better than color refinement algorithm in several examples. One can also use these matrices to visualize the neighborhoods of nodes in the form of color maps.

In Section 6, we flatten NDFC matrices as one dimensional arrays and consider them as feature vectors associated to nodes of graphs. We feed these feature vectors into a simple feedforward neural network model and obtain a supervised deep learning algorithm to learn and predict PageRanks of nodes in undirected graphs. We test some other ways of feature engineering and a special form of convolutional neural networks too. We also show how our method for learning PageRank is applicable for predicting PageRank in dynamic and evolving graphs and even in unseen graph.

We delve further into feature engineering using our higher order NDF matrix representations in Section 7. We consider the un-normalized version of CDF matrices and perform a specific type of aggregation on them. The result is a set of feature vectors suitable for learning the closeness centrality of nodes by means of feedforward neural networks. Our method in this section are also inductive and easily applicable to dynamic graphs.

PageRanks of nodes are usually computed in directed graphs, specifically in the network of webpages and hyperlinks in the internet. Therefore one would like to extend the method developed for learning PageRanks of undirected graphs, in Section 6, to directed graphs. We explain how NDF embeddings and their higher order generalizations can be defined on directed graphs in Section 8. We also propose a minor change in the definition of NDFC matrices which is needed to take into account the adverse effect of certain directed edges in PageRank. Afterwards, we apply the directed version of NDFC matrices for learning the PageRanks of directed graphs.

Our methods and constructions depend only on very basic concepts in graph theory. On the other hand, they have shown several early successes in learning PageRank and closeness centrality by means of deep learning. Therefore we believe the theory of NDF embeddings and its higher order generalizations will find a wide range of applications in graph theory, network science, machine learning on graphs and related areas. Besides, it appears that our constructions are new in graph theory and computer science, and so there are so many details that need a through investigation to mature the subject.

Related works. The author is new in network science, graph representation learning and machine learning on graphs and does not claim expertise in these research areas. That being said, we believe almost all constructions and methods developed in this article are new and have not appeared in the work of other researchers. However, near to the completion of this work, we found out that a very special case of what we call dynamic NDF vectors appeared before in the work of Ai et al [1]. They introduced a centrality measure based on the distribution of various degrees among the neighbors of nodes. They use iterated logarithm to associate a vector to each node. In our terminology, this vector is the dynamic NDF vector associated to the list of starting points , see [1] and Section 4 for details and precise comparison. We also notice that what we call the NDF-equivalence among nodes of a graph coincides with the coloring of the nodes obtained after two rounds of color refinement, see Subsection 3.2 for details.

In the following sections, all graphs are assumed undirected and simple, with no loops and multiple edges, except in Section 8 where we deal with directed graphs. We denote graphs using the notation , meaning is the graph, is the set of its vertices that we call them nodes, and is the set of edges of the graph.

2. Parametric Centrality Functions

The Breadth-First Search algorithm in graphs has a mathematical interpretation in terms of a metric structure on the set of nodes. Here, we review this point of view and use it to associate a certain sequence of numbers to each node of a graph. We show how these sequences of numbers may be used to construct parametric centrality measures applicable in network science.

In mathematics, a metric space is a set equipped with a metric function such that for all , we have

-

(i)

(symmetry).

-

(ii)

, and the equality holds if and only if (positivity).

-

(iii)

(triangle inequality).

A metric space is usually denoted by the pair .

The natural metric space structure on the set of nodes of a graph is defined by the distance of every two nodes as the metric function. We recall that the distance of two nodes is defined as the number of edges in any shortest path connecting and . In order to this definition makes sense, here, we assume that is connected. With this assumption, it is easy to verify the axioms of a metric space for . By adding to the target space of the metric function and defining the distance of two nodes in two distinct components to be , one can extend this definition to all undirected graphs, possibly disconnected. We use this extended definition when the graph under consideration is not connected. Although is a metric on the set , we may interchangeably call it the natural metric on the graph . One notes that this metric structure on induces a discrete topology on , meaning every subset of is both open and closed in this topology. Therefore we drop the terms open and closed in our discussion.

The notion of a metric function extends the euclidean distance in . Thus similar to intervals, disks and balls in , and , respectively, we can define corresponding notions in any metric space as well. However, since most graph drawings are in two dimensions, we borrow the terms ”disk” and ”circle” from to use in our terminology.

Given an undirected graph , for every node and integers , we define the disk of radius centered at by

and similarly, the circle of radius centered at is defined by

Next, computing the cardinality of the above circles defines a sequence of functions on , i.e.

Here stands for the word “size” and let us call this sequence the sizes of circles around a node. Now, we are ready to define the general family of parametric functions on graphs.

Definition 2.1.

Let be an undirected graph. To any given sequence of real numbers, we associate a parametric function defined by

We call this function the parametric centrality function associated with .

When is known, we may drop it from our notation. We note the followings:

Remarks 2.2.

-

(i)

Since we are dealing with finite graphs, the number of non-zero functions in the sequence is bounded by the diameter of the graph (plus one). Therefore the above series is always finite and well defined.

-

(ii)

By choosing and otherwise, we get the degree centrality (up to a scaling factor).

-

(iii)

In practice, we let only first few parameters in to be non-zero. Thus these parametric functions are indeed locally defined features on graph nodes.

- (iv)

-

(v)

Even the sequence contains some information about the local structure of the graph around its nodes. For instance, comparing the value against another local feature of the graph gives rise to a measure of the inter-connectivity of neighborhoods of nodes, see Proposition 3.4.

-

(vi)

The elements of the sequence increase in higher density neighborhoods. Alternatively, one can use the sequence (or better for some ) in lieu of to define a family of parametric functions such that they decrease on nodes in higher density neighborhoods.

-

(vii)

When nodes or edges are weighted, one can incorporate those weights in the definition of the sequence to obtain more meaningful centrality measures on graph nodes.

In the following examples, we expand on the prospect of the parametric centrality functions in network science. Here, we consider a relatively simple way of defining the sequence of parameters. For some , we set for and . For the sake of simplicity, let us call the parametric centrality function associated to this the -centrality function and denote it simply by . It is interesting to note that this very simple family of parametric centrality measures can mimic (to some extent) some of the well known centrality measures.

Example 2.3.

Let be the network of neighboring countries in a continent, i.e. the nodes are countries and two countries are connected if they share a land border. It is a reasonable assumption that the financial benefits of mutual trades between two countries decrease by some factor for every other country lying in a shortest path connecting them. Therefore the total economical benefits of continental trade for each country depends on both the distance of countries and the number of other countries in each circle around the country. In mathematical terms, for a given country , the financial benefits of trade between and countries with distance from is , because there are exactly countries in any shortest path connecting them. The total benefits of continental trade is the summation over all distances , i.e. and this is exactly . Therefore the -centrality function models the total financial benefits of continental trade for each country according to the graph structure of this network (and our assumptions!).

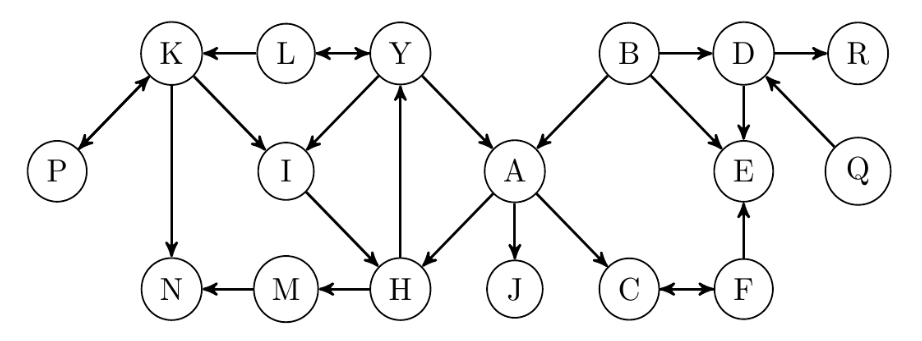

We use the graph shown in Figure 1 in several occasions in this paper to explain various concepts and constructions. It was originally designed to explain the content of Section 3. However, it appears to be explanatory for prospects of parametric centrality functions as well.

Example 2.4.

Let be the undirected graph shown in Figure 1. We heuristically set and compute -centrality function on nodes of . The result is divided by the scaling factor 21.15 in order to be comparable to closeness centrality. In Table 1, we compare the sorted dictionary of -centrality function with the sorted dictionaries of closeness, eigenvector and betweenness centrality measures (computed using the Python package NetworkX [4]). In each case, we arrange the result in decreasing order to show that the order of nodes according to -centrality function is similar to the order with respect to closeness centrality. One observes that those nodes which are not in the same order happen to have very close -centrality as well as closeness centrality. The order of values of -centrality function shows less similarity with the order of values of eigenvector centrality. One can also spot more disarray while comparing -centrality function with betweenness centrality. In Table 2, we compare the values of -centrality function (again divided by the scaling factor 21.15) with the values of closeness centrality. It shows that -centrality function fluctuates around closeness centrality with an average error of about 3.938 percent.

The diameter, the maximum distance between two nodes, of graph is 7. So for computing -centrality function, we only need to consider circles of radius at most 7. If we restrict our computations to circles with strictly smaller radiuses, we get less accurate results. For instance, the average percentage of differences between closeness and -centrality (the last row of Table 2) will be 4.149 percent (respectively 4.411, 5.868, 13.401, 30.941 and 65.599 percent) if we use circles of radius at most 6 (respectively 5, 4, 3, 2 and 1). This suggests that the higher maximum radius, the more precise estimation of closeness centrality. It also shows that by restricting our computations to circles of radius at most 5, we lose about 0.473 percentage of accuracy, but it decreases the computational burden. Therefore, in larger graphs, the maximum radius of circles involved in the computation can be a notable hyperparameter to establish a balance between precision and time complexity of the computation. Finally we must mention that in a low maximum radius computation of -centrality, one should consider a different scaling factor to achieve a better estimation. For example, in the computation of -centrality function of involving circles of at most radius 2 and with the scaling factor 15.7, the average percentage of differences drops to 14.631 percent (from 30.941 percent with the scaling factor 21.15).

| Closeness | -Centrality | Eigenvector | Betweenness | ||||

| Centrality | Function | Centrality | Centrality | ||||

| A | 0.485 | A | 0.485 | Y | 0.444 | A | 0.613 |

| H | 0.444 | H | 0.448 | A | 0.437 | B | 0.346 |

| Y | 0.432 | Y | 0.437 | H | 0.436 | H | 0.261 |

| B | 0.410 | B | 0.418 | I | 0.347 | D | 0.242 |

| I | 0.364 | I | 0.378 | K | 0.236 | Y | 0.229 |

| C | 0.356 | D | 0.361 | B | 0.224 | K | 0.156 |

| L | 0.348 | E | 0.353 | L | 0.211 | I | 0.128 |

| M | 0.340 | C | 0.351 | M | 0.175 | M | 0.086 |

| D, E, J | 0.333 | K | 0.348 | C | 0.165 | C | 0.083 |

| F | 0.314 | L | 0.347 | E | 0.143 | L | 0.061 |

| K | 0.308 | M | 0.336 | D | 0.141 | E | 0.046 |

| N | 0.291 | F | 0.311 | J | 0.136 | F, N | 0.021 |

| Q, R | 0.254 | J | 0.310 | N | 0.128 | J, P, Q, R | 0.0 |

| P | 0.239 | N | 0.298 | F | 0.096 | ||

| Q, R | 0.235 | P | 0.073 | ||||

| P | 0.227 | Q, R | 0.044 | ||||

| Node | Closeness | -Centrality | Percentage of |

| Centrality | Function | Difference | |

| A | 0.485 | 0.485 | 0.014% |

| H | 0.444 | 0.448 | 0.766% |

| B | 0.410 | 0.418 | 1.898% |

| Y | 0.432 | 0.437 | 0.941% |

| I | 0.364 | 0.378 | 3.852% |

| C | 0.356 | 0.351 | 1.149% |

| L | 0.348 | 0.347 | 0.127% |

| E | 0.333 | 0.353 | 5.886% |

| D | 0.333 | 0.361 | 8.155% |

| J | 0.333 | 0.310 | 7.041% |

| M | 0.340 | 0.336 | 1.289% |

| F | 0.314 | 0.311 | 0.759% |

| K | 0.308 | 0.348 | 12.979% |

| N | 0.291 | 0.298 | 2.594% |

| Q | 0.254 | 0.235 | 7.381% |

| R | 0.254 | 0.235 | 7.381% |

| P | 0.239 | 0.227 | 4.739% |

| Average percentage of Differences | 3.938% | ||

In the next example, we again compare certain -centrality functions to closeness centrality on three famous graphs in network science and we obtain similar results as the above example.

Examples 2.5.

We use the Python library NetworkX, [4], for generating the following graphs and for computing the closeness centrality of their nodes. To compute -centrality functions for all three cases, we use circles of radius at most 5. We refer the reader to [4] for corresponding references for each graph.

-

(i)

For Zachary’s Karate Club graph, we choose . Then by dividing the -centrality function by the scaling factor , we get an estimation of the closeness centrality function by an average error of about 2 percent.

-

(ii)

For the graph of Florentine families, we set and let the scaling factor be . Then, the average differences between -centrality function and closeness centrality is about 3.416 percent.

-

(iii)

For the graph of co-occurrence of characters in the novel Les Miserables, we set and let the scaling factor be . In this case, the average differences between -centrality function and closeness centrality is about 5.46 percent.

A word of caution is in order regarding the above examples. Our primary goal is not to prove that -centrality function is similar to (or approximates) other centrality measures. These examples only suggest that -centrality functions and more generally parametric centrality functions can equally be considered as certain measures to evaluate the importance of nodes in a graph. Furthermore, since we compute parametric centrality functions for each node based on its local neighborhood, the computation of these functions are more practical and computationally cheaper than other centrality measures, which are computed based on the information of the whole graph. Therefore, in certain applications in network science, they may offer cheaper and more flexible substitutes for well-known centrality measures.

In certain problems in network science, the factor can be the probability of some interaction (transmission of information or diseases, etc) between nodes in a network. In these cases, one might need to define in lieu of for and the appropriate parametric centrality function would be . The bottom line is our definition has enough flexibility to be useful in many situations occurring in real world problems in network science.

Counting the number of nodes in each circle (layer of the BFS) around graph nodes reveals only a small portion of the local structure of graphs. To extract more refined local information of each node, we continue with constructing a vector representation of nodes in the next section.

3. The Neighbors Degree Frequency (NDF) Vector Representation of Nodes

In this section, we construct the simple version of our graph embedding and discuss its interpretations, usages and shortcomings. This leads us to more mature versions of our construction in upcoming sections.

Definition 3.1.

Let be an undirected graph and let be the maximum degree of nodes in . For every node , we define a vector by setting to be the number of neighbors of of degree for all . We call the function the vanilla neighbors degree frequency embedding of nodes of , and briefly vanilla NDF.

The main point of our definition is to count the frequency of various degrees among neighbors of a node, hence the name Neighbors Degree Frequency and the acronym NDF. We basically decompose the degree of a node into a vector consisting of degree frequencies of its neighbors, as one computes . Using , one can also find an upper bound for the size of the circle of radius 2 around , i.e. , see Proposition 3.4.

The vanilla version of neighbors degree frequency embedding has three obvious shortcomings: First it is wasteful, because there might exist numbers between 1 and that never occur as a degree of a node in . For example, consider a star shape graph with 10 nodes. It has 9 nodes with degree 1 that are adjacent to the central node of degree 9. The vanilla NDF requires 9 euclidean dimensions, but only the first and the last entries are used in the image of the mapping . To remedy this issue, we propose a minimal version of NDF embedding in Definition 3.2 below.

The second problem occurs when networks grow. Since the nodes with higher degrees usually attract most of the new links (for instance, because of preferential attachment process), there is a good chance that the maximum degree of graph nodes increases in growing graphs, and so a fixed wouldn’t be sufficient for the above definition any more. This issue on small dynamic graphs has an easy solution: One can count all nodes of degree greater or equal to as degree .

The third problem is the curse of dimensionality for graphs having nodes with very high degrees. For instance, graphs appearing in social networks may contain nodes with millions of links. Clearly, a vector representation in a euclidean space of several million dimensions is not a practical embedding!. For the case of big dynamic graphs, we propose a dynamic version of NDF in Section 4. Our solution for big dynamic graph is perfectly applicable to the above cases too. However, since the vanilla and minimal NDF vector representations have several conceptual and theoretical importance, we devote a few more paragraphs to them.

Definition 3.2.

Let be an undirected graph. Let be the ascending list of all occurring degrees of nodes in , which we call it the degrees list of the graph . The minimal neighbors degree frequency embedding of nodes of is defined as

where is the number of neighbors of of degree , for all .

The minimal NDF is essentially the same as vanilla NDF except that we have omitted never occurring degrees while recording the frequency of degrees of neighbors of each node. The important point we need to emphasize here is that we substitute the role played by the “maximum degree” of graph nodes with “degrees list” of the graph. This paves the way for more variations of NDF by upgrading the concept of “degrees list” into “intervals list”. This is our main pivot to define more practical versions of NDF for huge dynamic graphs in Section 4. In the following example, we examine some aspects of vanilla (and minimal) NDF:

Example 3.3.

Let be the graph shown in Figure 1. The ascending list of degrees in is . Hence the minimal NDF and the vanilla NDF are the same and they map the nodes of into . The NDF vector representations of nodes of are shown in Table 3. One immediately notices the followings:

-

(i)

Nodes are all of degree 3, but they have very different NDF vector representations. This shows how NDF is much more detailed criterion than degree centrality in classifying nodes.

-

(ii)

Nodes and have the same NDF vector representations, because their neighbors have similar degree frequency. But the circles of radius 2 around them do not have the same size. In fact, one easily checks that and . This hints that in order to define a more faithful representation of nodes, we cannot content ourselves to just NDF vectors. Using the sequence is one way to gain more in depth knowledge of the neighborhood topology. We shall also propose two extensions of NDF vectors to circles of higher radius around a node to extract more information about the bigger neighborhoods of nodes in Section 5.

-

(iii)

Nodes and cannot be distinguished from each other by all these measures. They essentially have the same statistics of degree frequency and circles sizes at all levels. In this case, it is clear that the permutation which only switches these two nodes is an automorphism on (an isomorphism from into itself). Therefore it is expected that they have the same NDF vector representation, see also Example 3.9.

| Node(s) | NDF Vector |

|---|---|

| A | (1, 1, 1, 2, 0) |

| D | (2, 0, 2, 0, 0) |

| K | (1, 2, 1, 0, 0) |

| Y, H | (0, 1, 1, 1, 1) |

| B | (0, 0, 1, 1, 1) |

| E | (0, 1, 1, 1, 0) |

| I | (0, 0, 0, 3, 0) |

| C | (0, 1, 0, 0, 1) |

| F | (0, 1, 1, 0, 0) |

| L | (0, 0, 0, 2, 0) |

| N, M | (0, 1, 0, 1, 0) |

| J | (0, 0, 0, 0, 1) |

| P, Q, R | (0, 0, 0, 1, 0) |

3.1. Tree approximations of neighborhoods of nodes

One might interpret vanilla and minimal NDF vector representations of nodes as an extension of degree of nodes into an embedding of nodes into vector spaces. However there is a more explanatory picture of NDF which is the subject of this subsection. Here we explain how NDF gives rise to an approximation of local neighborhoods of nodes by means of trees. The following proposition with minor modifications is valid for the minimal NDF too. For the sake of simplicity, we state it for the vanilla NDF only. First, we need some notations.

Let be an undirected graph. We recall that the ego subgraph of a graph centered at of radius , which we denote it by , is the induced graph whose set of nodes equals , the disk of radius around , and whose set of edges consists of those edges of that both ends belong to , see [4].

Proposition 3.4.

With the notation of Definition 3.1, we define a function by setting , i.e. is defined as the dot product of and the vector . Then, for every , we have the followings:

-

(i)

, for all .

-

(ii)

If is a tree, then .

-

(iii)

Let be the graph obtained from by omitting the edges whose both ends lie in . Then if and only if is a tree.

Proof.

Given a node , let denote , the set of neighbors of besides . Then we have

Taking the cardinality of both sides of this inclusion proves (i).

Assertion (ii) follows from (iii). To prove (iii), we only need to notice that the equality is logically equivalent to the presence of the following two conditions:

And this equivalence is clear from the above inclusion. ∎

Example 3.5.

In this example, we apply the content of the above proposition to some selected nodes of graph in Figure 1.

-

(i)

One computes and . This is because is not a tree; first and are not subsets of , secondly .

-

(ii)

We observe that is a tree and we have .

-

(iii)

We have , but the ego subgraph is not a tree, because of the triangle . However, the graph is a tree (after omitting the edge (B, D) from ).

The insight gained in Proposition 3.4 leads us to the following concept of local approximation:

Remark 3.6.

For every node in a graph , we define a rooted tree of height 2 as follows: We call the root of the tree and the set of its children is . In other words the children of the root are indexed by neighbors of . Next, for every neighbor of , we associate children to the node . With this setting in place, the intuition behind NDF and Proposition 3.4 is that the vanilla (and minimal) NDF simplifies (or approximates) every local neighborhood of a node in a graph as the tree . In fact, if and only if there is an isomorphism of graphs such that .

As an example for the last statement of the above remark consider node in the graph in Figure 1. Then the mapping defined as , , , , , and is an isomorphism of graphs, so .

This approximation is similar to the role played by tangent spaces in geometry of smooth manifolds, because they locally approximate the neighborhood of points on a manifold by means of flat vector spaces. For example consider the tangent line at a point in a smooth curve lying in . At a small neighborhood of that point, the tangent line is a locally good approximation of the curve. Here by local approximation, we mean that there is an invertible function between an open neighborhood of the point on the curve and an open interval around zero in the tangent line such that both and are smooth mappings.

One notes that this analogy is not perfect. For instance, tangent spaces vary “smoothly” when points vary smoothly in the manifold. But there is no notion of smoothness in graphs (at least nothing that I am aware of), because graph nodes are essentially discrete creatures. Another important gap in this analogy is the notion of “dimension”: In smooth (or even topological) manifolds, every point in a connected manifold enjoys a tangent space with the same dimension, which is the dimension of the manifold. Again, we do not have a notion of dimension in graphs or trees yet (to my knowledge). However this analogy helps us to think about higher order approximations of the neighborhood of a node in the graph. In Section 5, we expand the idea of counting the frequency of degrees to higher circles around each node.

3.2. NDF-equivalence as a requirement for graph isomorphisms

Another application of NDF embeddings is in graph isomorphism testing problem. It starts with the observation that if is an isomorphism between two graphs and , then for every node in , we must have . Therefore, vanilla NDF can serve as an easy test for two graphs not being isomorphic. In other words, a necessary condition for two undirected graphs to be isomorphic is that the vanilla NDF embeddings of them should have the same image with the same multiplicity for each element in the image. When studying the group of automorphisms of a graph, vanilla NDF can be used similarly to filter the set of potential permutations of nodes eligible to be an automorphism of the graph, see Part (ii) of Example 3.8 and Example 3.9. In order to formulate these ideas in mathematical terms, we need to define two levels of equivalence relations; between nodes and between graphs.

Definition 3.7.

-

(i)

Two nodes in an undirected graph are called NDF-equivalent if their vanilla NDF vectors are the same. The partition of induced by this equivalence relation is called the NDF-partition.

-

(ii)

Let and be two undirected graphs. The graphs and are called NDF-equivalent if the following conditions hold:

-

(a)

The vanilla NDF embedding on them has the same target space, equivalently the maximum degrees of and are equal.

-

(b)

The images of the vanilla NDF embedding on and are equal, that is .

-

(c)

For every vector , the inverse images of in and have the same number of nodes. That is

-

(a)

These equivalence relations are defined with respect to vanilla NDF. Similar definitions are possible for other types of NDF embeddings including higher order NDF embeddings. When it is necessary to distinguish between them, we will add an adjective (for example “vanilla’ or “minimal”, etc) to specify what kind of NDF was used to define the equivalence. It is straightforward to see that two isomorphic graphs are NDF-equivalent. But the converse is not true, even for trees, see Part (i) of the following example:

Example 3.8.

Let and be the following graphs:

-

(i)

Clearly, and are not isomorphic, but they are NDF-equivalent, see Table 4.

-

(ii)

If an automorphism of (resp. ) does not change nodes 1 and 9, it must be the trivial automorphism. On the other hand, since nodes 1 and 9 are NDF-equivalent in graph (resp. ), every non-trivial automorphism on (resp. ) should switch nodes 1 and 9. Therefore, with a little of analysis, one observes that there is only one non-trivial automorphism on given with the following permutation:

However, since 4 has a unique NDF vector in , it is mapped to itself by all automorphisms of . Therefore if an automorphism wants to switches 1 and 9, it should map isomorphically the path onto the path , which is impossible. Thus, there is no non-trivial automorphism on graph .

| Nodes in | Nodes in | The Associated NDF Vector |

| 1, 9 | 1, 9 | (0, 1, 0) |

| 2, 8 | 2, 8 | (1, 1, 0) |

| 3, 7 | 6, 7 | (0, 2, 0) |

| 4, 6 | 3, 5 | (0, 1, 1) |

| 5 | 4 | (1, 2, 0) |

| 10 | 10 | (0, 0, 1) |

Example 3.9.

In this example, we apply the vanilla NDF of the graph shown in Figure 1 to prove that the only non-trivial automorphism of is the permutation switching only nodes and . According to the vanilla NDF of nodes of listed in Table 3, we have three NDF-equivalence classes with more than one element. Regarding our discussion at the beginning of this subsection, these are the only chances for having a non-trivial automorphism on . Again using NDF vectors, we try to eliminate most of these chances. Nodes and both have only one neighbor of degree 2, i.e. respectively, and . If an automorphism switches and , then it must switch and too, which is impossible because . Similar arguments show that no automorphism can switch the pairs (, ), (, ) and (, ). Therefore, the only possibility for an automorphism on graph is the permutation on the set of nodes which switches nodes and . And one easily checks that this permutation is actually an automorphism on .

Color refinement or 1-dimensional Weisfeiler-Leman algorithm is an iterative algorithm to partition the set of nodes of undirected graphs and is a popular algorithm for isomorphism testing, see [3] for an introduction to the subject. To show the relationship between NDF-equivalence and color refinement, we only need to recall its definition. At the start of this algorithm, a single color is associated to all nodes. In each round (iteration) of color refinement, two nodes and with the same color are assigned different colors only if there is a color, say , such that the number of neighbors of with color is different than the number of neighbors of with color . So, after the first round of color refinement the set of nodes of the graph are partitioned according to their degrees, that is and have the same color if and only if they have the same degree. After the second round, two nodes and have the same color if and only if for every occurring degree, say , the numbers of neighbors of of degree is equal to the numbers of neighbors of of degree . This is exactly the NDF-partition.

We shall come back to this subject in Subsection 5.2, where we show how RNDFC-equivalence (a version of higher order NDF-equivalence) performs better than color refinement in certain examples.

4. NDF Embeddings for Dynamic Graphs

To address the problems arising from the abundance of possible degrees in big graphs and the ever-changing nature of dynamic graphs appearing in real world applications, our main approach is to replace the degrees list appearing in the definition of the minimal NDF, see Definition 3.2, with a list of intervals and then compute the NDF vector representation of nodes with respect to the intervals in this list. To this work, we have to impose three necessary conditions:

-

(1)

The union of intervals must cover all natural numbers.

-

(2)

The intervals must be mutually disjoint, that is the intersection of every two different intervals must be empty.

-

(3)

The list of starting numbers of intervals must be in ascending order.

The first two conditions mean that the set of intervals must be a partition of the set of natural numbers. The third condition determines the order of components of the NDF vector. These conditions imply that the first interval must start with 1 and the last interval has to be infinite (with no end). Furthermore, since we intend to use the NDF vectors as features in machine learning algorithms, we impose a rather non-mandatory condition too:

-

(4)

The last interval must include the maximum degree of nodes in the graph.

Condition (4) guarantees that the last entry of the NDF vector of (at least) one node is non-zero. Otherwise, the last entry would be redundant. One may wish to impose a stronger version of Condition (4) to ensure that every interval in the intervals list contains some occurring degree, but this can be a requirement that is very hard to satisfy in general. We formalize requirements (1)-(4) in the following definition:

Definition 4.1.

Let be an undirected graph.

-

(i)

An intervals list for graph can be realized by a finite sequence of natural numbers such that , where is the maximum degree of nodes in . We call such a sequence the list of starting points of the intervals list. Then the intervals list is the ordered set , where for and .

-

(ii)

To any given intervals list for graph as above, we associate a vector representation called the dynamic neighbors degree frequency embedding of nodes of defined as where for , is the number of neighbors of node whose degrees lie in .

What we are referring to as an “interval” here can also be called a “range”. However we prefer the word interval because each interval is indeed a usual interval in real numbers restricted to natural numbers. Although the definition of a dynamic NDF embedding depends on the intervals list employed in its construction, for the sake of simplicity, we omit the intervals list from our notation. When it is important one may want to emphasis this dependency by denoting the dynamic NDF by .

It is clear from the above definition that the dimension of vectors obtained in dynamic NDF equals the number of intervals in the chosen intervals list. In the following example, we explain that our definition of dynamic NDFs has enough flexibility to cover dynamic versions of vanilla and minimal NDF as well as degree centrality.

Example 4.2.

In this example, let be an undirected graph.

-

(i)

Let denote the maximum degree of nodes in . Then the intervals list constructed from the list of starting points gives rise to the dynamic version of vanilla NDF. For future reference, we call the vanilla intervals list.

-

(ii)

As Definition 3.2, let be the ascending list of all occurring degrees of nodes in . If , we change it to 1. Then it is a list of starting points and defines an intervals list, which we call it the minimal intervals list. The dynamic NDF associated to this intervals list is the dynamic version of minimal NDF.

-

(iii)

The singleton can be considered as a list of starting points. The intervals list associated to it consists of only one interval which is the whole natural numbers and the dynamic NDF defined on top of this intervals list is simply the assignment of degree to each node of the graph. Up to some normalizing factor, this one dimensional vector representation of nodes is the same as degree centrality on .

The above example demonstrates the relationship between dynamic NDF embeddings and previously defined NDF embeddings. In the following definition, we present two general methods to construct intervals lists. Based on the intuition gained from network science, we assume that most nodes of a graph have low degrees and substantially fewer nodes have high degrees. This suggests that we devote more intervals to lower degrees and fewer intervals to higher degrees. On the other hand, nodes with higher degrees appear to be the neighbors of much greater number of nodes, and so it is equally important to differentiate between high degree nodes too. We also note that the distance between higher degrees are much bigger than the distance between lower degrees, which suggests smaller intervals around lower degrees and bigger intervals around higher degrees. Based on these observations, we propose an “adjustable design” for partitioning the set of natural numbers into intervals lists. Therefore, we include some parameters in our construction to create more flexibility and control on the design of intervals lists.

Definition 4.3.

Let be an undirected graph and let be the maximum degree of nodes in . We set a maximum length for intervals.

-

(i)

As the first approach, we try to partition the set of natural numbers into intervals of fix length (besides the last interval which has to be infinite!). Due to the possible remainder, we let the potentially smaller interval to be the first interval. Therefore our algorithm for choosing a “uniform” list of starting points of the intervals list would be as follows:

Input: Integers and// : the maximum degree of nodes in// : the maximum length of intervalsOutput: A list of starting points// Assign an empty list towhile do.append()if then.append()breakend ifend while// Reverse the order of.reverse()returnAlgorithm 1 Generating a uniform list of starting points -

(ii)

As the second approach, we try to generate an intervals list such that the lengths of the intervals are increasing. In Algorithm 2, we set a starting length and a maximum length for intervals. Then in each step, we increase the length of intervals by the ratio until we reach the maximum length.

Input: Integers and a real number// : the maximum degree of nodes in// : the maximum length of intervals// : the starting length of intervals// : the ratio of the length of intervalsOutput: A list of starting pointswhile dominint.append()end whileif then.pop()end ifreturnAlgorithm 2 Generating an increasing list of starting points

One notes that if we set (the starting length being equal to maximum length) in Algorithm 2, we do not necessarily get the same intervals list as obtained in Algorithm 1 with the same maximum length, even though there is no increase in the length of intervals in both cases. In this scenario, the intervals list obtained from Algorithm 2 consists of intervals of fixed length except the last interval that contains the maximum degree of nodes and has to be infinite. However the intervals list obtained from Algorithm 1 consists of intervals of length and the last interval which starts from the maximum degree of nodes and possibly the first interval that might have length less than .

Example 4.4.

Let be the graph shown in Figure 1. We define two intervals lists as follows:

The intervals list can be generated by Algorithm 2 by setting , any integer greater than or equal to and any real number greater than or equal to . Similarly, can be generated by Algorithm 2 by setting , and any real number greater than or equal to . The dynamic NDF embeddings of nodes of with respect to and are shown in Table 5. We notice that by reducing the dimension of NDF embedding from 5 (for vanilla NDF) to 3 (for dynamic NDF w.r.t ) or even to 2 (for dynamic NDF w.r.t ), we do not lose a whole lot of precision. One can expect this phenomenon to occur more often and more notably in graphs whose maximum degrees are very large.

| Node(s) | Dynamic NDF w.r.t | Node(s) | Dynamic NDF w.r.t |

|---|---|---|---|

| A | (2, 3) | A | (1, 2, 2) |

| D | (2, 2) | D | (2, 2, 0) |

| K | (3, 1) | K | (1, 3, 0) |

| Y, H | (1, 3) | Y, H | (0, 2, 2) |

| B, I | (0, 3) | B | (0, 1, 2) |

| I | (0, 0, 3) | ||

| E | (1, 2) | E | (0, 2, 1) |

| C, F, M, N | (1, 1) | C, M, N | (0, 1, 1) |

| F | (0, 2, 0) | ||

| L | (0, 2) | L | (0, 0, 2) |

| J, P, Q, R | (0, 1) | J, P, Q, R | (0, 0, 1) |

The above algorithms demonstrate only two possible ways of generating starting points for intervals lists, and consequently two ways of defining reasonable dynamic NDFs on graphs.

Remark 4.5.

The only feature of the graph used in these methods is the maximum degree of nodes in the graph and the rest of parameters are set by the user. The best way of defining an intervals list for a specific graph depends on the distribution of degrees of nodes in natural numbers. Therefore, for a given graph with a particular structure, in order to define the most relevant dynamic NDF embedding, one has to incorporate more detailed information about the set of occurring degrees. There are also situations that we need to define a common intervals list for two or more graphs. In these situations, we choose an auxiliary point less than the maximum degree (call it the “last point”). Then, we use one of the above algorithms to choose a list of starting points up to the last point. To cover the rest of degrees, we choose a complementary list of points according to the task in hand and the set of degrees of nodes. The concatenation of these two lists of starting points yields the final list of starting points. For example, assume we have a graph whose set of occurring degrees up to 100 can be perfectly covered by Algorithm 2 and it has only 5 nodes whose degrees are greater than 100, say . We let the last point be 100 and choose the primary list of starting points using Algorithm 2. We heuristically set the complementary list of points to be . Then the concatenation of these two lists is our final list of starting points. While choosing the complementary list , we didn’t care about any particular growth rate for the lengths of intervals. Instead, We tried to impose two things: First, to avoid any empty interval, an interval which contains none of the degrees in the set . Secondly, intervals should contain some margin around the degrees they contain, (e.g. the first interval contains some numbers less than 108 and some numbers greater than 111). We use this method several times in this article to build mixed lists of starting points, for instance see Examples 6.4, 8.2 and 8.3.

We note that by choosing appropriate values for the parameters of the above algorithms, we can control the dimension of the vector representation obtained from dynamic NDF embeddings. For instance, two and three dimensional dynamic NDF embeddings of the graph shown in Figure 1 are discussed in Example 4.4. To some extent, this resolves the “curse of dimensionality” explained after Definition 3.1. But aggressively reducing the dimension of the embedding by this method imposes a coarse aggregation among nodes, because all neighbors of a node whose degrees lie in an interval are considered the same type. An alternative way of reducing the dimension of the embedding would be to apply a deep neural network algorithm equipped with some appropriate objective function on the vectors obtained from dynamic NDFs. Then the number of neurons in the last layer (before applying the objective function) determines the dimension of the final embedding. Since the objective function should be designed according to the downstream tasks, we postpone the detailed analysis of these types of the compositions of neural networks and NDF embeddings to future works.

Dynamic NDF embeddings employ some sort of aggregation while counting the degree frequency of neighbors. Therefore, some precision has been lost and we cannot repeat the content of Subsection 3.1 for dynamic NDFs. However, it is still true that the degree of a node equals the sum of all entries of its dynamic NDF vector, i.e. for all nodes in the graph.

The content of Subsection 3.2 can be repeated for dynamic embeddings as well, although it is obvious that dynamic NDF embeddings have less expressive power than vanilla and minimal NDF embeddings, see Example 4.4. To some extent, the expressive power of dynamic NDF embeddings can be increased by considering higher order NDF embeddings, which is the subject of the next section.

5. Higher Order NDF Embeddings as Matrix Representations of Nodes

So far for NDF embeddings, we have been considering the degree frequency of neighbors of a node and driving a vector representation from it. For higher order generalizations of NDF embeddings, we have two natural choices to drive vectors from circles around the node:

In the first approach, we consider the previously defined NDF vector as the radius zero NDF. That is, for a node , we computed neighbors degree frequency of elements of , the circle of radius zero centered at . So naturally for , the radius NDF vector would be an aggregation of neighbors degree frequency of elements of . The aggregation method we adopt here is simply the mean of NDF vectors of elements of , but one can try other aggregation methods, depending on downstream tasks. More advanced option would be to learn the aggregation method, perhaps, similar to what has been done in [5], but in the level of each circle. Computing mean amounts to dividing the sum of NDF vectors of elements of by the size of , i.e. . Dividing by is necessary, because it makes the set of NDF vectors associated to different radiuses more homogeneous and comparable to each other. Whenever is empty for some , the radius NDF vector is simply defined to be the zero vector.

In the second approach, we consider the previously defined NDF vector as the radius one degree frequency (DF). The justification is that the NDF vector of a node is computed by counting the “degree frequency” of elements of . Therefore for an arbitrary natural number , the order generalization of the NDF vector could be the degree frequency of elements of . This method produces a sequence of vectors with sharply varying magnitude and thus not comparable to each other, for instance see Examples 5.2(iii) below. In order to normalize the degree frequency vectors in different radiuses, we divide each nonzero DF vector of radius by . Again when is empty (i.e. ), the DF vector of radius is defined to be the zero vector. One checks that is exactly the -norm of the DF vector of radius centered at node , so this method of normalization is called the -normalization. It converts every nonzero DF vector (including the previously defined NDF vector) into a probability vector. In this approach, one should also note that we use the phrase “degree frequency” (DF) in lieu of “neighbors degree frequency” (NDF), because the degree frequency of very elements of circles are computed, not the ones of their neighbors!

Finally, these methods of extending NDF vector representations even without dividing by the size of circles (normalization) can be useful in certain topics, so we record both versions for later references. The formal definitions of these generalizations of NDF embeddings are as follows:

Definition 5.1.

Let be an undirected graph and let be an intervals list for .

-

(i)

The order matrix of neighbors degree frequency of circles (NDFC matrix) with respect to the intervals list associates an matrix to each node such that the -th row of is computed as follows:

and the zero vector of dimension when .

The order RNDFC matrix, the raw version of NDFC matrix, of a node is denoted by and is defined similarly except that the -th row of is not divided by .

-

(ii)

The order matrix of circles degree frequency (CDF matrix) with respect to the intervals list assigns an matrix to each node such that when , the -th entry of is the number of nodes in whose degrees belong to divided by , that is

When , the entire -th row of is defined to be the zero vector of dimension .

The order RCDF matrix, the raw version of the CDF matrix, of a node is denoted by and is defined similarly except that the -th entry of is not divided by .

One notes that the first row of NDFC (respectively RNDFC and RCDF) matrix is the same as the dynamic NDF vector. However the first row of CDF matrix is the -normalized version of the dynamic NDF vector, i.e. where is the -norm. In the following examples, we highlight some of the features of these matrix representations of nodes of a graph:

Examples 5.2.

Let be the graph in Figure 1. Let be the vanilla intervals list of and let be as defined in Example 4.4. In the following, we say a matrix representation separates two nodes if its values on these nodes are different. We round all float numbers up to three decimal digits.

-

(i)

The order 3 NDFC matrix w.r.t. for nodes and are as follows:

Although the vanilla NDF vectors of and are the same, others rows of the NDFC matrix of these nodes are different. In fact, even the order 1 NDFC matrix representation of nodes of w.r.t. can separate all nodes of , except and for which there is an automorphism of that flips them, so they must have identical neighborhood statistics. This emphasizes the fact that higher order NDF representations of nodes have more expressive power, namely they are better at revealing the differences between neighborhoods of the nodes of a graph. This is in line with our discussion in Subsection 3.2 and will be explained more in Subsection 5.2.

-

(ii)

The order 5 NDFC matrix w.r.t. for nodes and are as follows::

Although nodes are have different vanilla NDF vectors, the first two rows of their NDFC matrices w.r.t. are the same. In fact, it take the order 2 NDFC matrix representation of nodes of w.r.t. to separate all nodes of , (of course, except and ).

-

(iii)

The order 3 CDF and RCDF matrices w.r.t. of nodes and are as follows:

One can easily check that the CDF matrix representation has to be of order at least 3 to be able to separate the nodes of (except and ), while order 2 is enough for RCDF to do the same task. This example clearly shows that RCDF can be more efficient than CDF in separating the nodes of .

To use our matrix representations of nodes in our exemplary machine learning algorithms in next sections, we shall apply certain aggregations to turn them into 1-dimensional arrays. However the present 2-dimensional array structure of these representations offers certain secondary applications.

5.1. Shapes of neighborhoods of nodes

The matrix representations of nodes as 2-dimensional arrays can be used to produce a simple visualization of the neighborhoods of nodes in a graph. For this purpose, we use only NDFC matrices because of several reasons: (1) In NDFC matrices, all rows are NDF vectors or their means (aggregations). So, there is a meaningful relationship between different rows. (2) The rows of RNDFC and RCDF matrices are not comparable to each other and heavily populated rows suppress less populated rows. So one cannot compare them by looking at the visualization. For example, consider in Part (iii) of Example 5.2, there is no obvious and meaningful way to compare the second row with the first row. (3) The row-by-row -normalization of CDF matrices might suppress some important values. For instance, consider in Part (iii) of Example 5.2. In the matrix , we observe that there are two nodes in whose degrees are greater than 2, while only one node in has a degree greater than 2. However, in , the entry is less than the entry , which is clearly counter-intuitive.

We slightly modify NDFC matrices to obtain a more extensive visualization. With the notation of Definition 5.1, for every node in the graph, we define a vector of dimension whose all entries are zero except the -th entry which is 1 and is the index such that the degree of belongs to . We call this vector the degree vector of with respect to the intervals list . We add this vector as the first row to the NDFC matrix and shift other rows below. In this way, we can view the degree of the node as the NDF vector of an imaginary circle of radius -1 around each node and take it into account while visualizing other NDF vectors of circles. Let us call the matrix representation obtained in this way the visual NDFC (or VNDFC) matrix representation of nodes of the graph and denote the order VNDFC matrix representation of a node by .

Example 5.3.

We choose 7 nodes , , , , , , and of the graph in Figure 1 and use the Python package matplotlib to draw the (gray) color map of the order 7 VNDFC matrix representations of these nodes w.r.t. the vanilla intervals list. The order 7 is chosen because the diameter of is 7 and all 9 rows of the are non-zero. Figure 2 illustrates the visualizations of these nodes. It is worth noting that since nodes and are connected to the rest of graph via nodes and , respectively, they have very similar visualizations to the ones of and , respectively. One observes less similarity between the visualizations of nodes and , because only connects to the left part of the graph. One also notices some similarity between visualizations of nodes and , which is not surprising regarding the similarity of their neighborhoods in .

The representation of nodes by 2-dimensional arrays and the above visualizations suggest the idea that one can feed the higher order NDF matrices into convolutional neural network models and extract some patterns. However, unfortunately, there is an important reason that prevents two dimensional convolutions to be able to detect and reveal meaningful patterns in these matrices. The reason is that the rows and columns of these matrices have totally different natures, and so unlike images, the patterns in higher order NDF matrices are not equivariant under most two dimensional geometric transformations. That being said, inspired by the observations made in Example 5.3, there are some hopes for one dimensional convolutional operations to be useful in detecting certain patterns among these matrices. Specifically, we expect the vertical (column-wise) convolution operations be able to detect hidden patterns in higher order NDF matrices. Further evidences for this idea are provided by the success of applying certain column-wise aggregations to the RCDF matrix representations of nodes in Section 7. Example 6.2 shows an instance of such a convolutional neural networks, though it has less success than the other neural network that consists of fully connected linear layers.

5.2. RNDFC-equivalence vs color refinement

We have four options for higher order generalizations of NDF-equivalence; equivalences based on NDFC, RNDFC, CDF, and RCDF matrix representations of nodes. During normalization of rows in NDFC and CDF matrices, we loose some information, so raw versions of these matrix representations, i.e. RNDFC and RCDF, work better for separating the nodes of a graph. On the other hand, since RNDFC matrices are computed based on neighbors degree frequency, they are more similar to color refinement and heuristically better than RCDF matrices, which are computed based on degree frequency. Therefore we work only with vanilla RNDFC-equivalence of graphs and nodes here. The definition of RNDFC-equivalence is the same as Definition 3.7. Also, one checks that isomorphism of graphs implies RNDFC-equivalence.

Here, we content ourselves with two examples in which RNDFC-equivalence can detect two non-isomorphic graphs while color refinement cannot and postpone the detailed study of RNDFC-equivalence as a tool for graph isomorphism testing to our future works.

Example 5.4.

Let be the cycle graph of length 6 and let be the disjoint union of two cycle graphs of length 3. They are both 2-regular graphs, and so color refinement does not change the colors of nodes. Therefore it cannot tell the difference between these graphs. On the other hand the diameter of is 1. This implies that only first two rows of RNDFC-matrices of its nodes are non-zero. However, the diameter of is 3 and so there are 4 non-zero rows in the order RNDFC matrices of nodes of for all . This clearly shows that these graph are not RNDFC-equivalent and so they are not isomorphic.

In the above example, we didn’t have to even compute RNDFC matrices and our argument was based on the diameter of the graphs. This shows the advantage of RNCDF-equivalence versus color refinement. But the main superiority of RNCDF-equivalence over color refinement is based on the fact that it labels nodes by means of concrete matrices. Therefore in cases that the color refinement and RNCDF-equivalence both partition the set of nodes similarly, the matrices associated to each equivalence class might tell the difference between two graphs.

Example 5.5.

Let and be the graphs illustrated in Figure 3. Because of vertical and horizontal symmetries in both graphs, the color refinement partitions them similarly as follows:

So color refinement cannot tell they are not isomorphic. Again because of those symmetries, the RNDFC-partitions of these graphs are the same too. However, the RNDFC matrices associated to classes and are different in each graph. To see this, we list the order 5 RNDFC matrices (w.r.t. vanilla intervals list) of each RNDFC-equivalence class in graphs and :

-

()

-

()

Therefore, and are not RNDFC-equivalent. Consequently they cannot be isomorphic. In fact, order 3 RNDFC matrices were enough to prove this.

6. Learning PageRank

In this section, we present several simple examples to show how the higher order NDF matrix representations can be fed as feature vectors into simple feedforward neural networks consisting of only fully connected linear layers to learn PageRanks of graphs. We run these experiments/examples on two undirected graphs appearing in social networks: The first graph is the network of facebook pages of 14113 companies. The second graph is the network of 27917 facebook pages of media. The datasets of these graphs are available in [9]. They are big enough to contain a sufficient number of nodes for training deep learning models and small enough to make it possible to test each sensible scenario in less than an hour on an ordinary PC. Along the way, we also examine various options for feature engineering and the architecture of the models. After training our model on the first graph, we modify the graph slightly and observe that the trained model still has a good predictive power on the modified version of the graph. This proves that our method is capable of handling dynamic graphs. In order to show that our method of learning PageRank is inductive, we next consider two random graphs generated by the Barabási–Albert algorithm with different seeds. We train our model on one of the graphs and show it is able to predict the PageRank of the other graph as well.

Example 6.1.

Let be the graph of facebook pages of companies. The nodes represent the pages and edges represent the mutual likes. It has 14113 nodes and average degrees of nodes is 7. One finds more specifics in the webpage of the graph [9]. To produce an intervals list, we use algorithm 2 with the parameters , , and . For the higher order NDF matrix representation, we choose the order 5 NDFC matrices associated to this intervals list. The result is 14113 matrices of size indexed by nodes of . We flatten these matrices as vectors with 102 entries and the result is the set of feature vectors for training/testing of our model. For the target variable, we compute the PageRanks of nodes using the Python package NetworkX, [4]. In order to establish a rough balance between target variables and feature vectors, we multiply all PageRanks of nodes by 10000. The dataset is shuffled and divided into train/test sets, 10000 nodes for training and the rest of 4113 nodes for evaluating the model success. The following feedforward neural network model is used to learn the PageRank of the graph:

Our objective function is the Mean Square Error function and we apply Adam optimizer with the learning rate 0.001. In each epoch, the training dataset is shuffled again and divided into 25 batches of size 400, so 25 rounds of gradient decent is performed in each epoch. After training this model for 2000 epochs, our model is able to predict the PageRanks of nodes in the test set with around 90% accuracy (more precisely; 9.651% inaccuracy on average). One should note that due to some random factors in our algorithm, like shuffling the data and parameter initialization, retraining the model may result slightly different accuracy rate. After saving this model, we randomly remove 500 edges from and add 500 new edges in such a way the modified graph stays connected. We apply the same feature engineering on the modified graph and compute the PageRanks of its nodes using NetworkX as targets. Our saved model is able to predict the PageRanks of the nodes of the modified graph with an average inaccuracy rate of 9.783%.

We have also run an experiment to train a neural network model equipped with convolutional layers to learn the PageRanks of nodes. Although the final accuracy rate is worse than the above example, we present this experiment here as an example for typical convolution neural networks applied on higher NDF matrices, as explained at the end of Subsection 5.1.

Example 6.2.

Every little detail of this experiment is similar to Example 6.1, except that we do not reshape the matrices as vectors and the model starts with two convolution layers and a pooling is applied after the first convolution layer. Afterwards we flatten the data to continue with the fully connected linear layers. The size of the kernels of convolution layers are and , respectively. The specifics of these layers are listed in Listing 2.

The trained model predicts the PageRank of nodes in the test set with around 64% accuracy on average.

In the following example, we discuss our experiments concerning deep learning models for PageRank of the network of facebook pages of media:

Example 6.3.

Let be the graph of facebook pages of media. The nodes represent the pages and edges represent the mutual likes. It has 27917 nodes and average degrees of nodes is 14. One finds more specifics in the webpage of the graph [9]. To build an intervals list, we apply algorithm 2 with the parameters , , and . For the higher order NDF, we take the order 5 NDFC matrices w.r.t. to this intervals list. The result is matrices of size , and after flattening them the feature vectors have 138 entries. Again the PageRanks of nodes are the target variables. To balance the target variables with the feature vectors, this time, we multiply PageRanks by 24000. The dataset is shuffled and divided into 20000 nodes for training and 7917 nodes for evaluating the model. The feedforward neural network model is the same as the model described in Example 6.1. Again we train the model for 2000 epochs and the average inaccuracy of the prediction on the test set is 12.438%. Obviously, there are some random processes in our training algorithm such as shuffling and splitting the data, initialization of parameters, etc. To make sure that these elements of randomness do not undermine the promise of our methods, we repeat the training, and in the second try, the average inaccuracy on the test set is 13.551%. We have also trained the same model with the order 6 CDF matrix representation in lieu of the order 5 NDFC matrix representation and the trained model had the average inaccuracy of 28.44% on test set. This suggests that the NDFC matrix representation is a better choice than the CDF matrix representation for learning PageRank.

In order to demonstrate that our method of learning PageRank based on the higher order NDF matrix representation is inductive, we run another experiment in the following example.

Example 6.4.

We use Python package NetworkX to build two random graphs with 20000 nodes. The method we apply to generate these random graphs is based on the preferential attachment and is called the dual Barabási–Albert algorithm, see [7, 4] for details. It has the following parameters:

-

•

: The number of nodes. We set for both graphs.

-

•

: A probability, i.e. . We set for both graphs.

-

•

: The number of edges to link each new node to existing nodes with probability . We set for both graphs.

-

•

: The number of edges to link each new node to existing nodes with probability . We set for both graphs.

-

•

: The seed indicating the random number generation state. We set and for the first graph and the second graph, respectively.

-

•

: The initial graph to start the preferential attachment process. This parameter is set “None” for both graphs.

The graphs we obtain both have around 40000 edges with an average degree of around 4. However the list of degrees occurring in these graphs are very different especially for higher degrees, For instance the maximum degree of the first graph is 280, while it is 314 for the second graph. Therefore to choose a common list of starting points, we have to use the method explained in Remark 4.5, and our choice for the list of starting points is as follows:

We compute the order 5 NDFC matrix representations of both graphs w.r.t. this list of starting points and obtain a matrix of size for each node of these graphs. We reshape these matrices as 1-dimensional arrays with 126 entries and consider them as the feature vectors. As for targets, we compute the PageRanks of nodes of these graphs. To balance the target variables with the feature vectors, we multiply PageRanks by 1000. Afterwards, we train the same feedforward neural network model described in Example 6.1 with 10000 randomly chosen feature vectors of the first graph as the feature set and the PageRank of the corresponding nodes as the target set. The average error of the trained model on the rest of 10000 nodes is 8.296%. Then, we apply the trained model to the whole dataset of the second graph. The average error of predicting PageRanks of nodes of the second graph is 8.926%.

This example shows that the “local” feature vectors obtained from the order 5 NDFC matrix representation of the first graph contain enough statistical information about the “global” structure of the graph to be useful for learning and predicting not only the PageRanks of nodes in the first graph, but also the PageRanks of nodes in the second graph. In other words, the above example confirms that our method is a local-to-global strategy. It also clearly shows that our method can learn PageRank using the data of a known graph, and then predict the PageRank of another graph, as long as these graphs share some statistical similarities. Hence our method of learning PageRank is inductive. We shall return to the subject of leaning of PageRank in Section 8, after adopting a modification inspired by directed graphs.

7. Aggregations and learning the closeness centrality

The operation of concatenating rows (resp. columns) is one of the most basic methods of aggregation on entries of a matrix which amounts to reshaping the matrix (resp. the transpose of the matrix) into a 1-dimensional array. Some of the other basic aggregation methods are minimum, maximum, summation, mean and median which can be performed column-wise, row-wise or on all entries of the matrix. However since the order of rows in our matrix representations comes from the Breadth-First Search (or equivalently, the radius of the circles around nodes), we have a natural order in each column. On the other hand, the order of columns comes from the natural increasing order of starting points of the underlying intervals list, so there is a meaningful order between entries of each row too. Exploiting these vertical and horizontal orderings gives us a pivot and more creative opportunities for feature engineering based on these matrix representations. The following definition which was inspired by our previous constructions in Section 2 is only an instance of new types of possible aggregations:

Definition 7.1.

Let be a graph and let be a matrix representation of nodes of as matrices. For any given sequence of parameters of real numbers, we define a vector representation as follows:

In other words, , where is the vector (or the row matrix) . We call the parametric aggregation of with respect to .

More precisely, the above aggregation is a column-wise parametric aggregation. Obviously, we can repeat this definition for row-wise parametric aggregation which replaces each row by the weighted sum of its elements. Even more generally, one can consider an matrix of parameters and perform a 2-dimensional aggregation of the given matrix representation or apply any other pooling technique to obtain a lower dimensional (matrix or vector) representation of nodes. Of course, they all depend on the prior knowledge about the structure of the graph and the downstream task in hand. Inspired by -centrality function discussed in Section 2, in the following definition, we introduce a special case of parametric aggregation for our matrix representations of nodes:

Definition 7.2.

Let and be as Definition 7.1. For a given real number , the -aggregation of the matrix representation is the parametric aggregation of with respect to the parameter sequence . and it is denoted simply by .