Linear Time Kernel Matrix Approximation via Hyperspherical Harmonics

Abstract

We propose a new technique for constructing low-rank approximations of matrices that arise in kernel methods for machine learning. Our approach pairs a novel automatically constructed analytic expansion of the underlying kernel function with a data-dependent compression step to further optimize the approximation. This procedure works in linear time and is applicable to any isotropic kernel. Moreover, our method accepts the desired error tolerance as input, in contrast to prevalent methods which accept the rank as input. Experimental results show our approach compares favorably to the commonly used Nyström method with respect to both accuracy for a given rank and computational time for a given accuracy across a variety of kernels, dimensions, and datasets. Notably, in many of these problem settings our approach produces near-optimal low-rank approximations. We provide an efficient open-source implementation of our new technique to complement our theoretical developments and experimental results.

1 Introduction

Kernel methods are a popular class of techniques in machine learning problems. They typically involve the implicit definition of an infinite dimensional feature space using an explicitly defined kernel function that describes similarity between data points. Examples of techniques based around the use of kernels include kernel support vector machines, kernel ridge regression, spectral clustering, kernel PCA, and Gaussian process regression (Shawe-Taylor et al., 2004; Scholkopf & Smola, 2018). In practice, it is important that an appropriate kernel be chosen for the given task and a wealth of literature exists on proper choices of kernel functions and their hyperparameters.

In this work, our focus is on computational problems that generically arise in the use of kernel methods. Let be an isotropic kernel function and let be a set of points in . The associated kernel matrix is defined as

Simply populating and storing the kernel matrix requires time and space which grow quadratically with the size of the dataset. Typically, kernel methods will also require operations on the kernel matrix which naïvely take cubic time, including solving linear systems and/or computing eigendecompositions. For even modestly sized datasets standard approaches are infeasible. This paper introduces a novel technique for constructing an approximation to the kernel matrix in linear time that subsequently enables the computation of matrix vector products with in linear time. This procedure can be used to, for example, substantially accelerate the solution of linear systems via iterative methods (Greenbaum, 1997; Shewchuk, 1994).

Accelerating computations involving kernel matrices has been the subject of extensive research. One family of algorithms uses inducing points as a means by which to approximately represent the kernel matrix in an easier-to-use form. One example is the Nyström method (Nyström, 1930) which uses kernel interactions between data points and a set of inducing points as basis functions for a low-rank approximation of the matrix. For example, if is a subset of datapoints, then the Nyström approximation is given by

| (1) |

This low rank approximation allows for accelerated matrix-vector multiplies within iterative schemes as well as the opportunity to use the Sherman-Morrison-Woodbury formula in settings where the kernel matrix has a diagonal matrix added (such as for regularization).

Other techniques leverage analytic properties of the kernel function itself to arrive at more manageable forms of the kernel matrix. For example, the Fast Multipole Method and Fast Gauss Transform (Greengard & Rokhlin, 1987; Greengard & Strain, 1991; Yang et al., 2003) both use truncated expansions of the kernel function to compute low-rank matrix representations without ever having to examine the original kernel matrix. The method of random Fourier features (Rahimi et al., 2007) uses a representation of the kernel as the expectation of a random function to approximate the kernel matrix via a Monte-Carlo scheme. Recent work (Solin & Särkkä, 2020) leverages the spectral density of the kernel to compute coefficients in a harmonic expansion. Whereas the techniques of the previous paragraph use kernel interactions among data points without explicitly analyzing the kernel function itself, these analytically motivated methods use only properties of the kernel as the basis of their approximation. While data-independent approximations benefit from being easy to update with new datapoints, they often yield higher-rank representations than necessary. In addition, they are often kernel-targeted, requiring knowledge of a known expansion for a given kernel, or the kernel’s spectral density.

1.1 Contributions

We present a new technique which leverages both the analytic properties of the underlying kernel (without being targeted to any specific kernel), and additional data-dependent compression to generate a low-rank approximation to the kernel matrix. The time and space cost of the approximation are linear in the size of the dataset. The main baseline technique with which we compare our results is the Nyström method for high-accuracy kernel-independent approximations. Our experiments show favorable comparison with the Nyström method for a host of kernels, dimensions, datasets, and error tolerances. In fact, we show a number of settings in which our method achieves a nearly-optimal tradeoff between rank and error, while still taking less time than the Nyström method.

2 Prior Work

Given a kernel function and dataset there are several common techniques for devising a fast matrix vector product with the associated kernel matrix One broad class of methods computes low-rank approximations to as when applicable. The SVD is the optimal choice if accuracy for a given rank is the only consideration. For sufficiently small scale problems this may be feasible. However, for larger problems computing the SVD becomes prohibitively expensive since, at a minimum, methods targeting the top singular values/vectors require repeated matrix vector products with —the very problem we want to address.

The Nyström method (Nyström, 1930) is a general approach to this problem that is much more computationally efficient at the expense of accuracy for a fixed rank approximation (i.e., it is suboptimal relative to the SVD). The most commonly used variants within machine learning are sampling based methods that yield low-rank approximations of as in (1). 111The Nyström method was originally introduced within the integral equations community (Nyström, 1930) and subsequently found use within machine learning (Williams & Seeger, 2001; Gittens & Mahoney, 2013). The key complexities of this methods are: (1) selecting an appropriate rank for the approximation and (2) selecting the subset In principle, the theory of pseudoskeleton approximations (Goreinov et al., 1997) provides generic upper bounds for the rank given a desired accuracy and there is significant work within the machine learning and theoretical computer science communities to sharply characterize the best way to select (see, e.g., (Musco & Musco, 2017; Gittens & Mahoney, 2013; Drineas et al., 2005)). Another broad class of methods that fall into this category are so-called random features methods such as random Fourier features (Rahimi et al., 2007). In this setting, the low-rank factors are produced by approximating the feature expansion related to a given kernel. While these methods are quite efficient, they often struggle to achieve high accuracy without taking the approximation to be of excessively large rank. For a systematic comparison of these methods see (Yang et al., 2012).

Another set of methods broadens these techniques to cases where the kernel matrix may not be globally low rank, but it exhibits so-called rank-structure. This is often captured using domain decomposition schemes which can leverage analytic expansions of kernel functions as in (Greengard & Rokhlin, 1987; Ryan et al., 2021), or algebraic data-dependent methods as in (Ying et al., 2004; Ying, 2006; Si et al., 2017). These methods typically require the ability to compute low-rank approximations of subblocks of —our present work solves this problem making it complementary to these schemes rather than in direct competition.

Another class of methods for solving this problem represent as a product where is a set of points chosen such that admits fast matrix vector products and is an appropriately chosen interpolation/aggregation matrix. The most common set of methods in this class let be a regular grid over the domain of interest and forcing to be sparse (i.e., using local interpolation). In this setting can be efficiently applied using the fast Fourier transform. This form of method arose as the so-called pre-corrected FFT (Phillips & White, 1994; White et al., 1994) and gained popularity in the machine learning community as structured kernel interpolation (SKI) (Wilson & Nickisch, 2015) (in this context, it can be seen as an acceleration of inducing point methods (Snelson & Ghahramani, 2005)). While potentially highly efficient in moderate dimension if sparse interpolation and aggregation operators are used (i.e., is very sparse), as with sampling based Nyström methods they do not easily scale to higher accuracy regimes.

3 Methods

3.1 Overview

Here we derive our analytic expansion and the subsequent additional compression step which comprise our new algorithm, which we refer to as a Harmonic Decomposition Factorization (HDF). We will begin by showing why an analytic expansion can lead to a low-rank approximation of a kernel matrix. Then we will show how a Chebyshev expansion of the kernel function can be expanded and rearranged into an expansion in hyperspherical harmonics. From there, our additional compression step examines the functions which form the coefficients in the harmonic expansion—when evaluated on the data points, these functions can be further reduced in number, thus reducing the overall rank of the factorization. Finally we present error and complexity analyses for the factorization.

3.2 Methodology

We achieve a linear time low-rank approximation by analyzing the kernel’s functional form rather than algebraically operating on its associated matrix. Let be the kernel matrix whose entry in the th row and th column is where are -dimensional points in datasets respectively, and is a piecewise smooth and continuous isotropic kernel. Our goal is to find matrices with so that the kernel matrix may be approximated by

| (2) |

We achieve this by finding functions so that

| (3) |

Then we may define and as

This is the typical mechanism of analytic methods for kernel matrix approximation— and are formed by analyzing the kernel function and applying related functions to the data, rather than by examining the original kernel matrix. Thus our main goal is to find so that (3) can be computed efficiently and is sufficiently accurate for as small as possible.

3.3 Analytic Expansion

First we assume without loss of generality that for all .222Mathematically, we’re absorbing the diameter of the point set into a lengthscale parameter in the kernel function. We begin by defining

| (4) |

and then forming a truncated Chebyshev expansion of for

where is the th Chebyshev polynomial of the first kind. The coefficients may be computed efficiently and accurately using e.g. the discrete cosine transform, see (Clenshaw & Curtis, 1960). This yields

| (5) |

for . Furthermore for odd since is even by definition. To see this, note that , and when is odd the integrand is an even function times an odd function, hence the integral vanishes. Consequently, we henceforth assume that is even.

The coefficients in the Chebyshev polynomials are defined as so that

| (6) |

and, by definition of the Chebyshev polynomials,

Noting and plugging (6) into (5) yields

Applying the multinomial theorem and rearranging the sums (see Section A for details) results in

| (7) |

where we have absorbed , and the multinomial coefficients into constants .

Since where is the angle between the vectors , we may write

Plugging this into (3.3) and performing additional rearranging/reindexing yields

| (8) |

where , is the Gegenbauer polynomial of the th order, and are constants which depend on the kernel but not on .

We may finally put this in the form of (3) by using the hyperspherical harmonic addition theorem (Wen & Avery, 1985):

| (9) |

where is a normalization term, are hyperspherical harmonics333We use a real basis of hyperspherical harmonics to avoid complex arithmetic. which are -dimensional generalizations of the spherical harmonics (see (Avery, 1989) for their definition) and

Substituting (9) into (8) yields

| (10) |

3.4 Additional Data-Dependent Compression

The number of functions in the approximate expansion (which is in (3)) will henceforth be referred to as the rank of the expansion. The cost of computing the low-rank expansion is the cost of populating the matrices and , which is linear in the number of entries in the matrices. To achieve efficiency, we’d like the rank of the expansion to be as small as possible while still achieving the desired level of accuracy. Unfortunately, the rank of (3.3) is still large—proportional to . In this section, we incorporate the datasets into a scheme that efficiently reduces the rank of (3.3).

First, we define

| (11) |

so that (3.3) may be written as

The function has rank ,444 is nonzero only if have the same parity, see Section A. but if we can find an approximation with smaller rank and within our error tolerance (when evaluated on the data), then it may be substituted into (3.3) to reduce the overall rank.

We will find this low-rank approximation by efficiently computing a low-rank approximation of the associated matrix defined by Notably, can be written as where

| (12) |

Letting and be factorizations we have

| (13) |

where is an SVD of the matrix . Using an appropriate error tolerance , we may find a lower rank representation of by truncating all singular values of less than . This results in

| (14) |

where

where the notation refers to the first columns of , means the first rows and columns of , and is the index of the smallest singular value in greater than . The selection of is discussed in Section 3.5.

Remark 1.

When , the matrix is symmetric, hence the SVD may be replaced with an eigendecomposition. In fact, only the factorization of is needed in this case. Furthermore, we will have in (2), so the memory requirements are halved. From a practical point of view, it is efficient to include a diagonal matrix containing these eigenvalues so that we may store with all real entries instead of with possibly complex entries in the case of non-positive definite matrices (see (Chen et al., 2009) for examples of indefinite learning tasks).

Remark 2.

Since the hyperspherical harmonics are orthogonal functions, using the same truncation criteria for different yields approximately the same error for each . In practice, this may result in some being completely dropped because even their first singular value is below the threshold—our interpretation is that the th order harmonics in the expansion are unnecessary to achieve the desired level of accuracy, and so this additional compression step allows us to drop them and greatly reduce the rank of the approximation.

3.5 Error

Error is introduced into our approximation in two ways: the truncation of the Chebyshev expansion in (4) and the data-driven approximation to in (14). Suppose we use a threshold of to truncate singuar values/vectors in (13), then the error of the approximation for any pair of points will satisfy

For the first part, note that (see (Kim et al., 2012) Eq (1.1)). Further, since for any matrix , we have

For the second part, (Elliott et al., 1987) gives

Therefore, the total error satisfies

| (15) |

where

Remark 3.

In practice, may be easily approximated and we may adaptively choose and given an input error tolerance such that the desired accuracy is achieved. In fact, since (15) is often quite loose, tuning how is chosen with respect to the input error tolerance can yield significantly smaller ranks while maintaining desired accuracy.

3.6 Complexity

The algorithm is summarized in Algorithm 1. Note that is an adaptively chosen parameter so that (4) is sufficiently accurate. The Chebyshev transform takes time via the discrete cosine transform. If an initial multiplication of Vandermonde matrices is performed outside the loop, the factorizations can be performed in time each (see, for example, (Brubeck et al., 2021)) and so contribute to the total cost. Forming takes each, contributing to the total cost, where . The SVDs take time each and so contribute to the total cost.

The total number of harmonics needed by our algorithm will be bounded above by the number of harmonics of order less than or equal to —in Section B we derive this to be Each harmonic costs time to evaluate per datapoint, hence the cost of computing the necessary harmonics will be . Once they are computed, the cost to populate the and matrices will be where is the final rank of the approximation. This rank is given by

Thus the total runtime complexity for the factorization is . After the factorization, matrix-vector multiplies may be performed in time.

3.7 Limitations

We have described an additional data-dependent compression step based on matrices formed out of the polynomials within the analytic expansion. We currently make no attempt to perform further compression based on the observed rank of the harmonic components. This is logical when the number of points is high relative to the dimension—matrices formed from the harmonics will have little singular value decay owing to the orthogonality of the harmonic functions. However, if the number of dimensions is large relative to the the number of points, then the points are not space filling and the lack of additional compression based on the harmonics will result in poor efficiency, especially as the number of harmonics grows polynomially with the dimension (see Section C for further discussion).

Vandermonde matrices are notoriously ill-conditioned (Pan, 2016), and the accuracy of the factorizations in the current presentation of our additional compression step can suffer when is large unless this is addressed. In our testing, this degradation only arises for very small length-scale parameters and/or very small error tolerances (notably, outside the range seen in our experiments). One step towards preventing potential conditioning issues could be to arrange the expansion so that Legendre polynomials of are used instead of powers of (in an analogous way to our replacement of powers of cosine with Gegenbauer polynomials of cosine, see Section A for details).

In many kernel matrix approximation algorithms, a non-negligible cost of generating the low-rank matrices is that matrix entries typically cost time to generate. For example, this is the case when a kernel evaluation is required between a point and a benchmark point, as in inducing point methods. On the one hand, our factorization benefits from the costs being confined555The Vandermonde-like matrices require only a single dimension-dependent computation of the norms of all datapoints. to the computation of the harmonics, each of which is reused across rows where is the rank in (14). On the other hand, this depends on an efficient implementation of hyperspherical harmonic computation—although we provide a moderately tuned implementation, this is the subject of further work as there are few available good pre-existing routines for this procedure.

4 Experiments

We have implemented the Harmonic Decomposition factorization in Algorithm 1 in Julia as part of an open source toolkit, and have performed synthetic and real-world regression experiments single-threaded on a 2020 Apple Macbook Air with an M1 CPU and 8GB of RAM. All recorded times are averaged across 10 trials.

4.1 Synthetic Data

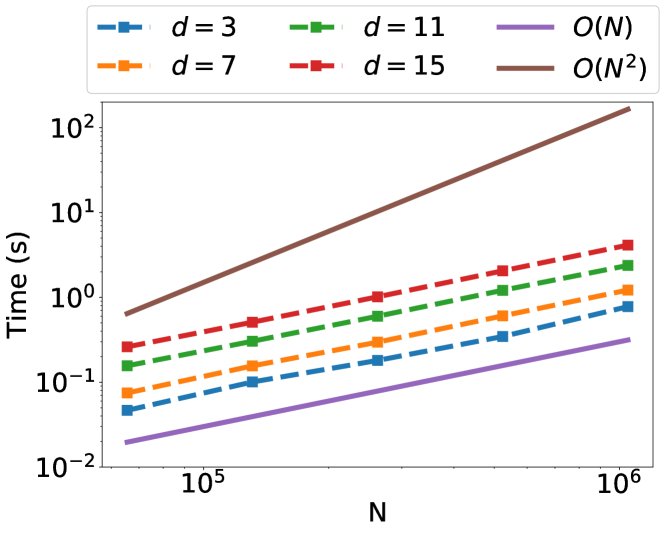

To test the runtime of our algorithm for constructing the factorization, we generate datasets of points drawn from a normal distribution and subsequently scaled so that the maximum norm of a point in a dataset is . Using the Cauchy kernel with lengthscale parameter , we vary the number of points and dimensions of this dataset and record the time required by the implementation to create the low-rank approximation. The relative error tolerance is fixed at . Figure 1 vizualizes the results of this experiment—across a variety of dimensions, our factorization requires linear time in the number of points.

To illustrate how efficiently we can tradeoff time and accuracy, we compare our factorization with the popular Nyström method where inducing points are chosen uniformly at random.666Times for the Nyström method include populating the interpolation matrix as well as factoring the inducing point matrix. We use a dataset of 10,000 points in three dimensions generated the same as before and using the same Cauchy kernel. This time, the error tolerance is varied and the factorization time and relative errors are recorded. Figure 2 shows that our factorization is consistently cheaper to produce than the Nyström factorization that achieves the same accuracy. This further translates to faster matrix vector products at the same accuracy. This is due largely to the HDF’s lower-rank approximation, which we investigate next.

To further explore the tradeoff between rank and relative error of our approximation, we conduct experiments where the error tolerance is varied and the rank of the necessary factorization along with the actual final relative error is collected. In this experiment, we use a dataset of 5000 points in five dimensions generated in the same way as above and use the Cauchy kernel , Gaussian kernel (), Matérn kernel with () and Matérn kernel with (). Figure 3 compares results between our method, the Nyström method, and the SVD (which provides the optimal rank/error tradeoff). In all cases our method has greater accuracy than the Nyström method for the same rank. Additionally, our method shows nearly optimal performance for lower rank approximations. This experiment also highlights the generality of our method—most existing analytic methods cannot be applied to this breadth of kernels and are, therefore, omitted from the experiment.

4.2 Kernel Ridge Regression on California Housing Data

We also apply our factorization within the training process of kernel ridge regression and evaluate the predictions. Kernel ridge regression (Shawe-Taylor et al., 2004) is a kernel method which involves finding a weight vector that solves the minimization problem

where are the training points, are the training labels, and is a regularization parameter. The solution to this problem is

| (16) |

Predictions on test data are then performed using , where are the test points and are our predictions of the test labels.

We use the California housing data set from the UCI Machine Learning Repository (Dua & Graff, 2017), which contains data drawn from the 1990 U.S. Census. The labels are the median house values, and the features are all other attributes, leading to a feature space of dimensions for a dataset of points. We scale features to be in , and for each of five trials perform a random shuffle of the data followed by a - train-test split.

We apply our factorization to the problem by generating low-rank approximations for the diagonal blocks of the kernel matrix in (16). The diagonal blocks are chosen to be associated with 30 clusters of points generated by -means clustering (Lloyd, 1982), so that the th block along the diagonal is given by . A host of techniques exist for compressing the off-diagonal blocks (Ying et al., 2004; Ying, 2006; Ambikasaran et al., 2015; Ryan et al., 2021), but we perform no off-diagonal compression for this experiment to simplify the implementation. The associated linear system is then solved using the method of conjugate gradients (Greenbaum, 1997; Shewchuk, 1994), where the matrix-vector multiplies are accelerated by our compression. As a preconditioner we use a block diagonal matrix whose blocks are the inverses of the blocks of the true kernel matrix associated with the clusters.

For comparison, we also applied the Nyström method using the same ranks for the diagonal blocks. We record the relative errors of the diagonal block approximations, as well as the final mean squared errors (MSEs) when compared with the ground truth test labels. Figure 4 visualizes our findings. For all kernels, our method tends to find a more accurate approximation than Nyström for the same rank, as also demonstrated for the synthetic data in Figure 3. Further, owing to the more accurate approximations, our method yields MSEs closer to those found using dense operations.

5 Conclusion

We have presented a new method for low-rank approximation of kernel matrices that pairs a novel analytic expansion with an efficient data-dependent compression step. The technique works for any isotropic kernel, and is particularly efficient when the dimension of the data is small relative to the size of the dataset. Our method runs in linear time and has a configurable error tolerance along with theoretical guarantees on the size of the error. This work presents results from a suite of experiments demonstrating linear scaling and favorable comparative metrics against the Nyström method, both for synthetic and real-world data. An open-source implementation of our method is included alongside this paper. This work also leads to many interesting and promising questions for future exploration. One currently unexplored facet is whether an additional compression step may be applied to the harmonics. For our areas of application this has not been necessary, as the assumption of moderate dimension has meant that the matrices of harmonics are not numerically low rank. However, for high and high desired accuracy, the high orders of harmonics required are too numerous for an efficient low-rank approximation (see Figure 5 in Section C). This problem could potentially be mitigated by a similar data-dependent compression technique as is used in this work, or another dimension-reduction technique.

| Dense | 1.72 [1.62, 1.77]e-2 | 1.76 [1.71, 1.80]e-2 |

| HDF | 1.71 [1.62, 1.77]e-2 | 1.76 [1.70, 1.80]e-2 |

| Nyst. | 1.78 [1.71, 2.08]e-2 | 1.79 [1.71, 1.83]e-2 |

| Matérn | Matérn | |

| Dense | 1.58 [1.54,1.62]e-2 | 1.69 [1.66,1.73]e-2 |

| HDF | 1.58 [1.55,1.62]e-2 | 1.69 [1.65,1.73]e-2 |

| Nyst. | 2.52 [1.70,7.94]e-2 | 1.69 [1.68,1.72]e-2 |

Our expansion was formed by transforming to a basis of Gegenbauer polynomials in and then splitting into harmonics. This approach is inspired by the analytic expansions underlying fast multipole methods, but may not be ideal for high dimensional problems if the hyperspherical harmonics are too onerous to compute. For higher dimensional problems it is conceivable that a more manageable basis for the angular functions would be preferred for a fast implementation. A different such basis could also be engineered to allow for efficiency in an additional compression as described in the previous paragraph, to parallel how the Vandermonde structure of (12) allows for efficiency in our additional compression step.

We have provided the theoretical underpinnings for off-diagonal compression based on our scheme and future work will incorporate our routine into a hierarchical domain decomposition framework. It is plausible that an opportunity exists to reuse matrices or at least harmonic computations between on- and off-diagonal block approximations.

References

- Ambikasaran et al. (2015) Ambikasaran, S., Foreman-Mackey, D., Greengard, L., Hogg, D. W., and O’Neil, M. Fast direct methods for gaussian processes. IEEE transactions on pattern analysis and machine intelligence, 38(2):252–265, 2015.

- Avery (1989) Avery, J. S. Hyperspherical Harmonics. Springer, 1989. doi: https://doi.org/10.1007/978-94-009-2323-2.

- Brubeck et al. (2021) Brubeck, P. D., Nakatsukasa, Y., and Trefethen, L. N. Vandermonde with arnoldi. SIAM Rev., 63:405–415, 2021.

- Chen et al. (2009) Chen, Y., Gupta, M. R., and Recht, B. Learning kernels from indefinite similarities. In Proceedings of the 26th Annual International Conference on Machine Learning, ICML ’09, pp. 145–152, New York, NY, USA, 2009. Association for Computing Machinery. ISBN 9781605585161. doi: 10.1145/1553374.1553393. URL https://doi.org/10.1145/1553374.1553393.

- Clenshaw & Curtis (1960) Clenshaw, C. W. and Curtis, A. R. A method for numerical integration on an automatic computer. Numerische Mathematik, 2(1):197–205, 1960. doi: 10.1007/BF01386223. URL https://doi.org/10.1007/BF01386223.

- Drineas et al. (2005) Drineas, P., Mahoney, M. W., and Cristianini, N. On the nyström method for approximating a gram matrix for improved kernel-based learning. journal of machine learning research, 6(12), 2005.

- Dua & Graff (2017) Dua, D. and Graff, C. UCI machine learning repository, 2017. URL http://archive.ics.uci.edu/ml.

- Elliott et al. (1987) Elliott, D., Paget, D., Phillips, G., and Taylor, P. Error of truncated chebyshev series and other near minimax polynomial approximations. Journal of Approximation Theory, 50(1):49–57, 1987. ISSN 0021-9045. doi: https://doi.org/10.1016/0021-9045(87)90065-7. URL https://www.sciencedirect.com/science/article/pii/0021904587900657.

- Gittens & Mahoney (2013) Gittens, A. and Mahoney, M. Revisiting the nystrom method for improved large-scale machine learning. In International Conference on Machine Learning, pp. 567–575. PMLR, 2013.

- Goreinov et al. (1997) Goreinov, S. A., Tyrtyshnikov, E. E., and Zamarashkin, N. L. A theory of pseudoskeleton approximations. Linear algebra and its applications, 261(1-3):1–21, 1997.

- Greenbaum (1997) Greenbaum, A. Iterative Methods for Solving Linear Systems. Society for Industrial and Applied Mathematics, USA, 1997. ISBN 089871396X.

- Greengard & Rokhlin (1987) Greengard, L. and Rokhlin, V. A fast algorithm for particle simulations. Journal of computational physics, 73(2):325–348, 1987.

- Greengard & Strain (1991) Greengard, L. and Strain, J. The fast gauss transform. SIAM Journal on Scientific and Statistical Computing, 12(1):79–94, 1991.

- Kim et al. (2012) Kim, D. S., Kim, T., and Rim, S.-H. Some identities involving gegenbauer polynomials. Advances in Difference Equations, 2012(1):219, 2012. doi: 10.1186/1687-1847-2012-219. URL https://doi.org/10.1186/1687-1847-2012-219.

- Lloyd (1982) Lloyd, S. Least squares quantization in pcm. IEEE Transactions on Information Theory, 28(2):129–137, 1982. doi: 10.1109/TIT.1982.1056489.

- Musco & Musco (2017) Musco, C. and Musco, C. Recursive sampling for the nyström method. In Proceedings of the 31st International Conference on Neural Information Processing Systems, pp. 3836–3848, 2017.

- Nyström (1930) Nyström, E. J. Über die praktische auflösung von integralgleichungen mit anwendungen auf randwertaufgaben. Acta Mathematica, 54:185–204, 1930.

- Pan (2016) Pan, V. Y. How bad are vandermonde matrices? SIAM Journal on Matrix Analysis and Applications, 37(2):676–694, 2016. doi: 10.1137/15M1030170. URL https://doi.org/10.1137/15M1030170.

- Phillips & White (1994) Phillips, J. R. and White, J. A precorrected-fft method for capacitance extraction of complicated 3-d structures. In ICCAD, volume 94, pp. 268–271. Citeseer, 1994.

- Rahimi et al. (2007) Rahimi, A., Recht, B., et al. Random features for large-scale kernel machines. In NIPS, volume 3, pp. 5. Citeseer, 2007.

- Ryan et al. (2021) Ryan, J. P., Ament, S., Gomes, C. P., and Damle, A. The fast kernel transform. arXiv preprint arXiv:2106.04487, 2021.

- Scholkopf & Smola (2018) Scholkopf, B. and Smola, A. J. Learning with kernels: support vector machines, regularization, optimization, and beyond. Adaptive Computation and Machine Learning series, 2018.

- Shawe-Taylor et al. (2004) Shawe-Taylor, J., Cristianini, N., et al. Kernel methods for pattern analysis. Cambridge university press, 2004.

- Shewchuk (1994) Shewchuk, J. R. An introduction to the conjugate gradient method without the agonizing pain. Technical report, USA, 1994.

- Si et al. (2017) Si, S., Hsieh, C.-J., and Dhillon, I. S. Memory efficient kernel approximation. Journal of Machine Learning Research, 18(20):1–32, 2017. URL http://jmlr.org/papers/v18/15-025.html.

- Snelson & Ghahramani (2005) Snelson, E. and Ghahramani, Z. Sparse gaussian processes using pseudo-inputs. Advances in neural information processing systems, 18:1257–1264, 2005.

- Solin & Särkkä (2020) Solin, A. and Särkkä, S. Hilbert space methods for reduced-rank gaussian process regression. Statistics and Computing, 30(2):419–446, mar 2020. ISSN 0960-3174. doi: 10.1007/s11222-019-09886-w. URL https://doi.org/10.1007/s11222-019-09886-w.

- Wen & Avery (1985) Wen, Z. and Avery, J. Some properties of hyperspherical harmonics. Journal of Mathematical Physics, 26(3):396–403, 1985. doi: 10.1063/1.526621. URL https://doi.org/10.1063/1.526621.

- White et al. (1994) White, J., Phillips, J., and Korsmeyer, T. Comparing precorrected-fft and fast multipole algorithms for solving three-dimensional potential integral equations,”. In Proceedings of the Colorado Conference on Iterative Methods, pp. 4–10. Citeseer, 1994.

- Williams & Seeger (2001) Williams, C. K. and Seeger, M. Using the nyström method to speed up kernel machines. In Advances in neural information processing systems, pp. 682–688, 2001.

- Wilson & Nickisch (2015) Wilson, A. and Nickisch, H. Kernel interpolation for scalable structured gaussian processes (kiss-gp). In International Conference on Machine Learning, pp. 1775–1784, 2015.

- Yang et al. (2003) Yang, C., Duraiswami, R., Gumerov, N. A., and Davis, L. Improved fast gauss transform and efficient kernel density estimation. IEEE, 2003.

- Yang et al. (2012) Yang, T., Li, Y.-F., Mahdavi, M., Jin, R., and Zhou, Z.-H. Nyström method vs random fourier features: A theoretical and empirical comparison. Advances in neural information processing systems, 25:476–484, 2012.

- Ying (2006) Ying, L. A kernel independent fast multipole algorithm for radial basis functions. Journal of Computational Physics, 213(2):451–457, 2006.

- Ying et al. (2004) Ying, L., Biros, G., and Zorin, D. A kernel-independent adaptive fast multipole algorithm in two and three dimensions. Journal of Computational Physics, 196(2):591–626, 2004.

Appendix A Derivation of the Analytic Expansion

Here we present a detailed derivation of the analytic expansion which forms the foundation of our algorithm. Starting from the Chebyshev expansion

Using the definition of the Chebyshev polynomial

we get

Applying the multinomial theorem yields

Swapping sums via

gives

Let so that , then we have

Swapping the and sums gives

Using gives

We will use the identity

where , is the Gegenbauer polynomial, when , and

when . The notation refers to the rising factorial, i.e. . Using this to expand the term yields

Swapping the and sums yields

Let so that

Swapping the and sums gives

Let so that

Swapping the and sums yields

where

The final form of the expansion follows from applying the hyperspherical harmonic addition theorem.

Appendix B Number of Harmonics of Order

Here we show that

Note that (Wen & Avery, 1985) gives

Thus the LHS forms a telescoping sum and we are left with

Appendix C Compression of Matrices of Harmonics

The algorithm we presented includes an additional data-dependent compression step which involves finding a low-rank approximation to the matrices as in (14). We could, in an analogous fashion, attempt to find a data-dependent compression of the matrix of harmonics defined by

For problems where the dimension is large relative to the size of the dataset, this matrix can have fast-decaying singular values, as seen in Figure 5, and hence it would be useful to perform additional compression in those cases. Future research will search for efficient ways to perform this compression when it is called for, or prevent the need for it with some suitable data transformation.