Linear Simple Cycle Reservoirs at the edge of stability perform Fourier decomposition of the input driving signals

Abstract.

This paper explores the representational structure of linear Simple Cycle Reservoirs (SCR) operating at the edge of stability. We view SCR as providing in their state space feature representations of the input-driving time series. By endowing the state space with the canonical dot-product, we “reverse engineer” the corresponding kernel (inner product) operating in the original time series space. The action of this time-series kernel is fully characterized by the eigenspace of the corresponding metric tensor. We demonstrate that when linear SCRs are constructed at the edge of stability, the eigenvectors of the time-series kernel align with the Fourier basis. This theoretical insight is supported by numerical experiments.

Recurrent Neural Networks (RNNs) are machine learning methods for modeling temporal dependencies in sequential data, but their training can be computationally demanding. Reservoir Computing (RC), a simplified subset of RNNs, circumvents this problem by fixing the internal dynamics of the network (the reservoir) and focusing training on the readout layer. Simple Cycle Reservoir (SCR) is a type of RC model that stands out for its minimalistic design and proven capability to universally approximate a wide class of processes operating on time series data (namely time-invariant fading memory filters), even in the linear dynamics regime (with non-linear static readouts). We show that, interestingly, when a linear SCR is constructed at the edge of stability, it implicitly represents the time series according to the well-known and widely used technique of Fourier signal decomposition. This insight demonstrates that deep connections can exist between recurrent neural networks, classical signal processing techniques and statistics, paving the way for their enhanced understanding and innovative applications.

1. Introduction

Recurrent Neural Networks (RNNs) are input-driven parametric state-space machine learning models designed to capture temporal dependencies in sequential input data streams. They encode time series data into a latent state space, dynamically storing temporal information within state-space vectors.

Reservoir Computing (RC) models are a subset of RNNs that operate with a fixed, non-trainable input-driven dynamical system (known as the reservoir) and a static trainable readout layer producing model responses based on the reservoir activations. This design simplifies the training process by concentrating adjustments solely on the readout layer (thus avoiding back-propagation of the error information through time), leading to enhanced computational efficiency. The simplest implementations of RC models include Echo State Networks (ESNs) [Jae01, MNM02, TD01, LJ09].

Simple Cycle Reservoirs (SCR) represent a specialized class of RC models characterized by a single degree of freedom in the reservoir construction (modulo the state space dimensionality), structured through uniform ring connectivity and binary input weights with an aperiodic sign pattern. Recently, SCRs were shown to be universal approximators of time-invariant dynamic filters with fading memory over $\mathbb{C}$ and $\mathbb{R}$, respectively, in [LFT24, FLT24], making them highly suitable for integration in photonic circuits for high-performance, low-latency processing [BVdSBV22, LBFM+17, HVK+20].

Understanding the intricacies of SCRs in depth is essential. In this work, we employ the kernel view of linear ESNs introduced in [Tin20], in which the state-space ‘reservoir’ representation of (potentially left-infinite) input sequences is treated as a feature map corresponding to the given reservoir model through the associated reservoir kernel. For linear reservoirs, the canonical dot product of two input sequences’ feature representations is analytically expressible as the (semi-)inner product of the sequences themselves. The corresponding metric tensor reveals the representational structure imposed by the reservoir on the input sequences, in particular in the metric tensor’s eigenspace containing dominant projection axes (time series ’motifs’) and scaling (‘importance’ factors).

To assess the “richness” of linear SCR state-space representations, [Tin20] proposed analyzing the relative area of motifs under the Fast Fourier Transform (FFT). It was observed that the richness of these representations collapses at the edge of stability, when the spectral radius of the dynamic coupling matrix equals 1.

In this paper we theoretically analyze the collapse of motif richness at the edge of stability and show that, in this regime, the SCR kernel motifs correspond to the Fourier basis. We begin by reviewing the notion of SCRs [RT10], the kernel view of ESNs [Tin20], and Reservoir Motif Machines [TFL24] in Section 2. The contributions of this paper, presented in the subsequent sections, are outlined as follows:

(1) In Section 4, we show that, over $\mathbb{C}$, the motifs of linear SCR at the edge of stability are harmonic functions.

(2) In Section 5, we show that, over $\mathbb{R}$, an $n$-dimensional linear SCR has $\lceil n/2 \rceil$ symmetric motifs and $\lfloor n/2 \rfloor$ skew-symmetric motifs.

(3) In Section 6, we combine the results of the previous two sections and demonstrate numerically that, over $\mathbb{R}$, the motifs of linear SCR at the edge of stability are exactly the columns of the real Fourier basis matrix.

(4) Finally, in Section 7, we conclude the paper with numerical experiments supporting our findings.

2. Simple Cycle Reservoir and its temporal kernel

Let $\mathbb{F} = \mathbb{R}$ or $\mathbb{C}$ be a field. We first formally define the principal object of our study: a parametrized linear driven dynamical system with a (possibly) non-linear readout.

Definition 2.1.

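A linear reservoir system over $\mathbb{F}$ is the triplet $R := (W, V, h)$, where the dynamic coupling $W$ is an $n \times n$ matrix over $\mathbb{F}$, the input-to-state coupling $V$ is an $n \times m$ matrix, and the state-to-output mapping (readout) $h : \mathbb{F}^n \to \mathbb{F}^d$ is a (trainable) continuous function.

The corresponding linear dynamical system is given by:

$$x_t = W x_{t-1} + V u_t, \qquad y_t = h(x_t), \tag{2.1}$$

where $\{u_t\}_t \subset \mathbb{F}^m$, $\{x_t\}_t \subset \mathbb{F}^n$, and $\{y_t\}_t \subset \mathbb{F}^d$ are the external inputs, states and outputs, respectively. We abbreviate the dimensions of $R$ by $(n, m, d)$.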

We make the following assumptions for the system:

(1) The dynamic coupling $W$ is assumed to be contractive.

(2) We assume the input stream is uniformly bounded. In other words, there exists a constant $M$ such that $\|u_t\| \le M$ for all $t$.

The contractiveness of $W$ and the uniform boundedness of the input stream imply that the images of the inputs under the linear reservoir system live in a compact space $X \subset \mathbb{F}^n$. With slight abuse of mathematical terminology we call $X$ a state space.

Definition 2.2.

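Let $C = [c_{ij}]$ be an $n \times n$ matrix. We say C is a permutation matrix if there exists a permutation $\sigma$ in the symmetric group $S_n$ such that

$$c_{ij} = \begin{cases} 1, & \text{if } \sigma(i) = j, \\ 0, & \text{otherwise.} \end{cases}$$

We say a permutation matrix C is a full-cycle permutation (also called left circular shift or cyclic permutation in the literature) if its corresponding permutation $\sigma$ is a cycle permutation of length $n$. Finally, a matrix $W = \rho\, C$, with $\rho > 0$ a scalar, is called a contractive full-cycle permutation if $W$ is contractive and C is a full-cycle permutation.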

The idea of simple cycle reservoir was presented in [RT10] as a reservoir system with a very small number of design degrees of freedom, yet retaining performance capabilities of more complex or (unnecessarily) randomized constructions. In fact, it can be shown that even with such a drastically reduced design complexity, SCR models are universal approximators of fading memory filters [LFT24, FLT24].

Definition 2.3.

A linear reservoir system $R = (W, V, h)$ with dimensions $(n, m, d)$ is called a Simple Cycle Reservoir (SCR) if

(1) W is a contractive full-cycle permutation, and

(2) $V \in \mathbb{M}_{n \times m}(\{-1, 1\})$, i.e. all entries of $V$ are $\pm 1$.

We note that the assumption on the aperiodicity of the sign pattern in $V$ is not required for this study.

One possibility to understand the inner representations of the input-driving time series forming inside reservoir systems is to view the reservoir state space as a temporal feature space of the associated reservoir kernel [Tin20, GGO22]. Consider a linear reservoir system $R = (W, w, h)$ over $\mathbb{F}$ with dimensions $(n, 1, d)$ operating on univariate input.

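Let $\tau$ denote the length of the look-back window and consider two sufficiently long time series of length $\tau$,

$$u = (u_1, u_2, \dots, u_\tau)^\top \quad \text{and} \quad v = (v_1, v_2, \dots, v_\tau)^\top,$$

indexed so that $u_j$ is the input presented $j-1$ steps in the past (so $u_1$ is the most recent input). We consider the reservoir states reached upon reading them (with zero initial state) as their feature space representations [Tin20]:

$$\phi(u) = \sum_{j=1}^{\tau} u_j\, W^{j-1} w, \qquad \phi(v) = \sum_{j=1}^{\tau} v_j\, W^{j-1} w.$$

The canonical dot product (reservoir kernel)

$$K(u, v) = \langle \phi(u), \phi(v) \rangle = \phi(u)^{*} \phi(v)$$

can be written in the original time series space as a semi-inner product $K(u, v) = u^{*} Q v$, where

$$Q_{i,j} = w^{*} (W^{*})^{i-1} W^{j-1} w, \qquad i, j = 1, \dots, \tau, \tag{2.2}$$

and $^{*}$ denotes the conjugate transpose (the ordinary transpose when $\mathbb{F} = \mathbb{R}$).

Since the (semi-)metric tensor $Q$ is symmetric (Hermitian when $\mathbb{F} = \mathbb{C}$) and positive semi-definite, it admits the following eigen-decomposition:

$$Q = M \Lambda M^{*}, \tag{2.3}$$

where $\Lambda$ is a diagonal matrix consisting of the non-negative eigenvalues of Q with the corresponding eigenvectors (columns of M). The eigenvectors of Q with positive eigenvalues are called the motifs of $R$. We have:

$$K(u, v) = u^{*} M \Lambda M^{*} v = \left(\Lambda^{1/2} M^{*} u\right)^{*} \left(\Lambda^{1/2} M^{*} v\right).$$

In particular, the reservoir kernel is a canonical dot product of time series projected onto the motif space spanned by the columns of M:

$$K(u, v) = \tilde{u}^{*} \tilde{v},$$

where

$$\tilde{u} = \Lambda^{1/2} M^{*} u, \qquad \tilde{v} = \Lambda^{1/2} M^{*} v. \tag{2.4}$$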
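The kernel construction of this section can be checked numerically. The following is a minimal sketch, assuming real-valued data, a hypothetical random $\pm 1$ input vector, and the indexing convention in which the first entry of a time series is its most recent element; the helper names `cycle_matrix`, `metric_tensor` and `motifs` are illustrative and not taken from the paper's code base. It verifies that the dot product in state space, the semi-inner product through Q, and the dot product in motif space all agree.

```python
import numpy as np

def cycle_matrix(n):
    """Full-cycle permutation: ones on the sub-diagonal and in the top-right corner."""
    return np.roll(np.eye(n), 1, axis=0)

def metric_tensor(W, w, tau):
    """Return Q and Phi, where column j of Phi is W^j w and Q = Phi^T Phi (real case)."""
    cols = [w]
    for _ in range(tau - 1):
        cols.append(W @ cols[-1])
    Phi = np.column_stack(cols)           # shape (n, tau)
    return Phi.T @ Phi, Phi

def motifs(Q, tol=1e-10):
    """Eigenvectors of Q with (numerically) positive eigenvalue, by decreasing weight."""
    lam, M = np.linalg.eigh(Q)
    order = np.argsort(lam)[::-1]
    lam, M = lam[order], M[:, order]
    keep = lam > tol * lam[0]
    return lam[keep], M[:, keep]

rng = np.random.default_rng(0)
n, tau, rho = 5, 10, 0.9                  # hypothetical sizes and spectral radius
W = rho * cycle_matrix(n)
w = rng.choice([-1.0, 1.0], size=n)       # hypothetical sign pattern
Q, Phi = metric_tensor(W, w, tau)
lam, M = motifs(Q)

u, v = rng.standard_normal(tau), rng.standard_normal(tau)
kernel_state_space = (Phi @ u) @ (Phi @ v)            # <phi(u), phi(v)>
kernel_series_space = u @ Q @ v                       # u^T Q v
u_tilde = np.sqrt(lam) * (M.T @ u)                    # projection onto motifs
v_tilde = np.sqrt(lam) * (M.T @ v)
kernel_motif_space = u_tilde @ v_tilde
```

Since Q is built from an $n$-dimensional state space, at most $n$ of its $\tau$ eigenvalues are positive, so the number of motifs never exceeds the reservoir size.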

Reservoir Motif Machine (RMM) [TFL24] is a predictive model motivated by the kernel view of linear Echo State Networks described above. By projecting the $\tau$-blocks of input time series onto the reservoir motif space given by $M$, RMM captures temporal and structural dynamics in a computationally efficient feature map.

In particular, rather than relying on the motif weights determined by the reservoir in Equation (2.4), RMM introduces a set of adaptable motif coefficients, denoted as $c_1, c_2, \dots$, to define its feature map as follows:

$$\tilde{u} = \operatorname{diag}(c_1, c_2, \dots)\, M^{*} u. \tag{2.5}$$

This feature map is used to train a predictive model, such as linear regression or kernel-based methods, directly in the motif space.

Remark 2.4.

To streamline the theoretical analysis of SCR kernels, in line with [Tin20], we assume that the length of the look-back window (past horizon) $\tau$ is an integer multiple of the dimension of the state space $n$, i.e. there exists $k \in \mathbb{N}$ such that $\tau = kn$. In particular, denoting by m a SCR motif calculated with $\tau = n$, it is shown in [Tin20] that when $\tau = kn$, the corresponding motif is a concatenation of $k$ copies of m, scaled by $\rho^{(i-1)n}$, $i = 1, \dots, k$. Recall that $\rho$ is the spectral radius of the dynamic coupling $W$. Hence, to study SCR motifs with past horizon $\tau = kn$, for any $k$, it is sufficient to study only the base case $\tau = n$.
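The reduction to the base case can be illustrated numerically. The sketch below assumes a real SCR with dynamic coupling equal to the spectral radius times a full-cycle permutation, a hypothetical random $\pm 1$ input vector, and time series indexed from the most recent element. Under these assumptions, the metric tensor for a horizon of $k$ reservoir lengths is a Kronecker product of a rank-one scaling matrix with the base-case metric tensor, so each long motif is a concatenation of scaled copies of a base motif (up to normalisation).

```python
import numpy as np

def cycle_matrix(n):
    """Full-cycle permutation: ones on the sub-diagonal and in the top-right corner."""
    return np.roll(np.eye(n), 1, axis=0)

def metric_tensor(W, w, tau):
    """Q[i, j] = <W^i w, W^j w> for 0-based i, j < tau (real case)."""
    cols = [w]
    for _ in range(tau - 1):
        cols.append(W @ cols[-1])
    Phi = np.column_stack(cols)
    return Phi.T @ Phi

n, k, rho = 4, 3, 0.8                     # hypothetical sizes and spectral radius
rng = np.random.default_rng(1)
w = rng.choice([-1.0, 1.0], size=n)       # hypothetical sign pattern
W = rho * cycle_matrix(n)

Q_base = metric_tensor(W, w, n)           # past horizon tau = n
Q_long = metric_tensor(W, w, k * n)       # past horizon tau = k * n

# Scaling factors 1, rho^n, ..., rho^((k-1) n) of the k copies.
r = rho ** (n * np.arange(k))
block_structure_holds = np.allclose(Q_long, np.kron(np.outer(r, r), Q_base))

# A base motif m extends to the long motif (r_1 m, r_2 m, ..., r_k m).
lam, M = np.linalg.eigh(Q_base)
m = M[:, -1]                              # dominant base motif
m_long = np.kron(r, m)
is_long_motif = np.allclose(Q_long @ m_long, (r @ r) * lam[-1] * m_long)
```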

3. On the edge of stability of Simple Cycle Reservoirs

Having defined the reservoir temporal kernel, one can ask how “representationally rich” is the associated feature space (span of the reservoir motifs). To quantify the ‘richness’ of the reservoir feature space of a linear reservoir system over , [Tin20] proposed the following procedure:

Consider a linear reservoir system $R = (W, w, h)$ with dimensions $(n, 1, d)$ over $\mathbb{F}$. Suppose W has spectral radius $\rho$. Recall from Remark 2.4 that, to study the SCR motif structure of $R$, it is sufficient to consider the past horizon $\tau = n$. The motif matrix $M$ is constructed according to Equation (2.3) from the matrix Q (metric tensor of the inner product of the reservoir kernel).

First, the Fast Fourier Transform (FFT) is applied to the kernel motifs (columns of M), considering only those whose motif weights exceed a fixed threshold fraction of the highest motif weight. This yields a matrix of Fourier coefficients over $\mathbb{C}$, with one column per retained motif. These Fourier coefficients are then collected in a (multi)set.

To evaluate the diversity and spread of the Fourier coefficients in the complex plane, [Tin20] proposed calculating the coarse-grained area occupied by this (multi)set. In particular, a bounding box in the complex plane is partitioned into a grid of square cells (we follow [Tin20] in the choice of side length). The relative area covered by the coefficients is defined as the ratio of the number of cells visited by the coefficients to the total number of cells in the grid. An example of the distribution of Fourier coefficients of a linear SCR, with the sign pattern of w taken from the leading digits of a fixed binary expansion, is presented in Figure 1; the spectral radius used there is where the relative area peaks in Figure 2.
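The coarse-grained area measure described above can be sketched as follows. This is only an illustration: the function name `relative_area`, the box half-width and the cell side length are hypothetical parameters chosen here, not the values used in [Tin20].

```python
import numpy as np

def relative_area(coeffs, half_width, cell):
    """Fraction of grid cells of the box [-half_width, half_width]^2 visited by coeffs."""
    z = np.asarray(coeffs, dtype=complex).ravel()
    # Coefficients outside the box are ignored.
    inside = (np.abs(z.real) < half_width) & (np.abs(z.imag) < half_width)
    z = z[inside]
    n_side = int(round(2 * half_width / cell))        # cells per side
    ix = np.clip(np.floor((z.real + half_width) / cell).astype(int), 0, n_side - 1)
    iy = np.clip(np.floor((z.imag + half_width) / cell).astype(int), 0, n_side - 1)
    visited = set(zip(ix.tolist(), iy.tolist()))
    return len(visited) / n_side ** 2

# Two coefficients in the same cell of a 4 x 4 grid cover 1/16 of the box.
area_one_cell = relative_area([0.1 + 0.1j, 0.2 + 0.2j], half_width=1.0, cell=0.5)
# Two coefficients in different cells cover 2/16 of the box.
area_two_cells = relative_area([0.1 + 0.1j, -0.9 - 0.9j], half_width=1.0, cell=0.5)
```

In the procedure of this section, `coeffs` would be the FFT of the retained motifs, for example `np.fft.fft(M_kept, axis=0)` for a matrix `M_kept` whose columns are the retained motifs.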

Replicating the experiment of [Tin20] in Figure 2, we observe that the “richness” of the motif space of SCR, as measured by relative area under the current setup, increases with the spectral radius $\rho$ up to a peak below $1$. Beyond this point, the measure sharply declines, aligning with the results for a randomly generated reservoir. This decline is also shown in Figure 2, as $\rho$ increases from the peak to the edge of stability at $\rho = 1$.

In this paper, we aim to investigate this collapse of representational richness of SCR models at the edge of stability $\rho = 1$. In particular, we will show that at the edge of stability, the motifs of linear SCR are (sampled) harmonic functions.

The next two sections form a two-part exposition of the properties of the motifs of linear SCR:

(1) In Section 4, we show that over $\mathbb{C}$ the motifs of linear SCR at $\rho = 1$ are harmonic.

(2) In Section 5, we show that over $\mathbb{R}$ the motifs of linear SCR at $\rho = 1$ are symmetric or skew-symmetric vectors.

Combining these two results, we conclude that at $\rho = 1$, the motifs alternate between real and imaginary components of the Fourier basis, which correspond to cosines and sines, respectively. We supplement our theoretical findings with numerical simulations of the motif space of SCR in Section 6, which then lead to the numerical experiments in the final section.

4. Unit spectral radius SCR implies harmonic motifs in Complex Domain

We first show that, in the complex domain $\mathbb{C}$, the motifs of SCR can be derived explicitly. In particular, in this section we set $\mathbb{F} = \mathbb{C}$ and show that when the spectral radius $\rho = 1$, the motifs of linear SCR are harmonic, i.e. they are precisely the Fourier basis (columns of the Fourier matrix).

Consider a linear reservoir system $R = (W, w, h)$ over $\mathbb{C}$ with dimensions $(n, 1, d)$ and W of spectral radius $\rho = 1$. Let $Q$ denote the metric tensor of the reservoir kernel (Eq. (2.2)).

In the spirit of [LFT24], we begin by considering a more general setting of , where U is a unitary matrix in (i.e. ) and . We then move to the special case where is a cyclic permutation. Since U is unitary, its eigenvalues all have norm . We let its eigenvalues be with corresponding eigenvectors , and we know each .

By construction:

The matrix is a matrix. Denote

and,

Notice that is an matrix while is an matrix. Then by construction, . Notice that since and U is unitary, we can rewrite as follows:

Finally, we let

With the ultimate goal of computing the eigen-decomposition of , we first characterize the eigenvalues and eigenvectors of . For the eigenvalues we first observe the following:

Lemma 4.1.

The matrix satisfies .

Proof.

Multiplying the -th row of with its -th column, we obtain:

This proves the desired equality. ∎

As a result, we can now fully characterize the eigenvalues of .

Corollary 4.2.

The eigenvalues of are either or . Moreover, the multiplicity of the eigenvalue is .

Proof.

Let be an eigenvector of with eigenvalue . Then , and thus

This implies that either or .

Moreover, . But the trace also equals the sum of all the eigenvalues. Since the eigenvalues of can only be or , we conclude that the multiplicity of the eigenvalue is precisely . ∎

We now turn to characterize the non-zero eigenvectors of . We will then use these vectors to compute the motif matrix of . Recall are the eigenvectors of U with the corresponding eigenvalues . For each , define

Lemma 4.3.

Each is an eigenvector of with eigenvalue . Moreover, is an orthonormal set.

Proof.

First, since U is unitary, we have and . Therefore, and for all non-negative integers , or, stated more compactly, for all integers .

Expanding , we observe that its -th block entry equals:

This is precisely times the -th block entry of . This proves that .

Since each is a unit vector and each , we have that . Hence, is a vector of norm . Finally, eigenvectors of any unitary matrix form an orthonormal basis, so . This implies:

which is when . This proves that , , are pairwise orthogonal. ∎

Since all the other eigenvalues of are , we immediately have the desired eigen-decomposition of the matrix as:

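Recall that an $n \times n$ full-cycle permutation matrix is given by:

$$C = \begin{pmatrix} 0 & 0 & \cdots & 0 & 1 \\ 1 & 0 & \cdots & 0 & 0 \\ 0 & 1 & \cdots & 0 & 0 \\ \vdots & \vdots & \ddots & \vdots & \vdots \\ 0 & 0 & \cdots & 1 & 0 \end{pmatrix}.$$

For the rest of the section, we set $U = C$, where $C$ is a full-cycle permutation, and we now compute the eigen-decomposition of the metric tensor $Q$ of the corresponding reservoir kernel.

From elementary matrix analysis, we know that the eigenvalues of a full-cycle permutation C are precisely the $n$-th roots of unity $\lambda_k = \omega^{k}$, $k = 0, \dots, n-1$, where $\omega = e^{2\pi i / n}$. The normalized eigenvector for each eigenvalue $\lambda_k$ is given by the Fourier basis:

$$f_k = \frac{1}{\sqrt{n}} \left(1, \omega^{-k}, \omega^{-2k}, \dots, \omega^{-(n-1)k}\right)^\top. \tag{4.1}$$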

We will now compute the eigenvalues and eigenvectors of Q explicitly. First, we see that

where . Define .

While, following Remark 2.4, we focused on the case where , the results of this section up to this point (Lemma 4.1, Corollary 4.2, and Lemma 4.3) hold for general .

Theorem 4.4.

Consider a linear SCR system $R$ over $\mathbb{C}$ with dimensions $(n, 1, d)$ and with the full-cycle permutation matrix C as its dynamical coupling. Let Q denote the metric tensor of the reservoir kernel under the past horizon $\tau = n$. Then the normalized eigenvectors $f_k$, $k = 0, \dots, n-1$, of C (Eq. (4.1)) are also eigenvectors of Q, with the corresponding eigenvalues equal to the squared projections of the input coupling vector w onto $f_k$. In other words, $R$ has motifs $f_k$, with motif weights given by these squared projections, $k = 0, \dots, n-1$.

Proof.

Define an matrix,

Then , and we have:

Here, by the assumption that , we can define two matrices and . Note that is the discrete Fourier transform matrix, so we know . Therefore,

Here, is a unitary matrix. The -th column of , which is precisely , is the eigenvector for ∎

Remark 4.5.

When , we can still reach a similar decomposition as above, but the matrix is an matrix. When is not an integer multiple of in particular, its rows may not be orthogonal to each other in general, so it is hard to characterize the eigenvectors of Q. However, when is an integer multiple of , the rows of F are orthogonal to each other. In this case, the eigenvectors of Q is given by the of these dimensional vectors .

As a result, in the case when $\tau = n$ and the matrix $W$ is a full-cycle permutation, the motif matrix for Q consists of the Fourier vectors of Eq. (4.1), i.e. it is precisely the discrete Fourier transform matrix. In other words, the columns of the motif matrix are precisely the Fourier basis.
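The statement of Theorem 4.4 can be checked numerically. The sketch below assumes a hypothetical random $\pm 1$ input vector and uses NumPy's sign convention for the DFT; it is an illustration rather than the authors' implementation. With unit-norm Fourier vectors, the eigenvalues of Q coincide with the squared magnitudes of the unnormalised DFT of the input vector, which are the squared projections onto the Fourier vectors up to the factor $n$ fixed by the normalisation convention.

```python
import numpy as np

n = 8                                     # hypothetical reservoir size
rng = np.random.default_rng(0)
w = rng.choice([-1.0, 1.0], size=n)       # hypothetical +/-1 input weights
C = np.roll(np.eye(n), 1, axis=0)         # full-cycle permutation, spectral radius 1

# Columns of Phi are w, Cw, ..., C^(n-1) w; Q = Phi^* Phi for past horizon n.
Phi = np.column_stack([np.linalg.matrix_power(C, j) @ w for j in range(n)])
Q = Phi.conj().T @ Phi

# Unitary DFT matrix: column k is a unit-norm Fourier vector.
F = np.fft.fft(np.eye(n)) / np.sqrt(n)

# F^* Q F is diagonal exactly when every Fourier vector is an eigenvector of Q.
D = F.conj().T @ Q @ F
off_diagonal = D - np.diag(np.diag(D))
motif_weights = np.diag(D).real
dft_power = np.abs(np.fft.fft(w)) ** 2
```

Because the input vector is real here, `dft_power[k]` equals `dft_power[n - k]`, which is the pairing of eigenvalues noted in Remark 4.6.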

Remark 4.6.

Notice that , so that the eigenvalues and eigenvectors of the motif matrix come in pairs. Here, and share the same eigenvalue .

5. Motifs of Orthogonal Dynamics with Unit Spectral Radius in Real Domain

In this section we return to the real domain, i.e. $\mathbb{F} = \mathbb{R}$, and show that at unit spectral radius, the motifs of SCR consist of a fixed number of symmetric and skew-symmetric vectors. As in [FLT24], we begin by deriving properties of the motif space of linear reservoir systems with orthogonal dynamical coupling and then move on to the special case of cyclic permutation.

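Let $W$ be the dynamical coupling matrix of a reservoir system $R$ over $\mathbb{R}$. Suppose W is orthogonal with spectral radius $\rho = 1$. We now show that the matrix $Q$ corresponding to the reservoir kernel is Toeplitz.

Q is Toeplitz if and only if $Q_{i,j} = Q_{i+1,j+1}$ for all $i, j$. By construction of Q, this is satisfied if and only if:

$$w^\top (W^\top)^{i-1} W^{j-1} w = w^\top (W^\top)^{i} W^{j} w.$$

Now, orthogonality of W implies $W^\top W = I$. Without loss of generality assume that $i \le j$. Then

$$w^\top (W^\top)^{i-1} W^{j-1} w = w^\top W^{j-i} w = w^\top (W^\top)^{i} W^{j} w,$$

showing that Q is indeed Toeplitz.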
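The Toeplitz property can be confirmed numerically for a generic orthogonal coupling. The sketch below assumes a hypothetical random orthogonal matrix obtained from a QR factorisation and a random real input vector; the sizes are arbitrary.

```python
import numpy as np

rng = np.random.default_rng(0)
n, tau = 6, 9                                        # hypothetical sizes
W, _ = np.linalg.qr(rng.standard_normal((n, n)))     # random orthogonal matrix
w = rng.standard_normal(n)                           # hypothetical input vector

# Columns of Phi are w, Ww, ..., W^(tau-1) w, and Q = Phi^T Phi.
cols = [w]
for _ in range(tau - 1):
    cols.append(W @ cols[-1])
Phi = np.column_stack(cols)
Q = Phi.T @ Phi

# Toeplitz: shifting both indices by one leaves the entry unchanged.
is_toeplitz = np.allclose(Q[:-1, :-1], Q[1:, 1:])

# Each diagonal with offset d equals w^T W^d w, as in the derivation above.
first_row = np.array([w @ np.linalg.matrix_power(W, d) @ w for d in range(tau)])
matches_first_row = np.allclose(Q[0], first_row)
```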

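Let J denote the $n \times n$ exchange matrix with $1$ on the antidiagonal and $0$ everywhere else:

$$J = [J_{i,j}]_{i,j=1}^{n}, \qquad J_{i,j} = \begin{cases} 1, & \text{if } i + j = n + 1, \\ 0, & \text{otherwise.} \end{cases}$$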

Lemma 5.1.

Symmetric Toeplitz square matrices are centrosymmetric.

Proof.

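Let T denote an $n \times n$ symmetric Toeplitz matrix. Let $t_{-(n-1)}, \dots, t_{n-1}$ denote the generating sequence of T such that T is expressed as:

$$T = [T_{i,j}]_{i,j=1}^{n}, \qquad T_{i,j} = t_{i-j}.$$

Consider:

$$(J T J)_{i,j} = T_{n+1-i,\, n+1-j} = T_{n+1-j,\, n+1-i} = T_{i,j}.$$

This shows that T satisfies the definition of centrosymmetry, $JTJ = T$. The second last equality is given by the symmetry of T and the last equality is due to T being Toeplitz.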

∎

Corollary 5.2.

Let W be the dynamical coupling matrix of a reservoir system $R$. If W is orthogonal with spectral radius $\rho = 1$, then Q is symmetric centrosymmetric.

Proof.

Q is symmetric by construction. Moreover, when W is orthogonal with spectral radius $\rho = 1$, Q is Toeplitz and hence centrosymmetric by Lemma 5.1. ∎

Definition 5.3.

Let $\mathbb{F}$ be an arbitrary field. An $n$-dimensional vector $v$ over $\mathbb{F}$ is symmetric if:

$$J v = v.$$

Similarly, $v$ is skew-symmetric if:

$$J v = -v.$$

By [CB76], a symmetric centrosymmetric matrix of order $n$ admits an orthonormal basis of eigenvectors with:

(1) $\lceil n/2 \rceil$ symmetric eigenvectors,

(2) $\lfloor n/2 \rfloor$ skew-symmetric eigenvectors.
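The eigenvector count above can be illustrated on a small example. The sketch below assumes a hypothetical random symmetric Toeplitz matrix, whose eigenvalues are distinct with probability one, so that each eigenvector returned by the solver is itself symmetric or skew-symmetric. With repeated eigenvalues the solver may return mixtures, and the result of [CB76] then only guarantees that a suitable basis exists.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 7                                          # hypothetical matrix order
t = rng.standard_normal(n)                     # generating sequence t_0, ..., t_{n-1}
idx = np.arange(n)
T = t[np.abs(idx[:, None] - idx[None, :])]     # symmetric Toeplitz: T[i, j] = t_|i-j|
J = np.fliplr(np.eye(n))                       # exchange matrix

is_centrosymmetric = np.allclose(J @ T @ J, T)

# Classify each eigenvector v by whether J v = v or J v = -v.
_, vecs = np.linalg.eigh(T)
n_sym = sum(bool(np.allclose(J @ v, v, atol=1e-8)) for v in vecs.T)
n_skew = sum(bool(np.allclose(J @ v, -v, atol=1e-8)) for v in vecs.T)
```

For $n = 7$ the counts are expected to be $\lceil 7/2 \rceil = 4$ symmetric and $\lfloor 7/2 \rfloor = 3$ skew-symmetric eigenvectors.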

5.1. Motif structure of cyclic permutation dynamics with unit spectral radius

Following Remark 2.4, we focus on the case where $\tau = n$ below. In the special case when the dynamical coupling is a cyclic permutation, the corresponding matrix Q is circulant. A circulant matrix is a specific type of Toeplitz matrix in which each row is a cyclic shift of the previous row, with elements shifted one position to the right.

The cyclic permutation can equivalently be expressed as the linear map:

| (5.1) |

Then by construction we have:

-

C.1

.

-

C.2

Theorem 5.4.

Let $W$ be the dynamical coupling matrix of a reservoir system $R$. If W is a cyclic permutation with spectral radius $\rho = 1$, then the metric tensor $Q$ of the reservoir kernel is circulant. Moreover, there exists an orthogonal basis such that $R$ has $\lceil n/2 \rceil$ symmetric motifs and $\lfloor n/2 \rfloor$ skew-symmetric motifs.

Proof.

Let denote the row of the matrix . It suffices to show that . By construction, for :

By C.1 and C.2, the component of can be rewritten as:

| (5.2) |

By Equation 5.1 and C.1, the component of can be written as:

which coincides with Equation 5.1 and thus is circulant.

Circulant matrices are Toeplitz. Since $Q$ is symmetric circulant, it is symmetric centrosymmetric of order $n$. Thus by [CB76], $Q$ admits an orthonormal basis spanning the motif space, consisting of $\lceil n/2 \rceil$ symmetric eigenvectors of $Q$ and $\lfloor n/2 \rfloor$ skew-symmetric eigenvectors of $Q$. ∎
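Theorem 5.4 can be illustrated constructively. The sketch below assumes a hypothetical random $\pm 1$ input vector and odd $n$, and uses half-sample-shifted cosines and sines as one explicit choice of symmetric and skew-symmetric eigenvectors; this particular choice of basis is made here for illustration and is not taken from the paper.

```python
import numpy as np

n = 7                                     # hypothetical (odd) reservoir size
rng = np.random.default_rng(0)
w = rng.choice([-1.0, 1.0], size=n)       # hypothetical +/-1 input weights
C = np.roll(np.eye(n), 1, axis=0)         # cyclic permutation, spectral radius 1
Phi = np.column_stack([np.linalg.matrix_power(C, j) @ w for j in range(n)])
Q = Phi.T @ Phi
J = np.fliplr(np.eye(n))                  # exchange matrix

# Circulant: shifting rows and columns cyclically by one leaves Q unchanged.
is_circulant = np.allclose(Q, np.roll(Q, (1, 1), axis=(0, 1)))

# Cosines and sines sampled at half-integer offsets are symmetric and
# skew-symmetric under index reversal, respectively.
j = np.arange(n)
sym, skew = [], []
for k in range(n // 2 + 1):
    c = np.cos(2 * np.pi * k * (j + 0.5) / n)
    s = np.sin(2 * np.pi * k * (j + 0.5) / n)
    if np.linalg.norm(c) > 1e-9:
        sym.append(c / np.linalg.norm(c))
    if np.linalg.norm(s) > 1e-9:          # the k = 0 sine vanishes and is skipped
        skew.append(s / np.linalg.norm(s))
S, K = np.column_stack(sym), np.column_stack(skew)
B = np.column_stack([S, K])

G = B.T @ Q @ B                           # diagonal iff columns of B are eigenvectors
```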

6. Linear SCR at unit spectral radius is Fourier transform

This section bridges the theoretical results and numerical experiments by explicitly constructing the Fourier basis matrix corresponding to a linear SCR over . We present numerical simulations to validate our theoretical findings and outline the components of the numerical experiments in the final section.

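Combining the results of the previous sections, we conclude that, at unit spectral radius, the motifs of a linear SCR over $\mathbb{R}$ with $n$ neurons and look-back window $\tau = n$ are:

(R.1) harmonic functions (Section 4), and

(R.2) either symmetric or skew-symmetric (Section 5).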

We now demonstrate the explicit construction of the Fourier basis matrix corresponding to the motifs of a linear SCR at unit spectral radius.

For a linear reservoir system over , the motifs must also be real. By conditions R.1 and R.2, each motif must then correspond to either the real or imaginary parts of the Fourier basis. More precisely, for and (The ceiling function accounts for Theorem 5.4.), the real Fourier basis matrix is defined as

| (6.1) | ||||

We claim that the motifs of a linear SCR are exactly the first columns of F.
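The claim can be probed numerically with a real Fourier basis. The sketch below uses one common convention (unit-norm cosine and sine columns ordered by frequency), which may differ from Equation (6.1) in ordering, phase and normalisation; the function `real_fourier_basis` and the random $\pm 1$ input vector are assumptions made for illustration.

```python
import numpy as np

def real_fourier_basis(n):
    """Unit-norm columns cos_0, cos_1, sin_1, cos_2, sin_2, ... and their frequencies."""
    j = np.arange(n)
    cols, freqs = [], []
    for k in range(n // 2 + 1):
        for f in (np.cos, np.sin):
            v = f(2 * np.pi * k * j / n)
            if np.linalg.norm(v) > 1e-9:      # skips sin_0 and, for even n, sin_{n/2}
                cols.append(v / np.linalg.norm(v))
                freqs.append(k)
    return np.column_stack(cols), np.array(freqs)

n = 10                                    # hypothetical reservoir size
rng = np.random.default_rng(0)
w = rng.choice([-1.0, 1.0], size=n)       # hypothetical +/-1 input weights
C = np.roll(np.eye(n), 1, axis=0)         # cyclic permutation, spectral radius 1
Phi = np.column_stack([np.linalg.matrix_power(C, j) @ w for j in range(n)])
Q = Phi.T @ Phi                           # metric tensor for past horizon n

B, freqs = real_fourier_basis(n)
weights = np.abs(np.fft.fft(w))[freqs] ** 2   # weight of each basis column

is_orthonormal = np.allclose(B.T @ B, np.eye(n))
are_eigenvectors = np.allclose(Q @ B, B * weights)
same_spectrum = np.allclose(np.sort(weights), np.linalg.eigvalsh(Q))
```

A numerical eigen-solver applied to Q may return a rotated or phase-shifted pair within each two-dimensional eigenspace, so individual computed motifs need not coincide with individual columns of `B` even though they span the same subspaces.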

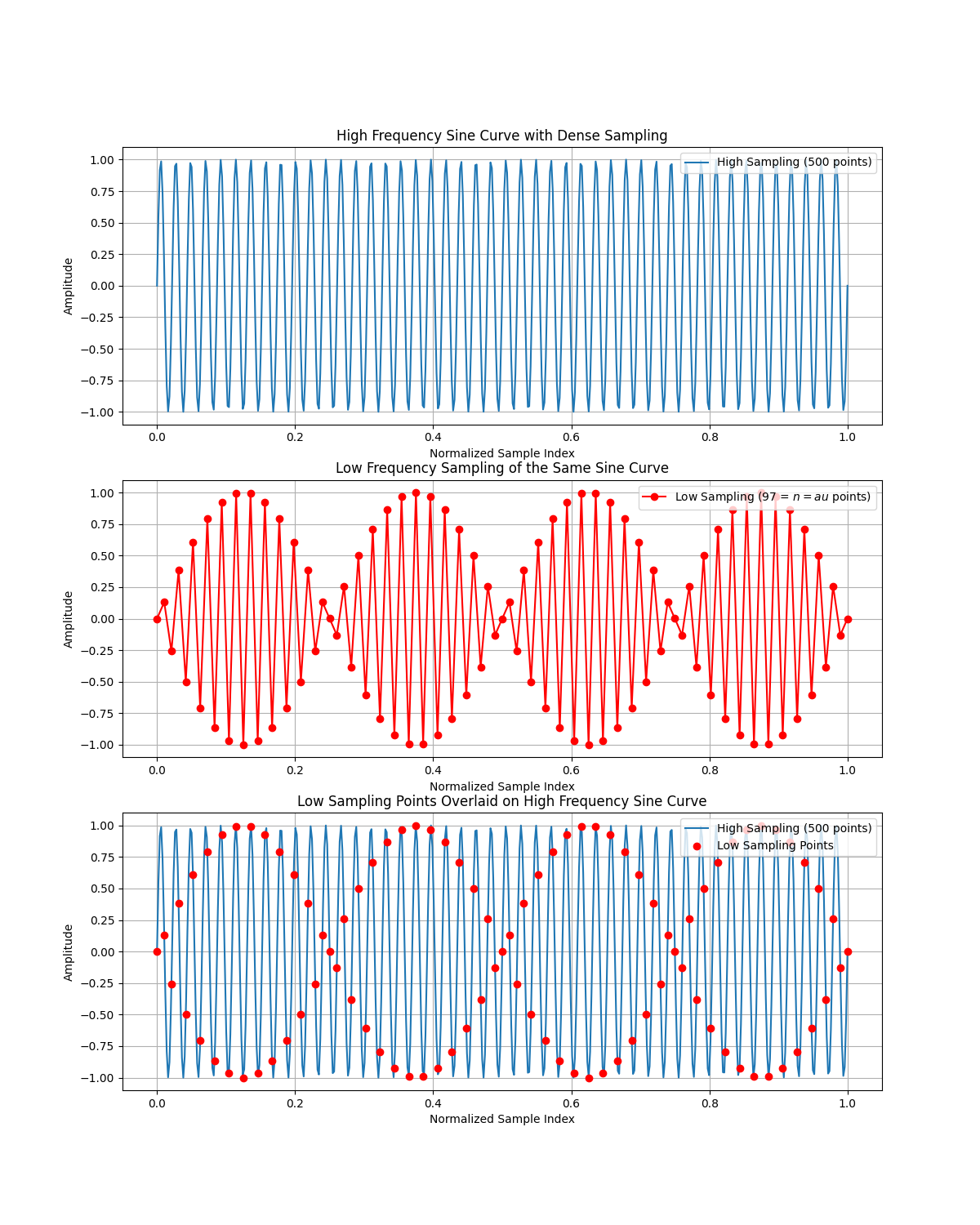

In Figure 3 and Figure 4, we present the Fourier analysis of motifs of the SCR at unit spectral radius when .

In Figure 4, we present examples of randomly chosen motifs and their corresponding Fourier basis vectors in F. While some motifs are shifted by a fixed phase or reflected relative to their corresponding Fourier basis vectors, their Fourier spectra align, as illustrated in Figure 3. Furthermore, in Figure 3 we observe that the eigenvalues come in pairs, as discussed in Remark 4.6. The motifs may not be strictly harmonic in the classical sense due to the coarseness of the discretization grid (non-integer division of the period); see Remark 6.1 below.

Remark 6.1.

In practice, motifs represent harmonic functions sampled at specific frequencies. This explains why certain motifs may not visually appear as harmonic functions (e.g., motif 93 in Figure 4), yet their Fourier spectra reveal characteristics consistent with harmonic functions (see Figure 3). The effect of sampling is further demonstrated in Figure 5, which compares the 93rd Fourier basis vector generated according to Equation 6.1 at two different sampling sizes.

In the first plot, the sine curve is sampled at a high frequency with 500 sampling points, whereas the second plot uses the smaller default number of sampling points. The third plot shows that both are generated by the exact same function, sampled at different frequencies. Notice that the first plot appears to be harmonic but the second one does not.

Therefore, while this function may not visually resemble a harmonic function at the default sampling size, increasing the sampling size to 500 makes the function progressively align with the visual properties of a harmonic function.

The reason the Fourier spectra align with those of harmonic functions in the computational process is due to the matching of sampling frequencies within the FFT algorithm.
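The sampling effect described in this remark can be reproduced with a short sketch. The values of `n` and `k` below are hypothetical and chosen only for illustration; the value 500 for the dense grid follows the text. A high-frequency sine sampled on a coarse grid looks irregular in the time domain, yet its FFT still consists of a single peak, and the coarse samples are a subset of the densely sampled curve.

```python
import numpy as np

n, k, fine = 100, 46, 500          # hypothetical coarse size and frequency index

def harmonic(num_points):
    """Samples of sin(2 pi k t) on a uniform grid over one unit interval."""
    t = np.arange(num_points) / num_points
    return np.sin(2 * np.pi * k * t)

coarse, dense = harmonic(n), harmonic(fine)

# Every (fine // n)-th dense sample coincides with a coarse sample.
shared_samples = np.allclose(dense[:: fine // n], coarse)

# The FFT magnitude of the coarse samples has a single peak at bin k.
spectrum = np.abs(np.fft.rfft(coarse))
peak_bin = int(np.argmax(spectrum))
energy_in_peak = spectrum[peak_bin] ** 2 / np.sum(spectrum ** 2)
```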

7. Illustrative Examples on Time Series Forecasting

We conclude the paper with numerical experiments illustrating our findings. We compare time series forecasting results using the so-called Reservoir Motif Machines (RMM) [TFL24] and the linear SCR. RMM is a simple time series forecasting method based on the feature space representation of time series derived from linear reservoirs and has demonstrated remarkable predictive performance, even surpassing that of more complex transformer models on univariate time series forecasting tasks. A brief exposition of RMM is presented in Section 2.

We use RMM because it allows us to explicitly define the feature space representation by imposing a set of motifs, rather than having the feature space representation implicitly defined as in classical ESNs, such as SCR. This provides an ideal platform to showcase our theoretical findings: that the feature space representation of the motif space of a linear SCR is the same as that defined by the Fourier basis matrix F in Equation 6.1.

For reproducibility, all experiments are CPU-based and were performed on an Apple M3 Max with 128GB of RAM. The source code and data of the numerical analysis are openly available at https://github.com/Lampertos/motif_Fourier.

We compare the prediction results on univariate time series forecasting across the following models:

(1) Lin-RMM with SCR motifs and unit spectral radius,

(2) Lin-RMM with Fourier basis motifs as discussed in Section 6, and

(3) Linear SCR with unit spectral radius.

It is essential to emphasize that this experiment is not intended to showcase the predictive capability of the models, but rather to highlight the similarities in feature space representation between the linear SCR model over $\mathbb{R}$ and the Fourier basis motifs introduced in Section 6. Consequently, hyperparameters are kept constant throughout the experiments to underscore this feature space comparison.

The fixed set of hyperparameters for all experiments are as follows: for input weights, for the number of reservoir neurons, and for the look-back window length. All models are trained using ridge regression with a ridge coefficient of . The prediction horizon is set to .

7.1. Datasets

To facilitate the comparison of our results with the state of the art, we have used the same datasets and the same experimental protocols as the recent time series forecasting paper [ZZP+20]. These are briefly described below for the sake of completeness.

ETT

The Electricity Transformer Temperature dataset (https://github.com/zhouhaoyi/ETDataset) consists of measurements of oil temperature and six external power-load features from transformers in two regions of China. The data was recorded for two years, and measurements are provided either hourly (indicated by ’h’) or every 15 minutes (indicated by ’m’). In this paper we used the oil temperature of the ETTh1 and ETTm1 datasets for univariate prediction, with a train/validation/test split of 12/4/4 months.

ECL

The Electricity Consuming Load dataset (https://archive.ics.uci.edu/dataset/321/electricityloaddiagrams20112014) consists of hourly measurements of electricity consumption in kWh for 321 Portuguese clients during two years. In this paper we used client MT 320 for univariate prediction. The train/validation/test split is 15/3/4 months.

Weather

The Local Climatological Data (LCD) dataset (https://www.ncei.noaa.gov/data/local-climatological-data/) consists of hourly measurements of climatological observations for 1600 weather stations across the US during four years. The dataset was used for univariate prediction of the Wet Bulb Celsius variable.

7.2. Discussion

From Figure 6 and Figure 7 we see that there is virtually no difference between Lin-RMM with unit spectral radius SCR motifs and Lin-RMM with the Fourier basis. This confirms our observations in the previous sections. Furthermore, Figure 6 also confirms that Lin-RMM with unit spectral radius SCR motifs (red bar) outperforms the classical SCR with unit spectral radius, in agreement with [TFL24].

Among the RMMs, we compare the MSE loss in Figure 7. The difference in MSE loss between RMM under Fourier motifs and RMM under SCR motifs is negligible compared to the MSE loss of the models against standardized input signals.

8. Conclusion

Linear recurrent neural networks (RNN), such as Echo State Networks (ESN), can be thought of as providing feature representations of the input-driving time series in their state space [Tin20, GGO22]. By endowing the feature (state) space with the canonical dot-product, one can “reverse engineer” the corresponding inner product in the space of time series (time series kernel) that is defined through the dot product in the RNN state space [Tin20]. This in turn helps to shed light on the inner representational schemes employed by the RNN to process the input-driving time series. In particular, the induced (semi-)inner product in the time series space can be theoretically analyzed through eigen-decomposition of the corresponding metric tensor. The eigenvectors (time series motifs) define the projection basis of the induced feature space, and the (decay of) eigenvalues determines its dominant subspace and effective dimensionality. The time series kernels induced by the Simple Cycle Reservoir (SCR) models were shown to be superior (in terms of dimensionality, motif variability, and memory) to several alternative ESN constructions [Tin20].

In this paper we have shown a rather surprising result: when SCR is constructed at the edge of stability, the basis of its induced time series feature space corresponds to the well-known and widely used basis for signal decomposition, namely the Fourier basis.

This insight also explains the reduction in relative area covered by Fourier representations of SCR motifs observed by [Tin20] at the edge of stability. Our results imply that the feature space representation of a linear SCR at unit spectral radius effectively performs a weighted projection onto the Fourier basis.

This observation is supported by numerical experiments, in which we compared the time series forecasting accuracy of Lin-RMM with the motif space defined by a linear SCR at unit spectral radius and the Fourier basis, respectively.

References

- [BVdSBV22] Ian Bauwens, Guy Van der Sande, Peter Bienstman, and Guy Verschaffelt. Using photonic reservoirs as preprocessors for deep neural networks. Frontiers in Physics, 10:1051941, 2022.

- [CB76] A. Cantoni and P. Butler. Eigenvalues and eigenvectors of symmetric centrosymmetric matrices. Linear Algebra and its Applications, 13(3):275–288, 1976.

- [FLT24] Robert Simon Fong, Boyu Li, and Peter Tiňo. Universality of real minimal complexity reservoir. arXiv preprint arXiv:2408.08071, 2024.

- [GGO22] Lukas Gonon, Lyudmila Grigoryeva, and Juan-Pablo Ortega. Reservoir kernels and volterra series. arXiv preprint arXiv:2212.14641, 2022.

- [HVK+20] Krishan Harkhoe, Guy Verschaffelt, Andrew Katumba, Peter Bienstman, and Guy Van der Sande. Demonstrating delay-based reservoir computing using a compact photonic integrated chip. Optics express, 28(3):3086–3096, 2020.

- [Jae01] H. Jaeger. The “echo state” approach to analysing and training recurrent neural networks. Technical Report GMD Report 148, German National Research Center for Information Technology, 2001.

- [LBFM+17] Laurent Larger, Antonio Baylón-Fuentes, Romain Martinenghi, Vladimir S Udaltsov, Yanne K Chembo, and Maxime Jacquot. High-speed photonic reservoir computing using a time-delay-based architecture: Million words per second classification. Physical Review X, 7(1):011015, 2017.

- [LFT24] Boyu Li, Robert Simon Fong, and Peter Tiňo. Simple Cycle Reservoirs are Universal. Journal of Machine Learning Research, 25(158):1–28, 2024.

- [LJ09] M. Lukosevicius and H. Jaeger. Reservoir computing approaches to recurrent neural network training. Computer Science Review, 3(3):127–149, 2009.

- [MNM02] W. Maass, T. Natschlager, and H. Markram. Real-time computing without stable states: a new framework for neural computation based on perturbations. Neural Computation, 14(11):2531–2560, 2002.

- [RT10] Ali Rodan and Peter Tiňo. Minimum complexity echo state network. IEEE transactions on neural networks, 22(1):131–144, 2010.

- [TD01] P. Tiňo and G. Dorffner. Predicting the future of discrete sequences from fractal representations of the past. Machine Learning, 45(2):187–218, 2001.

- [TFL24] Peter Tiňo, Robert Simon Fong, and Roberto Fabio Leonarduzzi. Predictive modeling in the reservoir kernel motif space. In 2024 International Joint Conference on Neural Networks (IJCNN), pages 1–8, 2024.

- [Tin20] Peter Tiňo. Dynamical systems as temporal feature spaces. Journal of Machine Learning Research, 21(44), 2020.

- [ZZP+20] Haoyi Zhou, Shanghang Zhang, Jieqi Peng, Shuai Zhang, Jianxin Li, Hui Xiong, and Wan Zhang. Informer: Beyond efficient transformer for long sequence time-series forecasting. In AAAI Conference on Artificial Intelligence, 2020.