Linear MIMO Precoders Design for Finite Alphabet Inputs via Model-Free Training

Abstract

This paper investigates a novel method for designing linear precoders with finite alphabet inputs based on autoencoders (AE) without the knowledge of the channel model. By model-free training of the autoencoder in a multiple-input multiple-output (MIMO) system, the proposed method can effectively solve the optimization problem to design the precoders that maximize the mutual information between the channel inputs and outputs, when only the input-output information of the channel can be observed. Specifically, the proposed method regards the receiver and the precoder as two independent parameterized functions in the AE and alternately trains them using the exact and approximated gradient, respectively. Compared with previous precoders design methods, it alleviates the limitation of requiring the explicit channel model to be known. Simulation results show that the proposed method works as well as those methods under known channel models in terms of maximizing the mutual information and reducing the bit error rate.

Index Terms:

Autoencoders, deep learning, finite alphabet, linear precoders, MIMO.I Introduction

Linear precoding prevails as a key technology in multiple-input multiple-output (MIMO) systems, which can improve both the transmission rate and communication quality [1]. To achieve the MIMO system capacity, various precoding methods based on the Gaussian channel input assumption have been proposed. Among them, water-filling (WF) [2] has been proved to be the theoretically optimal precoding method, which can achieve the channel capacity. However, in practical systems, channel inputs are usually drawn from finite alphabets, such as phase-shift keying (PSK) signals, quadrature-amplitude modulation (QAM) signals, etc. Therefore, those methods based on the Gaussian input assumption would inevitably cause performance loss, making it more valuable to study the design methods of precoders taking into account the impacts of finite alphabets inputs [3]. Under this premise, the precoding design goal is usually to maximize the channel input-output mutual information [3]. For instance, [3] proposes mercury/water-filling (MWF), which is an optimal power allocation method for independent parallel additive white Gaussian noise (AWGN) channel. Also, [4] have proved that the mutual information is a concave function with respect to (w.r.t.) the squared singular values of the precoding matrix if its right singular vectors are fixed. Thus, for a general vector Gaussian channel, the design of precoding matrix can be reduced to the power distribution matrix design and the right singular matrix design by the singular value decomposition (SVD) of the precoding matrix [5].

In recent years, due to the widespread use of machine learning (ML), the transmitter and receiver in a communication system based on autoencoders (AE) can be optimized in pairs, rather than the separated approach as in traditional methods, which helps achieve better performance over the whole system [6]. Particularly, [7] has proved that the process of AE training on MIMO precoders and receivers can maximize the mutual information, by properly selecting the activation function and loss function. It is worth mentioning that the time complexity in the optimization process can be reduced significantly [7], as the network training process avoids the explicit calculation of the mutual information and its gradient.

Despite the promised potential performance gain, these aforementioned precoding design methods are based on the assumption that a complete channel model can be obtained at the transmitter. In fact, on the one hand, the channel model in practical systems is hard to derive or estimate and only the input and output data can be observed; on the other hand, channel estimation with errors would inevitably occur and lead to performance loss[8]. As such, [9] proposes an alternating model-free algorithm for training AEs in point-to-point single-input single-output (SISO) communication systems without channel models. Unfortunately, [9] focuses only on the AWGN and Rayleigh block-fading channels without considering the role of precoding. In addition, it does not make the channel inputs be drawn from finite alphabets. Instead, it sends the message directly to the channel with a normalized energy through the neural networks (NN). Therefore, this method is difficult to be applied in practical MIMO systems.

In this paper, a novel AE-based design method for linear MIMO precoders with finite alphabet inputs is presented, which can be applied when the specific channel model is unknown. In such cases, the channels are non-differentiable and thus conventional gradient-based training through backpropagation is not applicable, e.g., [7]. To circumvent this problem, we provide an iterative training algorithm to optimize the precoder and the receiver, which can be regarded as two independent parameterized functions. This model-free algorithm iterates between training receivers with the true gradients and training precoders with the estimated gradients by treating the channel inputs as random variables.

To summarize, the main contributions of this paper are as follows: i) By alleviating the limitation of existing precoding design schemes in requiring a complete channel model, precoders can be designed under directly observable channel information, which avoids the bad influence of channel modeling error on the precoders design; ii) Jointly optimizing the receiver and the precoder in MIMO systems for the practical channel information. Simulation results show that the proposed method can achieve similar performance to the model-based algorithm in maximizing the mutual information and has admitted a good performance in reducing the bit error rate (BER).

Notations: Boldface uppercase (lowercase) letters denote matrices (column vectors). denotes the identity matrix. is the set of real (complex) numbers. and denote the real part and the imaginary part of the complex matrix, respectively. is the Gaussian distribution with mean and covariance . denotes the information entropy of the random variables. represents the expectation w.r.t. . denotes the trace of the matrix. means a block diagonal matrix. The gradient and Jacobian operators w.r.t. the set of parameters are both denoted by ; and the superscripts , , and represent transpose, conjugate transpose operations, and -norm, respectively.

II System Model

Considering a MIMO communication system which has antennas at the transmitter and antennas at the receiver, the received signal can be expressed as:

| (1) |

where is the channel matrix, is the linear precoder, is the circularly symmetric white Gaussian noise whose covariance matrix is , and is the input signal of zero mean and covariance . Based on the assumption of finite alphabet inputs, the input signal is equiprobably drawn from a set of discrete constellations such as -ary PSK or QAM with .

Aiming at maximizing the mutual information between channel input and output , the design problem of a precoder with the finite alphabet inputs can be formulated as:

| (2) |

where the mutual information can be described as [10]:

| (3) |

with

III Algorithm Design

The MIMO communication system based on the AE presented in this paper includes three parts: the transmitter, the channel, and the receiver, as shown in Fig. 1. First, the transmitter part is equivalent to the signal modulation and precoding process. Second, the channel corresponds to a random system [9], whose output follows , i.e., a conditional probability distribution w.r.t. input . In the AE, it can be regarded as a layer of untrainable network parameters, which transmits signals to the receiver through forward propagation. Finally, the receiver recovers the transmitted information from the received signal through NNs. In this paper, both the precoding and receiver network are the parts to be optimized by minimizing the loss function.

III-A Model-free Training Process Overview of Precoders Design based on AE

In the proposed method, we regard the precoder and the receiver as two independent parameterized functions to optimize: (i) the precoder is presented by the function , where is the parameter matrix of the precoder; (ii) the receiver is implemented as , where is the parameter vector of receivers, and is the probability vector of the transmitted information. Since the NN implementation is limited to the range of real numbers, in this paper, the real and imaginary parts of complex signals involved need to be reshaped into real number vectors before further processing.

Considering the channel follows a conditional probability distribution w.r.t. the channel input, the loss function of the system can be expressed as:

| (4) |

where represents the precoded signal, denotes the received signal, as well as the channel output, and is the sample loss function defined as the categorical cross-entropy (CE) [9, 7] between the th input signal and the th received signal , is the batch size of training samples. The approximation in (4) means that we can use the sample mean to estimate the mathematical expectation.

Then, the corresponding optimization problem is formulated as:

| (5) |

When Softmax is adopted as the activation function of the output layer, inspired by [7], then we have

| (6) |

Since , the problem in (5) is nearly the same as the problem in (2), i.e., the precoder obtained by training the AE can also maximize the mutual information.

The training process requires the derivative of the loss function, i.e.,. To enable the training for the proposed framework in the absence of the channel model, inspired by [9], we present an alternating training method with the exact gradient and the approximated gradient, respectively, as shown in Algorithm 1. It should be noted that since the loss function in this algorithm is defined as a categorical CE function, the transmitted information should be in the form of one-hot and then mapped to corresponding discrete constellation points, as shown in Fig. 1. We adopt the Adam optimizer [13] for training.

III-B Receiver Training

According to (4), the gradient of w.r.t. can be expressed as:

| (7) |

It can be seen that there is no need to acquire the channel model, as the calculation process only needs to sample the received signal.

Algorithm 2 is the detailed algorithm for training the receiver. First, the signal source randomly generates a batch of one-hot transmitted information , which is a -by- matrix and then mapped to the finite alphabet input signals . Next, the signals are multiplied by to obtain the precoded signals , and then enters the channel for transmission. The receiver acquires the channel output . After is fed to the receiver network, a batch of probability matrix over the transmitted information are given for the calculating loss . Finally, can perform a one-step update using by (7). As the channel model is unknown, lines 4, 5 in Algorithm 2 only carry out forward propagation and do not need to record the gradient.

III-C Precoder Training

According to (4), the gradient of w.r.t. is:

| (8) |

Since the channel model is unknown and cannot be calculated, inspired by [9], we resort to another approach that relax the channel input into a random variable , which follows a distribution of , where refers to the delta distribution. The position of the relaxation operation in the algorithm process is shown in the dotted box in Fig. 1. Then, the relaxed system loss can be expressed as:

| (9) |

Besides, can be approximated by the following expression:

| (10) |

where represents the approximated probability distribution function of , whose variance is , since is non-differentiable. As such, the function requiring the derivative of is subtly transformed from the unknown channel model to the known distribution . Through the estimated gradient in (10), we can complete the training process of precoders without the need of the channel model.

Algorithm 3 is the detailed algorithm for training the precoder. The signal source randomly generates the transmitted information and maps them to the input signals . After precoding, can be obtained. For the approximated gradient calculation, it is necessary to relax into random variables , and then sends the random variables to the channel for transmission to obtain the received signals . The receiver then establishes the probability matrix of the received signals over the transmitted information and then calculates the per-sample loss . However, at this time, does not directly calculate the gradient of , as shown in (10), and should be used to calculate the approximated gradient. Then, can perform a step update through , which still needs to satisfy the power constraints of the precoders. In order to ensure that can be estimated and receiver parameters are not affected, lines 6, 7 in Algorithm 3 only carry out forward propagation and do not need to record the gradient. In other words, the channel in this algorithm is only used to observe its input and the corresponding output, and the complete channel model itself does not participate in the precoders design process.

III-D Network Structure

Fig. 2 shows the network structure of the algorithm proposed in this paper, where Fig. 2 is the precoding part and Fig. 2 is the receiver part. As shown in the figure, the precoding matrix is implemented by a linear layer without bias, whose parameters are . The network structure of the receiver is mainly composed of two parts: the first one is the “equalization” network, which consists of two linear layers, and the activation function between is the function; the second one is the decision network, which is composed of multiple linear layers, between which the activation function is ReLU, and Softmax at the output layer. It should be noted that since the proposed method is used in the case of unknown channel models, the receiver may show poor performance [9] without prior information. Therefore, in order to improve the accuracy of the receiver, the “equalization” network is added to extract part of implicit prior channel information from the received signals. Then, it calculates the product of and the received signal , similar to the channel equalization in traditional communication systems. Next, the received signals after “equalization” can be exploited to establish the probability matrix over the input information through the decision network, and finally the loss function and the corresponding gradient can be calculated to train the whole network. It should be noted that is just an intermediate variable of the receiver, which does not correspond strictly to the true channel model. Instead, it can be regarded as the implicit channel information, explaining the reason why such a structure can improve the performance of the receiver. The method itself does not need to assume the channel model in advance.

III-E Improvement and Some Applications of the Proposed Linear Precoders Design Method

As described in Section III-A, when Softmax and the categorical CE are adopted, the sizes of layers in the AE grow exponentially with the number of , which is very expensive in large systems. Therefore, inspired by [7], we use bits to represent one training sample. Then, the loss function and the activation function at the last layer of the receiver will be adjusted to binary CE function and Sigmoid accordingly. Such a choice may make the process of training AE equivalent to maximizing the lower bound of mutual information [7]. Even so, the proposed method is easier to generalize to more complex scenarios, such as MIMO Multiple Access Channels (MAC) with finite discrete inputs, MIMO orthogonal frequency-division multiplexing (MIMO-OFDM) systems, etc. The accurate channel models of these systems are complex, while the assumptions of the channel models are relatively simple in the traditional research methods, or the channel model contains some non-differentiable components (such as preamble insertion in MIMO-OFDM), so the simulation results may differ greatly from the actual performance. The proposed method without the knowledge of an accurate channel model would have inherent advantages in these systems and may alleviate this problem to some extent.

For example, in a -user MIMO MAC communication system, considering the signal model:

| (11) |

where represents the complex channel matrix between the th transmitter and the receiver; is each user’s precoding matrix; contains the signal of all transmitters, assuming of different users are independent from each other; the receiver noise , and . Suppose there are antennas at the receiver and each user has transmit antennas.

According to [14], the boundary of the constellation-constrained capacity region can be characterized by the solution of the sum rate optimization problem. Then, the proposed precoders design methods can be applied by: i) using bits to represent one training target; ii) the parameter of the precoders is adjusted to Bdiag, where ; iii) the receiver function correspondingly becomes as . Since the channel model of each user is unknown, the precoder and receiver parts still need the true and estimated gradients for training, respectively. It should be noted that because the optimal precoders of different users depend on each other [14], we should iteratively optimize one user’s precoder at a time with others fixed.

IV Numerical Results

In this section, simulation results are provided to evaluate the performance of the proposed method of linear precoders design, in terms of maximizing the mutual information and the impacts on the BER of the MIMO communication system, compared with some existing design approaches.

In the receiver part of the AE, the decision network is parameterized with 3 dense layers with size 128 each in this experiment while the “eqalization” part has 2 dense layers with size 64 each. The maximum iteration number of the Algorithm 1 is set as 5000 and the batchsize . The learning rate for Adam step on the receiver and precoder training are both set as . Also, the SNR is defined as .

When training the precoder, we utilize the normal distribution with mean and variance to relax the channel input, i.e., , where and the variance will be appropriately selected to adapt different scenarios. The smaller the variance is, the estimated gradient can be more accurate, but it would lead to a slower convergence rate, which would reflect in the mutual information fluctuation of the low SNR region. However, when the variance is larger, the algorithm may converge to the local optimum, and the mutual information in the high SNR regime would have a significant loss. Therefore, in this paper, is selected from .

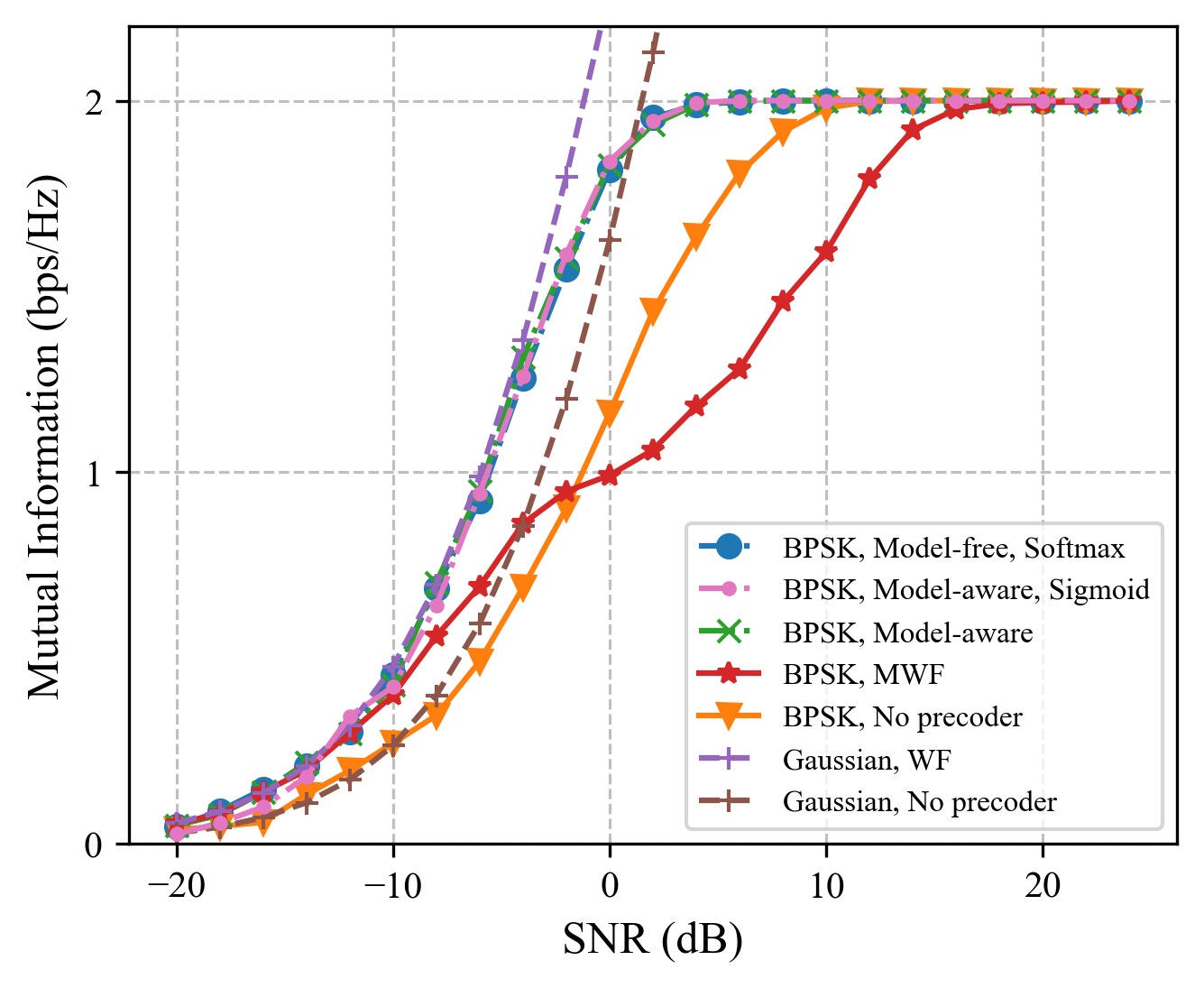

Fig. 3 shows the mutual information results of several precoder design methods with different channels and modulation orders, where and . We regard the DNN-based precoders design scheme with the complete channel model proposed by [7] as the model-aware method, compared with the model-free method proposed in this paper. Analyzing Fig. 3, in the low SNR region, the mutual information maximization problem is approximated to the power allocation problem. With the SNR increasing, the power allocation method MWF has a large loss for giving up the part of searching space related to the right singular matrix of the precoder. In this case, the precoders design method based on AE shows a large gain and the model-free method can be almost consistent with the model-aware method since the estimated gradient in this approach is close to the true one as much as possible by adjusting . It can also be seen that the performance of the model-free method with Sigmoid is slightly worse than that with Softmax, because it maximizes the lower bound of the mutual information rather than the mutual information itself.

Fig. 4 shows the BER performance of the proposed method with and BPSK modulation. In the simulation, the (648, 486) low-density parity-check (LDPC) code in the IEEE 802.11 is adopted for error correction and the maximum a posterior probability (MAP) criterion is used for detection. The iteration between the MAP detector and the LDPC decoder is 5. In this simulation, both the model-free and the model-aware methods are trained at SNR dB. We observe that the performance of the model-free (no matter with Softmax or Sigmoid activation function) methods approach the BER of the model-aware method closely, both of which have a significant gain over the case of MWF or no precoder. This is consistent with the conclusion of the mutual information results.

V Conclusion

In this paper, we solved the linear precoding design problem for finite alphabet inputs in MIMO systems without a channel model. Taking the advantages of the model-free network based AE, the alternating training on the receiver and the precoder was performed through the exact and estimated gradients, respectively. The proposed method obtained not only the precoders for maximizing the mutual information between channel inputs and outputs, but also the corresponding receiver, alleviating the high requirement of the existing methods on the perfect channel state information. The simulation results showed that this no-channel-model design method achieved the performance of the complete-channel-model design method in terms of mutual information and bit error rate, offering practical insights for linear MIMO precoders design.

References

- [1] N. Fatema, G. Hua, Y. Xiang, D. Peng, and I. Natgunanathan, “Massive MIMO linear precoding: A survey,” IEEE Syst. J, vol. 12, no. 4, pp. 3920–3931, Dec. 2018.

- [2] G. Scutari, D. P. Palomar, and S. Barbarossa, “The MIMO iterative waterfilling algorithm,” IEEE Trans. Signal Process., vol. 57, no. 5, pp. 1917–1935, May 2009.

- [3] A. Lozano, A. M. Tulino, and S. Verdaú, “Optimum power allocation for parallel Gaussian channels with arbitrary input distributions,” IEEE Trans. Inf. Theory, vol. 52, no. 7, pp. 3033–3051, Jul. 2006.

- [4] M. Payaró and D. P. Palomar, “On optimal precoding in linear vector Gaussian channels with arbitrary input distribution,” in Proc. IEEE Int. Symp. Inf. Theory, Seoul, Korea (South), 2009, pp. 1085–1089.

- [5] A. A. Lu, X. Gao, Y. R. Zheng, and C. Xiao, “Linear precoder design for SWIPT in MIMO broadcasting systems with discrete input signals: Manifold optimization approach,” IEEE Trans. Commun., vol. 65, no. 7, pp. 2877–2888, Apr. 2017.

- [6] T. O’Shea and J. Hoydis, “An introduction to deep learning for the physical layer,” IEEE Trans. Cogn. Commun. Netw., vol. 3, no. 4, pp. 563–575, Dec. 2017.

- [7] S. Jing and C. Xiao, “Linear MIMO precoders with finite alphabet inputs via stochastic optimization and deep neural networks (DNNs),” IEEE Trans. Signal Process., vol. 69, pp. 4269–4281, Jul. 2021.

- [8] S. Dörner, S. Cammerer, J. Hoydis, and S. T. Brink, “Deep learning based communication over the air,” IEEE J. Sel. Topics Signal Process., vol. 12, no. 1, pp. 132–143, Feb. 2018.

- [9] F. A. Aoudia and J. Hoydis, “Model-free training of end-to-end communication systems,” IEEE J. Sel. Areas Commun., vol. 37, no. 11, pp. 2503–2516, Nov. 2019.

- [10] C. Xiao, Y. R. Zheng, and Z. Ding, “Globally optimal linear precoders for finite alphabet signals over complex vector Gaussian channels,” IEEE Trans. Signal Process., vol. 59, no. 7, pp. 3301–3314, Jul. 2011.

- [11] Y. R. Zheng, M. Wang, W. Zeng, and C. Xiao, “Practical linear precoder design for finite alphabet multiple-input multiple-output orthogonal frequency division multiplexing with experiment validation,” IET Commun., vol. 7, no. 9, pp. 836–847, Jun. 2013.

- [12] T. Ketseoglou and E. Ayanoglu, “Zero-forcing per-group precoding for robust optimized downlink massive MIMO performance,” IEEE Trans. Commun., vol. 67, no. 10, pp. 6816–6828, Jul. 2019.

- [13] D. P. Kingma and J. Ba, “ADAM: A method for stochastic optimization,” in Proc. Int. Conf. Learn. Representations, Banff, Canada, 2014.

- [14] M. Wang, W. Zeng, and C. Xiao, “Linear precoding for MIMO multiple access channels with finite discrete inputs,” IEEE Trans. Wireless Commun., vol. 10, no. 11, pp. 3934–3942, Nov. 2011.