Likelihood-free Inference with Mixture Density Network

Abstract

In this work, we propose using the mixture density network (MDN) to estimate cosmological parameters. We test the MDN method by constraining parameters of the CDM and CDM models using Type Ia supernovae and the power spectra of the cosmic microwave background. We find that the MDN method can achieve the same level of accuracy as the Markov Chain Monte Carlo method, with a slight difference of . Furthermore, the MDN method can provide accurate parameter estimates with forward simulation samples, which are useful for complex and resource-consuming cosmological models. This method can process either one data set or multiple data sets to achieve joint constraints on parameters, extendable for any parameter estimation of complicated models in a wider scientific field. Thus, the MDN provides an alternative way for likelihood-free inference of parameters.

2022 September 2

1 Introduction

In research on cosmology, precise parameter estimation is one of the most important steps to understanding the physical processes in the universe. The most commonly used method for parameter estimation is the Markov Chain Monte Carlo (MCMC) method. However, we often encounter scenarios where although we can generate simulated data through forward simulations, the likelihood is intractable in practice. In addition, simulations may consume a lot of time and computational resources, making parameter inference unpragmatic—for example, for physics related to nonlinear structure formation on small scales (Springel, 2005; Klypin et al., 2011) and the epoch of reionization (Mesinger et al., 2016; Kern et al., 2017). Therefore, we need to develop new methods to circumvent these problems.

Recently, the artificial neural network (ANN) with many hidden layers has achieved significant improvement in many fields including cosmology and astrophysics. For example, it performs excellently in searching for and estimating the parameters of strong gravitational lenses (Hezaveh et al., 2017; Jacobs et al., 2017; Petrillo et al., 2017; Pourrahmani et al., 2018; Schaefer et al., 2018; Li et al., 2020, 2021), analyzing gravitational waves (George et al., 2018a; George & Huerta, 2018b, c; Shen et al., 2019; Li et al., 2020), classifying the large-scale structure of the universe (Aragon-Calvo, 2019), discriminating between cosmological and reionization models (Schmelzle et al., 2017; Hassan et al., 2018), recovering the cosmic microwave background (CMB) signal from contaminated observations (Petroff et al., 2020; Casas et al., 2022; Wang et al., 2022), reconstructing functions from observational data (Wang et al., 2020b; Escamilla-Rivera et al., 2020; Wang et al., 2021), and even estimating cosmological and astrophysical parameters (Shimabukuro & Semelin, 2017; Fluri et al., 2018; Schmit & Pritchard, 2018; Fluri et al., 2019; Ribli et al., 2019; Wang et al., 2020a; Ntampaka et al., 2020; Nygaard et al., 2022).

As an application of the ANN, the mixture density network (MDN; Bishop 1994) is designed to model the conditional probability density . The MDN is a combination of the ANN and mixture model, which in principle represents an arbitrary conditional probability density. Much of recent literature has used the MDN to model parameters (Zen & Senior, 2014; Alsing et al., 2018, 2019; Kruse, 2020; Zhao et al., 2022). In Alsing et al. (2018), the MDN was used to model the joint probability density , where is the summary statistics of the data. Then, the posterior distribution was obtained by evaluating the joint probability density at the observational summary , . In comparison, in Alsing et al. (2019), the MDN was used to model the conditional probability density , and then the likelihood could be obtained at the observational summary . The posterior distribution could be obtained by multiplying the likelihood and the prior: .

In this work, we show that for one-dimensional observational data, the MDN is capable of estimating cosmological parameters with high accuracy. The MDN used here aims to model the conditional probability density , and the posterior distribution can be obtained at the observational data . Two mixture models are considered in our analysis: the Gaussian mixture model and the beta mixture model. We test the MDN method by estimating parameters of the CDM and CDM cosmologies using Type Ia supernovae (SN-Ia) and the angular power spectra of the CMB. The code and examples are available online.000https://github.com/Guo-Jian-Wang/mdncoper

This paper is organized as follows: In Section 2, we illustrate the method of estimating parameters using the MDN, which includes an introduction to the ANN, mixture model, MDN, and training and parameter inference method. Section 3 shows the application of the MDN method to the Pantheon SN-Ia. Section 4 presents a joint constraint on parameters using the power spectra of the Planck CMB and the Pantheon SN-Ia. Section 5 shows the effect of hyperparameters of the MDN on the parameter inference. In Section 6, we illustrate the beta mixture model. A discussion of the MDN method is presented in Section 7. We then conclude in Section 8.

2 Methodology

2.1 ANNs

An ANN is a computing system inspired by the biological neural network. The unit of an ANN is called a neuron (see Figure 1), and an ANN consists of many neurons. Each neuron transforms the input from other neurons and gives an output

| (1) |

where is the input of a neuron, is a nonlinear function that is usually called the activation function, and and are parameters to be learned by the network. The parameters of an ANN model can be optimized by minimizing the loss function in the training process. The loss function used in this work will be described in Section 2.3. In our analysis, we consider the randomized leaky rectified linear unit (RReLU; Xu et al. 2015) as the activation function:

| (2) |

where is a random number sampled from a uniform distribution , where . Here we adopt the default settings of and in PyTorch111https://pytorch.org/docs/stable/index.html. A discussion about the effect of activation functions on the result will be shown in Section 5.3.

The general structure of an ANN is composed of many layers, and each layer contains many neurons. There are three types of layers in a network: an input layer, one or more hidden layers, and an output layer. Data is fed to the input layer, and then the information is passed through each hidden layer and finally computed from the output layer. Furthermore, the batch normalization technique (Ioffe & Szegedy, 2015) is applied before each nonlinear layer to facilitate optimization and speedup convergence.

In general, there is no theory to determine exactly how many neurons should be used in each hidden layer. Here we adopt the model architecture used in Wang et al. (2020a), i.e. the number of neurons in each hidden layer is decreased in proportion to the layer index. The number of neurons in the th hidden layer is therefore

| (3) |

where is the number of neurons in the input layer and is a decreasing factor defined by

| (4) |

where is the number of neurons in the output layer and is the number of hidden layers. In our analysis, we consider a network with three hidden layers. We discuss the effect of the number of hidden layers on the result of estimation in Section 5.2.

2.2 Mixture Model

A mixture model is a probabilistic model that assumes all data points are generated from a mixture of a finite number of distributions with unknown parameters, where the distribution can be of any type. In Figure 2 we show an example of a mixture model of a parameter. For measurement and parameters , the probability density of with components has the form

| (5) |

where is a mixture weight representing the probability that belongs to the th component. The mixture weights are nonnegative and their sum is normalized, i.e. .

The normal (or Gaussian) distribution acts as the foundation for many modeling approaches in statistics. Therefore, in the following analysis, we mainly consider the Gaussian distribution as the unit of the mixture model. In Section 6, we also discuss the applications of the beta mixture model in the parameter estimation. Therefore, for the case of only one parameter, Equation (5) becomes

| (6) |

For multiple parameters, Equation (5) becomes

| (7) |

where is the number of parameters.

2.3 MDN

An MDN is a combination of an ANN and a mixture model. It learns a mapping between the measurement and parameters of the mixture model. For an MDN with Gaussian components specifically, it learns a mapping between the measurement and the parameters of the Gaussian mixture model (, , and (or for the case of only one parameter)). Figure 3 shows the general structure of an MDN with Gaussian components. The left side of the MDN accepts the measurement as input and then the parameters of the mixture model are computed from the right side.

The MDN model shown in the Figure 3 is for the case of single observational data. But in cosmology we usually need to combine multiple data sets to estimate cosmological parameters. Therefore, following Wang et al. (2020a), we design a multibranch MDN for a joint constraint on parameters using multiple data sets, as shown in Figure 4. There are multiple branches on the left side of the model that can accept multiple observational data sets as input. Then, the information will be combined in the middle of the model (the yellow part of Figure 4), and finally the MDN outputs the parameters of the mixture model.

The parameters (e.g. and in Equation (1)) of an MDN can be learned by minimizing the loss function (Kruse, 2020):

| (8) | |||||

where the loss is averaged over the minibatch input samples to the network. Specifically, for an MDN with Gaussian components and the case of only one parameter, Equation (8) becomes

| (9) |

Because small values in the exponent will give very small values in the logarithm that can lead to numerical underflow, we therefore, use the Log-Sum-Exp trick and carry out the calculations in the logarithmic domain for numerical stability. Thus, Equation (9) can be rewritten as

| (10) |

where

| (11) |

For the case with multiple parameters, Equation (8) becomes

| (12) | |||||

Considering the numerical stability, this equation can be written as

| (13) |

where

| (14) | |||||

Note that for a valid multivariate Gaussian distribution, and here must be positive-definite matrices. Therefore, the precision matrix can be characterized by its upper Cholesky factor :

| (15) |

where is an upper triangular matrix with strictly positive diagonal entries. There are nonzero entries for , which is much less than that of ( entries). Thus, if the MDN learns instead of , there will be more neurons in the network, which will increase the training time. Also, the output of the network may also make a non-positive-definite matrix. Therefore, in order to make the convergence of the network more stable, the MDN is actually learns . Outputs of the network can be either positive or negative numbers. Thus, to ensure the diagonal entries of are positive, we use a Softplus function to enforce the positiveness of the diagonal entries:

| (16) |

where is the output of the network, and

| (17) |

where is a parameter and we set throughout the paper. This offers an efficient way to calculate the matrix in Equation (14):

| (18) |

Therefore,

| (19) |

In addition,

| (20) | |||||

Therefore, Equation (14) can be finally written as

| (21) |

2.4 Training Strategy

For parameter estimation with the MDN, we will first train the MDN with simulated data and then estimate parameters with the observational data. In this section, we describe the processes of training and parameter estimation.

2.4.1 Training Set

The basic principle that the MDN can be used for parameter estimation lies in the fact that it can learn a mapping from the input data space to the output parameter space of the mixture model. Note that the parameters of the mixture model are functions of the cosmological parameters to be estimated. Therefore, the parameter space of the cosmological model in the training set should be large enough to cover the posterior distribution of the cosmological parameters. Following Wang et al. (2020a), we set the range of parameters in the training set to , where is the best-fitting value of the posterior distribution of cosmological parameters and is the corresponding error. Note that the best-fitting value here refers to the mode of the posterior distribution. In this parameter space, the cosmological parameters in the training set are simulated according to the uniform distribution.

From the illustrations of Sections 2.2 and 2.3, one can see that the error of the cosmological parameters should be calculated by the standard deviation or covariance matrix of the mixture model, which should be determined by the covariance matrix of the observational measurement. This means that the MDN should know what the error looks like. Therefore, inspired by Wang et al. (2020a), we add noise to the training set based on the covariance matrix of the observational measurement. Specifically, Gaussian noise is generated and added to the samples of the training set at each epoch of the training process. Note that the noise level is equal to the observational errors, which is different from Wang et al. (2020a) where multiple levels of noise can be added to the training set. For more details, we refer interested readers to Wang et al. (2020a).

The orders of magnitude of the cosmological parameters may be quite different, which will have an effect on the performance of the network. To address this, data preprocessing techniques should be used before feeding the data to the MDN. Following Wang et al. (2020a), we conduct two steps to normalize the data. First, we divide the cosmological parameters in the training set by their estimated values (here the mean of the parameters in the training set is taken as the estimated value) to ensure that they all become of order of unity; then, we normalize the training set using the -score normalization technique (Kreyszig, 2011):

| (22) |

where and are the mean and standard deviation of the measurement or the corresponding cosmological parameters . This data preprocessing method can reduce the influence of differences between parameters on the result, thus making the MDN a general method that can be applied to any cosmological parameters.

2.4.2 Training and Parameter Estimation

Here we illustrate how to train an MDN and estimate parameters using a well-trained MDN model. The key steps of the training process are as follows:

-

1.

Provide a cosmological model and the initial conditions of the corresponding cosmological parameters.

-

2.

Simulate the training set using the method illustrated in Section 2.4.1.

-

3.

Add noise and conduct data preprocessing on the training set using the method illustrated in Section 2.4.1.

-

4.

Feed the training set to the MDN and train the model.

-

5.

Feed the observational measurement to the well-trained MDN model to obtain the parameters of the mixture model.

- 6.

-

7.

Calculate the best-fitting values and errors of the cosmological parameters by using the ANN chain. Then, the parameter space to be learned will be updated according to the estimated parameters.

- 8.

Note that the initial conditions of cosmological parameters set in step 1 are general ranges of the parameters. This means that the true parameters can be located outside of these ranges. Therefore, the parameter space should be updated in step 7 to continue training.

3 Application to SN-Ia

In this section, we apply the MDN method to the SN-Ia data set to estimate parameters of the CDM cosmology.

3.1 SN-Ia data

To test the capability of the MDN for estimating parameters from one data set, we constrain and of the CDM model using the Pantheon SN-Ia (Scolnic et al., 2018), which contain 1048 data points for the redshift range [0.01, 2.26]. The distance modulus of the Pantheon SN-Ia can be rewritten as

| (23) |

where is the corrected apparent magnitude, and is the -band absolute magnitude (Scolnic et al., 2018). The variable is the coefficient of the relation between luminosity and stretch, is the coefficient of the relation between luminosity and color, and is a distance correction based on predicted biases from simulations.

The measurement of the Pantheon SN-Ia is the corrected apparent magnitudes. Therefore, the input of the MDN is for the observational SN-Ia, and for the simulated data generated by the CDM model. Specifically, we have the distance modulus

| (24) |

and the luminosity distance

| (25) |

where is the speed of light, and is the Hubble constant. The function describes the evolution of the Hubble parameter:

| (26) |

where is the matter density parameter and is the equation of state of dark energy. Equation (26) assumes the spatial flatness. Then we have

| (27) |

As we already know, the absolute magnitude of SN-Ia is strongly degenerated with the Hubble constant . Therefore, we combine and into a new parameter and constrain it with the cosmological parameters simultaneously. To do this, we define a dimensionless luminosity distance

| (28) |

Then Equation (27) can be rewritten as

| (29) |

where is a new nuisance parameter to be estimated with the cosmological parameters simultaneously.

| Methods | ||

|---|---|---|

| Parameters | MCMC | MDN |

3.2 Result

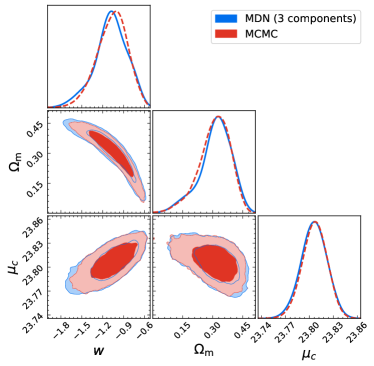

We first constrain , , and the nuisance parameter simultaneously using the Pantheon SN-Ia data without considering systematic uncertainties. Here two methods are considered in our analysis: standard MCMC and the MDN. For the MCMC method, we use the public package emcee (Foreman-Mackey et al., 2013) for parameter estimation, which is a Python module that achieves the MCMC method. During the constraining procedures, an MCMC chain with 100,000 steps is generated after burn-in. The best-fitting values with 1 errors of the parameters are calculated from the MCMC chains with coplot,222https://github.com/Guo-Jian-Wang/coplot as shown in Table 1. We also draw the corresponding one-dimensional and two-dimensional contours in Figure 5. For the MDN method, we consider three Gaussian components and using the setting illustrated in Sections 2.1, 2.3, and 2.4. There are 3000 samples in the training set and 500 samples in the validation set. After training, we feed the corrected apparent magnitudes to the well-trained MDN model to obtain the parameters of the mixture model, and then get an ANN chain of the cosmological parameters. The results are shown in Table 1 and Figure 5. Obviously, the results of the MDN method are almost the same as those of the MCMC method.

We show the losses of the training and validation sets in Figure 6. The loss of the training set is large at the beginning of the training process, and gradually decreases and stabilizes with the epoch. For the validation set, the trend of loss is similar to that of the training set. After 400 epochs, the loss of the validation set is smaller than that of the training set. Obviously, there is no overfitting for the MDN. The reason why the loss of the validation set is smaller than that of the training set is that multiple sets of noise are added to each sample, which means that multiple sets of noise will be generated and added to a sample to ensure that the MDN knows that measurement may differ due to the presence of noise. Our analysis shows that the adding of multiple sets of noise to measurement is beneficial to the stability of the MDN and will make the final estimated cosmological parameters more accurate, especially for the multibranch MDN.

Furthermore, we consider the systematic uncertainties in our analysis to test the capability of the MDN for dealing with observations with covariance. We use the systematic covariance matrix (Scolnic et al., 2018) to generate noise then add it to the training set (see Section 2.4.1). We then constrain the parameters with the MCMC and MDN (with three Gaussian components) methods. The results are shown in Table 2 and Figure 7. The difference between the MDN results and the MCMC results can then be calculated using

| (30) |

where , and and are the best-fitting parameters for the MCMC and MDN methods, respectively. Specifically, the differences for the parameters are , , and , respectively, which are slightly larger for the parameter .

| Methods | ||

|---|---|---|

| Parameters | MCMC | MDN |

We can see that the shapes of both the one-dimensional and two-dimensional contours based on the MDN method are slightly different from those of the MCMC method. The reason is that the joint probability distribution of and deviates from the multivariate Gaussian distribution, which makes it more difficult to model their joint probability distribution. We take two approaches to solve this problem. One is to increase the number of components ( function in Equation (5)), which diversifies the function forms that the MDN can fit. Specifically, we use nine Gaussian components and reestimate the cosmological parameters with the MDN, then plot the one-dimensional and two-dimensional contours in Figure 8. One can see that the MDN result converges to the MCMC result. Quantitatively, the differences between the MDN results and the MCMC results are , , and . These deviations are smaller than those of using three components, especially for parameter . Another way is to more efficiently sample the parameter space. As we illustrated in Section 2.4.1, samples are generated uniformly in the range of the posterior distribution, which might not be the most efficient way. We will explore different sampling methods in future works.

| Methods | ||

|---|---|---|

| Parameters | MCMC | MDN |

4 Joint Constraint on Parameters

Cosmological research often combines multiple data sets to constrain cosmological parameters. So, in this section, we estimate parameters using the multibranch MDN. Our purpose is to verify the feasibility of this method. Therefore, for simplicity of our analysis, we will only consider the use of Planck-2015 temperature and polarization power spectra COM_PowerSpect_CMB_R2.02.fits333http://pla.esac.esa.int/pla/#cosmology and Pantheon SN-Ia data to constrain parameters. These parameters include the -band absolute magnitude (nuisance parameter in SN-Ia) and six parameters in the standard CDM model, i.e. the Hubble constant , the baryon density , the cold dark matter density , the optical depth , and the amplitude and spectral index of primordial curvature perturbations (, ). There are four branches in the multibranch MDN model, and each of them accepts one measurement, namely the TT, EE, and TE power spectra of the CMB, and the corrected apparent magnitudes of the Pantheon SN-Ia. We calculate the power spectra of the CMB with the public code CAMB444https://github.com/cmbant/CAMB (Lewis et al., 2000).

We first constrain these parameters using the MCMC method, and an MCMC chain with 100,000 steps is generated. Then the best-fitting values with the errors of these parameters are calculated using the MCMC chain, as shown in Table 3. The corresponding one-dimensional and two-dimensional marginalized distributions with and contours are shown in Figure 9.

Then, following the same procedure in Section 3, we constrain the parameters using the MDN method. In the training process, 3000 samples are used to train the MDN model, and 500 samples are used as a validation set. After training of the MDN model, we feed the observational TT, EE, and TE power spectra of the Planck CMB, as well as the corrected apparent magnitudes of the Pantheon SN-Ia, to the MDN model to obtain the parameters of the mixture model. Finally, we generate samples using the mixture model to obtain an ANN chain of the cosmological parameters. The best-fitting values and errors calculated using the chain are shown in Table 3, and the corresponding one-dimensional and two-dimensional contours are shown in Figure 9. We can see that both the best-fitting values and the errors are almost the same as those of the MCMC method. The deviations of the parameters between the two methods are , , , , , , and , which are quite small on average. This indicates the MDN method can combine multiple observational data to jointly constrain cosmological parameters, which is generally applicable to all cosmological parameter estimations.

5 Effect of Hyperparameters

There are many hyperparameters that can be selected manually in the MDN model, such as the number of components, the number of hidden layers, the activation functions, the number of training samples, and the number of epochs. In this section, we discuss the effect of these hyperparameters on results. All results in this section are evaluated on observational data or simulated observations that can be taken as the test set.

5.1 The Number of Components

As we have shown in Section 3.2 the number of components of the mixture model may affect the constraints of parameters. Here, we further discuss the effect of the number of components on the result. Specifically, with the same procedure, we estimate and of the CDM model with Pantheon SN-Ia data by considering one, three, five, and seven Gaussian components. The two-dimensional contours of the parameters are shown in Figure 10.

For the case of one component, the constraint is

| (31) |

This result converges to the MCMC result (see Table 2), and both the best-fitting values and errors are similar to those of the MCMC method. However, the two-dimensional contour (the upper left panel of Figure 10) is quite different from the contour of the MCMC method. The reason, as we illustrated in Section 3.2, is that the joint probability distribution of and deviates significantly from the multivariate Gaussian distribution.

For the cases with three, five, and seven components, the results all converge to the MCMC result, and all the shapes of the two-dimensional contours are similar to the MCMC result. This suggests that for and of the CDM model, three Gaussian components can characterize the joint probability distribution. Therefore, we recommend using multiple components, such as three or more, when estimating parameters of other models. However, it is not recommended to use a very large number of components (e.g. more than 10), especially for multiple-parameters cases, because the MDN will be unstable and thus it will become difficult to constrain these components.

5.2 The Number of Hidden Layers

To test the effect of hyperparameters in the following sections, we simulate SN-Ia data based on a future Wide-field Infrared Survey Telescope (WFIRST) experiment (Spergel et al., 2015) in a flat CDM model with the following fiducial values of parameters: , , and . The total number of SN-Ia samples is 2725 in the range of . We then estimate and using the MDN method with three Gaussian components. Finally, we calculate the mean deviation between the MDN results and the fiducial values:

| (32) |

where is the number of cosmological parameters, is the fiducial parameter, and and are the predicted best-fitting value and error of the cosmological parameter, respectively.

First, we test the effect of the number of hidden layers of the MDN model. We design five different MDN structures with the number of hidden layers varying from one to five. We consider RReLU here as the activation function. We train the MDN models with 3000 samples, and after 2000 epochs, we obtain the corresponding five ANN chains by feeding the simulated observations. We then calculate the mean deviations between the MDN results and the fiducial values. The mean deviation as a function of the number of hidden layers is shown in the upper left panel of Figure 11. The mean deviations of these five cases are , , , , and , respectively. We can see that there is a large deviation for MDNs with fewer or more hidden layers. An MDN with one hidden layer may not have enough layers to build a mapping between the input data and the output parameters, while an MDN with four or five hidden layers will have more difficulty learning the mixture model. We note that the training time will increase as the number of hidden layers increases. As a result, MDNs with many hidden layers become unpragmatic because of the training time.

5.3 Activation Function

The settings of the activation function may also affect the parameter estimation. Here we select several commonly used activation functions and test their effects on the performance of the MDN model: rectified linear unit (ReLU), exponential linear unit (ELU), RReLU, sigmoid function, and hyperbolic tangent (Tanh). The ReLU activation function is defined as (see also Nair & Hinton 2010)

| (33) |

The ELU is (Clevert et al., 2016)

| (34) |

where is a parameter and we set it to 1. The sigmoid function is defined by (see also Han & Moraga 1995)

| (35) |

and the hyperbolic tangent is (Malfliet, 1992)

| (36) |

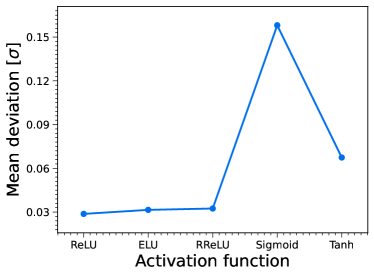

The MDN model contains three hidden layers in our work. Using the data simulated in Section 5.2, we train another five MDN models with 3000 samples to estimate and . After 2000 epochs, we obtain five ANN chains, and then calculate the corresponding deviations. The mean deviation of the parameters between the MDN results and the fiducial values as a function of the activation function is shown in the upper right panel of Figure 11. One can see that the MDN with the sigmoid function has the maximum deviation of , while the deviations of the other MDNs are all smaller than . Therefore, in our specific application of the MDN, the ReLU, ELU, RReLU, and Tanh activation functions can all be used for the MDN method.

5.4 The Number of Training Samples

To test the effect of the number of samples in the training set, we train several MDN models with the number of samples varying from 1000 to 10,000. The MDN model used here contains three hidden layers, the RReLU nonlinear function is taken as the activation function, and the MDN is trained with 2000 epochs. The mean deviation of parameters between the MDN results and the fiducial values is shown in the lower left panel of Figure 11. We can see that the mean deviation varies in the interval as the number of training samples changes. But most of the deviations are less than . Therefore, in our specific application of the MDN, thousands of samples can make an MDN model well trained. Note that the time of training of an MDN model is related to the number of samples in the training set. Therefore, considering the performance and training time of the MDN, the number of samples should be selected reasonably.

5.5 The Number of Epochs

The number of epochs may also affect the performance of the MDN. To test this, we train several MDN models with the epoch varying from 500 to 5000. The MDN models used here contain three hidden layers, the activation function is RReLU, and 3000 samples are used to train the models. With the same procedure, we train and calculate deviations between the MDN results and the fiducial values. The mean deviation as a function of the number of epochs is shown in the lower right panel of Figure 11. We can see that as the number of epochs increases, the mean deviation oscillates in the range of . Also, in our case, there is little improvement by training for more than 2000 epochs. Since the training time is also related to the number of epochs, the number of epochs should also be selected reasonably.

6 Beta Mixture Model

6.1 One Parameter

The analysis above is focused on the Gaussian distribution as the unit of the mixture model. Since the unit of the mixture model can be any type of distribution, we use a beta distribution as the unit of the mixture model in this section. A beta mixture model with components has the form

| (37) |

where is the mixture weight, are two shape parameters to be determined, and is the gamma function defined as

| (38) |

Here we use the Softplus function (Equation (17)) to guarantee and are positive. Note that the beta distribution is defined on the interval [0, 1]; thus we normalize the parameters in the training set (i.e. the target) to the range [0, 1] by using the minmax normalization technique:

| (39) |

The parameters of the beta mixture model can be estimated by minimizing the loss function

| (40) | |||||

For numerical stability, we also use the Log-Sum-Exp trick; then Equation (40) can be rewritten as

| (41) |

where

| (42) | |||||

| Methods | ||

|---|---|---|

| MCMC | ||

| MDN-Gaussian | ||

| MDN-Beta |

Since the beta distribution is for one random variable, thus to test the capability of the beta mixture model for parameter inference, we use the Pantheon SN-Ia to constrain of the CDM model. In our analysis, the Hubble constant is set to , and the absolute magnitude is set to . We consider two cases of the equation of state of dark energy: and , respectively. The case considered here is completely set manually, which is to test the case where the posterior distribution of the parameter is a truncated distribution under the physical limit. We constrain using the MCMC method and the MDN method. For the MDN method, we consider two mixture models: the Gaussian mixture model and the beta mixture model.

For the case of , we first constrain using the MCMC method. After generating an MCMC chain, the best-fitting value with error of can be calculated (see Table 4). The corresponding marginalized distribution is shown in Figure 12 with a green dashed line. We then use the MDN method with three Gaussian components to constraint and find the result (the blue solid line in Figure 12) is almost the same as that of the MCMC method for both the best-fitting value and the error. We also constrain using the MDN method with one beta component and obtain the same result of the MCMC method.

With the same procedure, we constrain for the case of . The result of the MCMC method is shown in Table 4 and the marginalized distribution is shown in Figure 13 with a green dashed line. We can see the distribution is a truncated distribution due to the physical limit. Then, we constrain with the MDN method with Gaussian components and find both the best-fitting value and the error deviate slightly from the MCMC result, and the shape of the distribution (the blue solid line in Figure 13) differs slightly from that of the MCMC method. Finally, the result of based on the MDN method with beta components is almost the same as that of the MCMC method, and the shape of the distribution (the red solid line in Figure 13) is consistent with the one based on the MCMC method.

These results show that MDN models with beta components are able to constrain parameters, and even have advantages over MDN models with Gaussian components in the case of truncated distribution. In the case of truncated distribution, the reason for the beta mixture model performing better than the Gaussian mixture model is that the beta distribution has more shape features (see Figure 14 for examples), which enables it to fit better for the posterior distribution of parameters.

6.2 Multiple Parameters

We now switch to multiparameter estimation. If parameters are not independent, the beta mixture model cannot be used when doing the MDN parameter estimation. But if the correlations between parameters are negligible, the beta mixture model can be used for multiple-parameter tasks to obtain the one-dimensional distribution of each parameter. In this case, the probability density in Equation (6.1) becomes

| (43) |

where is the number of parameters. To test this, we constrain , , and the nuisance parameter using the Pantheon SN-Ia. The Softplus function is used as the activation function considering the stability of the MDN. The one-dimensional distributions of these parameters are shown in Figure 15. We can see that the results based on the MDN with beta components are almost the same as those of the MCMC method. Our analysis shows the instability of the MDN when using Equation (43) as the probability density. The reason may be that there are correlations between parameters, but they are not considered by the MDN. Besides, there are too many components ( components in total) for the probability density in Equation (43), especially for cases of many cosmological parameters, which will also increase the instability of the MDN. Therefore, the beta mixture model based on Equation (43) should be further investigated.

As a comparison, we also constrain , , and without considering correlations between them, by using an MDN with a Gaussian mixture model. In this case, the probability density in Equation (2.2) becomes

| (44) |

The one-dimensional distributions of these three parameters are shown as green dotted lines in Figure 15. Obviously, this result is similar to that based on the beta mixture model. We note that the Gaussian mixture model performs poorly for parameters with truncated distribution (see Figure 13), and correlations between parameters will not be obtained. Therefore, the Gaussian mixture model based on Equation (2.2) is recommended for multiparameter estimates.

7 Discussion

The MDN method proposed in this paper combines an ANN and a mixture model. The main idea is to assume that the posterior distribution of parameters is a mixture of several elemental simple distributions, such as the Gaussian distribution. Once the basic distribution is determined, the posterior distribution of the parameters can be calculated. Therefore, the neural network here aims to determine the basic distribution. In general, the MDN method models a connection between measurements and parameters, where the function of the mixture model is to model the parameters, and the function of the neural network is to extract information from the measurement. The neural network used here is a fully connected network that can deal with one-dimensional data. In future work, we will generalize this technique to two- and three-dimensional cosmological data sets.

As we illustrated in Section 2.4.2 that the parameter space is updated in the training process, the parameter space will gradually approach and cover the actual values of the cosmological parameters. Therefore, the MDN method is not sensitive to the initial conditions, suggesting that the initial conditions can be set freely with an arbitrary range of parameters. This fact is an advantage over the MCMC method and is beneficial for models that lack prior knowledge.

Many hyperparameters can be chosen when using the MDN, such as the number of hidden layers, the activation function, the number of training samples, the number of epochs, and even the number of components. The analysis in Section 5 uses specific simulated (or observational) data to test the effect of these hyperparameters. Due to the selection of hyperparameters and randomization in the training process (e.g. the initialization of the network parameters (, ) in Equation (1) and the random noise added to the training set), the final estimated parameters have certain instability. But this shortcoming does not affect the fact that the MDN can get an accurate estimation with a mean deviation level of , after selecting appropriate hyperparameters (e.g. using thousands of samples and thousands of epochs).

8 Conclusions

In this work, we conducted parameter inference with the MDN method on both one data set and multiple data sets to achieve joint constraints on parameters using the Pantheon SN-Ia and the CMB power spectra of the Planck mission. Our analysis shows that a well-trained MDN model can estimate parameters at the same level of accuracy as the traditional MCMC method. Furthermore, compared to the MCMC method, the MDN method can provide accurate parameter estimates with far fewer forward simulation samples.

We considered two mixture models in the MDN model: the Gaussian mixture model and the beta mixture model. The Gaussian mixture model can theoretically be applied to any parameter, while the beta mixture model is only applicable to a case with one parameter or multiple independent parameters. Our results show that the beta mixture model outperforms the Gaussian mixture model for parameters with truncated distributions.

The MDN is an ANN-based method, making it a flexible method that is extendable to two- and three-dimensional data, which may be of great use in extracting information from high-dimensional data.

9 Acknowledgement

Y.-Z.M. acknowledges the support of the National Research Foundation with grant Nos. 120385 and 120378. J.-Q.X. is supported by the National Science Foundation of China under grant No. U1931202. All computations were carried out using the computational cluster resources at the Centre for High Performance Computing, Cape Town, South Africa. This work was part of the research program “New Insights into Astrophysics and Cosmology with Theoretical Models Confronting Observational Data” of the National Institute for Theoretical and Computational Sciences of South Africa.

References

- Aragon-Calvo (2019) Aragon-Calvo, M. A. 2019, MNRAS, 484, 5771-5784

- Alsing et al. (2018) Alsing, J., Wandelt, B., Feeney, S., 2018, MNRAS, 477, 2874-2885

- Alsing et al. (2019) Alsing, J., Charnock, T., Feeney, S., Wandelt B., 2019, MNRAS, 488, 4440-4458

- Bishop (1994) Bishop C. M., 1994, Tech. Rep. NCRG/94/004, Neural Computing Research Group, Aston University

- Casas et al. (2022) Casas, J. M., Bonavera, L., González-Nuevo, J., et al. 2022, arXiv:2205.05623

- Clevert et al. (2016) Clevert, D. A., Unterthiner, T., Hochreiter, S., 2016, International Conference on Learning Representations (ICLR), arXiv:1511.07289

- Escamilla-Rivera et al. (2020) Escamilla-Rivera, C., Quintero, M. A. C., and Capozziello, S., 2020, JCAP, 03, 008

- Fluri et al. (2018) Fluri, J., Kacprzak, T., Lucchi, A., Refregier, A., Amara, A., Hofmann, T. 2018, Phys. Rev. D 98, 123518

- Fluri et al. (2019) Fluri, J., Kacprzak, T., Lucchi, A., Refregier, A., Amara, A., Hofmann, T., Schneider, A. 2019, Phys. Rev. D 100, 063514

- Foreman-Mackey et al. (2013) Foreman-Mackey, D., Hogg, D. W., Lang, D. & Goodman, J., 2013, PASP, 125, 306

- George et al. (2018a) George, D., Shen, H. & Huerta, E. A., 2018a, PRD, 97, 101501

- George & Huerta (2018b) George, D. & Huerta, E. A., 2018b, Physics Letters B, 778, 64-70

- George & Huerta (2018c) George, D. & Huerta, E. A., 2018c, PRD, 97, 044039

- Han & Moraga (1995) Han, J. and Moraga C., 1995, IWANN, vol 930. Springer, Berlin, Heidelberg, 195-201

- Hassan et al. (2018) Hassan, S., Liu, A., Kohn, S. et al. 2018, Proc. IAU, Vol. 12 (Cambridge University Press) 47-51

- Hezaveh et al. (2017) Hezaveh, Y. D., Levasseur, L. P. & Marshall, P. J., 2017, Nature 548, 555-557

- Ioffe & Szegedy (2015) Ioffe, S., Szegedy, C., 2015, arXiv:1502.03167

- Jacobs et al. (2017) Jacobs, C., Glazebrook, K., Collett, T., More, A. & McCarthy, C., 2017, MNRAS, 471, 167-181

- Kern et al. (2017) Kern N. S., Liu A., Parsons A. R., Mesinger A., Greig B., 2017, ApJ, 848, 23

- Kreyszig (2011) Kreyszig, E., Advanced Engineering Mathematics 10th Edition, 2011, Wiley

- Kruse (2020) Kruse, J., 2020, arXiv:2003.05739

- Klypin et al. (2011) Klypin A. A., Trujillo-Gomez S., Primack J., 2011, ApJ, 740, 102

- Lewis et al. (2000) Lewis, A., Challinor, A. & Lasenby, A. 2000, ApJ, 538, 473-476

- Li et al. (2020) Li, R., Napolitano, N. R., Tortora, C., et al. 2020, ApJ, 899, 30

- Li et al. (2021) Li, R., Napolitano, N. R., Spiniello, C., et al. 2021, ApJ, 923, 16

- Li et al. (2020) Li, X., Yu, W. & Fan, X., 2020, Front. Phys. 15, 54501

- Malfliet (1992) Malfliet, W., 1992, Am. J. Phys., 60, 650-654

- Mesinger et al. (2016) Mesinger A., Greig B., Sobacchi E., 2016, MNRAS, 459, 2342-2353

- Nair & Hinton (2010) Nair, V. and Hinton, G. E., 2010, ICML, 27, 807

- Ntampaka et al. (2020) Ntampaka, M., Eisenstein, D. J., Yuan, S., Garrison, L. H., 2020, ApJ, 889, 151

- Nygaard et al. (2022) Nygaard, A., Holm, E. B., Hannestad, S., et al. 2022, arXiv:2205.15726

- Petrillo et al. (2017) Petrillo, C. E., Tortora, C., Chatterjee, S., et al., 2017, MNRAS, 472, 1129-1150

- Petroff et al. (2020) Petroff, M. A., Addison, G. E., Bennett, C. L., Weiland, J. L., 2020, ApJ, 903, 104

- Pourrahmani et al. (2018) Pourrahmani, M., Nayyeri, H. & Cooray, A., 2018, ApJ, 856, 68

- Ribli et al. (2019) Ribli, D., Pataki, B. Á., Matilla, J. M. Z. et al. 2019, MNRAS, 490, 1843

- Schaefer et al. (2018) Schaefer, C., Geiger, M., Kuntzer, T. & Kneib, J.-P., 2018, A&A, 611, A2

- Schmelzle et al. (2017) Schmelzle, J., Lucchi, A., Kacprzak, T., et al., 2017, arXiv:1707.05167

- Schmit & Pritchard (2018) Schmit, C. J. & Pritchard, J. R., 2018, MNRAS, 475, 1213-1223

- Scolnic et al. (2018) Scolnic, D. M., Jones, D. O., Rest, A. et al. 2018, ApJ, 859, 101

- Shen et al. (2019) Shen, H., George, D., Huerta, E. A., & Zhao, Z., 2019, ICASSP, 3237, arXiv:1711.09919

- Shimabukuro & Semelin (2017) Shimabukuro, H. & Semelin, B., 2017, MNRAS, 468, 3869-3877

- Spergel et al. (2015) Spergel, D., Gehrels, N., Baltay, C. et al., 2015, arXiv:1503.03757

- Springel (2005) Springel V., 2005, MNRAS, 364, 1105-1134

- Xu et al. (2015) Xu, B., Wang, N., Chen, T., Li, M., 2015, arXiv:1505.00853

- Wang et al. (2020a) Wang, G.-J., Li, S.-Y., Xia, J.-Q., 2020a, ApJS, 249, 25

- Wang et al. (2020b) Wang, G.-J., Ma, X.-J., Li, S.-Y., Xia, J.-Q., 2020b, ApJS, 246, 13

- Wang et al. (2021) Wang, G.-J., Ma, X.-J., Xia, J.-Q. 2021, MNRAS, 501, 5714-5722

- Wang et al. (2022) Wang, G.-J., Shi, H.-L., Yan, Y.-P. et al., 2022, ApJS, 260, 13

- Zen & Senior (2014) Zen, H. and Senior, A., 2014, 2014 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 3844-3848

- Zhao et al. (2022) Zhao, X., Mao, Y., Cheng, C., Wandelt, B. D., 2022, ApJ, 926, 151